ECE 5424: Introduction to Machine Learning

Topics:

- Neural Networks
- Backprop

Readings: Murphy 16.5

Stefan Lee, Virginia Tech

Recap of Last Time

(C) Dhruv Batra

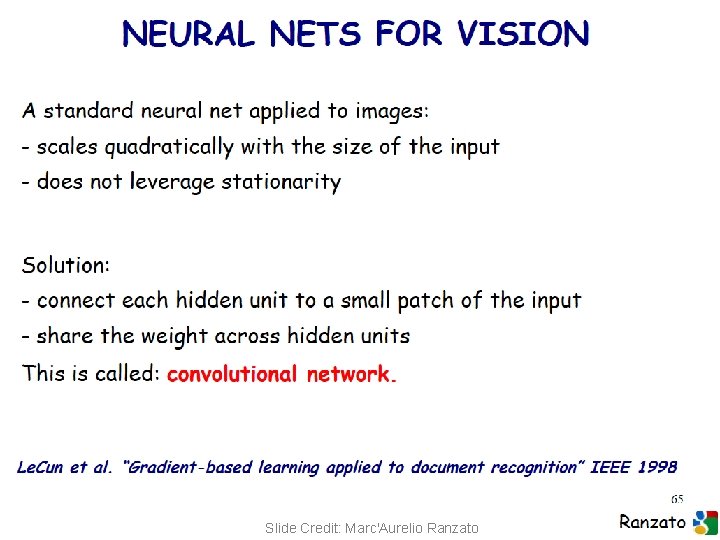

Not linearly separable data

- Some datasets are not linearly separable!
  - http://www.eee.metu.edu.tr/~alatan/Courses/Demo/AppletSVM.html

Addressing non-linearly separable data: Option 1, non-linear features

- Choose non-linear features, e.g.,
  - Typical linear features: $w_0 + \sum_i w_i x_i$
  - Example of non-linear features:
    - Degree 2 polynomials, $w_0 + \sum_i w_i x_i + \sum_{ij} w_{ij} x_i x_j$
- Classifier $h_w(x)$ still linear in parameters $w$
  - As easy to learn
  - Data can become linearly separable in higher dimensional spaces
  - Express via kernels

Slide Credit: Carlos Guestrin
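A minimal sketch of Option 1, assuming XOR-style inputs in {-1, +1} and hand-picked weights (both are illustrative choices, not from the slides). The helper names `degree2_features` and `predict` are hypothetical. The classifier stays linear in `w`, but the added product term separates data that no line in the original two inputs can.

```python
from itertools import combinations_with_replacement

def degree2_features(x):
    """Map x = (x1, ..., xd) to [1, x_i ..., x_i * x_j for i <= j]."""
    feats = [1.0] + list(x)
    feats += [x[i] * x[j]
              for i, j in combinations_with_replacement(range(len(x)), 2)]
    return feats

def predict(w, x):
    """Linear classifier in the expanded feature space: sign of w . phi(x)."""
    score = sum(wi * fi for wi, fi in zip(w, degree2_features(x)))
    return 1 if score > 0 else -1

# XOR-style data: the label is +1 exactly when the two inputs differ.
xor_data = [((-1, -1), -1), ((-1, 1), 1), ((1, -1), 1), ((1, 1), -1)]

# Feature order for d = 2: [1, x1, x2, x1*x1, x1*x2, x2*x2].
# Hand-picked weights (an assumption): only the x1*x2 term is used.
w = [0.0, 0.0, 0.0, 0.0, -1.0, 0.0]
predictions = [predict(w, x) for x, _ in xor_data]
labels = [y for _, y in xor_data]
```

In practice the weights would be learned, for example by logistic regression on the expanded features.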

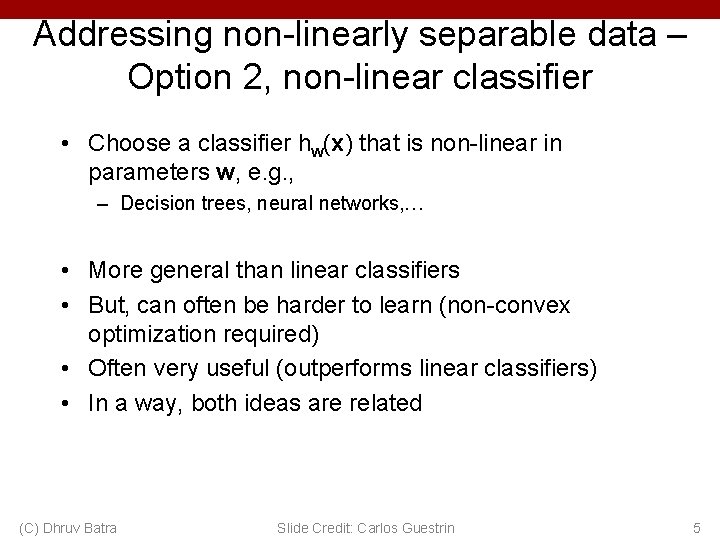

Addressing non-linearly separable data: Option 2, non-linear classifier

- Choose a classifier $h_w(x)$ that is non-linear in parameters $w$, e.g.,
  - Decision trees, neural networks, …
- More general than linear classifiers
- But, can often be harder to learn (non-convex optimization required)
- Often very useful (outperforms linear classifiers)
- In a way, both ideas are related

Slide Credit: Carlos Guestrin

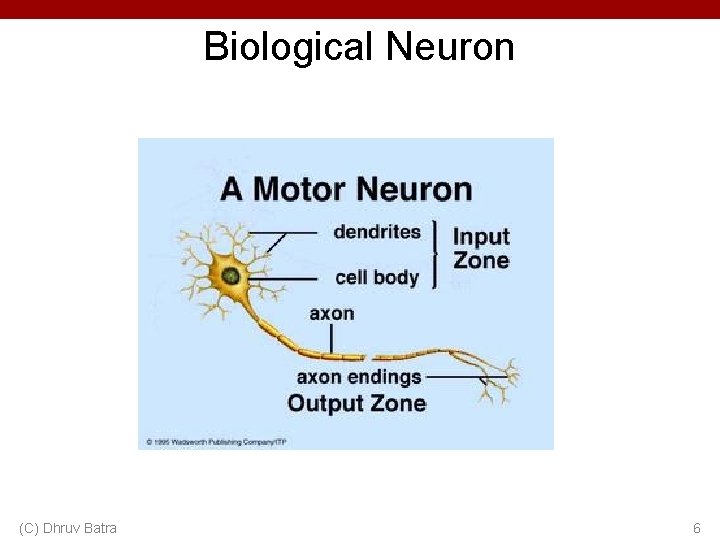

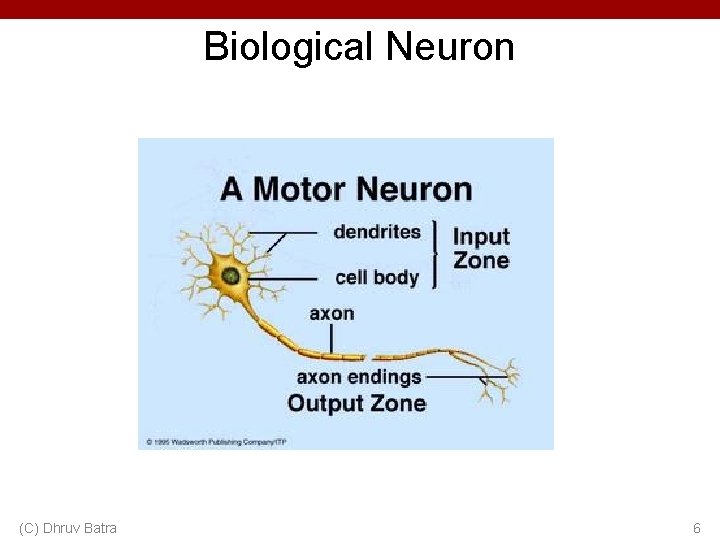

Biological Neuron

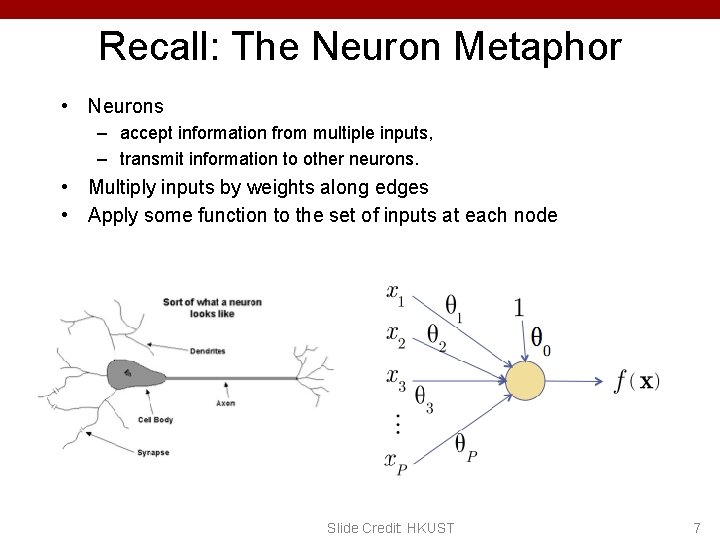

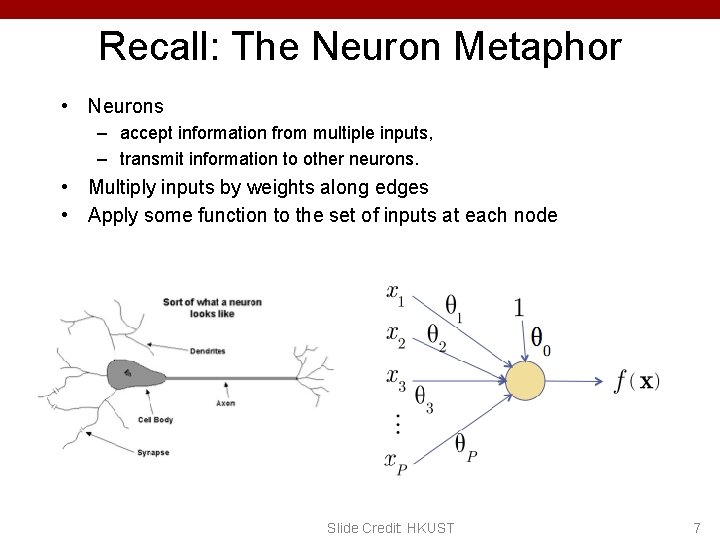

Recall: The Neuron Metaphor

- Neurons
  - accept information from multiple inputs,
  - transmit information to other neurons.
- Multiply inputs by weights along edges
- Apply some function to the set of inputs at each node

Slide Credit: HKUST

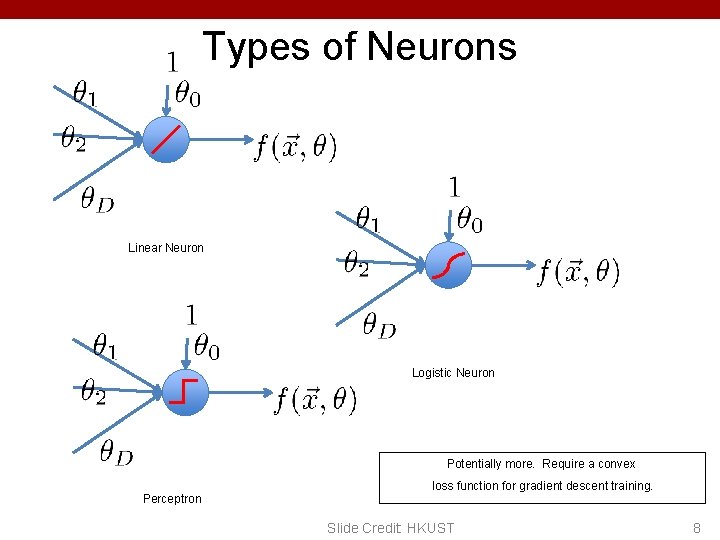

Types of Neurons

- Linear Neuron
- Logistic Neuron
- Perceptron

Potentially more. Require a convex loss function for gradient descent training.

Slide Credit: HKUST
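The three neuron types share the same weighted sum and differ only in the function applied to it. The sketch below assumes a bias term `b` and a 0/1 output for the perceptron. Both are common conventions rather than details given on the slide, and the function names are hypothetical.

```python
import math

def weighted_sum(w, b, x):
    """Multiply inputs by weights along edges and add a bias."""
    return b + sum(wi * xi for wi, xi in zip(w, x))

def linear_neuron(w, b, x):
    """Identity activation: the output is the weighted sum itself."""
    return weighted_sum(w, b, x)

def logistic_neuron(w, b, x):
    """Sigmoid activation: squashes the weighted sum into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-weighted_sum(w, b, x)))

def perceptron_neuron(w, b, x):
    """Hard threshold: 1 if the weighted sum is positive, else 0."""
    return 1 if weighted_sum(w, b, x) > 0 else 0

# Illustrative values (assumed): the weighted sum is 0.5 + 2.0 - 3.0 = -0.5.
w, b, x = [2.0, -1.0], 0.5, [1.0, 3.0]
linear_out = linear_neuron(w, b, x)
logistic_out = logistic_neuron(w, b, x)
perceptron_out = perceptron_neuron(w, b, x)
```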

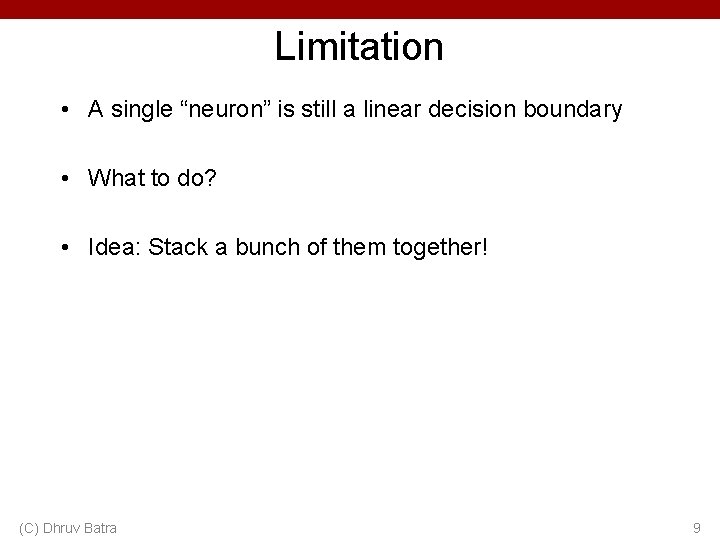

Limitation

- A single “neuron” is still a linear decision boundary
- What to do?
- Idea: Stack a bunch of them together!

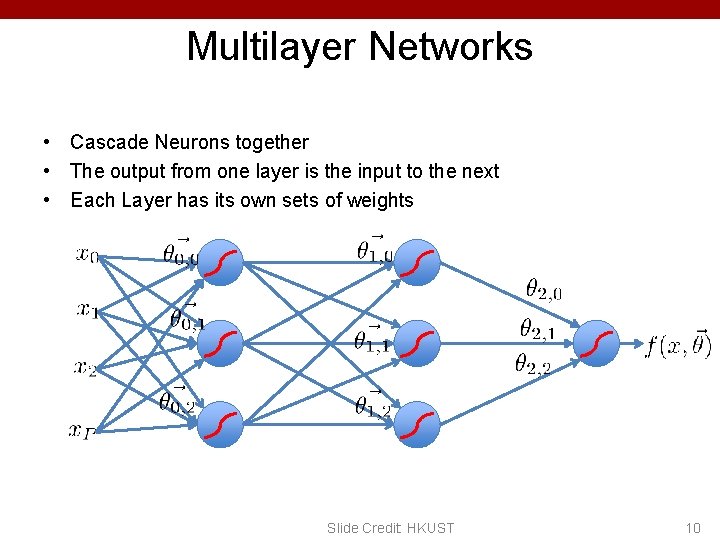

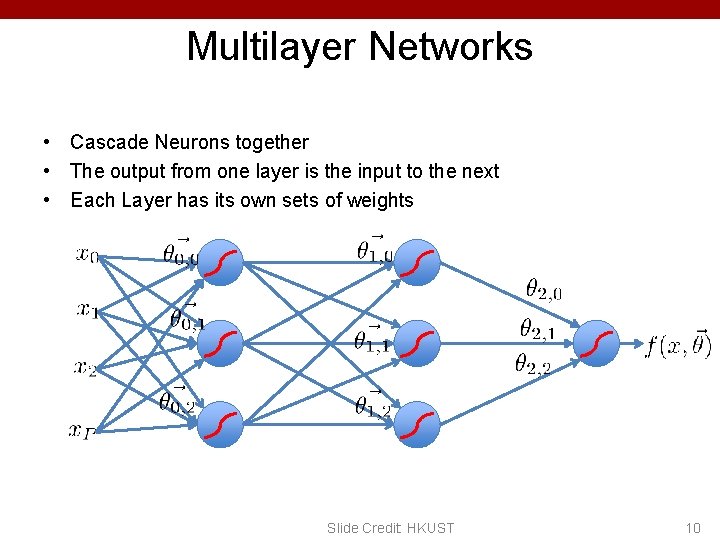

Multilayer Networks

- Cascade Neurons together
- The output from one layer is the input to the next
- Each Layer has its own sets of weights

Slide Credit: HKUST

Universal Function Approximators

- Theorem
  - 3-layer network with linear outputs can uniformly approximate any continuous function to arbitrary accuracy, given enough hidden units [Funahashi '89]
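One common way to write the statement precisely is sketched below. The symbols $H$, $v_j$, $w_j$, $b_j$, $c$, and $\sigma$ are notation assumed here, not taken from the slide, and the conditions on $\sigma$ (continuous, bounded, nonconstant, monotonically increasing) follow the usual statement of the result.

$$
\hat f(x) = c + \sum_{j=1}^{H} v_j \, \sigma\left(w_j^\top x + b_j\right),
\qquad
\sup_{x \in K} \left| f(x) - \hat f(x) \right| < \varepsilon
$$

Here $f$ is any continuous function on a compact set $K \subset \mathbb{R}^d$, and for every $\varepsilon > 0$ there is some finite $H$ and some choice of weights that satisfies the bound. The theorem does not say how large $H$ must be or how to find the weights.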

Plan for Today

- Neural Networks
  - Parameter learning
  - Backpropagation

Forward Propagation

- On board
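Since the forward pass is worked on the board, here is a minimal sketch of it in code. The 2-2-1 architecture, the weight values, and the choice of a sigmoid hidden layer with a linear output are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def identity(z):
    return z

def layer_forward(W, b, inputs, activation):
    """One layer: each row of W holds the incoming weights of one neuron."""
    return [activation(bj + sum(wji * xi for wji, xi in zip(row, inputs)))
            for row, bj in zip(W, b)]

def forward(layers, x):
    """Feed x through the layers; each layer's output is the next one's input."""
    activations = x
    for W, b, activation in layers:
        activations = layer_forward(W, b, activations, activation)
    return activations

# Hypothetical network: 2 inputs, 2 sigmoid hidden units, 1 linear output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], sigmoid),
    ([[1.0, -2.0]], [0.5], identity),
]
output = forward(layers, [1.0, 1.0])
```

For the input `[1.0, 1.0]` the hidden pre-activations are 0 and 1, so the output equals `0.5 + sigmoid(0) - 2 * sigmoid(1)`.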

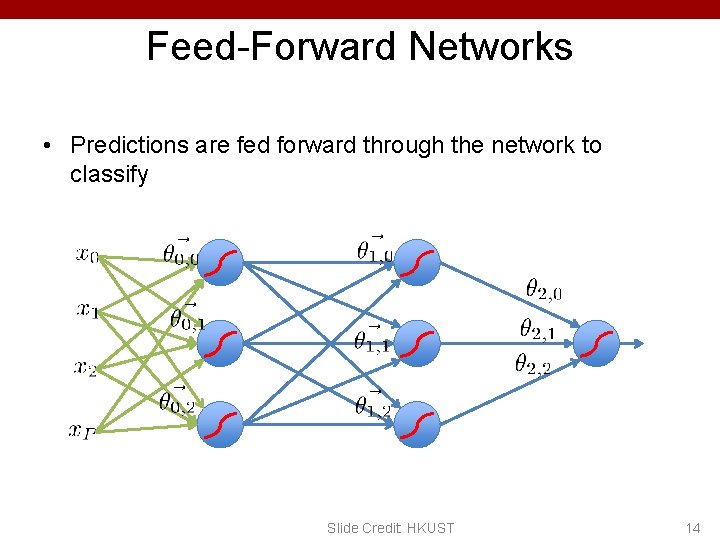

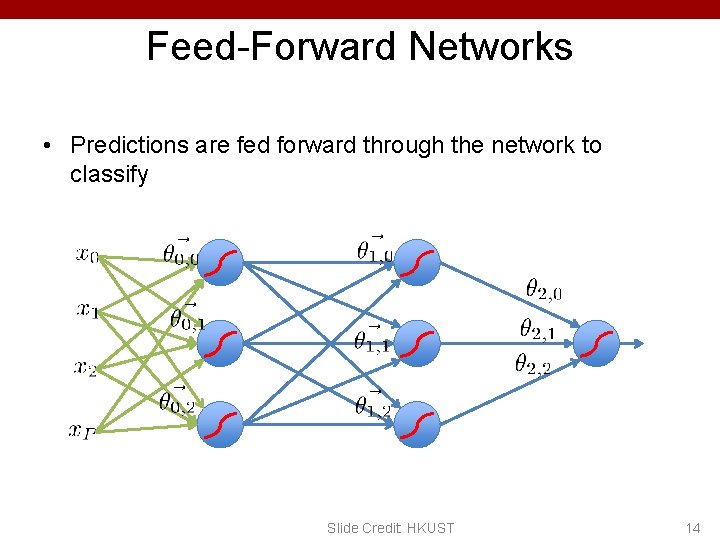

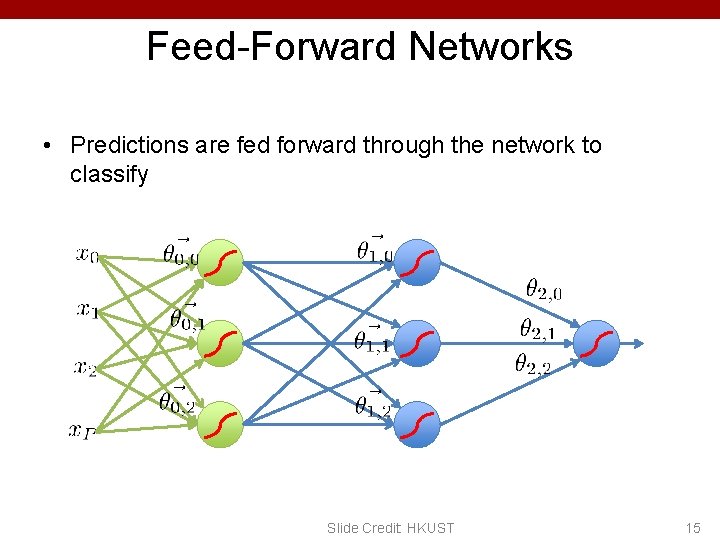

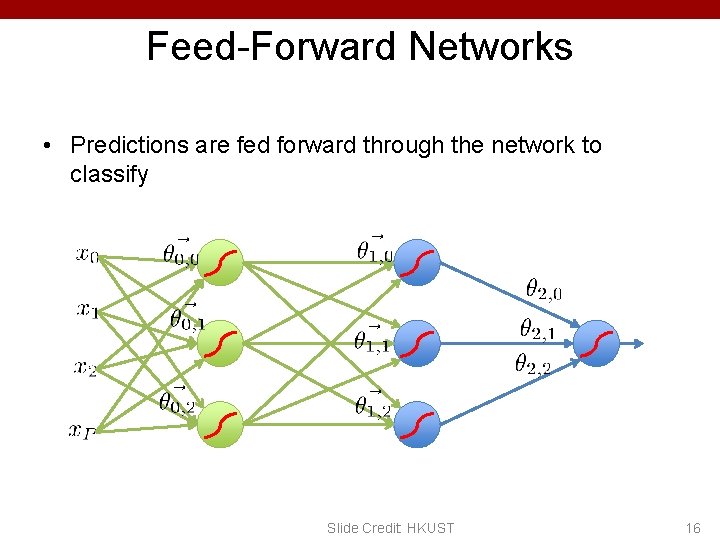

Feed-Forward Networks

- Predictions are fed forward through the network to classify

Slide Credit: HKUST

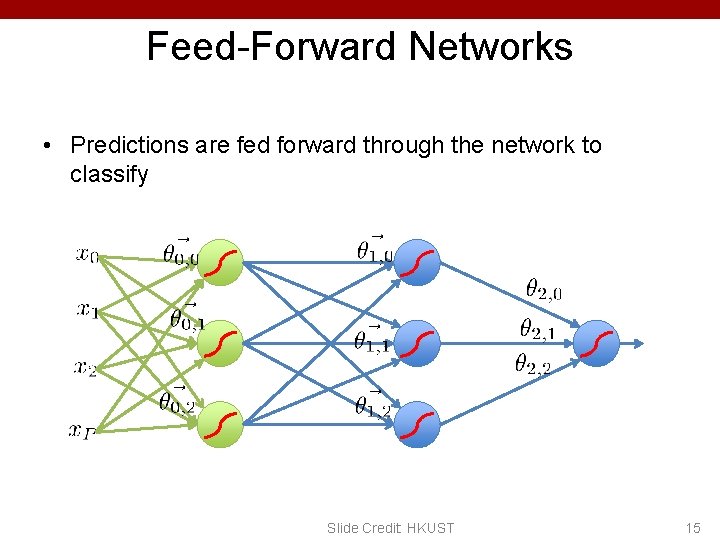

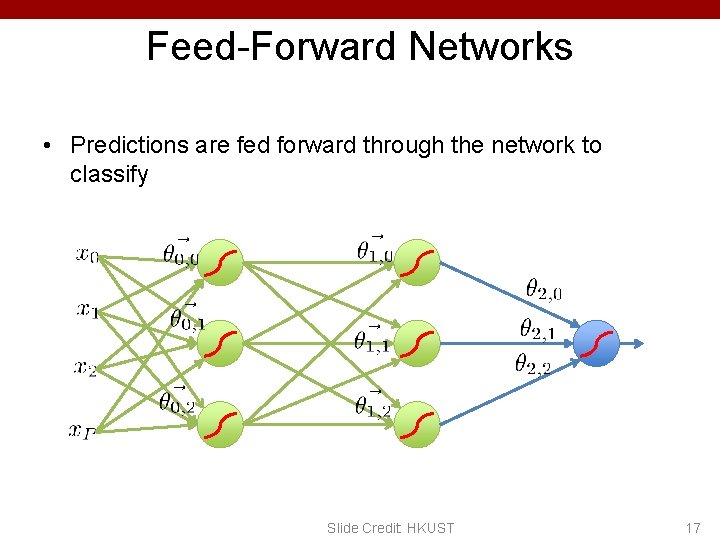

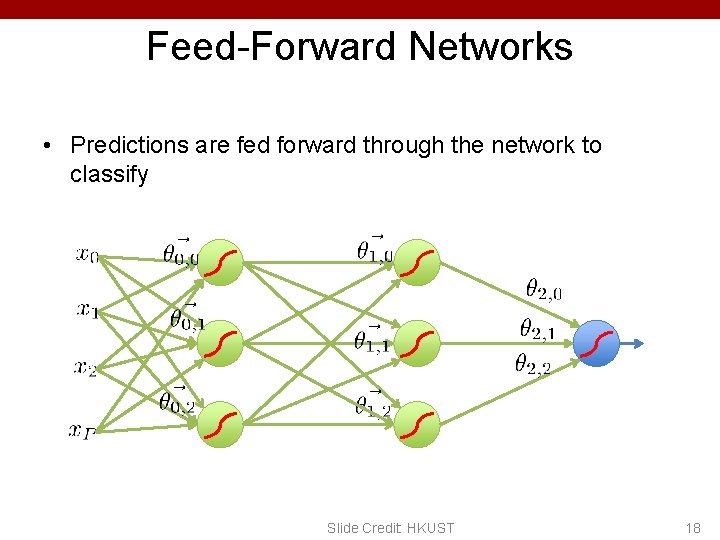

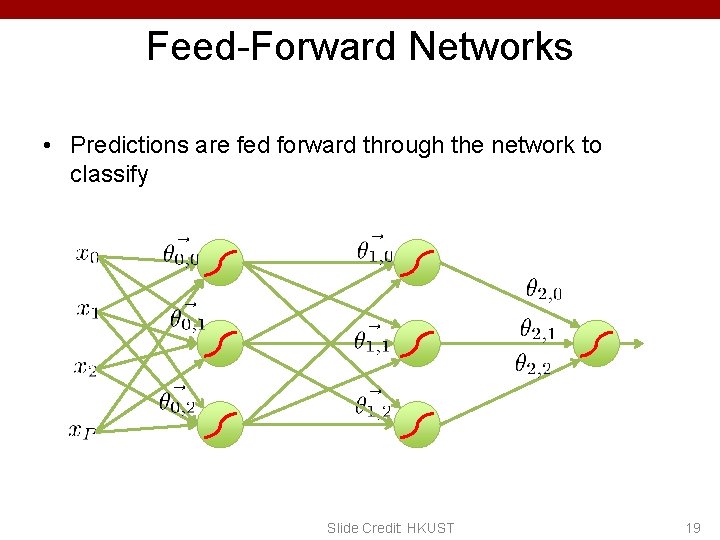

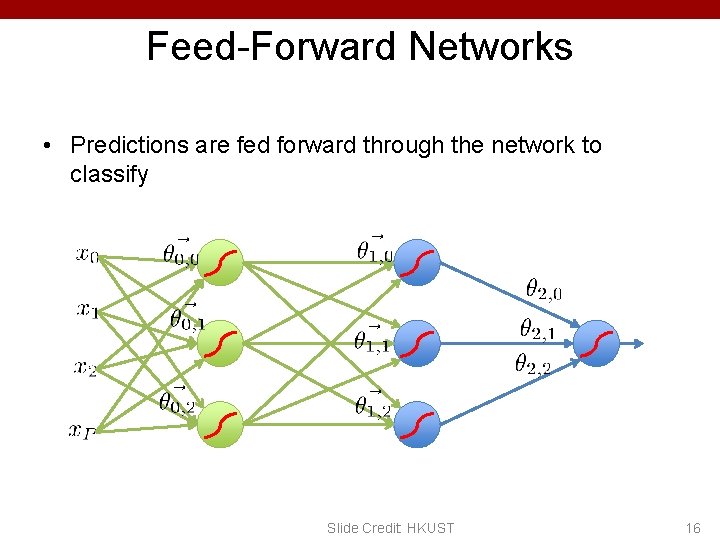

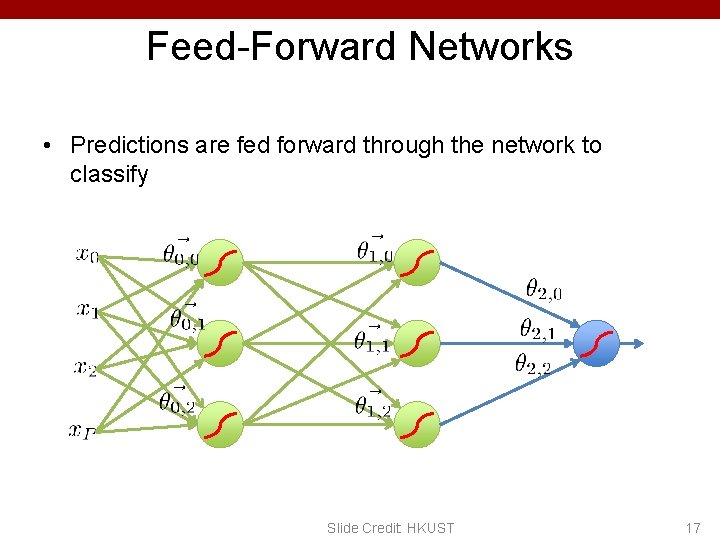

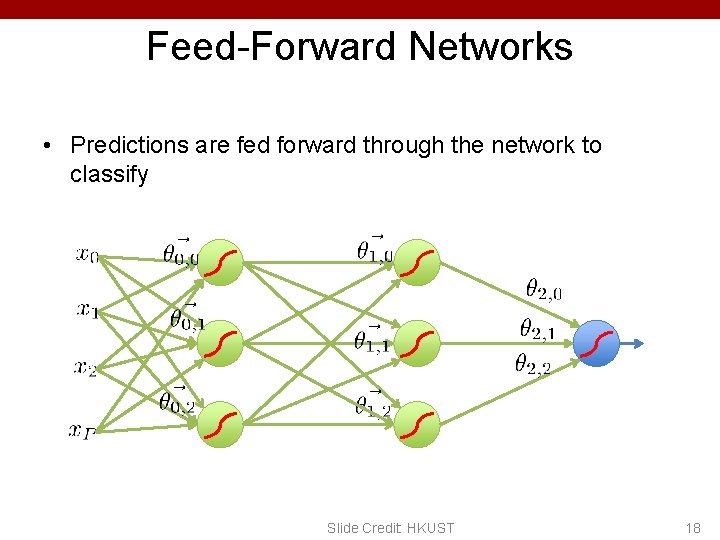

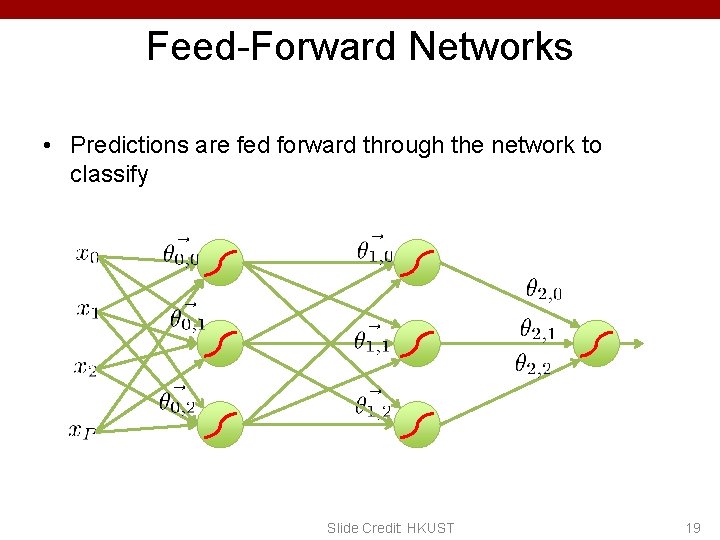

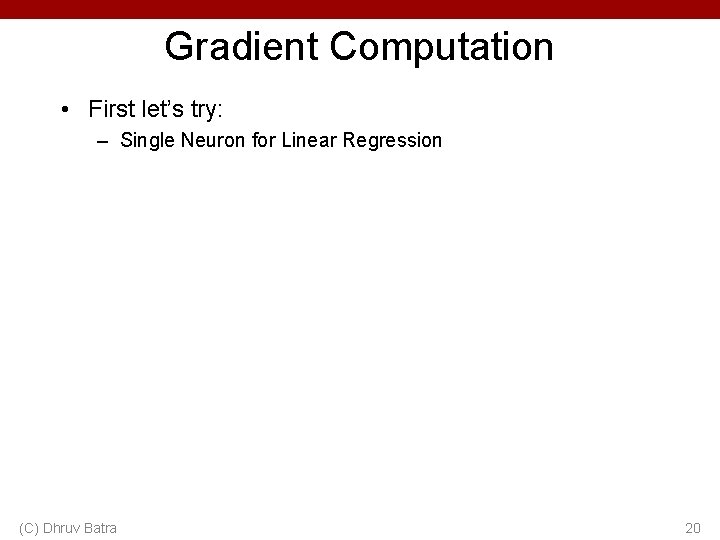

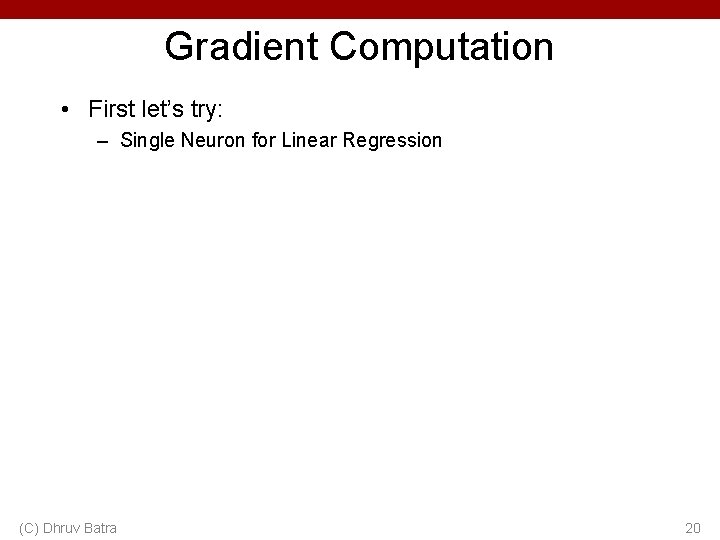

Gradient Computation

- First let’s try:
  - Single Neuron for Linear Regression
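For a single linear neuron with squared loss $L = \frac{1}{2}\sum_n (\hat y_n - y_n)^2$ and $\hat y_n = b + \sum_i w_i x_{ni}$, the gradient is $\partial L / \partial w_i = \sum_n (\hat y_n - y_n)\, x_{ni}$. The sketch below applies it with plain gradient descent. The loss scaling, the toy data drawn from $y = 2x + 1$, and the learning rate are assumptions.

```python
def predict(w, b, x):
    return b + sum(wi * xi for wi, xi in zip(w, x))

def gradient(w, b, data):
    """Gradient of 0.5 * sum of squared errors with respect to w and b."""
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for x, y in data:
        error = predict(w, b, x) - y      # dL/d(prediction) for this example
        for i, xi in enumerate(x):
            grad_w[i] += error * xi       # chain rule: d(prediction)/dw_i = x_i
        grad_b += error
    return grad_w, grad_b

# Toy data generated from y = 2x + 1 (assumed for illustration).
data = [([0.0], 1.0), ([1.0], 3.0), ([2.0], 5.0), ([3.0], 7.0)]

w, b, learning_rate = [0.0], 0.0, 0.05
for _ in range(2000):
    grad_w, grad_b = gradient(w, b, data)
    w = [wi - learning_rate * gi for wi, gi in zip(w, grad_w)]
    b -= learning_rate * grad_b
```

Because this loss is convex in `w` and `b`, gradient descent with a small enough step recovers the generating slope and intercept.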

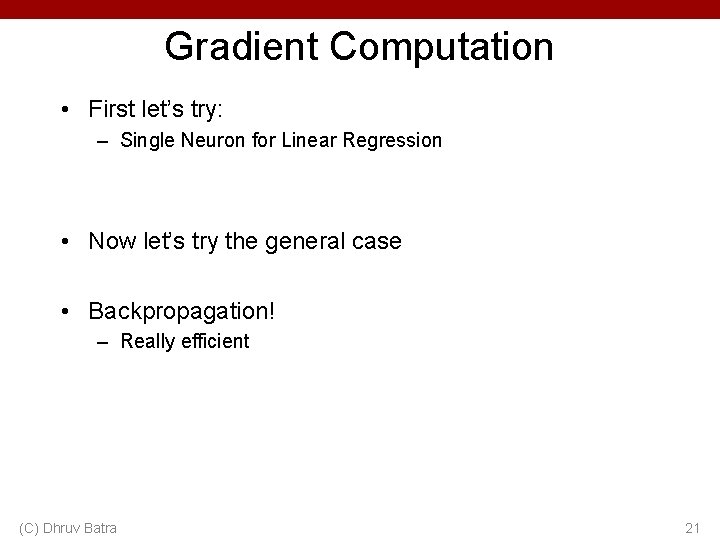

Gradient Computation

- First let’s try:
  - Single Neuron for Linear Regression
- Now let’s try the general case
- Backpropagation!
  - Really efficient
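A minimal sketch of backpropagation for one hidden layer, assuming sigmoid hidden units, a linear output, and squared loss on a single example (the architecture and all numbers are illustrative assumptions). One forward pass plus one backward pass yields every gradient, which is where the efficiency comes from. A finite-difference check on one weight is included as a sanity test.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    W1, b1, w2, b2 = params
    h = [sigmoid(bj + sum(wji * xi for wji, xi in zip(row, x)))
         for row, bj in zip(W1, b1)]
    y_hat = b2 + sum(vj * hj for vj, hj in zip(w2, h))
    return h, y_hat

def loss(params, x, y):
    return 0.5 * (forward(params, x)[1] - y) ** 2

def backprop(params, x, y):
    W1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    delta_out = y_hat - y                              # dL/dy_hat
    grad_w2 = [delta_out * hj for hj in h]
    grad_b2 = delta_out
    # Push the error back through w2 and the sigmoid derivative h * (1 - h).
    delta_hidden = [delta_out * vj * hj * (1.0 - hj) for vj, hj in zip(w2, h)]
    grad_W1 = [[dj * xi for xi in x] for dj in delta_hidden]
    grad_b1 = delta_hidden
    return grad_W1, grad_b1, grad_w2, grad_b2

# Hypothetical parameters and a single training example.
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.2)
x, y = [1.0, 2.0], 1.0
grad_W1, grad_b1, grad_w2, grad_b2 = backprop(params, x, y)

def perturbed(delta):
    """Copy of params with W1[0][1] shifted by delta."""
    W1 = [row[:] for row in params[0]]
    W1[0][1] += delta
    return (W1, params[1], params[2], params[3])

eps = 1e-6
numeric = (loss(perturbed(eps), x, y) - loss(perturbed(-eps), x, y)) / (2 * eps)
```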

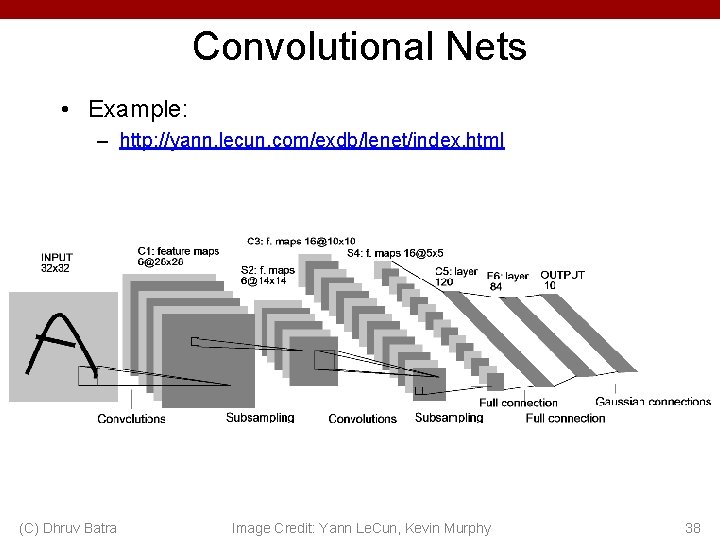

Neural Nets

- Best performers on OCR
  - http://yann.lecun.com/exdb/lenet/index.html
- NetTalk
  - Text to Speech system from 1987
  - http://youtu.be/tXMaFhO6dIY?t=45m15s
- Rick Rashid speaks Mandarin
  - http://youtu.be/Nu-nlQqFCKg?t=7m30s

Historical Perspective

Convergence of backprop

- Perceptron leads to convex optimization
  - Gradient descent reaches global minima
- Multilayer neural nets not convex
  - Gradient descent can get stuck in local minima
  - Hard to set learning rate
  - Selecting number of hidden units and layers = fuzzy process
  - NNs had fallen out of fashion in the 90s and early 2000s
  - Back with a new name and significantly improved performance!
- Deep networks
  - Dropout and trained on much larger corpus

Slide Credit: Carlos Guestrin

Overfitting

- Many many parameters
- Avoiding overfitting?
  - More training data
  - Regularization
  - Early stopping
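Two of these remedies can be sketched in a few lines. The example assumes L2 weight decay as the regularizer and a patience-based rule for early stopping. Both are common choices rather than anything the slide specifies, and the validation losses are made up.

```python
def l2_regularized_step(w, grad, learning_rate, weight_decay):
    """Gradient step on loss + (weight_decay / 2) * ||w||^2."""
    return [wi - learning_rate * (gi + weight_decay * wi)
            for wi, gi in zip(w, grad)]

def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch): stop once the validation loss has
    gone `patience` epochs without improving on its best value."""
    best, best_epoch = float("inf"), -1
    for epoch, value in enumerate(val_losses):
        if value < best:
            best, best_epoch = value, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Made-up validation curve: improves, then rises as the model overfits.
val_losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 1.1]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)

# With a zero data gradient, weight decay alone shrinks the weight toward 0.
shrunk = l2_regularized_step([1.0], [0.0], learning_rate=0.1, weight_decay=0.5)
```

In a real training loop the weights saved at `best_epoch` would be the ones kept.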

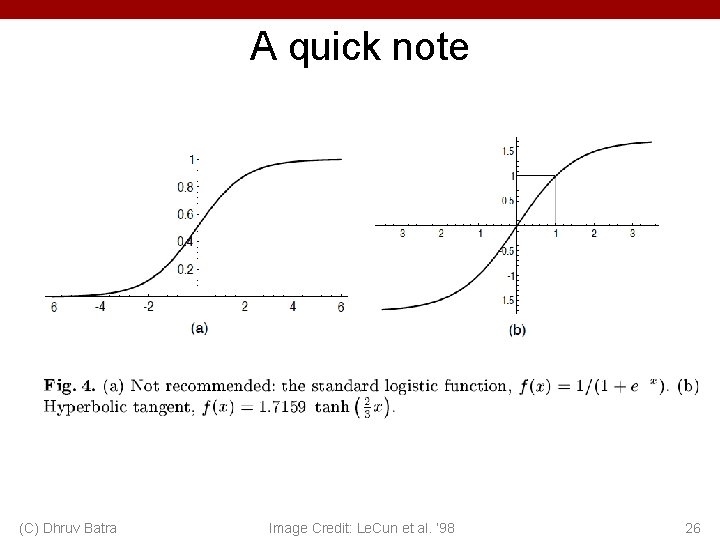

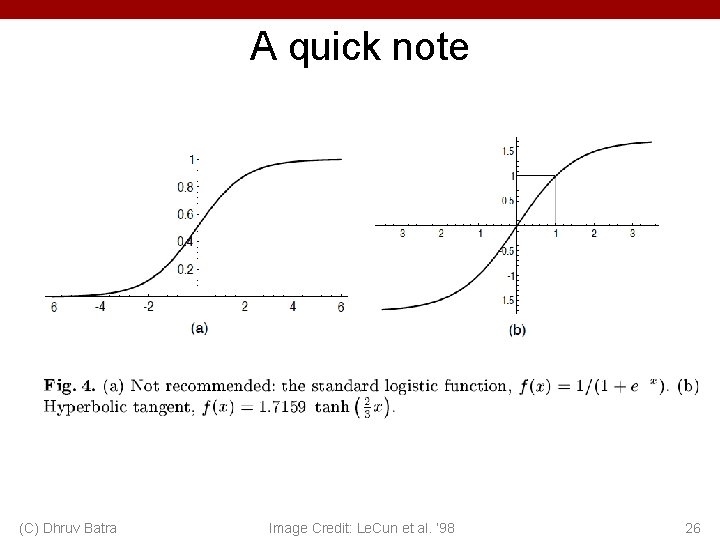

A quick note

Image Credit: LeCun et al. '98

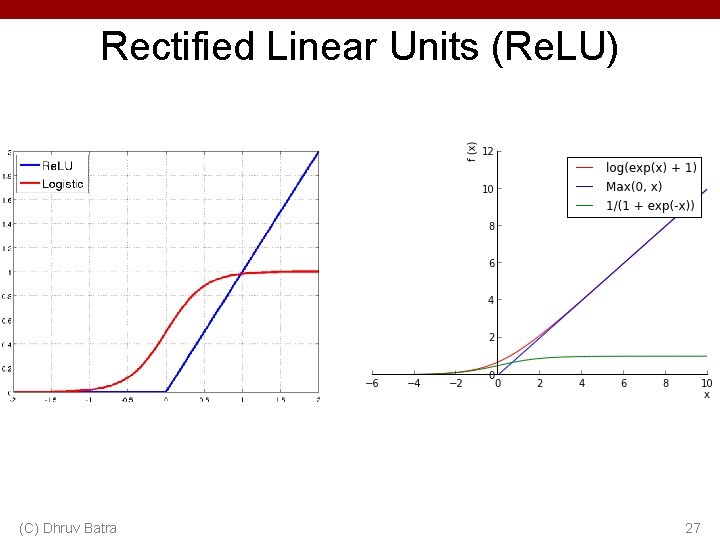

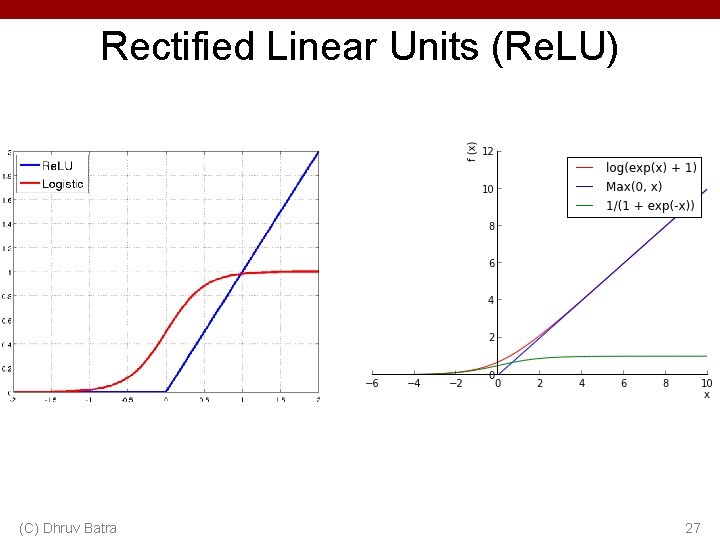

Rectified Linear Units (ReLU)
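The ReLU activation is $\max(0, z)$. A short sketch follows. The derivative at exactly $z = 0$ is undefined, so the value 0 used there is a convention assumed here.

```python
def relu(z):
    """Rectified linear unit: passes positive inputs, zeroes out the rest."""
    return max(0.0, z)

def relu_grad(z):
    """Derivative of ReLU; the value at z == 0 is a convention (0 here)."""
    return 1.0 if z > 0 else 0.0

activations = [relu(z) for z in (-2.0, 0.0, 3.0)]
gradients = [relu_grad(z) for z in (-2.0, 0.0, 3.0)]
```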

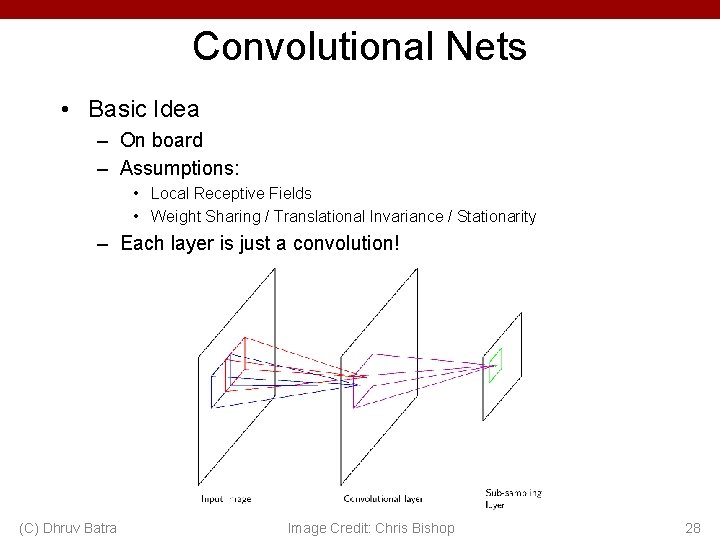

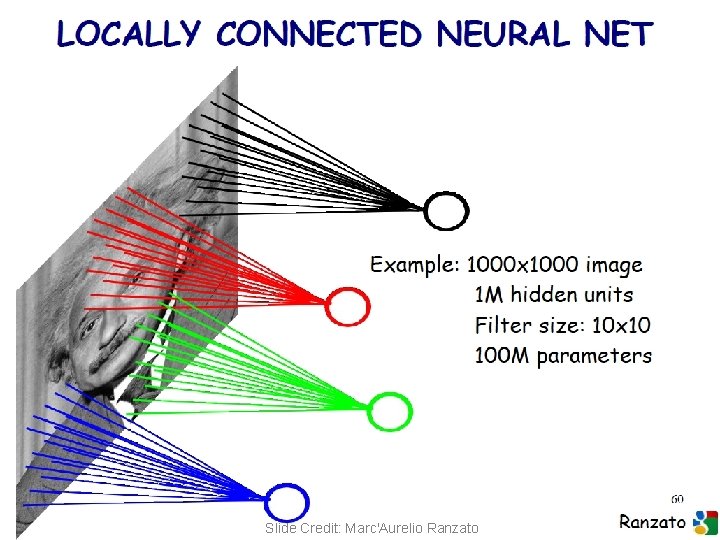

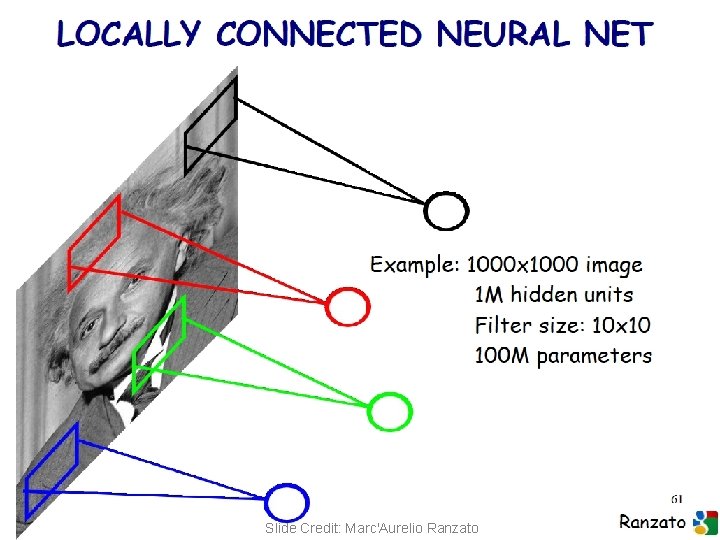

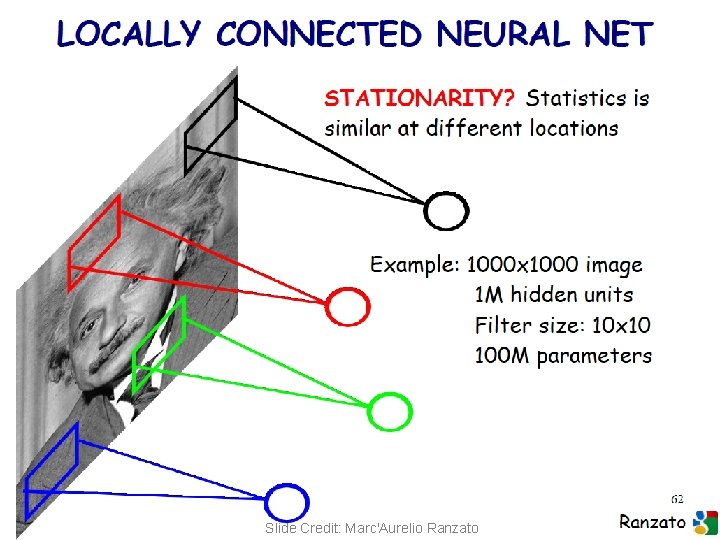

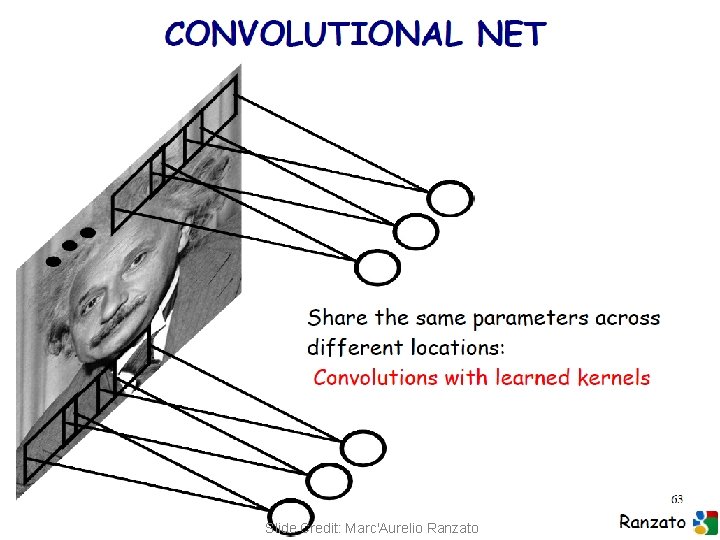

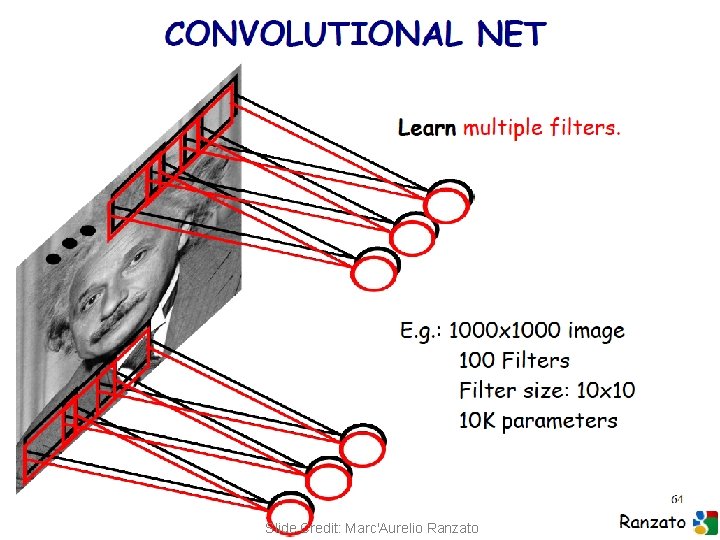

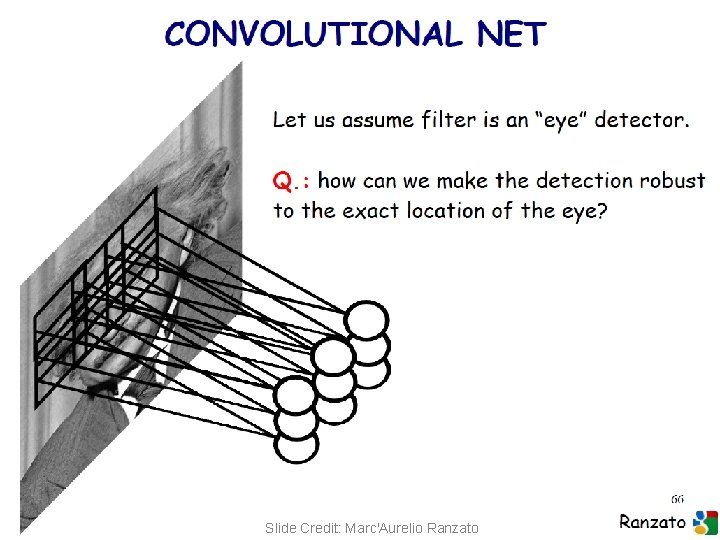

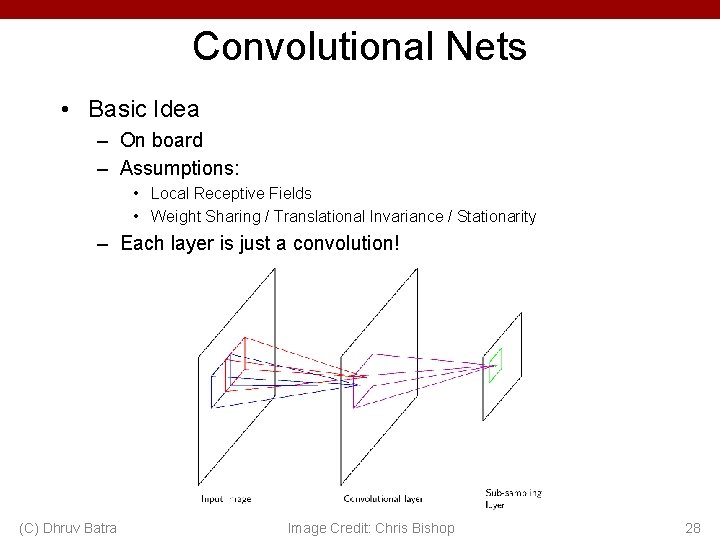

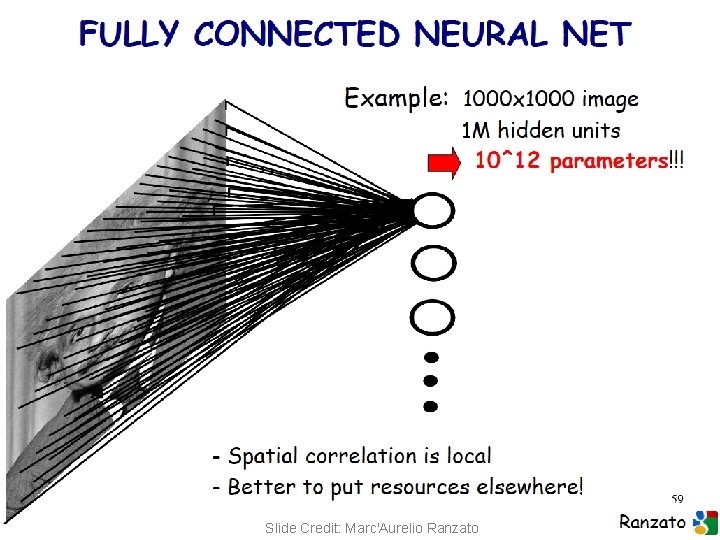

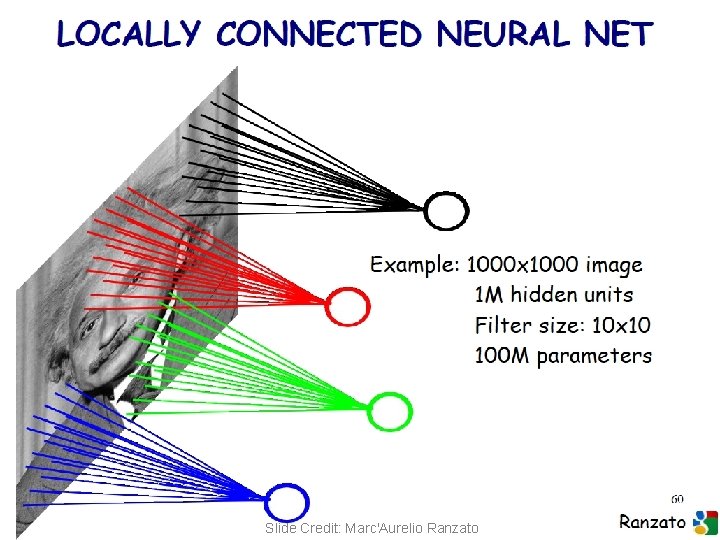

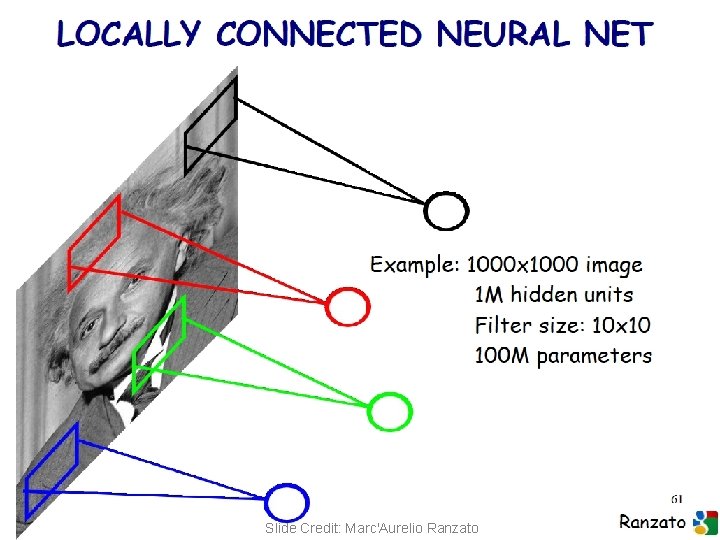

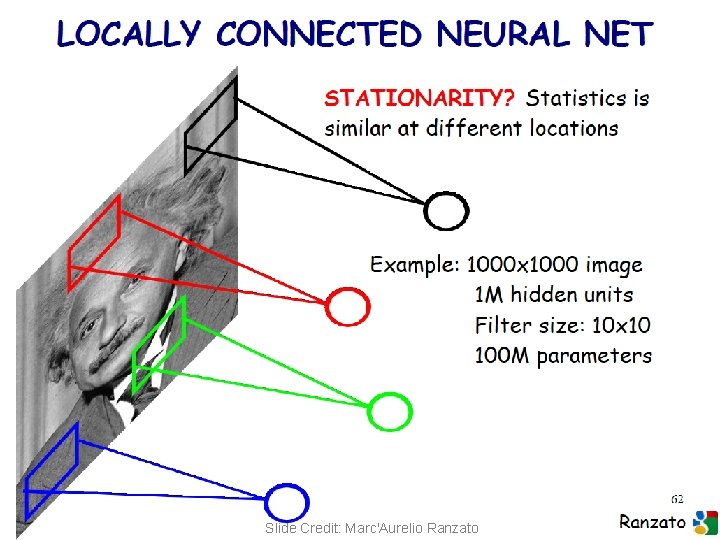

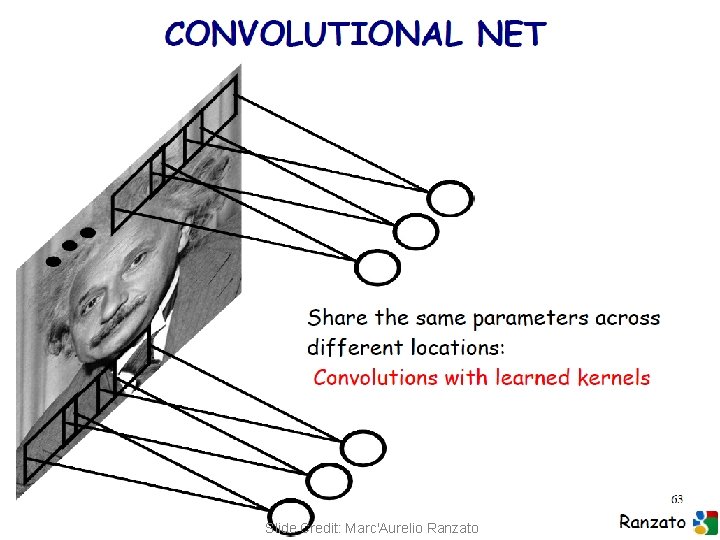

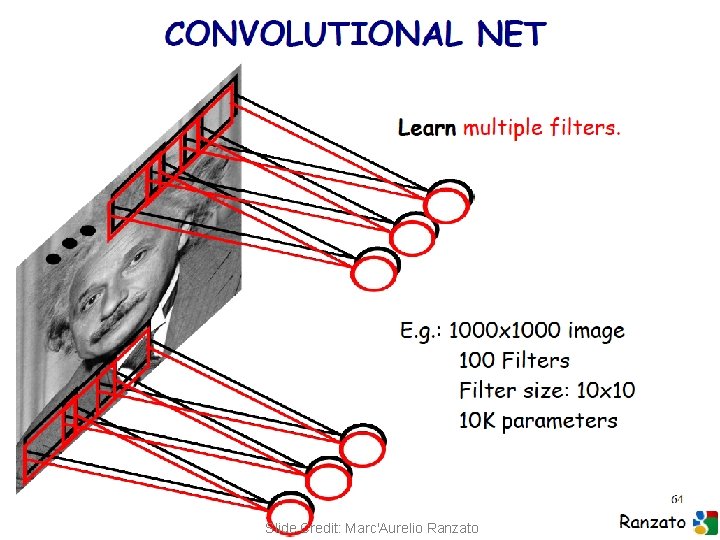

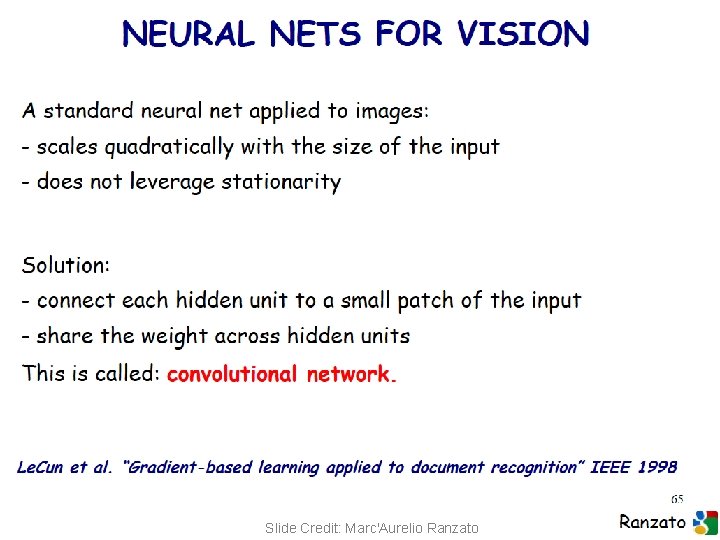

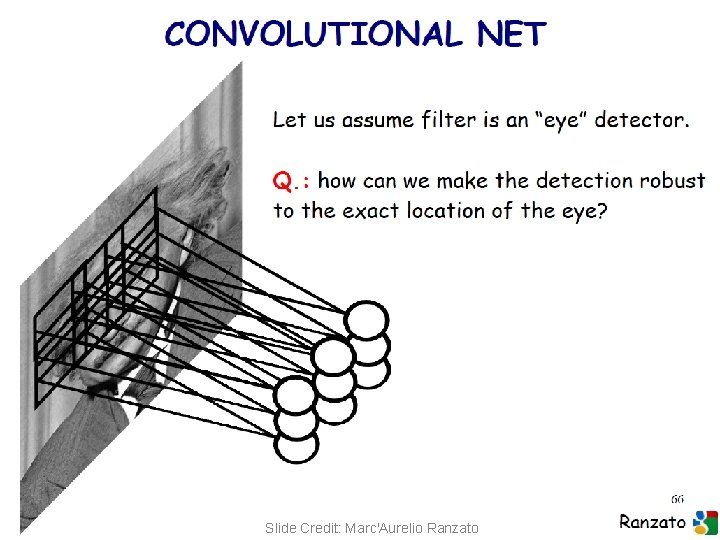

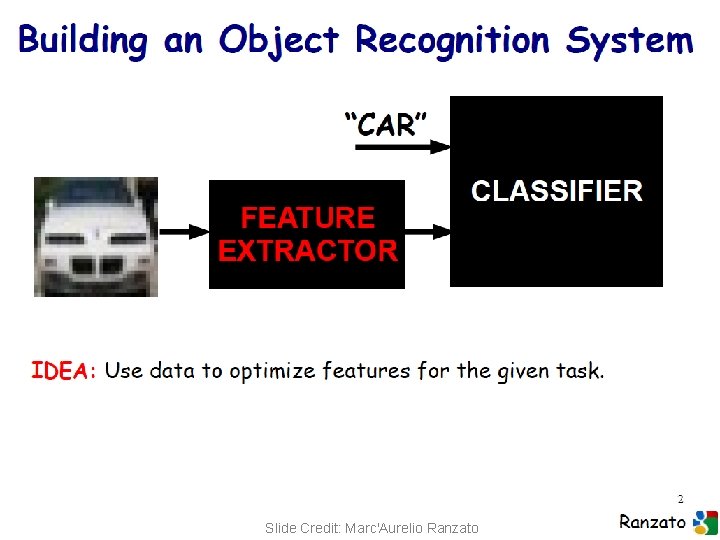

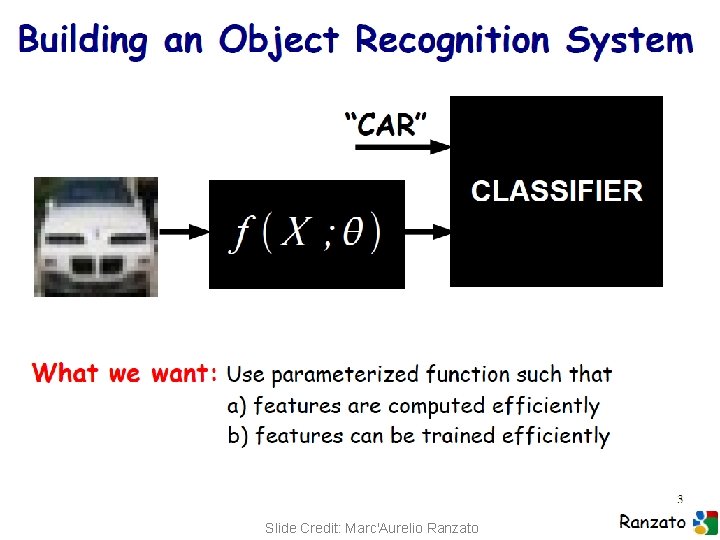

Convolutional Nets

- Basic Idea
  - On board
  - Assumptions:
    - Local Receptive Fields
    - Weight Sharing / Translational Invariance / Stationarity
  - Each layer is just a convolution!

Image Credit: Chris Bishop
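The two assumptions can be seen in a small sketch: each output value depends only on a local patch (local receptive field), and the same kernel is reused at every position (weight sharing). The code computes a "valid" cross-correlation, which is what convolutional layers typically implement; a strict convolution would flip the kernel first. The image and kernel values are assumptions for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image with no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Toy image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Horizontal difference filter: responds where intensity rises left to right.
edge_kernel = [[-1, 1]]
feature_map = conv2d_valid(image, edge_kernel)
```

The filter fires at the edge in every row, wherever the edge sits, using only two weights.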

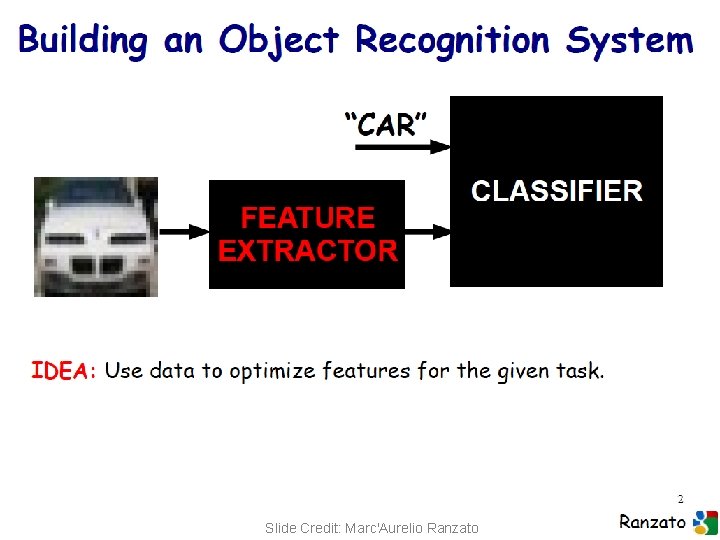

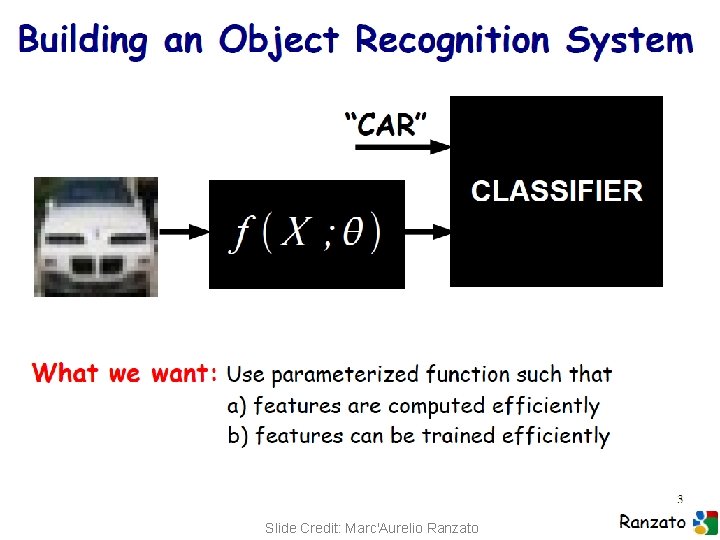

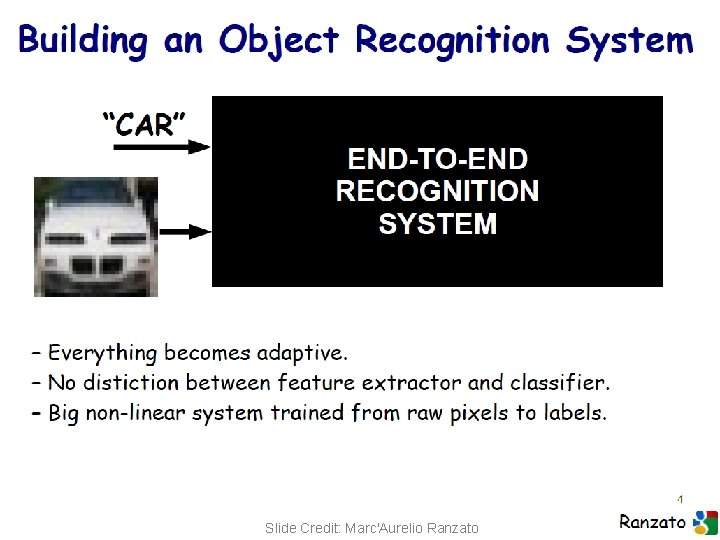

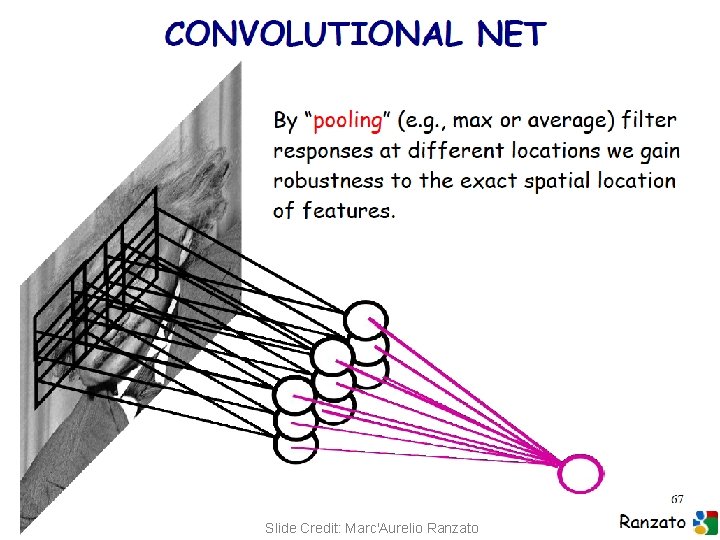

Slide Credit: Marc'Aurelio Ranzato

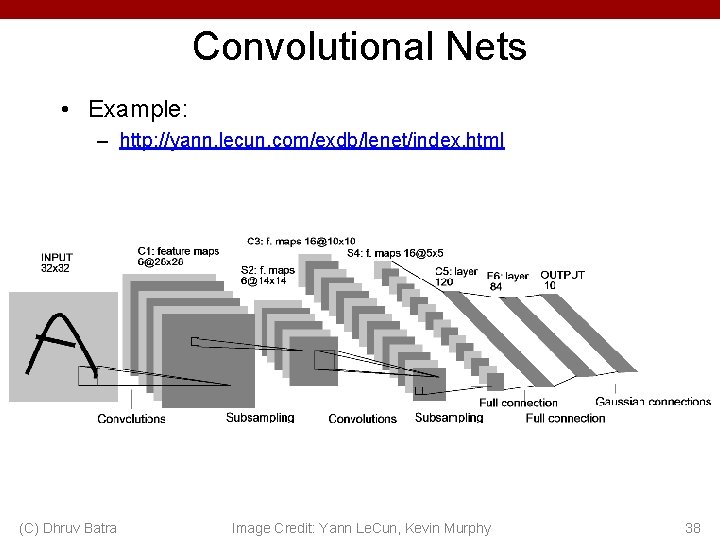

Convolutional Nets

- Example:
  - http://yann.lecun.com/exdb/lenet/index.html

Image Credit: Yann LeCun, Kevin Murphy

![Visualizing Learned Filters. Figure Credit: Zeiler & Fergus, ECCV 14](https://slidetodoc.com/presentation_image_h2/94dad62edce0e7bb3c144b9a95ce2f80/image-42.jpg)

Visualizing Learned Filters

Figure Credit: [Zeiler & Fergus ECCV 14]

![Visualizing Learned Filters. Figure Credit: Zeiler & Fergus, ECCV 14](https://slidetodoc.com/presentation_image_h2/94dad62edce0e7bb3c144b9a95ce2f80/image-43.jpg)

![Visualizing Learned Filters. Figure Credit: Zeiler & Fergus, ECCV 14](https://slidetodoc.com/presentation_image_h2/94dad62edce0e7bb3c144b9a95ce2f80/image-44.jpg)

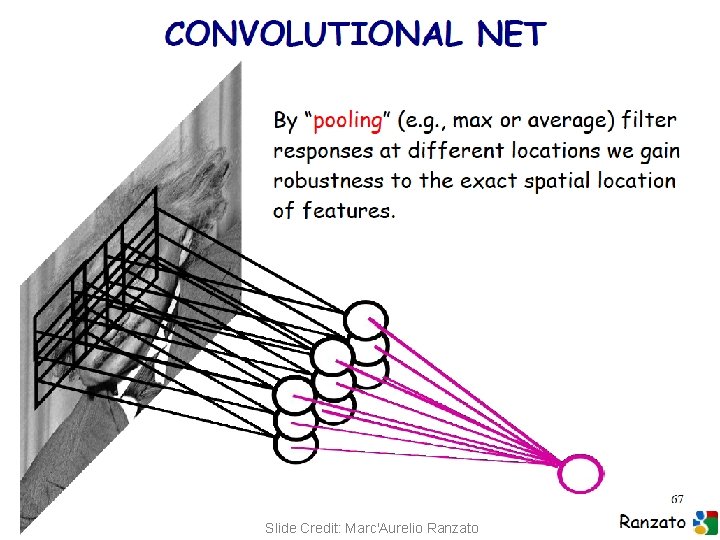

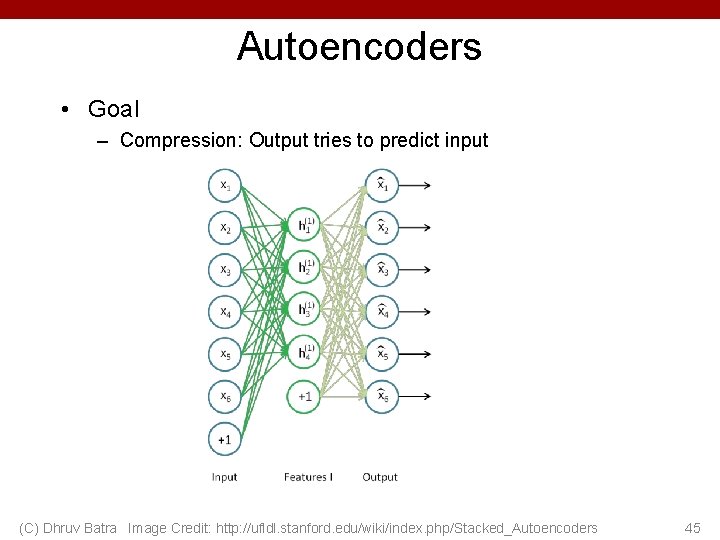

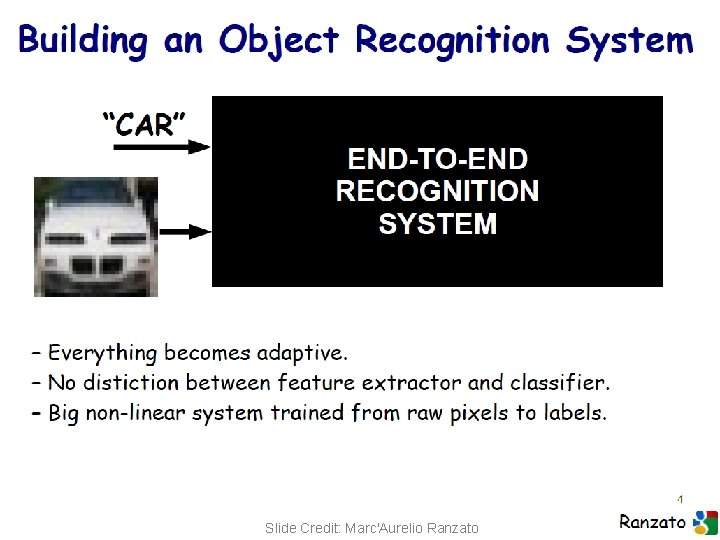

Autoencoders

- Goal
  - Compression: Output tries to predict input

Image Credit: http://ufldl.stanford.edu/wiki/index.php/Stacked_Autoencoders

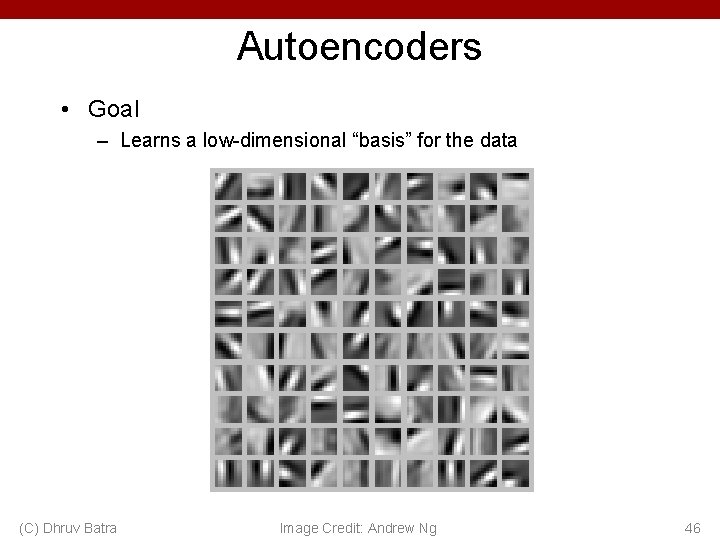

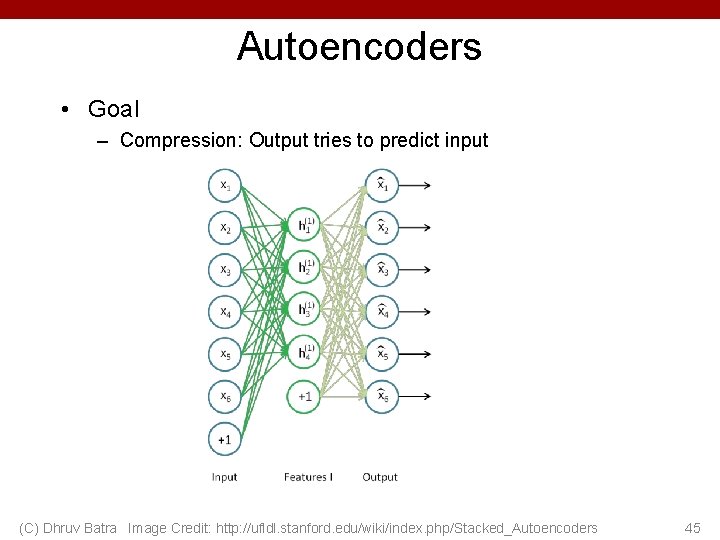

Autoencoders

- Goal
  - Learns a low-dimensional “basis” for the data

Image Credit: Andrew Ng
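A minimal sketch of the idea, assuming a linear autoencoder with a one-dimensional code, squared reconstruction loss, and plain gradient descent (none of these specifics come from the slides). The toy data lie on a line in 2-D, so a single code value is enough to reconstruct each point. The names `encode`, `decode`, and `train` are hypothetical.

```python
def encode(e, x):
    """Compress x to a single code value h = e . x."""
    return sum(ei * xi for ei, xi in zip(e, x))

def decode(d, h):
    """Reconstruct the input from the code: x_hat = d * h."""
    return [di * h for di in d]

def reconstruction_loss(e, d, data):
    total = 0.0
    for x in data:
        x_hat = decode(d, encode(e, x))
        total += 0.5 * sum((xh - xi) ** 2 for xh, xi in zip(x_hat, x))
    return total

def train(e, d, data, learning_rate=0.01, steps=5000):
    for _ in range(steps):
        grad_e = [0.0] * len(e)
        grad_d = [0.0] * len(d)
        for x in data:
            h = encode(e, x)
            residual = [xh - xi for xh, xi in zip(decode(d, h), x)]
            grad_h = sum(di * ri for di, ri in zip(d, residual))
            for i in range(len(d)):
                grad_d[i] += residual[i] * h      # dL/dd_i
            for i in range(len(e)):
                grad_e[i] += grad_h * x[i]        # dL/de_i via the code h
        e = [ei - learning_rate * gi for ei, gi in zip(e, grad_e)]
        d = [di - learning_rate * gi for di, gi in zip(d, grad_d)]
    return e, d

# Toy 2-D data on the line x2 = 2 * x1 (assumed for illustration).
data = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.5, 1.0)]
e0, d0 = [0.5, 0.1], [0.1, 0.5]
initial_loss = reconstruction_loss(e0, d0, data)
e, d = train(e0, d0, data)
final_loss = reconstruction_loss(e, d, data)
```

After training, the decoder vector `d` points along the direction of the data, which is the one-dimensional "basis" in this toy case.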

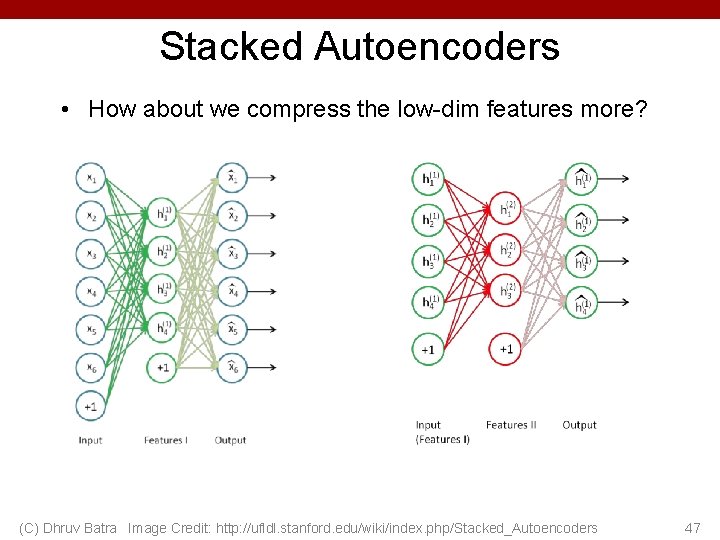

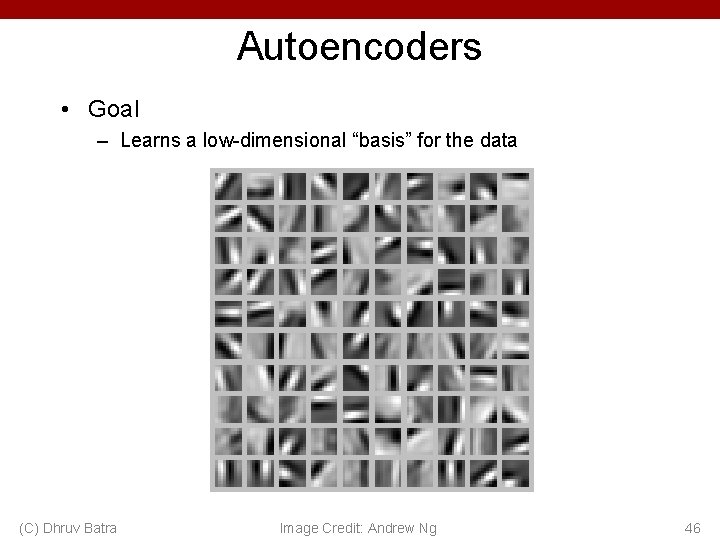

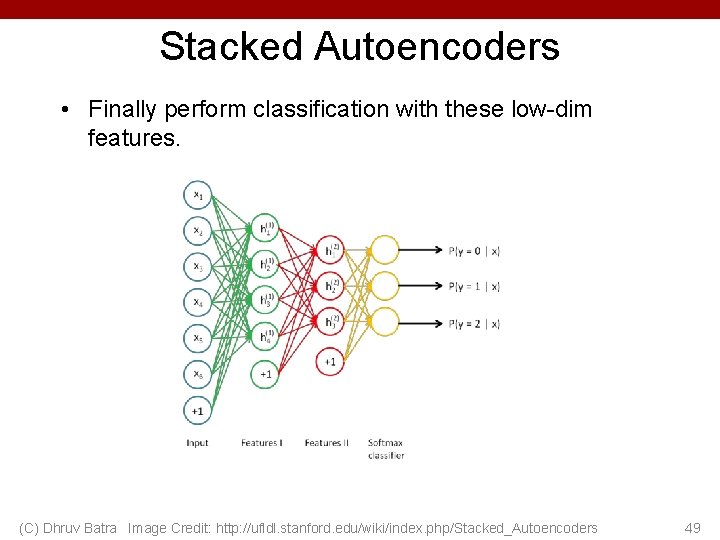

Stacked Autoencoders

- How about we compress the low-dim features more?

Image Credit: http://ufldl.stanford.edu/wiki/index.php/Stacked_Autoencoders

![Sparse DBNs, Lee et al. ICML '09. Figure courtesy: Quoc Le](https://slidetodoc.com/presentation_image_h2/94dad62edce0e7bb3c144b9a95ce2f80/image-48.jpg)

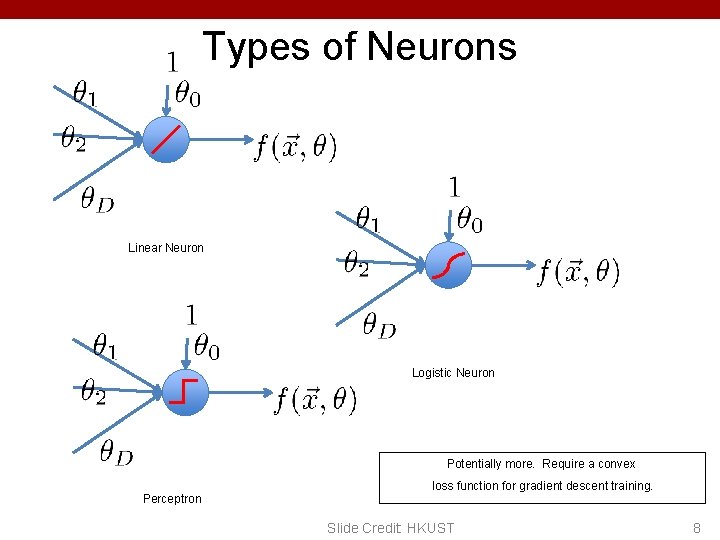

Sparse DBNs [Lee et al. ICML '09]

Figure courtesy: Quoc Le

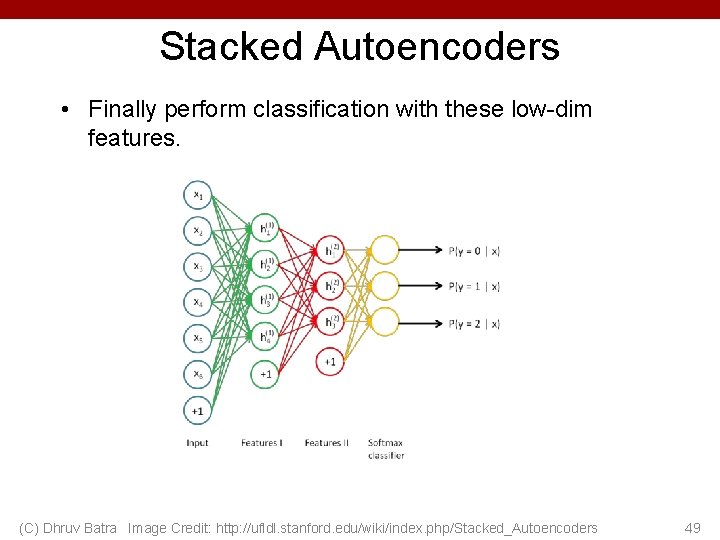

Stacked Autoencoders

- Finally perform classification with these low-dim features.

Image Credit: http://ufldl.stanford.edu/wiki/index.php/Stacked_Autoencoders

What you need to know about neural networks

- Perceptron:
  - Representation
  - Derivation
- Multilayer neural nets
  - Representation
  - Derivation of backprop
  - Learning rule
  - Expressive power