ECE 526 Network Processing Systems Design: Computer Architecture


ECE 526 – Network Processing Systems Design
Computer Architecture: Traditional Network Processing System Implementation
Chapter 4: D. E. Comer

Goals
• Examine the architecture of a conventional computer system
• Discuss the operation of a network interface
Ning Weng ECE 526 2

Outline
• Computer system architecture
• Bus interconnect
  ─ Three types of bus lines
  ─ Bandwidth, size of address space, and basic operation
• Network Interface Card (NIC)
  ─ Functionality
• The procedure to receive/send a packet
  ─ Problems of the traditional NIC
  ─ Four optimization techniques
• Lab 1 announcement

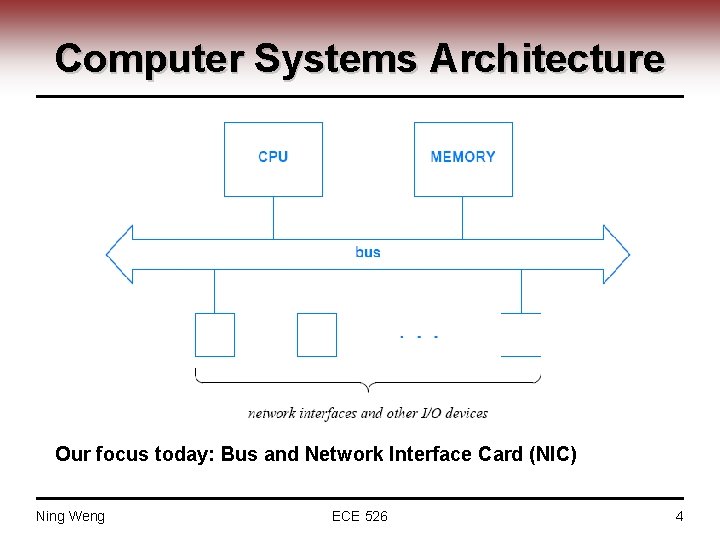

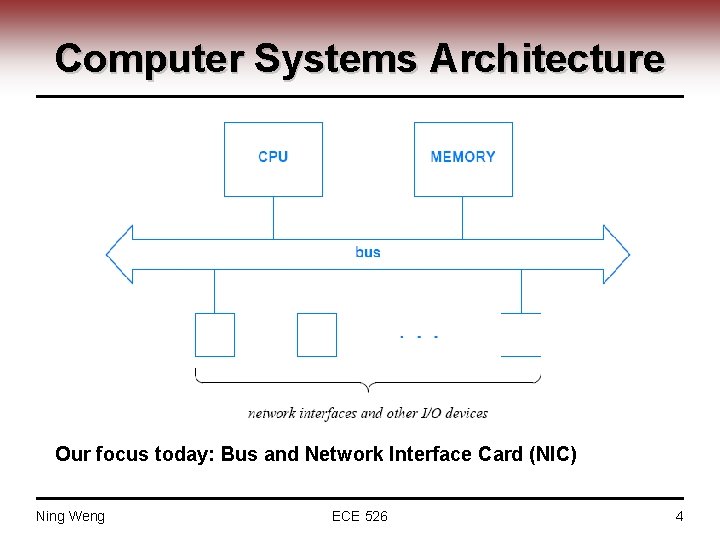

Computer Systems Architecture
Our focus today: the bus and the Network Interface Card (NIC)

Computer Systems Functionality
• Computation
  ─ Arithmetic and logic operations, protocol processing, etc.
  ─ CPU
• Storage
  ─ Holds short-term or long-term data
  ─ Registers, cache, memory, disk, tape, etc.
• Communication
  ─ Medium or device for communication and/or networking
  ─ Bus, Network Interface Card

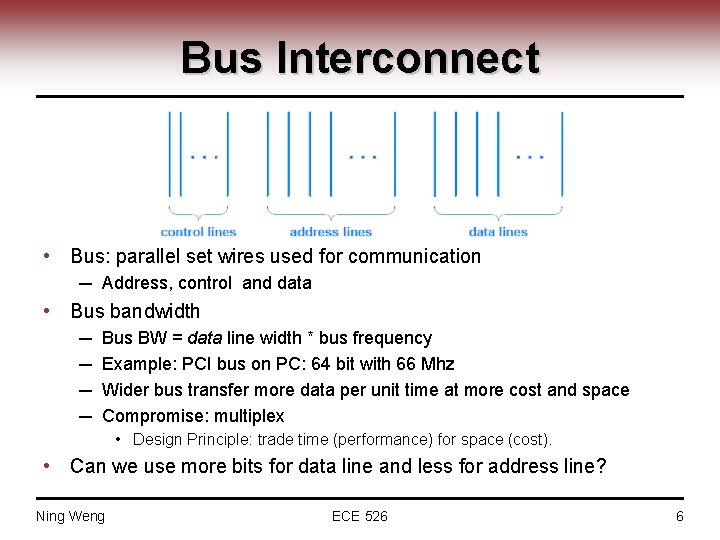

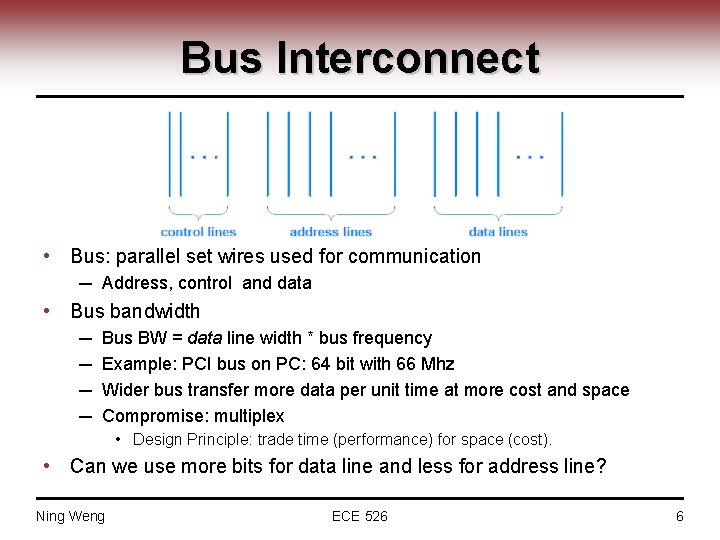

Bus Interconnect
• Bus: a parallel set of wires used for communication
  ─ Address, control, and data lines
• Bus bandwidth
  ─ Bus BW = data line width × bus frequency
  ─ Example: PCI bus on a PC: 64 bits at 66 MHz
  ─ A wider bus transfers more data per unit time, at more cost and space
  ─ Compromise: multiplexing (the same lines carry the address and then the data)
• Design principle: trade time (performance) for space (cost)
• Can we use more bits for data lines and fewer for address lines?
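To make the bandwidth formula concrete, here is a minimal Python sketch. The function names are hypothetical, and the multiplexed case rests on a simplifying assumption made only for illustration: every transaction spends one extra clock cycle sending the address over the shared lines before its data words.

```python
def bus_bandwidth_bytes_per_sec(data_width_bits: int, clock_hz: float,
                                transfers_per_cycle: int = 1) -> float:
    """Peak bandwidth: data line width x bus frequency (x transfers per cycle).

    transfers_per_cycle is 1 for a single-edge bus, 2 for a bus that moves
    data on both clock edges.
    """
    bits_per_sec = data_width_bits * clock_hz * transfers_per_cycle
    return bits_per_sec / 8  # bits/s -> bytes/s


def multiplexed_throughput(data_width_bits: int, clock_hz: float,
                           words_per_transaction: int) -> float:
    """Effective bandwidth when address and data share the same lines.

    Assumption (illustration only): one clock cycle carries the address,
    then one cycle per data word.
    """
    cycles = 1 + words_per_transaction
    peak = bus_bandwidth_bytes_per_sec(data_width_bits, clock_hz)
    return peak * words_per_transaction / cycles


pci = bus_bandwidth_bytes_per_sec(64, 66e6)
print(f"peak: {pci / 1e6:.0f} MB/s")
print(f"1-word transactions: {multiplexed_throughput(64, 66e6, 1) / 1e6:.0f} MB/s")
print(f"8-word bursts: {multiplexed_throughput(64, 66e6, 8) / 1e6:.0f} MB/s")
```

For the 64-bit, 66 MHz example this prints 528, 264, and 469 MB/s: multiplexing saves lines (space) but costs cycles (time), and longer bursts amortize the address cycle.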

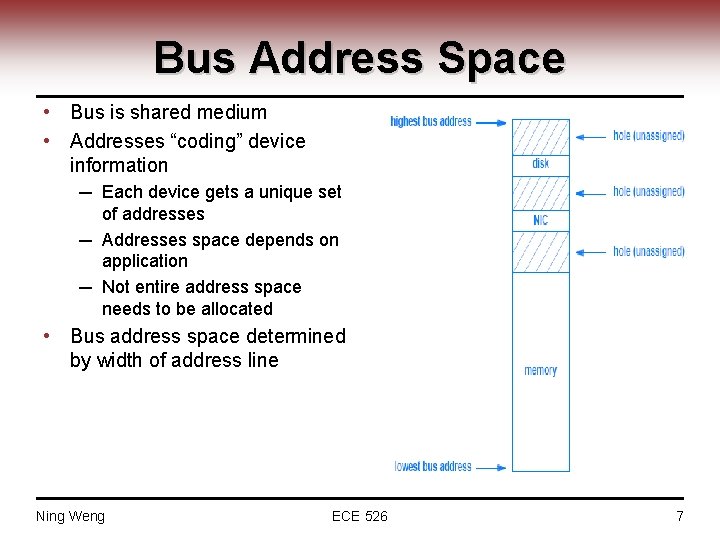

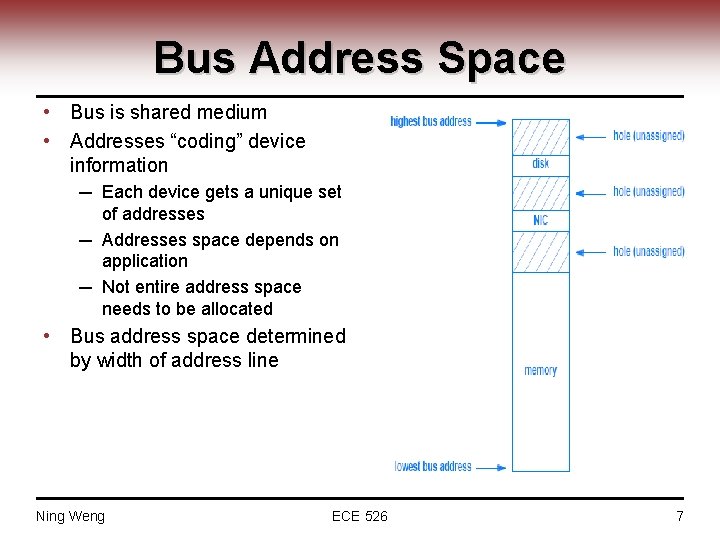

Bus Address Space
• The bus is a shared medium
• Addresses "encode" device identity
  ─ Each device is assigned a unique set of addresses
  ─ The address space needed depends on the application
  ─ Not all of the address space needs to be allocated
• The bus address space is determined by the width of the address lines
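Since an n-bit address line can name 2^n addresses, a small sketch of address decoding follows. The device map is hypothetical; the point is only that each device owns a unique range and that unallocated addresses belong to no device.

```python
from typing import Optional


def address_space_size(address_width_bits: int) -> int:
    """An n-bit address bus can name 2**n distinct addresses."""
    return 1 << address_width_bits


# Hypothetical device map: each device owns a unique, non-overlapping range
# [base, base + size). Addresses covered by no range are simply unallocated.
DEVICE_MAP = [
    ("memory", 0x0000_0000, 0x4000_0000),   # 1 GiB of main memory
    ("nic",    0x8000_0000, 0x0000_1000),   # 4 KiB of NIC registers
]


def owner_of(address: int) -> Optional[str]:
    """Return the device that would respond to this address, if any."""
    for name, base, size in DEVICE_MAP:
        if base <= address < base + size:
            return name
    return None


print(address_space_size(32))   # 4294967296
print(owner_of(0x8000_0010))    # nic
print(owner_of(0x5000_0000))    # None
```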

Bus Operation: Fetch and Store
• Fundamental paradigm
  ─ Used throughout computer systems
  ─ "Encoded" on the control lines
• Fetch: load data from a device over the bus
  ─ Place the address of a device on the address lines
  ─ Issue fetch on the control lines
  ─ Wait for the device that owns the address to respond
  ─ If successful, extract the value from the data lines
• Store: move data over the bus to a device
  ─ Place the address of a device on the address lines
  ─ Place the value on the data lines
  ─ Issue store on the control lines
  ─ Wait for the device that owns the address to respond
  ─ If unsuccessful, report an error
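The fetch and store steps can be modeled in a few lines of Python. This is a toy sketch under stated assumptions: a dictionary stands in for each device's locations, unwritten locations read as 0, and "no device responds" is reported as a hypothetical BusError rather than a hardware timeout.

```python
class BusError(Exception):
    """No device owns the address placed on the address lines."""


class Bus:
    """Toy model of the fetch/store paradigm (a sketch, not real hardware)."""

    def __init__(self):
        self.devices = []                  # (base, size, storage) per device

    def attach(self, base: int, size: int) -> dict:
        storage = {}                       # the device's locations
        self.devices.append((base, size, storage))
        return storage

    def _respond(self, address: int):
        # Every device watches the address lines; only the owner responds.
        for base, size, storage in self.devices:
            if base <= address < base + size:
                return storage
        return None                        # nobody responds

    def fetch(self, address: int) -> int:
        # 1-2. Place address on address lines, issue "fetch" on control lines.
        owner = self._respond(address)     # 3. Wait for the owner to respond.
        if owner is None:
            raise BusError(f"fetch: no device at {address:#x}")
        return owner.get(address, 0)       # 4. Extract value (unwritten reads 0).

    def store(self, address: int, value: int) -> None:
        # 1-3. Address on address lines, value on data lines, "store" issued.
        owner = self._respond(address)     # 4. Wait for the owner to respond.
        if owner is None:
            raise BusError(f"store: no device at {address:#x}")  # 5. Report error.
        owner[address] = value


bus = Bus()
bus.attach(base=0x1000, size=0x100)
bus.store(0x1004, 42)
print(bus.fetch(0x1004))   # 42
```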

Bus: Summary
• Shared, parallel communication medium
  ─ Data lines: determine the bus bandwidth
  ─ Address lines: determine the address space
  ─ Control lines: encode fetch and store operations
• Real buses are more complicated
  ─ A bus arbiter implements access rules, e.g., priorities
  ─ Some buses allow split transactions
  ─ Some buses transfer data on each edge of the clock cycle
  ─ Etc.
• Other shared communication media
  ─ Crossbar
  ─ Shared memory
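An arbiter's access rules can be as simple as a fixed priority order. The sketch below shows two common policies as plain functions; the names are hypothetical, and real arbiters are implemented in hardware, not software.

```python
from typing import Optional, Sequence


def fixed_priority_grant(requests: Sequence[bool]) -> Optional[int]:
    """Grant the bus to the highest-priority requester.

    Assumption: device 0 has the highest priority, then device 1, and so on.
    Returns None when no device is requesting.
    """
    for device, requesting in enumerate(requests):
        if requesting:
            return device
    return None


def round_robin_grant(requests: Sequence[bool], last_granted: int) -> Optional[int]:
    """Grant to the next requester after the one served last time."""
    n = len(requests)
    for offset in range(1, n + 1):
        candidate = (last_granted + offset) % n
        if requests[candidate]:
            return candidate
    return None


print(fixed_priority_grant([False, True, True]))   # 1
print(round_robin_grant([True, False, True], 0))   # 2
```

Fixed priority can starve low-priority devices when a high-priority device requests continuously; round robin rotates the starting point so every requester is eventually served.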

Network Interface Card
• I/O device
  ─ Transfers packets between the network and memory
  ─ Controlled by the CPU
• Sending a packet to the network
  ─ CPU assembles the packet in memory
  ─ CPU transfers the packet to the NIC
  ─ NIC transmits the packet onto the network
• Receiving a packet from the network
  ─ CPU informs the NIC of the storage location for the incoming packet
  ─ NIC waits for a packet from the network
  ─ NIC places the packet into the specified location
  ─ NIC informs the CPU
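The traditional send and receive steps can be sketched as a toy NIC class. Everything here is hypothetical: a callback stands in for the interrupt, a Python list stands in for the network link, and bytearray objects stand in for buffers in main memory.

```python
from collections import deque
from typing import Callable


class ToyNic:
    """Toy model of the traditional, CPU-driven NIC (hypothetical names)."""

    def __init__(self, interrupt_cpu: Callable[[bytearray, int], None]):
        self.interrupt_cpu = interrupt_cpu  # stands in for raising an interrupt
        self.posted = deque()               # buffer locations given by the CPU
        self.wire = []                      # frames sent onto the network

    # Receive path
    def post_receive_buffer(self, buffer: bytearray) -> None:
        # CPU tells the NIC where to store the next incoming packet.
        self.posted.append(buffer)

    def frame_arrives(self, frame: bytes) -> bool:
        # A frame shows up from the network while the NIC is waiting.
        if not self.posted or len(frame) > len(self.posted[0]):
            return False                    # no suitable location: frame is lost
        buffer = self.posted.popleft()
        buffer[:len(frame)] = frame         # NIC places packet at that location
        self.interrupt_cpu(buffer, len(frame))  # NIC informs the CPU
        return True

    # Send path
    def transmit(self, packet: bytes) -> None:
        # CPU assembled `packet` in memory and handed it over; NIC sends it.
        self.wire.append(bytes(packet))


received = []
nic = ToyNic(lambda buf, n: received.append(bytes(buf[:n])))
nic.post_receive_buffer(bytearray(1514))
nic.frame_arrives(b"hello")
print(received)   # [b'hello']
```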

Several Facts & Inefficiencies
• Ethernet is a shared medium =>
  • The NIC receives many packets intended for other stations
  • The NIC wastes CPU time on unnecessary interrupts and forwards unwanted packets
• Network traffic is bursty =>
  • Packets are lost if the NIC cannot forward them fast enough
• Packets are larger than the bus width =>
  • Multiple transfers are required for one packet
  • Multiple CPU interrupts
• The bus interconnect is a shared medium =>
  • The NIC must wait its turn to access memory
• How can we improve this?
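The "multiple transfers per packet" cost is a ceiling division, assuming each bus transfer moves exactly one data-line word and ignoring address, arbitration, and handshake overhead. A sketch using a 1500-byte packet:

```python
def transfers_per_packet(packet_bytes: int, bus_width_bits: int) -> int:
    """Bus transfers needed if each transfer moves one data-line word."""
    word_bytes = bus_width_bits // 8
    return -(-packet_bytes // word_bytes)   # ceiling division


for width in (32, 64):
    print(width, transfers_per_packet(1500, width))
# 32 375
# 64 188
```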

NIC Optimization
• Solution:
  ─ Avoid using the CPU whenever possible
  ─ Improve the NIC's own processing, storage, and communication abilities
• Four optimization techniques
  ─ Onboard address recognition and filtering
  ─ Onboard packet buffering
  ─ Direct memory access (DMA)
  ─ Data chaining and operation chaining

Onboard Address Recognition & Filtering
• Recognition of unicast and broadcast addresses
  ─ The NIC compares each frame's destination MAC address with its own
    • Match: forward the frame to the CPU over the bus
    • No match: discard it without interrupting the CPU or using the bus
• Multicast addresses are more complex
  ─ Multicast group membership is dynamic
  ─ A station may be associated with many multicast addresses
    • Hashing the addresses into a small table
    • Which allows false positives
• Shifts "processing" duty from the CPU to the NIC
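A sketch of onboard filtering, including a hashed multicast table that admits false positives. The 64-bucket table and the choice of the top 6 bits of CRC-32 (via Python's zlib.crc32) are assumptions for illustration; real NICs differ in table size and in exactly which hash bits they use.

```python
import zlib

BROADCAST = bytes.fromhex("ffffffffffff")


def is_multicast(mac: bytes) -> bool:
    # Group bit: least significant bit of the first octet.
    return bool(mac[0] & 0x01)


def hash_bucket(mac: bytes, bits: int = 6) -> int:
    # Assumption: the top `bits` bits of CRC-32 select one of 2**bits buckets.
    return zlib.crc32(mac) >> (32 - bits)


class AddressFilter:
    def __init__(self, own_mac: bytes, bits: int = 6):
        self.own_mac = own_mac
        self.bits = bits
        self.table = [False] * (1 << bits)   # one flag per hash bucket

    def join_group(self, group_mac: bytes) -> None:
        self.table[hash_bucket(group_mac, self.bits)] = True

    def accept(self, dest_mac: bytes) -> bool:
        """True: pass the frame to the CPU. False: drop it on the NIC."""
        if dest_mac == self.own_mac or dest_mac == BROADCAST:
            return True
        if is_multicast(dest_mac):
            # Any group hashing to a set bucket passes (possible false positive).
            return self.table[hash_bucket(dest_mac, self.bits)]
        return False                          # unicast for another station


nic_filter = AddressFilter(bytes.fromhex("020000000001"))
nic_filter.join_group(bytes.fromhex("01005e000001"))
print(nic_filter.accept(bytes.fromhex("020000000002")))   # False
```

Because several group addresses share each bucket, the NIC may pass a multicast frame the station never joined; software then discards it. That false positive is the price of keeping the table small.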

Onboard Packet Buffering
• The NIC has its own memory to buffer packets because of
  ─ Bursty traffic
  ─ Contention on the bus interconnect
• The NIC can receive packets while transferring others to the CPU
• How do we decide the right size for the buffer memory?
• Shifts "storage" from main memory to the NIC buffer
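One way to approach the buffer-sizing question is to simulate a burst. The sketch below is a deliberately simple model with hypothetical parameters: time advances in ticks, arrivals land before the NIC drains, and the drain rate is a stand-in for how fast the NIC wins bus access.

```python
from typing import Sequence


def simulate_drops(arrivals_per_tick: Sequence[int], drain_per_tick: int,
                   buffer_packets: int) -> int:
    """Count packets lost by a NIC with a finite onboard FIFO."""
    queued = 0
    dropped = 0
    for arriving in arrivals_per_tick:
        accepted = min(arriving, buffer_packets - queued)
        dropped += arriving - accepted          # buffer full: packets lost
        queued += accepted
        queued -= min(queued, drain_per_tick)   # move packets to host memory
    return dropped


def min_lossless_buffer(arrivals_per_tick: Sequence[int], drain_per_tick: int) -> int:
    """Smallest buffer (in packets) that loses nothing for this traffic."""
    # A buffer holding every packet of the trace never drops, so this returns.
    for size in range(sum(arrivals_per_tick) + 1):
        if simulate_drops(arrivals_per_tick, drain_per_tick, size) == 0:
            return size


burst = [6, 6, 6, 0, 0, 0]
for size in (8, 12, 14):
    print(size, simulate_drops(burst, 2, size))
```

For a three-tick burst of 6 packets per tick drained at 2 per tick, this prints 8 6, 12 2, and 14 0: the required buffer grows with the burst length and with the gap between the arrival and drain rates.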

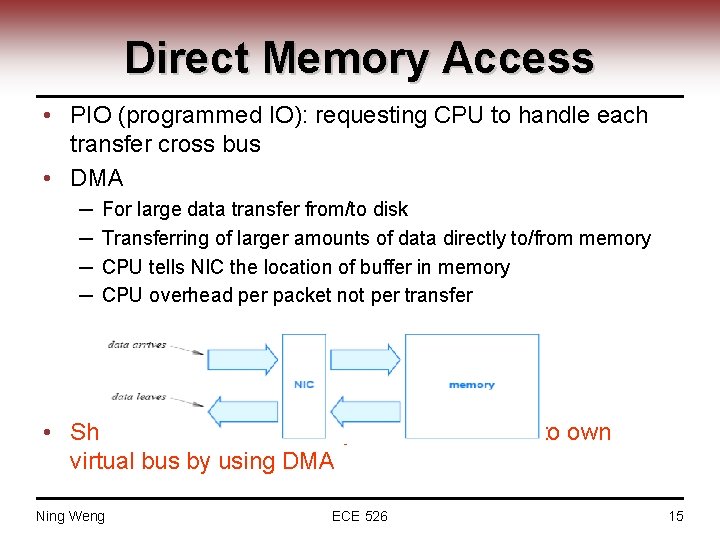

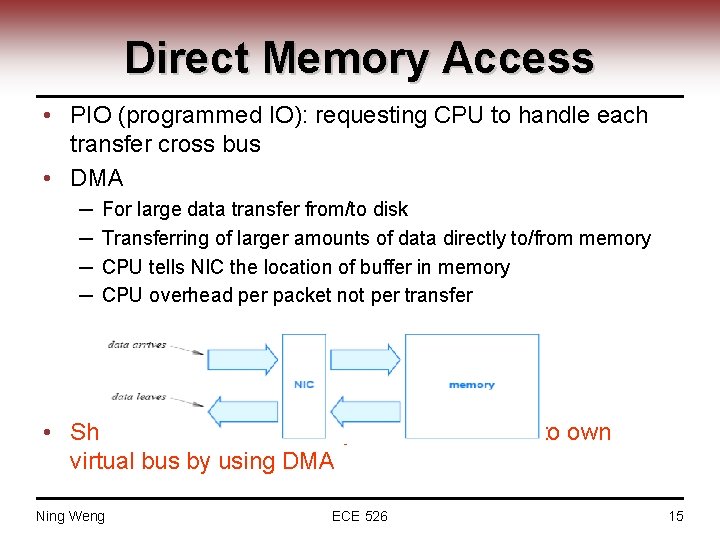

Direct Memory Access
• PIO (programmed I/O): the CPU handles each transfer across the bus
• DMA
  ─ Common for large data transfers from/to disk
  ─ Transfers larger amounts of data directly to/from memory
  ─ CPU tells the NIC the location of the buffer in memory
  ─ CPU overhead is per packet, not per transfer
• Shifts "communication" duty from the CPU-driven shared bus to the NIC's own "virtual bus" by using DMA
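The difference in CPU involvement can be sketched by copying the same packet both ways. The functions are hypothetical, and the DMA cost model (one setup operation plus one completion interrupt per packet) is an assumption consistent with "CPU overhead is per packet, not per transfer."

```python
def _check_bounds(memory: bytearray, addr: int, length: int) -> None:
    if addr < 0 or addr + length > len(memory):
        raise ValueError("packet does not fit in the buffer")


def pio_receive(memory: bytearray, addr: int, packet: bytes,
                word_bytes: int = 8) -> int:
    """Programmed I/O: the CPU copies one bus word per step.

    Returns the number of CPU-driven bus transfers.
    """
    _check_bounds(memory, addr, len(packet))
    cpu_ops = 0
    for offset in range(0, len(packet), word_bytes):
        word = packet[offset:offset + word_bytes]
        memory[addr + offset:addr + offset + len(word)] = word
        cpu_ops += 1
    return cpu_ops


def dma_receive(memory: bytearray, addr: int, packet: bytes) -> int:
    """DMA: the CPU only hands the NIC a buffer location; the NIC's DMA
    engine writes the packet into memory on its own.

    Returns CPU operations, assuming one setup plus one completion interrupt.
    """
    _check_bounds(memory, addr, len(packet))
    cpu_ops = 1                                   # CPU gives (addr, length) to NIC
    memory[addr:addr + len(packet)] = packet      # performed by the NIC, not the CPU
    cpu_ops += 1                                  # CPU handles completion interrupt
    return cpu_ops


pkt = bytes(i % 256 for i in range(1500))
mem_pio, mem_dma = bytearray(2048), bytearray(2048)
print(pio_receive(mem_pio, 0, pkt), dma_receive(mem_dma, 0, pkt))   # 188 2
```

With 8-byte bus words, the PIO path costs 188 CPU-driven transfers for a 1500-byte packet, while the DMA path costs 2 CPU operations and leaves identical bytes in memory.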

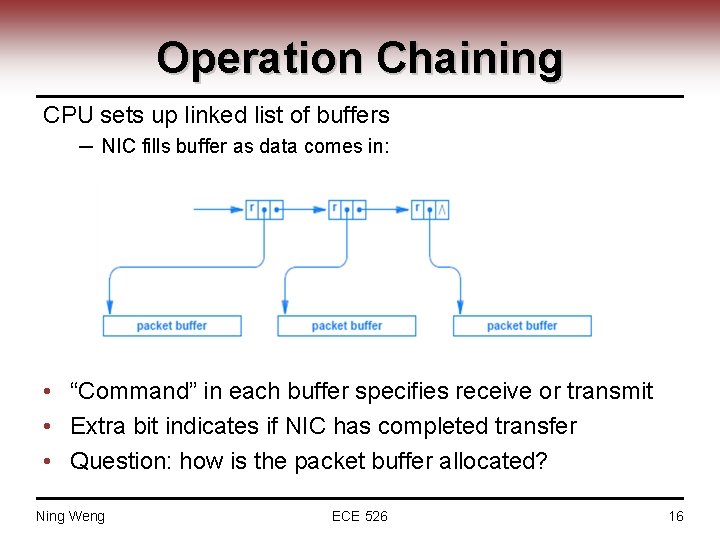

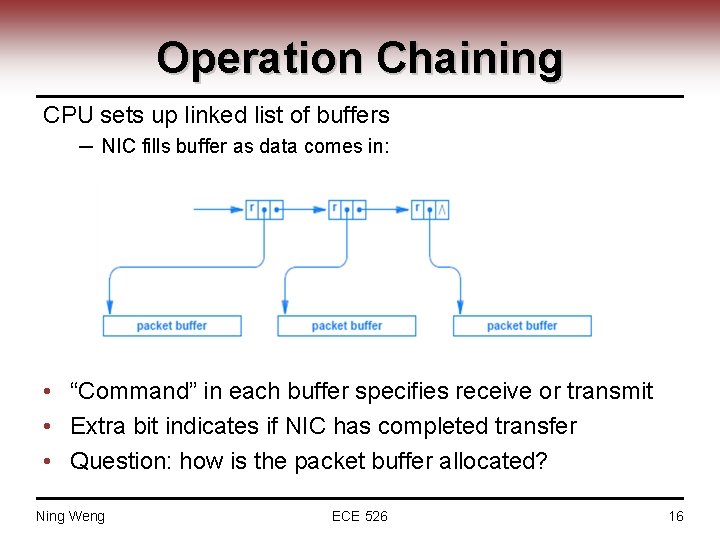

Operation Chaining
• The CPU sets up a linked list of buffers
  ─ The NIC fills buffers as data comes in
    • A "command" in each buffer specifies receive or transmit
    • An extra bit indicates whether the NIC has completed the transfer
• Question: how are the packet buffers allocated?
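A sketch of operation chaining follows. The Descriptor layout, field names, and string commands are hypothetical; real NICs define their own descriptor formats. The CPU builds the list once, the NIC walks it on its own, and the done flag plays the role of the "extra bit."

```python
from dataclasses import dataclass
from typing import List, Optional

RECEIVE, TRANSMIT = "receive", "transmit"


@dataclass
class Descriptor:
    command: str                             # RECEIVE or TRANSMIT
    buffer: bytearray
    length: int = 0                          # bytes of valid data in buffer
    done: bool = False                       # set by the NIC when finished
    next_desc: Optional["Descriptor"] = None


def link(descriptors: List[Descriptor]) -> Optional[Descriptor]:
    """CPU side: chain the descriptors into a linked list."""
    for current, following in zip(descriptors, descriptors[1:]):
        current.next_desc = following
    return descriptors[0] if descriptors else None


def nic_run_chain(head: Optional[Descriptor], incoming_frames, wire: list) -> None:
    """NIC side: walk the list and execute each command without the CPU."""
    frames = iter(incoming_frames)
    node = head
    while node is not None:
        if node.command == RECEIVE:
            frame = next(frames, None)
            if frame is None:
                return                       # no traffic yet; rest stay pending
            if len(frame) > len(node.buffer):
                raise ValueError("frame larger than receive buffer")
            node.buffer[:len(frame)] = frame
            node.length = len(frame)
        else:                                # TRANSMIT: CPU already filled buffer
            wire.append(bytes(node.buffer[:node.length]))
        node.done = True                     # the "extra bit" the CPU checks
        node = node.next_desc


tx = Descriptor(TRANSMIT, bytearray(b"ping"), length=4)
rx1 = Descriptor(RECEIVE, bytearray(64))
rx2 = Descriptor(RECEIVE, bytearray(64))
wire = []
nic_run_chain(link([tx, rx1, rx2]), [b"pong"], wire)
print(wire, tx.done, rx1.done, rx2.done)   # [b'ping'] True True False
```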

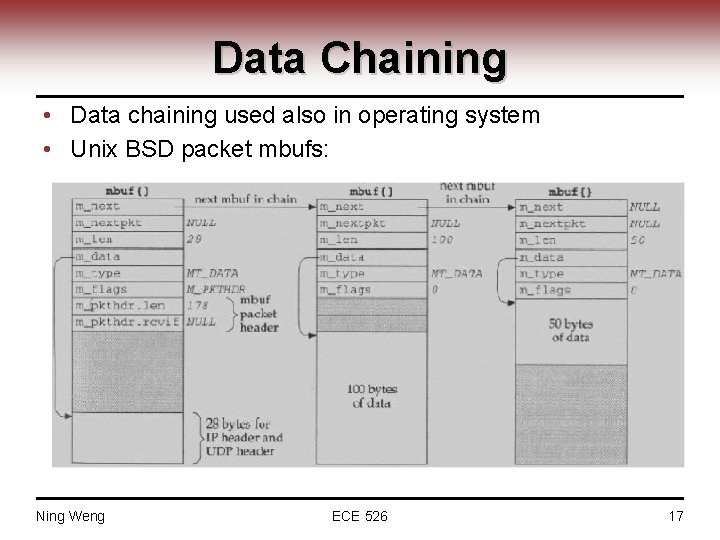

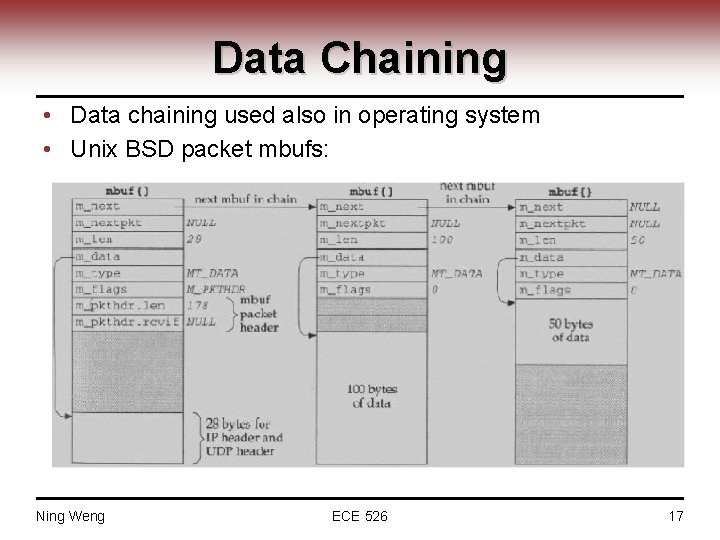

Data Chaining
• Data chaining is also used in operating systems
• Example: BSD Unix packet buffers (mbufs)
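Data chaining lets one packet span several small buffers, so a header can be prepended without copying the payload. The sketch below is loosely modeled on that idea; Buf and its fields are hypothetical and are not the actual BSD struct mbuf.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Buf:
    """Simplified mbuf-like segment (hypothetical; not BSD's struct mbuf)."""
    data: bytes
    next_buf: Optional["Buf"] = None


def chain_from(segments: Sequence[bytes]) -> Optional[Buf]:
    """Link segments of one packet into a chain, first segment at the head."""
    head = None
    for seg in reversed(segments):
        head = Buf(seg, head)
    return head


def prepend_header(head: Optional[Buf], header: bytes) -> Buf:
    # Data chaining: a new segment in front; the payload is not copied.
    return Buf(header, head)


def total_length(head: Optional[Buf]) -> int:
    n = 0
    while head is not None:
        n += len(head.data)
        head = head.next_buf
    return n


def gather(head: Optional[Buf]) -> bytes:
    """Flatten the chain, as a NIC gathering the segments would see it."""
    parts = []
    while head is not None:
        parts.append(head.data)
        head = head.next_buf
    return b"".join(parts)


payload = chain_from([b"hello ", b"world"])
packet = prepend_header(payload, b"HDR|")
print(total_length(packet), gather(packet))   # 15 b'HDR|hello world'
```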

NIC: Summary
• Traditional operation
  ─ Transfers packets from/to the network link to/from memory over a shared bus, with the CPU controlling each transfer
• Several facts & inefficiencies
• Optimization techniques
  ─ Onboard filtering
  ─ Onboard buffering
  ─ DMA
  ─ Operation and data chaining

For Next Class
• Read Comer Chapter 5