ECE 517 Reinforcement Learning in Artificial Intelligence Lecture

- Slides: 17

ECE 517 Reinforcement Learning in Artificial Intelligence, Lecture 21: Deep Machine Learning. November 8, 2010. Dr. Itamar Arel, College of Engineering, Department of Electrical Engineering and Computer Science, The University of Tennessee. Fall 2010.

RL and General AI

- RL seems like a good AI framework
- Some pieces are missing:
  - Long/short-term memory: what is the optimal value (or cost-to-go) function to be used?
  - How do we treat multi-dimensional reward signals?
  - How do we deal with high-dimensional inputs (observations)?
  - How do we generalize to address a near-infinite state space?
  - How long will it take to train such a system?
- If we want to use hardware, how do we go about doing it?
  - Storage capacity: the human brain has ~$10^{14}$ synapses (i.e. weights)
  - Processing power: ~$10^{11}$ neurons
  - Communications: fully or partially connected architectures
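
To get a feel for the storage question, the sketch below multiplies the slide's figure of roughly 10^14 synapses by a per-weight width. The 4-byte (32-bit) width and the names `weight_storage_bytes`, `SYNAPSES` and `BYTES_PER_WEIGHT` are assumptions made for illustration; the lecture does not specify a weight representation.

```python
def weight_storage_bytes(num_weights: int, bytes_per_weight: int) -> int:
    """Raw storage needed to hold `num_weights` values of the given width."""
    return num_weights * bytes_per_weight


SYNAPSES = 10**14       # figure quoted on the slide
BYTES_PER_WEIGHT = 4    # assumption: 32-bit weights, not from the lecture

if __name__ == "__main__":
    total = weight_storage_bytes(SYNAPSES, BYTES_PER_WEIGHT)
    # 4e14 bytes expressed in decimal terabytes (1 TB = 1e12 bytes)
    print(f"{total / 10**12:.0f} TB")
```

Under these assumptions the weights alone would occupy about 400 TB, before any connectivity or indexing overhead.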

Why Deep Learning?

- Mimicking the way the brain represents information is a key challenge
  - Deals efficiently with high dimensionality
  - Handles multi-modal data fusion
  - Captures temporal dependencies spanning large scales
  - Incremental knowledge acquisition
- The challenge with high dimensionality
  - Real-world problem
  - Curse of dimensionality (Bellman)
  - Spatial and temporal dependencies
  - How to represent key features?
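
The curse of dimensionality can be made concrete by counting how many cells a uniform grid needs as the number of input dimensions grows. This is a minimal sketch: the function name `grid_cells` and the choice of 10 bins per axis are illustrative assumptions, not part of the lecture.

```python
def grid_cells(bins_per_axis: int, dims: int) -> int:
    """Number of cells in a uniform grid with `bins_per_axis` bins on each of `dims` axes."""
    return bins_per_axis ** dims


if __name__ == "__main__":
    # The cell count grows exponentially with the number of dimensions.
    for d in (1, 2, 3, 10, 100):
        print(f"{d:>3} dims -> {grid_cells(10, d):.3e} cells")
```

A tabular value function over such a grid would need one entry per cell, which is why raw high-dimensional observations cannot be handled by enumeration.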

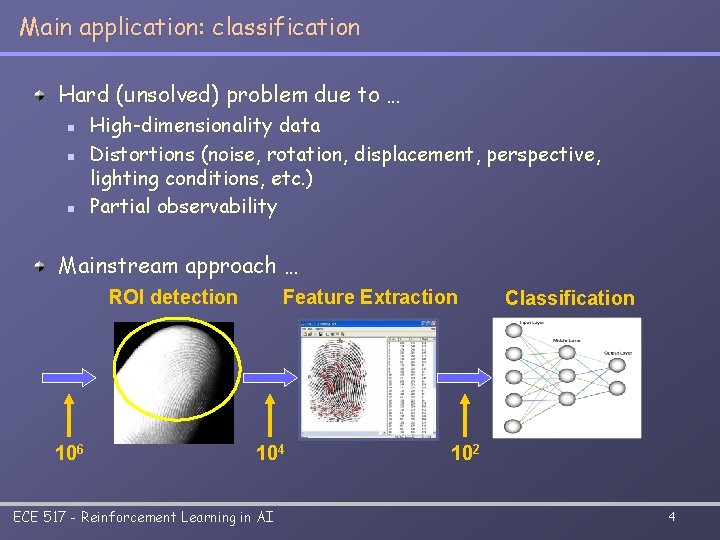

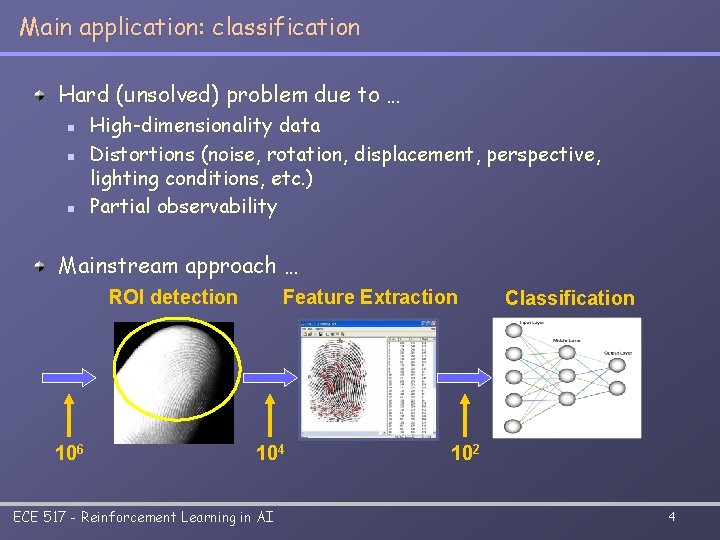

Main application: classification

- Hard (unsolved) problem due to:
  - High-dimensional data
  - Distortions (noise, rotation, displacement, perspective, lighting conditions, etc.)
  - Partial observability
- Mainstream approach: ROI detection ($10^6$) → feature extraction ($10^4$) → classification ($10^2$)

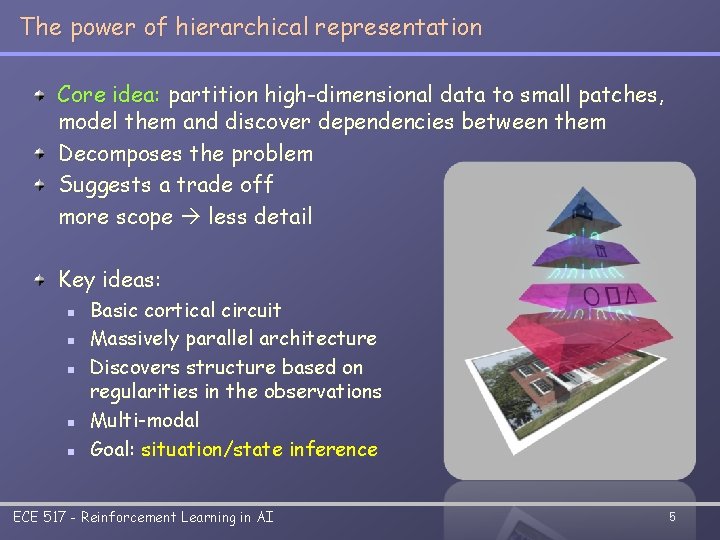

The power of hierarchical representation

- Core idea: partition high-dimensional data into small patches, model them, and discover dependencies between them
  - Decomposes the problem
  - Suggests a trade-off: more scope, less detail
- Key ideas:
  - Basic cortical circuit
  - Massively parallel architecture
  - Discovers structure based on regularities in the observations
  - Multi-modal
- Goal: situation/state inference
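
The partitioning step can be sketched as tiling a 2-D array with small square blocks. The helper `split_into_patches` is hypothetical, and the non-overlapping, row-major tiling is an assumption made to keep the example short; the lecture itself allows patches to overlap.

```python
from typing import List

Image = List[List[int]]


def split_into_patches(image: Image, patch: int) -> List[Image]:
    """Tile a 2-D image with non-overlapping patch x patch blocks, in row-major order."""
    rows, cols = len(image), len(image[0])
    if rows % patch or cols % patch:
        raise ValueError("image size must be a multiple of the patch size")
    patches = []
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            # Slice `patch` rows, then `patch` columns within each of those rows.
            patches.append([row[c:c + patch] for row in image[r:r + patch]])
    return patches


if __name__ == "__main__":
    image = [[4 * r + c for c in range(4)] for r in range(4)]
    for block in split_into_patches(image, 2):
        print(block)
```

Each patch is small enough to be modeled on its own; dependencies between patches are left to the layers above.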

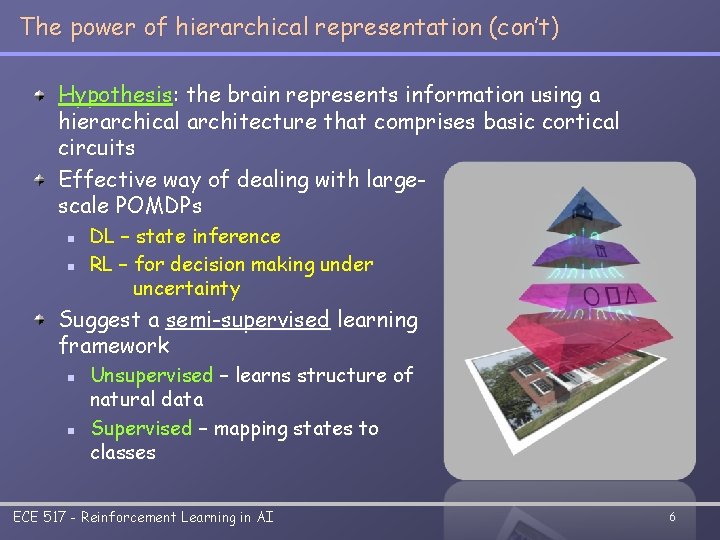

The power of hierarchical representation (cont.)

- Hypothesis: the brain represents information using a hierarchical architecture that comprises basic cortical circuits
- Effective way of dealing with large-scale POMDPs
  - DL: state inference
  - RL: decision making under uncertainty
- Suggests a semi-supervised learning framework
  - Unsupervised: learns the structure of natural data
  - Supervised: maps states to classes

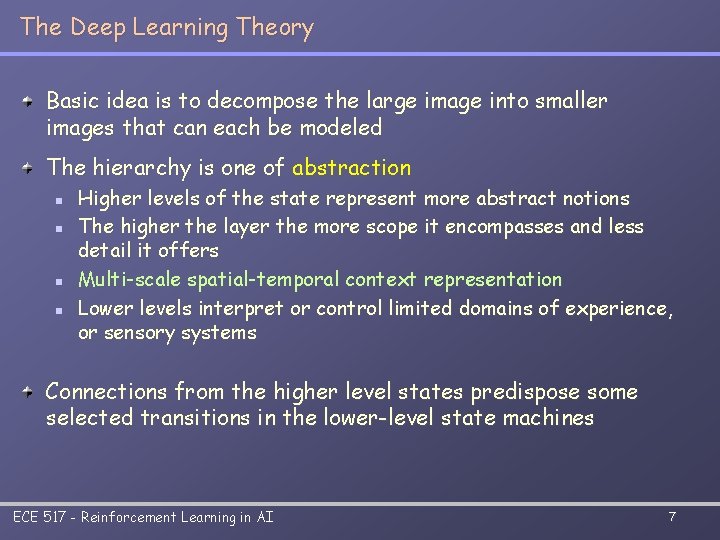

The Deep Learning Theory

- The basic idea is to decompose the large image into smaller images that can each be modeled
- The hierarchy is one of abstraction
  - Higher levels of the state represent more abstract notions
  - The higher the layer, the more scope it encompasses and the less detail it offers
- Multi-scale spatial-temporal context representation
- Lower levels interpret or control limited domains of experience, or sensory systems
- Connections from the higher-level states predispose some selected transitions in the lower-level state machines

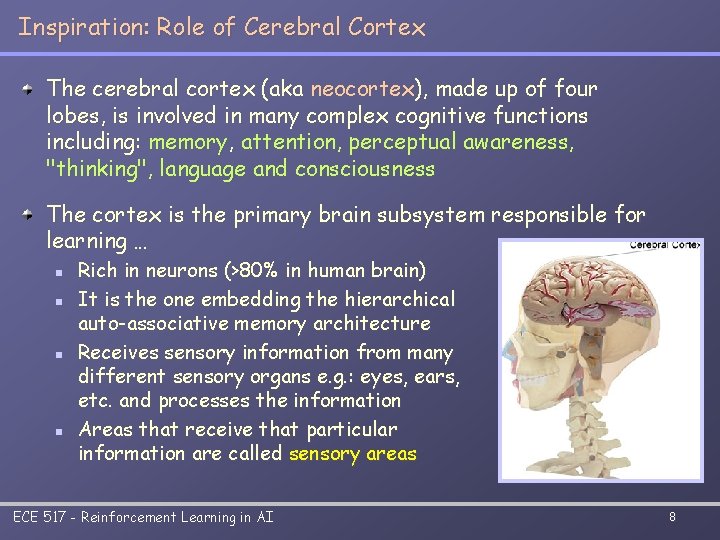

Inspiration: Role of Cerebral Cortex

- The cerebral cortex (aka neocortex), made up of four lobes, is involved in many complex cognitive functions, including memory, attention, perceptual awareness, "thinking", language and consciousness
- The cortex is the primary brain subsystem responsible for learning
  - Rich in neurons (>80% in human brain)
  - It is the one embedding the hierarchical auto-associative memory architecture
- Receives sensory information from many different sensory organs (e.g. eyes, ears) and processes the information
- Areas that receive that particular information are called sensory areas

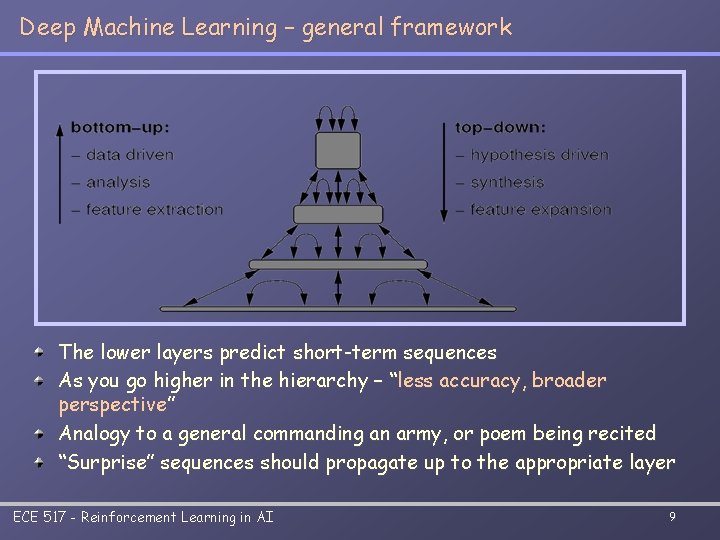

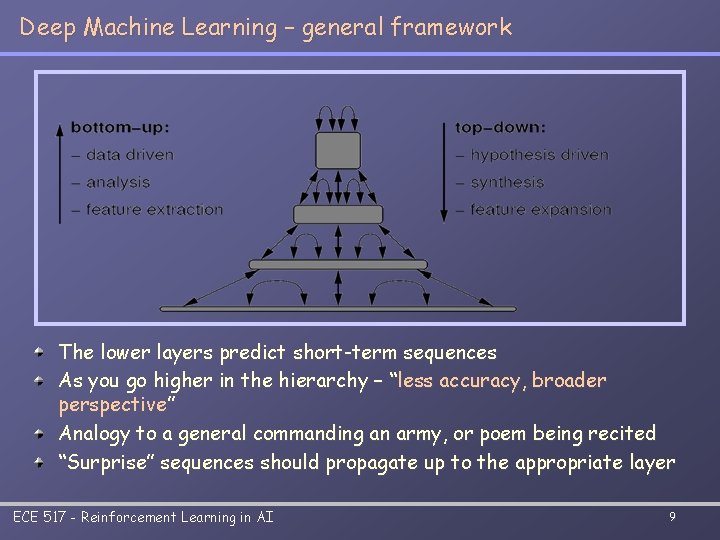

Deep Machine Learning – general framework

- The lower layers predict short-term sequences
- As you go higher in the hierarchy: “less accuracy, broader perspective”
- Analogy to a general commanding an army, or a poem being recited
- “Surprise” sequences should propagate up to the appropriate layer

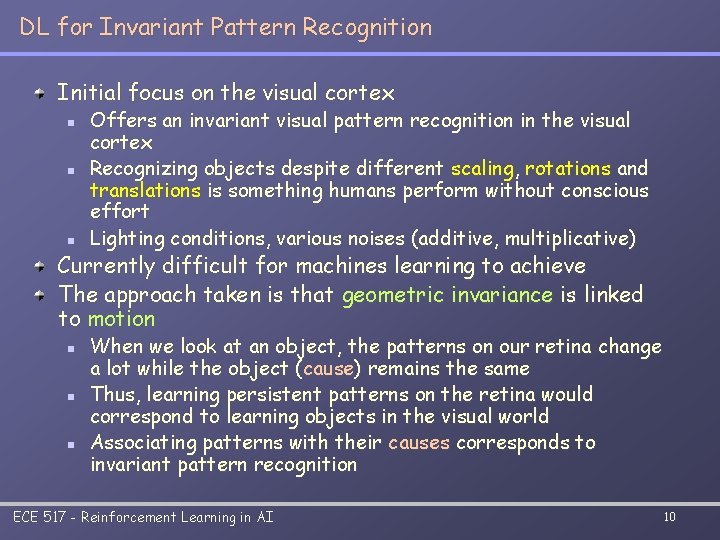

DL for Invariant Pattern Recognition

- Initial focus on the visual cortex
  - Offers invariant visual pattern recognition in the visual cortex
  - Recognizing objects despite different scaling, rotations and translations is something humans perform without conscious effort
  - The same holds for lighting conditions and various noises (additive, multiplicative)
  - Currently difficult for machine learning to achieve
- The approach taken is that geometric invariance is linked to motion
  - When we look at an object, the patterns on our retina change a lot while the object (cause) remains the same
  - Thus, learning persistent patterns on the retina would correspond to learning objects in the visual world
  - Associating patterns with their causes corresponds to invariant pattern recognition

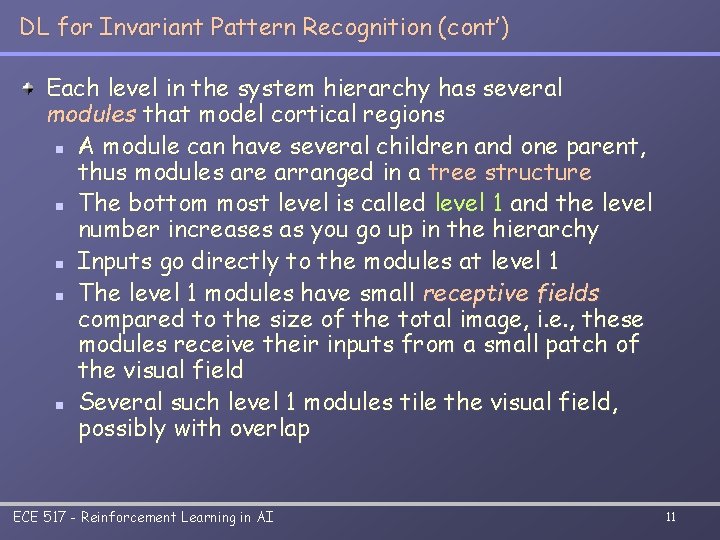

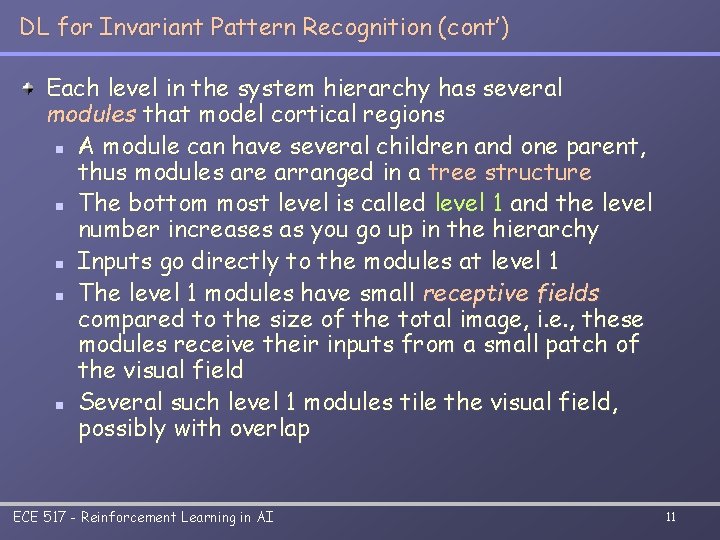

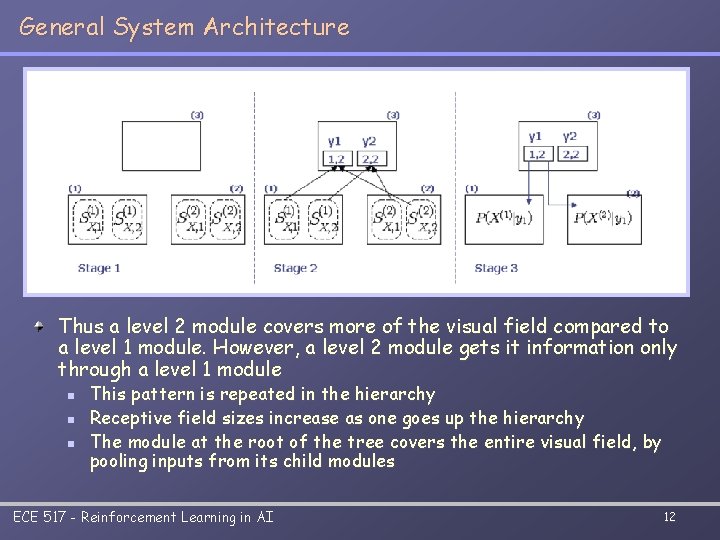

DL for Invariant Pattern Recognition (cont.)

- Each level in the system hierarchy has several modules that model cortical regions
- A module can have several children and one parent; thus modules are arranged in a tree structure
- The bottom-most level is called level 1, and the level number increases as you go up the hierarchy
- Inputs go directly to the modules at level 1
- The level 1 modules have small receptive fields compared to the size of the total image, i.e., these modules receive their inputs from a small patch of the visual field
- Several such level 1 modules tile the visual field, possibly with overlap
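
The tree arrangement described above can be written down as a small data structure. This is only a sketch: the class name `Module`, its fields, and the `add_child` helper are hypothetical, and the level is derived from the tree shape (leaves are level 1) rather than stored.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # identity comparison; avoids recursing through parent/children
class Module:
    name: str
    parent: Optional["Module"] = field(default=None, repr=False)
    children: List["Module"] = field(default_factory=list)

    def add_child(self, child: "Module") -> "Module":
        """Attach `child` under this module; each module has exactly one parent."""
        child.parent = self
        self.children.append(child)
        return child

    @property
    def level(self) -> int:
        """Level 1 for leaf modules; one more than the highest child level otherwise."""
        if not self.children:
            return 1
        return 1 + max(c.level for c in self.children)


if __name__ == "__main__":
    root = Module("root")
    mid = root.add_child(Module("mid"))
    mid.add_child(Module("leaf-a"))
    mid.add_child(Module("leaf-b"))
    print(root.level, mid.level, mid.children[0].level)
```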

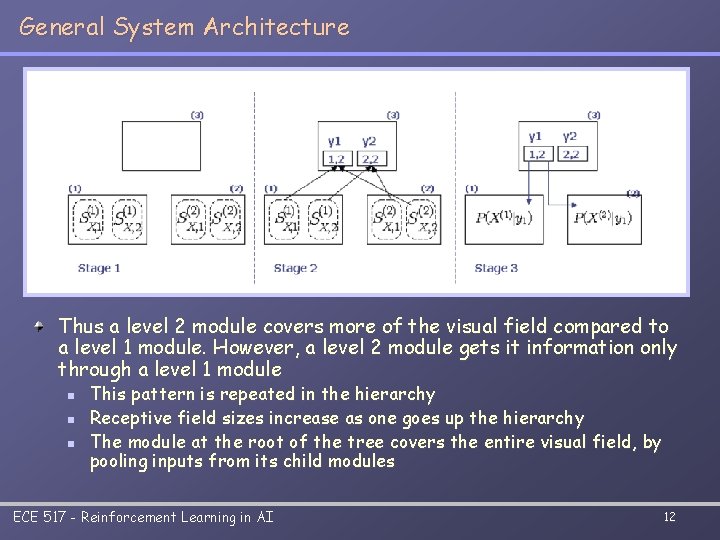

General System Architecture

- Thus a level 2 module covers more of the visual field than a level 1 module. However, a level 2 module gets its information only through level 1 modules
- This pattern is repeated in the hierarchy
- Receptive field sizes increase as one goes up the hierarchy
- The module at the root of the tree covers the entire visual field by pooling inputs from its child modules
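
The growth of receptive fields with level can be worked out for a simple case. The sketch below assumes square, non-overlapping receptive fields and a fixed number of children per axis (`fanout`); these simplifications and the function name `receptive_field_sides` are illustrative and not taken from the lecture.

```python
from typing import List


def receptive_field_sides(image_side: int, patch_side: int, fanout: int) -> List[int]:
    """Side length of the receptive field at each level, starting at level 1.

    Each module pools `fanout` children along each axis, so the side length
    is multiplied by `fanout` per level until the root covers the whole image.
    """
    if fanout < 2:
        raise ValueError("fanout must be at least 2")
    sides = [patch_side]
    while sides[-1] < image_side:
        sides.append(sides[-1] * fanout)
    if sides[-1] != image_side:
        raise ValueError("image_side must equal patch_side * fanout**k for some k >= 0")
    return sides


if __name__ == "__main__":
    # 32x32 image, 4x4 level-1 patches, 2x2 children per parent
    print(receptive_field_sides(32, 4, 2))
```

With a 32-pixel image side, 4-pixel patches and a fanout of 2, the hierarchy has four levels and the root's receptive field is the full image.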

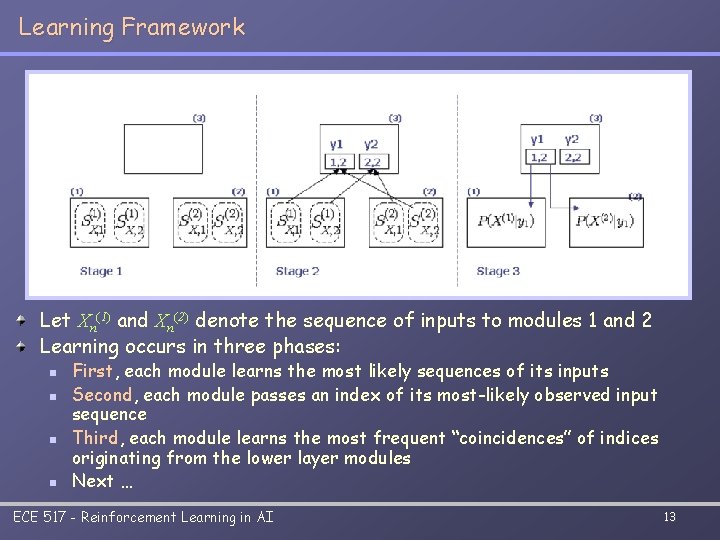

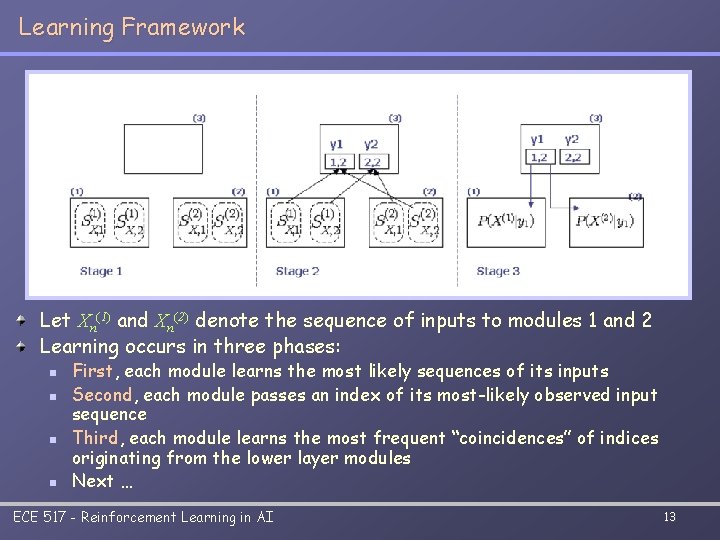

Learning Framework

- Let $X_n^{(1)}$ and $X_n^{(2)}$ denote the sequence of inputs to modules 1 and 2
- Learning occurs in three phases:
  - First, each module learns the most likely sequences of its inputs
  - Second, each module passes an index of its most likely observed input sequence
  - Third, each module learns the most frequent “coincidences” of indices originating from the lower-layer modules
- Next …
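
The three phases can be sketched with simple frequency counts. This is not the lecture's algorithm: it assumes already-quantized integer inputs, fixed-length windows, frequency as a stand-in for "most likely", an index of -1 for unseen windows, and exactly two child modules. All function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Window = Tuple[int, ...]


def _windows(inputs: Sequence[int], length: int) -> List[Window]:
    """All contiguous windows of the given length."""
    return [tuple(inputs[i:i + length]) for i in range(len(inputs) - length + 1)]


def learn_sequences(inputs: Sequence[int], length: int, top_k: int) -> Dict[Window, int]:
    """Phase 1: index the top_k most frequent windows of a module's input."""
    ranked = Counter(_windows(inputs, length)).most_common(top_k)
    return {seq: idx for idx, (seq, _count) in enumerate(ranked)}


def emit_indices(inputs: Sequence[int], length: int, alphabet: Dict[Window, int]) -> List[int]:
    """Phase 2: emit the learned index of each window, or -1 if it was not learned."""
    return [alphabet.get(w, -1) for w in _windows(inputs, length)]


def learn_coincidences(idx1: Sequence[int], idx2: Sequence[int], top_k: int) -> List[Tuple[int, int]]:
    """Phase 3: the parent keeps the most frequent co-occurring pairs of child indices."""
    return [pair for pair, _count in Counter(zip(idx1, idx2)).most_common(top_k)]


if __name__ == "__main__":
    x1 = [0, 1, 0, 1, 0, 1, 2]   # inputs to module 1
    x2 = [5, 6, 5, 6, 5, 6, 6]   # inputs to module 2
    a1 = learn_sequences(x1, length=2, top_k=2)
    a2 = learn_sequences(x2, length=2, top_k=2)
    i1 = emit_indices(x1, 2, a1)
    i2 = emit_indices(x2, 2, a2)
    print(learn_coincidences(i1, i2, top_k=2))
```

The list returned by `learn_coincidences` plays the role of the parent's alphabet: each entry is a pair of child indices that tends to occur together.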

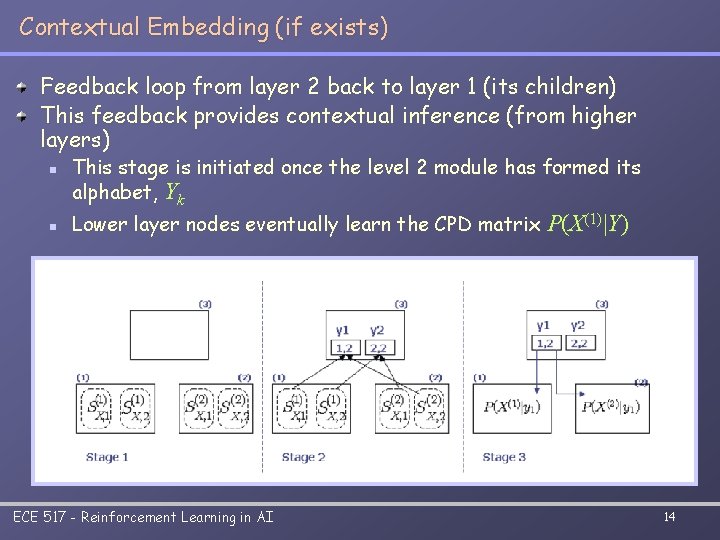

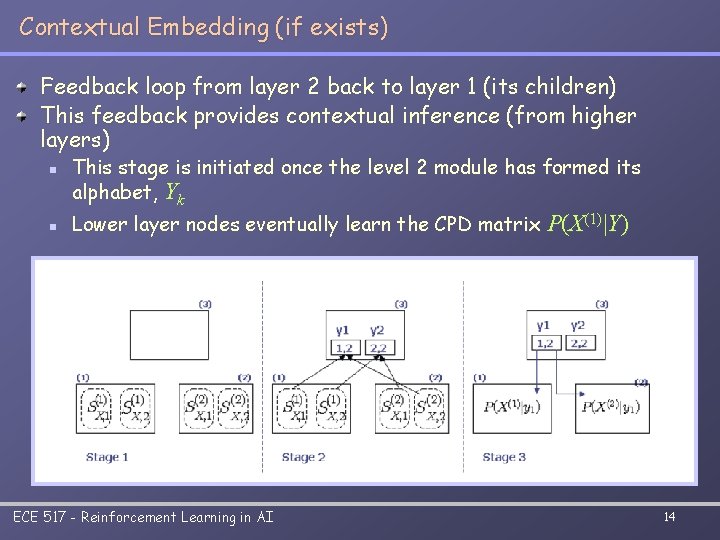

Contextual Embedding (if exists)

- Feedback loop from layer 2 back to layer 1 (its children)
- This feedback provides contextual inference (from higher layers)
  - This stage is initiated once the level 2 module has formed its alphabet, $Y_k$
  - Lower-layer nodes eventually learn the conditional probability distribution (CPD) matrix $P(X^{(1)} \mid Y)$
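
One simple way a child could arrive at a CPD such as P(X(1) | Y) is to count how often each of its own states co-occurs with each parent state and normalize per parent state. The sketch below makes that assumption explicit; the function name `estimate_cpd` and the maximum-likelihood (normalized count) estimator are illustrative choices, not something the lecture specifies.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, Sequence


def estimate_cpd(
    child_states: Sequence[Hashable],
    parent_states: Sequence[Hashable],
) -> Dict[Hashable, Dict[Hashable, float]]:
    """Estimate P(X = x | Y = y) from paired observations using normalized counts.

    Returns a nested dict: cpd[y][x] is the estimated probability of child
    state x given parent state y.
    """
    if len(child_states) != len(parent_states):
        raise ValueError("child and parent sequences must have the same length")
    joint = defaultdict(Counter)
    for x, y in zip(child_states, parent_states):
        joint[y][x] += 1
    cpd = {}
    for y, counts in joint.items():
        total = sum(counts.values())
        cpd[y] = {x: n / total for x, n in counts.items()}
    return cpd


if __name__ == "__main__":
    xs = [0, 0, 1, 1, 1, 0]               # child (layer 1) states
    ys = ["a", "a", "a", "b", "b", "b"]   # parent (layer 2) states fed back
    for y, row in estimate_cpd(xs, ys).items():
        print(y, row)
```

Each row of the resulting table sums to 1, so it can be used directly as the conditional distribution attached to the edge between the parent and the child.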

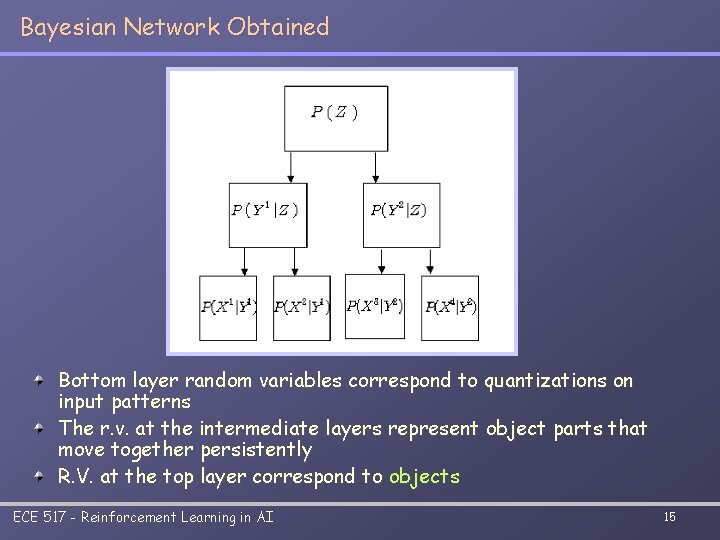

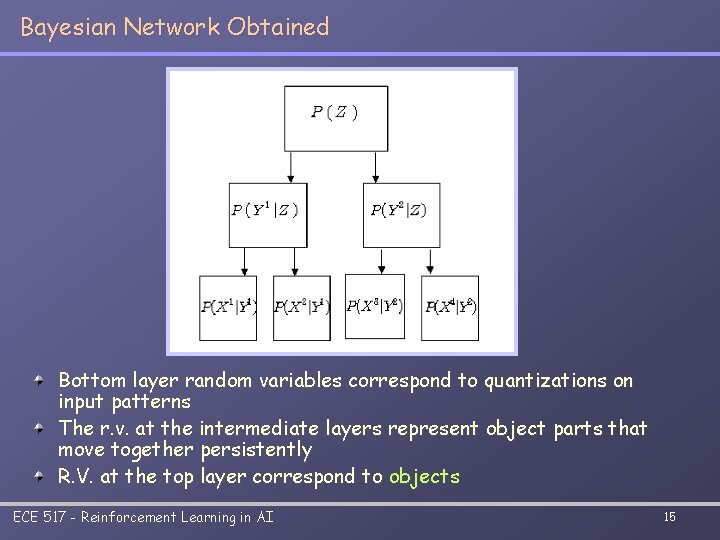

Bayesian Network Obtained

- Bottom-layer random variables correspond to quantizations of input patterns
- The random variables at the intermediate layers represent object parts that move together persistently
- The random variables at the top layer correspond to objects

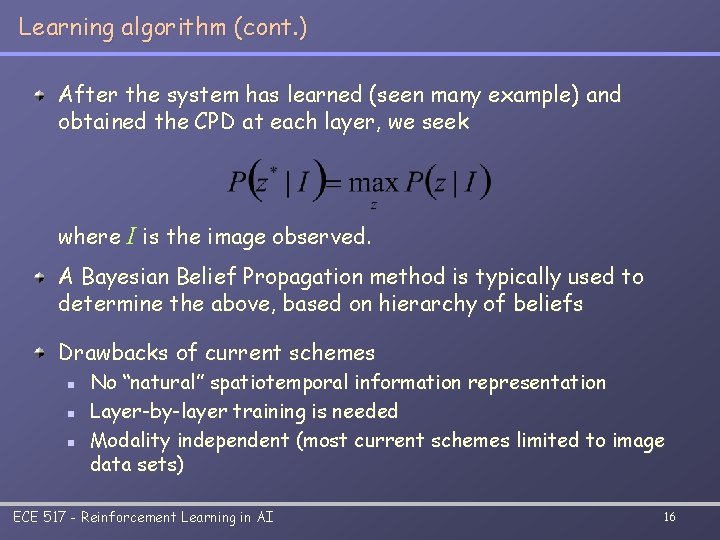

Learning algorithm (cont.)

- After the system has learned (seen many examples) and obtained the CPD at each layer, we seek [equation missing from the extracted slide], where $I$ is the image observed
- A Bayesian belief propagation method is typically used to determine the above, based on a hierarchy of beliefs
- Drawbacks of current schemes
  - No “natural” spatiotemporal information representation
  - Layer-by-layer training is needed
  - Not modality independent (most current schemes are limited to image data sets)
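
As a hedged illustration of inference with learned CPDs, the sketch below computes the posterior of a single top-layer variable given the observed states of its children. It assumes a two-layer tree in which the children are conditionally independent given the root, which is the simplest special case of belief propagation (one upward pass). The function name, the labels and the numbers are made up for the example and do not come from the lecture.

```python
from typing import Dict, Hashable, Sequence

CPD = Dict[Hashable, Dict[Hashable, float]]  # cpd[z][x] = P(X = x | Z = z)


def posterior_over_root(
    prior: Dict[Hashable, float],
    cpds: Sequence[CPD],
    evidence: Sequence[Hashable],
) -> Dict[Hashable, float]:
    """P(Z | x_1..x_n), proportional to P(Z) * prod_i P(x_i | Z), for a two-layer tree."""
    if len(cpds) != len(evidence):
        raise ValueError("need exactly one observation per child")
    unnormalized = {}
    for z, p in prior.items():
        for cpd, x in zip(cpds, evidence):
            p *= cpd[z].get(x, 0.0)  # unseen child states get probability 0
        unnormalized[z] = p
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("evidence has zero probability under the model")
    return {z: p / total for z, p in unnormalized.items()}


if __name__ == "__main__":
    prior = {"cat": 0.5, "dog": 0.5}
    child = {"cat": {0: 0.9, 1: 0.1}, "dog": {0: 0.2, 1: 0.8}}
    print(posterior_over_root(prior, [child, child], evidence=[0, 0]))
```

In a deeper hierarchy the same product-and-normalize step would be applied at every module, with each module's belief serving as evidence for its parent.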

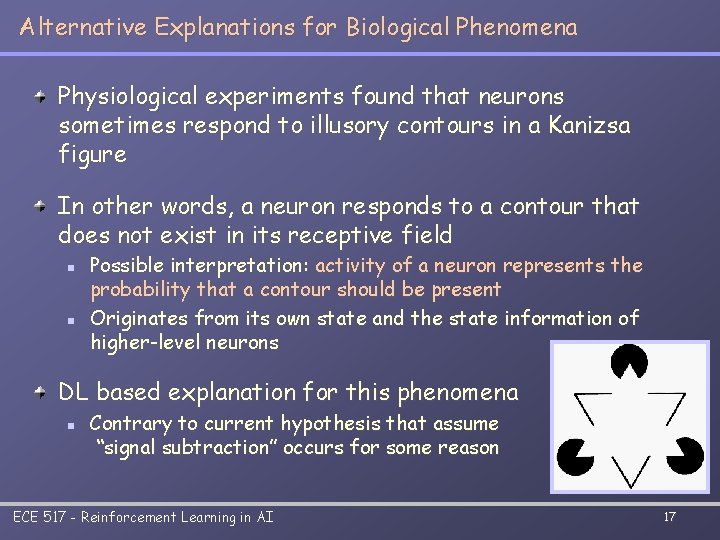

Alternative Explanations for Biological Phenomena

- Physiological experiments found that neurons sometimes respond to illusory contours in a Kanizsa figure
- In other words, a neuron responds to a contour that does not exist in its receptive field
  - Possible interpretation: the activity of a neuron represents the probability that a contour should be present
  - This probability originates from its own state and the state information of higher-level neurons
- DL-based explanation for this phenomenon
  - Contrary to the current hypothesis, which assumes “signal subtraction” occurs for some reason
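
The probabilistic reading of the illusory-contour response can be illustrated with Bayes' rule for a binary "contour present" variable. The sketch treats the higher-level state as a prior and the neuron's own input as a likelihood. The function name, the two-hypothesis setup and the numbers are assumptions chosen for illustration; they are not a model proposed in the lecture.

```python
def contour_probability(
    likelihood_if_present: float,
    likelihood_if_absent: float,
    top_down_prior: float,
) -> float:
    """Posterior probability that a contour is present.

    likelihood_if_present / likelihood_if_absent: probability of the local
    input under each hypothesis (the neuron's "own state").
    top_down_prior: probability of a contour suggested by higher-level context.
    """
    numerator = likelihood_if_present * top_down_prior
    denominator = numerator + likelihood_if_absent * (1.0 - top_down_prior)
    if denominator == 0:
        raise ValueError("input has zero probability under both hypotheses")
    return numerator / denominator


if __name__ == "__main__":
    # Local input is uninformative (no real edge in the receptive field),
    # but the higher level strongly expects a contour there.
    print(contour_probability(0.5, 0.5, top_down_prior=0.9))
    # Same local input with weak top-down expectation.
    print(contour_probability(0.5, 0.5, top_down_prior=0.1))
```

When the local input carries no information, the posterior equals the top-down prior, so a strong expectation from higher levels is enough to drive a response in the absence of a physical contour.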