ECE 453 CS 447 SE 465 Software Testing


- Slides: 68

ECE 453 – CS 447 – SE 465: Software Testing & Quality Assurance

Instructor: Kostas Kontogiannis

Overview
- Software Reverse Engineering
- Black Box Metrics
- White Box Metrics
- Development Estimates
- Maintenance Estimates

Software Metrics
- Black Box Metrics
  - Function Points
  - COCOMO
- White Box Metrics
  - LOC
  - Halstead's Software Science
  - McCabe's Cyclomatic Complexity
  - Information Flow Metric
  - Syntactic Interconnection

Software Metrics
- S/W project planning consists of two primary tasks:
  - analysis
  - estimation (effort in programmer-months, development interval, staffing levels, testing, maintenance costs, etc.).
- Important: the most common cause of s/w development failure is poor (optimistic) planning.

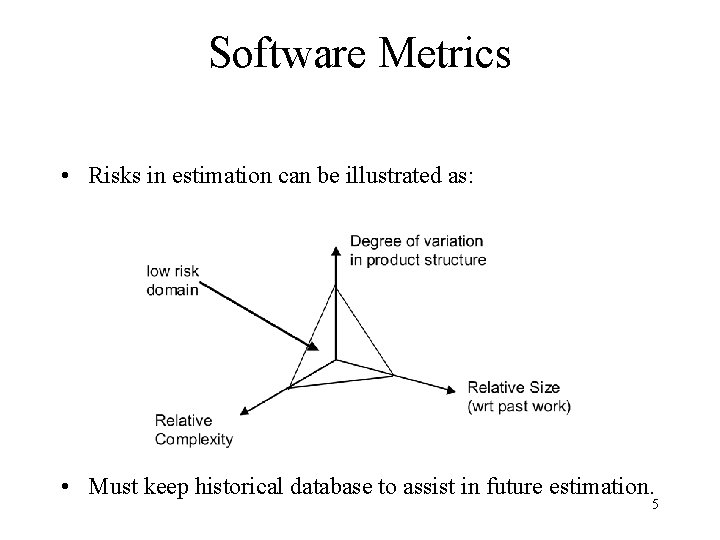

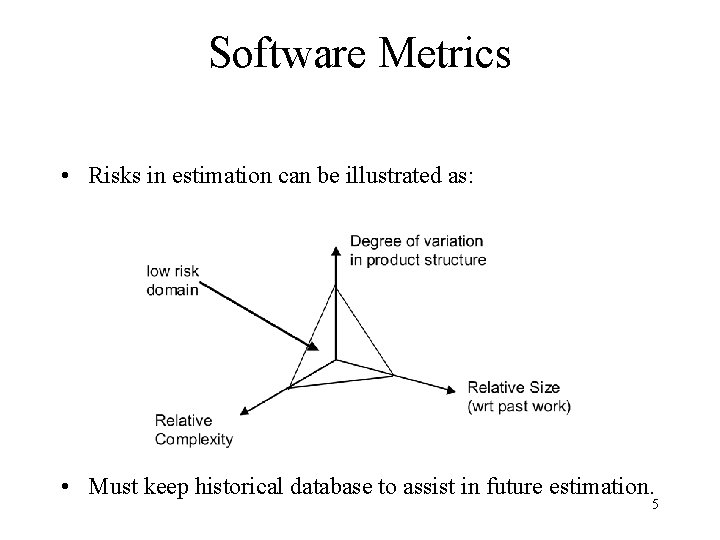

Software Metrics
- Risks in estimation can be illustrated as:
- A historical database must be kept to assist in future estimation.

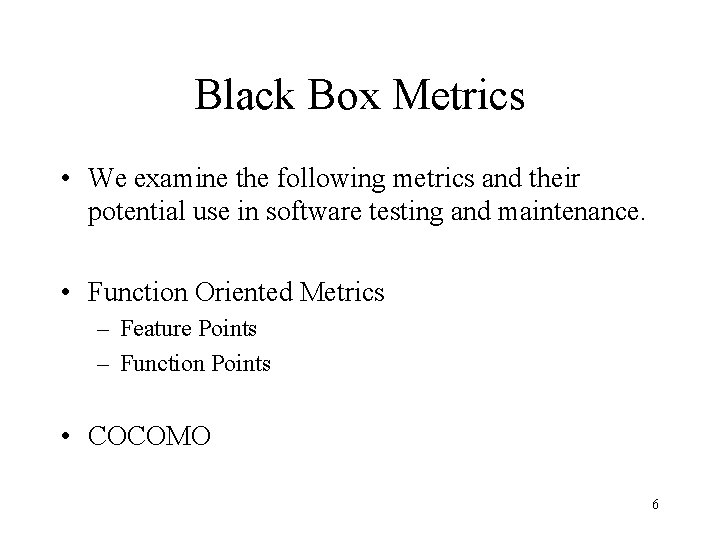

Black Box Metrics
- We examine the following metrics and their potential use in software testing and maintenance:
  - Function-Oriented Metrics
    - Feature Points
    - Function Points
  - COCOMO

Function Oriented Metrics
- Mainly used in business applications.
- The focus is on program functionality.
- A measure of the information domain plus a subjective assessment of complexity.
- The most common are:
  - function points (FP), and
  - feature points.

Reference: R. S. Pressman, "Software Engineering: A Practitioner's Approach", 3rd Edition, McGraw-Hill, Chapter 2.

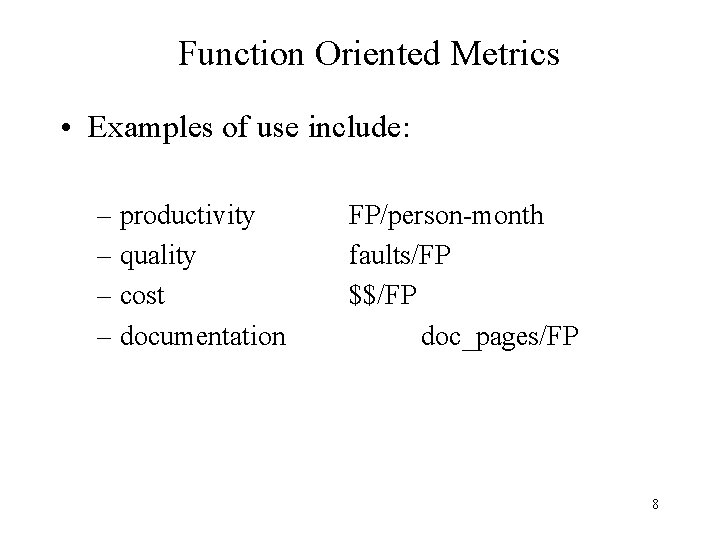

Function Oriented Metrics
- Examples of use include:

| Use | Measure |
|---|---|
| productivity | FP/person-month |
| quality | faults/FP |
| cost | $/FP |
| documentation | doc_pages/FP |

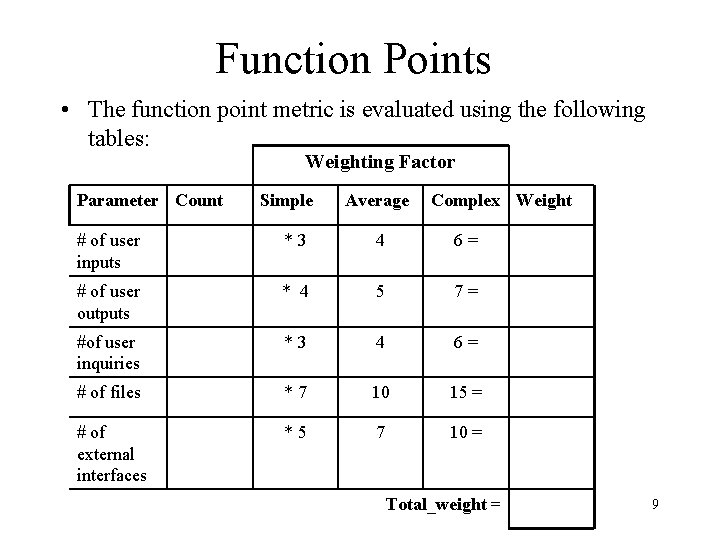

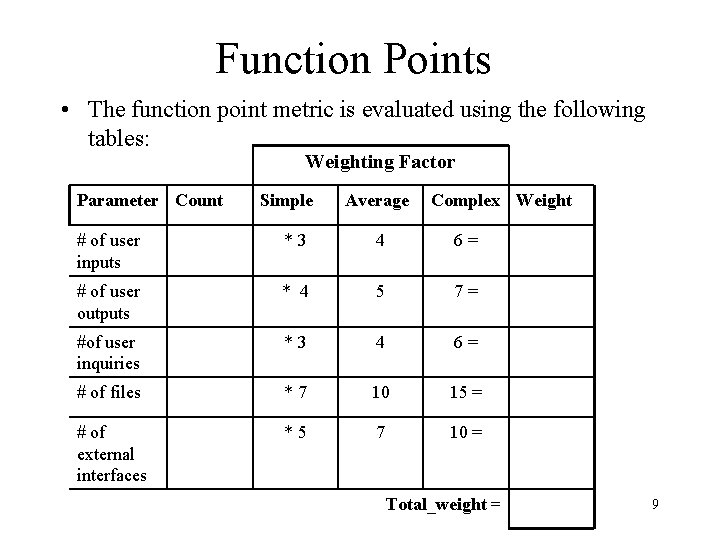

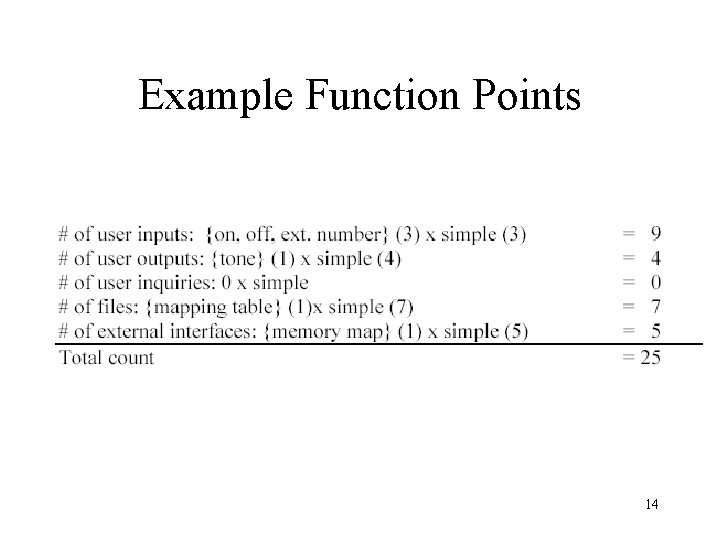

Function Points
- The function point metric is evaluated using the following table. Each count is multiplied by the weighting factor for its complexity level, and the weighted counts are summed to give Total_weight.

| Parameter | Simple | Average | Complex |
|---|---|---|---|
| # of user inputs | 3 | 4 | 6 |
| # of user outputs | 4 | 5 | 7 |
| # of user inquiries | 3 | 4 | 6 |
| # of files | 7 | 10 | 15 |
| # of external interfaces | 5 | 7 | 10 |
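A minimal Python sketch of the weighting step, assuming each counted item has already been classified as simple, average, or complex. The names `WEIGHTS`, `count_total`, and the sample counts are hypothetical illustrations, not part of the original material.

```python
# Weighting factors from the function point table: (simple, average, complex).
WEIGHTS = {
    "inputs": (3, 4, 6),
    "outputs": (4, 5, 7),
    "inquiries": (3, 4, 6),
    "files": (7, 10, 15),
    "interfaces": (5, 7, 10),
}
LEVELS = ("simple", "average", "complex")


def count_total(counts):
    """Sum count x weight over all (parameter, complexity level) entries.

    `counts` maps (parameter, level) pairs to the number of items of that kind,
    e.g. {("inputs", "simple"): 2}.
    """
    total = 0
    for (parameter, level), n in counts.items():
        total += n * WEIGHTS[parameter][LEVELS.index(level)]
    return total


# Hypothetical counts: 2 simple inputs, 1 average output, 1 simple file.
example = {("inputs", "simple"): 2, ("outputs", "average"): 1, ("files", "simple"): 1}
print(count_total(example))  # 2*3 + 1*5 + 1*7 = 18
```

The result of this step is the Total_weight (count total) that the adjustment formula then scales.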

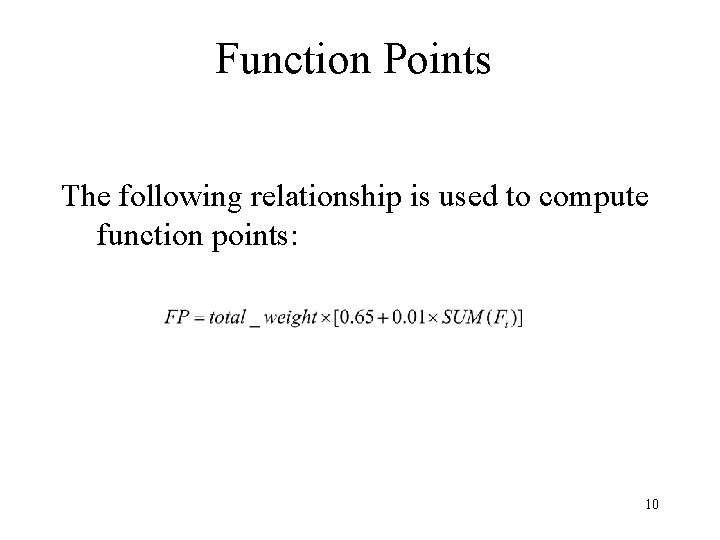

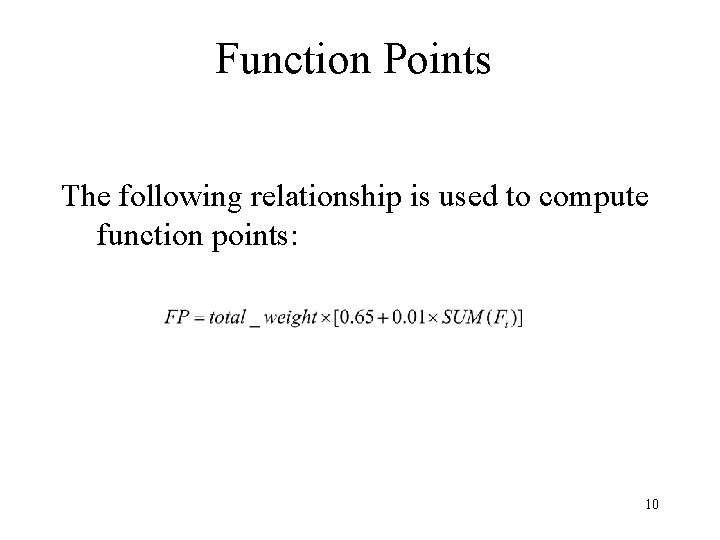

Function Points
- The following relationship is used to compute function points:

$$FP = \text{Total\_weight} \times \left(0.65 + 0.01 \times \sum_{i=1}^{14} F_i\right)$$

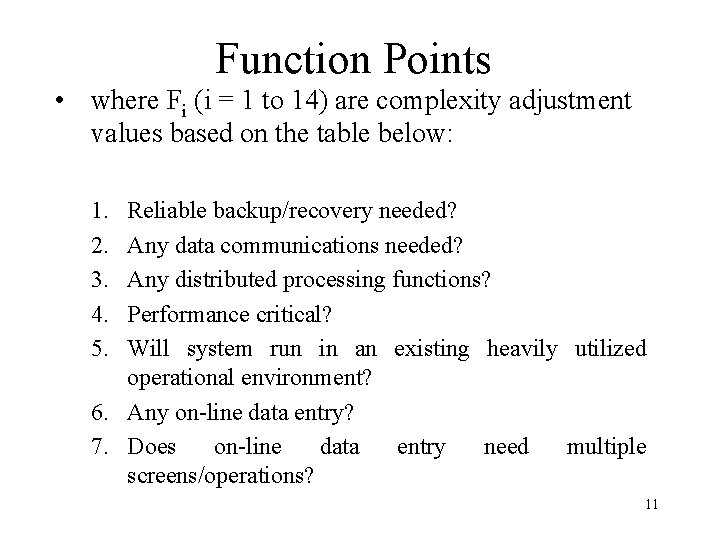

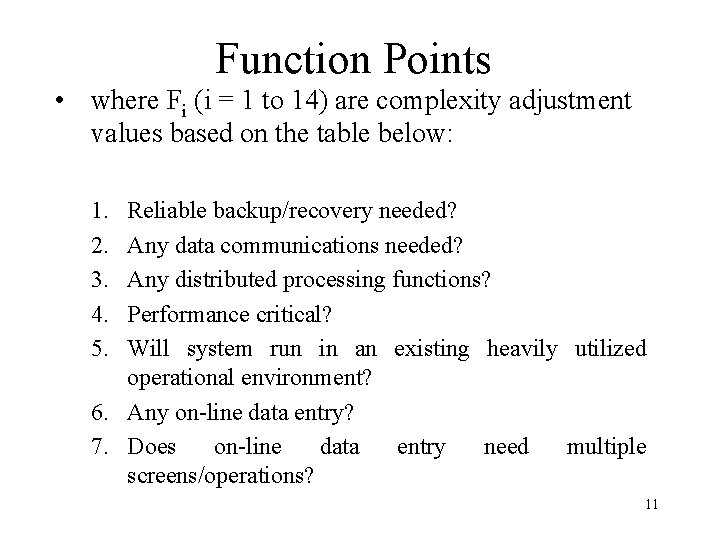

Function Points
- where F_i (i = 1 to 14) are complexity adjustment values based on the following questions:
  1. Is reliable backup/recovery needed?
  2. Are any data communications needed?
  3. Are there any distributed processing functions?
  4. Is performance critical?
  5. Will the system run in an existing, heavily utilized operational environment?
  6. Is there any on-line data entry?
  7. Does on-line data entry need multiple screens/operations?

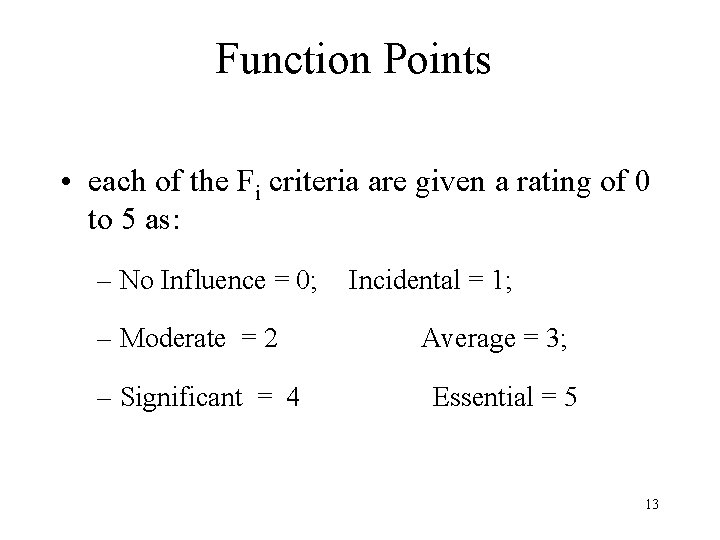

Function Points
  8. Are master files updated on-line?
  9. Are inputs, outputs, and queries complex?
  10. Is internal processing complex?
  11. Must the code be reusable?
  12. Are conversion and installation included in the design?
  13. Are multiple installations in different organizations needed in the design?
  14. Is the application designed to facilitate change and ease of use by the user?

Function Points
- Each of the F_i criteria is given a rating from 0 to 5:
  - No Influence = 0
  - Incidental = 1
  - Moderate = 2
  - Average = 3
  - Significant = 4
  - Essential = 5

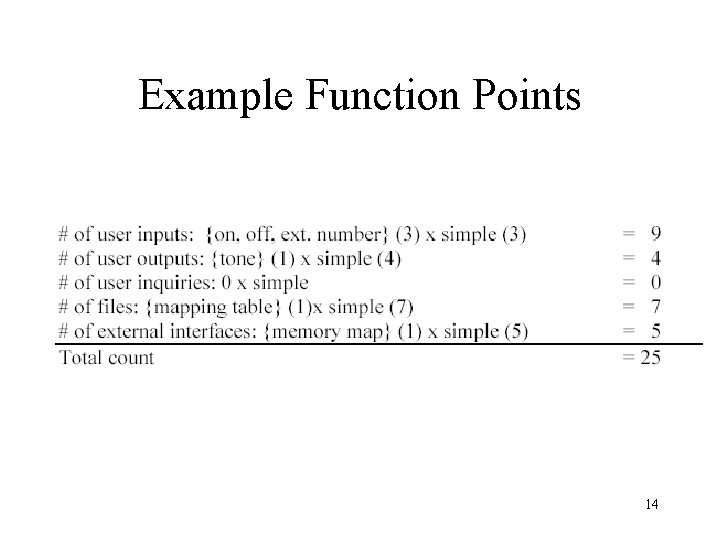

Example: Function Points

Example: Your PBX project
- Total of FPs (Total_weight) = 25
- F4 = 4, F10 = 4, all other F_i are set to 0, so the sum of all F_i = 8.
- FP = 25 × (0.65 + 0.01 × 8) = 18.25
- Lines of code in C = 18.25 × 128 LOC/FP = 2336 LOC
- For the given example, developers implemented their projects using about 2500 LOC, which is very close to the predicted value of 2336 LOC.
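The PBX calculation above can be reproduced with a short Python sketch. The function names are hypothetical; the 128 LOC/FP ratio for C is the figure used in the example, not a universal constant.

```python
def adjusted_function_points(count_total, ratings):
    """FP = count_total x (0.65 + 0.01 x sum(F_i)) for 14 ratings F_i in 0..5."""
    if len(ratings) != 14:
        raise ValueError("expected 14 complexity adjustment ratings")
    if any(not 0 <= f <= 5 for f in ratings):
        raise ValueError("each rating must be between 0 and 5")
    return count_total * (0.65 + 0.01 * sum(ratings))


def estimated_loc(fp, loc_per_fp):
    """Convert function points to LOC using a language-specific LOC/FP ratio."""
    return fp * loc_per_fp


# PBX example: Total_weight 25, F4 = 4 and F10 = 4, all other ratings 0.
ratings = [0] * 14
ratings[3] = 4   # F4 (Python lists are 0-indexed)
ratings[9] = 4   # F10
fp = adjusted_function_points(25, ratings)
print(round(fp, 2), round(estimated_loc(fp, 128)))  # 18.25 2336
```

With all ratings at 0 the multiplier is 0.65, and with all at 5 it is 1.35, so the adjustment can move the raw count by at most 35% in either direction.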

COCOMO
- The overall resources for a software project must be estimated:
  - development costs (i.e. programmer-months)
  - development interval
  - staffing levels
  - maintenance costs
- General approaches include:
  - expert judgment (past experience times a judgmental factor accounting for differences);
  - algorithmic (empirical models).

Empirical Estimation Models
- Several models exist, with varying success and ease/difficulty of use.
- We consider COCOMO (COnstructive COst MOdel).
- Decompose the s/w into units small enough to be able to estimate their LOC.
- Definitions:
  - KDSI: kilo delivered source instructions (statements), not including comments, test drivers, etc.
  - PM: person-months (152 hours).
- There are 3 levels of the COCOMO model: Basic, Intermediate, and Detailed (the last one is not covered here).

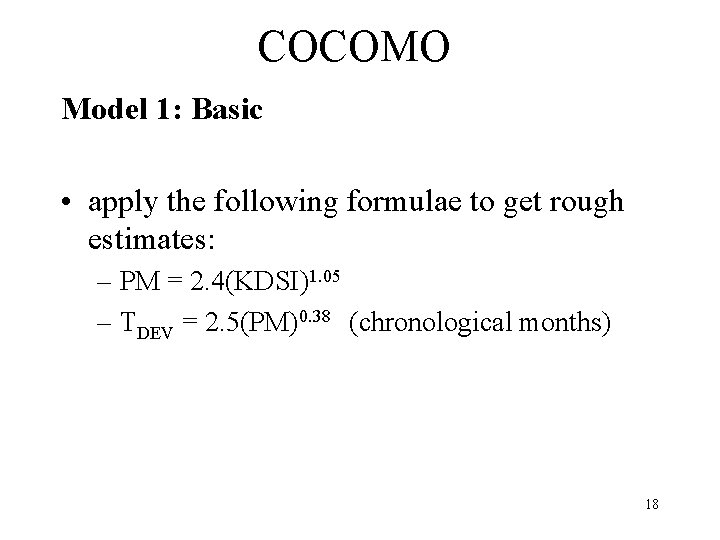

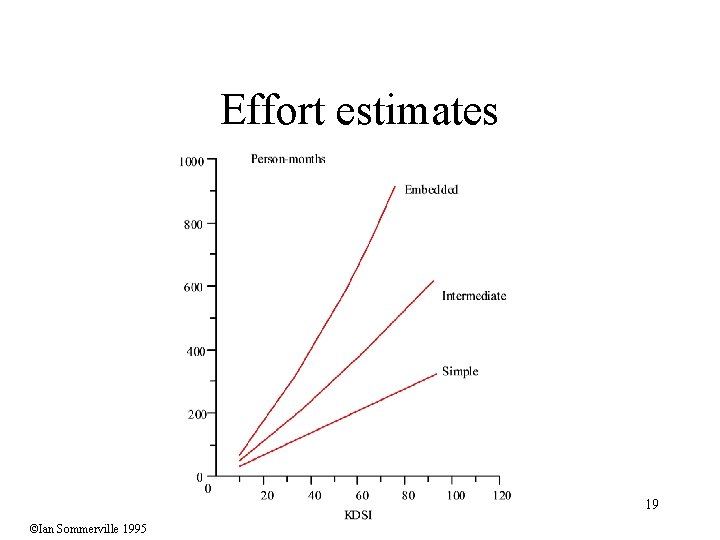

COCOMO Model 1: Basic
- Apply the following formulae to get rough estimates:
  - $PM = 2.4\,(KDSI)^{1.05}$
  - $TDEV = 2.5\,(PM)^{0.38}$ (chronological months)

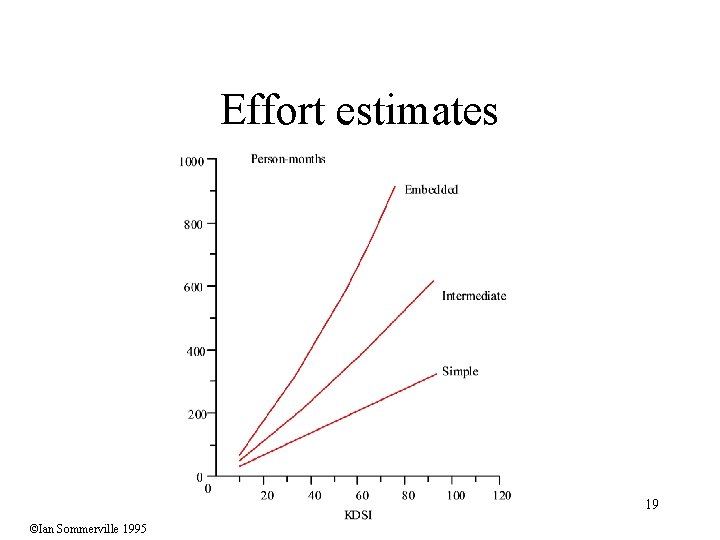

Effort estimates (©Ian Sommerville 1995)

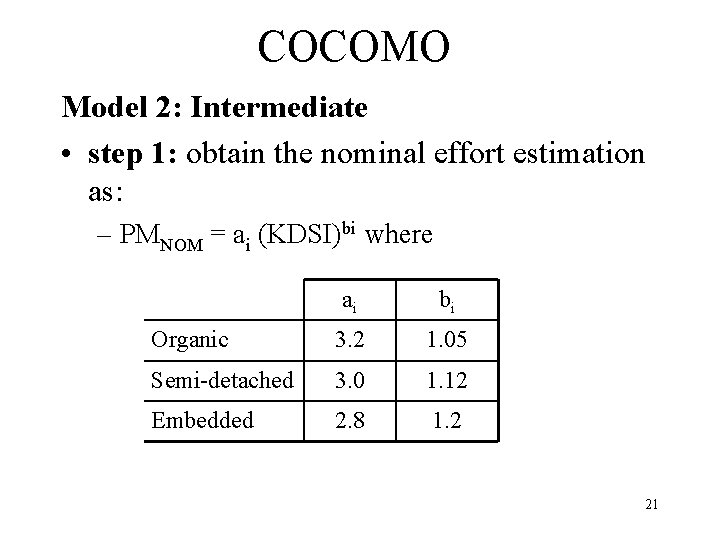

COCOMO examples
- Organic mode project, 32 KLOC
  - $PM = 2.4 \times (32)^{1.05} \approx 91$ person-months
  - $TDEV = 2.5 \times (91)^{0.38} \approx 14$ months
  - $N = 91/14 = 6.5$ people
- Embedded mode project, 128 KLOC
  - $PM = 3.6 \times (128)^{1.20} \approx 1216$ person-months
  - $TDEV = 2.5 \times (1216)^{0.32} \approx 24$ months
  - $N = 1216/24 \approx 51$ people

©Ian Sommerville 1995
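The two worked examples can be checked with a small Python sketch of basic COCOMO. The coefficient table holds only the organic and embedded values that appear in the examples above. The unrounded staffing figures come out slightly different from the slide's 6.5 and 51 people, because the slide divides already-rounded values.

```python
# Basic COCOMO coefficients (a, b, c, d) as used in the examples:
# PM = a * KDSI**b, TDEV = c * PM**d.
BASIC_COCOMO = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


def basic_cocomo(kdsi, mode):
    """Return (person-months, development months, average staff)."""
    a, b, c, d = BASIC_COCOMO[mode]
    pm = a * kdsi ** b
    tdev = c * pm ** d
    return pm, tdev, pm / tdev


for mode, size in (("organic", 32), ("embedded", 128)):
    pm, tdev, staff = basic_cocomo(size, mode)
    print(f"{mode}: PM={pm:.0f} TDEV={tdev:.0f} staff={staff:.1f}")
```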

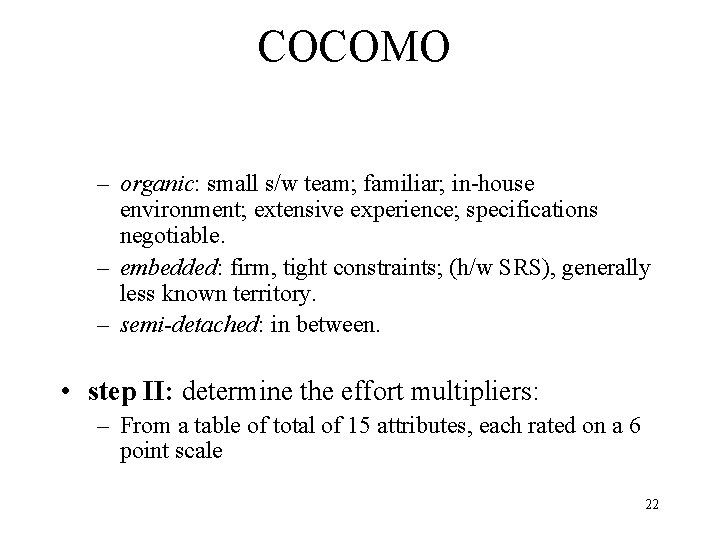

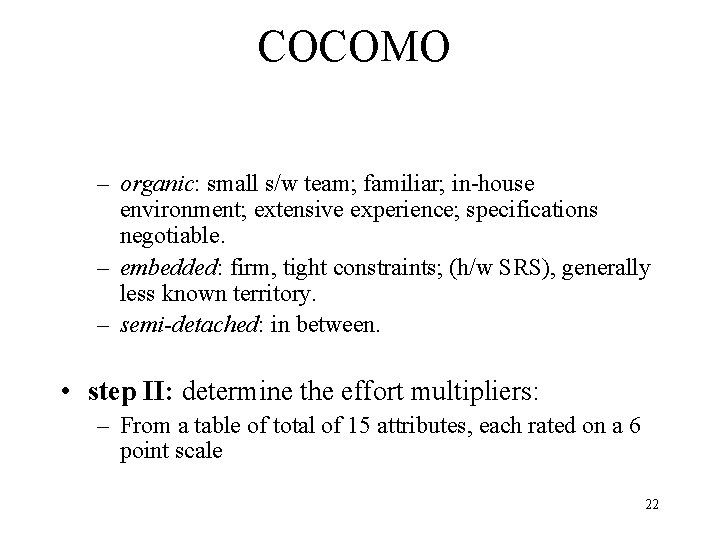

COCOMO Model 2: Intermediate
- Step I: obtain the nominal effort estimate as $PM_{NOM} = a_i\,(KDSI)^{b_i}$, where:

| Mode | $a_i$ | $b_i$ |
|---|---|---|
| Organic | 3.2 | 1.05 |
| Semi-detached | 3.0 | 1.12 |
| Embedded | 2.8 | 1.20 |

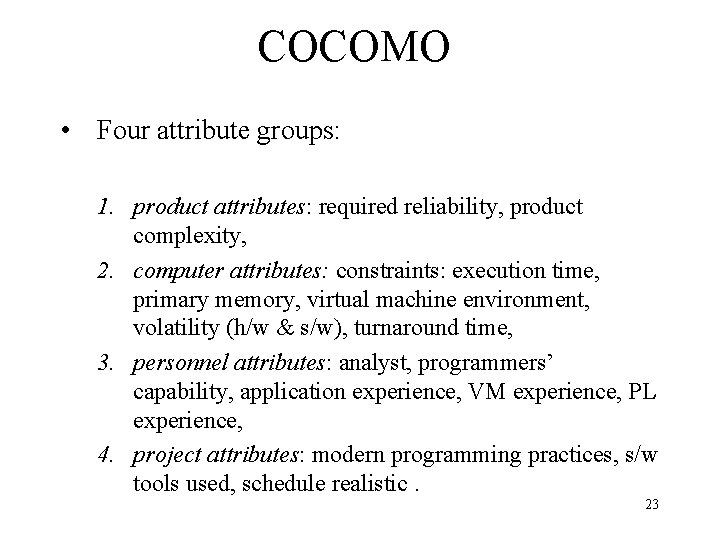

COCOMO
- organic: small s/w team; familiar, in-house environment; extensive experience; negotiable specifications.
- embedded: firm, tight constraints (h/w, SRS); generally less familiar territory.
- semi-detached: in between.
- Step II: determine the effort multipliers from a table of 15 attributes in total, each rated on a 6-point scale.

COCOMO
- Four attribute groups:
  1. product attributes: required reliability, database size, product complexity;
  2. computer attributes: execution time constraints, primary memory constraints, virtual machine volatility (h/w & s/w), turnaround time;
  3. personnel attributes: analyst capability, programmer capability, application experience, VM experience, programming language experience;
  4. project attributes: modern programming practices, s/w tools used, realistic schedule.

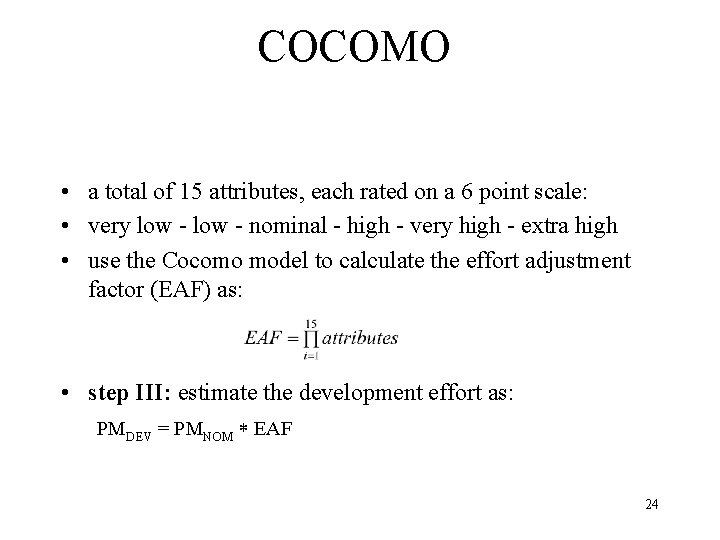

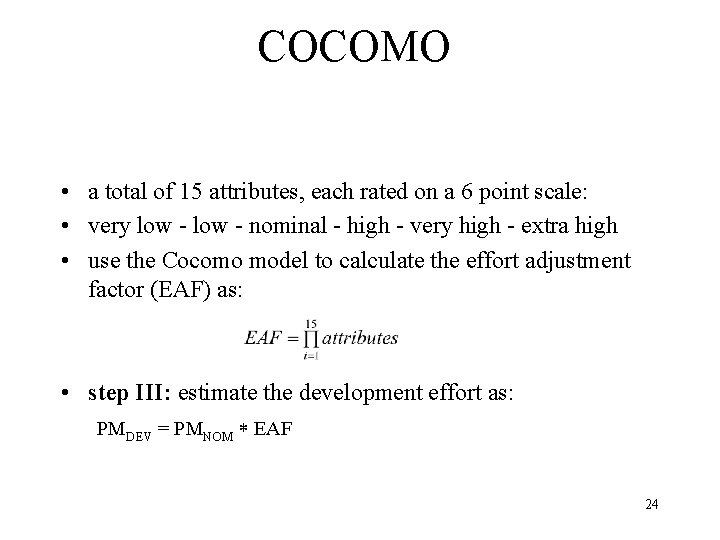

COCOMO
- A total of 15 attributes, each rated on a 6-point scale: very low, low, nominal, high, very high, extra high.
- Use the COCOMO tables to calculate the effort adjustment factor (EAF) as the product of the 15 effort multipliers:

$$EAF = \prod_{j=1}^{15} EM_j$$

- Step III: estimate the development effort as:

$$PM_{DEV} = PM_{NOM} \times EAF$$

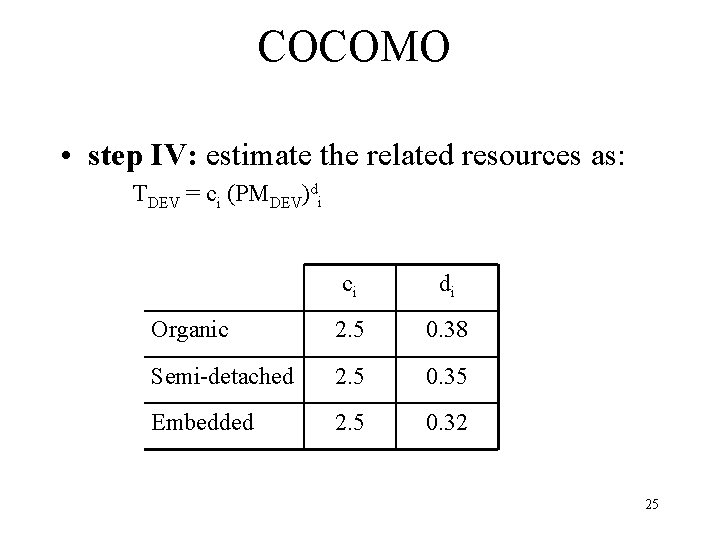

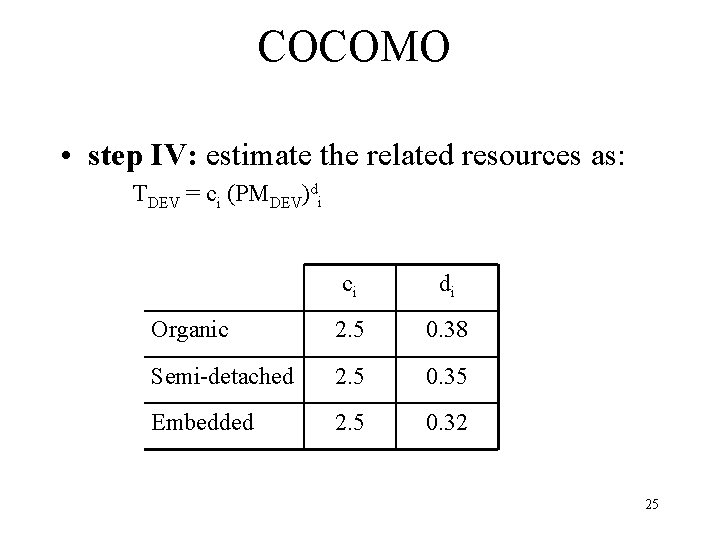

COCOMO
- Step IV: estimate the related resources (development time) as $TDEV = c_i\,(PM_{DEV})^{d_i}$, where:

| Mode | $c_i$ | $d_i$ |
|---|---|---|
| Organic | 2.5 | 0.38 |
| Semi-detached | 2.5 | 0.35 |
| Embedded | 2.5 | 0.32 |
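Steps I to IV of intermediate COCOMO chain together as in the Python sketch below. The coefficients come from the two tables above. The effort-multiplier values (1.15 and 0.91) are hypothetical stand-ins, not values quoted from the COCOMO attribute table.

```python
import math

# Intermediate COCOMO coefficients from the tables above.
NOMINAL = {"organic": (3.2, 1.05), "semi-detached": (3.0, 1.12), "embedded": (2.8, 1.20)}
SCHEDULE = {"organic": (2.5, 0.38), "semi-detached": (2.5, 0.35), "embedded": (2.5, 0.32)}


def intermediate_cocomo(kdsi, mode, multipliers):
    """Steps I-IV: nominal effort, EAF, adjusted effort, development time."""
    a, b = NOMINAL[mode]
    pm_nom = a * kdsi ** b                 # Step I: nominal effort
    eaf = math.prod(multipliers)           # Step II: product of effort multipliers
    pm_dev = pm_nom * eaf                  # Step III: adjusted development effort
    c, d = SCHEDULE[mode]
    tdev = c * pm_dev ** d                 # Step IV: development time (months)
    return pm_nom, eaf, pm_dev, tdev


# Hypothetical ratings: 13 attributes at nominal (1.0), two non-nominal multipliers.
multipliers = [1.0] * 13 + [1.15, 0.91]
pm_nom, eaf, pm_dev, tdev = intermediate_cocomo(32, "organic", multipliers)
print(f"PM_NOM={pm_nom:.1f} EAF={eaf:.4f} PM_DEV={pm_dev:.1f} TDEV={tdev:.1f}")
```

`math.prod` requires Python 3.8 or later. With every attribute rated nominal (all multipliers 1.0) the EAF is 1 and PM_DEV equals PM_NOM.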

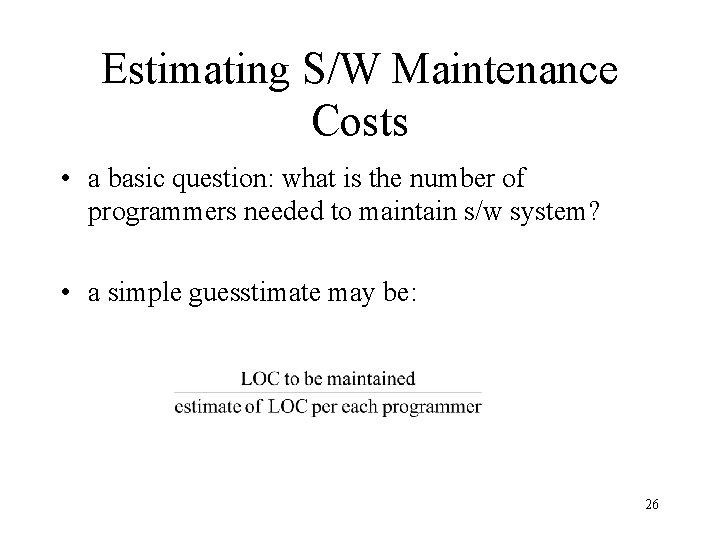

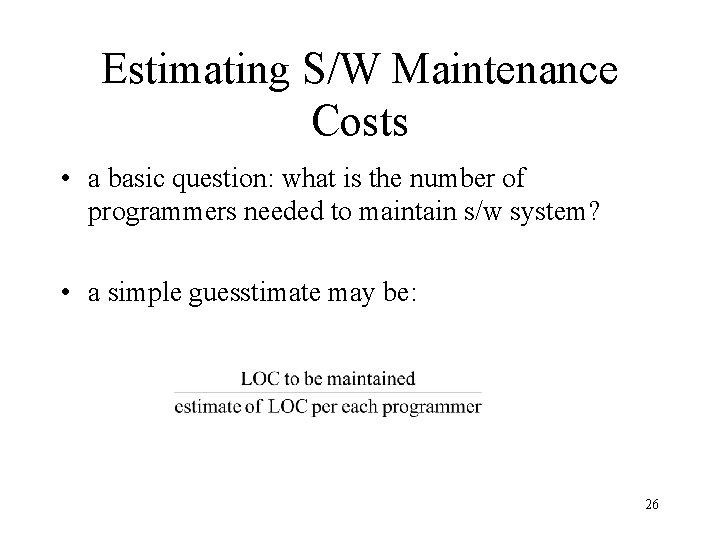

Estimating S/W Maintenance Costs
- A basic question: how many programmers are needed to maintain a s/w system?
- A simple guesstimate may be:

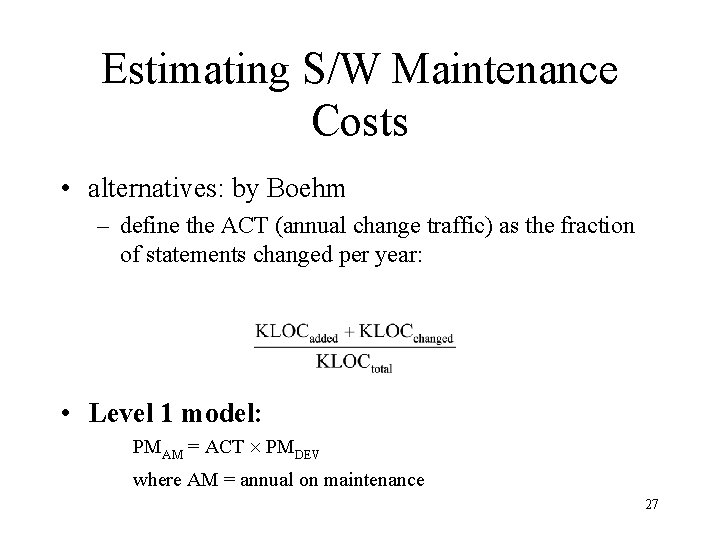

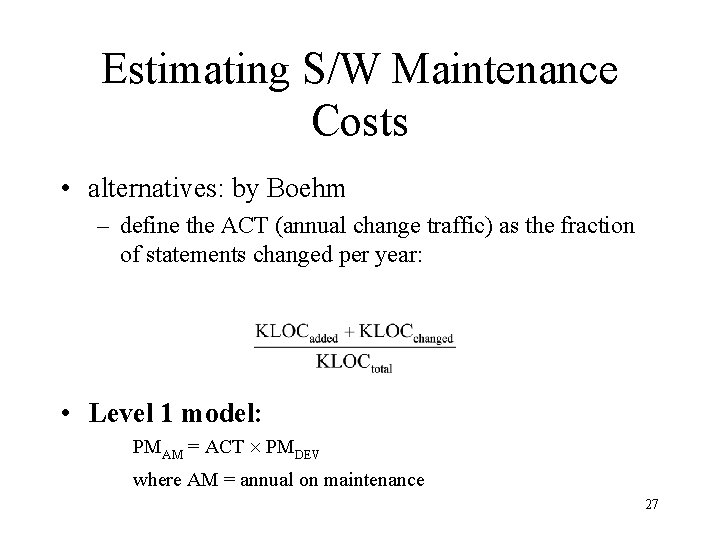

Estimating S/W Maintenance Costs
- Alternatives by Boehm:
  - define the ACT (annual change traffic) as the fraction of statements changed per year.
- Level 1 model: $PM_{AM} = ACT \times PM_{DEV}$, where AM = annual maintenance.

Estimating S/W Maintenance Costs
- Level 2 model: $PM_{AM} = ACT \times PM_{DEV} \times EAF_M$, where $EAF_M$ may be different from the EAF for development because of:
  - different personnel
  - experience level, motivation, etc.
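Both of Boehm's maintenance models reduce to one multiplication. The sketch below assumes a hypothetical project with 91 PM of development effort, an ACT of 15%, and an $EAF_M$ of 1.2; none of these numbers come from the original slides.

```python
def annual_maintenance_effort(act, pm_dev, eaf_m=1.0):
    """Boehm's annual maintenance estimate in person-months.

    Level 1: ACT * PM_DEV (leave eaf_m at 1.0).
    Level 2: ACT * PM_DEV * EAF_M.
    """
    return act * pm_dev * eaf_m


# Hypothetical project: 91 PM of development effort, 15% annual change traffic.
print(annual_maintenance_effort(0.15, 91))        # Level 1
print(annual_maintenance_effort(0.15, 91, 1.2))   # Level 2, hypothetical EAF_M
```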

Estimating S/W Maintenance Costs
- Factors that influence s/w productivity (and also maintenance):
  1. People factors: the size and expertise of the development (maintenance) organization.
  2. Problem factors: the complexity of the problem and the number of changes to design constraints or requirements.

Estimating S/W Maintenance Costs
  3. Process factors: the analysis, design, and test techniques, languages, CASE tools, etc.
  4. Product factors: the required reliability and performance of the system.
  5. Resource factors: the availability of CASE tools, hardware, and software resources.

White Box Metrics
- Linguistic:
  - LOC
  - Halstead's Software Science
- Structural:
  - McCabe's Cyclomatic Complexity
  - Information Flow Metric
- Hybrid:
  - Syntactic Interconnection

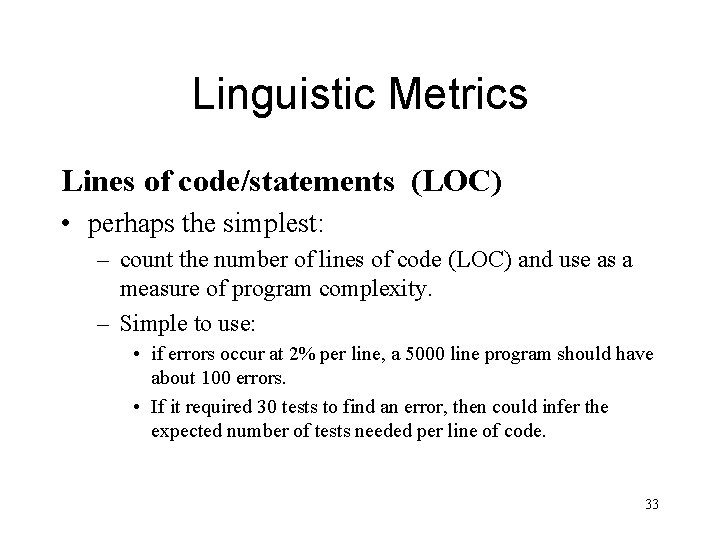

Types of White Box Metrics
- Linguistic metrics: measure properties of the program/specification text without interpreting or ordering its components.
- Structural metrics:
  - based on structural relations between objects in the program;
  - usually based on properties of control/data flowgraphs [e.g. number of nodes, links, nesting depth], fan-ins and fan-outs of procedures, etc.
- Hybrid metrics: based on a combination (or a function) of linguistic and structural properties of a program.

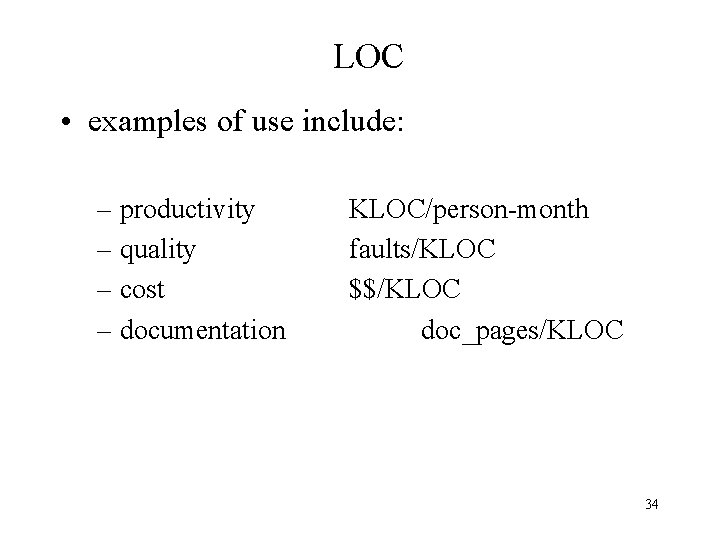

Linguistic Metrics: Lines of Code/Statements (LOC)
- Perhaps the simplest metric:
  - count the number of lines of code (LOC) and use it as a measure of program complexity.
  - Simple to use:
    - if errors occur at a rate of 2% per line, a 5000-line program should have about 100 errors.
    - if it required 30 tests to find an error, one could then infer the expected number of tests needed per line of code (30 × 0.02 = 0.6).

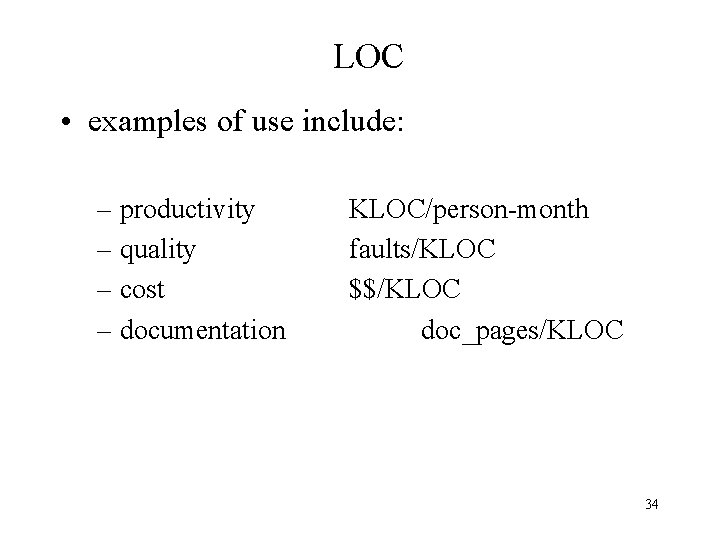

LOC
- Examples of use include:

| Use | Measure |
|---|---|
| productivity | KLOC/person-month |
| quality | faults/KLOC |
| cost | $/KLOC |
| documentation | doc_pages/KLOC |

LOC
- Various studies indicate that:
  - error rates range from 0.04% to 7% when measured against statement counts;
  - LOC is as good as other metrics for small programs;
  - LOC is optimistic for bigger programs;
  - LOC appears to be rather linear for small programs (<100 lines), but becomes increasingly non-linear with program size;
  - LOC correlates well with maintenance costs;
  - LOC is usually better than simple guesses or nothing at all.

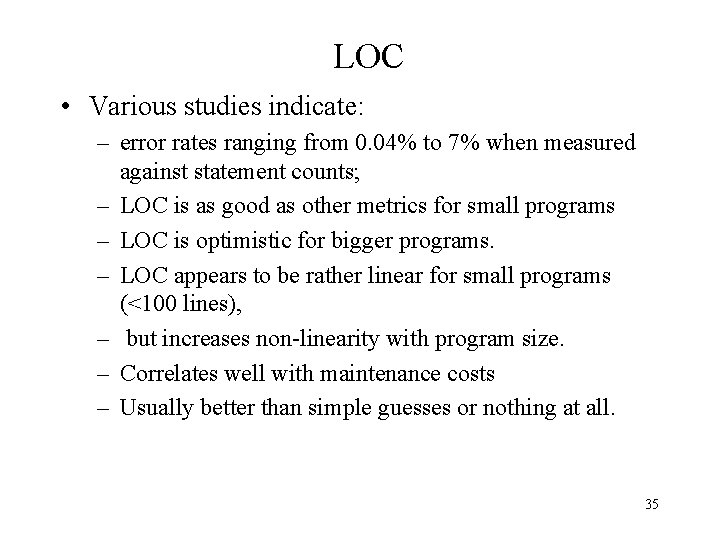

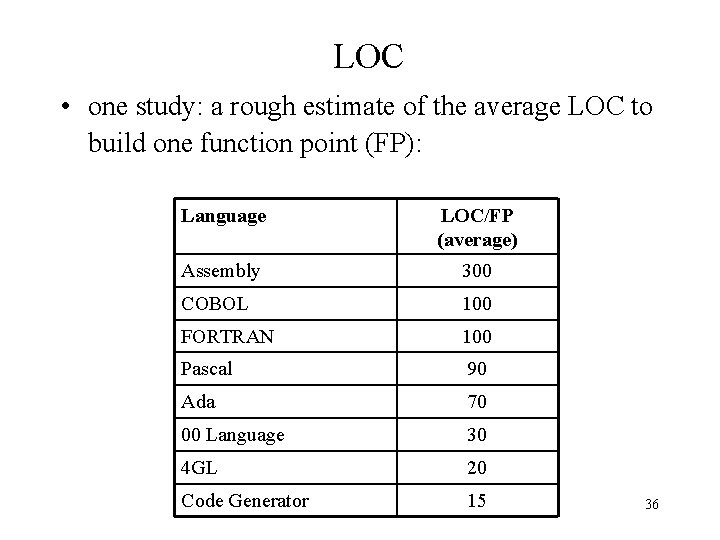

LOC
- One study gives a rough estimate of the average LOC needed to build one function point (FP):

| Language | LOC/FP (average) |
|---|---|
| Assembly | 300 |
| COBOL | 100 |
| FORTRAN | 100 |
| Pascal | 90 |
| Ada | 70 |
| OO Language | 30 |
| 4GL | 20 |
| Code Generator | 15 |
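The table can be used to turn a function point count into a rough size estimate per language, as in this Python sketch. The 18.25 FP input reuses the PBX example figure purely for illustration. These averages come from a single study and will not match every project (the PBX example itself used 128 LOC/FP for C).

```python
# Average LOC per function point from the study quoted above.
LOC_PER_FP = {
    "Assembly": 300, "COBOL": 100, "FORTRAN": 100, "Pascal": 90,
    "Ada": 70, "OO Language": 30, "4GL": 20, "Code Generator": 15,
}


def loc_estimate(function_points, language):
    """Rough LOC estimate = FP x average LOC/FP for the language."""
    return function_points * LOC_PER_FP[language]


for lang in ("Assembly", "Ada", "4GL"):
    print(lang, loc_estimate(18.25, lang))
```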

![Halstead's Software Science Metrics slide](https://slidetodoc.com/presentation_image_h/8c5b0411cce8ecde21ad69f3d541d03c/image-37.jpg)

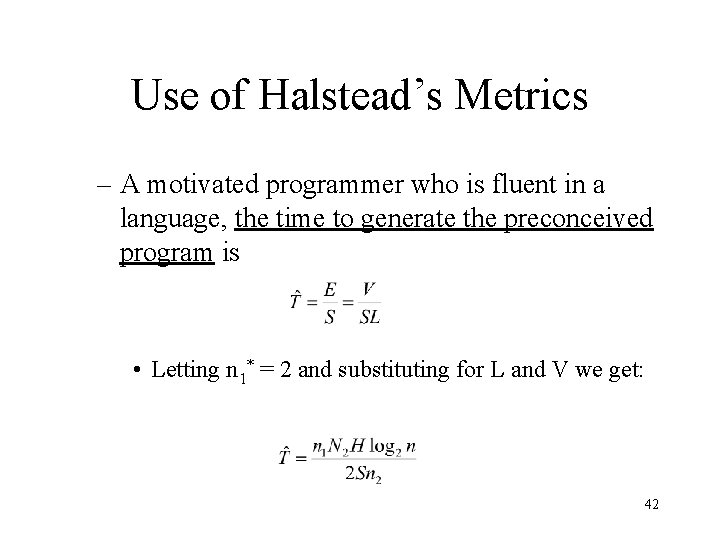

Halstead's Software Science Metrics
- Halstead [1977] based his "software science" on:
  - common sense,
  - information theory, and
  - psychology.
- His "theory" is still a matter of much controversy.
- A basic overview:

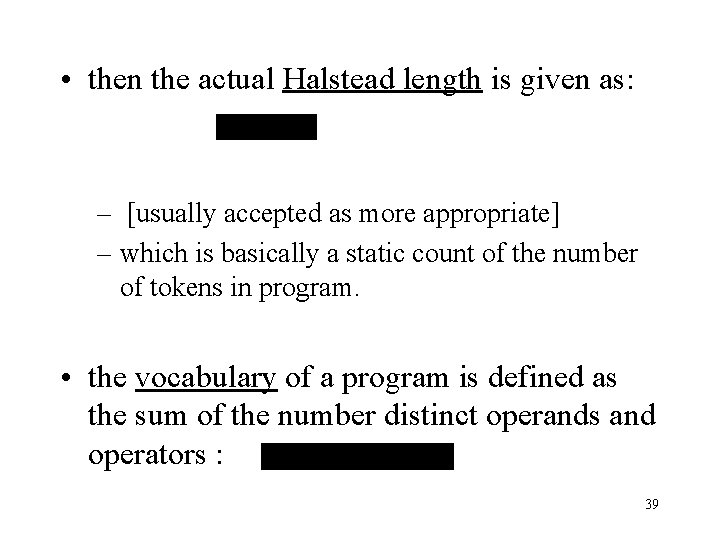

- Based on two easily obtained program parameters:
  - $n_1$ = the number of distinct operators in the program
  - $n_2$ = the number of distinct operands in the program
- Paired operators such as "begin ... end" and "repeat ... until" are usually treated as a single operator.
- The (estimated) program length is defined by:

$$\hat{N} = n_1 \log_2 n_1 + n_2 \log_2 n_2$$

- Define the following:
  - $N_1$ = total count of all operators in the program.
  - $N_2$ = total count of all operands in the program.

- Then the actual Halstead length is given as $N = N_1 + N_2$ [usually accepted as more appropriate], which is basically a static count of the number of tokens in the program.
- The vocabulary of a program is defined as the sum of the numbers of distinct operators and operands:

$$n = n_1 + n_2$$

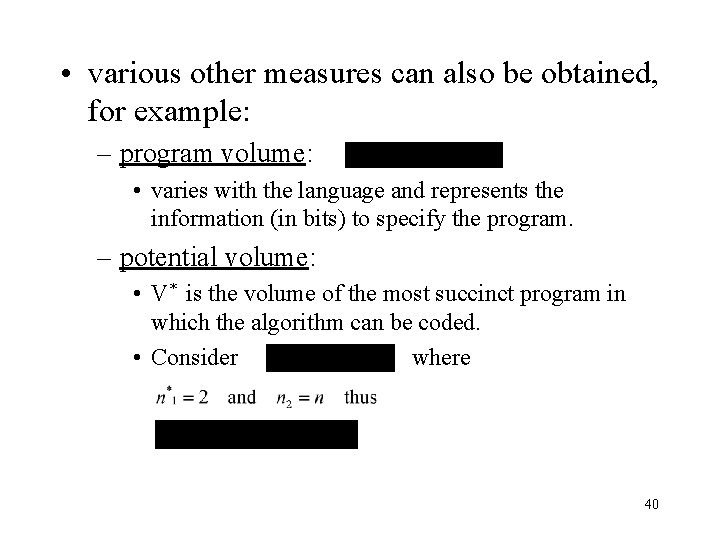

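- Various other measures can also be obtained, for example:
  - program volume: $V = N \log_2 n$
    - varies with the language and represents the information (in bits) needed to specify the program.
  - potential volume: $V^* = (n_1^* + n_2^*) \log_2 (n_1^* + n_2^*)$
    - $V^*$ is the volume of the most succinct program in which the algorithm can be coded, where $n_1^*$ is the minimum number of distinct operators and $n_2^*$ is the number of distinct input/output operands.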

- Program level: $L = V^* / V$
  - a measure of the level of abstraction at which the algorithm is formulated.
  - also estimated as $\hat{L} = \dfrac{n_1^*}{n_1} \cdot \dfrac{n_2}{N_2}$
- Program effort: $E = V / L$
  - the number of mental discriminations needed to implement the program (the implementation effort).
  - correlates with the effort needed for maintenance of small programs.

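Use of Halstead's Metrics
- For a motivated programmer who is fluent in a language, the time to generate the preconceived program is $T = E/S$, where $S$ is the Stroud number (Halstead used $S = 18$ mental discriminations per second).
- Letting $n_1^* = 2$ and substituting for $L$ and $V$, we get:

$$\hat{T} = \frac{n_1 N_2 N \log_2 n}{2\, n_2 S}$$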
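A minimal Python sketch of the Halstead measures defined above, assuming the caller has already split the program into operator and operand tokens. Token classification is itself a judgment call, and the example tokens are hypothetical. The defaults `n1_star=2` and `stroud=18` follow the substitution and Stroud number used above.

```python
import math


def halstead(operators, operands, n1_star=2, stroud=18):
    """Basic Halstead measures from token lists (duplicates included)."""
    n1, n2 = len(set(operators)), len(set(operands))   # distinct operators/operands
    N1, N2 = len(operators), len(operands)             # total occurrences
    n, N = n1 + n2, N1 + N2                            # vocabulary, length
    N_hat = n1 * math.log2(n1) + n2 * math.log2(n2)    # estimated length
    V = N * math.log2(n)                               # volume (bits)
    L_hat = (n1_star / n1) * (n2 / N2)                 # estimated program level
    E = V / L_hat                                      # effort
    T = E / stroud                                     # time (seconds)
    return {"n": n, "N": N, "N_hat": N_hat, "V": V, "L_hat": L_hat, "E": E, "T": T}


# Hypothetical tokens for "x = a + b; y = x * a;"
ops = ["=", "+", ";", "=", "*", ";"]
opnds = ["x", "a", "b", "y", "x", "a"]
m = halstead(ops, opnds)
print(m)
```

Here n1 = n2 = 4 and N1 = N2 = 6, so N̂ = 16, V = 12 × log2 8 = 36, L̂ = 1/3, E = 108, and T = 6 seconds.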

Known weaknesses:
- call depth is not taken into account:
  - a program with a sequence of 10 successive calls is considered more complex than one with 10 nested calls.
- an if-then-else sequence is given the same weight as a loop structure.
- the added complexity of nesting if-then-else statements or loops is not taken into account, etc.

Structural Metrics
- McCabe's Cyclomatic Complexity
  - Control Flow Graphs
- Information Flow Metric

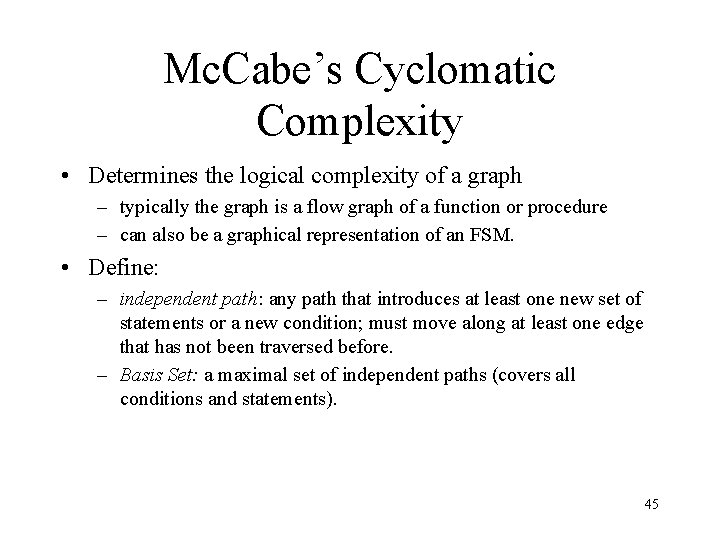

McCabe's Cyclomatic Complexity
- Determines the logical complexity of a graph:
  - typically the graph is a flow graph of a function or procedure;
  - it can also be a graphical representation of an FSM.
- Definitions:
  - independent path: any path that introduces at least one new set of statements or a new condition; it must move along at least one edge that has not been traversed before.
  - basis set: a maximal set of independent paths (covers all conditions and statements).

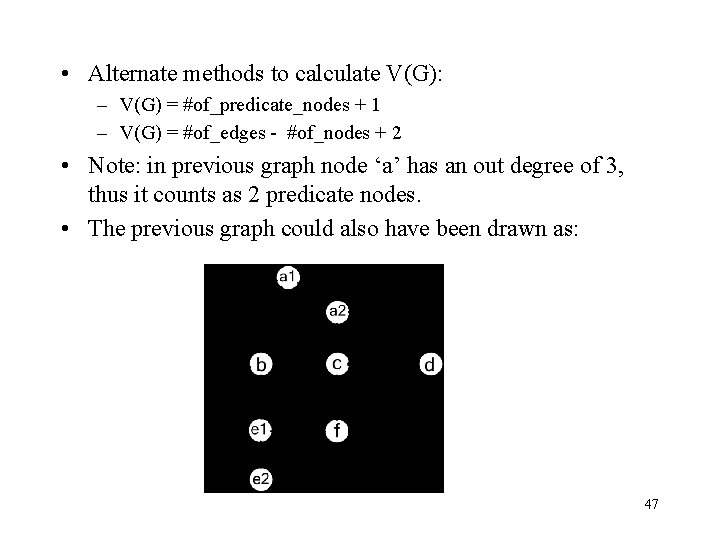

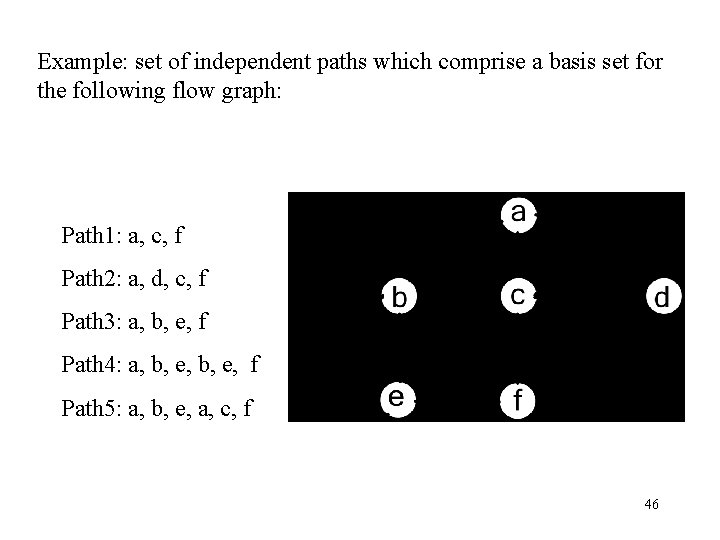

Example: a set of independent paths comprising a basis set for the following flow graph:
- Path 1: a, c, f
- Path 2: a, d, c, f
- Path 3: a, b, e, f
- Path 4: a, b, e, f
- Path 5: a, b, e, a, c, f

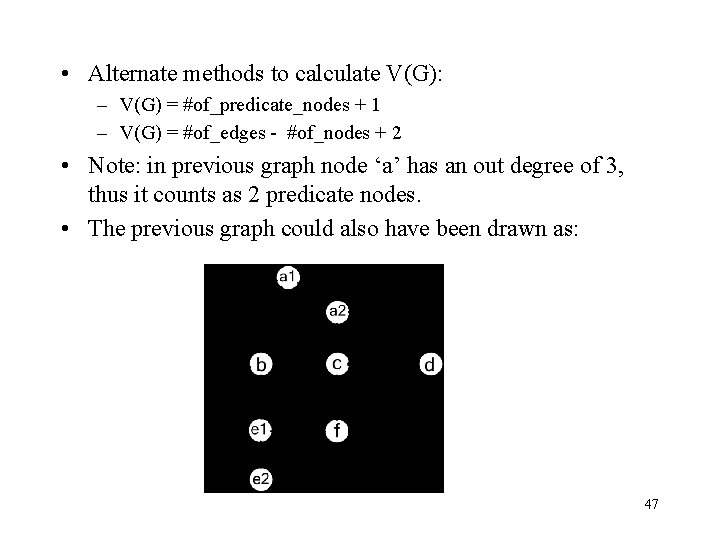

- Alternate methods to calculate V(G):
  - V(G) = number of predicate nodes + 1
  - V(G) = number of edges - number of nodes + 2
- Note: in the previous graph, node 'a' has an out-degree of 3, so it counts as 2 predicate nodes.
- The previous graph could also have been drawn as:

- A predicate node is a node in the graph with 2 or more outgoing arcs.
- In the general case, for a collection C of control graphs with k connected components, the complexity is equal to the sum of their individual complexities. That is, with e and n the total numbers of edges and nodes:

$$V(C) = \sum_{i=1}^{k} V(G_i) = e - n + 2k$$
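Both ways of computing V(G) can be sketched in Python for a graph given as a list of directed edges. The graph encoding and function names are hypothetical choices for illustration. The component count uses a small union-find, and the predicate-node version counts a node with out-degree d as d - 1 predicate nodes, as in the note about node 'a'.

```python
from collections import Counter


def cyclomatic_complexity(edges):
    """V = e - n + 2k for a directed graph given as (source, target) pairs.

    k (connected components) is found with union-find over the edges treated
    as undirected. Assumes every node appears in at least one edge.
    """
    nodes = {v for edge in edges for v in edge}
    parent = {v: v for v in nodes}

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]   # path halving
            v = parent[v]
        return v

    for u, v in edges:
        parent[find(u)] = find(v)
    k = len({find(v) for v in nodes})
    return len(edges) - len(nodes) + 2 * k


def predicate_count_complexity(edges):
    """V(G) = predicate nodes + 1 for a single connected flow graph."""
    out_degree = Counter(u for u, _ in edges)
    return sum(d - 1 for d in out_degree.values() if d > 1) + 1


if_else = [("1", "2"), ("1", "3"), ("2", "4"), ("3", "4")]
loop = [("w", "body"), ("body", "w"), ("w", "exit")]
print(cyclomatic_complexity(if_else), predicate_count_complexity(if_else))  # 2 2
print(cyclomatic_complexity(if_else + loop))  # 2 + 2 = 4 (two components)
```

The last line shows the summation rule: the two separate graphs together give e - n + 2k = 7 - 7 + 4 = 4.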

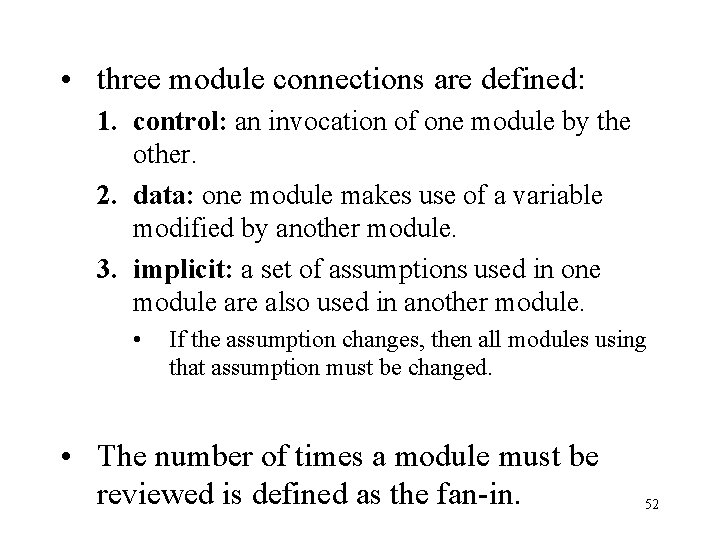

Information Flow Metric
- By Henry and Kafura:
  - attempts to measure the complexity of the code by measuring the flow of information from one procedure to another in terms of fan-ins and fan-outs.
- fan-in: the number of local flows into a procedure plus the number of global data structures read by the procedure.
- fan-out: the number of local flows from a procedure plus the number of global data structures updated by the procedure.

- Flows represent:
  - the information flow into a procedure via the argument lists, and
  - flows from the procedure due to return values of function calls.
- Thus, the complexity of the procedure p is given by:

$$C_p = (\text{fan-in} \times \text{fan-out})^2$$
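A minimal Python sketch of the Henry and Kafura measure, assuming the local-flow counts and the sets of global structures read and updated have already been extracted for each procedure. The `Procedure` record and the sample procedure are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Procedure:
    """Hypothetical summary of one procedure's information flows."""
    name: str
    local_flows_in: int
    local_flows_out: int
    globals_read: set = field(default_factory=set)
    globals_updated: set = field(default_factory=set)

    def fan_in(self):
        return self.local_flows_in + len(self.globals_read)

    def fan_out(self):
        return self.local_flows_out + len(self.globals_updated)

    def complexity(self):
        # Henry and Kafura: C_p = (fan-in x fan-out)^2
        return (self.fan_in() * self.fan_out()) ** 2


p = Procedure("update_totals", local_flows_in=2, local_flows_out=1,
              globals_read={"config"}, globals_updated={"log", "stats"})
print(p.fan_in(), p.fan_out(), p.complexity())  # 3 3 81
```

The squaring makes the measure grow quickly: doubling both fan-in and fan-out multiplies $C_p$ by 16.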

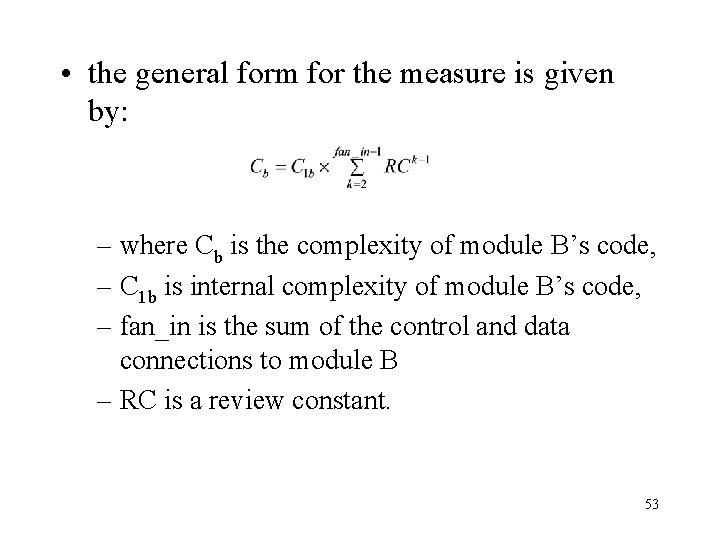

Hybrid Metrics
- One example is Woodfield's Syntactic Interconnection Model:
  - attempts to relate programming effort to time.
- A connection relationship between two modules A and B:
  - is a partial ordering between the modules,
  - i.e. to understand the function of module A, one must first understand the function of module B;
  - denoted as A → B.

- Three module connections are defined:
  1. control: an invocation of one module by the other.
  2. data: one module makes use of a variable modified by another module.
  3. implicit: a set of assumptions used in one module is also used in another module.
     - If the assumption changes, then all modules using that assumption must be changed.
- The number of times a module must be reviewed is defined as its fan-in.

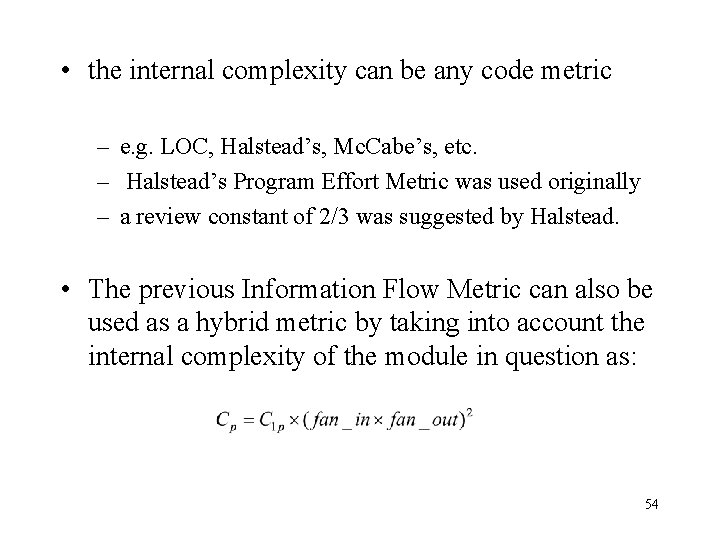

- The general form of the measure is given by:
  - where $C_b$ is the complexity of module B's code,
  - $C_{1b}$ is the internal complexity of module B's code,
  - fan_in is the sum of the control and data connections to module B,
  - RC is a review constant.

- The internal complexity can be any code metric, e.g. LOC, Halstead's, McCabe's, etc.:
  - Halstead's Program Effort metric was used originally;
  - a review constant of 2/3 was suggested by Halstead.
- The previous Information Flow Metric can also be used as a hybrid metric by taking into account the internal complexity $C_{ip}$ of the module in question:

$$C_p = C_{ip} \times (\text{fan-in} \times \text{fan-out})^2$$
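The hybrid form simply weights the structural term by an internal code metric, as this Python sketch shows. The choice of LOC as $C_{ip}$ and the sample values are hypothetical; Halstead's E or McCabe's V(G) could be passed in the same way.

```python
def hybrid_information_flow(internal_complexity, fan_in, fan_out):
    """C_p = C_ip x (fan-in x fan-out)^2, with C_ip any internal code metric."""
    return internal_complexity * (fan_in * fan_out) ** 2


# Hypothetical procedure: 40 LOC as C_ip, fan-in 3, fan-out 3.
print(hybrid_information_flow(40, 3, 3))  # 40 * 81 = 3240
```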

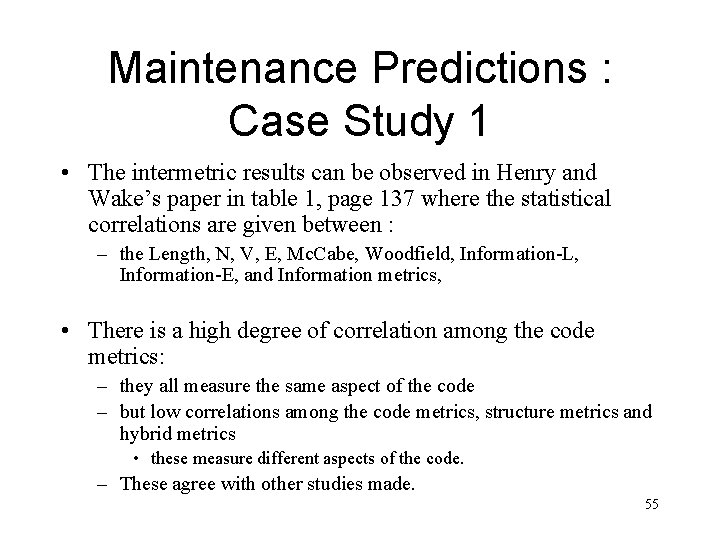

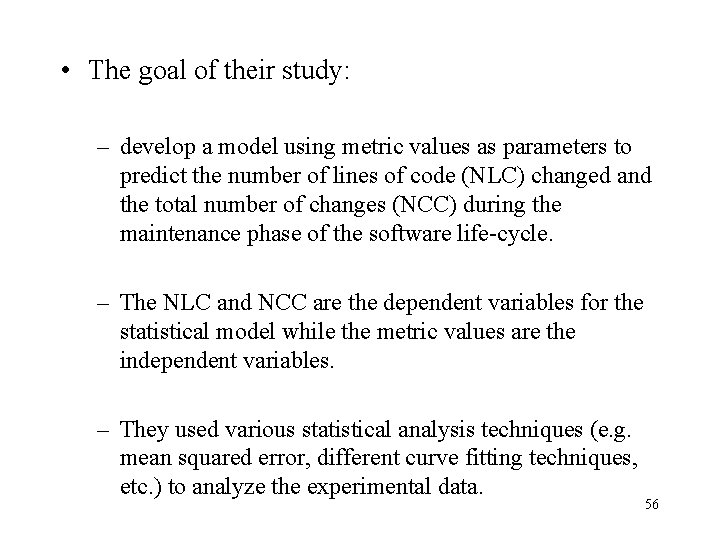

Maintenance Predictions: Case Study 1
- The intermetric results can be found in Henry and Wake's paper (Table 1, page 137), where the statistical correlations are given between:
  - the Length, N, V, E, McCabe, Woodfield, Information-L, Information-E, and Information metrics.
- There is a high degree of correlation among the code metrics:
  - they all measure the same aspect of the code;
  - but there are low correlations among the code metrics, structure metrics, and hybrid metrics:
    - these measure different aspects of the code.
  - These results agree with other studies.

- The goal of their study:
  - develop a model using metric values as parameters to predict the number of lines of code changed (NLC) and the total number of changes (NCC) during the maintenance phase of the software life-cycle.
  - NLC and NCC are the dependent variables of the statistical model, while the metric values are the independent variables.
  - They used various statistical analysis techniques (e.g. mean squared error, different curve-fitting techniques, etc.) to analyze the experimental data.

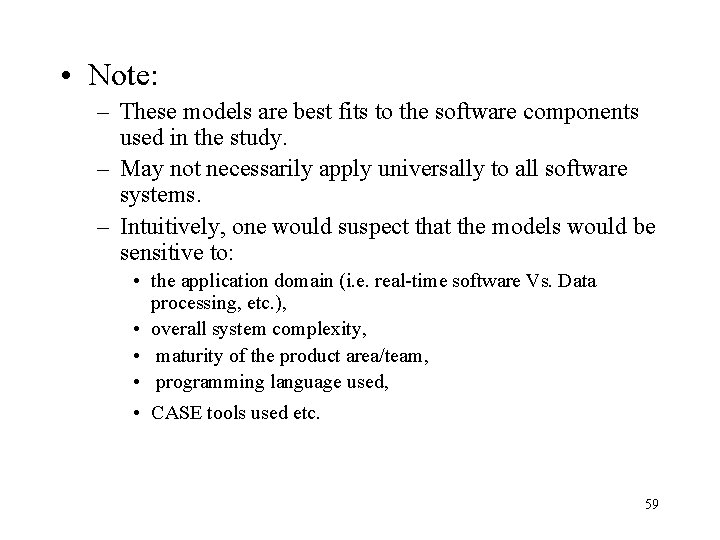

- The tables below summarize the overall top candidate models obtained in their research (see their paper for the details).

Best overall NLC models:
- NLC = 0.42997221 + 0.000050156*E - 0.000000199210*INF-E
- NLC = 0.45087158 + 0.000049895*E - 0.000173851*INF-L
- NLC = 0.60631548 + 0.000050843*E - 0.000029819*WOOD 0.000000177341*INF-E
- NLC = 0.33675906 + 0.000049889*E
- NLC = 1.51830192 + 0.000054724*E - 0.10084685*V(G) - 0.000000161798*INF-E
- NLC = 1.45518829 + 0.00005456*E - 0.10199539*V(G)

Best overall NCC models:
- NCC = 0.34294979 + 0.000011418*E
- NCC = 0.36846091 + 0.000011488*E - 0.00000005238*INF-E
- NCC = 0.38710077 + 0.000011583*E - 0.0000068966*WOOD
- NCC = 0.25250119 + 0.003972857*N - 0.000598677*V + 0.000014538*E
- NCC = 0.32020501 + 0.01369264*L - 0.000481846*V + 0.000012304*E

- Note:
  - These models are best fits to the software components used in the study and may not necessarily apply universally to all software systems.
  - Intuitively, one would suspect that the models would be sensitive to:
    - the application domain (i.e. real-time software vs. data processing, etc.),
    - overall system complexity,
    - maturity of the product area/team,
    - the programming language used,
    - the CASE tools used, etc.

- Some good correlations were found:
  - one procedure had a predicted NLC of 3.86, while the actual number of lines changed was 3;
  - another had a predicted NLC of 0.07, while the number of lines changed was zero.
- The experimental results look encouraging but need further research.

- The purpose of generating the NLC and NCC models:
  - is not to obtain exact predicted values,
  - but rather to use these values as an ordering criterion to rank the components by likelihood of maintenance.
  - If this is done before system release, future maintenance could be prevented (reduced) by changing or redesigning the higher-ranking components.
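Ranking by predicted maintenance can be sketched in Python using the first NLC and NCC models from the tables above. The component names and their E and INF-E values are hypothetical. The code treats INF-E as an already computed metric value, which is an assumption; see the paper for how it was measured.

```python
# First NLC and NCC models from the tables above.
# E = Halstead effort; inf_e = the INF-E information-flow metric value.
def predicted_nlc(E, inf_e):
    return 0.42997221 + 0.000050156 * E - 0.000000199210 * inf_e


def predicted_ncc(E):
    return 0.34294979 + 0.000011418 * E


# Hypothetical components: name -> (Halstead E, INF-E)
components = {
    "parser": (60000, 1_000_000),
    "ui": (20000, 50_000),
    "util": (5000, 0),
}

# Highest predicted NLC first: candidates for redesign or extra testing.
ranking = sorted(components, key=lambda c: predicted_nlc(*components[c]), reverse=True)
for name in ranking:
    E, inf_e = components[name]
    print(f"{name}: NLC={predicted_nlc(E, inf_e):.2f} NCC={predicted_ncc(E):.2f}")
```

Only the order matters here, in line with the purpose stated above: the absolute NLC values are not meant to be read as exact predictions.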

- To properly use these models (or others):
  - the organization would first be required to collect a significant amount of error or maintenance data before the models' results can be properly interpreted.
- Models such as these could be used during the coding stage:
  - to identify highly maintenance-prone components,
  - to redesign/recode them to improve the expected results.
- They are also useful during the test phase:
  - to identify those components that appear to require more intensive testing,
  - to help estimate the test effort.

![Case Study 2 slide](https://slidetodoc.com/presentation_image_h/8c5b0411cce8ecde21ad69f3d541d03c/image-63.jpg)

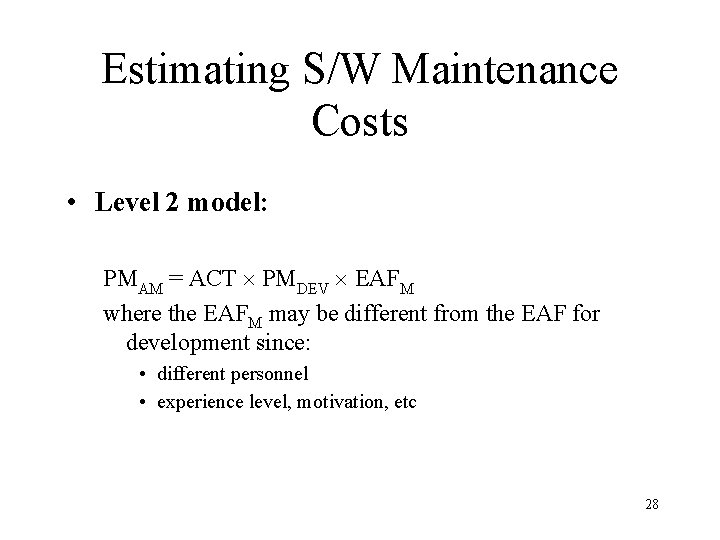

Case Study 2
- Another interesting case study was performed by Basili [2] et al.
- Their major results were:
  - the development of a predictive model for the software maintenance release process,
  - measurement-based lessons learned about the process,
  - lessons learned from the establishment of a maintenance improvement program.

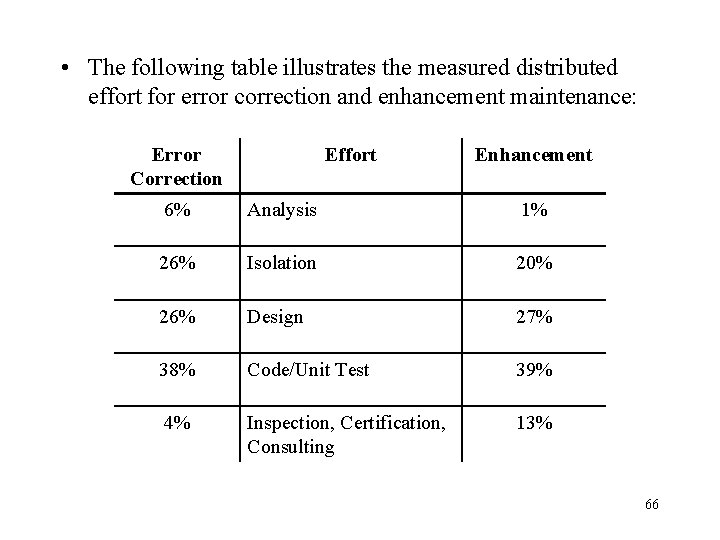

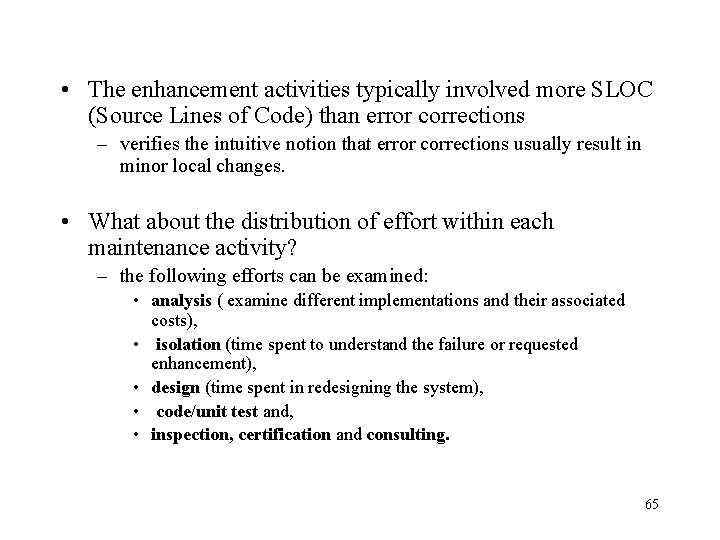

- Maintenance types considered, within the Flight Dynamics Division (FDD) of the NASA Goddard Space Flight Center:
  - error correction,
  - enhancement, and
  - adaptation.
- The following table illustrates the average distribution of effort across maintenance types:

| Maintenance Activity | Effort |
|---|---|
| Enhancement | 61% |
| Correction | 14% |
| Adaptation | 5% |
| Other | 20% |

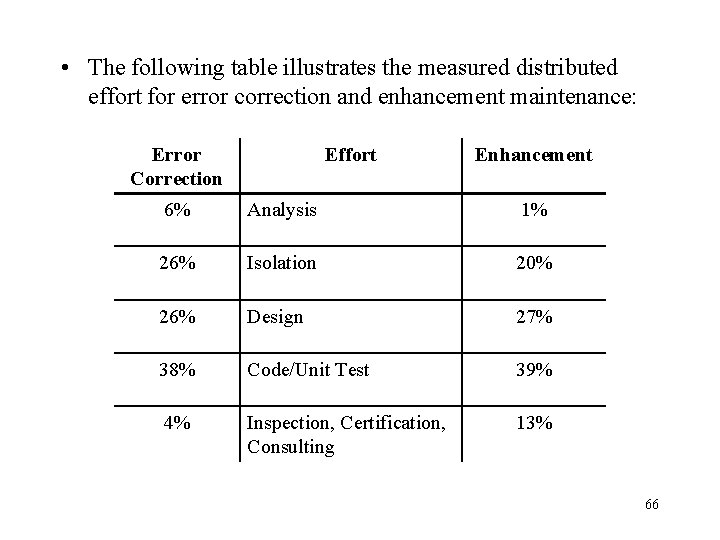

- The enhancement activities typically involved more SLOC (Source Lines of Code) than error corrections:
  - this verifies the intuitive notion that error corrections usually result in minor, local changes.
- What about the distribution of effort within each maintenance activity? The following efforts can be examined:
  - analysis (examining different implementations and their associated costs),
  - isolation (time spent to understand the failure or requested enhancement),
  - design (time spent redesigning the system),
  - code/unit test, and
  - inspection, certification, and consulting.

- The following table illustrates the measured distribution of effort for error correction and enhancement maintenance:

| Activity | Error Correction | Enhancement |
|---|---|---|
| Analysis | 6% | 1% |
| Isolation | 26% | 20% |
| Design | 26% | 27% |
| Code/Unit Test | 38% | 39% |
| Inspection, Certification, Consulting | 4% | 13% |

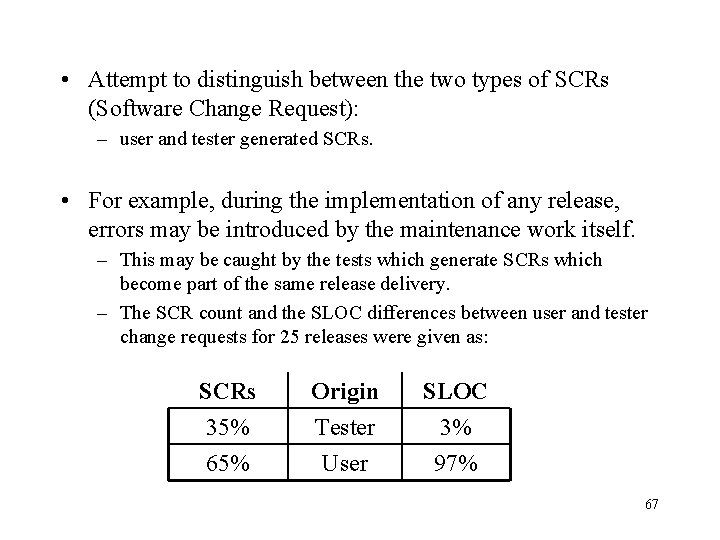

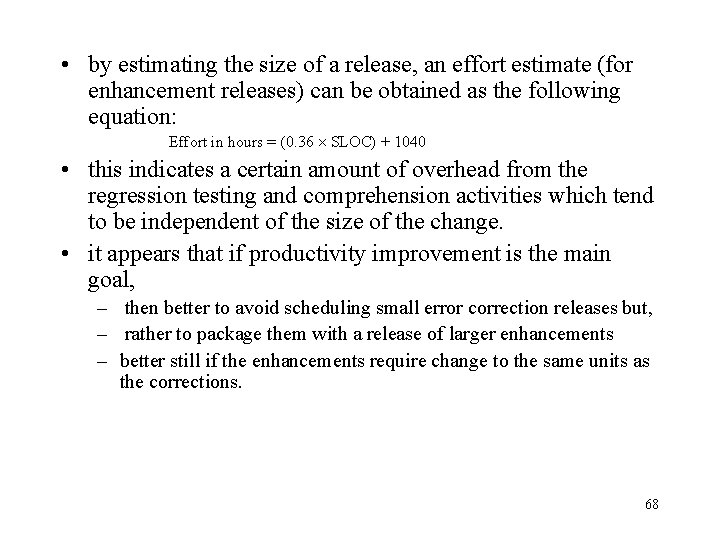

- An attempt was made to distinguish between the two types of SCRs (Software Change Requests): user-generated and tester-generated SCRs.
  - For example, during the implementation of any release, errors may be introduced by the maintenance work itself.
  - These may be caught by tests, which generate SCRs that become part of the same release delivery.
- The SCR count and SLOC differences between user and tester change requests for 25 releases were:

| Origin | SCRs | SLOC |
|---|---|---|
| Tester | 35% | 3% |
| User | 65% | 97% |

- By estimating the size of a release, an effort estimate (for enhancement releases) can be obtained from the following equation:

$$\text{Effort (hours)} = 0.36 \times \text{SLOC} + 1040$$

- This indicates a certain amount of overhead from regression testing and comprehension activities, which tends to be independent of the size of the change.
- It appears that if productivity improvement is the main goal, then:
  - it is better to avoid scheduling small error-correction releases,
  - and rather to package them with a release of larger enhancements,
  - better still if the enhancements require changes to the same units as the corrections.
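The effect of the fixed 1040-hour term is easy to see in a small Python sketch. It compares three hypothetical 200-SLOC changes shipped as separate releases against the same changes bundled into one. The equation was fitted for enhancement releases, so applying it to small correction releases is an extrapolation made here only for illustration.

```python
def release_effort_hours(sloc):
    """Effort (hours) = 0.36 x SLOC + 1040 for an enhancement release."""
    return 0.36 * sloc + 1040


# Hypothetical: three 200-SLOC changes shipped separately vs. bundled together.
separate = sum(release_effort_hours(200) for _ in range(3))
bundled = release_effort_hours(3 * 200)
print(round(separate), round(bundled))  # 3336 1256
```

The variable part (216 hours) is identical in both cases. The whole difference comes from paying the fixed overhead three times instead of once.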