ECE 4371 Fall 2017 Introduction to Telecommunication Engineering

- Slides: 25

ECE 4371, Fall 2017: Introduction to Telecommunication Engineering/Telecommunication Laboratory. Zhu Han, Department of Electrical and Computer Engineering. Class 16, Oct. 30th, 2017.

Outline

- Convolutional code
  - Encoder
  - Decoder
- Viterbi
- Interleaver

Convolutional Code Introduction

- Convolutional codes map information to code bits sequentially by convolving a sequence of information bits with “generator” sequences.
- A convolutional encoder encodes K information bits to N > K code bits at each time step.
- Convolutional codes can be regarded as block codes whose encoder has a structure that lets the encoding operation be expressed as a convolution.

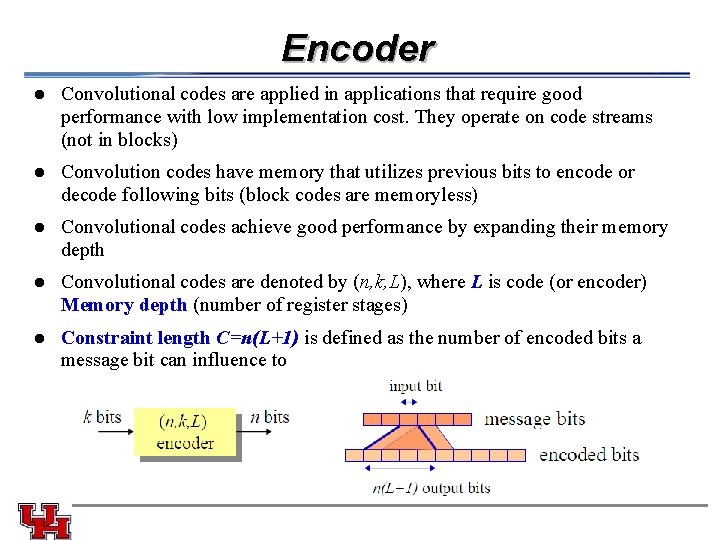

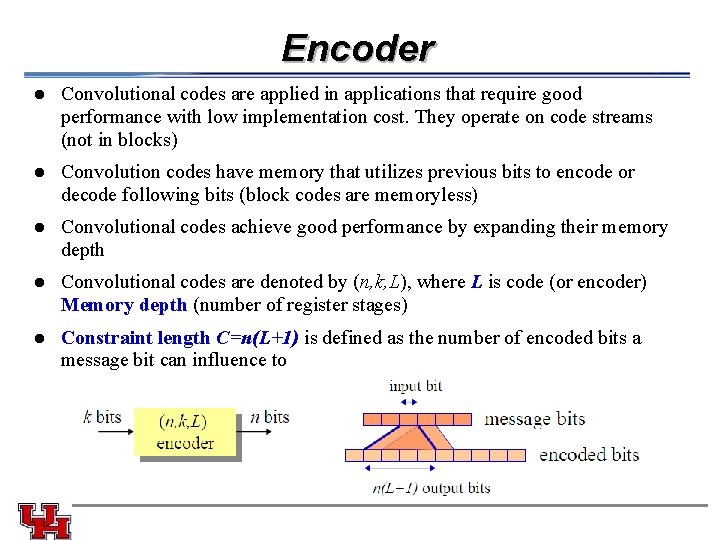

Encoder

- Convolutional codes are used in applications that require good performance at low implementation cost. They operate on code streams, not on blocks.
- Convolutional codes have memory: previous bits are used to encode or decode the following bits (block codes are memoryless).
- Convolutional codes achieve good performance by expanding their memory depth.
- Convolutional codes are denoted by (n, k, L), where L is the code (or encoder) memory depth (number of register stages).
- The constraint length C = n(L+1) is defined as the number of encoded bits that a single message bit can influence.

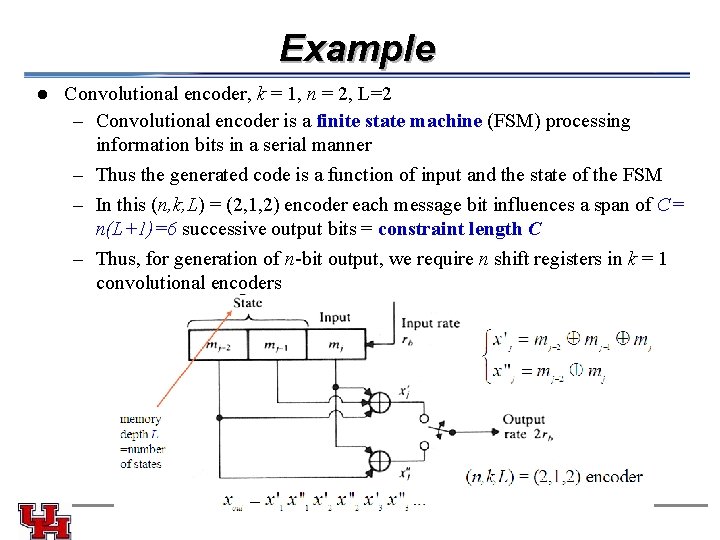

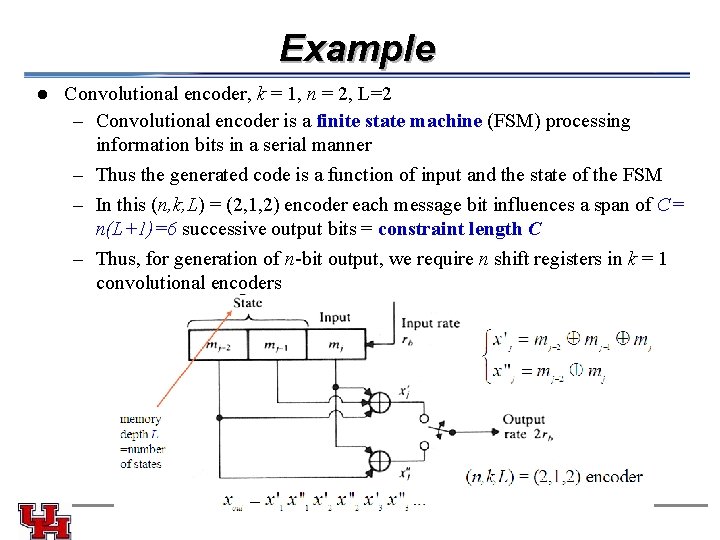

Example: convolutional encoder, k = 1, n = 2, L = 2

- A convolutional encoder is a finite state machine (FSM) that processes information bits serially.
- Thus the generated code is a function of the input and the state of the FSM.
- In this (n, k, L) = (2, 1, 2) encoder, each message bit influences a span of C = n(L+1) = 6 successive output bits, which is the constraint length C.
- Thus, for generation of n-bit output, we require n shift registers in k = 1 convolutional encoders.

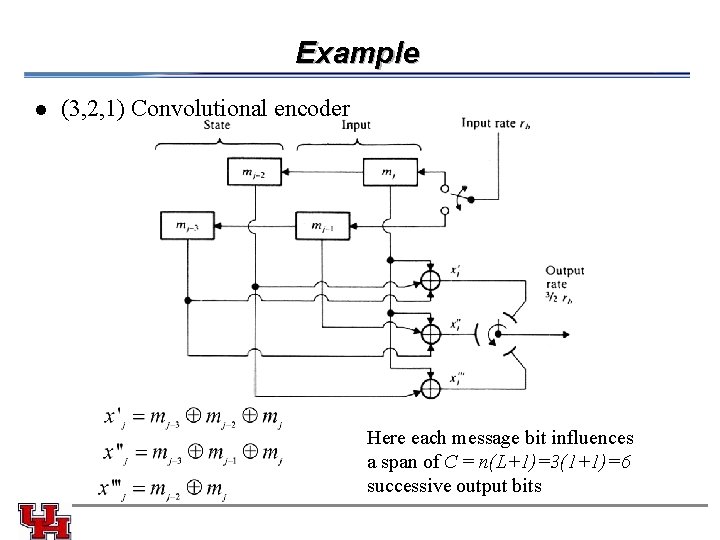

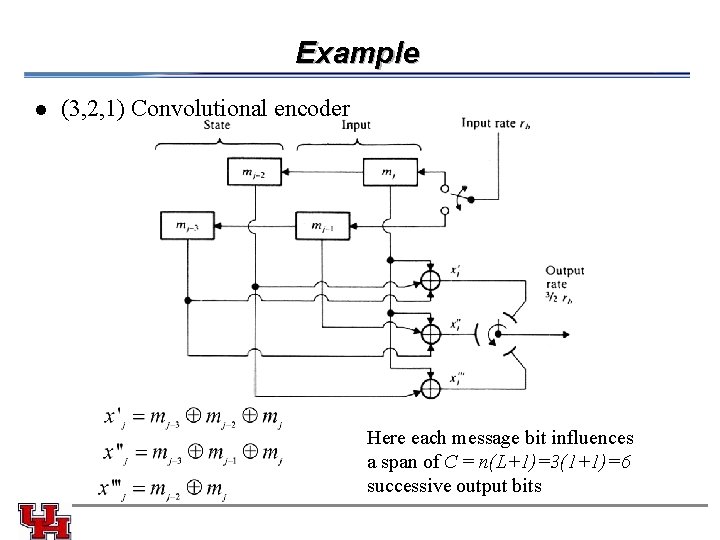
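The finite-state-machine view above can be sketched in a few lines of Python. This is a minimal illustration, not the encoder from the figure: the function name `conv_encode` and the generator taps (1, 0, 1) and (1, 1, 1) are assumptions. The taps were chosen because they reproduce the code sequence 11 10 10 11 00 that the Viterbi example later in these slides quotes for the message 1 1 0 0 0.

```python
def conv_encode(bits, generators=((1, 0, 1), (1, 1, 1))):
    """Rate-1/n convolutional encoder built around one shift register.

    Each generator lists the taps on (current bit, previous bit, bit before that).
    The default taps are an assumption made for illustration only.
    """
    memory = len(generators[0]) - 1   # L register stages
    state = [0] * memory              # encoder starts in the all-zero state
    out = []
    for b in bits:
        window = [b] + state          # current input followed by the stored bits
        for g in generators:
            # Modulo-2 sum of the tapped positions gives one output bit.
            out.append(sum(t & w for t, w in zip(g, window)) % 2)
        state = [b] + state[:-1]      # shift the register by one position
    return out


message = [1, 1, 0, 0, 0]
print(conv_encode(message))   # [1, 1, 1, 0, 1, 0, 1, 1, 0, 0]
```

Each input bit produces n = 2 output bits, and the output depends on both the input and the register contents, which is the FSM behaviour described in the bullets.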

Example: (3, 2, 1) convolutional encoder

- Here each message bit influences a span of C = n(L+1) = 3(1+1) = 6 successive output bits.

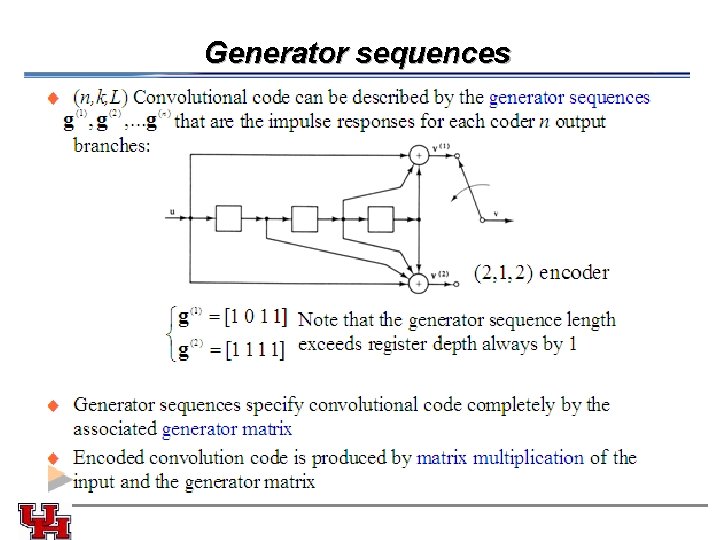

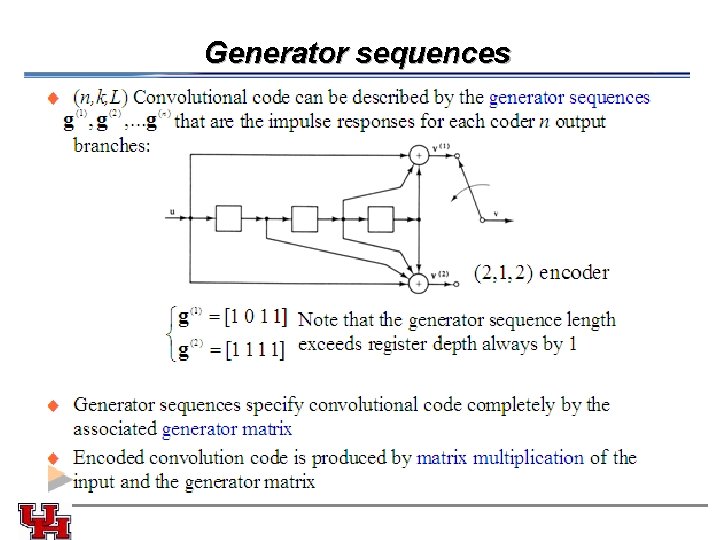

Generator sequences

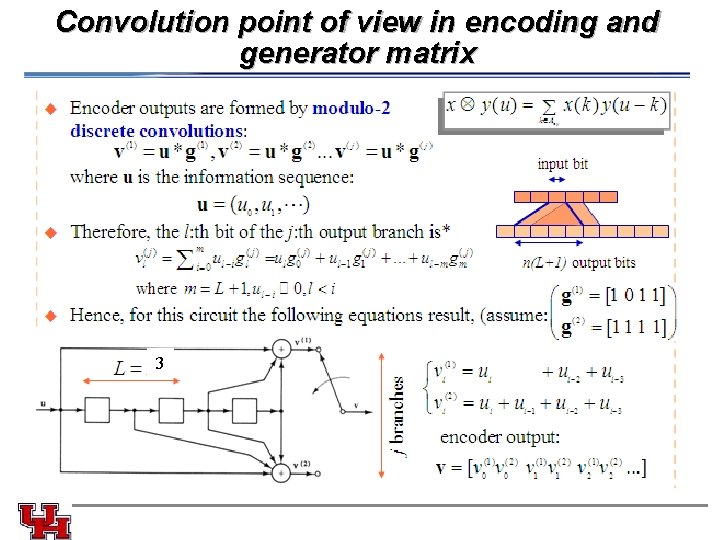

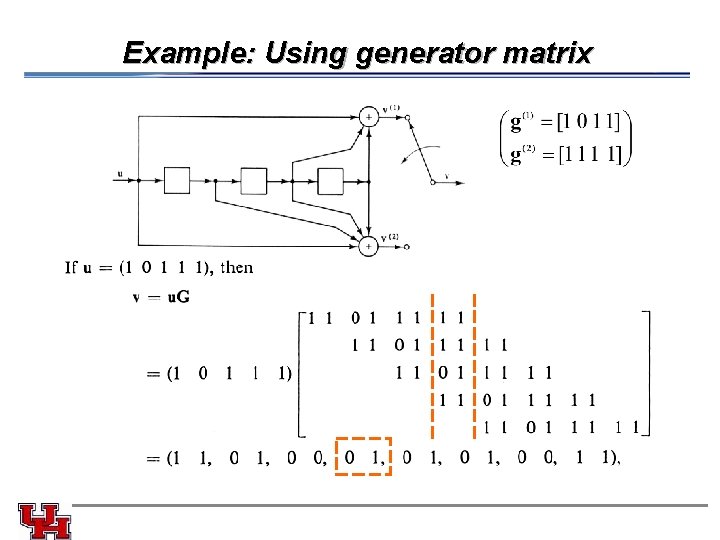

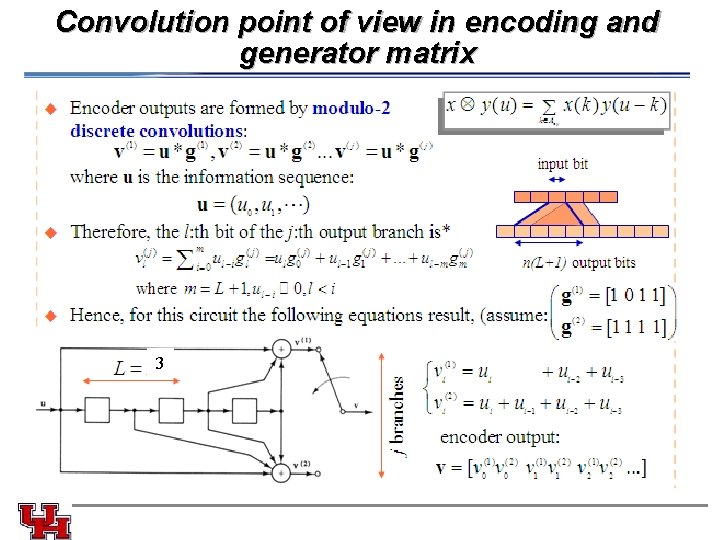

Convolution point of view in encoding and generator matrix

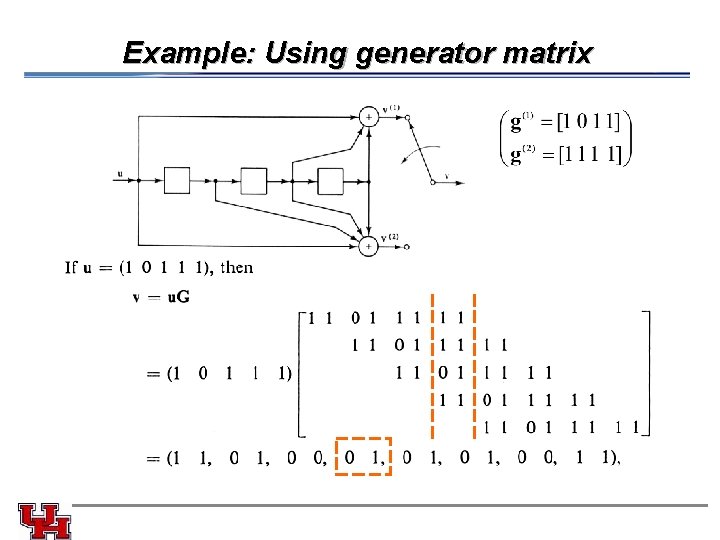
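As a rough illustration of the convolution point of view, each output stream can be computed as the modulo-2 convolution of the message with one generator sequence, and the streams are then multiplexed bit by bit. The helper names and the generator sequences (1, 0, 1) and (1, 1, 1) below are assumptions for this sketch, not values read from the figure.

```python
def gf2_convolve(u, g):
    """Discrete convolution of two bit sequences with modulo-2 addition."""
    v = [0] * (len(u) + len(g) - 1)
    for i, ui in enumerate(u):
        for j, gj in enumerate(g):
            v[i + j] ^= ui & gj      # XOR accumulates the modulo-2 sum
    return v


def encode_by_convolution(u, generators):
    """Convolve the message with every generator, then interleave the streams."""
    streams = [gf2_convolve(u, g) for g in generators]
    # Take one bit from each stream per time step.
    return [bit for step in zip(*streams) for bit in step]


g1, g2 = (1, 0, 1), (1, 1, 1)        # assumed generator sequences
u = [1, 1, 0]
print(encode_by_convolution(u, (g1, g2)))   # [1, 1, 1, 0, 1, 0, 1, 1, 0, 0]
```

The full convolution has length len(u) + L, so the output already includes the bits produced while the register empties, as if L zero bits had been appended to the message.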

Example: Using generator matrix

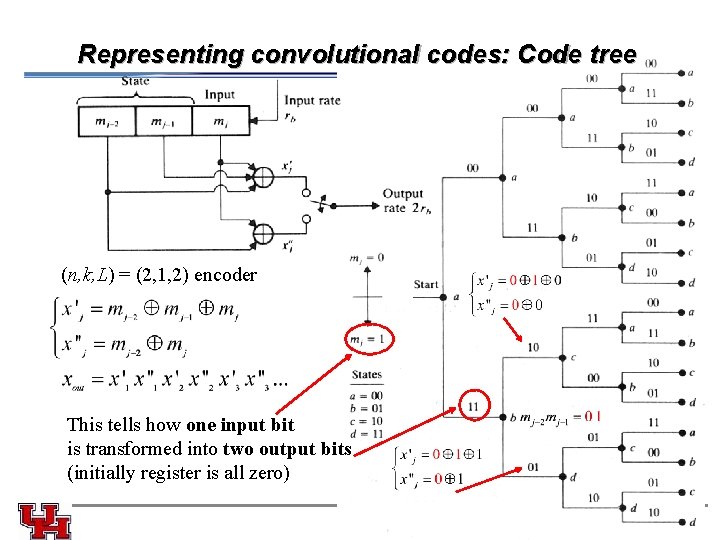

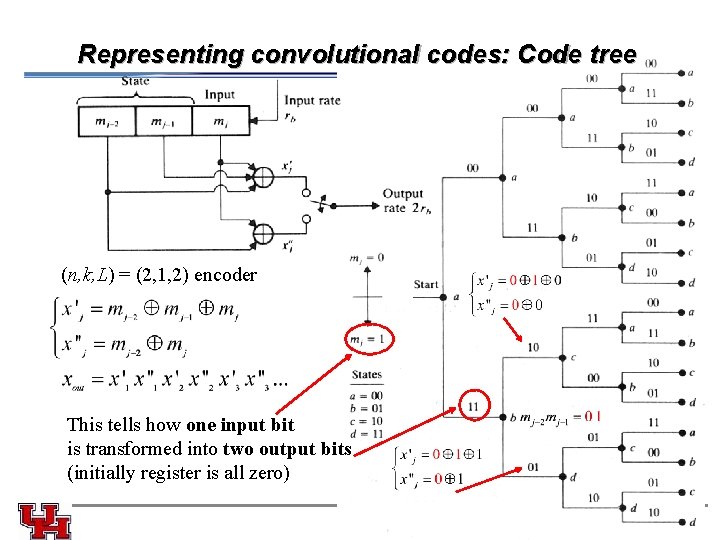

Representing convolutional codes: code tree

(n, k, L) = (2, 1, 2) encoder. The code tree shows how one input bit is transformed into two output bits (initially the register is all zero).

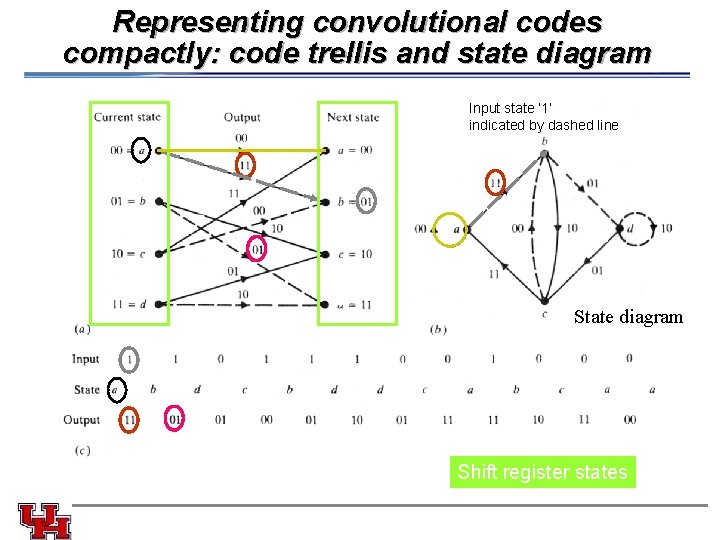

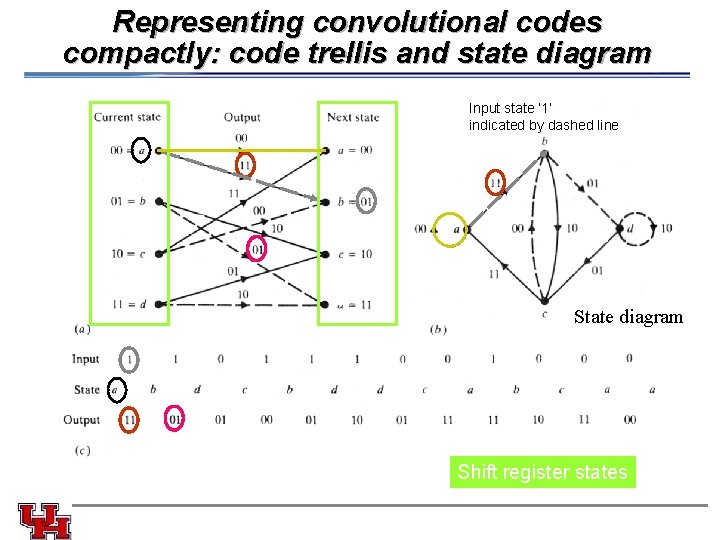

Representing convolutional codes compactly: code trellis and state diagram

Figure labels: code trellis; state diagram; shift register states. Input state ‘1’ is indicated by a dashed line.

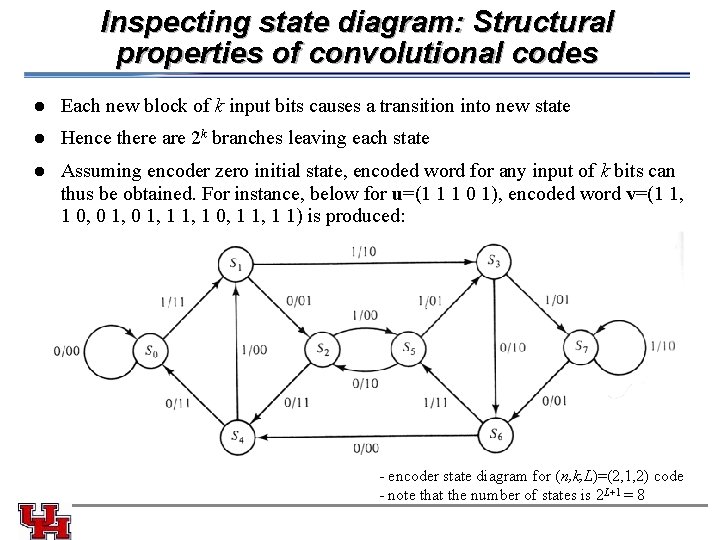

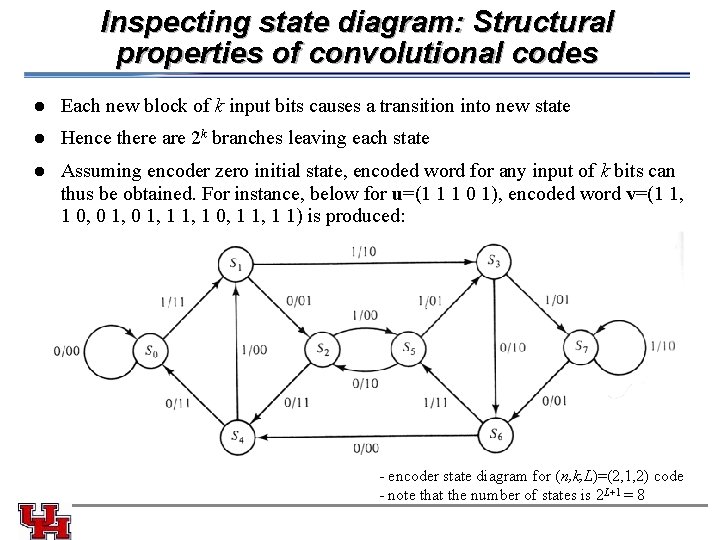
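A state diagram or trellis is just a table of transitions: for each state and input bit, the next state and the output bits. The sketch below builds such a table for an assumed k = 1 encoder with taps (1, 0, 1) and (1, 1, 1), taking the state to be the stored register bits. The function name and the taps are hypothetical and are not taken from the figures.

```python
from itertools import product


def state_table(generators=((1, 0, 1), (1, 1, 1))):
    """Map (state, input bit) -> (next state, output bits) for a k = 1 encoder.

    The state is the tuple of stored bits, most recent first.
    The default taps are an assumption made for illustration only.
    """
    memory = len(generators[0]) - 1
    table = {}
    for state in product((0, 1), repeat=memory):
        for bit in (0, 1):
            window = (bit,) + state
            out = tuple(sum(t & w for t, w in zip(g, window)) % 2
                        for g in generators)
            next_state = window[:-1]     # the oldest stored bit drops out
            table[(state, bit)] = (next_state, out)
    return table


for (state, bit), (nxt, out) in sorted(state_table().items()):
    print(f"state {state}, input {bit} -> next {nxt}, output {out}")
```

One section of the trellis is this table drawn once per time step; the state diagram is the same table drawn as a graph.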

Inspecting state diagram: structural properties of convolutional codes

- Each new block of k input bits causes a transition into a new state.
- Hence there are $2^k$ branches leaving each state.
- Assuming the encoder starts in the zero state, the encoded word for any input of k bits can thus be obtained. For instance, below, for u = (1 1 1 0 1), the encoded word v = (1 1, 1 0, 0 1, 1 0, 1 1) is produced.

Figure caption: encoder state diagram for the (n, k, L) = (2, 1, 2) code. Note that the number of states is $2^{L+1} = 8$.

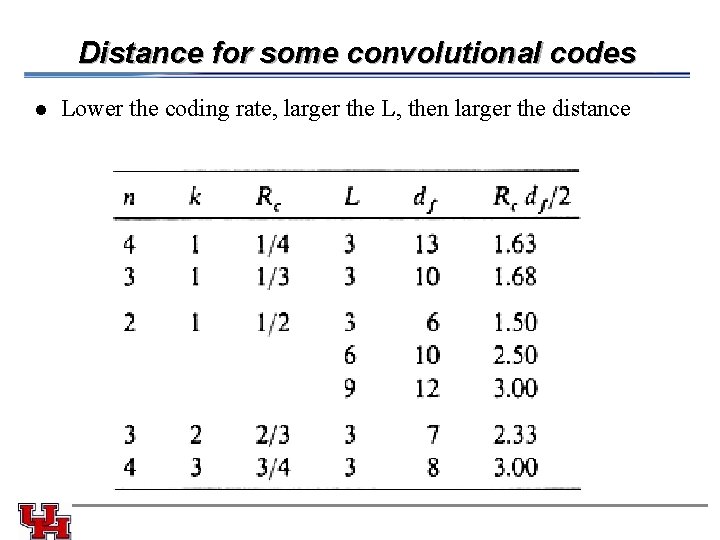

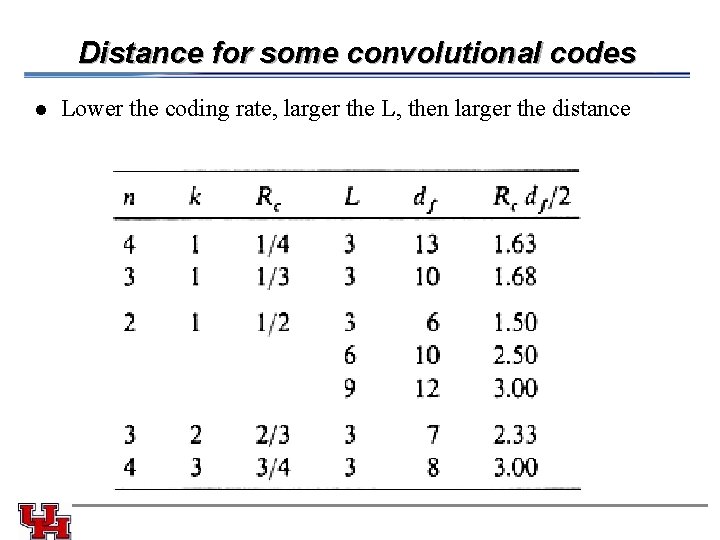

Distance for some convolutional codes

- The lower the coding rate and the larger L, the larger the distance.

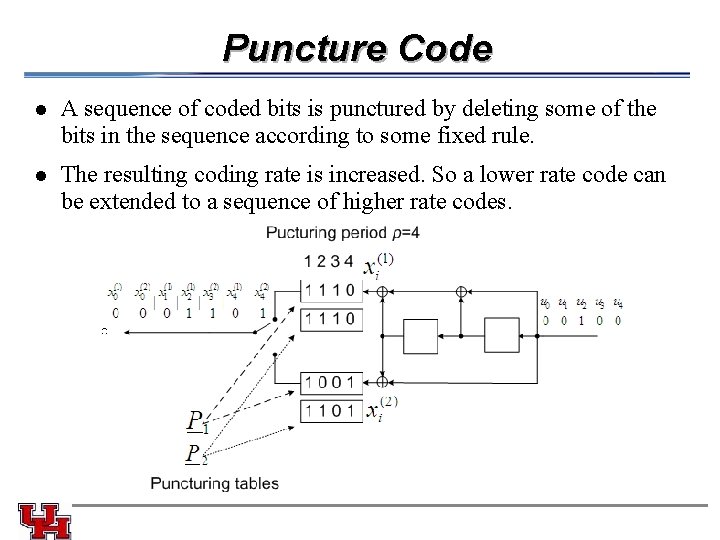

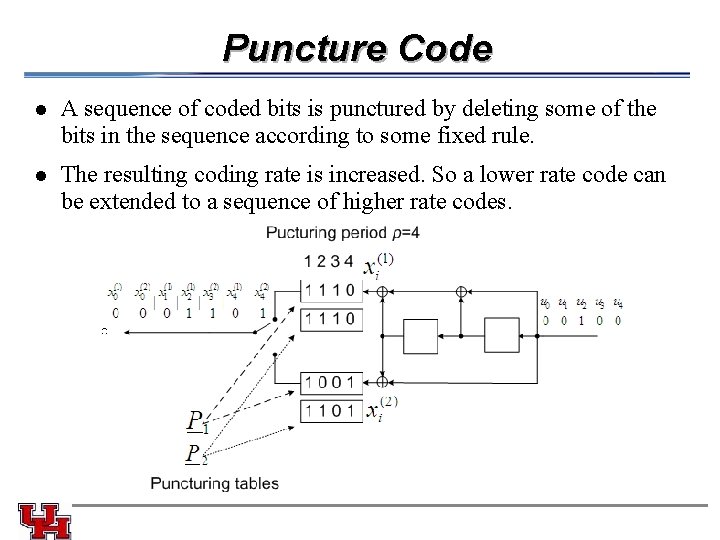

Punctured Code

- A sequence of coded bits is punctured by deleting some of the bits in the sequence according to a fixed rule.
- The resulting coding rate is increased, so a lower-rate code can be extended to a sequence of higher-rate codes.

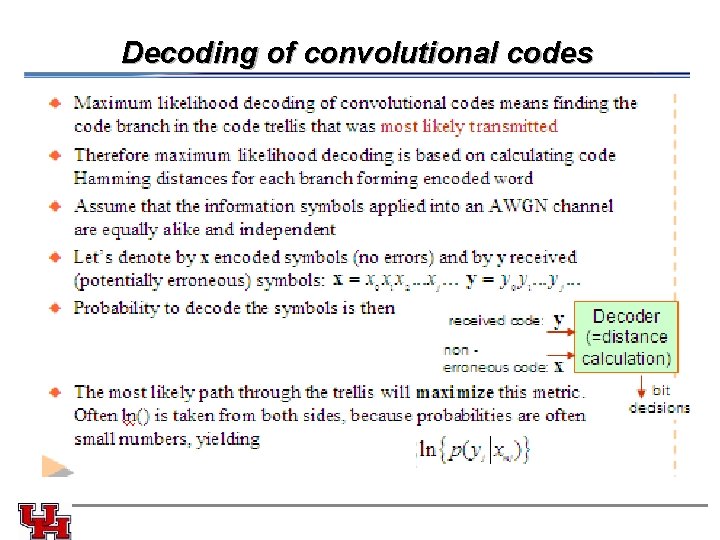

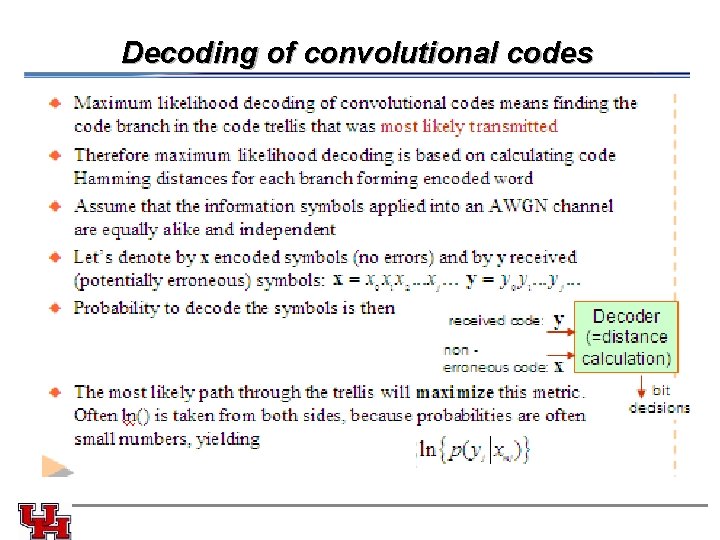
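Puncturing can be sketched as applying a repeating keep/delete mask to the coded bits. The pattern `[1, 1, 1, 0]` below is a hypothetical rule chosen for illustration (it is not taken from the figure); it drops every fourth coded bit, which turns a rate-1/2 stream into a rate-2/3 stream. The function names are likewise assumptions.

```python
def puncture(coded, pattern):
    """Delete coded bits wherever the repeating pattern holds a 0."""
    return [b for i, b in enumerate(coded) if pattern[i % len(pattern)]]


def depuncture(received, pattern, total_len, erasure=None):
    """Re-insert erasure marks at the deleted positions before decoding."""
    it = iter(received)
    return [next(it) if pattern[i % len(pattern)] else erasure
            for i in range(total_len)]


coded = [1, 1, 1, 0, 1, 0, 1, 1]     # 4 message bits coded at rate 1/2
pattern = [1, 1, 1, 0]               # hypothetical rule: drop every 4th bit
sent = puncture(coded, pattern)
print(sent)                          # [1, 1, 1, 1, 0, 1]
print(len(coded) // 2, "/", len(sent))   # 4 / 6, i.e. rate 2/3
print(depuncture(sent, pattern, len(coded)))
```

At the receiver the deleted positions are treated as erasures, so the decoder for the original lower-rate code can still be used.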

Decoding of convolutional codes

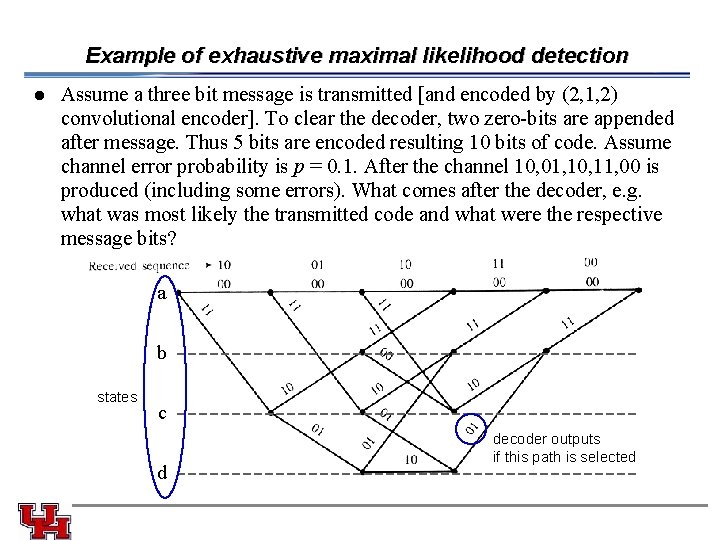

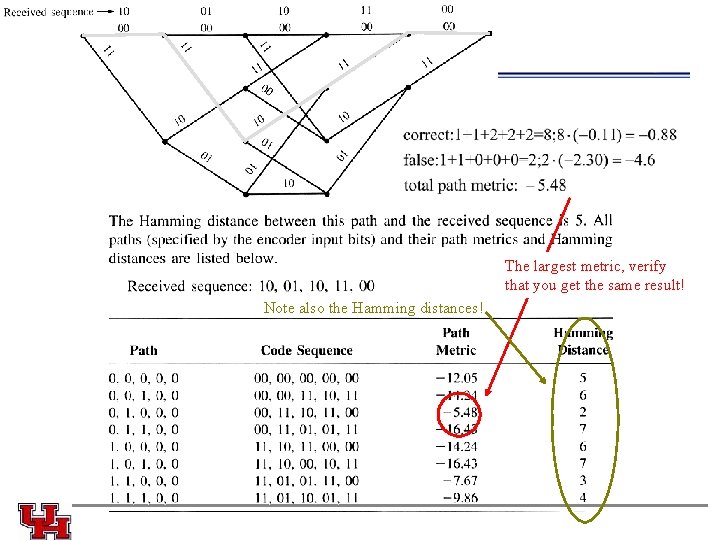

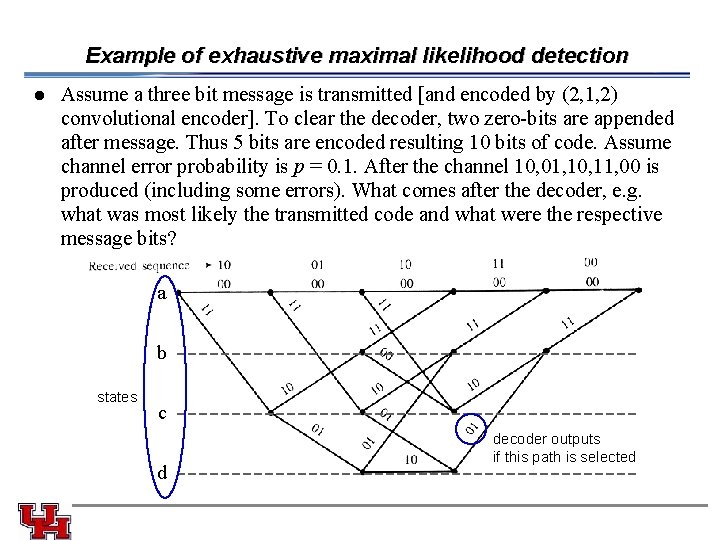

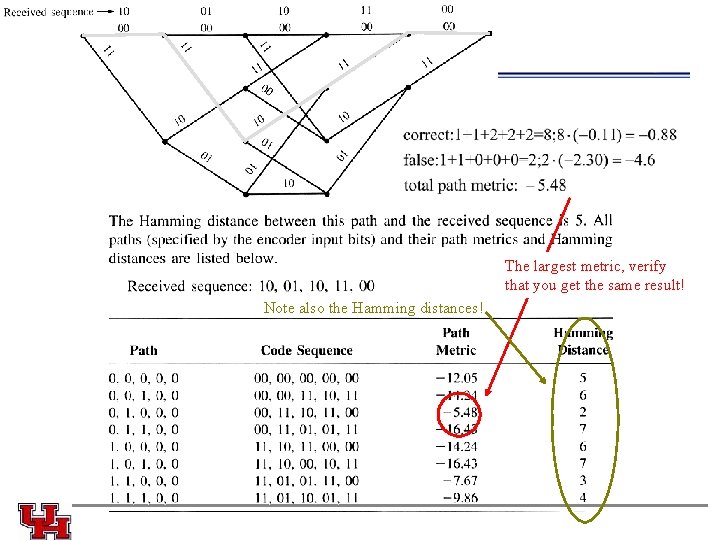

Example of exhaustive maximum likelihood detection

- Assume a three-bit message is transmitted and encoded by a (2, 1, 2) convolutional encoder. To clear the decoder, two zero bits are appended after the message. Thus 5 bits are encoded, resulting in 10 code bits. Assume the channel error probability is p = 0.1. After the channel, 10, 01, 10, 11, 00 is received (including some errors). What comes out of the decoder, i.e. what was most likely the transmitted code, and what were the respective message bits?

Figure labels: states a, b, c, d; decoder outputs if this path is selected; the largest metric. Verify that you get the same result! Note also the Hamming distances!

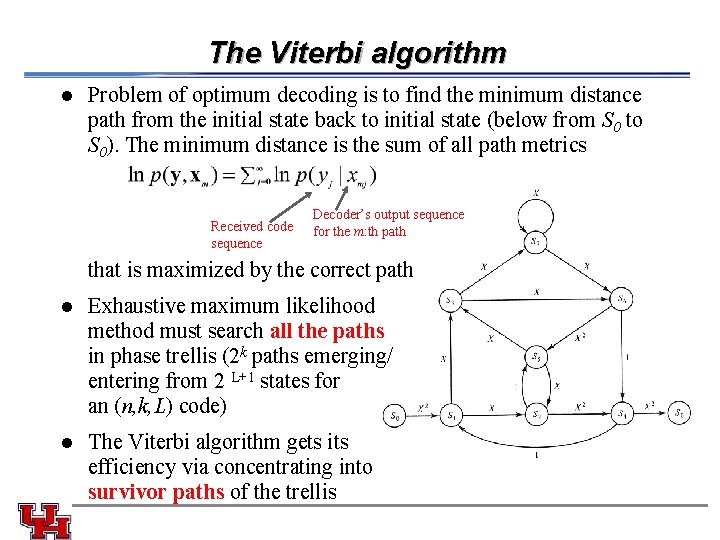

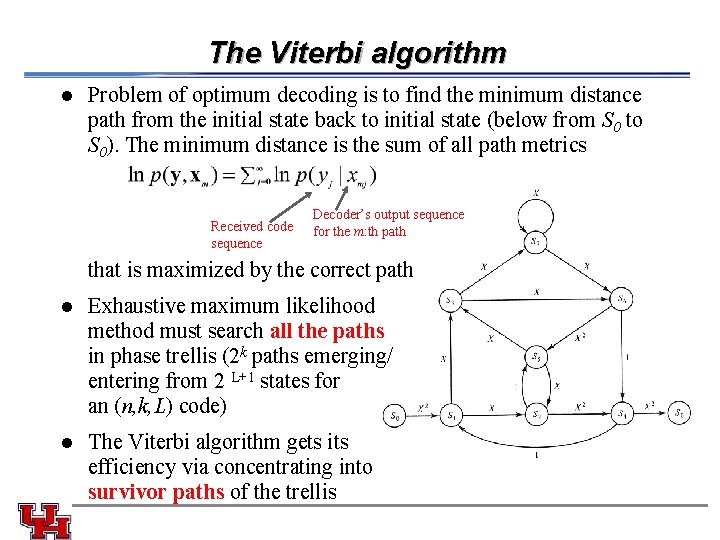
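The exhaustive search can be sketched by encoding all eight candidate messages and scoring each against the received bits. The received sequence and p = 0.1 come from the example above; the encoder taps (1, 0, 1) and (1, 1, 1) and all function names are assumptions, so the winning path printed here depends on that choice and need not match the encoder in the figure.

```python
from itertools import product
from math import log


def conv_encode(bits, generators=((1, 0, 1), (1, 1, 1))):
    """Rate-1/n encoder; the default taps are an assumption for illustration."""
    state = [0] * (len(generators[0]) - 1)
    out = []
    for b in bits:
        window = [b] + state
        out += [sum(t & w for t, w in zip(g, window)) % 2 for g in generators]
        state = [b] + state[:-1]
    return out


def path_metric(code, received, p):
    """Log-likelihood of the received bits on a binary symmetric channel."""
    d = sum(c != r for c, r in zip(code, received))   # Hamming distance
    return d * log(p) + (len(received) - d) * log(1 - p), d


received = [1, 0, 0, 1, 1, 0, 1, 1, 0, 0]   # 10 01 10 11 00 from the example
p = 0.1

results = []
for msg in product((0, 1), repeat=3):
    code = conv_encode(list(msg) + [0, 0])   # two zero bits clear the register
    metric, dist = path_metric(code, received, p)
    results.append((metric, dist, msg))

best_metric, best_dist, best_msg = max(results)
print(best_msg, best_dist)   # (1, 1, 0) 3 under the assumed taps
```

Because ln p < ln(1 - p) for p < 0.5, the largest log-likelihood always belongs to the path with the smallest Hamming distance, which is why the two measures pick the same path.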

The Viterbi algorithm

- The problem of optimum decoding is to find the minimum-distance path from the initial state back to the initial state (below, from $S_0$ to $S_0$). The minimum distance is the sum of all path metrics, which relates the received code sequence to the decoder's output sequence for the m-th path and is maximized by the correct path.
- The exhaustive maximum likelihood method must search all the paths in the trellis ($2^k$ paths emerging from and entering each of the $2^{L+1}$ states for an (n, k, L) code).
- The Viterbi algorithm gets its efficiency by concentrating on the survivor paths of the trellis.

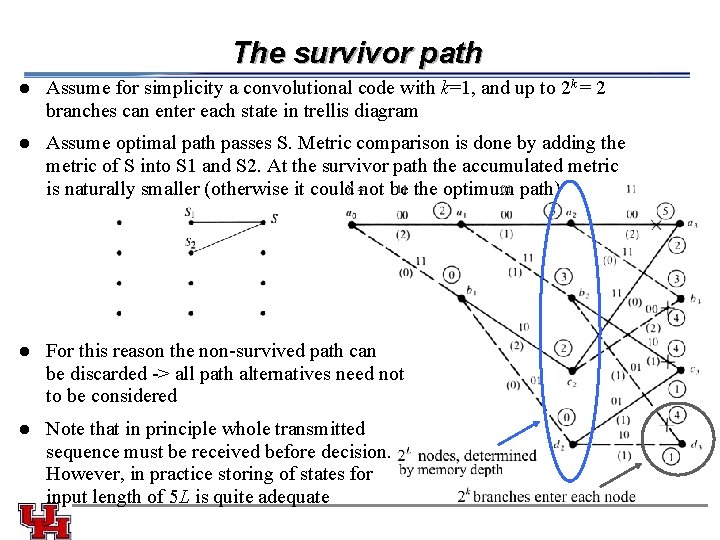

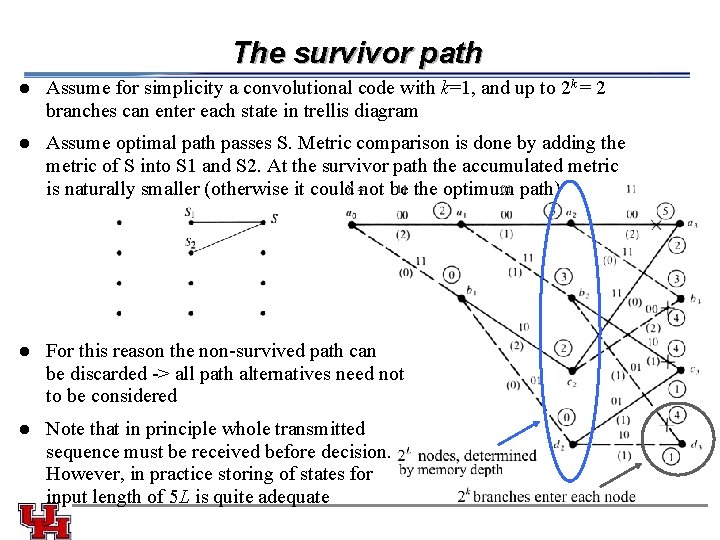

The survivor path

- Assume for simplicity a convolutional code with k = 1, so that up to $2^k = 2$ branches can enter each state in the trellis diagram.
- Assume the optimal path passes through S. Metric comparison is done by adding the metric of S to $S_1$ and $S_2$. On the survivor path the accumulated metric is naturally smaller (otherwise it could not be the optimum path).
- For this reason the non-surviving path can be discarded, so not all path alternatives need to be considered.
- Note that in principle the whole transmitted sequence must be received before a decision is made. In practice, however, storing the states for an input length of 5L is quite adequate.

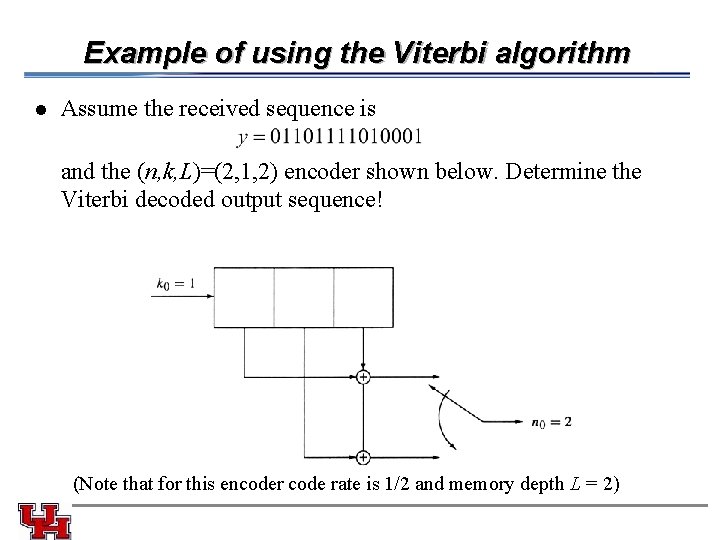

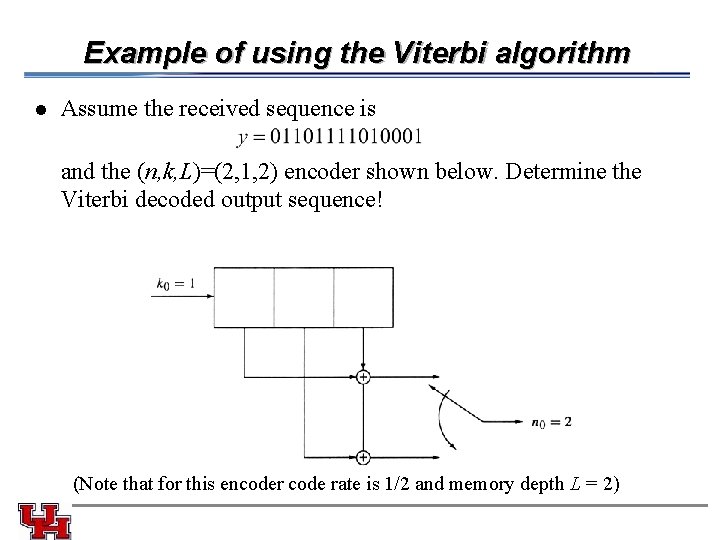

Example of using the Viterbi algorithm

- Assume the received sequence given in the figure and the (n, k, L) = (2, 1, 2) encoder shown below. Determine the Viterbi decoded output sequence! (Note that for this encoder the code rate is 1/2 and the memory depth is L = 2.)

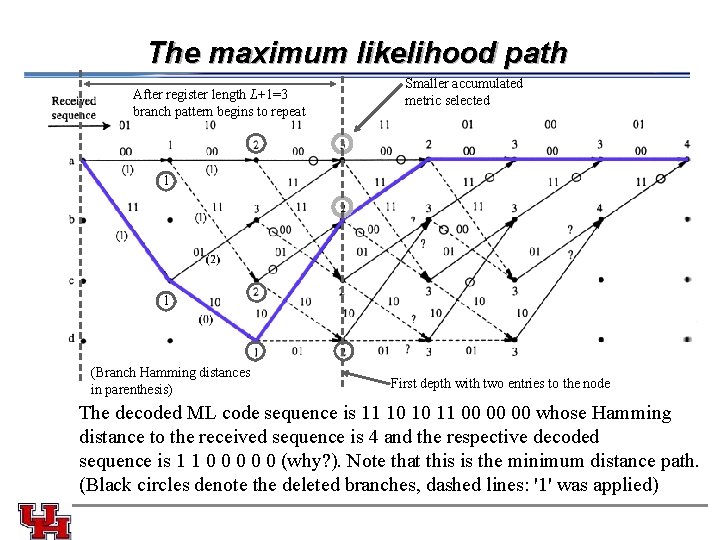

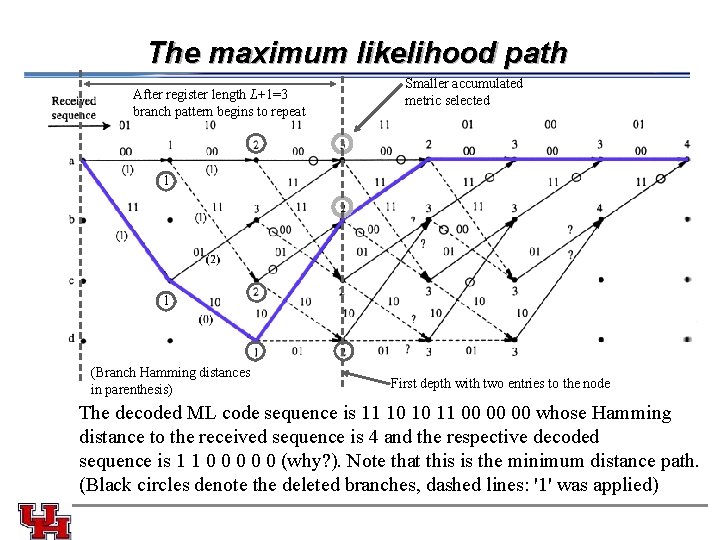

The maximum likelihood path

Figure annotations: after register length L+1 = 3 the branch pattern begins to repeat; the smaller accumulated metric is selected; branch Hamming distances are shown in parentheses; first depth with two entries to the node. Black circles denote the deleted branches; dashed lines indicate that a '1' was applied.

The decoded ML code sequence is 11 10 10 11 00 00 00, whose Hamming distance to the received sequence is 4, and the respective decoded sequence is 1 1 0 0 0 (why?). Note that this is the minimum-distance path.

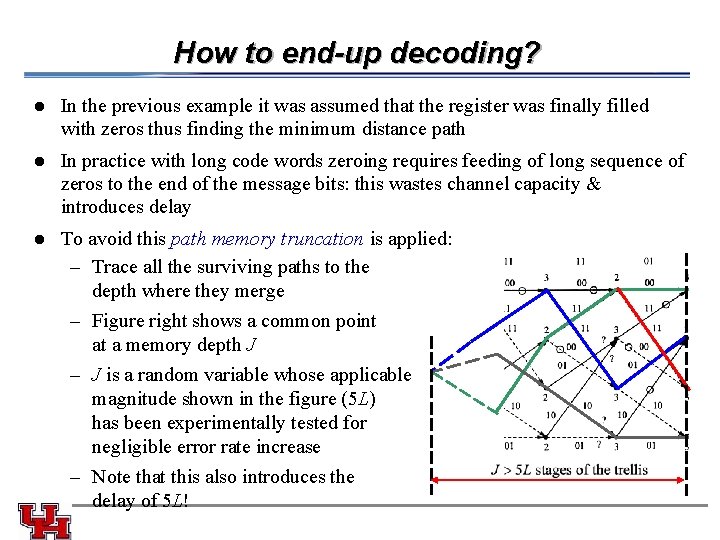

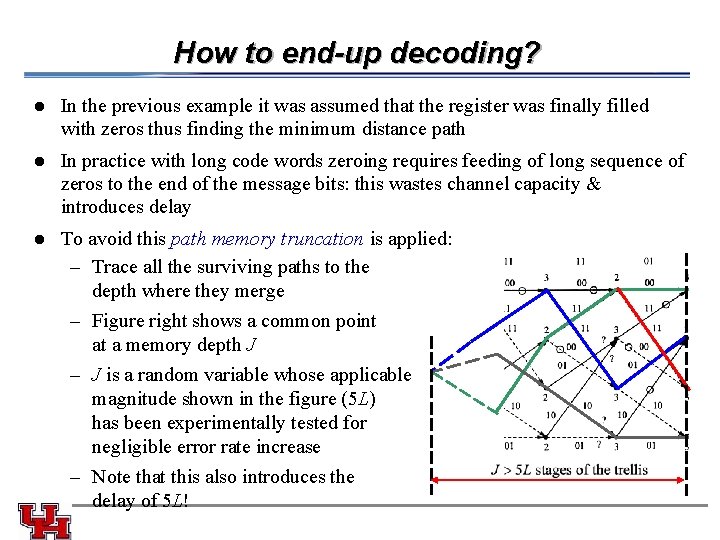
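A hard-decision Viterbi decoder that keeps one survivor per state can be sketched as below. It is a minimal illustration under stated assumptions: the taps (1, 0, 1) and (1, 1, 1) are chosen because they produce the code sequence 11 10 10 11 00 00 00 quoted above for the message 1 1 0 0 0, and the received sequence is a hypothetical one with two bit errors, not the sequence from the figure.

```python
def viterbi_decode(received, generators=((1, 0, 1), (1, 1, 1))):
    """Hard-decision Viterbi decoding of a zero-terminated rate-1/n code.

    Returns (input bits of the best path ending in state 0, its Hamming metric).
    The default taps are an assumption made for illustration only.
    """
    n = len(generators)
    memory = len(generators[0]) - 1
    n_states = 1 << memory
    inf = float("inf")

    def branch(state, bit):
        # The state integer holds the stored bits, most recent bit as MSB.
        stored = [(state >> (memory - 1 - i)) & 1 for i in range(memory)]
        window = [bit] + stored
        out = [sum(t & w for t, w in zip(g, window)) % 2 for g in generators]
        return (bit << (memory - 1)) | (state >> 1), out

    metrics = [0] + [inf] * (n_states - 1)    # start in the all-zero state
    paths = [[] for _ in range(n_states)]
    for t in range(0, len(received), n):
        r = received[t:t + n]
        new_metrics = [inf] * n_states
        new_paths = [None] * n_states
        for s in range(n_states):
            if metrics[s] == inf:
                continue                      # state not reachable yet
            for bit in (0, 1):
                ns, out = branch(s, bit)
                m = metrics[s] + sum(o != x for o, x in zip(out, r))
                if m < new_metrics[ns]:       # keep only the survivor
                    new_metrics[ns] = m
                    new_paths[ns] = paths[s] + [bit]
        metrics, paths = new_metrics, new_paths
    return paths[0], metrics[0]


sent = [1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0]   # 11 10 10 11 00 00 00
received = list(sent)
received[1] ^= 1                                     # hypothetical channel errors
received[8] ^= 1
bits, metric = viterbi_decode(received)
print(bits[:5], metric)   # [1, 1, 0, 0, 0] 2
```

The last two decoded bits are the zeros that return the register to the initial state, so only the first five bits are message bits.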

How to end decoding?

- In the previous example it was assumed that the register was finally filled with zeros, thus finding the minimum-distance path.
- In practice, with long code words, zeroing requires feeding a long sequence of zeros to the end of the message bits: this wastes channel capacity and introduces delay.
- To avoid this, path memory truncation is applied:
  - Trace all the surviving paths to the depth where they merge.
  - The figure on the right shows a common point at a memory depth J.
  - J is a random variable whose applicable magnitude shown in the figure (5L) has been experimentally tested for a negligible increase in error rate.
  - Note that this also introduces a delay of 5L!

Concepts to Learn

- You understand the differences between cyclic codes and convolutional codes.
- You can create a state diagram for a convolutional encoder.
- You know how to construct convolutional encoder circuits from the generator sequences.
- You can analyze code strengths based on known code generation circuits, state diagrams, or generator sequences.
- You understand how to realize maximum likelihood convolutional decoding by exhaustive search.
- You understand the principle of Viterbi decoding.

Viterbi Algorithm

- As a youth, Life Fellow Andrew Viterbi never envisioned that he’d create an algorithm used in every cellphone or that he would cofound Qualcomm, a Fortune 500 company that is a worldwide leader in wireless technology.
- Viterbi came up with the idea for that algorithm while he was an engineering professor at the University of California at Los Angeles (UCLA) and then at the University of California at San Diego (UCSD), in the 1960s. Today, the algorithm is used in digital cellphones and satellite receivers to transmit messages so they won’t be lost in noise. The result is a clear, undamaged message thanks to a process called error correction coding. This algorithm is currently used in most cellphones.
- “The algorithm was originally created for improving communication from space by being able to operate with a weak signal, but today it has a multitude of applications,” Viterbi says.
- For the algorithm, which carries his name, he was awarded this year’s Benjamin Franklin Medal in electrical engineering by the Franklin Institute in Philadelphia, one of the United States’ oldest centers of science education and development. The institute serves the public through its museum, outreach programs, and curatorial work. The medal, which Viterbi received in April, recognizes individuals who have benefited humanity, advanced science, and deepened the understanding of the universe. It also honors contributions in life sciences, physics, earth and environmental sciences, and computer and cognitive sciences.
- Qualcomm wasn’t the first company Viterbi started. In the late 1960s, he and some professors from UCLA and UCSD founded Linkabit, which developed a video scrambling system called Videocipher for the fledgling cable network Home Box Office. The Videocipher encrypts a video signal so hackers who haven’t paid for the HBO service can’t obtain it.
- Viterbi, who immigrated to the United States as a four-year-old refugee from fascist Italy, left Linkabit to help start Qualcomm in 1985. One of the company’s first successes was OmniTracs, a two-way satellite communication system used by truckers to communicate from the road with their home offices. The system involves signal processing and an antenna with a directional control that moves as the truck moves, so the antenna always faces the satellite. OmniTracs today is the transportation industry’s largest satellite-based commercial mobile system.
- Another successful venture for the company was the creation of code-division multiple access (CDMA), which was introduced commercially in 1995 in cellphones and is still big today. CDMA is a “spread-spectrum” technology, which means it allows many users to occupy the same time and frequency allocations in a band or space. It assigns unique codes to each communication to differentiate it from others in the same spectrum.
- Although Viterbi retired from Qualcomm as vice chairman and chief technical officer in 2000, he still keeps busy as the president of the Viterbi Group, a private investment company specializing in imaging technologies and biotechnology. He’s also professor emeritus of electrical engineering systems at UCSD and distinguished visiting professor at Technion-Israel Institute of Technology in Technion City, Haifa. In March he and his wife donated US $52 million to the University of Southern California in Los Angeles, the largest amount the school ever received from a single donor.
- To honor his generosity, USC renamed its engineering school the Andrew and Erna Viterbi School of Engineering. It is one of four in the nation to house two active National Science Foundation-supported engineering research centers: the Integrated Media Systems Center (which focuses on multimedia and Internet research) and the Biomimetic Research Center (which studies the use of technology to mimic biological systems).

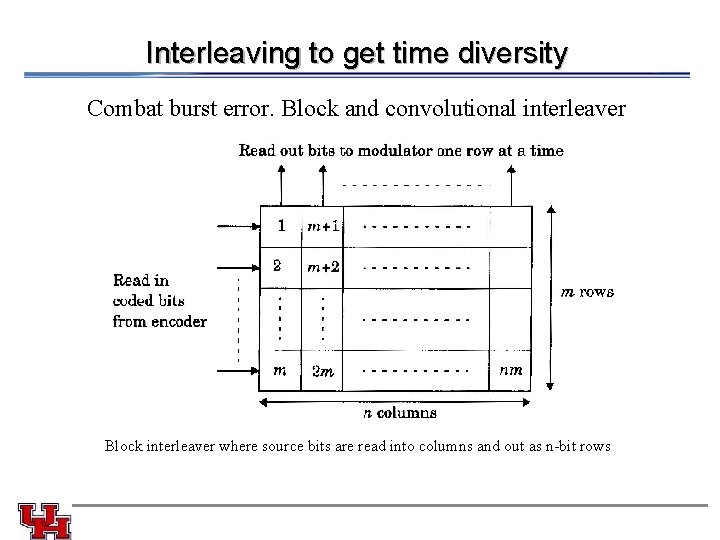

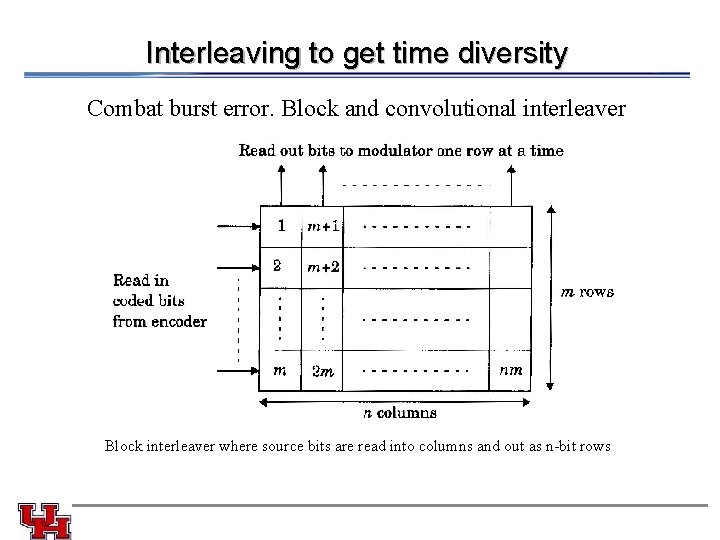

Interleaving to get time diversity

- Combats burst errors.
- Block and convolutional interleavers.
- Block interleaver: source bits are read into columns and out as n-bit rows.
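A block interleaver of the kind described, written column by column and read row by row, can be sketched as follows. The function names and the 3 x 4 array size are assumptions chosen to keep the example small; the symbols are numbered 0 to 11 so that their positions are easy to follow.

```python
def block_interleave(bits, rows, cols):
    """Write the bits column by column into a rows x cols array, read row by row."""
    assert len(bits) == rows * cols
    return [bits[c * rows + r] for r in range(rows) for c in range(cols)]


def block_deinterleave(bits, rows, cols):
    """Inverse operation: write row by row, read column by column."""
    assert len(bits) == rows * cols
    return [bits[r * cols + c] for c in range(cols) for r in range(rows)]


data = list(range(12))                       # symbol indices stand in for bits
tx = block_interleave(data, rows=3, cols=4)
print(tx)                                    # [0, 3, 6, 9, 1, 4, 7, 10, 2, 5, 8, 11]

# A burst hitting three consecutive channel positions...
hit = [tx[i] for i in (4, 5, 6)]
print(hit)                                   # [1, 4, 7]: spread out in the original order

assert block_deinterleave(tx, rows=3, cols=4) == data
```

After deinterleaving, the three burst errors land three positions apart, so a decoder that handles isolated errors well sees them as separate events rather than one burst.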