ECE 1747 Parallel Programming: Shared Memory, OpenMP

- Slides: 64

ECE 1747 Parallel Programming Shared Memory: OpenMP Environment and Synchronization

What is OpenMP?

- Standard for shared memory programming for scientific applications.
- Has specific support for scientific application needs (unlike Pthreads).
- Rapidly gaining acceptance among vendors and application writers.
- See http://www.openmp.org for more info.

OpenMP API Overview

- The API is a set of compiler directives inserted in the source program (in addition to some library functions).
- Ideally, compiler directives do not affect sequential code.
  - pragmas in C / C++.
  - (special) comments in Fortran code.

OpenMP API Example

Sequential code:

    statement1;
    statement2;
    statement3;

Assume we want to execute statement 2 in parallel, and statements 1 and 3 sequentially.

OpenMP API Example (2 of 2)

OpenMP parallel code:

    statement1;
    #pragma <specific OpenMP directive>
    statement2;
    statement3;

Statement 2 is (or may be) executed in parallel. Statements 1 and 3 are executed sequentially.

Important Note

- By giving a parallel directive, the user asserts that the program will remain correct if the statement is executed in parallel.
- The OpenMP compiler does not check correctness.
- Some tools exist to help with that.
- TotalView is a good parallel debugger (www.etnus.com).

API Semantics

- Master thread executes sequential code.
- Master and slaves execute parallel code.
- Note: very similar to the fork-join semantics of the Pthreads create/join primitives.

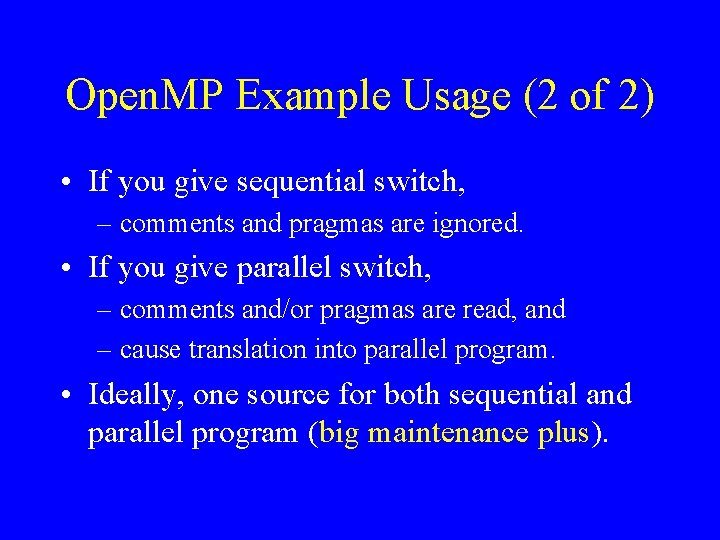

OpenMP Implementation Overview

- An OpenMP implementation consists of
  - a compiler,
  - a library.
- Unlike Pthreads (purely a library).

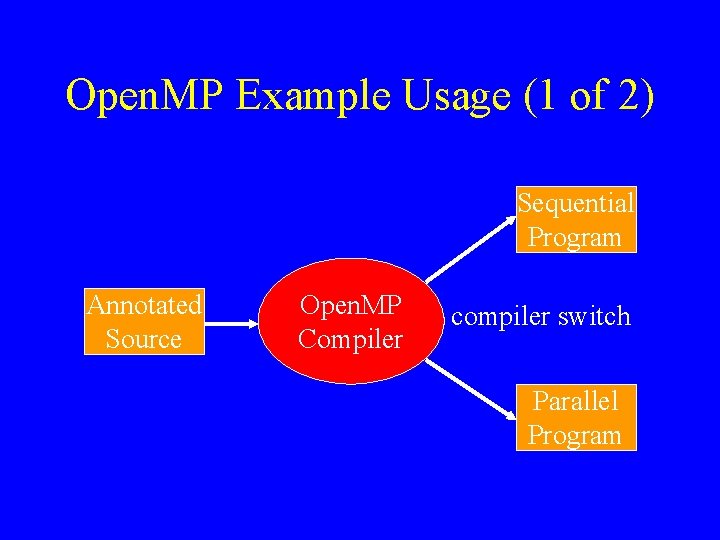

OpenMP Example Usage (1 of 2)

Annotated source → OpenMP compiler → sequential program or parallel program, depending on a compiler switch.

OpenMP Example Usage (2 of 2)

- If you give the sequential switch,
  - comments and pragmas are ignored.
- If you give the parallel switch,
  - comments and/or pragmas are read, and
  - cause translation into a parallel program.
- Ideally, one source for both the sequential and the parallel program (big maintenance plus).
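
The single-source idea can be sketched as follows. This is a minimal illustration, assuming a GCC- or Clang-style compiler where `-fopenmp` plays the role of the parallel switch (the slides do not name a compiler); the function names `scale` and `built_with_openmp` are hypothetical. The standard `_OPENMP` macro is defined only in the parallel build.

```c
/* Multiply every element of v by factor.
   Built without the OpenMP switch, the pragma is ignored and the loop
   runs sequentially; built with it, iterations are split among threads. */
void scale(double *v, int n, double factor)
{
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        v[i] *= factor;
}

/* Report which build this is: _OPENMP is defined only when the
   compiler's OpenMP switch is on. */
int built_with_openmp(void)
{
#ifdef _OPENMP
    return 1;
#else
    return 0;
#endif
}
```

Both builds should produce the same array contents; only the execution differs.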

OpenMP Directives

- Parallelization directives:
  - parallel region
  - parallel for
- Data environment directives:
  - shared, private, threadprivate, reduction, etc.
- Synchronization directives:
  - barrier, critical

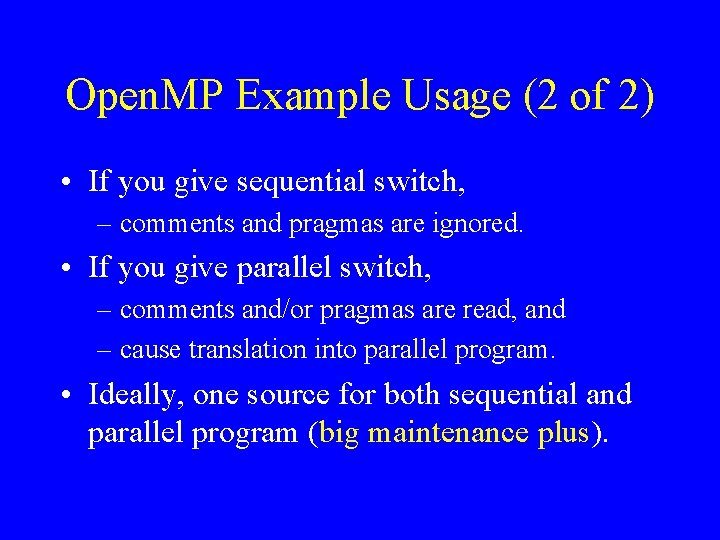

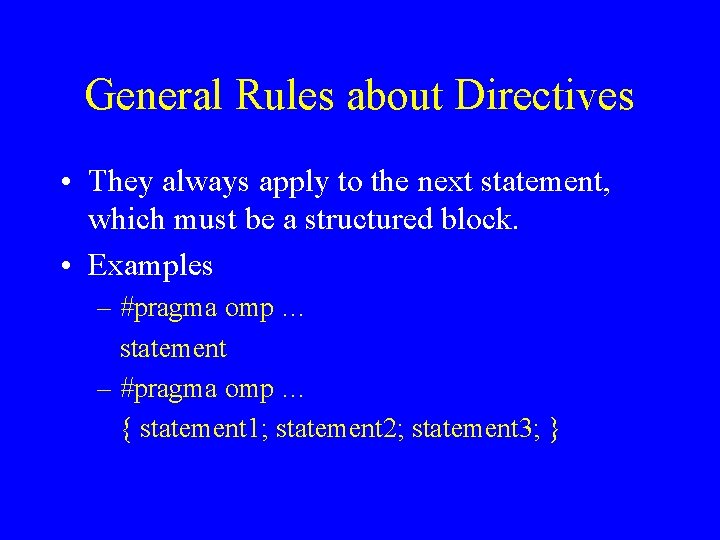

General Rules about Directives

- They always apply to the next statement, which must be a structured block.

Examples:

    #pragma omp …
    statement

    #pragma omp …
    { statement1; statement2; statement3; }

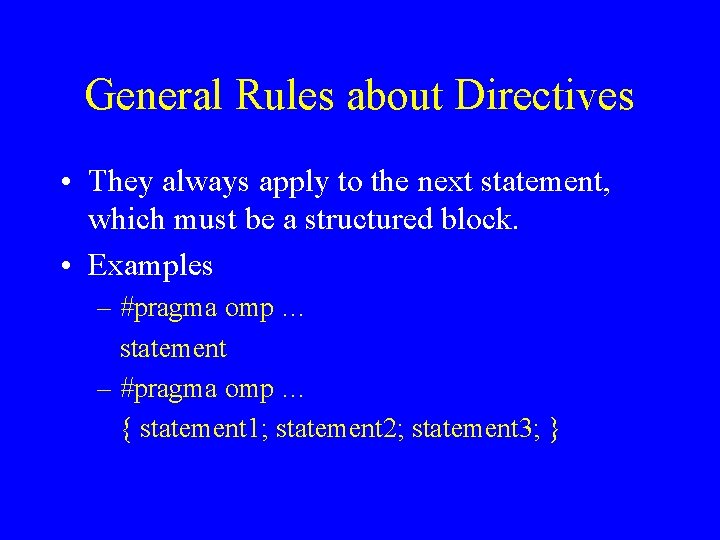

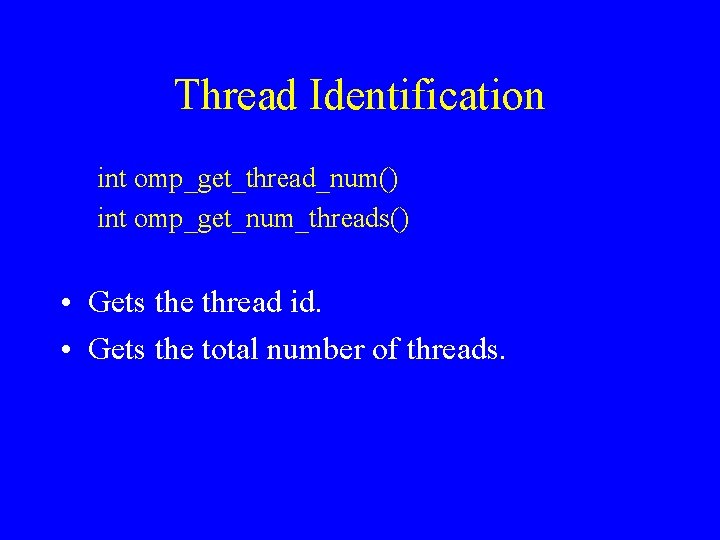

OpenMP Parallel Region

    #pragma omp parallel

- A number of threads are spawned at entry.
- Each thread executes the same code.
- Each thread waits at the end.
- Very similar to a number of create/joins with the same function in Pthreads.

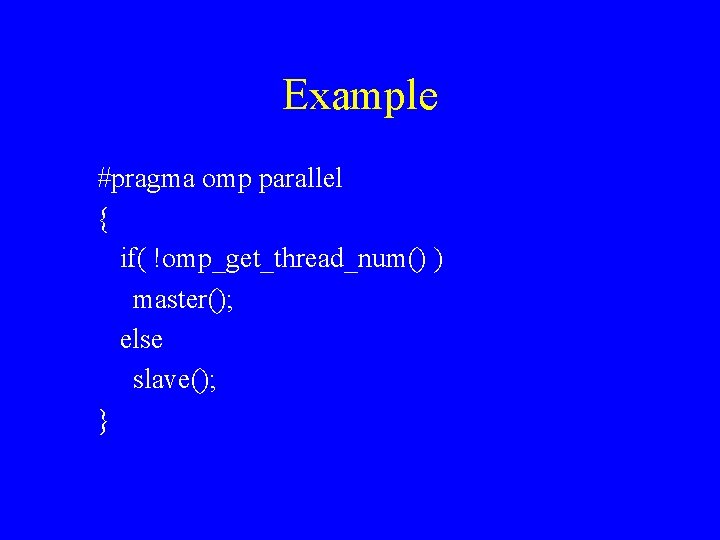

Getting Threads to do Different Things

- Through explicit thread identification (as in Pthreads).
- Through work-sharing directives.

Thread Identification

    int omp_get_thread_num()
    int omp_get_num_threads()

- omp_get_thread_num() gets the thread id.
- omp_get_num_threads() gets the total number of threads.

Example

    #pragma omp parallel
    {
        if( !omp_get_thread_num() )
            master();
        else
            slave();
    }
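
A slightly fuller sketch of thread identification, written so that it also builds without the OpenMP switch. The helper names `my_id`, `team_size`, and `mark_threads` are hypothetical; the sequential fallbacks (id 0, team of 1) are an assumption made here so that one source serves both builds.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Thread id, with a sequential fallback. */
static int my_id(void)
{
#ifdef _OPENMP
    return omp_get_thread_num();
#else
    return 0;
#endif
}

/* Team size, with a sequential fallback. */
static int team_size(void)
{
#ifdef _OPENMP
    return omp_get_num_threads();
#else
    return 1;
#endif
}

/* Each thread marks its own slot in visited; returns the team size.
   visited must have room for max_threads entries. */
int mark_threads(int *visited, int max_threads)
{
    int nthreads = 1;
    #pragma omp parallel
    {
        int id = my_id();            /* private: declared inside the region */
        if (id < max_threads)
            visited[id] = 1;
        if (id == 0)
            nthreads = team_size();  /* only the master writes the shared variable */
    }
    return nthreads;
}
```

After the call, every slot below the returned team size should be set, which shows that each thread ran the same block with a different id.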

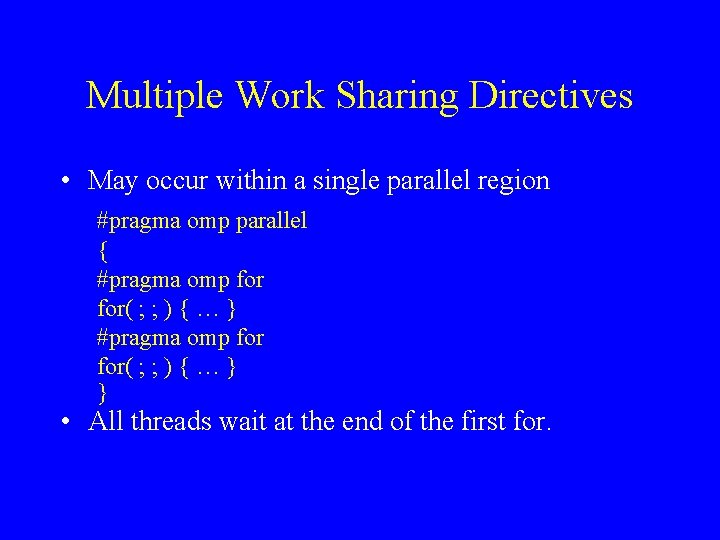

Work Sharing Directives

- Always occur within a parallel region directive.
- Two principal ones are
  - parallel for
  - parallel sections

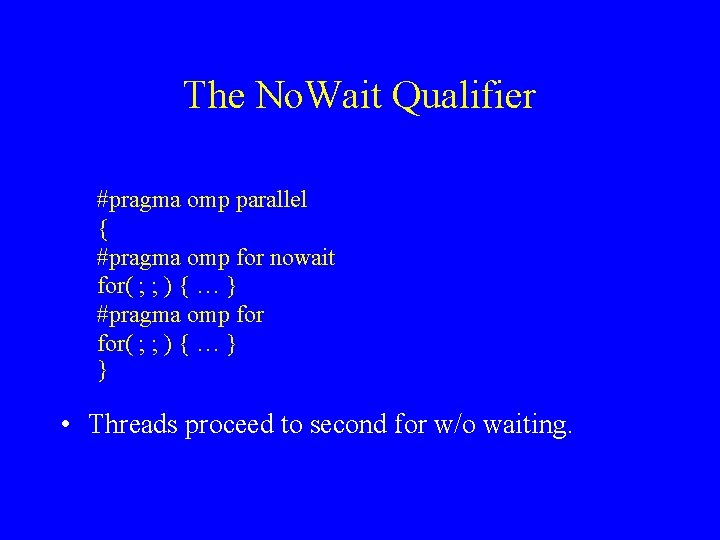

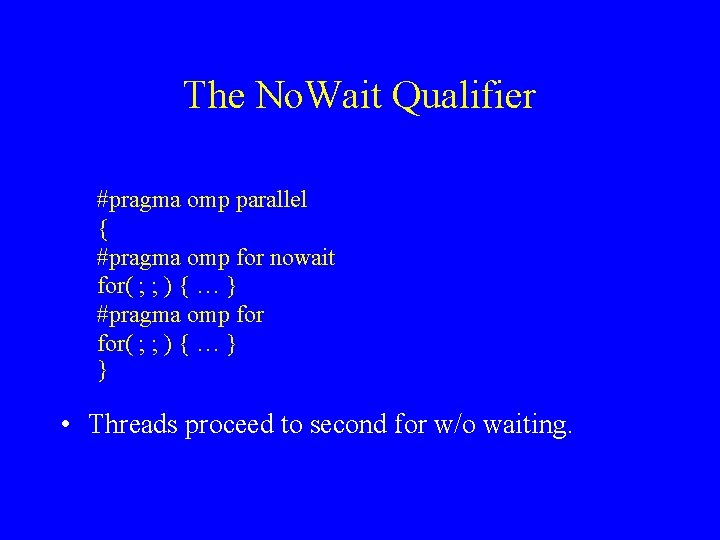

OpenMP Parallel For

    #pragma omp parallel
    #pragma omp for
    for( … ) { … }

- Each thread executes a subset of the iterations.
- All threads wait at the end of the parallel for.
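
As a concrete instance of the pattern above, here is a minimal sketch of an element-wise vector addition. The function name `vector_add` is hypothetical; the loop index is declared in the loop header, so each thread has its own copy.

```c
/* c[i] = a[i] + b[i]; iterations are independent, so they can be
   divided among the threads of the enclosing parallel region. */
void vector_add(const double *a, const double *b, double *c, int n)
{
    #pragma omp parallel
    {
        #pragma omp for
        for (int i = 0; i < n; i++)
            c[i] = a[i] + b[i];
        /* implicit barrier: all threads wait here at the end of the for */
    }
}
```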

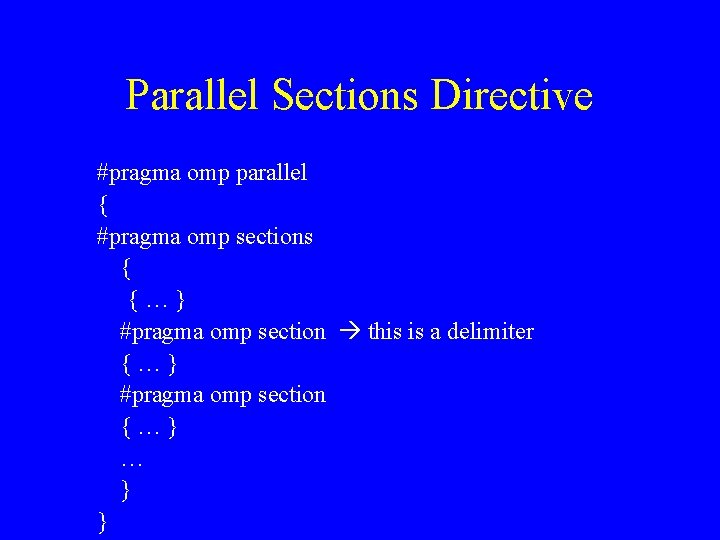

Multiple Work Sharing Directives

May occur within a single parallel region:

    #pragma omp parallel
    {
        #pragma omp for
        for( ; ; ) { … }
        #pragma omp for
        for( ; ; ) { … }
    }

- All threads wait at the end of the first for.

The NoWait Qualifier

    #pragma omp parallel
    {
        #pragma omp for nowait
        for( ; ; ) { … }
        #pragma omp for
        for( ; ; ) { … }
    }

- Threads proceed to the second for without waiting.
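
Dropping the barrier is only safe when the second loop does not read what other threads write in the first. A minimal sketch of such a case, with a hypothetical function `init_two` that fills two unrelated arrays:

```c
/* Two loops that touch different arrays: no thread needs the results
   of the first loop to start the second, so the barrier can be dropped. */
void init_two(double *x, double *y, int n)
{
    #pragma omp parallel
    {
        #pragma omp for nowait
        for (int i = 0; i < n; i++)
            x[i] = 1.0;

        /* a thread that finishes its share of x starts on y immediately */
        #pragma omp for
        for (int i = 0; i < n; i++)
            y[i] = 2.0;
    }
}
```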

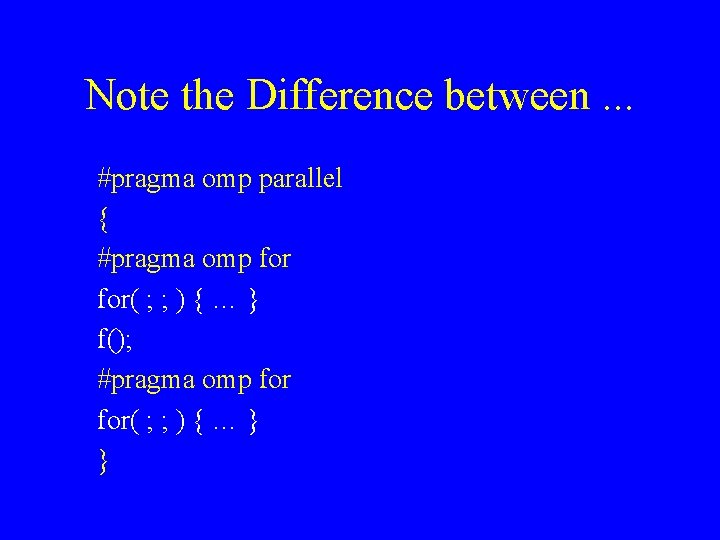

Parallel Sections Directive

    #pragma omp parallel
    {
        #pragma omp sections
        {
            { … }
            #pragma omp section    /* this is a delimiter */
            { … }
            #pragma omp section
            { … }
            …
        }
    }
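
A minimal sketch of sections in use, assuming two unrelated computations over the same read-only array. The function name `sum_and_max` is hypothetical, and the combined `parallel sections` form is used for brevity.

```c
/* Sum and maximum of the same array, computed as two independent
   sections; each section is executed once, by some thread of the team.
   Assumes n >= 1. */
void sum_and_max(const double *a, int n, double *sum, double *max)
{
    double s = 0.0;
    double m = a[0];
    #pragma omp parallel sections
    {
        #pragma omp section
        {
            for (int i = 0; i < n; i++)
                s += a[i];          /* only this section touches s */
        }
        #pragma omp section
        {
            for (int i = 1; i < n; i++)
                if (a[i] > m)
                    m = a[i];       /* only this section touches m */
        }
    }
    *sum = s;
    *max = m;
}
```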

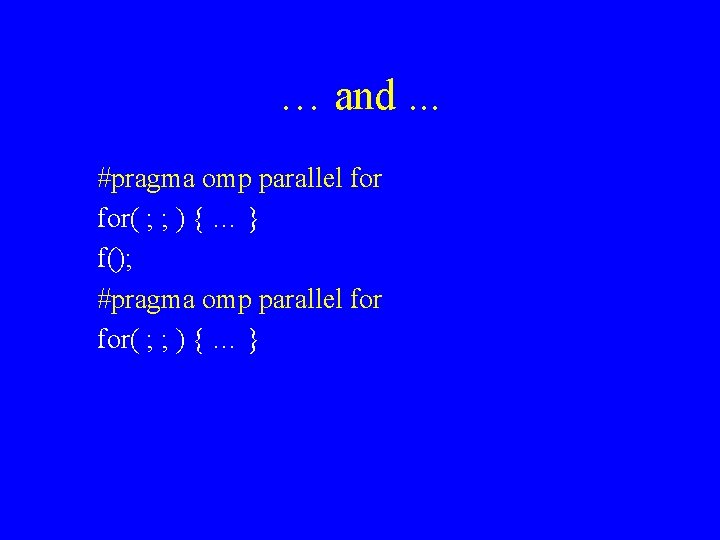

A Useful Shorthand

    #pragma omp parallel
    #pragma omp for
    for ( ; ; ) { … }

is equivalent to

    #pragma omp parallel for
    for ( ; ; ) { … }

(Same for parallel sections.)

Note the Difference between...

    #pragma omp parallel
    {
        #pragma omp for
        for( ; ; ) { … }
        f();
        #pragma omp for
        for( ; ; ) { … }
    }

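… and...

    #pragma omp parallel for
    for( ; ; ) { … }
    f();
    #pragma omp parallel for
    for( ; ; ) { … }

With a single enclosing parallel region, every thread executes f(). With two separate parallel for directives, f() runs once, sequentially, between the two parallel regions.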

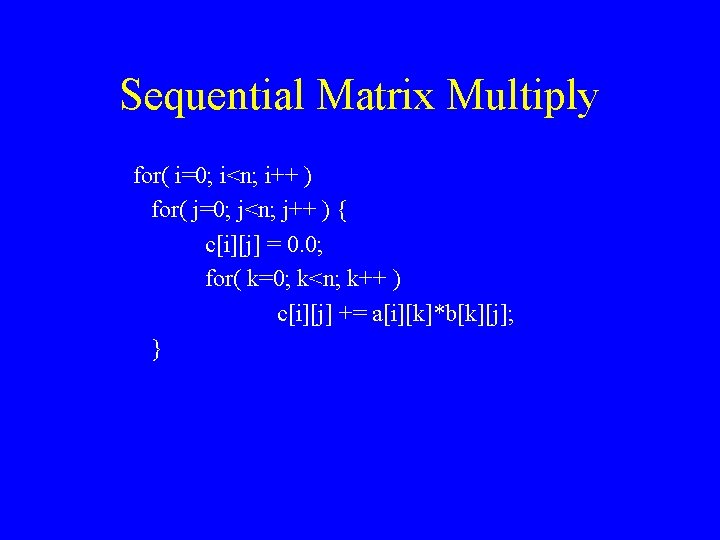

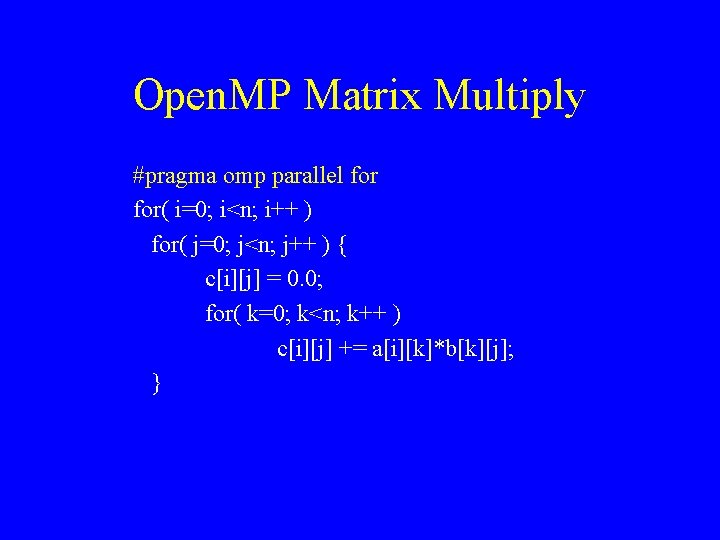

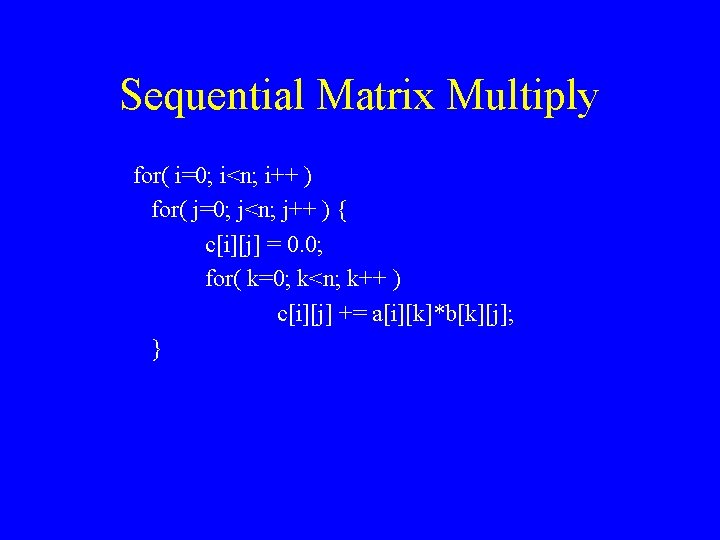

Sequential Matrix Multiply

    for( i=0; i<n; i++ )
        for( j=0; j<n; j++ ) {
            c[i][j] = 0.0;
            for( k=0; k<n; k++ )
                c[i][j] += a[i][k]*b[k][j];
        }

OpenMP Matrix Multiply

    #pragma omp parallel for
    for( i=0; i<n; i++ )
        for( j=0; j<n; j++ ) {
            c[i][j] = 0.0;
            for( k=0; k<n; k++ )
                c[i][j] += a[i][k]*b[k][j];
        }
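
A compilable sketch of the same loop nest follows. The fixed size `N` and the function name `matmul` are assumptions for illustration. The inner indices are declared inside the loops so that every thread has its own `j` and `k`; in C, indices declared outside the parallel loop would be shared.

```c
#define N 4   /* hypothetical fixed size, for illustration only */

/* c = a * b; rows of c are divided among the threads. */
void matmul(double a[N][N], double b[N][N], double c[N][N])
{
    #pragma omp parallel for
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            c[i][j] = 0.0;
            for (int k = 0; k < N; k++)
                c[i][j] += a[i][k] * b[k][j];
        }
}
```

Each row of `c` is written by exactly one thread, so no synchronization is needed inside the loop.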

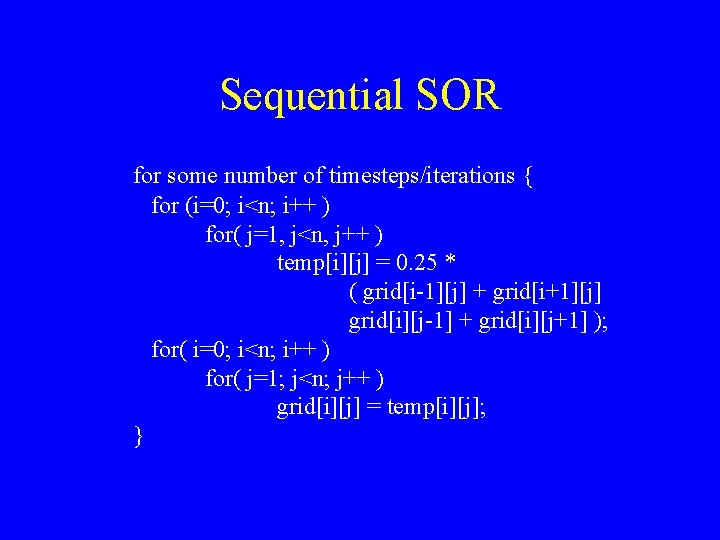

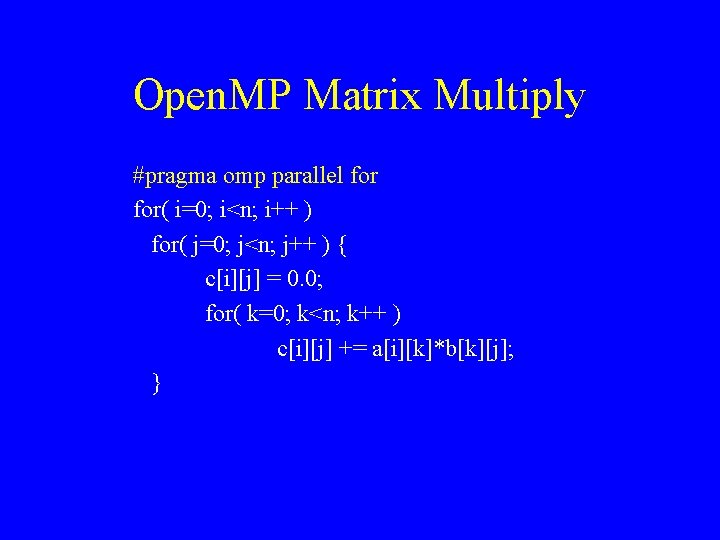

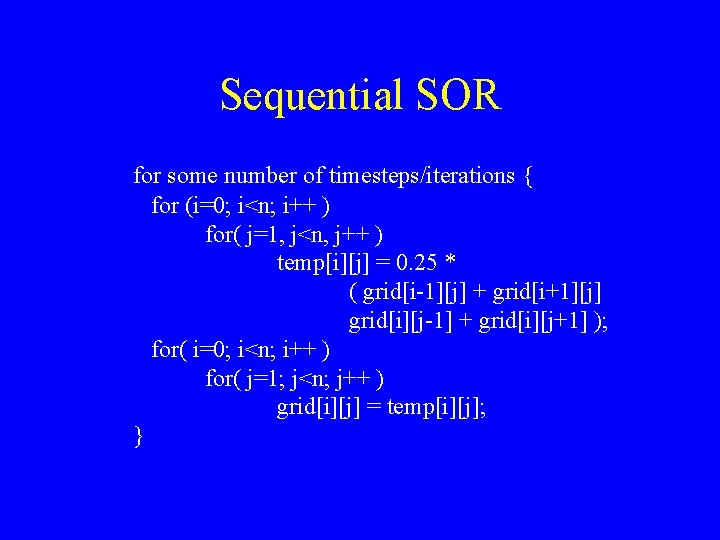

Sequential SOR

    for some number of timesteps/iterations {
        for( i=1; i<n; i++ )
            for( j=1; j<n; j++ )
                temp[i][j] = 0.25 *
                    ( grid[i-1][j] + grid[i+1][j] +
                      grid[i][j-1] + grid[i][j+1] );
        for( i=1; i<n; i++ )
            for( j=1; j<n; j++ )
                grid[i][j] = temp[i][j];
    }

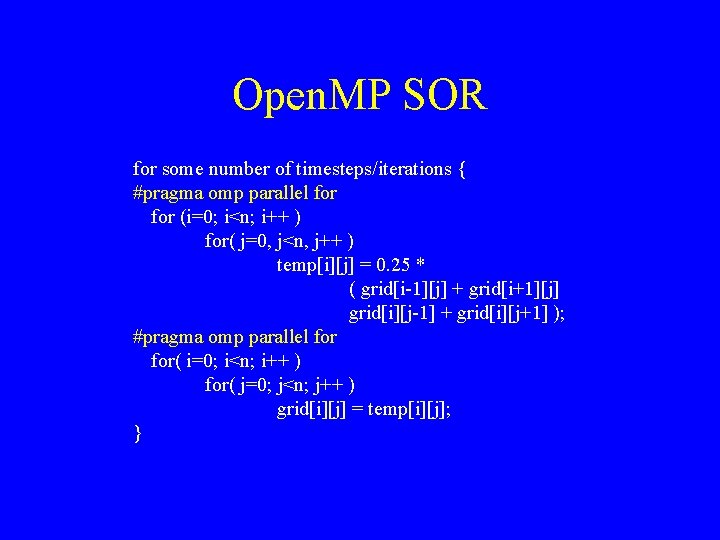

OpenMP SOR

    for some number of timesteps/iterations {
        #pragma omp parallel for
        for( i=1; i<n; i++ )
            for( j=1; j<n; j++ )
                temp[i][j] = 0.25 *
                    ( grid[i-1][j] + grid[i+1][j] +
                      grid[i][j-1] + grid[i][j+1] );
        #pragma omp parallel for
        for( i=1; i<n; i++ )
            for( j=1; j<n; j++ )
                grid[i][j] = temp[i][j];
    }

Equivalent OpenMP SOR

    for some number of timesteps/iterations {
        #pragma omp parallel
        {
            #pragma omp for
            for( i=1; i<n; i++ )
                for( j=1; j<n; j++ )
                    temp[i][j] = 0.25 *
                        ( grid[i-1][j] + grid[i+1][j] +
                          grid[i][j-1] + grid[i][j+1] );
            #pragma omp for
            for( i=1; i<n; i++ )
                for( j=1; j<n; j++ )
                    grid[i][j] = temp[i][j];
        }
    }
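
One time step of this scheme can be sketched as a compilable function. The grid size `GRID_N`, the function name `sor_step`, and the treatment of the outermost rows and columns as fixed boundary values are assumptions; the slides leave boundary handling implicit.

```c
#define GRID_N 6   /* hypothetical grid size, boundary rows and columns included */

/* One time step: compute interior averages into temp, then copy back.
   Row and column 0 and GRID_N-1 are left untouched (fixed boundary). */
void sor_step(double grid[GRID_N][GRID_N], double temp[GRID_N][GRID_N])
{
    #pragma omp parallel
    {
        #pragma omp for
        for (int i = 1; i < GRID_N - 1; i++)
            for (int j = 1; j < GRID_N - 1; j++)
                temp[i][j] = 0.25 * (grid[i-1][j] + grid[i+1][j] +
                                     grid[i][j-1] + grid[i][j+1]);
        /* implicit barrier: every temp value is ready before the copy */
        #pragma omp for
        for (int i = 1; i < GRID_N - 1; i++)
            for (int j = 1; j < GRID_N - 1; j++)
                grid[i][j] = temp[i][j];
    }
}
```

The barrier between the two loops matters here: without it, a thread could overwrite grid values that another thread still needs for its averages.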

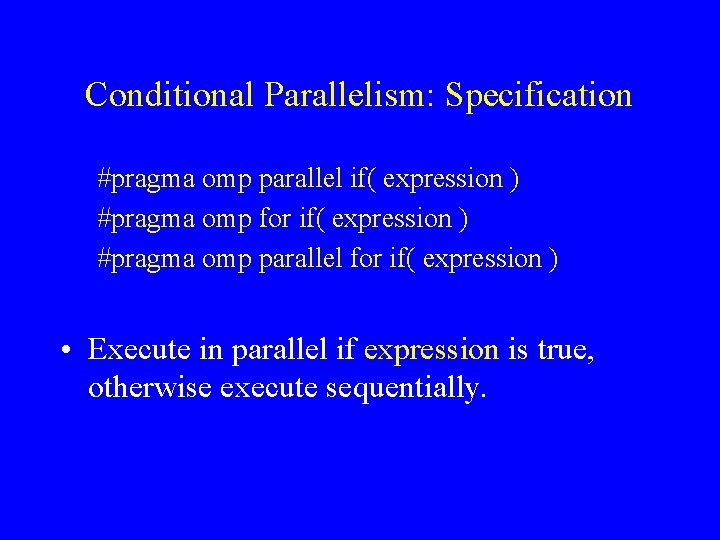

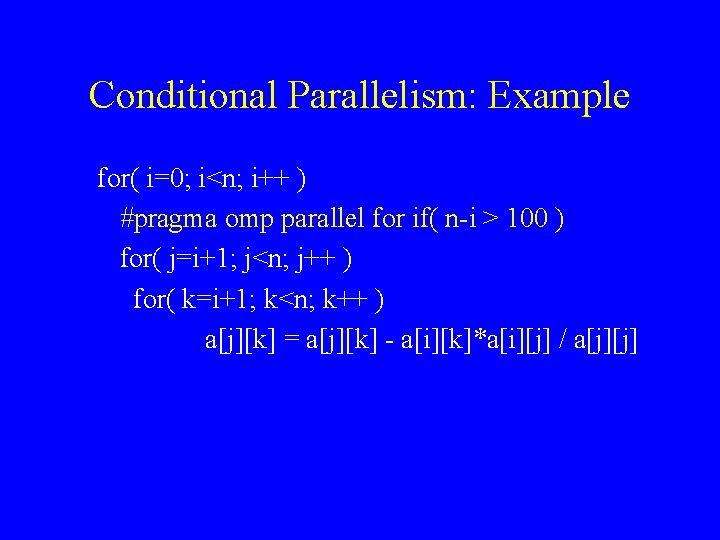

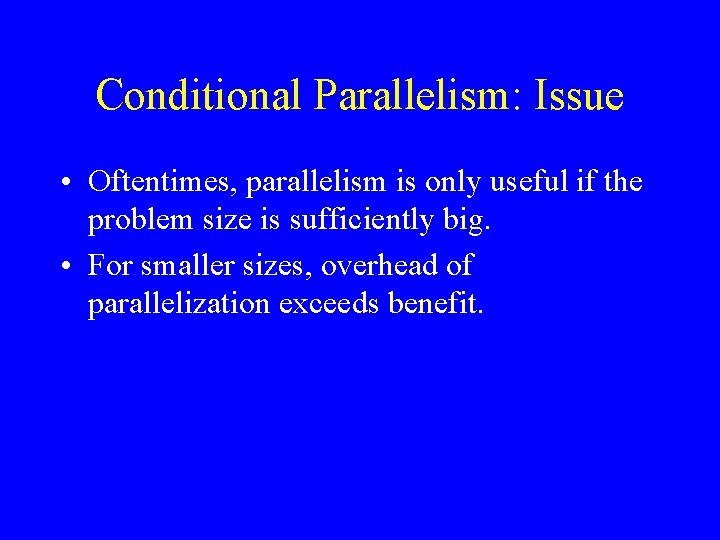

Some Advanced Features

- Conditional parallelism.
- Scheduling options.

(More can be found in the specification.)

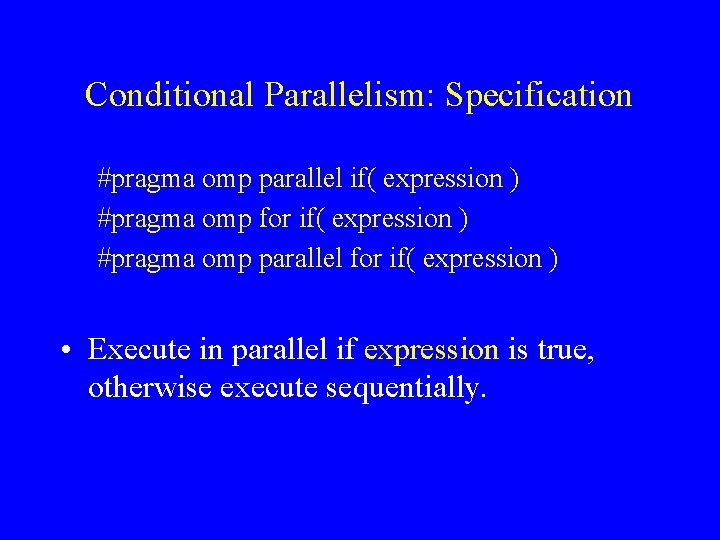

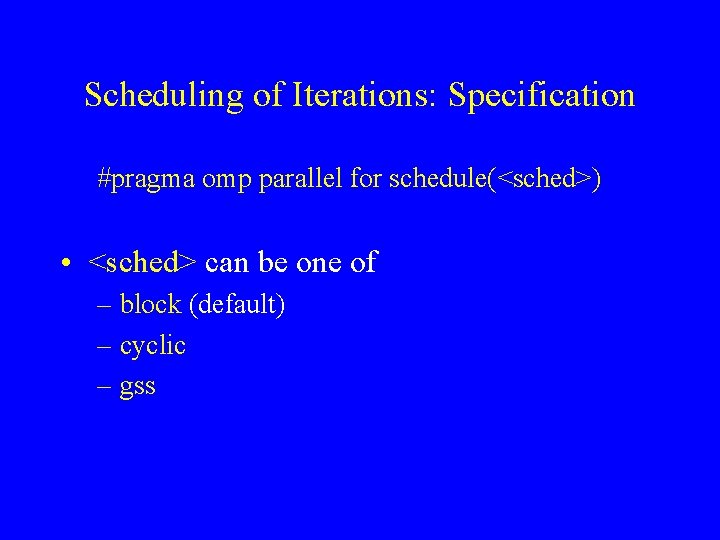

Conditional Parallelism: Issue

- Oftentimes, parallelism is only useful if the problem size is sufficiently big.
- For smaller sizes, the overhead of parallelization exceeds the benefit.

Conditional Parallelism: Specification

    #pragma omp parallel if( expression )
    #pragma omp parallel for if( expression )

- Execute in parallel if expression is true, otherwise execute sequentially.
- The if clause belongs to the parallel construct; a work-sharing for on its own does not accept it.

Conditional Parallelism: Example

    for( i=0; i<n; i++ )
        #pragma omp parallel for if( n-i > 100 )
        for( j=i+1; j<n; j++ )
            for( k=i+1; k<n; k++ )
                a[j][k] = a[j][k] - a[i][k]*a[i][j] / a[j][j];
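
A smaller, self-contained sketch of the if clause. The function name `increment_all` is hypothetical, and the threshold of 1000 is an arbitrary placeholder rather than a measured break-even point.

```c
/* Add 1.0 to every element; go parallel only when n is large enough
   for the work to outweigh the cost of starting the threads. */
void increment_all(double *v, int n)
{
    #pragma omp parallel for if (n > 1000)
    for (int i = 0; i < n; i++)
        v[i] += 1.0;
}
```

The result is the same on both paths; only the execution strategy changes with `n`.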

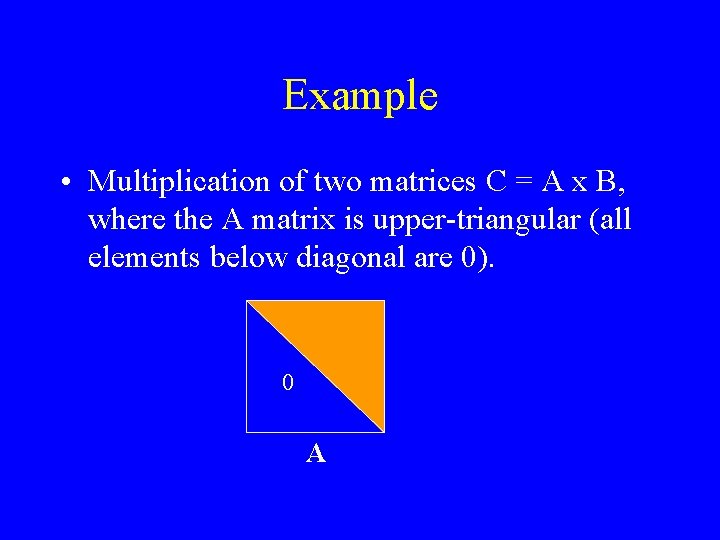

Scheduling of Iterations: Issue

- Scheduling: assigning iterations to a thread.
- So far, we have assumed the default, which is typically block scheduling.
- OpenMP allows other scheduling strategies as well, for instance cyclic, gss (guided self-scheduling), etc.

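Scheduling of Iterations: Specification

    #pragma omp parallel for schedule(<sched>)

- <sched> can be one of
  - block (typically the default), written schedule(static) in OpenMP
  - cyclic, written schedule(static, 1)
  - gss, written schedule(guided)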

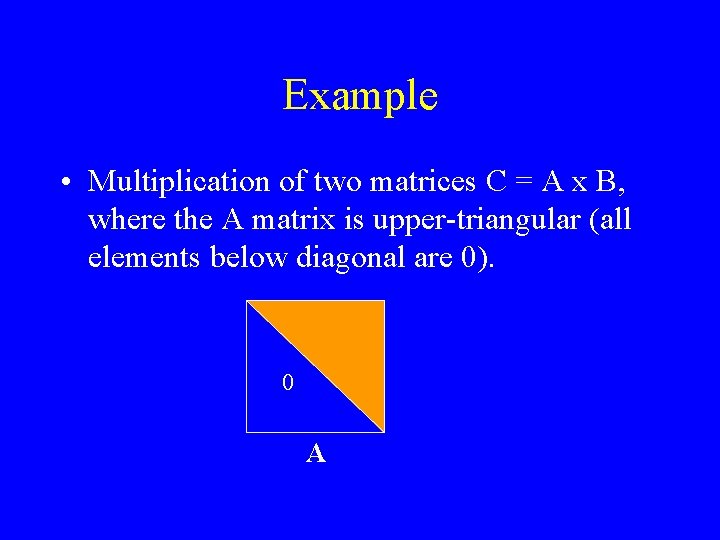

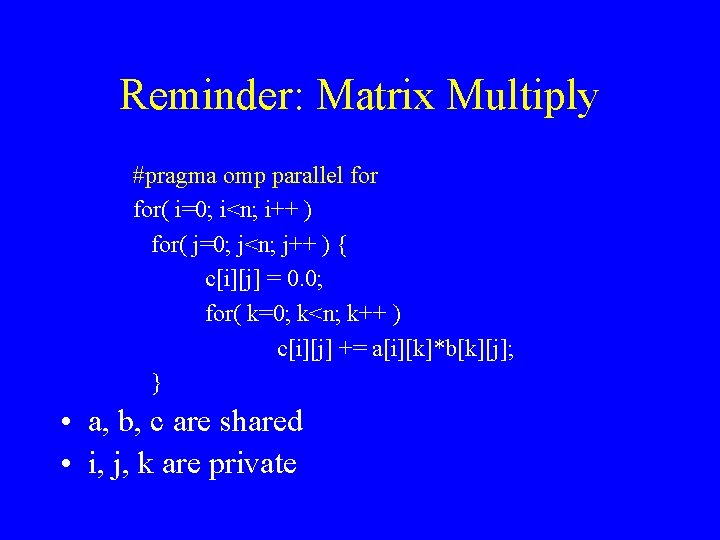

Example

- Multiplication of two matrices C = A x B, where the A matrix is upper-triangular (all elements below the diagonal are 0).

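Sequential Matrix Multiply Becomes

    for( i=0; i<n; i++ )
        for( j=0; j<n; j++ ) {
            c[i][j] = 0.0;
            for( k=i; k<n; k++ )
                c[i][j] += a[i][k]*b[k][j];
        }

Load imbalance with block distribution: row i needs only n-i inner iterations, so the thread that holds the first block of rows does the most work.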

OpenMP Matrix Multiply

    #pragma omp parallel for schedule( static, 1 )   /* cyclic */
    for( i=0; i<n; i++ )
        for( j=0; j<n; j++ ) {
            c[i][j] = 0.0;
            for( k=i; k<n; k++ )
                c[i][j] += a[i][k]*b[k][j];
        }
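
A compilable sketch of the cyclic version, using `schedule(static, 1)`, which is how OpenMP spells a round-robin distribution of single iterations. The size `TRI_N` and the function name `tri_matmul` are hypothetical.

```c
#define TRI_N 4   /* hypothetical fixed size, for illustration only */

/* c = a * b where a is upper-triangular (a[i][k] == 0 for k < i). */
void tri_matmul(double a[TRI_N][TRI_N], double b[TRI_N][TRI_N],
                double c[TRI_N][TRI_N])
{
    /* schedule(static, 1) deals rows out round-robin, so each thread
       gets a mix of long rows (small i) and short rows (large i). */
    #pragma omp parallel for schedule(static, 1)
    for (int i = 0; i < TRI_N; i++)
        for (int j = 0; j < TRI_N; j++) {
            c[i][j] = 0.0;
            for (int k = i; k < TRI_N; k++)   /* skip the zeros below the diagonal */
                c[i][j] += a[i][k] * b[k][j];
        }
}
```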

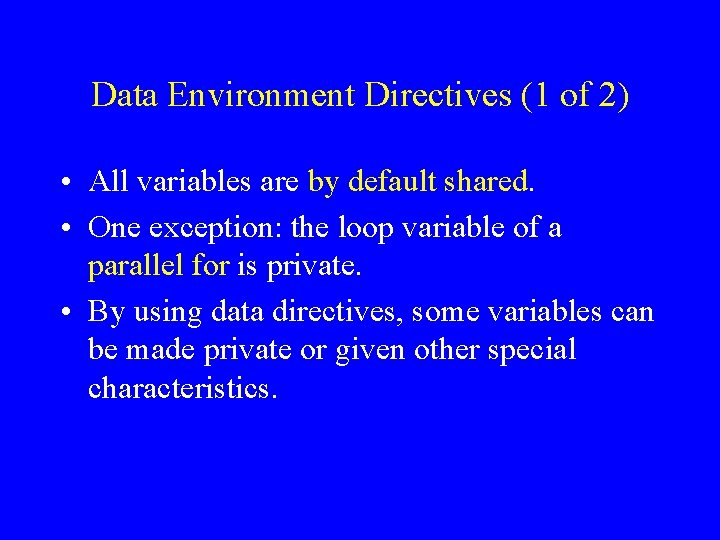

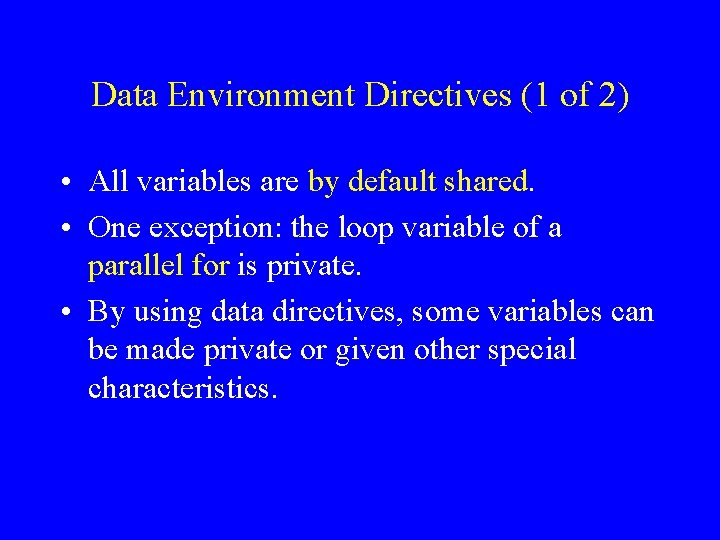

Data Environment Directives (1 of 2)

- All variables are by default shared.
- One exception: the loop variable of a parallel for is private.
- By using data directives, some variables can be made private or given other special characteristics.

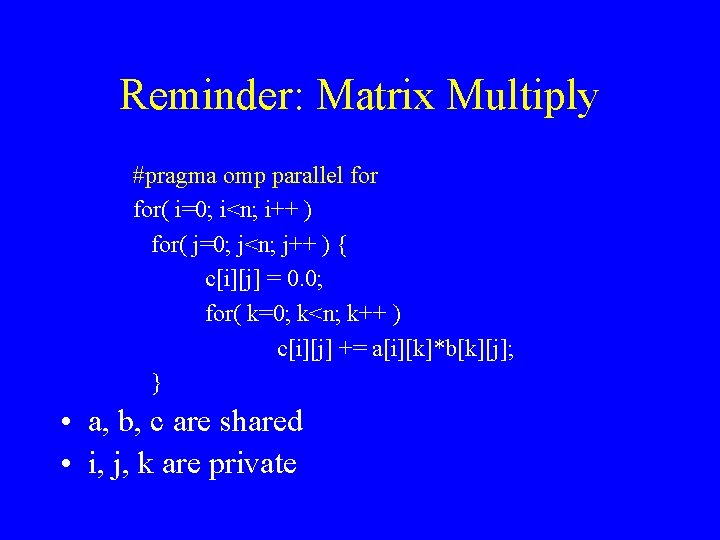

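Reminder: Matrix Multiply

    #pragma omp parallel for
    for( i=0; i<n; i++ )
        for( j=0; j<n; j++ ) {
            c[i][j] = 0.0;
            for( k=0; k<n; k++ )
                c[i][j] += a[i][k]*b[k][j];
        }

- a, b, c are shared.
- i is private, as the loop variable of the parallel for.
- j and k must be private as well. In C/C++ they are shared by default when declared outside the loop, so declare them inside the loop or list them in a private clause.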

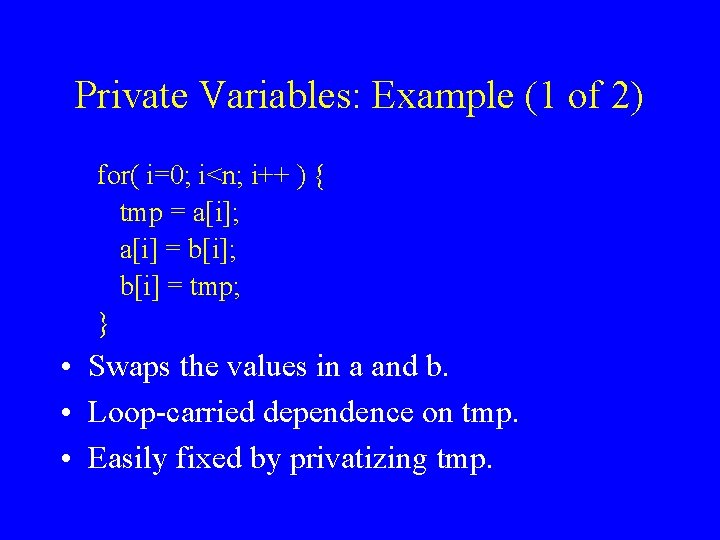

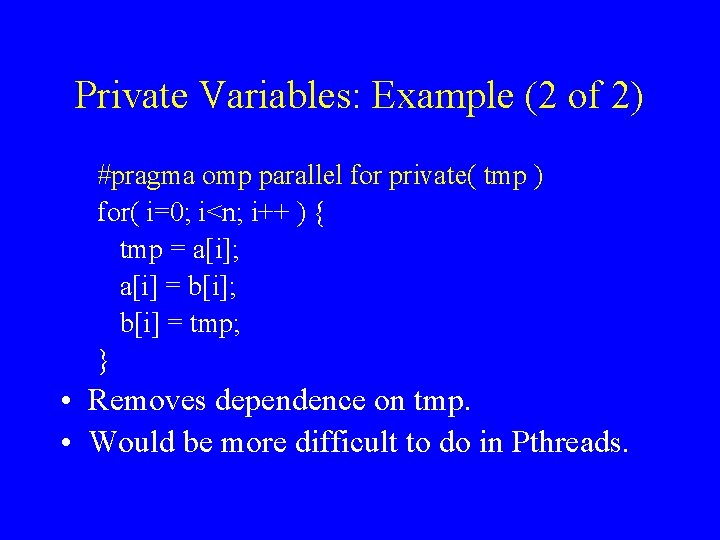

Data Environment Directives (2 of 2)

- Private
- Threadprivate
- Reduction

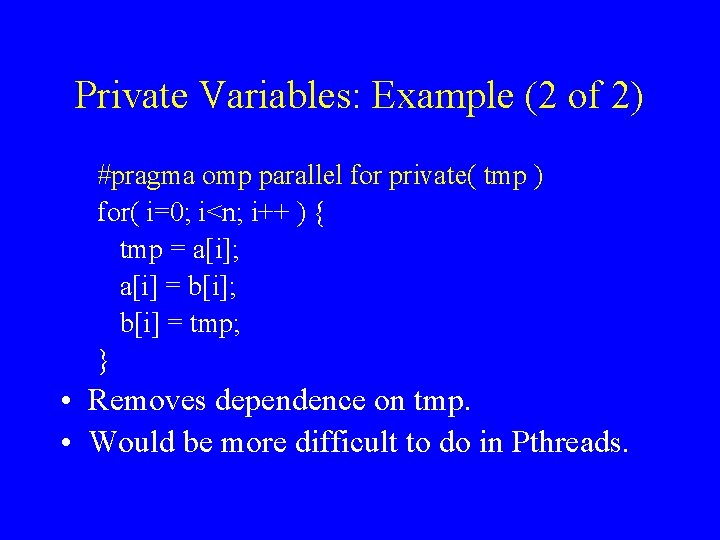

Private Variables

    #pragma omp parallel for private( list )

- Makes a private copy for each thread for each variable in the list.
- This and all further examples use parallel for, but the same applies to other region and work-sharing directives.

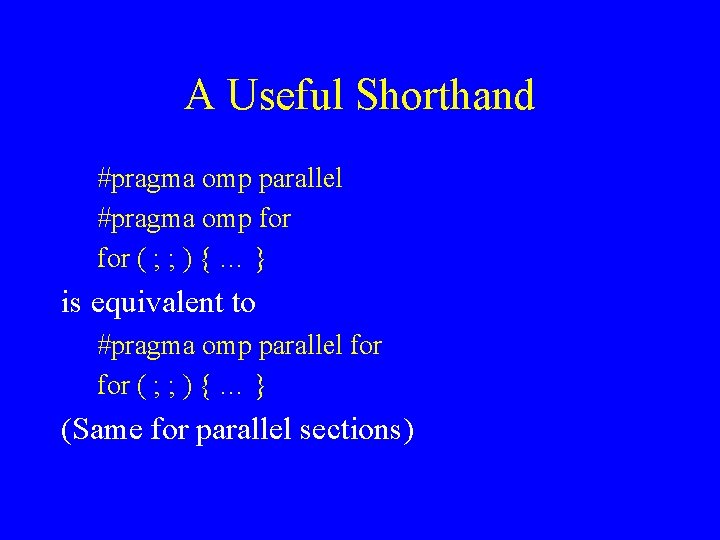

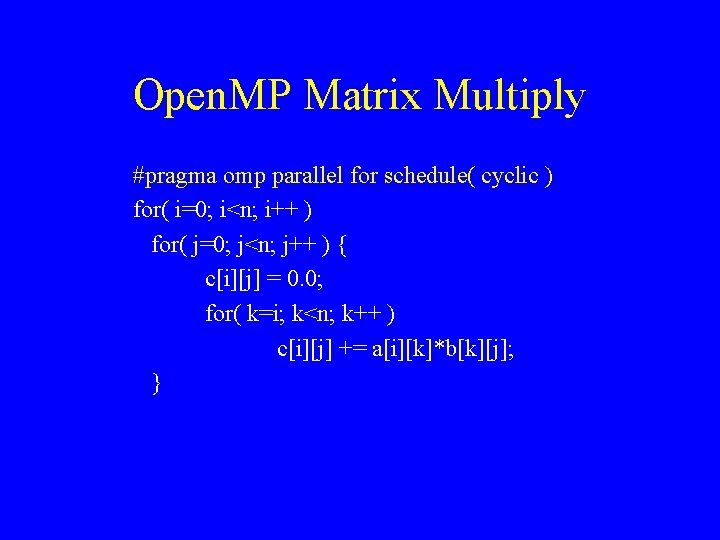

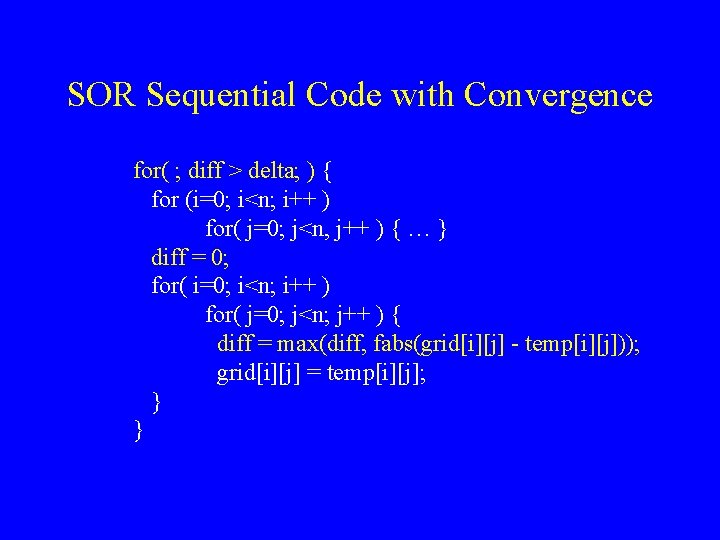

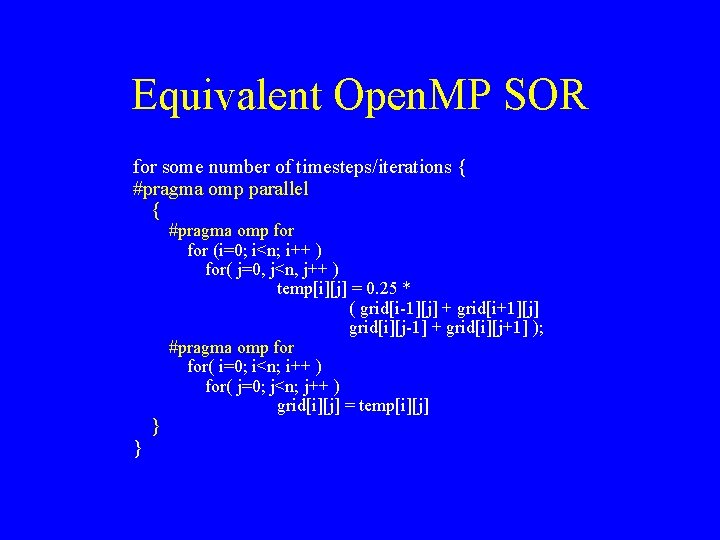

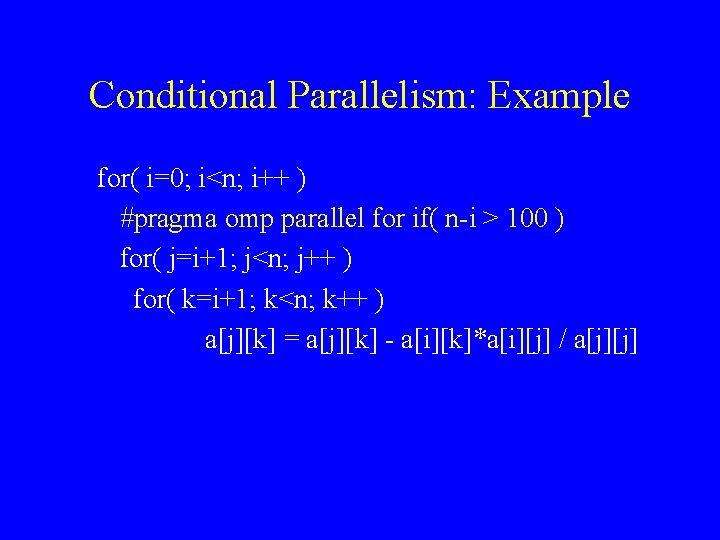

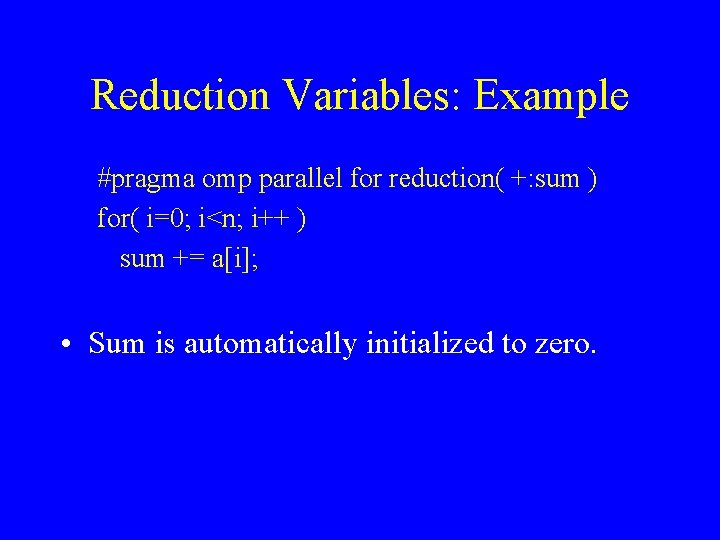

Private Variables: Example (1 of 2)

    for( i=0; i<n; i++ ) {
        tmp = a[i];
        a[i] = b[i];
        b[i] = tmp;
    }

- Swaps the values in a and b.
- Loop-carried dependence on tmp.
- Easily fixed by privatizing tmp.

Private Variables: Example (2 of 2)

    #pragma omp parallel for private( tmp )
    for( i=0; i<n; i++ ) {
        tmp = a[i];
        a[i] = b[i];
        b[i] = tmp;
    }

- Removes dependence on tmp.
- Would be more difficult to do in Pthreads.
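
The same swap as a compilable function, as a minimal sketch; the function name `swap_arrays` and the `int` element type are assumptions.

```c
/* Exchange the contents of a and b element by element. */
void swap_arrays(int *a, int *b, int n)
{
    int tmp;   /* shared by default; private(tmp) gives each thread its own copy */
    #pragma omp parallel for private(tmp)
    for (int i = 0; i < n; i++) {
        tmp = a[i];
        a[i] = b[i];
        b[i] = tmp;
    }
}
```

Without the private clause, two threads could interleave their writes to the single shared `tmp` and store the wrong value into `b`.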

![Private Variables Alternative 1 for i0 in i tmpi ai ai Private Variables: Alternative 1 for( i=0; i<n; i++ ) { tmp[i] = a[i]; a[i]](https://slidetodoc.com/presentation_image/1fb944cc5abb3009d19cfcc6ca4e1358/image-45.jpg)

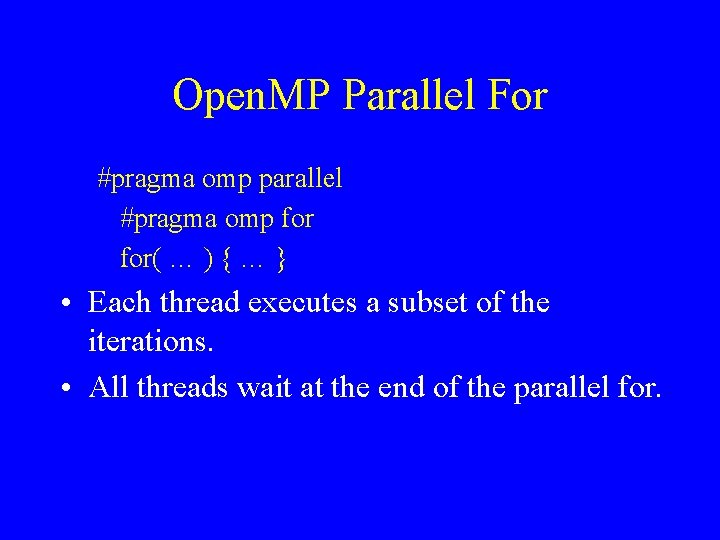

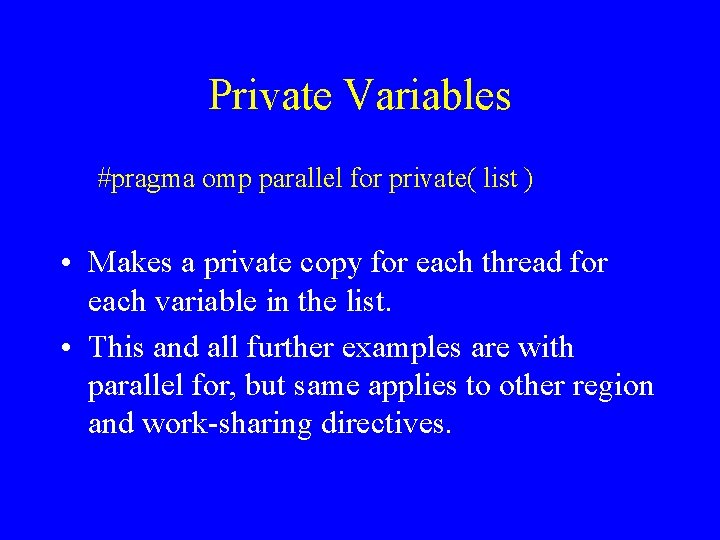

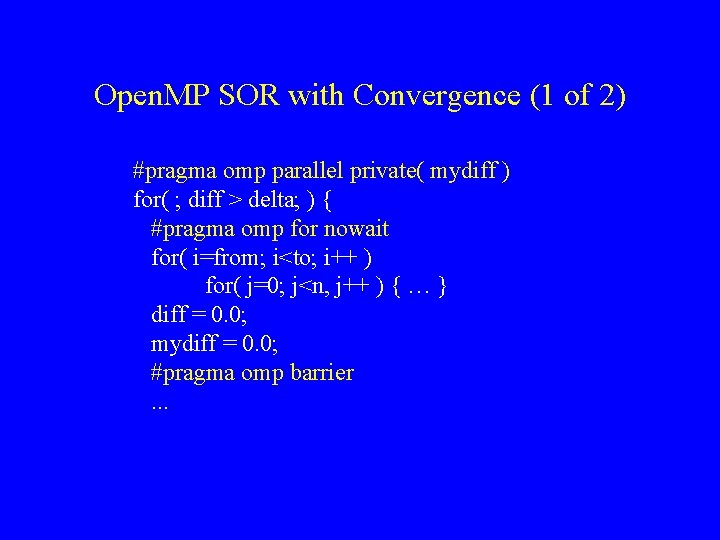

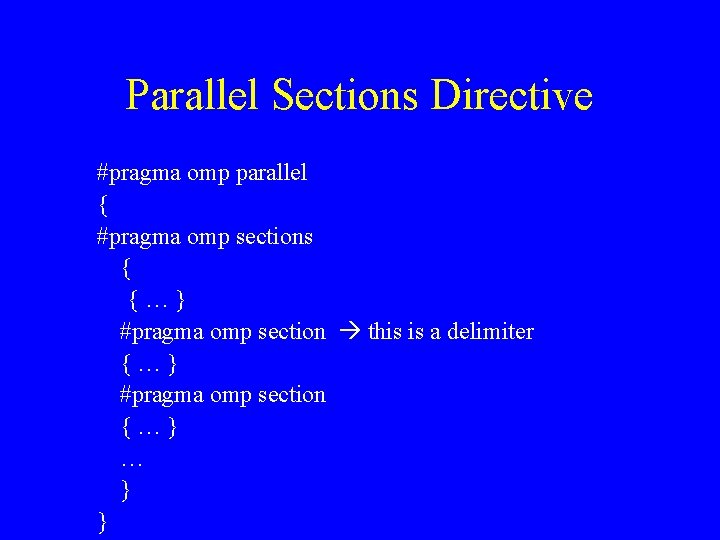

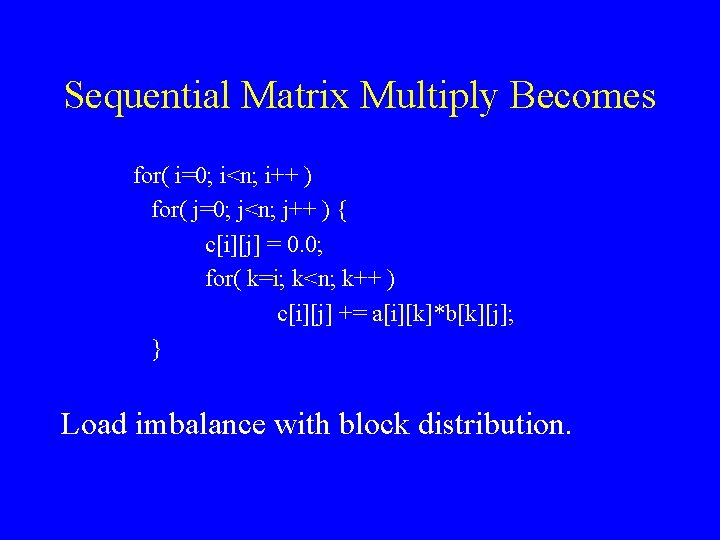

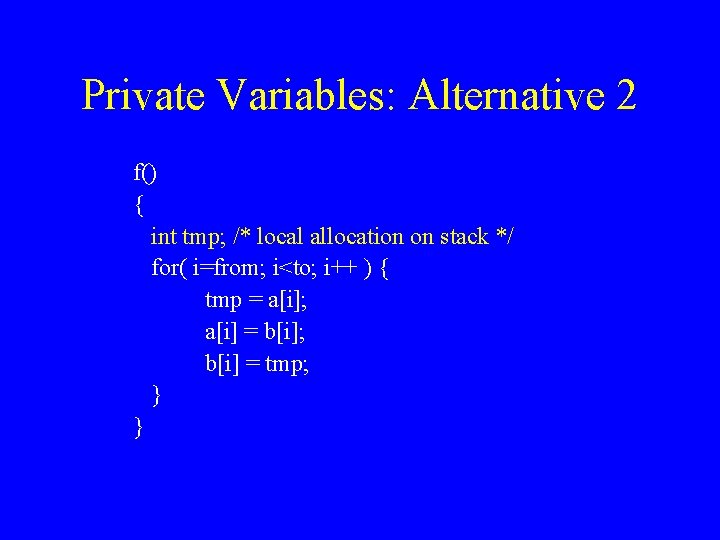

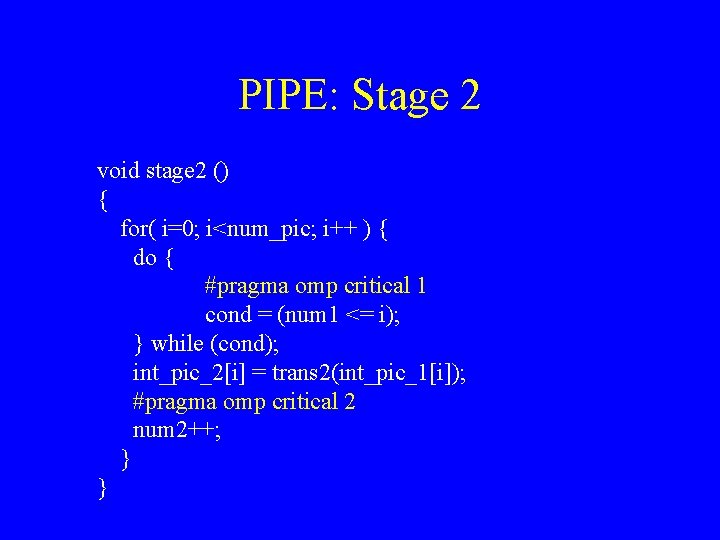

Private Variables: Alternative 1

    for( i=0; i<n; i++ ) {
        tmp[i] = a[i];
        a[i] = b[i];
        b[i] = tmp[i];
    }

- Requires sequential program change.
- Wasteful in space, O(n) vs. O(p).

Private Variables: Alternative 2

    f() {
        int tmp; /* local allocation on stack */
        for( i=from; i<to; i++ ) {
            tmp = a[i];
            a[i] = b[i];
            b[i] = tmp;
        }
    }

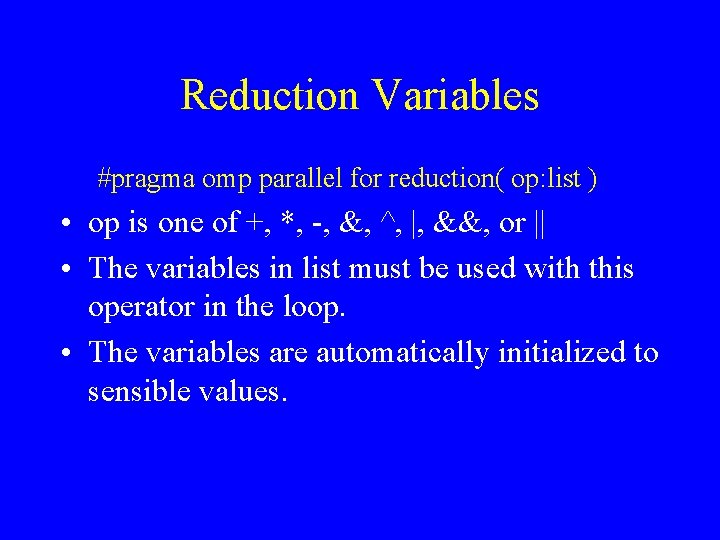

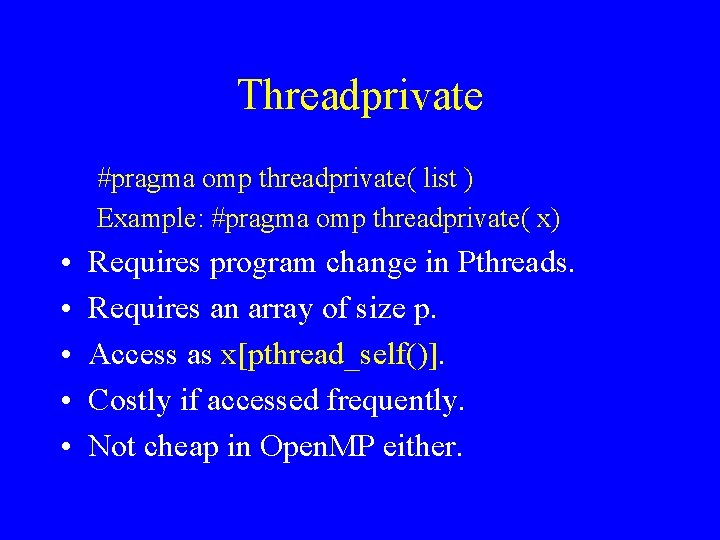

Threadprivate

- Private variables are private on a parallel region basis.
- Threadprivate variables are global variables that are private throughout the execution of the program.

Threadprivate

    #pragma omp threadprivate( list )

Example:

    #pragma omp threadprivate( x )

- Requires program change in Pthreads.
- Requires an array of size p.
- Access as x[pthread_self()].
- Costly if accessed frequently.
- Not cheap in OpenMP either.
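
A minimal sketch of a threadprivate global: a per-thread call counter that is combined at the end of the region. The names `calls`, `work`, and `count_calls` are hypothetical.

```c
static int calls = 0;              /* one copy per thread, due to the directive below */
#pragma omp threadprivate(calls)

/* Stands in for real work; counts how often this thread called it. */
static void work(void)
{
    calls++;
}

/* Runs work() n times in total and returns the sum of the per-thread counters. */
int count_calls(int n)
{
    int total = 0;
    #pragma omp parallel
    {
        calls = 0;                 /* reset this thread's copy */
        #pragma omp for
        for (int i = 0; i < n; i++)
            work();
        #pragma omp critical
        total += calls;            /* combine the counters, one thread at a time */
    }
    return total;
}
```

Because `calls` is a global, `work()` can reach it without an extra parameter, which is the case a private clause cannot cover.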

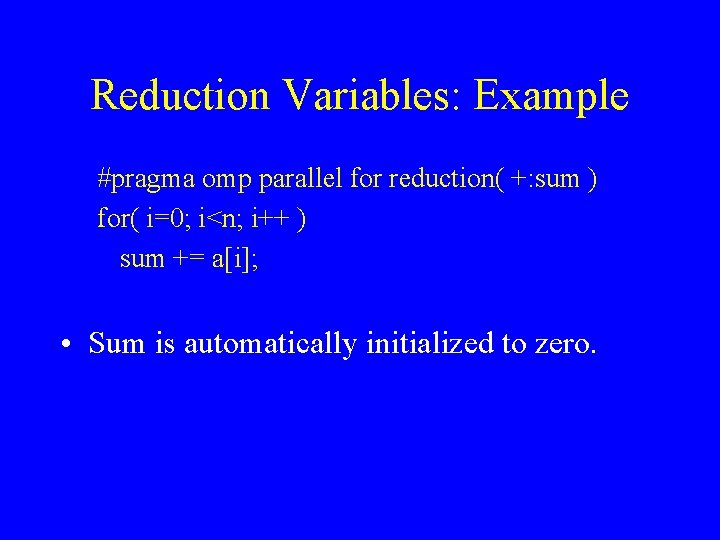

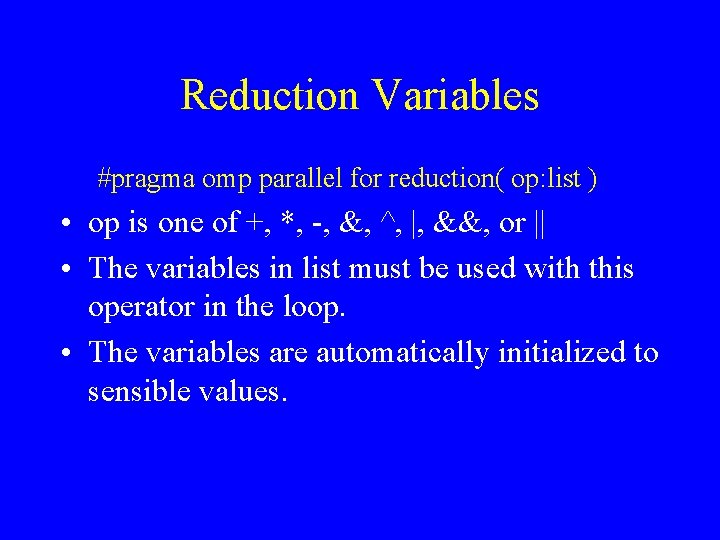

Reduction Variables

    #pragma omp parallel for reduction( op: list )

- op is one of +, *, -, &, ^, |, &&, or ||
- The variables in list must be used with this operator in the loop.
- The variables are automatically initialized to sensible values.

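Reduction Variables: Example

    #pragma omp parallel for reduction( +: sum )
    for( i=0; i<n; i++ )
        sum += a[i];

- Each thread's private copy of sum is automatically initialized to zero; the partial sums are combined with the original value of sum at the end of the loop.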
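
The reduction above as a compilable function, as a minimal sketch; the function name `array_sum` is hypothetical. The shared `sum` still has to be initialized by the program, since the partial results are added to its original value.

```c
/* Sum of the n elements of a. */
double array_sum(const double *a, int n)
{
    double sum = 0.0;   /* original value; the per-thread copies start at zero */
    #pragma omp parallel for reduction(+: sum)
    for (int i = 0; i < n; i++)
        sum += a[i];
    return sum;
}
```

With floating-point data, the order in which partial sums are combined may differ between runs, so results can vary in the last bits.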

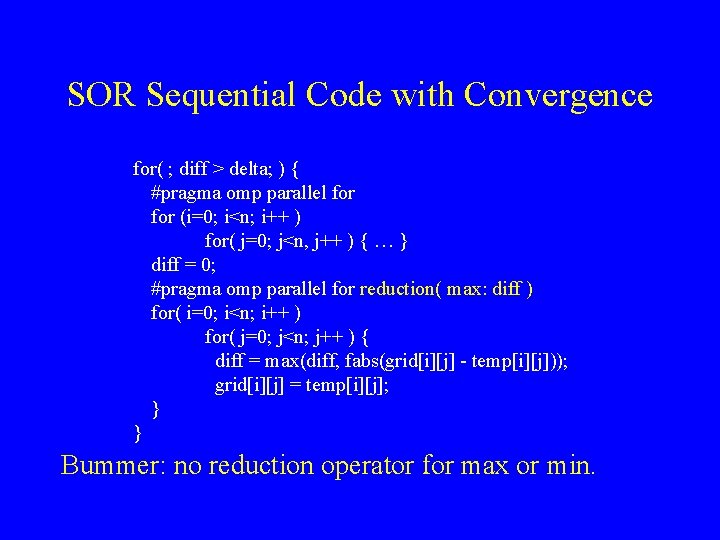

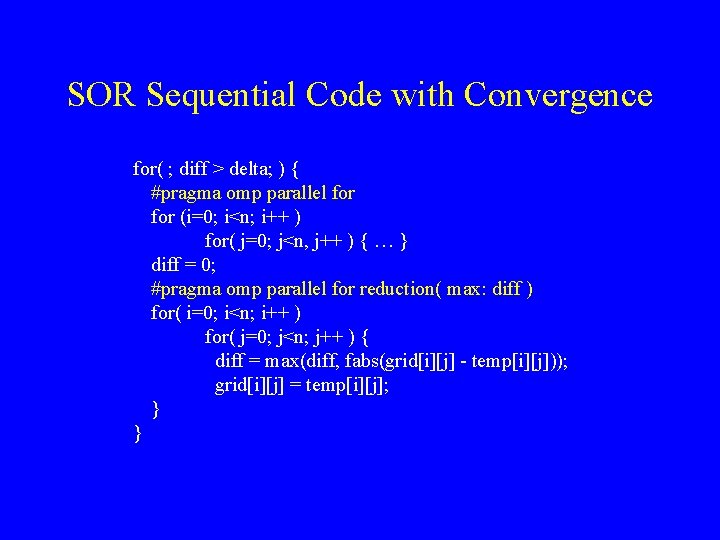

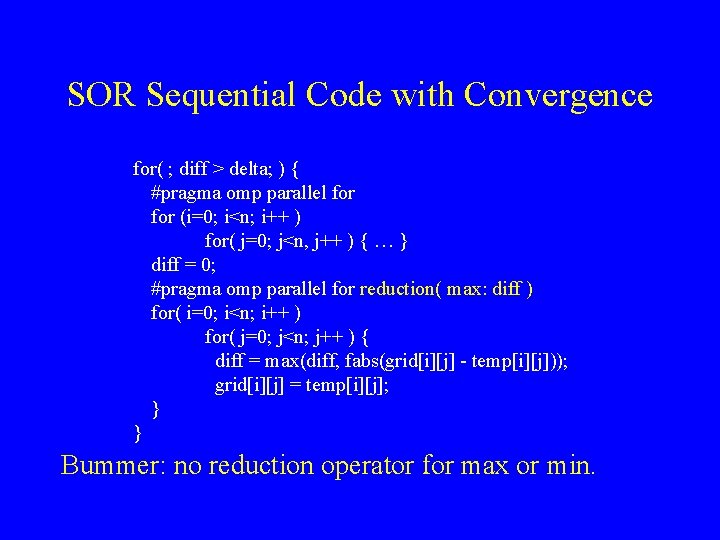

SOR Sequential Code with Convergence

    for( ; diff > delta; ) {
        for( i=0; i<n; i++ )
            for( j=0; j<n; j++ ) { … }
        diff = 0;
        for( i=0; i<n; i++ )
            for( j=0; j<n; j++ ) {
                diff = max(diff, fabs(grid[i][j] - temp[i][j]));
                grid[i][j] = temp[i][j];
            }
    }

SOR with Convergence: OpenMP Attempt

    for( ; diff > delta; ) {
        #pragma omp parallel for
        for( i=0; i<n; i++ )
            for( j=0; j<n; j++ ) { … }
        diff = 0;
        #pragma omp parallel for reduction( max: diff )
        for( i=0; i<n; i++ )
            for( j=0; j<n; j++ ) {
                diff = max(diff, fabs(grid[i][j] - temp[i][j]));
                grid[i][j] = temp[i][j];
            }
    }

Bummer: no reduction operator for max or min. (This holds for the OpenMP version these slides describe; C/C++ gained min and max reductions in OpenMP 3.1.)

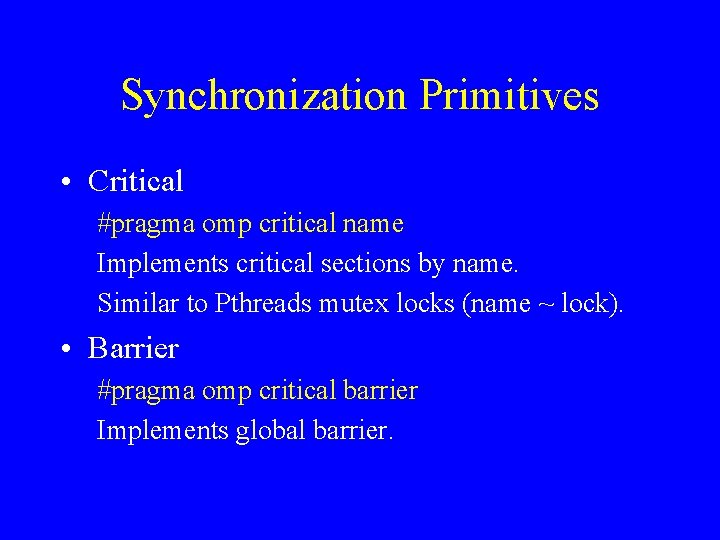

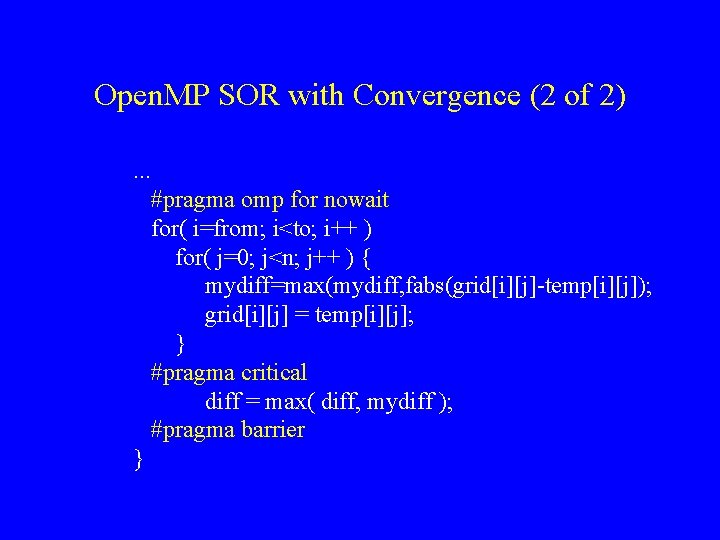

Synchronization Primitives

Critical:

    #pragma omp critical (name)

- Implements critical sections by name.
- Similar to Pthreads mutex locks (name ~ lock).

Barrier:

    #pragma omp barrier

- Implements global barrier.

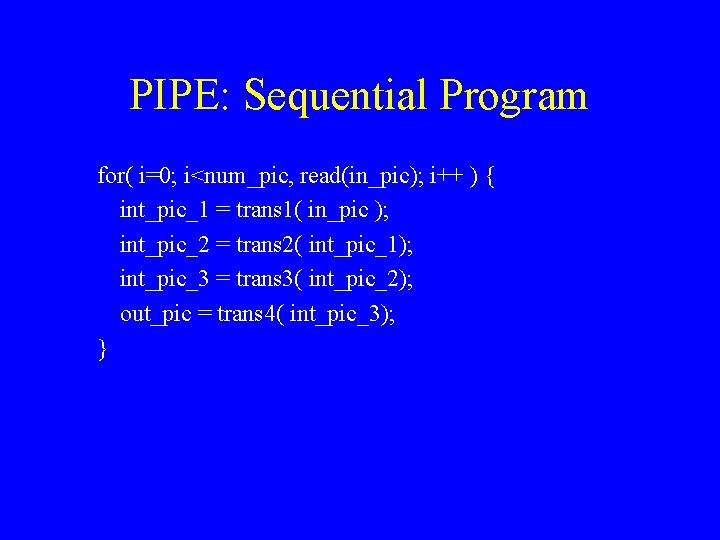

OpenMP SOR with Convergence (1 of 2)

    #pragma omp parallel private( mydiff )
    for( ; diff > delta; ) {
        #pragma omp for nowait
        for( i=from; i<to; i++ )
            for( j=0; j<n; j++ ) { … }
        diff = 0.0;
        mydiff = 0.0;
        #pragma omp barrier
        ...

OpenMP SOR with Convergence (2 of 2)

        ...
        #pragma omp for nowait
        for( i=from; i<to; i++ )
            for( j=0; j<n; j++ ) {
                mydiff = max(mydiff, fabs(grid[i][j]-temp[i][j]));
                grid[i][j] = temp[i][j];
            }
        #pragma omp critical
        diff = max( diff, mydiff );
        #pragma omp barrier
    }
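
The per-thread maximum followed by a critical section can be isolated in a small sketch. This is not the full SOR loop, only the max-combining step; the function name `max_abs_diff` is hypothetical, and the absolute value is written out by hand so the sketch does not depend on the math library.

```c
/* Largest absolute difference between corresponding elements of x and y. */
double max_abs_diff(const double *x, const double *y, int n)
{
    double diff = 0.0;                       /* shared result */
    #pragma omp parallel
    {
        double mydiff = 0.0;                 /* private: declared inside the region */
        #pragma omp for nowait
        for (int i = 0; i < n; i++) {
            double d = x[i] - y[i];
            if (d < 0.0)
                d = -d;                      /* absolute value */
            if (d > mydiff)
                mydiff = d;
        }
        #pragma omp critical
        {
            if (mydiff > diff)
                diff = mydiff;               /* one thread at a time */
        }
    }   /* implicit barrier at the end of the parallel region */
    return diff;
}
```

Each thread enters the critical section once, so the cost of the lock is paid per thread rather than per element.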

Synchronization Primitives

- Big bummer: no condition variables.
- Result: must busy-wait for condition synchronization.
- Clumsy.
- Very inefficient on some architectures.

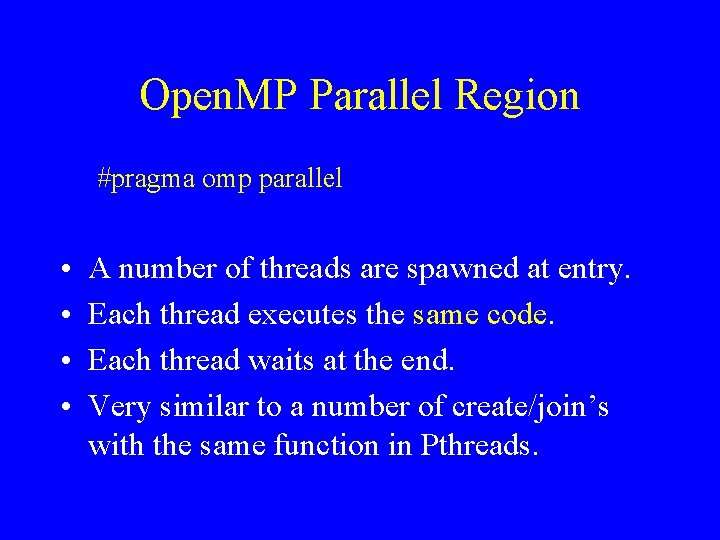

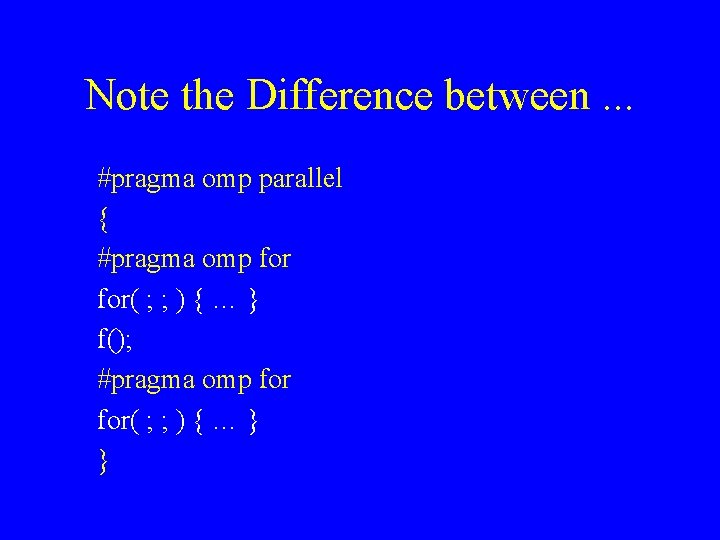

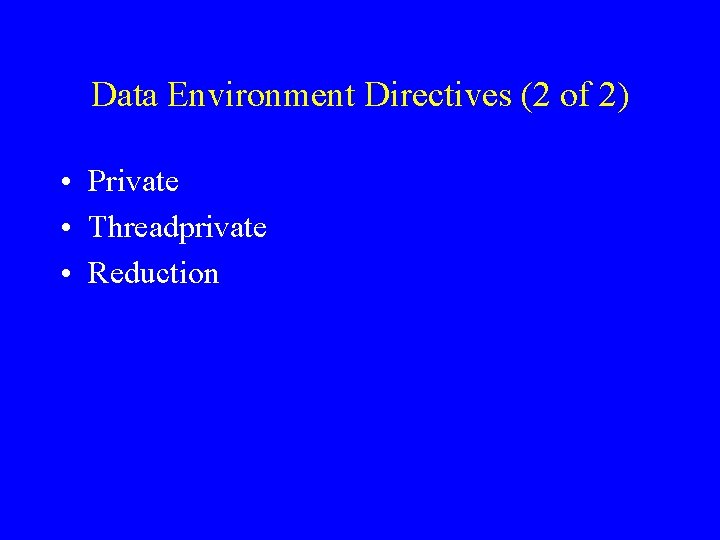

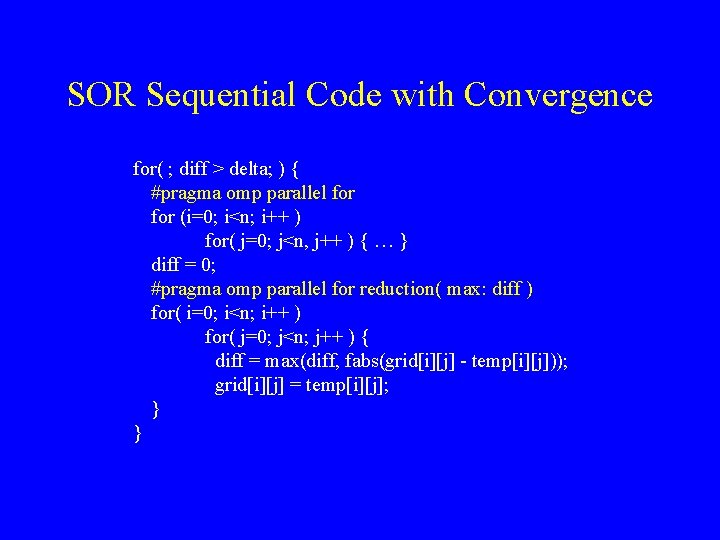

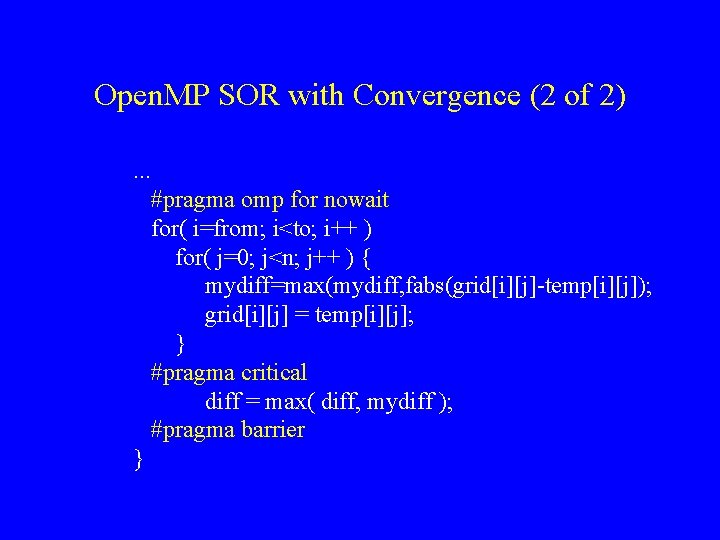

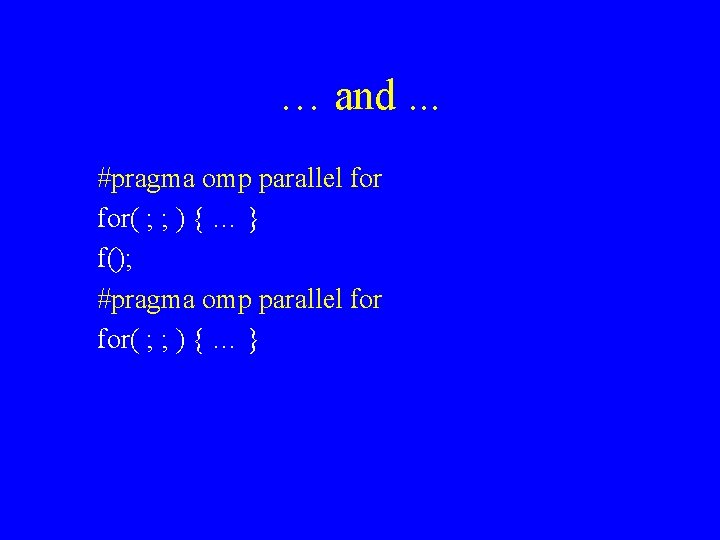

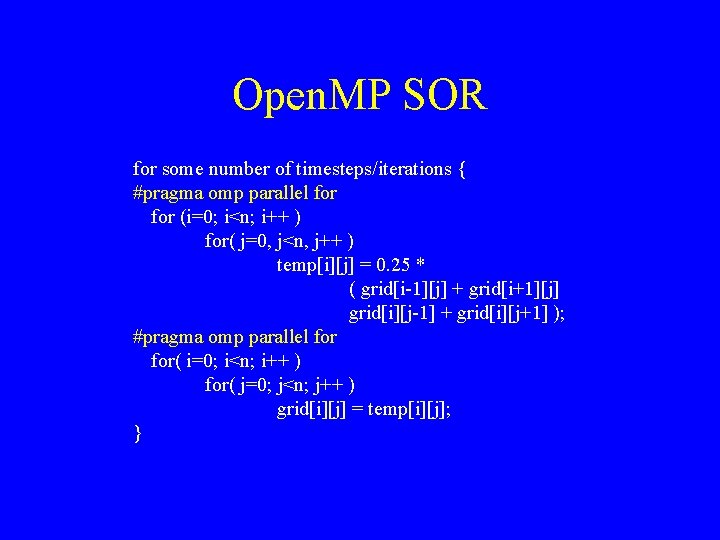

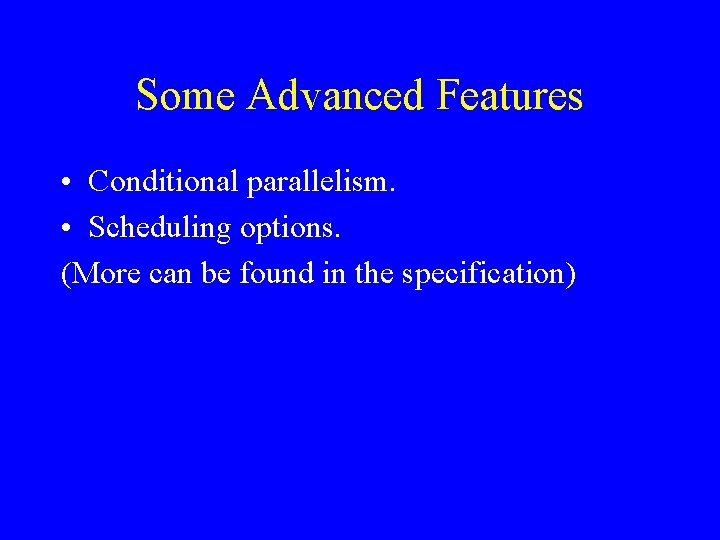

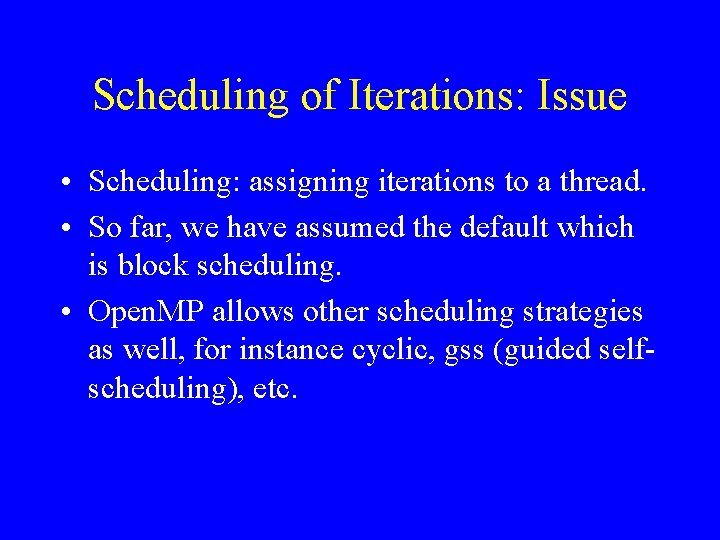

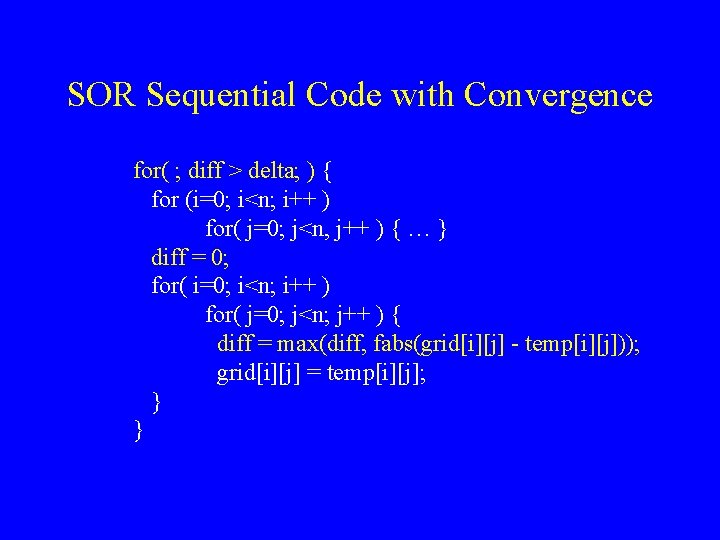

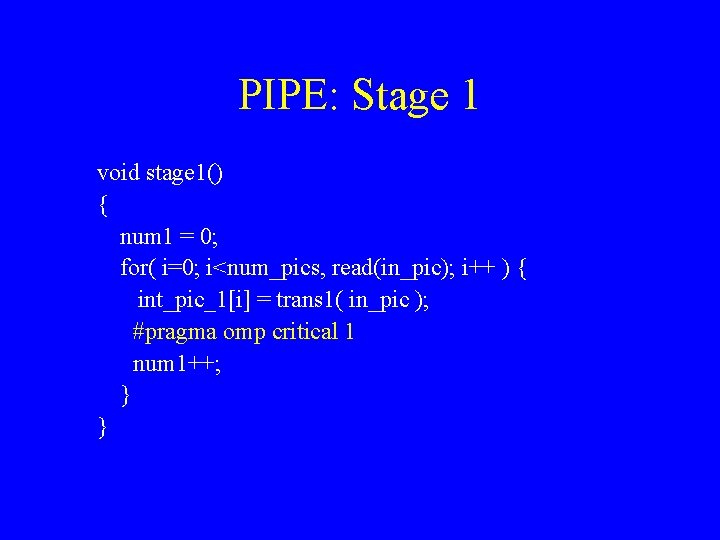

PIPE: Sequential Program

    for( i=0; i<num_pics, read(in_pic); i++ ) {
        int_pic_1 = trans1( in_pic );
        int_pic_2 = trans2( int_pic_1 );
        int_pic_3 = trans3( int_pic_2 );
        out_pic = trans4( int_pic_3 );
    }

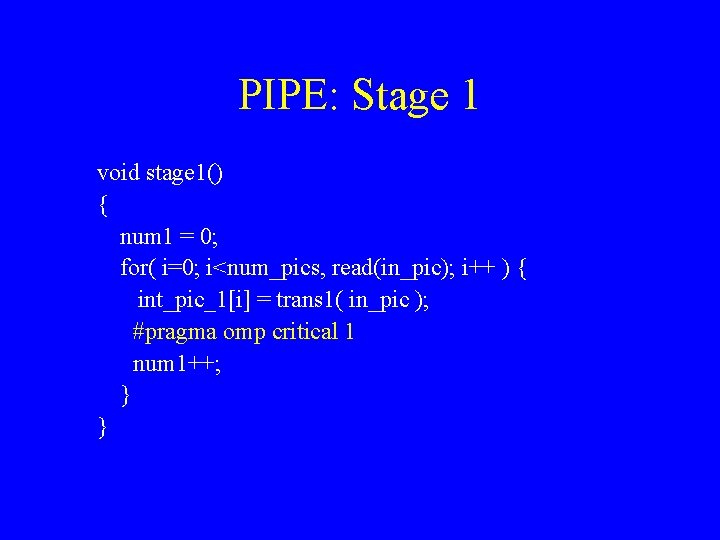

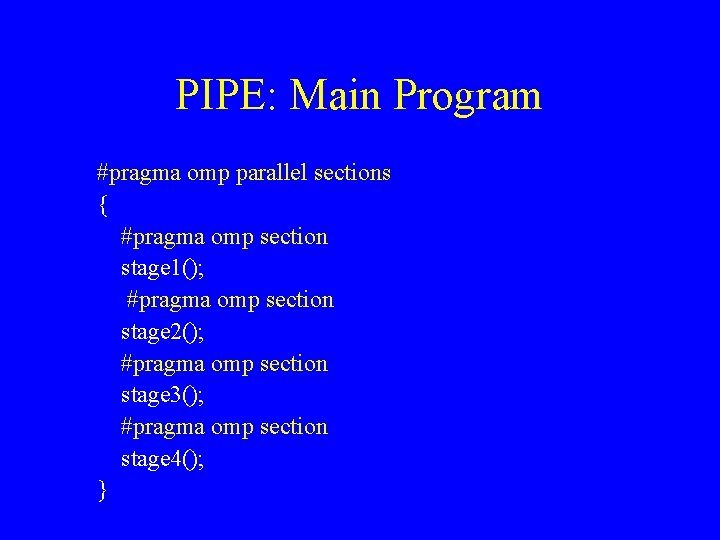

Sequential vs. Parallel Execution

- Sequential
- Parallel

(Figure legend: each color is a picture; each horizontal line is a processor.)

![PIPE Parallel Program P 0 for i0 inumpics readinpic i intpic1i PIPE: Parallel Program P 0: for( i=0; i<num_pics, read(in_pic); i++ ) { int_pic_1[i] =](https://slidetodoc.com/presentation_image/1fb944cc5abb3009d19cfcc6ca4e1358/image-60.jpg)

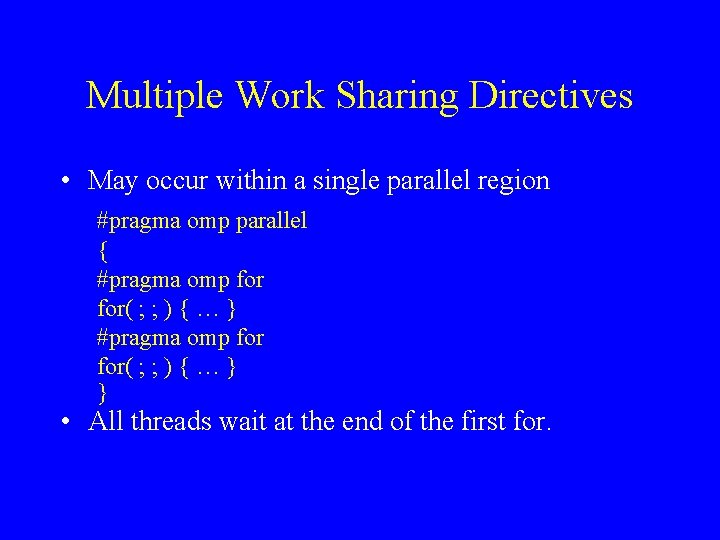

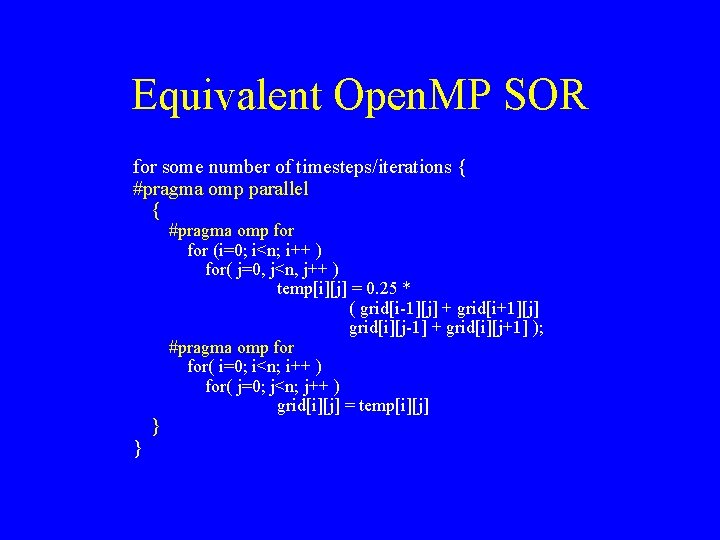

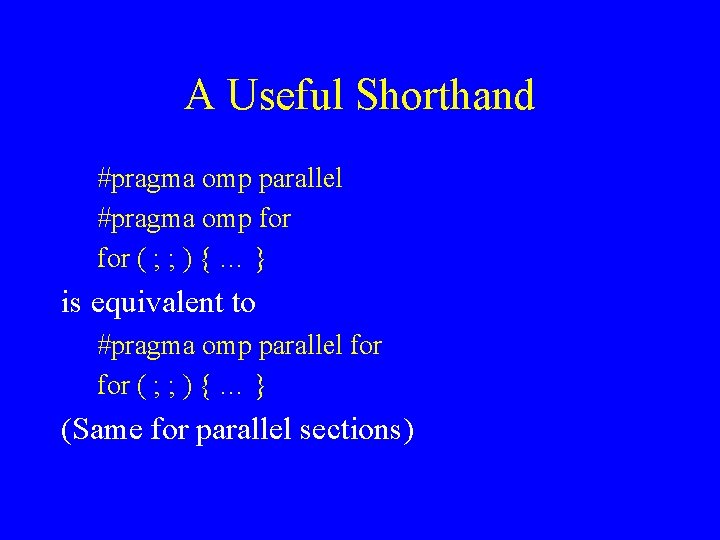

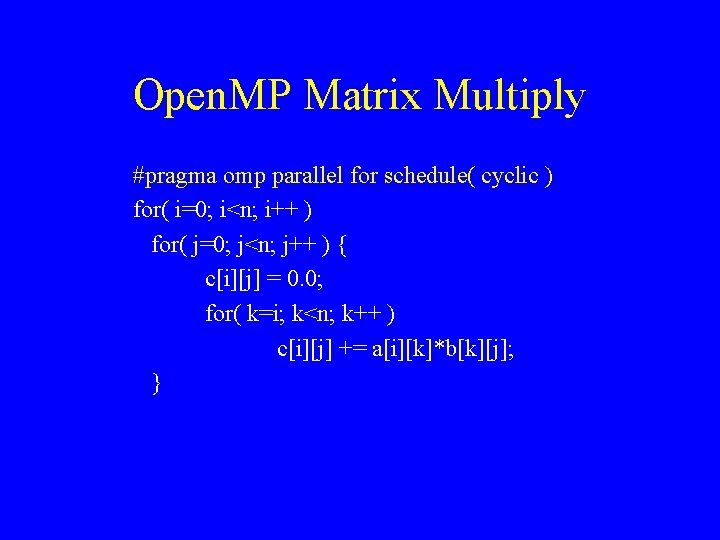

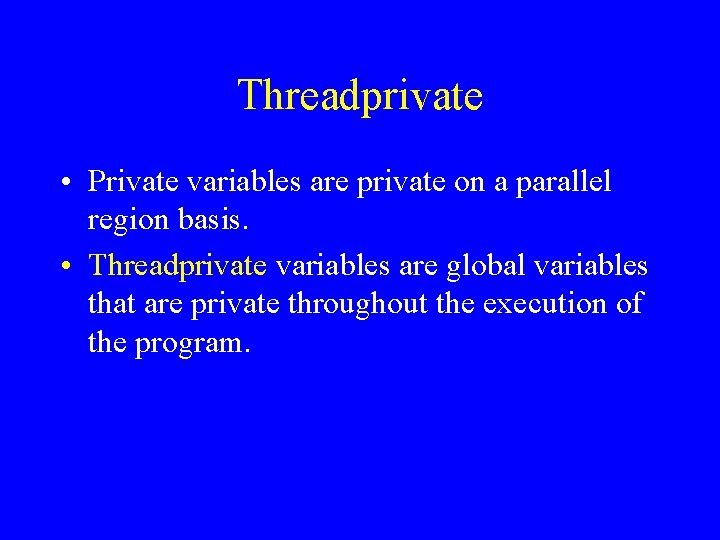

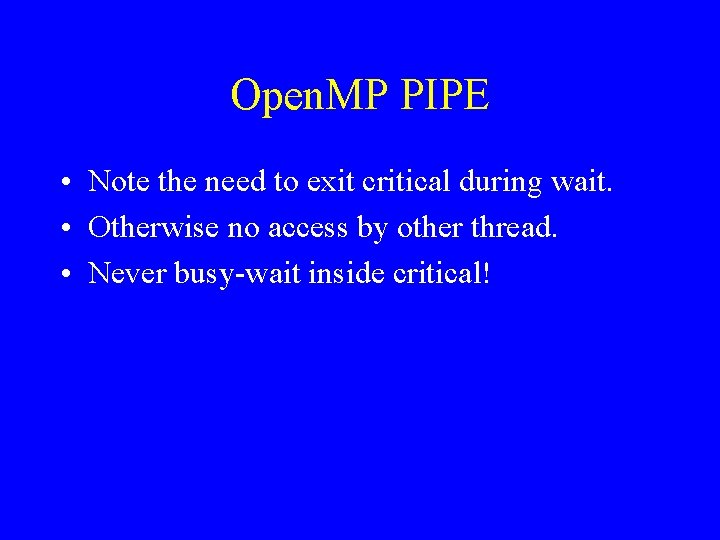

PIPE: Parallel Program

    P0: for( i=0; i<num_pics, read(in_pic); i++ ) {
            int_pic_1[i] = trans1( in_pic );
            signal( event_1_2[i] );
        }

    P1: for( i=0; i<num_pics; i++ ) {
            wait( event_1_2[i] );
            int_pic_2[i] = trans2( int_pic_1[i] );
            signal( event_2_3[i] );
        }

PIPE: Main Program

    #pragma omp parallel sections
    {
        #pragma omp section
        stage1();
        #pragma omp section
        stage2();
        #pragma omp section
        stage3();
        #pragma omp section
        stage4();
    }

PIPE: Stage 1

    void stage1() {
        num1 = 0;
        for( i=0; i<num_pics, read(in_pic); i++ ) {
            int_pic_1[i] = trans1( in_pic );
            #pragma omp critical (c1)
            num1++;
        }
    }

PIPE: Stage 2

    void stage2() {
        for( i=0; i<num_pics; i++ ) {
            do {
                #pragma omp critical (c1)
                cond = (num1 <= i);
            } while (cond);
            int_pic_2[i] = trans2( int_pic_1[i] );
            #pragma omp critical (c2)
            num2++;
        }
    }

OpenMP PIPE

- Note the need to exit the critical section during the wait.
- Otherwise no other thread can get access.
- Never busy-wait inside a critical section!
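
A two-stage version of the pipeline can be sketched as below. Everything here is illustrative: `NUM_ITEMS`, the arrays, the critical-section name `c1`, and the arithmetic standing in for trans1() and trans2() are hypothetical. The sketch assumes that stage 1 gets to run, meaning the team has at least two threads or the runtime executes the sections in program order; otherwise stage 2 would spin forever.

```c
#define NUM_ITEMS 8        /* hypothetical number of items in the pipeline */

static int stage1_out[NUM_ITEMS];
static int stage2_out[NUM_ITEMS];
static int num1;           /* items finished by stage 1; accessed only inside critical (c1) */

static void stage1(void)
{
    for (int i = 0; i < NUM_ITEMS; i++) {
        stage1_out[i] = i * i;               /* stands in for trans1() */
        #pragma omp critical (c1)
        num1++;                              /* publish item i */
    }
}

static void stage2(void)
{
    for (int i = 0; i < NUM_ITEMS; i++) {
        int cond;
        do {                                 /* the wait loop is OUTSIDE the critical section */
            #pragma omp critical (c1)
            cond = (num1 <= i);              /* only the test itself holds the lock */
        } while (cond);
        stage2_out[i] = stage1_out[i] + 1;   /* stands in for trans2() */
    }
}

void run_pipeline(void)
{
    num1 = 0;
    #pragma omp parallel sections
    {
        #pragma omp section
        stage1();
        #pragma omp section
        stage2();
    }
}
```

If the do-while loop were placed inside the critical section, stage 1 could never enter it to increment `num1`, and both stages would hang.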