ECE 1747 Parallel Programming Shared Memory Multithreading Pthreads


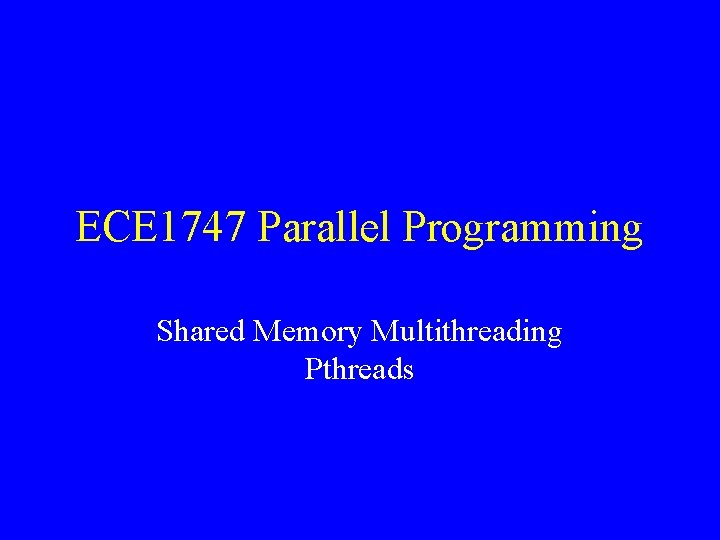

Shared Memory • All threads access the same shared memory data space. (Figure: proc 1, proc 2, proc 3, …, proc N all attached to one shared memory address space.)

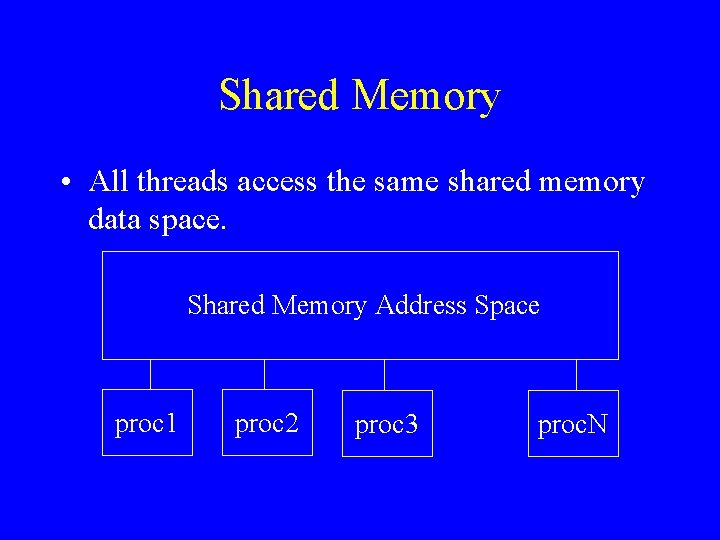

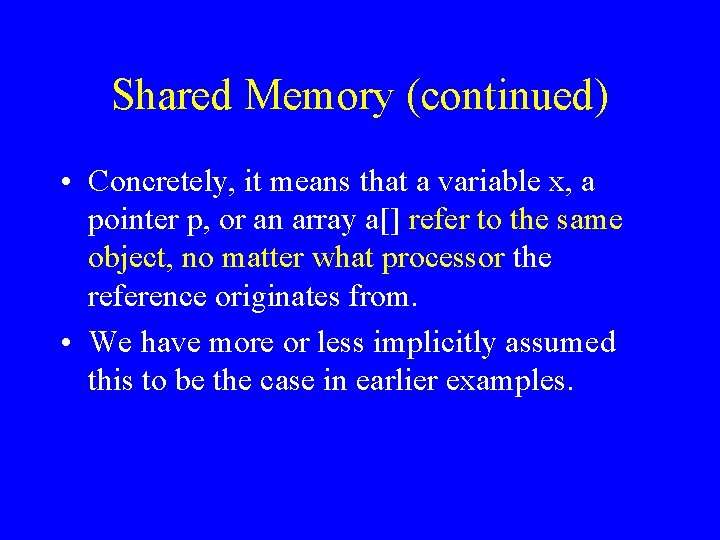

Shared Memory (continued) • Concretely, it means that a variable x, a pointer p, or an array a[] refers to the same object, no matter which processor the reference originates from. • We have more or less implicitly assumed this to be the case in earlier examples.

Shared Memory (Figure: a single array a in the shared address space, accessed by proc 1, proc 2, proc 3, …, proc N.)

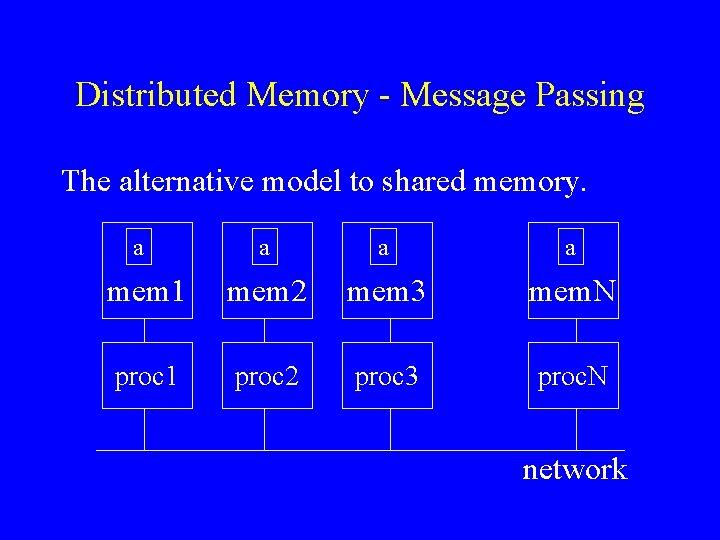

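Distributed Memory - Message Passing • The alternative model to shared memory. (Figure: proc 1, proc 2, proc 3, …, proc N, each with its own memory mem 1, mem 2, mem 3, …, mem N, connected by a network; separate copies of a live in different memories.)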

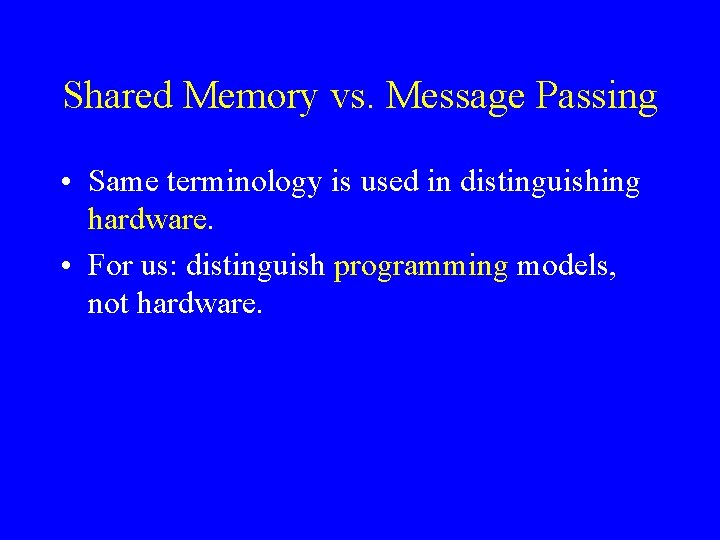

Shared Memory vs. Message Passing • Same terminology is used in distinguishing hardware. • For us: distinguish programming models, not hardware.

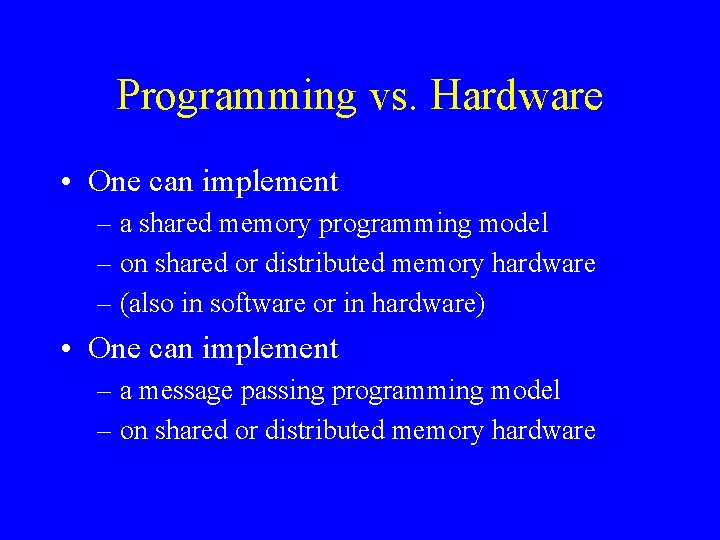

Programming vs. Hardware • One can implement – a shared memory programming model – on shared or distributed memory hardware – (also in software or in hardware) • One can implement – a message passing programming model – on shared or distributed memory hardware

Portability of programming models (Figure: shared memory programming and message passing programming can each be mapped onto either a shared memory machine or a distributed memory machine.)

Shared Memory Programming: Important Point to Remember • No matter what the implementation, it conceptually looks like shared memory. • There may be some (important) performance differences.

Multithreading • User has explicit control over threads. • Good: control can be used for performance benefit. • Bad: user has to deal with it.

Pthreads • POSIX standard shared-memory multithreading interface. • Provides primitives for thread management and synchronization.

What does the user have to do? • Decide how to decompose the computation into parallel parts. • Create (and destroy) threads to support that decomposition. • Add synchronization to make sure dependences are covered.

General Thread Structure • Typically, a thread is a concurrent execution of a function or a procedure. • So, your program needs to be restructured such that parallel parts form separate procedures or functions.

Example of Thread Creation (contd.) (Figure: main() calls pthread_create(func); func() then runs as a new thread, concurrently with main().)

Thread Joining Example

```c
void *func(void *arg) { /* ... */ }

pthread_t id;
int X;

pthread_create(&id, NULL, func, &X);
/* ... */
pthread_join(id, NULL);
/* ... */
```
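A self-contained sketch of the same create/join pattern, written as a hypothetical helper `square_all` that starts one thread per array element and then joins them. Each thread receives a pointer to its own element (the role `&X` plays above), so no two threads write the same location; the worker `square_worker` and the cap `MAX_THREADS` are assumptions made for the sketch.

```c
#include <assert.h>
#include <pthread.h>

#define MAX_THREADS 64   /* arbitrary cap for this sketch */

/* Hypothetical thread body: squares the int that arg points to. */
static void *square_worker(void *arg)
{
    int *x = arg;                 /* the pointer passed as pthread_create's 4th argument */
    *x = *x * *x;
    return NULL;                  /* returning here has the same effect as pthread_exit(NULL) */
}

/* Creates one thread per element of vals, then joins them all.
   Returns 0 on success, or the error number from a failed pthread_create. */
int square_all(int *vals, int count)
{
    pthread_t ids[MAX_THREADS];
    assert(count >= 0 && count <= MAX_THREADS);

    for (int k = 0; k < count; k++) {
        int rc = pthread_create(&ids[k], NULL, square_worker, &vals[k]);
        if (rc != 0) {
            for (int m = 0; m < k; m++)   /* clean up the threads already started */
                pthread_join(ids[m], NULL);
            return rc;
        }
    }
    for (int k = 0; k < count; k++)
        pthread_join(ids[k], NULL);       /* block until thread k has terminated */
    return 0;
}
```

The joins are what make the threads' writes safe to read afterwards: pthread_join() returns only after the corresponding thread has terminated.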

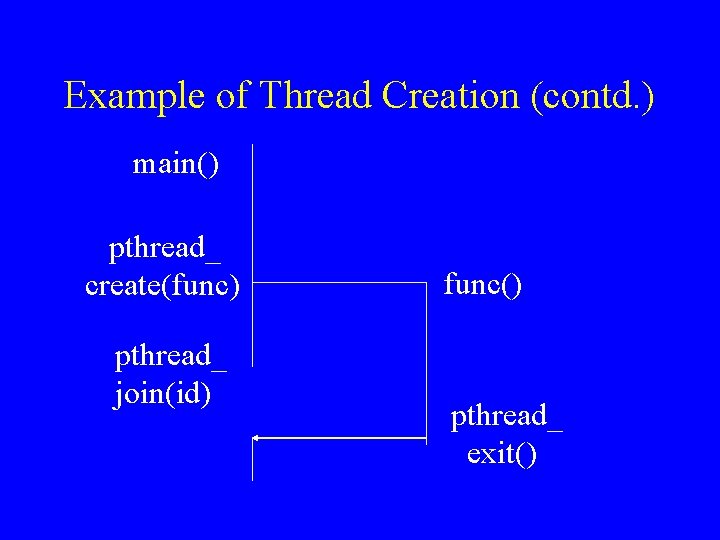

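Example of Thread Creation (contd.) (Figure: main() calls pthread_create(func) and later pthread_join(id); func() runs concurrently and ends with pthread_exit(), which lets the pthread_join() in main() return.)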

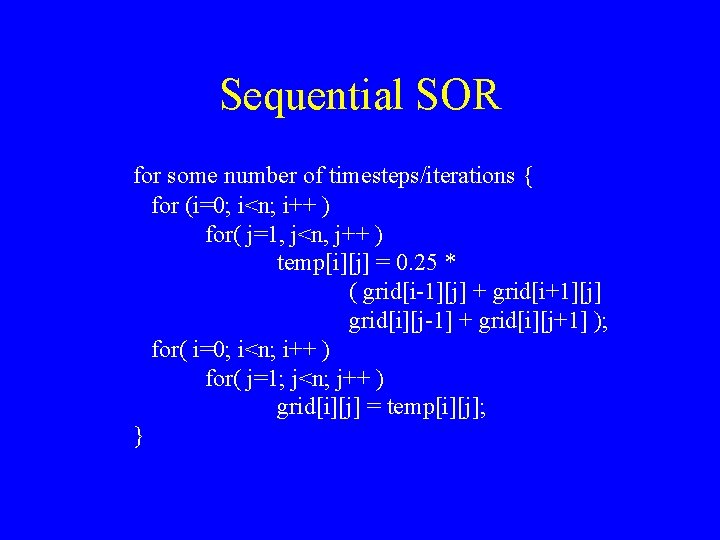

Sequential SOR

```
for some number of timesteps/iterations {
    /* rows/columns 0 and n hold fixed boundary values; update interior 1..n-1 */
    for (i = 1; i < n; i++)
        for (j = 1; j < n; j++)
            temp[i][j] = 0.25 * (grid[i-1][j] + grid[i+1][j] +
                                 grid[i][j-1] + grid[i][j+1]);
    for (i = 1; i < n; i++)
        for (j = 1; j < n; j++)
            grid[i][j] = temp[i][j];
}
```

Parallel SOR • First (i, j) loop nest can be parallelized. • Second (i, j) loop nest can be parallelized. • Must wait to start second loop nest until all processors have finished the first. • Must wait to start first loop nest of next iteration until all processors have finished the second loop nest of the previous iteration. • Give n/p rows to each processor.

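Pthreads SOR: Parallel parts (1)

```c
void *sor_1(void *s)
{
    int slice = (int)(intptr_t)s;          /* thread index passed by value */
    int from = (slice * (n-1)) / p + 1;    /* this thread's interior rows */
    int to = ((slice+1) * (n-1)) / p + 1;
    for (int i = from; i < to; i++)        /* i, j local: each thread needs its own */
        for (int j = 1; j < n; j++)
            temp[i][j] = 0.25 * (grid[i-1][j] + grid[i+1][j] +
                                 grid[i][j-1] + grid[i][j+1]);
    return NULL;
}
```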

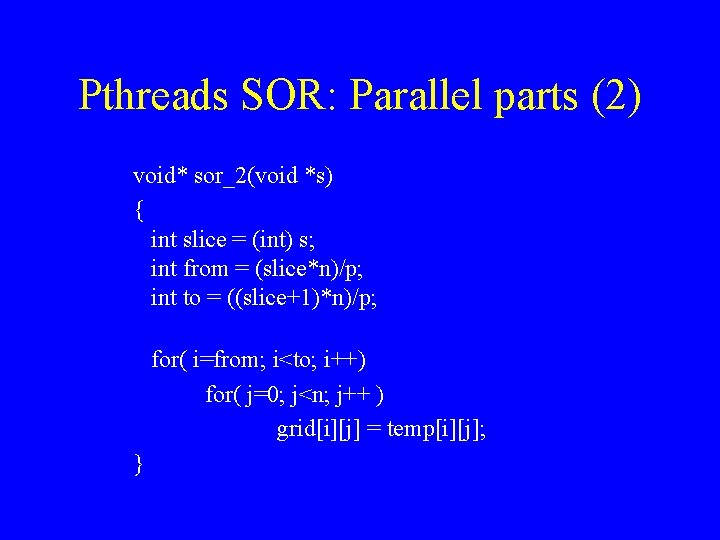

Pthreads SOR: Parallel parts (2)

```c
void *sor_2(void *s)
{
    int slice = (int)(intptr_t)s;
    int from = (slice * (n-1)) / p + 1;
    int to = ((slice+1) * (n-1)) / p + 1;
    for (int i = from; i < to; i++)
        for (int j = 1; j < n; j++)
            grid[i][j] = temp[i][j];
    return NULL;
}
```

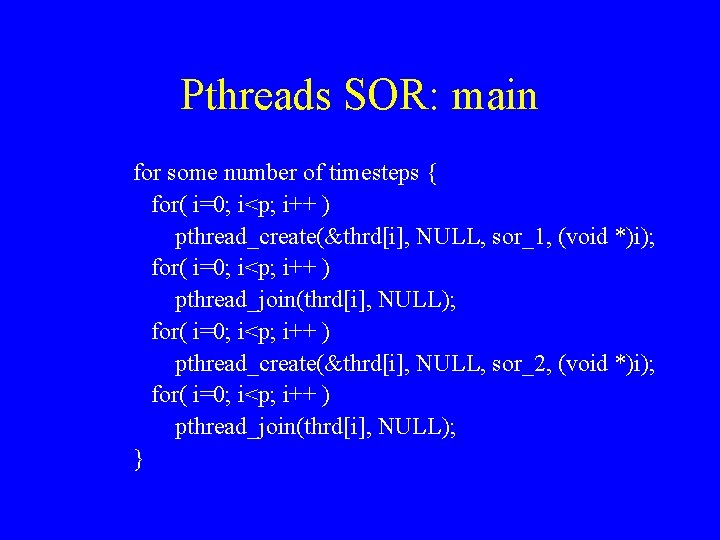

Pthreads SOR: main

```
for some number of timesteps {
    for (i = 0; i < p; i++)
        pthread_create(&thrd[i], NULL, sor_1, (void *)(intptr_t)i);
    for (i = 0; i < p; i++)
        pthread_join(thrd[i], NULL);
    for (i = 0; i < p; i++)
        pthread_create(&thrd[i], NULL, sor_2, (void *)(intptr_t)i);
    for (i = 0; i < p; i++)
        pthread_join(thrd[i], NULL);
}
```
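Putting sor_1, sor_2 and the main loop together gives a complete program sketch. It assumes a grid of (N+1) x (N+1) doubles whose rows and columns 0 and N are fixed boundary values, a hypothetical `init_grid` that holds the top edge at 1.0, a hypothetical helper `row_from` for the row split, and fixed sizes `N = 64`, `P = 4`; thread indices travel through `void *` via `intptr_t`.

```c
#include <pthread.h>
#include <stdint.h>

#define N 64   /* assumed: interior points 1..N-1, boundary rows/columns 0 and N */
#define P 4    /* assumed number of threads */

static double grid[N + 1][N + 1], temp[N + 1][N + 1];

/* Thread s handles interior rows [row_from(s), row_from(s + 1)). */
static int row_from(int slice) { return (slice * (N - 1)) / P + 1; }

static void *sor_1(void *s)            /* phase 1: new values into temp */
{
    int slice = (int)(intptr_t)s;
    for (int i = row_from(slice); i < row_from(slice + 1); i++)
        for (int j = 1; j < N; j++)
            temp[i][j] = 0.25 * (grid[i-1][j] + grid[i+1][j] +
                                 grid[i][j-1] + grid[i][j+1]);
    return NULL;
}

static void *sor_2(void *s)            /* phase 2: copy temp back into grid */
{
    int slice = (int)(intptr_t)s;
    for (int i = row_from(slice); i < row_from(slice + 1); i++)
        for (int j = 1; j < N; j++)
            grid[i][j] = temp[i][j];
    return NULL;
}

/* Hypothetical setup: top boundary row held at 1.0, everything else 0.0. */
void init_grid(void)
{
    for (int i = 0; i <= N; i++)
        for (int j = 0; j <= N; j++)
            grid[i][j] = (i == 0) ? 1.0 : 0.0;
}

/* Runs `steps` timesteps; each round of joins is the "wait for all processors" point. */
void sor_parallel(int steps)
{
    pthread_t thrd[P];
    for (int t = 0; t < steps; t++) {
        for (intptr_t i = 0; i < P; i++)
            pthread_create(&thrd[i], NULL, sor_1, (void *)i);
        for (int i = 0; i < P; i++)
            pthread_join(thrd[i], NULL);
        for (intptr_t i = 0; i < P; i++)
            pthread_create(&thrd[i], NULL, sor_2, (void *)i);
        for (int i = 0; i < P; i++)
            pthread_join(thrd[i], NULL);
    }
}
```

Creating and joining P threads twice per timestep is exactly the coarse-grain synchronization cost that the barrier-based version avoids.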

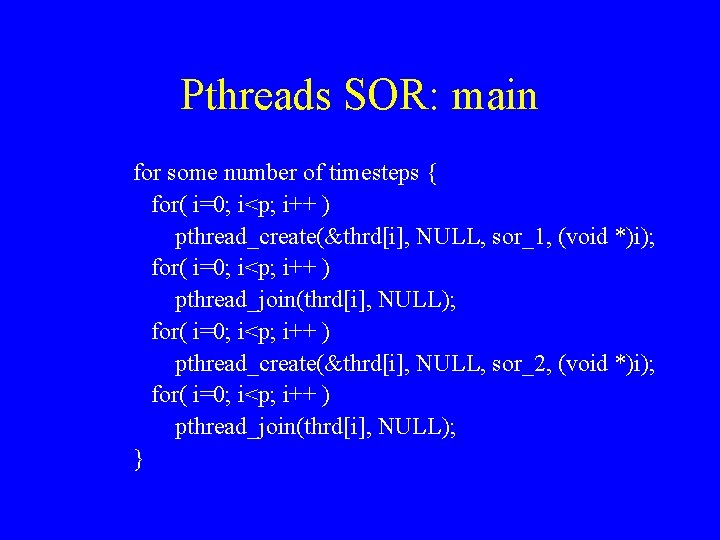

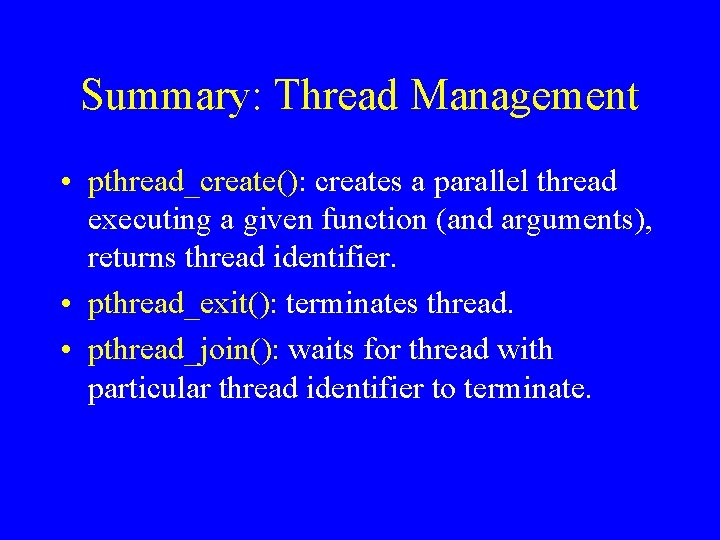

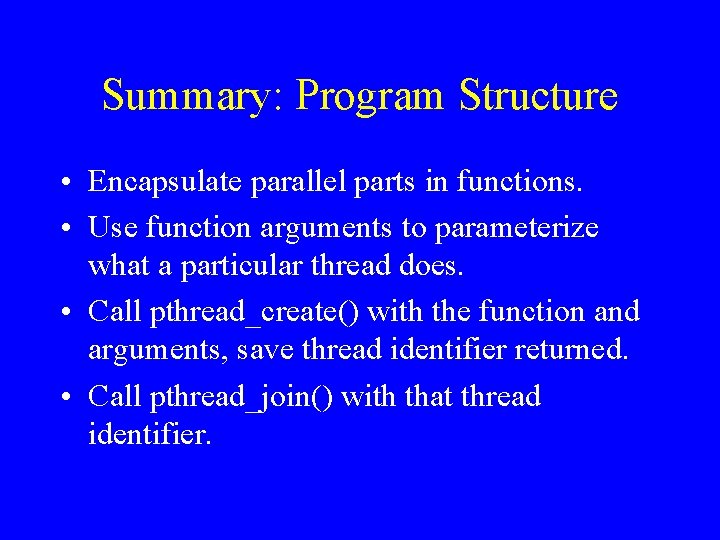

Summary: Thread Management • pthread_create(): creates a new thread that executes a given function on a given argument; stores the new thread's identifier through its first parameter and returns 0 on success (an error number otherwise). • pthread_exit(): terminates the calling thread. • pthread_join(): waits for the thread with a particular thread identifier to terminate.

Summary: Program Structure • Encapsulate parallel parts in functions. • Use function arguments to parameterize what a particular thread does. • Call pthread_create() with the function and argument, and save the thread identifier it stores. • Call pthread_join() with that thread identifier.

Pthreads Synchronization • Create/exit/join – provide some form of synchronization, – at a very coarse level, – requires thread creation/destruction. • Need for finer-grain synchronization – mutex locks, – condition variables.

Use of Mutex Locks • To implement critical sections. • A Pthreads mutex (pthread_mutex_t) is an exclusive lock. • Shared-read, exclusive-write locks are a separate Pthreads type, pthread_rwlock_t; some other systems provide them as well.
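A minimal critical-section sketch: several threads increment one shared counter, each `counter++` wrapped in lock/unlock. Without the mutex, the read-modify-write steps of different threads could interleave and lose updates. `counter`, `NTHREADS` and `INCREMENTS` are hypothetical names for the sketch.

```c
#include <pthread.h>

#define NTHREADS 4          /* assumed thread count */
#define INCREMENTS 100000   /* assumed work per thread */

static long counter;                                        /* shared data */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *add_many(void *unused)
{
    (void)unused;
    for (int k = 0; k < INCREMENTS; k++) {
        pthread_mutex_lock(&counter_lock);     /* enter critical section */
        counter++;                             /* read-modify-write, now exclusive */
        pthread_mutex_unlock(&counter_lock);   /* leave critical section */
    }
    return NULL;
}

long count_in_parallel(void)
{
    pthread_t t[NTHREADS];
    counter = 0;
    for (int i = 0; i < NTHREADS; i++)
        pthread_create(&t[i], NULL, add_many, NULL);
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(t[i], NULL);
    return counter;                            /* NTHREADS * INCREMENTS */
}
```

Taking the lock once per increment is deliberately fine-grained here; it maximizes contention, which is the trade-off discussed later under granularity and critical sections.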

Condition Variables (1 of 5) int pthread_cond_init(pthread_cond_t *cond, const pthread_condattr_t *attr) • Creates a new condition variable cond. • Attribute: ignore for now (NULL selects the defaults).

Condition Variables (2 of 5) pthread_cond_destroy( pthread_cond_t *cond) • Destroys the condition variable cond.

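Condition Variables (3 of 5) pthread_cond_wait(pthread_cond_t *cond, pthread_mutex_t *mutex) • Must be called with mutex locked. • Atomically unlocks the mutex and blocks the calling thread, waiting on cond. • Locks the mutex again before returning. • May also return without a signal (a spurious wakeup), so re-check the awaited condition in a loop.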

Condition Variables (4 of 5) pthread_cond_signal( pthread_cond_t *cond) • Unblocks one thread waiting on cond. • Which one is determined by scheduler. • If no thread waiting, then signal is a no-op.

Condition Variables (5 of 5) pthread_cond_broadcast(pthread_cond_t *cond) • Unblocks all threads waiting on cond. • If no thread is waiting, then broadcast is a no-op.
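The calls above, used together in a hypothetical single-slot mailbox: `mailbox_put` waits while the slot is full, `mailbox_get` waits while it is empty. The mutex-protected `full` flag records the state, so each wait sits in a `while` loop that re-checks it after every wakeup. All names here are illustrative, not part of Pthreads.

```c
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical single-slot mailbox guarded by one mutex and one condition variable. */
struct mailbox {
    pthread_mutex_t lock;
    pthread_cond_t  changed;   /* signalled whenever `full` flips */
    bool            full;
    int             value;
};

void mailbox_init(struct mailbox *m)
{
    pthread_mutex_init(&m->lock, NULL);
    pthread_cond_init(&m->changed, NULL);    /* NULL: default attributes */
    m->full = false;
}

void mailbox_destroy(struct mailbox *m)
{
    pthread_cond_destroy(&m->changed);
    pthread_mutex_destroy(&m->lock);
}

void mailbox_put(struct mailbox *m, int v)
{
    pthread_mutex_lock(&m->lock);
    while (m->full)                          /* re-check after every wakeup */
        pthread_cond_wait(&m->changed, &m->lock);
    m->value = v;
    m->full = true;
    pthread_cond_broadcast(&m->changed);     /* wake a waiting get() */
    pthread_mutex_unlock(&m->lock);
}

int mailbox_get(struct mailbox *m)
{
    pthread_mutex_lock(&m->lock);
    while (!m->full)
        pthread_cond_wait(&m->changed, &m->lock);
    int v = m->value;
    m->full = false;
    pthread_cond_broadcast(&m->changed);     /* wake a waiting put() */
    pthread_mutex_unlock(&m->lock);
    return v;
}

static void *producer(void *arg)
{
    struct mailbox *m = arg;
    for (int k = 1; k <= 100; k++)
        mailbox_put(m, k);
    return NULL;
}

/* Usage: one producer thread sends 1..100; the caller receives and sums them. */
long mailbox_demo(void)
{
    struct mailbox m;
    pthread_t t;
    long sum = 0;
    mailbox_init(&m);
    pthread_create(&t, NULL, producer, &m);
    for (int k = 0; k < 100; k++)
        sum += mailbox_get(&m);
    pthread_join(t, NULL);
    mailbox_destroy(&m);
    return sum;
}
```

One condition variable serves both “became full” and “became empty” here, which is why the sketch broadcasts: with several producers and consumers, a plain signal could wake a thread waiting for the other condition. With exactly one producer and one consumer, as in `mailbox_demo`, pthread_cond_signal would also work.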

Use of Condition Variables • To implement signal-wait synchronization discussed in earlier examples. • Important note: a signal is “forgotten” if there is no corresponding wait that has already happened.

Barrier Synchronization • A wait at a barrier causes a thread to wait until all threads have performed a wait at the barrier. • At that point, they all proceed.

Implementing Barriers in Pthreads • Count the number of arrivals at the barrier. • Wait if this is not the last arrival. • Make everyone unblock if this is the last arrival. • Since the arrival count is a shared variable, enclose the whole operation in a mutex lock/unlock pair.

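Implementing Barriers in Pthreads

```c
void barrier(void)
{
    pthread_mutex_lock(&mutex_arr);
    int my_round = round_no;          /* round_no: added shared counter, initially 0 */
    arrived++;
    if (arrived < N) {
        while (my_round == round_no)  /* re-wait on spurious wakeups */
            pthread_cond_wait(&cond, &mutex_arr);
    } else {
        arrived = 0;                  /* be prepared for next barrier */
        round_no++;                   /* lets this round's waiters leave */
        pthread_cond_broadcast(&cond);
    }
    pthread_mutex_unlock(&mutex_arr);
}
```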
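A self-contained, reusable variant of the barrier, packaged as a hypothetical `my_barrier_t` with a generation counter: a thread leaves only after the round it arrived in has completed, even if pthread_cond_wait wakes it spuriously. The demo (`worker`, `barrier_demo`, `round_sum`) is also illustrative; it checks over 50 rounds that no thread reads a round's total before every thread has contributed to it.

```c
#include <pthread.h>
#include <stdint.h>

/* Hypothetical reusable barrier type (a sketch, not part of Pthreads). */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  cond;
    int             nthreads;     /* participants per round */
    int             arrived;      /* arrivals so far in this round */
    unsigned long   generation;   /* bumped each time the barrier opens */
} my_barrier_t;

void my_barrier_init(my_barrier_t *b, int nthreads)
{
    pthread_mutex_init(&b->lock, NULL);
    pthread_cond_init(&b->cond, NULL);
    b->nthreads = nthreads;
    b->arrived = 0;
    b->generation = 0;
}

void my_barrier_destroy(my_barrier_t *b)
{
    pthread_cond_destroy(&b->cond);
    pthread_mutex_destroy(&b->lock);
}

void my_barrier_wait(my_barrier_t *b)
{
    pthread_mutex_lock(&b->lock);
    unsigned long my_gen = b->generation;
    if (++b->arrived < b->nthreads) {
        while (my_gen == b->generation)      /* a spurious wakeup just waits again */
            pthread_cond_wait(&b->cond, &b->lock);
    } else {
        b->arrived = 0;                      /* ready for the next round */
        b->generation++;                     /* opens the barrier for this round */
        pthread_cond_broadcast(&b->cond);
    }
    pthread_mutex_unlock(&b->lock);
}

#define NT 4        /* assumed number of threads */
#define ROUNDS 50   /* assumed number of barrier rounds */

static my_barrier_t bar;
static pthread_mutex_t sum_lock = PTHREAD_MUTEX_INITIALIZER;
static int round_sum[ROUNDS];   /* contributions per round */
static int errors;              /* times a thread saw an incomplete round */

static void *worker(void *arg)
{
    int id = (int)(intptr_t)arg;
    for (int r = 0; r < ROUNDS; r++) {
        pthread_mutex_lock(&sum_lock);
        round_sum[r] += id + 1;              /* this thread's contribution to round r */
        pthread_mutex_unlock(&sum_lock);

        my_barrier_wait(&bar);               /* nobody reads round r until all have written */

        pthread_mutex_lock(&sum_lock);
        if (round_sum[r] != NT * (NT + 1) / 2)
            errors++;
        pthread_mutex_unlock(&sum_lock);
    }
    return NULL;
}

int barrier_demo(void)   /* returns the number of violations observed */
{
    pthread_t t[NT];
    my_barrier_init(&bar, NT);
    for (intptr_t i = 0; i < NT; i++)
        pthread_create(&t[i], NULL, worker, (void *)i);
    for (int i = 0; i < NT; i++)
        pthread_join(t[i], NULL);
    my_barrier_destroy(&bar);
    return errors;
}
```

Checking the generation rather than the arrival count matters for reuse: a fast thread may already have arrived at the next round, incrementing `arrived` again, before the slower threads of the previous round have woken up.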

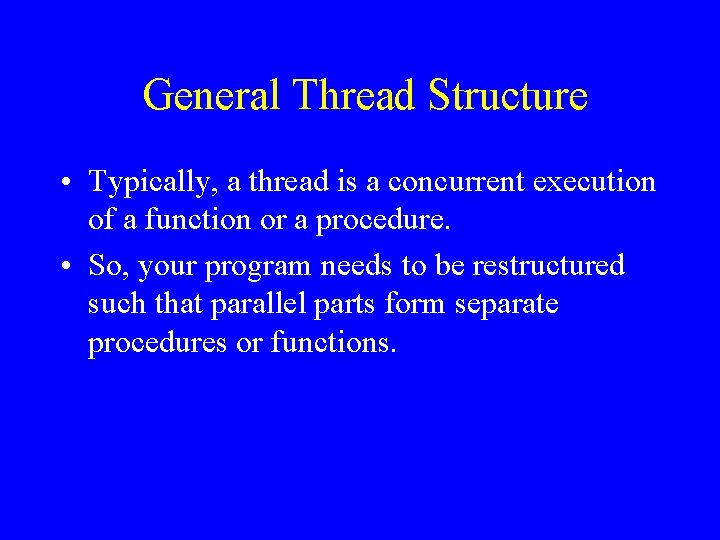

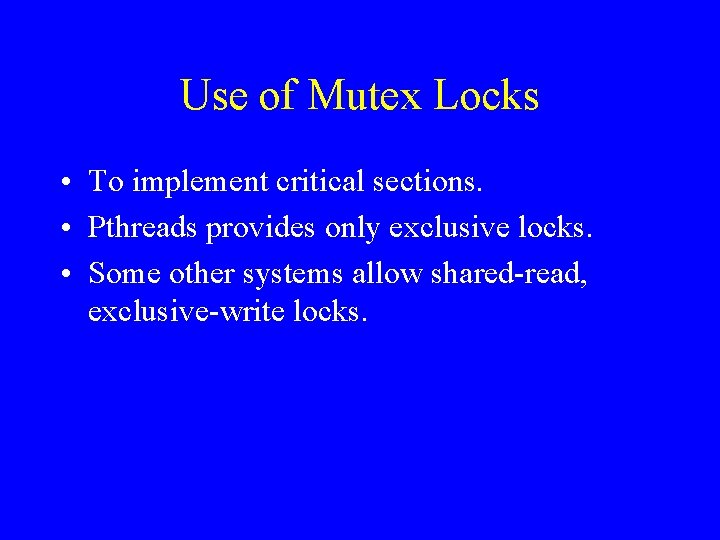

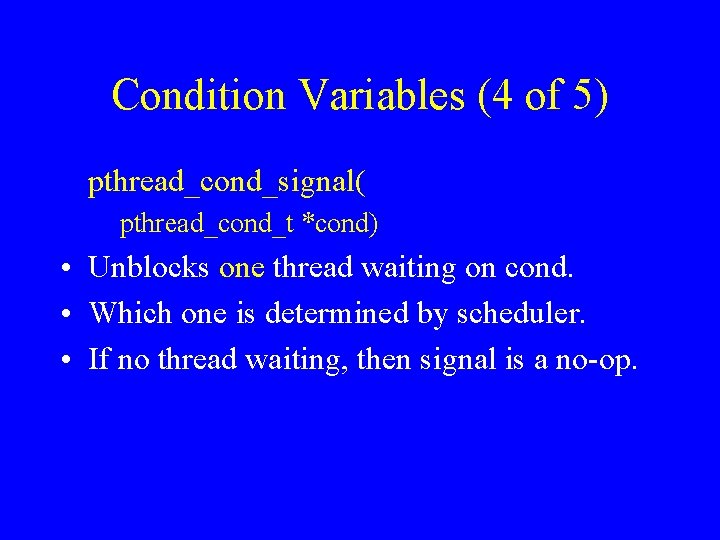

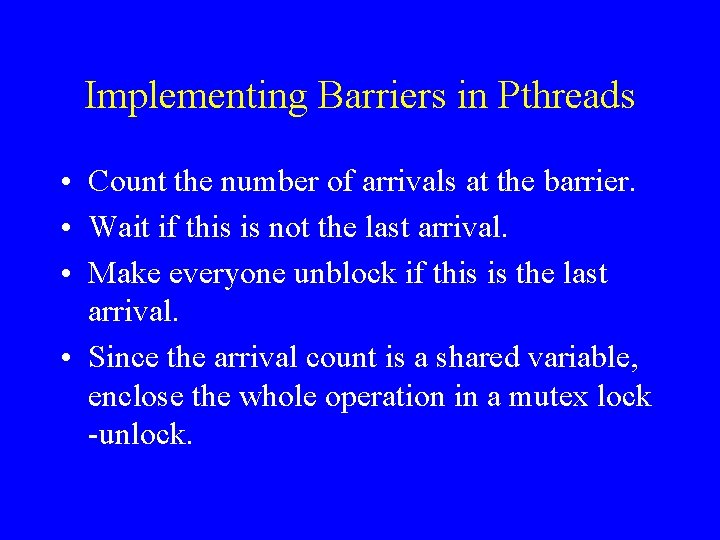

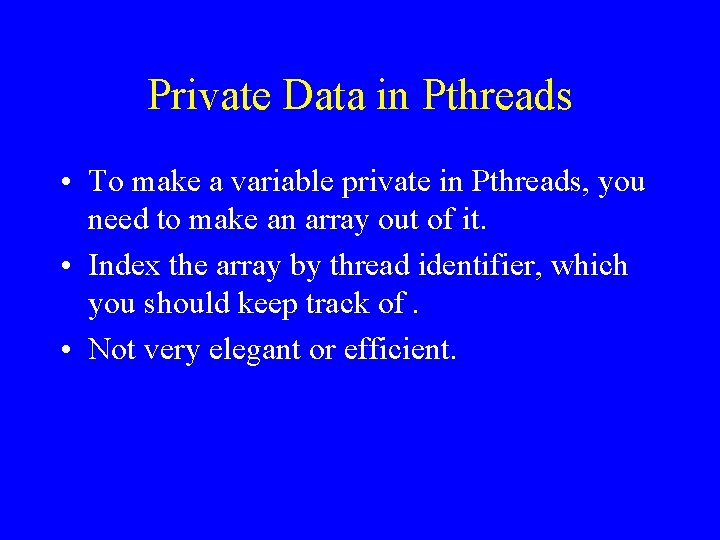

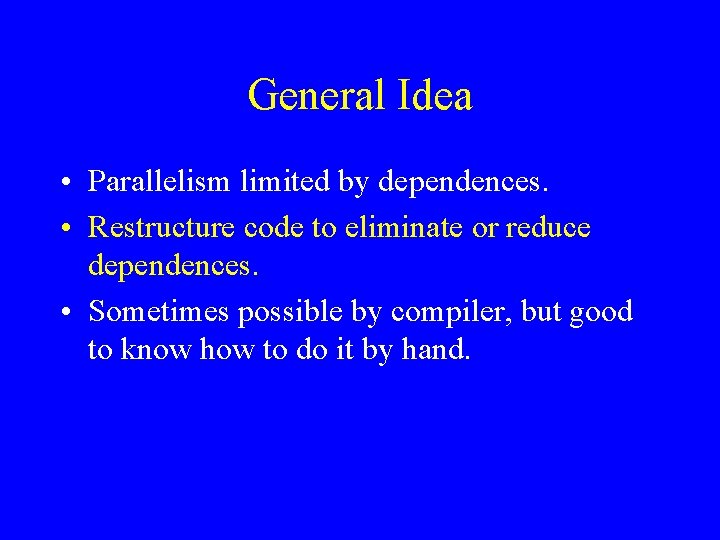

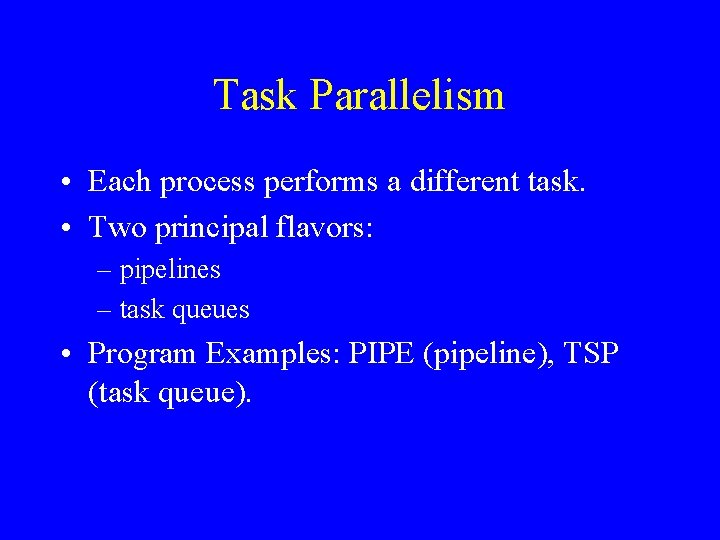

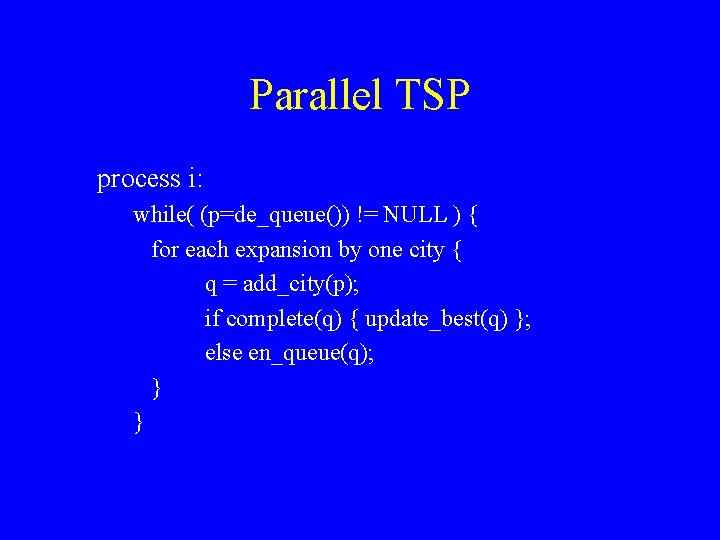

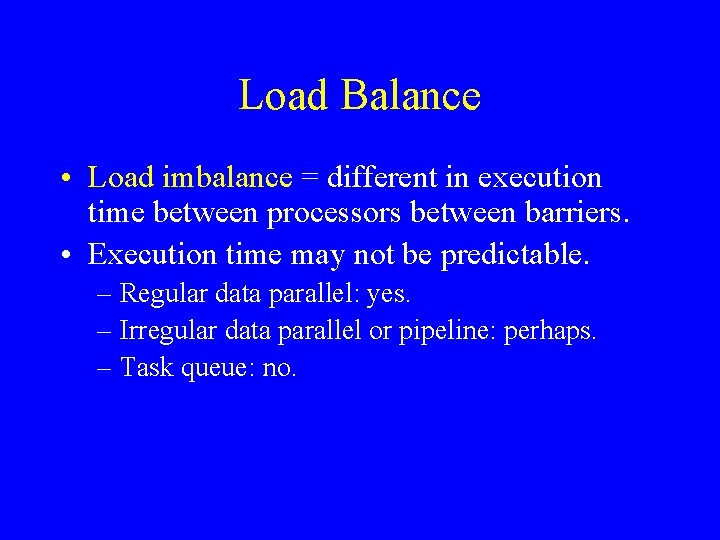

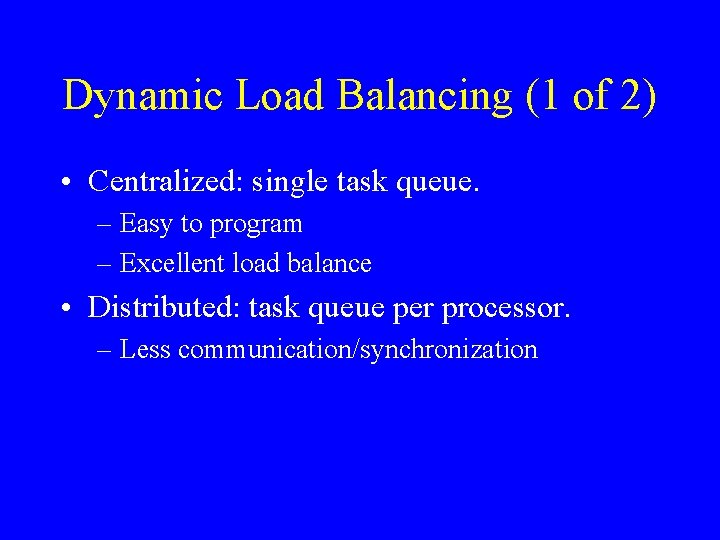

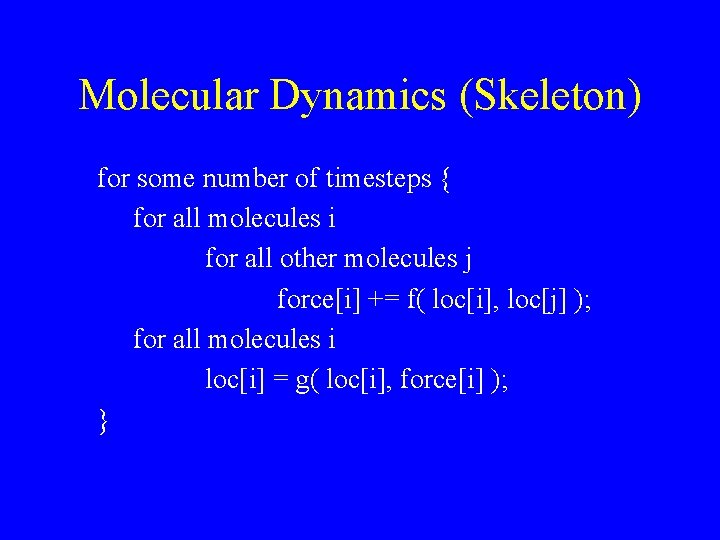

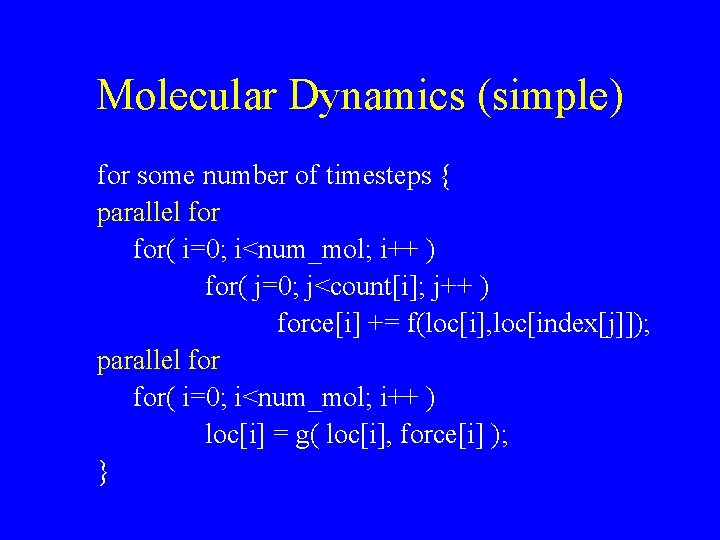

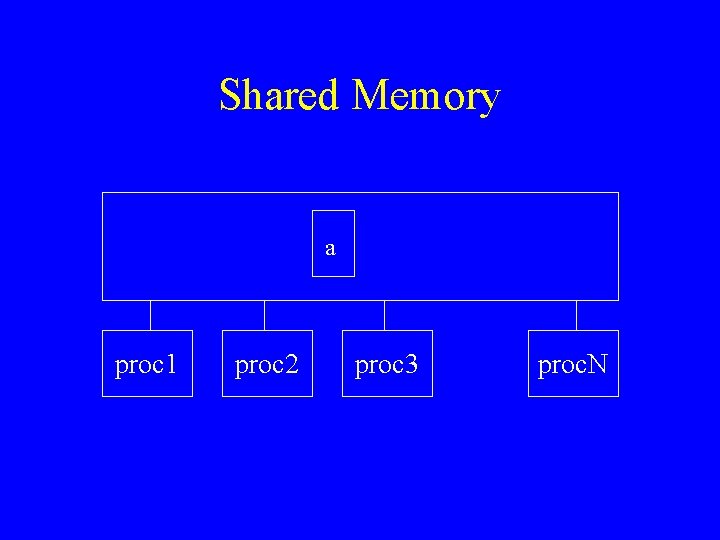

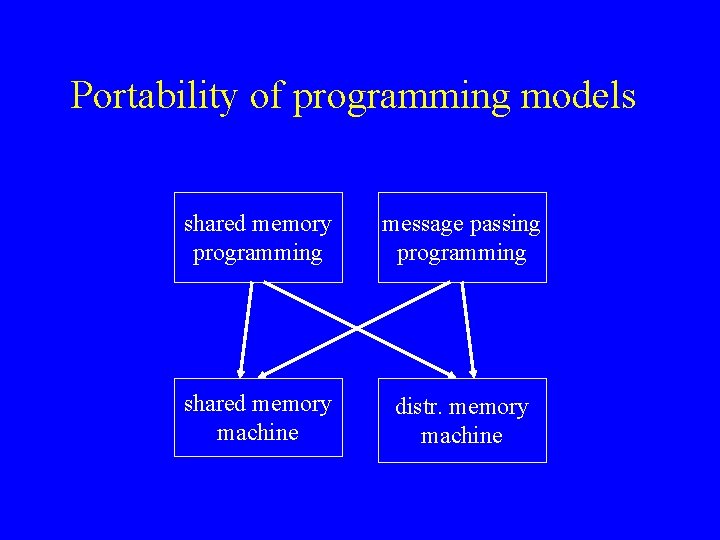

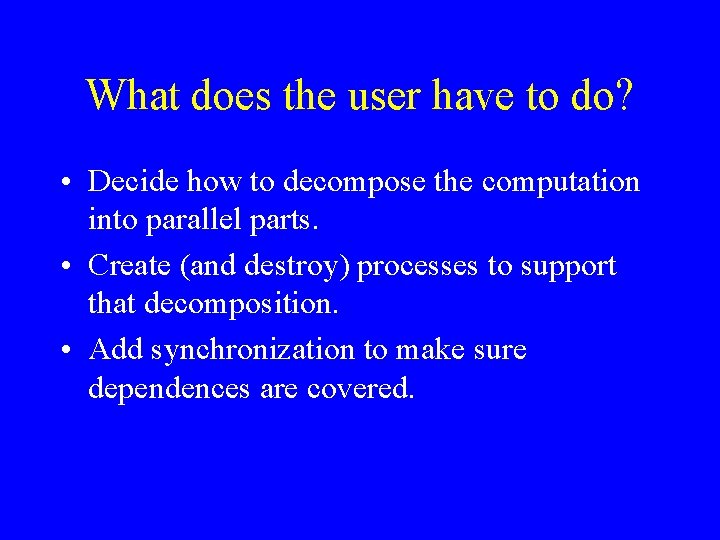

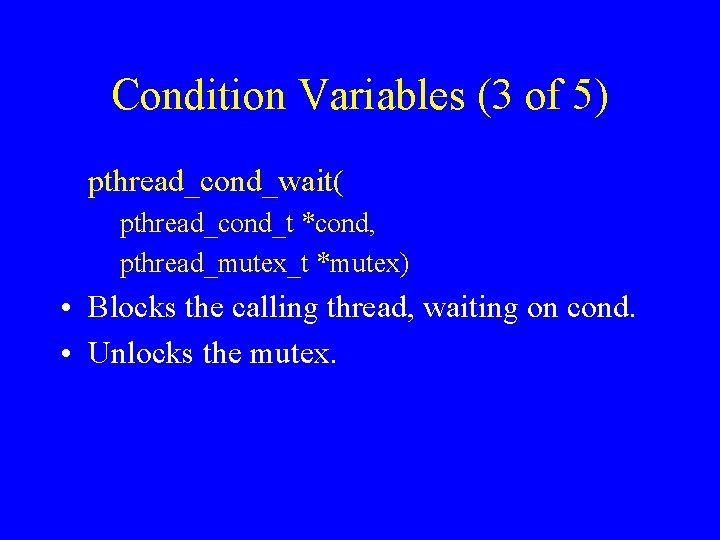

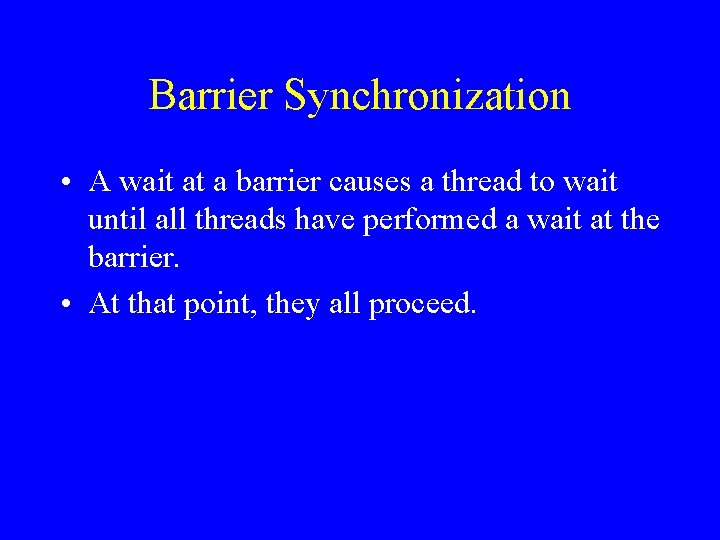

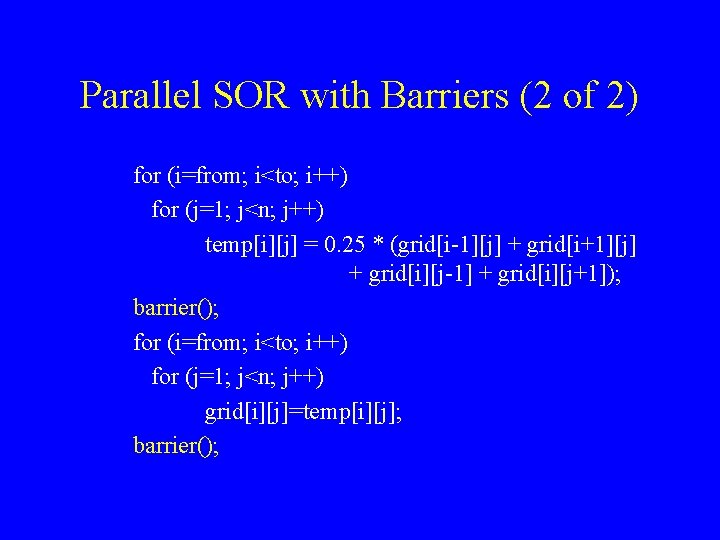

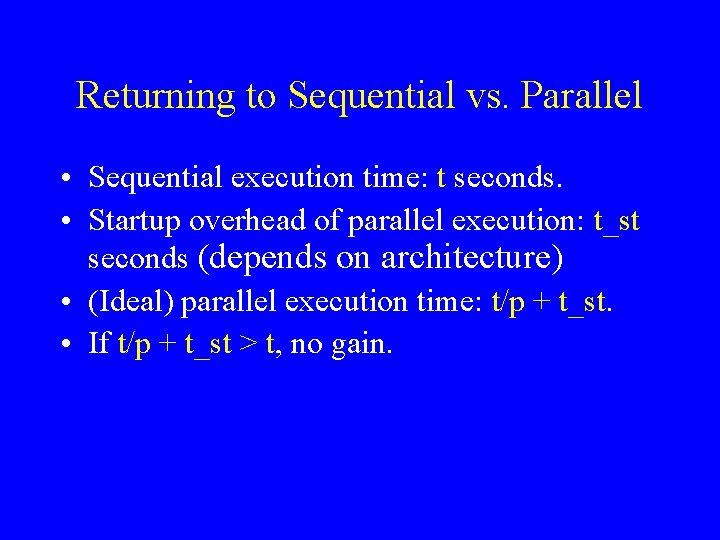

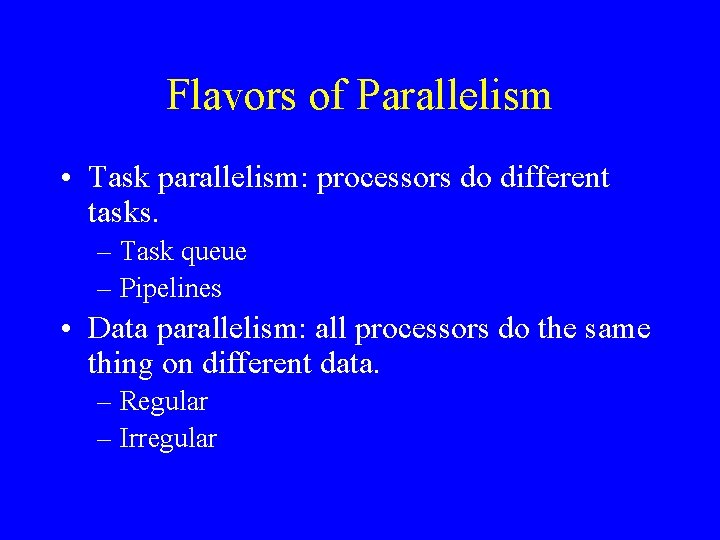

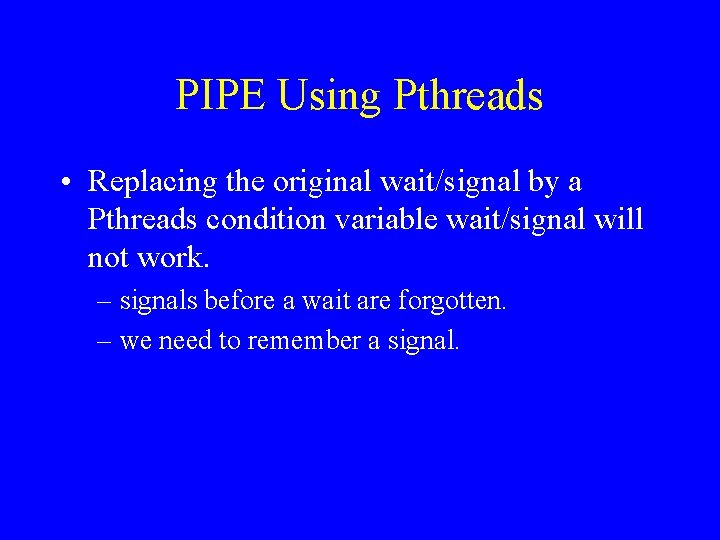

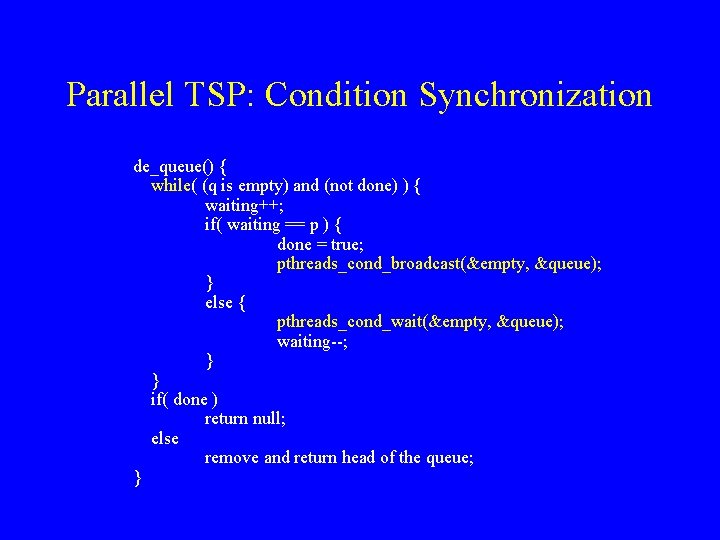

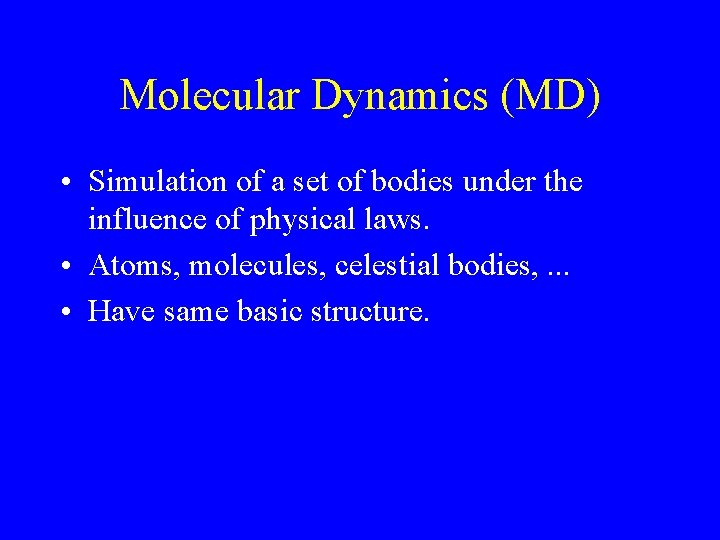

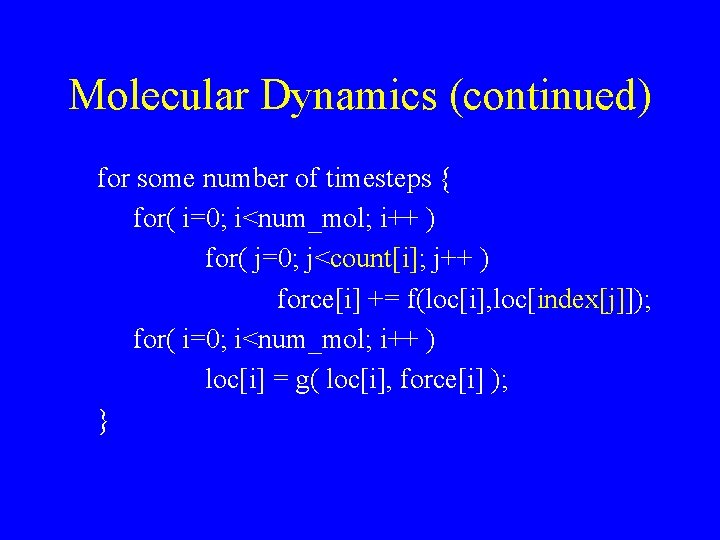

Parallel SOR with Barriers (1 of 2)

```
void *sor(void *arg)
{
    int slice = (int)(intptr_t)arg;
    int from = (slice * (n-1)) / p + 1;
    int to = ((slice+1) * (n-1)) / p + 1;
    for some number of iterations {
        …
    }
    return NULL;
}
```

Parallel SOR with Barriers (2 of 2)

```c
/* i, j: local variables of sor(), so each thread has its own */
for (i = from; i < to; i++)
    for (j = 1; j < n; j++)
        temp[i][j] = 0.25 * (grid[i-1][j] + grid[i+1][j] +
                             grid[i][j-1] + grid[i][j+1]);
barrier();
for (i = from; i < to; i++)
    for (j = 1; j < n; j++)
        grid[i][j] = temp[i][j];
barrier();
```


Parallel SOR with Barriers: main

```c
int main(int argc, char *argv[])
{
    pthread_t thrd[p];
    int i;
    /* Initialize mutex and condition variables */
    for (i = 0; i < p; i++)
        pthread_create(&thrd[i], &attr, sor, (void *)(intptr_t)i);
    for (i = 0; i < p; i++)
        pthread_join(thrd[i], NULL);
    /* Destroy mutex and condition variables */
    return 0;
}
```

Note again • Many shared memory programming systems (other than Pthreads) have barriers as basic primitive. • If they do, you should use it, not construct it yourself. • Implementation may be more efficient than what you can do yourself.
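Pthreads does define a barrier type, pthread_barrier_t (pthread_barrier_init, pthread_barrier_wait, pthread_barrier_destroy), but barriers are an optional part of POSIX, so availability is an assumption here (macOS, for example, does not provide them). In this sketch four threads each write one slot, meet at the barrier, and then read every slot; `phase_worker` and `builtin_barrier_demo` are hypothetical names. pthread_barrier_wait returns PTHREAD_BARRIER_SERIAL_THREAD to exactly one of the threads and 0 to the others.

```c
#define _POSIX_C_SOURCE 200809L   /* expose pthread_barrier_t under strict ISO modes */
#include <pthread.h>
#include <stdint.h>

#define NT 4   /* assumed number of threads */

static pthread_barrier_t phase_barrier;
static int partial[NT];      /* phase 1 output: one slot per thread */
static int total[NT];        /* phase 2 output: each thread's view of the sum */
static int serial_count;     /* threads that received PTHREAD_BARRIER_SERIAL_THREAD */

static void *phase_worker(void *arg)
{
    int id = (int)(intptr_t)arg;
    partial[id] = (id + 1) * 10;                    /* phase 1: write only my own slot */

    int rc = pthread_barrier_wait(&phase_barrier);  /* all slots are written past this point */
    if (rc == PTHREAD_BARRIER_SERIAL_THREAD)
        serial_count++;                             /* returned to exactly one thread */

    int s = 0;
    for (int k = 0; k < NT; k++)                    /* phase 2: read everyone's slot */
        s += partial[k];
    total[id] = s;
    return NULL;
}

int builtin_barrier_demo(void)   /* returns how many threads saw SERIAL_THREAD */
{
    pthread_t t[NT];
    serial_count = 0;
    pthread_barrier_init(&phase_barrier, NULL, NT);
    for (intptr_t i = 0; i < NT; i++)
        pthread_create(&t[i], NULL, phase_worker, (void *)i);
    for (int i = 0; i < NT; i++)
        pthread_join(t[i], NULL);
    pthread_barrier_destroy(&phase_barrier);
    return serial_count;
}
```

The serial return value is a convenient hook for one-thread-only work between phases, such as resetting a shared accumulator.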

Busy Waiting • Not an explicit part of the API. • Available in a general shared memory programming environment.

Busy Waiting

```
initially: flag = 0;

P1: produce data;
    flag = 1;

P2: while (!flag)
        ;
    consume data;
```
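In C11 terms, the flag loop above is a data race when flag is an ordinary int: the behavior is undefined, and in practice a compiler may hoist the load out of the loop so that P2 never sees the update. A sketch of the same handoff using <stdatomic.h>, with a hypothetical `data` value of 42 and the caller playing P2: the release store publishes `data`, and the acquire load that observes it guarantees P2 reads the produced value.

```c
#include <pthread.h>
#include <stdatomic.h>

static int data;               /* ordinary shared data */
static atomic_int flag;        /* zero-initialized: "initially: flag = 0" */

static void *producer(void *unused)   /* P1 */
{
    (void)unused;
    data = 42;                                              /* produce data */
    atomic_store_explicit(&flag, 1, memory_order_release);  /* flag = 1; publishes data */
    return NULL;
}

int busy_wait_demo(void)              /* the caller plays P2 */
{
    pthread_t t;
    atomic_store(&flag, 0);
    pthread_create(&t, NULL, producer, NULL);
    while (!atomic_load_explicit(&flag, memory_order_acquire))
        ;                                                   /* spin, occupying a processor */
    int consumed = data;                                    /* consume data */
    pthread_join(t, NULL);
    return consumed;
}
```

Even when written correctly, the spin loop burns a processor for as long as P1 takes, which is the inefficiency noted on the next slide.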

Use of Busy Waiting • On the surface, simple and efficient. • In general, not a recommended practice. • Often leads to messy and unreadable code (blurs data/synchronization distinction). • May be inefficient: a spinning thread occupies a processor without doing useful work.

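Private Data in Pthreads • Local variables of the thread function are already private to each thread. • To make a global variable private in Pthreads, you need to make an array out of it. • Index the array by thread identifier, which you should keep track of. • Not very elegant or efficient. • (Pthreads also offers thread-specific data through pthread_key_create(), pthread_setspecific() and pthread_getspecific().)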
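A sketch of the array-of-privates idiom for a parallel sum, assuming hypothetical sizes `NT` and `LEN`. Each thread accumulates into an automatic variable (already private) and writes its slot of `partial_sum` once at the end; updating `partial_sum[id]` inside the loop would also be correct, but neighboring slots usually share a cache line, one reason the idiom is not very efficient.

```c
#include <pthread.h>
#include <stdint.h>

#define NT 4       /* assumed number of threads */
#define LEN 1000   /* assumed problem size */

static int values[LEN];
static long partial_sum[NT];   /* one "private" copy per thread, indexed by thread id */

static void *sum_slice(void *arg)
{
    int id = (int)(intptr_t)arg;             /* thread index we keep track of ourselves */
    int from = (id * LEN) / NT, to = ((id + 1) * LEN) / NT;
    long local = 0;                          /* automatic variable: already private */
    for (int k = from; k < to; k++)
        local += values[k];
    partial_sum[id] = local;                 /* only thread id writes this slot */
    return NULL;
}

long private_array_demo(void)
{
    pthread_t t[NT];
    for (int k = 0; k < LEN; k++)
        values[k] = k;
    for (intptr_t i = 0; i < NT; i++)
        pthread_create(&t[i], NULL, sum_slice, (void *)i);
    for (int i = 0; i < NT; i++)
        pthread_join(t[i], NULL);
    long total = 0;
    for (int i = 0; i < NT; i++)             /* combine the private copies */
        total += partial_sum[i];
    return total;
}
```

C11's `_Thread_local` storage class is another way to give each thread its own copy of a file-scope variable, at the cost of having to collect the copies some other way.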

Other Primitives in Pthreads • Set the attributes of a thread. • Set the attributes of a mutex lock. • Set scheduling parameters.

ECE 1747 Parallel Programming Machine-independent Performance Optimization Techniques

Returning to Sequential vs. Parallel • Sequential execution time: t seconds. • Startup overhead of parallel execution: t_st seconds (depends on architecture) • (Ideal) parallel execution time: t/p + t_st. • If t/p + t_st > t, no gain.
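A worked instance with hypothetical numbers t = 10 s, p = 4 and t_st = 0.5 s, followed by the break-even condition obtained by rearranging the inequality on the slide:

```latex
\begin{aligned}
T_{\text{par}} &= \frac{t}{p} + t_{st} = \frac{10}{4} + 0.5 = 3.0\ \text{s},
\qquad \text{speedup} = \frac{t}{T_{\text{par}}} = \frac{10}{3.0} \approx 3.33,\\[4pt]
\frac{t}{p} + t_{st} > t &\iff t_{st} > t\Bigl(1 - \frac{1}{p}\Bigr) = 10 \cdot \frac{3}{4} = 7.5\ \text{s}.
\end{aligned}
```

So with four processors, a 10 s computation gains nothing once the startup overhead exceeds 7.5 s.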

General Idea • Parallelism limited by dependences. • Restructure code to eliminate or reduce dependences. • Sometimes possible by compiler, but good to know how to do it by hand.


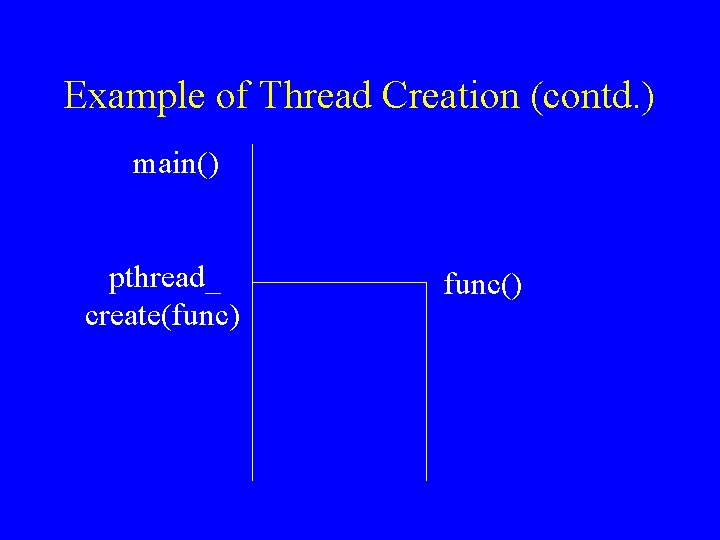

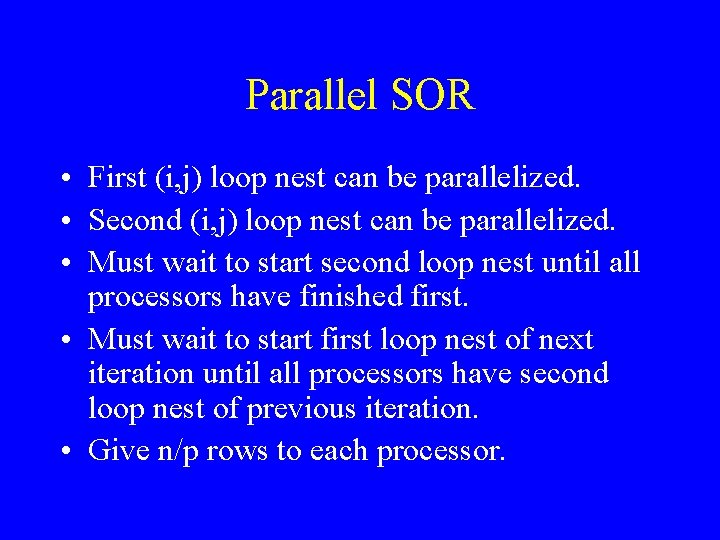

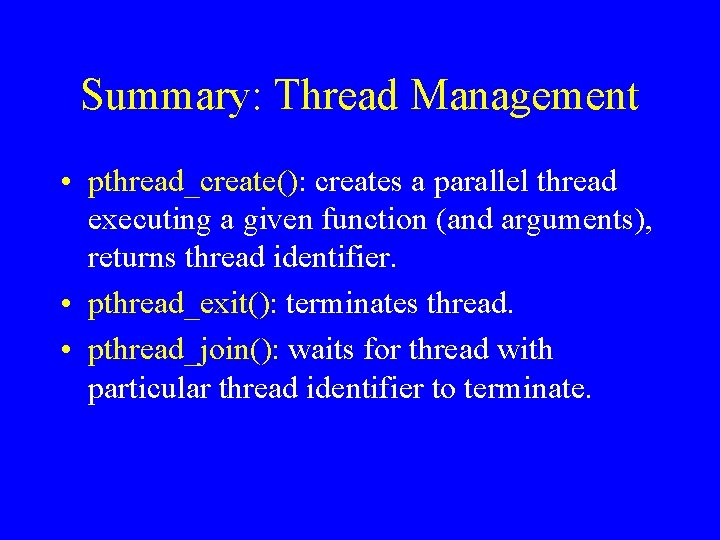

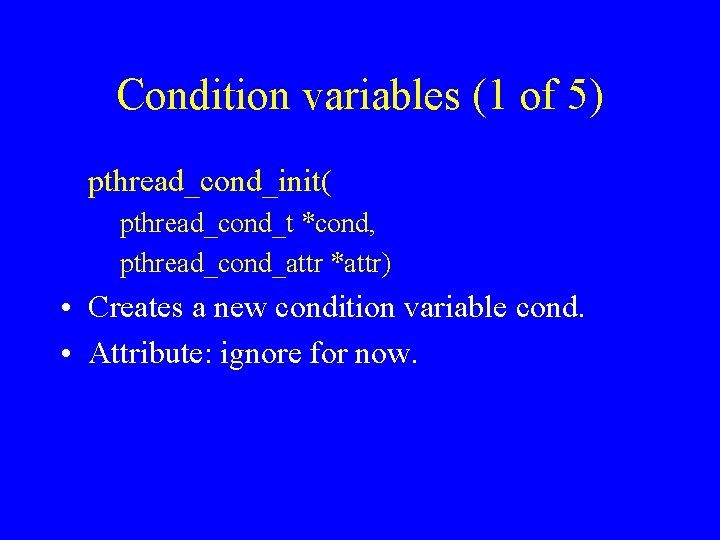

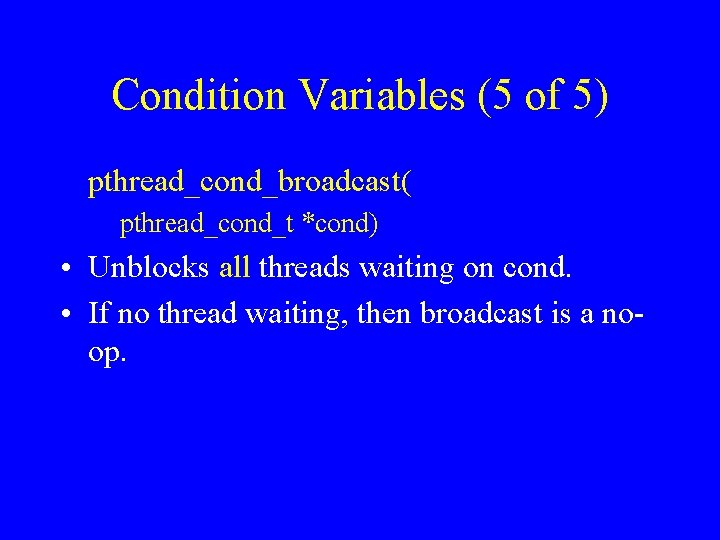

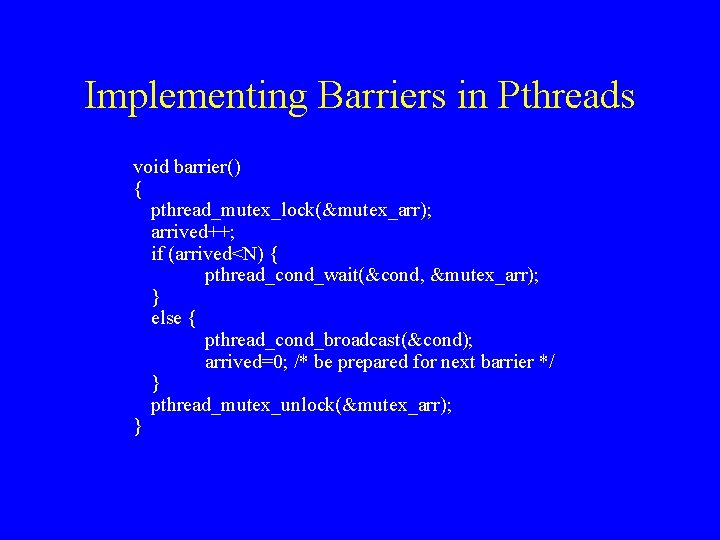

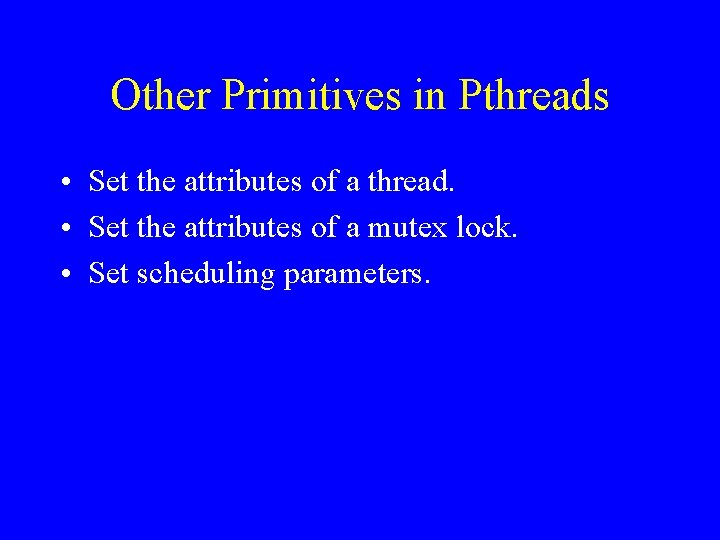

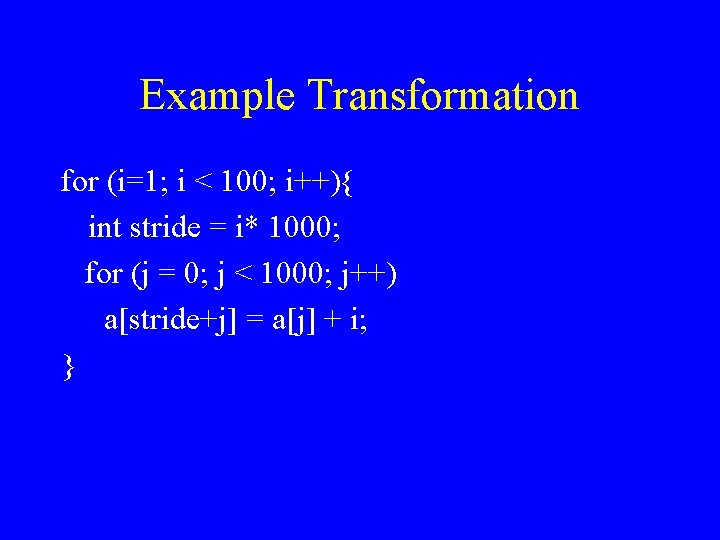

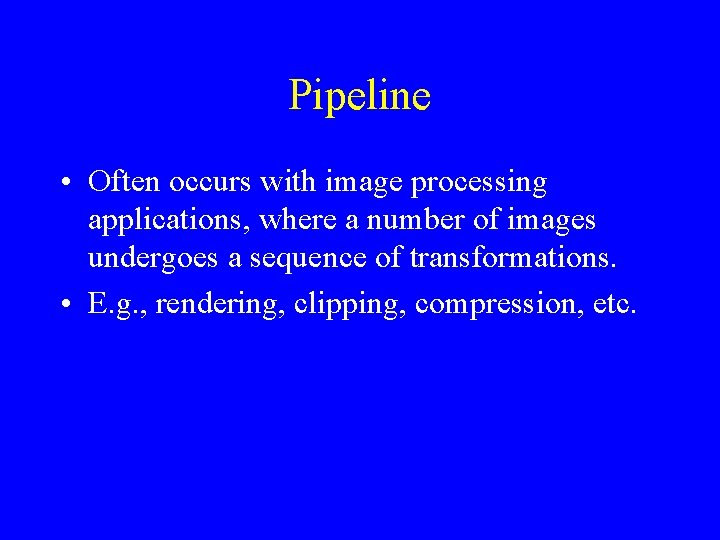

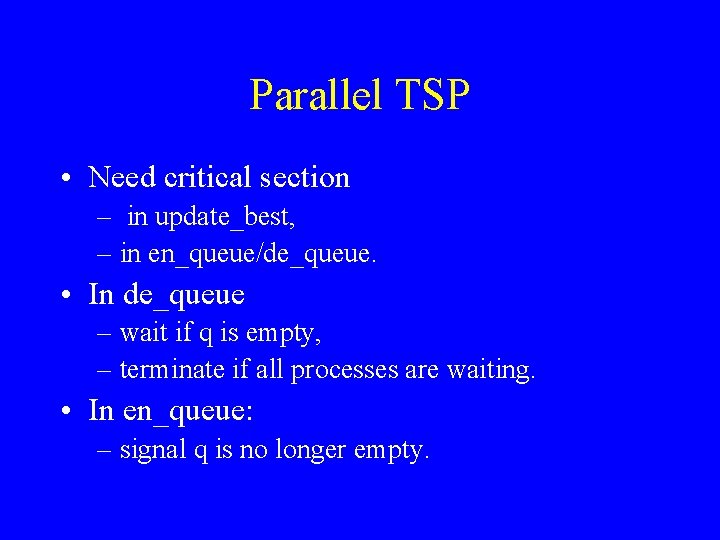

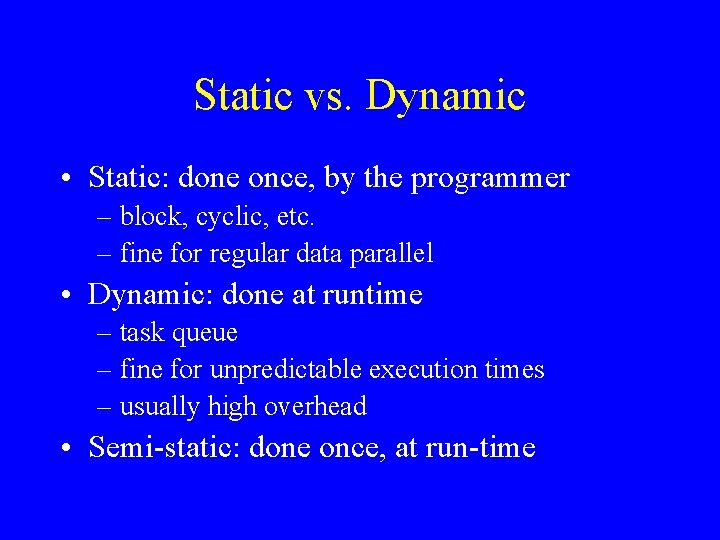

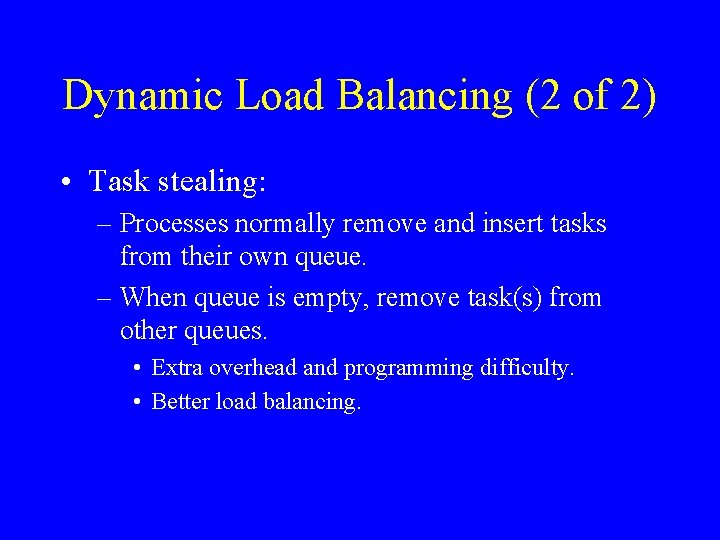

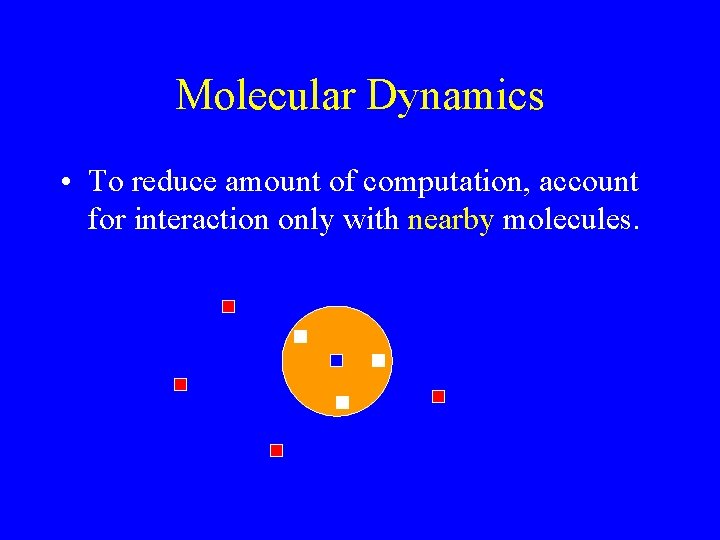

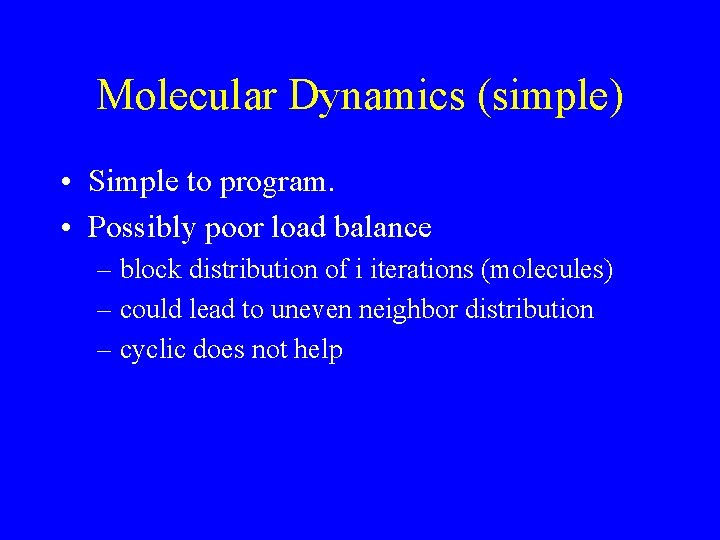

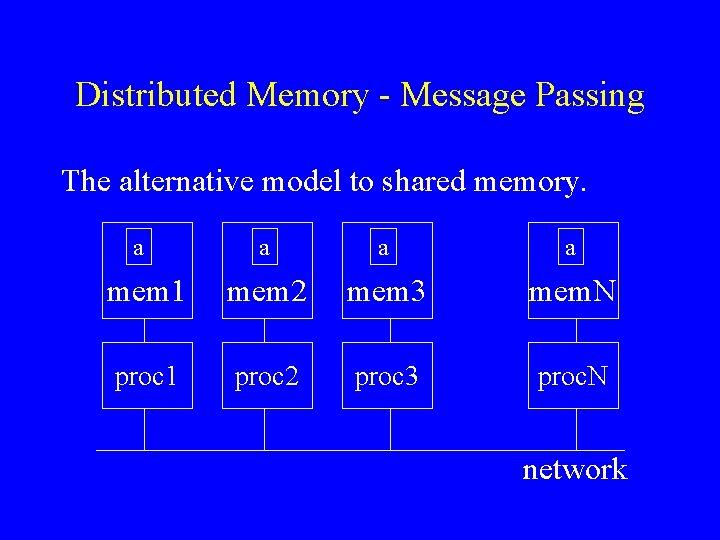

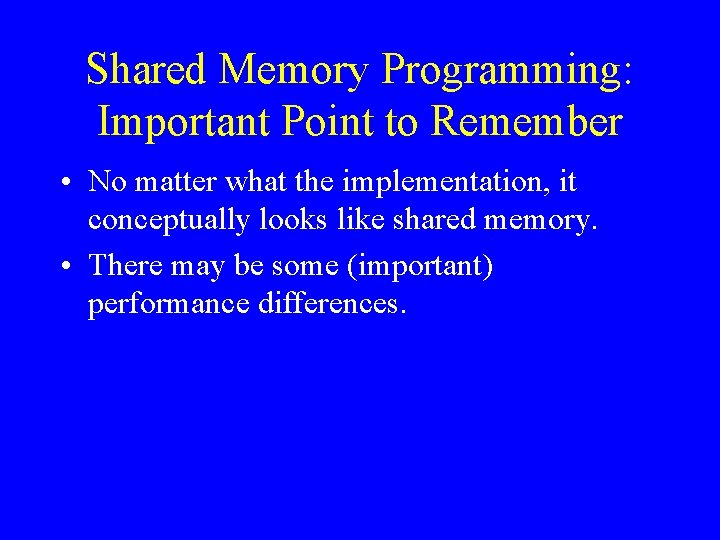

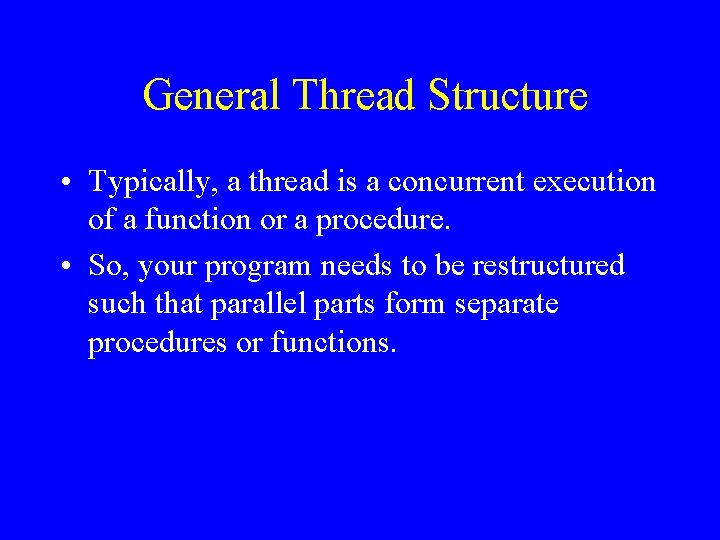

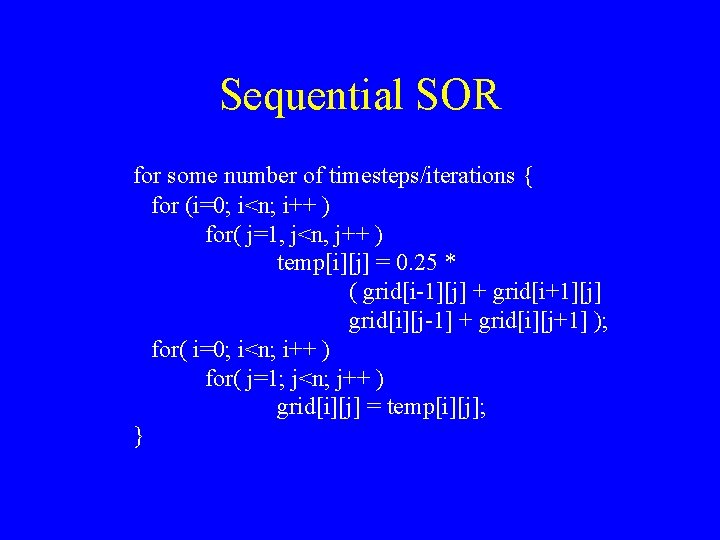

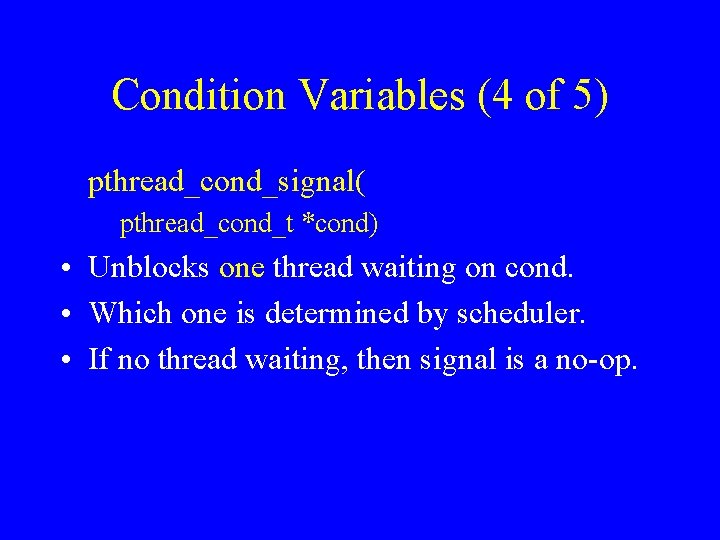

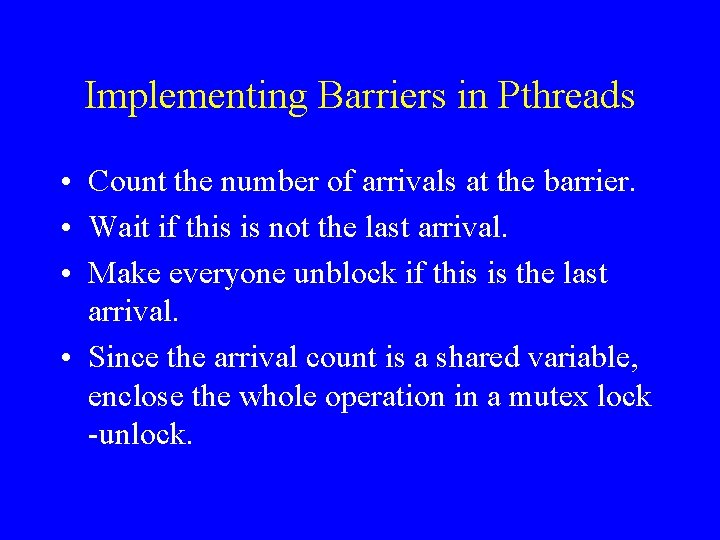

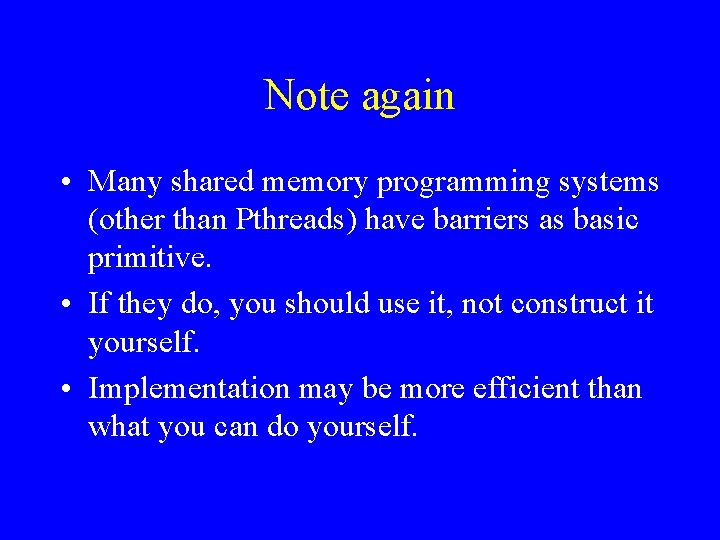

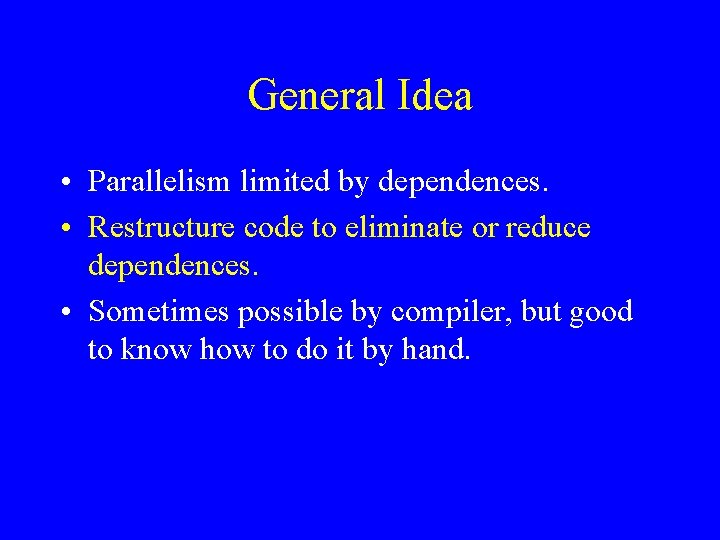

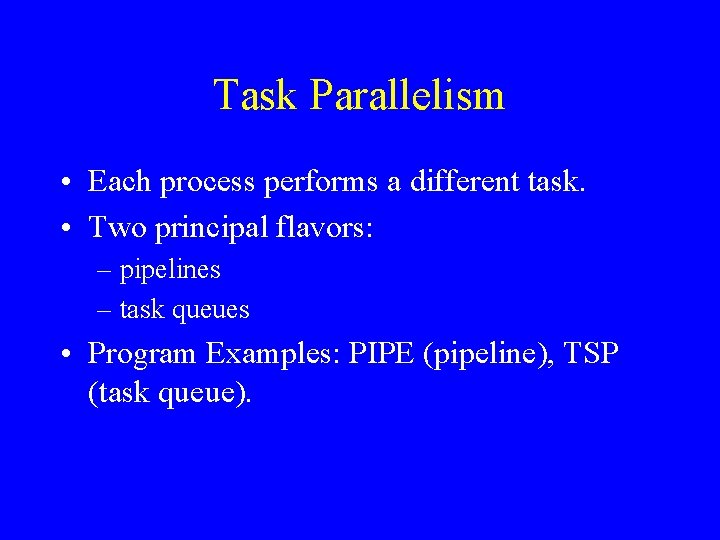

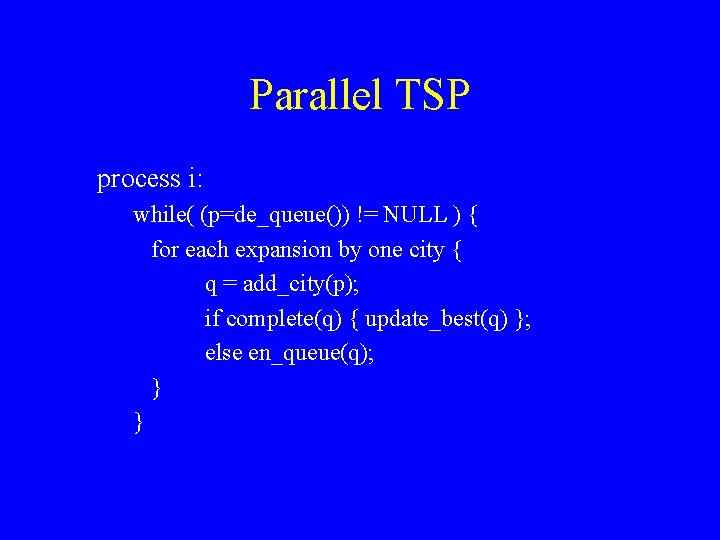

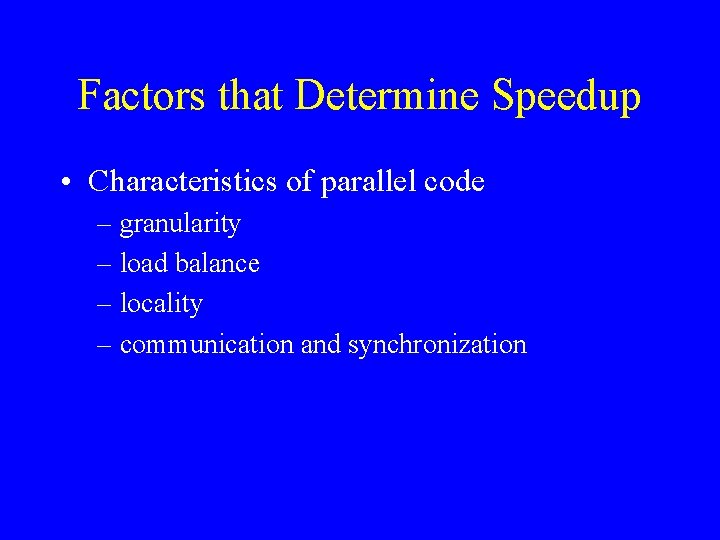

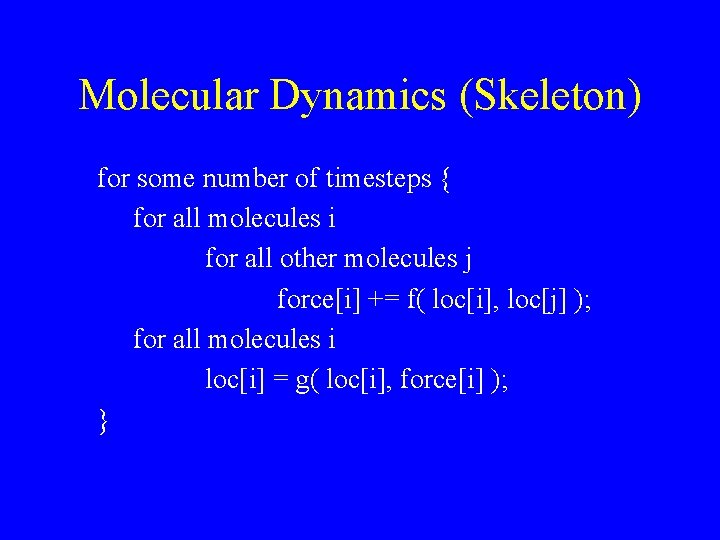

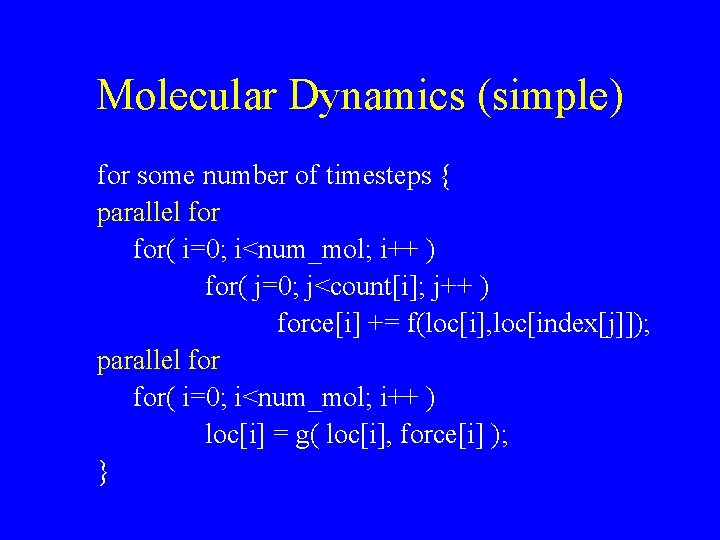

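Optimizations: Example 16

```c
for (i = 0; i < 100000; i++)
    a[i + 1000] = a[i] + 1;
```

Cannot be parallelized as is: iteration i reads a[i], which iteration i - 1000 wrote (a loop-carried dependence of distance 1000). May be parallelized by applying certain code transformations.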

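Example Transformation

```c
for (i = 1; i <= 100; i++) {     /* the original writes up to a[100999], i.e. 100 blocks */
    int stride = i * 1000;
    for (j = 0; j < 1000; j++)
        a[stride + j] = a[j] + i;
}
```

Every iteration now reads only a[0..999], which no iteration writes, so all iterations are independent.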
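A sketch that checks the transformation against the original loop on arbitrary input. Because the original writes up to a[100999], the transformed outer loop has to run i = 1..100 inclusive. `BLOCK`, `ITERS`, `original`, `transformed` and `transformation_matches` are names chosen for this sketch.

```c
#include <string.h>

#define BLOCK 1000
#define ITERS 100000
#define SIZE (ITERS + BLOCK)   /* highest index written is ITERS + BLOCK - 1 */

/* Original loop: iteration i reads a[i], which iteration i - 1000 may have written. */
void original(int *a)
{
    for (int i = 0; i < ITERS; i++)
        a[i + BLOCK] = a[i] + 1;
}

/* Transformed loop: reads only a[0..BLOCK-1], which no iteration writes,
   so all (i, j) iterations are independent and could run in parallel. */
void transformed(int *a)
{
    for (int i = 1; i <= ITERS / BLOCK; i++) {   /* i = 1..100 */
        int stride = i * BLOCK;
        for (int j = 0; j < BLOCK; j++)
            a[stride + j] = a[j] + i;
    }
}

/* Runs both versions on the same input; returns 1 if the results match. */
int transformation_matches(void)
{
    static int a[SIZE], b[SIZE];
    for (int k = 0; k < SIZE; k++)
        a[k] = b[k] = (k * 7) % 13;              /* arbitrary initial contents */
    original(a);
    transformed(b);
    return memcmp(a, b, sizeof a) == 0;
}
```

Both loops compute a[k] = a[k % 1000] + k / 1000 for k >= 1000; the transformation simply evaluates that closed form directly instead of chaining through earlier iterations.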

Code Transformations • Reorganize code such that – dependences are removed or reduced – large pieces of parallel work emerge • Code can become messy … there is a point of diminishing returns.

Flavors of Parallelism • Task parallelism: processors do different tasks. – Task queue – Pipelines • Data parallelism: all processors do the same thing on different data. – Regular – Irregular

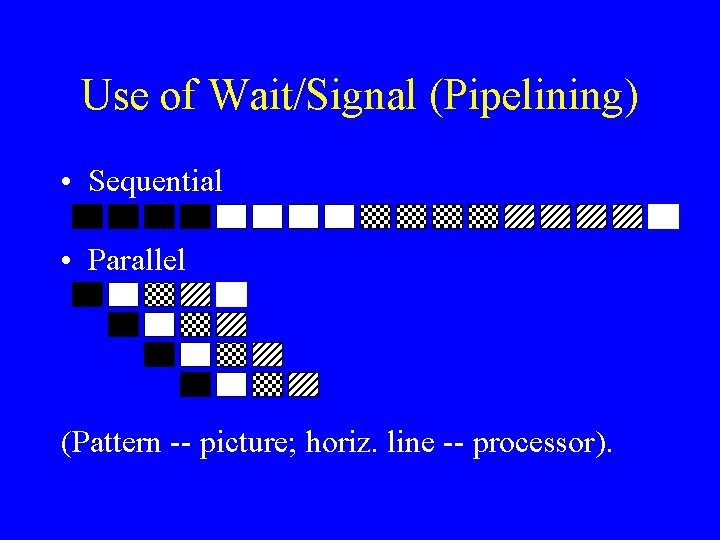

Task Parallelism • Each process performs a different task. • Two principal flavors: – pipelines – task queues • Program Examples: PIPE (pipeline), TSP (task queue).

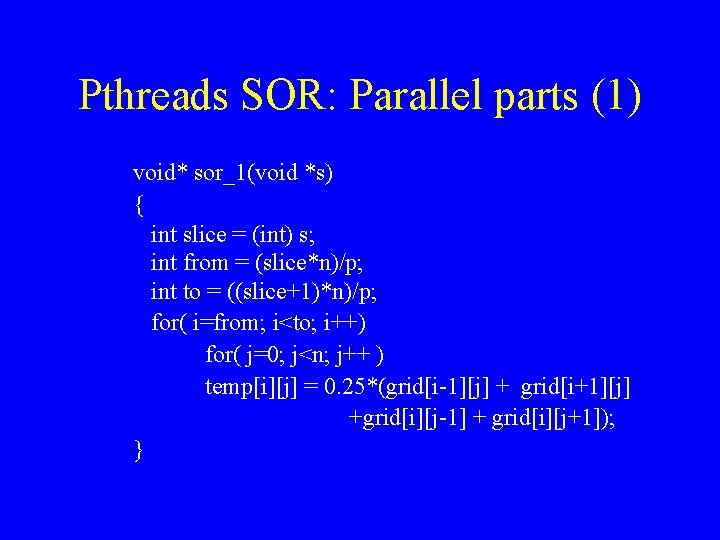

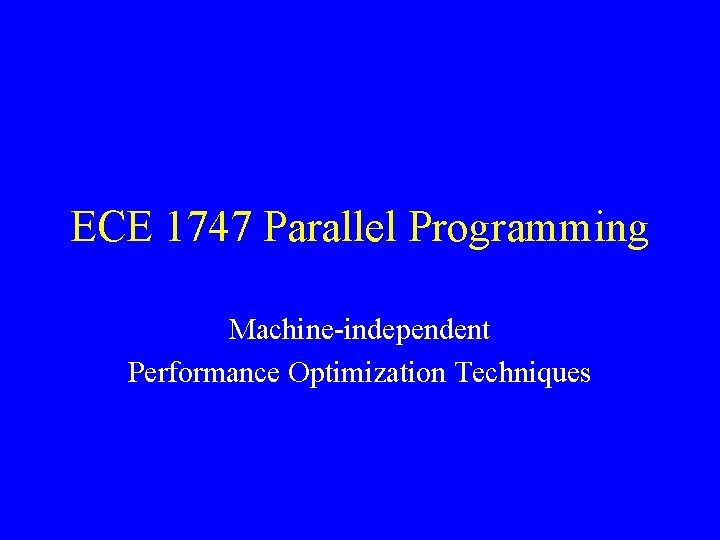

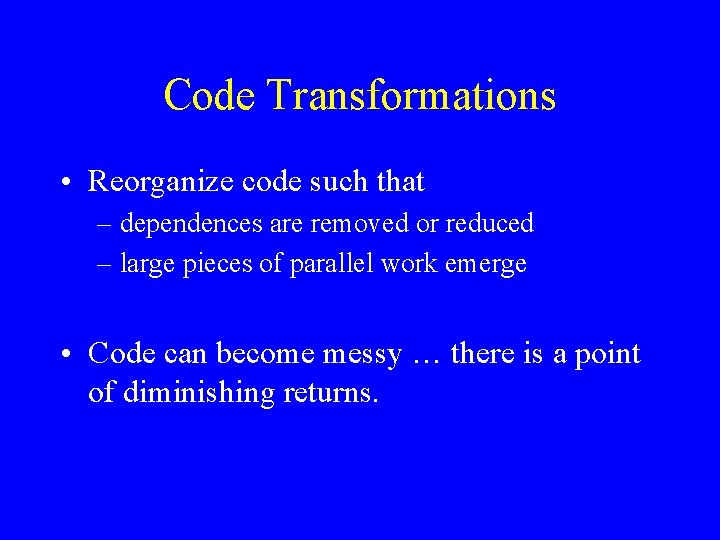

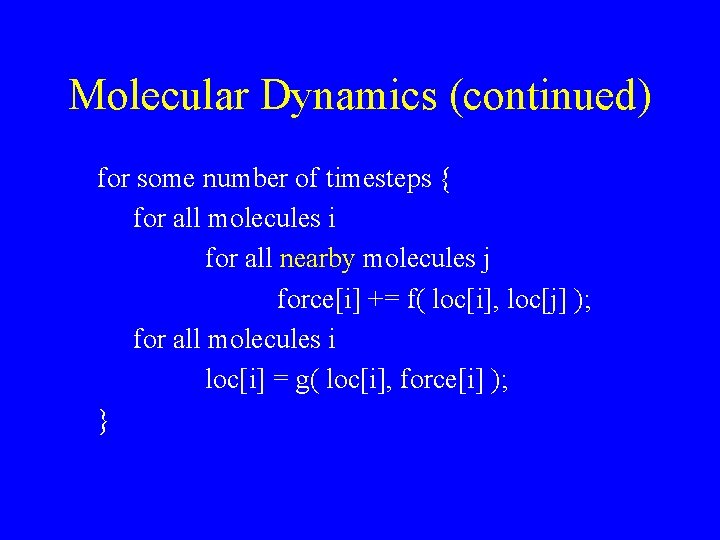

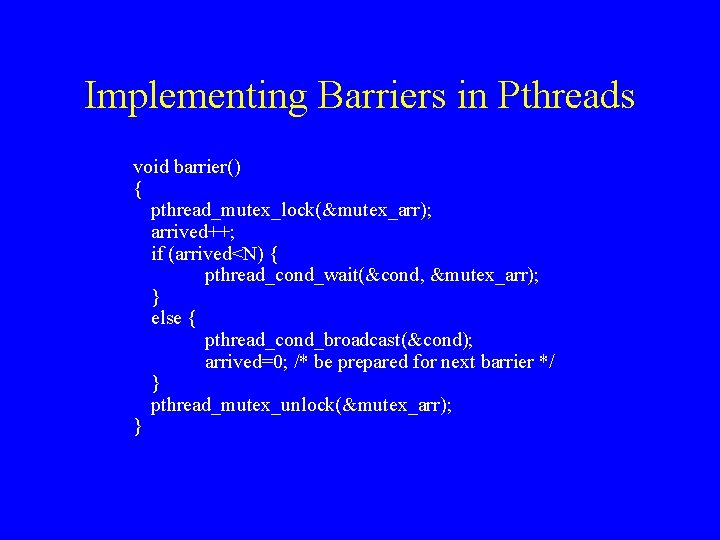

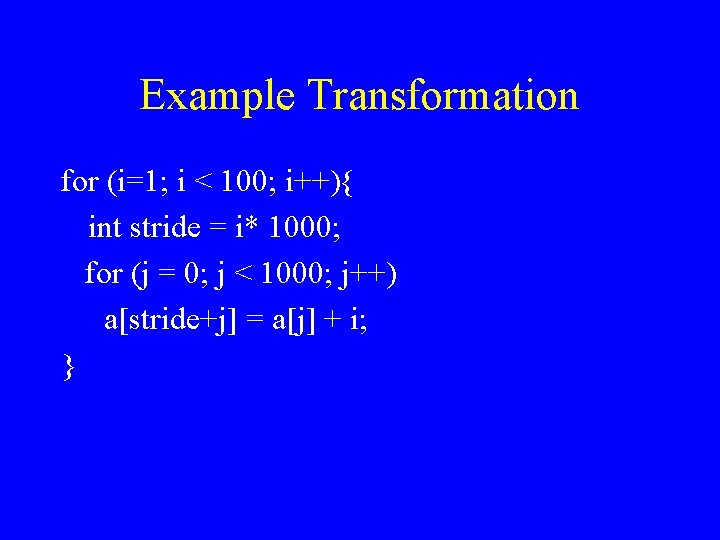

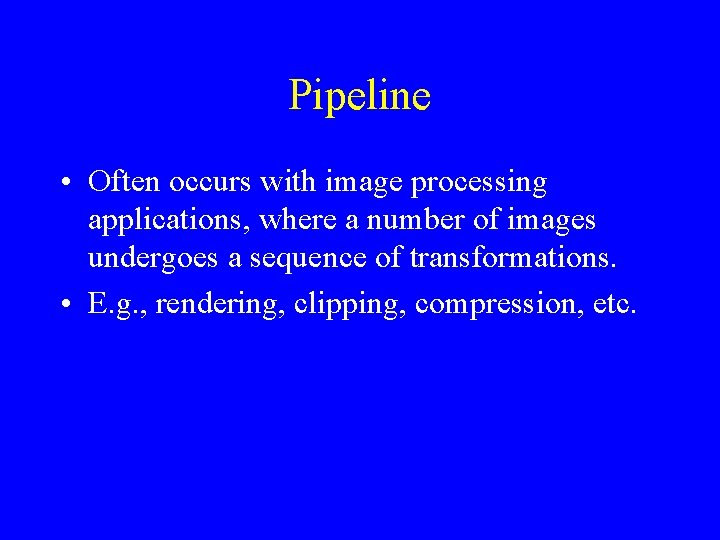

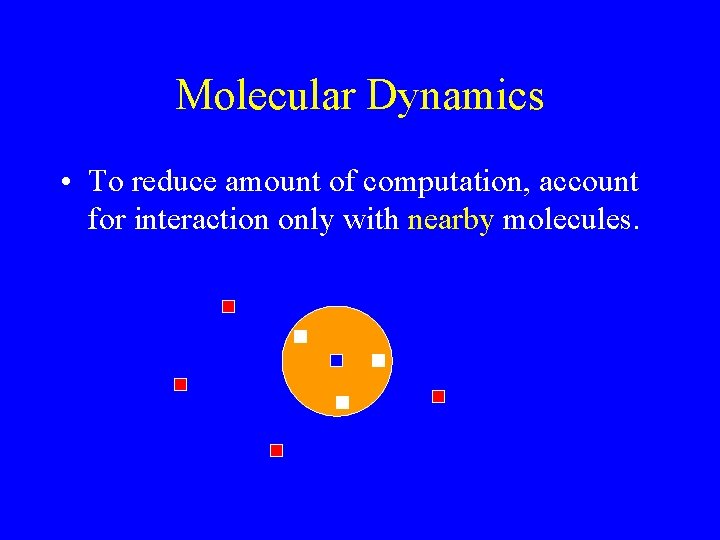

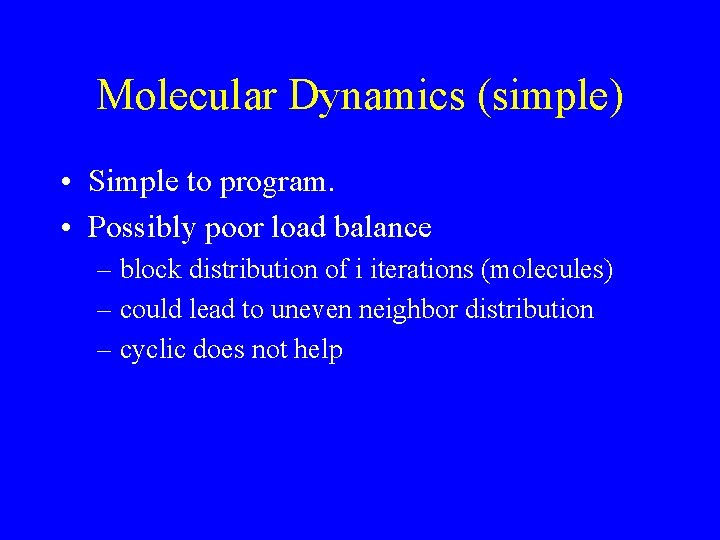

Pipeline • Often occurs with image processing applications, where a number of images undergo a sequence of transformations. • E.g., rendering, clipping, compression, etc.

Use of Wait/Signal (Pipelining) • Sequential • Parallel (Figure legend: each pattern is a picture; each horizontal line is a processor.)

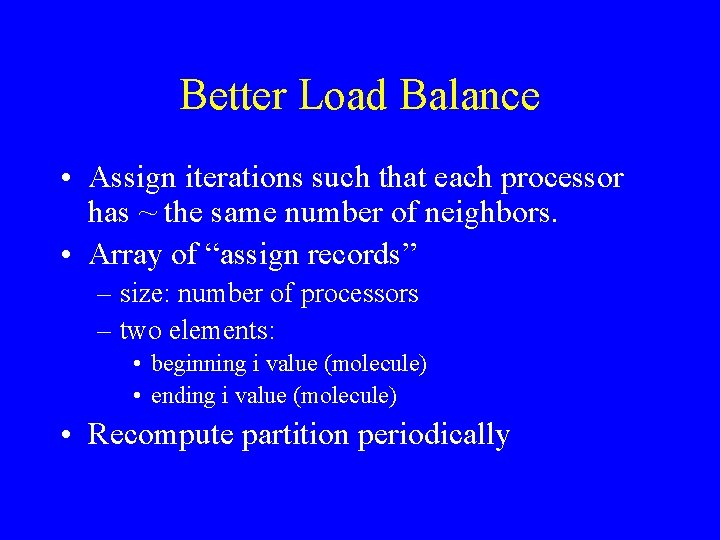

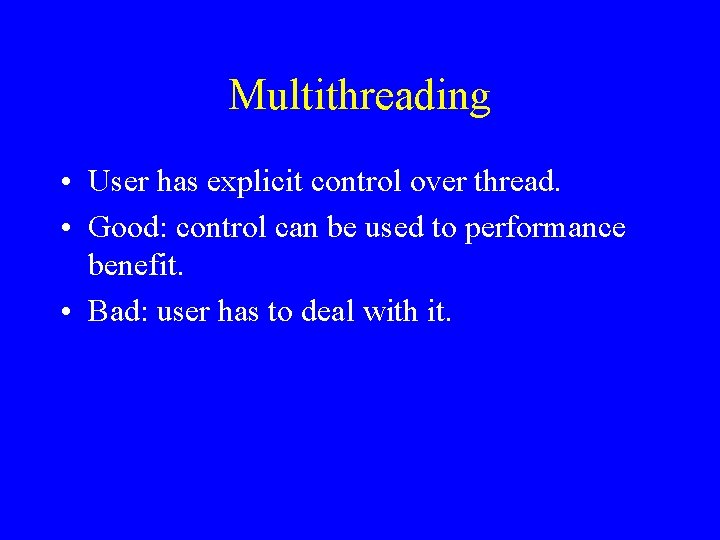

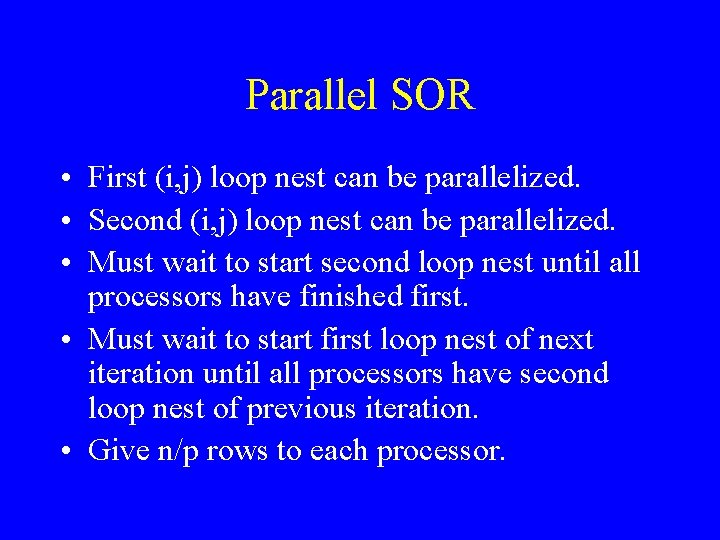


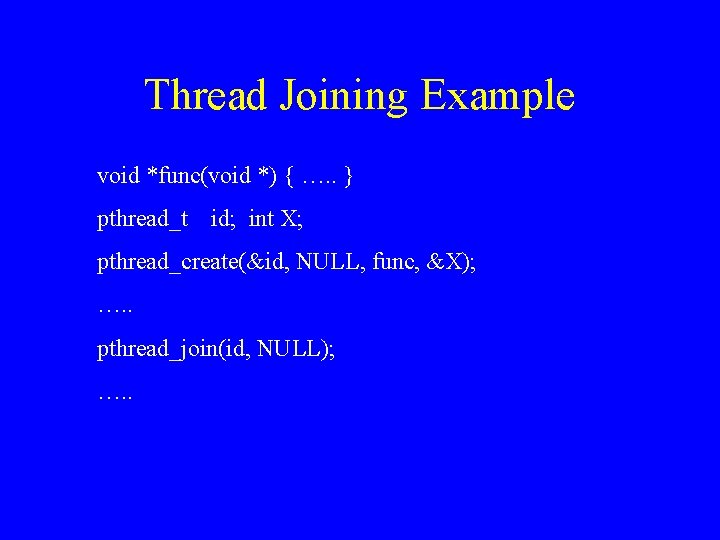

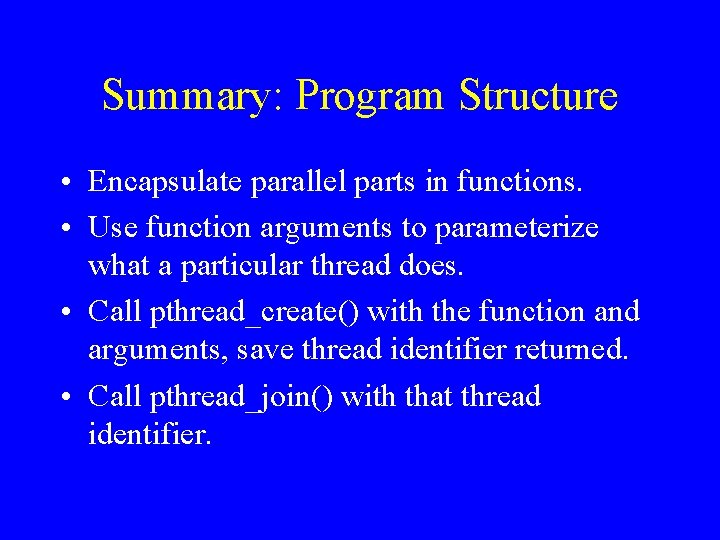

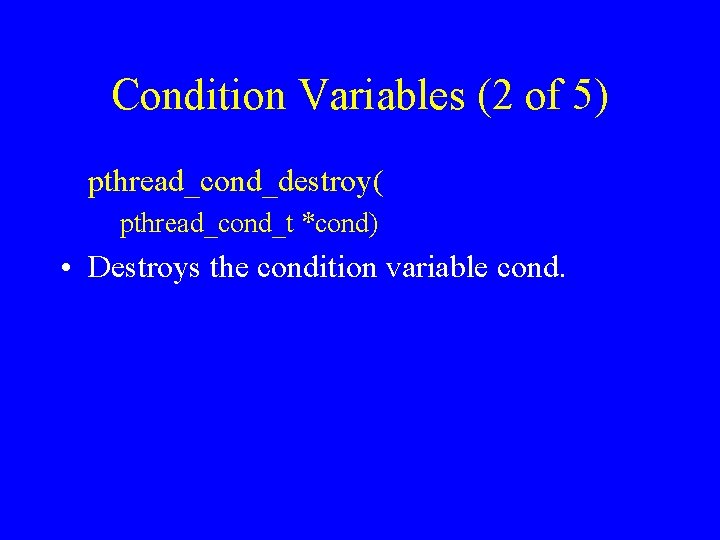

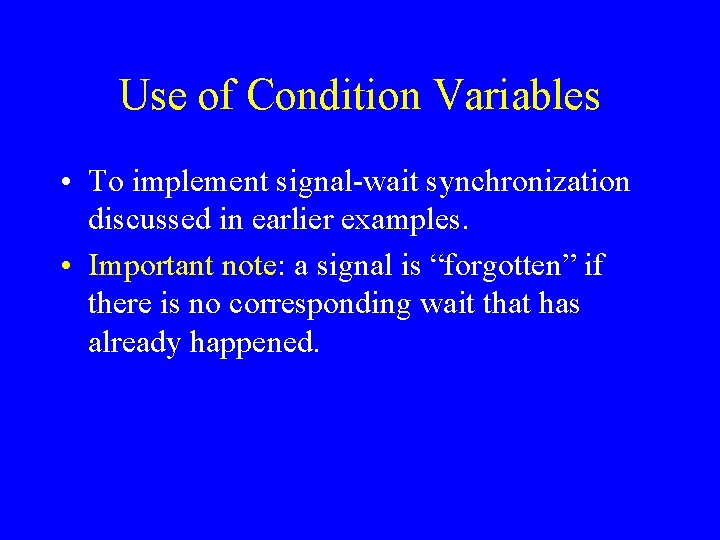

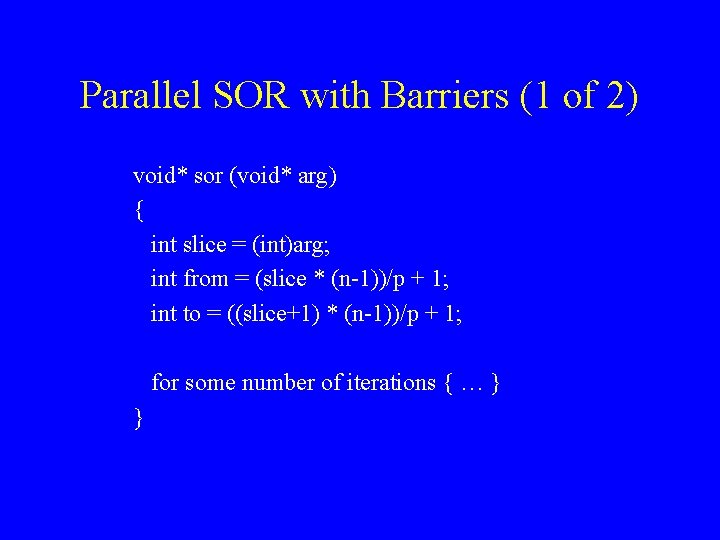

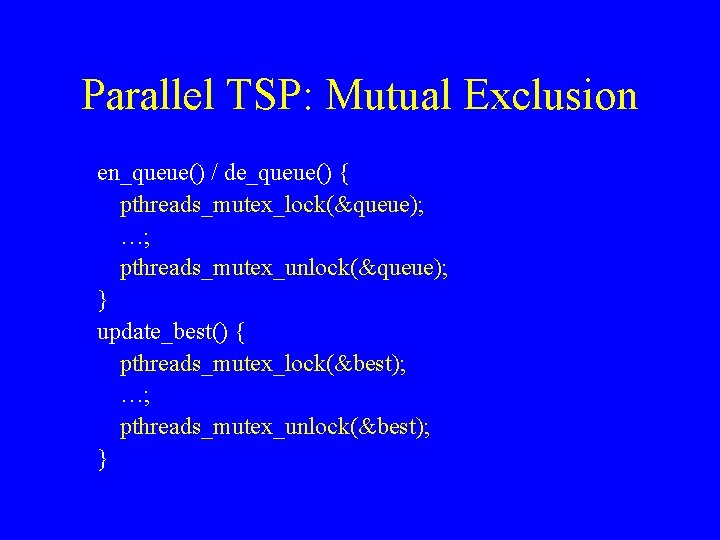

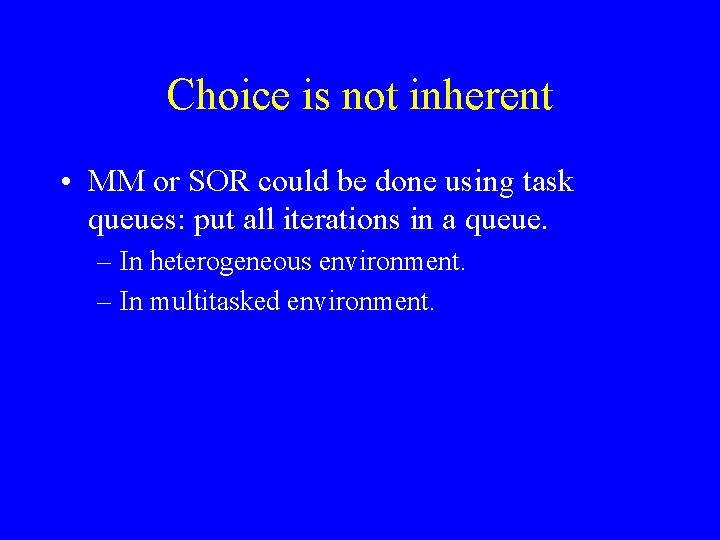

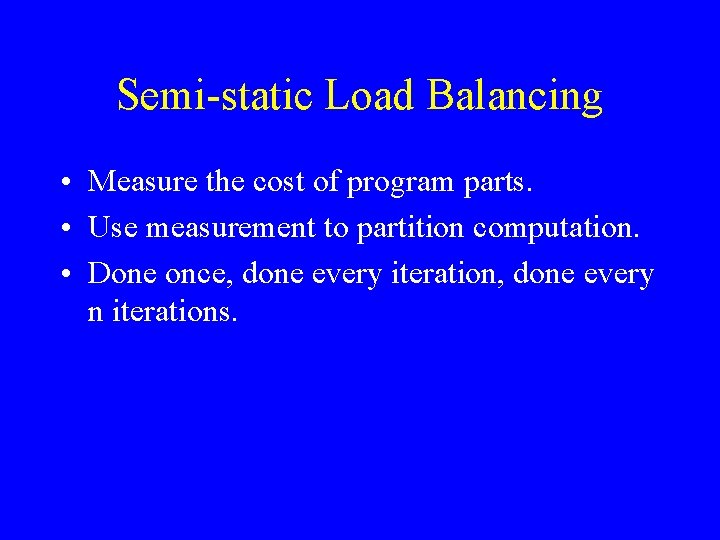

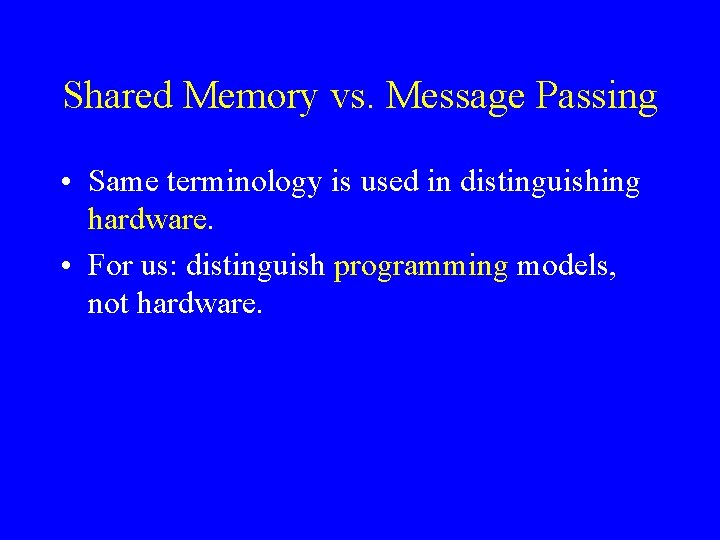

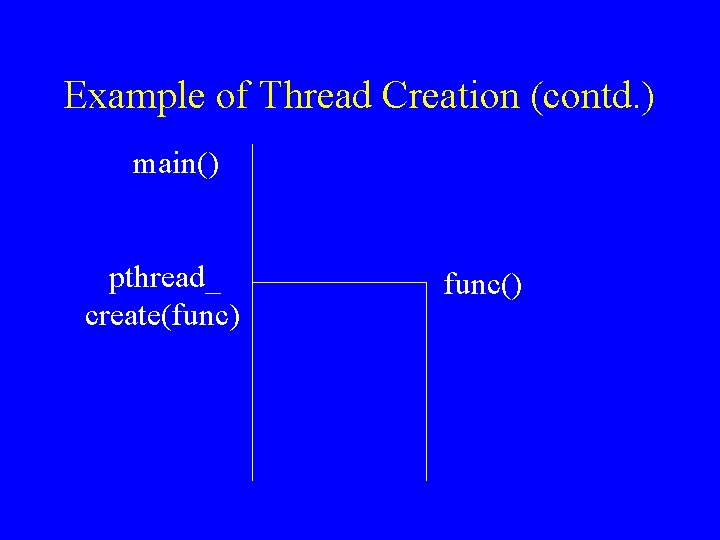

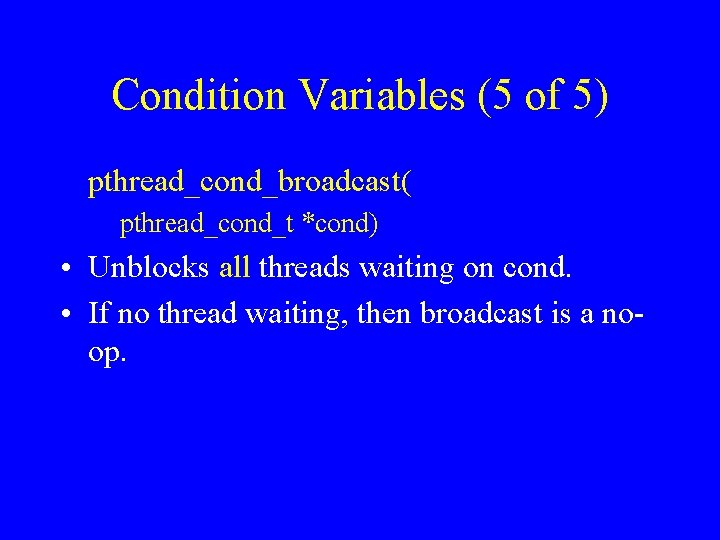

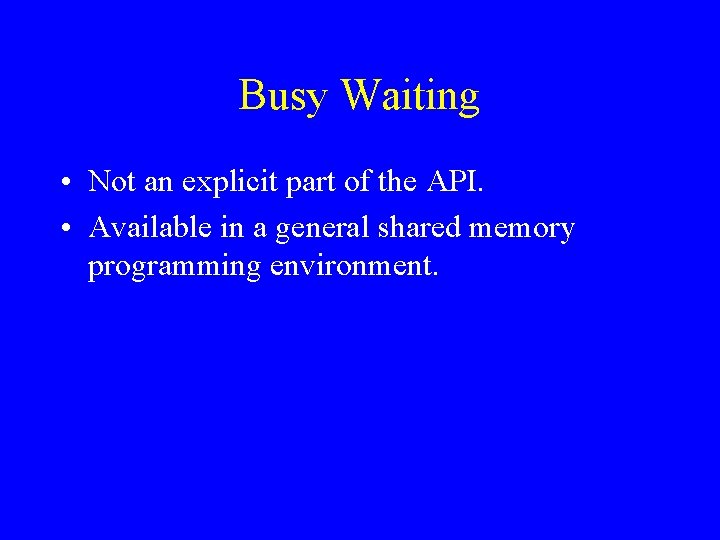

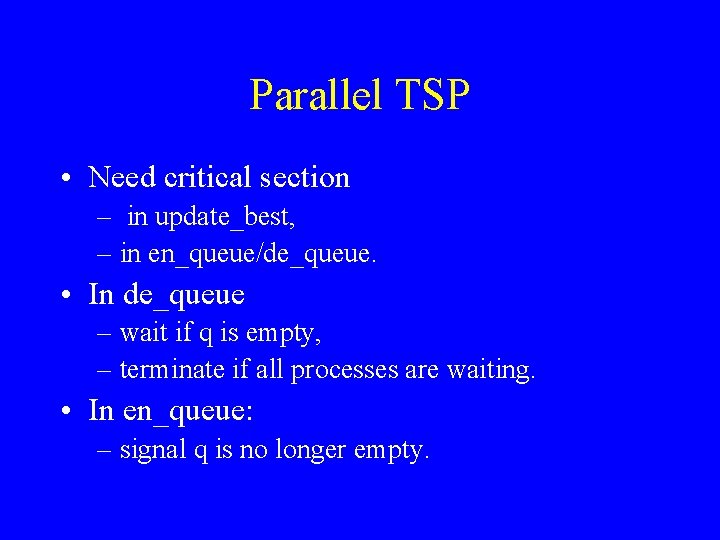

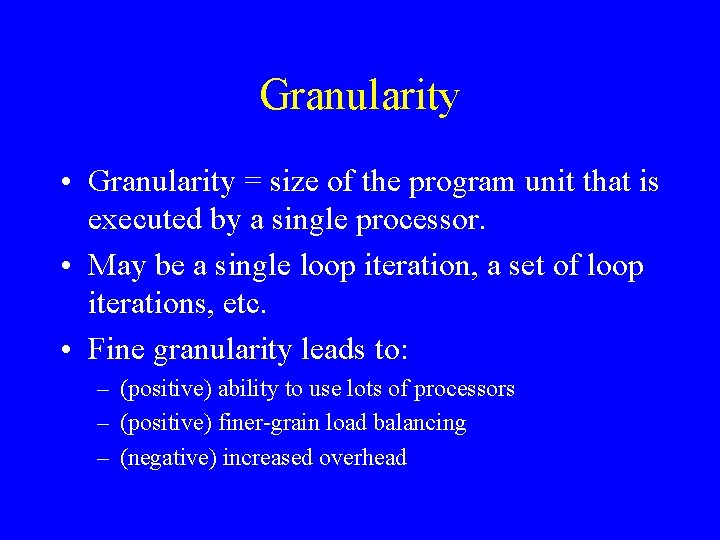

PIPE

```
P1: for (i = 0; i < num_pics && read(in_pic); i++) {
        int_pic_1[i] = trans1(in_pic);
        signal(event_1_2[i]);
    }
P2: for (i = 0; i < num_pics; i++) {
        wait(event_1_2[i]);
        int_pic_2[i] = trans2(int_pic_1[i]);
        signal(event_2_3[i]);
    }
```

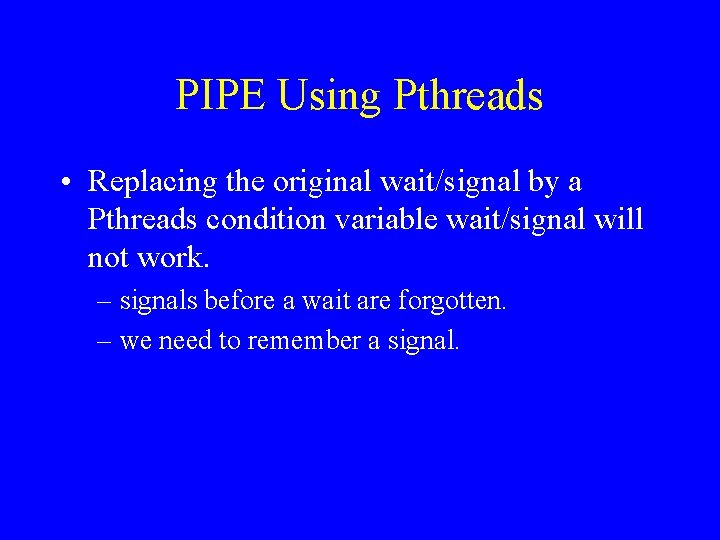

PIPE Using Pthreads • Replacing the original wait/signal by a Pthreads condition variable wait/signal will not work. – signals before a wait are forgotten. – we need to remember a signal.


How to remember a signal (1 of 2)

```c
void semaphore_signal(int i)
{
    pthread_mutex_lock(&mutex_rem[i]);
    arrived[i] = 1;                        /* remember the signal */
    pthread_cond_signal(&cond[i]);
    pthread_mutex_unlock(&mutex_rem[i]);
}
```


How to Remember a Signal (2 of 2)

```c
void semaphore_wait(int i)
{
    pthread_mutex_lock(&mutex_rem[i]);
    while (arrived[i] == 0)                /* loop also guards against spurious wakeups */
        pthread_cond_wait(&cond[i], &mutex_rem[i]);
    arrived[i] = 0;                        /* consume the remembered signal */
    pthread_mutex_unlock(&mutex_rem[i]);
}
```


PIPE with Pthreads

```
P1: for (i = 0; i < num_pics && read(in_pic); i++) {
        int_pic_1[i] = trans1(in_pic);
        semaphore_signal(event_1_2[i]);
    }
P2: for (i = 0; i < num_pics; i++) {
        semaphore_wait(event_1_2[i]);
        int_pic_2[i] = trans2(int_pic_1[i]);
        semaphore_signal(event_2_3[i]);
    }
```
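A runnable sketch of the first two pipeline stages, with the remembering signal packaged as a hypothetical `struct rem_event` (flag, mutex and condition variable, as in semaphore_signal/semaphore_wait). `trans1`/`trans2` are stand-ins that do arithmetic on an int “picture”; the real transformations and the read(in_pic) input are left out. POSIX counting semaphores (sem_t with sem_post/sem_wait) also remember posts and could replace the hand-built event.

```c
#include <pthread.h>
#include <stdbool.h>

#define NUM_PICS 16   /* assumed number of pictures */

/* Hypothetical "remembering" event: `arrived` keeps a signal sent before the wait. */
struct rem_event {
    pthread_mutex_t lock;
    pthread_cond_t  cond;
    bool            arrived;
};

static struct rem_event event_1_2[NUM_PICS];
static int int_pic_1[NUM_PICS], int_pic_2[NUM_PICS];

static void event_signal(struct rem_event *e)
{
    pthread_mutex_lock(&e->lock);
    e->arrived = true;                   /* remembered even if nobody waits yet */
    pthread_cond_signal(&e->cond);
    pthread_mutex_unlock(&e->lock);
}

static void event_wait(struct rem_event *e)
{
    pthread_mutex_lock(&e->lock);
    while (!e->arrived)                  /* no wait at all if the signal came first */
        pthread_cond_wait(&e->cond, &e->lock);
    e->arrived = false;                  /* consume the signal */
    pthread_mutex_unlock(&e->lock);
}

/* Hypothetical stand-ins for trans1/trans2 acting on an int "picture". */
static int trans1(int pic) { return 2 * pic; }
static int trans2(int pic) { return pic + 1; }

static void *stage1(void *unused)        /* P1 */
{
    (void)unused;
    for (int i = 0; i < NUM_PICS; i++) {
        int_pic_1[i] = trans1(i);        /* picture i's input is just i here */
        event_signal(&event_1_2[i]);
    }
    return NULL;
}

static void *stage2(void *unused)        /* P2 */
{
    (void)unused;
    for (int i = 0; i < NUM_PICS; i++) {
        event_wait(&event_1_2[i]);       /* int_pic_1[i] is ready after this */
        int_pic_2[i] = trans2(int_pic_1[i]);
    }
    return NULL;
}

void run_pipeline(void)
{
    pthread_t p1, p2;
    for (int i = 0; i < NUM_PICS; i++) {
        pthread_mutex_init(&event_1_2[i].lock, NULL);
        pthread_cond_init(&event_1_2[i].cond, NULL);
        event_1_2[i].arrived = false;
    }
    pthread_create(&p1, NULL, stage1, NULL);
    pthread_create(&p2, NULL, stage2, NULL);
    pthread_join(p1, NULL);
    pthread_join(p2, NULL);
    for (int i = 0; i < NUM_PICS; i++) {
        pthread_cond_destroy(&event_1_2[i].cond);
        pthread_mutex_destroy(&event_1_2[i].lock);
    }
}
```

Because stage 1 writes int_pic_1[i] before unlocking in event_signal, and stage 2 locks the same mutex in event_wait, stage 2 is guaranteed to see the finished picture.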

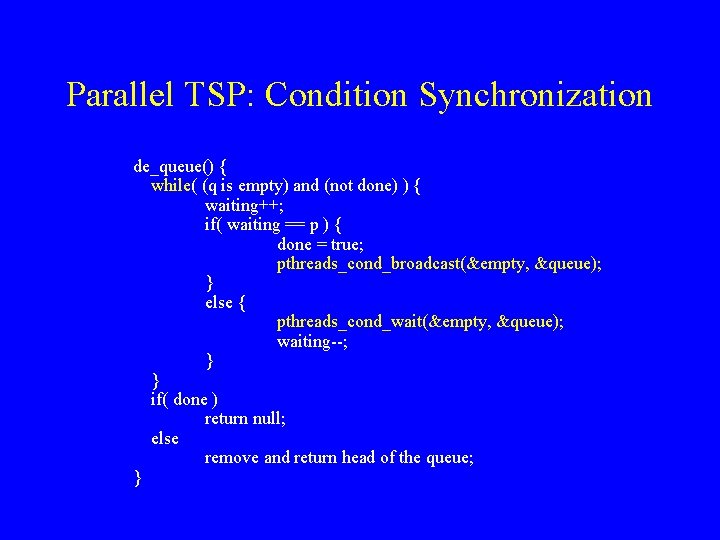

Parallel TSP

```
process i:
    while ((p = de_queue()) != NULL) {
        for each expansion by one city {
            q = add_city(p);
            if (complete(q))
                update_best(q);
            else
                en_queue(q);
        }
    }
```

Parallel TSP • Need critical section – in update_best, – in en_queue/de_queue. • In de_queue – wait if q is empty, – terminate if all processes are waiting. • In en_queue: – signal q is no longer empty.

Parallel TSP: Mutual Exclusion

```
en_queue() / de_queue() {
    pthread_mutex_lock(&queue);
    …;
    pthread_mutex_unlock(&queue);
}
update_best() {
    pthread_mutex_lock(&best);
    …;
    pthread_mutex_unlock(&best);
}
```

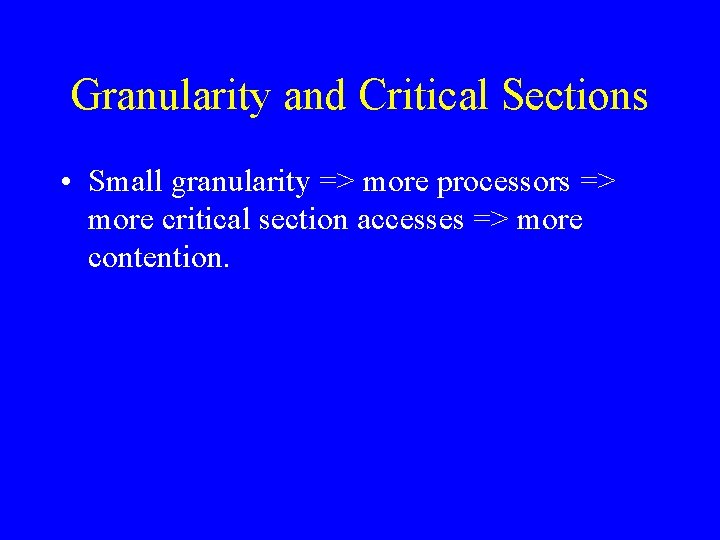

Parallel TSP: Condition Synchronization

```
de_queue() {
    while ((q is empty) and (not done)) {
        waiting++;
        if (waiting == p) {
            done = true;
            pthread_cond_broadcast(&empty);
        } else {
            pthread_cond_wait(&empty, &queue);
            waiting--;
        }
    }
    if (done) return NULL;
    else remove and return head of the queue;
}
```
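A runnable sketch of this termination logic. The TSP search is replaced by a hypothetical workload: each task of depth d below MAX_DEPTH expands into two tasks of depth d+1, so exactly 2^(MAX_DEPTH+1) - 1 = 2047 tasks are processed. `tasks`, `qlock`, `worker` and `run_task_queue` are illustrative names; en_queue signals `empty`, and the last thread to find the queue empty sets done and broadcasts.

```c
#include <pthread.h>
#include <stdbool.h>

#define P 4            /* assumed number of worker threads */
#define MAX_DEPTH 10   /* hypothetical workload: a full binary tree of tasks */
#define CAP 4096       /* >= 2^(MAX_DEPTH+1) - 1, so the array never overflows */

static int tasks[CAP];               /* FIFO of task depths */
static int head, tail;               /* queue is empty when head == tail */
static int waiting;                  /* threads blocked in de_queue */
static bool done;
static long processed;               /* tasks completed, protected by qlock */
static pthread_mutex_t qlock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  empty = PTHREAD_COND_INITIALIZER;

static void en_queue(int depth)
{
    pthread_mutex_lock(&qlock);
    tasks[tail++] = depth;
    pthread_cond_signal(&empty);     /* the queue is no longer empty */
    pthread_mutex_unlock(&qlock);
}

/* Returns false once the queue is empty and all P threads are waiting. */
static bool de_queue(int *depth)
{
    pthread_mutex_lock(&qlock);
    while (head == tail && !done) {
        if (++waiting == P) {
            done = true;             /* nobody is left to produce work */
            pthread_cond_broadcast(&empty);
        } else {
            pthread_cond_wait(&empty, &qlock);
        }
        waiting--;
    }
    bool got = head != tail;
    if (got)
        *depth = tasks[head++];
    pthread_mutex_unlock(&qlock);
    return got;
}

static void *worker(void *unused)
{
    (void)unused;
    int depth;
    while (de_queue(&depth)) {
        if (depth < MAX_DEPTH) {     /* stand-in for "for each expansion by one city" */
            en_queue(depth + 1);
            en_queue(depth + 1);
        }
        pthread_mutex_lock(&qlock);
        processed++;
        pthread_mutex_unlock(&qlock);
    }
    return NULL;
}

long run_task_queue(void)
{
    pthread_t t[P];
    head = tail = waiting = 0;
    done = false;
    processed = 0;
    en_queue(0);                     /* root task */
    for (int i = 0; i < P; i++)
        pthread_create(&t[i], NULL, worker, NULL);
    for (int i = 0; i < P; i++)
        pthread_join(t[i], NULL);
    return processed;
}
```

The termination test is sound because a thread counts as waiting only while it sits in de_queue with the queue empty; a thread that is still expanding a task may yet enqueue more work, so it keeps `waiting` below P.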

Factors that Determine Speedup • Characteristics of parallel code – granularity – load balance – locality – communication and synchronization

Granularity • Granularity = size of the program unit that is executed by a single processor. • May be a single loop iteration, a set of loop iterations, etc. • Fine granularity leads to: – (positive) ability to use lots of processors – (positive) finer-grain load balancing – (negative) increased overhead

Granularity and Critical Sections • Small granularity => more processors => more critical section accesses => more contention.

Issues in Performance of Parallel Parts • Granularity. • Load balance. • Locality. • Synchronization and communication.

Load Balance • Load imbalance = difference in execution time between processors between barriers. • Execution time may not be predictable. – Regular data parallel: yes. – Irregular data parallel or pipeline: perhaps. – Task queue: no.

Static vs. Dynamic • Static: done once, by the programmer – block, cyclic, etc. – fine for regular data parallel • Dynamic: done at runtime – task queue – fine for unpredictable execution times – usually high overhead • Semi-static: done once, at run-time

Choice is not inherent • MM or SOR could be done using task queues: put all iterations in a queue. – In heterogeneous environment. – In multitasked environment.

Static Load Balancing • Block – best locality – possibly poor load balance • Cyclic – better load balance – worse locality • Block-cyclic – load balancing advantages of cyclic (mostly) – better locality
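A sketch of the three static distributions as “which thread owns iteration k” functions, plus a hypothetical triangular workload in which iteration k costs k units. `owner_block` uses the same [s*n/p, (s+1)*n/p) split as the SOR code; the `dist` enum, `MAX_P` and `max_load` are illustrative.

```c
#include <assert.h>

#define MAX_P 64   /* arbitrary cap for this sketch */

enum dist { DIST_BLOCK, DIST_CYCLIC, DIST_BLOCK_CYCLIC };

/* Block: thread s gets iterations [s*n/p, (s+1)*n/p). */
int owner_block(int k, int n, int p)
{
    int s = 0;
    while ((long)(s + 1) * n / p <= k)   /* move on while k lies past the end of block s */
        s++;
    return s;
}

/* Cyclic: iterations dealt out one at a time, round-robin. */
int owner_cyclic(int k, int p) { return k % p; }

/* Block-cyclic: chunks of b consecutive iterations dealt out round-robin. */
int owner_block_cyclic(int k, int p, int b) { return (k / b) % p; }

/* Load of the busiest thread when iteration k costs k units. */
long max_load(enum dist d, int n, int p, int b)
{
    long load[MAX_P] = {0};
    assert(p > 0 && p <= MAX_P);
    for (int k = 0; k < n; k++) {
        int s = d == DIST_BLOCK  ? owner_block(k, n, p)
              : d == DIST_CYCLIC ? owner_cyclic(k, p)
              :                    owner_block_cyclic(k, p, b);
        load[s] += k;
    }
    long max = 0;
    for (int s = 0; s < p; s++)
        if (load[s] > max)
            max = load[s];
    return max;
}
```

For n = 100 and p = 4 (ideal load 1237.5), block gives a busiest thread of 2175, cyclic 1275, and block-cyclic with chunks of 5 gives 1425 while still keeping runs of 5 consecutive iterations together for locality.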

Dynamic Load Balancing (1 of 2) • Centralized: single task queue. – Easy to program – Excellent load balance • Distributed: task queue per processor. – Less communication/synchronization
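For loops whose iteration costs are unpredictable, a centralized task queue can be as small as one shared counter: each thread atomically claims the next chunk of iterations until none remain (often called self-scheduling). A sketch with hypothetical sizes `NT`, `N_TASKS` and `CHUNK`, where the “work” for iteration k is just k*k:

```c
#include <pthread.h>
#include <stdatomic.h>

#define NT 4           /* assumed number of threads */
#define N_TASKS 1000   /* assumed number of loop iterations */
#define CHUNK 8        /* iterations claimed per access to the shared counter */

static atomic_int next_task;   /* the centralized "queue": next unclaimed iteration */
static long result[N_TASKS];

static void *self_schedule(void *unused)
{
    (void)unused;
    for (;;) {
        int start = atomic_fetch_add(&next_task, CHUNK);   /* claim a chunk */
        if (start >= N_TASKS)
            break;                                         /* nothing left */
        int end = start + CHUNK < N_TASKS ? start + CHUNK : N_TASKS;
        for (int k = start; k < end; k++)
            result[k] = (long)k * k;                       /* stand-in for real work */
    }
    return NULL;
}

long dynamic_demo(void)
{
    pthread_t t[NT];
    atomic_store(&next_task, 0);
    for (int i = 0; i < NT; i++)
        pthread_create(&t[i], NULL, self_schedule, NULL);
    for (int i = 0; i < NT; i++)
        pthread_join(t[i], NULL);
    long sum = 0;
    for (int k = 0; k < N_TASKS; k++)
        sum += result[k];
    return sum;
}
```

A larger CHUNK means fewer accesses to the shared counter (less synchronization) but coarser load balancing at the end of the loop.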

Dynamic Load Balancing (2 of 2) • Task stealing: – Processes normally remove and insert tasks from their own queue. – When queue is empty, remove task(s) from other queues. • Extra overhead and programming difficulty. • Better load balancing.

Semi-static Load Balancing • Measure the cost of program parts. • Use measurement to partition computation. • Done once, done every iteration, done every n iterations.

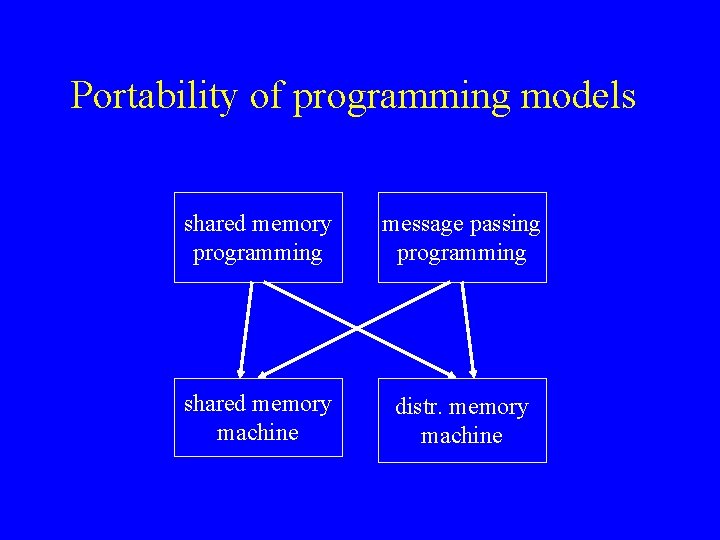

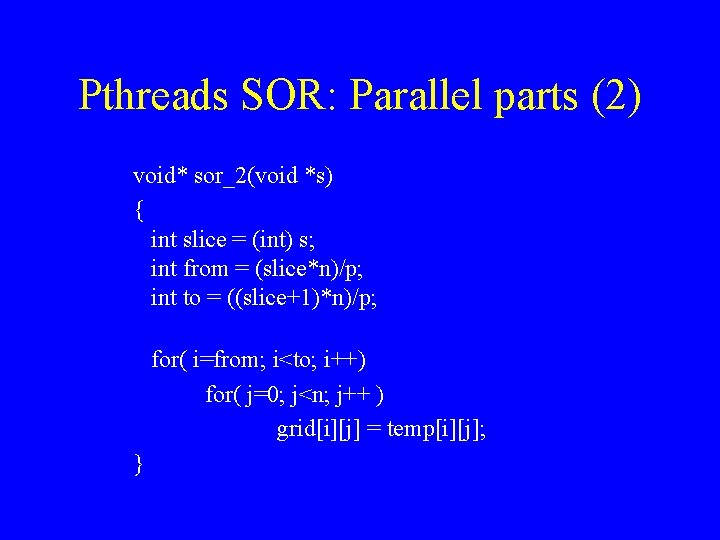

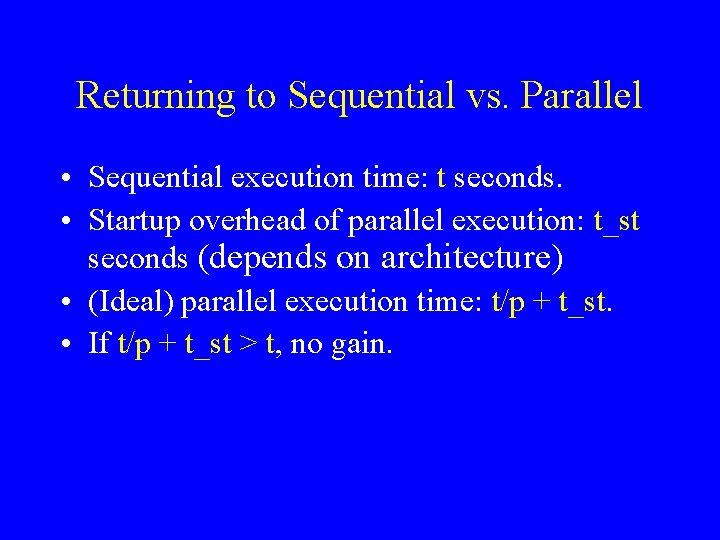

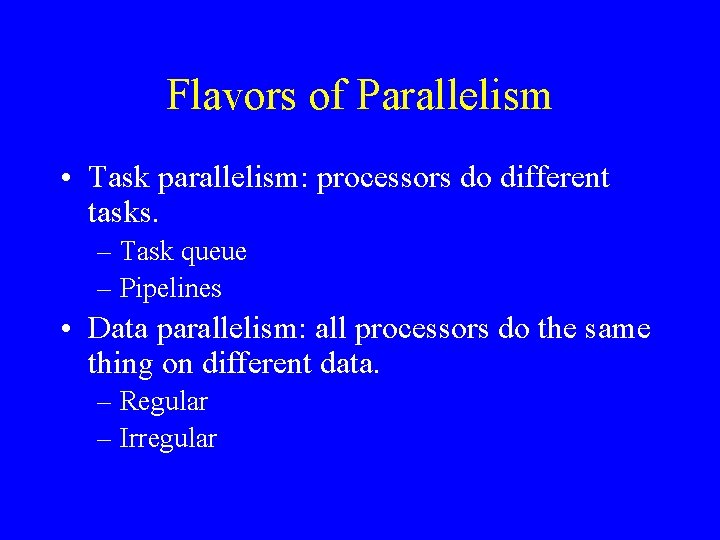

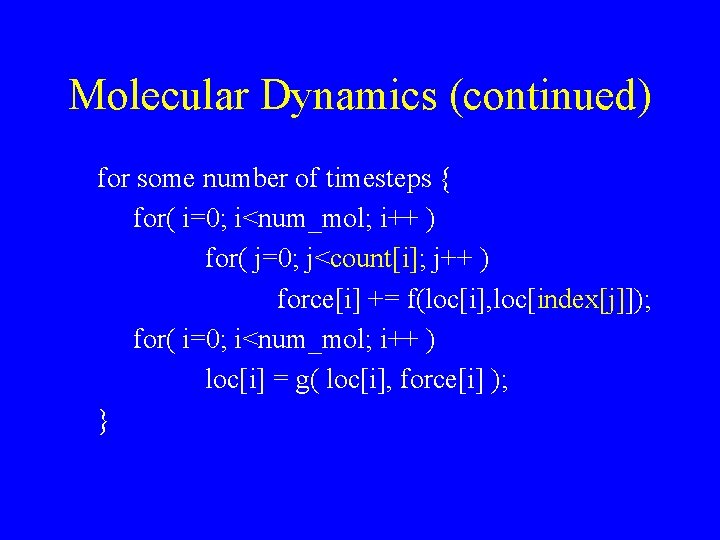

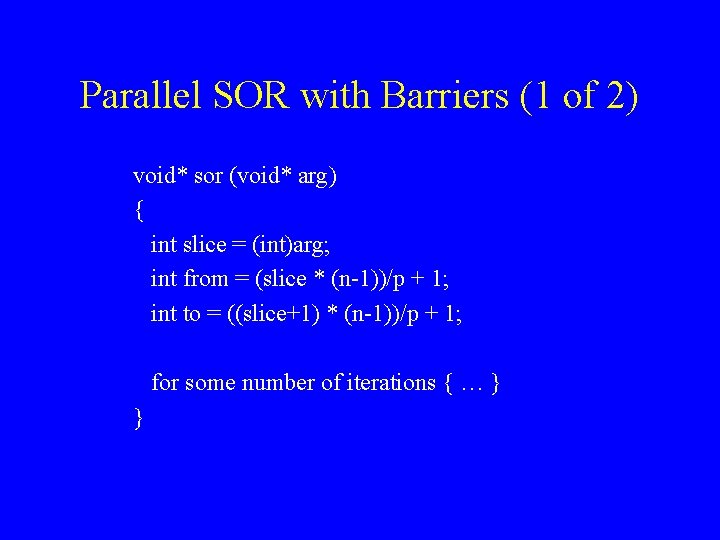

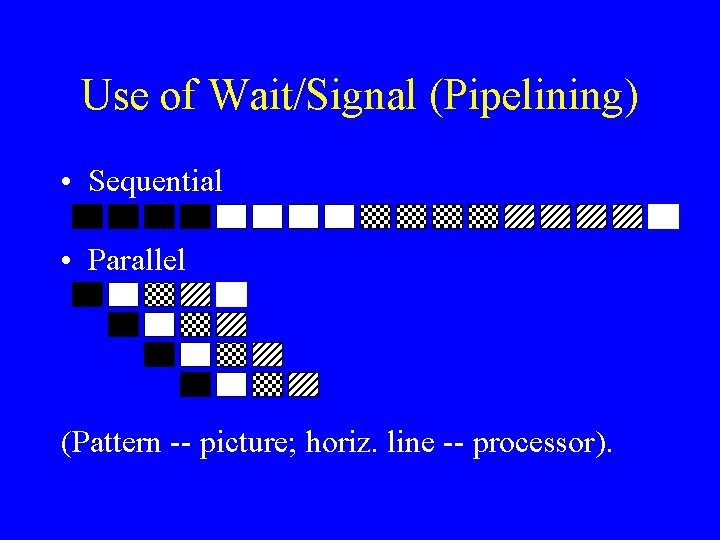

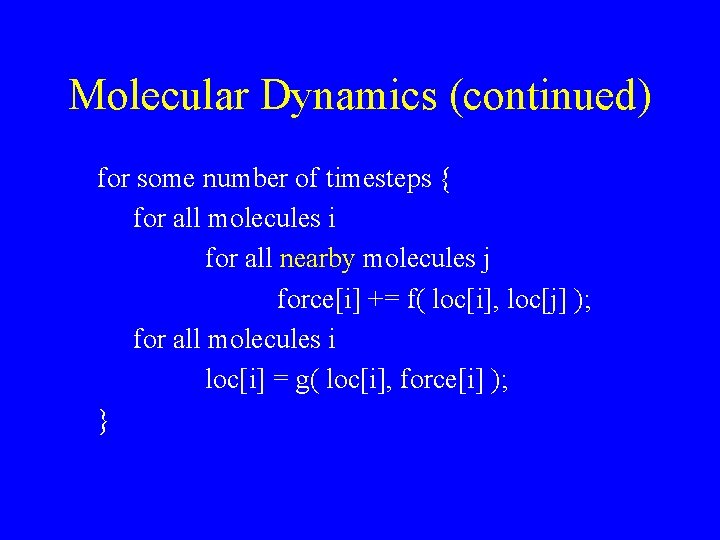

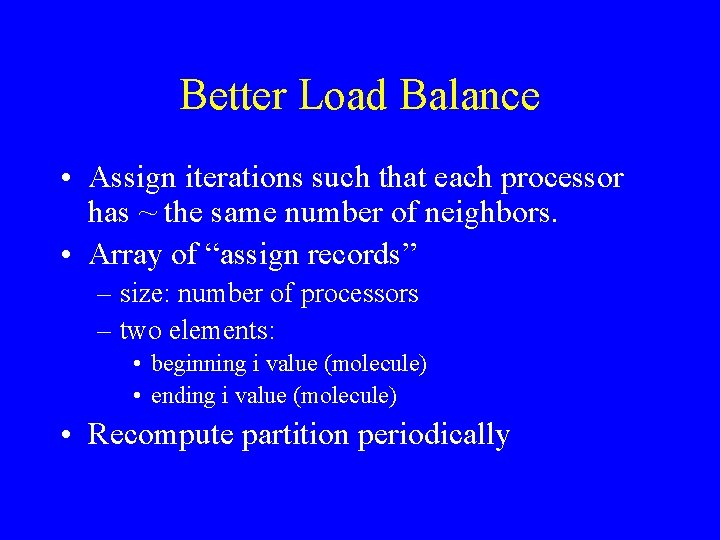

Molecular Dynamics (MD) • Simulation of a set of bodies under the influence of physical laws. • Atoms, molecules, celestial bodies, ... • All have the same basic structure.

Molecular Dynamics (Skeleton)

```
for some number of timesteps {
    for all molecules i
        for all other molecules j
            force[i] += f(loc[i], loc[j]);
    for all molecules i
        loc[i] = g(loc[i], force[i]);
}
```

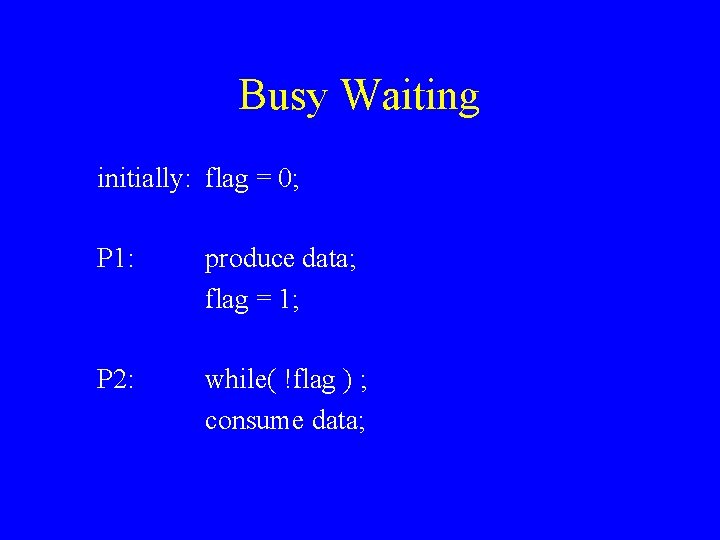

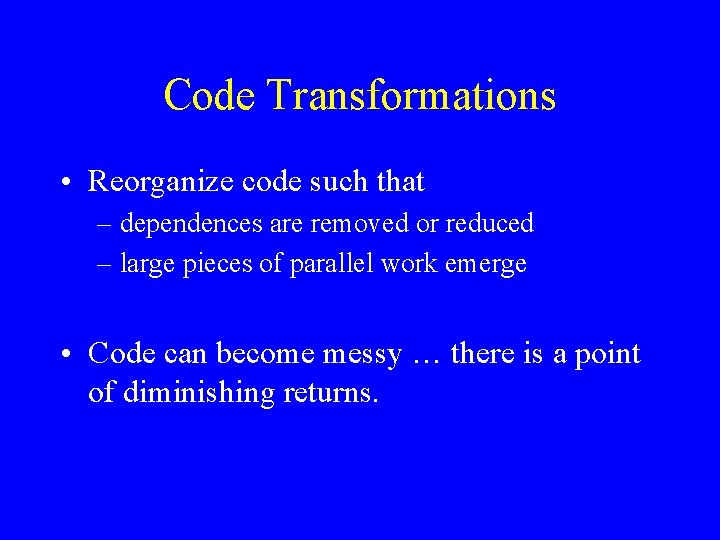

Molecular Dynamics • To reduce amount of computation, account for interaction only with nearby molecules.

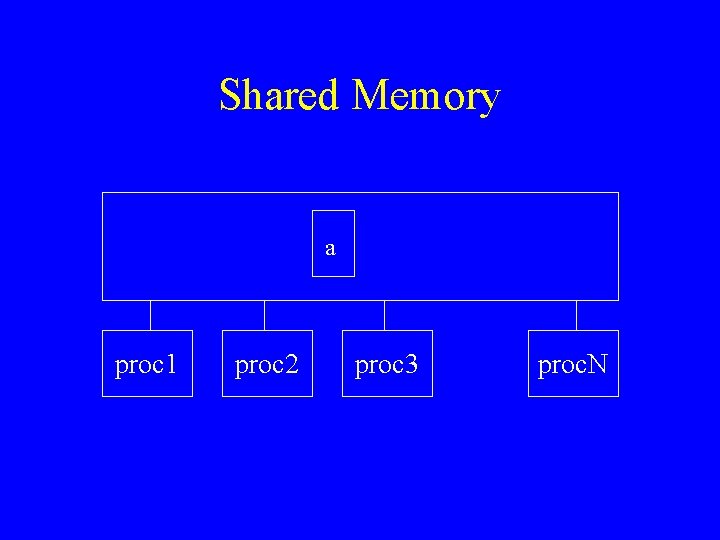

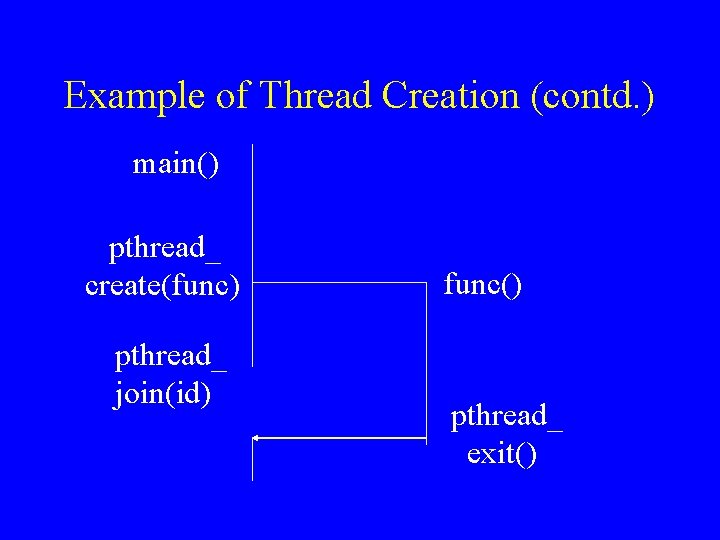

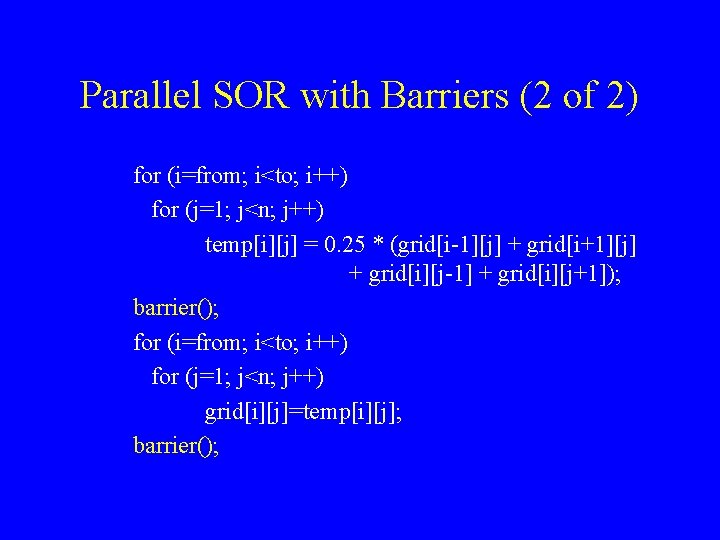

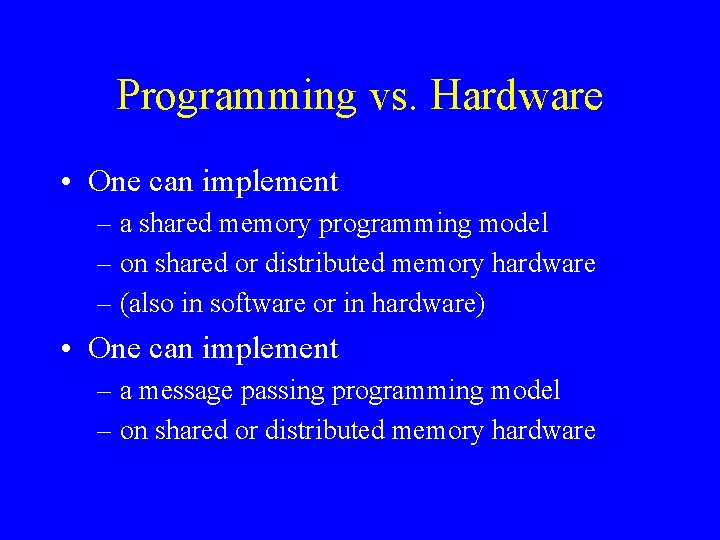

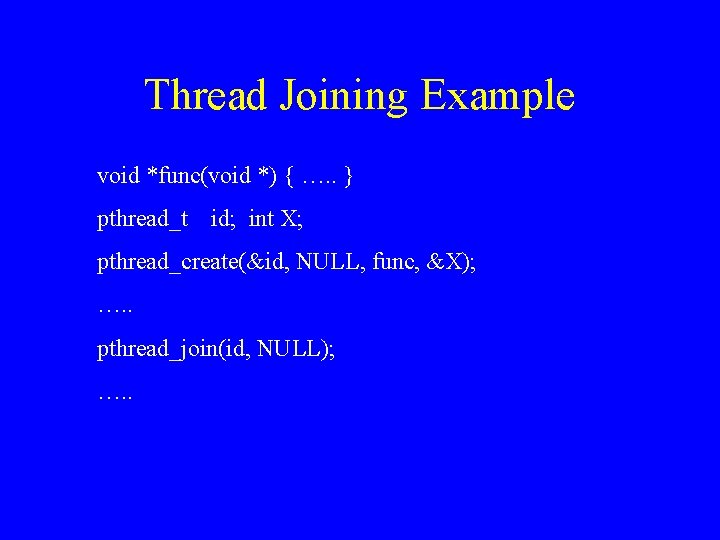

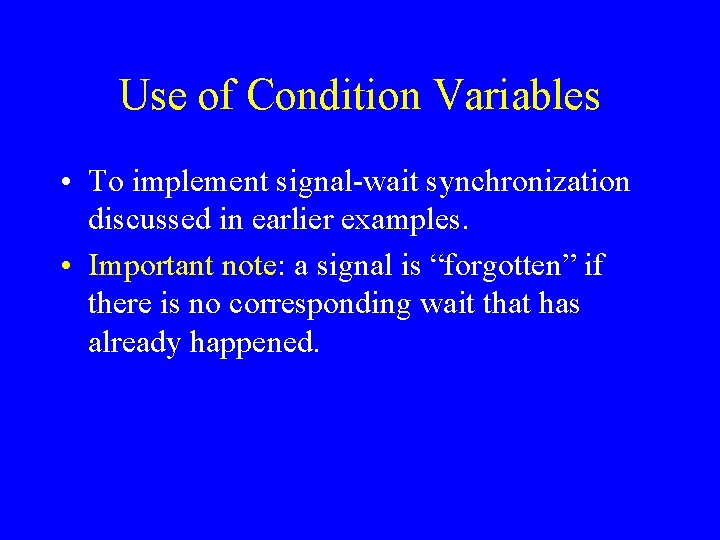

Molecular Dynamics (continued)

```
for some number of timesteps {
    for all molecules i
        for all nearby molecules j
            force[i] += f(loc[i], loc[j]);
    for all molecules i
        loc[i] = g(loc[i], force[i]);
}
```

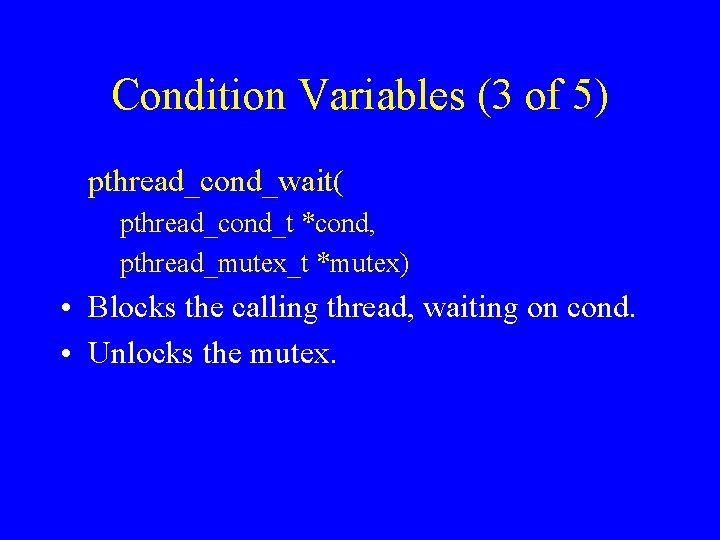

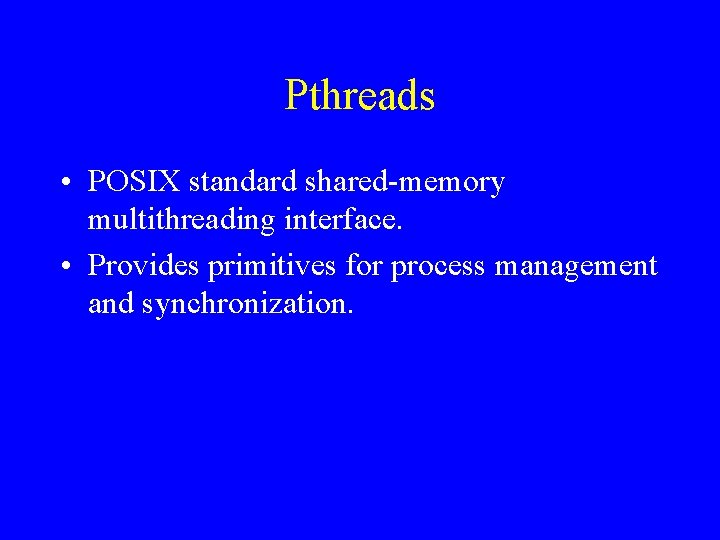

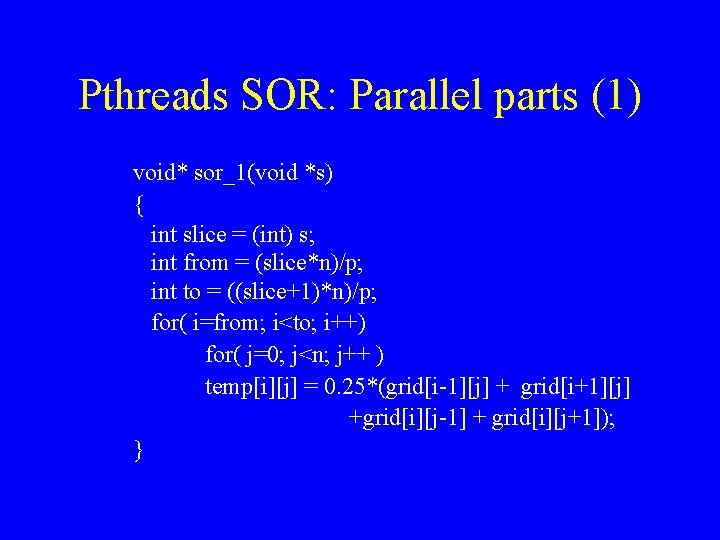


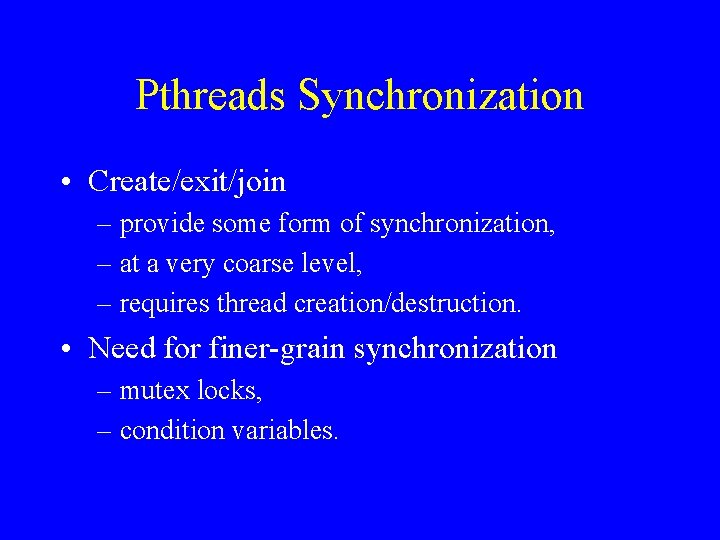

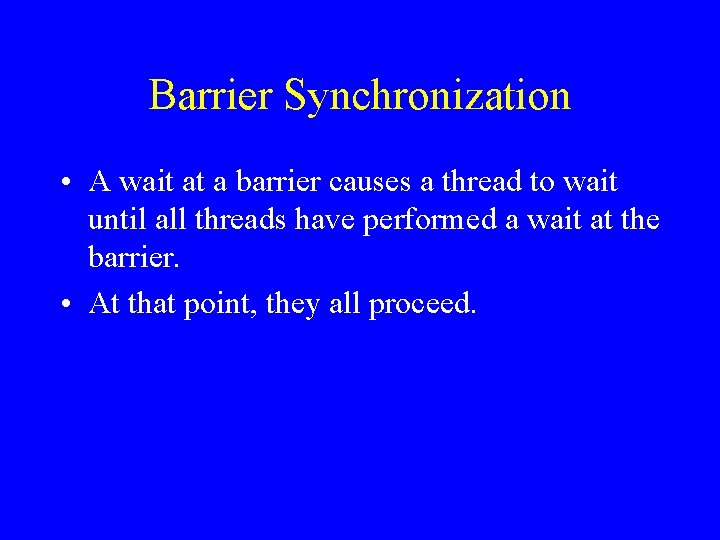

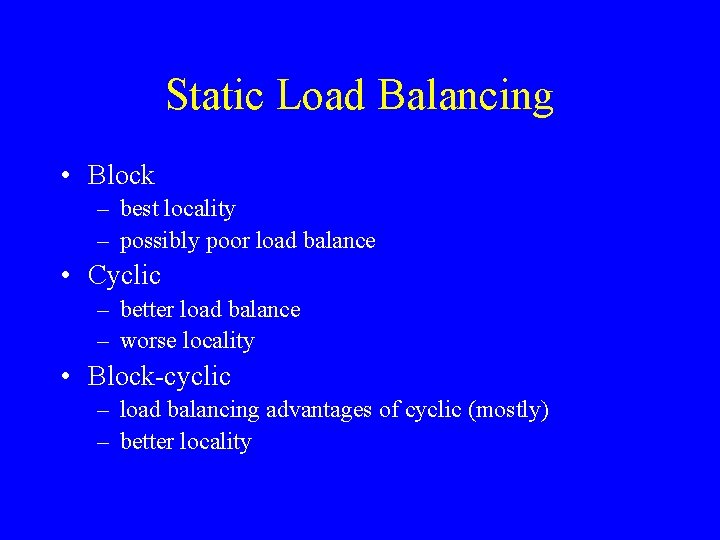

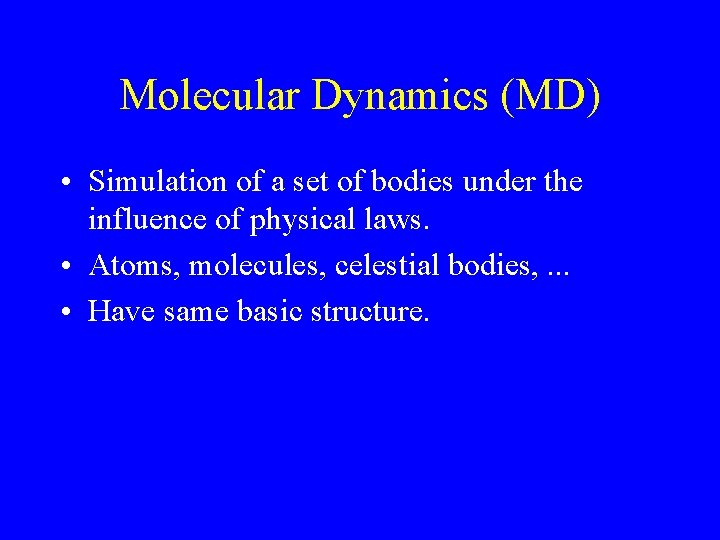

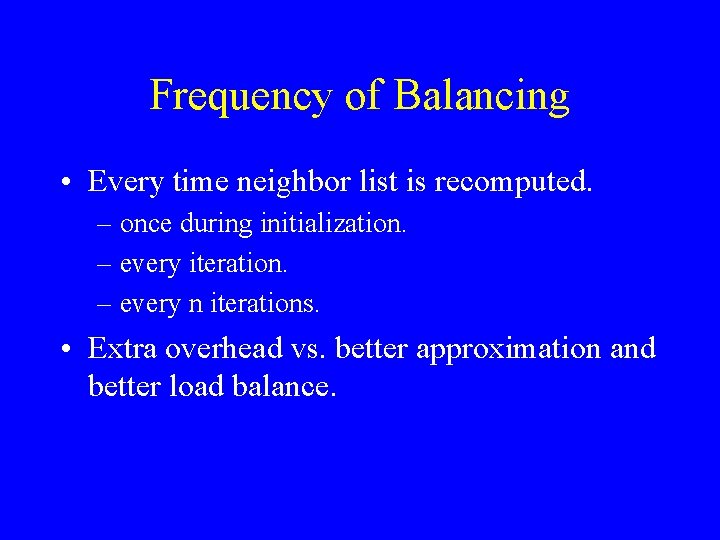

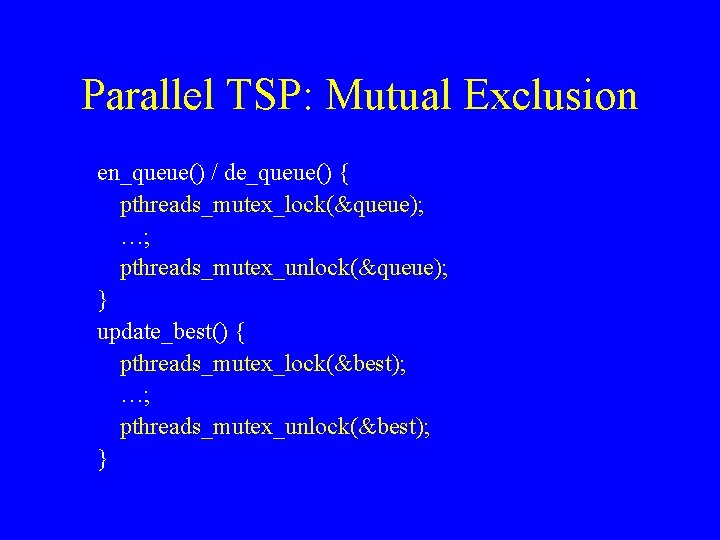

Molecular Dynamics (continued) • For each molecule i: – number of nearby molecules: count[i] – array of indices of nearby molecules: index[i][j] (0 <= j < count[i])

Molecular Dynamics (continued)

```
for some number of timesteps {
    for (i = 0; i < num_mol; i++)
        for (j = 0; j < count[i]; j++)
            force[i] += f(loc[i], loc[index[i][j]]);
    for (i = 0; i < num_mol; i++)
        loc[i] = g(loc[i], force[i]);
}
```

Molecular Dynamics (simple)

```
for some number of timesteps {
    parallel for (i = 0; i < num_mol; i++)
        for (j = 0; j < count[i]; j++)
            force[i] += f(loc[i], loc[index[i][j]]);
    parallel for (i = 0; i < num_mol; i++)
        loc[i] = g(loc[i], force[i]);
}
```

Molecular Dynamics (simple) • Simple to program. • Possibly poor load balance – block distribution of i iterations (molecules) – could lead to uneven neighbor distribution – cyclic does not help

Better Load Balance • Assign iterations such that each processor has ~ the same number of neighbors. • Array of “assign records” – size: number of processors – two elements: • beginning i value (molecule) • ending i value (molecule) • Recompute partition periodically
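A sketch of computing the assign records from count[]: a running prefix sum of neighbor counts is cut at multiples of total/p, so each processor receives a contiguous range of molecules with roughly the same number of neighbors. The struct and function names are hypothetical. For counts {4, 4, 1, 1, 1, 1, 1, 1, 1, 1} and p = 2, a block split gives [0,5) with 11 neighbors and [5,10) with 5, while this one gives [0,2) and [2,10) with 8 each.

```c
/* Hypothetical "assign record": processor s handles molecules [begin, end). */
struct assign_rec {
    int begin;   /* first molecule (inclusive) */
    int end;     /* last molecule (exclusive) */
};

/* Splits molecules 0..num_mol-1 into p contiguous ranges whose neighbor totals
   (sums of count[i]) are roughly equal, by walking a running prefix sum. */
void balance(const int *count, int num_mol, int p, struct assign_rec *rec)
{
    long total = 0;
    for (int i = 0; i < num_mol; i++)
        total += count[i];

    long prefix = 0;   /* neighbors in molecules 0..i-1 */
    int i = 0;
    for (int s = 0; s < p; s++) {
        long target = total * (s + 1) / p;   /* cumulative neighbors after range s */
        rec[s].begin = i;
        while (i < num_mol && prefix < target)
            prefix += count[i++];
        rec[s].end = i;
    }
    rec[p - 1].end = num_mol;   /* trailing molecules with no neighbors go to the last range */
}
```

Thread s then runs its force loop over i = rec[s].begin .. rec[s].end - 1; calling balance() again whenever the neighbor lists are recomputed gives the periodic repartitioning on the slide.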

Frequency of Balancing • Every time neighbor list is recomputed. – once during initialization. – every iteration. – every n iterations. • Extra overhead vs. better approximation and better load balance.

Summary • Parallel code optimization – Critical section accesses. – Granularity. – Load balance.