ECE 1747 Parallel Programming Course Project Dec 2006

- Slides: 25

Parallel Computation of the 2D Laminar Axisymmetric Coflow Nonpremixed Flames
Qingan Andy Zhang, Ph.D. Candidate
Department of Mechanical and Industrial Engineering, University of Toronto

Outline
- Introduction
- Motivation
- Objective
- Methodology
- Results
- Conclusion
- Future Improvement
- Work in Progress

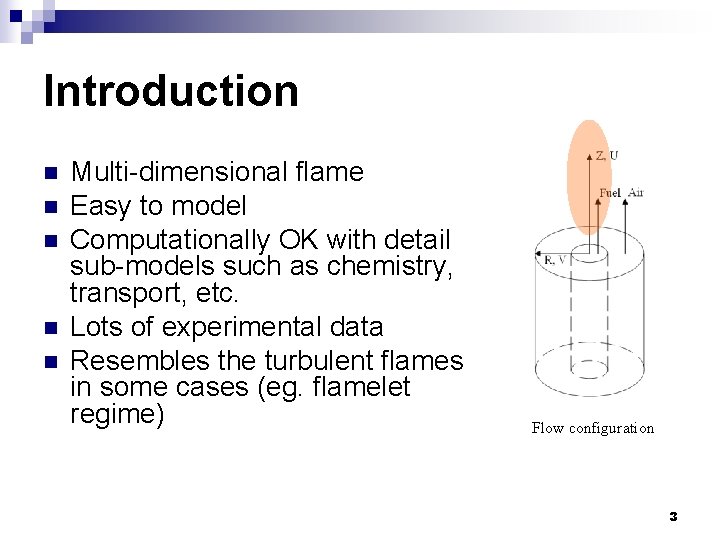

Introduction
- Multi-dimensional flame
- Easy to model
- Computationally affordable with detailed sub-models such as chemistry, transport, etc.
- Lots of experimental data
- Resembles turbulent flames in some cases (e.g. the flamelet regime)

Figure: Flow configuration

Motivation
The run time is expected to be long for:
- Complex chemical mechanism
  - Appel et al. (2000) mechanism (101 species, 543 reactions)
- Complex geometry
  - Large 2D coflow laminar flame (1,000 × 500 = 500,000 grid points)
  - 3D laminar flame (1,000 × 500 × 100 = 50,000,000 grid points)
- Complex physical problem
  - Soot formation
  - Multi-phase problem

Objective
To develop a parallel flame code based on the sequential flame code:
- Speedup
- Feasibility
- Accuracy
- Flexibility

Methodology -- Options
- Shared memory
  - OpenMP
  - Pthreads
- Distributed memory
  - MPI
- Distributed shared memory
  - Munin
  - TreadMarks

MPI is chosen because it is widely used for scientific computation, is easy to program, and the cluster is a distributed-memory system.

Methodology -- Preparation
- Linux OS
- Programming tools (Fortran, Make, IDE)
- Parallel computation concepts
- MPI commands
- Network (SSH, queuing system)

Methodology – Sequential code
- Sequential code analysis
  - Algorithm
  - Data dependency
  - I/O
  - CPU time breakdown

The sequential code is the backbone for parallelization!

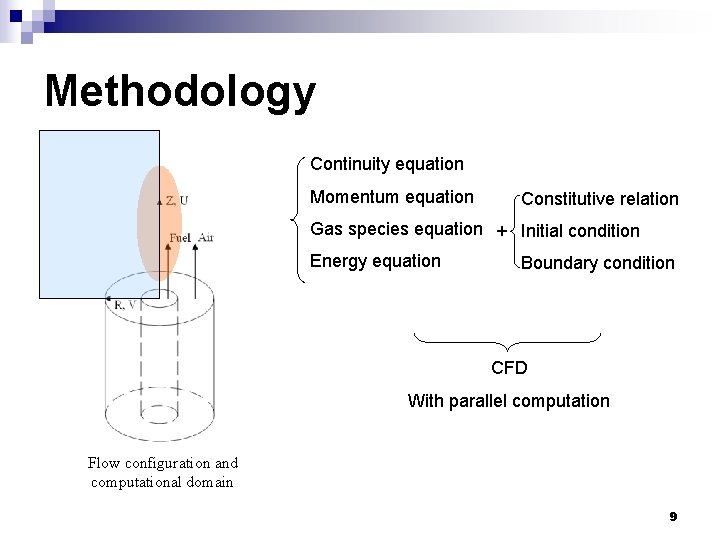

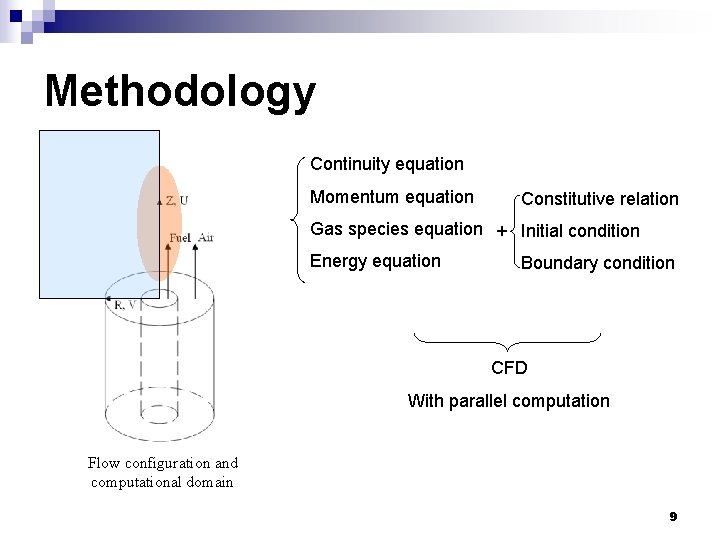

Methodology
- Equations: continuity equation, momentum equation, gas species equation, energy equation, constitutive relation
- Plus initial condition and boundary condition
- Solved by CFD with parallel computation

Figure: Flow configuration and computational domain

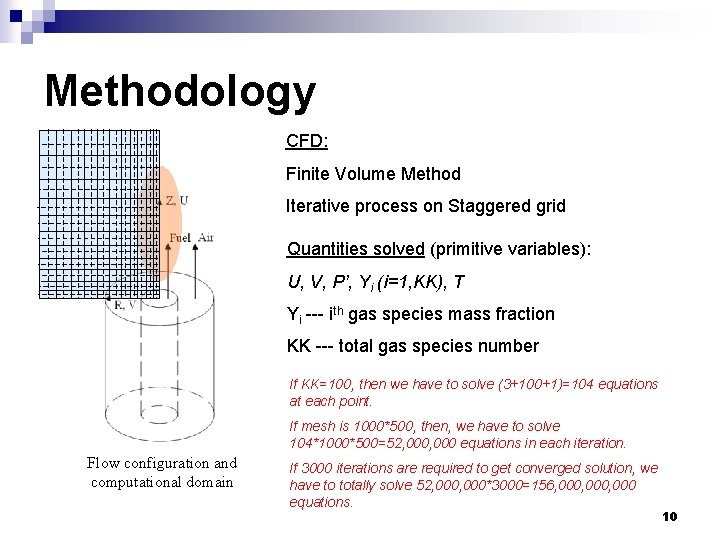

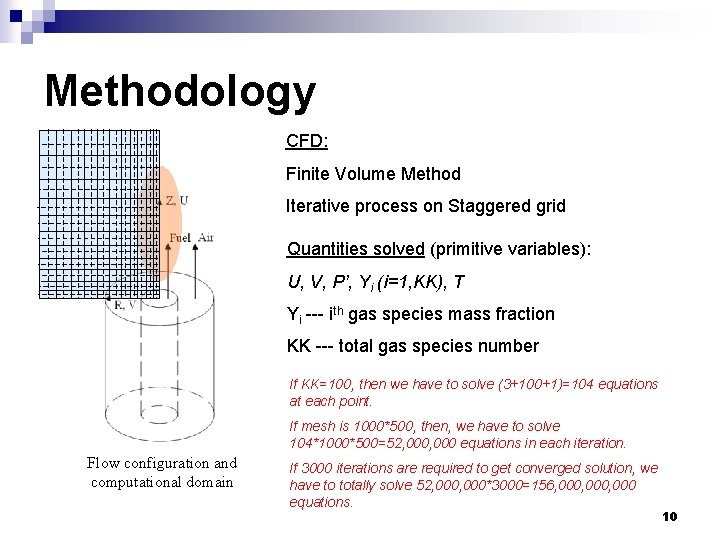

Methodology
CFD: Finite Volume Method, iterative process on a staggered grid

Quantities solved (primitive variables): U, V, P', Yi (i = 1, KK), T
- Yi: mass fraction of the ith gas species
- KK: total number of gas species

Problem size:
- If KK = 100, then (3 + 100 + 1) = 104 equations must be solved at each point.
- If the mesh is 1000 × 500, then 104 × 1000 × 500 = 52,000,000 equations must be solved in each iteration.
- If 3000 iterations are required to reach a converged solution, then 52,000,000 × 3000 = 156,000,000,000 equations must be solved in total.

Figure: Flow configuration and computational domain
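The problem-size estimate on this slide is plain multiplication, and a short Python sketch makes it easy to redo for other meshes or mechanisms. The function name and its parameters are illustrative only and are not taken from the flame code.

```python
def equations_per_iteration(n_species, nz, nr, n_flow=3, n_energy=1):
    """Count the equations solved in one iteration.

    n_flow covers U, V and P'; n_energy covers T; each grid point also
    carries one equation per gas species.
    """
    per_point = n_flow + n_species + n_energy
    return per_point * nz * nr


# Numbers from the slide: KK = 100 species, 1000 x 500 mesh, 3000 iterations.
per_iteration = equations_per_iteration(n_species=100, nz=1000, nr=500)
total = per_iteration * 3000
print(per_iteration)  # 52000000
print(total)          # 156000000000
```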

General Transport Equation
Unsteady term + Convection term = Diffusion term + Source term
- Unsteady: time-variant term
- Convection: caused by flow motion
- Diffusion: for species, molecular diffusion and thermal diffusion
- Source term: for species, chemical reaction
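For reference, one common textbook way to write this balance for a generic transported quantity is sketched below. The symbols are assumptions made here and do not come from the slides: $\phi$ is the transported quantity, $\rho$ the density, $\mathbf{u}$ the velocity vector, $\Gamma_\phi$ the diffusion coefficient, and $S_\phi$ the source term. The flame code's own equations (shown in the slide images) may group terms differently, for example thermal diffusion of species.

```latex
\underbrace{\frac{\partial (\rho \phi)}{\partial t}}_{\text{unsteady}}
+ \underbrace{\nabla \cdot (\rho \mathbf{u} \phi)}_{\text{convection}}
= \underbrace{\nabla \cdot (\Gamma_\phi \nabla \phi)}_{\text{diffusion}}
+ \underbrace{S_\phi}_{\text{source}}
```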

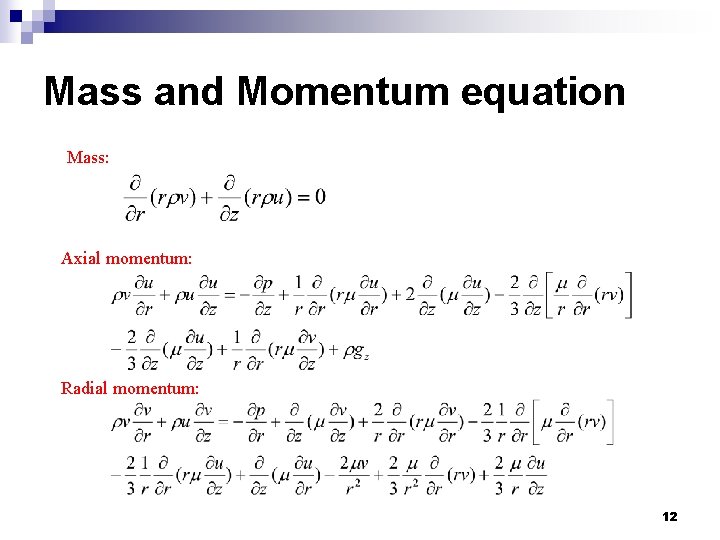

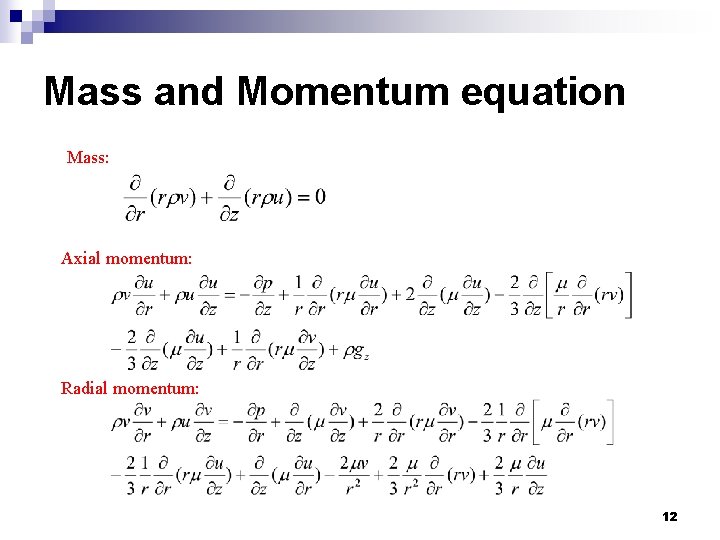

Mass and Momentum Equations
- Mass
- Axial momentum
- Radial momentum

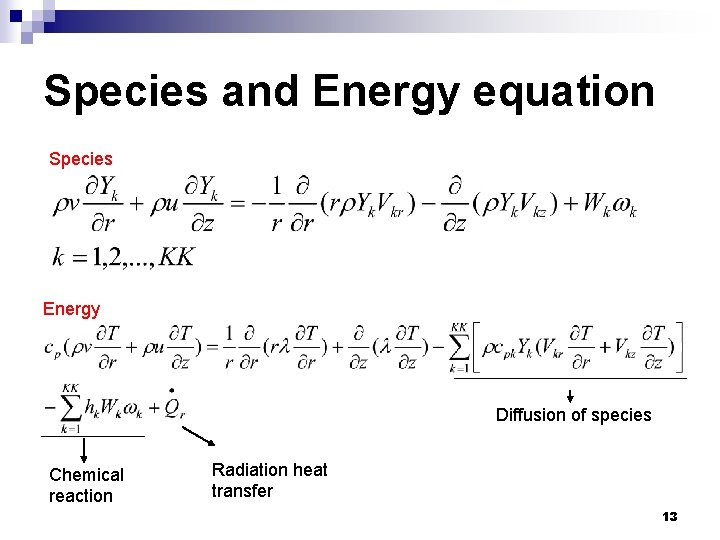

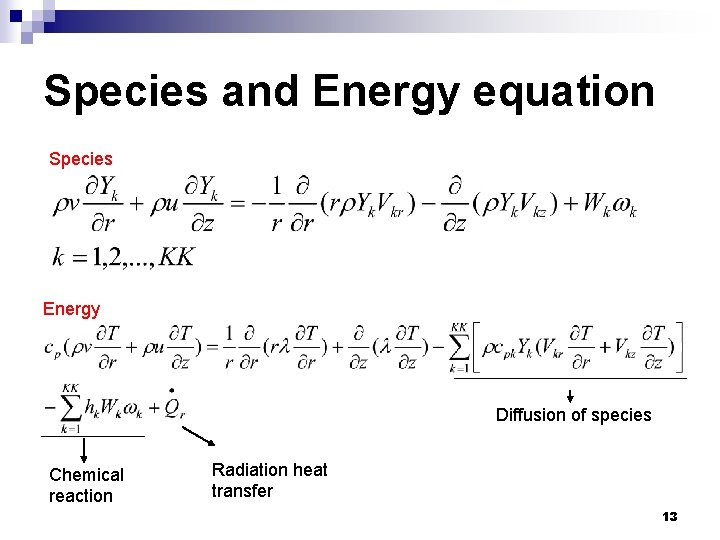

Species and Energy Equations
- Species
- Energy

Labeled terms: diffusion of species, chemical reaction, radiation heat transfer

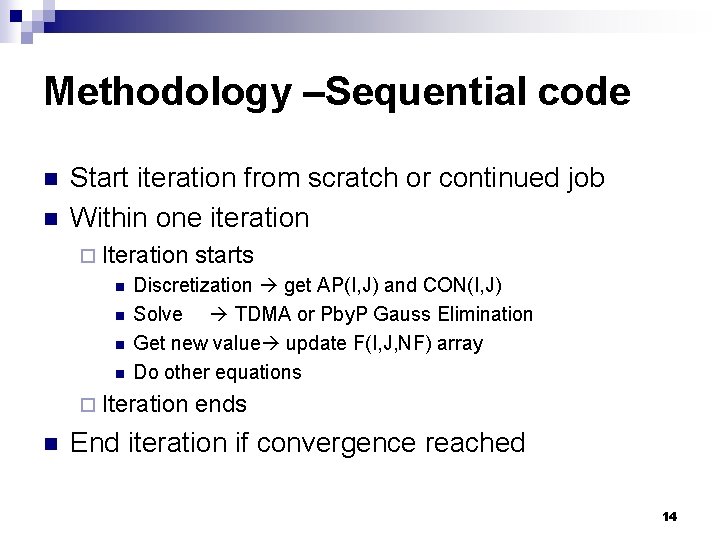

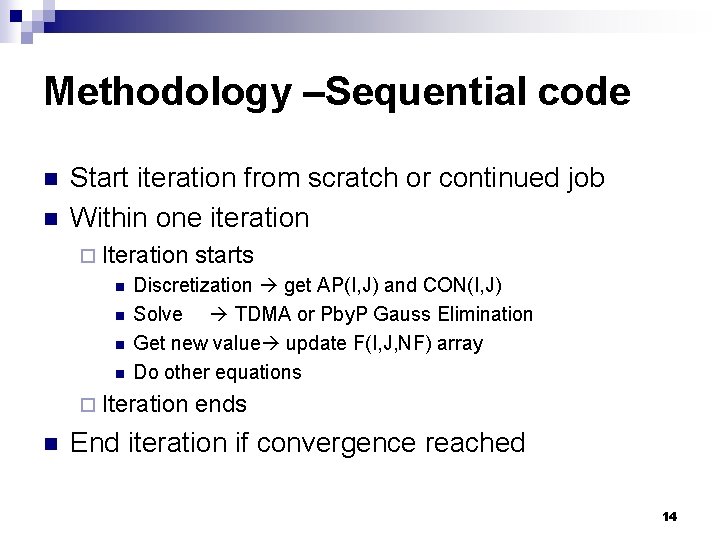

Methodology – Sequential code
- Start iteration from scratch or from a continued job
- Within one iteration:
  - Iteration starts
  - Discretization: get AP(I, J) and CON(I, J)
  - Solve: TDMA or PbyP Gauss elimination
  - Get new value: update F(I, J, NF) array
  - Do other equations
  - Iteration ends
- End iteration if convergence is reached
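The "Solve" step names TDMA, the tridiagonal matrix (Thomas) algorithm. The sketch below is a generic textbook version in Python, not the author's Fortran routine. The argument names `a`, `b`, `c`, `d` are assumptions made for illustration and do not correspond to AP(I, J) or CON(I, J).

```python
def tdma(a, b, c, d):
    """Solve a tridiagonal system with the Thomas algorithm.

    Row i of the system reads a[i]*x[i-1] + b[i]*x[i] + c[i]*x[i+1] = d[i].
    a[0] and c[-1] are ignored. No pivoting is done, so the matrix should be
    diagonally dominant, as is typical of finite-volume discretizations.
    """
    n = len(d)
    cp = [0.0] * n  # modified super-diagonal
    dp = [0.0] * n  # modified right-hand side

    # Forward elimination
    cp[0] = c[0] / b[0]
    dp[0] = d[0] / b[0]
    for i in range(1, n):
        denom = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / denom if i < n - 1 else 0.0
        dp[i] = (d[i] - a[i] * dp[i - 1]) / denom

    # Back substitution
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


# Small check: the exact solution of this 3x3 system is x = [1, 1, 1].
solution = tdma(a=[0.0, -1.0, -1.0], b=[2.0, 2.0, 2.0],
                c=[-1.0, -1.0, 0.0], d=[1.0, 0.0, 1.0])
print(solution)
```

In a 2D code such a solver is usually applied line by line, sweeping across the grid, with the neighbouring lines treated as known from the previous sweep.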

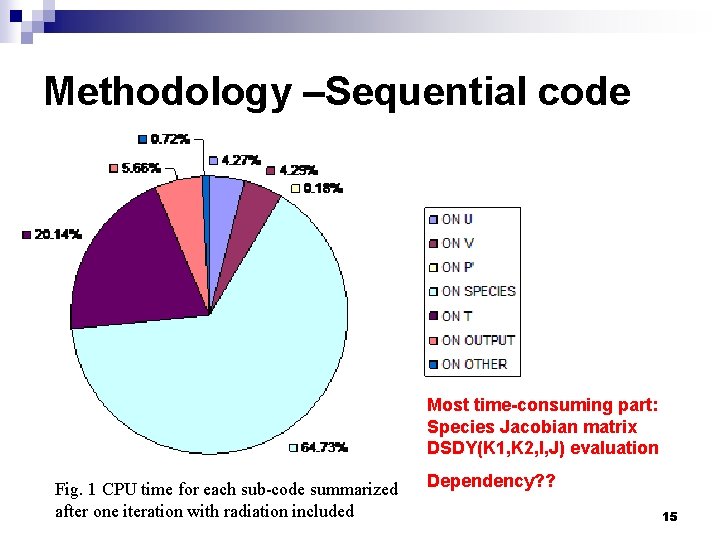

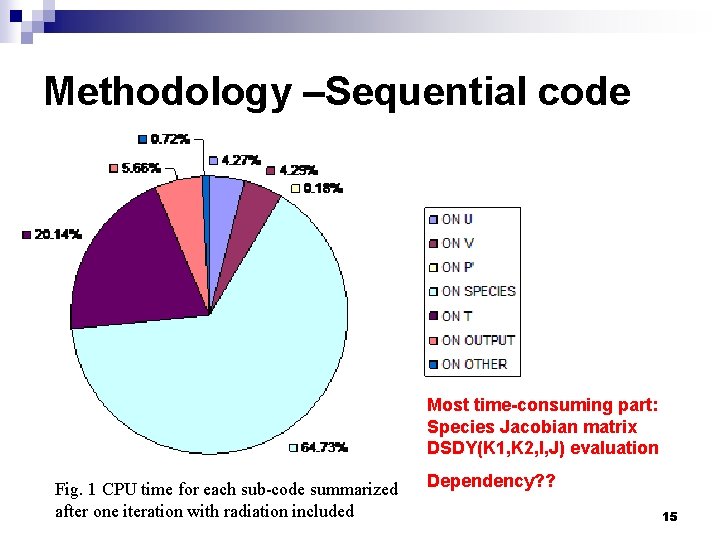

Methodology – Sequential code
Most time-consuming part: evaluation of the species Jacobian matrix DSDY(K1, K2, I, J)

Fig. 1 CPU time for each sub-code, summarized after one iteration with radiation included

Dependency??

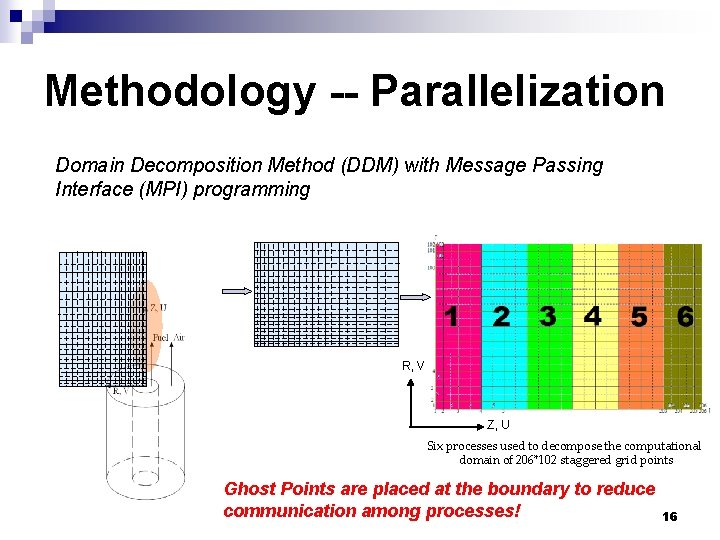

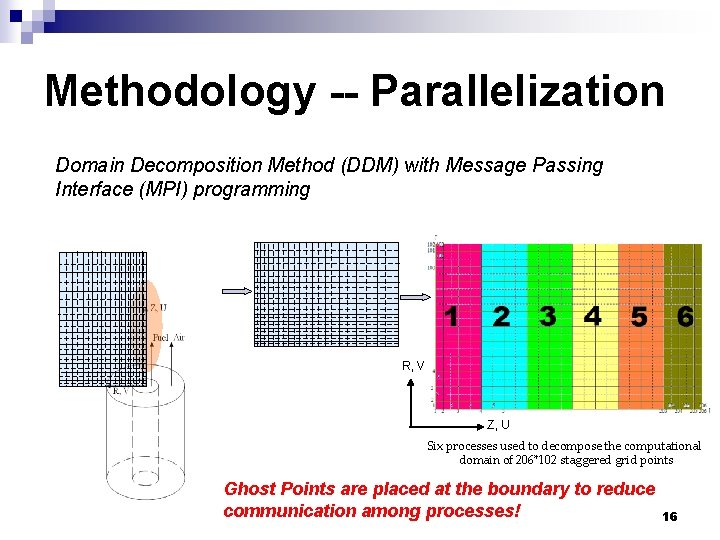

Methodology -- Parallelization
Domain Decomposition Method (DDM) with Message Passing Interface (MPI) programming

Figure: Six processes used to decompose the computational domain of 206 × 102 staggered grid points (axis labels: R, V and Z, U)

Ghost points are placed at the boundary to reduce communication among processes!
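The index bookkeeping behind such a decomposition can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's scheme: it assumes a one-dimensional strip decomposition along the 206-point direction and a single layer of ghost points. The slides specify neither the direction of the split nor the ghost width, and the function name is hypothetical.

```python
def decompose(n_points, n_procs, rank, n_ghost=1):
    """Return (start, end, g_start, g_end) for one process.

    [start, end) is the range of points owned by `rank`;
    [g_start, g_end) additionally includes ghost points copied from the
    neighbouring processes (none beyond the physical domain boundary).
    """
    base, extra = divmod(n_points, n_procs)
    # The first `extra` ranks get one additional point each.
    start = rank * base + min(rank, extra)
    end = start + base + (1 if rank < extra else 0)
    g_start = max(start - n_ghost, 0)
    g_end = min(end + n_ghost, n_points)
    return start, end, g_start, g_end


# 206 points split across six processes, as on the slide.
for rank in range(6):
    print(rank, decompose(206, 6, rank))
```

Each process then updates only the points it owns and refreshes its ghost points from its neighbours once per sweep, which is where the MPI send and receive calls would go.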

Cluster Information
- Cluster location: icpet.nrc.ca in Ottawa
- 40 nodes connected by Ethernet, named n1-1 through n8-5 (n1 to n8, each with suffixes -1 to -5)
- AMD Opteron 250 (2.4 GHz) with 5 GB memory
- Redhat Linux Enterprise Edition 4.0
- Batch-queuing system: Sun Grid Engine (SGE)
- Portland Group compilers (v6.2) + MPICH2

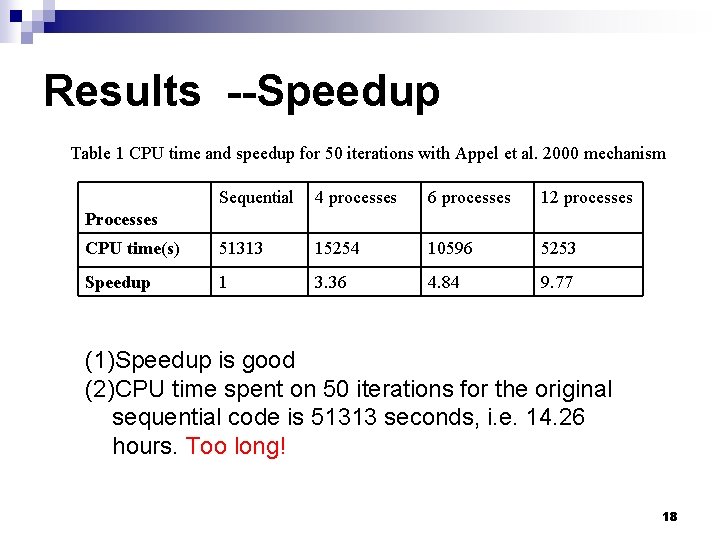

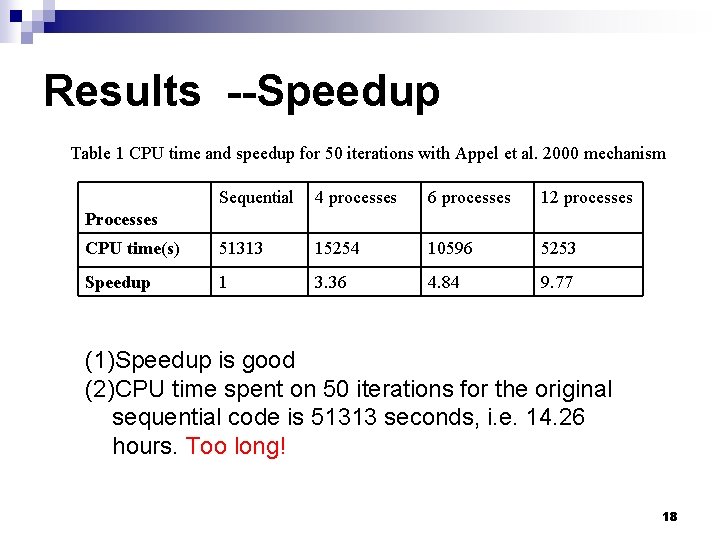

Results -- Speedup
Table 1 CPU time and speedup for 50 iterations with the Appel et al. (2000) mechanism

| Processes | Sequential | 4 processes | 6 processes | 12 processes |
|---|---|---|---|---|
| CPU time (s) | 51313 | 15254 | 10596 | 5253 |
| Speedup | 1 | 3.36 | 4.84 | 9.77 |

(1) Speedup is good.
(2) CPU time spent on 50 iterations by the original sequential code is 51313 seconds, i.e. about 14.25 hours. Too long!
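The speedup row of Table 1 is the sequential CPU time divided by the parallel CPU time. The Python sketch below recomputes it from the timings in the table and also derives the parallel efficiency (speedup divided by the number of processes), a quantity the slides do not report. The function and variable names are illustrative.

```python
# CPU times in seconds from Table 1, keyed by number of processes.
cpu_time = {1: 51313, 4: 15254, 6: 10596, 12: 5253}


def speedup_and_efficiency(times):
    """Map process count -> (speedup, efficiency) relative to the 1-process run."""
    t_seq = times[1]
    return {p: (t_seq / t, t_seq / t / p) for p, t in times.items()}


for procs, (speedup, efficiency) in speedup_and_efficiency(cpu_time).items():
    print(f"{procs:2d} processes: speedup {speedup:.2f}, efficiency {efficiency:.2f}")
```

For these timings the efficiency stays between roughly 0.80 and 0.85 from 4 to 12 processes, which supports the statement that the speedup is good.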

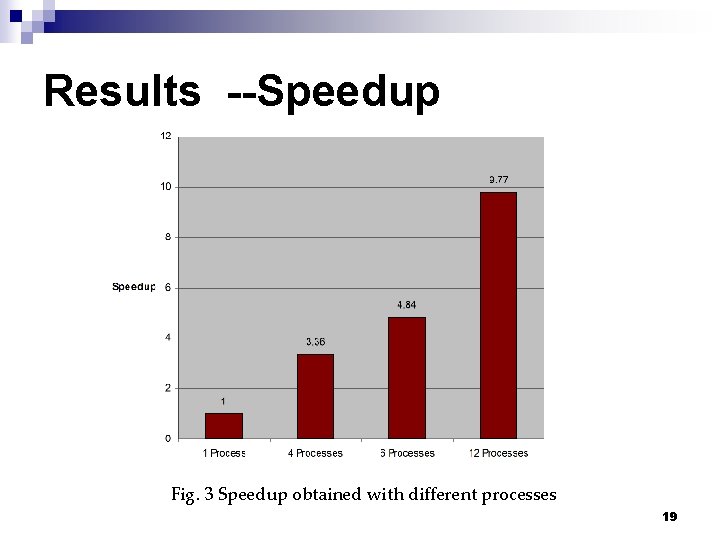

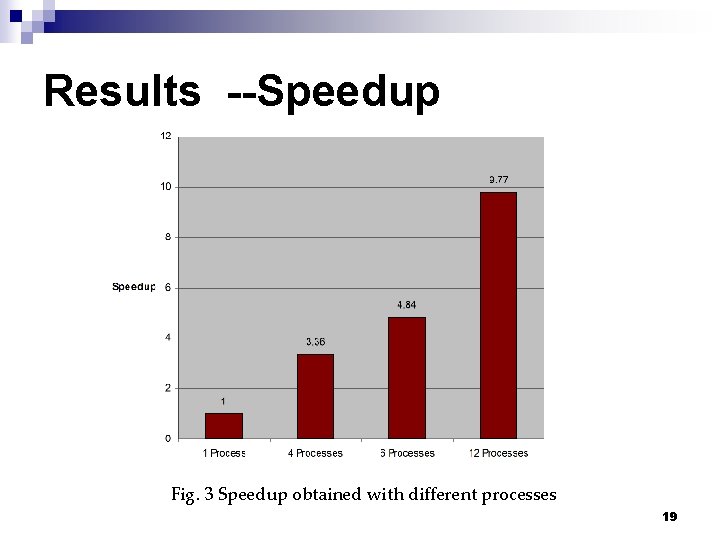

Results -- Speedup
Fig. 3 Speedup obtained with different numbers of processes

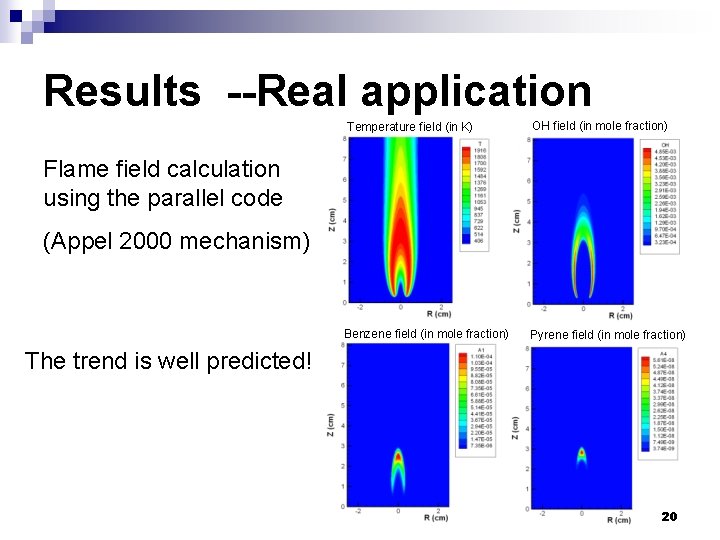

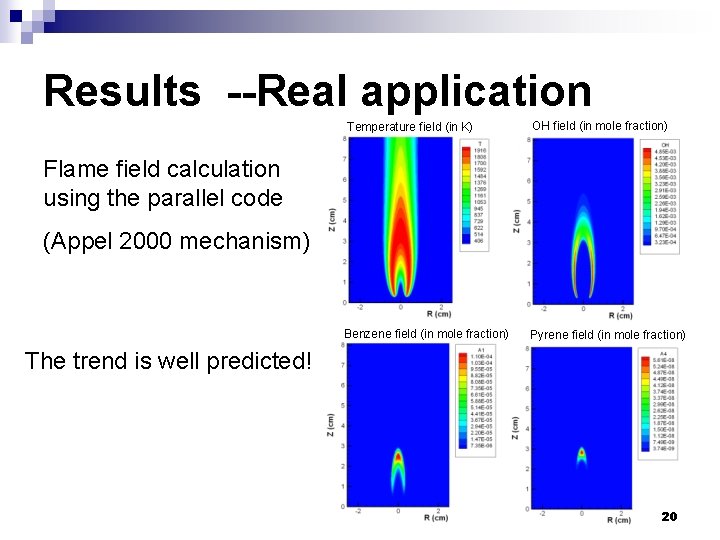

Results -- Real application
Flame field calculation using the parallel code (Appel et al. 2000 mechanism):
- Temperature field (in K)
- OH field (in mole fraction)
- Benzene field (in mole fraction)
- Pyrene field (in mole fraction)

The trend is well predicted!

Conclusion
- The sequential flame code is parallelized with DDM
- Speedup is good
- The parallel code is applied to model a flame using a detailed mechanism
- Flexibility is good, i.e. the geometry and/or the number of processors can be easily changed

Future Improvement
- Optimized DDM
- Species line solver

Work in Progress
- Fixed sectional soot model
  - Adds 70 equations to the original system of equations

Experience
- Keep communication down
- Choose the parallelization method wisely
- Debugging is hard
- I/O

Thanks
Questions?