Early Milestones in IR Research, ChengXiang Zhai

- Slides: 49

Early Milestones in IR Research

ChengXiang Zhai
Department of Computer Science, Graduate School of Library & Information Science, Institute for Genomic Biology, Department of Statistics
University of Illinois, Urbana-Champaign

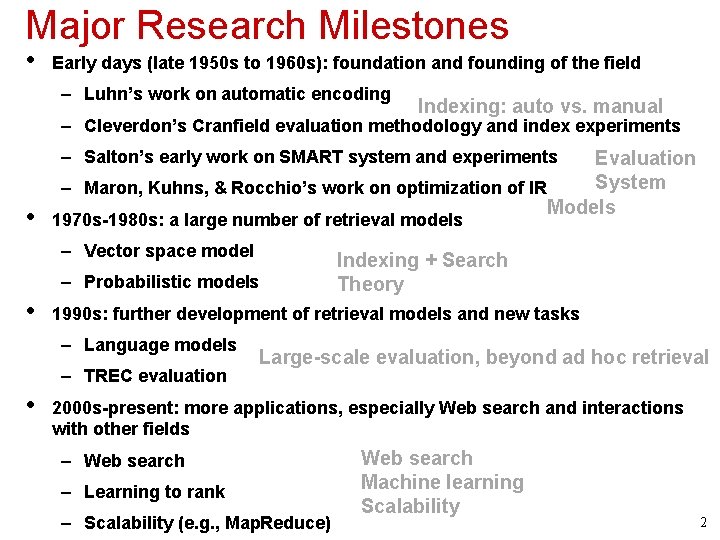

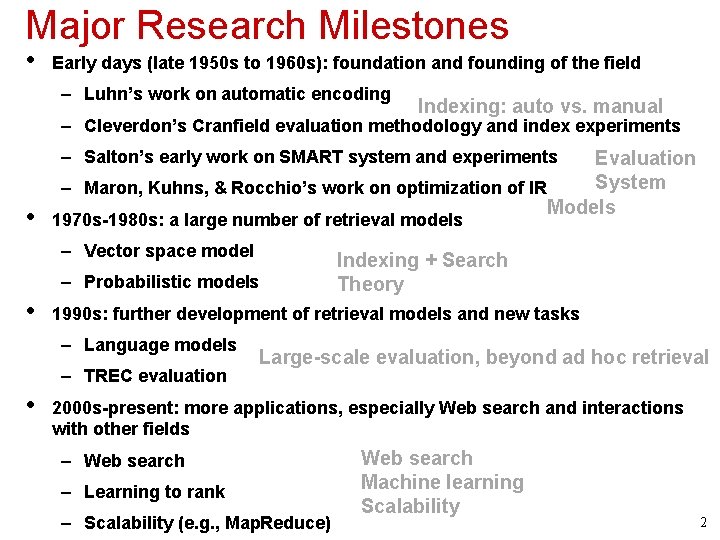

Major Research Milestones

- Early days (late 1950s to 1960s): foundation and founding of the field
  - Luhn’s work on automatic encoding
  - Cleverdon’s Cranfield evaluation methodology and index experiments
  - Salton’s early work on SMART system and experiments
  - Maron, Kuhns, & Rocchio’s work on optimization of IR
- 1970s-1980s: a large number of retrieval models
  - Vector space model
  - Probabilistic models
- 1990s: further development of retrieval models and new tasks
  - Language models
  - TREC evaluation
- 2000s-present: more applications, especially Web search and interactions with other fields
  - Web search
  - Learning to rank
  - Scalability (e.g., MapReduce)

Callouts on the slide: Indexing: auto vs. manual; Evaluation; System; Models; Indexing + Search; Theory; Large-scale evaluation, beyond ad hoc retrieval; Web search; Machine learning; Scalability

Outline

- Milestone 1: Automatic Indexing (Luhn)
- Milestone 2: Evaluation (Cleverdon)
- Milestone 3: SMART system (Salton)
- Milestone 4: Probabilistic model & feedback (Maron & Kuhns, Rocchio)

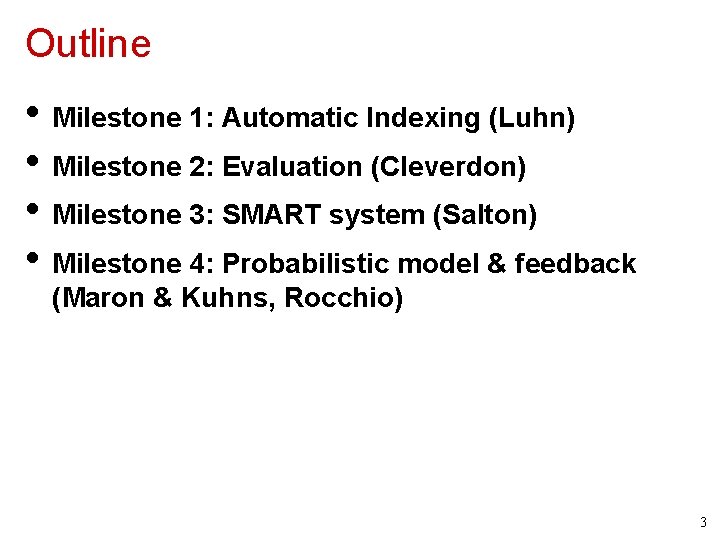

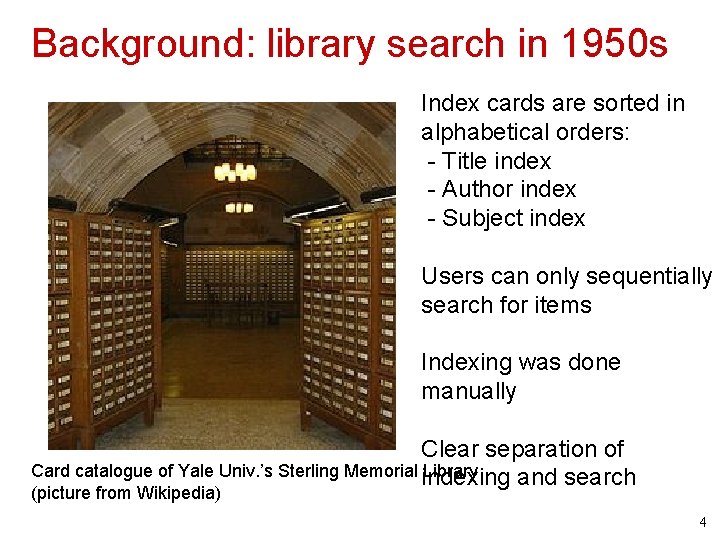

Background: library search in 1950s

Index cards are sorted in alphabetical order:
- Title index
- Author index
- Subject index

Users can only search for items sequentially. Indexing was done manually. There was a clear separation of indexing and search.

Picture: card catalogue of Yale Univ.’s Sterling Memorial Library (from Wikipedia)

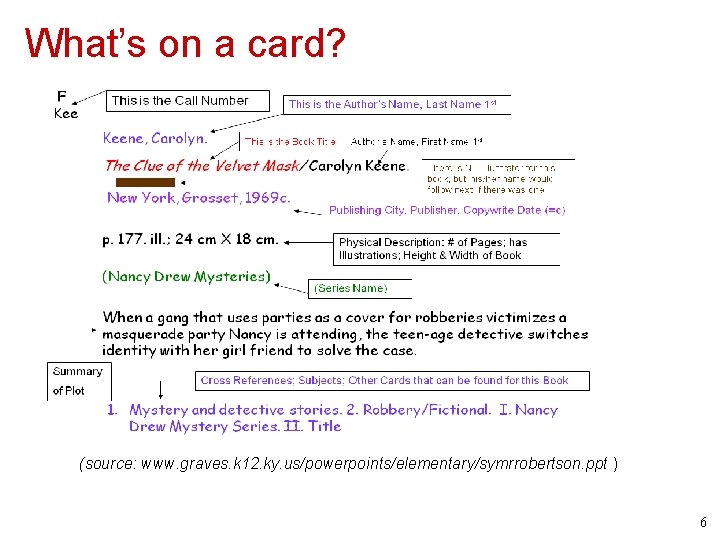

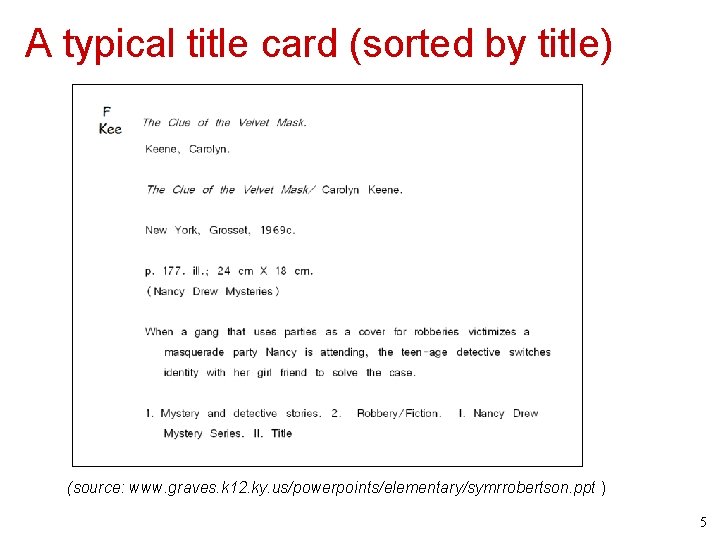

A typical title card (sorted by title) (source: www.graves.k12.ky.us/powerpoints/elementary/symrrobertson.ppt)

What’s on a card? (source: www.graves.k12.ky.us/powerpoints/elementary/symrrobertson.ppt)

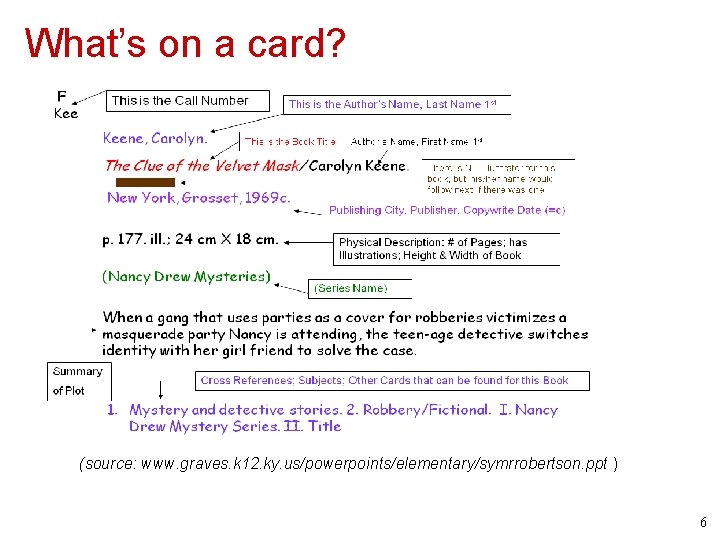

Milestone 1: Automatic Indexing

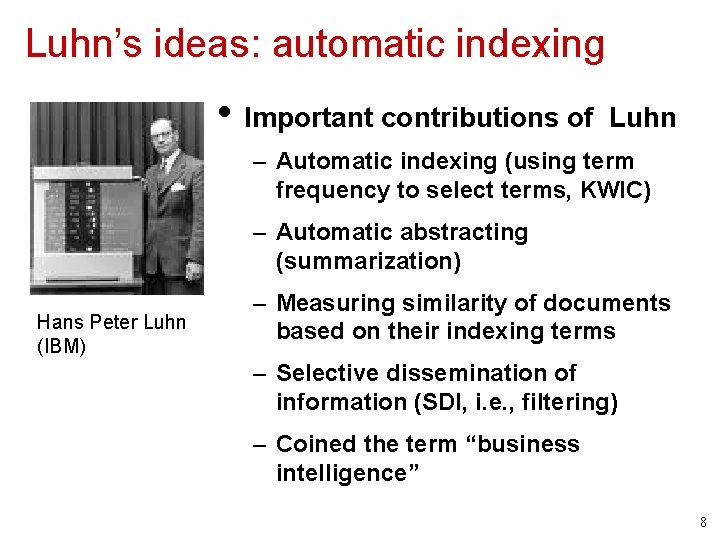

Luhn’s ideas: automatic indexing

Important contributions of Hans Peter Luhn (IBM):
- Automatic indexing (using term frequency to select terms, KWIC)
- Automatic abstracting (summarization)
- Measuring similarity of documents based on their indexing terms
- Selective dissemination of information (SDI, i.e., filtering)
- Coined the term “business intelligence”

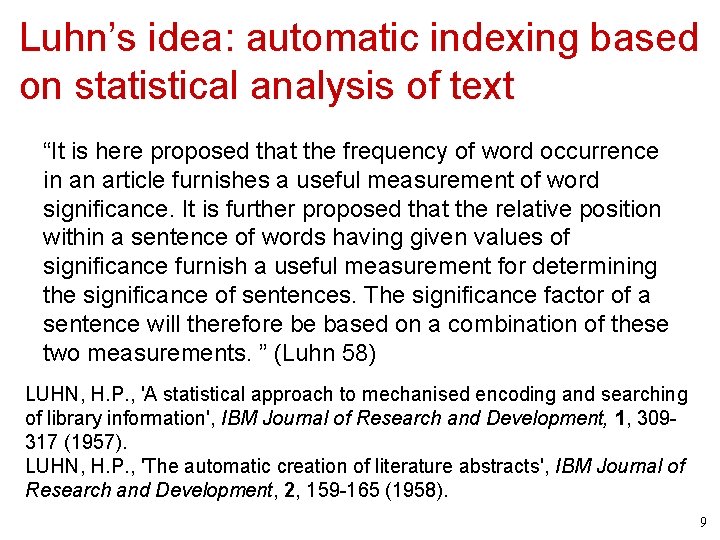

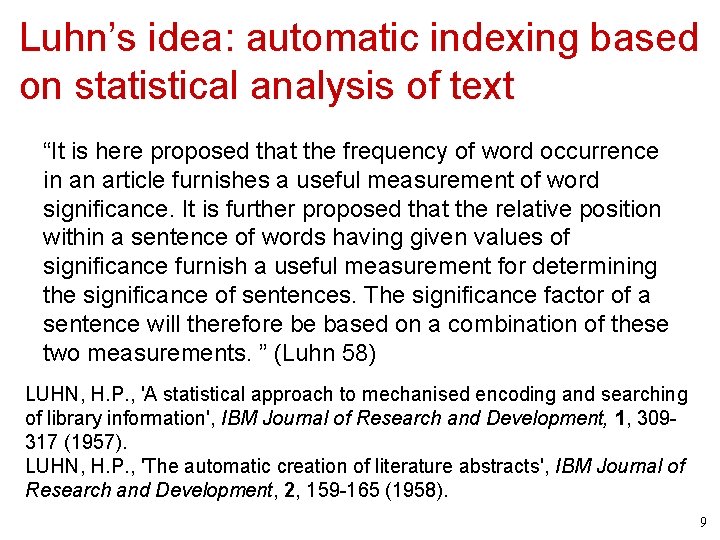

Luhn’s idea: automatic indexing based on statistical analysis of text

“It is here proposed that the frequency of word occurrence in an article furnishes a useful measurement of word significance. It is further proposed that the relative position within a sentence of words having given values of significance furnish a useful measurement for determining the significance of sentences. The significance factor of a sentence will therefore be based on a combination of these two measurements.” (Luhn 58)

- LUHN, H. P., 'A statistical approach to mechanised encoding and searching of library information', IBM Journal of Research and Development, 1, 309-317 (1957).
- LUHN, H. P., 'The automatic creation of literature abstracts', IBM Journal of Research and Development, 2, 159-165 (1958).

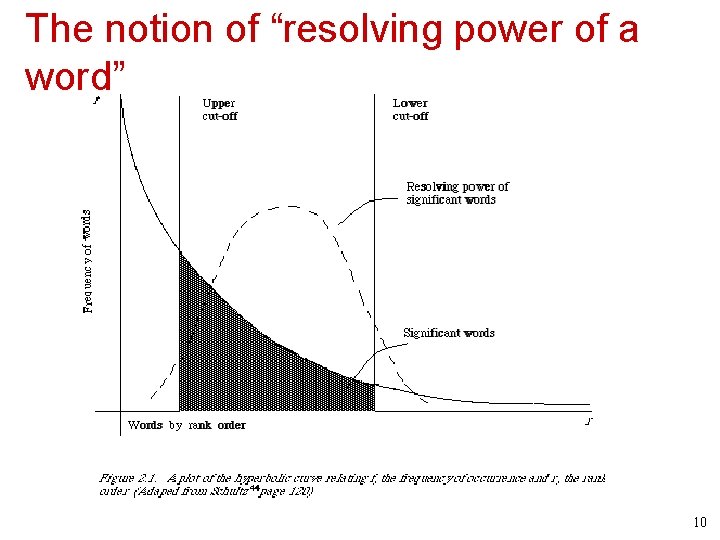

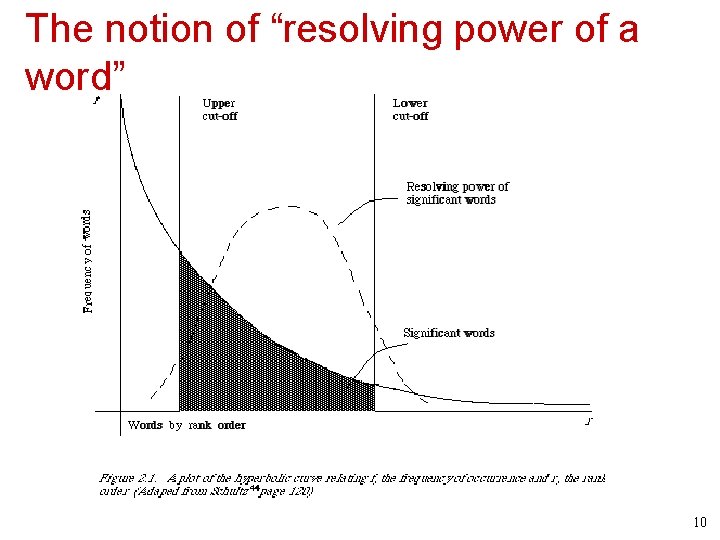

The notion of “resolving power of a word”

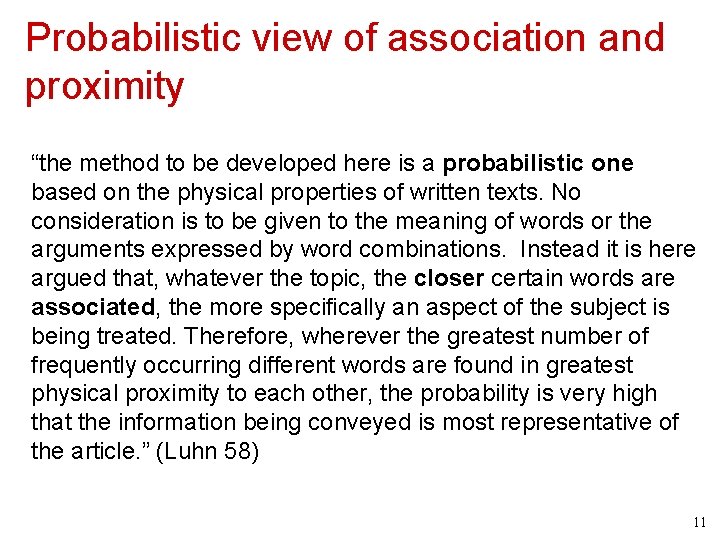

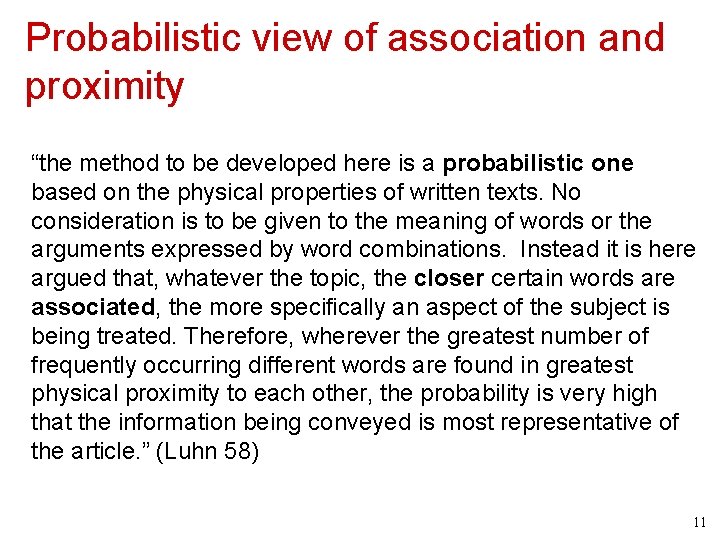

Probabilistic view of association and proximity

“the method to be developed here is a probabilistic one based on the physical properties of written texts. No consideration is to be given to the meaning of words or the arguments expressed by word combinations. Instead it is here argued that, whatever the topic, the closer certain words are associated, the more specifically an aspect of the subject is being treated. Therefore, wherever the greatest number of frequently occurring different words are found in greatest physical proximity to each other, the probability is very high that the information being conveyed is most representative of the article.” (Luhn 58)
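The combination of word frequency and word proximity described in the quotes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the stop list, the `min_count` threshold, and the `max_gap` of four intervening words are hypothetical choices, and the cluster score (significant words squared, divided by cluster length) follows the commonly cited reading of Luhn's 1958 paper rather than anything shown on the slides.

```python
import re
from collections import Counter

# Hypothetical stop list; a real system would use a much larger one.
STOP_WORDS = {"the", "a", "an", "of", "in", "is", "to", "and", "are", "on"}

def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

def significant_words(text, min_count=2):
    """Non-stop words occurring at least min_count times in the text."""
    counts = Counter(w for w in tokenize(text) if w not in STOP_WORDS)
    return {w for w, c in counts.items() if c >= min_count}

def sentence_score(sentence, significant, max_gap=4):
    """Best cluster score: (significant words in cluster)^2 / cluster length.

    A cluster is a run of significant words in which neighbours are
    separated by at most max_gap other words.
    """
    words = tokenize(sentence)
    positions = [i for i, w in enumerate(words) if w in significant]
    if not positions:
        return 0.0
    best = 0.0
    start = prev = positions[0]
    count = 1
    for pos in positions[1:]:
        if pos - prev - 1 <= max_gap:
            count += 1                      # extend the current cluster
        else:
            best = max(best, count * count / (prev - start + 1))
            start, count = pos, 1           # start a new cluster
        prev = pos
    return max(best, count * count / (prev - start + 1))

text = ("Retrieval systems index documents. "
        "Index terms help retrieval of documents. "
        "The weather is nice.")
sig = significant_words(text)
for s in text.split(". "):
    print(round(sentence_score(s, sig), 2), s)
```

Sentences with the highest scores would be selected for the abstract; sentences with no significant words score zero.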

![Automatic abstracting algorithm Luhn 58 The idea of queryspecific summarization In many instances condensations Automatic abstracting algorithm [Luhn 58] The idea of query-specific summarization “In many instances condensations](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-12.jpg)

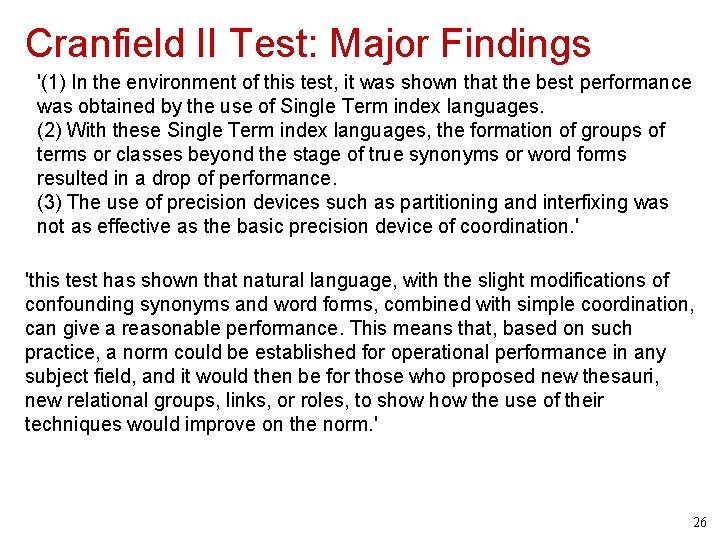

Automatic abstracting algorithm [Luhn 58]

The idea of query-specific summarization:

“In many instances condensations of documents are made emphasizing the relationship of the information in the document to a special interest or field of investigation. In such cases sentences could be weighted by assigning a premium value to a predetermined class of words.”

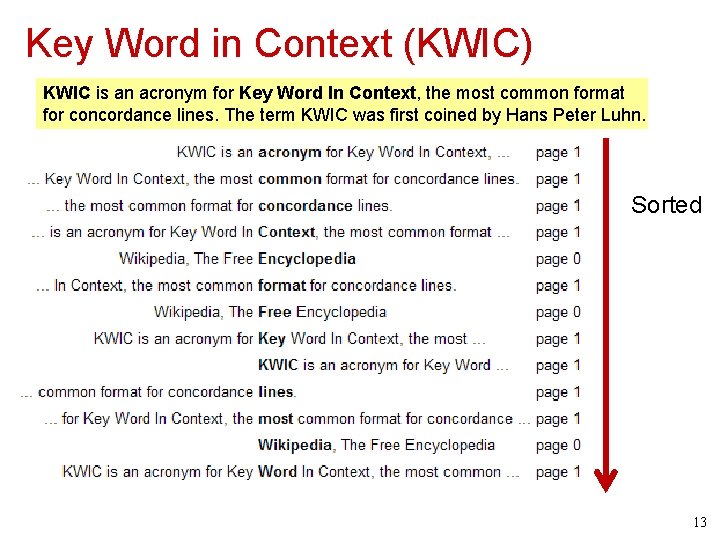

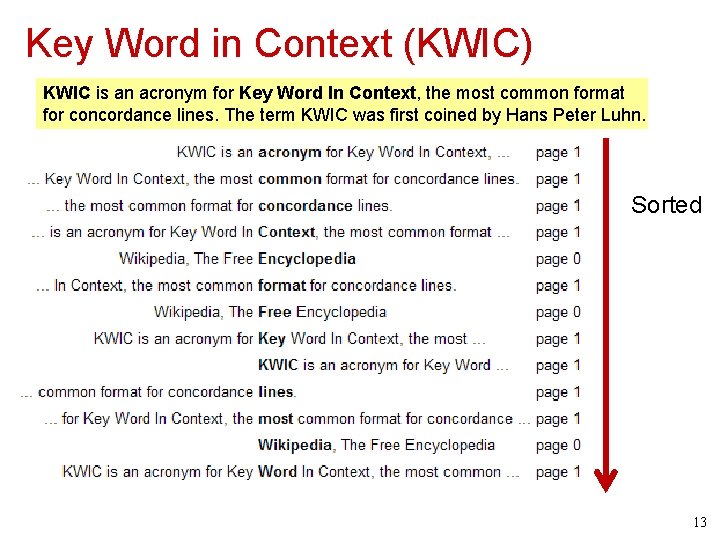

Key Word in Context (KWIC)

KWIC is an acronym for Key Word In Context, the most common format for concordance lines. The term KWIC was coined by Hans Peter Luhn. The example lines on the slide are sorted by keyword.
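A KWIC index can be sketched as follows. The function name `kwic`, the small stop list, and the fixed column `width` are assumptions made for illustration; the slides do not specify an implementation.

```python
# Hypothetical stop list: words that never serve as keywords.
STOP_WORDS = {"a", "an", "and", "in", "of", "on", "the", "to"}

def kwic(titles, width=30):
    """Return KWIC lines sorted by keyword, with each keyword at a fixed column."""
    entries = []
    for title in titles:
        words = title.split()
        for i, word in enumerate(words):
            key = word.lower().strip(".,;:")
            if not key or key in STOP_WORDS:
                continue
            left = " ".join(words[:i])    # context before the keyword
            right = " ".join(words[i:])   # keyword plus context after it
            entries.append((key, left, right))
    entries.sort()                        # alphabetical by keyword
    return [f"{left[-width:]:>{width}}  {right[:width]}"
            for _, left, right in entries]

lines = kwic(["The Automatic Creation of Literature Abstracts"])
for line in lines:
    print(line)
```

Each title appears once per non-stop word, so a reader scanning the aligned keyword column can find a title under any of its content words.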

![Probabilistic representation and similarity computation Luhn 61 An early idea about using unigram language Probabilistic representation and similarity computation [Luhn 61] An early idea about using unigram language](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-14.jpg)

Probabilistic representation and similarity computation [Luhn 61]

An early idea about using a unigram language model to represent text.

What do you think about the similarity function?

![Other early ideas related to indexing Joyce Needham 58 Relevancebased Other early ideas related to indexing • • • [Joyce & Needham 58]: Relevance-based](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-15.jpg)

Other early ideas related to indexing

- [Joyce & Needham 58]: relevance-based ranking, vector-space model, query expansion, connection between machine translation and IR
- [Doyle 62]: automatic discovery of term relations/clusters, “semantic road map” for both search and browsing (and text mining!)
- [Maron 61]: automatic text categorization
- [Borko 62]: categories can be automatically generated from text using factor analysis
- [Edmundson & Wyllys 61]: local-global relative frequency (kind of TF-IDF)
- Many more (e.g., citation index…)

![Measuring associations of words Doyle 62 16 Measuring associations of words [Doyle 62] 16](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-16.jpg)

Measuring associations of words [Doyle 62]

![Word Association Map for Browsing Doyle 62 Imagine this can be further combined with Word Association Map for Browsing [Doyle 62] Imagine this can be further combined with](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-17.jpg)

Word Association Map for Browsing [Doyle 62]

Imagine this can be further combined with querying.

Milestone 2: Cranfield Evaluation Methodology

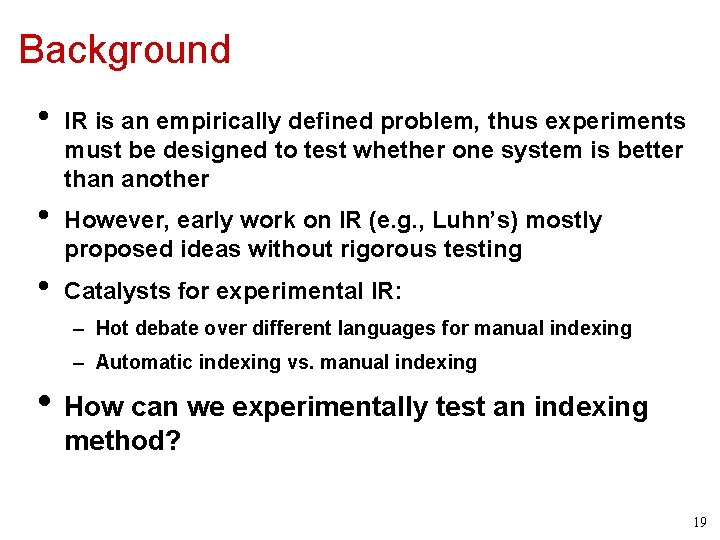

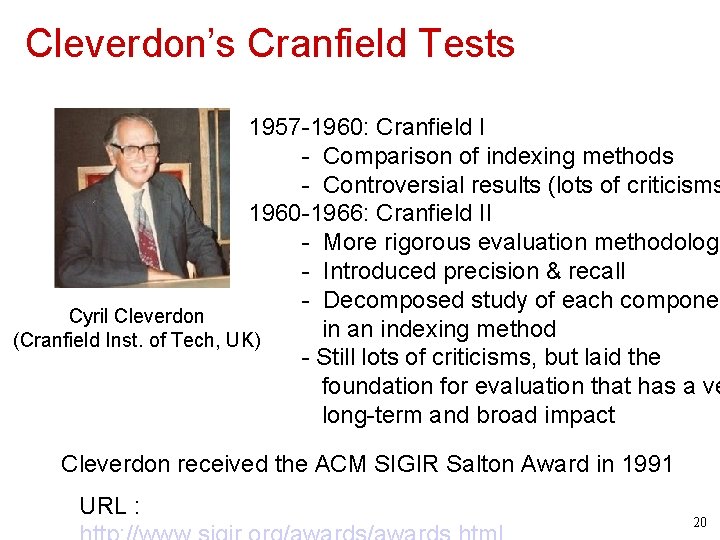

Background

- IR is an empirically defined problem, thus experiments must be designed to test whether one system is better than another
- However, early work on IR (e.g., Luhn’s) mostly proposed ideas without rigorous testing
- Catalysts for experimental IR:
  - Hot debate over different languages for manual indexing
  - Automatic indexing vs. manual indexing
- How can we experimentally test an indexing method?

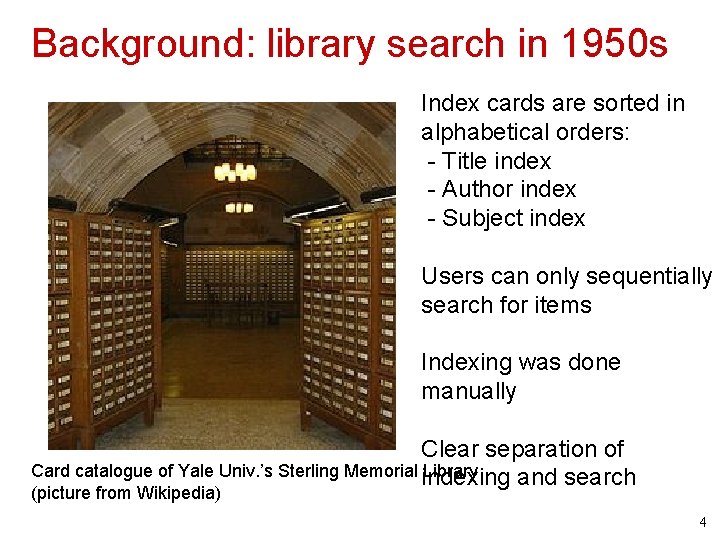

Cleverdon’s Cranfield Tests

Cyril Cleverdon (Cranfield Inst. of Tech, UK)

1957-1960: Cranfield I
- Comparison of indexing methods
- Controversial results (lots of criticisms)

1960-1966: Cranfield II
- More rigorous evaluation methodology
- Introduced precision & recall
- Decomposed study of each component in an indexing method
- Still lots of criticisms, but laid the foundation for evaluation that has a very long-term and broad impact

Cleverdon received the ACM SIGIR Salton Award in 1991.

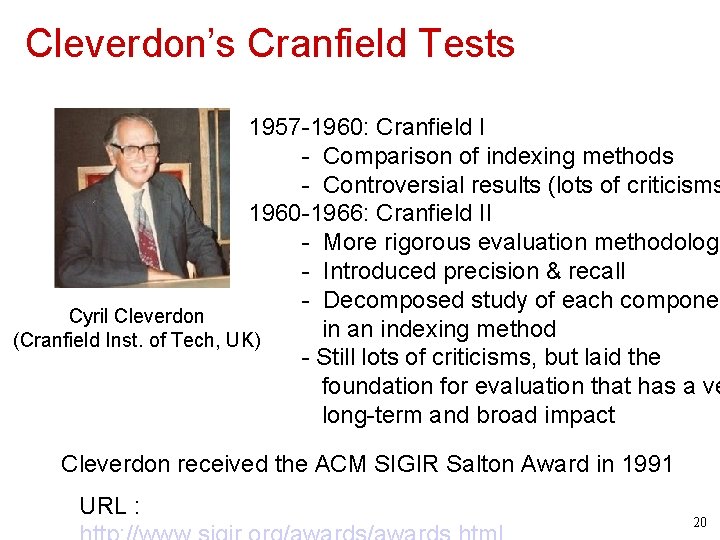

Cranfield II Test: Experiment Design

- Decomposed study of contributions of different components of an indexing language
- Rigorous control of evaluation
  - Having complete judgments is more important than having a large set of documents
  - Document collection: 1400 documents (cited papers by 200 authors, no original papers by these authors)
  - Queries: 279 questions provided by authors of original papers
  - Relevance judgments:
    - Multiple levels: 1-5
    - Initially done by 6 students in 3 months; final judgments by the originators
  - Measures: precision, recall, fallout, precision-recall curve
  - Ranking method: coordination level (# matched terms)
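Coordination-level ranking, the last item in the design above, is simple enough to sketch directly. The dictionary-based `index` and the document ids are hypothetical; the only thing taken from the slide is that a document's score is the number of query terms it matches.

```python
def coordination_level(query_terms, doc_terms):
    """Number of distinct query terms that also index the document."""
    return len(set(query_terms) & set(doc_terms))

def rank_by_coordination(query_terms, index):
    """Rank document ids by descending coordination level.

    Documents matching no query term are dropped; ties are broken by id.
    """
    scored = [(coordination_level(query_terms, terms), doc_id)
              for doc_id, terms in index.items()]
    scored = [(s, d) for s, d in scored if s > 0]
    return [d for s, d in sorted(scored, key=lambda x: (-x[0], x[1]))]

# Toy index: document id -> index terms (illustrative data only).
index = {
    "d1": ["wing", "flow", "pressure"],
    "d2": ["flow"],
    "d3": ["heat"],
}
ranking = rank_by_coordination(["wing", "flow"], index)
print(ranking)
```

Cutting the ranking at different coordination levels gives retrieved sets of different sizes, which is what makes a precision-recall trade-off measurable.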

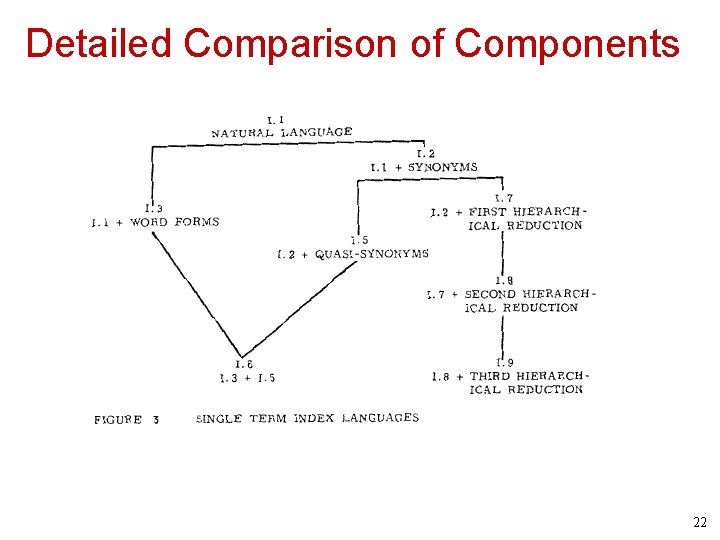

Detailed Comparison of Components

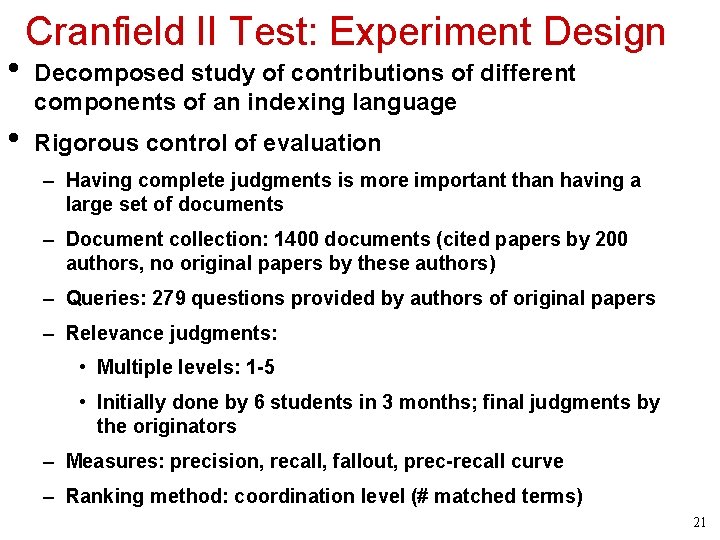

![Measures Precision Recall and Fallout Cleverdon 67 23 Measures: Precision, Recall, and Fallout [Cleverdon 67] 23](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-23.jpg)

Measures: Precision, Recall, and Fallout [Cleverdon 67]
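The slide's figure is not reproduced in the text, so the sketch below assumes the standard set-based definitions: precision is the fraction of retrieved documents that are relevant, recall is the fraction of relevant documents that are retrieved, and fallout is the fraction of non-relevant documents that are retrieved. The function name and arguments are illustrative.

```python
def precision_recall_fallout(retrieved, relevant, collection_size):
    """Compute (precision, recall, fallout) for one query."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)               # relevant and retrieved
    non_relevant = collection_size - len(relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    fallout = (len(retrieved) - hits) / non_relevant if non_relevant else 0.0
    return precision, recall, fallout

# Toy example: 10 documents, 3 of them relevant, 4 retrieved.
p, r, f = precision_recall_fallout([1, 2, 3, 4], [2, 4, 5], 10)
print(p, r, f)
```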

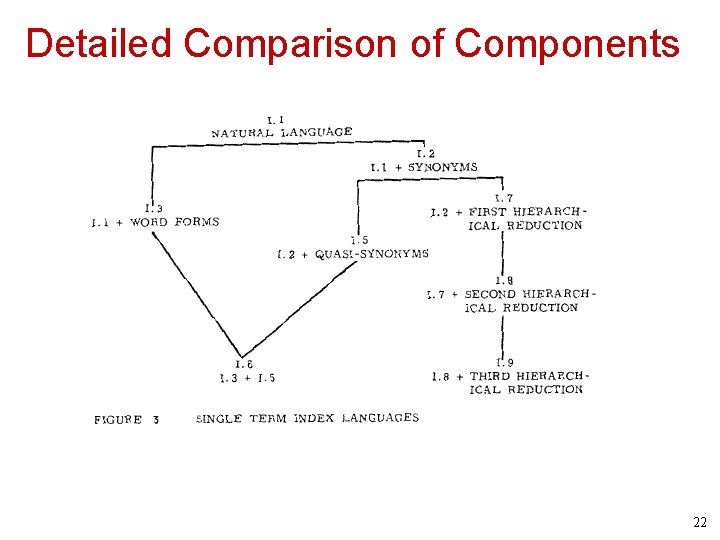

![PrecisionRecall Curve Cleverdon 67 24 Precision-Recall Curve [Cleverdon 67] 24](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-24.jpg)

Precision-Recall Curve [Cleverdon 67]
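One way to obtain the points of a precision-recall curve is to walk down a ranked list and record recall and precision at every cutoff. This is a sketch under the assumption of a rank-based cutoff; the Cranfield experiments cut by coordination level instead, and the function name is hypothetical.

```python
def precision_recall_points(ranking, relevant):
    """Return a list of (recall, precision) pairs, one per rank cutoff."""
    relevant = set(relevant)
    if not relevant:
        return []
    points = []
    hits = 0
    for k, doc_id in enumerate(ranking, start=1):
        if doc_id in relevant:
            hits += 1
        points.append((hits / len(relevant), hits / k))
    return points

points = precision_recall_points(["a", "b", "c", "d"], {"a", "c"})
for recall, precision in points:
    print(f"recall={recall:.2f} precision={precision:.2f}")
```

Plotting precision against recall for these points shows the usual trade-off: retrieving more documents raises recall but tends to lower precision.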

![Cranfield II Test Results Cleverdon 67 For more information about Cranfield II test see Cranfield II Test: Results [Cleverdon 67] For more information about Cranfield II test, see](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-25.jpg)

Cranfield II Test: Results [Cleverdon 67]

For more information about Cranfield II test, see Cleverdon, C. W., 1967, The Cranfield tests on index language devices. Aslib Proceedings, 19, 173-192.

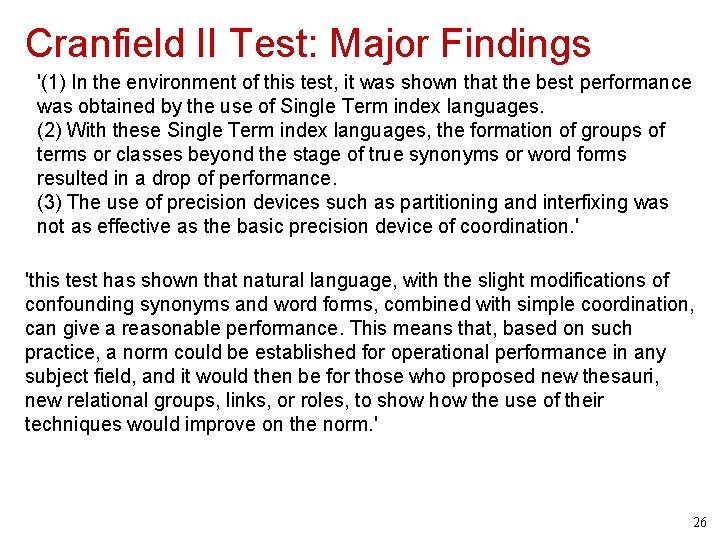

Cranfield II Test: Major Findings

'(1) In the environment of this test, it was shown that the best performance was obtained by the use of Single Term index languages. (2) With these Single Term index languages, the formation of groups of terms or classes beyond the stage of true synonyms or word forms resulted in a drop of performance. (3) The use of precision devices such as partitioning and interfixing was not as effective as the basic precision device of coordination.'

'this test has shown that natural language, with the slight modifications of confounding synonyms and word forms, combined with simple coordination, can give a reasonable performance. This means that, based on such practice, a norm could be established for operational performance in any subject field, and it would then be for those who proposed new thesauri, new relational groups, links, or roles, to show the use of their techniques would improve on the norm.'

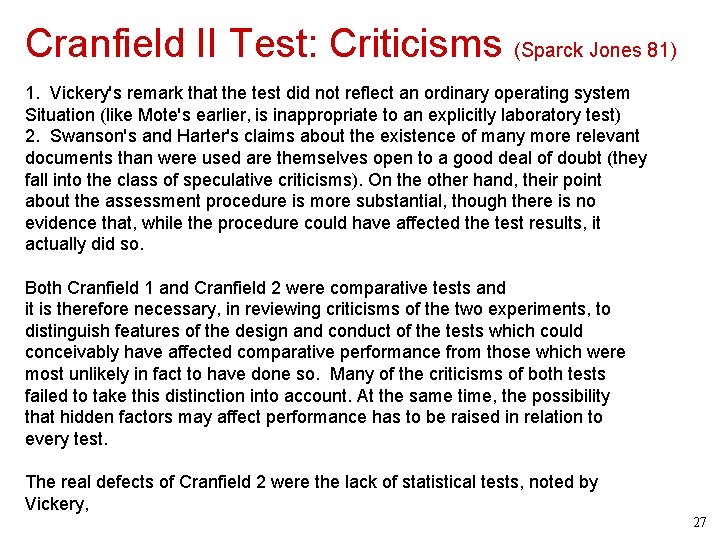

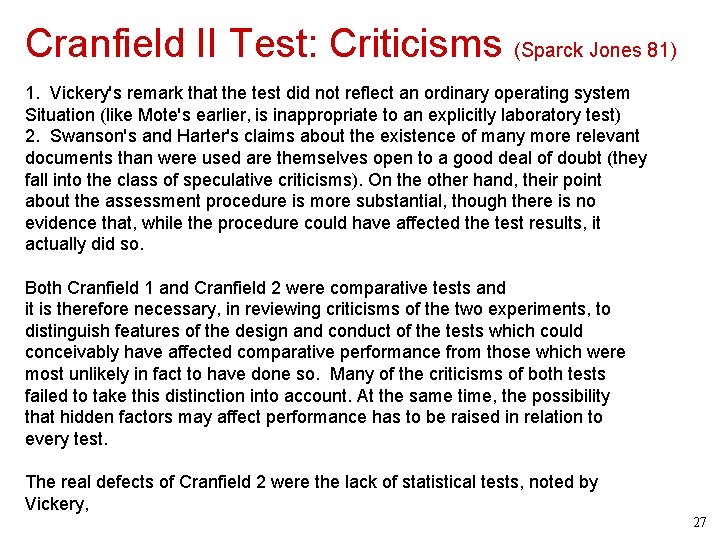

Cranfield II Test: Criticisms (Sparck Jones 81)

1. Vickery's remark that the test did not reflect an ordinary operating system situation (like Mote's earlier), is inappropriate to an explicitly laboratory test
2. Swanson's and Harter's claims about the existence of many more relevant documents than were used are themselves open to a good deal of doubt (they fall into the class of speculative criticisms). On the other hand, their point about the assessment procedure is more substantial, though there is no evidence that, while the procedure could have affected the test results, it actually did so.

Both Cranfield 1 and Cranfield 2 were comparative tests and it is therefore necessary, in reviewing criticisms of the two experiments, to distinguish features of the design and conduct of the tests which could conceivably have affected comparative performance from those which were most unlikely in fact to have done so. Many of the criticisms of both tests failed to take this distinction into account. At the same time, the possibility that hidden factors may affect performance has to be raised in relation to every test. The real defects of Cranfield 2 were the lack of statistical tests, noted by Vickery,

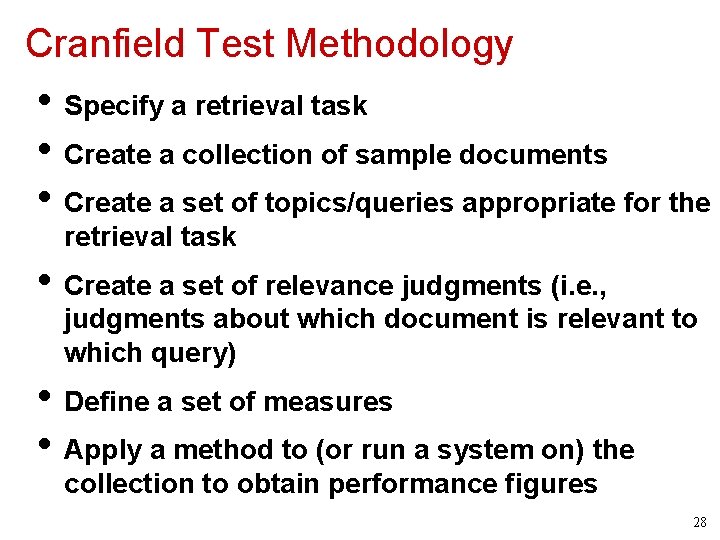

Cranfield Test Methodology

- Specify a retrieval task
- Create a collection of sample documents
- Create a set of topics/queries appropriate for the retrieval task
- Create a set of relevance judgments (i.e., judgments about which document is relevant to which query)
- Define a set of measures
- Apply a method to (or run a system on) the collection to obtain performance figures

Milestone 3: SMART IR System

Cranfield experiments were done manually. How about doing all the experiments with an automatic system?

SMART System

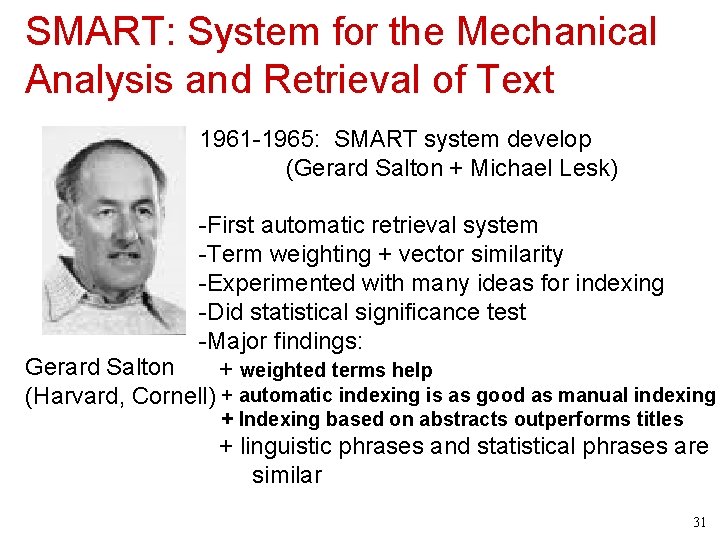

SMART: System for the Mechanical Analysis and Retrieval of Text

Gerard Salton (Harvard, Cornell)

1961-1965: SMART system developed (Gerard Salton + Michael Lesk)
- First automatic retrieval system
- Term weighting + vector similarity
- Experimented with many ideas for indexing
- Did statistical significance test
- Major findings:
  - Weighted terms help
  - Automatic indexing is as good as manual indexing
  - Indexing based on abstracts outperforms titles
  - Linguistic phrases and statistical phrases are similar

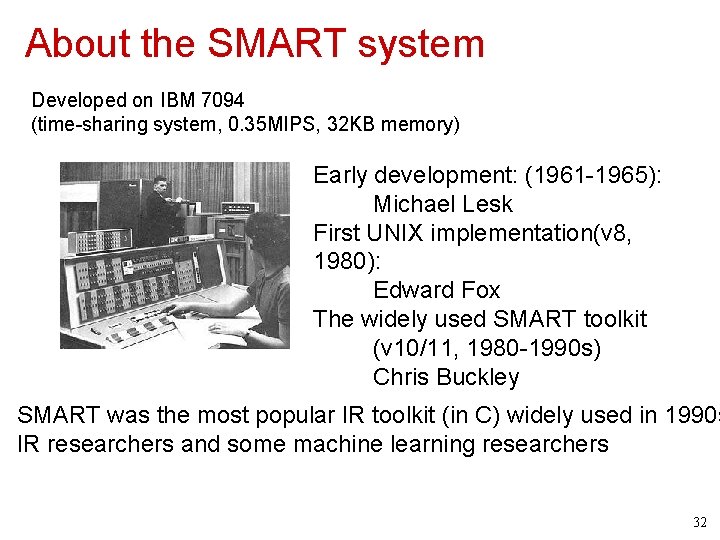

About the SMART system

- Developed on IBM 7094 (time-sharing system, 0.35 MIPS, 32 KB memory)
- Early development (1961-1965): Michael Lesk
- First UNIX implementation (v8, 1980): Edward Fox
- The widely used SMART toolkit (v10/11, 1980-1990s): Chris Buckley
- SMART was the most popular IR toolkit (in C), widely used in the 1990s by IR researchers and some machine learning researchers

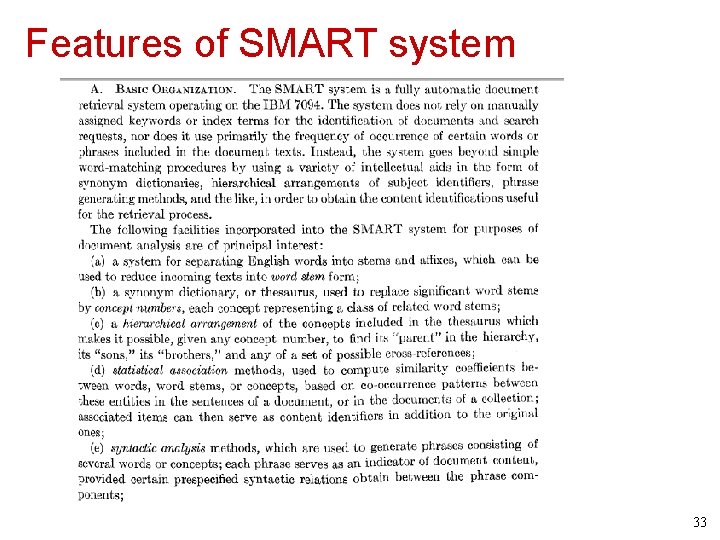

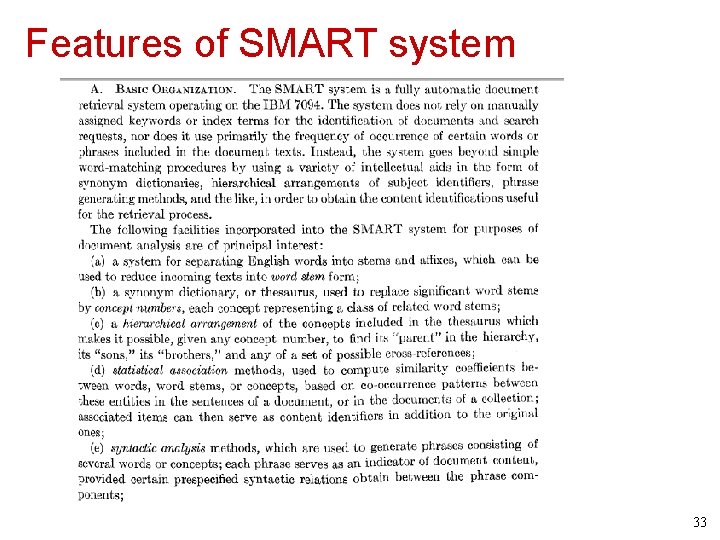

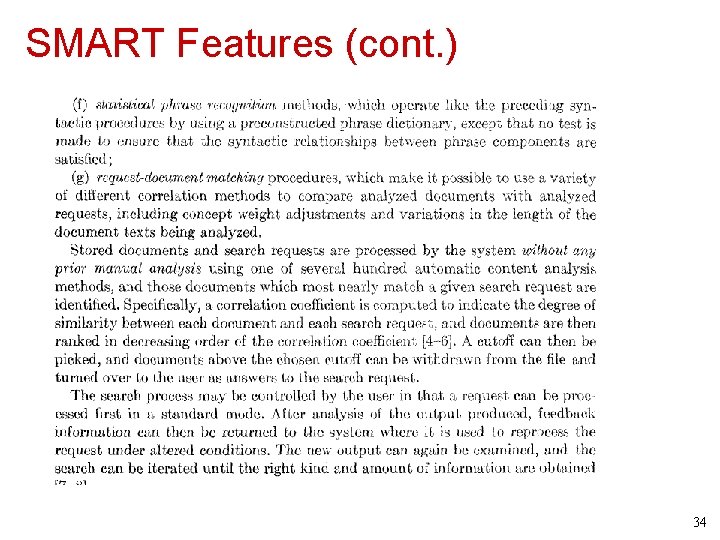

Features of SMART system

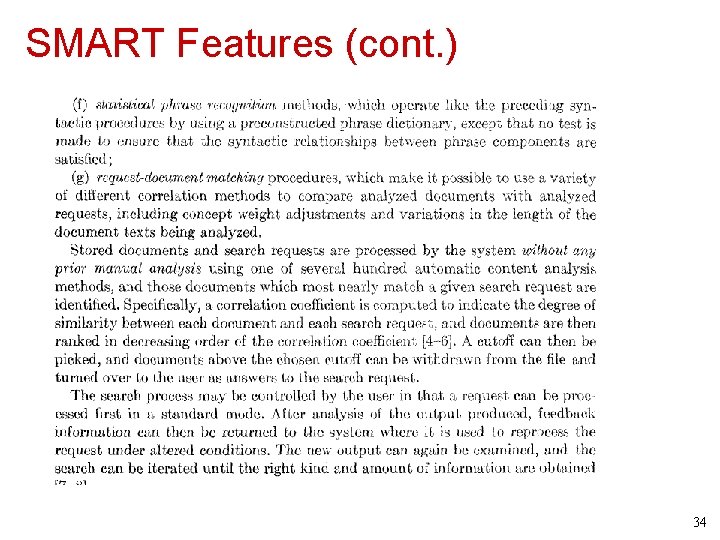

SMART Features (cont.)

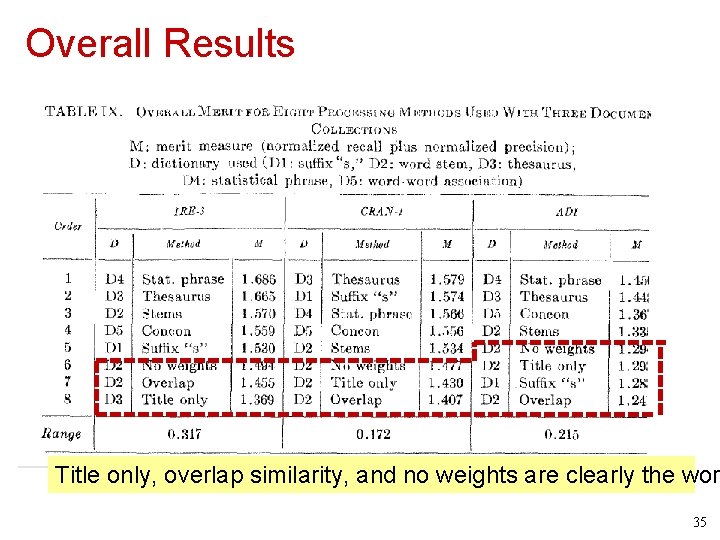

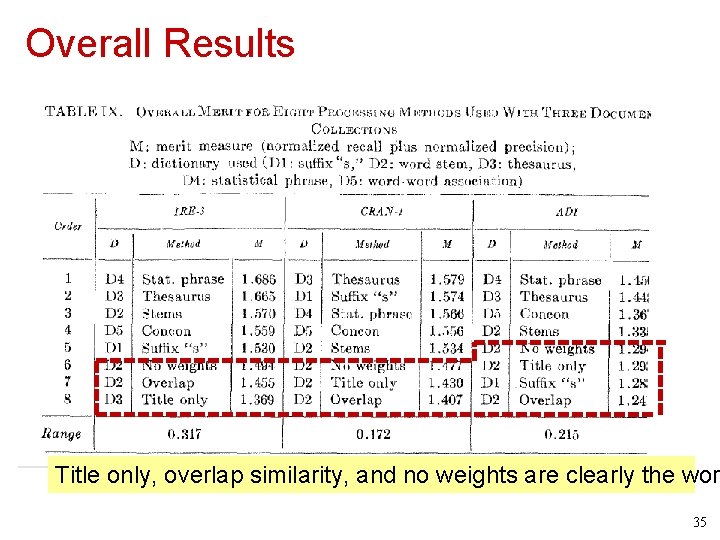

Overall Results

Title only, overlap similarity, and no weights are clearly the worst.

Key Findings

- Term weighting is very useful (better than binary values)
- Cosine similarity is better than the overlap similarity measure
- Using abstracts for indexing is better than using titles only
- Synonyms are helpful
- Automatic indexing may be as effective as manual indexing
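The two similarity measures compared in the findings can be sketched on term-frequency vectors. The slides do not give formulas, so the definitions here are assumptions: cosine is the normalized dot product, and overlap is taken as the sum of element-wise minima divided by the smaller vector total, which is one common form of the overlap measure. Raw term frequency stands in for term weighting.

```python
import math
from collections import Counter

def tf_vector(text):
    """Term-frequency vector from whitespace-separated text."""
    return Counter(text.lower().split())

def cosine(u, v):
    """Cosine of the angle between two weighted term vectors."""
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm = (math.sqrt(sum(w * w for w in u.values()))
            * math.sqrt(sum(w * w for w in v.values())))
    return dot / norm if norm else 0.0

def overlap(u, v):
    """Overlap measure: sum of minima over the smaller vector total."""
    shared = sum(min(w, v[t]) for t, w in u.items() if t in v)
    denom = min(sum(u.values()), sum(v.values()))
    return shared / denom if denom else 0.0

q = tf_vector("information retrieval")
d1 = tf_vector("information retrieval retrieval systems")
d2 = tf_vector("information theory")
for name, d in (("d1", d1), ("d2", d2)):
    print(name, round(cosine(q, d), 3), round(overlap(q, d), 3))
```

In this toy case overlap gives `d1` a perfect score because the query is fully contained in it, while cosine also accounts for the terms in `d1` that the query does not mention.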

Milestone 4: Probabilistic model & feedback

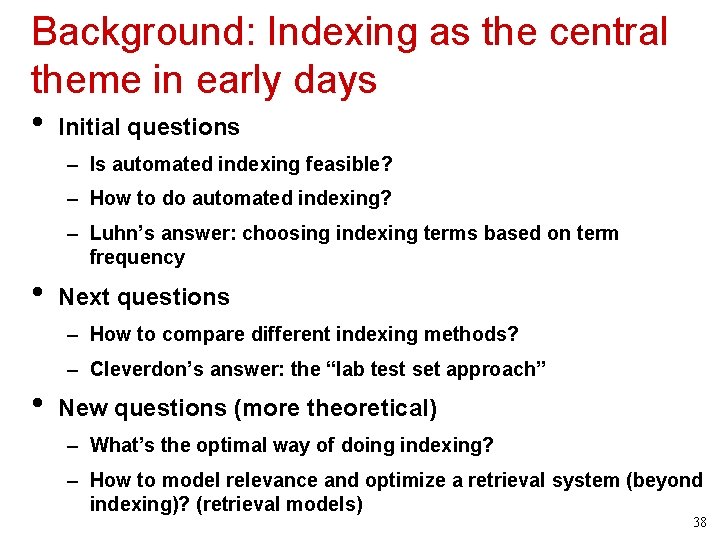

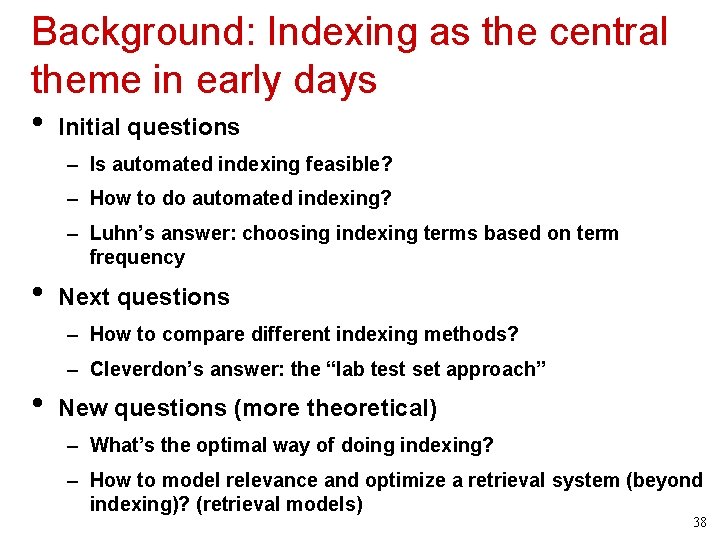

Background: Indexing as the central theme in early days

- Initial questions
  - Is automated indexing feasible?
  - How to do automated indexing?
  - Luhn’s answer: choosing indexing terms based on term frequency
- Next questions
  - How to compare different indexing methods?
  - Cleverdon’s answer: the “lab test set approach”
- New questions (more theoretical)
  - What’s the optimal way of doing indexing?
  - How to model relevance and optimize a retrieval system (beyond indexing)? (retrieval models)

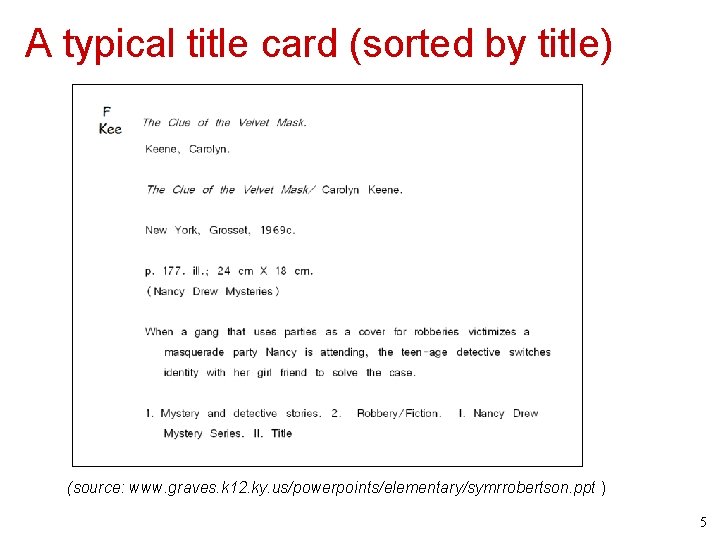

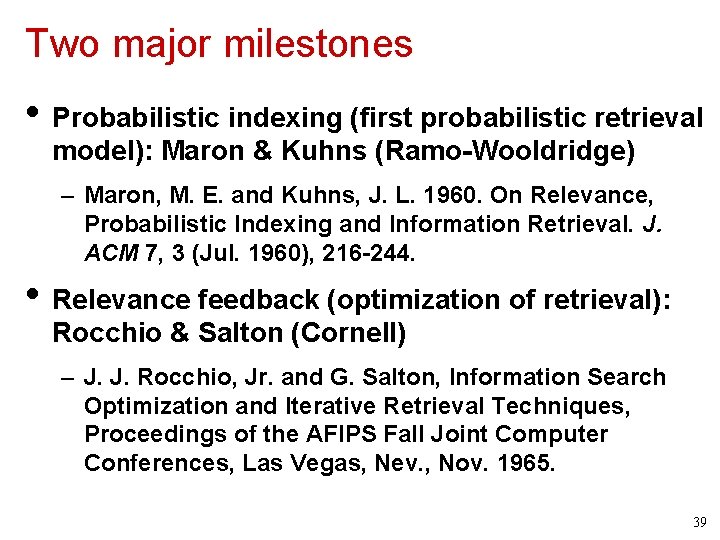

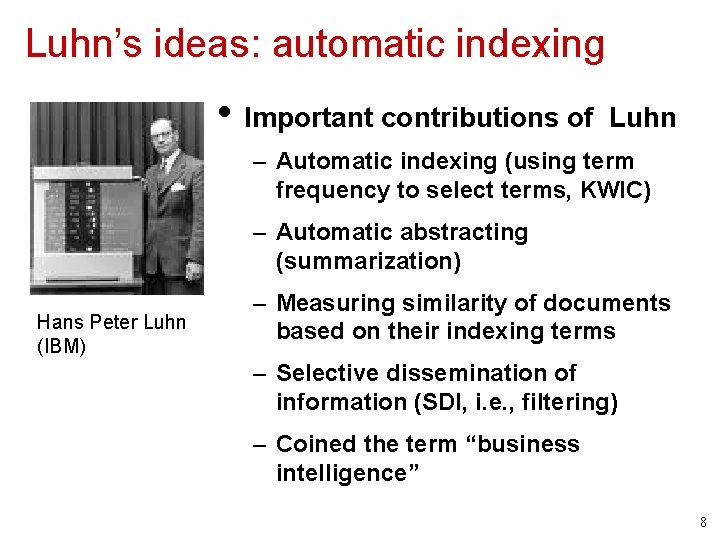

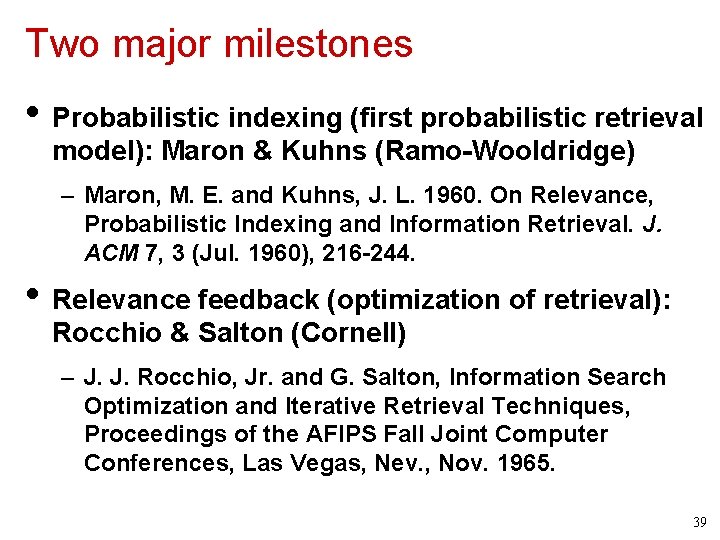

Two major milestones

- Probabilistic indexing (first probabilistic retrieval model): Maron & Kuhns (Ramo-Wooldridge)
  - Maron, M. E. and Kuhns, J. L. 1960. On Relevance, Probabilistic Indexing and Information Retrieval. J. ACM 7, 3 (Jul. 1960), 216-244.
- Relevance feedback (optimization of retrieval): Rocchio & Salton (Cornell)
  - J. J. Rocchio, Jr. and G. Salton, Information Search Optimization and Iterative Retrieval Techniques, Proceedings of the AFIPS Fall Joint Computer Conferences, Las Vegas, Nev., Nov. 1965.

![Probabilistic Indexing Maron Kuhns 60 Major contributions Formalization of relevance with Probabilistic Indexing [Maron & Kuhns 60] • Major contributions: – Formalization of “relevance” with](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-40.jpg)

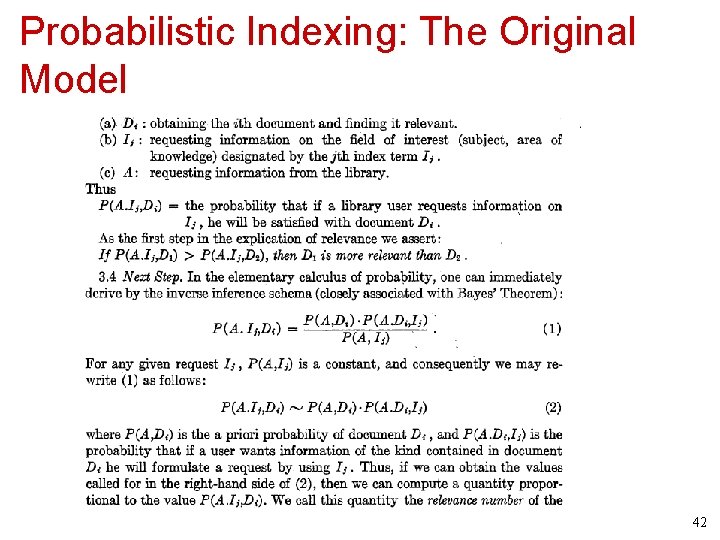

Probabilistic Indexing [Maron & Kuhns 60]

- Major contributions:
  - Formalization of “relevance” with a probabilistic model
  - Ranking documents based on “probable relevance of a document to a request”
  - Optimizing retrieval accuracy = statistical inference
- Key insights:
  - Indexing shouldn’t be a “go or no-go” binary choice
  - We need to quantify relevance
  - Take a theoretical approach (use a math model)
- Other contributions:
  - Query expansion (leveraging term association)
  - Document expansion (pseudo feedback)
  - IDF

Now, think about how to define an optimal retrieval model using a probabilistic framework…

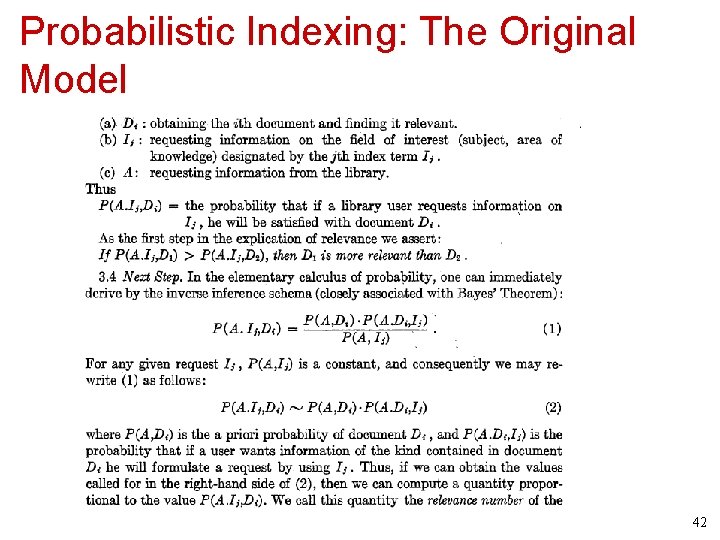

Probabilistic Indexing: The Original Model

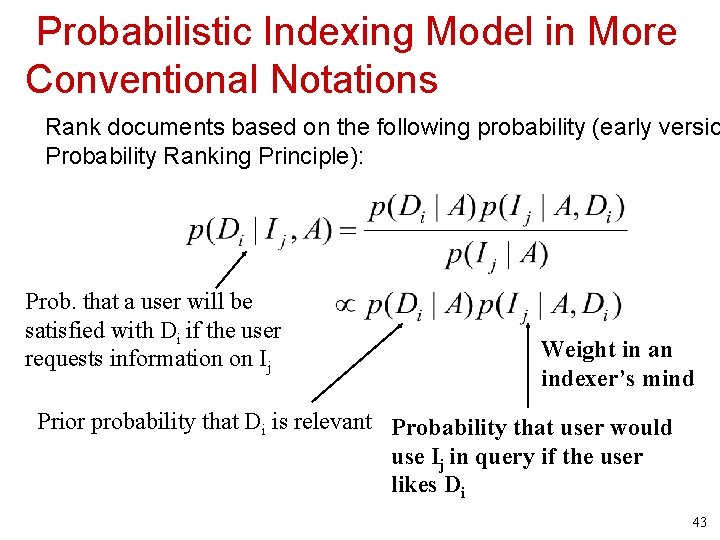

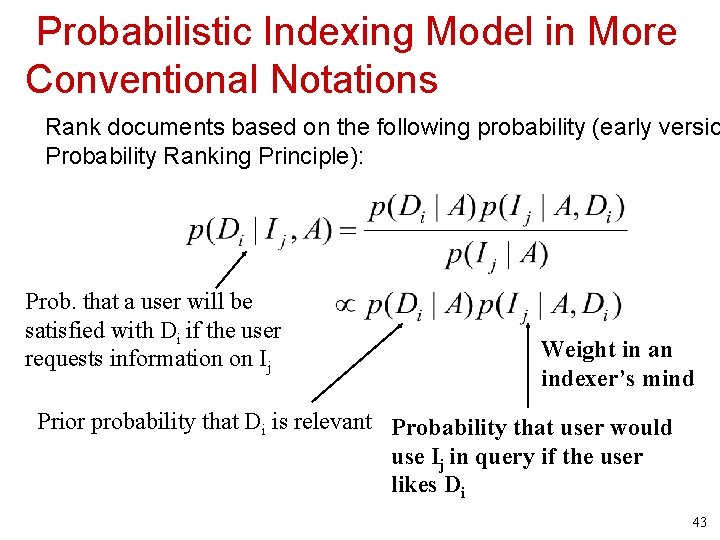

Probabilistic Indexing Model in More Conventional Notations

Rank documents based on the following probability (an early version of the Probability Ranking Principle). Labels on the formula:

- Probability that a user will be satisfied with Di if the user requests information on Ij
- Weight in an indexer’s mind
- Prior probability that Di is relevant
- Probability that the user would use Ij in the query if the user likes Di
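The formula itself sits in a slide image that is not reproduced in the text. Based only on the labels above, a plausible reconstruction applies Bayes' rule; the symbol $A$ (the event that a user issues a request) is assumed notation, and reading the indexer's weight as an estimate of the second factor is an interpretation, not something the text states.

```latex
P(D_i \mid A, I_j)
  \;=\; \frac{P(D_i \mid A)\, P(I_j \mid A, D_i)}{P(I_j \mid A)}
  \;\propto\; P(D_i \mid A)\, P(I_j \mid A, D_i)
```

Here $P(D_i \mid A)$ plays the role of the prior probability that $D_i$ is relevant, and $P(I_j \mid A, D_i)$ is the probability that a user who likes $D_i$ would use $I_j$ in the query. The denominator does not depend on $D_i$, so it can be dropped when ranking documents for a fixed $I_j$.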

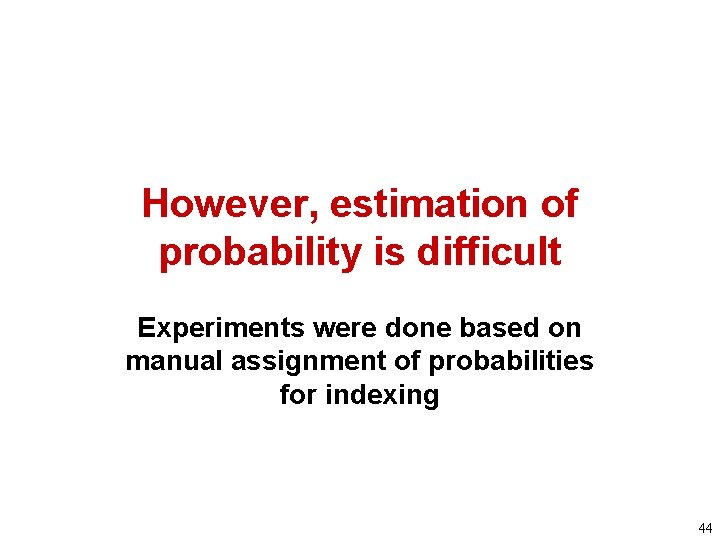

However, estimation of probability is difficult.

Experiments were done based on manual assignment of probabilities for indexing.

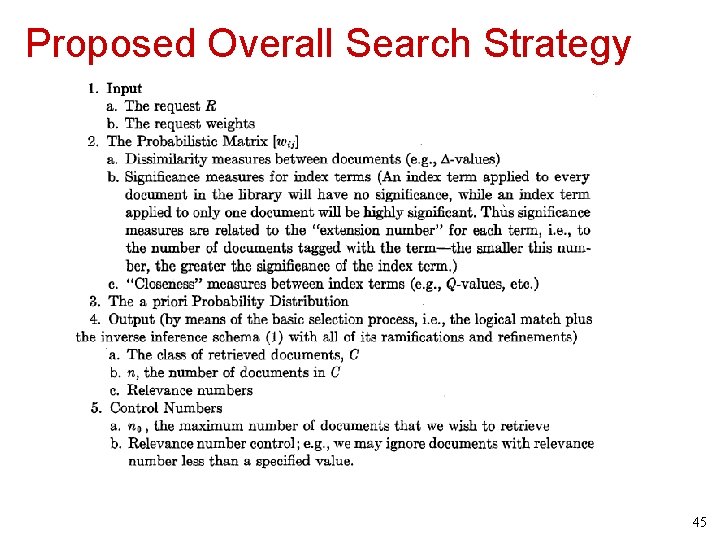

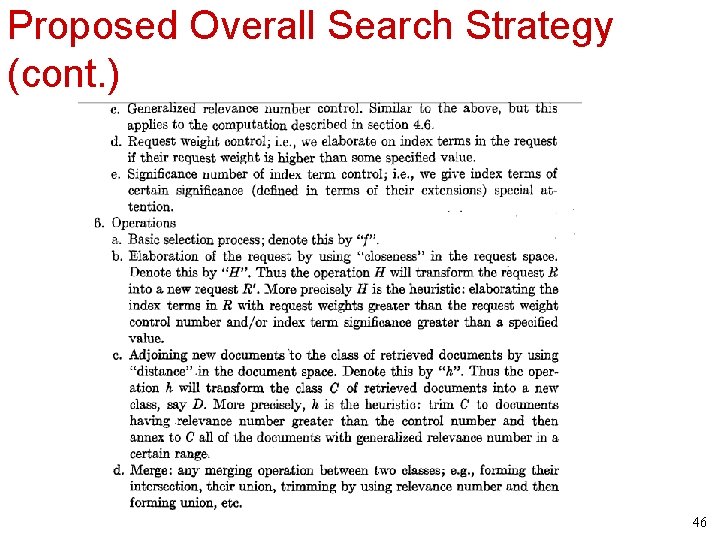

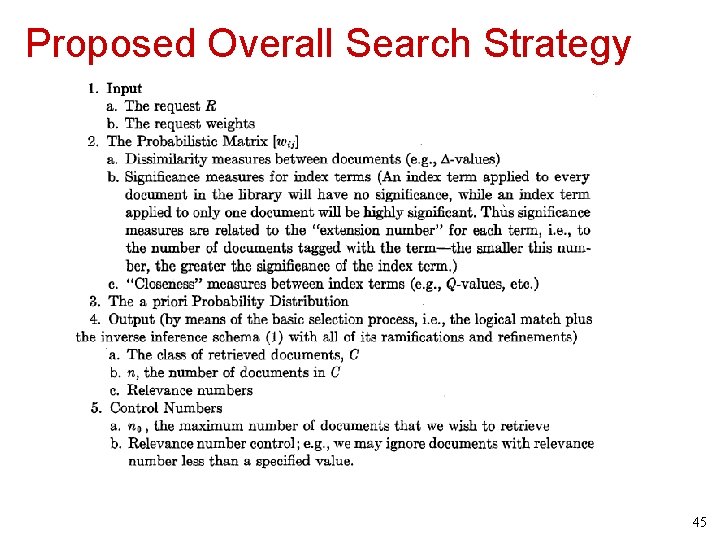

Proposed Overall Search Strategy

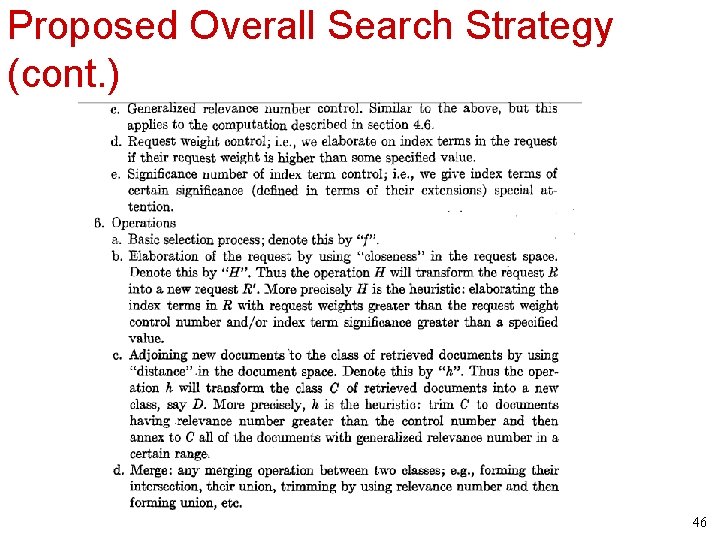

Proposed Overall Search Strategy (cont.)

![Relevance Feedback Rocchio 65 47 Relevance Feedback [Rocchio 65] 47](https://slidetodoc.com/presentation_image/2705e918ce66a84bd39f5062161de657/image-47.jpg)

Relevance Feedback [Rocchio 65]

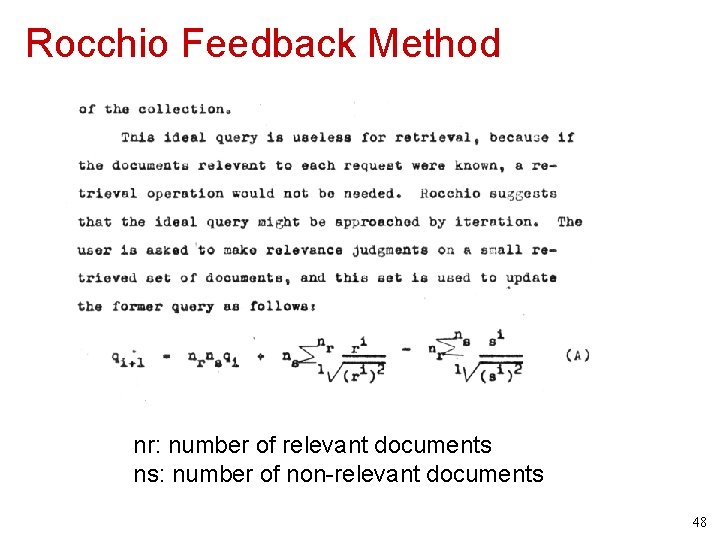

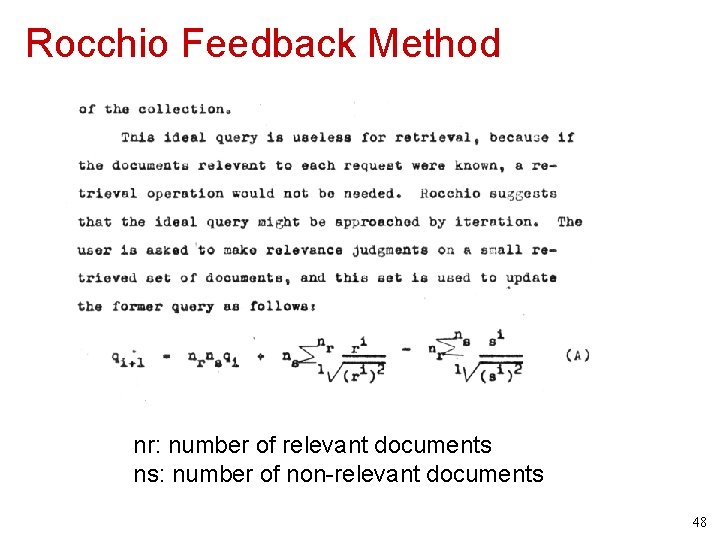

Rocchio Feedback Method

- nr: number of relevant documents
- ns: number of non-relevant documents
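The update formula is in a slide image that the text does not reproduce. The sketch below assumes the widely used form of Rocchio feedback: the new query is the old query plus the centroid of the relevant documents (a sum divided by nr) minus the centroid of the non-relevant documents (a sum divided by ns). The `alpha`, `beta`, and `gamma` weights are the later generalization and default to 1; dropping non-positive weights is a common convention assumed here, not something taken from the slides.

```python
def rocchio(query, relevant, non_relevant, alpha=1.0, beta=1.0, gamma=1.0):
    """Return an updated query vector as a dict of term -> weight.

    query: dict of term -> weight
    relevant, non_relevant: lists of document vectors (dicts of term -> weight)
    """
    terms = set(query)
    for doc in relevant + non_relevant:
        terms |= set(doc)

    new_query = {}
    for t in terms:
        # Centroid component of each judged set (0 if the set is empty).
        pos = sum(d.get(t, 0.0) for d in relevant) / len(relevant) if relevant else 0.0
        neg = sum(d.get(t, 0.0) for d in non_relevant) / len(non_relevant) if non_relevant else 0.0
        weight = alpha * query.get(t, 0.0) + beta * pos - gamma * neg
        if weight > 0:                    # assumed convention: drop non-positive weights
            new_query[t] = weight
    return new_query

updated = rocchio(
    {"jaguar": 1.0},
    relevant=[{"jaguar": 1.0, "car": 2.0}, {"car": 1.0, "engine": 1.0}],
    non_relevant=[{"jaguar": 1.0, "cat": 1.0}],
)
print(updated)
```

The updated query moves toward terms that occur in relevant documents (`car`, `engine`) and away from terms that occur only in non-relevant ones (`cat`).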

What You Should Know

- Research in IR was very active in the 1950s and 1960s!
- Most foundational work was done at that time
  - Luhn’s work proved the feasibility of automatic indexing, thus automatic retrieval
  - Cleverdon’s work set the standard for evaluation
  - Salton’s work led to the very first IR system and a large body of IR algorithms
  - Maron & Kuhns proposed the first probabilistic model for IR
  - Rocchio pioneered the use of supervised learning for IR (relevance feedback)
