
Dynamo: Amazon’s Highly Available Key-Value Store
Authors: Giuseppe DeCandia, Deniz Hastorun, Madan Jampani, Gunavardhan Kakulapati, Avinash Lakshman, Alex Pilchin, Swaminathan Sivasubramanian, Peter Vosshall and Werner Vogels
Presented by Pooja Singhal and Tilak Paija Pun
Seattle University, Tuesday, November 29, 2011

Outline
• Introduction
• Motivation
• Problem Statement
• Background
• Related Work
• Architecture
• Implementation
• Experiences & Lessons Learned
• Conclusion
• Summary

Introduction
• Dynamo: a key-value store that serves as the underlying storage infrastructure for some of Amazon's core web services

Motivation
• The scalability of a system depends directly on how its application state is managed
• Many of Amazon's services only need primary-key access to a data store, e.g.:
  ◦ Best seller lists
  ◦ Shopping carts
  ◦ Customer preferences
  ◦ Product catalogs
• Using an RDBMS for these services limits scalability and availability, and leads to inefficiency

Problem Statement
• How to provide an "always available" storage system, given that even a brief outage can mean significant financial loss and damage to customer trust?
• How to provide a storage system that scales well as demand increases?
• How to provide a storage system that is configurable to meet a variety of SLA requirements (availability, performance, and cost)?

Solution
• Use partitioning (via consistent hashing)
• Use replication (with data versioning to facilitate consistency)
• Make the system decentralized (quorum-based management)
• Reduce the need for manual administration (nodes can be added or removed and the system adjusts itself)

Background
• Amazon's e-commerce platform is made up of hundreds of services running on thousands of servers in data centers around the world
• Traditionally, production systems used an RDBMS to persist application state, but an RDBMS is far from ideal for Amazon's use cases
• Dynamo successfully addresses the needs of these use cases

Assumptions and Requirements
• Query model: simple reads and writes of a data item identified by a unique key; stored objects are small (< 1 MB)
• ACID properties: data stores that provide ACID guarantees tend to have poor availability, so Dynamo targets weaker consistency (the "C" in ACID), gives no isolation guarantees, and permits only single-key updates
• Efficiency: the system must be able to meet strict SLAs
• Other: used only internally, in a non-hostile environment, so no security (authentication/authorization) is required; scale of up to hundreds of storage hosts

Design Considerations
• Choose an eventually consistent model
• Resolve conflicts at read time rather than write time, so that writes are never rejected
• Allow applications the option of resolving conflicts themselves
• Allow incremental scalability (one node at a time)
• Provide symmetry (every node has the same responsibilities)
• Provide decentralization (prefer peer-to-peer control over centralized control)
• Respect heterogeneity (some nodes may have more powerful hardware)

Related Work
• Unstructured P2P networks
  ◦ Freenet, Gnutella
• Structured P2P networks
  ◦ Pastry, Chord
• Distributed file systems and databases
  ◦ Ficus, Coda, GFS, Bigtable, etc.

Then, why do we need Dynamo?
• Dynamo does not need hierarchical namespaces and their associated overhead (the norm in many file systems)
• Dynamo must meet latency-sensitive SLAs: Pastry and Chord use multi-hop routing, which introduces variability in latency, whereas Dynamo uses zero-hop routing (each node keeps enough routing information to send a request directly to the right node)
• Dynamo is an "always writable" store (no writes are rejected, even when failures occur)

Dynamo: System Architecture
• Partitioning
  ◦ Based on a variant of the consistent hashing technique
  ◦ Each node is responsible for a set of "virtual nodes": each physical node is assigned to multiple points (tokens) in the ring instead of a single point
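A minimal Python sketch of consistent hashing with virtual nodes, assuming keys and tokens are placed on the ring with an MD5 hash (the Dynamo paper also uses MD5 to place keys). The class name `ConsistentHashRing` and the `node#vnodeN` token-naming scheme are hypothetical, chosen only for illustration. Giving a more powerful node more tokens is one way to respect heterogeneity.

```python
import bisect
import hashlib
from typing import Optional


def ring_position(label: str) -> int:
    """Map a string to a point on a 128-bit ring using MD5."""
    return int(hashlib.md5(label.encode("utf-8")).hexdigest(), 16)


class ConsistentHashRing:
    """Ring where each physical node owns several virtual nodes (tokens)."""

    def __init__(self, tokens_per_node: int = 8) -> None:
        self.tokens_per_node = tokens_per_node
        self._positions: list[int] = []    # sorted token positions on the ring
        self._owner: dict[int, str] = {}   # token position -> physical node

    def add_node(self, node: str, tokens: Optional[int] = None) -> None:
        # A node with more capacity can be given more tokens (heterogeneity).
        for i in range(tokens or self.tokens_per_node):
            pos = ring_position(f"{node}#vnode{i}")  # hypothetical token naming
            self._owner[pos] = node
            bisect.insort(self._positions, pos)

    def remove_node(self, node: str) -> None:
        # Only keys owned by this node's tokens move, to their clockwise successors.
        self._positions = [p for p in self._positions if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def coordinator(self, key: str) -> str:
        """Walk clockwise from the key's position to the first token; its owner coordinates."""
        if not self._positions:
            raise LookupError("ring is empty")
        idx = bisect.bisect_right(self._positions, ring_position(key))
        return self._owner[self._positions[idx % len(self._positions)]]


ring = ConsistentHashRing(tokens_per_node=8)
for name in ("A", "B", "C"):
    ring.add_node(name)
print(ring.coordinator("cart:alice"))
```

Removing a node only reassigns the keys that node owned; every other key keeps its coordinator, which is what makes incremental scaling cheap.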

Dynamo: System Architecture (contd.)
• Replication
  ◦ Data is replicated at N hosts
  ◦ Each node is responsible for a range of keys. The node responsible for key k (its coordinator) also replicates it at the N-1 subsequent nodes in the ring
  ◦ The list of nodes responsible for storing key k is called its preference list
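The preference list can be sketched as a clockwise walk from the key that skips tokens whose physical node is already in the list, so the N replicas land on distinct machines (the Dynamo paper constructs the list this way). The function names and the `node#vnodeN` token labels are hypothetical; this is a self-contained illustration, not Dynamo's code.

```python
import bisect
import hashlib


def ring_position(label: str) -> int:
    """Map a string to a point on a 128-bit ring using MD5."""
    return int(hashlib.md5(label.encode("utf-8")).hexdigest(), 16)


def build_ring(tokens_per_node: dict[str, int]) -> list[tuple[int, str]]:
    """Return (position, physical node) pairs sorted by ring position."""
    return sorted(
        (ring_position(f"{node}#vnode{i}"), node)   # hypothetical token naming
        for node, count in tokens_per_node.items()
        for i in range(count)
    )


def preference_list(ring: list[tuple[int, str]], key: str, n: int) -> list[str]:
    """First n distinct physical nodes found walking clockwise from the key."""
    positions = [pos for pos, _ in ring]
    start = bisect.bisect_right(positions, ring_position(key))
    nodes: list[str] = []
    for step in range(len(ring)):
        node = ring[(start + step) % len(ring)][1]
        if node not in nodes:          # skip further tokens of a node already chosen
            nodes.append(node)
            if len(nodes) == n:
                break
    return nodes


ring = build_ring({"A": 4, "B": 4, "C": 4, "D": 4})
print(preference_list(ring, "cart:alice", n=3))  # coordinator first, then N-1 successors
```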

Dynamo: System Architecture (contd.)
• Data versioning
  ◦ Dynamo provides eventual consistency: an update propagates to all replicas asynchronously
  ◦ A vector clock is passed along with each object version to capture causality between versions
  ◦ When concurrent updates produce conflicting versions, Dynamo returns all of them to the client for reconciliation
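A vector clock can be sketched as a map from node name to counter. The helpers below (hypothetical names) show how a coordinator increments its own entry, how one version is recognized as an ancestor of another, and how concurrent versions are detected. The node names `Sx`, `Sy`, `Sz` follow the style of the paper's example; the history is illustrative only.

```python
VectorClock = dict[str, int]


def increment(clock: VectorClock, node: str) -> VectorClock:
    """Return a copy of the clock with the coordinating node's counter bumped."""
    updated = dict(clock)
    updated[node] = updated.get(node, 0) + 1
    return updated


def descends(a: VectorClock, b: VectorClock) -> bool:
    """True if version a has seen every update recorded in b (a >= b per node)."""
    return all(a.get(node, 0) >= count for node, count in b.items())


def concurrent(a: VectorClock, b: VectorClock) -> bool:
    """Neither version descends from the other: a conflict the client must reconcile."""
    return not descends(a, b) and not descends(b, a)


def merge(a: VectorClock, b: VectorClock) -> VectorClock:
    """Per-node maximum; used when a client writes back a reconciled version."""
    return {node: max(a.get(node, 0), b.get(node, 0)) for node in a.keys() | b.keys()}


d1 = increment({}, "Sx")      # {'Sx': 1}
d2 = increment(d1, "Sx")      # {'Sx': 2}, supersedes d1
d3 = increment(d2, "Sy")      # update handled by Sy
d4 = increment(d2, "Sz")      # update handled by Sz, concurrently with d3
print(concurrent(d3, d4))     # True: both versions are returned on read
d5 = increment(merge(d3, d4), "Sx")   # reconciled write coordinated by Sx
print(d5 == {"Sx": 3, "Sy": 1, "Sz": 1})  # True
```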

Dynamo: System Architecture (contd.)
• Execution of get() and put() operations: there are two ways to select the node that performs them
  ◦ Via a generic load balancer (simple, but may involve an extra forwarding step)
  ◦ Via a partition-aware client library (routes directly to the coordinator, giving lower latency)

Handling Temporary Failures: Sloppy Quorum
• Sloppy quorum (N, R, W)
• R and W are the minimum numbers of nodes that must participate in a successful read or write, respectively
• All operations are performed on the first N healthy nodes of the preference list, which are not always the first N nodes on the ring
• The latency of an operation is determined by the slowest of the R (or W) replicas, so configuring R and W to be less than N gives better latency
  ◦ Highest availability for writes: W = 1, R = N. In practice, W is set higher than 1 to improve durability
  ◦ Highest read performance: R = 1, W = N
• Why not a traditional quorum? A traditional (strict) quorum uses a fixed set of N replicas with R + W > N, so it:
  ◦ Is unavailable during server failures and network partitions
  ◦ Has reduced durability even under simple failure conditions

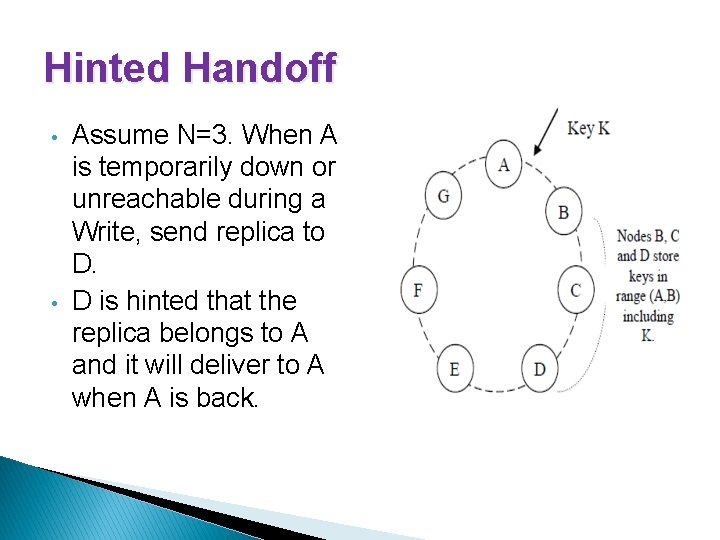
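The difference between a strict and a sloppy quorum can be sketched in a few lines. The sketch assumes an extended preference list that names more than N nodes (Dynamo keeps extra nodes in the list to account for failures) and a known set of healthy nodes; the function `attempt_write` and the node names are hypothetical.

```python
def attempt_write(extended_list: list[str], healthy: set[str], n: int, w: int,
                  sloppy: bool = True) -> tuple[bool, list[str]]:
    """Return (success, target nodes) for one write.

    Strict quorum: always the first n nodes of the list, even if some are down.
    Sloppy quorum: the first n healthy nodes, so a substitute stands in for a failed one.
    """
    if sloppy:
        targets = [node for node in extended_list if node in healthy][:n]
    else:
        targets = extended_list[:n]
    acks = sum(1 for node in targets if node in healthy)   # only healthy nodes acknowledge
    return acks >= w, targets


ring_order = ["A", "B", "C", "D", "E"]   # extended preference list for some key
up = {"B", "C", "D", "E"}                 # A is temporarily down

print(attempt_write(ring_order, up, n=3, w=3, sloppy=False))  # (False, ['A', 'B', 'C'])
print(attempt_write(ring_order, up, n=3, w=3))                # (True, ['B', 'C', 'D'])
```

With W = 2 the strict quorum would also succeed in this scenario; the sloppy version matters when too few of the first N nodes are reachable.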

Handling Temporary Failures: Hinted Handoff
• Reads and writes do not fail because of a temporary node or network failure
• If a node is unavailable, the put() request is sent to and written on another node instead. This data is called a “hinted replica”
• The hinted replica’s metadata records which node was the intended recipient
• A background job detects when the original node has recovered and delivers the hinted replica to it; the stand-in node can then delete its copy
Dynamo Design
• Each object is replicated across multiple data centers
• The preference list is constructed with nodes spread over multiple data centers
• This lets Dynamo handle the failure of an entire data center

Hinted Handoff
• Assume N = 3. If A is temporarily down or unreachable during a write, its replica is sent to D instead
• D is hinted that the replica belongs to A, and it will deliver the replica to A when A is back

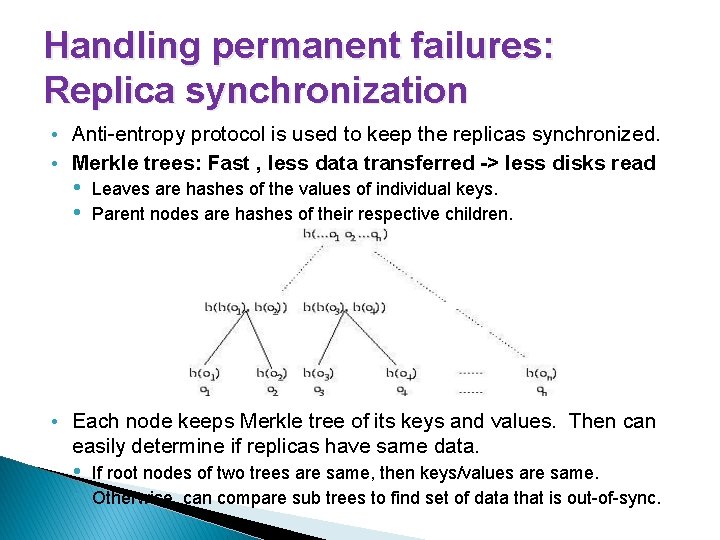
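The A/B/C/D scenario above can be simulated with a minimal sketch: the stand-in node keeps hinted replicas in a separate store keyed by the intended owner, and a background pass delivers them once the owner is back, then drops the local copy. `Node`, `put`, and `handoff` are hypothetical names, and the sketch ignores versioning and networking.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    up: bool = True
    store: dict = field(default_factory=dict)    # key -> value for data this node owns
    hinted: dict = field(default_factory=dict)   # intended owner -> {key: value}


def put(cluster: dict, extended_list: list, key: str, value: str, n: int = 3) -> list:
    """Write to the first n nodes; a down node's replica goes to the next healthy node as a hint."""
    intended = extended_list[:n]
    down = [name for name in intended if not cluster[name].up]
    stand_ins = [name for name in extended_list[n:] if cluster[name].up]
    written = []
    for name in intended:
        if cluster[name].up:
            cluster[name].store[key] = value
            written.append(name)
    for owner, stand_in in zip(down, stand_ins):
        # The hint records which node the replica really belongs to.
        cluster[stand_in].hinted.setdefault(owner, {})[key] = value
        written.append(stand_in)
    return written


def handoff(cluster: dict) -> None:
    """Background job: deliver hinted replicas to recovered owners, then drop the local copy."""
    for node in cluster.values():
        for owner in list(node.hinted):
            if cluster[owner].up:
                cluster[owner].store.update(node.hinted.pop(owner))


cluster = {name: Node(name) for name in "ABCD"}
cluster["A"].up = False
print(put(cluster, ["A", "B", "C", "D"], "k", "v"))  # ['B', 'C', 'D']
cluster["A"].up = True
handoff(cluster)
print(cluster["A"].store, cluster["D"].hinted)        # {'k': 'v'} {}
```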

Handling Permanent Failures: Replica Synchronization
• An anti-entropy protocol keeps the replicas synchronized
• Merkle trees make this fast: less data is transferred and fewer disk reads are needed
  ◦ Leaves are hashes of the values of individual keys
  ◦ Parent nodes are hashes of their respective children
• Each node keeps a Merkle tree for each key range it hosts, so it can cheaply determine whether two replicas hold the same data
  ◦ If the root hashes of two trees are equal, the keys/values in that range are the same
  ◦ Otherwise, the nodes compare subtrees to find exactly which keys are out of sync
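A minimal sketch of the comparison, assuming both replicas build a tree over the same sorted key list for one key range and using SHA-256 as the hash (an assumption; any collision-resistant hash works). Only mismatching subtrees are visited, which is why little data has to be exchanged. `build` and `out_of_sync` are hypothetical names.

```python
import hashlib


def digest(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build(keys: list[str], store: dict[str, str]):
    """Build a Merkle tree over a sorted, non-empty list of keys for one key range.

    A leaf is (hash, key); an internal node is (hash, left, right).
    """
    if len(keys) == 1:
        key = keys[0]
        value = store.get(key)
        # A prefix byte distinguishes "missing" from any real value.
        payload = b"\x00" if value is None else b"\x01" + value.encode("utf-8")
        return (digest(key.encode("utf-8") + b"\x00" + payload), key)
    mid = len(keys) // 2
    left, right = build(keys[:mid], store), build(keys[mid:], store)
    return (digest(left[0] + right[0]), left, right)


def out_of_sync(a, b) -> list[str]:
    """Compare two trees of the same shape, descending only into subtrees whose hashes differ."""
    if a[0] == b[0]:
        return []                 # identical subtree: nothing to transfer
    if len(a) == 2:               # leaf
        return [a[1]]
    return out_of_sync(a[1], b[1]) + out_of_sync(a[2], b[2])


keys = [f"k{i}" for i in range(8)]
replica1 = {k: "v" for k in keys}
replica2 = dict(replica1, k3="stale")
del replica2["k6"]
print(out_of_sync(build(keys, replica1), build(keys, replica2)))  # ['k3', 'k6']
```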

Node Membership and Failure Detection
• Membership
  ◦ A gossip-based protocol propagates node additions and removals around the ring. Each node contacts a random peer every second, and the two exchange their partitioning and placement information (i.e., preference lists)
  ◦ Additionally, explicit node join/leave commands are used
  ◦ “Seeds” are a configurable subset of nodes known to all nodes. Eventually every node gossips with a seed, which reduces the chance of a logical partition
• Local failure detection
  ◦ Each node maintains its own list of reachable nodes to decide which peers are unreachable; there is no need for a globally consistent view
  ◦ If a node does not respond to a message, it is marked as unreachable
  ◦ Periodically, the node retries the unreachable nodes
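Gossip-style propagation can be sketched as repeated pairwise reconciliation. The sketch assumes each node's view maps a node name to a `(version, status)` pair and that the higher version always wins; this data layout, the function names, and the fixed random seed are assumptions for illustration, not Dynamo's wire protocol. The new node E knows only itself and the seed A, yet its membership still reaches every node.

```python
import random


def merge_views(a: dict, b: dict) -> dict:
    """Reconcile two membership views: keep the entry with the higher version for each node."""
    merged = dict(a)
    for node, (version, status) in b.items():
        if node not in merged or version > merged[node][0]:
            merged[node] = (version, status)
    return merged


def gossip_round(views: dict, rng: random.Random) -> None:
    """Each node contacts one random peer; both adopt the reconciled view."""
    names = list(views)
    for name in names:
        peer = rng.choice([p for p in names if p != name])
        merged = merge_views(views[name], views[peer])
        views[name], views[peer] = dict(merged), dict(merged)


def rounds_to_converge(views: dict, rng: random.Random, max_rounds: int = 100):
    """Run gossip rounds (one per second in Dynamo's description) until all views agree."""
    for round_no in range(1, max_rounds + 1):
        gossip_round(views, rng)
        first = next(iter(views.values()))
        if all(view == first for view in views.values()):
            return round_no
    return None


# A, B, C, D know each other; the new node E initially knows only itself and seed A.
views = {n: {m: (1, "up") for m in "ABCD"} for n in "ABCD"}
views["E"] = {"A": (1, "up"), "E": (1, "up")}
print(rounds_to_converge(views, random.Random(42)))
```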

Node Membership and Failure Detection (contd.)
• An explicit mechanism is available to initiate the addition and removal of nodes from a Dynamo ring
• To prevent logical partitions, some Dynamo nodes play the role of seed nodes
  ◦ Seeds: nodes that are discovered by an external mechanism and are known to all nodes
• Failure detection is done in a purely local manner: a node judges a peer only by its own communication with it
• Gossip-based distributed failure detection and membership protocol

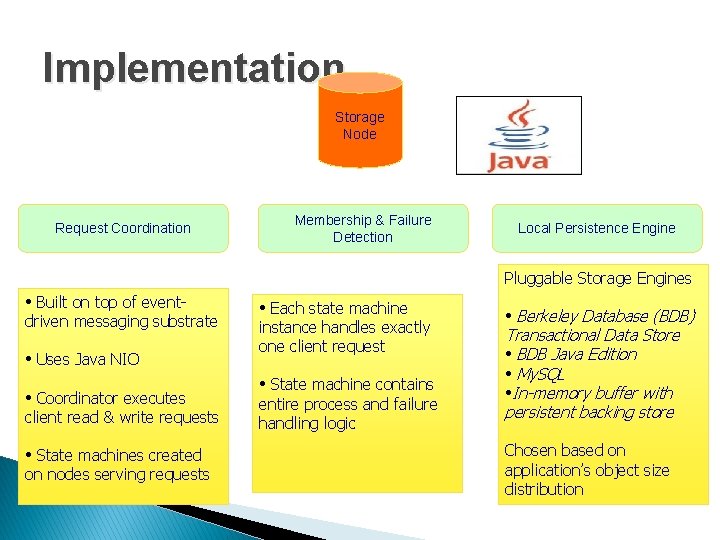
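The purely local failure detection described above can be sketched as a per-node table of peers that recently failed to respond; nothing is shared with other nodes. The class name and the `retry_interval` parameter are hypothetical, and the sketch takes the current time as an argument so it stays deterministic.

```python
class LocalFailureDetector:
    """One node's private opinion of which peers are unreachable."""

    def __init__(self, retry_interval: float = 1.0) -> None:
        self.retry_interval = retry_interval        # hypothetical retry period, in seconds
        self.unreachable: dict[str, float] = {}     # peer -> time it was last marked unreachable

    def record_timeout(self, peer: str, now: float) -> None:
        """A peer did not answer a message (or a retry failed): mark it unreachable."""
        self.unreachable[peer] = now

    def record_response(self, peer: str) -> None:
        """Any successful reply marks the peer reachable again."""
        self.unreachable.pop(peer, None)

    def is_reachable(self, peer: str) -> bool:
        return peer not in self.unreachable

    def peers_to_retry(self, now: float) -> list[str]:
        """Unreachable peers whose retry period has elapsed."""
        return [p for p, t in self.unreachable.items() if now - t >= self.retry_interval]


fd = LocalFailureDetector(retry_interval=5.0)
fd.record_timeout("B", now=100.0)
print(fd.is_reachable("B"), fd.peers_to_retry(now=102.0), fd.peers_to_retry(now=105.0))
# False [] ['B']
```

While B is marked unreachable, this node would route requests for B's keys to alternate nodes in the preference list.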

Implementation
• Each storage node has three main software components:
  ◦ Request coordination
  ◦ Membership & failure detection
  ◦ Local persistence engine
• Request coordination
  ◦ Built on top of an event-driven messaging substrate
  ◦ Uses Java NIO
  ◦ The coordinator executes client read & write requests
  ◦ State machines are created on the nodes serving requests
  ◦ Each state machine instance handles exactly one client request
  ◦ The state machine contains the entire request-processing and failure-handling logic
• Pluggable storage engines
  ◦ Berkeley Database (BDB) Transactional Data Store
  ◦ BDB Java Edition
  ◦ MySQL
  ◦ In-memory buffer with a persistent backing store
  ◦ Chosen based on the application's object size distribution

Local Persistence Engine
• Allows different storage engines to be plugged in: Berkeley Database (BDB) Transactional Data Store, MySQL, and an in-memory buffer with a persistent backing store
• Design: choose the storage engine best suited to the application's access patterns
• Applications choose Dynamo's local persistence engine based on their object size distribution

Request Coordination
• Built on top of an event-driven messaging substrate: the message-processing pipeline is split into multiple stages
• The coordinator executes reads and writes on behalf of clients
  ◦ Read: collects data from one or more nodes
  ◦ Write: stores data at one or more nodes
• A state machine is created on the node that received the client request; it contains all the logic for handling that request

State Machine
• Responsibilities:
  ◦ Identifying the nodes responsible for a key
  ◦ Sending the requests
  ◦ Waiting for responses
  ◦ Retrying
  ◦ Processing the replies
  ◦ Packaging the response to the client
• Read repair: after the response has been returned, the state machine waits for a short period to receive any outstanding responses. If stale versions were returned in any of the responses, the coordinator updates those nodes with the latest version
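The read-repair step can be sketched as follows, assuming each replica's response carries a vector clock (a dict from node name to counter) and a value. `reconcile_reads` is a hypothetical helper: it keeps only versions not superseded by another response and, when a single latest version exists, lists the replicas that returned a stale one. For simplicity it skips repair when concurrent versions exist.

```python
def descends(a: dict, b: dict) -> bool:
    """True if clock a has seen every update recorded in clock b."""
    return all(a.get(node, 0) >= count for node, count in b.items())


def reconcile_reads(responses: dict) -> tuple[list, list]:
    """responses: replica -> (vector clock, value).

    Returns (latest versions, replicas to repair). More than one latest
    version means concurrent updates, which are all returned to the client.
    """
    versions = list(responses.values())
    latest = []
    for clock, value in versions:
        superseded = any(other != clock and descends(other, clock) for other, _ in versions)
        if not superseded and (clock, value) not in latest:
            latest.append((clock, value))
    if len(latest) != 1:
        return latest, []          # divergent versions: this sketch does no repair
    newest = latest[0][0]
    stale = [replica for replica, (clock, _) in responses.items() if clock != newest]
    return latest, stale


responses = {"A": ({"Sx": 2}, "v2"), "B": ({"Sx": 2}, "v2"), "C": ({"Sx": 1}, "v1")}
latest, to_repair = reconcile_reads(responses)
print(latest, to_repair)   # [({'Sx': 2}, 'v2')] ['C']
```

The coordinator would then write `v2`, together with its clock, back to replica C.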

Experiences & Lessons Learned

Different Version Reconciliation Logics
• Business-logic-specific reconciliation
  ◦ A popular use case
  ◦ Each object is replicated across multiple nodes
  ◦ In case of divergent versions, the client application performs its own reconciliation logic (not Dynamo)
  ◦ Example: shopping cart; the client application merges the divergent cart contents
• Timestamp-based reconciliation
  ◦ Dynamo uses a “last write wins” reconciliation mechanism based on timestamps
  ◦ Example: user sessions
• High-performance read engine
  ◦ Some services have a high read request rate and only a small number of updates
  ◦ R = 1 and W = N
  ◦ Data is partitioned and replicated across multiple nodes
  ◦ Example: product catalog, promotional items

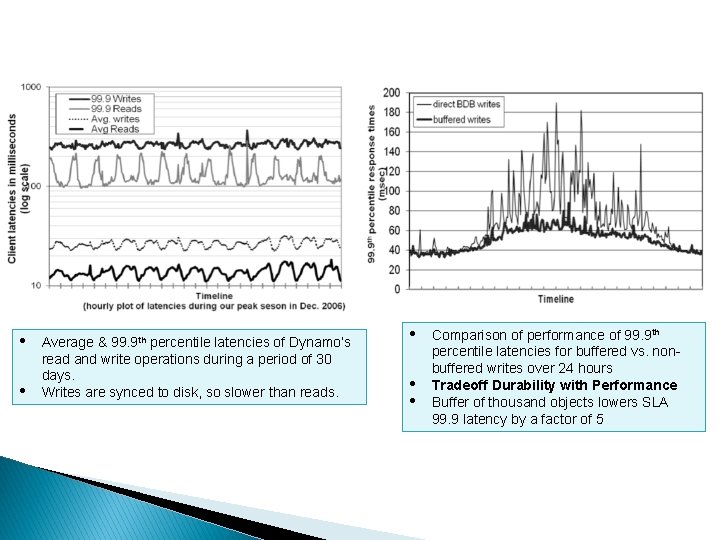
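The two reconciliation styles above can be contrasted in a small sketch. The cart model (item name mapped to quantity) and the "keep the larger quantity" merge rule are hypothetical choices, not Amazon's actual cart logic; `last_write_wins` simply keeps the version with the latest timestamp.

```python
from dataclasses import dataclass


@dataclass
class Version:
    value: object
    timestamp: float   # wall-clock write time; only last-write-wins looks at it


def merge_carts(versions: list[Version]) -> dict[str, int]:
    """Semantic (business-logic) reconciliation: union of items, larger quantity wins."""
    merged: dict[str, int] = {}
    for version in versions:
        for item, qty in version.value.items():
            merged[item] = max(merged.get(item, 0), qty)
    return merged


def last_write_wins(versions: list[Version]) -> object:
    """Timestamp-based reconciliation: the most recent write replaces the others."""
    return max(versions, key=lambda v: v.timestamp).value


cart_a = Version({"book": 1, "pen": 2}, timestamp=10.0)
cart_b = Version({"book": 1, "mug": 1}, timestamp=12.0)
print(merge_carts([cart_a, cart_b]))      # {'book': 1, 'pen': 2, 'mug': 1}
print(last_write_wins([cart_a, cart_b]))  # {'book': 1, 'mug': 1}: the pen is lost
```

This is why merging suits shopping carts: an "add to cart" is never lost, although, as the paper notes, deleted items can resurface. Last-write-wins suits data such as session state, where dropping an older concurrent write is acceptable.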

Balancing Performance and Durability
• Dynamo is a highly available data store, but performance is equally important
• Replica synchronization keeps the replicas consistent
• Typical SLA: 99.9% of reads and writes execute within 300 ms
• Problems:
  ◦ 1) Commodity hardware
  ◦ 2) Reads and writes involve multiple nodes, so performance is limited by the slowest of the R or W replicas
• For a few customer-facing services, Dynamo trades off durability for performance

• Average and 99.9th percentile latencies of Dynamo's read and write operations over a period of 30 days: writes are synced to disk, so they are slower than reads
• Comparison of 99.9th percentile latencies for buffered vs. non-buffered writes over 24 hours
• Trading off durability for performance: a buffer of 1,000 objects lowers the 99.9th percentile latency by a factor of 5

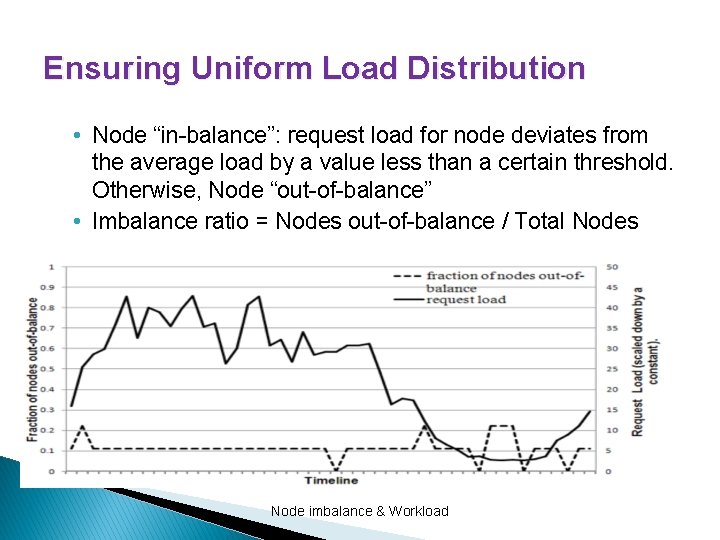

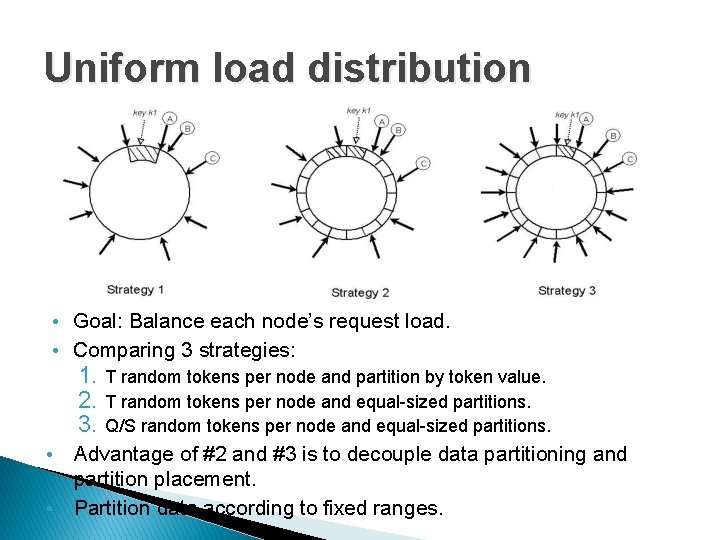
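A minimal sketch of the buffered-write trade-off, assuming a single-threaded store whose "disk" is just a dict and which flushes when the buffer fills (in Dynamo a writer thread flushes the buffer periodically). The optional `durable=True` path mirrors the paper's refinement in which the coordinator has one of the N replicas perform a durable write. `BufferedStore` and its methods are hypothetical.

```python
class BufferedStore:
    """Writes go to an in-memory buffer first; flushing moves them to durable storage."""

    def __init__(self, capacity: int = 1000) -> None:
        self.capacity = capacity
        self.buffer: dict[str, bytes] = {}
        self.disk: dict[str, bytes] = {}       # stand-in for the durable storage engine

    def put(self, key: str, value: bytes, durable: bool = False) -> None:
        if durable:
            # The one replica chosen for a durable write skips the buffer.
            self.disk[key] = value
            self.buffer.pop(key, None)
            return
        self.buffer[key] = value
        if len(self.buffer) >= self.capacity:
            self.flush()

    def get(self, key: str):
        # Reads check the buffer first, since it holds the newest unflushed writes.
        if key in self.buffer:
            return self.buffer[key]
        return self.disk.get(key)

    def flush(self) -> None:
        self.disk.update(self.buffer)
        self.buffer.clear()

    def crash(self) -> None:
        """Simulate a server crash: anything still buffered is lost."""
        self.buffer.clear()


store = BufferedStore(capacity=3)
store.put("a", b"1")
store.put("b", b"2")
store.crash()
print(store.get("a"))                 # None: the buffered write was lost
store.put("c", b"3", durable=True)
store.crash()
print(store.get("c"))                 # b'3': the durable write survived
```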

Ensuring Uniform Load Distribution
• Dynamo uses consistent hashing to partition its key space across its replicas and to ensure uniform load distribution
• Three strategies:
  ◦ Strategy 1: T random tokens per node, partition by token value
  ◦ Strategy 2: T random tokens per node, equal-sized partitions
  ◦ Strategy 3: Q/S tokens per node, equal-sized partitions (S = number of nodes, Q = number of partitions)

Ensuring Uniform Load Distribution (contd.)
• A node is “in balance” if its request load deviates from the average load by less than a certain threshold; otherwise it is “out of balance”
• Imbalance ratio = number of out-of-balance nodes / total number of nodes
• Figure: node imbalance vs. workload
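The imbalance ratio can be computed directly from per-node request loads. The sketch reads the threshold as a fraction of the average load; the 15% default follows the threshold used in the Dynamo paper's measurements, but treat it as a tunable assumption. The function name is hypothetical.

```python
def imbalance_ratio(loads: dict[str, float], threshold: float = 0.15) -> float:
    """Fraction of nodes whose load deviates from the mean by at least threshold * mean."""
    mean = sum(loads.values()) / len(loads)
    out_of_balance = [node for node, load in loads.items()
                      if abs(load - mean) >= threshold * mean]
    return len(out_of_balance) / len(loads)


print(imbalance_ratio({"A": 100, "B": 100, "C": 100, "D": 140}))  # 0.25: only D is out of balance
```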

Uniform Load Distribution
• Goal: balance the request load across nodes
• Comparing the 3 strategies:
  1. T random tokens per node, partition by token value
  2. T random tokens per node, equal-sized partitions
  3. Q/S tokens per node, equal-sized partitions
• Advantage of strategies 2 and 3: they decouple data partitioning from partition placement, because data is partitioned according to fixed ranges
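Strategy 3 can be sketched as two independent mappings: a fixed key-to-partition mapping over Q equal-sized ranges of the hash space, and a partition-to-node placement with Q/S partitions per node. Round-robin placement and reassignment are hypothetical stand-ins (the paper distributes a departing node's tokens randomly while preserving the Q/S property), and the sketch assumes Q is divisible by S.

```python
import hashlib


def partition_of(key: str, q: int) -> int:
    """Split the 128-bit MD5 space into q equal ranges; return the range holding the key."""
    h = int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)
    return (h * q) >> 128            # floor(h * q / 2**128), always in [0, q)


def assign_partitions(nodes: list[str], q: int) -> dict[int, str]:
    """Give each of the S nodes exactly Q/S partitions (round-robin, hypothetical)."""
    if q % len(nodes):
        raise ValueError("this sketch assumes Q is divisible by S")
    return {p: nodes[p % len(nodes)] for p in range(q)}


def remove_node(placement: dict[int, str], leaving: str) -> dict[int, str]:
    """Hand the leaving node's partitions to the remaining nodes; no other partition moves."""
    remaining = sorted({n for n in placement.values() if n != leaving})
    moved = [p for p, n in placement.items() if n == leaving]
    new_placement = dict(placement)
    for i, p in enumerate(moved):
        new_placement[p] = remaining[i % len(remaining)]
    return new_placement


placement = assign_partitions(["A", "B", "C", "D"], q=12)
key_partition = partition_of("cart:alice", q=12)      # never changes when nodes join or leave
print(key_partition, placement[key_partition])
after = remove_node(placement, "D")
print(sorted(list(after.values()).count(n) for n in "ABC"))  # [4, 4, 4]
```

Because a key's partition depends only on Q, a membership change moves whole partitions between nodes rather than re-splitting ranges, which is the decoupling described above.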

Divergent Versions: When and How Many?
• Dynamo design: trade off consistency for availability
• Two factors cause divergent versions:
  1) Failure scenarios
  2) A large number of concurrent writers to a single data item, with multiple nodes coordinating updates concurrently
• What we need:
  ◦ Keep divergent versions as few as possible, for efficiency and usability
  ◦ Version reconciliation: use vector clocks first (syntactic reconciliation); if the versions cannot be reconciled that way, use business logic (semantic reconciliation)
  ◦ Semantic reconciliation adds load, so it should be minimized
• Experiment: shopping cart service over 24 hours
  ◦ 99.94% of requests saw 1 version
  ◦ 0.00057% saw 2 versions
  ◦ 0.00047% saw 3 versions
  ◦ 0.000009% saw 4 versions

Client-Driven vs. Server-Driven Coordination
• Request coordination component: a state machine handles incoming requests
• Server-driven approach:
  ◦ A load balancer uniformly assigns client requests to nodes in the ring
  ◦ Read request coordinator: any Dynamo node
  ◦ Write request coordinator: 1) a node in the key's current preference list, or 2) any random node, if the versioning scheme is based on physical timestamps
• Client-driven approach: the client uses a library to perform request coordination locally
  ◦ The client downloads the current membership state from a random Dynamo node every 10 s
  ◦ Read coordination: done at the client node
  ◦ Write coordination: 1) forwarded to a node in the key's preference list, or 2) coordinated locally if timestamp-based versioning is used

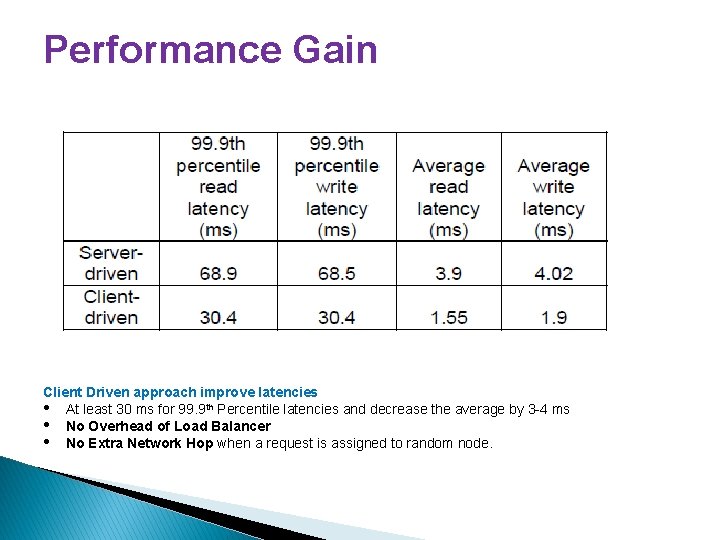
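A minimal sketch of client-driven routing, assuming the membership state can be fetched as a table from partition id to preference list (a hypothetical format) and that keys map to Q equal ranges of the MD5 space. The class, the `fetch_membership` callable, and the injected fake clock are assumptions for illustration; only the 10 s refresh interval comes from the slide.

```python
import hashlib
import time
from typing import Callable


def partition_of(key: str, q: int) -> int:
    """Map a key to one of q equal-sized ranges of the MD5 hash space."""
    return (int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16) * q) >> 128


class PartitionAwareClient:
    """Client-side coordination: cache membership and route to the coordinator directly."""

    def __init__(self, fetch_membership: Callable[[], dict], q: int,
                 refresh_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_membership    # hypothetical: asks a random Dynamo node for its view
        self._q = q
        self._refresh = refresh_seconds
        self._clock = clock
        self._table = fetch_membership()  # partition id -> preference list
        self._fetched_at = clock()
        self.fetches = 1

    def _maybe_refresh(self) -> None:
        if self._clock() - self._fetched_at >= self._refresh:
            self._table = self._fetch()
            self._fetched_at = self._clock()
            self.fetches += 1

    def coordinator_for(self, key: str) -> str:
        """First node of the key's preference list: no load balancer, no extra hop."""
        self._maybe_refresh()
        return self._table[partition_of(key, self._q)][0]


now = [0.0]                                           # fake clock for the example
table = {p: ["A", "B", "C"] if p % 2 == 0 else ["B", "C", "A"] for p in range(4)}
client = PartitionAwareClient(lambda: table, q=4, clock=lambda: now[0])
print(client.coordinator_for("cart:alice"))           # A or B, depending on the hash
now[0] = 10.0
client.coordinator_for("cart:alice")
print(client.fetches)                                 # 2: membership was refreshed after 10 s
```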

Performance Gain
• The client-driven approach improves latencies:
  ◦ 99.9th percentile latencies improve by at least 30 ms, and the average decreases by 3-4 ms
  ◦ No load balancer overhead
  ◦ No extra network hop when a request is assigned to a random node

Balancing Background vs. Foreground Tasks
• Each node performs background tasks (replica synchronization, data handoff) in addition to foreground tasks (get() and put())
• Background tasks trigger resource contention and affect the performance of get() and put() operations, so it is necessary to ensure that background tasks do not affect critical operations
• Background tasks are integrated with an admission control mechanism that reserves runtime slices of shared resources
  ◦ It constantly monitors resource accesses (e.g., disk operation latencies, failed database accesses) and decides how many time slices are available to background tasks
• A feedback loop limits the intrusiveness of background tasks: based on the observed performance of the foreground tasks, the mechanism changes the number of slices available to background tasks
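One simple way to realize such a feedback loop is an additive-increase/multiplicative-decrease rule. This is a swapped-in technique for illustration, since the slide does not specify Dynamo's actual control law; the class name, the latency target, and the slice counts are all hypothetical.

```python
class BackgroundAdmissionController:
    """Decide how many time slices background tasks may use, based on foreground latency."""

    def __init__(self, target_latency_ms: float, max_slices: int = 10) -> None:
        self.target = target_latency_ms   # hypothetical foreground latency goal
        self.max_slices = max_slices
        self.slices = max_slices

    def observe(self, foreground_latency_ms: float) -> int:
        """Feed one latency measurement back into the loop; return the new slice budget."""
        if foreground_latency_ms > self.target:
            self.slices //= 2                                     # back off quickly
        else:
            self.slices = min(self.max_slices, self.slices + 1)   # recover gradually
        return self.slices


ctl = BackgroundAdmissionController(target_latency_ms=10.0, max_slices=8)
print([ctl.observe(ms) for ms in (5, 25, 30, 5)])  # [8, 4, 2, 3]
```

Halving quickly protects get() and put() as soon as foreground latency degrades, while the slow recovery keeps background work from immediately reclaiming the resources.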

Summary: Experiences & Lessons Learned
• Different services use Dynamo with different configurations
• Different read/write quorum characteristics
• Client applications can tune parameters to achieve specific objectives:
  ◦ N: performance, durability {number of hosts a data item is replicated at}
  ◦ R: availability, consistency {minimum number of nodes in a successful read}
  ◦ W: durability, consistency {minimum number of nodes in a successful write}
  ◦ Commonly used configuration: (N, R, W) = (3, 2, 2)
• Dynamo exposes data consistency & reconciliation logic to developers
• Dynamo adopts a full membership model: each node is aware of the data hosted by its peers
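The N/R/W trade-offs can be made concrete with a small, hypothetical `QuorumConfig` helper. It counts failures only among the N home replicas and ignores sloppy-quorum substitutes, so its overlap check (R + W > N) describes the case where no substitute nodes are used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuorumConfig:
    n: int   # replicas per key
    r: int   # replies needed for a successful read
    w: int   # acknowledgements needed for a successful write

    def __post_init__(self) -> None:
        if not (1 <= self.r <= self.n and 1 <= self.w <= self.n):
            raise ValueError("R and W must be between 1 and N")

    @property
    def read_write_overlap(self) -> bool:
        """R + W > N: every read set intersects every write set (quorum-like behavior)."""
        return self.r + self.w > self.n

    @property
    def reads_tolerate(self) -> int:
        """Unreachable home replicas a read can survive."""
        return self.n - self.r

    @property
    def writes_tolerate(self) -> int:
        """Unreachable home replicas a write can survive (without substitutes)."""
        return self.n - self.w


for cfg in (QuorumConfig(3, 2, 2), QuorumConfig(3, 1, 3), QuorumConfig(3, 1, 1)):
    print(cfg, cfg.read_write_overlap, cfg.reads_tolerate, cfg.writes_tolerate)
```

The common (3, 2, 2) setting overlaps and tolerates one slow or failed replica on both paths; the (3, 1, 3) read engine makes reads cheapest at the cost of writes that need every replica.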

Conclusion
Dynamo:
• Is a highly available and scalable data store
• Is used for storing the state of a number of core services of Amazon.com's e-commerce platform
• Has provided the desired levels of availability and performance and has successfully handled:
  ◦ Server failures
  ◦ Data center failures
  ◦ Network partitions
• Is incrementally scalable
• Sacrifices consistency under certain failure scenarios
• Extensively uses object versioning and application-assisted conflict resolution
• Allows service owners to:
  ◦ Scale up and down based on their current request load
  ◦ Customize their storage system to meet desired performance, durability, and consistency SLAs by tuning the N, R, and W parameters
• Shows that decentralized techniques can be combined to provide a single highly available system

Summary
• Always writeable
• Consistent hashing
• Vector clocks
• Recovery from permanent failures (replica synchronization): anti-entropy using Merkle trees
• Sloppy quorum and hinted handoff
• Membership and failure detection: gossip


THANK YOU
