Dynamic Workcell for Industrial Robots

Yanfei Liu, Dept. of Electrical and Computer Engineering, Clemson University, SC

Outline
• Motivation for this research
  – Current status of vision in industrial workcells
  – A novel industrial workcell with continuous visual guidance
• Work that has been done
  – Our prototype: camera network based industrial workcell
  – A new generic timing model for vision-based robotic systems
  – Dynamic intercept and manipulation of objects under semi-structured motion
  – Grasping research using a novel flexible pneumatic end-effector

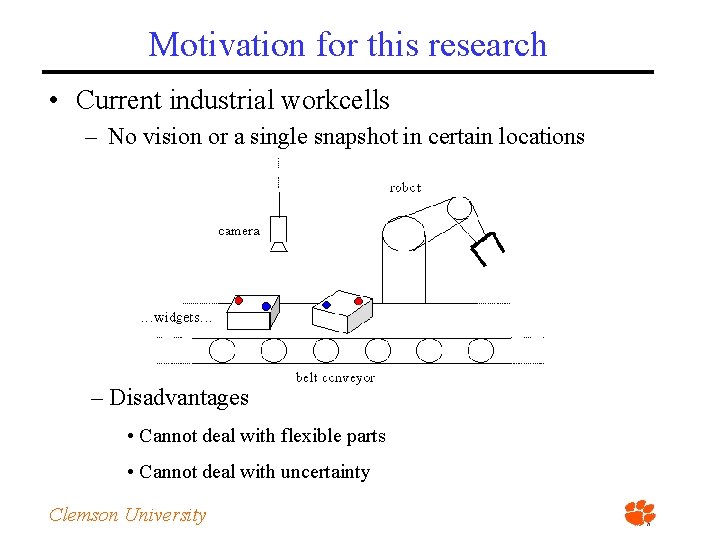

Motivation for this research
• Current industrial workcells
  – No vision, or a single snapshot in certain locations
  – Disadvantages
    • Cannot deal with flexible parts
    • Cannot deal with uncertainty

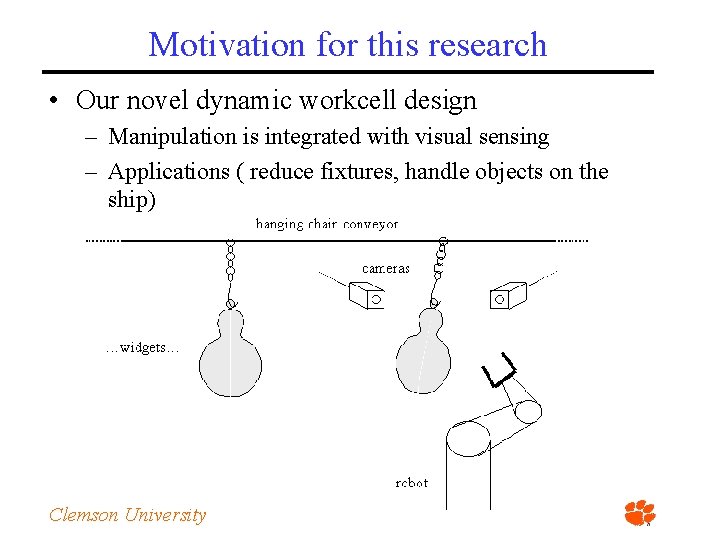

Motivation for this research
• Our novel dynamic workcell design
  – Manipulation is integrated with visual sensing
  – Applications (reducing fixtures, handling objects on a ship)

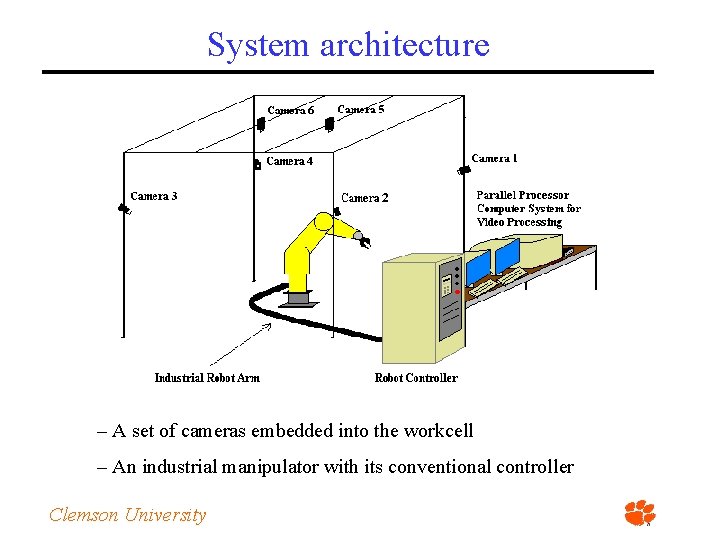

System architecture
  – A set of cameras embedded into the workcell
  – An industrial manipulator with its conventional controller

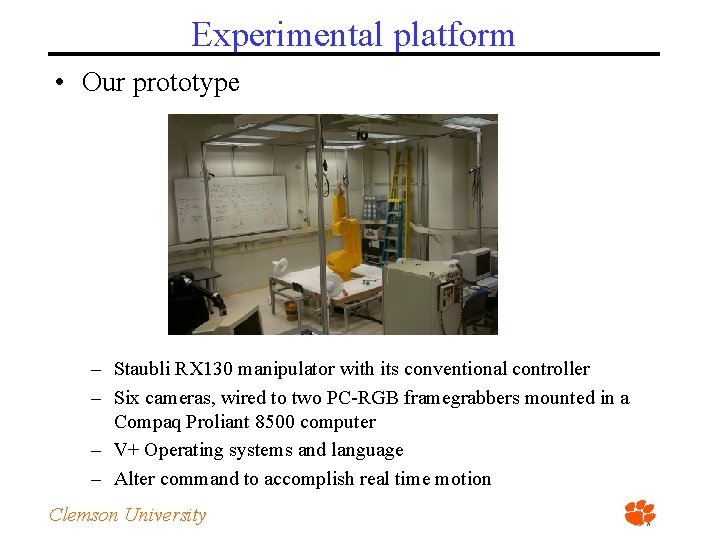

Experimental platform
• Our prototype
  – Staubli RX 130 manipulator with its conventional controller
  – Six cameras, wired to two PC-RGB framegrabbers mounted in a Compaq Proliant 8500 computer
  – V+ operating system and language
  – Alter command used to accomplish real-time motion

Tracking experiments

First part: A new generic timing model for vision-based robotic systems

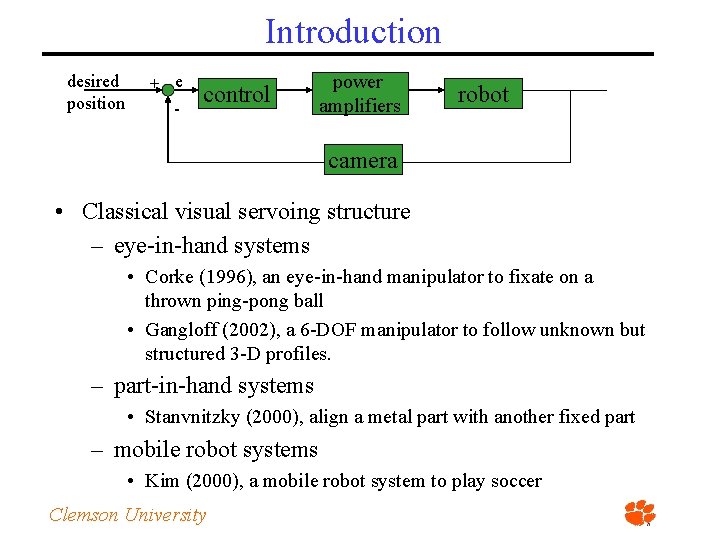

Introduction
[Block diagram: desired position, error e, control, power amplifiers, robot, camera feedback]
• Classical visual servoing structure
  – Eye-in-hand systems
    • Corke (1996), an eye-in-hand manipulator to fixate on a thrown ping-pong ball
    • Gangloff (2002), a 6-DOF manipulator to follow unknown but structured 3-D profiles
  – Part-in-hand systems
    • Stavnitzky (2000), aligning a metal part with another fixed part
  – Mobile robot systems
    • Kim (2000), a mobile robot system to play soccer

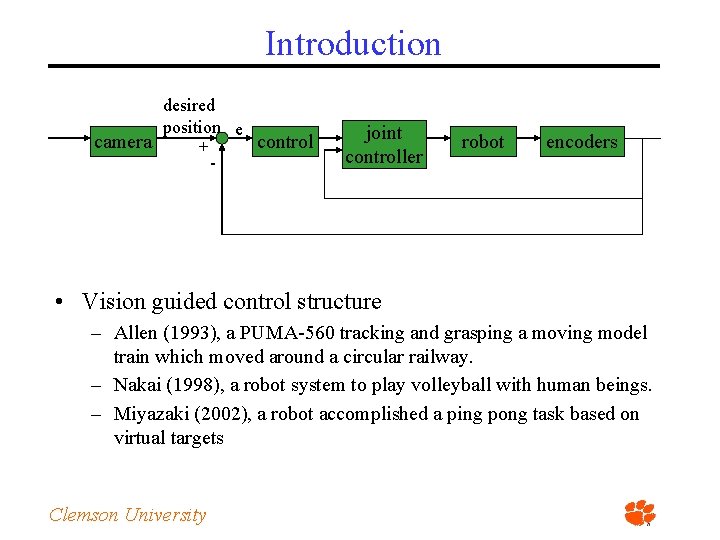

Introduction
[Block diagram: desired position, camera, error e, control, joint controller, robot, encoders]
• Vision guided control structure
  – Allen (1993), a PUMA-560 tracking and grasping a moving model train that travelled around a circular railway
  – Nakai (1998), a robot system to play volleyball with human beings
  – Miyazaki (2002), a robot that accomplished a ping-pong task based on virtual targets

Introduction
• Three common problems in visual systems
  – The maximum achievable rate for complex visual sensing and processing is much lower than the minimum rate required for mechanical control.
  – Complex visual processing introduces a significant processing lag between the moment reality is sensed and the moment the processed measurement of the object state becomes available.
  – A motion lag arises because the mechanical system takes time to complete the desired motion.

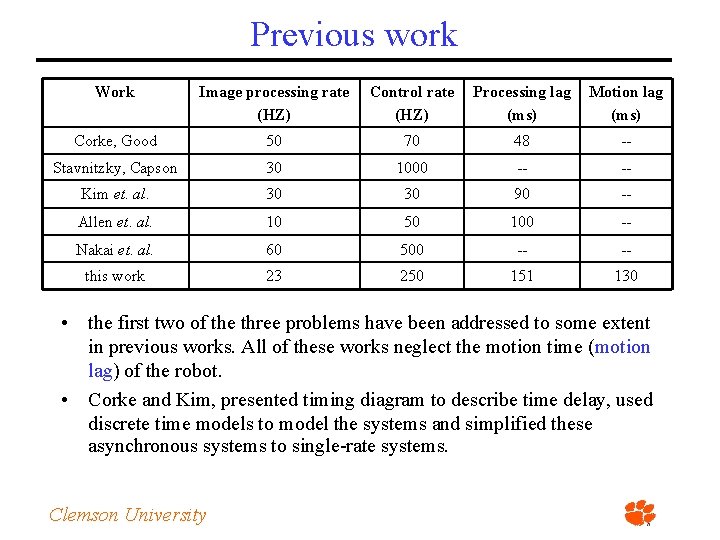

Previous work

| Work | Image processing rate (Hz) | Control rate (Hz) | Processing lag (ms) | Motion lag (ms) |
|---|---|---|---|---|
| Corke, Good | 50 | 70 | 48 | -- |
| Stavnitzky, Capson | 30 | 1000 | -- | -- |
| Kim et al. | 30 | 30 | 90 | -- |
| Allen et al. | 10 | 50 | 100 | -- |
| Nakai et al. | 60 | 500 | -- | -- |
| This work | 23 | 250 | 151 | 130 |

• The first two of the three problems have been addressed to some extent in previous works. All of these works neglect the motion time (motion lag) of the robot.
• Corke and Kim presented timing diagrams to describe the time delay, used discrete-time models of their systems, and simplified these asynchronous systems to single-rate systems.

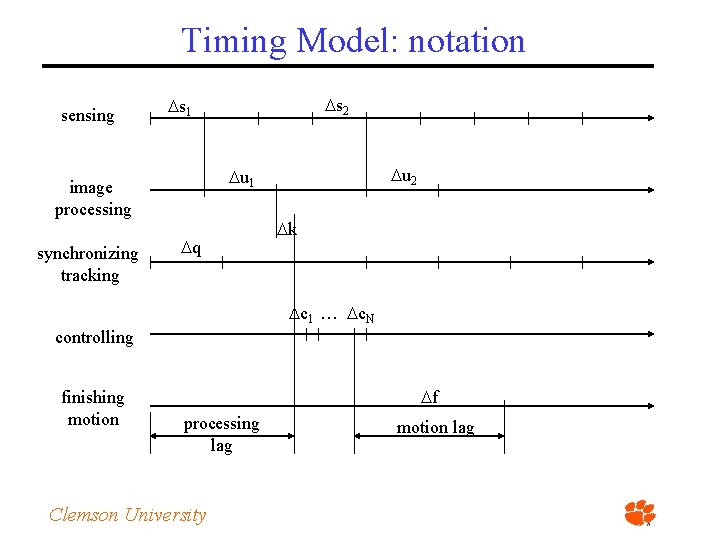

Timing Model: notation
[Timing diagram. Stages: sensing, image processing, synchronizing, tracking, controlling, finishing motion. Symbols: s1, s2, u1, u2, q, k, c1 … cN, f. Spans marked: processing lag, motion lag]

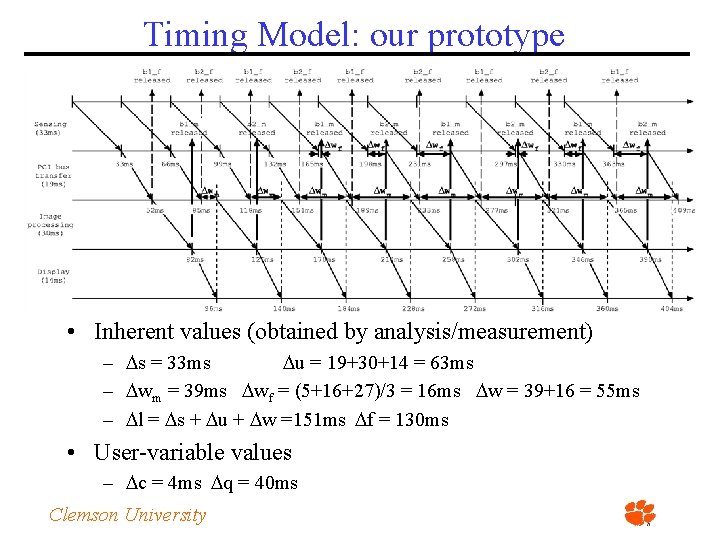

Timing Model: our prototype
• Inherent values (obtained by analysis/measurement)
  – s = 33 ms
  – u = 19 + 30 + 14 = 63 ms
  – wm = 39 ms
  – wf = (5 + 16 + 27) / 3 = 16 ms
  – w = 39 + 16 = 55 ms
  – l = s + u + w = 151 ms
  – f = 130 ms
• User-variable values
  – c = 4 ms
  – q = 40 ms
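The arithmetic on this slide can be checked with a short script. This is only a sketch: the numbers are copied from the slide, while the function name and the reading of s as sensing time, u as image processing time, and w as synchronization wait are assumptions based on the notation slide.

```python
# Component timings in milliseconds, copied from the slide.
S_MS = 33                    # s (assumed: sensing time)
U_MS = 19 + 30 + 14          # u (assumed: three image processing stages)
WM_MS = 39                   # wm, as given on the slide
WF_MS = (5 + 16 + 27) / 3    # wf, mean of three measured values


def processing_lag_ms(s, u, w):
    """Processing lag l = s + u + w, all in milliseconds."""
    return s + u + w


W_MS = WM_MS + WF_MS
LAG_MS = processing_lag_ms(S_MS, U_MS, W_MS)
print(f"u = {U_MS} ms, w = {W_MS:.0f} ms, l = {LAG_MS:.0f} ms")
```

Keeping the components separate makes it easy to see which measured term dominates the lag when a camera or framegrabber is changed.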

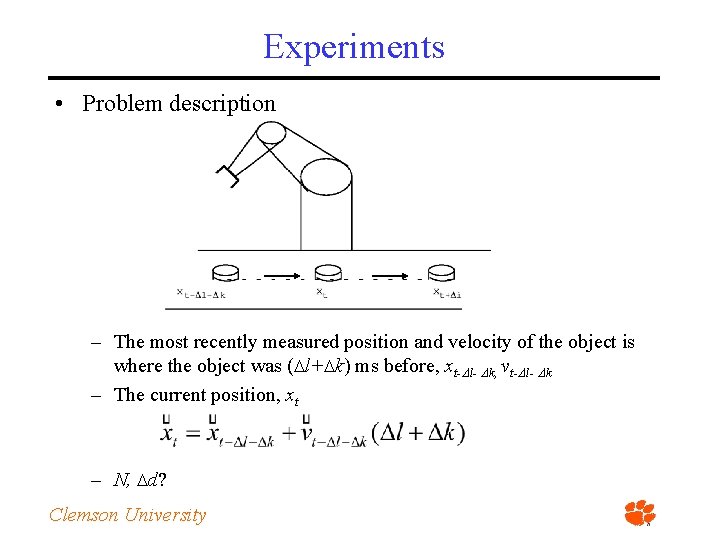

Experiments
• Problem description
  – The most recent measurement gives the position and velocity of the object as they were $(l + k)$ ms earlier: $x_{t-l-k}$, $v_{t-l-k}$
  – The current position, $x_t$
  – N, d?
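One simple way to bridge the gap between the stale measurement and the current position is to extrapolate at constant velocity. The sketch below is an assumption for illustration, not the solution from the slides; the function name and the units (mm, mm/s, ms) are hypothetical choices.

```python
def predict_position(x_measured_mm, v_measured_mm_s, lag_ms, k_ms):
    """Extrapolate a stale measurement to the current time.

    Assumes the object kept the measured velocity during the
    (lag_ms + k_ms) milliseconds since it was sensed.
    """
    elapsed_s = (lag_ms + k_ms) / 1000.0
    return x_measured_mm + v_measured_mm_s * elapsed_s


# Example: object measured at 0 mm moving at 100 mm/s, l = 151 ms, k = 20 ms.
x_now = predict_position(0.0, 100.0, 151, 20)
print(f"estimated current position: {x_now:.1f} mm")
```

With these example inputs the object has moved about 17 mm since it was sensed, which is why the lag cannot be ignored at belt speeds of this order.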

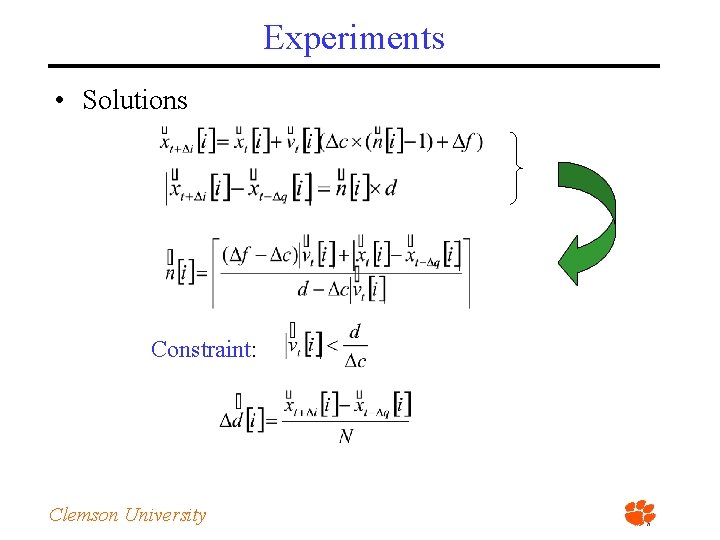

Experiments
• Solutions
Constraint:

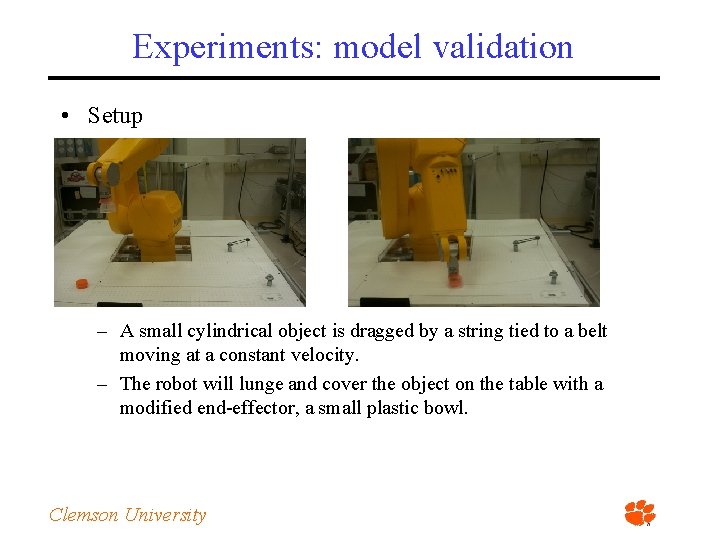

Experiments: model validation
• Setup
  – A small cylindrical object is dragged by a string tied to a belt moving at a constant velocity.
  – The robot lunges and covers the object on the table with a modified end-effector, a small plastic bowl.

Experiments (video)

Experiments
• Experiment description
  – We set q to two different values, 40 and 80, in two sets of experiments. The object moved at three different velocities, and for each velocity we ran the experiment ten times.
• Results

| q | Velocity range (mm/s) | Stdev range | Catch percentage |
|---|---|---|---|
| 40 | 84.4 – 97.4 | 1.3 – 3.8 | 100% |
| 40 | 129.8 – 146.7 | 1.7 – 3.2 | 100% |
| 40 | 177.6 – 195.1 | 0.5 – 2.6 | 100% |
| 80 | 85.9 – 95.1 | 2.5 – 3.7 | 100% |
| 80 | 126.1 – 137.7 | 1.7 – 3.3 | 100% |
| 80 | 175.8 – 192.8 | 1.1 – 2.7 | 100% |

Experiments (video)

Second part: Dynamic Intercept and Manipulation of Objects under Semi-Structured Motion

Scooping balls (video)

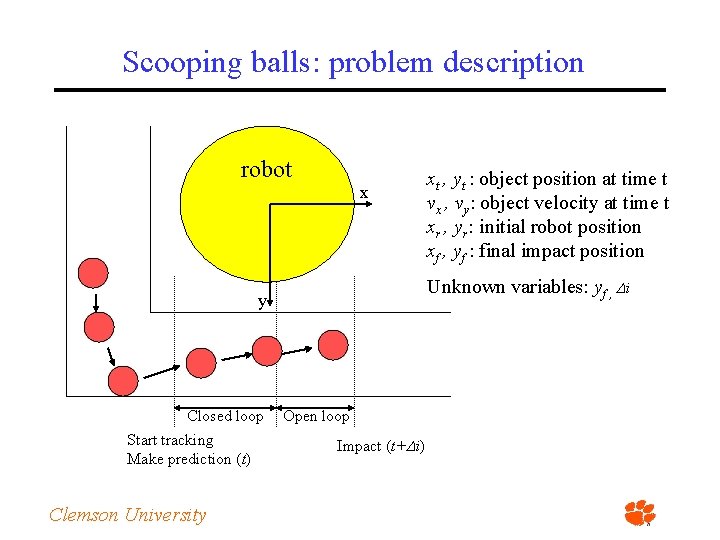

Scooping balls: problem description
[Diagram: robot and object in the x-y plane. Closed loop from the start of tracking until the prediction is made at time t; open loop from t until impact at time t + i]
  – $x_t$, $y_t$: object position at time t
  – $v_x$, $v_y$: object velocity at time t
  – $x_r$, $y_r$: initial robot position
  – $x_f$, $y_f$: final impact position
  – Unknown variables: $y_f$, $i$
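A minimal sketch of how the two unknowns could be obtained, assuming the object keeps a constant velocity during the open-loop phase and that the impact coordinate $x_f$ is chosen in advance. Both assumptions, and the function name, are illustrative; the slides do not state the actual equations in recoverable form.

```python
def predict_impact(xt, yt, vx, vy, xf):
    """Return (yf, i): impact y-coordinate and time to impact.

    Assumes constant velocity (vx, vy) from time t until impact and a
    known impact coordinate xf. Units only need to be consistent.
    """
    if vx == 0:
        raise ValueError("object is not moving along x; xf is never reached")
    i = (xf - xt) / vx
    if i < 0:
        raise ValueError("object is moving away from xf")
    yf = yt + vy * i
    return yf, i


# Example: object at (0, 100) mm moving at (200, -50) mm/s, impact at x = 400 mm.
yf, i = predict_impact(0.0, 100.0, 200.0, -50.0, 400.0)
print(f"impact at y = {yf:.1f} mm after {i:.2f} s")
```

In practice the predicted time to impact would have to exceed the object unsensed time, otherwise the robot cannot arrive before the object does.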

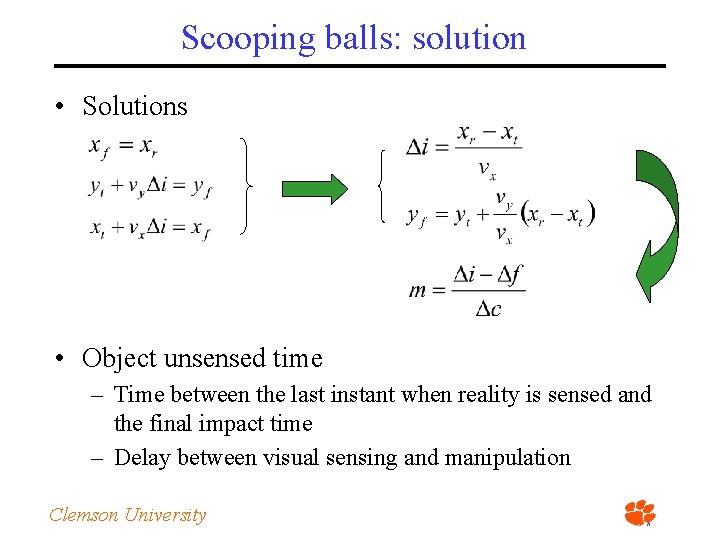

Scooping balls: solution
• Solutions
• Object unsensed time
  – Time between the last instant when reality is sensed and the final impact time
  – Delay between visual sensing and manipulation

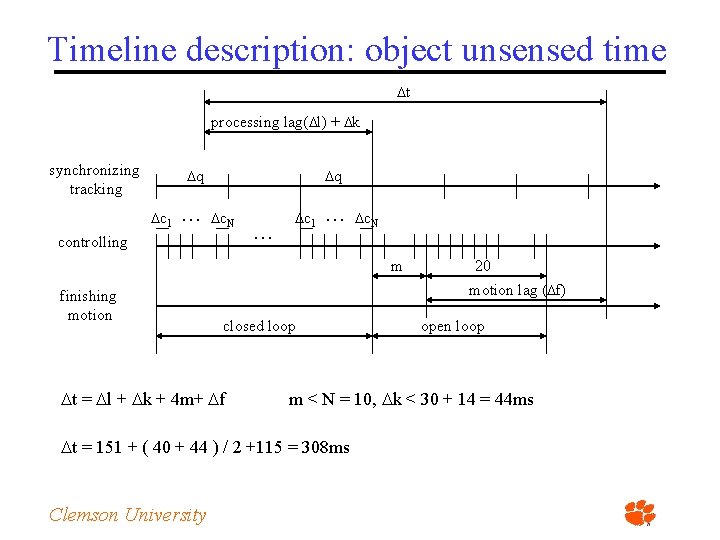

Timeline description: object unsensed time
[Timing diagram. Closed loop: processing lag (l) + k, synchronizing, tracking, q, controlling c1 … cN. Open loop: m control periods, then finishing motion lag (f)]
  – $t = l + k + 4m + f$
  – $m < N = 10$, $k < 30 + 14 = 44$ ms
  – $t = 151 + (40 + 44)/2 + 115 = 308$ ms
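The slide's estimate can be reproduced with a small helper. This sketch assumes the factor 4 in the formula is the control period c = 4 ms, and that the 308 ms figure takes the midpoint of the ranges of k and m; the names below are hypothetical.

```python
def unsensed_time_ms(lag_ms, k_ms, m, motion_lag_ms, control_period_ms=4):
    """Object unsensed time t = l + k + c*m + f, in milliseconds."""
    return lag_ms + k_ms + control_period_ms * m + motion_lag_ms


LAG_MS = 151          # processing lag l
MOTION_LAG_MS = 115   # f as used on this slide
K_MAX_MS = 30 + 14    # upper bound on k
N = 10                # upper bound on m

# Midpoint estimate: k and m at half of their upper bounds.
typical = unsensed_time_ms(LAG_MS, K_MAX_MS / 2, N / 2, MOTION_LAG_MS)
# Upper bound: k and m at their limits.
bound = unsensed_time_ms(LAG_MS, K_MAX_MS, N, MOTION_LAG_MS)
print(f"typical: {typical:.0f} ms, upper bound: {bound:.0f} ms")
```

The midpoint case matches the 308 ms on the slide; the upper bound shows how much margin a conservative design would need.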

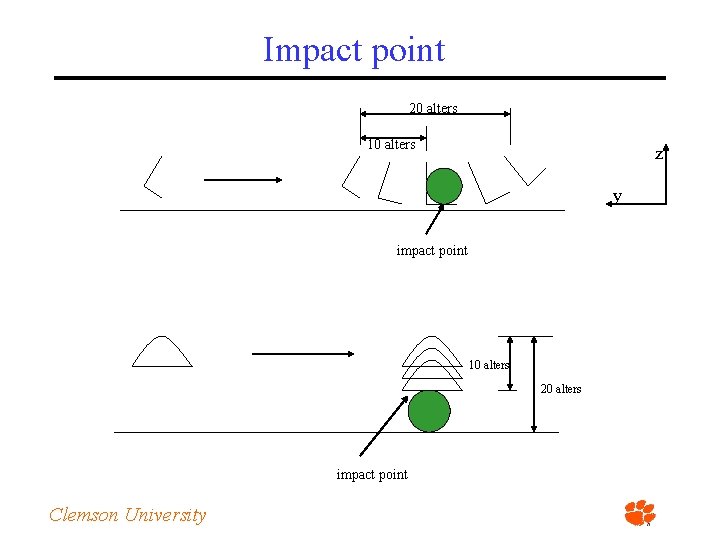

Impact point
[Figure: two panels showing the impact point on trajectories of 10 alters and 20 alters, with y and z axes]

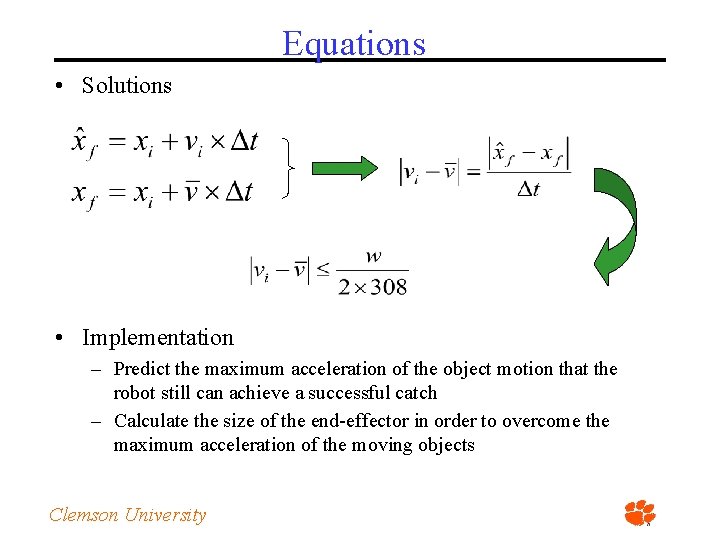

Equations
• Solutions
• Implementation
  – Predict the maximum object acceleration for which the robot can still achieve a successful catch
  – Calculate the end-effector size needed to tolerate the maximum acceleration of the moving objects
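One way to relate acceleration and end-effector size is sketched below. It assumes the prediction is made at constant velocity, so an unmodelled constant acceleration a acting over the object unsensed time t shifts the object by 0.5·a·t², and the catch succeeds while that shift stays within the half-width of the end-effector. This model and the function names are assumptions for illustration, not the equations from the slides.

```python
def position_error_mm(accel_mm_s2, unsensed_time_s):
    """Drift from a constant-velocity prediction under constant acceleration."""
    return 0.5 * accel_mm_s2 * unsensed_time_s ** 2


def max_tolerable_acceleration(half_width_mm, unsensed_time_s):
    """Largest acceleration whose drift still fits inside the end-effector."""
    return 2.0 * half_width_mm / unsensed_time_s ** 2


def required_half_width_mm(max_accel_mm_s2, unsensed_time_s):
    """End-effector half-width needed to absorb a given acceleration."""
    return position_error_mm(max_accel_mm_s2, unsensed_time_s)


T_S = 0.308  # object unsensed time from the timeline slide, in seconds
a_max = max_tolerable_acceleration(50.0, T_S)
print(f"a 50 mm half-width tolerates about {a_max:.0f} mm/s^2")
print(f"1000 mm/s^2 needs about {required_half_width_mm(1000.0, T_S):.1f} mm")
```

Because the drift grows with the square of the unsensed time, shortening the lag is far more effective than enlarging the end-effector.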

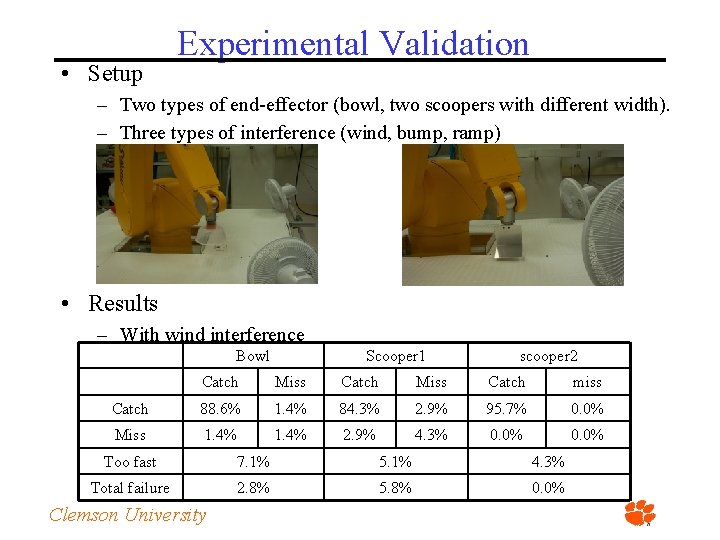

Experimental Validation
• Setup
  – Two types of end-effector (bowl, two scoopers with different widths)
  – Three types of interference (wind, bump, ramp)
• Results
  – With wind interference
    Columns, in order: Bowl (Catch, Miss), Scooper 1 (Catch, Miss), Scooper 2 (Catch, Miss)
    Catch: 88.6%, 1.4%, 84.3%, 2.9%, 95.7%, 0.0%
    Miss: 1.4%, 2.9%, 4.3%, 0.0%
    Too fast: 7.1%, 5.1%, 4.3%
    Total failure: 2.8%, 5.8%, 0.0%

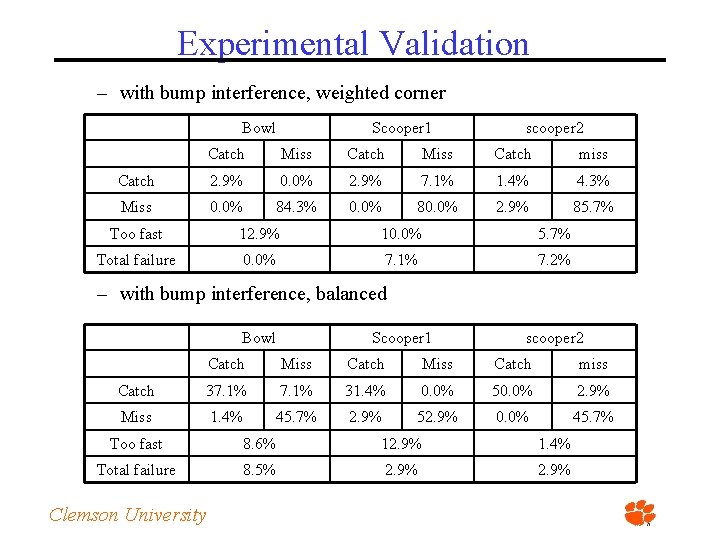

Experimental Validation
  – With bump interference, weighted corner

| | Bowl: Catch | Bowl: Miss | Scooper 1: Catch | Scooper 1: Miss | Scooper 2: Catch | Scooper 2: Miss |
|---|---|---|---|---|---|---|
| Catch | 2.9% | 0.0% | 2.9% | 7.1% | 1.4% | 4.3% |
| Miss | 0.0% | 84.3% | 0.0% | 80.0% | 2.9% | 85.7% |

| | Bowl | Scooper 1 | Scooper 2 |
|---|---|---|---|
| Too fast | 12.9% | 10.0% | 5.7% |
| Total failure | 0.0% | 7.1% | 7.2% |

  – With bump interference, balanced
    Columns, in order: Bowl (Catch, Miss), Scooper 1 (Catch, Miss), Scooper 2 (Catch, Miss)
    Catch: 37.1%, 31.4%, 0.0%, 50.0%, 2.9%
    Miss: 1.4%, 45.7%, 2.9%, 52.9%, 0.0%, 45.7%
    Too fast: 8.6%, 12.9%, 1.4%
    Total failure: 8.5%, 2.9%

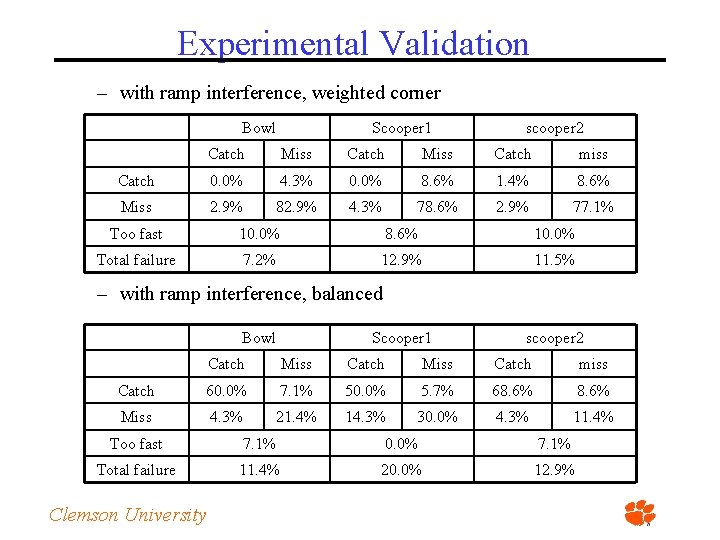

Experimental Validation
  – With ramp interference, weighted corner

| | Bowl: Catch | Bowl: Miss | Scooper 1: Catch | Scooper 1: Miss | Scooper 2: Catch | Scooper 2: Miss |
|---|---|---|---|---|---|---|
| Catch | 0.0% | 4.3% | 0.0% | 8.6% | 1.4% | 8.6% |
| Miss | 2.9% | 82.9% | 4.3% | 78.6% | 2.9% | 77.1% |

| | Bowl | Scooper 1 | Scooper 2 |
|---|---|---|---|
| Too fast | 10.0% | 8.6% | 10.0% |
| Total failure | 7.2% | 12.9% | 11.5% |

  – With ramp interference, balanced
    Columns, in order: Bowl (Catch, Miss), Scooper 1 (Catch, Miss), Scooper 2 (Catch, Miss)
    Catch: 60.0%, 7.1%, 50.0%, 5.7%, 68.6%
    Miss: 4.3%, 21.4%, 14.3%, 30.0%, 4.3%, 11.4%
    Too fast: 7.1%, 0.0%, 7.1%
    Total failure: 11.4%, 20.0%, 12.9%

Third part: A Novel Pneumatic Three-finger Robot Hand

Related work
• Three different types of robot hands
  – Electric motor powered hands, for example:
    • A. Ramos et al., Goldfinger
    • C. Lovchik et al., the Robonaut hand
    • J. Butterfaß et al., DLR-Hand
    • Barrett hand
  – Pneumatically driven hands:
    • S. Jacobsen et al., Utah/M.I.T. hand
  – Hydraulically driven hands:
    • D. Schmidt et al., hydraulically actuated finger
• Vision-based robot hand research
  – A. Morales et al. presented a vision-based strategy for computing three-finger grasps on unknown planar objects
  – A. Hauck et al. determined 3D grasps on unknown, non-polyhedral objects using a parallel jaw gripper

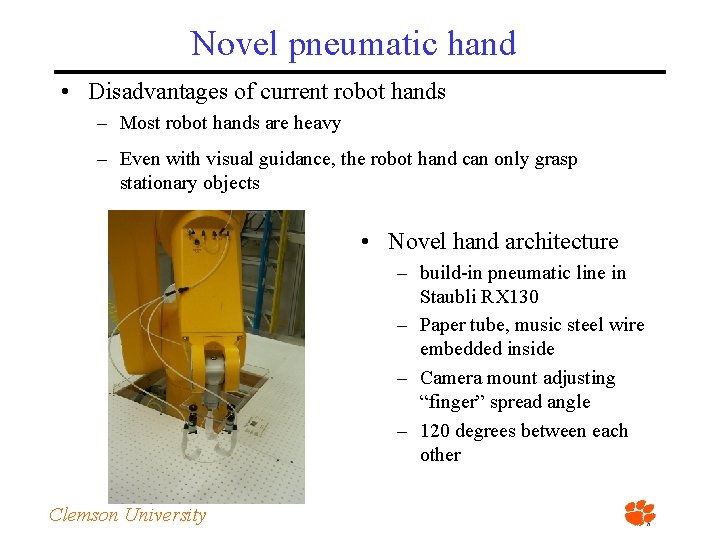

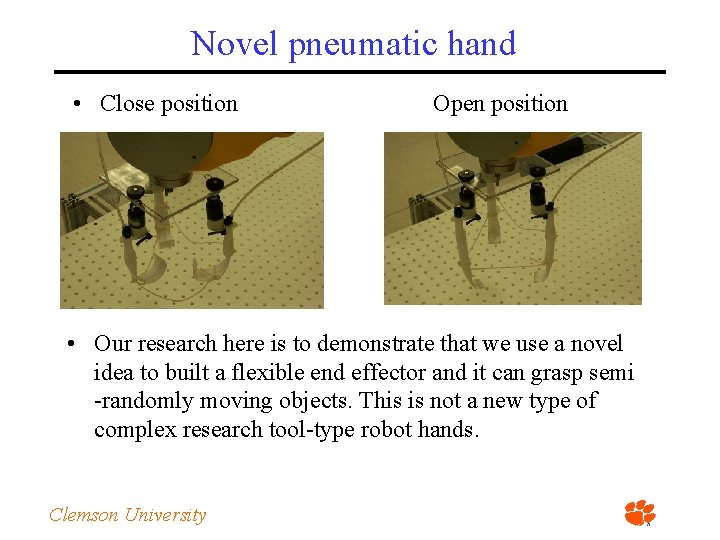

Novel pneumatic hand
• Disadvantages of current robot hands
  – Most robot hands are heavy
  – Even with visual guidance, the robot hand can only grasp stationary objects
• Novel hand architecture
  – Uses the built-in pneumatic line of the Staubli RX 130
  – Paper tubes with steel music wire embedded inside
  – Camera mount for adjusting the “finger” spread angle
  – Fingers spaced 120 degrees apart

Novel pneumatic hand
[Figure: closed position and open position]
• The aim of this research is to demonstrate a novel idea for building a flexible end-effector that can grasp semi-randomly moving objects. It is not meant as a new complex, research-tool type of robot hand.

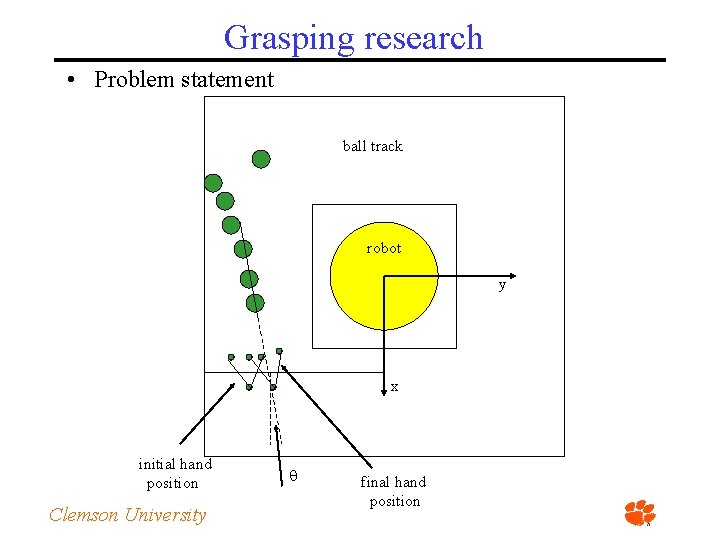

Grasping research
• Problem statement
[Diagram: ball track, robot, x and y axes, initial hand position, final hand position]

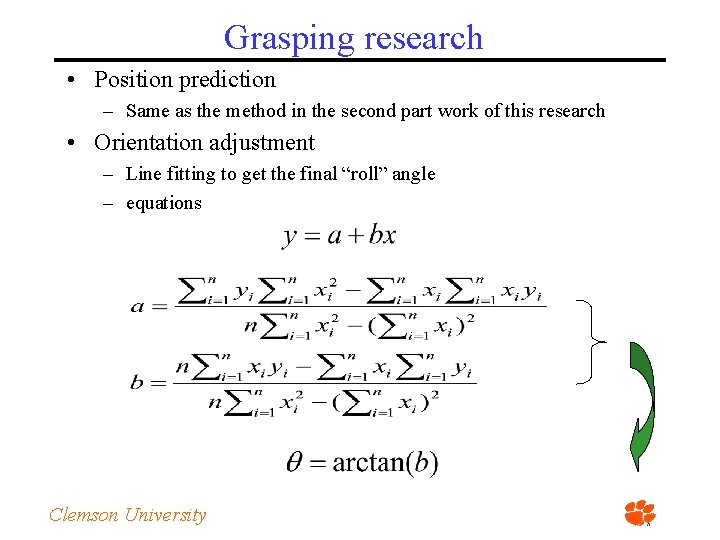

Grasping research
• Position prediction
  – Same method as in the second part of this research
• Orientation adjustment
  – Line fitting to get the final “roll” angle
  – Equations
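The line-fitting step might look like the sketch below, which fits the principal axis of the tracked (x, y) positions and reports its angle. This is a stand-in technique (total least squares), not necessarily the fit used in this work; the function name and the convention of measuring the angle from the x axis are assumptions.

```python
import math


def fit_roll_angle_deg(points):
    """Angle in degrees of the best-fit line through (x, y) points.

    Uses the principal axis of the point scatter, so vertical tracks are
    handled as well. The result lies in (-90, 90].
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points to fit a line")
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    syy = sum((y - mean_y) ** 2 for _, y in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    return math.degrees(0.5 * math.atan2(2.0 * sxy, sxx - syy))


# Example: tracked positions lying on the line y = x.
track = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
print(f"roll angle: {fit_roll_angle_deg(track):.1f} degrees")
```

Fitting over several tracked positions rather than using only the last velocity estimate smooths out per-frame measurement noise.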

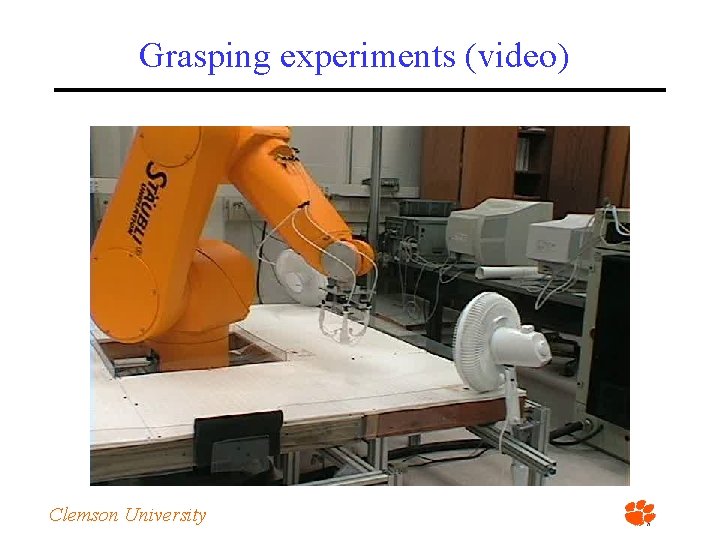

Grasping experiments (video)

Conclusions: timing model
• We present a generic timing model for a robotic system using visual sensing, where the camera provides the desired position to the robot controller.
• We demonstrate how to obtain the values of the model parameters, using our camera network workcell as an example.
• We implement the model to let our industrial manipulator intercept a moving object.
• Experimental results indicate that our model is highly effective and generalizable.

Conclusions: dynamic manipulation
• Based on the timing model, we present a novel, generic, and simple theory to quantify the dynamic intercept ability of vision-based robotic systems.
• We validate the theory with 15 sets of experiments (1050 runs), using two different end-effectors under three different types of interference.
• The experimental results demonstrate that our theory is effective.

Conclusions: novel pneumatic hand
• A novel pneumatic three-finger hand is designed and demonstrated.
• It is simple, light, and effective.
• Experimental results demonstrate that this novel pneumatic hand can grasp semi-randomly moving objects.
• Advantages
  – The compliance from pneumatics will allow the three-finger hand to manipulate more delicate and fragile objects.
  – When grasping moving objects, the contact position for this continuous finger is not very critical, unlike with a traditional gripper, which leaves more room for sensing error.

Sponsors
• The South Carolina Commission on Higher Education
• The Staubli Corporation
• The U.S. Office of Naval Research

Thanks

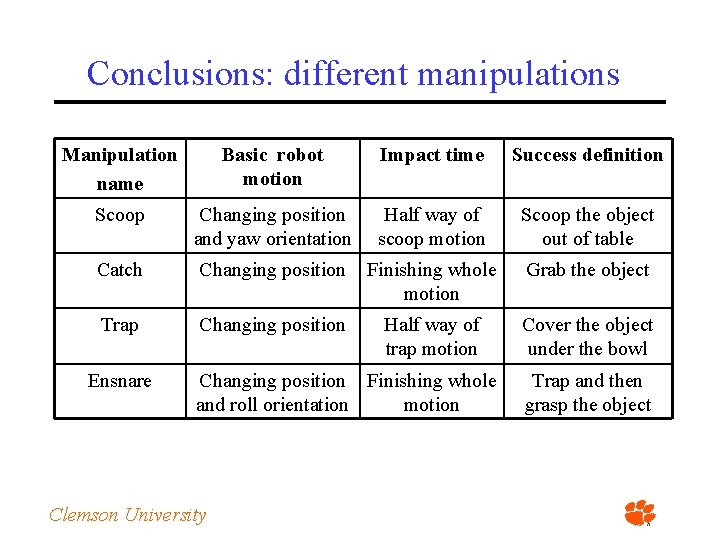

Conclusions: different manipulations

| Manipulation name | Basic robot motion | Impact time | Success definition |
|---|---|---|---|
| Scoop | Changing position and yaw orientation | Half way of scoop motion | Scoop the object out of table |
| Catch | Changing position | Finishing whole motion | Grab the object |
| Trap | Changing position | Half way of trap motion | Cover the object under the bowl |
| Ensnare | Changing position and roll orientation | Finishing whole motion | Trap and then grasp the object |

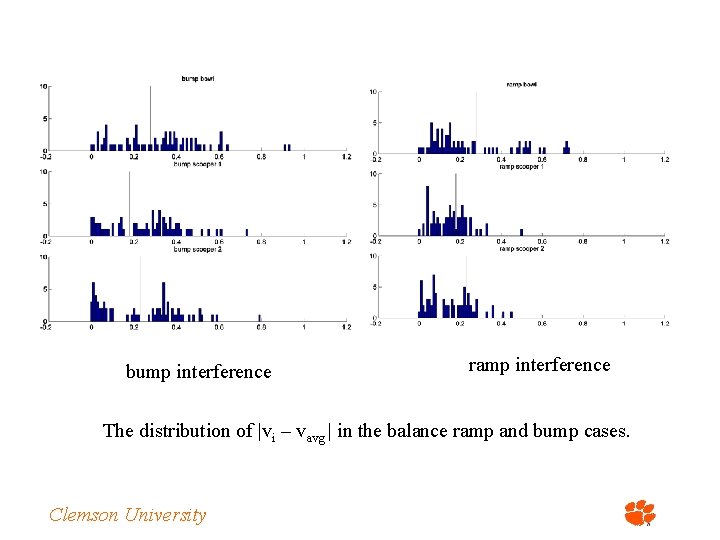

[Figure panels: bump interference, ramp interference]
The distribution of $|v_i - v_{avg}|$ in the balanced ramp and bump cases.

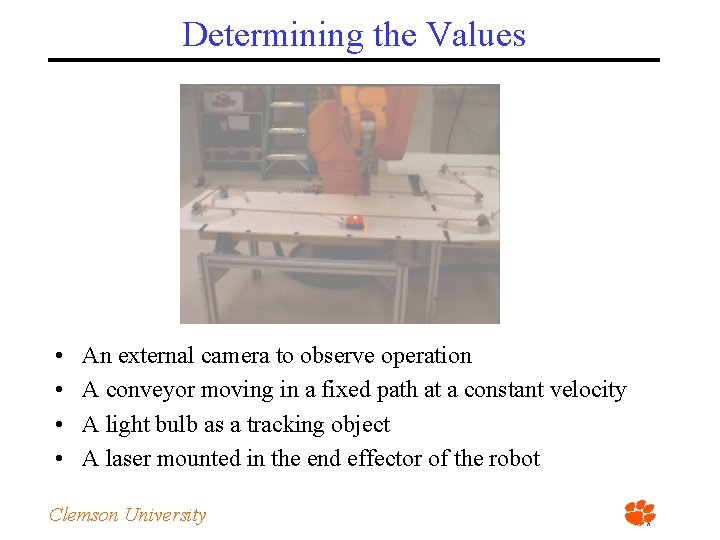

Determining the Values
• An external camera to observe operation
• A conveyor moving in a fixed path at a constant velocity
• A light bulb as a tracking object
• A laser mounted in the end effector of the robot
