Dynamic Resource Management in Internet Data Centers

Prashant Shenoy
University of Massachusetts, Computer Science

Motivation

- Internet applications are used in a variety of domains
  - Online banking, online brokerage, online music stores, e-commerce
- Internet usage continues to grow rapidly
  - Broadband deployment is accelerating
- Outages of Internet applications are becoming more common
  - “Site not responding”, “connection timed out”

Internet Application Outages

- Holiday shopping season 2000:
  - Down for 30 minutes
  - Periodic outages over 4 days
  - Average download time ~260 sec
- 9/11: site inaccessible for brief periods
- Cause: too many users leading to overload

Internet Workloads Are Highly Variable

- Short-term fluctuations
  - “Slashdot Effect”
  - Flash crowds
- Long-term seasonal effects
  - Time-of-day, month-of-year
- Peak is difficult to predict
  - Static overprovisioning is not effective
- Manual allocation: slow

[Figure: Soccer World Cup '98 workload]

Key issue: How can we design applications to handle large workload variations?

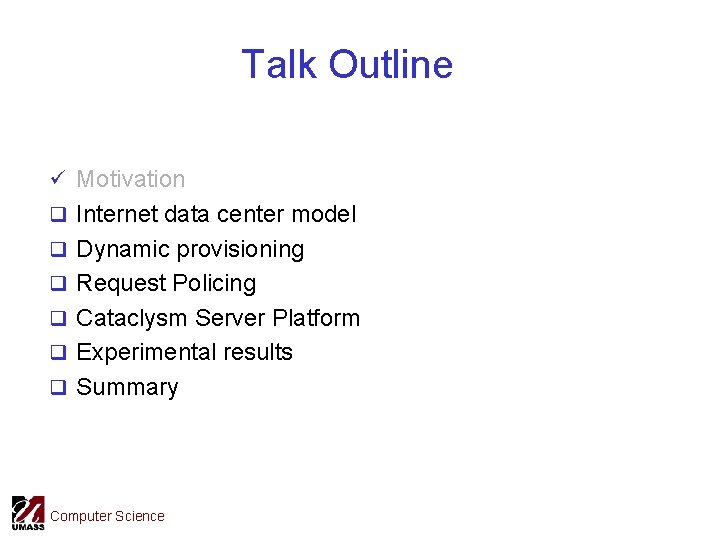

Internet Data Centers

- Internet applications run on data centers
- Server farms
- Provide computational and storage resources
- Applications share data center resources
- Problem: How should the platform allocate resources to absorb workload variations?

Talk Outline

- Motivation (covered)
- Internet data center model
- Dynamic provisioning
- Request policing
- Cataclysm server platform
- Experimental results
- Summary

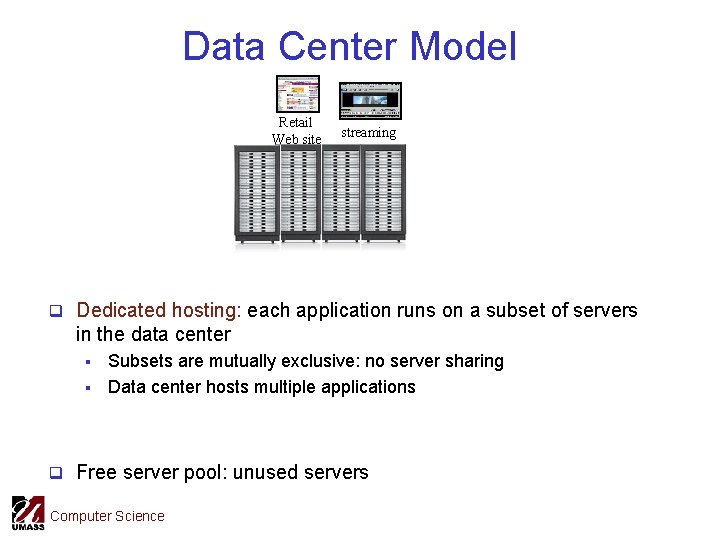

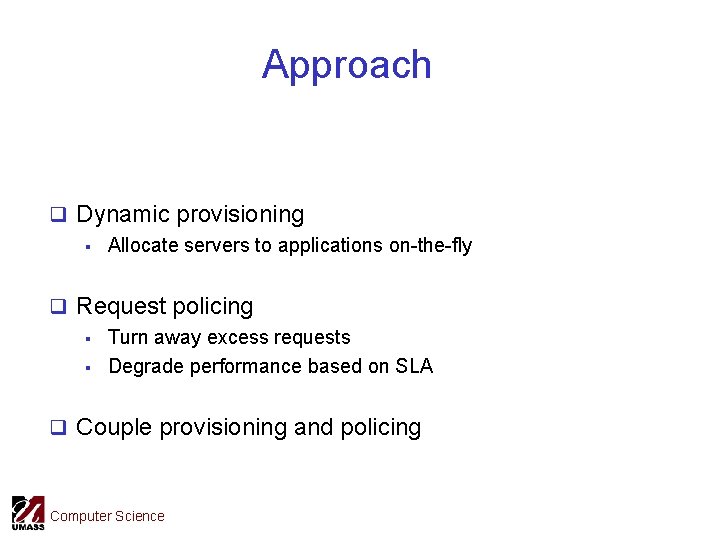

Data Center Model

[Figure labels: retail Web site, streaming]

- Dedicated hosting: each application runs on a subset of servers in the data center
  - Subsets are mutually exclusive: no server sharing
  - Data center hosts multiple applications
- Free server pool: unused servers

Internet Application Model

[Figure labels: requests, load balancing sentry, http, J2EE, database]

- Internet applications: multiple tiers
  - Example: 3 tiers: HTTP, J2EE app server, database
- Replicable applications
  - Individual tiers: partially or fully replicable
  - Example: clustered HTTP, J2EE server, shared-nothing db
- Each application employs a sentry
  - Each tier uses a dispatcher: load balancing

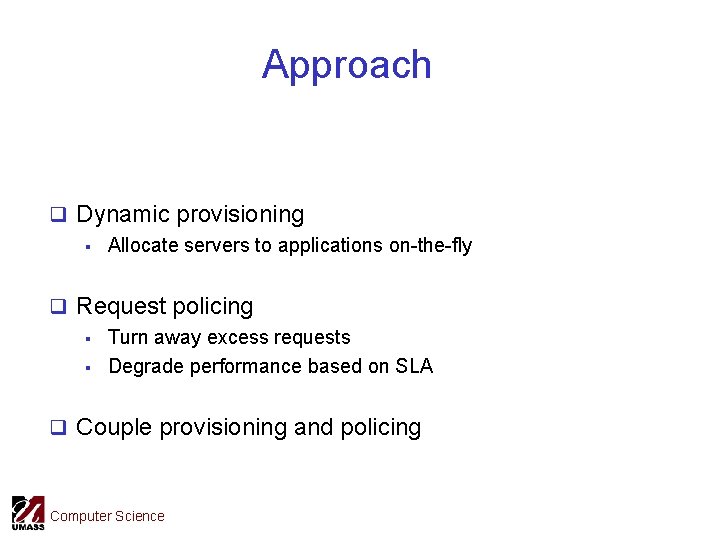

Approach

- Dynamic provisioning
  - Allocate servers to applications on-the-fly
- Request policing
  - Turn away excess requests
  - Degrade performance based on SLA
- Couple provisioning and policing

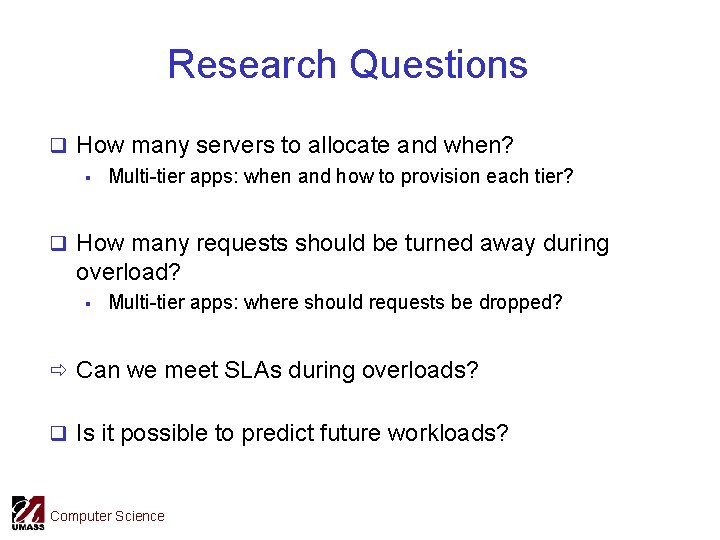

Research Questions

- How many servers to allocate and when?
  - Multi-tier apps: when and how to provision each tier?
- How many requests should be turned away during overload?
  - Multi-tier apps: where should requests be dropped?
  - ⇒ Can we meet SLAs during overloads?
- Is it possible to predict future workloads?

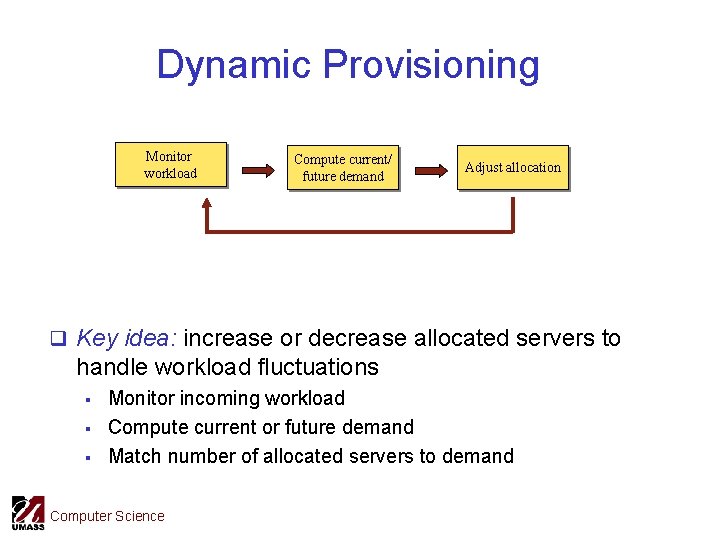

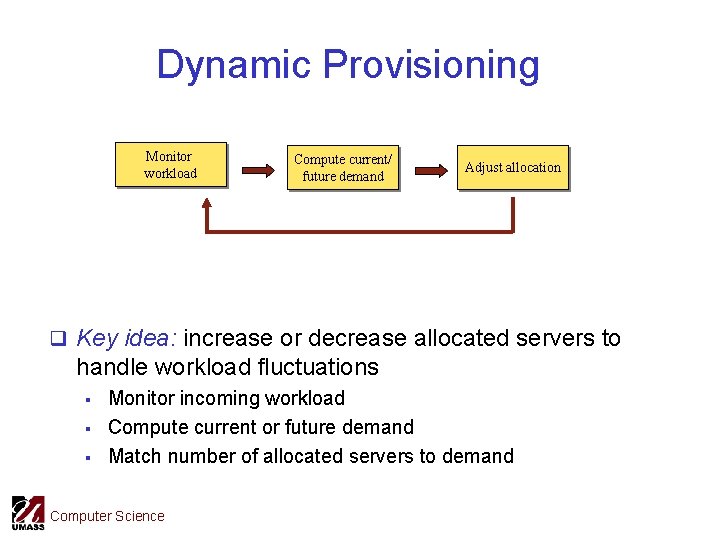

Dynamic Provisioning

[Figure: control loop: monitor workload, compute current/future demand, adjust allocation]

- Key idea: increase or decrease allocated servers to handle workload fluctuations
  - Monitor incoming workload
  - Compute current or future demand
  - Match number of allocated servers to demand

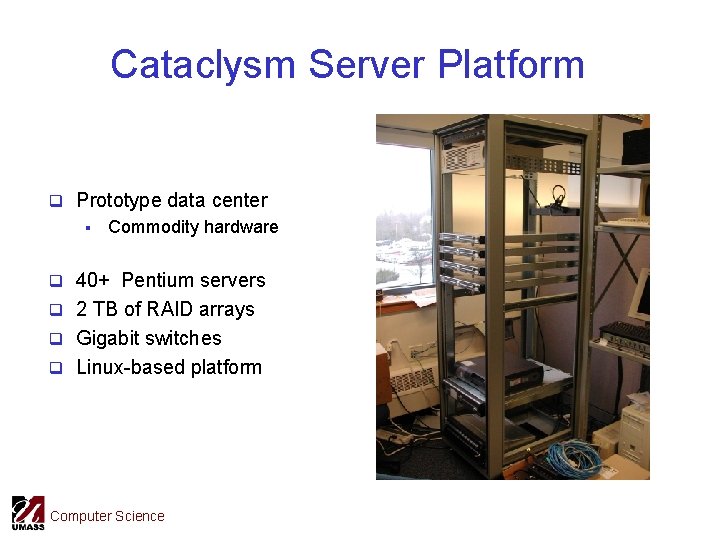

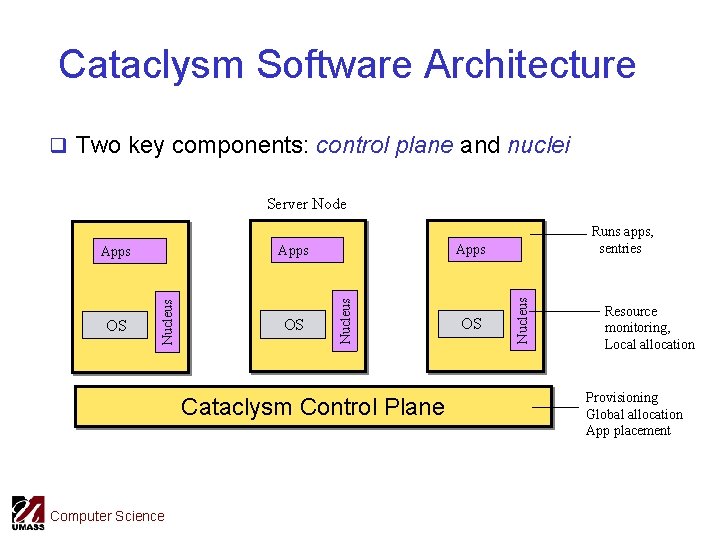

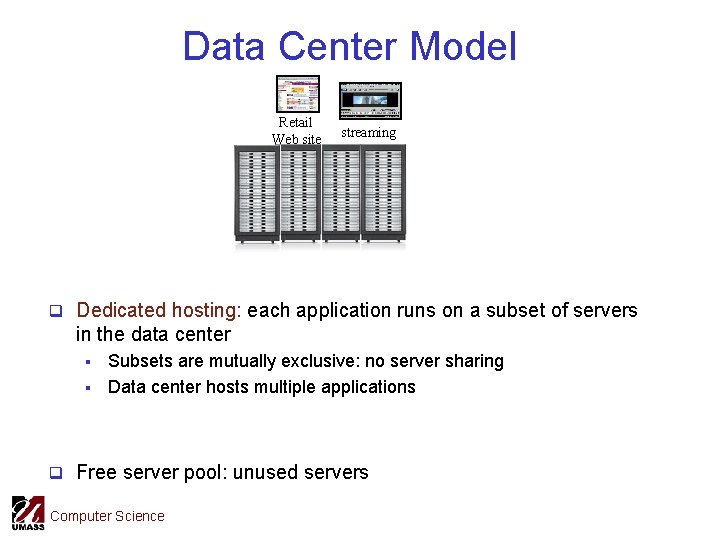

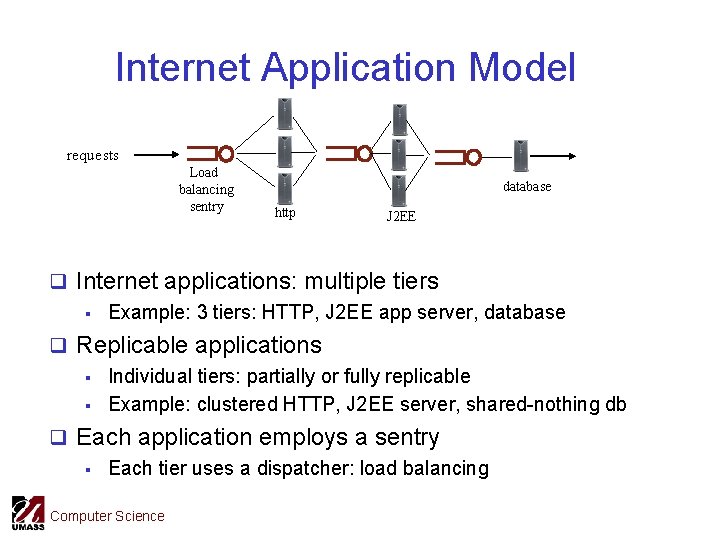

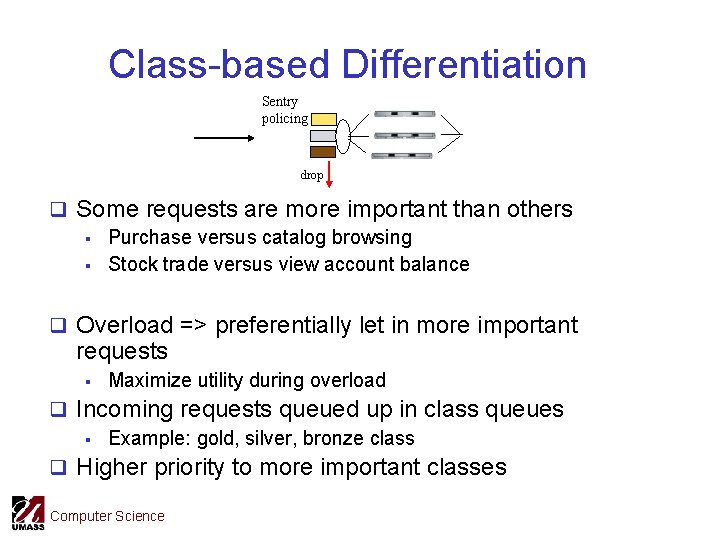

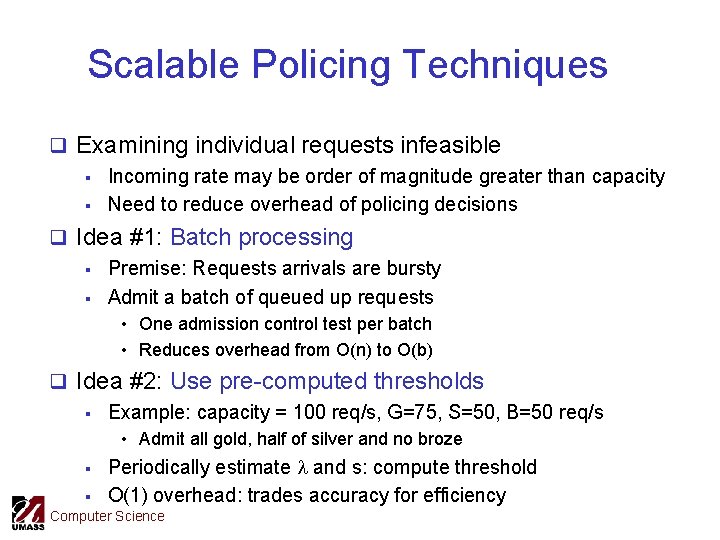

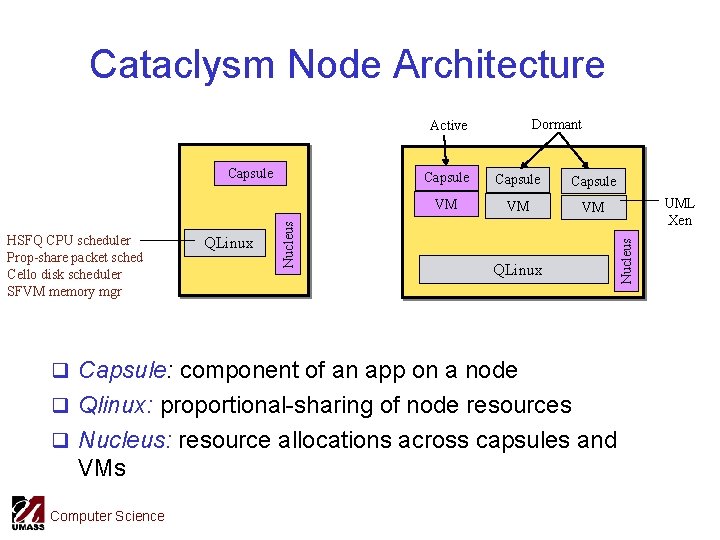

Single-tier Provisioning

- Single-tier provisioning is well studied [Muse, TACT]
  - Non-trivial to extend to multiple tiers
- Strawman #1: use single-tier provisioning independently at each tier
- Problem: independent tier provisioning may not increase goodput

[Figure: 14 req/s arrive at three tiers with capacities C=15, C=10, C=10.1; the second tier drops 4 req/s, so goodput is 10 req/s]

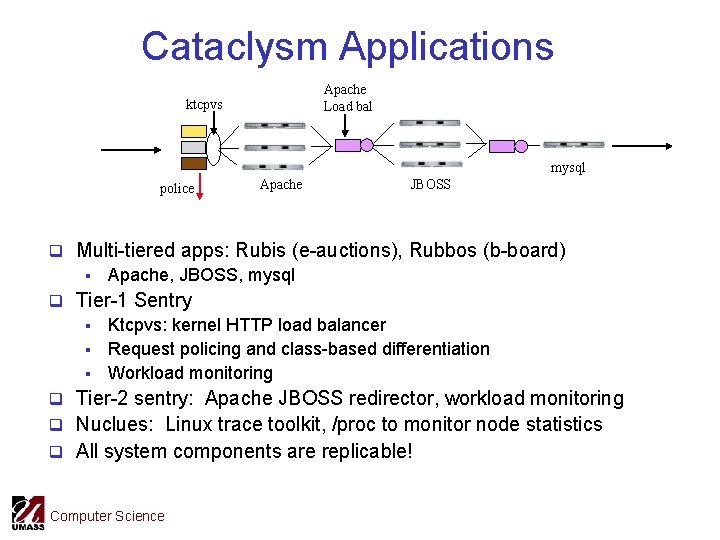

Single-tier Provisioning (continued)

- Single-tier provisioning is well studied [Muse, TACT]
  - Non-trivial to extend to multiple tiers
- Strawman #1: use single-tier provisioning independently at each tier
- Problem: independent tier provisioning may not increase goodput

[Figure: after the second tier is raised to C=20, 14 req/s reach the third tier (C=10.1), which drops 3.9 req/s; goodput rises only from 10 to 10.1 req/s]
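
The arithmetic in the two figures can be checked with a small sketch. It assumes the simplest possible model, in which each tier forwards at most its capacity and drops the rest; the function name `goodput` is illustrative and not from the talk.

```python
def goodput(arrival_rate, tier_capacities):
    """Push a request rate through tiers in order.

    Each tier forwards min(incoming rate, capacity) and drops the excess.
    Returns the rate leaving the last tier and the per-tier drop rates.
    """
    rate = arrival_rate
    dropped = []
    for capacity in tier_capacities:
        admitted = min(rate, capacity)
        dropped.append(rate - admitted)
        rate = admitted
    return rate, dropped


# Before: the second tier (C=10) is the bottleneck and drops 4 req/s.
before, drops_before = goodput(14.0, [15.0, 10.0, 10.1])

# After doubling the second tier to C=20, the bottleneck simply moves to
# the third tier (C=10.1), which now drops about 3.9 req/s.
after, drops_after = goodput(14.0, [15.0, 20.0, 10.1])
```

Doubling the capacity of one tier buys only 0.1 req/s of extra goodput here, which is the reason the talk argues against provisioning each tier in isolation.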

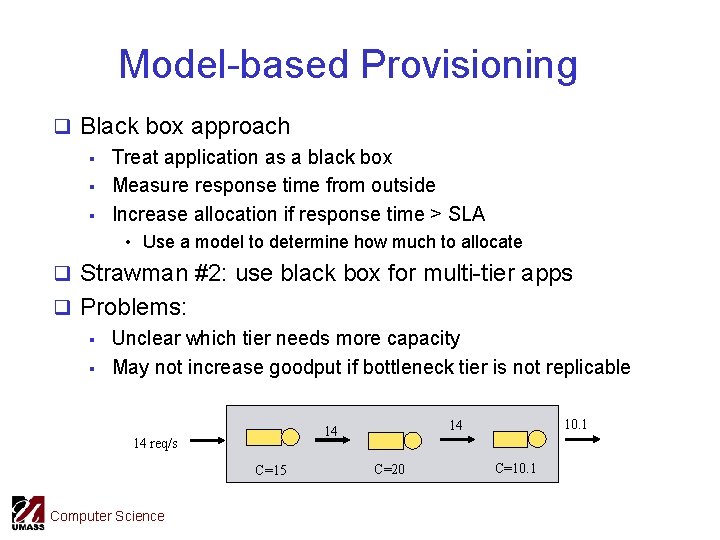

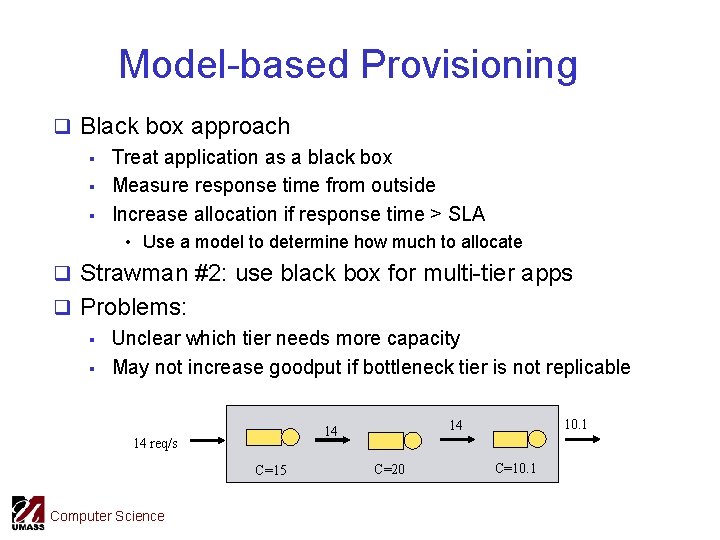

Model-based Provisioning

- Black box approach
  - Treat application as a black box
  - Measure response time from outside
  - Increase allocation if response time > SLA
    - Use a model to determine how much to allocate
- Strawman #2: use black box for multi-tier apps
- Problems:
  - Unclear which tier needs more capacity
  - May not increase goodput if bottleneck tier is not replicable

[Figure: 14 req/s arrive at three tiers with capacities C=15, C=20, C=10.1; goodput is 10.1 req/s]

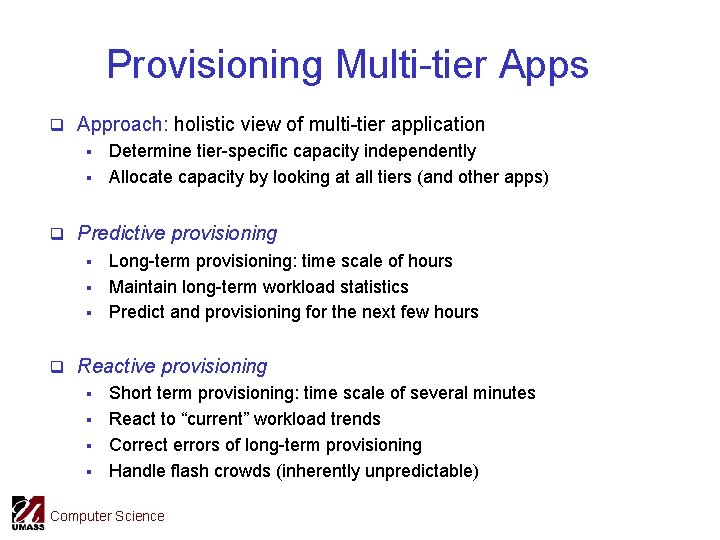

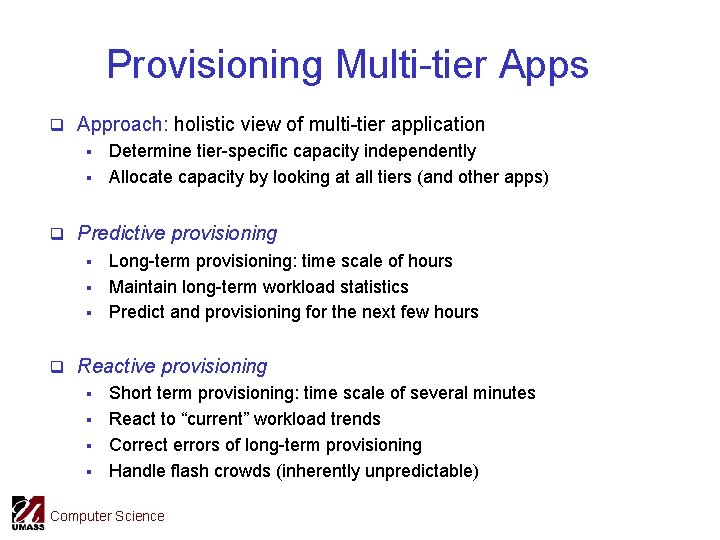

Provisioning Multi-tier Apps

- Approach: holistic view of multi-tier application
  - Determine tier-specific capacity independently
  - Allocate capacity by looking at all tiers (and other apps)
- Predictive provisioning
  - Long-term provisioning: time scale of hours
  - Maintain long-term workload statistics
  - Predict and provision for the next few hours
- Reactive provisioning
  - Short-term provisioning: time scale of several minutes
  - React to “current” workload trends
  - Correct errors of long-term provisioning
  - Handle flash crowds (inherently unpredictable)

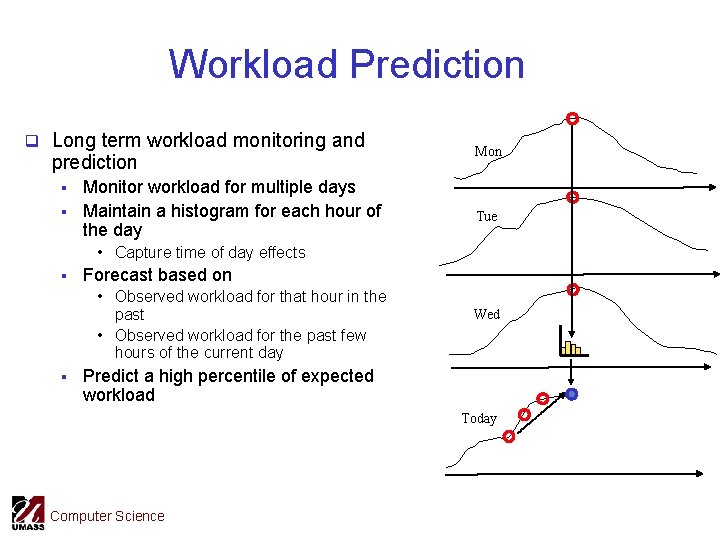

Workload Prediction

- Long-term workload monitoring and prediction
  - Monitor workload for multiple days
  - Maintain a histogram for each hour of the day
    - Capture time-of-day effects
  - Forecast based on
    - Observed workload for that hour in the past
    - Observed workload for the past few hours of the current day
  - Predict a high percentile of expected workload

[Figure labels: Mon, Tue, Wed, Today]
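
A minimal sketch of this kind of predictor follows. The class name `HourlyPredictor`, the nearest-rank percentile, and the way the current day's observations are folded in (adding the mean of recent under-predictions) are all assumptions made for illustration; the slides do not specify these details.

```python
import math
from collections import defaultdict


class HourlyPredictor:
    """Keeps per-hour workload samples and predicts a high percentile."""

    def __init__(self, percentile=0.95):
        self.percentile = percentile
        self.history = defaultdict(list)  # hour of day -> observed rates

    def observe(self, hour, rate):
        """Record the workload (e.g. peak req/s) seen during `hour`."""
        self.history[hour].append(rate)

    def predict(self, hour, recent_errors=()):
        """Predict the workload for `hour`.

        `recent_errors` holds (actual - predicted) for the past few hours
        of the current day. Assumption: only a positive mean error is
        added, so the forecast is never lowered by the correction.
        """
        samples = sorted(self.history[hour])
        if not samples:
            raise ValueError("no history for this hour yet")
        # Nearest-rank percentile over the samples for this hour.
        rank = max(1, math.ceil(self.percentile * len(samples)))
        base = samples[rank - 1]
        correction = 0.0
        if recent_errors:
            correction = max(0.0, sum(recent_errors) / len(recent_errors))
        return base + correction
```

For example, after observing several days of 9 a.m. workload with `observe(9, rate)`, calling `predict(9, recent_errors=[20, 40])` returns the chosen percentile of those samples plus 30.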

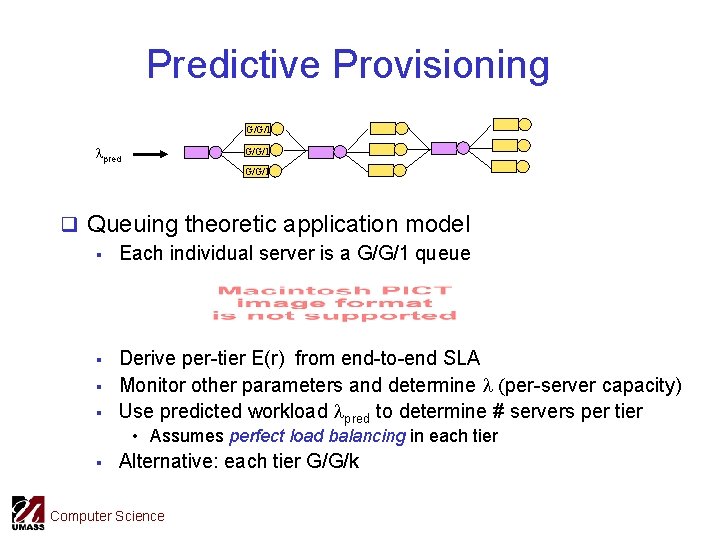

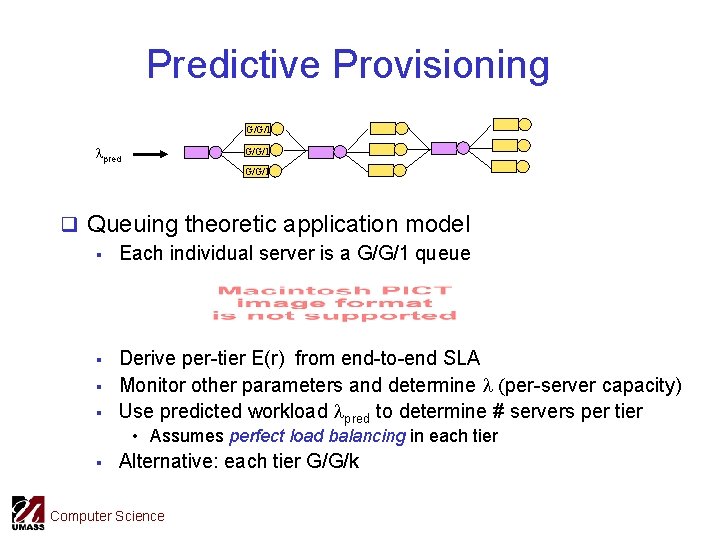

Predictive Provisioning

[Figure: predicted workload λ_pred feeding tiers of G/G/1 servers]

- Queuing-theoretic application model
  - Each individual server is a G/G/1 queue
  - Derive per-tier E(r) from end-to-end SLA
  - Monitor other parameters and determine λ (per-server capacity)
  - Use predicted workload λ_pred to determine the number of servers per tier
    - Assumes perfect load balancing in each tier
  - Alternative: model each tier as G/G/k
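
The slide does not give the formula that turns a response-time target into a per-server capacity. One plausible choice, used here purely as an assumption, is Kingman's upper bound on the mean waiting time of a G/G/1 queue, W ≤ λ(σ_a² + σ_b²) / (2(1 − λs)), where s is the mean service time and σ_a², σ_b² are the variances of inter-arrival and service times. Requiring s + W ≤ d for a per-tier target d and solving for λ gives the capacity computed below. All function and parameter names are illustrative.

```python
import math


def per_server_capacity(target_resp, mean_service, var_interarrival, var_service):
    """Largest arrival rate one G/G/1 server sustains under Kingman's bound.

    Solves  mean_service + W <= target_resp  with
    W <= rate * (var_interarrival + var_service) / (2 * (1 - rate * mean_service)).
    Times are in seconds, variances in seconds squared.
    """
    if target_resp <= mean_service:
        raise ValueError("target response time must exceed mean service time")
    slack = target_resp - mean_service
    return 1.0 / (mean_service + (var_interarrival + var_service) / (2.0 * slack))


def servers_needed(predicted_rate, capacity):
    """Servers for a tier, assuming perfect load balancing within the tier."""
    return math.ceil(predicted_rate / capacity)


# Example: 0.5 s per-tier target, 0.1 s mean service time,
# both variances 0.04 s^2, predicted workload of 42 req/s.
capacity = per_server_capacity(0.5, 0.1, 0.04, 0.04)
tier_servers = servers_needed(42.0, capacity)
```

With these example numbers each server sustains about 5 req/s, so the tier needs 9 servers for a predicted 42 req/s.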

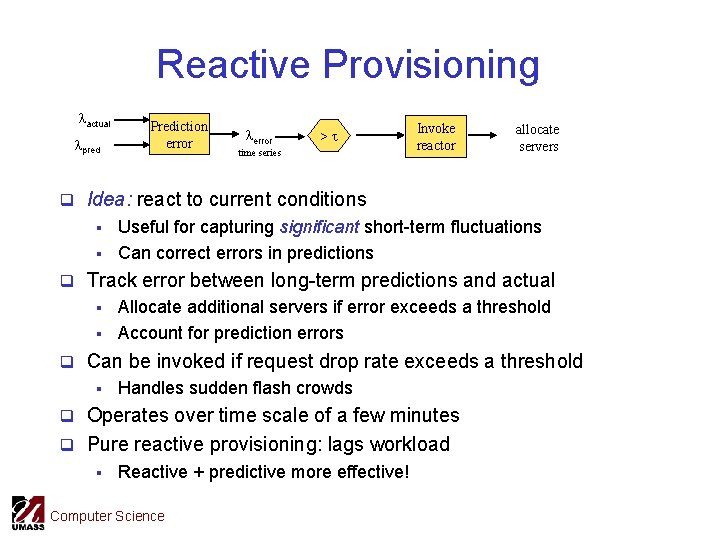

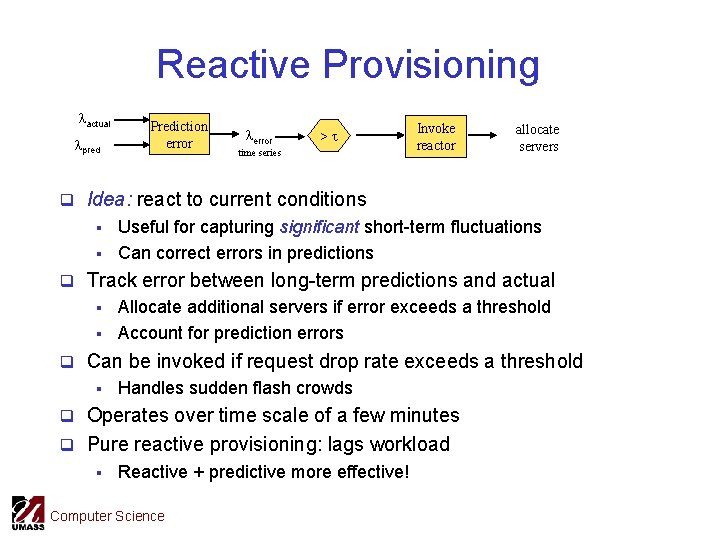

Reactive Provisioning

[Figure: time series of λ_actual and λ_pred; when the prediction error λ_error > τ, invoke the reactor and allocate servers]

- Idea: react to current conditions
  - Useful for capturing significant short-term fluctuations
  - Can correct errors in predictions
- Track error between long-term predictions and actual workload
  - Allocate additional servers if error exceeds a threshold
  - Account for prediction errors
- Can be invoked if request drop rate exceeds a threshold
  - Handles sudden flash crowds
- Operates over a time scale of a few minutes
- Pure reactive provisioning: lags workload
  - Reactive + predictive is more effective!
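
The trigger logic described above might look like the following sketch. The relative-error definition, the default thresholds, and the rule of adding at least one server when only the drop-rate trigger fires are assumptions for illustration, not values from the talk.

```python
import math


def reactive_extra_servers(predicted_rate, actual_rate, per_server_capacity,
                           error_threshold=0.1, drop_rate=0.0,
                           drop_threshold=0.05):
    """Return how many extra servers the reactor should allocate.

    The reactor fires when the relative prediction error or the observed
    request drop rate exceeds its threshold. Otherwise it returns 0.
    """
    if predicted_rate <= 0 or per_server_capacity <= 0:
        raise ValueError("rates and capacities must be positive")
    error = (actual_rate - predicted_rate) / predicted_rate
    if error <= error_threshold and drop_rate <= drop_threshold:
        return 0
    shortfall = max(0.0, actual_rate - predicted_rate)
    # Assumption: once triggered, add at least one server even if the
    # measured shortfall is zero (e.g. drops caused by burstiness).
    return max(1, math.ceil(shortfall / per_server_capacity))
```

Running this check every few minutes alongside an hourly predictive step matches the division of labor described on the slide: the predictor sets the baseline, and the reactor covers what the predictor missed.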

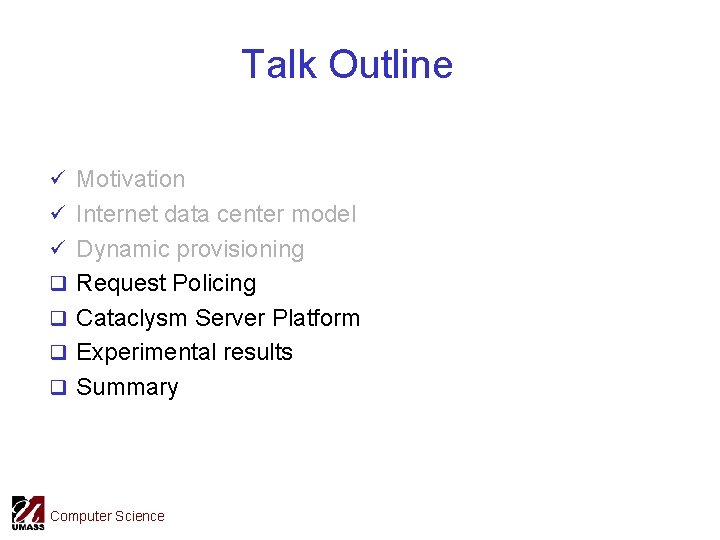

Talk Outline

- Motivation (covered)
- Internet data center model (covered)
- Dynamic provisioning (covered)
- Request policing
- Cataclysm server platform
- Experimental results
- Summary

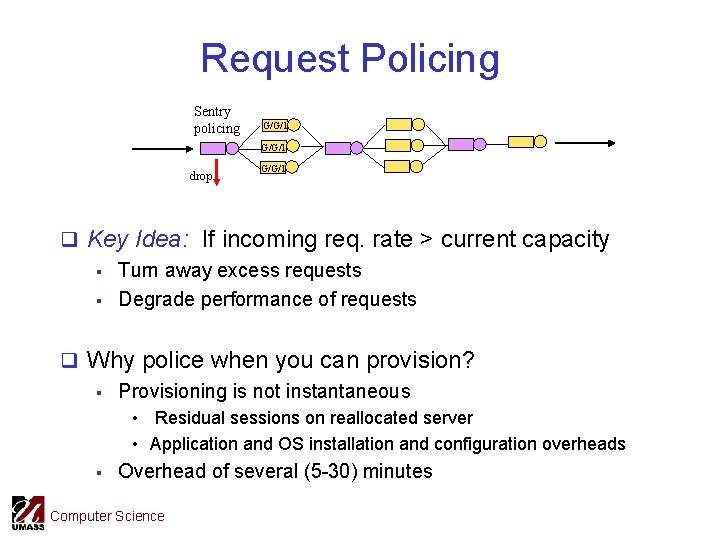

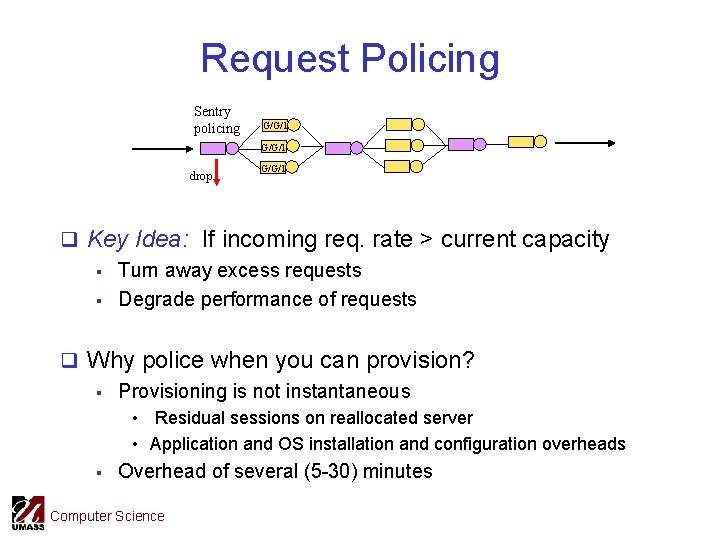

Request Policing

[Figure labels: sentry, policing, drop, G/G/1 servers]

- Key idea: if incoming request rate > current capacity
  - Turn away excess requests
  - Degrade performance of requests
- Why police when you can provision?
  - Provisioning is not instantaneous
    - Residual sessions on reallocated server
    - Application and OS installation and configuration overheads
  - Overhead of several (5-30) minutes

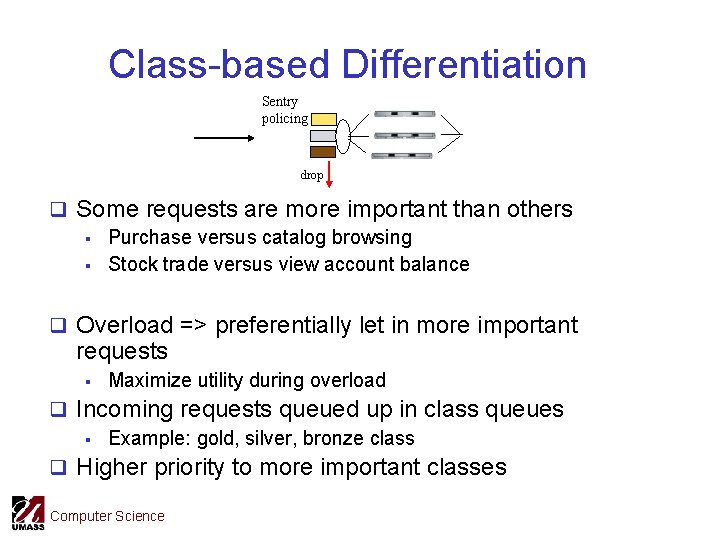

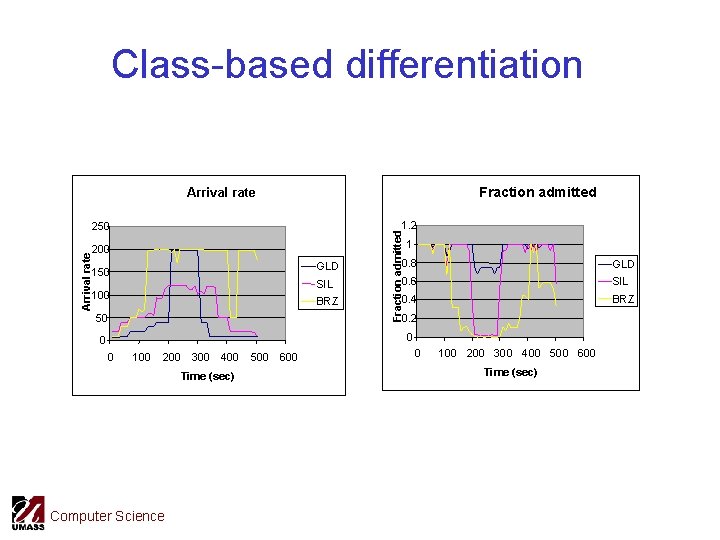

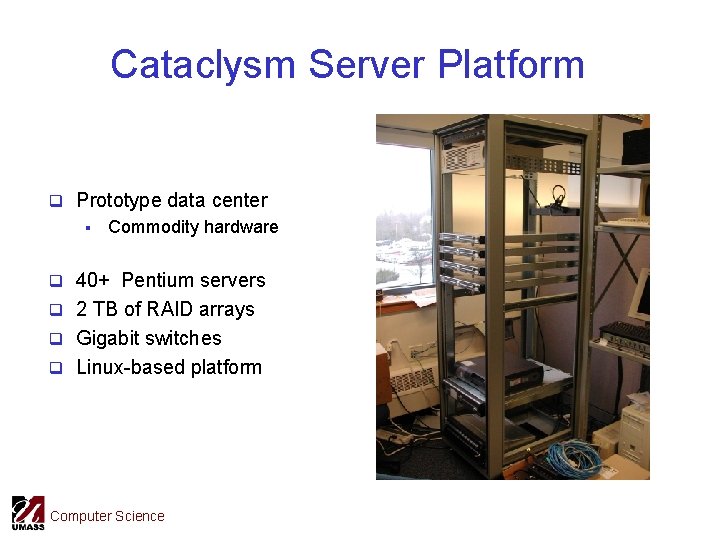

Class-based Differentiation

[Figure labels: sentry, policing, drop]

- Some requests are more important than others
  - Purchase versus catalog browsing
  - Stock trade versus view account balance
- Overload => preferentially let in more important requests
  - Maximize utility during overload
- Incoming requests queued up in class queues
  - Example: gold, silver, bronze class
- Higher priority to more important classes
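
As a rough sketch of class queues with priority, the function below drains per-class queues in strict priority order until an admission budget for the current interval is used up. Strict priority and the per-interval budget are assumptions; the slide only says that more important classes get higher priority.

```python
from collections import deque

# Classes ordered from most to least important (names from the slide).
CLASS_ORDER = ("gold", "silver", "bronze")


def admit_by_class(queues, budget):
    """Admit up to `budget` queued requests, most important class first.

    `queues` maps each class name to a deque of waiting requests.
    Admitted requests are removed from their queues and returned in
    admission order; everything else stays queued.
    """
    admitted = []
    for cls in CLASS_ORDER:
        queue = queues[cls]
        while queue and budget > 0:
            admitted.append(queue.popleft())
            budget -= 1
    return admitted
```

With two gold, two silver, and one bronze request waiting and a budget of 3, both gold requests and the first silver request are admitted.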

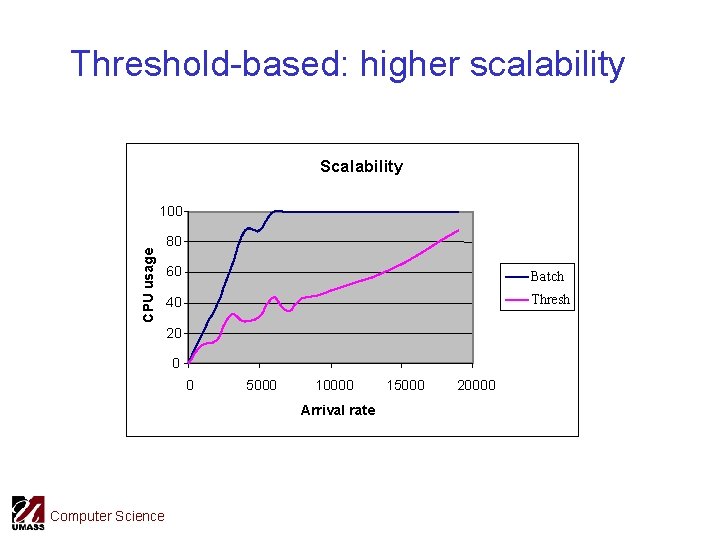

Scalable Policing Techniques

- Examining individual requests is infeasible
  - Incoming rate may be an order of magnitude greater than capacity
  - Need to reduce overhead of policing decisions
- Idea #1: Batch processing
  - Premise: request arrivals are bursty
  - Admit a batch of queued-up requests
    - One admission control test per batch
    - Reduces overhead from O(n) to O(b)
- Idea #2: Use pre-computed thresholds
  - Example: capacity = 100 req/s, G=75, S=50, B=50 req/s
    - Admit all gold, half of silver and no bronze
  - Periodically estimate λ and s: compute threshold
  - O(1) overhead: trades accuracy for efficiency
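
The threshold example on this slide can be reproduced with a short sketch. It assumes capacity is handed out greedily from the most important class down, and that each request is then admitted with a constant-time probabilistic test; both function names are illustrative.

```python
import random


def admission_fractions(capacity, class_rates):
    """Compute the fraction of each class to admit.

    `class_rates` is a list of (class name, arrival rate) pairs ordered
    from most to least important. Capacity is given to each class in
    turn until none is left.
    """
    remaining = capacity
    fractions = {}
    for name, rate in class_rates:
        admitted = min(rate, remaining)
        fractions[name] = admitted / rate if rate > 0 else 1.0
        remaining -= admitted
    return fractions


def admit(request_class, fractions, rng=random.random):
    """O(1) per-request test against the pre-computed fraction."""
    return rng() < fractions[request_class]


# Slide example: capacity 100 req/s, gold 75, silver 50, bronze 50 req/s.
fractions = admission_fractions(100, [("gold", 75), ("silver", 50), ("bronze", 50)])
# fractions == {"gold": 1.0, "silver": 0.5, "bronze": 0.0}
```

The fractions are only as fresh as the last estimate of the arrival rates, which is the accuracy-for-efficiency trade the slide mentions.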

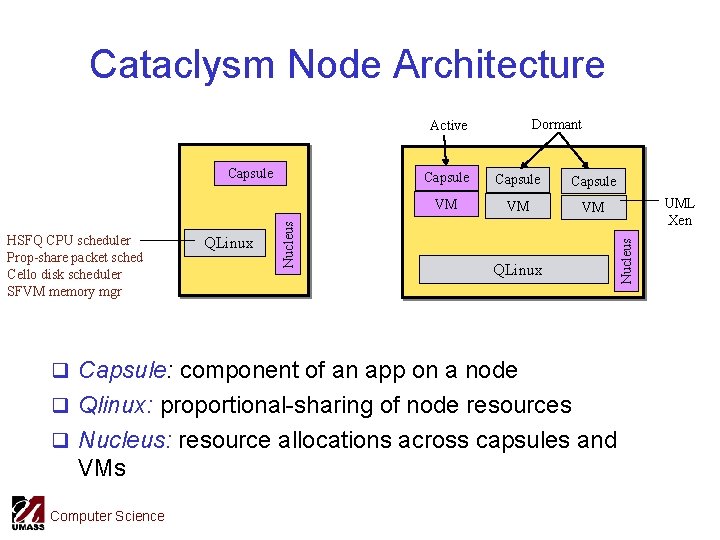

Cataclysm Server Platform

- Prototype data center
  - Commodity hardware
- 40+ Pentium servers
- 2 TB of RAID arrays
- Gigabit switches
- Linux-based platform

Cataclysm Software Architecture

- Two key components: control plane and nuclei

[Figure labels: server node (OS, nucleus, apps): runs apps and sentries; resource monitoring, local allocation. Cataclysm control plane: provisioning, global allocation, app placement.]

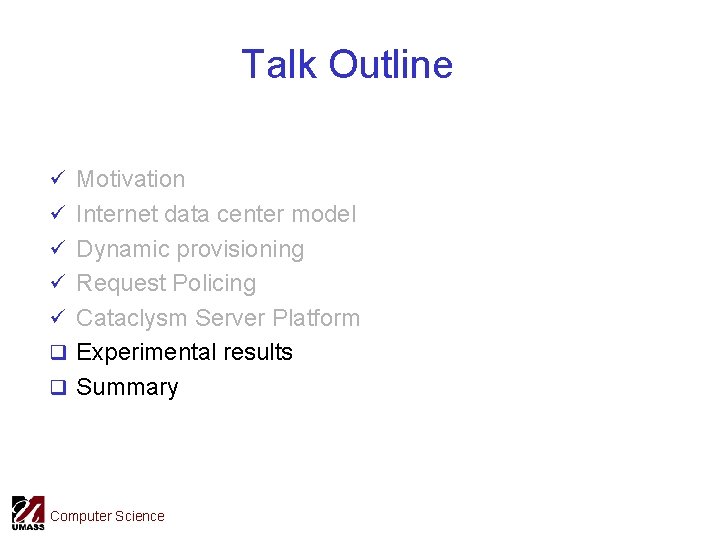

Cataclysm Node Architecture

[Figure labels: dormant and active VMs (UML, Xen), capsule, nucleus, QLinux with HSFQ CPU scheduler, prop-share packet scheduler, Cello disk scheduler, SFVM memory manager]

- Capsule: component of an app on a node
- QLinux: proportional sharing of node resources
- Nucleus: resource allocations across capsules and VMs

Cataclysm Applications

[Figure labels: Apache, load bal (ktcpvs), police, JBOSS, mysql]

- Multi-tiered apps: RUBiS (e-auctions), RUBBoS (b-board)
  - Apache, JBOSS, mysql
- Tier-1 sentry
  - Ktcpvs: kernel HTTP load balancer
  - Request policing and class-based differentiation
  - Workload monitoring
- Tier-2 sentry: Apache JBOSS redirector, workload monitoring
- Nucleus: Linux trace toolkit, /proc to monitor node statistics
- All system components are replicable!

Talk Outline

- Motivation (covered)
- Internet data center model (covered)
- Dynamic provisioning (covered)
- Request policing (covered)
- Cataclysm server platform (covered)
- Experimental results
- Summary

Dynamic Provisioning

- RUBiS: e-auction application like eBay
- Server allocation adapts to changing workload

[Figure panels: workload; server allocation]

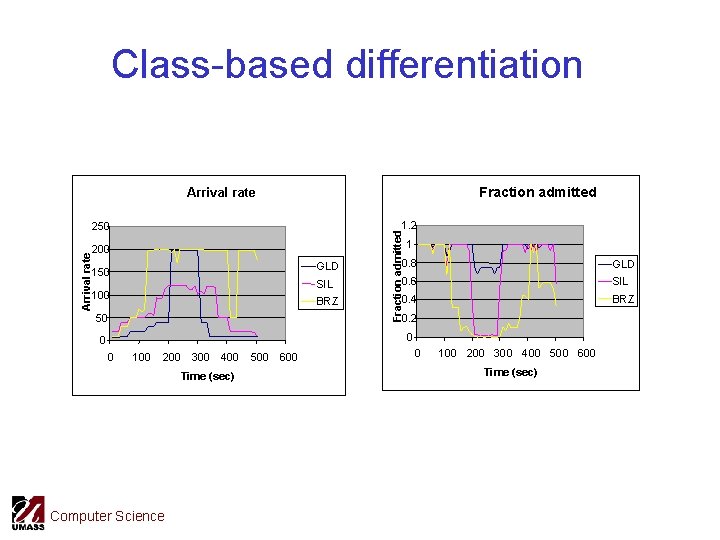

Class-based differentiation

[Figure: two panels, arrival rate and fraction admitted for the GLD, SIL, and BRZ classes, each plotted against time (sec)]

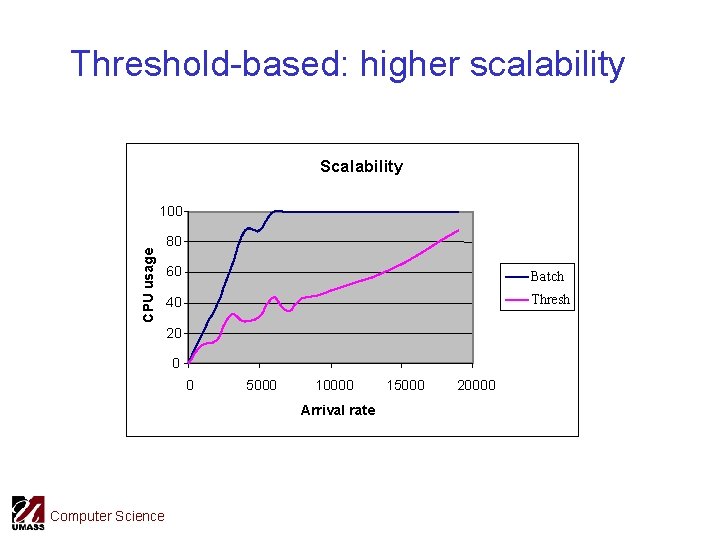

Threshold-based: higher scalability

[Figure: scalability, CPU usage versus arrival rate for batch and threshold-based policing]

Other Research Results

- OS resource allocation
  - Qlinux [ACM MM 00], SFS [OSDI 00], DFS [RTAS 02]
  - SHARC cluster-based prop. sharing [TPDS 03]
- Shared hosting provisioning
  - Measurement-based [IWQOS 02], Queuing-based [Sigmetrics 03, IWQOS 03]
  - Provisioning granularity [Self-manage 03]
- Application placement [PDCS 2004]
- Profiling and overbooking [OSDI 02]
- Storage issues
  - iSCSI vs NFS [FAST 03], Policy-managed [TR 03]

Glimpse of Other Projects

- Hyperion: network-processor-based measurement platform
  - Measurement in the backbone and at the edge
  - NP-based measurements in the data center
- RiSE: Rich Sensor Environments
  - Video sensor networks
  - Robotics sensor networks
  - Real-time sensor networks
  - Weather sensors

Concluding Remarks

- Internet applications see varying workloads
- Handle workload dynamics by
  - Dynamic capacity provisioning
  - Request policing
- Need to account for multi-tiered applications
- Joint work: Bhuvan Urgaonkar, Abhishek Chandra and Vijay Sundaram
- More at http://lass.cs.umass.edu

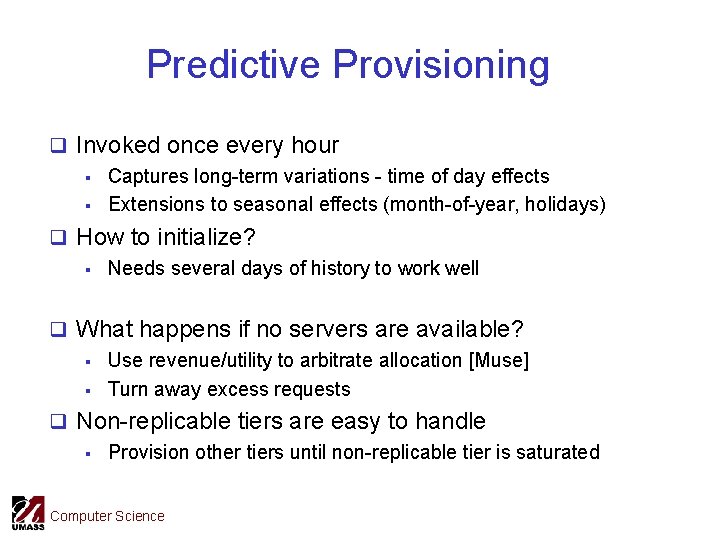

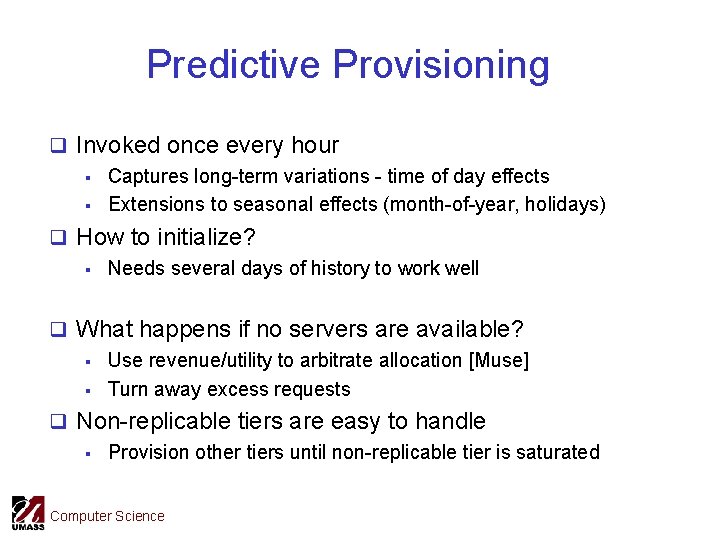

Predictive Provisioning

- Invoked once every hour
  - Captures long-term variations: time-of-day effects
  - Extensions to seasonal effects (month-of-year, holidays)
- How to initialize?
  - Needs several days of history to work well
- What happens if no servers are available?
  - Use revenue/utility to arbitrate allocation [Muse]
  - Turn away excess requests
- Non-replicable tiers are easy to handle
  - Provision other tiers until non-replicable tier is saturated

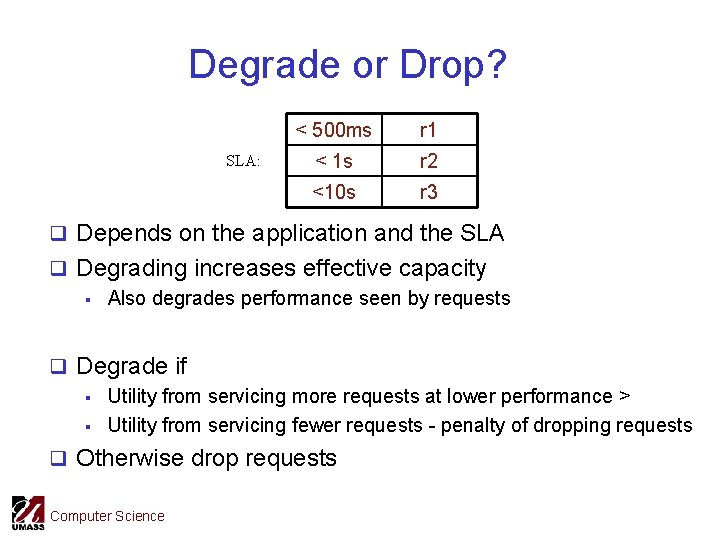

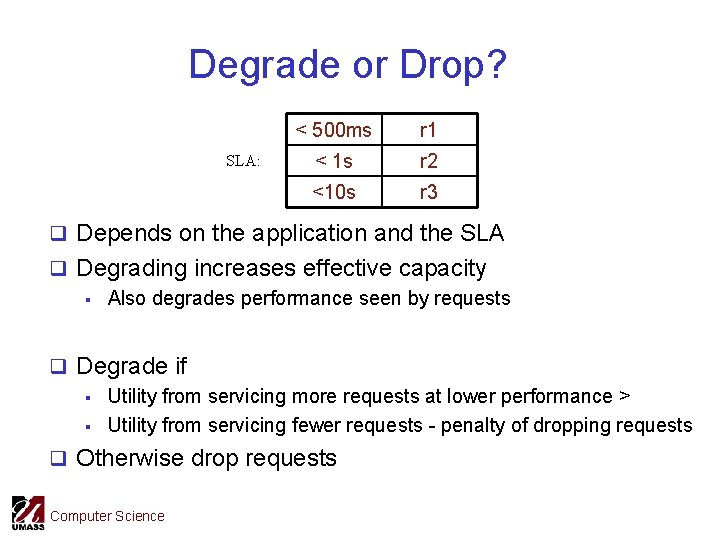

Degrade or Drop?

SLA: < 500 ms: r1; < 1 s: r2; < 10 s: r3

- Depends on the application and the SLA
- Degrading increases effective capacity
  - Also degrades performance seen by requests
- Degrade if
  - Utility from servicing more requests at lower performance >
  - Utility from servicing fewer requests - penalty of dropping requests
- Otherwise drop requests
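
The decision rule above is a direct comparison of two utilities and can be sketched as follows. The linear utility model (rate times per-request utility, minus a per-request drop penalty) and all parameter names are assumptions chosen for illustration.

```python
def should_degrade(rate_degraded, utility_degraded,
                   rate_full, utility_full,
                   dropped_rate, drop_penalty):
    """Return True if degrading beats dropping.

    Degrading serves `rate_degraded` req/s at `utility_degraded` each.
    Dropping serves `rate_full` req/s at `utility_full` each and pays
    `drop_penalty` for each of the `dropped_rate` req/s turned away.
    """
    degrade_value = rate_degraded * utility_degraded
    drop_value = rate_full * utility_full - dropped_rate * drop_penalty
    return degrade_value > drop_value


# 140 req/s offered against a full-quality capacity of 100 req/s.
# Degrading serves all 140 at utility 0.6; dropping serves 100 at
# utility 1.0 and pays a penalty of 0.5 for each of the 40 dropped.
degrade = should_degrade(140, 0.6, 100, 1.0, 40, 0.5)
```

In this example degrading is worth 84 against 80 for dropping, so the requests are degraded; with no drop penalty the comparison flips and dropping wins.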

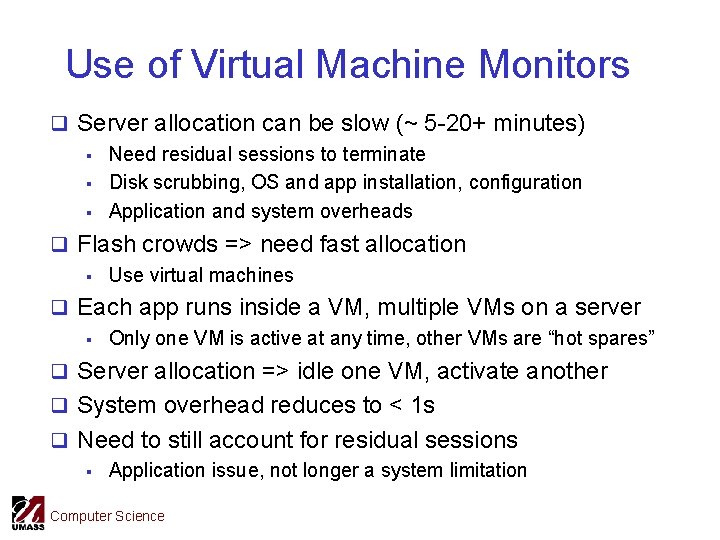

Use of Virtual Machine Monitors

- Server allocation can be slow (~5-20+ minutes)
  - Need residual sessions to terminate
  - Disk scrubbing, OS and app installation, configuration
  - Application and system overheads
- Flash crowds => need fast allocation
  - Use virtual machines
- Each app runs inside a VM, multiple VMs on a server
  - Only one VM is active at any time; other VMs are “hot spares”
- Server allocation => idle one VM, activate another
- System overhead reduces to < 1 s
- Still need to account for residual sessions
  - Application issue, no longer a system limitation

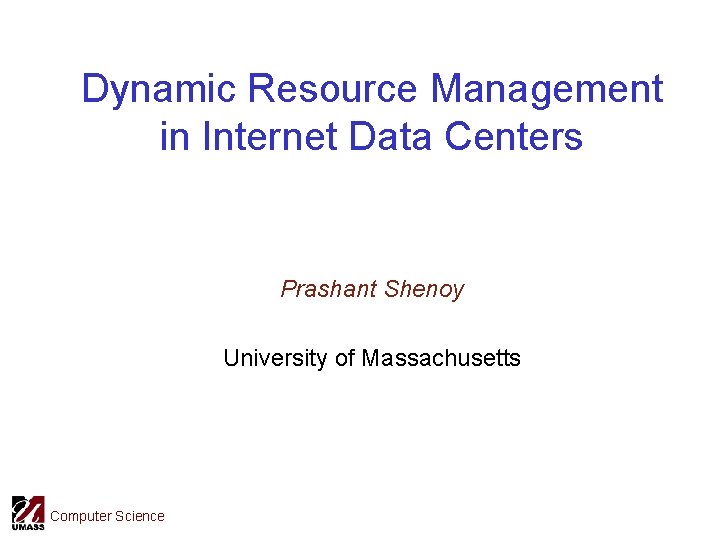

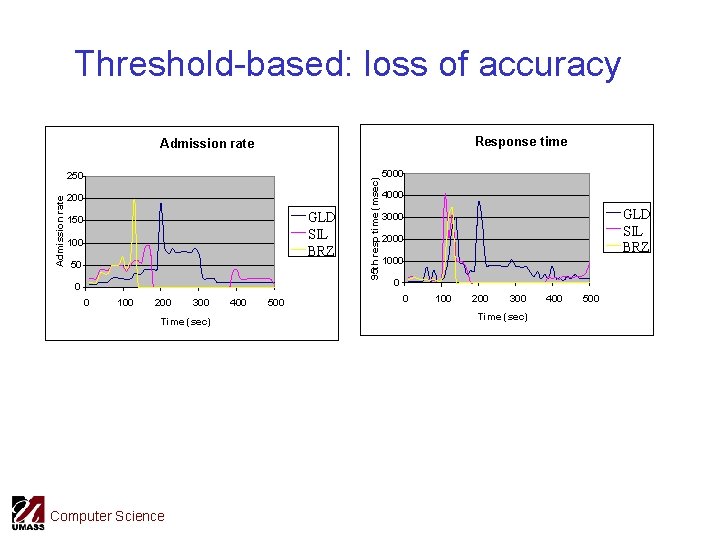

Threshold-based: loss of accuracy

[Figure: two panels, admission rate and 95th-percentile response time (msec) for the GLD, SIL, and BRZ classes, each plotted against time (sec)]