Dynamic Hash Tables on the GPU John Shortt

School of Computer Science, Carleton University, Ottawa, Canada
johnshortt@cmail.carleton.ca
COMP 5704 Project Presentation

Problem Statement
• Hash table
  • Associative memory: given a key, return a value
  • insert, search, delete: all O(1) expected time
  • Variants: map, set, non-unique keys, counter, Bloom filter
• Dynamic model
  • Bulk build, then periodic/bulk search, insert, delete; concurrent operations
• Key metrics
  • Performance (ops/second), memory usage
• How can we implement a high-performance dynamic hash table on the GPU?

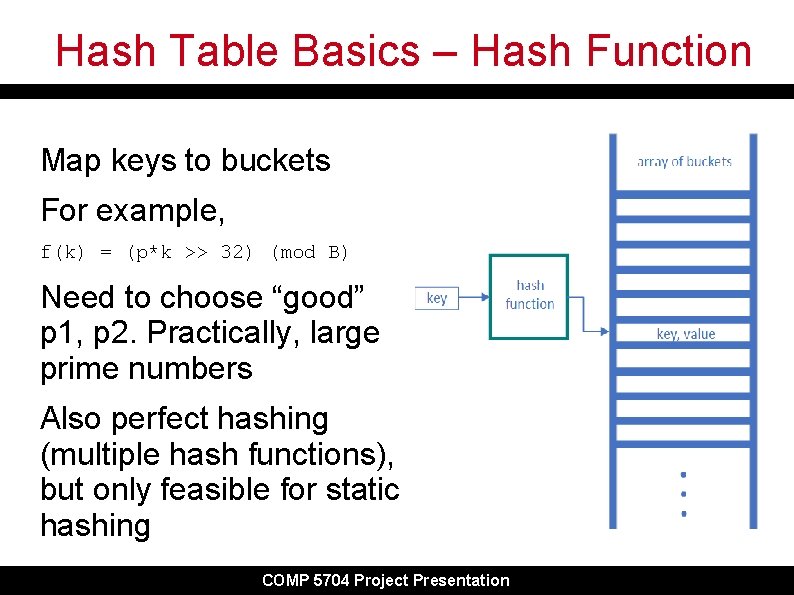

Hash Table Basics – Hash Function
• Maps keys to buckets
• For example, f(k) = (p*k >> 32) mod B
• Need to choose “good” constants p1, p2; in practice, large prime numbers
• Perfect hashing (multiple hash functions) is also possible, but only feasible for static hashing

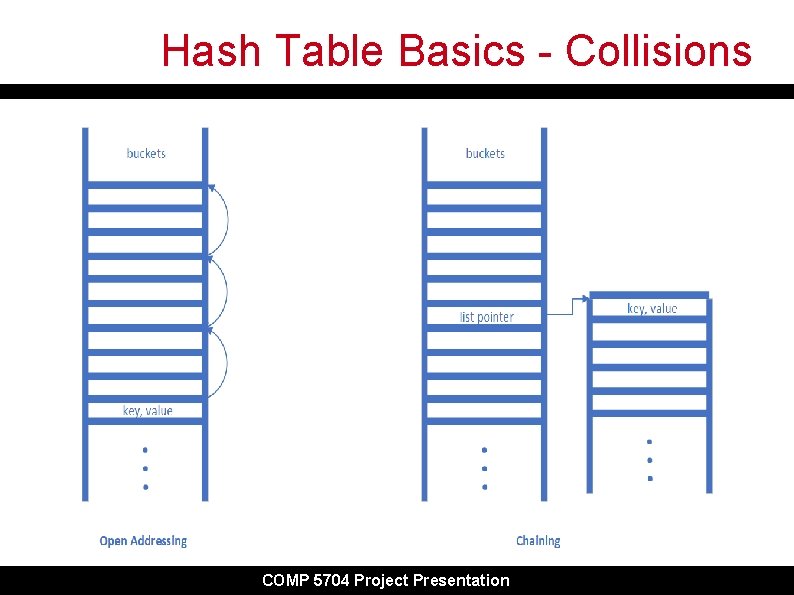
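As a concrete, hypothetical instance of the example hash f(k) = (p*k >> 32) mod B, the sketch below computes it in plain C++. The constant p = 2654435761 (Knuth's multiplicative-hashing constant) and the name `bucket_of` are assumptions for illustration, not taken from any of the implementations discussed in these slides.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical multiplier p: Knuth's multiplicative-hashing constant.
constexpr uint64_t kP = 2654435761ULL;

// f(k) = (p * k >> 32) mod B
// p < 2^32 and k < 2^32, so the product always fits in 64 bits.
uint32_t bucket_of(uint32_t k, uint32_t num_buckets) {
  assert(num_buckets > 0);
  uint64_t product = kP * static_cast<uint64_t>(k);
  // Keep the high 32 bits of the product, then map into B buckets.
  return static_cast<uint32_t>((product >> 32) % num_buckets);
}
```

Taking the high half of the product matters: low bit j of p*k depends only on bits 0..j of k, while the high bits depend on every bit of k. When B is a power of two, the final mod can be replaced by a bit mask.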

Hash Table Basics - Collisions
• Many variants, but two major categories: chaining and open addressing
• A key aspect of performance
• Poses GPU-specific challenges

Hash Table Basics - Collisions

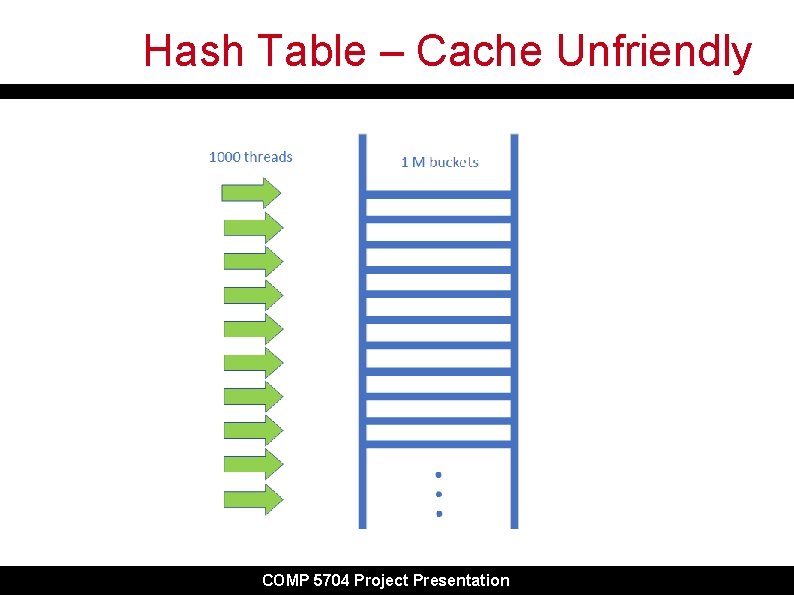
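To make the open-addressing category concrete, here is a minimal sequential sketch (CPU only, no concurrency) using linear probing: a colliding key goes into the next free slot of the same array instead of into a per-bucket list as with chaining. The struct name, the `k % capacity` home slot, and the `kEmpty` sentinel are illustrative assumptions.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

// Sentinel for an unused slot; it therefore cannot be stored as a key.
constexpr uint32_t kEmpty = 0xFFFFFFFFu;

struct LinearProbingTable {
  std::vector<uint32_t> keys, values;

  explicit LinearProbingTable(size_t capacity)
      : keys(capacity, kEmpty), values(capacity, 0) {}

  size_t home(uint32_t k) const { return k % keys.size(); }

  // Probe home, home+1, home+2, ... (wrapping around). Returns false if full.
  bool insert(uint32_t k, uint32_t v) {
    for (size_t i = 0; i < keys.size(); ++i) {
      size_t s = (home(k) + i) % keys.size();
      if (keys[s] == kEmpty || keys[s] == k) {  // free slot or update in place
        keys[s] = k;
        values[s] = v;
        return true;
      }
    }
    return false;
  }

  std::optional<uint32_t> find(uint32_t k) const {
    for (size_t i = 0; i < keys.size(); ++i) {
      size_t s = (home(k) + i) % keys.size();
      if (keys[s] == kEmpty) return std::nullopt;  // reached a hole: absent
      if (keys[s] == k) return values[s];
    }
    return std::nullopt;
  }
};
```

Deletion is left out on purpose: with linear probing, simply emptying a slot would break the early exit in `find`, so real tables use tombstones or backward-shift deletion.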

Hash Table – GPU Challenges
• Memory management
• Data-parallel problem, but the scattered memory accesses produced by the hash function render caches ineffective
• Divergence in kernel code
• Concurrency challenges

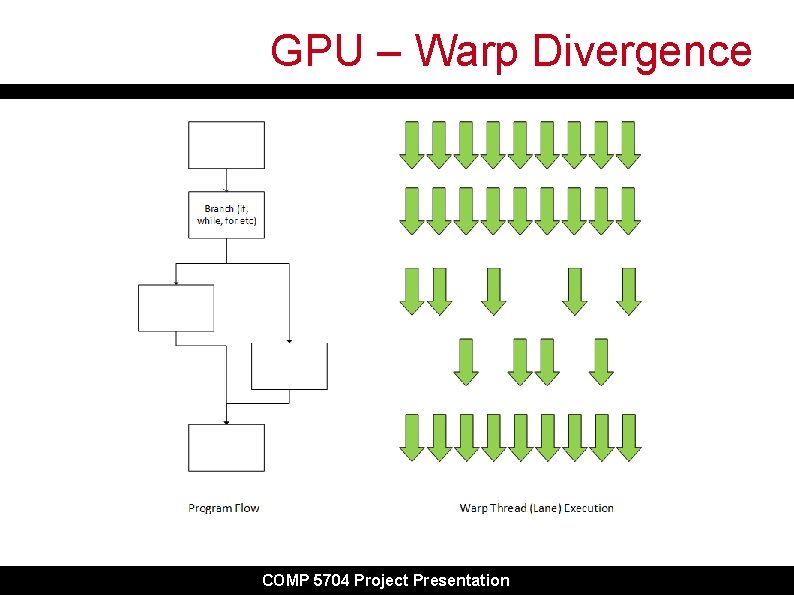

GPU – Warps, Issues
• What's a warp?
  • The unit of scheduling on a GPU
  • Typically 32 threads (also called lanes)
  • Warps share some resources, notably register memory
  • All lanes of a warp execute the same kernel, instruction by instruction
• Warp divergence happens when lanes of the same warp take different branches

GPU – Warp Divergence
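A toy model of how a warp handles an `if/else` when its lanes disagree: each path is issued under a mask of active lanes, so a divergent branch costs both paths while a uniform branch costs one. This is a simplified sequential model (it ignores reconvergence details and the independent thread scheduling of newer GPUs); `lane_mask` and `paths_issued` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr int kWarpSize = 32;

// Build a 32-bit lane mask: bit i is set iff pred holds for lane i's data.
template <class Pred>
uint32_t lane_mask(const std::array<uint32_t, kWarpSize>& data, Pred pred) {
  uint32_t mask = 0;
  for (int lane = 0; lane < kWarpSize; ++lane)
    if (pred(data[lane])) mask |= 1u << lane;
  return mask;
}

// For `if (cond) A(); else B();` the warp issues A with the lanes in
// cond_mask active and B with the remaining lanes active; a path with no
// active lanes is skipped. Returns the number of paths issued (1 or 2).
int paths_issued(uint32_t cond_mask) {
  int issued = 0;
  if (cond_mask != 0) ++issued;            // some lane takes the if-path
  if (cond_mask != 0xFFFFFFFFu) ++issued;  // some lane takes the else-path
  return issued;
}
```

For example, branching on "key is even" over keys 0..31 issues both paths (half the lanes idle each time), while the same branch over keys 0, 2, 4, ... issues only one.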

Hash Table – Cache Unfriendly

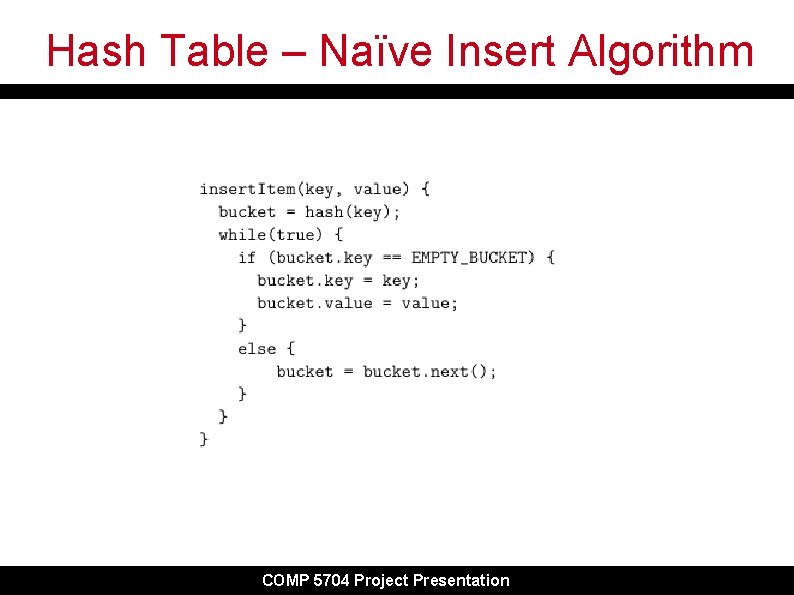

Hash Table – Naïve Insert Algorithm

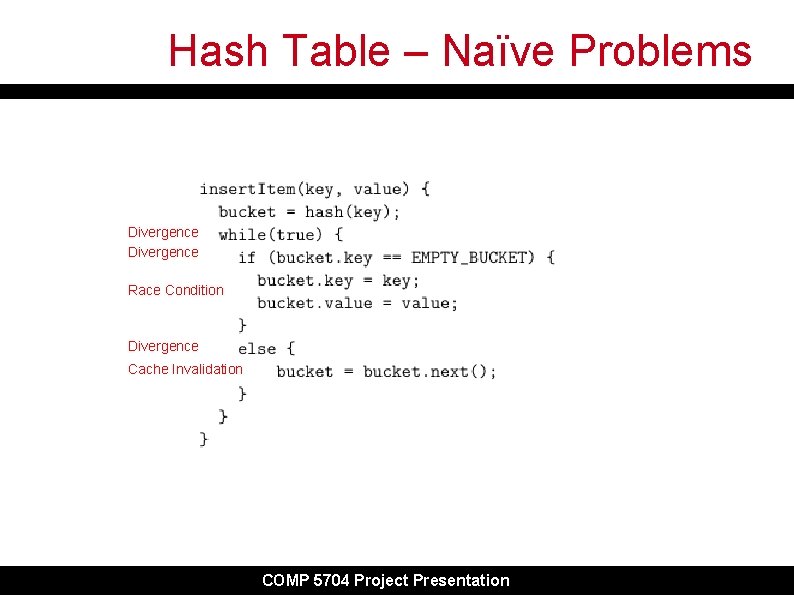

Hash Table – Naïve Problems
• Divergence
• Race condition
• Cache invalidation

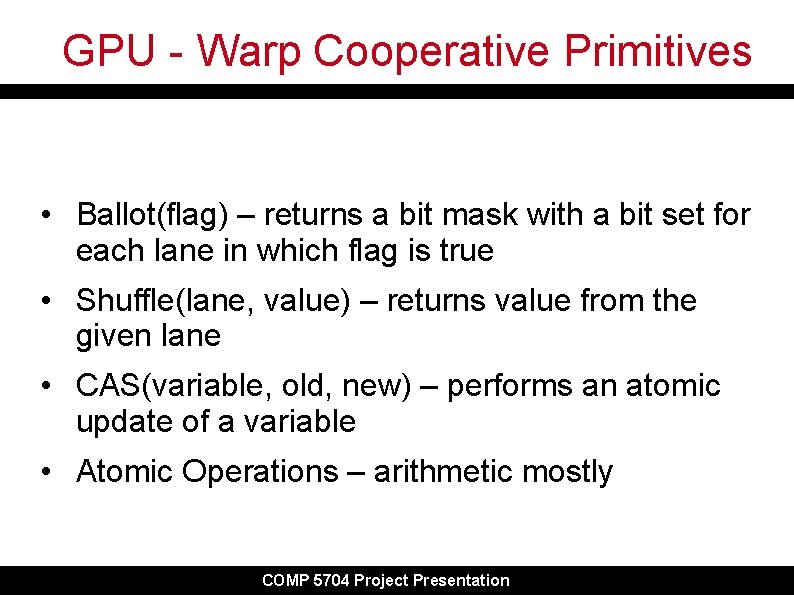

GPU - Warp Cooperative Primitives
• Ballot(flag): returns a bit mask with one bit set for each lane in which flag is true
• Shuffle(lane, value): returns value as held by the given lane
• CAS(variable, old, new): atomically sets variable to new if it currently equals old (compare-and-swap)
• Other atomic operations: mostly arithmetic
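The primitives can be modelled sequentially with one array element per lane. The sketch below (the names `Lanes`, `ballot`, `shuffle`, and `first_lane` are hypothetical) mirrors what CUDA's `__ballot_sync`, `__shfl_sync`, and `__ffs` compute; note that `__ffs` is 1-based and returns 0 for an empty mask, whereas `first_lane` here is 0-based and returns -1. Only the broadcast form of shuffle is shown, in which every lane reads from the same source lane.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr int kWarpSize = 32;
template <class T>
using Lanes = std::array<T, kWarpSize>;  // one value per lane of a warp

// Ballot(flag): bit i of the result is set iff lane i's flag is true.
uint32_t ballot(const Lanes<bool>& flag) {
  uint32_t mask = 0;
  for (int lane = 0; lane < kWarpSize; ++lane)
    if (flag[lane]) mask |= 1u << lane;
  return mask;
}

// Shuffle(lane, value), broadcast form: every lane receives the value
// held by src_lane.
template <class T>
Lanes<T> shuffle(const Lanes<T>& value, int src_lane) {
  assert(0 <= src_lane && src_lane < kWarpSize);
  Lanes<T> out;
  out.fill(value[src_lane]);
  return out;
}

// Index of the lowest set bit (0-based), or -1 for an empty mask. Applied
// to a ballot result, it picks the "first lane" that has work.
int first_lane(uint32_t mask) {
  for (int lane = 0; lane < kWarpSize; ++lane)
    if (mask & (1u << lane)) return lane;
  return -1;
}
```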

Slab Hash - Overview
• Chained hash table implementation on the GPU
• Buckets (key/value slots) are stored in slabs, with custom memory management
• A slab is 128 bytes (32 words of 32 bits, one per warp lane); for key/value pairs it holds 15 pairs plus a pointer to the next slab
• Provides C++ APIs for insert, search, delete, and mixed workloads
• Typical use pattern: bulk build, then periodic insert, search, and delete
• Does not use locks, so it is highly concurrent

Slab Hash – Warp Cooperative
• Recall that a warp is made up of 32 threads running the same kernel in “lock step”, with fast shared memory and state-sharing primitives
• Idea: turn the insert algorithm “on its head”: instead of each thread in a warp inserting its own item, the threads cooperate on each of their items in turn
• Essentially, rewrite the insert algorithm to run at the warp level, not the thread level

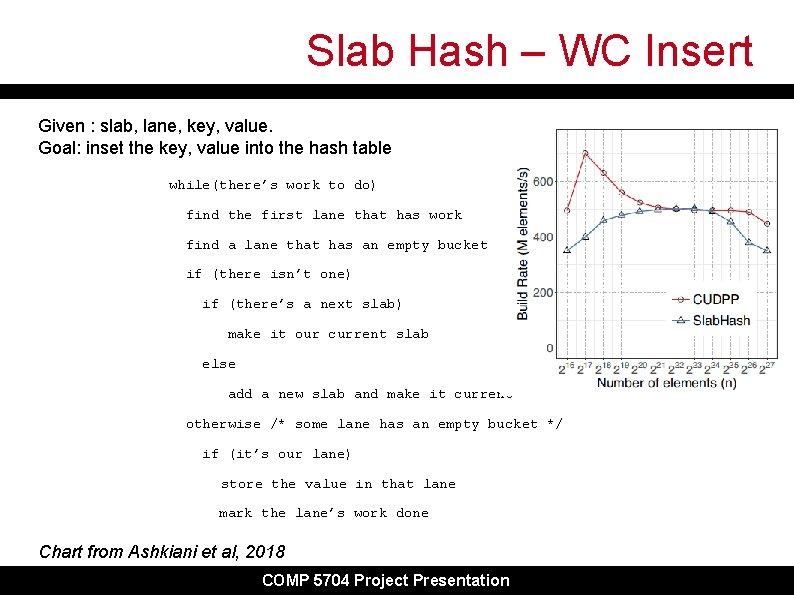

Slab Hash – WC Insert
Given: slab, lane, key, value. Goal: insert the (key, value) pair into the hash table.

    while (there's work to do)
        find the first lane that has work; broadcast its key, value
        find a lane that has an empty bucket
        if (there isn't one)
            if (there's a next slab)
                make it our current slab
            else
                add a new slab and make it current
        otherwise /* some lane has an empty bucket */
            if (it's our lane)
                store the key, value in that bucket (using CAS)
            if (the store succeeded)
                mark the first lane's work done

Chart from Ashkiani et al., 2018

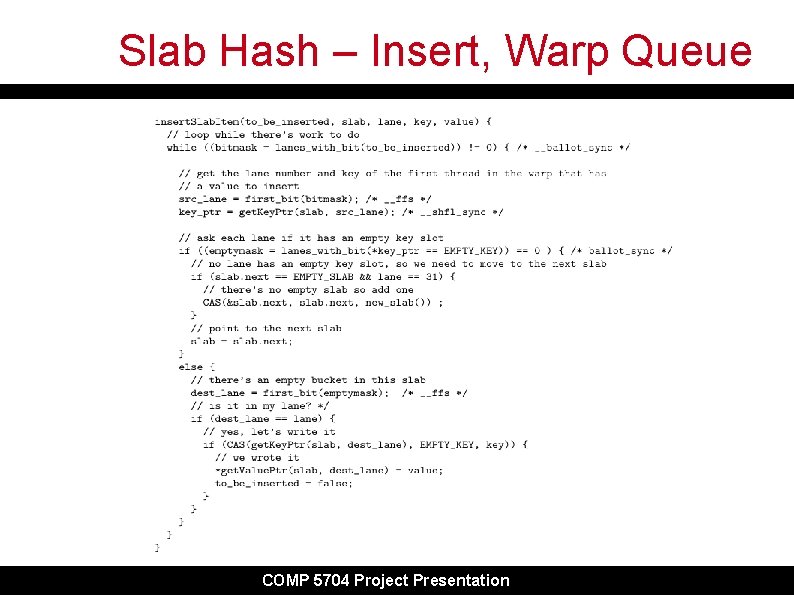
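The insert loop above can be simulated on the CPU to check its control flow. This is a simplified sketch under stated assumptions, not Slab Hash's code: a slab holds 32 keys only (one slot per lane, no values), the next-slab link is a separate field, bucket b's slab list starts at `pool[b]`, the bucket is `key mod num_buckets`, and the per-lane ballots and the shuffle are written as loops over the 32 lanes. `Slab`, `first_lane`, and `warp_insert` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

constexpr int kWarpSize = 32;
constexpr uint32_t kEmpty = 0xFFFFFFFFu;

// Simplified slab: one key slot per lane plus a separate next-slab index.
struct Slab {
  std::array<uint32_t, kWarpSize> keys;
  int next = -1;  // index of the next slab in the pool, -1 if none
  Slab() { keys.fill(kEmpty); }
};

// Lowest set bit (0-based), or -1 for an empty mask.
int first_lane(uint32_t mask) {
  for (int lane = 0; lane < kWarpSize; ++lane)
    if (mask & (1u << lane)) return lane;
  return -1;
}

// Warp-cooperative insert of each lane's key (lanes with has_work == true).
void warp_insert(std::vector<Slab>& pool, uint32_t num_buckets,
                 const std::array<uint32_t, kWarpSize>& key,
                 std::array<bool, kWarpSize> has_work) {
  for (;;) {
    uint32_t work_queue = 0;  // ballot(has_work)
    for (int l = 0; l < kWarpSize; ++l)
      if (has_work[l]) work_queue |= 1u << l;
    if (work_queue == 0) return;  // no lane has work left

    int src = first_lane(work_queue);             // first lane with work
    uint32_t k = key[src];                        // shuffle: broadcast its key
    int cur = static_cast<int>(k % num_buckets);  // hypothetical hash

    for (;;) {
      uint32_t empty = 0;  // ballot(my slot in the current slab is empty)
      for (int l = 0; l < kWarpSize; ++l)
        if (pool[cur].keys[l] == kEmpty) empty |= 1u << l;
      if (empty != 0) {
        // The lane owning this empty slot stores the key (a CAS on a GPU).
        pool[cur].keys[first_lane(empty)] = k;
        has_work[src] = false;  // the source lane's work is done
        break;
      }
      if (pool[cur].next < 0) {  // no next slab: allocate and link one
        int fresh = static_cast<int>(pool.size());
        pool.emplace_back();
        pool[cur].next = fresh;
      }
      cur = pool[cur].next;  // move on to the next slab
    }
  }
}
```

On the GPU, the store is a CAS by the lane that owns the empty slot; if another warp claims that slot first, the CAS fails and the warp re-reads the slab and retries, a case the sequential model never hits.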

Slab Hash – Insert, Warp Queue

Slab Hash - Search, Delete
• Search algorithm (individual key):
  • Work queue like insert: choose a lane that has work and assign the work to the lane that “owns” that bucket
  • Complications: “not found”, multiple slabs
• Delete algorithm:
  • Again a work queue; move the work to the “owner” lane
  • Same complications as search
  • Make sure the delete is atomic
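Search follows the same work-queue pattern. In the sketch below (assuming a keys-only slab of 32 slots, one per lane, with a separate next-slab index, bucket = `key mod num_buckets`; `warp_search` is a hypothetical name), the first lane with work broadcasts its key, every lane compares it with its own slot, and a ballot over the matches shows whether an owner lane exists in the current slab. Reaching the end of the slab list is the “not found” case. Keys must not equal the `kEmpty` sentinel.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

constexpr int kWarpSize = 32;
constexpr uint32_t kEmpty = 0xFFFFFFFFu;

struct Slab {
  std::array<uint32_t, kWarpSize> keys;
  int next = -1;  // index of the next slab in the pool, -1 if none
  Slab() { keys.fill(kEmpty); }
};

int first_lane(uint32_t mask) {
  for (int lane = 0; lane < kWarpSize; ++lane)
    if (mask & (1u << lane)) return lane;
  return -1;
}

// Returns, per lane, whether that lane's key was found.
std::array<bool, kWarpSize> warp_search(const std::vector<Slab>& pool,
                                        uint32_t num_buckets,
                                        const std::array<uint32_t, kWarpSize>& key,
                                        std::array<bool, kWarpSize> has_work) {
  std::array<bool, kWarpSize> found{};
  for (;;) {
    uint32_t queue = 0;  // ballot(has_work)
    for (int l = 0; l < kWarpSize; ++l)
      if (has_work[l]) queue |= 1u << l;
    if (queue == 0) return found;

    int src = first_lane(queue);
    uint32_t k = key[src];  // shuffle: broadcast the key being searched
    int cur = static_cast<int>(k % num_buckets);
    while (cur >= 0) {
      uint32_t match = 0;  // ballot(my slot holds k)
      for (int l = 0; l < kWarpSize; ++l)
        if (pool[cur].keys[l] == k) match |= 1u << l;
      if (match != 0) {  // an owner lane holds the key
        found[src] = true;
        break;
      }
      cur = pool[cur].next;  // -1 ends the chain: "not found"
    }
    has_work[src] = false;
  }
}
```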

Gel Hash
• Chained hash table implementation
• Supports locking (reader locks)
• Adaptive thread- or warp-level operations
  • Tunable via workload parameters to choose between thread and warp operations
• Outperforms SlabHash for n up to ~128K
• SlabHash outperforms it when n is ~4M or higher

Warp Core
• Open addressing hash table implementation
• Hybrid probing scheme combining double hashing and linear probing
• Warp-cooperative kernel operations
• APIs for maps and sets; unique/non-unique keys
• Multi-GPU support
• Outperforms SlabHash at load factors above 0.70
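One way to picture a hybrid probing scheme is sketched below: double hashing chooses which window of W consecutive slots to try next, and linear probing walks the slots inside that window, so a group of W lanes could test a whole window in one step. The hash constants, the aligned-window layout, and `probe_slots` are assumptions for illustration; this is not Warp Core's actual code.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Slot indices visited for `key`, for a table of num_windows * window slots.
std::vector<uint64_t> probe_slots(uint32_t key, uint64_t num_windows,
                                  uint64_t window, uint64_t max_windows) {
  assert(num_windows > 1 && window > 0);
  uint64_t h1 = (key * 0x9E3779B1ull) % num_windows;  // hypothetical hash 1
  uint64_t h2 = 1 + key % (num_windows - 1);          // hypothetical hash 2, never 0
  std::vector<uint64_t> slots;
  for (uint64_t i = 0; i < max_windows; ++i) {
    uint64_t w = (h1 + i * h2) % num_windows;  // double hashing over windows
    for (uint64_t j = 0; j < window; ++j)
      slots.push_back(w * window + j);         // linear probing inside a window
  }
  return slots;
}
```

When the number of windows is prime, any step h2 in [1, num_windows - 1] visits every window before repeating, so the probe sequence covers the whole table before it cycles.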

HH - Design Goals
• Novel GPU hash table implementation
• Open addressing collision mechanism
• Improved memory utilization
• Improved performance in certain cases (e.g. small batches, incremental growth)
• At minimum, equal performance

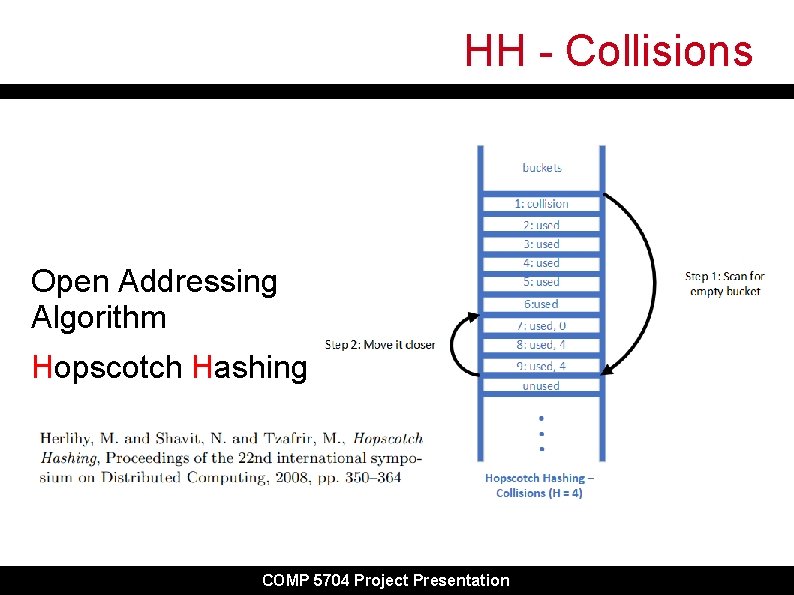

HH - Collisions
• Open addressing algorithm: hopscotch hashing

HH, SlabHash - Findings

| | SlabHash | HH (load factor 0.6) | HH (0.7) | HH (0.8) | HH (0.9) |
|---|---|---|---|---|---|
| Memory (MB) | 13.75 | 20.0 | 17.1 | 15.0 | 13.3 |
| Insert (M ops/s) | 57.1 | 102.6 | 85.7 | 68.7 | 39.8 |
| Search (M ops/s) | 89.7 | 186.7 | 149.1 | 105.3 | 51.8 |

N = 1,000; H = 32 for HH; GeForce GTX 960 GPU (2015)

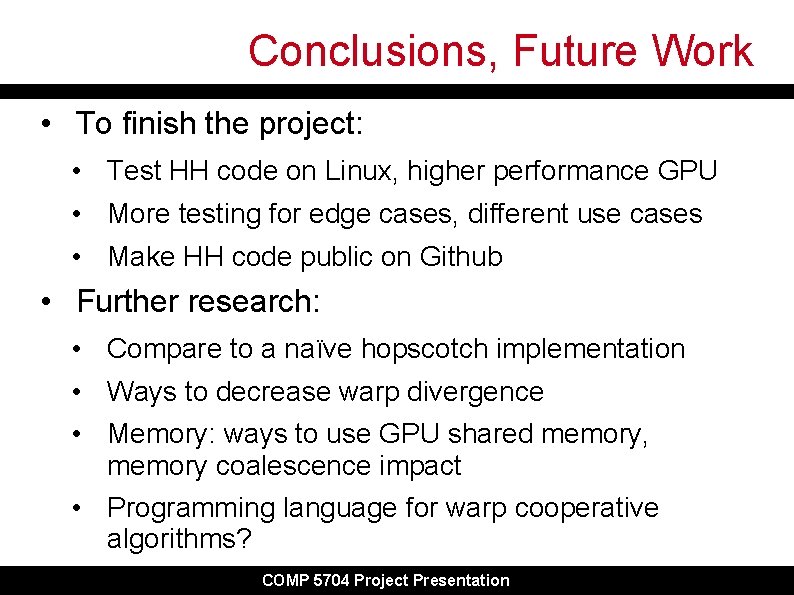
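A quick consistency check on the HH memory row: memory multiplied by load factor α is essentially constant, so for this data set HH's footprint scales as 1/α.

$$
\begin{aligned}
20.0 \times 0.6 &= 12.0 \\
17.1 \times 0.7 &\approx 12.0 \\
15.0 \times 0.8 &= 12.0 \\
13.3 \times 0.9 &\approx 12.0
\end{aligned}
\qquad\Longrightarrow\qquad
M_{\mathrm{HH}}(\alpha) \approx \frac{12\ \mathrm{MB}}{\alpha}
$$

By the same measure, SlabHash's 13.75 MB sits between HH at α = 0.8 and α = 0.9.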

Conclusions, Future Work
• To finish the project:
  • Test HH code on Linux and on a higher-performance GPU
  • More testing for edge cases and different use cases
  • Make HH code public on GitHub
• Further research:
  • Compare to a naïve hopscotch implementation
  • Ways to decrease warp divergence
  • Memory: ways to use GPU shared memory; impact of memory coalescing
  • A programming language for warp-cooperative algorithms?

3 Questions
• Which warp primitive allows data to be transferred from one lane to another: a) ballot, b) shuffle, c) ffs, d) CAS?
• True or false: warp divergence can occur in an arithmetic calculation?
• How many key/value pairs does SlabHash store in a slab? Why that many?

Extra Slides
Keep these for now; some may be useful in a longer presentation for a more general audience.

GPU - Threads and Parallelization
• Diagram
• GPU blocks, processors
• Diagram to show hierarchy, relations

GPU – Memory, Caches
• Diagram
• L2, shared memory, registers
• Hierarchy diagram

Slab Hash – Insert, Shuffle
• Code fragment for insert: shuffle
• All threads in a warp search the current slab for an empty slot

Slab Hash – Insert, Next Slab
• Code fragment for insert: slab update

Slab Hash – Insert, Insert Item
• Code fragment for insert: item insert

HH, SlabHash - Findings
• Theoretical vs. actual speed
• Memory efficiency
• Suitability for applications

Comparison of Existing Designs
• Table of features
  • Hash function
  • Collisions
  • Memory management
  • Use cases, application suitability

Comparison of Performance (table)
• Table
  • Search, insert, delete ops/s
  • Memory efficiency

Limitations, Shortcomings
• Table
  • Cases where performance degrades
  • Interesting cases that current implementations don't optimize (e.g. construction interleaved with search, delete)
  • Memory efficiency challenges
