Dynamic and static hand gesture recognition in computer vision



Dynamic and static hand gesture recognition in computer vision

Andrzej Czyżewski, Bożena Kostek, Piotr Odya, Bartosz Kunka, Michał Lech

Gdansk University of Technology, Faculty of Electronics, Telecommunications and Informatics, Multimedia Systems Dept.

Warsaw, 13.08.2014

Presentation outline

1. Developed gesture recognition system
2. Background / foreground segmentation
3. Recognizing dynamic hand gestures
4. Recognizing static hand gestures
5. Efficiency
6. Video presentations


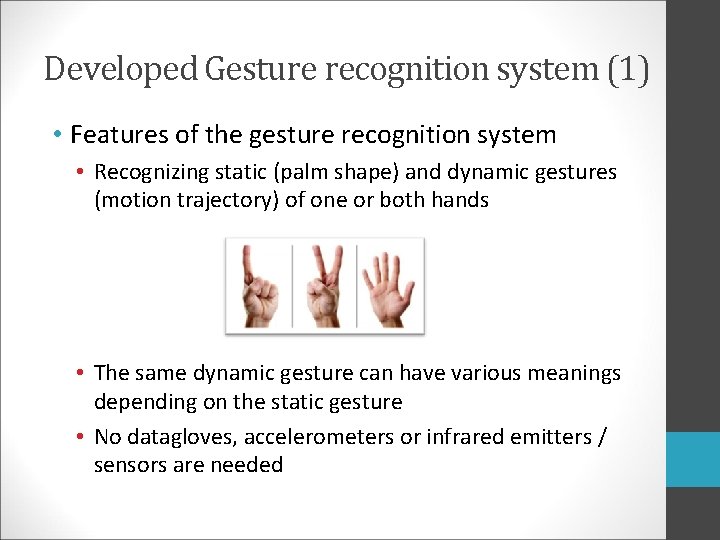

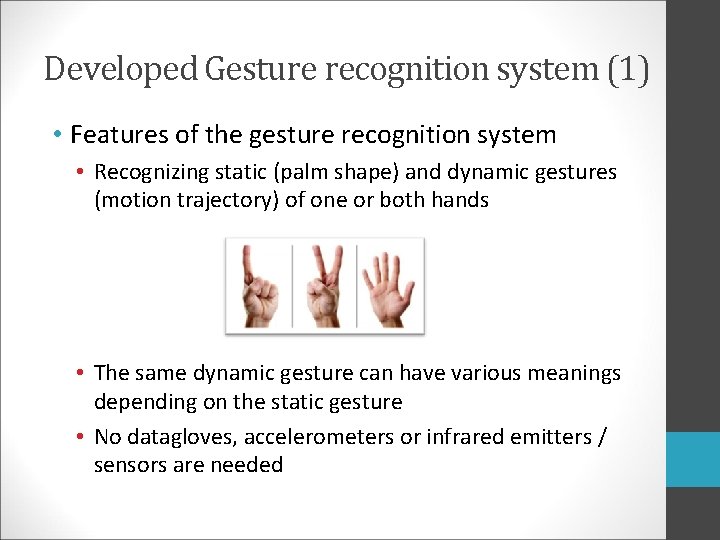

Developed gesture recognition system (1)

- Features of the gesture recognition system
  - Recognizing static (palm shape) and dynamic (motion trajectory) gestures of one or both hands
  - The same dynamic gesture can have various meanings depending on the static gesture
  - No datagloves, accelerometers or infrared emitters / sensors are needed

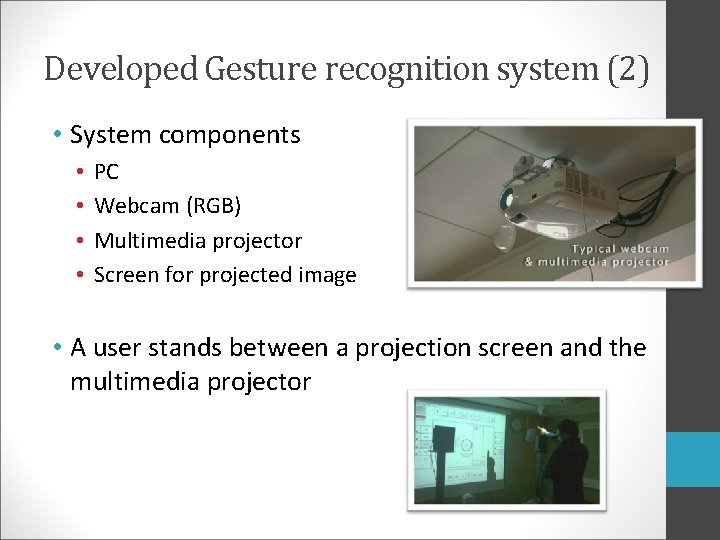

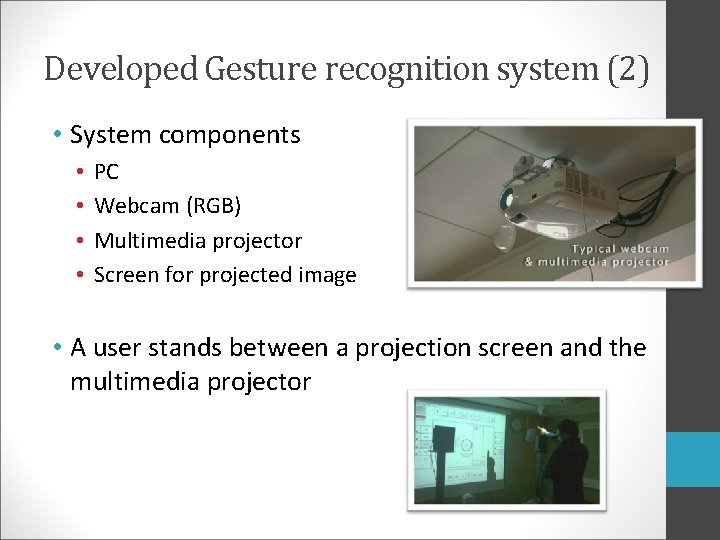

Developed gesture recognition system (2)

- System components
  - PC
  - Webcam (RGB)
  - Multimedia projector
  - Screen for projected image
- A user stands between the projection screen and the multimedia projector

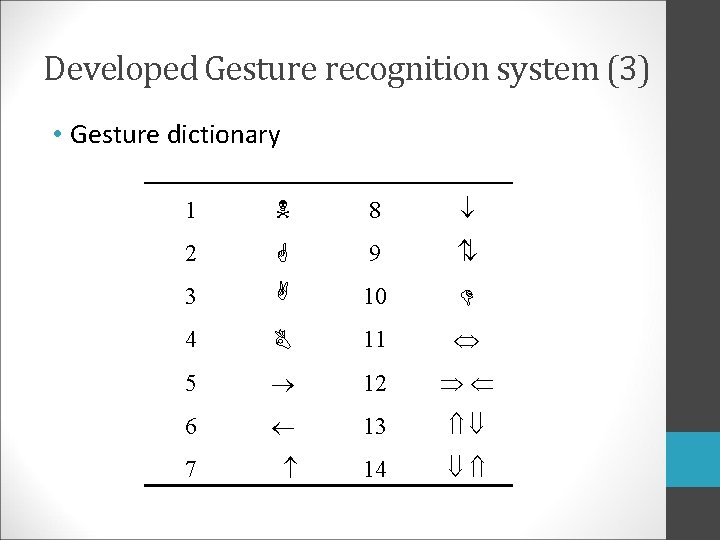

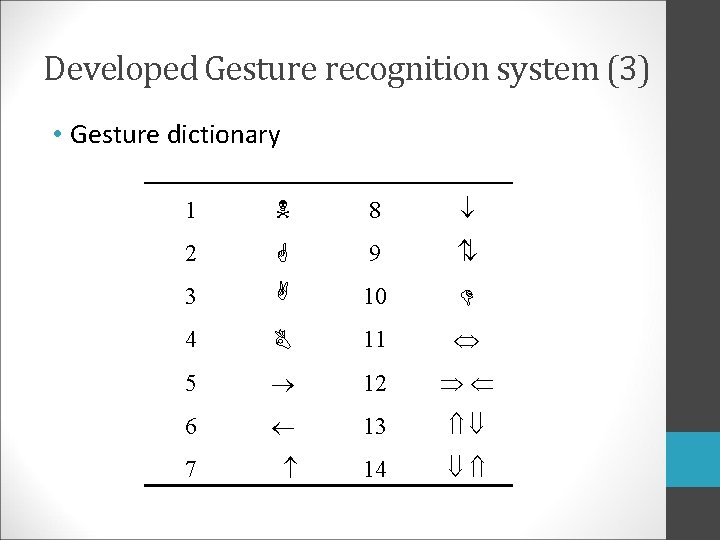

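Developed gesture recognition system (3)

- Gesture dictionary

| No. | Symbol | No. | Symbol |
|---|---|---|---|
| 1 | N | 8 | ↓ |
| 2 | G | 9 | ↓ |
| 3 | | 10 | D |
| 4 | | 11 | ⇔ |
| 5 | → | 12 | ⇒⇐ |
| 6 | ← | 13 | ⇑⇓ |
| 7 | | 14 | ⇓⇑ |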

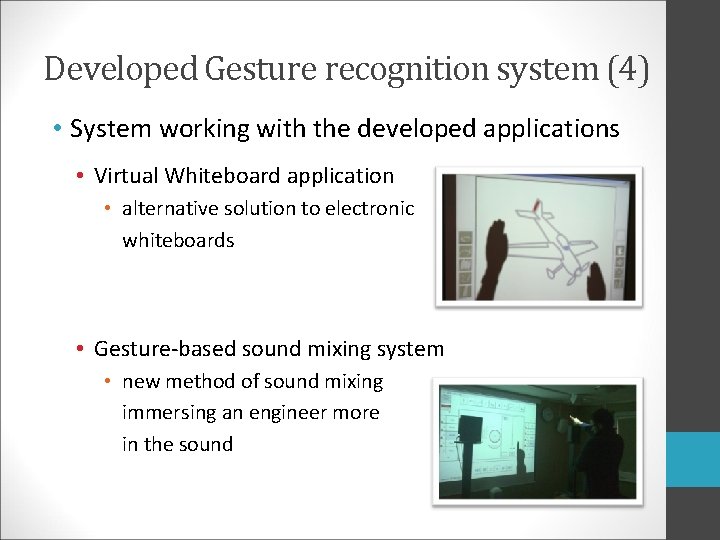

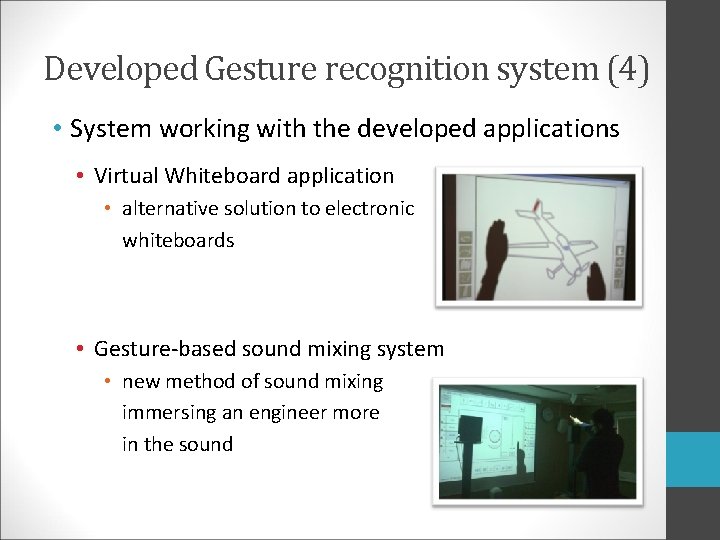

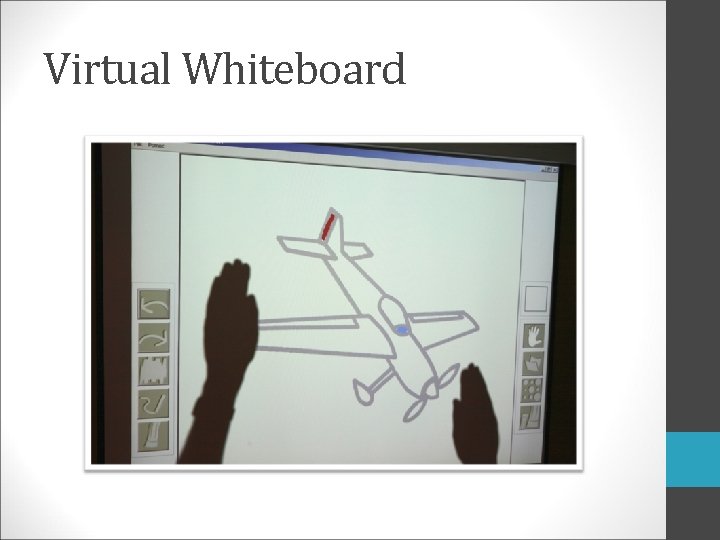

Developed gesture recognition system (4)

- System working with the developed applications
  - Virtual Whiteboard application
    - alternative solution to electronic whiteboards
  - Gesture-based sound mixing system
    - new method of sound mixing that immerses the engineer more deeply in the sound


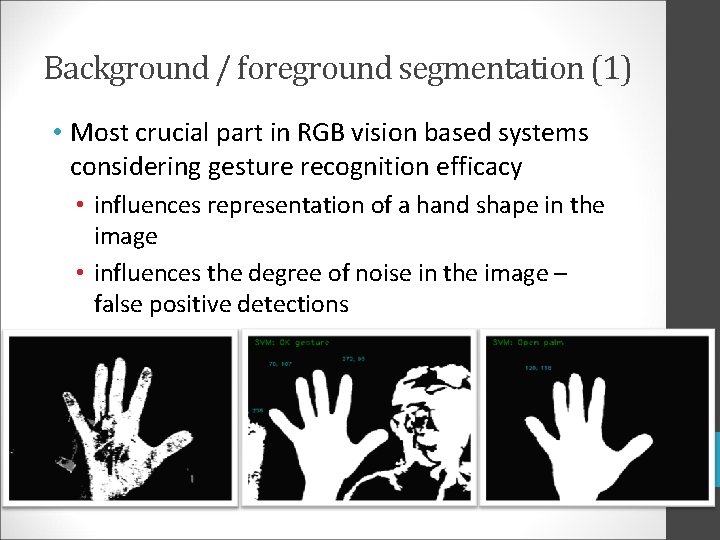

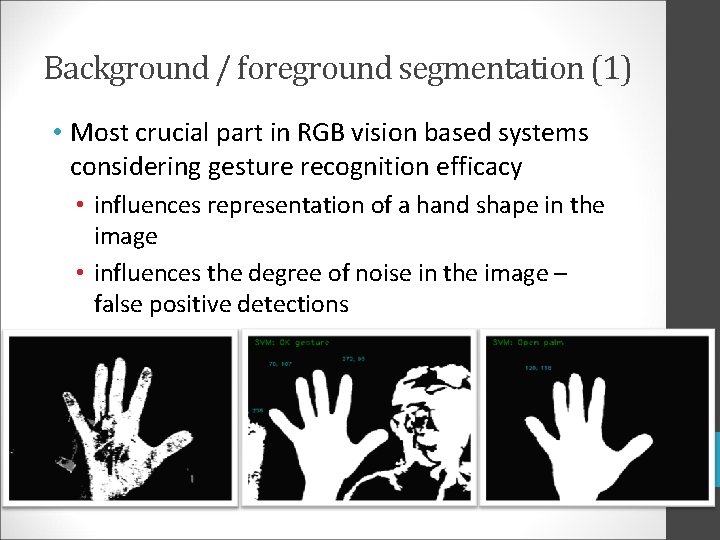

Background / foreground segmentation (1)

- The most crucial part of RGB vision-based systems with respect to gesture recognition efficacy
  - influences the representation of the hand shape in the image
  - influences the degree of noise in the image (false positive detections)

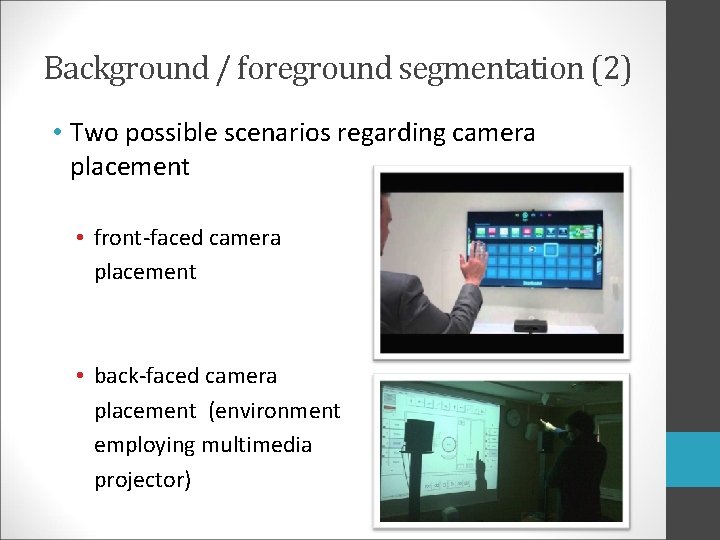

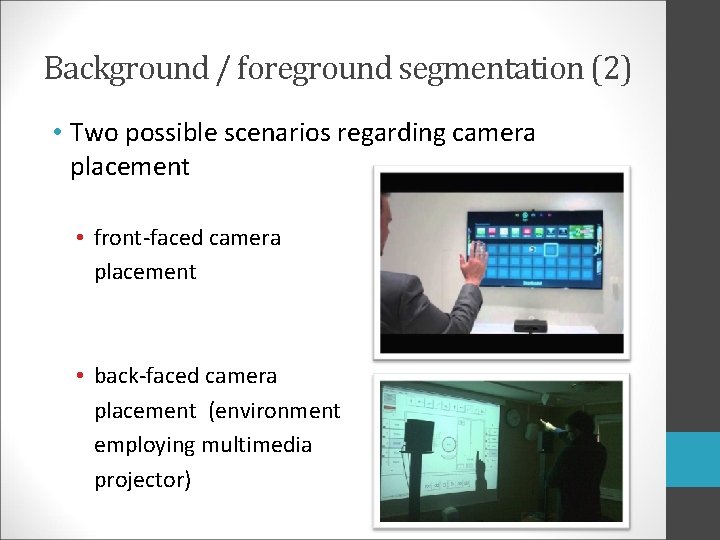

Background / foreground segmentation (2)

- Two possible scenarios regarding camera placement
  - front-faced camera placement
  - back-faced camera placement (environment employing a multimedia projector)

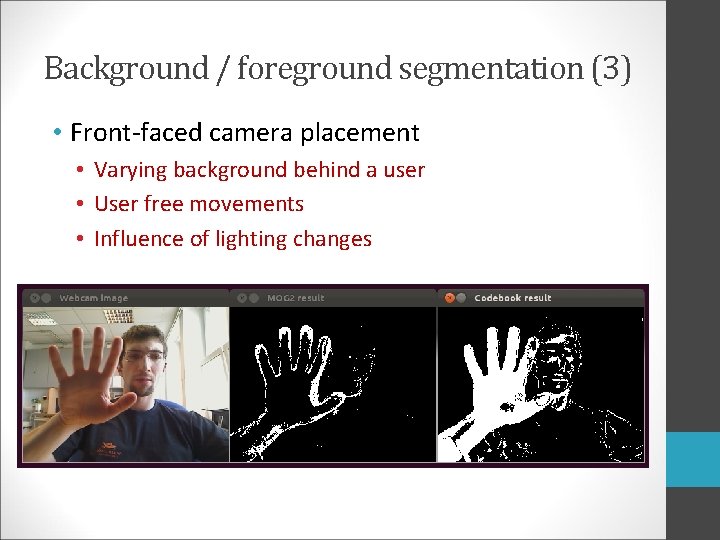

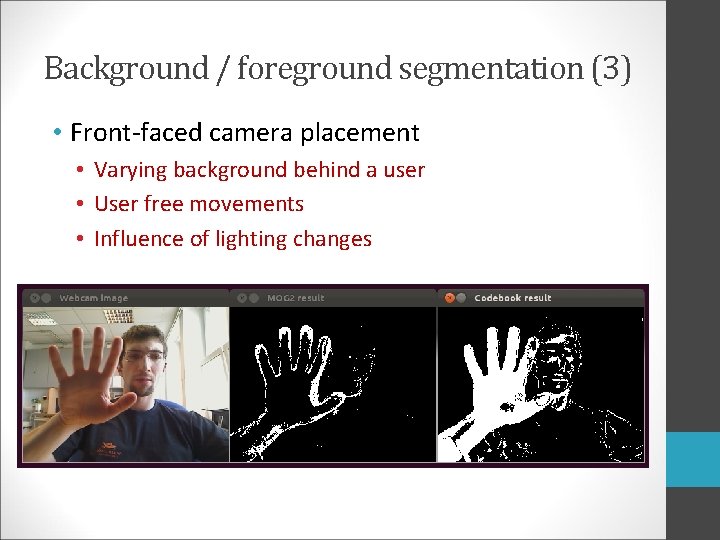

Background / foreground segmentation (3)

- Front-faced camera placement
  - Varying background behind the user
  - Free movements of the user
  - Influence of lighting changes

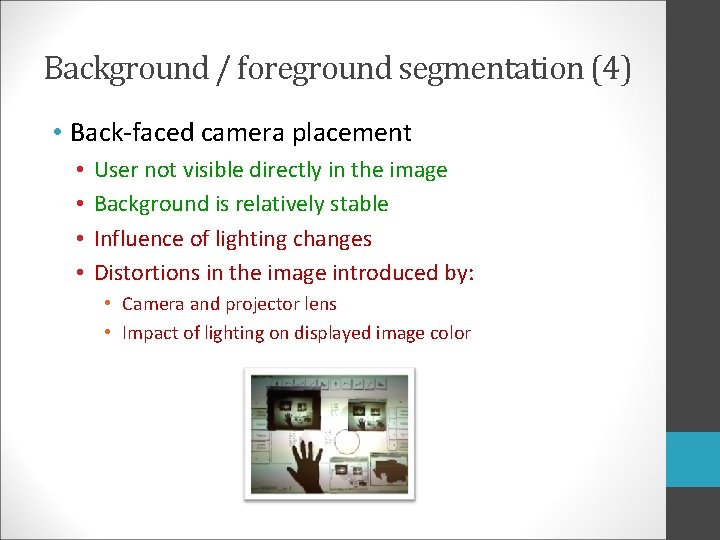

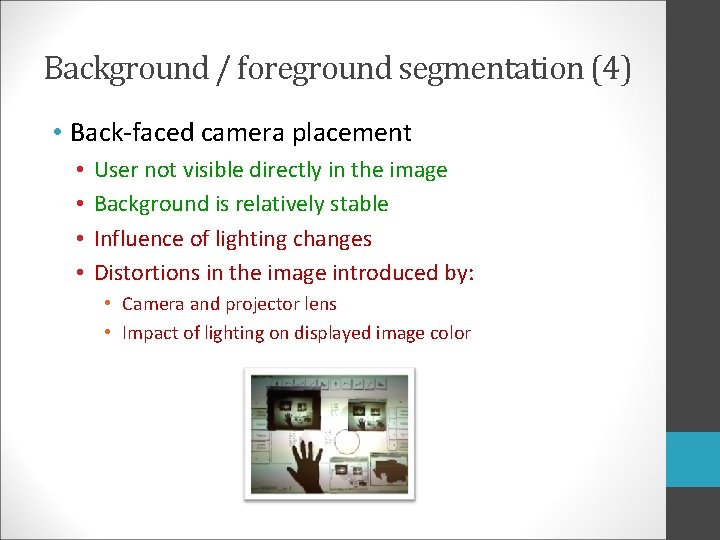

Background / foreground segmentation (4)

- Back-faced camera placement
  - User not visible directly in the image
  - Background is relatively stable
  - Influence of lighting changes
  - Distortions in the image introduced by:
    - Camera and projector lens
    - Impact of lighting on displayed image color

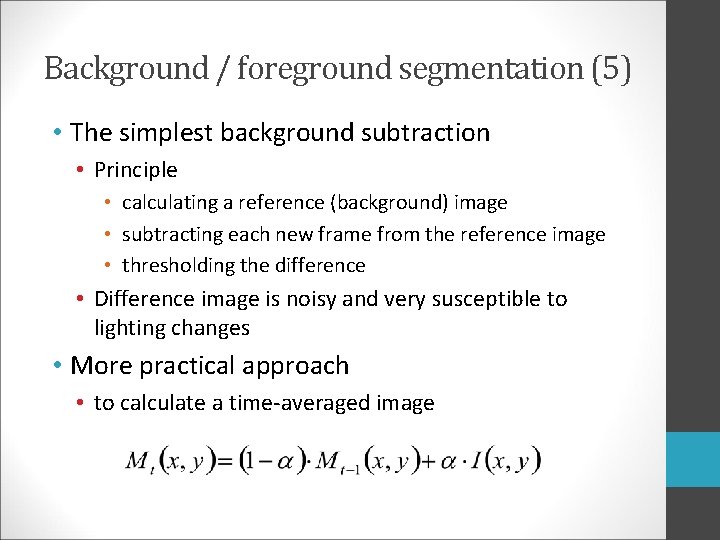

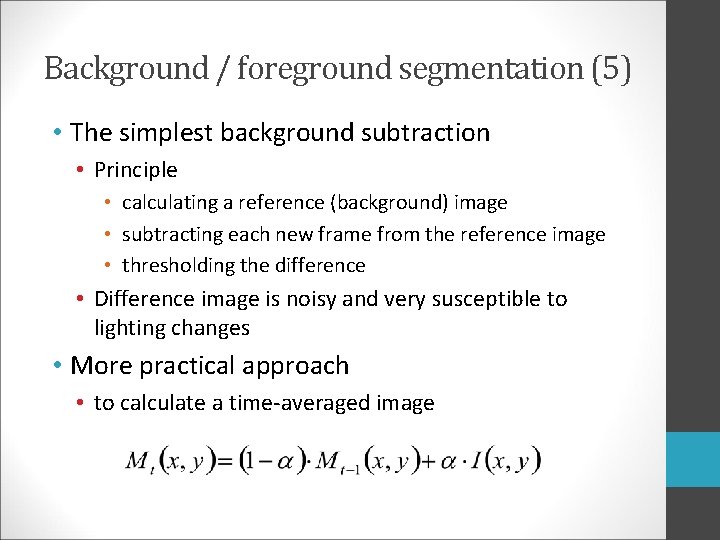

Background / foreground segmentation (5)

- The simplest background subtraction
  - Principle
    - calculating a reference (background) image
    - subtracting each new frame from the reference image
    - thresholding the difference
  - The difference image is noisy and very susceptible to lighting changes
- More practical approach
  - calculating a time-averaged image
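The subtraction and time-averaging steps listed on this slide can be sketched as follows. This is a minimal illustration, not the system's implementation: images are plain nested lists of gray levels, and the blending factor `alpha` and the threshold value are assumed values chosen only for the example.

```python
def update_background(background, frame, alpha=0.05):
    """Blend the new frame into the time-averaged background (alpha is an assumed rate)."""
    return [
        [(1.0 - alpha) * b + alpha * f for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def foreground_mask(background, frame, threshold=30):
    """Mark pixels whose absolute difference from the background exceeds the threshold."""
    return [
        [1 if abs(f - b) > threshold else 0 for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


# Tiny 2x3 grayscale example: a flat background and a frame with one bright pixel.
background = [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
frame = [[12, 10, 200], [9, 11, 10]]

mask = foreground_mask(background, frame)
background = update_background(background, frame)
```

Small differences (sensor noise) stay below the threshold, while the bright pixel is flagged as foreground; the averaged background then drifts slowly towards the new frame, which is what makes it less sensitive to gradual lighting changes than a fixed reference image.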

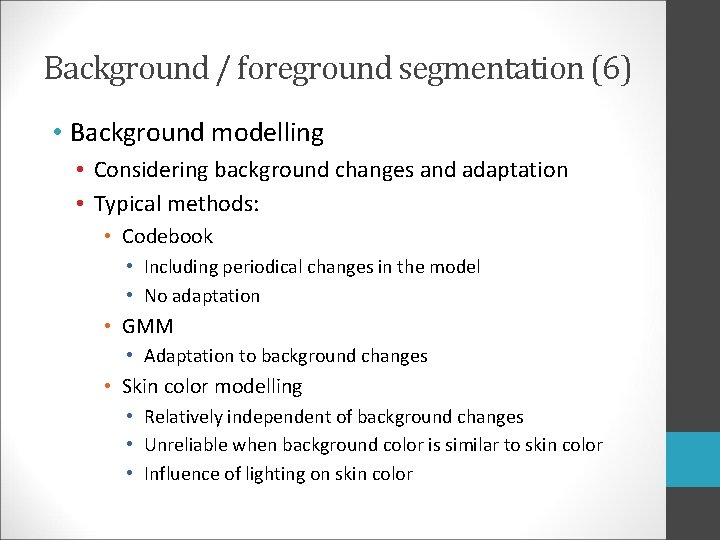

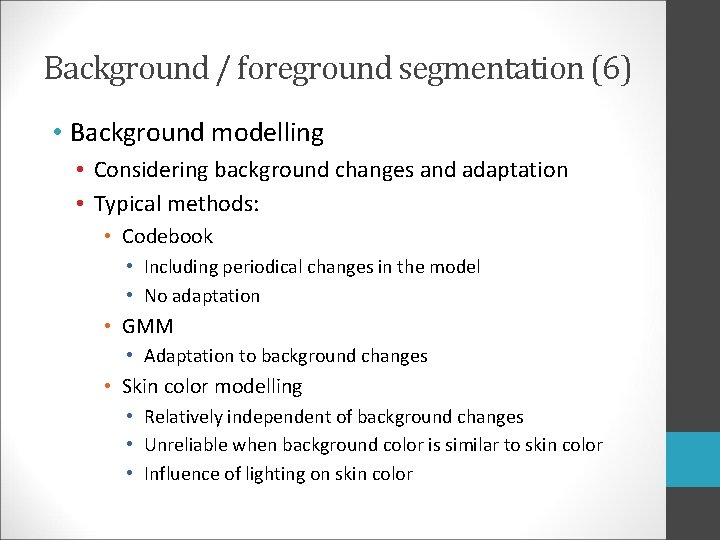

Background / foreground segmentation (6)

- Background modelling
  - Considering background changes and adaptation
  - Typical methods:
    - Codebook
      - Including periodical changes in the model
      - No adaptation
    - GMM
      - Adaptation to background changes
- Skin color modelling
  - Relatively independent of background changes
  - Unreliable when background color is similar to skin color
  - Influence of lighting on skin color

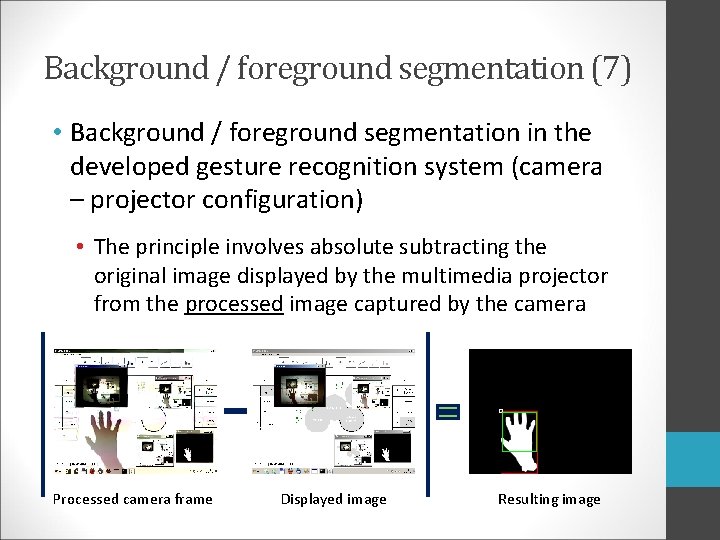

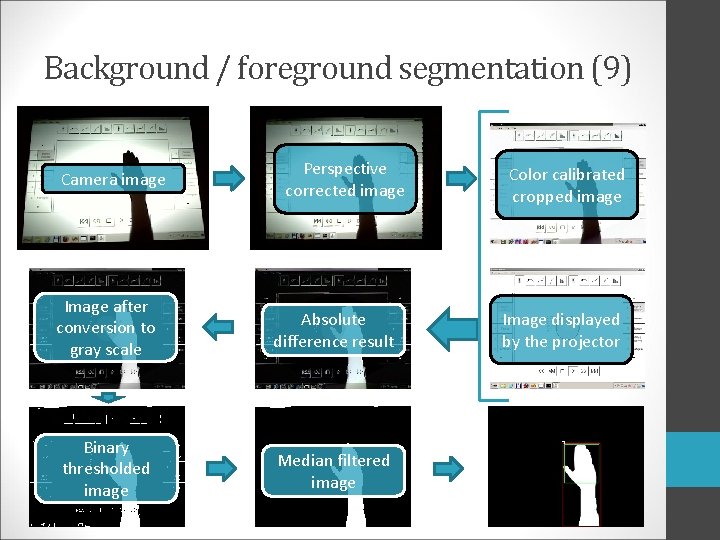

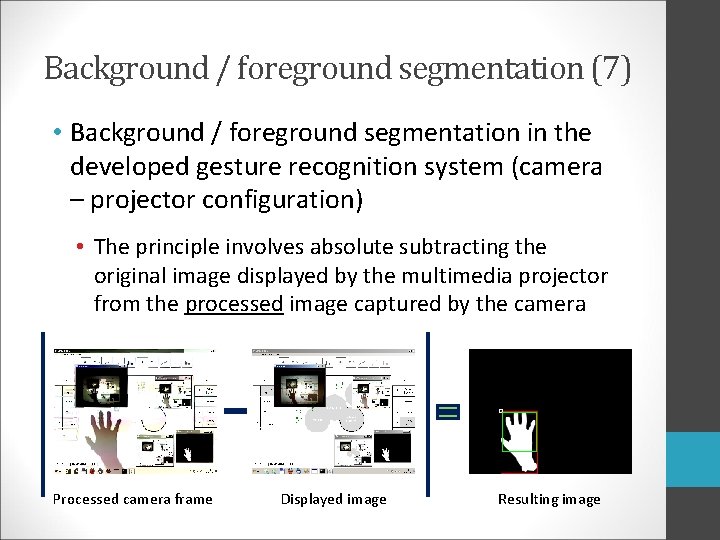

Background / foreground segmentation (7)

- Background / foreground segmentation in the developed gesture recognition system (camera-projector configuration)
  - The principle is to take the absolute difference between the processed image captured by the camera and the original image displayed by the multimedia projector

Figure panels: processed camera frame, displayed image, resulting image.

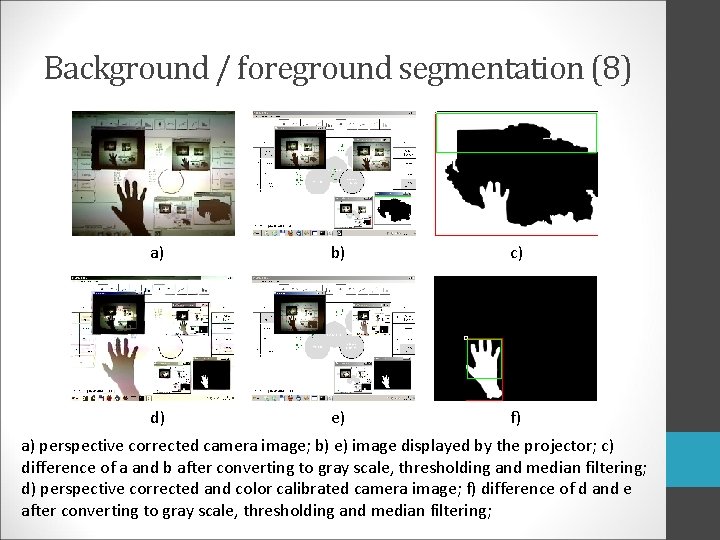

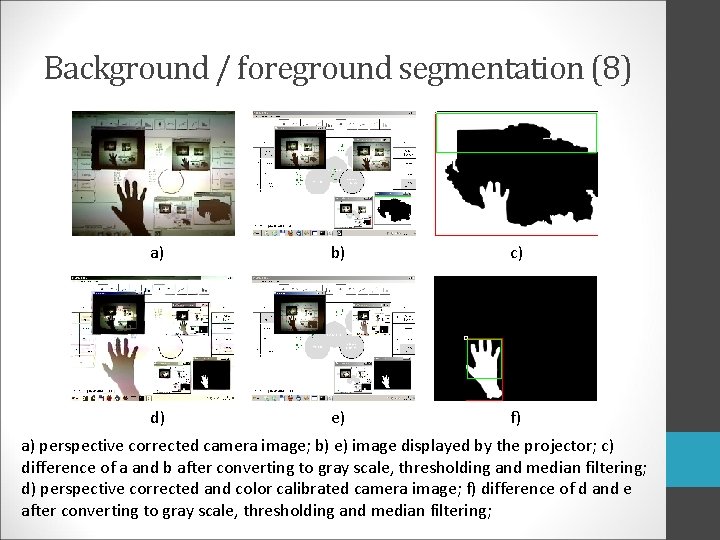

Background / foreground segmentation (8)

Figure panels: a) perspective-corrected camera image; b), e) image displayed by the projector; c) difference of a and b after converting to gray scale, thresholding and median filtering; d) perspective-corrected and color-calibrated camera image; f) difference of d and e after converting to gray scale, thresholding and median filtering.

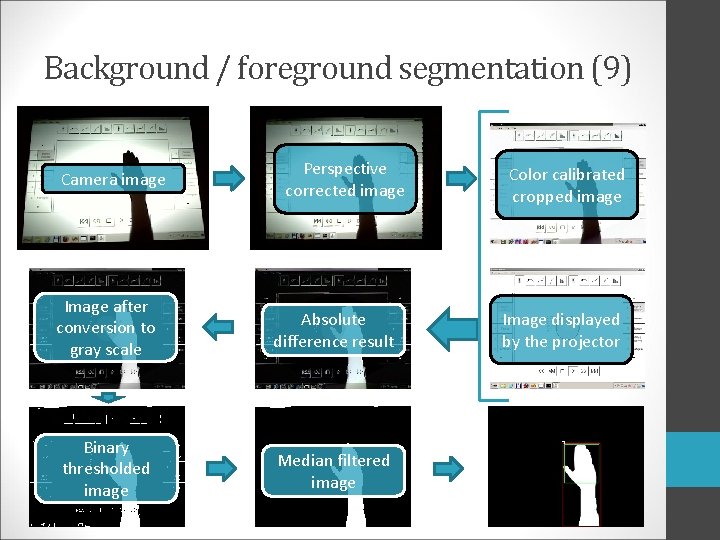

Background / foreground segmentation (9)

Processing diagram blocks: camera image; perspective corrected image; image after conversion to gray scale; absolute difference result; binary thresholded image; median filtered image; color calibrated cropped image; image displayed by the projector.
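The core of this processing chain (gray-scale conversion, absolute difference against the displayed image, thresholding, median filtering) might look like the sketch below. It assumes the camera frame has already been perspective corrected and color calibrated, which the sketch does not attempt; the channel-averaging gray conversion, the threshold level and the 3x3 window are illustrative assumptions, and pure Python lists stand in for real image buffers.

```python
from statistics import median_low


def to_gray(image):
    """Convert rows of (r, g, b) tuples to gray scale by channel averaging (assumed formula)."""
    return [[sum(px) / 3.0 for px in row] for row in image]


def abs_diff(a, b):
    """Pixel-wise absolute difference of two gray images of equal size."""
    return [[abs(x - y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def threshold(image, level):
    """Binary threshold: 255 where the value exceeds `level`, otherwise 0."""
    return [[255 if v > level else 0 for v in row] for row in image]


def median_filter_3x3(image):
    """3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                image[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            row.append(median_low(window))
        out.append(row)
    return out


def segment(camera_rgb, displayed_rgb, level=40):
    """Foreground mask from a calibrated camera frame and the displayed image."""
    diff = abs_diff(to_gray(camera_rgb), to_gray(displayed_rgb))
    return median_filter_3x3(threshold(diff, level))


# Hypothetical 7x7 scene: uniform displayed image, a dark 3x3 region where the
# hand occludes the projection, and one isolated noise pixel in a corner.
SIZE = 7
displayed = [[(200, 200, 200)] * SIZE for _ in range(SIZE)]
camera = [row[:] for row in displayed]
for y in range(2, 5):
    for x in range(2, 5):
        camera[y][x] = (20, 20, 20)
camera[0][6] = (0, 0, 0)

mask = segment(camera, displayed)
```

In this toy scene the median filter removes the isolated noise pixel while the centre of the occluded region stays marked as foreground, which mirrors the role median filtering plays in the figure panels of the previous slide.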


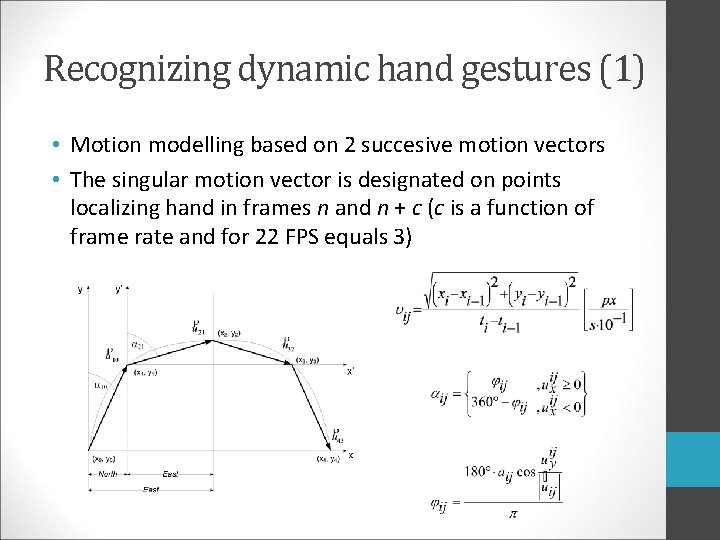

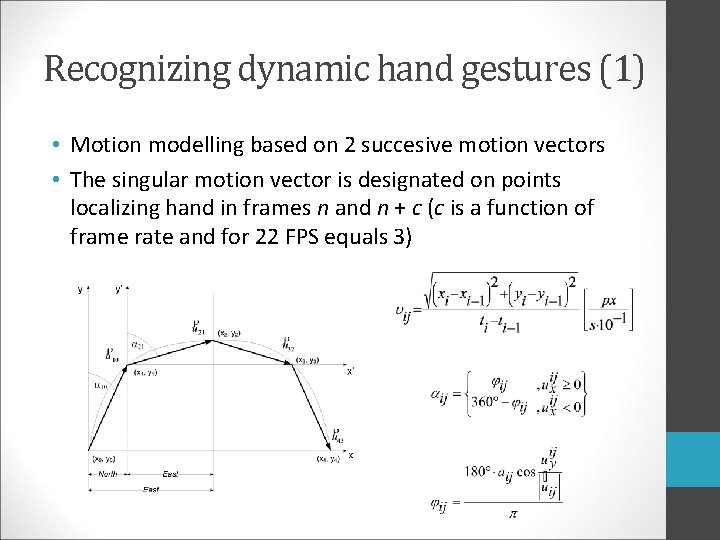

Recognizing dynamic hand gestures (1)

- Motion modelling based on 2 successive motion vectors
- A single motion vector is determined from the points locating the hand in frames n and n + c (c is a function of the frame rate; for 22 FPS, c = 3)
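As a rough illustration of this motion model, the sketch below builds two successive motion vectors from three hand positions and derives a speed and a direction label for each. The four-way compass quantization, the pixels-per-frame speed unit and the sample coordinates are assumptions made for the example; the slides only state that vectors span frames n and n + c.

```python
import math

FRAME_STEP = 3  # c = 3 at 22 FPS, as stated on the slide


def motion_vector(p_start, p_end):
    """Vector between the hand positions in frames n and n + c (image coordinates)."""
    return (p_end[0] - p_start[0], p_end[1] - p_start[1])


def speed_and_direction(vector, frame_step=FRAME_STEP):
    """Return speed in pixels per frame and a compass label for the vector.

    Image y grows downwards, so it is negated to make 'north' point up.
    """
    dx, dy = vector
    speed = math.hypot(dx, dy) / frame_step
    angle = math.degrees(math.atan2(-dy, dx)) % 360.0
    labels = ["east", "north", "west", "south"]
    return speed, labels[int(((angle + 45.0) % 360.0) // 90.0)]


# Hypothetical hand positions in frames n, n + c and n + 2c.
positions = [(100, 200), (100, 170), (70, 170)]
v0 = motion_vector(positions[0], positions[1])
v1 = motion_vector(positions[1], positions[2])
first = speed_and_direction(v0)
second = speed_and_direction(v1)
```

Here the hand first moves up and then to the left, so the two vectors are labelled north and west, the kind of direction pair that the fuzzy rules on the following slides take as input.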

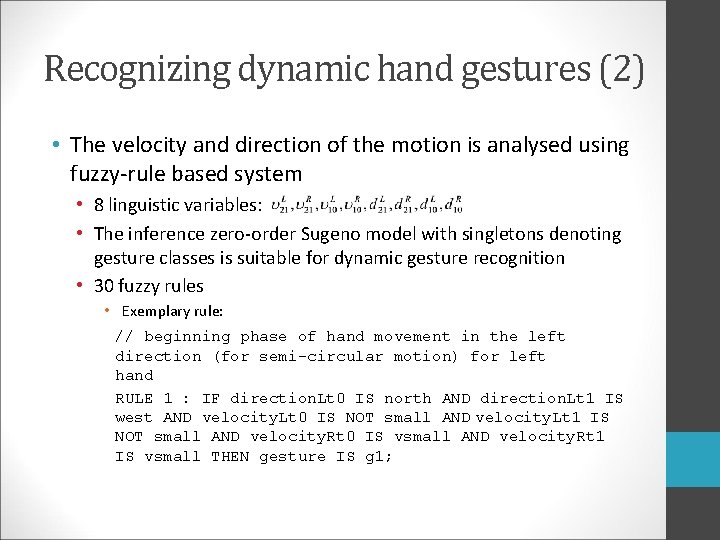

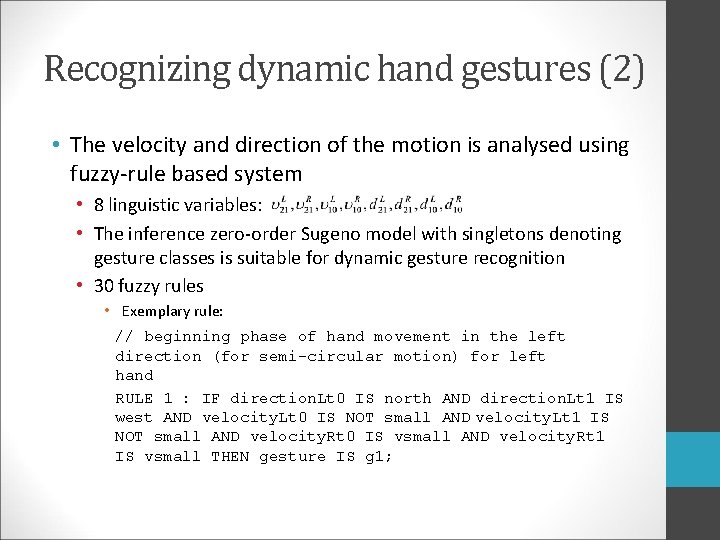

Recognizing dynamic hand gestures (2)

- The velocity and direction of the motion are analysed using a fuzzy-rule-based system
  - 8 linguistic variables
  - The zero-order Sugeno inference model with singletons denoting gesture classes is suitable for dynamic gesture recognition
  - 30 fuzzy rules
- Exemplary rule:

`// beginning phase of hand movement in the left direction (for semi-circular motion) for left hand`

`RULE 1 : IF directionLt0 IS north AND directionLt1 IS west AND velocityLt0 IS NOT small AND velocityLt1 IS NOT small AND velocityRt0 IS vsmall AND velocityRt1 IS vsmall THEN gesture IS g1;`

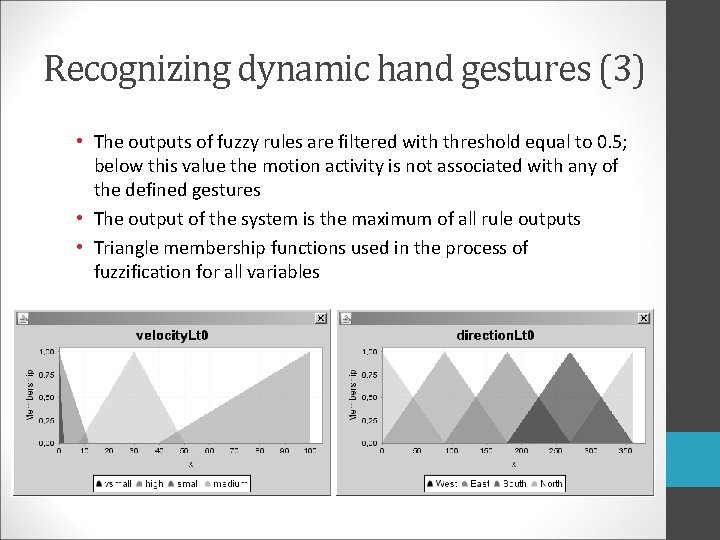

Recognizing dynamic hand gestures (3)

- The outputs of the fuzzy rules are filtered with a threshold equal to 0.5; below this value the motion activity is not associated with any of the defined gestures
- The output of the system is the maximum of all rule outputs
- Triangle membership functions are used in the process of fuzzification for all variables
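A minimal sketch of how such a rule could be evaluated is given below, using RULE 1 from the exemplary rule as the only entry in the rule base. The membership function breakpoints (degrees for direction, pixels per frame for velocity) are invented for illustration, and AND as minimum with NOT as complement is an assumed operator choice; the slides specify only triangular membership functions, singleton outputs, the 0.5 threshold and the maximum over rule outputs.

```python
def triangle(x, left, peak, right):
    """Triangular membership function with its maximum of 1.0 at `peak`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical fuzzy sets: (left, peak, right) breakpoints.
SETS = {
    "north": (0.0, 90.0, 180.0),
    "west": (90.0, 180.0, 270.0),
    "vsmall": (-2.0, 0.0, 2.0),
    "small": (0.0, 3.0, 6.0),
}


def mu(name, x):
    """Membership degree of x in the named fuzzy set."""
    return triangle(x, *SETS[name])


def rule_1(v):
    """Firing strength of RULE 1 (assumed: AND = min, NOT = 1 - membership)."""
    return min(
        mu("north", v["directionLt0"]),
        mu("west", v["directionLt1"]),
        1.0 - mu("small", v["velocityLt0"]),
        1.0 - mu("small", v["velocityLt1"]),
        mu("vsmall", v["velocityRt0"]),
        mu("vsmall", v["velocityRt1"]),
    )


# Each rule is paired with the singleton gesture class it concludes.
RULES = [(rule_1, "g1")]


def classify(v, threshold=0.5):
    """Pick the strongest rule; below the threshold no gesture is reported."""
    strength, gesture = max((rule(v), label) for rule, label in RULES)
    return gesture if strength >= threshold else None


left_hand_moving = {
    "directionLt0": 90.0, "directionLt1": 180.0,
    "velocityLt0": 10.0, "velocityLt1": 10.0,
    "velocityRt0": 0.5, "velocityRt1": 0.5,
}
both_hands_moving = dict(left_hand_moving, velocityRt0=1.5, velocityRt1=1.5)

result_a = classify(left_hand_moving)
result_b = classify(both_hands_moving)
```

With the right hand nearly still the rule fires with strength 0.75 and the gesture is accepted; when the right hand also moves, the strength drops to 0.25 and the 0.5 threshold rejects the motion.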

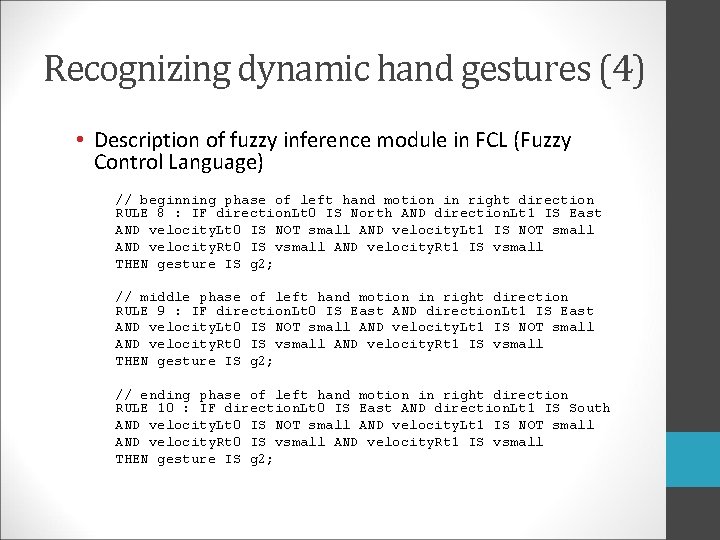

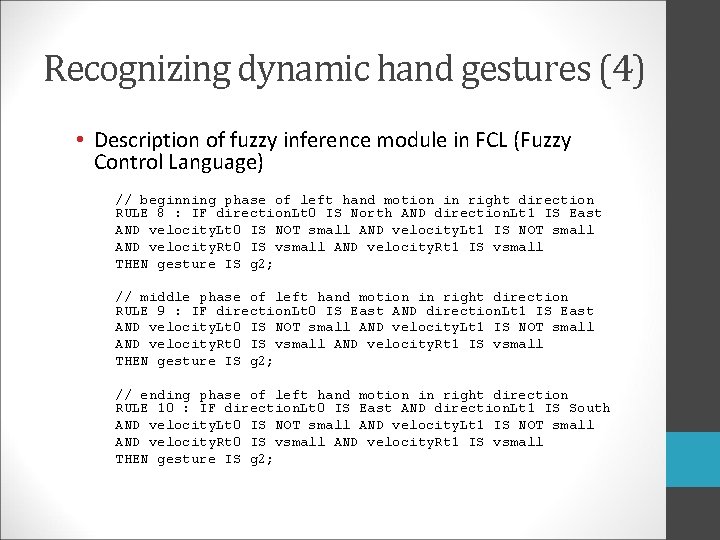

Recognizing dynamic hand gestures (4)

- Description of the fuzzy inference module in FCL (Fuzzy Control Language)

`// beginning phase of left hand motion in right direction`

`RULE 8 : IF directionLt0 IS North AND directionLt1 IS East AND velocityLt0 IS NOT small AND velocityLt1 IS NOT small AND velocityRt0 IS vsmall AND velocityRt1 IS vsmall THEN gesture IS g2;`

`// middle phase of left hand motion in right direction`

`RULE 9 : IF directionLt0 IS East AND directionLt1 IS East AND velocityLt0 IS NOT small AND velocityLt1 IS NOT small AND velocityRt0 IS vsmall AND velocityRt1 IS vsmall THEN gesture IS g2;`

`// ending phase of left hand motion in right direction`

`RULE 10 : IF directionLt0 IS East AND directionLt1 IS South AND velocityLt0 IS NOT small AND velocityLt1 IS NOT small AND velocityRt0 IS vsmall AND velocityRt1 IS vsmall THEN gesture IS g2;`

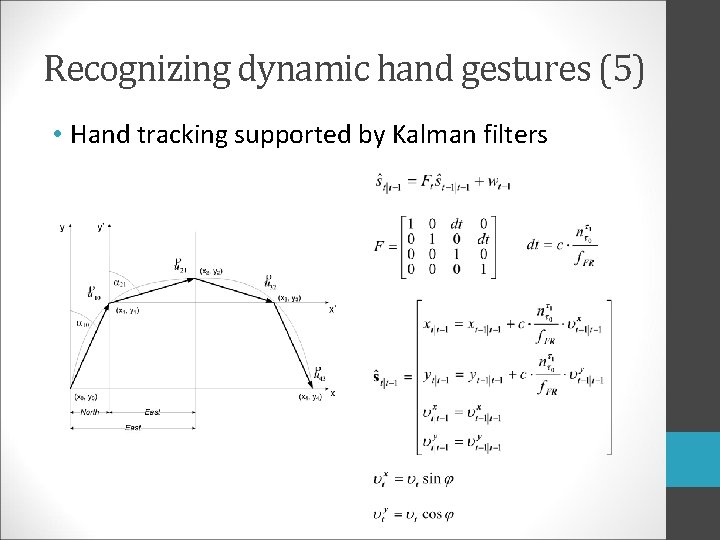

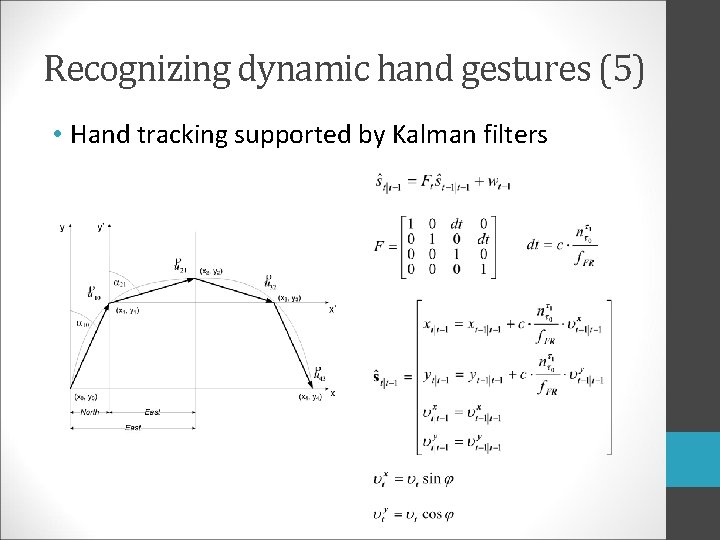

Recognizing dynamic hand gestures (5)

- Hand tracking supported by Kalman filters

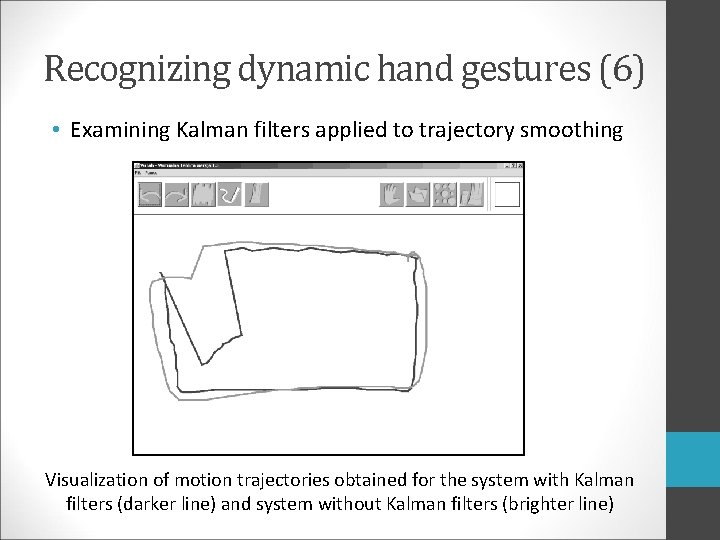

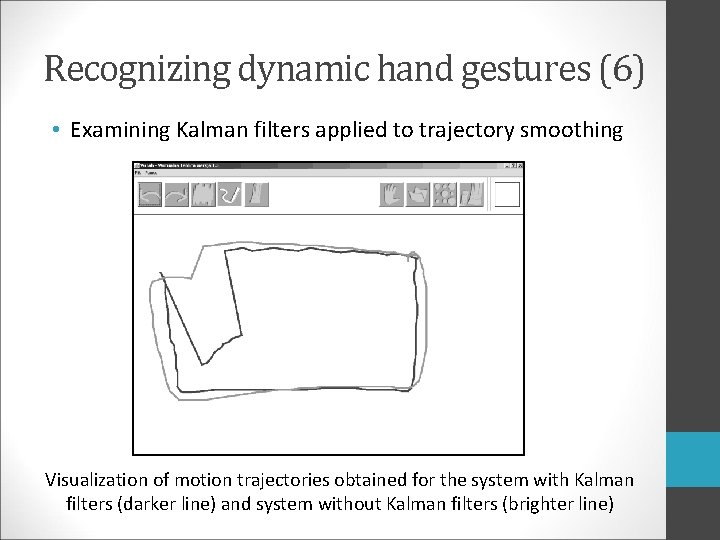

Recognizing dynamic hand gestures (6)

- Examining Kalman filters applied to trajectory smoothing

Figure: visualization of motion trajectories obtained for the system with Kalman filters (darker line) and the system without Kalman filters (brighter line).
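To give a feel for the smoothing effect, the sketch below runs a constant-velocity Kalman filter over each coordinate of a jittery hand track. The motion model, the noise variances and the synthetic trajectory are assumptions made for this example; the slides do not describe the filter configuration used in the system.

```python
class Kalman1D:
    """Constant-velocity Kalman filter for one coordinate (time step = 1 frame)."""

    def __init__(self, position, process_var=0.01, measurement_var=16.0):
        self.pos, self.vel = float(position), 0.0
        # Large initial covariance: the starting velocity is unknown.
        self.p00, self.p01, self.p10, self.p11 = 1000.0, 0.0, 0.0, 1000.0
        self.q, self.r = process_var, measurement_var

    def step(self, measurement):
        # Predict: x = F x, P = F P F^T + Q with F = [[1, 1], [0, 1]].
        self.pos += self.vel
        p00 = self.p00 + self.p01 + self.p10 + self.p11 + self.q
        p01 = self.p01 + self.p11
        p10 = self.p10 + self.p11
        p11 = self.p11 + self.q
        # Update with a position-only measurement (H = [1, 0]).
        s = p00 + self.r
        k0, k1 = p00 / s, p10 / s
        residual = measurement - self.pos
        self.pos += k0 * residual
        self.vel += k1 * residual
        self.p00, self.p01 = (1.0 - k0) * p00, (1.0 - k0) * p01
        self.p10, self.p11 = p10 - k1 * p00, p11 - k1 * p01
        return self.pos


def smooth(values):
    """Filter a sequence of scalar measurements, starting at the first one."""
    kf = Kalman1D(values[0])
    return [kf.step(v) for v in values]


def smooth_trajectory(points):
    """Smooth x and y independently and recombine them into points."""
    xs = smooth([p[0] for p in points])
    ys = smooth([p[1] for p in points])
    return list(zip(xs, ys))


def roughness(values):
    """Sum of absolute second differences; lower means a smoother curve."""
    return sum(abs(values[i + 1] - 2 * values[i] + values[i - 1])
               for i in range(1, len(values) - 1))


# Hypothetical hand track: steady motion to the right with alternating jitter.
raw = [(10 * t + 4 * (-1) ** t, 100 + 3 * (-1) ** t) for t in range(20)]
smoothed = smooth_trajectory(raw)
```

Comparing `roughness` on the raw and smoothed x coordinates gives a numeric counterpart to the darker and brighter lines in the figure: the filtered track bends less from frame to frame.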


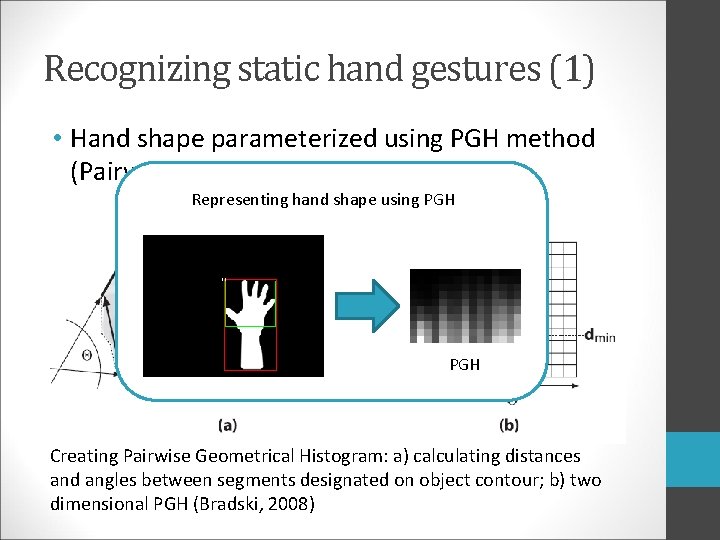

Recognizing static hand gestures (1)

- Hand shape parameterized using the PGH method (Pairwise Geometrical Histograms)

Figure: representing hand shape using PGH.

Figure: creating a Pairwise Geometrical Histogram: a) calculating distances and angles between segments designated on the object contour; b) two-dimensional PGH (Bradski, 2008).
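The idea of accumulating angle and distance relations between contour segments into a two-dimensional histogram can be sketched as below. This is a deliberately simplified variant: it bins the distance between segment midpoints, whereas the full PGH uses the minimum and maximum perpendicular distances between each segment pair. The bin counts and the square test contour are assumptions for the example.

```python
import math


def segments(contour):
    """Consecutive vertex pairs of a closed polygonal contour."""
    return [(contour[i], contour[(i + 1) % len(contour)]) for i in range(len(contour))]


def pairwise_histogram(contour, angle_bins=4, dist_bins=4):
    """Simplified pairwise histogram: rows are angle bins, columns are distance bins."""
    segs = segments(contour)
    mids = [((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0) for a, b in segs]
    dirs = [math.atan2(b[1] - a[1], b[0] - a[0]) for a, b in segs]
    # Normalise distances by the largest midpoint distance (1.0 guards against zero).
    max_dist = max(math.dist(p, q) for p in mids for q in mids) or 1.0
    hist = [[0] * dist_bins for _ in range(angle_bins)]
    for i in range(len(segs)):
        for j in range(len(segs)):
            if i == j:
                continue
            # Angle between the two segment directions, folded into [0, pi].
            angle = abs(dirs[i] - dirs[j]) % (2 * math.pi)
            angle = min(angle, 2 * math.pi - angle)
            a_bin = min(int(angle / math.pi * angle_bins), angle_bins - 1)
            d_bin = min(int(math.dist(mids[i], mids[j]) / max_dist * dist_bins),
                        dist_bins - 1)
            hist[a_bin][d_bin] += 1
    return hist


# Hypothetical contour: a 4x4 square, giving 4 segments and 12 ordered pairs.
square = [(0, 0), (4, 0), (4, 4), (0, 4)]
hist = pairwise_histogram(square)
total_pairs = sum(sum(row) for row in hist)
```

Flattening `hist` row by row would give a fixed-length feature vector of the kind a classifier can consume, regardless of how many segments the hand contour has.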

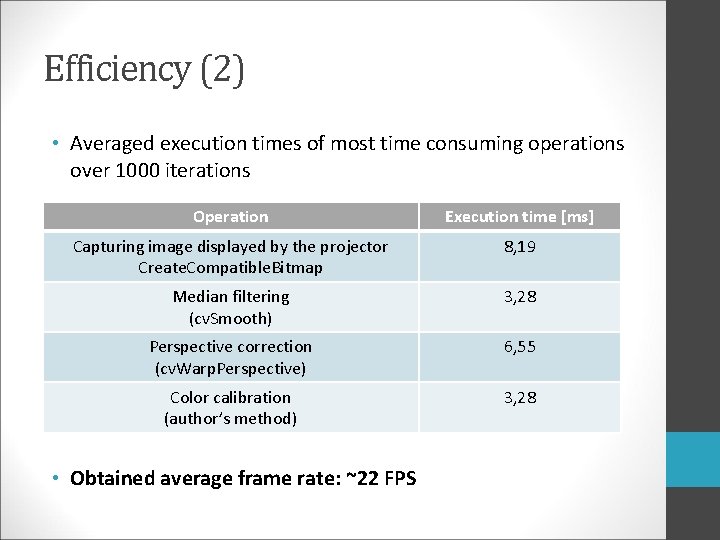

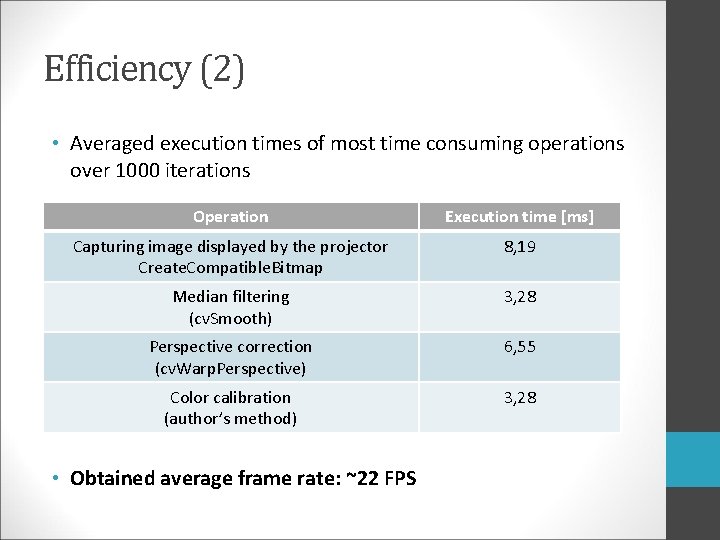

Recognizing static hand gestures (2)

- To provide reliable gesture recognition it is essential to choose the optimal classifier
  - experiments using the WEKA application
    - Random Tree
    - C4.5 (J48)
    - Naive Bayes Net
    - NNge
    - Random Forest
    - Artificial Neural Network
    - Support Vector Machines

![Recognizing static hand gestures (3): the results of classifiers examination](https://slidetodoc.com/presentation_image_h/3411005dbb3975e16641a3490a4d0952/image-28.jpg)

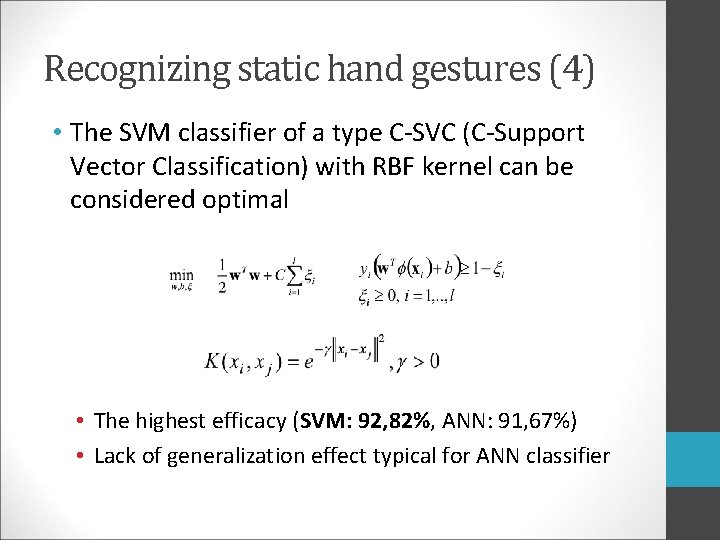

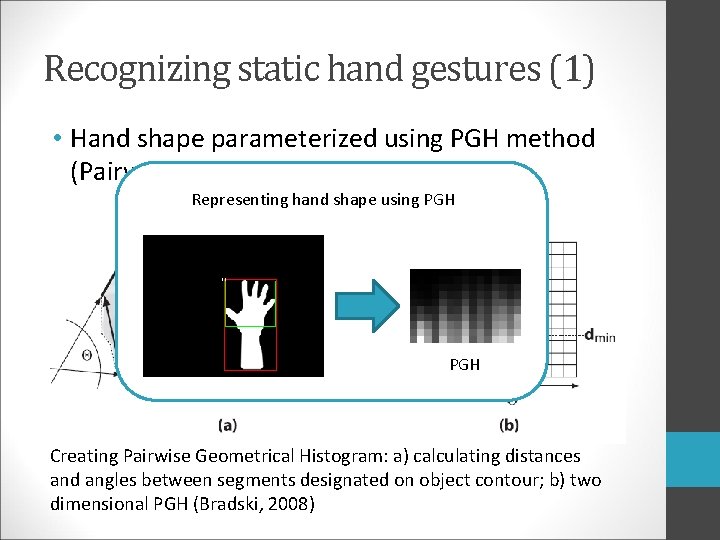

Recognizing static hand gestures (3)

The results of classifiers examination

| Classifier | E [%] | t_T [ms] | t_K [ms] | Parameters |
|---|---|---|---|---|
| Random Tree | 77.04 | 443 | 3 | k = 2^6, m = 2^-17 |
| C4.5 (J48) | 77.73 | 1342 | 4 | C = 2^-7, m = 2 |
| Naive Bayes Net | 79.49 | 303 | 73 | supervised discretization |
| NNge | 83.47 | 14234 | 8073 | g = 2^2, i = 2^4 |
| Random Forest | 89.91 | 59644 | 722 | i = 2^9, k = 2^4, unlimited depth |
| Artificial Neural Network | 91.67 | 1458 | 187 | l = 2^-3, m = 2^-5, e = 2^3, one hidden layer, 4 nodes |
| SVM (LibSVM) | 92.82 | 2508 | 1159 | γ = 2^-11, C = 2^11, RBF kernel |

t_T: average training time, t_K: average validation time

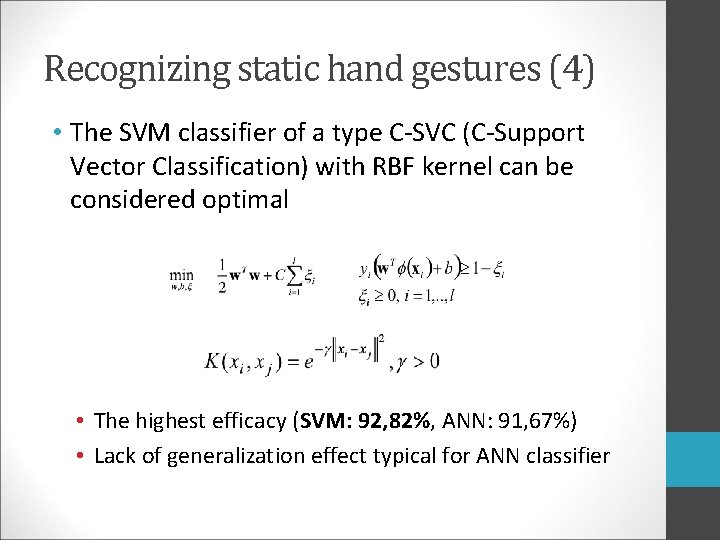

Recognizing static hand gestures (4)

- The SVM classifier of type C-SVC (C-Support Vector Classification) with an RBF kernel can be considered optimal
  - The highest efficacy (SVM: 92.82%, ANN: 91.67%)
  - Lack of the generalization effect typical of the ANN classifier


Efficiency (1)

- Computer parameters:
  - Intel Core 2 Duo P7350, 2.0 GHz
  - 400 MHz DDR2 RAM, 6:6:6:18 cycle latency
  - Windows Vista Business 32-bit
- Screen resolution: 1024 x 768 px
- Processing frames of size 320 x 240 px

Efficiency (2)

- Averaged execution times of the most time-consuming operations over 1000 iterations

| Operation | Execution time [ms] |
|---|---|
| Capturing image displayed by the projector (CreateCompatibleBitmap) | 8.19 |
| Median filtering (cvSmooth) | 3.28 |
| Perspective correction (cvWarpPerspective) | 6.55 |
| Color calibration (author's method) | 3.28 |

- Obtained average frame rate: ~22 FPS
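For orientation, the execution times listed above can be set against the per-frame time budget implied by the reported frame rate. The short calculation below is only a reading aid; it assumes the four operations run once per frame and says nothing about the steps that were not timed.

```python
# Execution times reported on the slide, in milliseconds.
times_ms = {
    "capture of displayed image (CreateCompatibleBitmap)": 8.19,
    "median filtering (cvSmooth)": 3.28,
    "perspective correction (cvWarpPerspective)": 6.55,
    "color calibration (author's method)": 3.28,
}

frame_rate = 22.0                        # reported average frame rate [FPS]
frame_budget_ms = 1000.0 / frame_rate    # time available per frame
listed_total_ms = sum(times_ms.values())
share = listed_total_ms / frame_budget_ms

print(f"listed operations: {listed_total_ms:.2f} ms")
print(f"frame budget:      {frame_budget_ms:.2f} ms")
print(f"share of budget:   {share:.0%}")
```

The four listed operations add up to about 21.3 ms of the roughly 45.5 ms available per frame at 22 FPS, so a little under half of each frame period goes to these steps.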


Virtual Whiteboard

Gesture Mixer

Thank you for your attention.