Dynamic and Scalable Distributed Metadata Management in Gluster

Dynamic and Scalable Distributed Metadata Management in Gluster File System
Huang Qiulan (huangql@ihep.ac.cn)
Computing Center, Institute of High Energy Physics, Chinese Academy of Sciences

Topics
• Introduction to Gluster
• Gluster in IHEP
• Deployment, performance and issues
• Design and implementation
• What have we done?
• Experiment results
• Summary
HUANG QIULAN/CC/IHEP

Gluster Introduction

Gluster Overview
• Gluster is an open-source distributed file system
• Linear scale-out, supporting several petabytes and thousands of client connections
  ▪ No centralized metadata structure
  ▪ Elastic hashing algorithm to distribute data efficiently
  ▪ Fully distributed architecture
• Global namespace with POSIX support
• High reliability
  ▪ Data replication
  ▪ Data self-heal
• Design and implementation based on a stackable, modular user-space architecture

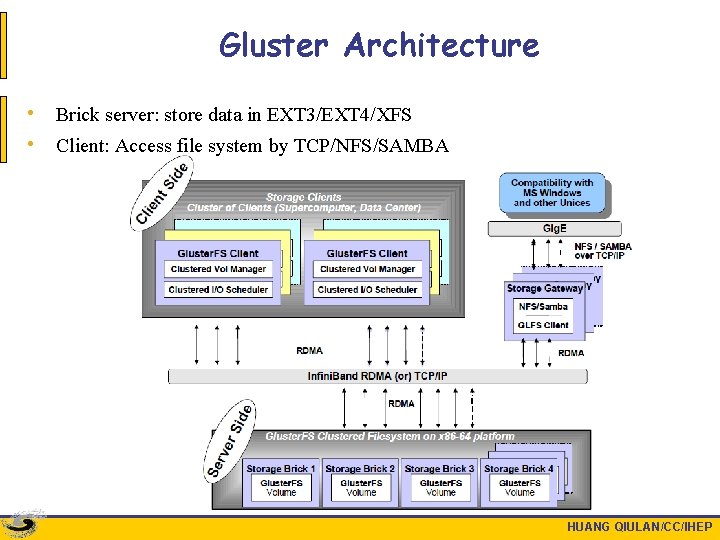

Gluster Architecture
• Brick server: stores data on ext3/ext4/XFS
• Client: accesses the file system over TCP/NFS/Samba

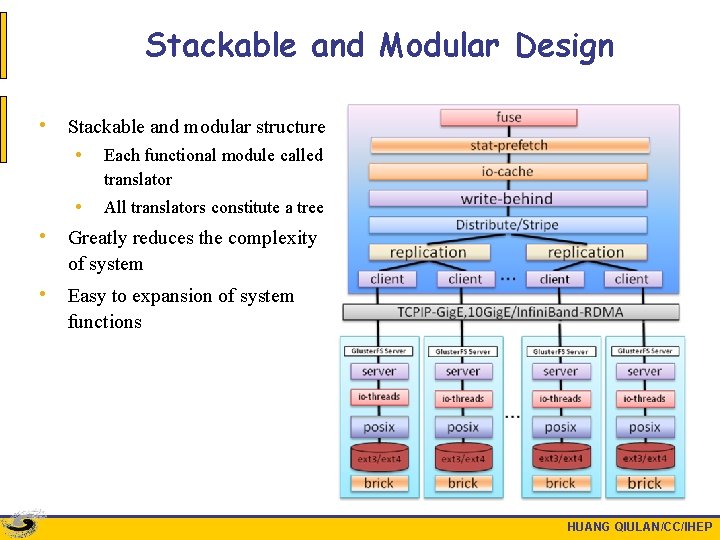

Stackable and Modular Design
• Stackable and modular structure
• Each functional module is called a translator
• All translators together form a tree
• Greatly reduces the complexity of the system
• Makes it easy to extend system functions
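The translator tree can be pictured with a small toy model. This is only a sketch under stated assumptions: the `Translator` and `Distribute` classes and the `lookup` method are hypothetical Python stand-ins, not Gluster's actual translator API, and the CRC32 hash is chosen only because it is deterministic.

```python
import zlib


class Translator:
    """Toy translator: records its name, then forwards to its first subvolume."""

    def __init__(self, name, subvolumes=()):
        self.name = name
        self.subvolumes = list(subvolumes)

    def lookup(self, path, trace):
        trace.append(self.name)
        if not self.subvolumes:
            # Leaf of the tree: pretend this node is the one that answers.
            return f"{self.name}:{path}"
        return self.subvolumes[0].lookup(path, trace)


class Distribute(Translator):
    """Toy distribute translator: picks one subvolume by hashing the path."""

    def lookup(self, path, trace):
        trace.append(self.name)
        index = zlib.crc32(path.encode("utf-8")) % len(self.subvolumes)
        return self.subvolumes[index].lookup(path, trace)


def build_stack():
    """Assemble a small tree: io-stats -> stat-prefetch -> distribute -> 3 clients."""
    clients = [Translator(f"testvol-client-{i}") for i in range(3)]
    dht = Distribute("testvol-dht", clients)
    prefetch = Translator("testvol-stat-prefetch", [dht])
    return Translator("testvol", [prefetch])


if __name__ == "__main__":
    trace = []
    answer = build_stack().lookup("/ybjgfs/argo/public", trace)
    print(" -> ".join(trace))  # the path the request took down the tree
    print(answer)
```

Adding a function in this model means inserting one more node into the tree, which is the property the slide credits for easy extension.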

Gluster in IHEP

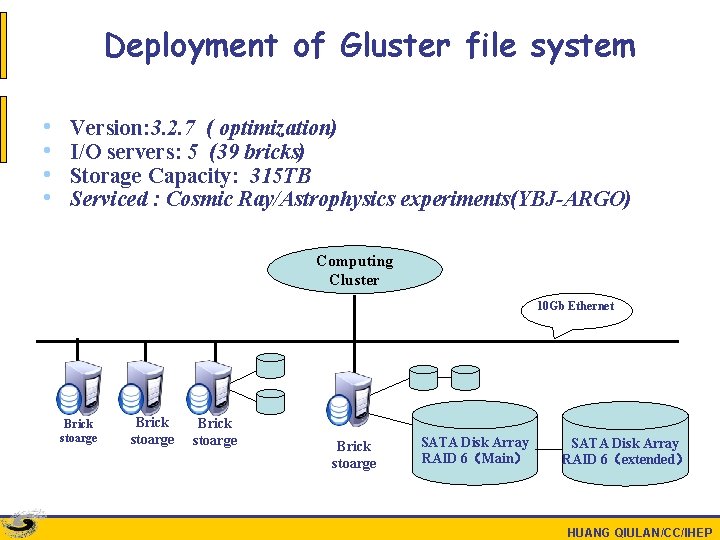

Deployment of Gluster file system
• Version: 3.2.7 (optimized)
• I/O servers: 5 (39 bricks)
• Storage capacity: 315 TB
• Serves: Cosmic Ray/Astrophysics experiments (YBJ-ARGO)
Diagram labels: Computing Cluster; 10 Gb Ethernet; Brick storage; SATA Disk Array RAID 6 (main); SATA Disk Array RAID 6 (extended)

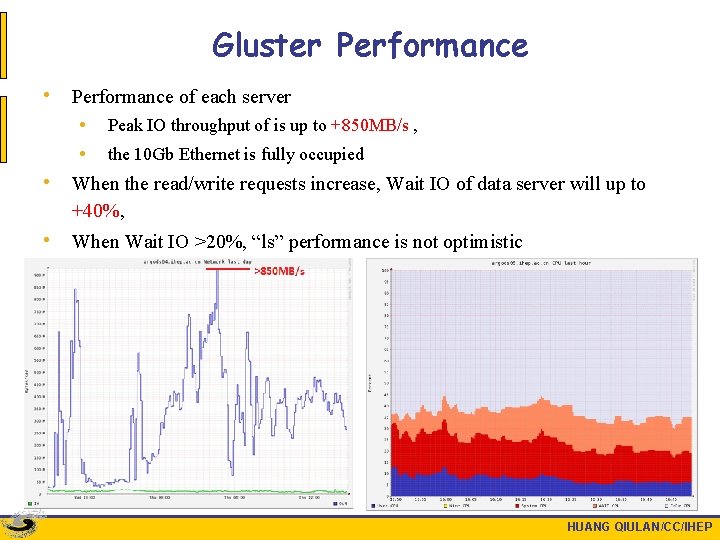

Gluster Performance
• Performance of each server
• Peak I/O throughput reaches over 850 MB/s, and the 10 Gb Ethernet link is fully occupied
• When read/write requests increase, the I/O wait of a data server rises to over 40%
• When I/O wait exceeds 20%, “ls” performance becomes poor

Gluster Issues
• Metadata problems
• When a data server is busy, “ls” performance degrades further
• As the number of bricks increases, “mkdir” and “rmdir” performance gets worse
• The directory tree becomes inconsistent
• When one brick has problems, client requests get stuck
• Ownership of link files changes to root:root
• Most of the problems are metadata-related
• What can we do for Gluster?

Design and Implementation

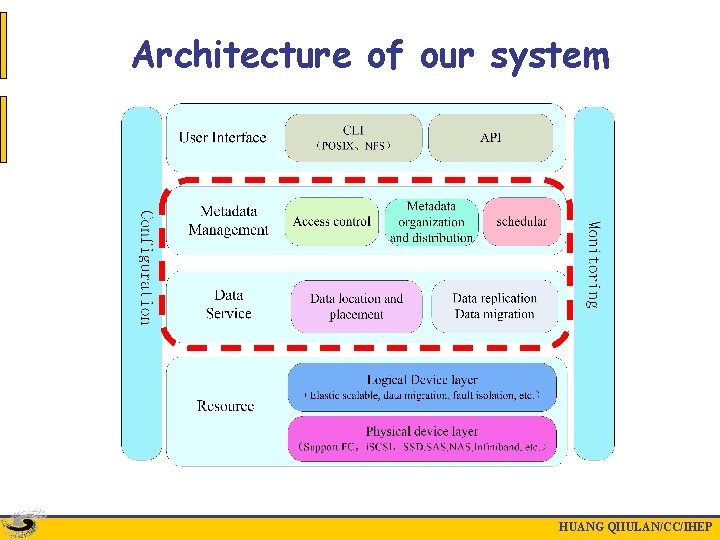

Architecture of our system

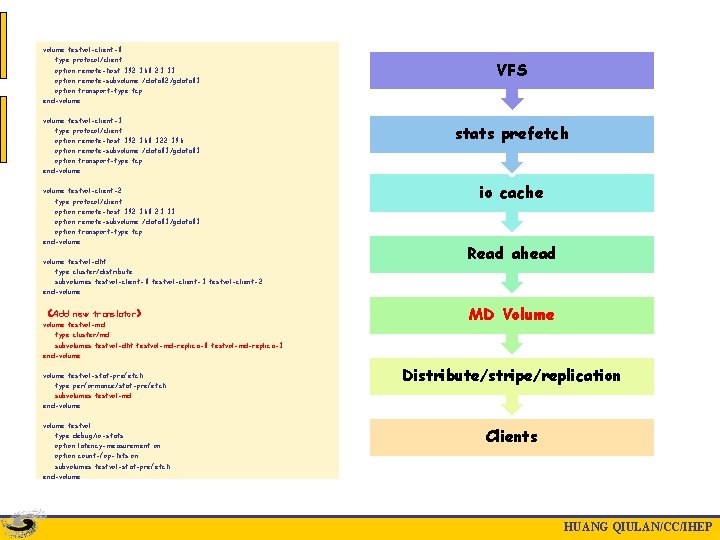

volume testvol-client-0
    type protocol/client
    option remote-host 192.168.23.31
    option remote-subvolume /data02/gdata01
    option transport-type tcp
end-volume

volume testvol-client-1
    type protocol/client
    option remote-host 192.168.122.196
    option remote-subvolume /data03/gdata01
    option transport-type tcp
end-volume

volume testvol-client-2
    type protocol/client
    option remote-host 192.168.23.31
    option remote-subvolume /data03/gdata01
    option transport-type tcp
end-volume

volume testvol-dht
    type cluster/distribute
    subvolumes testvol-client-0 testvol-client-1 testvol-client-2
end-volume

# New translator added
volume testvol-md
    type cluster/md
    subvolumes testvol-dht testvol-md-replica-0 testvol-md-replica-1
end-volume

volume testvol-stat-prefetch
    type performance/stat-prefetch
    subvolumes testvol-md
end-volume

volume testvol
    type debug/io-stats
    option latency-measurement on
    option count-fop-hits on
    subvolumes testvol-stat-prefetch
end-volume

Translator stack (diagram labels, top to bottom): VFS; stat-prefetch; io-cache; read-ahead; MD; Volume; Distribute/stripe/replication; Clients

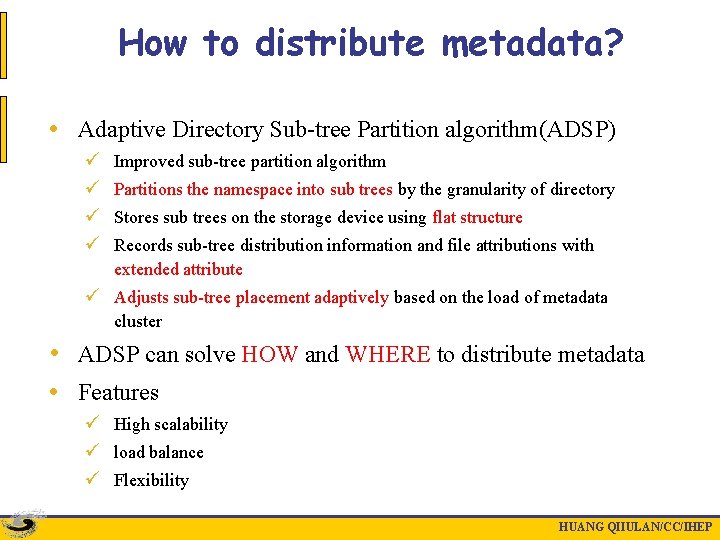

How to distribute metadata?
• Adaptive Directory Sub-tree Partition algorithm (ADSP)
  ✓ Improved sub-tree partition algorithm
  ✓ Partitions the namespace into sub-trees at directory granularity
  ✓ Stores sub-trees on the storage device using a flat structure
  ✓ Records sub-tree distribution information and file attributes in extended attributes
  ✓ Adjusts sub-tree placement adaptively based on the load of the metadata cluster
• ADSP addresses both HOW and WHERE to distribute metadata
• Features
  ✓ High scalability
  ✓ Load balance
  ✓ Flexibility
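The slides do not give ADSP's placement or migration rules, so the following is only a minimal sketch of the general idea (directory-granularity sub-trees, load-based placement, adaptive adjustment). The `SubtreePlacer` class, the operation-count load metric, the `threshold` parameter and the "at most half the load gap" migration rule are all assumptions made for illustration, not the author's algorithm.

```python
from collections import defaultdict


class SubtreePlacer:
    """Hypothetical placer: assigns directory sub-trees to metadata servers by load."""

    def __init__(self, servers):
        self.load = {s: 0 for s in servers}  # operations seen per metadata server
        self.owner = {}                      # sub-tree root directory -> server
        self.ops = defaultdict(int)          # operations seen per sub-tree

    def place(self, directory):
        # A new sub-tree goes to the currently least-loaded server.
        if directory not in self.owner:
            self.owner[directory] = min(self.load, key=self.load.get)
        return self.owner[directory]

    def record(self, directory, n=1):
        # Account n metadata operations against the sub-tree and its server.
        server = self.place(directory)
        self.ops[directory] += n
        self.load[server] += n

    def rebalance(self, threshold=2.0):
        """Move one sub-tree from the hottest to the coolest server, if worthwhile."""
        hot = max(self.load, key=self.load.get)
        cold = min(self.load, key=self.load.get)
        if self.load[hot] <= threshold * max(self.load[cold], 1):
            return None  # load is balanced enough
        gap = self.load[hot] - self.load[cold]
        # Only sub-trees no larger than half the gap reduce the imbalance.
        movable = [d for d, s in self.owner.items()
                   if s == hot and self.ops[d] <= gap / 2]
        if not movable:
            return None
        victim = max(movable, key=lambda d: self.ops[d])
        self.owner[victim] = cold
        self.load[hot] -= self.ops[victim]
        self.load[cold] += self.ops[victim]
        return victim, hot, cold


if __name__ == "__main__":
    placer = SubtreePlacer(["md0", "md1"])
    for d in ("/ybjgfs/argo/public", "/ybjgfs/argo/user", "/ybjgfs/asgamma"):
        placer.record(d)
    placer.record("/ybjgfs/argo/public", 9)  # one sub-tree becomes hot
    print(placer.rebalance())
    print(placer.load)
```

In this toy run the hot sub-tree stays where it is and a small neighbour migrates away from it, since moving the hot sub-tree itself would only shift the imbalance to the other server.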

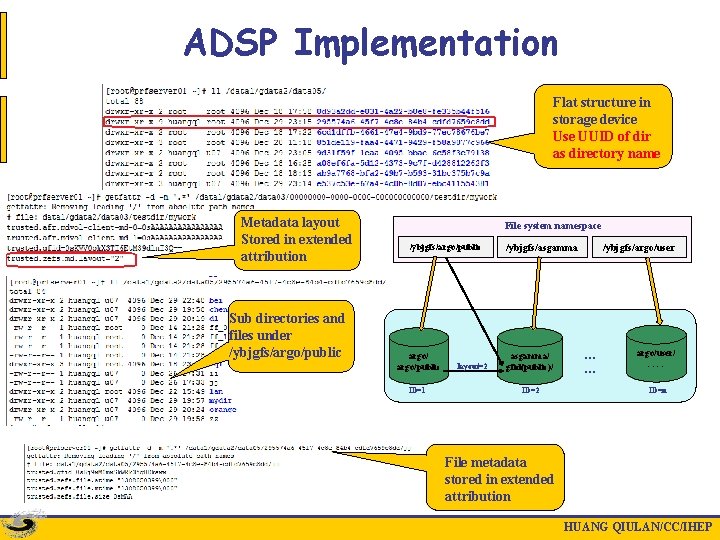

ADSP Implementation
• Flat structure on the storage device
• The UUID of a directory is used as its directory name
• Metadata layout is stored in extended attributes
• File metadata is stored in extended attributes
Diagram labels: file system namespace (/ybjgfs/argo/public, /ybjgfs/asgamma/, /ybjgfs/argo/user, …); storage device entries (gfid(public)/, argo/user/, ……); attributes (ID=1, layout=2, ID=2, …, ID=n); subdirectories and files under /ybjgfs/argo/public
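A flat, UUID-named layout of this kind can be modelled in a few lines. This sketch keeps everything in memory: the `FlatStore` class is hypothetical, the attribute keys `user.path` and `user.layout` are invented names, and a plain dictionary stands in for real extended attributes.

```python
import uuid


class FlatStore:
    """Hypothetical in-memory model of a flat, UUID-named directory store."""

    def __init__(self):
        self.dirs = {}     # UUID string -> {"xattr": {...}, "entries": {...}}
        self.by_path = {}  # namespace path -> UUID string

    def mkdir(self, path, layout):
        # Every directory, however deep in the namespace, becomes one
        # top-level entry named by a fresh UUID.
        gfid = str(uuid.uuid4())
        self.dirs[gfid] = {
            "xattr": {"user.path": path, "user.layout": layout},
            "entries": {},
        }
        self.by_path[path] = gfid
        return gfid

    def create(self, dir_path, name, **attrs):
        # File metadata is kept as attributes of the entry, not in an inode tree.
        self.dirs[self.by_path[dir_path]]["entries"][name] = dict(attrs)

    def listdir(self, path):
        return sorted(self.dirs[self.by_path[path]]["entries"])


if __name__ == "__main__":
    store = FlatStore()
    public = store.mkdir("/ybjgfs/argo/public", layout=2)
    store.mkdir("/ybjgfs/argo/user", layout=1)
    store.create("/ybjgfs/argo/public", "run001.dat", size=4096, mode=0o644)
    print(public, store.dirs[public]["xattr"])
    print(store.listdir("/ybjgfs/argo/public"))
```

Because the store is flat, resolving a deep path costs one dictionary lookup in this model rather than one step per path component.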

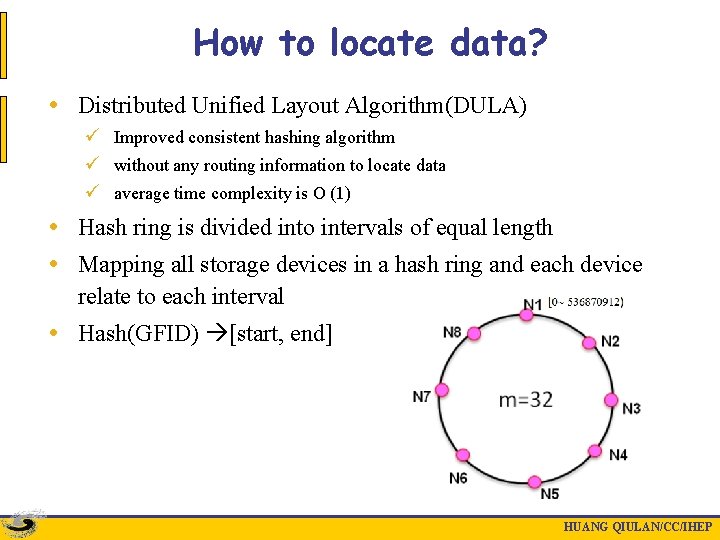

How to locate data?
• Distributed Unified Layout Algorithm (DULA)
  ✓ Improved consistent hashing algorithm
  ✓ Locates data without any routing information
  ✓ Average time complexity is O(1)
• The hash ring is divided into intervals of equal length
• All storage devices are mapped onto the hash ring, and each device corresponds to one interval
• Hash(GFID) → [start, end]
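The equal-interval lookup can be sketched as follows. The slides name neither the hash function nor the ring size, so CRC32 over the GFID bytes and a 32-bit ring are assumptions here, and `build_ring`, `ring_hash` and `locate` are hypothetical helper names.

```python
import uuid
import zlib

RING_SIZE = 2 ** 32  # assumed ring size: the range of an unsigned 32-bit hash


def build_ring(devices):
    """Split the ring into len(devices) equal intervals, one per device."""
    width = RING_SIZE // len(devices)
    ring = []
    for i, device in enumerate(devices):
        start = i * width
        # The last interval absorbs the remainder so the ring is fully covered.
        end = RING_SIZE - 1 if i == len(devices) - 1 else start + width - 1
        ring.append((start, end, device))
    return ring


def ring_hash(gfid):
    """Map a GFID (a uuid.UUID) to a point on the ring."""
    return zlib.crc32(gfid.bytes)


def locate(gfid, ring):
    """Find the owning device with one division, no search and no routing table."""
    width = RING_SIZE // len(ring)
    index = min(ring_hash(gfid) // width, len(ring) - 1)
    return ring[index]


if __name__ == "__main__":
    ring = build_ring(["brick-0", "brick-1", "brick-2"])
    gfid = uuid.uuid4()
    start, end, device = locate(gfid, ring)
    print(f"Hash({gfid}) = {ring_hash(gfid)} -> [{start}, {end}] on {device}")
```

Because the intervals have equal length, the interval index is a single integer division, which is where the O(1) average lookup comes from in this sketch.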

Experiment results

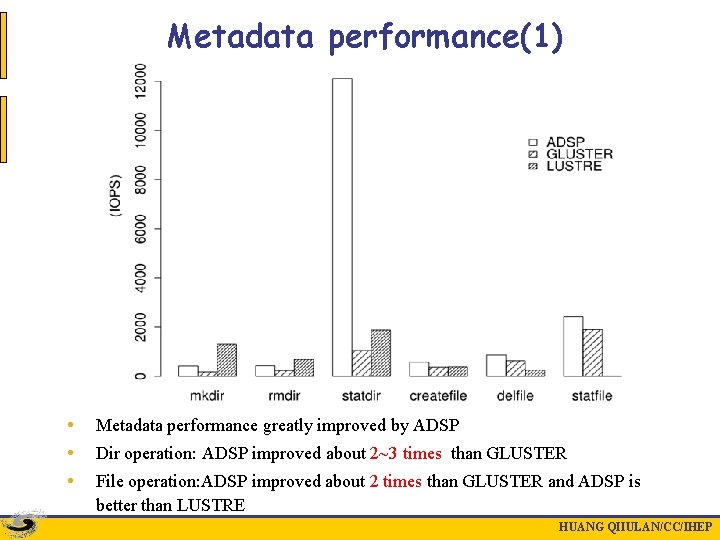

Metadata performance(1)
• Metadata performance is greatly improved by ADSP
• Directory operations: ADSP delivers about 2 to 3 times the performance of Gluster
• File operations: ADSP delivers about 2 times the performance of Gluster, and also outperforms Lustre

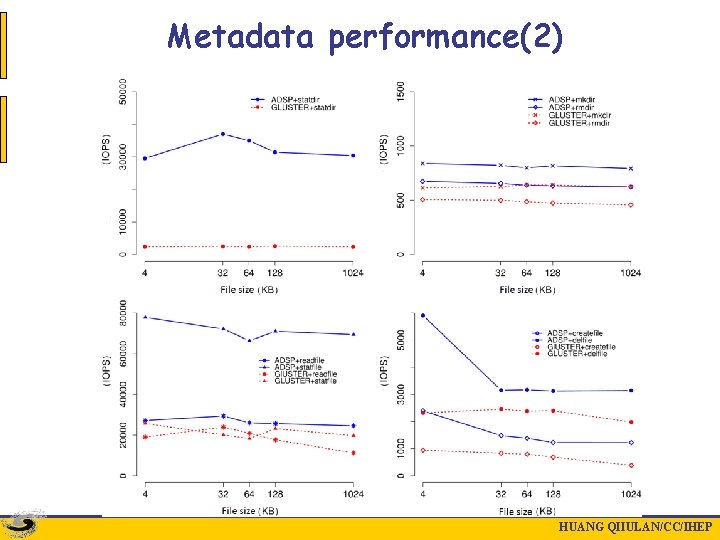

Metadata performance(2)

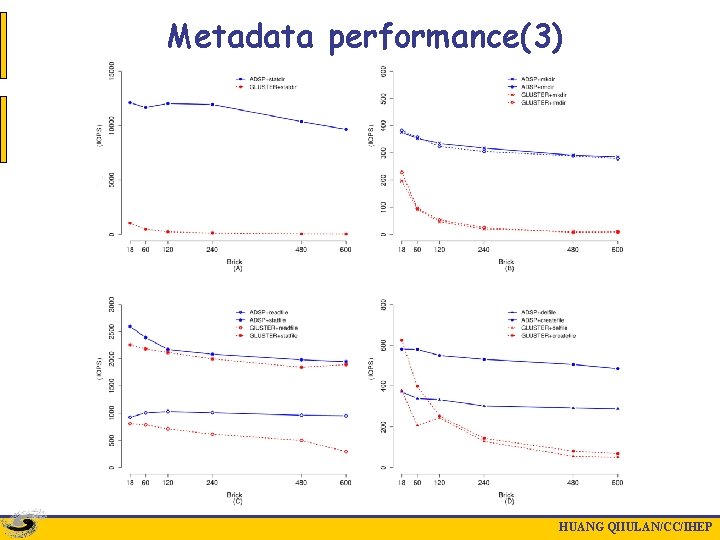

Metadata performance(3)

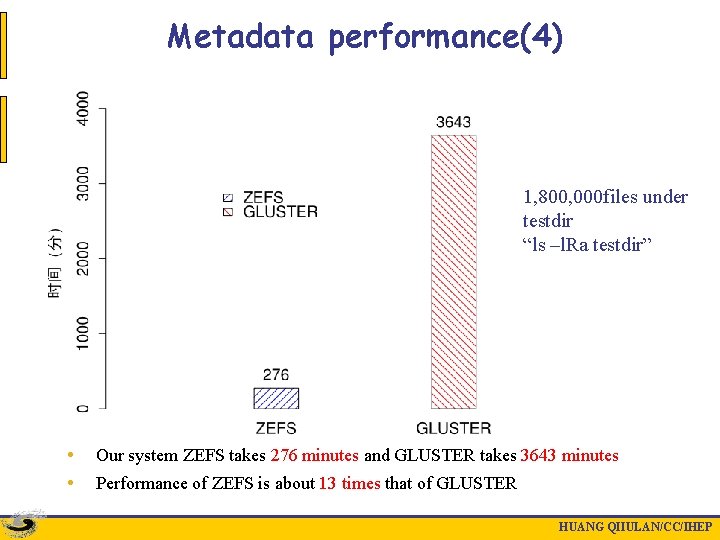

Metadata performance(4)
• 1,800,000 files under testdir; test command: “ls -lRa testdir”
• Our system, ZEFS, takes 276 minutes; Gluster takes 3643 minutes
• The performance of ZEFS is about 13 times that of Gluster
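The "about 13 times" figure follows directly from the two run times on the slide. The short calculation below also derives an average per-file listing time, which is an extra figure not stated in the slides and assumes the cost is spread evenly over the 1,800,000 files.

```python
FILES = 1_800_000
ZEFS_MINUTES = 276
GLUSTER_MINUTES = 3643

# Ratio of total run times: about 13.2
speedup = GLUSTER_MINUTES / ZEFS_MINUTES

# Average time per listed file, in milliseconds (derived, not measured)
zefs_ms_per_file = ZEFS_MINUTES * 60 * 1000 / FILES
gluster_ms_per_file = GLUSTER_MINUTES * 60 * 1000 / FILES

print(f"speedup: {speedup:.1f}x")
print(f"ZEFS: {zefs_ms_per_file:.1f} ms/file, Gluster: {gluster_ms_per_file:.1f} ms/file")
```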

Summary
• Extended the Gluster framework with a metadata module
• The ADSP algorithm is responsible for metadata distribution and organization
• The DULA algorithm locates data in the cluster
• Metadata performance is greatly improved
  ▪ Single client, single process: directory operations reach about 2 to 3 times the performance of Gluster, file operations about 2 times, and our system also outperforms Lustre
  ▪ Multiple clients, multiple processes: under highly concurrent access to small files, the performance of our system is about 3 to 4 times that of Gluster. File size has little effect on the performance of directory operations. Overall, performance decreases as file size grows, but the trend is not pronounced.
  ▪ Better scalability than Gluster

Thank you
Questions?
Author email: huangql@ihep.ac.cn
