Dual-addressing Memory Architecture for Two-dimensional Memory Access Patterns


Yen-Hao Chen, Yi-Yu Liu
Dept. of Computer Science and Engineering, Yuan Ze University, Chungli, Taiwan, R.O.C.

![Outline](https://slidetodoc.com/presentation_image/d3928adaf8baf3ec453100a9c2546f66/image-2.jpg)

Outline
• Introduction
  - DRAM
• Previous work
  - Non-linear data placement [7][8]
  - Stride pre-fetching [12]
• Dual-addressing (DA) memory organization
  - DA DRAM architecture
  - Full system hierarchy with DA
• Cache coherence
  - WURF
• Experimental results
• DA memory optimizations
  - Data granularity and indexing
  - Virtual DA memory
• Conclusions

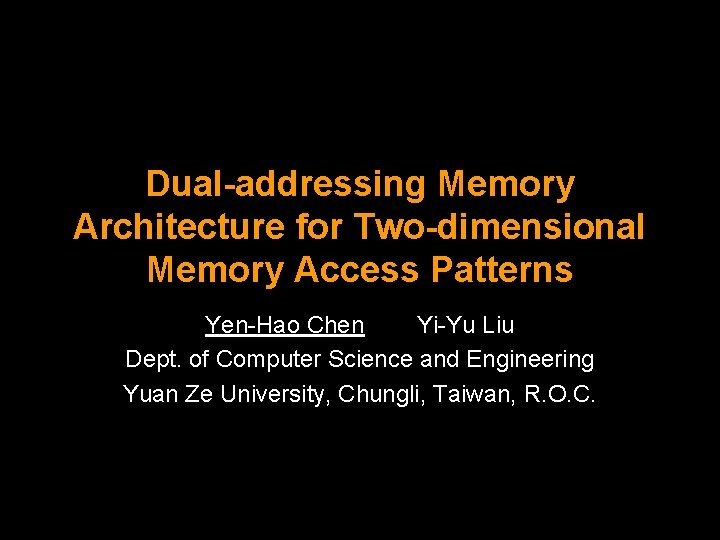

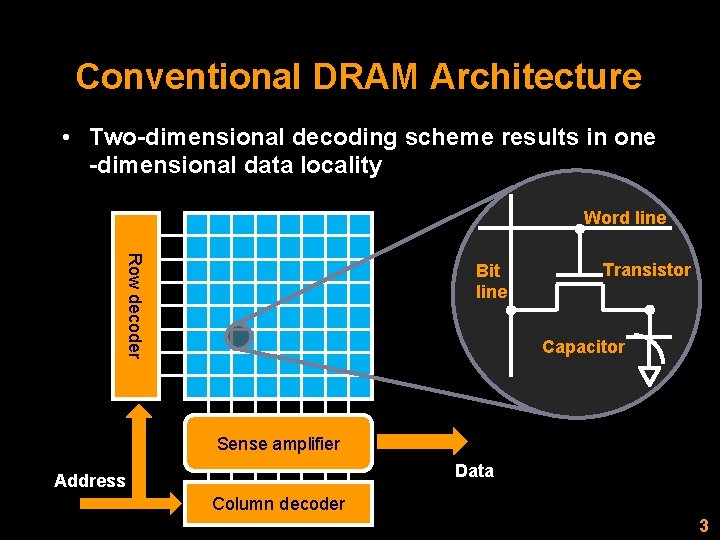

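Conventional DRAM Architecture
• The two-dimensional decoding scheme (row decoder and column decoder) results in one-dimensional data locality
[Figure: DRAM array of one-transistor, one-capacitor cells on word lines and bit lines, with row decoder, column decoder, sense amplifiers, address input, and data output]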
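A toy open-row model makes the one-dimensional locality concrete. This is a minimal sketch under assumed parameters, not the paper's simulator: one bank, an open-page policy, and a hypothetical N × N matrix stored row-major so that one matrix row fills one DRAM row. Walking the matrix by rows keeps hitting the open row; walking it by columns activates a new row on every access.

```python
def row_buffer_hits(addresses, row_size):
    """Count accesses whose DRAM row is already open in the row buffer.

    Assumptions (hypothetical): one bank, open-page policy; the row decoder
    selects addr // row_size and the column decoder selects addr % row_size.
    """
    open_row = None
    hits = 0
    for addr in addresses:
        row = addr // row_size
        if row == open_row:
            hits += 1
        else:
            open_row = row  # activation: the whole row is latched in the sense amplifiers
    return hits


N = 8  # hypothetical N x N matrix, one matrix row per DRAM row (row_size = N)
row_walk = [r * N + c for r in range(N) for c in range(N)]
col_walk = [r * N + c for c in range(N) for r in range(N)]
print(row_buffer_hits(row_walk, N))  # 56: only the first access to each row misses
print(row_buffer_hits(col_walk, N))  # 0: every access opens a different row
```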

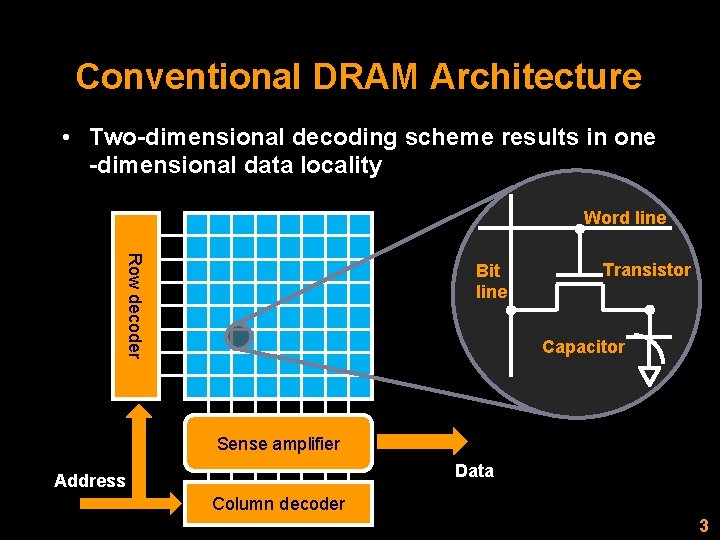

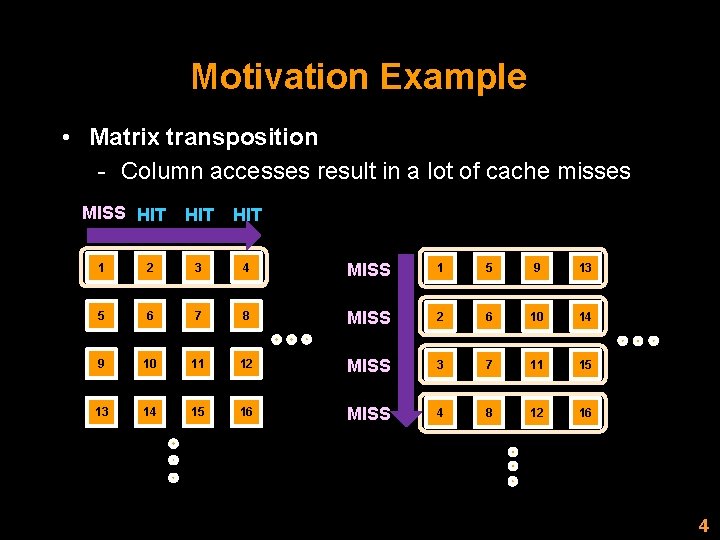

Motivation Example
• Matrix transposition
  - Column accesses result in many cache misses
[Figure: a 4 × 4 matrix (elements 1–16) and its transpose; row-wise accesses (1 2 3 4) miss once and then hit, while column-wise accesses (1 5 9 13, 2 6 10 14, 3 7 11 15, 4 8 12 16) miss repeatedly]
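The transposition effect can be reproduced with a small cache model. The sketch below assumes a fully associative LRU cache and hypothetical sizes (a 64 × 64 matrix of words stored row-major, 8-word lines, a 32-line cache); it is illustrative only and does not model the paper's cache configuration. Reading the matrix by rows misses once per line, while reading it by columns touches 64 different lines per column, so every line is evicted before it can be reused.

```python
from collections import OrderedDict


def count_misses(addresses, line_words, num_lines):
    """Fully associative LRU cache (hypothetical model); returns the miss count."""
    cache = OrderedDict()
    misses = 0
    for addr in addresses:
        line = addr // line_words
        if line in cache:
            cache.move_to_end(line)  # mark as most recently used
        else:
            misses += 1
            cache[line] = True
            if len(cache) > num_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return misses


N, LINE, LINES = 64, 8, 32  # hypothetical: 64x64 words, 8-word lines, 32-line cache
row_reads = [r * N + c for r in range(N) for c in range(N)]  # matrix read by rows
col_reads = [r * N + c for c in range(N) for r in range(N)]  # same matrix read by columns
print(count_misses(row_reads, LINE, LINES))  # 512
print(count_misses(col_reads, LINE, LINES))  # 4096
```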

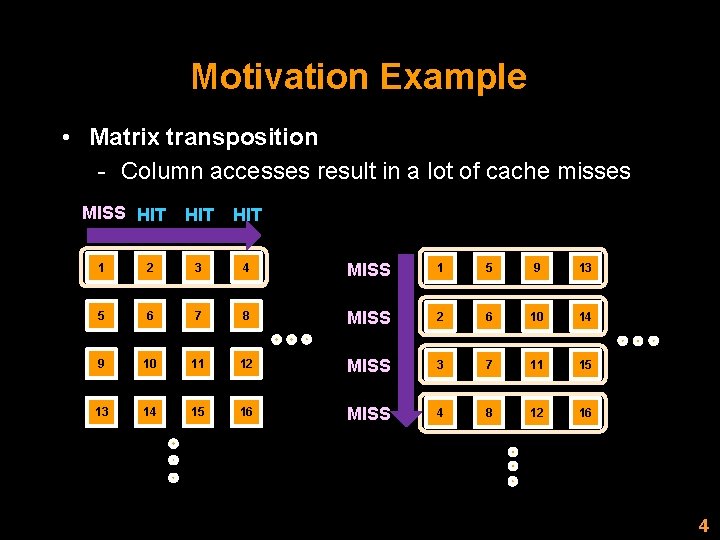

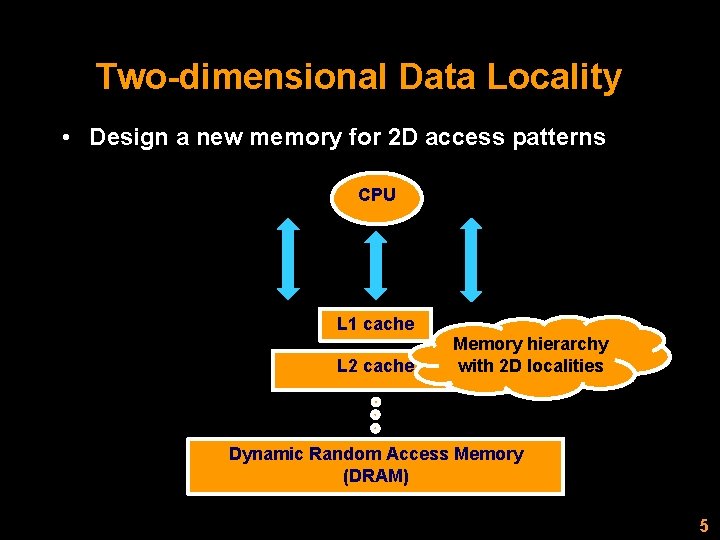

Two-dimensional Data Locality
• Design a new memory for 2D access patterns
[Figure: a memory hierarchy with 2D localities: CPU, L1 cache, L2 cache, and DRAM]


![Non-linear Data Placement (Morton)](https://slidetodoc.com/presentation_image/d3928adaf8baf3ec453100a9c2546f66/image-7.jpg)

Non-linear Data Placement (Morton) [7][8]
• Change the sequence of data placement
• Drawback: expensive index calculations
[Figure: a 4 × 4 matrix stored in Morton (Z) order; the storage sequence is
 1  2  5  6
 3  4  7  8
 9 10 13 14
11 12 15 16]
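Morton placement interleaves the bits of the row and column indices. The sketch below is a straightforward bit-by-bit version, assuming power-of-two dimensions (the function name is hypothetical); it reproduces the 4 × 4 placement above (printed 1-based) and shows where the index cost comes from: a loop over every index bit, where row-major order needs only `row * N + col`.

```python
def morton_index(row: int, col: int, bits: int) -> int:
    """Z-order index: column bit i goes to bit 2i, row bit i goes to bit 2i+1."""
    index = 0
    for i in range(bits):
        index |= ((col >> i) & 1) << (2 * i)
        index |= ((row >> i) & 1) << (2 * i + 1)
    return index


# 4 x 4 matrix (2 index bits per dimension), printed 1-based like the figure
for r in range(4):
    print([morton_index(r, c, 2) + 1 for c in range(4)])
# [1, 2, 5, 6]
# [3, 4, 7, 8]
# [9, 10, 13, 14]
# [11, 12, 15, 16]
```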

![Stride Pre-fetching](https://slidetodoc.com/presentation_image/d3928adaf8baf3ec453100a9c2546f66/image-8.jpg)

Stride Pre-fetching [12]
• Reference prediction table (RPT), indexed by the PC; each entry holds an instruction tag, the previous address, a stride, and a state
• Prefetching address = previous address + stride
  - Misprediction results in cache pollution
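A reference prediction table can be sketched as a dictionary keyed by the load PC. This is a simplified, hypothetical version: the per-entry state is reduced to a single "steady" flag (same stride seen twice in a row) rather than the full state machine of [12], and the class and field names are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class RptEntry:
    prev_addr: int
    stride: int = 0
    steady: bool = False  # simplified state: same stride observed twice in a row


class ReferencePredictionTable:
    """Simplified RPT: one entry per load PC (the instruction tag)."""

    def __init__(self) -> None:
        self.entries: Dict[int, RptEntry] = {}

    def access(self, pc: int, addr: int) -> Optional[int]:
        """Record a load and return the address to prefetch, if any."""
        entry = self.entries.get(pc)
        if entry is None:
            self.entries[pc] = RptEntry(prev_addr=addr)
            return None
        stride = addr - entry.prev_addr
        entry.steady = stride == entry.stride
        entry.stride = stride
        entry.prev_addr = addr
        if entry.steady and stride != 0:
            return addr + stride  # prefetching address = (updated) previous address + stride
        return None


rpt = ReferencePredictionTable()
# Hypothetical column walk over a row-major matrix: 4-byte words, 8 words per row
predictions = [rpt.access(pc=0x400, addr=0x1000 + r * 32) for r in range(4)]
print(predictions)  # [None, None, 4192, 4224]
```

The last prediction of a loop fetches a line that is never used, which is the kind of misprediction that pollutes the cache.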


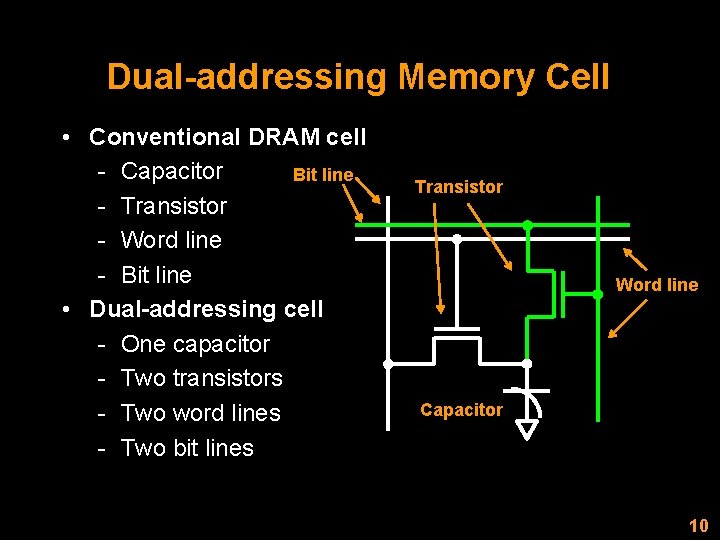

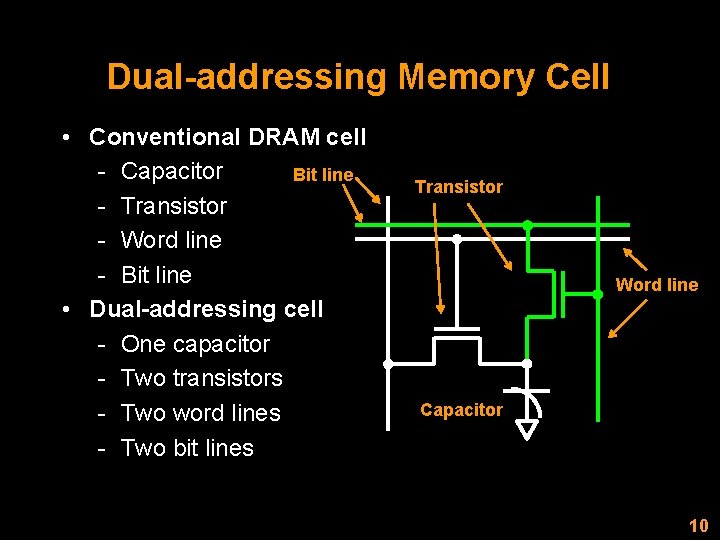

Dual-addressing Memory Cell
• Conventional DRAM cell
  - Capacitor
  - Transistor
  - Word line
  - Bit line
• Dual-addressing cell
  - One capacitor
  - Two transistors
  - Two word lines
  - Two bit lines

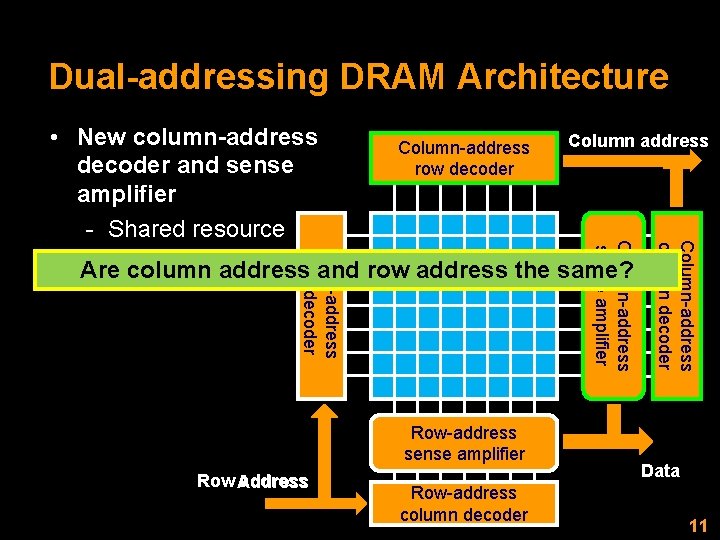

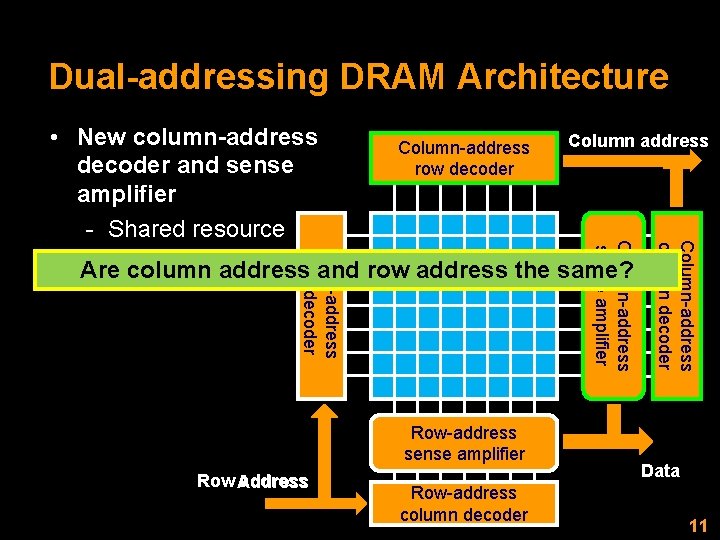

Dual-addressing DRAM Architecture
• New column-address decoders and sense amplifiers
  - Shared resources in a square-shaped array
• Are the column address and the row address the same?
[Figure: DA DRAM with a row-address row decoder, row-address column decoder, and row-address sense amplifiers, plus a column-address row decoder, column-address column decoder, and column-address sense amplifiers, with address input and data output]

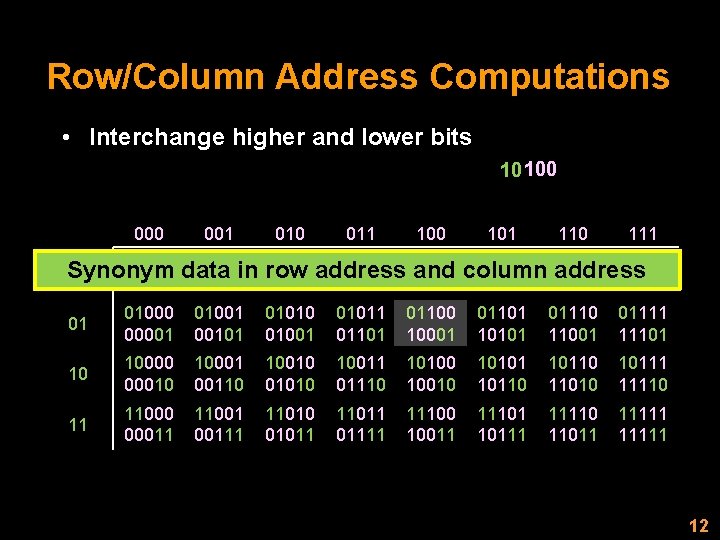

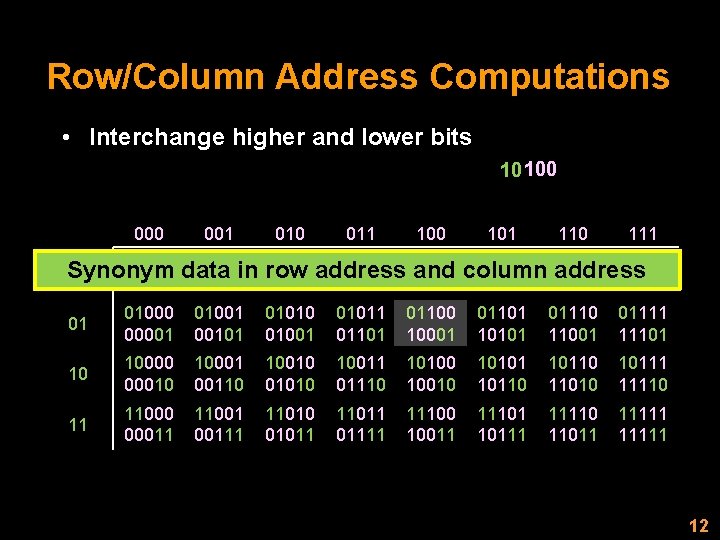

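Row/Column Address Computations
• Interchange the higher and lower bits: row address = {row bits, column bits}, column address = {column bits, row bits}
• The same data therefore have synonym addresses in the row-address and column-address spaces

Each cell below lists row address / column address for a 4-row × 8-column array.

| Row bits | 000 | 001 | 010 | 011 | 100 | 101 | 110 | 111 |
|---|---|---|---|---|---|---|---|---|
| 00 | 00000 / 00000 | 00001 / 00100 | 00010 / 01000 | 00011 / 01100 | 00100 / 10000 | 00101 / 10100 | 00110 / 11000 | 00111 / 11100 |
| 01 | 01000 / 00001 | 01001 / 00101 | 01010 / 01001 | 01011 / 01101 | 01100 / 10001 | 01101 / 10101 | 01110 / 11001 | 01111 / 11101 |
| 10 | 10000 / 00010 | 10001 / 00110 | 10010 / 01010 | 10011 / 01110 | 10100 / 10010 | 10101 / 10110 | 10110 / 11010 | 10111 / 11110 |
| 11 | 11000 / 00011 | 11001 / 00111 | 11010 / 01011 | 11011 / 01111 | 11100 / 10011 | 11101 / 10111 | 11110 / 11011 | 11111 / 11111 |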
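The bit interchange can be written as two small conversion functions. This sketch fixes the example's 2 row bits and 3 column bits as constants; a real DA DRAM would use its own (square) geometry, and the function names are hypothetical.

```python
ROW_BITS = 2  # 4 rows in the example array
COL_BITS = 3  # 8 columns in the example array


def row_to_col_address(row_addr: int) -> int:
    """Row address {row, col} -> column address {col, row}."""
    row = row_addr >> COL_BITS
    col = row_addr & ((1 << COL_BITS) - 1)
    return (col << ROW_BITS) | row


def col_to_row_address(col_addr: int) -> int:
    """Column address {col, row} -> row address {row, col}."""
    col = col_addr >> ROW_BITS
    row = col_addr & ((1 << ROW_BITS) - 1)
    return (row << COL_BITS) | col


print(format(row_to_col_address(0b01010), "05b"))  # 01001
print(format(row_to_col_address(0b10001), "05b"))  # 00110
```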

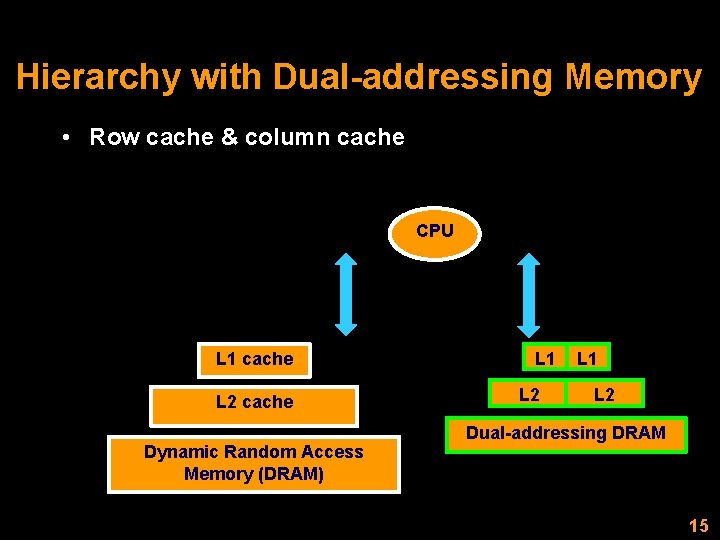

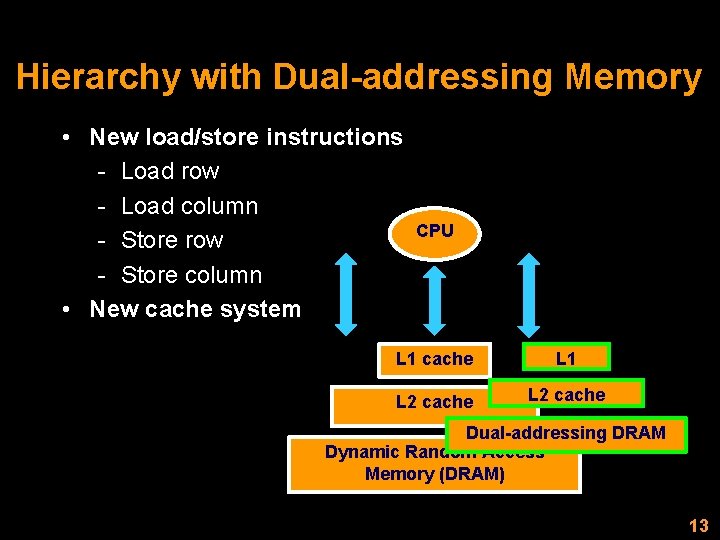

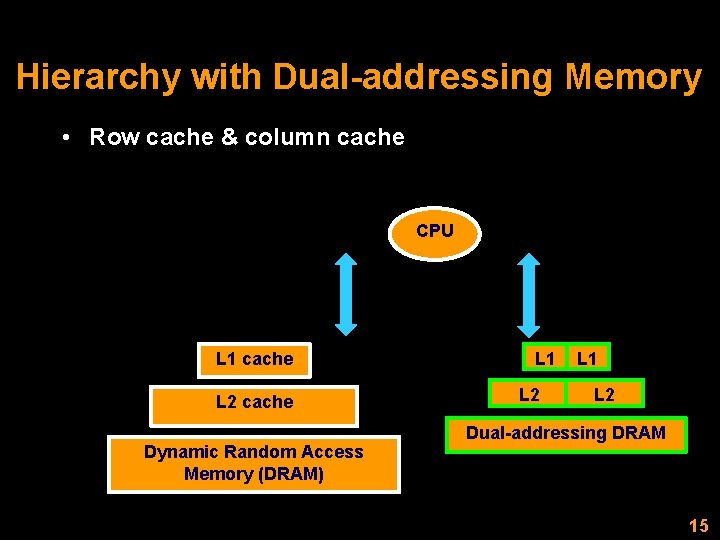

Hierarchy with Dual-addressing Memory
• New load/store instructions
  - Load row
  - Load column
  - Store row
  - Store column
• New cache system
[Figure: the conventional hierarchy (CPU, L1 cache, L2 cache, DRAM) next to a hierarchy backed by dual-addressing DRAM]
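The semantics of the four new instructions can be modeled as two address decodings over one cell array. This is a hypothetical toy (single words, no caches, no timing; the class and method names are illustrative, not the paper's ISA), using the bit interchange from the Row/Column Address Computations slide.

```python
class DualAddressingMemory:
    """Toy DA memory: one cell array reachable through row or column addresses.

    Row address = {row bits, column bits}; column address = {column bits, row bits}.
    """

    def __init__(self, row_bits: int, col_bits: int) -> None:
        self.row_bits = row_bits
        self.col_bits = col_bits
        self.cells = [[0] * (1 << col_bits) for _ in range(1 << row_bits)]

    def _decode_row_addr(self, addr: int):
        return addr >> self.col_bits, addr & ((1 << self.col_bits) - 1)

    def _decode_col_addr(self, addr: int):
        return addr & ((1 << self.row_bits) - 1), addr >> self.row_bits

    def load_row(self, addr: int) -> int:
        r, c = self._decode_row_addr(addr)
        return self.cells[r][c]

    def load_col(self, addr: int) -> int:
        r, c = self._decode_col_addr(addr)
        return self.cells[r][c]

    def store_row(self, addr: int, value: int) -> None:
        r, c = self._decode_row_addr(addr)
        self.cells[r][c] = value

    def store_col(self, addr: int, value: int) -> None:
        r, c = self._decode_col_addr(addr)
        self.cells[r][c] = value


mem = DualAddressingMemory(row_bits=2, col_bits=3)
mem.store_row(0b01010, 42)    # cell (row 01, column 010)
print(mem.load_col(0b01001))  # 42: the synonym column address of the same cell
```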

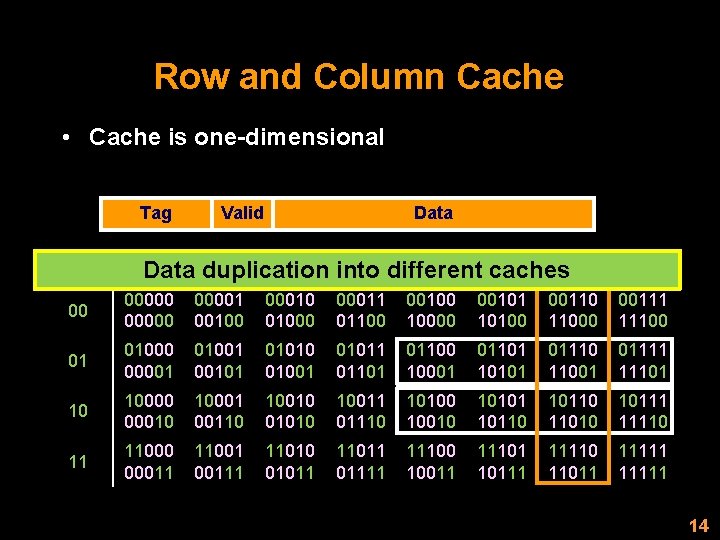

Row and Column Cache
• Each cache is one-dimensional (tag, valid bit, data)
• The same data are duplicated into different caches (the row cache and the column cache)
[Figure: cache lines with tag, valid, and data fields laid over the row/column address table of the previous slide]

Hierarchy with Dual-addressing Memory
• Row cache and column cache
[Figure: the conventional hierarchy (CPU, L1 cache, L2 cache, DRAM) next to the DA hierarchy, in which L1 and L2 each consist of a row cache and a column cache above dual-addressing DRAM]


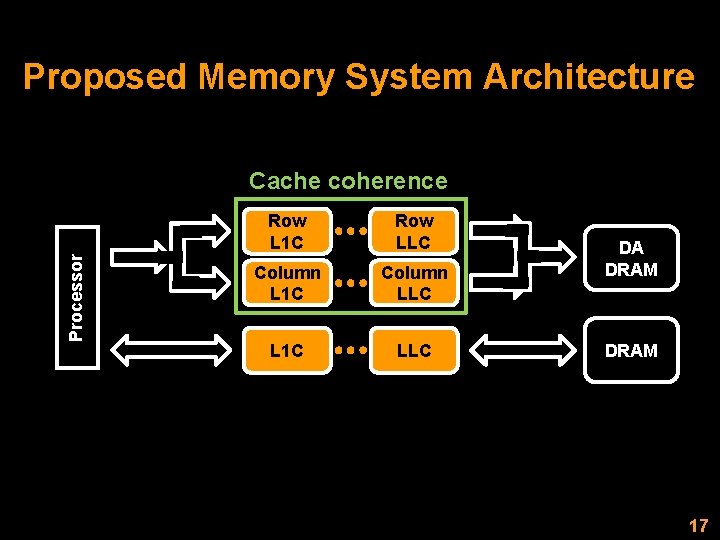

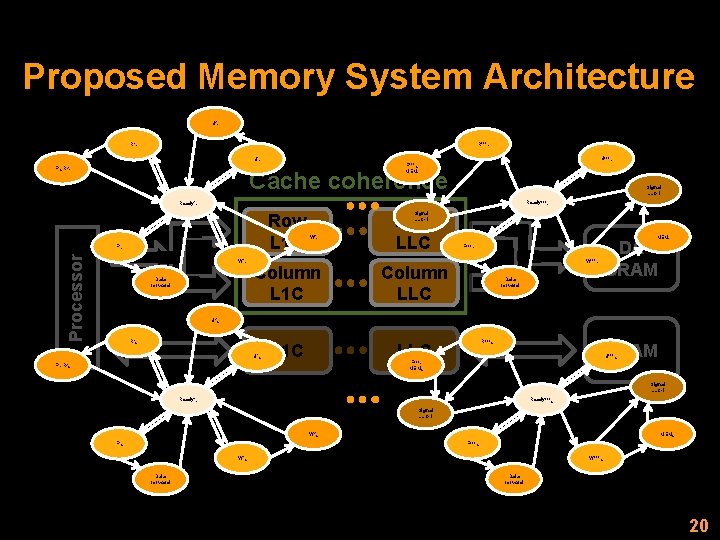

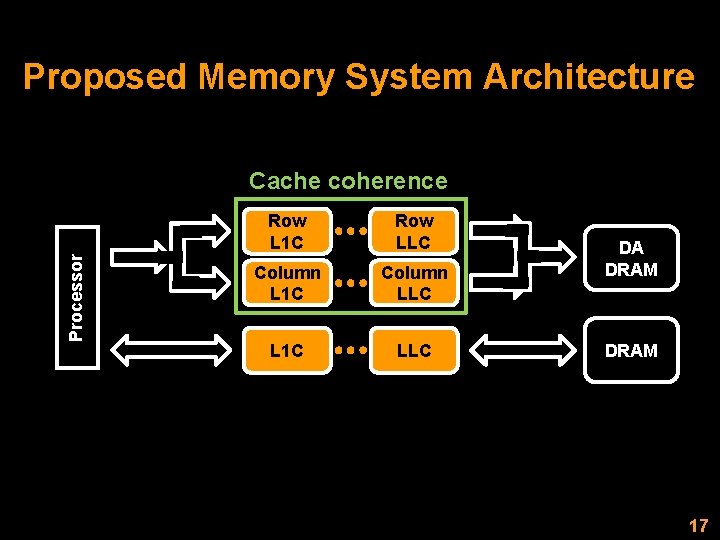

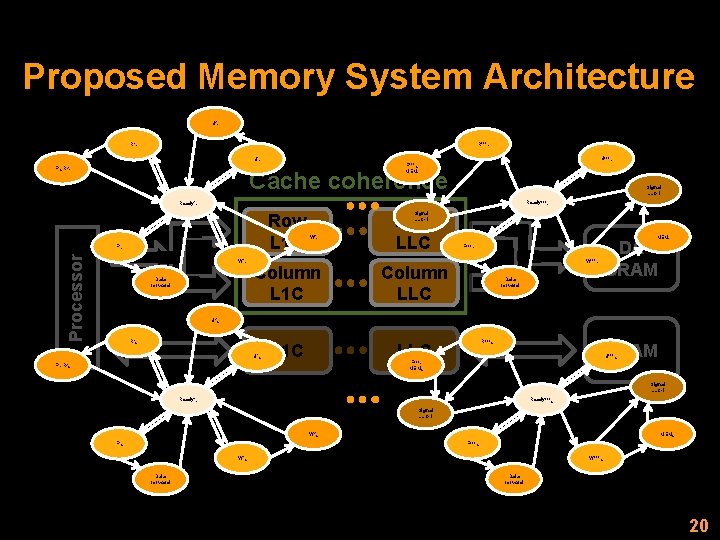

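Proposed Memory System Architecture
[Figure: the processor is served by a conventional L1 cache (L1C) and LLC, a row L1C and row LLC, and a column L1C and column LLC, all above DA DRAM; cache coherence is maintained between the row and column caches]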

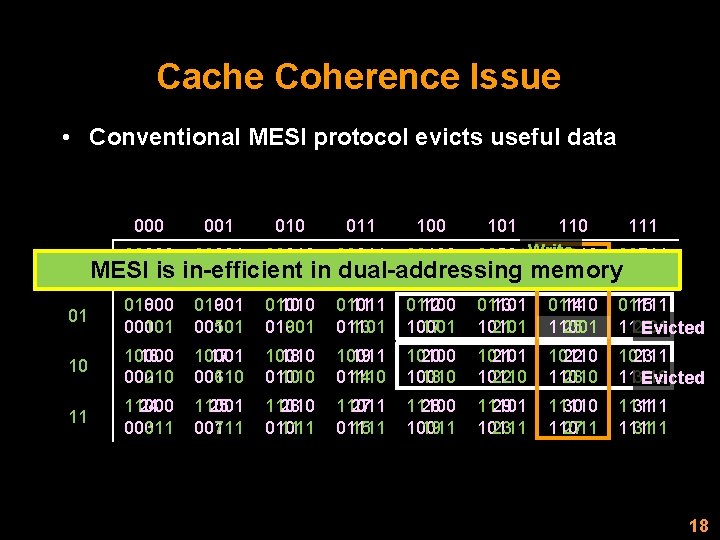

Cache Coherence Issue
• The conventional MESI protocol evicts useful data
  - A write on one addressing invalidates the lines that hold the same (synonym) data in the orthogonal cache
• MESI is inefficient in dual-addressing memory
[Figure: the 4 × 8 address table with element numbers 0–31; a write in the row cache causes the corresponding column-cache lines to be evicted]
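To see why invalidation hurts, consider a hypothetical configuration in which both caches use square lines of LINE words: a row-cache line holds LINE consecutive words of one row, and a column-cache line holds LINE consecutive words of one column. The sketch below lists the column lines that share a word with one written (line-aligned) row line; an invalidation-based protocol such as MESI would drop all of them, even though most of their words were not written.

```python
def invalidated_column_lines(row: int, first_col: int, line_words: int):
    """Column-cache lines (column, vertical block) overlapping one row-cache line.

    Hypothetical layout: the row line covers words (row, first_col .. first_col +
    line_words - 1), with first_col line-aligned; column line (c, b) covers words
    (b * line_words .. b * line_words + line_words - 1, c).
    """
    block = row // line_words
    return [(col, block) for col in range(first_col, first_col + line_words)]


LINE = 4  # hypothetical line size in words
lines = invalidated_column_lines(row=1, first_col=0, line_words=LINE)
print(lines)                    # [(0, 0), (1, 0), (2, 0), (3, 0)]
print(len(lines) * (LINE - 1))  # 12 unwritten words evicted along with them
```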

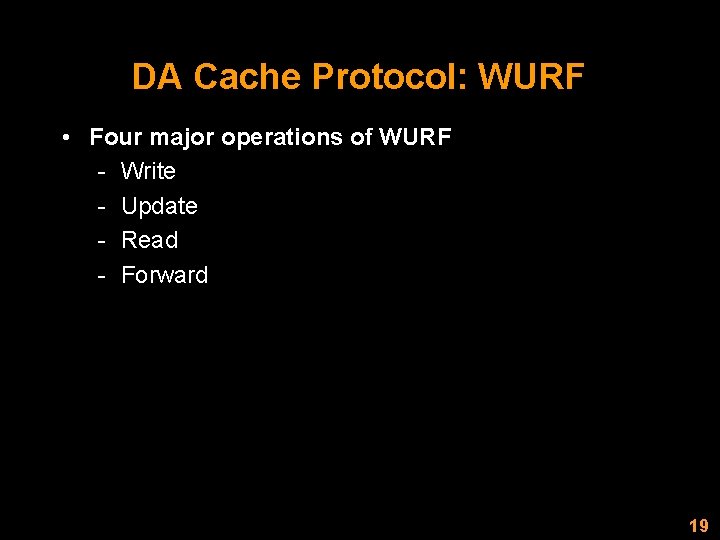

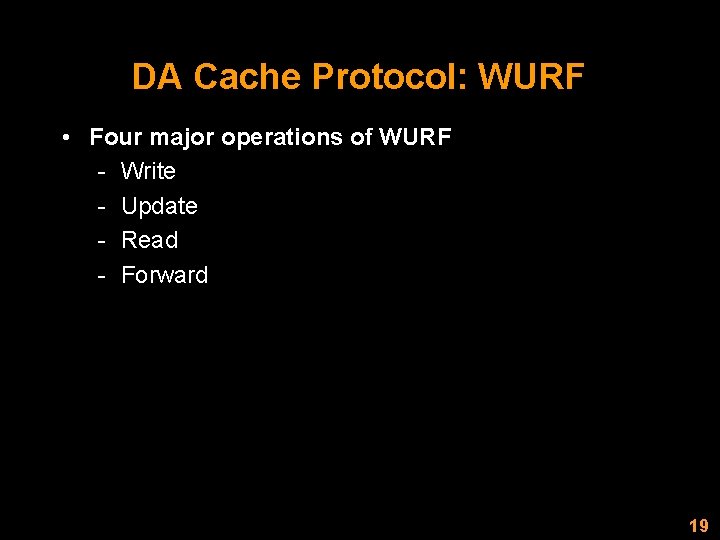

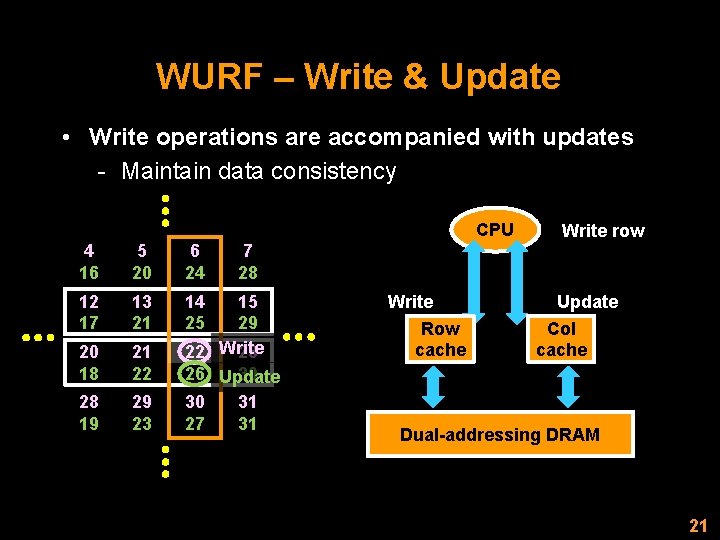

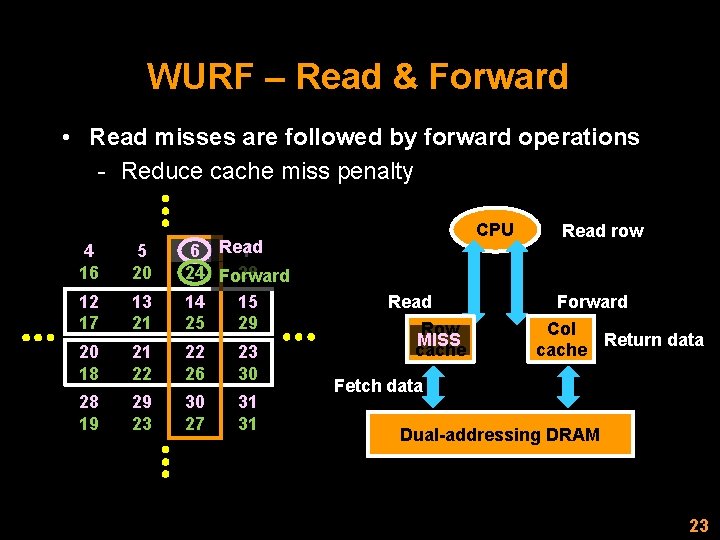

DA Cache Protocol: WURF
• Four major operations of WURF
  - Write
  - Update
  - Read
  - Forward

Proposed Memory System Architecture
[Figure: the memory system annotated with the WURF state machines of the row and column L1 caches (R1, R2, W1, W2, U1, U2, F1, Ready1), the row and column LLCs (RLLC, WLLC, ULLC, FLLC, ReadyLLC), and DA DRAM (MEMr, MEMc), with data-forward paths and "Signal LLC-1" handshakes]

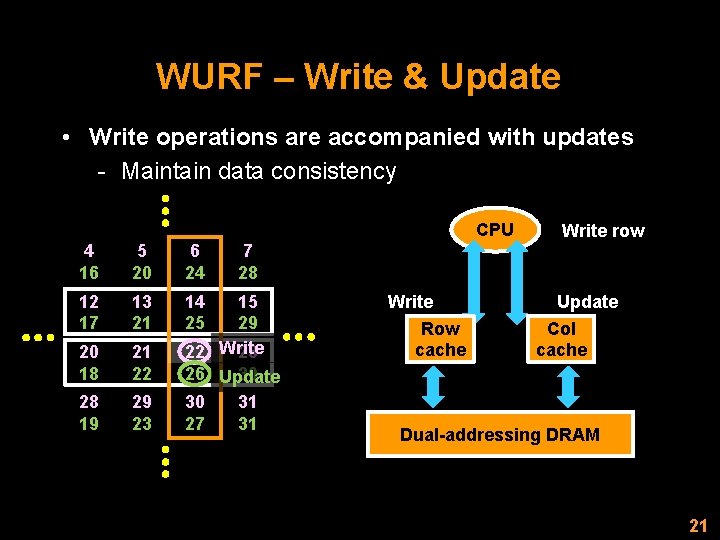

WURF – Write & Update
• Write operations are accompanied by updates
  - Maintain data consistency
[Figure: the CPU writes a row in the row cache (Write), and the same data are updated in the column cache (Update), above dual-addressing DRAM]
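The write-plus-update idea can be sketched with two dictionaries standing in for the row and column caches. This is a hypothetical model, not the paper's protocol state machine: square lines of LINE words, a single cache level, and no allocation or write-back policy. The key point is that the column line holding the synonym word is patched in place rather than invalidated.

```python
class RowColumnCaches:
    """Toy row and column caches; each maps a line key to a list of words.

    Hypothetical layout: row line (r, b) holds words (r, b*LINE .. b*LINE+LINE-1);
    column line (c, b) holds words (b*LINE .. b*LINE+LINE-1, c).
    """

    LINE = 4

    def __init__(self) -> None:
        self.row_cache = {}
        self.col_cache = {}

    def write_row(self, row: int, col: int, value: int) -> None:
        n = self.LINE
        # Write: store the word in the row line, if that line is cached
        row_line = self.row_cache.get((row, col // n))
        if row_line is not None:
            row_line[col % n] = value
        # Update: patch the synonym word in the column cache instead of invalidating
        col_line = self.col_cache.get((col, row // n))
        if col_line is not None:
            col_line[row % n] = value


caches = RowColumnCaches()
caches.row_cache[(1, 0)] = [10, 11, 12, 13]  # row 1, columns 0-3 (value = 10*row + col)
caches.col_cache[(2, 0)] = [2, 12, 22, 32]   # column 2, rows 0-3
caches.write_row(1, 2, 99)
print(caches.row_cache[(1, 0)])  # [10, 11, 99, 13]
print(caches.col_cache[(2, 0)])  # [2, 99, 22, 32]: line kept, synonym word updated
```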

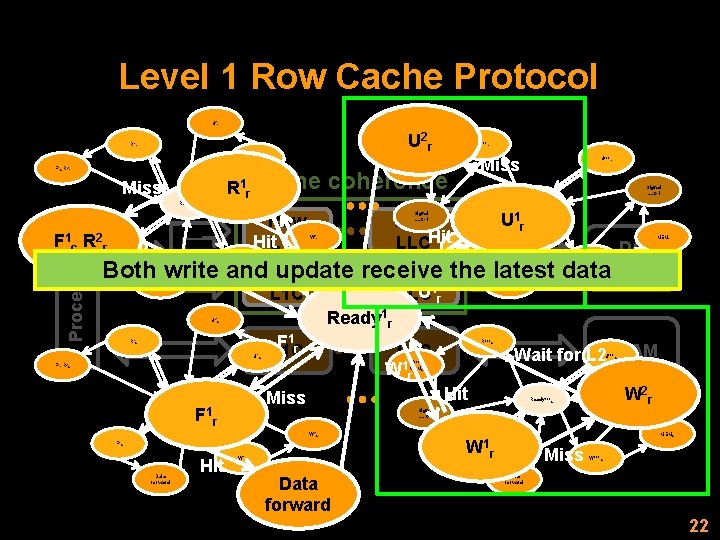

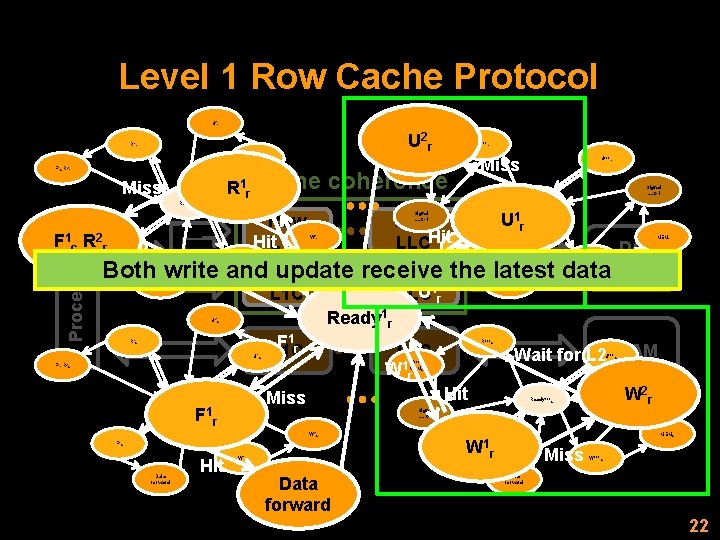

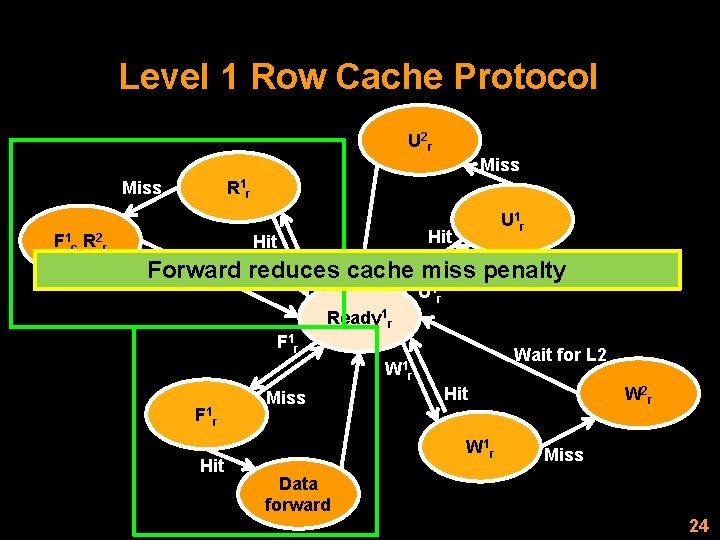

Level 1 Row Cache Protocol
• Both write and update receive the latest data
[Figure: state diagram of the L1 row cache (states R1r, R2r, W1r, W2r, U1r, U2r, F1r, F1c, Ready1r) mapped onto the memory system, with hit/miss transitions, "Wait for L2" states, "Signal LLC-1", and data forwarding between the row and column caches]

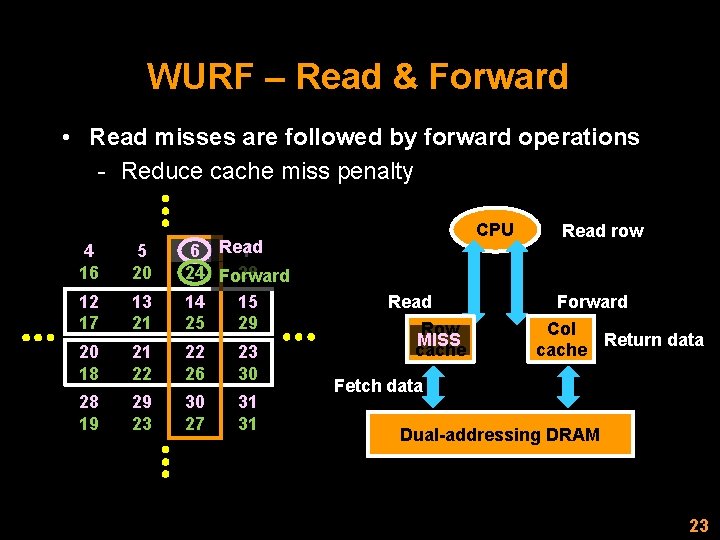

WURF – Read & Forward
• Read misses are followed by forward operations
  - Reduce the cache miss penalty
[Figure: a CPU row read misses in the row cache; the request is forwarded to the column cache, which returns the data, while the data are also fetched from dual-addressing DRAM]
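Read-then-forward can be sketched in the same toy style. Assumptions (hypothetical): square lines of LINE words, a dict standing in for DA DRAM, and a forwarded hit that returns the synonym word without modeling the line fill that follows; only a miss in both caches fetches the row line from DRAM.

```python
class RowColumnCaches:
    """Toy row/column caches over a dict standing in for DA DRAM (hypothetical)."""

    LINE = 4

    def __init__(self, dram) -> None:
        self.row_cache = {}  # (row, block) -> list of LINE words
        self.col_cache = {}  # (col, block) -> list of LINE words
        self.dram = dram     # (row, col) -> word

    def read_row(self, row: int, col: int):
        """Read on the row addressing; returns (value, where it was served from)."""
        n = self.LINE
        line = self.row_cache.get((row, col // n))
        if line is not None:
            return line[col % n], "row cache hit"
        # Read miss -> Forward: ask the orthogonal column cache for the synonym word
        col_line = self.col_cache.get((col, row // n))
        if col_line is not None:
            return col_line[row % n], "forwarded from column cache"
        # Forward also missed: fetch the row line from DA DRAM and fill the row cache
        base = (col // n) * n
        line = [self.dram.get((row, c), 0) for c in range(base, base + n)]
        self.row_cache[(row, col // n)] = line
        return line[col % n], "fetched from DRAM"


dram = {(r, c): 10 * r + c for r in range(8) for c in range(8)}
caches = RowColumnCaches(dram)
caches.col_cache[(2, 0)] = [dram[(r, 2)] for r in range(4)]
print(caches.read_row(1, 2))  # (12, 'forwarded from column cache')
print(caches.read_row(5, 3))  # (53, 'fetched from DRAM')
print(caches.read_row(5, 1))  # (51, 'row cache hit')
```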

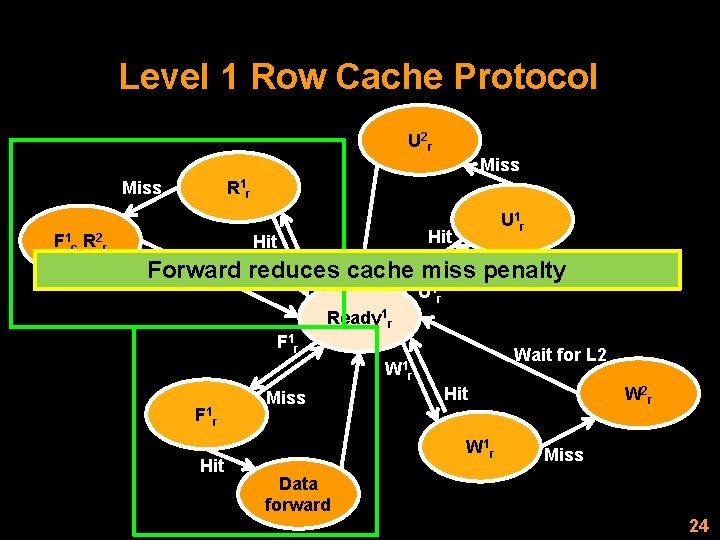

Level 1 Row Cache Protocol
• Forward reduces the cache miss penalty
[Figure: state diagram of the L1 row cache (R1r, R2r, W1r, W2r, U1r, U2r, F1r, F1c, Ready1r) with hit/miss transitions, "Wait for L2" states, and data forwarding]


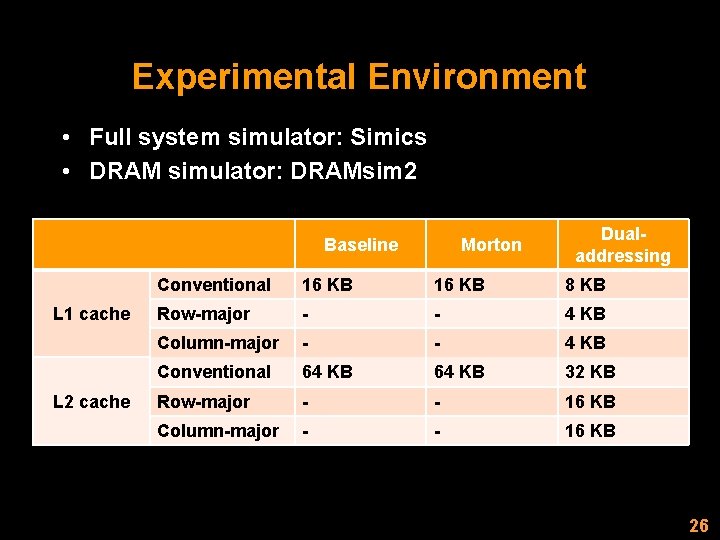

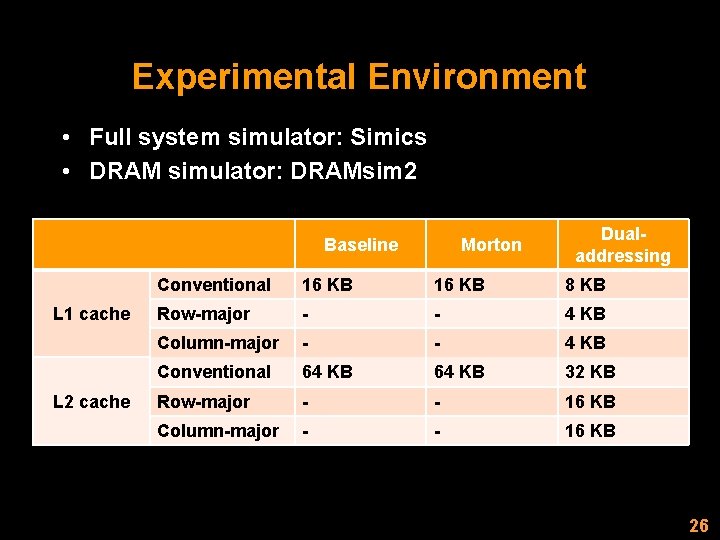

Experimental Environment
• Full-system simulator: Simics
• DRAM simulator: DRAMSim2

| Level | Cache | Baseline and Morton | Dual-addressing |
|---|---|---|---|
| L1 | Conventional | 16 KB | 8 KB |
| L1 | Row-major | - | 4 KB |
| L1 | Column-major | - | 4 KB |
| L2 | Conventional | 64 KB | 32 KB |
| L2 | Row-major | - | 16 KB |
| L2 | Column-major | - | 16 KB |

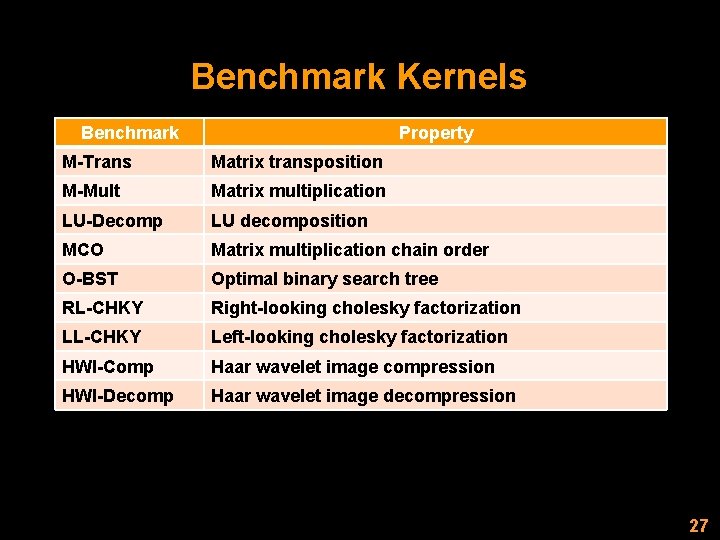

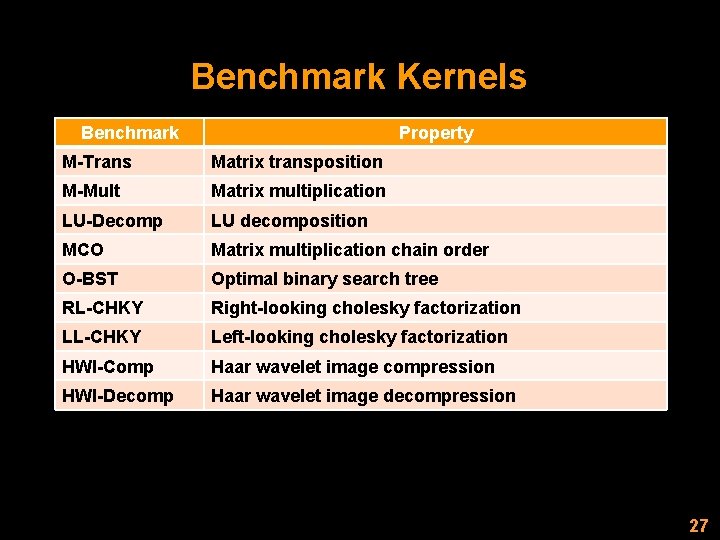

Benchmark Kernels

| Benchmark | Property |
|---|---|
| M-Trans | Matrix transposition |
| M-Mult | Matrix multiplication |
| LU-Decomp | LU decomposition |
| MCO | Matrix multiplication chain order |
| O-BST | Optimal binary search tree |
| RL-CHKY | Right-looking Cholesky factorization |
| LL-CHKY | Left-looking Cholesky factorization |
| HWI-Comp | Haar wavelet image compression |
| HWI-Decomp | Haar wavelet image decompression |

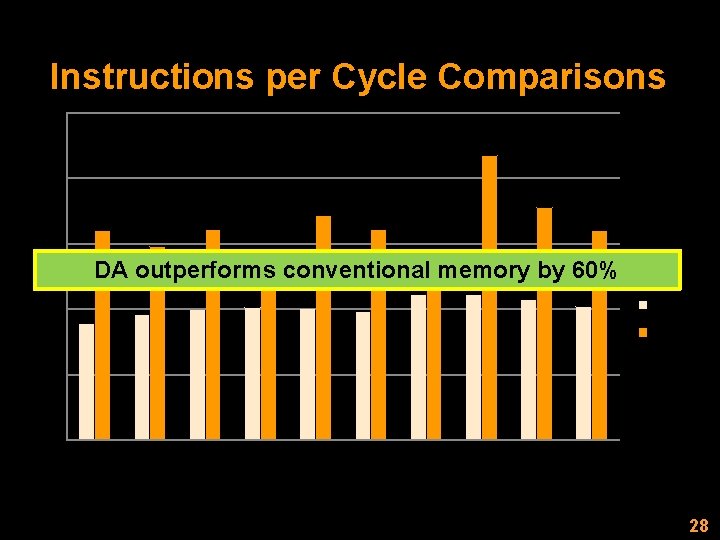

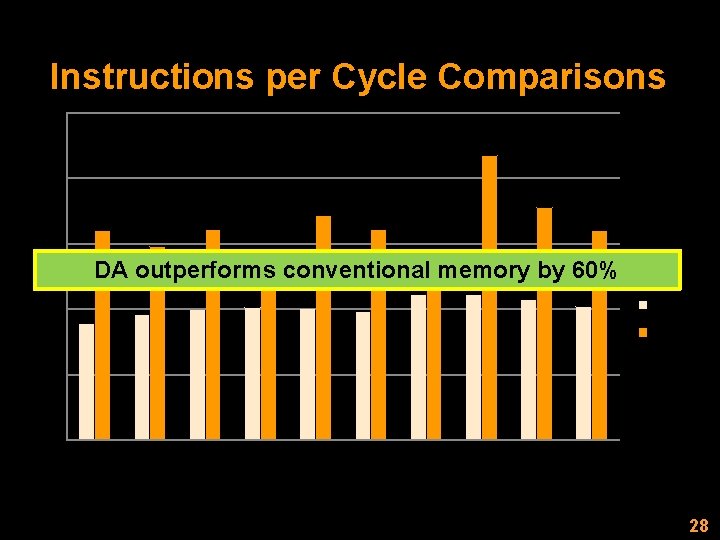

Instructions per Cycle Comparisons
[Figure: bar chart of IPC (0%–250% axis) for Morton and DA across M-Trans, M-Mult, LU-Decomp, MCO, O-BST, RL-CHKY, LL-CHKY, HWI-Comp, HWI-Decomp, and the average]
• DA outperforms conventional memory by 60%

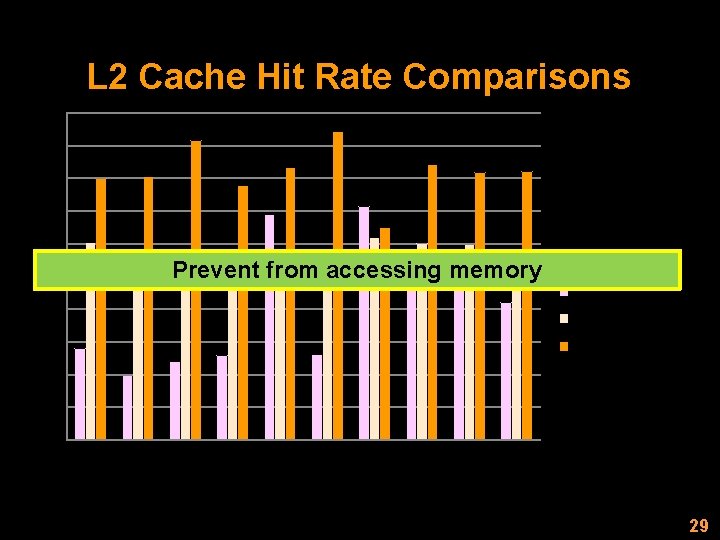

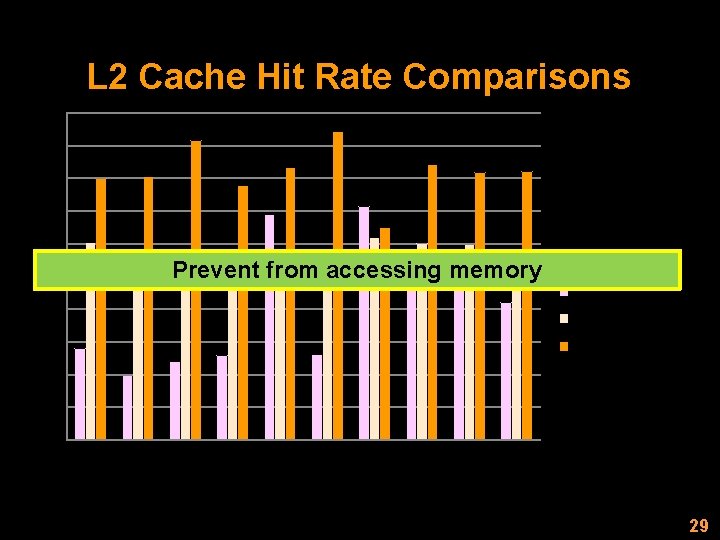

L2 Cache Hit Rate Comparisons
[Figure: bar chart of L2 cache hit rate (0%–100%) for Baseline_1D, Morton, and DA across the nine benchmarks and the average]
• L2 hits prevent accesses to main memory

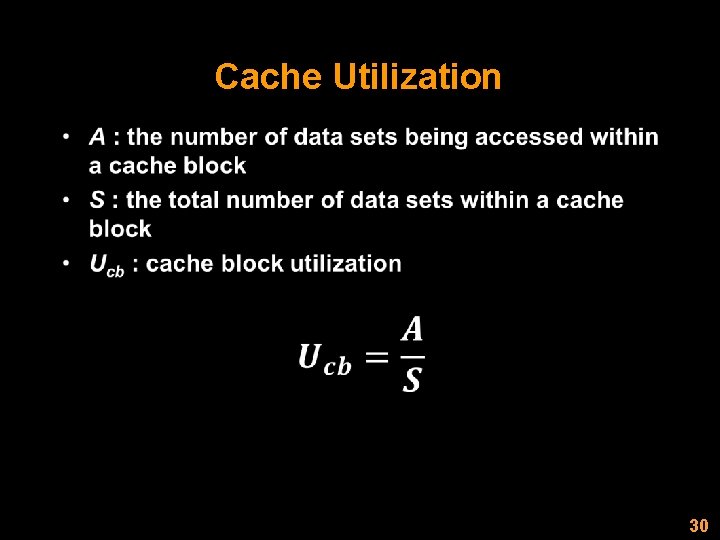

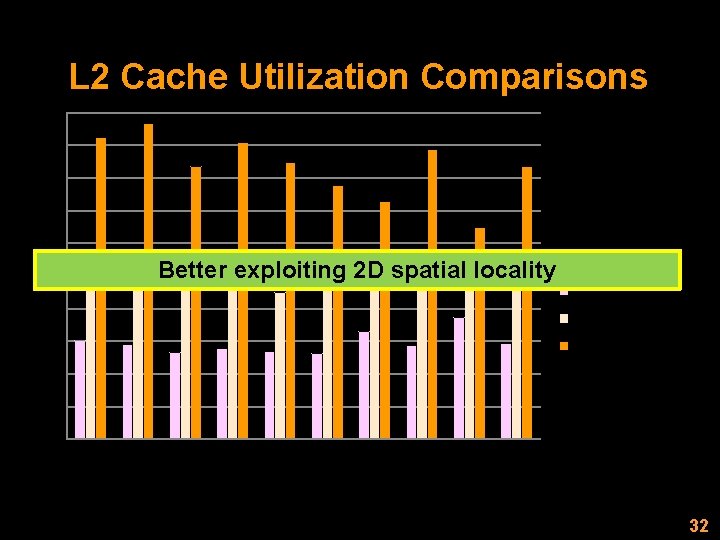

Cache Utilization

Average Cache Utilization

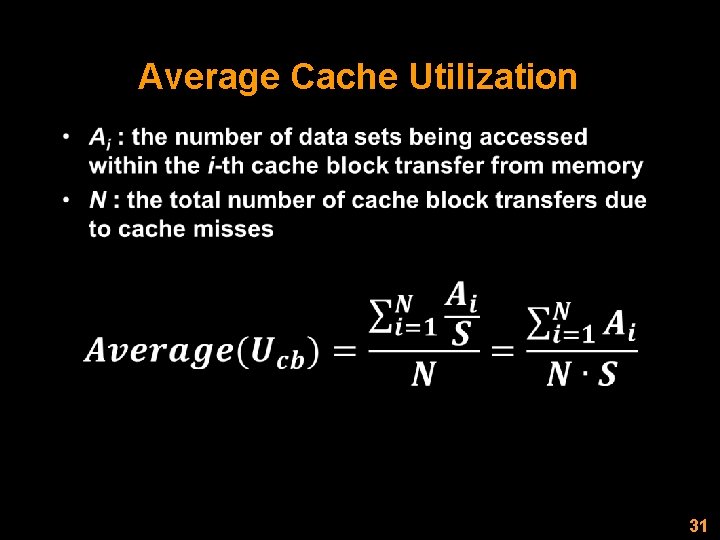

L2 Cache Utilization Comparisons
[Figure: bar chart of L2 cache utilization (0%–100%) for Baseline_1D, Morton, and DA across the nine benchmarks and the average]
• Better exploitation of 2D spatial locality


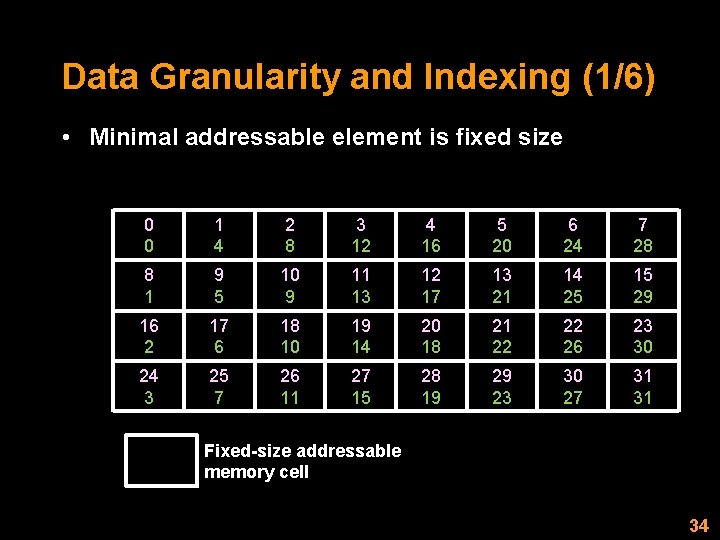

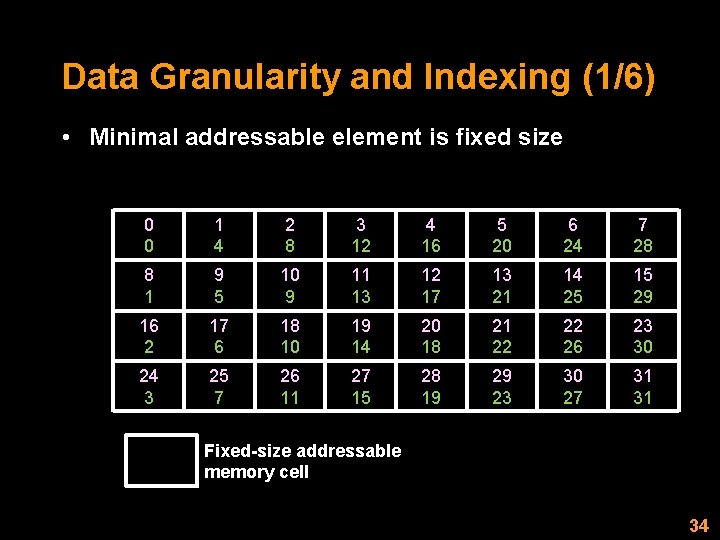

Data Granularity and Indexing (1/6)
• The minimal addressable element has a fixed size
[Figure: a 4 × 8 array of fixed-size addressable memory cells, each labeled with its row-order index (0–31) and its column-order index]

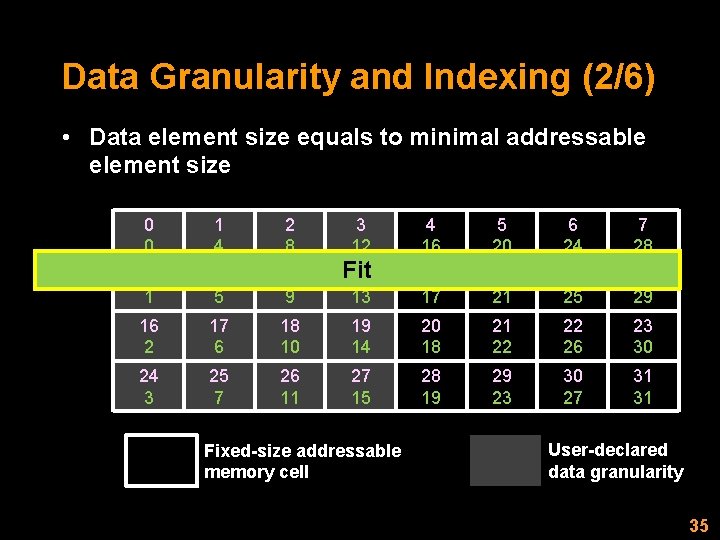

Data Granularity and Indexing (2/6)
• Data element size equals the minimal addressable element size
  - Fit: each element occupies exactly one cell
[Figure: user-declared data granularity over the fixed-size addressable memory cells]

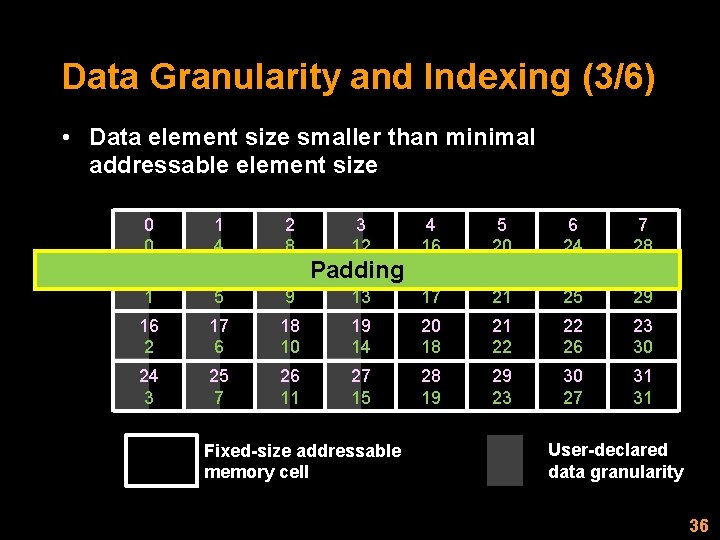

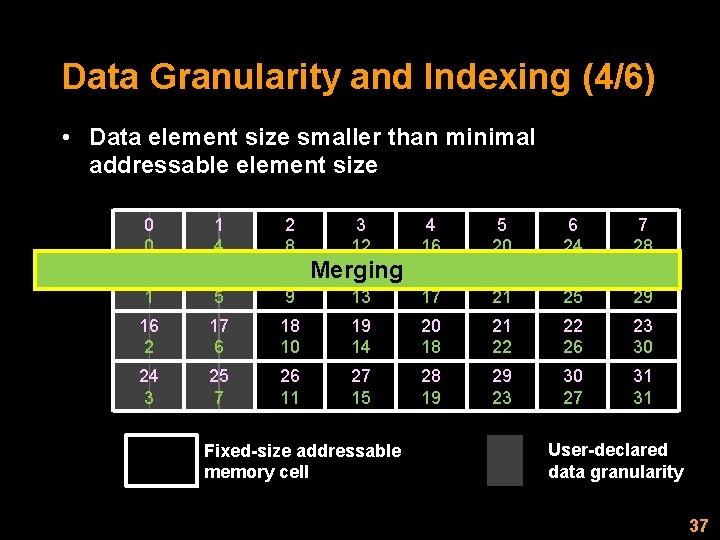

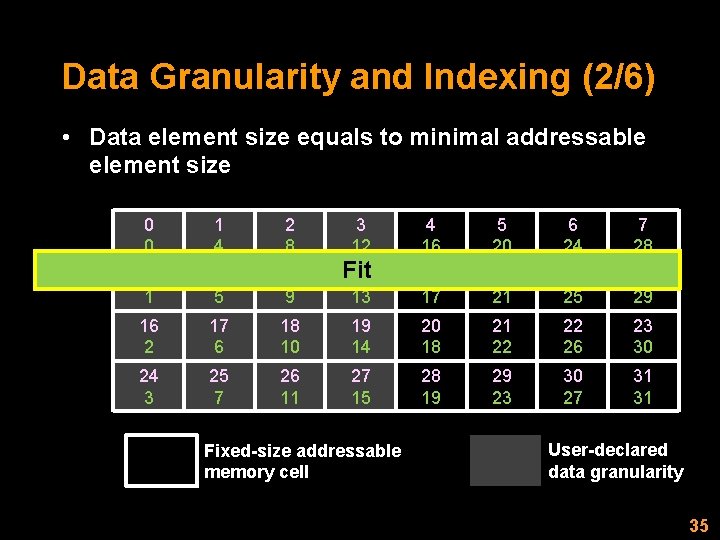

Data Granularity and Indexing (3/6)
• Data element size smaller than the minimal addressable element size
  - Padding: each element occupies a whole cell, and the rest of the cell is unused
[Figure: user-declared data granularity over the fixed-size addressable memory cells]

Data Granularity and Indexing (4/6)
• Data element size smaller than the minimal addressable element size
  - Merging: several elements are packed into one cell
[Figure: user-declared data granularity over the fixed-size addressable memory cells]

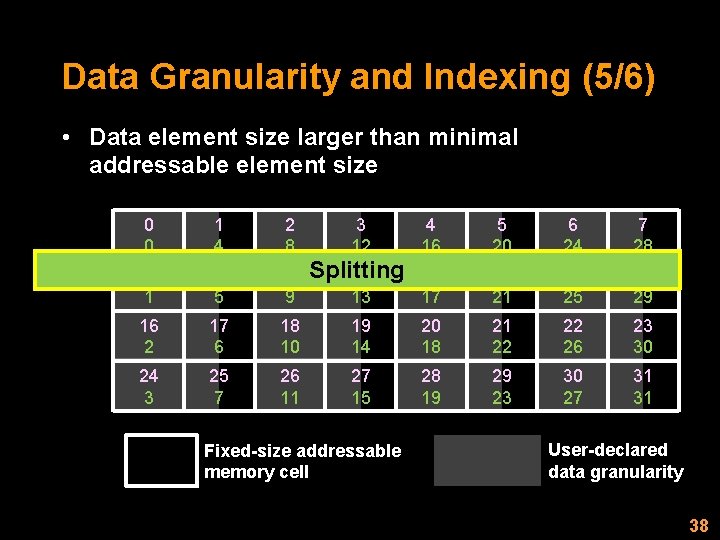

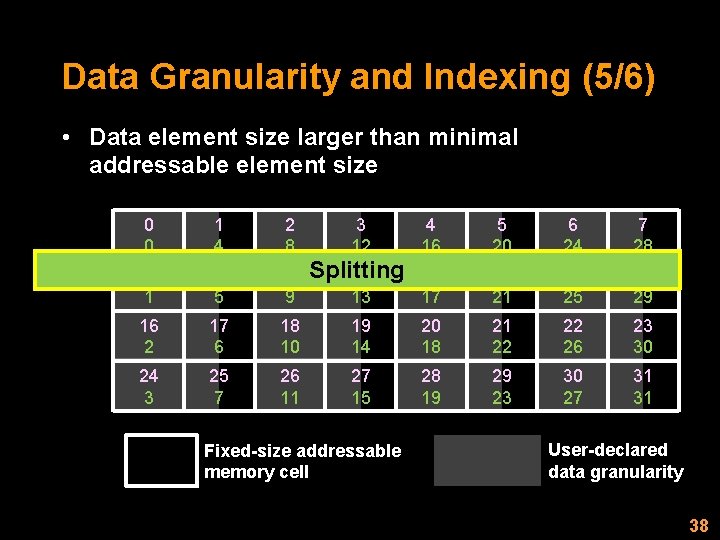

Data Granularity and Indexing (5/6)
• Data element size larger than the minimal addressable element size
  - Splitting: each element is split across several cells
[Figure: user-declared data granularity over the fixed-size addressable memory cells]

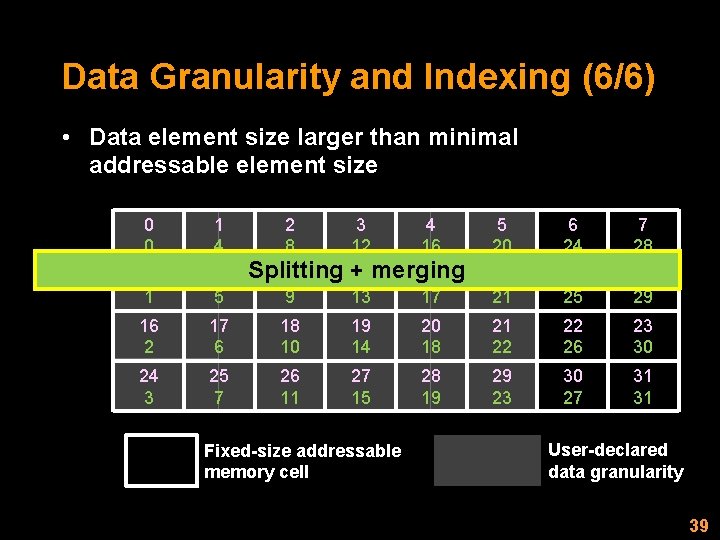

Data Granularity and Indexing (6/6)
• Data element size larger than the minimal addressable element size
  - Splitting + merging: elements are split across cells, and the leftover pieces share cells
[Figure: user-declared data granularity over the fixed-size addressable memory cells]
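The five placements differ in how many fixed-size cells they consume. The sketch below counts cells and the resulting utilization under each policy; the policy names and the exact packing rules are simplifying assumptions for illustration, not the paper's allocator. Merging and splitting + merging use the cells fully but need extra divide/modulo arithmetic to locate an element, which is the utilization-versus-latency trade-off behind the granularity policy.

```python
import math


def cells_needed(n_elems: int, elem_bytes: int, cell_bytes: int, policy: str) -> int:
    """Number of fixed-size cells needed for n_elems elements (hypothetical rules)."""
    if policy == "fit":          # element size == cell size
        if elem_bytes != cell_bytes:
            raise ValueError("fit requires elem_bytes == cell_bytes")
        return n_elems
    if policy == "padding":      # one small element per cell, remainder unused
        return n_elems
    if policy == "merging":      # whole small elements packed into a cell (elem_bytes <= cell_bytes)
        return math.ceil(n_elems / (cell_bytes // elem_bytes))
    if policy == "splitting":    # each large element spread over whole cells of its own
        return n_elems * math.ceil(elem_bytes / cell_bytes)
    if policy == "split_merge":  # elements back to back, crossing cell boundaries
        return math.ceil(n_elems * elem_bytes / cell_bytes)
    raise ValueError(f"unknown policy: {policy}")


def utilization(n_elems: int, elem_bytes: int, cell_bytes: int, policy: str) -> float:
    """Fraction of the allocated cell bytes that hold user data."""
    cells = cells_needed(n_elems, elem_bytes, cell_bytes, policy)
    return n_elems * elem_bytes / (cells * cell_bytes)


for policy, elem in [("padding", 2), ("merging", 2), ("splitting", 6), ("split_merge", 6)]:
    print(policy, cells_needed(32, elem, 4, policy), utilization(32, elem, 4, policy))
# padding 32 0.5
# merging 16 1.0
# splitting 64 0.75
# split_merge 48 1.0
```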

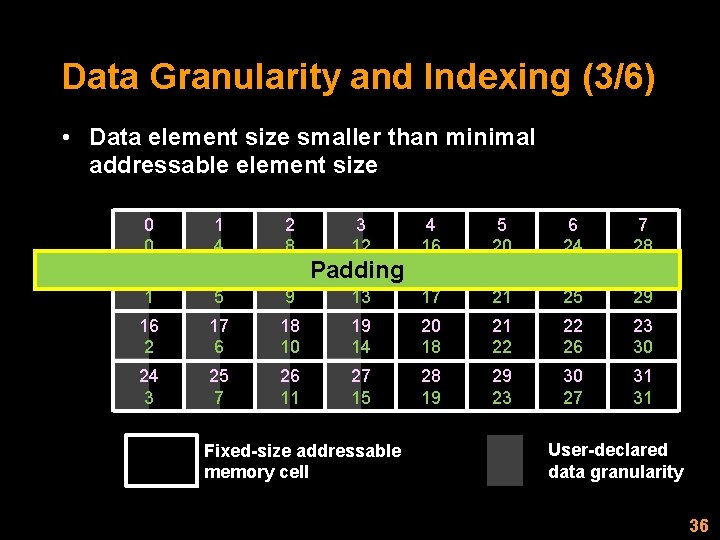

![Sequential Memory Allocation](https://slidetodoc.com/presentation_image/d3928adaf8baf3ec453100a9c2546f66/image-40.jpg)

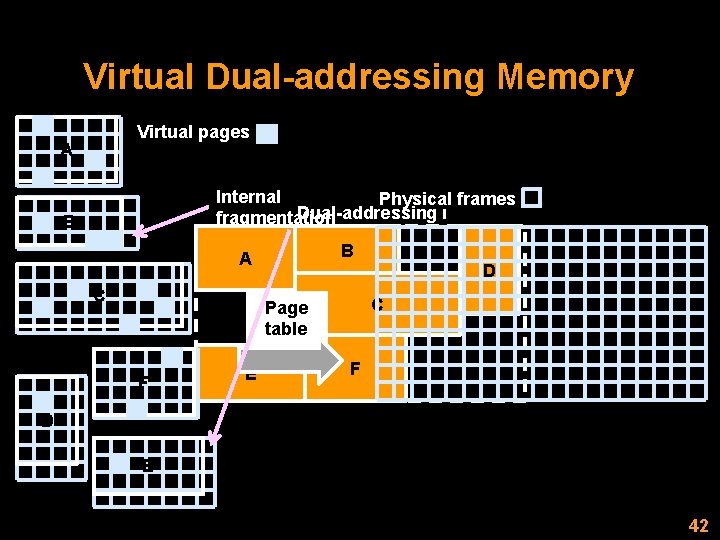

Sequential Memory Allocation
• Allocating the user-defined array A[100][150] sequentially in dual-addressing memory destroys the inherent data locality of the user data
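A quick count shows why sequential allocation breaks column locality. Assuming a hypothetical square DA memory with 256 words per row, sequential placement puts A[r][0] at word address r × 150, which lands in a different DA column for every r; placing A as a 2D block keeps its column 0 in a single DA column that one column access can reach.

```python
def da_columns_touched(addresses, da_width):
    """Distinct DA-memory columns touched by a set of word addresses."""
    return len({addr % da_width for addr in addresses})


ROWS, COLS = 100, 150  # user-defined array A[100][150]
DA_WIDTH = 256         # hypothetical square DA memory: 256 words per row

# Sequential allocation: A[r][c] at word address r * COLS + c
sequential = [r * COLS + 0 for r in range(ROWS)]
# Two-dimensional allocation: A[r][c] at DA cell (r, c), i.e. address r * DA_WIDTH + c
two_dim = [r * DA_WIDTH + 0 for r in range(ROWS)]

print(da_columns_touched(sequential, DA_WIDTH))  # 100
print(da_columns_touched(two_dim, DA_WIDTH))     # 1
```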

![Two-dimensional Memory Allocation](https://slidetodoc.com/presentation_image/d3928adaf8baf3ec453100a9c2546f66/image-41.jpg)

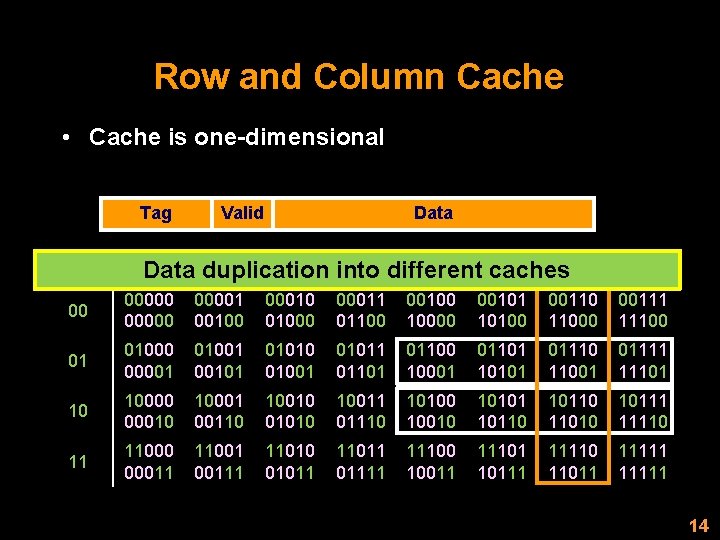

Two-dimensional Memory Allocation
• Arrays are allocated as 2D blocks in dual-addressing memory, e.g., A[100][150], B[60][160], C[160][320], D, E[100][200], and F[120][140]
• Like the EDA floorplanning problem [70], 2D allocation results in external fragmentation

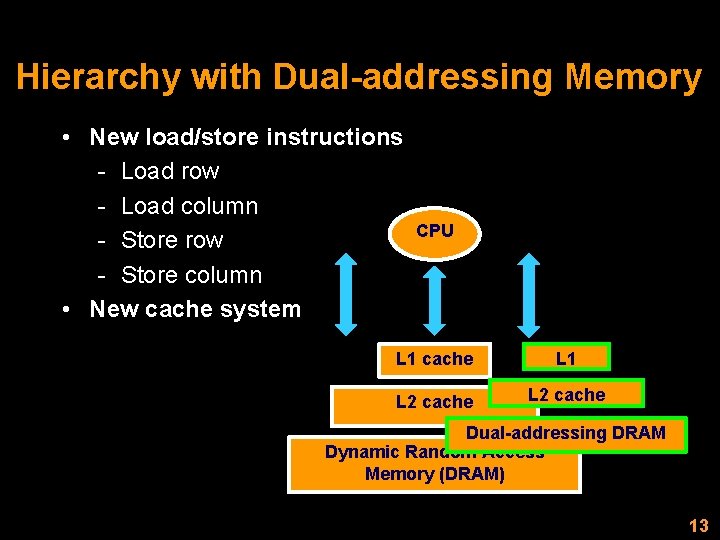

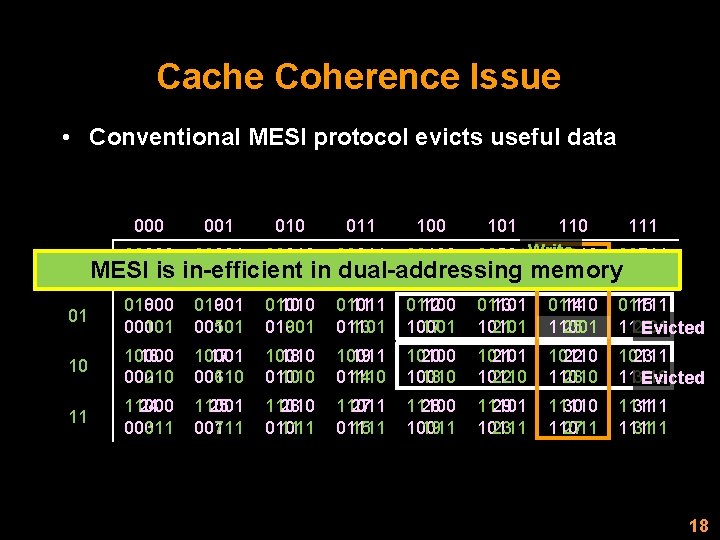

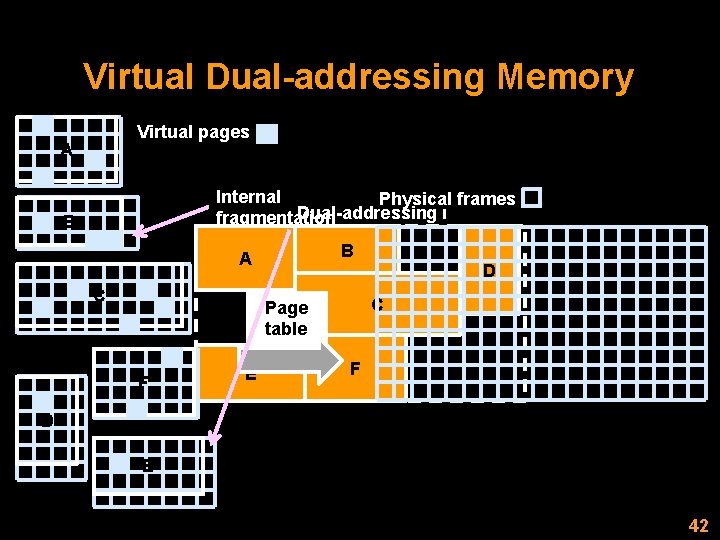

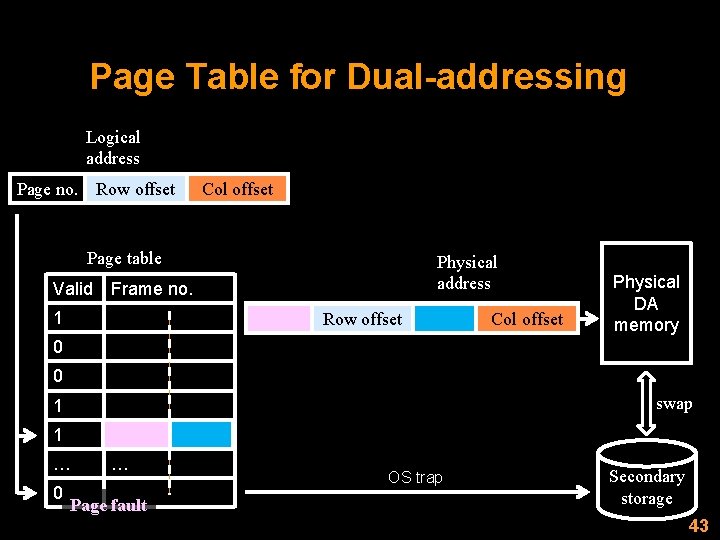

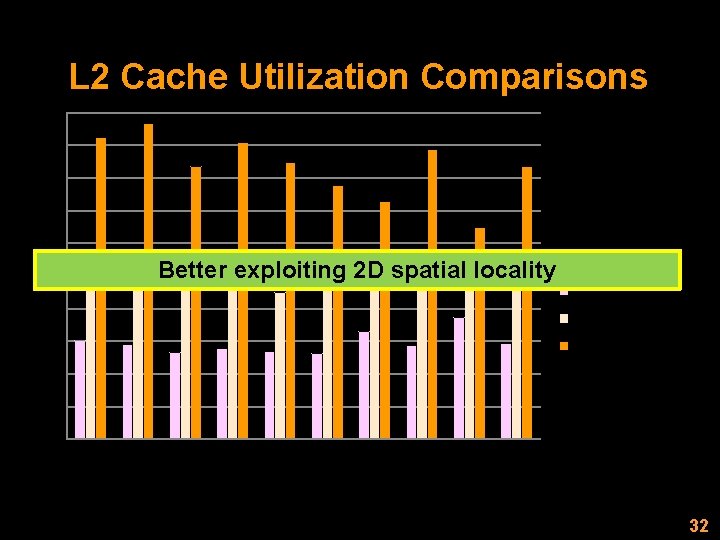

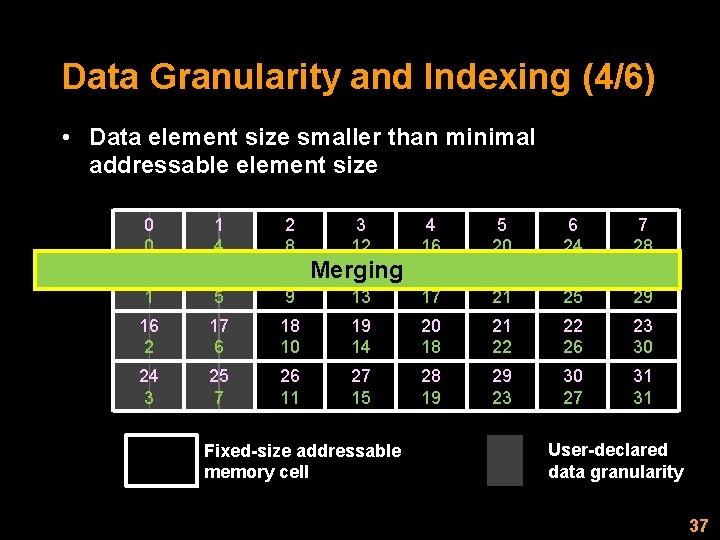

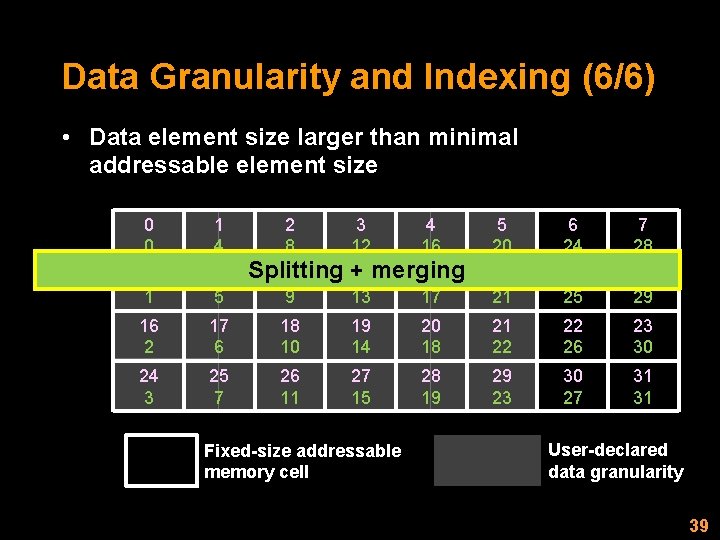

Virtual Dual-addressing Memory
• Arrays A–F are divided into 2D virtual pages, and a page table maps them to physical frames in dual-addressing memory
• Paging removes external fragmentation but introduces internal fragmentation

Page Table for Dual-addressing
• Logical address = (page no., row offset, column offset)
• The page table maps a valid page number to a frame number; physical address = (frame no., row offset, column offset)
• If the valid bit is 0, a page fault traps to the OS, which swaps the page in from secondary storage
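The translation can be sketched as a lookup keyed by the page number, with both offsets passing through unchanged. The names (`PageTableEntry`, `PageFault`, `translate`) are hypothetical, a single flat page table is assumed, and the OS swap-in is only indicated by the exception.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


class PageFault(Exception):
    """Raised for an invalid entry; the OS would then swap the page in."""


@dataclass
class PageTableEntry:
    valid: bool
    frame: int


def translate(page_table: Dict[int, PageTableEntry], page: int,
              row_off: int, col_off: int) -> Tuple[int, int, int]:
    """Logical (page no., row offset, col offset) -> physical (frame no., row offset, col offset)."""
    entry = page_table.get(page)
    if entry is None or not entry.valid:
        raise PageFault(page)  # OS trap: fetch the page from secondary storage
    return entry.frame, row_off, col_off  # offsets are not translated


table = {0: PageTableEntry(valid=True, frame=5), 1: PageTableEntry(valid=False, frame=0)}
print(translate(table, 0, 2, 1))  # (5, 2, 1)
```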


Conclusions
• Dual-addressing memory supports two-dimensional memory access patterns
• The WURF coherence protocol maintains coherence among DA caches
• The granularity policy provides a good trade-off between memory utilization and access latency
• The virtual DA memory system avoids external fragmentation

Backup Slides

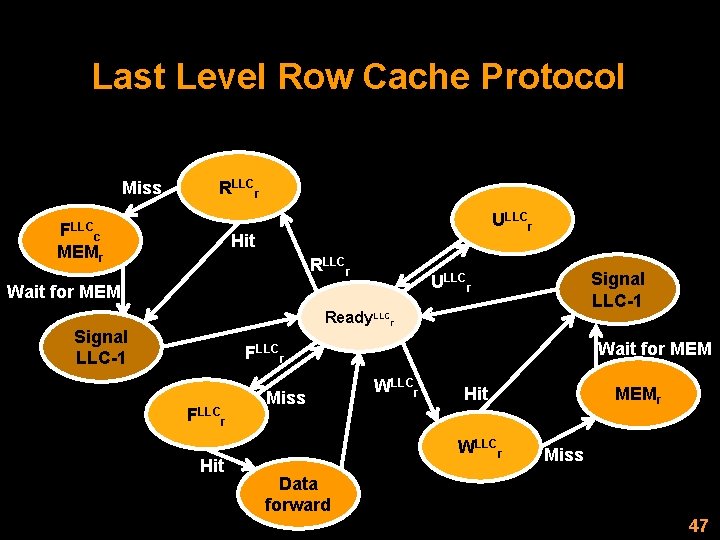

Last Level Row Cache Protocol
[Figure: state diagram of the last-level row cache (RLLCr, WLLCr, ULLCr, FLLCr, FLLCc, MEMr, ReadyLLCr) with hit/miss transitions, "Wait for MEM" states, "Signal LLC-1" to the upper level, and data forwarding]

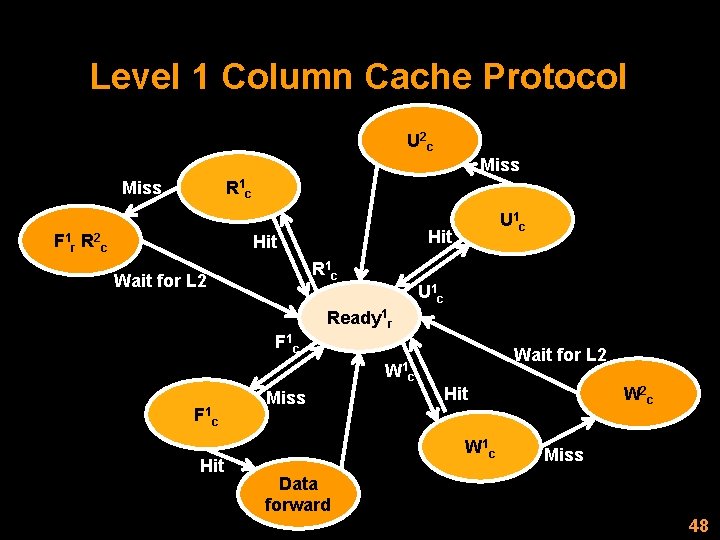

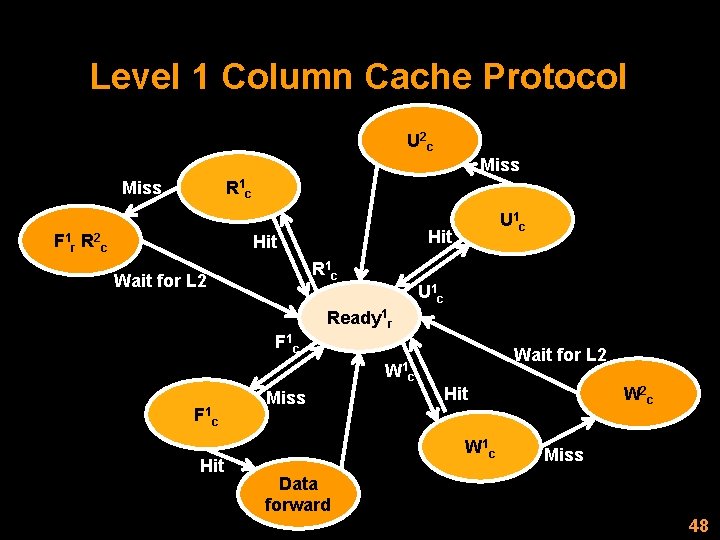

Level 1 Column Cache Protocol
[Figure: state diagram of the L1 column cache (R1c, R2c, W1c, W2c, U1c, U2c, F1c, F1r) with hit/miss transitions, "Wait for L2" states, and data forwarding]

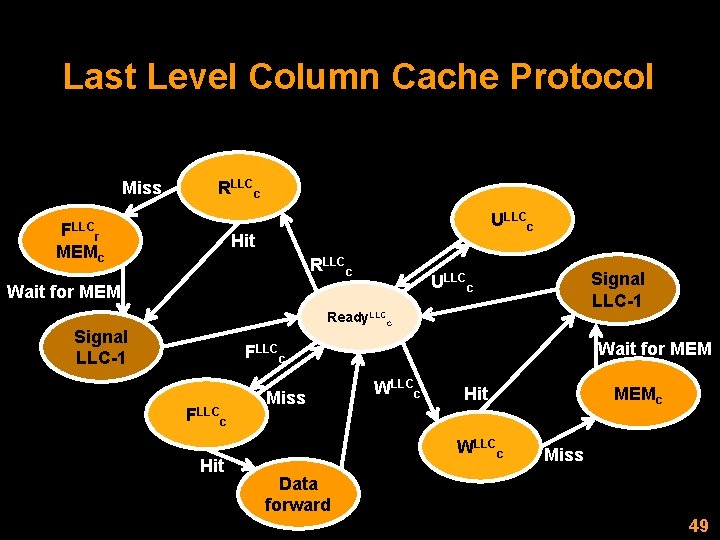

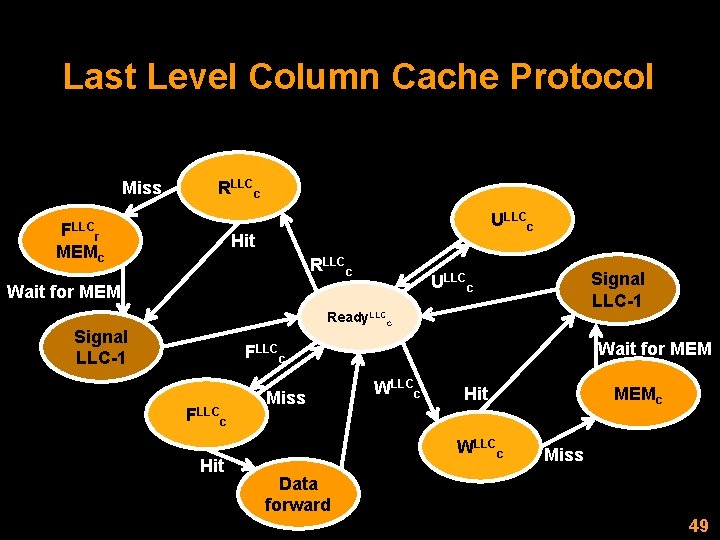

Last Level Column Cache Protocol
[Figure: state diagram of the last-level column cache (RLLCc, WLLCc, ULLCc, FLLCc, FLLCr, MEMc, ReadyLLCc) with hit/miss transitions, "Wait for MEM" states, "Signal LLC-1" to the upper level, and data forwarding]

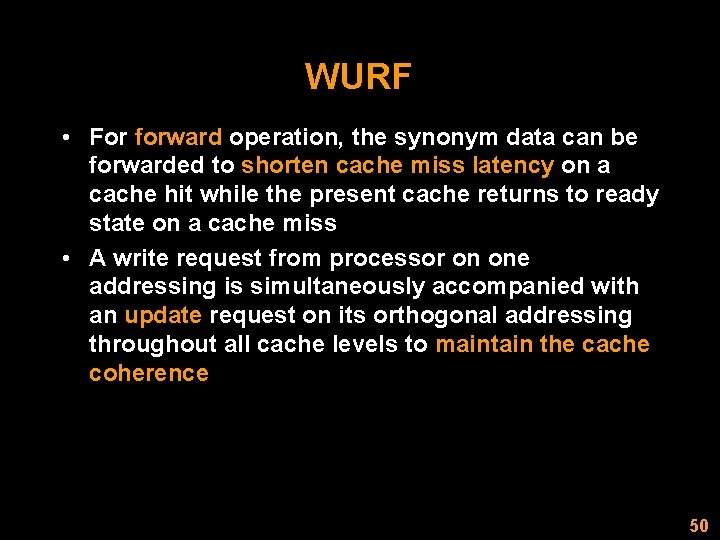

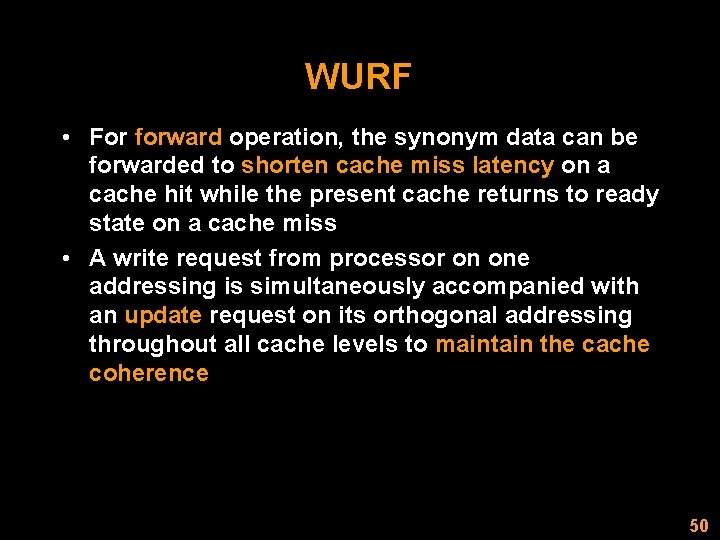

WURF
• Forward: after a miss, the request is forwarded to the orthogonal cache; on a hit there, the synonym data are forwarded back to shorten the cache-miss latency, and on a miss that cache returns to the ready state
• Write/update: a write request from the processor on one addressing is accompanied by a simultaneous update request on the orthogonal addressing at every cache level, which maintains cache coherence

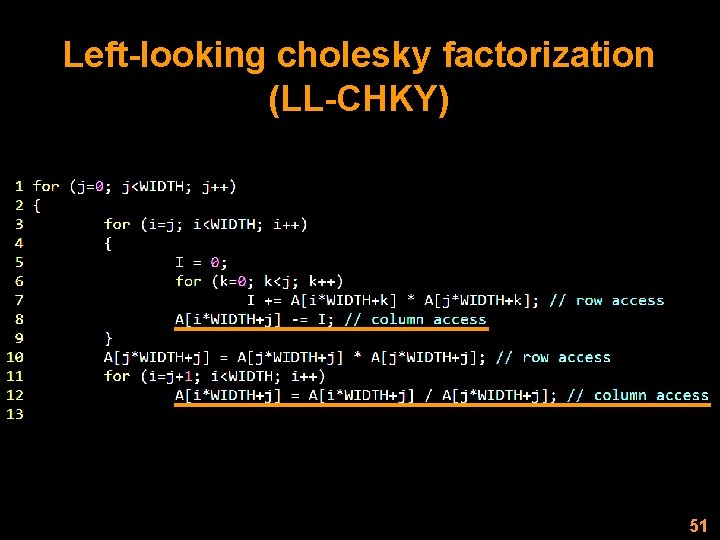

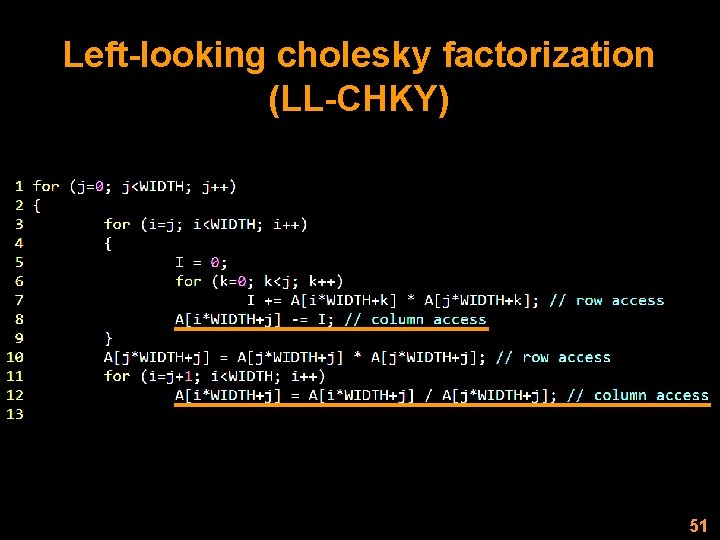

Left-looking Cholesky factorization (LL-CHKY)
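For reference, a textbook left-looking Cholesky kernel shows why this benchmark mixes access directions: each new column j of L is built from row segments of L to its left, while the results are written down column j. This is a generic sketch in plain Python lists, not the benchmark's actual source.

```python
import math


def cholesky_left_looking(a):
    """Return lower-triangular L with a == L * L^T (a symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):  # produce column j of L
        # Diagonal entry: reads row j of L to the left of column j (a row access)
        s = a[j][j] - sum(L[j][k] ** 2 for k in range(j))
        L[j][j] = math.sqrt(s)
        for i in range(j + 1, n):  # write down column j (a column access)
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = s / L[j][j]
    return L


A = [[4.0, 12.0, -16.0], [12.0, 37.0, -43.0], [-16.0, -43.0, 98.0]]
print(cholesky_left_looking(A))  # [[2.0, 0.0, 0.0], [6.0, 1.0, 0.0], [-8.0, 5.0, 3.0]]
```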

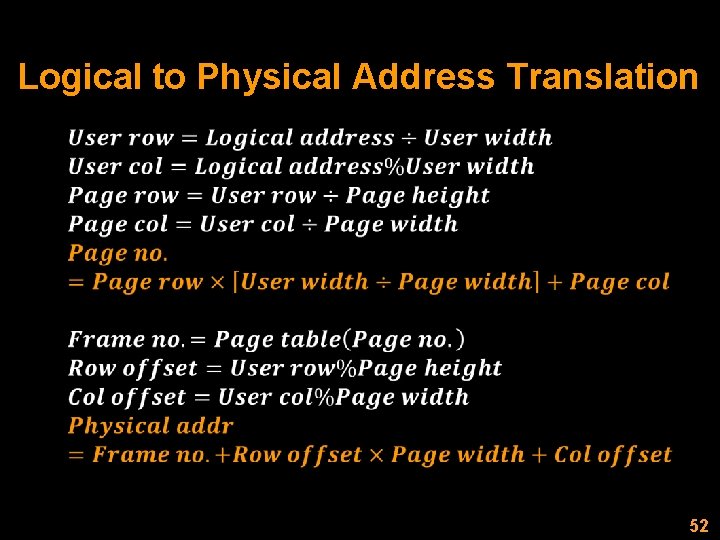

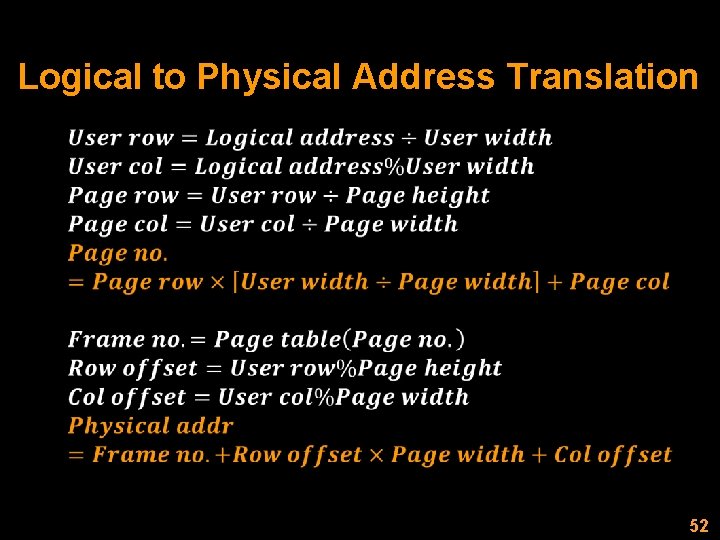

Logical to Physical Address Translation

![Virtual Dual-addressing Memory](https://slidetodoc.com/presentation_image/d3928adaf8baf3ec453100a9c2546f66/image-53.jpg)

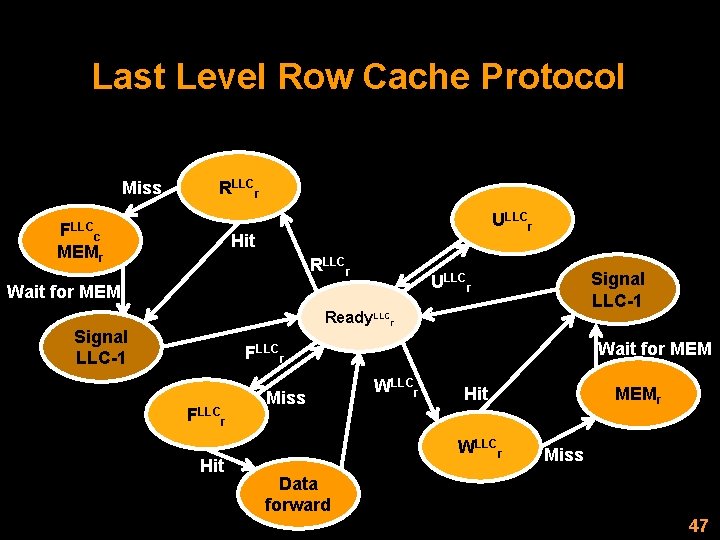

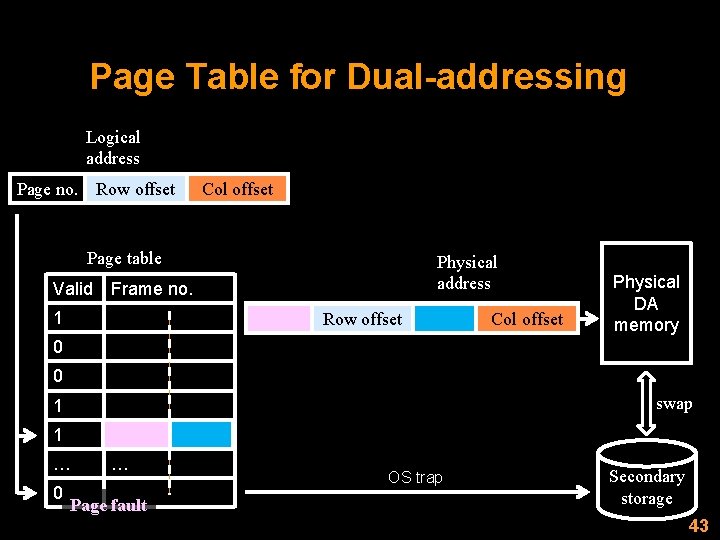

Virtual Dual-addressing Memory
• Example: a 5 × 4 array (elements 0–19) divided into 3 × 3 pages: Page [0][0], Page [0][1], Page [1][0], and Page [1][1]
• Virtual address: [3][2]
  - Page no. = [3/3][2/3] = [1][0]
  - Offset = [3%3][2%3] = [0][2]
  - Physical address = page base + offset
• Pages at the array edges are only partly filled (internal fragmentation)
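The page/offset split above is integer division and modulo in each dimension. The sketch below reproduces the [3][2] example and counts the internal fragmentation of the 5 × 4 array with 3 × 3 pages; the function name is hypothetical.

```python
import math


def split_virtual_index(row: int, col: int, page_rows: int = 3, page_cols: int = 3):
    """Split a 2D virtual index into (page number, offset within the page)."""
    page = (row // page_rows, col // page_cols)
    offset = (row % page_rows, col % page_cols)
    return page, offset


print(split_virtual_index(3, 2))  # ((1, 0), (0, 2))

ROWS, COLS, P = 5, 4, 3  # the 5 x 4 example array with 3 x 3 pages
pages = math.ceil(ROWS / P) * math.ceil(COLS / P)
unused = pages * P * P - ROWS * COLS
print(pages, unused)  # 4 16: four pages hold 36 cells for 20 elements
```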

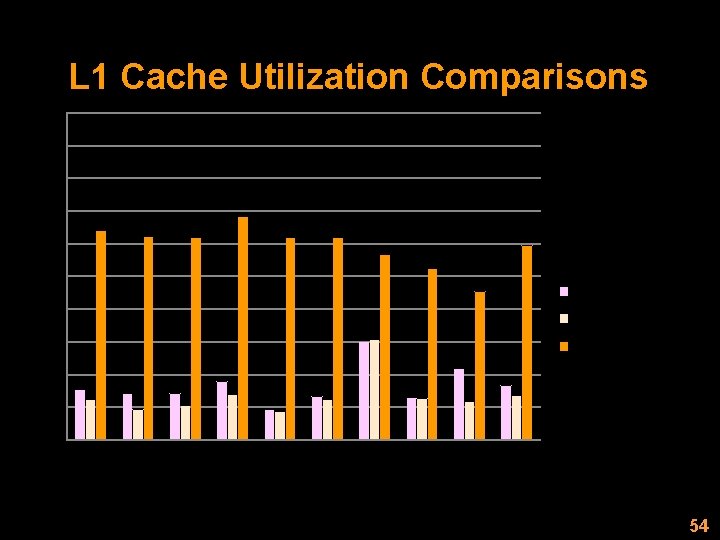

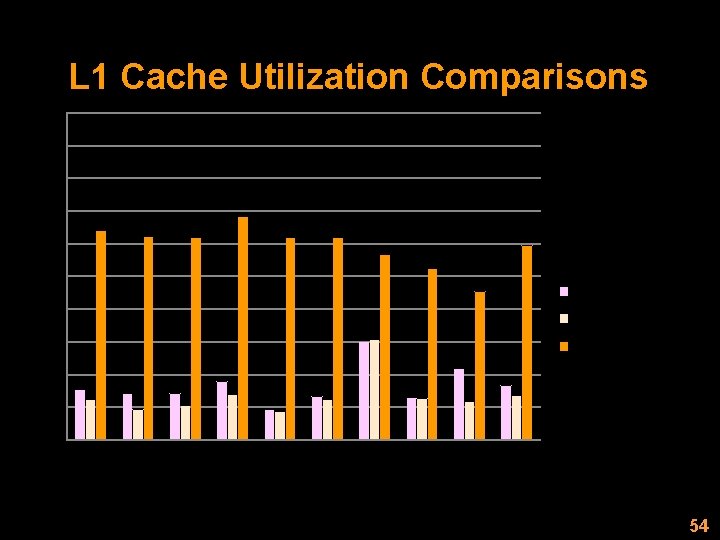

L1 Cache Utilization Comparisons
[Figure: bar chart of L1 cache utilization (0%–100%) for Baseline_1D, Morton, and DA across the nine benchmarks and the average]

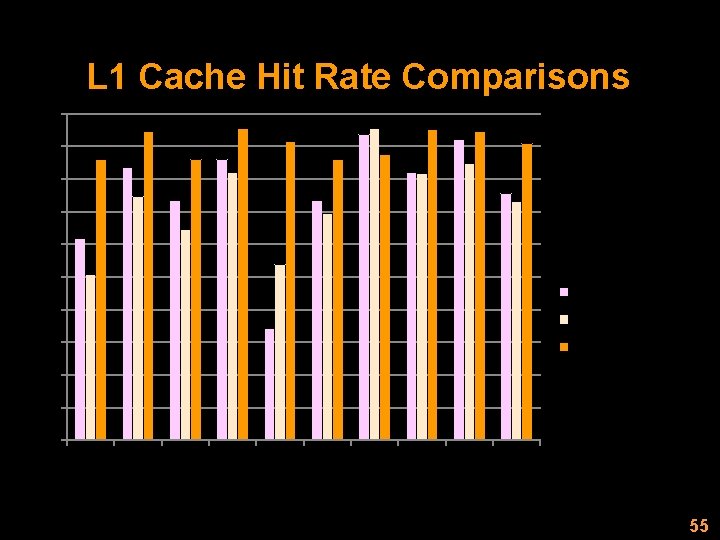

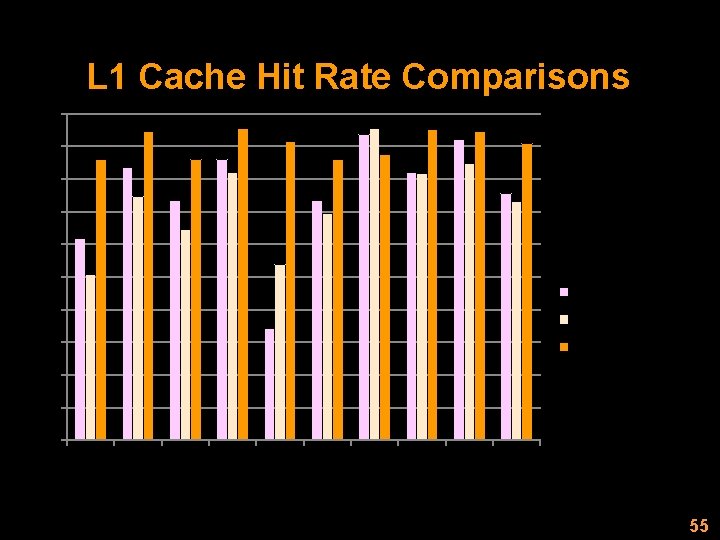

L1 Cache Hit Rate Comparisons
[Figure: bar chart of L1 cache hit rate (0%–100%) for Baseline_1D, Morton, and DA across the nine benchmarks and the average]