
DRAM Background; Fully-Buffered DIMM Memory Architectures: Understanding Mechanisms, Overheads and Scaling, Ganesh, HPCA'07. CS 8501, Mario D. Marino, 02/08

DRAM Background

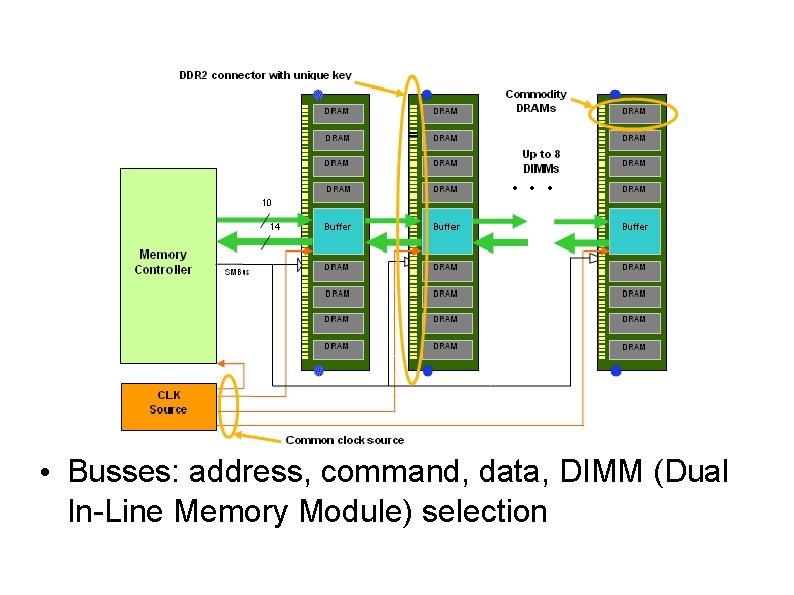

Typical memory system • Buses: address, command, data, and DIMM (Dual In-Line Memory Module) selection

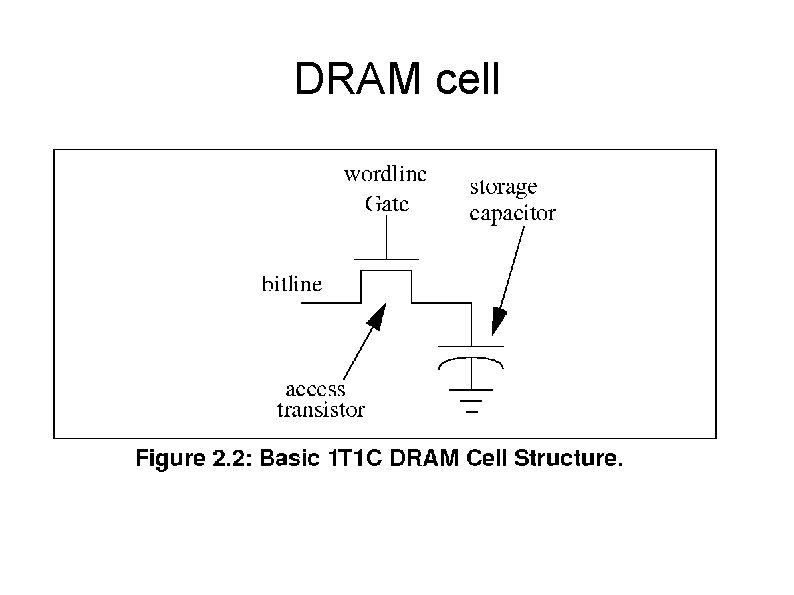

DRAM cell

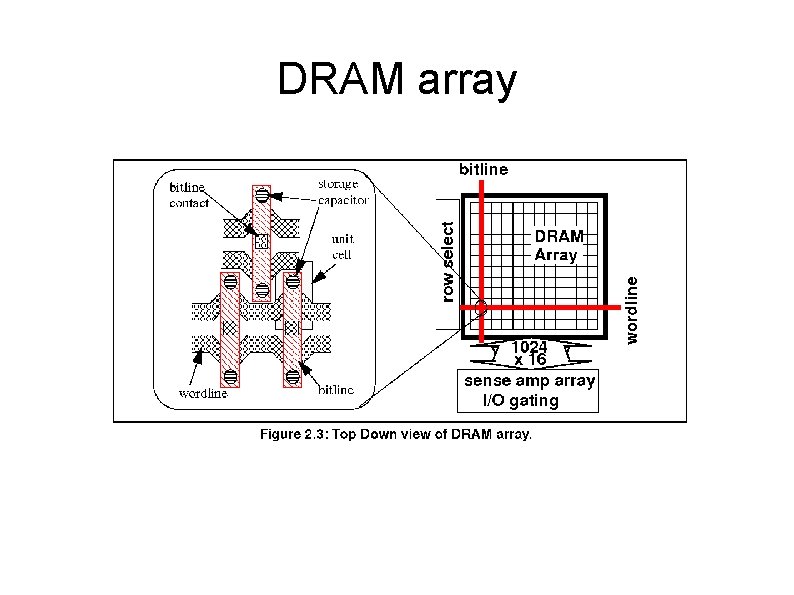

DRAM array

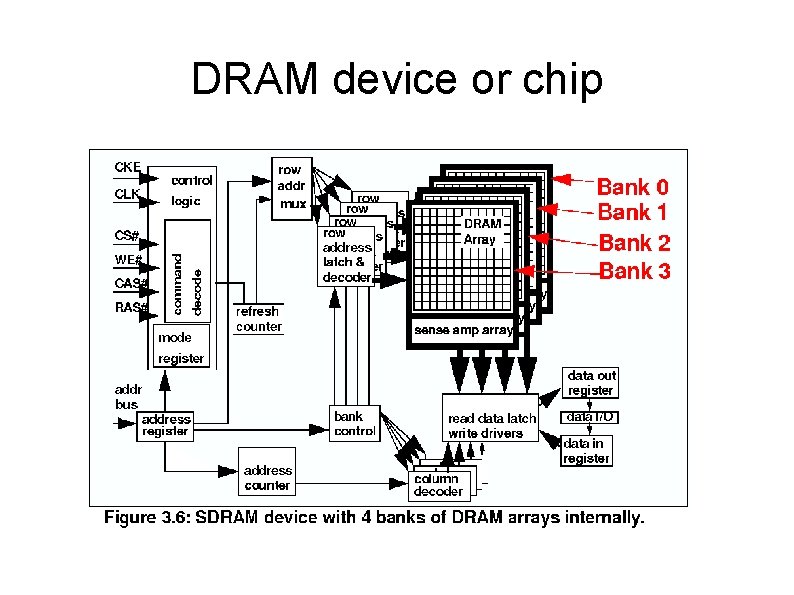

DRAM device or chip

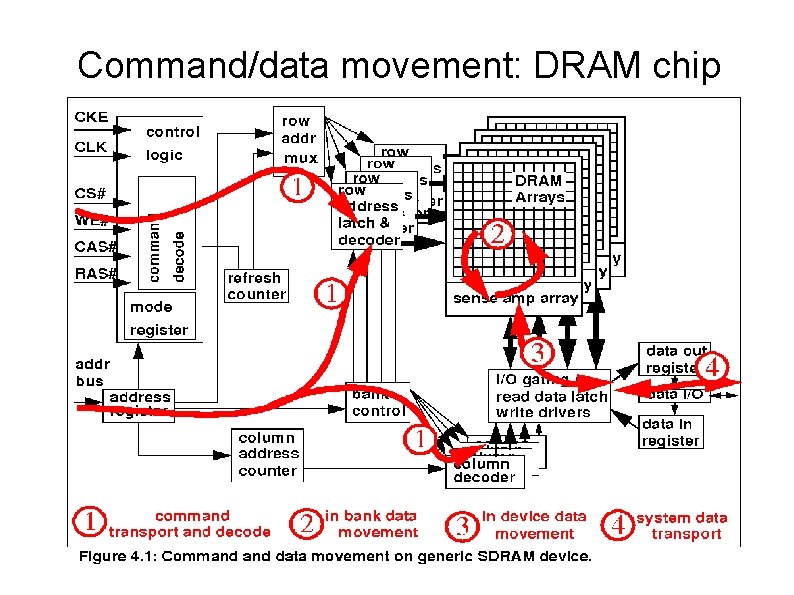

Command/data movement: DRAM chip

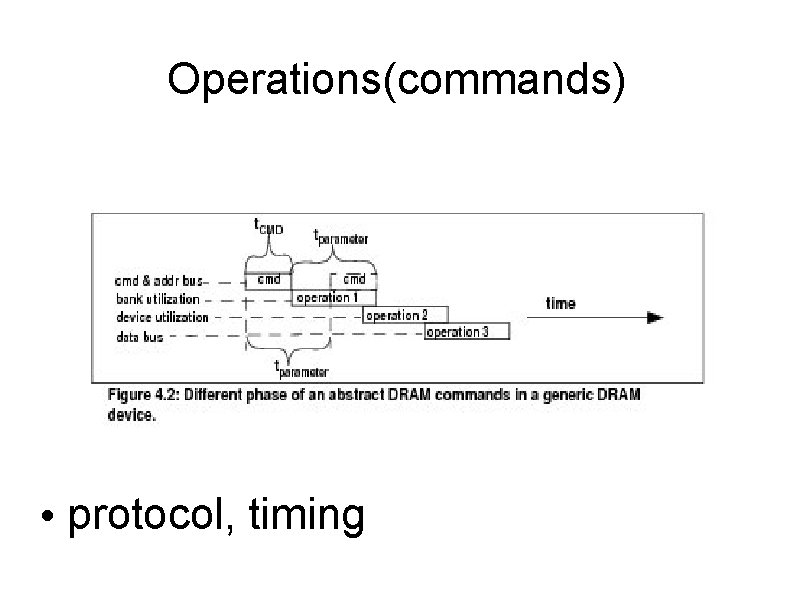

Operations (commands) • protocol, timing

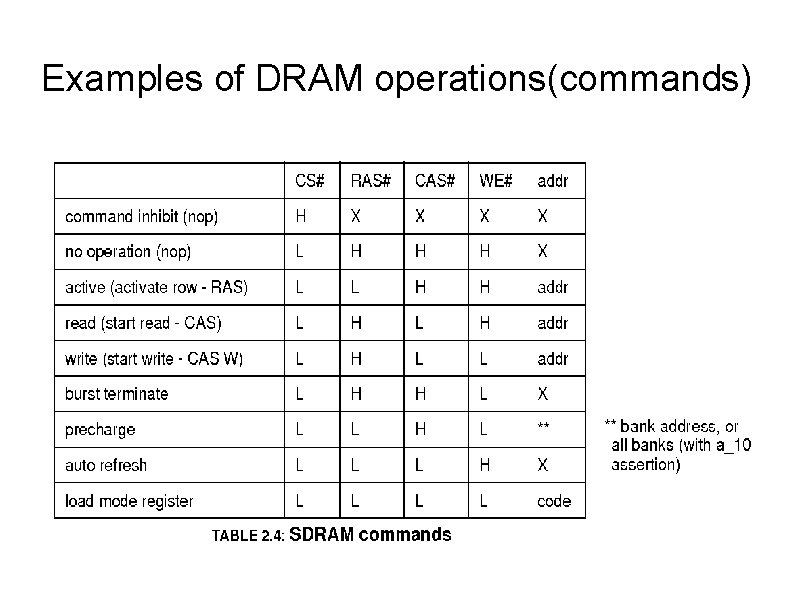

Examples of DRAM operations (commands)

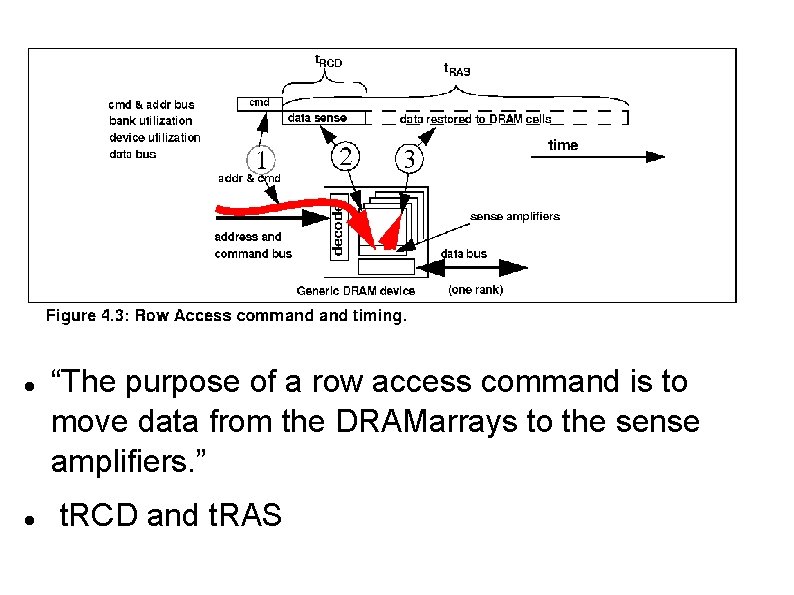

“The purpose of a row access command is to move data from the DRAM arrays to the sense amplifiers.” Timing: tRCD and tRAS

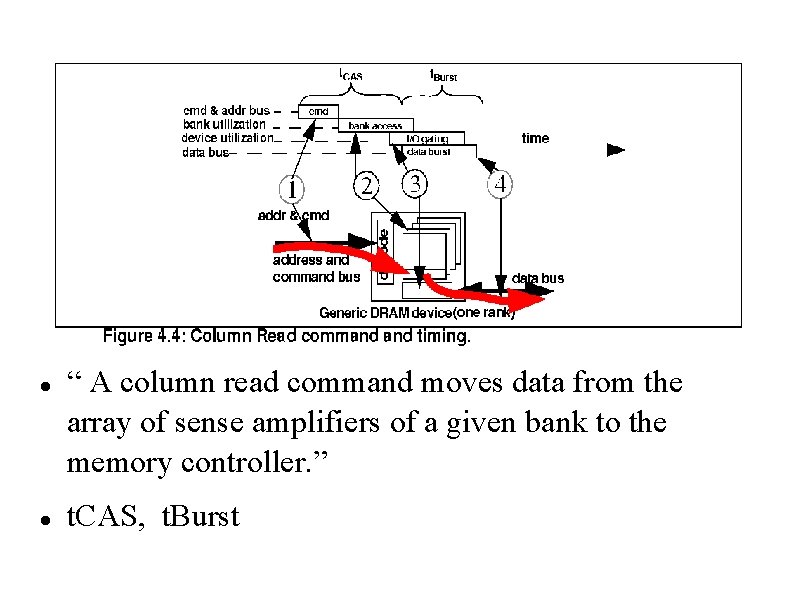

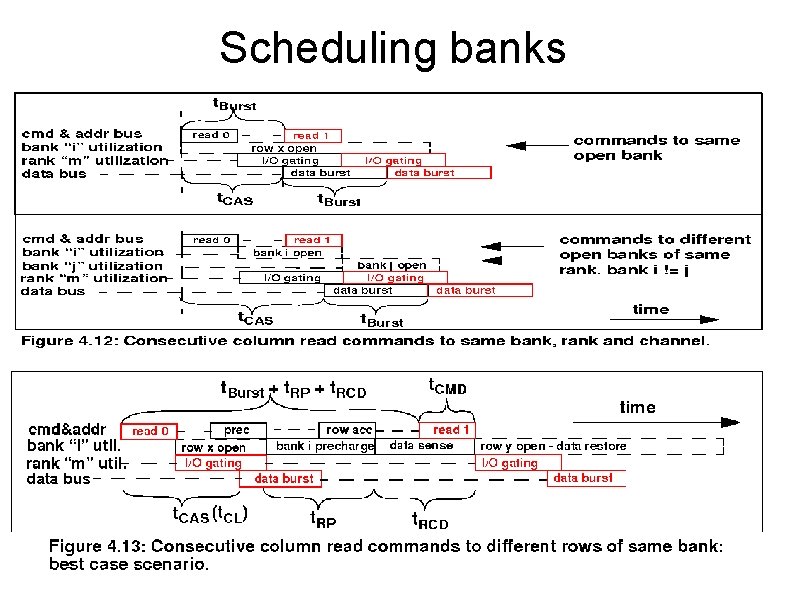
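The two timing parameters of a row access can be read as two constraints measured from the ACT (row access) command: a column command may not issue before tRCD has elapsed, and the row may not be precharged before tRAS has elapsed. A minimal sketch, assuming hypothetical cycle counts (T_RCD = 4, T_RAS = 12 are illustrative, not datasheet values):

```python
# Hypothetical timing values in memory-clock cycles (illustrative only).
T_RCD = 4   # ACT -> earliest column read/write (row is now in the sense amps)
T_RAS = 12  # ACT -> earliest PRECHARGE (cells have been restored)


def earliest_column_command(act_cycle: int) -> int:
    """Earliest cycle a column command may issue after a row access."""
    return act_cycle + T_RCD


def earliest_precharge(act_cycle: int) -> int:
    """Earliest cycle the open row may be precharged."""
    return act_cycle + T_RAS


act = 100
print(earliest_column_command(act))  # 104
print(earliest_precharge(act))       # 112
```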

“A column read command moves data from the array of sense amplifiers of a given bank to the memory controller.” Timing: tCAS, tBurst
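Combining the row access and column read timings gives the read latency seen by the controller. A minimal sketch, assuming hypothetical cycle counts and an otherwise idle bank: if the row is already in the sense amplifiers only tCAS + tBurst is paid, otherwise the row access (tRCD) comes first.

```python
# Hypothetical DDR-style timing parameters, in memory-clock cycles.
T_RCD = 4
T_CAS = 4
T_BURST = 4  # e.g. a burst of 8 beats on a double-data-rate bus


def read_latency(row_open: bool) -> int:
    """Cycles from the first command to the last data beat of one read.

    row_open=True : the requested row is already in the sense amplifiers.
    row_open=False: the bank is precharged, so an ACT must come first.
    """
    column_part = T_CAS + T_BURST
    return column_part if row_open else T_RCD + column_part


print(read_latency(row_open=True))   # 8
print(read_latency(row_open=False))  # 12
```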

Precharge: a separate phase that is a prerequisite for the subsequent phases of a row access operation (bitlines reset to Vcc/2 or Vcc); timing: tRP
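Because precharge must complete before a new row can be activated, a request to a different row of a busy bank pays all three phases. A minimal sketch, assuming hypothetical cycle counts and that tRAS has already elapsed for the open row; it also shows the minimum spacing between two row activations of the same bank (tRC = tRAS + tRP).

```python
# Hypothetical timing parameters, in memory-clock cycles.
T_RP = 4     # precharge: bitlines restored before the next ACT
T_RCD = 4
T_CAS = 4
T_BURST = 4
T_RAS = 12


def conflict_read_latency() -> int:
    """Another row is open in the bank: PRECHARGE, then ACT, then column read."""
    return T_RP + T_RCD + T_CAS + T_BURST


def row_cycle_time() -> int:
    """Minimum time between two ACTs to the same bank (tRC = tRAS + tRP)."""
    return T_RAS + T_RP


print(conflict_read_latency())  # 16
print(row_cycle_time())         # 16
```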

Organization, access, protocols

Logical channel: a set of physical channels connected to the same memory controller

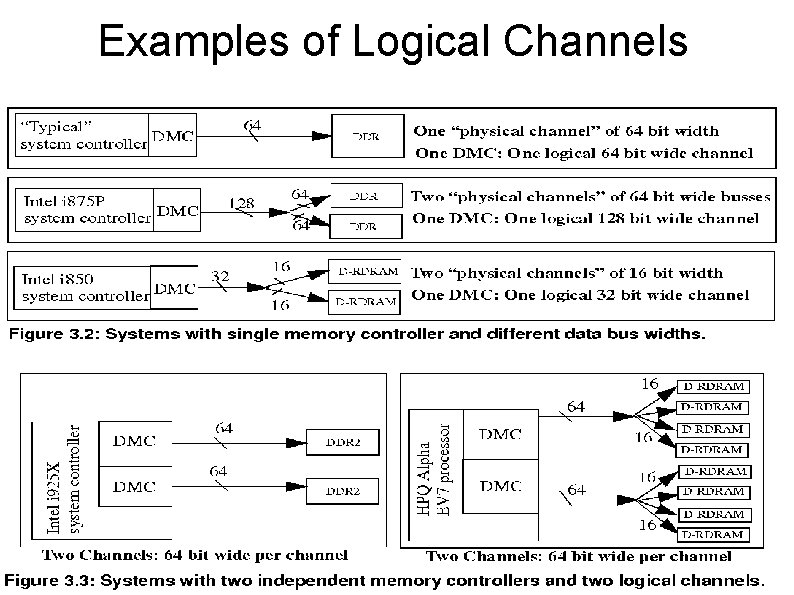

Examples of Logical Channels

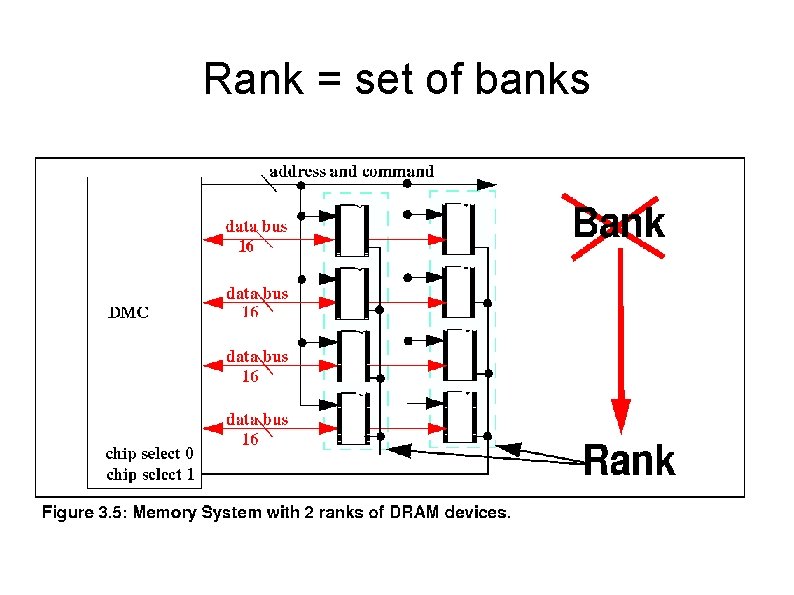

Rank = set of banks

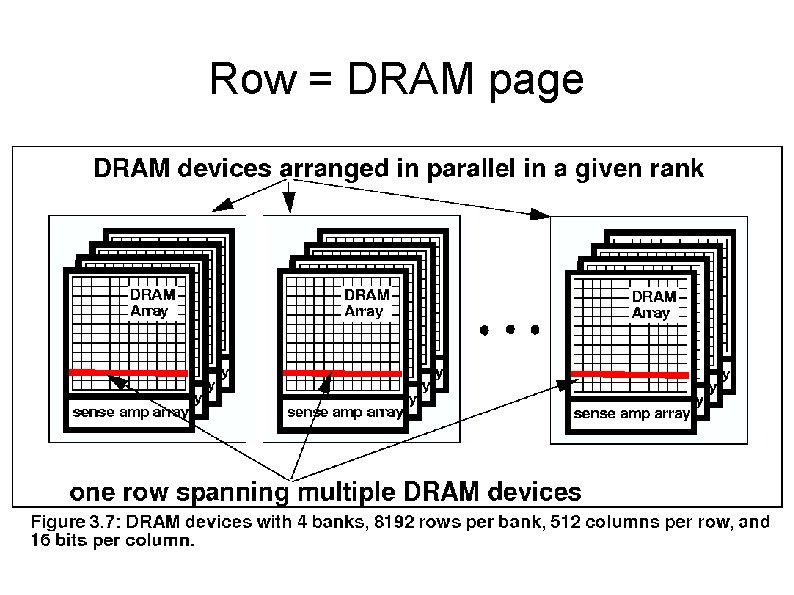

Row = DRAM page

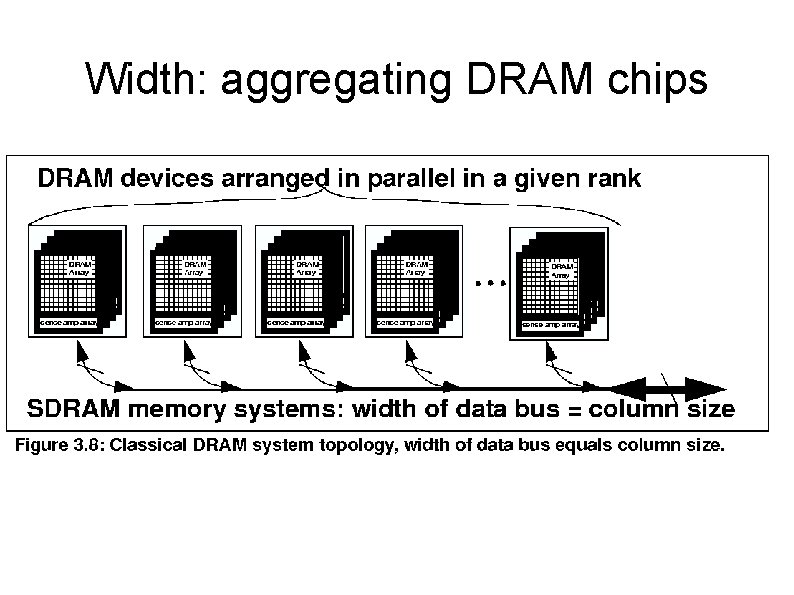

Width: aggregating DRAM chips

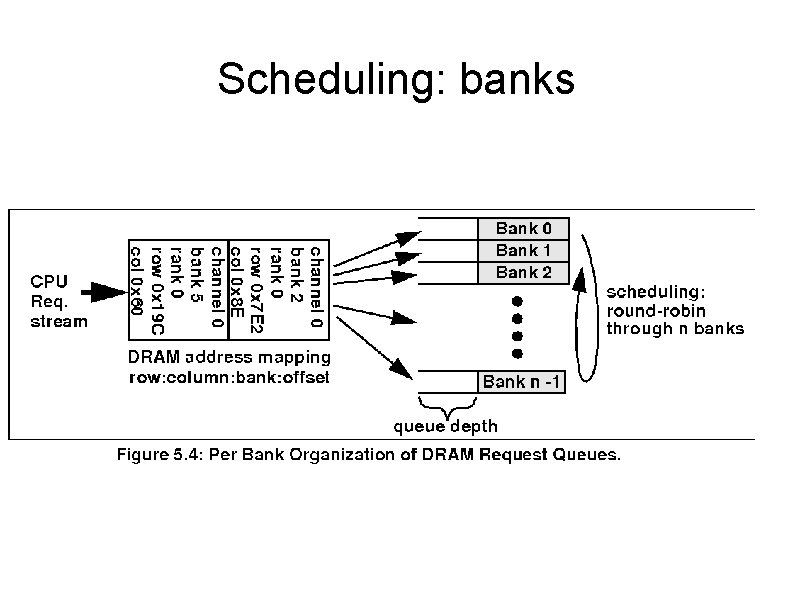
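Aggregating chips to fill the data bus is simple arithmetic: the number of devices in a rank is the bus width divided by the device width. A minimal sketch, assuming a 64-bit data bus without ECC and hypothetical device sizes:

```python
def chips_per_rank(bus_width_bits: int, device_width_bits: int) -> int:
    """Number of DRAM devices operated in lockstep to fill the data bus."""
    if bus_width_bits % device_width_bits:
        raise ValueError("bus width must be a multiple of the device width")
    return bus_width_bits // device_width_bits


def rank_capacity_bytes(bus_width_bits: int, device_width_bits: int,
                        device_capacity_bits: int) -> int:
    """Capacity of one rank: every device contributes its full capacity."""
    return chips_per_rank(bus_width_bits, device_width_bits) * device_capacity_bits // 8


# A 64-bit data bus built from x8 or x4 devices
print(chips_per_rank(64, 8))  # 8
print(chips_per_rank(64, 4))  # 16
# 8 x8 devices of 1 Gbit each -> 1 GiB per rank
print(rank_capacity_bytes(64, 8, 2**30) // 2**30)  # 1
```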

Scheduling: banks


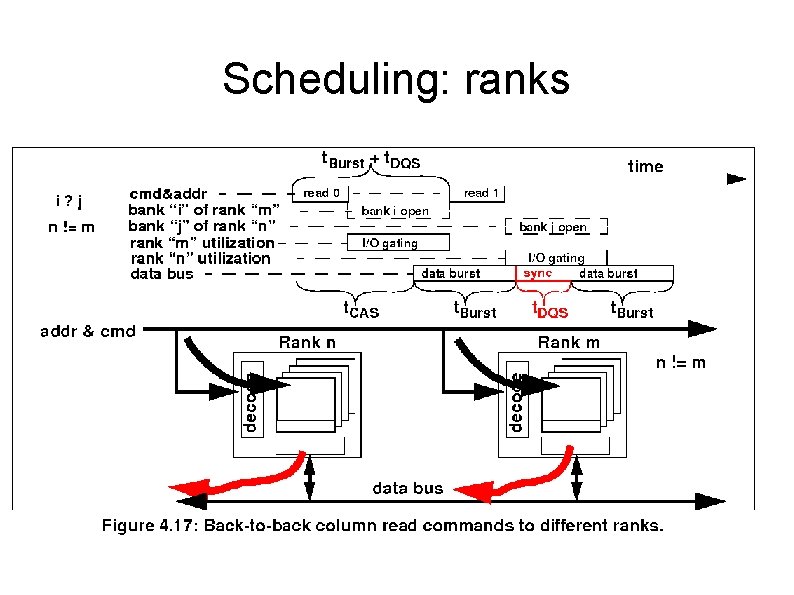

Scheduling: ranks

Open page vs. close page
Open-page: data access to and from cells requires separate row and column commands
– Favors accesses to the same row (sense amps stay open)
– Typical of general-purpose computers (desktops/laptops)
Close-page:
– Suited to an intense stream of requests; favors random accesses
– Large multiprocessor/multicore systems
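The trade-off between the two policies can be sketched for a single bank, assuming hypothetical cycle counts, no overlap between requests, and (for close-page) that the precharge after each access is hidden in idle time before the next request. Open-page wins on a stream that stays in one row; close-page wins when every request goes to a different row.

```python
from typing import Iterable, Optional

T_RP, T_RCD, T_CAS, T_BURST = 4, 4, 4, 4  # hypothetical cycle counts


def total_latency(rows: Iterable[int], policy: str) -> int:
    """Sum of per-request read latencies for one bank under a page policy."""
    open_row: Optional[int] = None
    total = 0
    for row in rows:
        if policy == "open":
            if open_row == row:          # row hit: column access only
                total += T_CAS + T_BURST
            elif open_row is None:       # bank idle: activate, then read
                total += T_RCD + T_CAS + T_BURST
            else:                        # row conflict: precharge first
                total += T_RP + T_RCD + T_CAS + T_BURST
            open_row = row               # row stays open after the access
        elif policy == "close":
            # Assumption: the row is precharged right after each access and
            # that precharge is hidden, so every request is ACT + column read.
            total += T_RCD + T_CAS + T_BURST
        else:
            raise ValueError(f"unknown policy: {policy}")
    return total


local = [7, 7, 7, 7]    # same row every time
random_ = [1, 2, 3, 4]  # a different row every time
print(total_latency(local, "open"), total_latency(local, "close"))      # 36 48
print(total_latency(random_, "open"), total_latency(random_, "close"))  # 60 48
```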

Available Parallelism in DRAM System Organization: Channel
• Pros: performance; different logical channels have independent memory controllers and scheduling strategies
• Cons: number of pins and power to deliver; smart but not adaptive firmware

Available Parallelism in DRAM System Organization: Rank
• Pros: accesses can proceed in parallel in different ranks (bus availability permitting)
• Cons: rank-to-rank switching penalties at high frequency; globally synchronous DRAM (global clock)

Available Parallelism in DRAM System Organization: Bank, Row, Column
• Bank: different banks can be accessed in parallel (bus availability permitting)
• Row: only 1 row per bank can be active at any time
• Column: depends on management (close-page / open-page)
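Exploiting channel, rank and bank parallelism depends on how the controller maps a physical address onto these resources. A minimal sketch of one possible mapping; the field order, widths and 64-byte line size are hypothetical assumptions, not a mapping taken from the slides. Placing the channel bits lowest spreads consecutive cache lines across channels, and placing the column bits next keeps a streaming access inside one open row (open-page friendly).

```python
from typing import NamedTuple


class DramAddress(NamedTuple):
    channel: int
    rank: int
    bank: int
    row: int
    column: int


# Hypothetical layout: fields listed from least to most significant,
# above the byte offset inside a 64-byte cache line.
LINE_OFFSET_BITS = 6
FIELDS = [("channel", 1), ("column", 7), ("bank", 3), ("rank", 1), ("row", 14)]


def decode(phys_addr: int) -> DramAddress:
    """Slice a physical address into channel/rank/bank/row/column fields."""
    addr = phys_addr >> LINE_OFFSET_BITS
    parts = {}
    for name, width in FIELDS:
        parts[name] = addr & ((1 << width) - 1)
        addr >>= width
    return DramAddress(**parts)


for a in (0, 64, 128, 1 << 14):
    print(hex(a), decode(a))
```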

Paper: Fully-Buffered DIMM Memory Architectures: Understanding Mechanisms, Overheads and Scaling, Ganesh, HPCA'07

Issues
• Parallel bus scaling: frequency, width, length, depth (many hops => latency)
• Number of memory controllers increased (CPUs, GPUs)
– #DIMMs/channel (depth) decreases: 4 DIMMs/channel in DDR, 2 DIMMs/channel in DDR2, 1 DIMM/channel in DDR3
• Scheduling

Contributions
• Applied DDR-based memory controller policies to FBDIMM memory
• Performance evaluation
• Exploit FBDIMM depth: rank (DIMM) parallelism
• Latency and bandwidth for FBDIMM and DDR
– High utilization of the channels, FBDIMM: 7% in latency, 10%
– Low utilization of the channels: 25% in latency, 10% in bandwidth

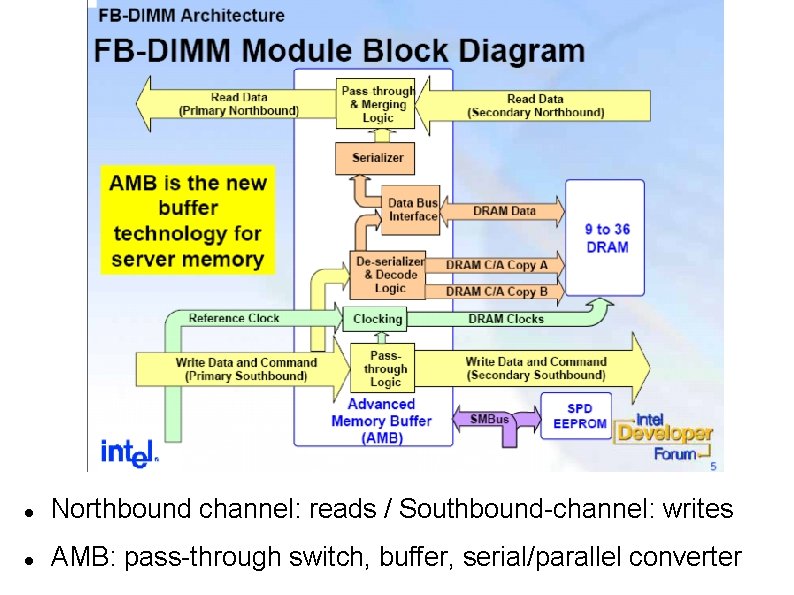

Northbound channel: reads; southbound channel: commands and writes
AMB (Advanced Memory Buffer): pass-through switch, buffer, serial/parallel converter
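Because each AMB is a pass-through switch on a daisy chain, a read to a deeper DIMM crosses more AMBs on both the southbound (command) and northbound (data) paths. A minimal sketch of idle-system read latency versus DIMM position; every latency value is a hypothetical assumption, not a figure from the paper or the FBDIMM standard, and queueing and any latency equalization by the controller are ignored.

```python
# Hypothetical latencies in ns (illustrative only).
T_SOUTH_FRAME = 3.0   # serialize the command frame on the southbound link
T_AMB_HOP = 2.0       # pass-through delay of one AMB, per direction
T_DRAM = 30.0         # DRAM access at the target DIMM
T_NORTH_FRAME = 6.0   # serialize the read data frames on the northbound link


def read_latency_ns(dimm_index: int) -> float:
    """Idle read latency for the DIMM at position dimm_index (0 = nearest).

    The command passes through dimm_index AMBs going south, and the data
    passes back through the same number of AMBs going north.
    """
    hops = dimm_index
    return (T_SOUTH_FRAME + hops * T_AMB_HOP + T_DRAM
            + hops * T_AMB_HOP + T_NORTH_FRAME)


for d in range(4):
    print(d, read_latency_ns(d))  # grows by 2 * T_AMB_HOP per extra DIMM
```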

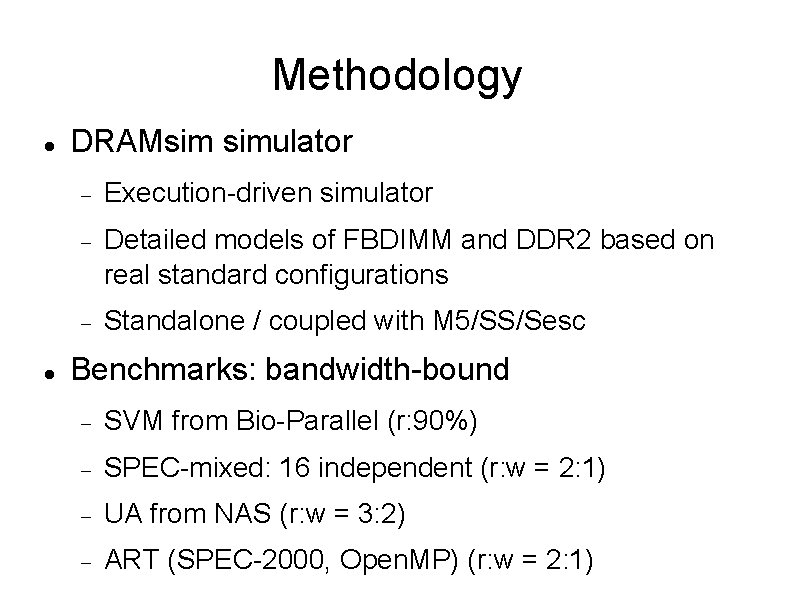

Methodology
• DRAMsim simulator: execution-driven, with detailed models of FBDIMM and DDR2 based on real standard configurations; standalone or coupled with M5/SS/Sesc
• Bandwidth-bound benchmarks:
– SVM from Bio-Parallel (90% reads)
– SPEC-mixed: 16 independent (r:w = 2:1)
– UA from NAS (r:w = 3:2)
– ART (SPEC-2000, OpenMP) (r:w = 2:1)

Methodology (cont.)
• Different scheduling policies: greedy, OBF, most/last pending and RIFF
• 16-way CMP, 8 MB L2
• Multi-threaded traces gathered with CMP$im
• SPEC traces using SimpleScalar with 1 MB L2, in-order core
• 1 rank/DIMM

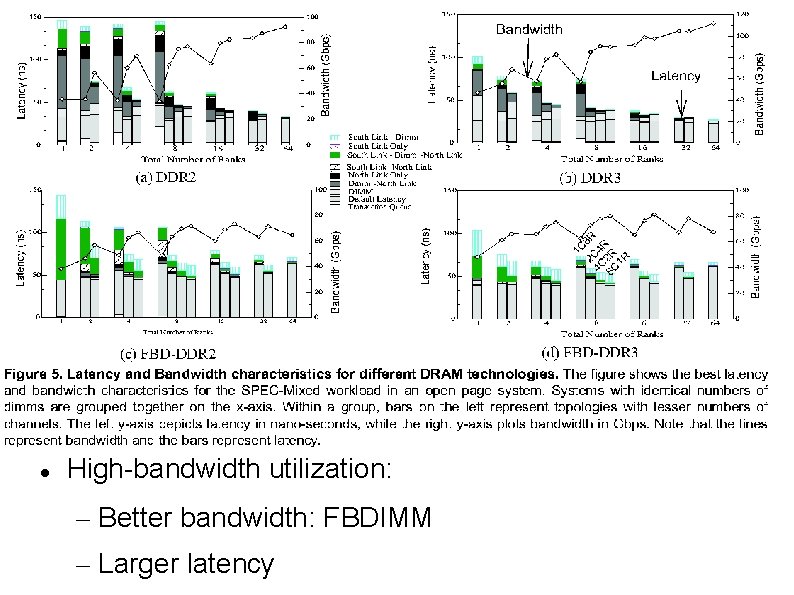

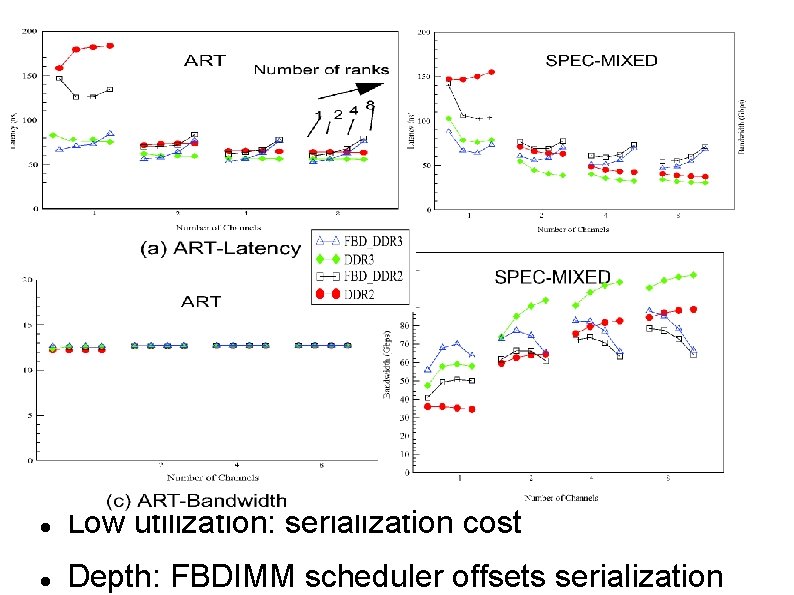

High-bandwidth utilization:
– Better bandwidth: FBDIMM
– Larger latency

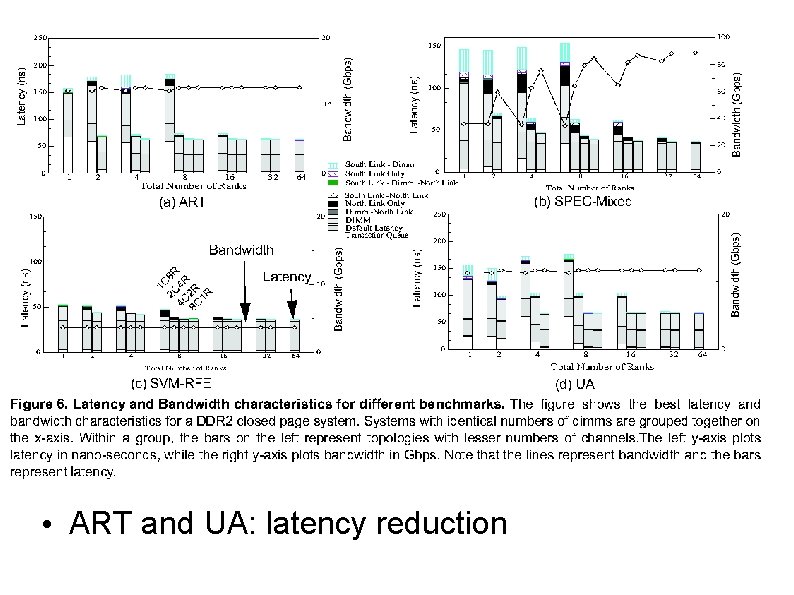

• ART and UA: latency reduction

Low utilization: serialization cost
Depth: the FBDIMM scheduler offsets the serialization cost

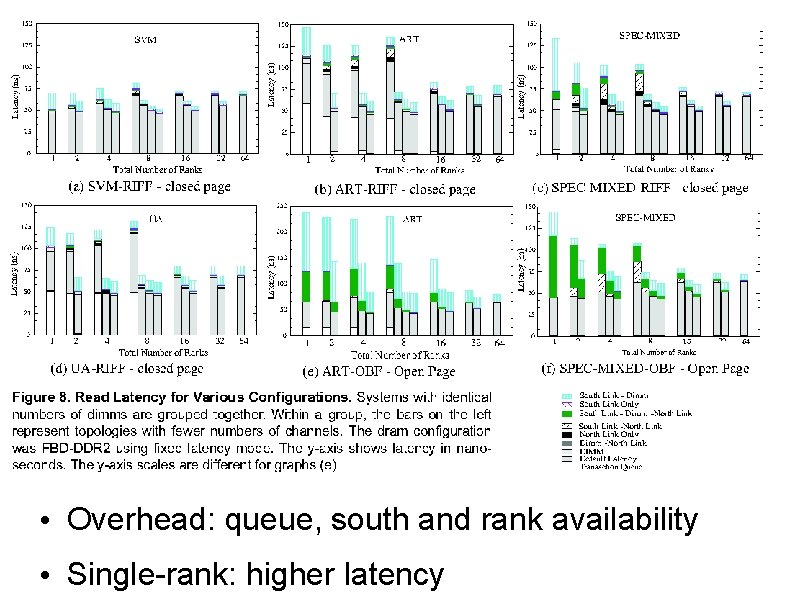

• Overhead: queueing, southbound-channel availability and rank availability
• Single rank: higher latency

Scheduling
• Best: RIFF, which gives reads priority over writes
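The read-over-write priority can be sketched as a queue selection rule: the oldest pending read is served first, and writes are served only when no read is waiting. This is a minimal sketch under that single assumption; the `Request` type and `pick_next` function are hypothetical, and a real scheduler would also check bank and bus availability and bound write starvation.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional


@dataclass
class Request:
    arrival: int  # cycle the request entered the queue
    kind: str     # "read" or "write"
    addr: int


def pick_next(queue: Deque[Request]) -> Optional[Request]:
    """Read-first selection: oldest read wins; writes only when no read waits."""
    reads = [r for r in queue if r.kind == "read"]
    candidates = reads if reads else list(queue)
    if not candidates:
        return None
    chosen = min(candidates, key=lambda r: r.arrival)
    queue.remove(chosen)
    return chosen


queue = deque([Request(0, "write", 0x100),
               Request(1, "read", 0x200),
               Request(2, "read", 0x300)])
order = [pick_next(queue).addr for _ in range(3)]
print([hex(a) for a in order])  # ['0x200', '0x300', '0x100']
```

The older write at cycle 0 waits until both reads have been served, which is exactly the latency benefit for reads (and the cost for writes) of this policy.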

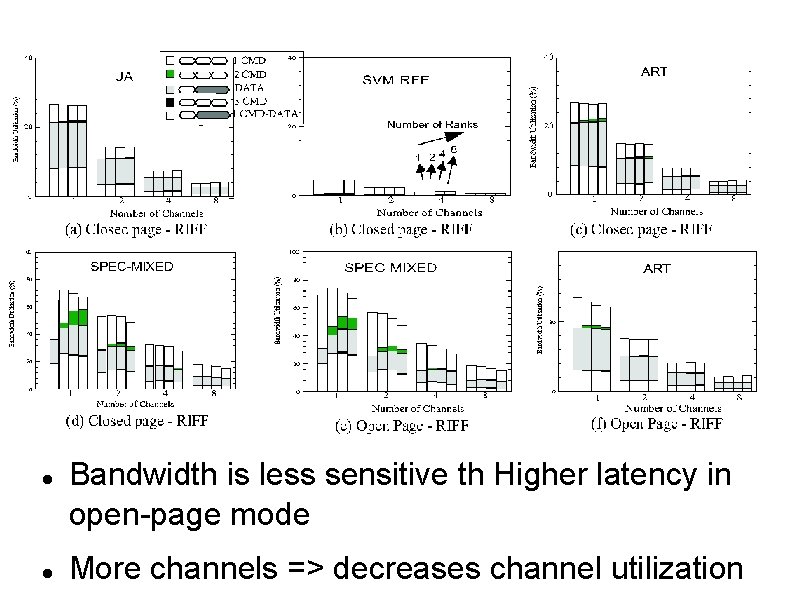

• Bandwidth is less sensitive th…
• Higher latency in open-page mode
• More channels => lower channel utilization
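Spreading the same demand over more channels lowers the utilization of each channel. A minimal sketch, assuming the load is evenly distributed and using hypothetical numbers (6 GB/s of demand, 8 GB/s peak per channel):

```python
def channel_utilization(demand_gbs: float, channels: int,
                        peak_per_channel_gbs: float) -> float:
    """Fraction of each channel's peak bandwidth in use, assuming an even spread.

    Utilization saturates at 1.0 when demand exceeds the aggregate peak.
    """
    return min(1.0, demand_gbs / (channels * peak_per_channel_gbs))


for n in (1, 2, 4):
    print(n, channel_utilization(6.0, n, 8.0))  # 0.75, 0.375, 0.1875
```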
