DrTM: Fast In-memory Transaction Processing using RDMA and HTM

- Slides: 74

DrTM: Fast In-memory Transaction Processing using RDMA and HTM
XINGDA WEI, JIAXIN SHI, YANZHE CHEN, RONG CHEN, HAIBO CHEN
Institute of Parallel and Distributed Systems, Shanghai Jiao Tong University, China

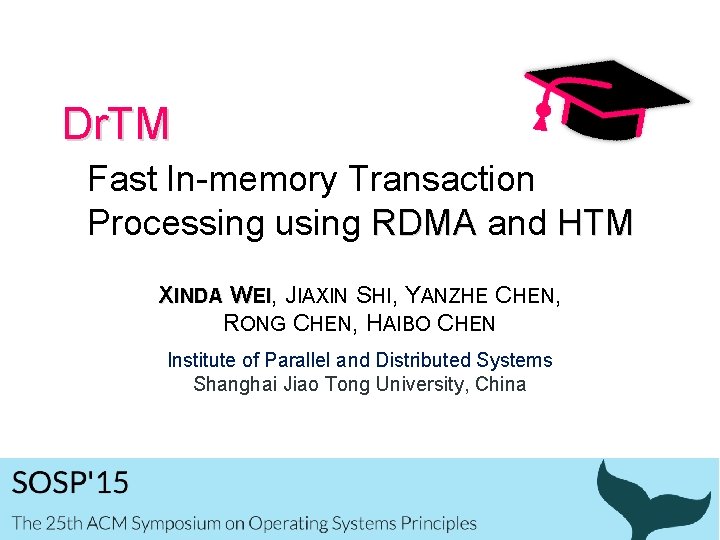

Transactions: A Key Pillar for Many Systems
- Example volumes: $9.3 billion/day, 9.56 million tickets/day, 11.6 million payments/day
- These demand speedy distributed transactions over large data volumes

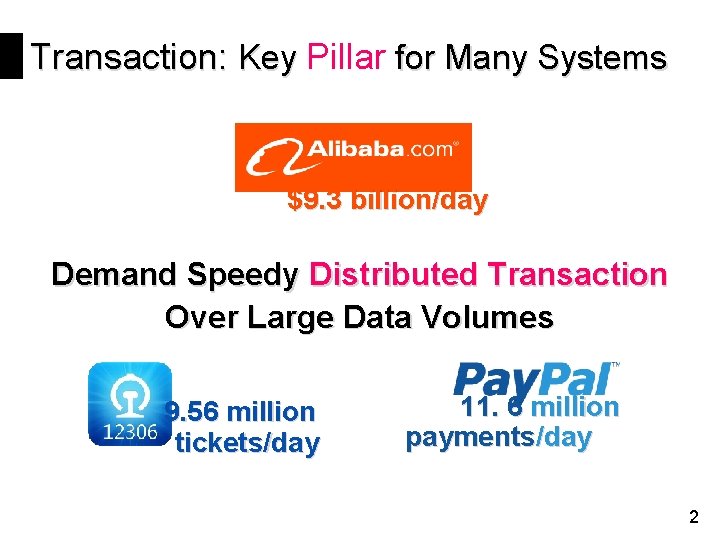

High COST for Distributed TXs
- Many scalable systems have low performance
  - Usually tens to hundreds of thousands of TXs/second
  - High COST¹ (the configuration that outperforms a single thread)
  - e.g., H-Store and Calvin (SIGMOD'12)
- Emerging speedy TX systems do not scale out
  - Achieve over hundreds of thousands of TXs/second
  - e.g., Silo (SOSP'13) and DBX (EuroSys'14)
- Dilemma: single-node performance vs. scale-out

¹ "Scalability! But at what COST?", HotOS 2015

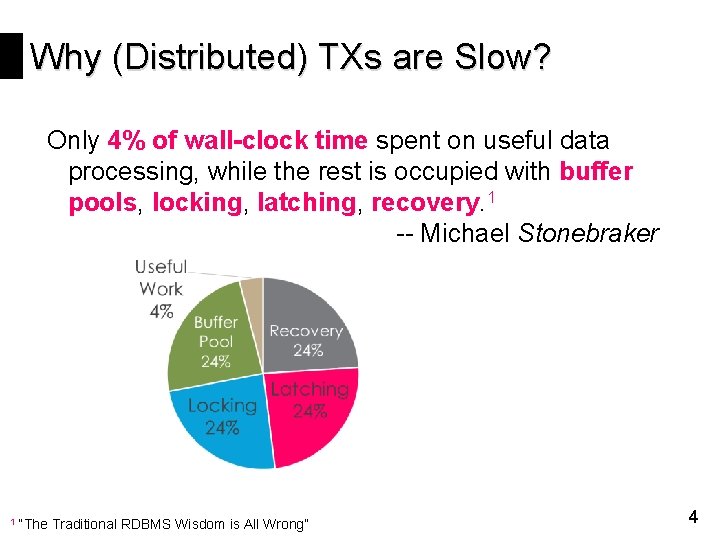

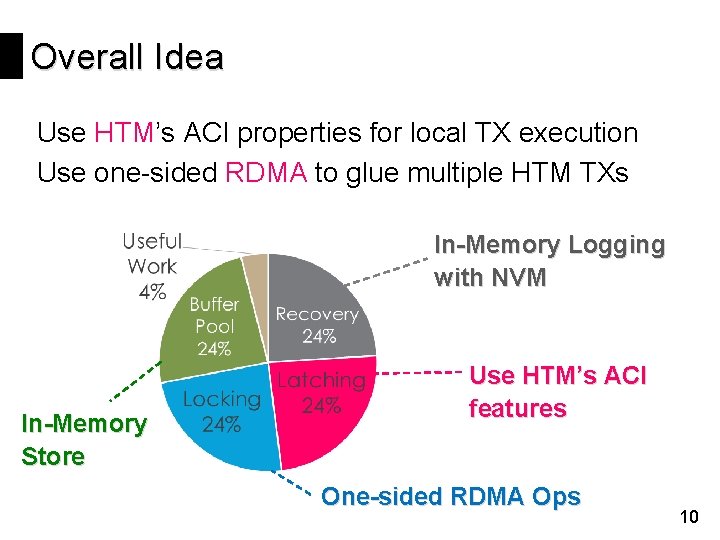

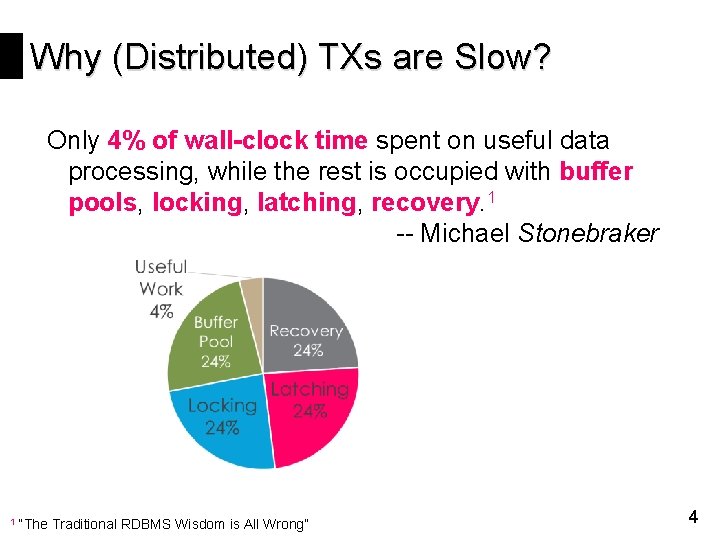

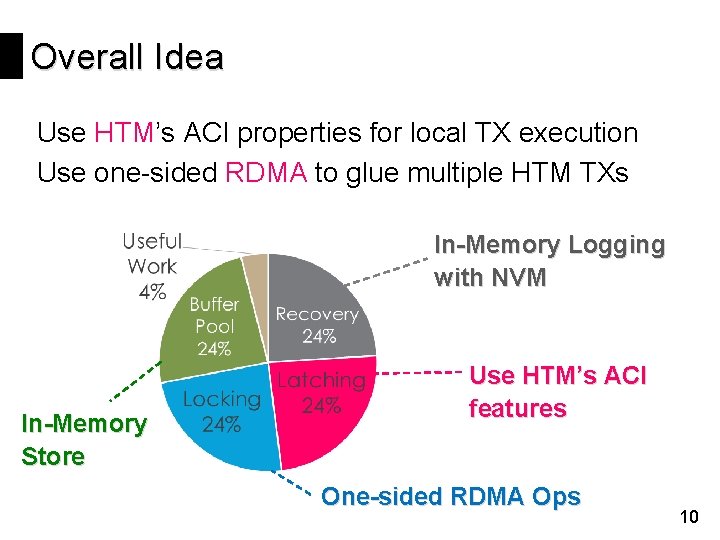

Why Are (Distributed) TXs Slow?
"Only 4% of wall-clock time spent on useful data processing, while the rest is occupied with buffer pools, locking, latching, recovery."¹ (Michael Stonebraker)

¹ "The Traditional RDBMS Wisdom is All Wrong"

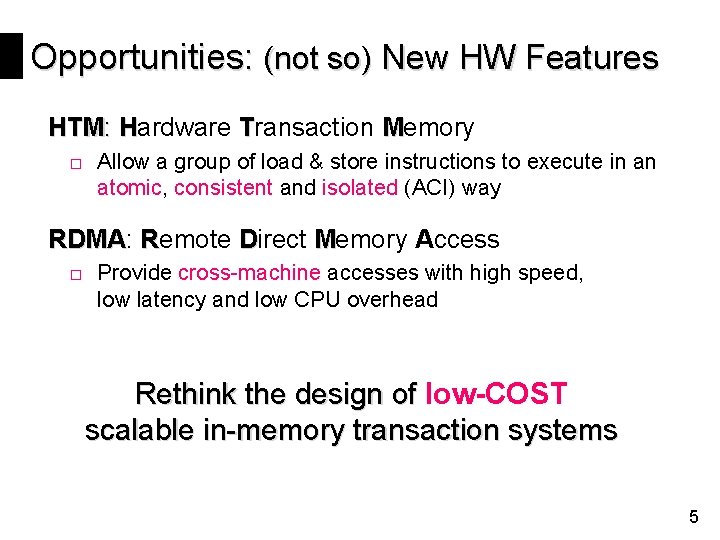

Opportunities: (Not So) New HW Features
- HTM: Hardware Transactional Memory
  - Allows a group of load & store instructions to execute in an atomic, consistent and isolated (ACI) way
- RDMA: Remote Direct Memory Access
  - Provides cross-machine accesses with high speed, low latency and low CPU overhead
- Rethink the design of low-COST scalable in-memory transaction systems

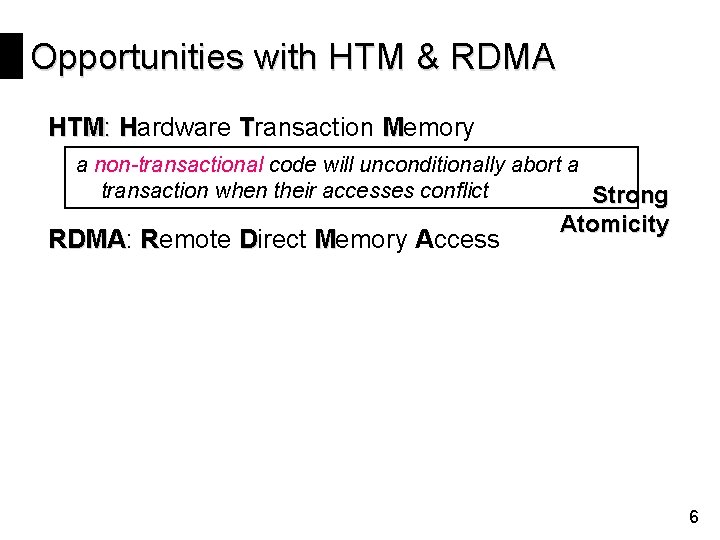

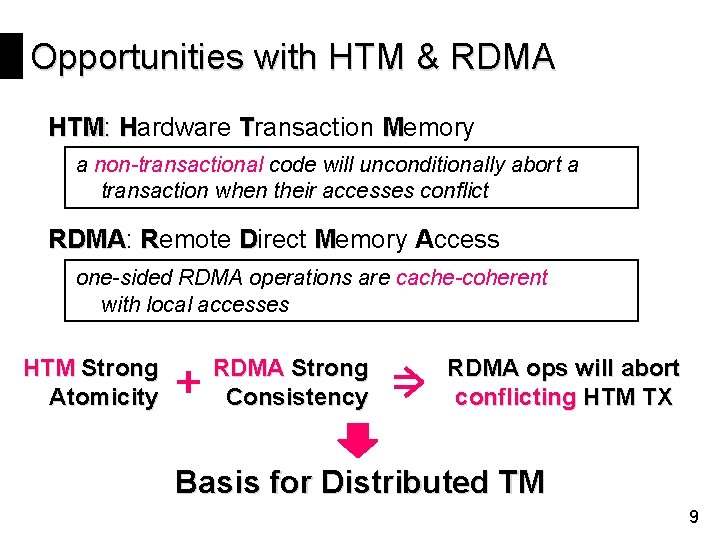

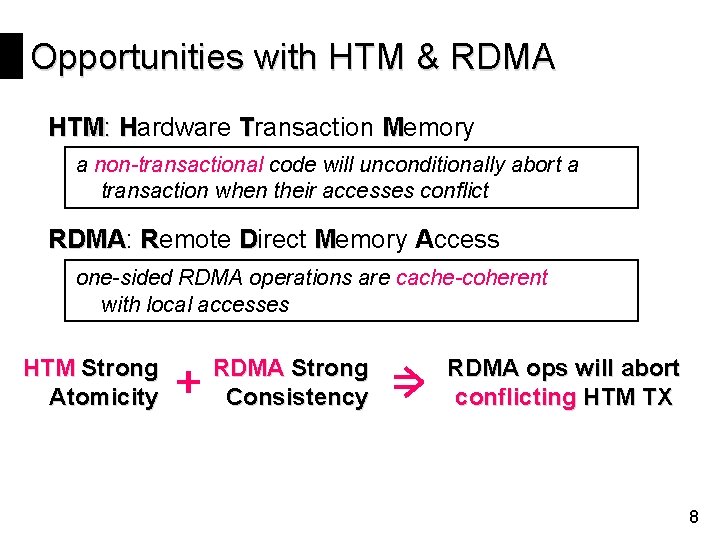


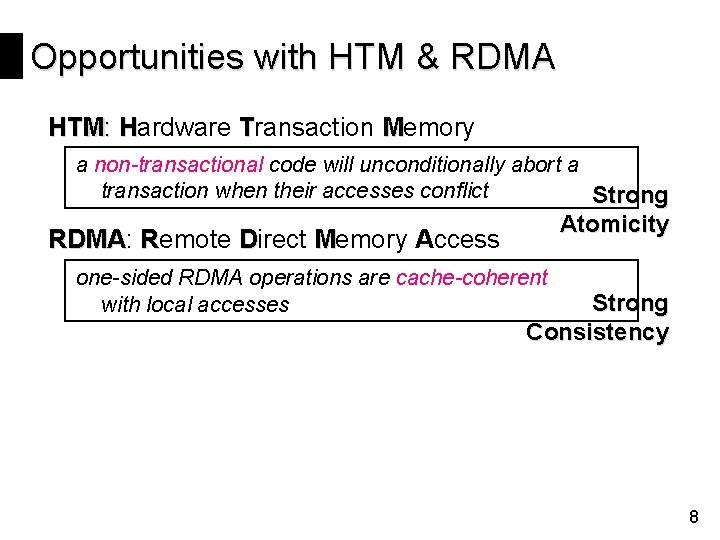


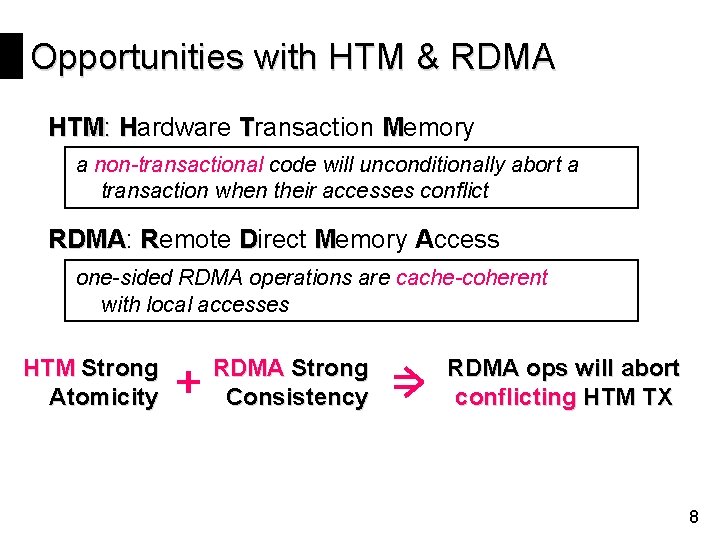

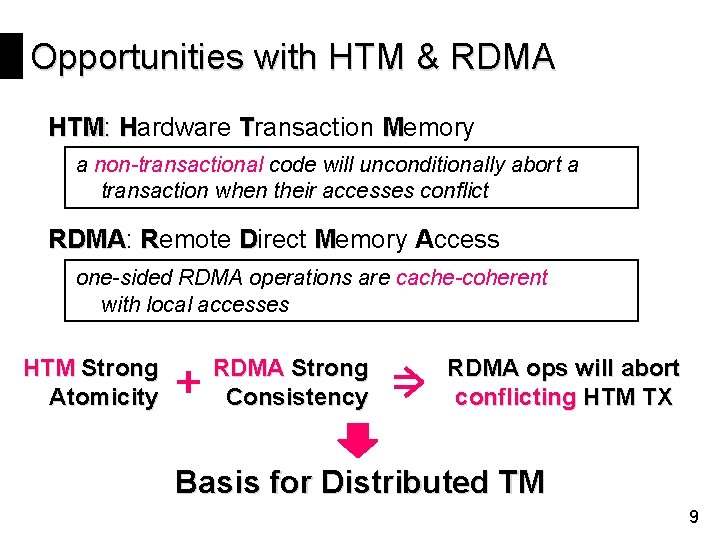


Opportunities with HTM & RDMA
- HTM (Hardware Transactional Memory): strong atomicity
  - Non-transactional code unconditionally aborts a transaction when their accesses conflict
- RDMA (Remote Direct Memory Access): strong consistency
  - One-sided RDMA operations are cache-coherent with local accesses
- Together, RDMA ops will abort conflicting HTM TXs: the basis for a distributed TM

Overall Idea
- Use HTM's ACI properties for local TX execution
- Use one-sided RDMA ops to glue multiple HTM TXs together
- In-memory logging with NVM
- In-memory store

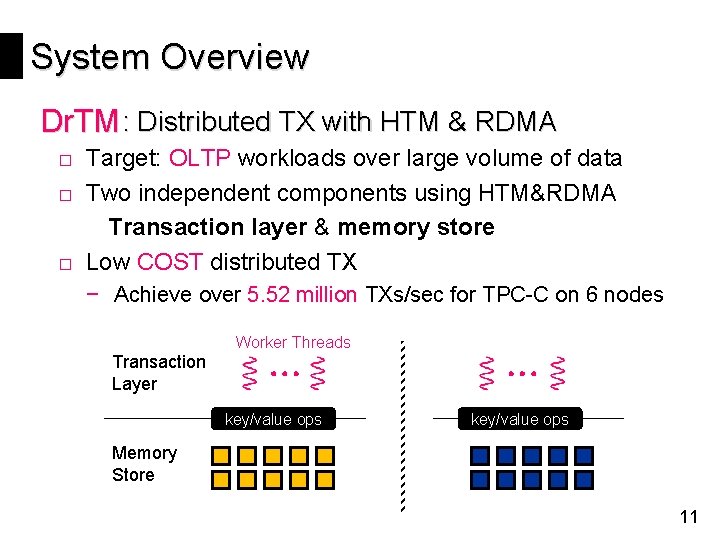

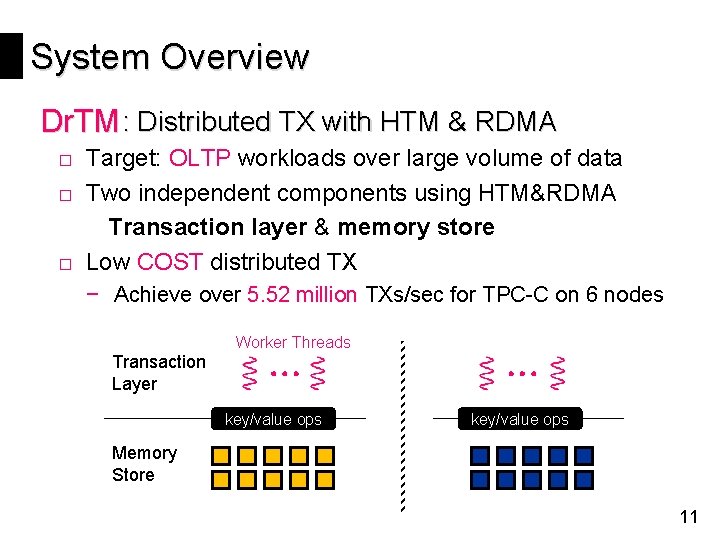

System Overview
- DrTM: distributed TXs with HTM & RDMA
  - Target: OLTP workloads over large volumes of data
  - Two independent components using HTM & RDMA: the transaction layer and the memory store
  - Low-COST distributed TXs: over 5.52 million TXs/sec for TPC-C on 6 nodes
- Architecture: worker threads issue key/value ops through the transaction layer to the memory store

Agenda
- Transaction Layer
- Memory Storage
- Implementation
- Evaluation

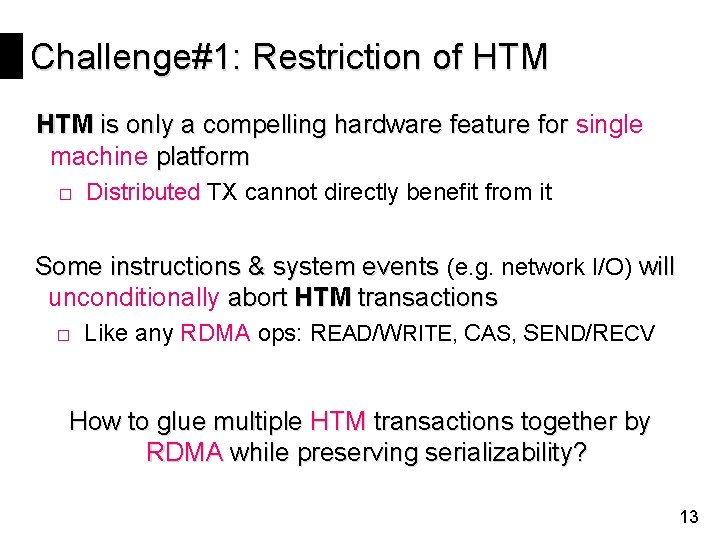

Challenge #1: Restrictions of HTM
- HTM is a compelling hardware feature only for single-machine platforms
  - Distributed TXs cannot directly benefit from it
- Some instructions & system events (e.g., network I/O) unconditionally abort HTM transactions
  - This includes any RDMA op: READ/WRITE, CAS, SEND/RECV
- How can multiple HTM transactions be glued together with RDMA while preserving serializability?

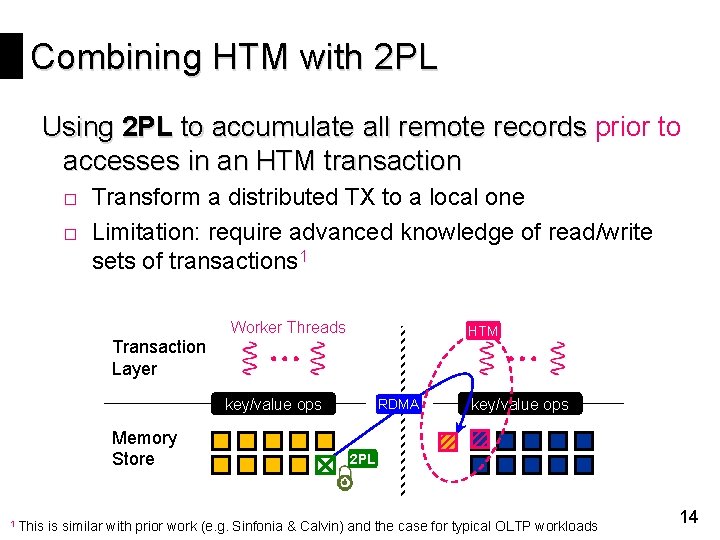

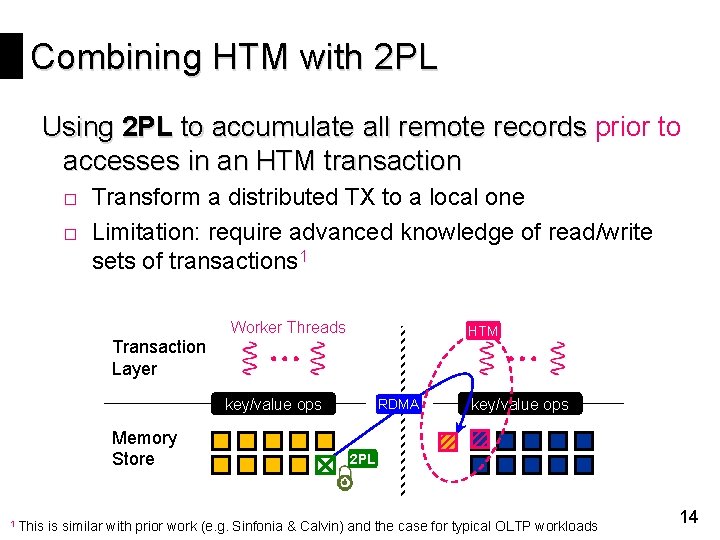

Combining HTM with 2PL
- Use 2PL to accumulate all remote records before they are accessed in an HTM transaction
  - Transforms a distributed TX into a local one
  - Limitation: requires advance knowledge of the read/write sets of transactions¹

¹ This is similar to prior work (e.g., Sinfonia and Calvin) and is the case for typical OLTP workloads.

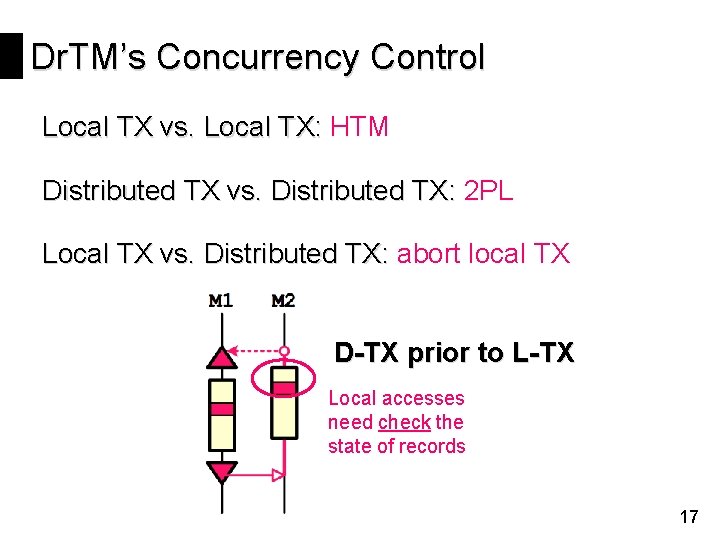

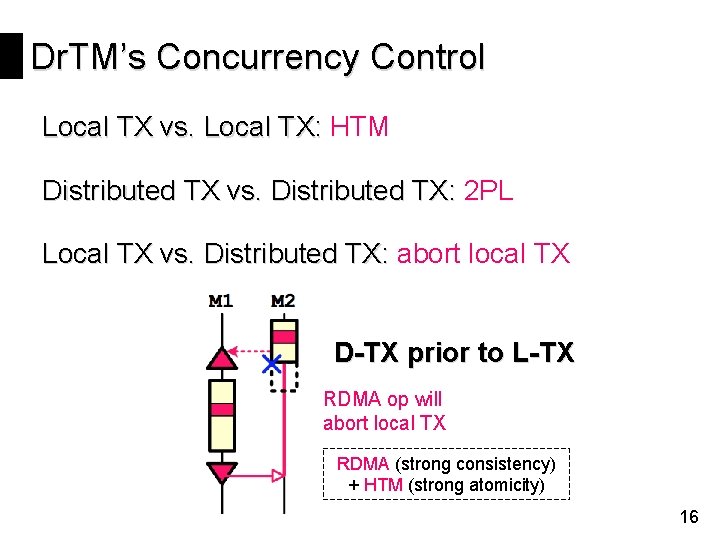

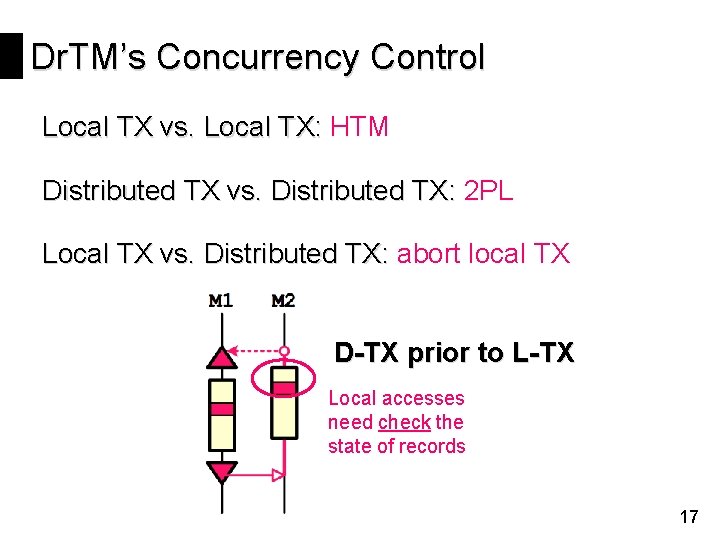


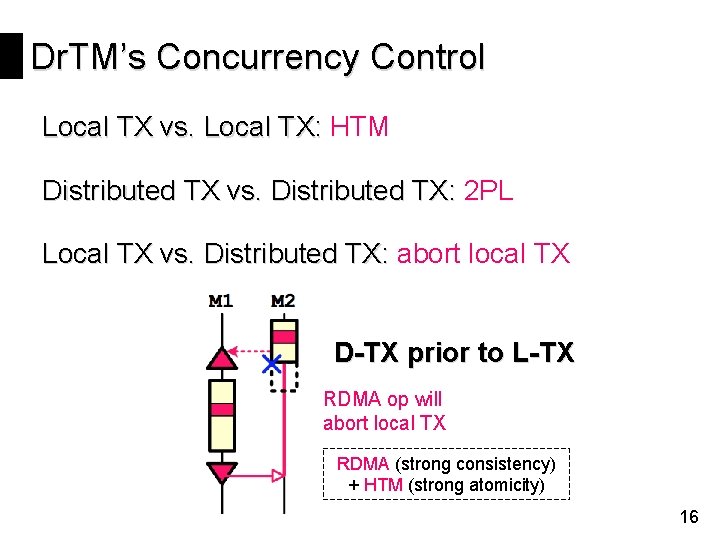

DrTM's Concurrency Control
- Local TX vs. local TX: HTM
- Distributed TX vs. distributed TX: 2PL
- Local TX vs. distributed TX: abort the local TX (D-TX takes priority over L-TX)
  - An RDMA op will abort a conflicting local TX: RDMA (strong consistency) + HTM (strong atomicity)

DrTM's Concurrency Control
- Local TX vs. local TX: HTM
- Distributed TX vs. distributed TX: 2PL
- Local TX vs. distributed TX: abort the local TX (D-TX takes priority over L-TX)
  - Local accesses need to check the state of records

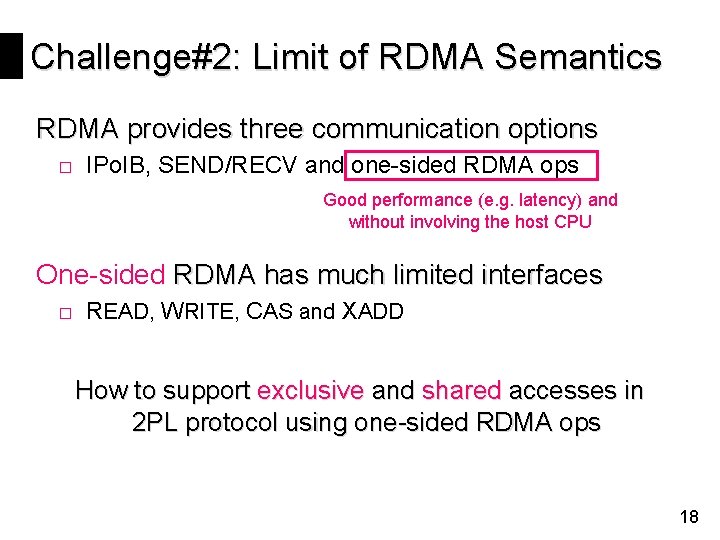

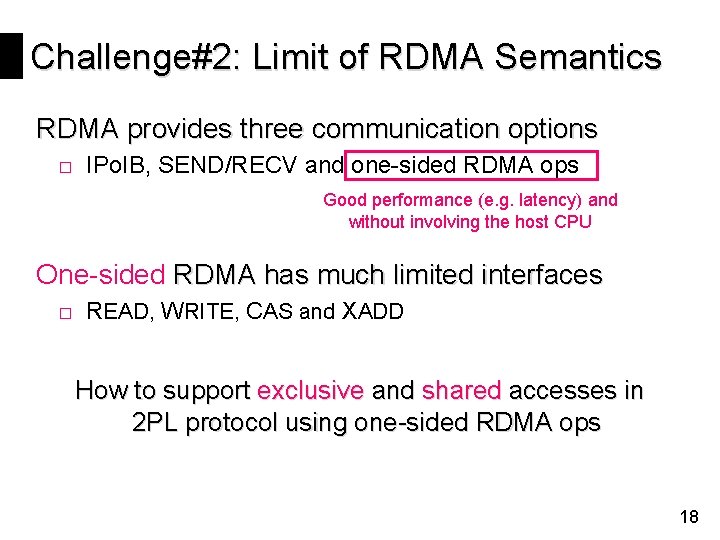

Challenge #2: Limits of RDMA Semantics
- RDMA provides three communication options: IPoIB, SEND/RECV, and one-sided RDMA ops
- One-sided RDMA offers good performance (e.g., latency) without involving the host CPU
  - But it has much more limited interfaces: READ, WRITE, CAS, and XADD
- How can exclusive and shared accesses in the 2PL protocol be supported using one-sided RDMA ops?

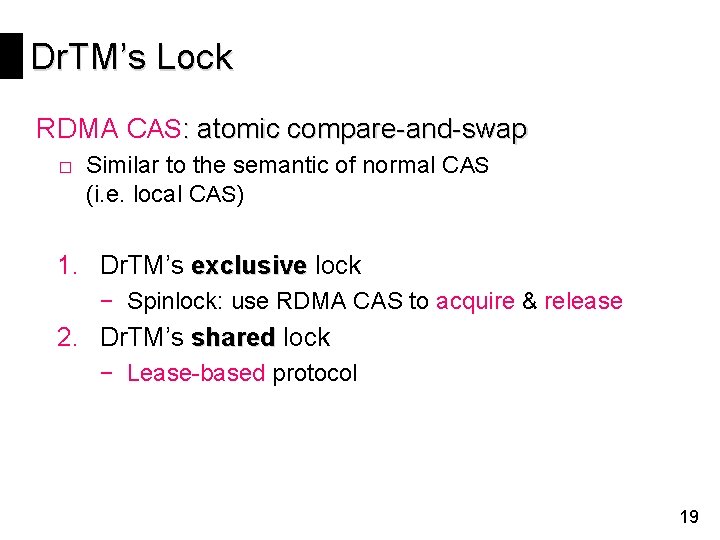

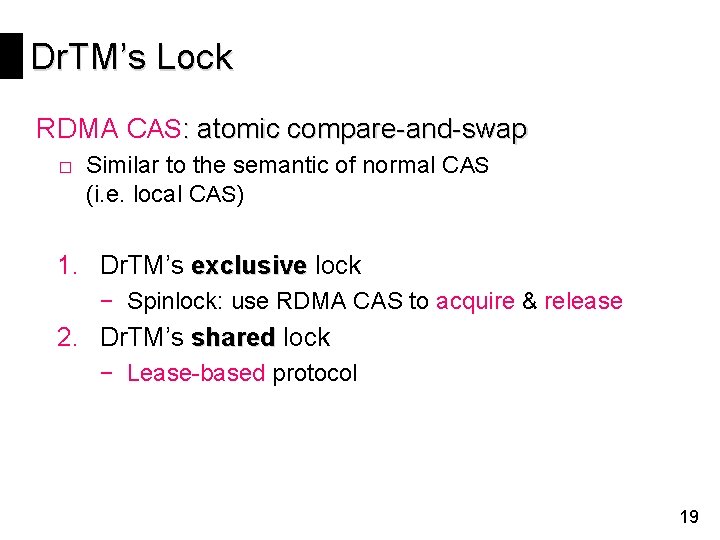

DrTM's Lock
- RDMA CAS: atomic compare-and-swap
  - Similar to the semantics of a normal (i.e., local) CAS
- DrTM's exclusive lock: a spinlock that uses RDMA CAS to acquire & release
- DrTM's shared lock: a lease-based protocol
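To make the exclusive-lock bullet concrete, here is a minimal Python sketch of a CAS-based spinlock. Python has no RDMA, so `RemoteWord` and its `rdma_cas` method are hypothetical stand-ins: a mutex emulates the atomicity the NIC provides, and, like RDMA CAS, the call returns the old value. The word layout follows the lock-state slide (lease end-time | machine-ID | exclusive-bit). The sketch assumes the exclusive bit is the least significant bit, and it ignores shared leases.

```python
import threading

UNLOCKED = 0


class RemoteWord:
    """Hypothetical stand-in for a 64-bit lock word in remote memory."""

    def __init__(self, value=UNLOCKED):
        self.value = value
        self._mu = threading.Lock()  # emulates the NIC's atomic CAS

    def rdma_cas(self, expected, new):
        # Like RDMA CAS: swap only on match, always return the old value.
        with self._mu:
            old = self.value
            if old == expected:
                self.value = new
            return old


def exclusive_word(machine_id):
    # Assumed layout: exclusive bit = bit 0, 8-bit machine ID in bits 1-8.
    return (machine_id << 1) | 1


def exclusive_lock(word, machine_id, max_spins=1_000_000):
    mine = exclusive_word(machine_id)
    for _ in range(max_spins):  # spin by re-issuing the CAS
        if word.rdma_cas(UNLOCKED, mine) == UNLOCKED:
            return True
    return False


def exclusive_unlock(word, machine_id):
    mine = exclusive_word(machine_id)
    # Releasing with CAS (not a blind write) fails if we are not the owner.
    return word.rdma_cas(mine, UNLOCKED) == mine
```

The spin is bounded by `max_spins` only so the example terminates.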

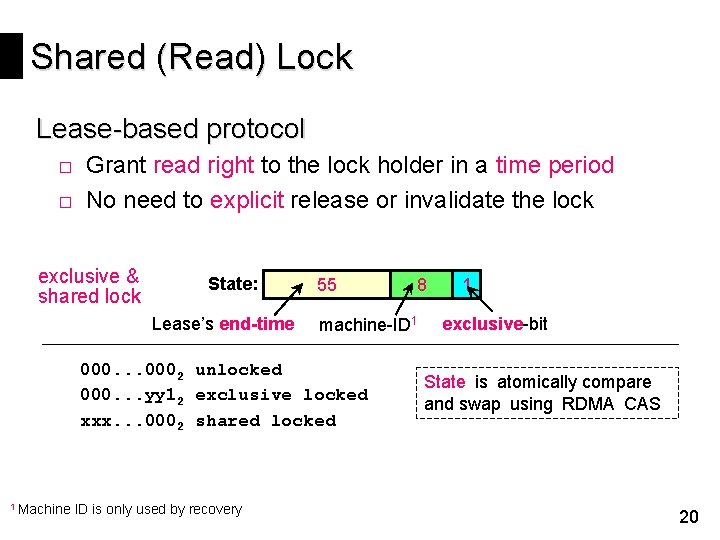

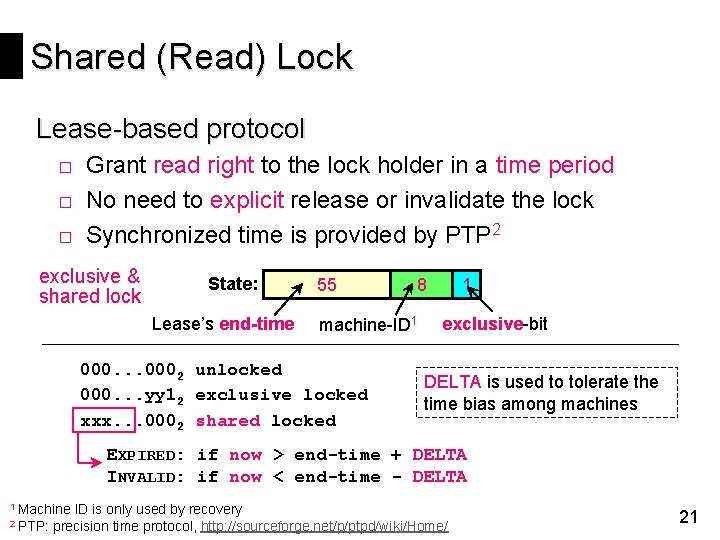

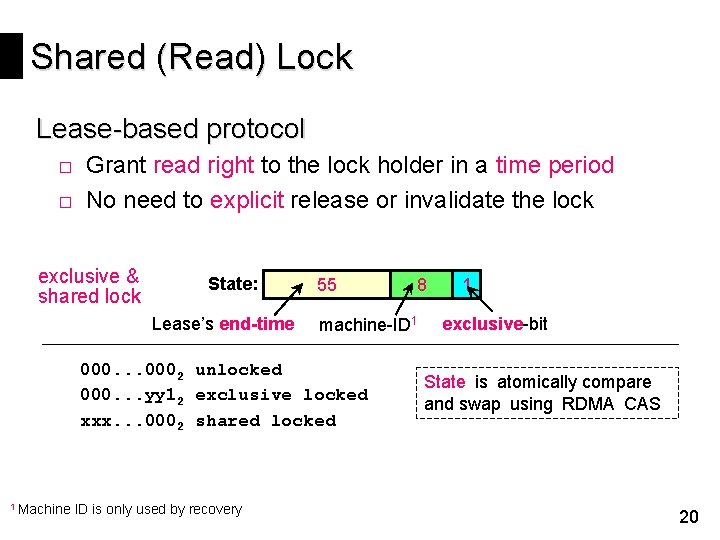

Shared (Read) Lock
- Lease-based protocol
  - Grants the read right to the lock holder for a time period
  - No need to explicitly release or invalidate the lock
- Exclusive & shared lock state, atomically compared and swapped using RDMA CAS: lease end-time (55 bits) | machine-ID¹ (8 bits) | exclusive-bit (1 bit)
  - Unlocked: 000...0 | 00 | 0
  - Exclusive locked: 000...0 | yy | 1
  - Shared locked: xxx... | 00 | 0

¹ The machine ID is only used by recovery.
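The following sketch packs and decodes the 64-bit lock state described above. It assumes the leftmost field on the slide is the most significant. Under that assumption, the exclusive bit is bit 0, the machine ID occupies bits 1-8, and the lease end-time occupies bits 9-63. The actual bit order in DrTM may differ, and the helper names are hypothetical.

```python
EXCLUSIVE_BITS, MACHINE_BITS, END_TIME_BITS = 1, 8, 55
MACHINE_SHIFT = EXCLUSIVE_BITS                  # machine ID: bits 1-8
END_TIME_SHIFT = EXCLUSIVE_BITS + MACHINE_BITS  # end-time: bits 9-63


def make_state(exclusive, machine_id, end_time):
    """Pack the three fields into one 64-bit state word."""
    if not 0 <= machine_id < (1 << MACHINE_BITS):
        raise ValueError("machine ID out of range")
    if not 0 <= end_time < (1 << END_TIME_BITS):
        raise ValueError("end time out of range")
    return (end_time << END_TIME_SHIFT) | (machine_id << MACHINE_SHIFT) | int(exclusive)


def decode_state(state):
    """Return (exclusive, machine_id, end_time)."""
    exclusive = bool(state & 1)
    machine_id = (state >> MACHINE_SHIFT) & ((1 << MACHINE_BITS) - 1)
    end_time = state >> END_TIME_SHIFT
    return exclusive, machine_id, end_time


def describe(state):
    exclusive, _, end_time = decode_state(state)
    if exclusive:
        return "exclusive locked"
    if end_time:
        return "shared locked"
    return "unlocked"
```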

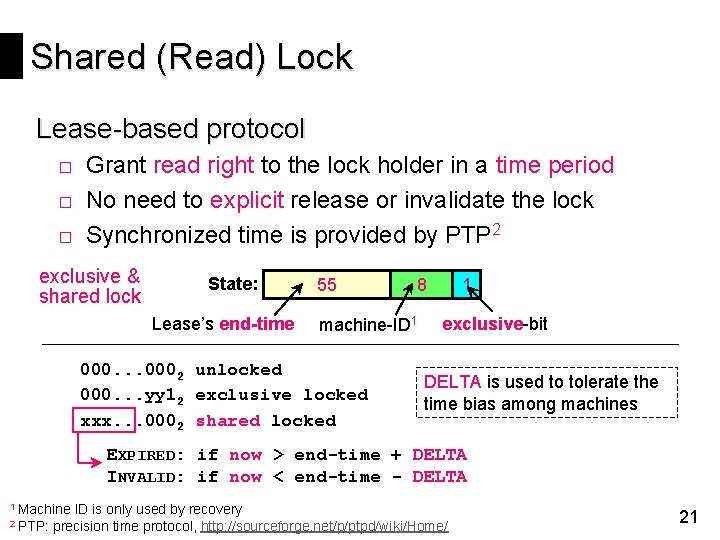

Shared (Read) Lock
- Lease-based protocol
  - Grants the read right to the lock holder for a time period
  - No need to explicitly release or invalidate the lock
  - Synchronized time is provided by PTP²
- Lock state: lease end-time (55 bits) | machine-ID¹ (8 bits) | exclusive-bit (1 bit)
  - Unlocked: 000...0 | 00 | 0; exclusive locked: 000...0 | yy | 1; shared locked: xxx... | 00 | 0
- DELTA is used to tolerate the time bias among machines
  - EXPIRED: if now > end-time + DELTA
  - VALID: if now < end-time - DELTA

¹ The machine ID is only used by recovery.
² PTP: Precision Time Protocol, http://sourceforge.net/p/ptpd/wiki/Home/
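Here is a minimal sketch of the two lease predicates. `DELTA` is a hypothetical value in the same time unit as the end-time. Between the two thresholds the lease is neither VALID nor EXPIRED. A reader therefore cannot rely on it at commit, and a writer must not overwrite it yet.

```python
DELTA = 100  # hypothetical bound on clock bias between machines


def expired(end_time, now, delta=DELTA):
    """EXPIRED: even with clocks off by up to delta, the lease is over."""
    return now > end_time + delta


def valid(end_time, now, delta=DELTA):
    """VALID: even with clocks off by up to delta, the lease still holds."""
    return now < end_time - delta


def lease_status(end_time, now, delta=DELTA):
    if valid(end_time, now, delta):
        return "valid"
    if expired(end_time, now, delta):
        return "expired"
    return "uncertain"  # within delta of end_time on either side
```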

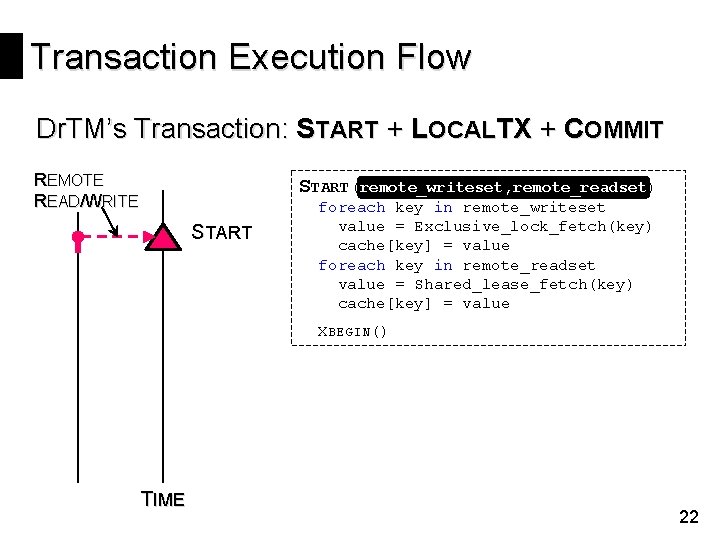

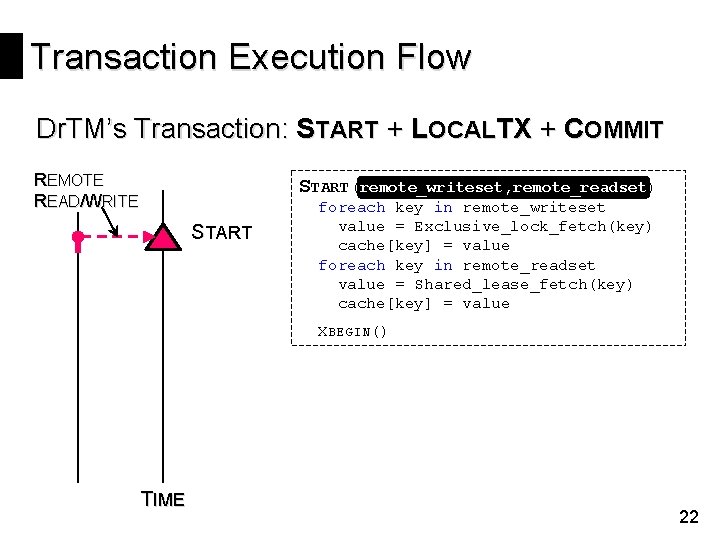

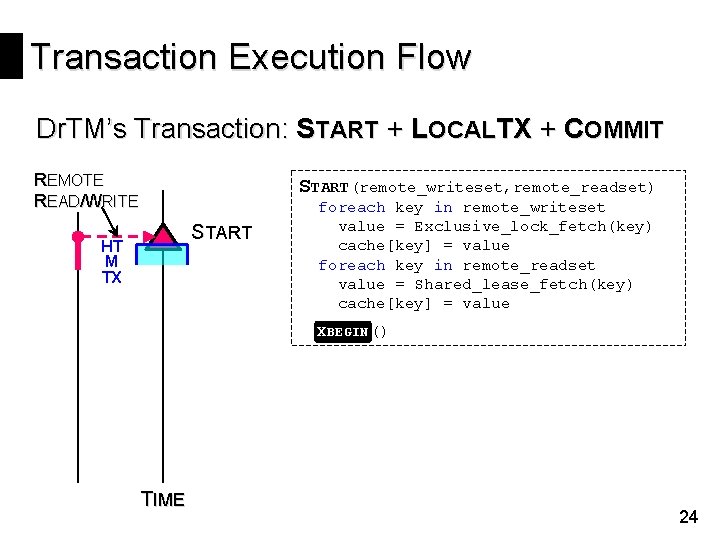

Transaction Execution Flow
DrTM's transaction: START + LOCALTX + COMMIT. The START phase performs the remote reads/writes before the HTM TX begins:
- `START(remote_writeset, remote_readset)`
  - For each key in remote_writeset: `value = Exclusive_lock_fetch(key)`; `cache[key] = value`
  - For each key in remote_readset: `value = Shared_lease_fetch(key)`; `cache[key] = value`
  - `XBEGIN()`
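The START phase can be sketched in Python as follows. `Record`, `exclusive_lock_fetch`, and `shared_lease_fetch` are hypothetical single-process stand-ins for the one-sided RDMA CAS and READ operations, and lease and clock values are plain integers. The slides do not show what happens when a lock or lease cannot be obtained. This sketch therefore assumes the TX releases any exclusive locks it has already taken and aborts.

```python
class AbortTx(Exception):
    """Raised when a remote lock or lease cannot be obtained."""


class Record:
    """Hypothetical remote record: a value plus its lock state."""

    def __init__(self, value):
        self.value = value
        self.w_locked = False
        self.lease_end = 0  # 0 means no lease has been granted


def lease_unexpired(rec, now, delta):
    return rec.lease_end != 0 and now <= rec.lease_end + delta


def exclusive_lock_fetch(store, key, now, delta):
    rec = store[key]
    if rec.w_locked or lease_unexpired(rec, now, delta):
        raise AbortTx(key)
    rec.w_locked = True   # in DrTM: RDMA CAS on the state word
    return rec.value      # in DrTM: RDMA READ of the record


def shared_lease_fetch(store, key, now, lease_len, delta):
    rec = store[key]
    if rec.w_locked:
        raise AbortTx(key)
    if not lease_unexpired(rec, now, delta):
        rec.lease_end = now + lease_len  # grant a fresh lease
    return rec.value, rec.lease_end      # otherwise share the existing one


def start(store, remote_writeset, remote_readset, now, lease_len=400, delta=100):
    """Lock/lease every remote record and cache its value; XBEGIN() follows."""
    cache, leases, locked = {}, {}, []
    try:
        for key in remote_writeset:
            cache[key] = exclusive_lock_fetch(store, key, now, delta)
            locked.append(key)
        for key in remote_readset:
            cache[key], leases[key] = shared_lease_fetch(store, key, now, lease_len, delta)
    except AbortTx:
        for key in locked:  # undo partial locking before giving up
            store[key].w_locked = False
        raise
    return cache, leases
```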

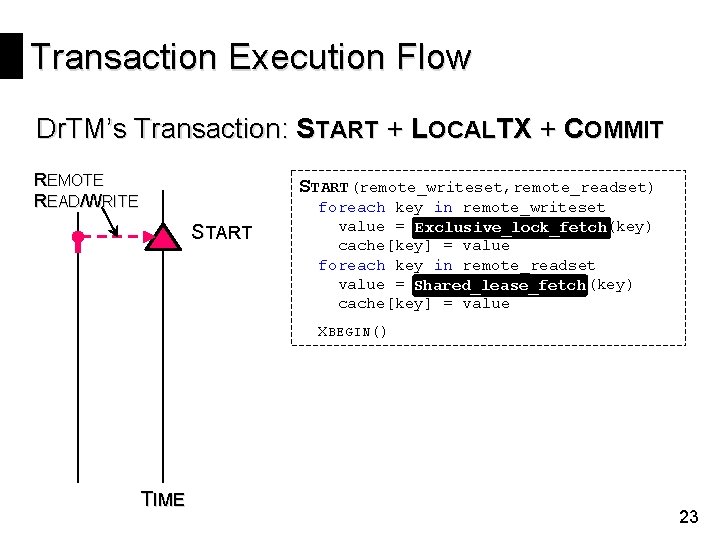

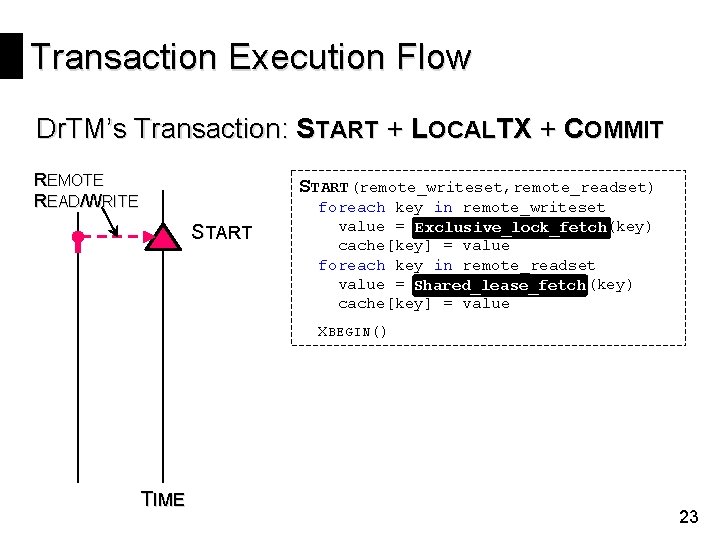


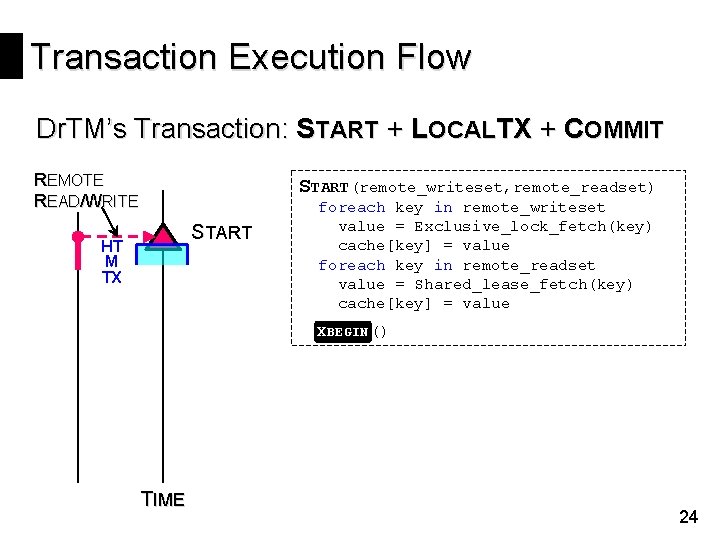


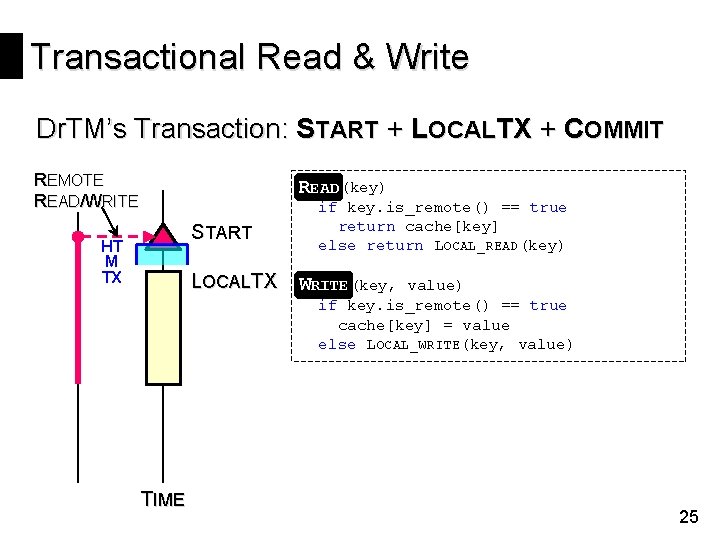

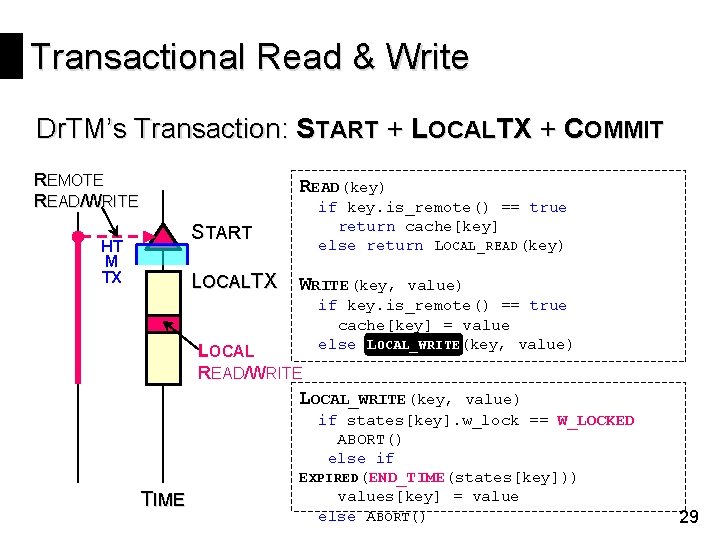

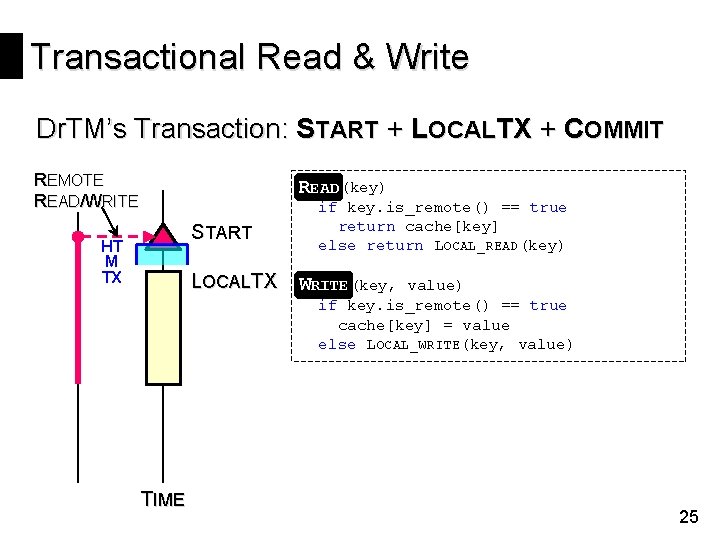

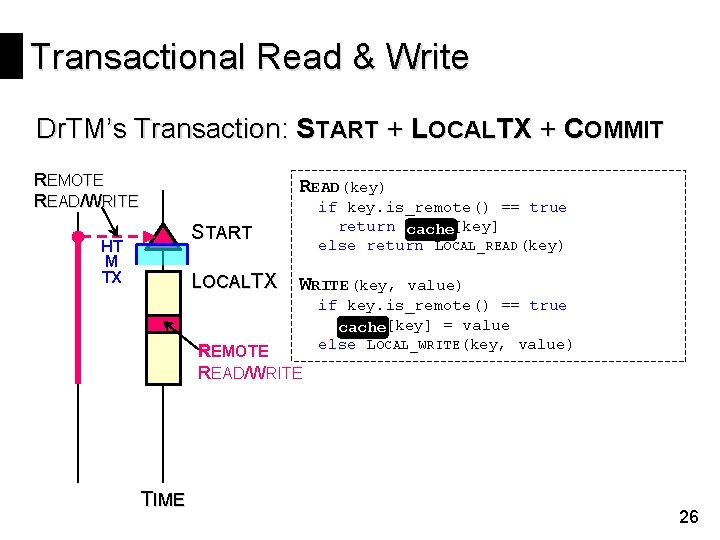

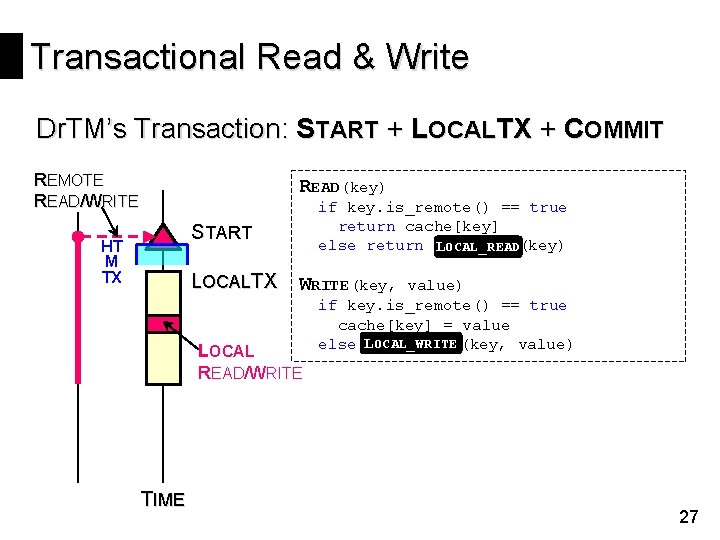

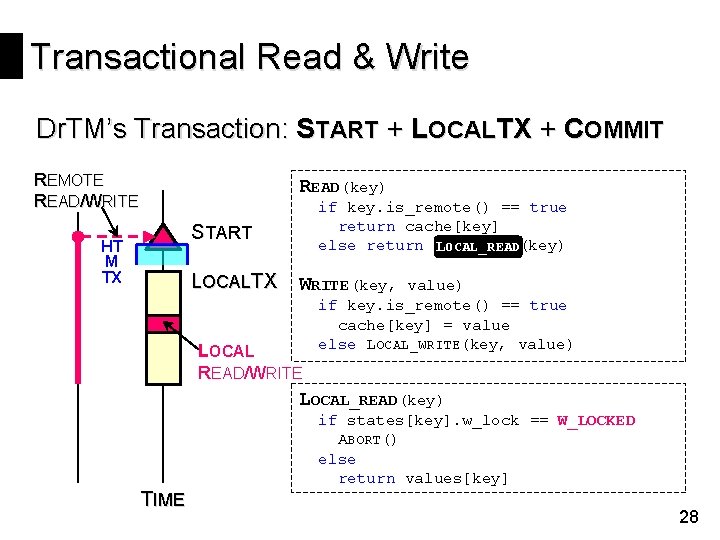

Transactional Read & Write
DrTM's transaction: START + LOCALTX + COMMIT. Inside the HTM TX (LOCALTX), each access is dispatched by where the key lives:
- `READ(key)`: if `key.is_remote() == true`, return `cache[key]`; else return `LOCAL_READ(key)`
- `WRITE(key, value)`: if `key.is_remote() == true`, set `cache[key] = value`; else call `LOCAL_WRITE(key, value)`
- Remote reads/writes hit the cache filled during START; local reads/writes go to the local store
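The dispatch rule can be modeled as follows. The partitioning (`key % n_machines`) and the `LocalTx` class are hypothetical. Local writes are buffered in a dict to mimic how an HTM transaction keeps its updates invisible until `XEND()`. Remote writes only update the cache filled during START.

```python
class LocalTx:
    """Hypothetical model of READ/WRITE dispatch inside LOCALTX."""

    def __init__(self, my_id, n_machines, local_values, remote_cache):
        self.my_id = my_id
        self.n_machines = n_machines
        self.local_values = local_values  # records stored on this machine
        self.cache = remote_cache         # remote records fetched in START
        self.local_writes = {}            # invisible to others until XEND

    def is_remote(self, key):
        # Assumed partitioning: integer key k lives on machine k % n_machines.
        return key % self.n_machines != self.my_id

    def read(self, key):
        if self.is_remote(key):
            return self.cache[key]  # no network access inside the HTM TX
        return self.local_writes.get(key, self.local_values[key])  # LOCAL_READ

    def write(self, key, value):
        if self.is_remote(key):
            self.cache[key] = value         # written back by RDMA after XEND
        else:
            self.local_writes[key] = value  # LOCAL_WRITE, committed by XEND
```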

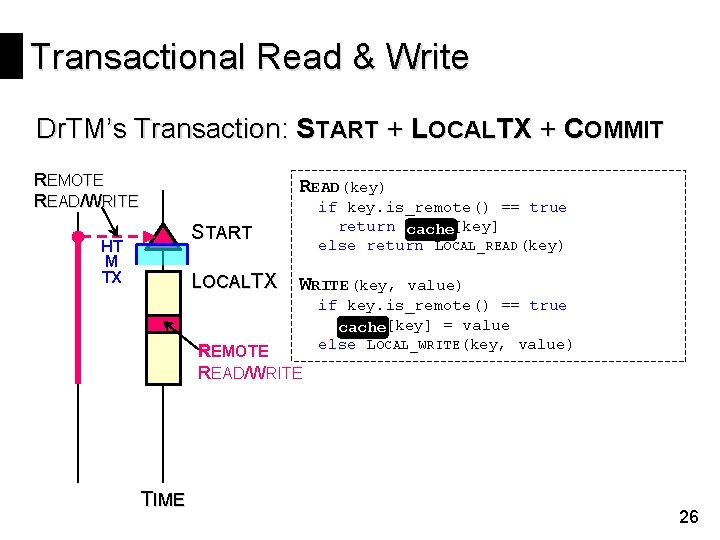


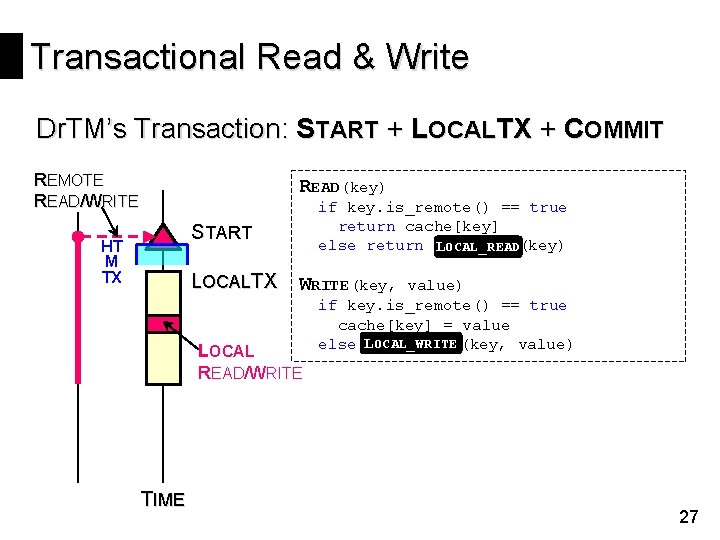


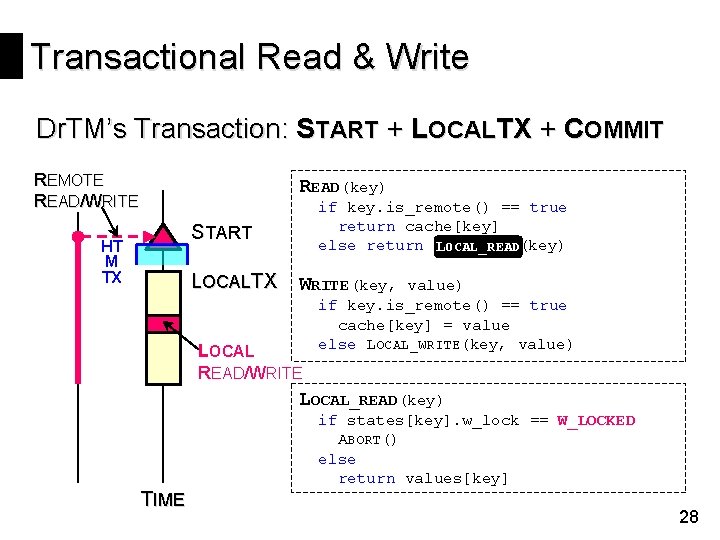

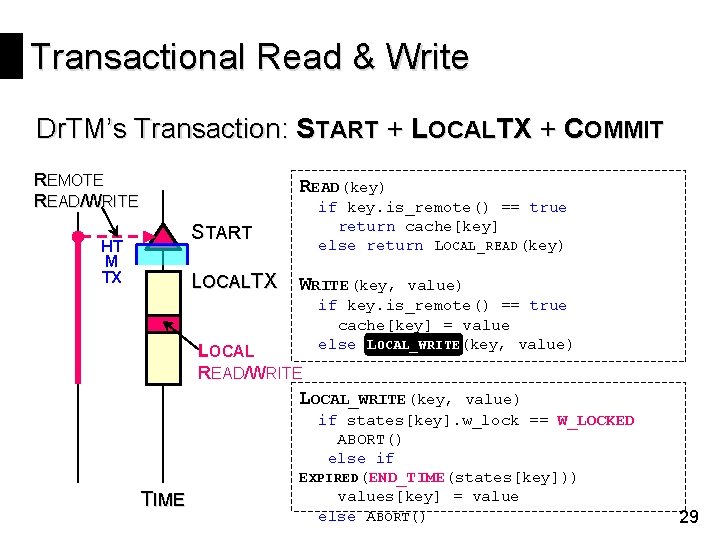

Transactional Read & Write: Local Read
- `LOCAL_READ(key)`: if `states[key].w_lock == W_LOCKED`, `ABORT()`; else return `values[key]`

Transactional Read & Write: Local Write
- `LOCAL_WRITE(key, value)`: if `states[key].w_lock == W_LOCKED`, `ABORT()`; else if `EXPIRED(END_TIME(states[key]))`, set `values[key] = value`; else `ABORT()`
- Unlike a local read, a local write must also wait until any remote read lease on the record has expired
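The two local checks can be sketched as plain functions. Raising `Abort` stands in for an explicit HTM abort. The `states` dictionary, with `w_locked` and `end_time` fields, is a hypothetical simplification of the per-record state word.

```python
class Abort(Exception):
    """Stands in for an explicit HTM abort in this sketch."""


def expired(end_time, now, delta):
    return now > end_time + delta


def local_read(states, values, key):
    if states[key]["w_locked"]:  # a distributed TX holds the exclusive lock
        raise Abort(key)
    return values[key]           # remote read leases do not block reads


def local_write(states, values, key, value, now, delta):
    state = states[key]
    if state["w_locked"]:
        raise Abort(key)
    if not expired(state["end_time"], now, delta):  # a remote reader holds a lease
        raise Abort(key)
    values[key] = value
```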

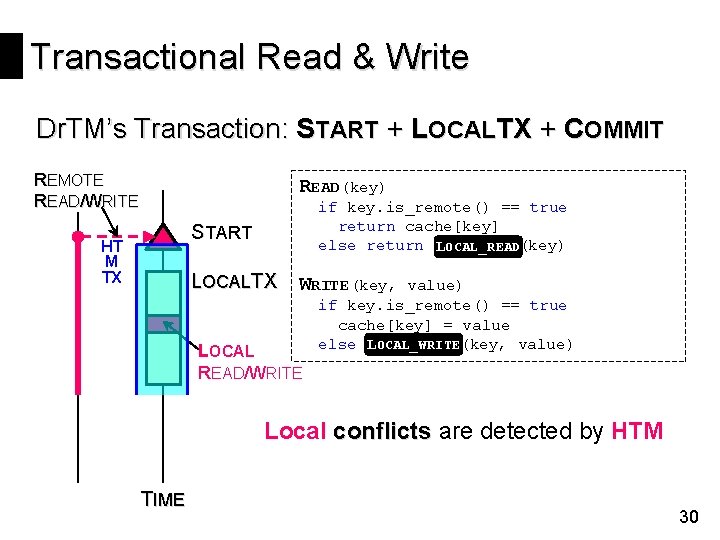

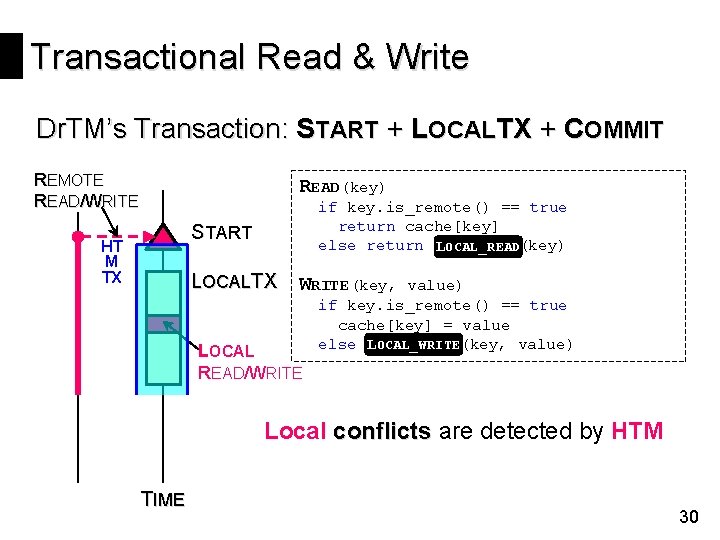

Transactional Read & Write
- Local conflicts are detected by HTM

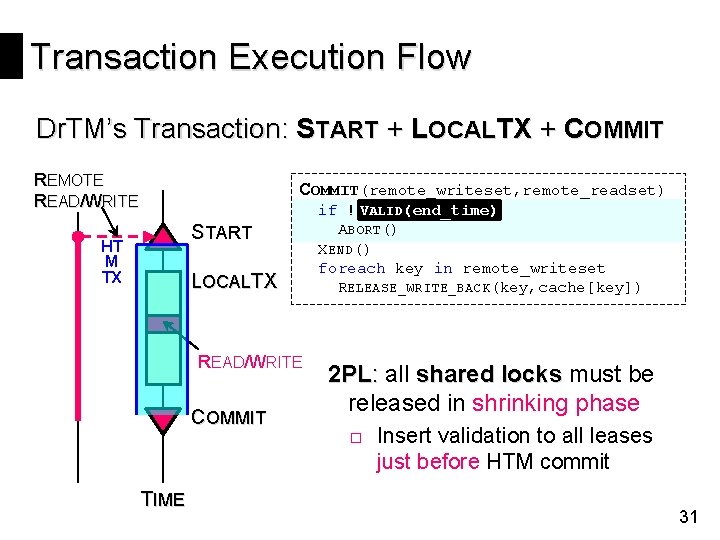

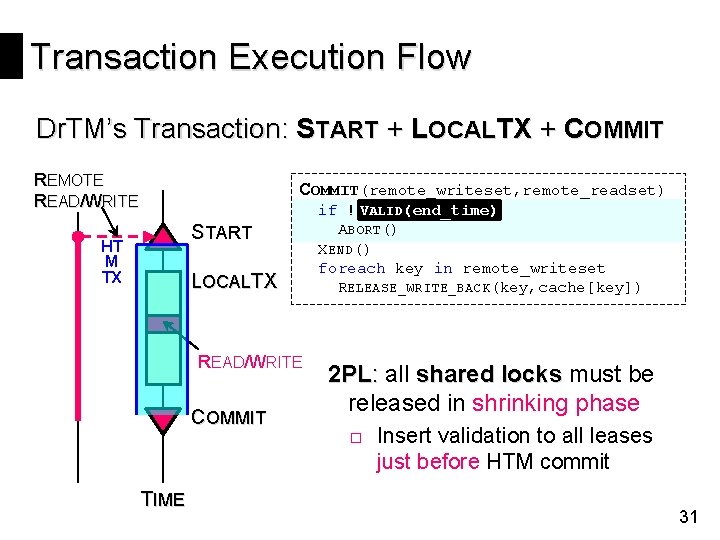

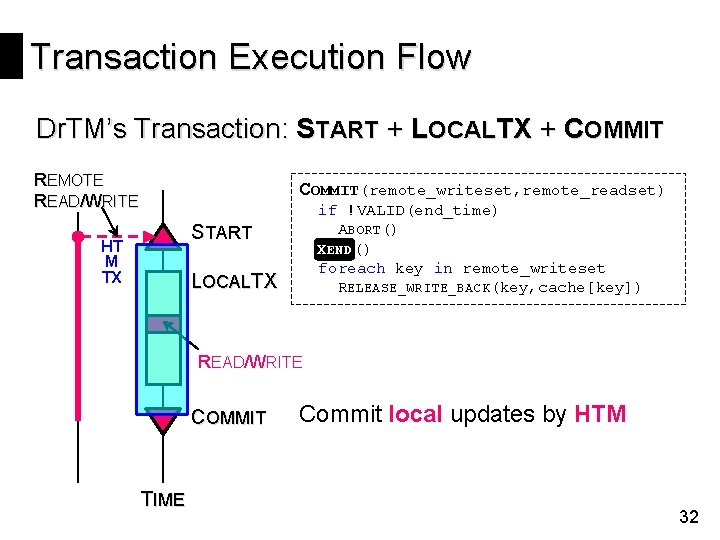

Transaction Execution Flow
The COMMIT phase ends the HTM TX and then writes back remote updates:
- `COMMIT(remote_writeset, remote_readset)`
  - For the leases on the remote read set: `if !VALID(end_time) ABORT()`
  - `XEND()`
  - For each key in remote_writeset: `RELEASE_WRITE_BACK(key, cache[key])`
- 2PL: all shared locks must be released in the shrinking phase
  - Because leases expire on their own, a validation of all leases is inserted just before the HTM commit

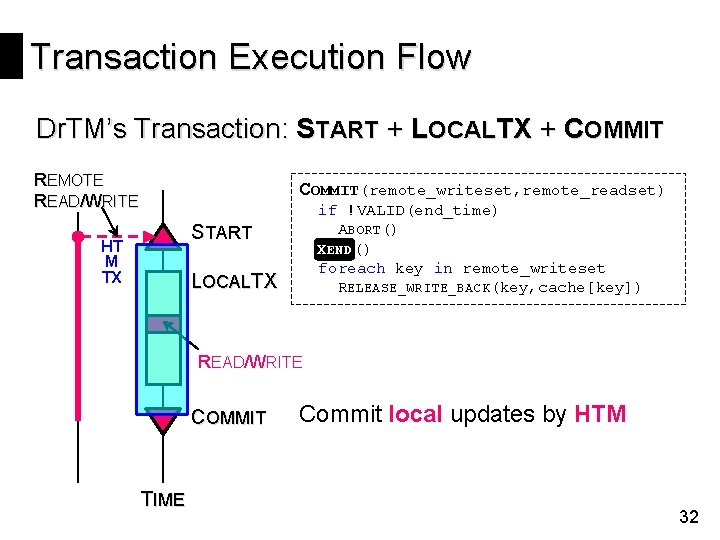

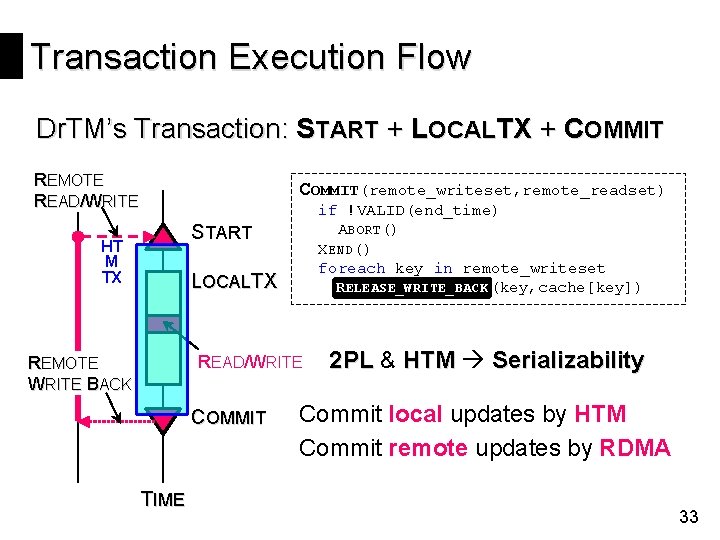


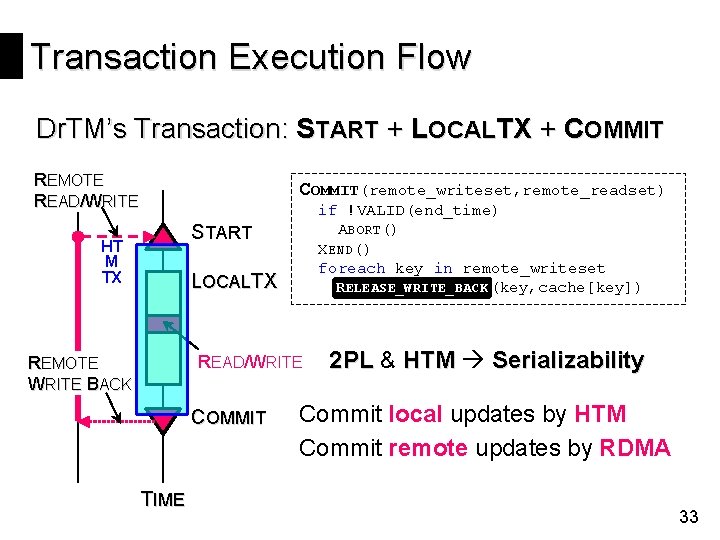

Transaction Execution Flow
- Phases over time: START (remote read/write) → HTM TX (LOCALTX read/write) → COMMIT → remote write-back
- Local updates are committed by HTM
- Remote updates are committed by RDMA
- 2PL & HTM together provide serializability
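The COMMIT phase can be sketched as three steps. This is a single-process model built on several assumptions:
- `AbortTx` stands in for aborting the HTM TX.
- A single `dict.update` stands in for the atomic visibility that `XEND()` provides.
- `remote_records` is a hypothetical dictionary standing in for records reached through one-sided RDMA.

On abort, the exclusive locks taken in START are still held and must be released by the caller.

```python
class AbortTx(Exception):
    """Stands in for aborting the HTM TX when validation fails."""


def valid(end_time, now, delta):
    return now < end_time - delta


def commit(lease_end_times, now, delta, local_writes, local_values,
           remote_writes, remote_records):
    # (1) Still inside the HTM TX: every remote read lease must be VALID,
    #     so no shared lock "expires" before the commit point (2PL).
    for end_time in lease_end_times:
        if not valid(end_time, now, delta):
            raise AbortTx("lease no longer valid")
    # (2) XEND: buffered local updates become visible at once.
    local_values.update(local_writes)
    # (3) After XEND: write back remote updates and release exclusive
    #     locks (RELEASE_WRITE_BACK, done with one-sided RDMA in DrTM).
    for key, value in remote_writes.items():
        record = remote_records[key]
        record["value"] = value
        record["w_locked"] = False
```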

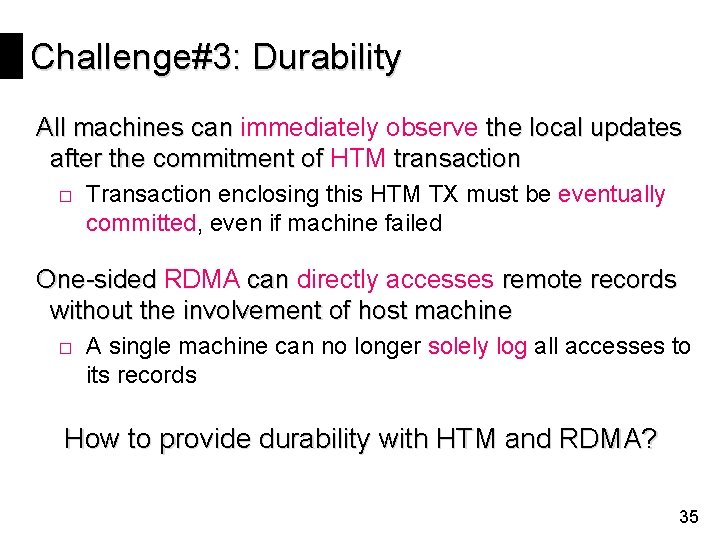

Challenge #3: Durability
- All machines can immediately observe local updates once the HTM transaction commits
  - The transaction enclosing this HTM TX must therefore eventually commit, even if a machine fails
- One-sided RDMA accesses remote records directly, without involving the host machine
  - A single machine can no longer log all accesses to its records by itself
- How can durability be provided with HTM and RDMA?

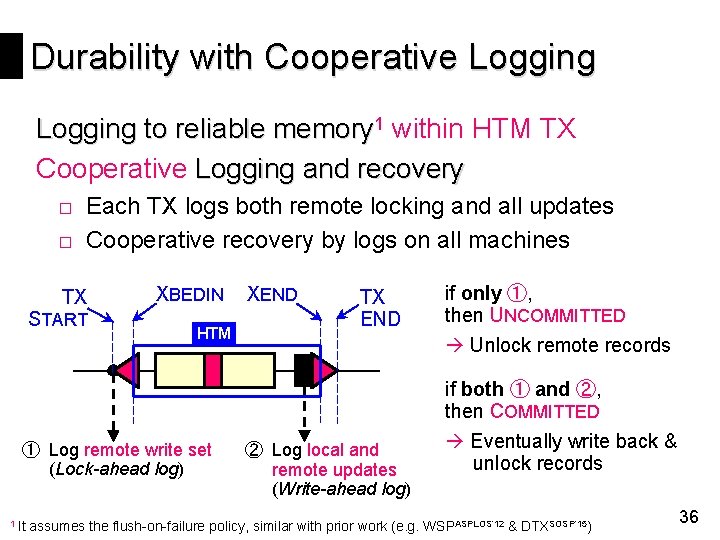

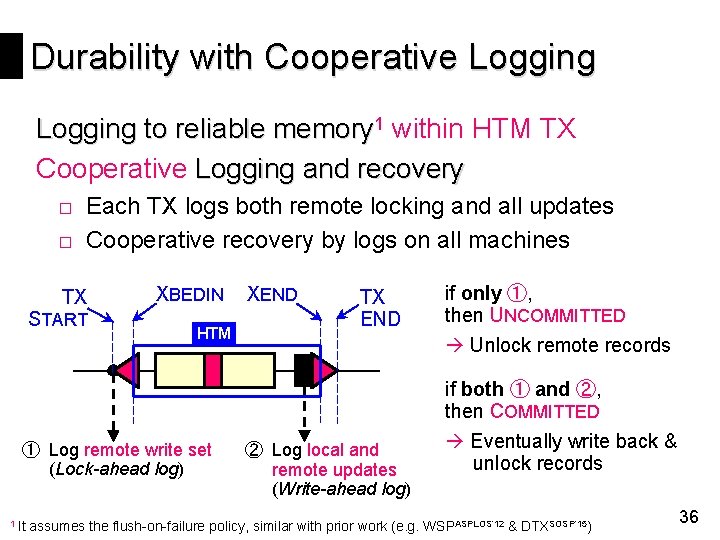

Durability with Cooperative Logging
- Cooperative logging and recovery
  - Each TX logs both remote locking and all updates to reliable memory¹
  - Cooperative recovery uses the logs on all machines
- Timeline: TX START → ① log the remote write set (lock-ahead log) → XBEGIN → ② log local and remote updates within the HTM TX (write-ahead log) → XEND → TX END
- Recovery
  - If only ① exists, the TX is UNCOMMITTED: unlock the remote records
  - If both ① and ② exist, the TX is COMMITTED: eventually write back & unlock the records

¹ This assumes the flush-on-failure policy, similar to prior work (e.g., WSP (ASPLOS'12) and DTX (SOSP'15)).
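The recovery rule above can be sketched as a function over per-TX logs. The log format (a `lock_ahead` key list, plus a `write_ahead` dict or `None`) is hypothetical. A single `records` dict stands in for records spread across machines; real cooperative recovery would involve every machine holding affected records.

```python
def recover(tx_logs, records):
    """Replay per-TX logs from a crashed machine.

    tx_logs: list of {"lock_ahead": [remote keys locked],
                      "write_ahead": {key: new_value} or None}
    records: key -> {"value": ..., "w_locked": bool}
    """
    outcomes = []
    for log in tx_logs:
        if log.get("write_ahead") is not None:
            # Both logs present: COMMITTED, so write back every update.
            for key, value in log["write_ahead"].items():
                records[key]["value"] = value
            outcome = "COMMITTED"
        else:
            # Only the lock-ahead log: UNCOMMITTED, so just unlock.
            outcome = "UNCOMMITTED"
        for key in log["lock_ahead"]:
            records[key]["w_locked"] = False
        outcomes.append(outcome)
    return outcomes
```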


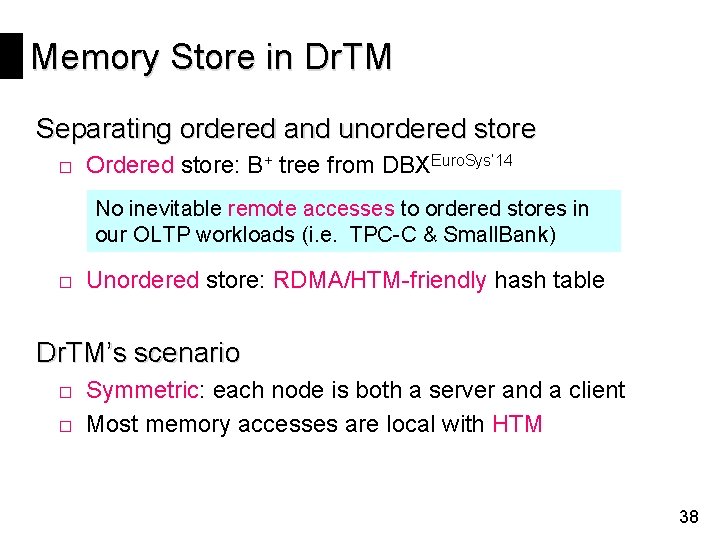

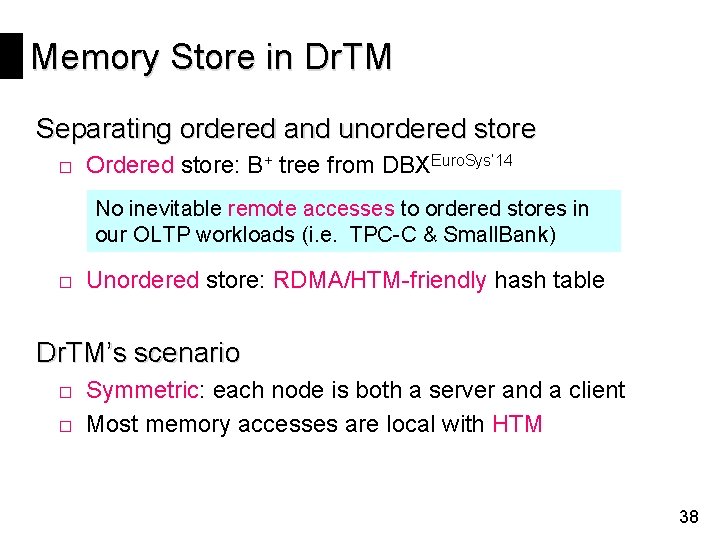

Memory Store in DrTM
- Separate ordered and unordered stores
  - Ordered store: B+ tree from DBX (EuroSys'14); our OLTP workloads (i.e., TPC-C and SmallBank) have no inevitable remote accesses to ordered stores
  - Unordered store: RDMA/HTM-friendly hash table
- DrTM's scenario
  - Symmetric: each node is both a server and a client
  - Most memory accesses are local, with HTM

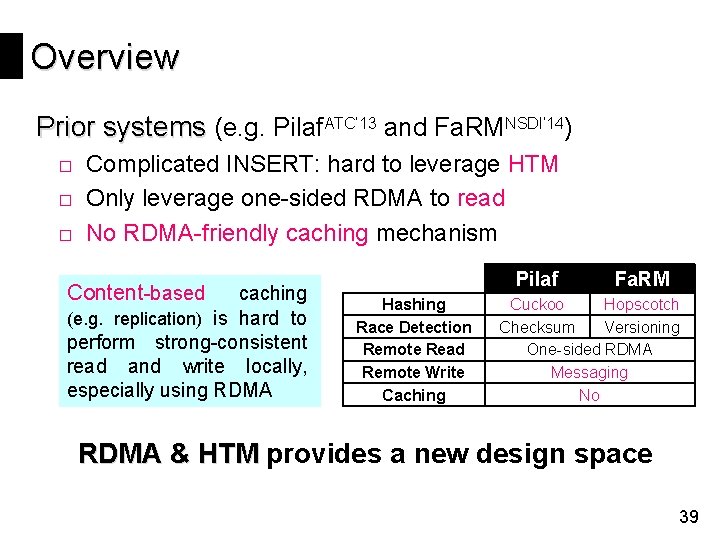

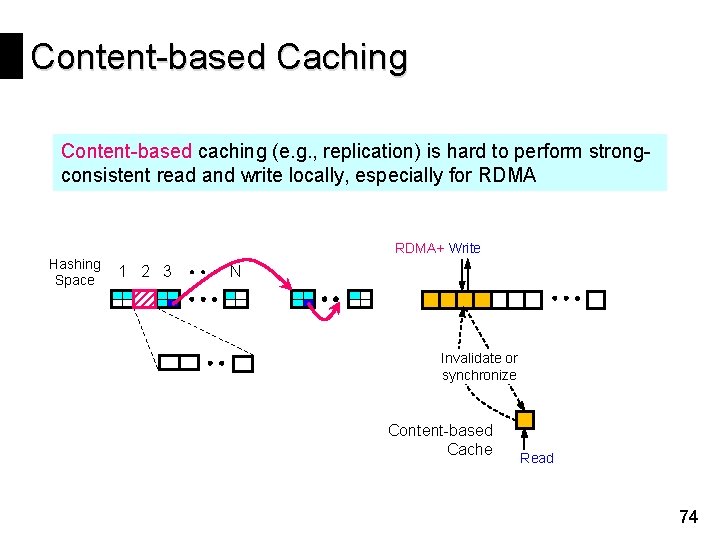

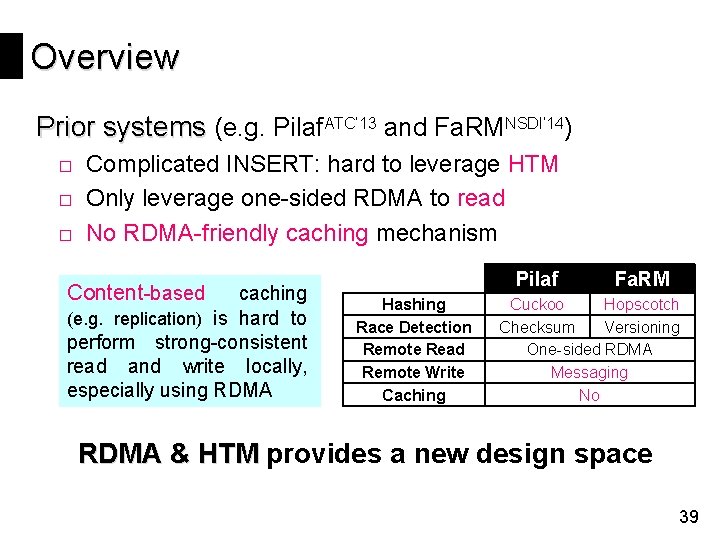

Overview
- Prior systems (e.g., Pilaf (ATC'13) and FaRM (NSDI'14))
  - Complicated INSERT: hard to leverage HTM
  - Only leverage one-sided RDMA for reads
  - No RDMA-friendly caching mechanism: content-based caching (e.g., replication) makes strongly consistent local reads and writes hard, especially with RDMA
- RDMA & HTM provide a new design space

| | Pilaf | FaRM |
|---|---|---|
| Hashing | Cuckoo | Hopscotch |
| Race detection | Checksum | Versioning |
| Remote read | One-sided RDMA | One-sided RDMA |
| Remote write | Messaging | Messaging |
| Caching | No | No |

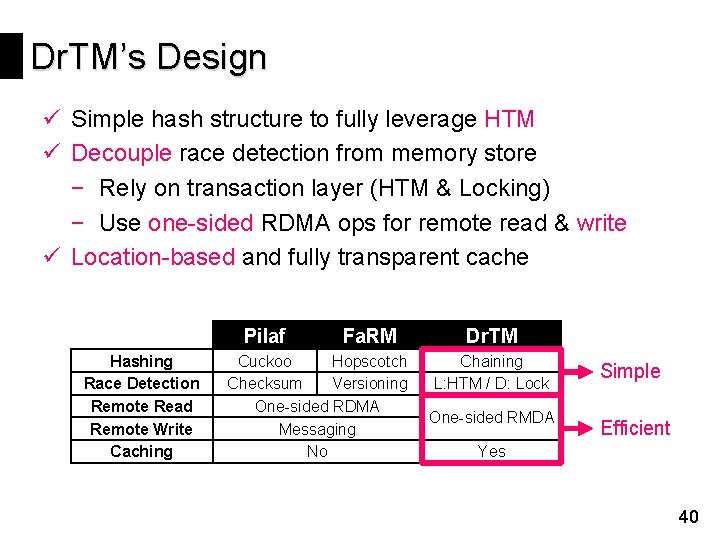

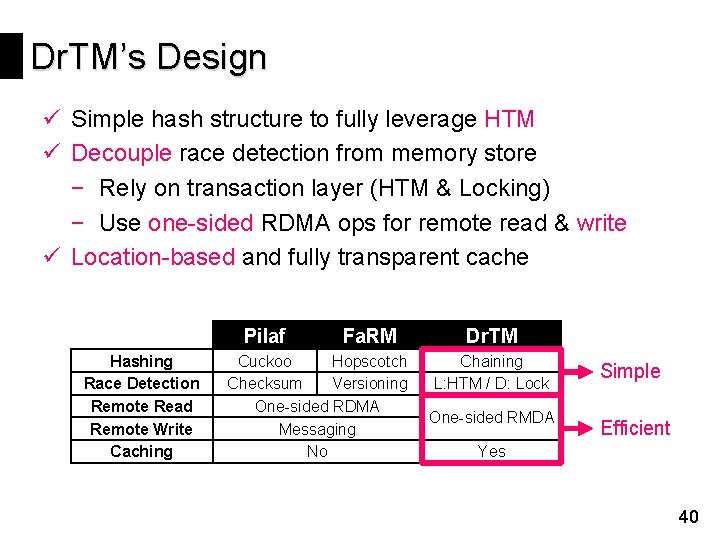

DrTM's Design
- Simple hash structure to fully leverage HTM
- Decouple race detection from the memory store
  - Rely on the transaction layer (HTM & locking)
  - Use one-sided RDMA ops for remote reads & writes
- Location-based and fully transparent cache

| | Pilaf | FaRM | DrTM |
|---|---|---|---|
| Hashing | Cuckoo | Hopscotch | Chaining (simple) |
| Race detection | Checksum | Versioning | L: HTM / D: Lock |
| Remote read | One-sided RDMA | One-sided RDMA | One-sided RDMA |
| Remote write | Messaging | Messaging | One-sided RDMA |
| Caching | No | No | Yes (efficient) |

L: local, D: distributed

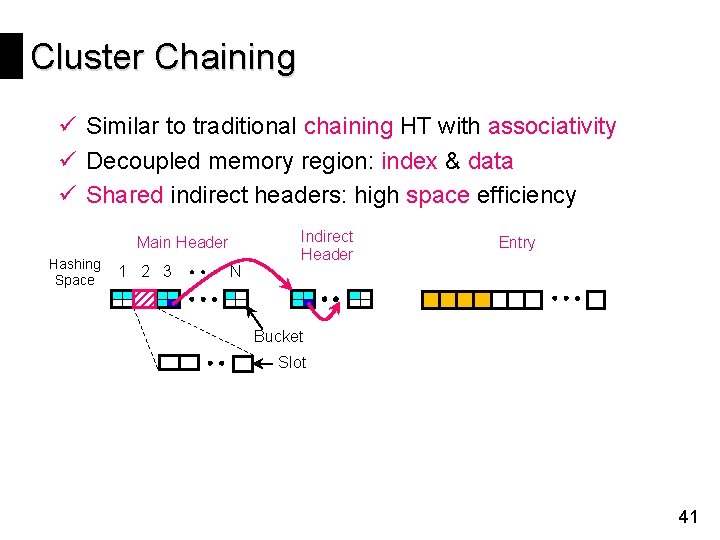

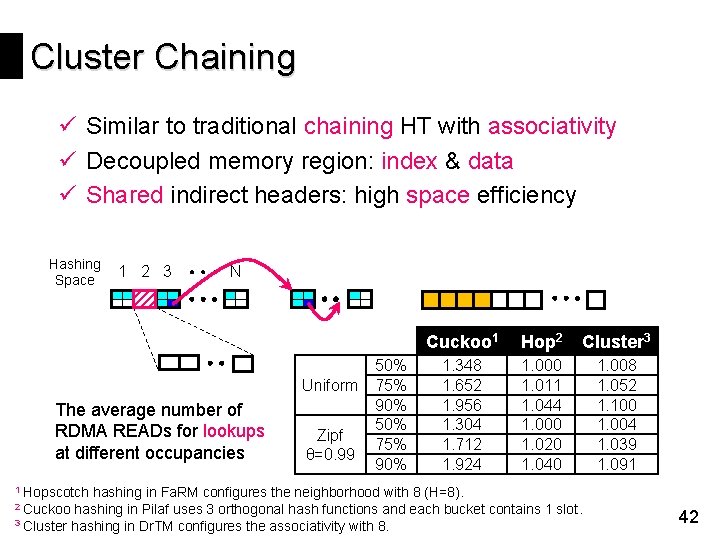

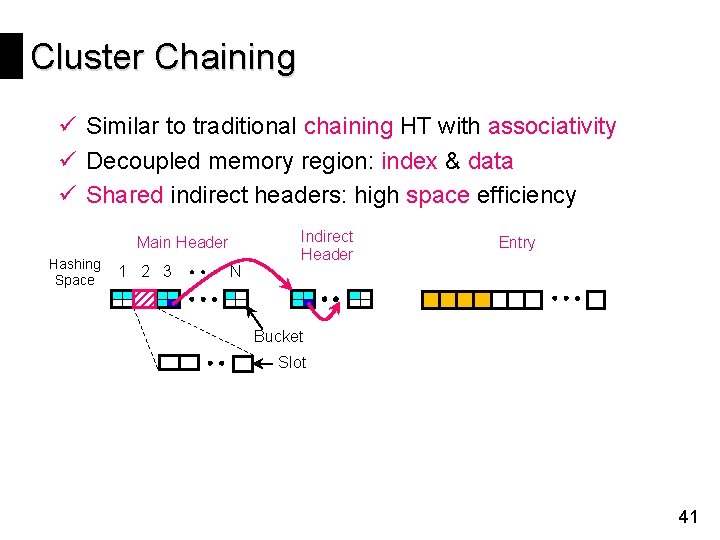

Cluster Chaining
- Similar to a traditional chaining hash table with associativity
- Decoupled memory regions: index & data
- Shared indirect headers: high space efficiency

Figure: hashing space of main-header buckets 1..N with slots, plus indirect headers and entries
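Below is a minimal sketch of cluster chaining, based on our reading of the structure above. Main-header buckets have a fixed associativity. Overflow chains through a shared pool of indirect-header buckets, and entries live in a separate data region referenced by offset. Class and method names are hypothetical, and updates of existing keys are not handled. `lookup` counts one bucket read per header visited, as a stand-in for RDMA READs of the index.

```python
class Bucket:
    def __init__(self):
        self.slots = []   # up to `assoc` (key, offset) pairs
        self.next = None  # index into the shared indirect-header pool


class ClusterChainingTable:
    def __init__(self, n_buckets, assoc=8):
        self.assoc = assoc
        self.main = [Bucket() for _ in range(n_buckets)]  # main headers
        self.indirect = []  # shared pool of indirect-header buckets
        self.data = []      # decoupled data region: (key, value) entries

    def _chain(self, key):
        bucket = self.main[hash(key) % len(self.main)]
        while bucket is not None:
            yield bucket
            bucket = self.indirect[bucket.next] if bucket.next is not None else None

    def insert(self, key, value):
        self.data.append((key, value))
        offset = len(self.data) - 1
        last = None
        for bucket in self._chain(key):
            if len(bucket.slots) < self.assoc:
                bucket.slots.append((key, offset))
                return
            last = bucket
        # Every bucket in the chain is full: link a new indirect header.
        self.indirect.append(Bucket())
        last.next = len(self.indirect) - 1
        self.indirect[-1].slots.append((key, offset))

    def lookup(self, key):
        """Return (value or None, number of header buckets read)."""
        reads = 0
        for bucket in self._chain(key):
            reads += 1
            for k, offset in bucket.slots:
                if k == key:
                    return self.data[offset][1], reads
        return None, reads
```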

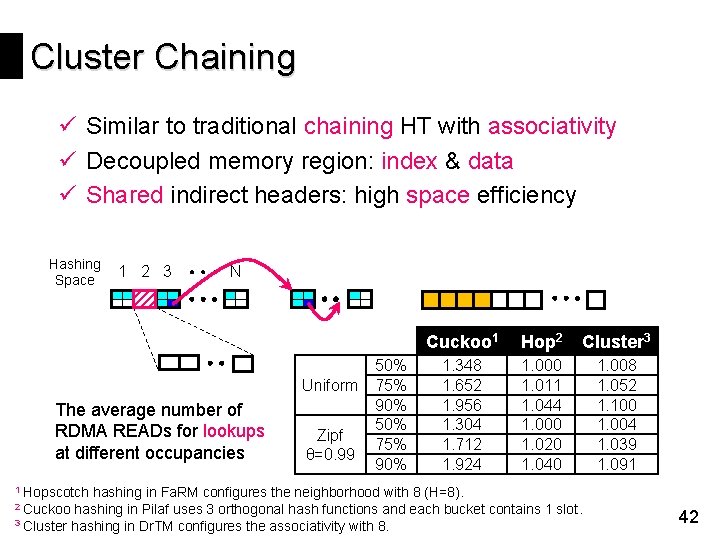

Cluster Chaining
The average number of RDMA READs for lookups at different occupancies:

| Scheme | Uniform 50% | Uniform 75% | Uniform 90% | Zipf θ=0.99 50% | Zipf θ=0.99 75% | Zipf θ=0.99 90% |
|---|---|---|---|---|---|---|
| Cuckoo¹ | 1.348 | 1.652 | 1.956 | 1.304 | 1.712 | 1.924 |
| Hopscotch² | 1.000 | 1.011 | 1.044 | 1.000 | 1.020 | 1.040 |
| Cluster³ | 1.008 | 1.052 | 1.100 | 1.004 | 1.039 | 1.091 |

¹ Cuckoo hashing in Pilaf uses 3 orthogonal hash functions, and each bucket contains 1 slot.
² Hopscotch hashing in FaRM configures the neighborhood with 8 (H=8).
³ Cluster hashing in DrTM configures the associativity with 8.

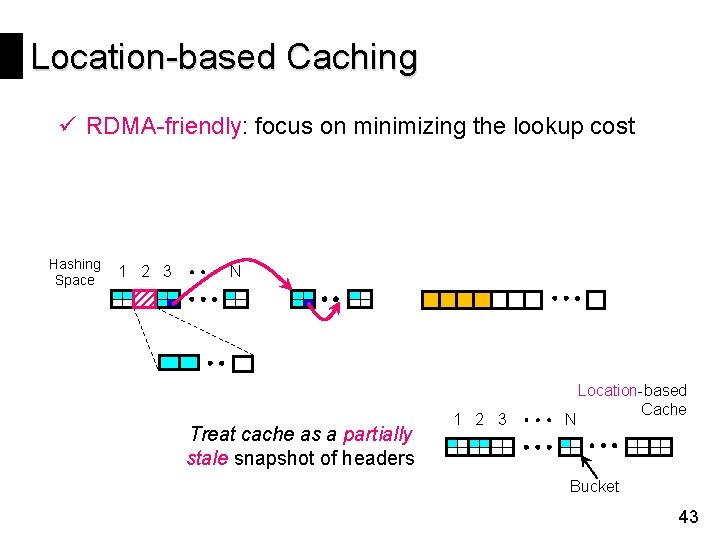

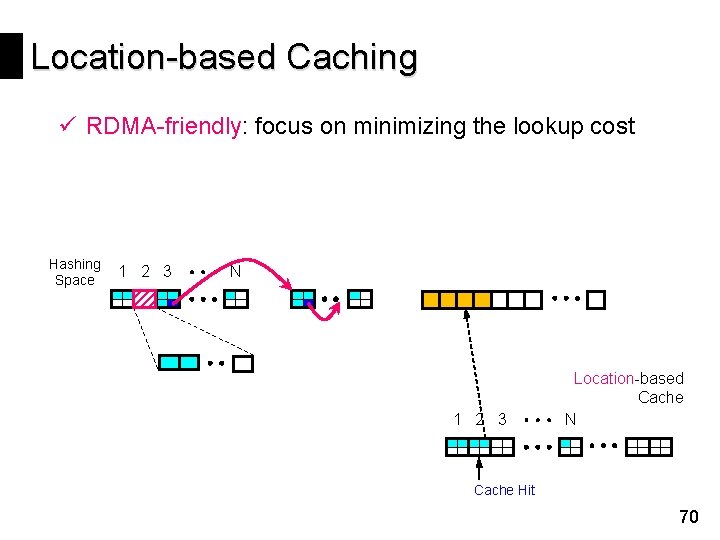

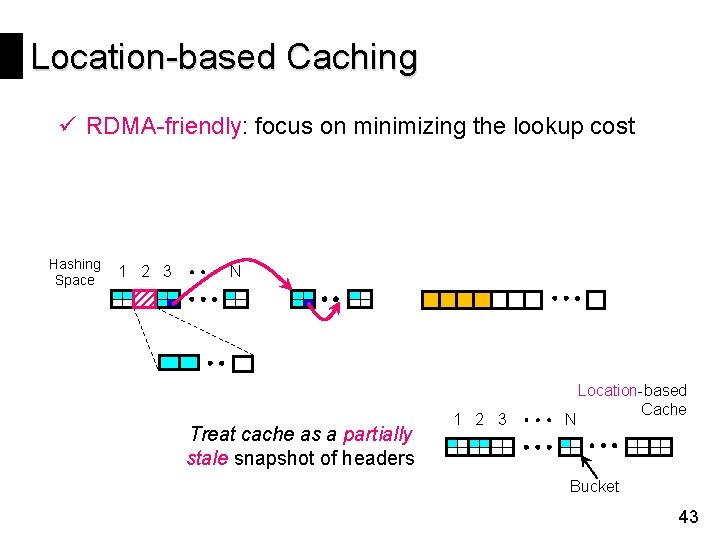

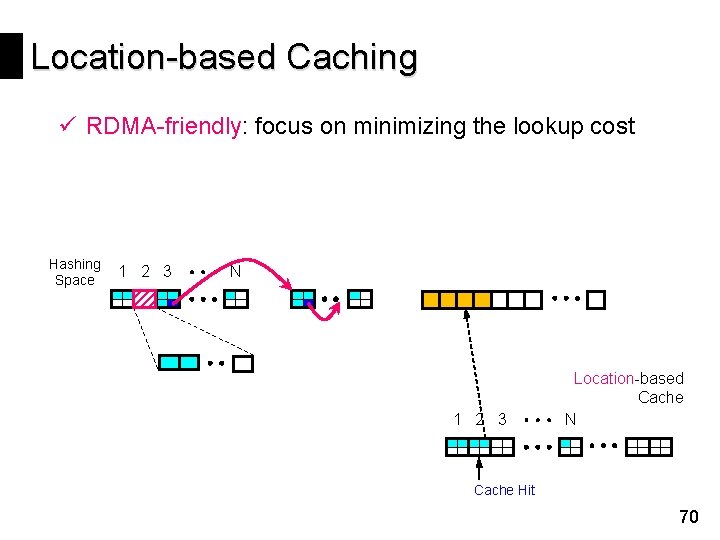

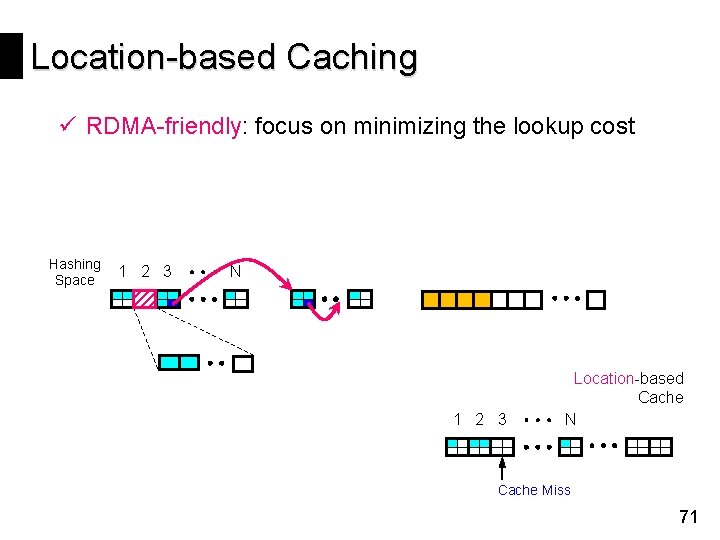

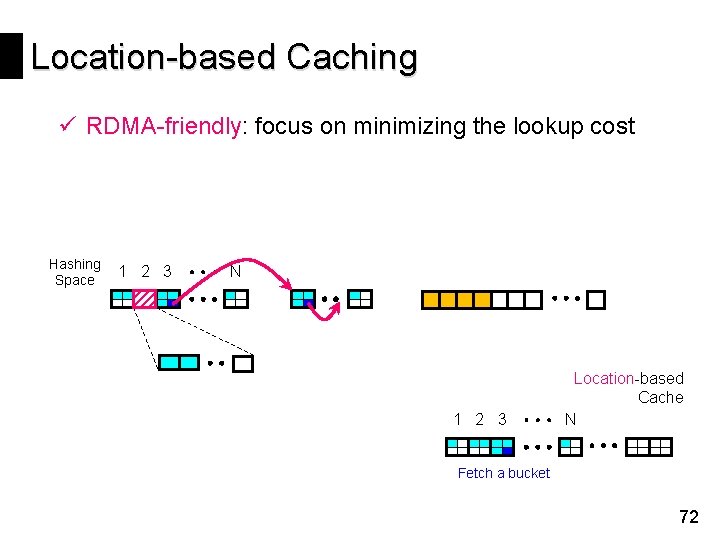

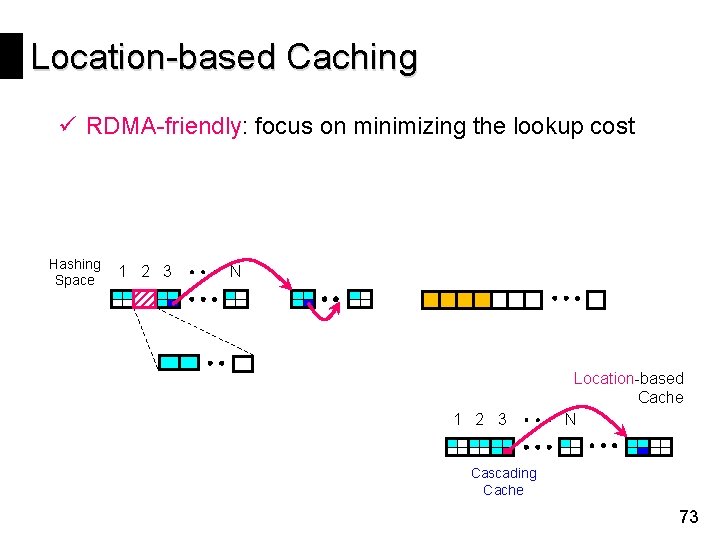

Location-based Caching
- RDMA-friendly: focuses on minimizing the lookup cost
- Treats the cache as a partially stale snapshot of the headers

Figure: hashing-space buckets 1..N mirrored by a location-based cache 1..N

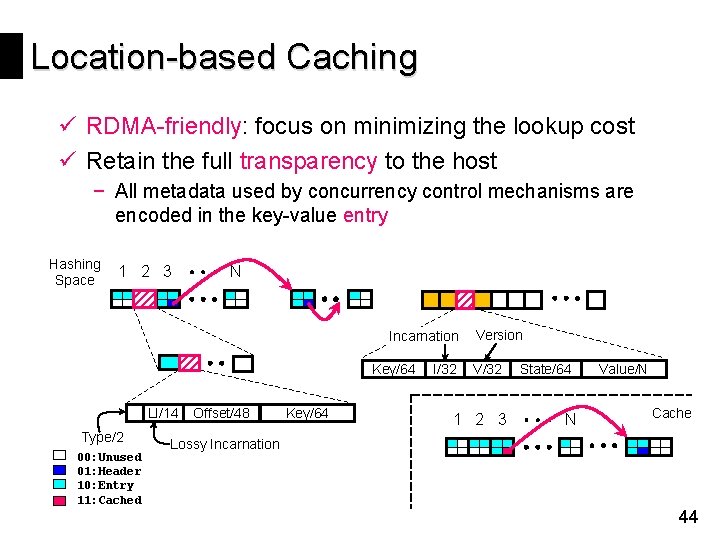

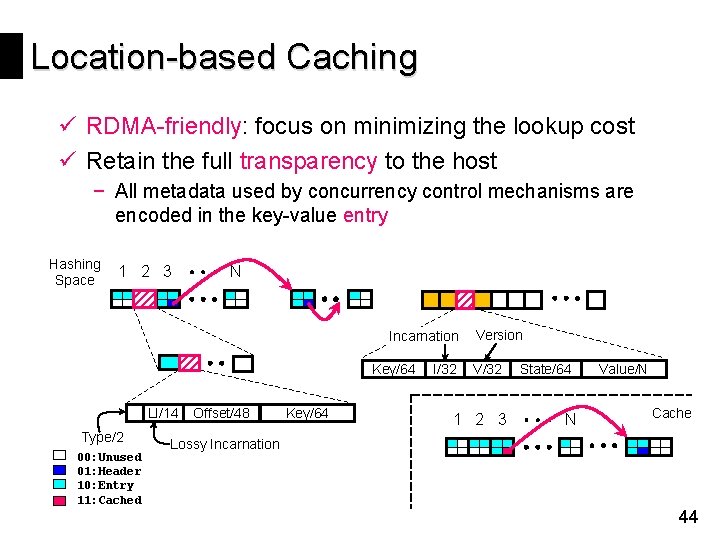

Location-based Caching
- RDMA-friendly: focuses on minimizing the lookup cost
- Retains full transparency to the host
  - All metadata used by concurrency control mechanisms is encoded in the key-value entry
- Header slot layout: Key/64 | LI/14 (lossy incarnation) | Type/2 (00: unused, 01: header, 10: entry, 11: cached) | Offset/48
- Entry layout: Key/64 | Incarnation (I/32) | Version (V/32) | State/64 | Value/N
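The header slot layout above (Key/64, LI/14, Type/2, Offset/48) fills exactly 128 bits, so a slot fits in two 64-bit words. The sketch below packs one slot with Python's `struct` module. Two details are assumptions rather than details from DrTM: LI sits in the top bits of the second word, and the byte order is little-endian.

```python
import struct

TYPE_UNUSED, TYPE_HEADER, TYPE_ENTRY, TYPE_CACHED = 0b00, 0b01, 0b10, 0b11
LI_BITS, TYPE_BITS, OFFSET_BITS = 14, 2, 48


def pack_slot(key, lossy_inc, slot_type, offset):
    """Pack one slot into 16 bytes: Key/64, then LI/14 | Type/2 | Offset/48."""
    if not (0 <= key < 1 << 64 and 0 <= lossy_inc < 1 << LI_BITS
            and 0 <= slot_type < 1 << TYPE_BITS and 0 <= offset < 1 << OFFSET_BITS):
        raise ValueError("field out of range")
    meta = (lossy_inc << (TYPE_BITS + OFFSET_BITS)) | (slot_type << OFFSET_BITS) | offset
    return struct.pack("<QQ", key, meta)


def unpack_slot(raw):
    key, meta = struct.unpack("<QQ", raw)
    lossy_inc = meta >> (TYPE_BITS + OFFSET_BITS)
    slot_type = (meta >> OFFSET_BITS) & ((1 << TYPE_BITS) - 1)
    offset = meta & ((1 << OFFSET_BITS) - 1)
    return key, lossy_inc, slot_type, offset
```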

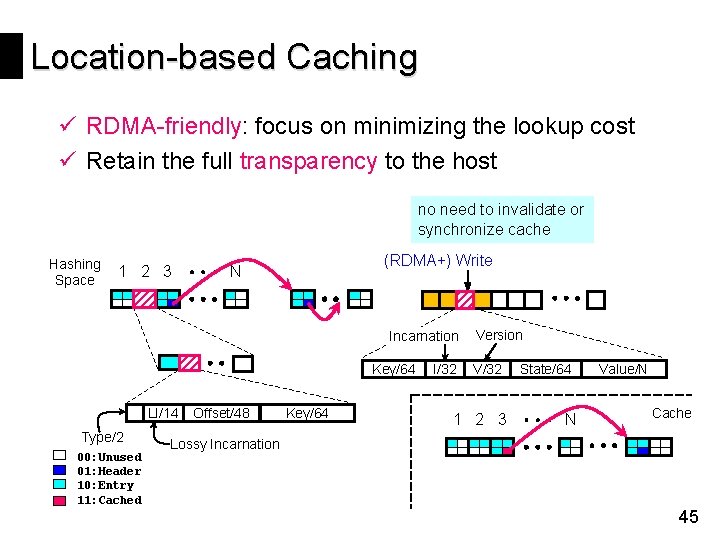

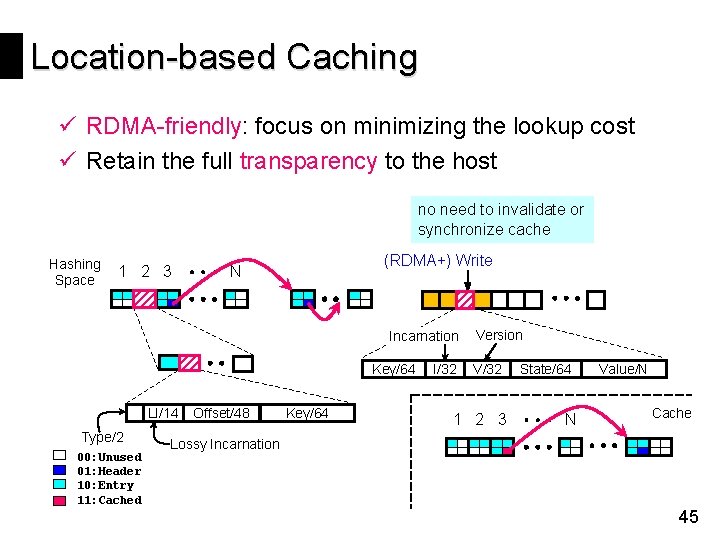

Location-based Caching
- Retains full transparency to the host: a (RDMA) write needs no cache invalidation or synchronization, since the cache holds locations rather than values

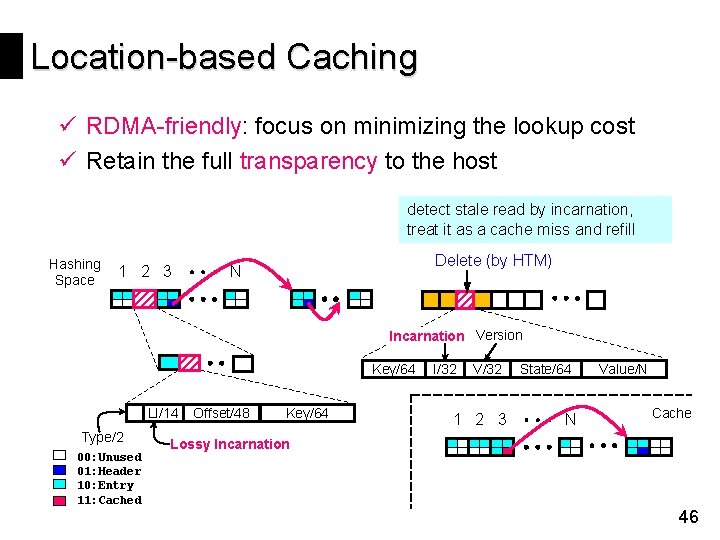

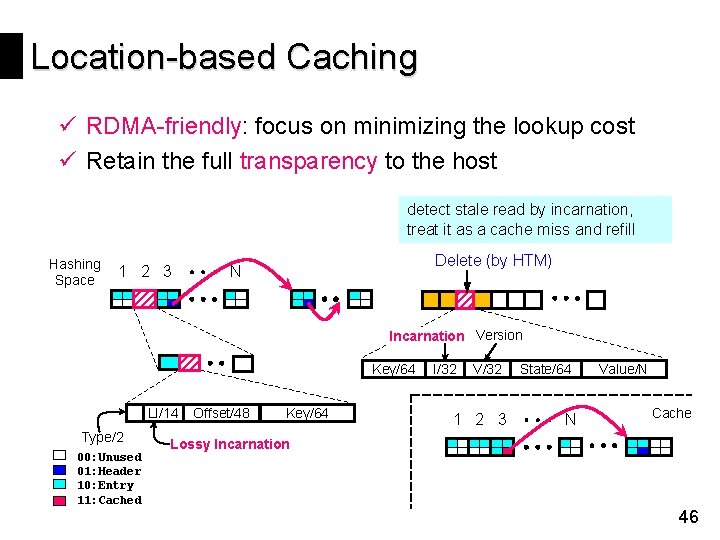

Location-based Caching
- Retains full transparency to the host: a stale read (e.g., after the host deletes an entry using HTM) is detected by the incarnation, treated as a cache miss, and refilled
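The stale-read check can be sketched as follows. The cache here is a hypothetical dictionary from key to (offset, lossy incarnation). DrTM's cache mirrors header buckets instead, so treat this as a simplification. A delete on the host bumps the entry's incarnation. A later lookup through the old location then sees a mismatch, treats it as a miss, and refills the cache.

```python
from dataclasses import dataclass

LI_MASK = (1 << 14) - 1  # the cache keeps only a lossy 14-bit incarnation


@dataclass
class Entry:
    key: str
    incarnation: int  # bumped by the host when the entry is deleted
    value: object


class LocationCache:
    """Hypothetical client-side cache: key -> (offset, lossy incarnation)."""

    def __init__(self):
        self.slots = {}

    def lookup(self, key, memory, slow_lookup):
        cached = self.slots.get(key)
        if cached is not None:
            offset, lossy_inc = cached
            entry = memory[offset]  # one RDMA READ of the entry
            if entry.key == key and (entry.incarnation & LI_MASK) == lossy_inc:
                return entry.value, "hit"
            # Incarnation (or key) mismatch: stale location, fall through.
        offset = slow_lookup(key)  # walk the remote hash table
        entry = memory[offset]
        self.slots[key] = (offset, entry.incarnation & LI_MASK)  # refill
        return entry.value, "miss"
```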

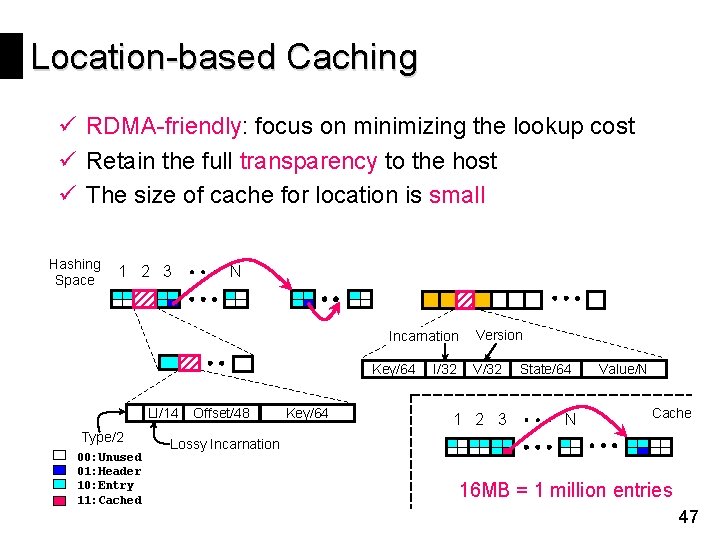

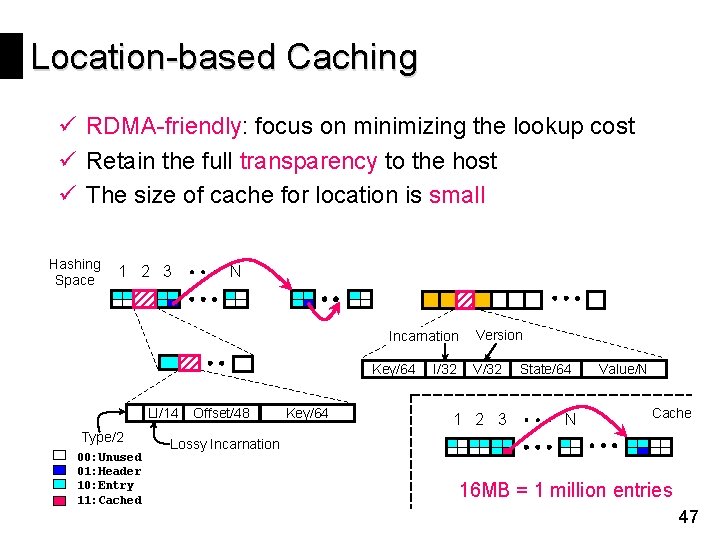

Location-based Caching
- The cache for locations is small: 16 MB holds 1 million entries (slot layout: Key/64 | LI/14 | Type/2 | Offset/48)
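If each cached location is one header slot in the layout shown (Key/64, LI/14, Type/2, Offset/48), the figure on the slide follows directly:

$$
64 + 14 + 2 + 48 = 128\ \text{bits} = 16\ \text{bytes}, \qquad 10^{6}\ \text{entries} \times 16\ \text{bytes} = 16\ \text{MB}
$$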

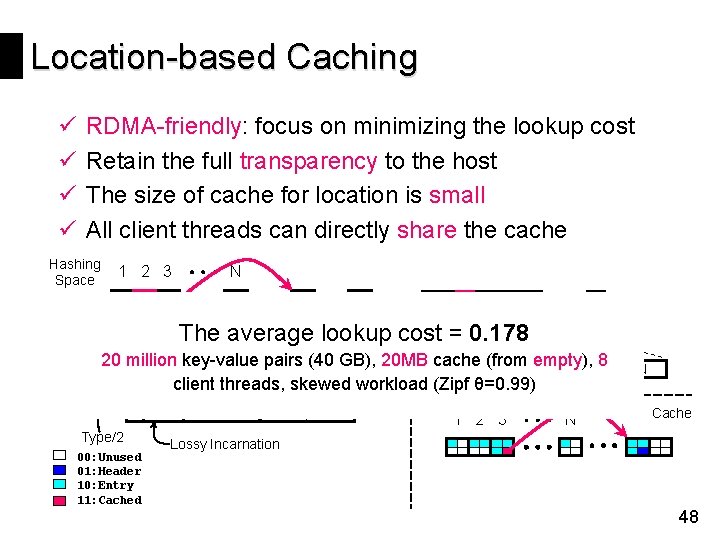

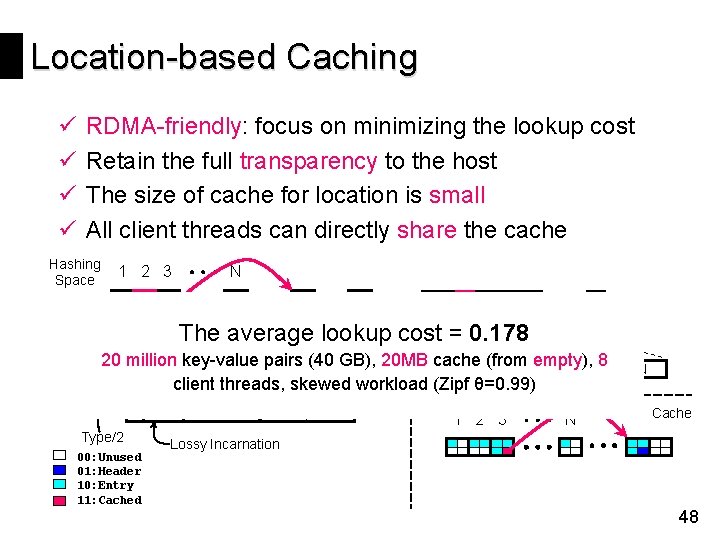

Location-based Caching
- All client threads can directly share the cache
- Average lookup cost: 0.178, with 20 million key-value pairs (40 GB), a 20 MB cache (starting empty), 8 client threads, and a skewed workload (Zipf θ=0.99)

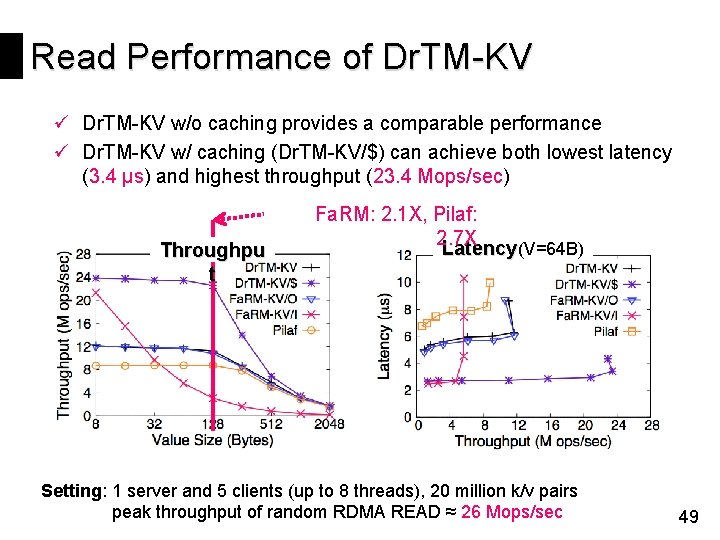

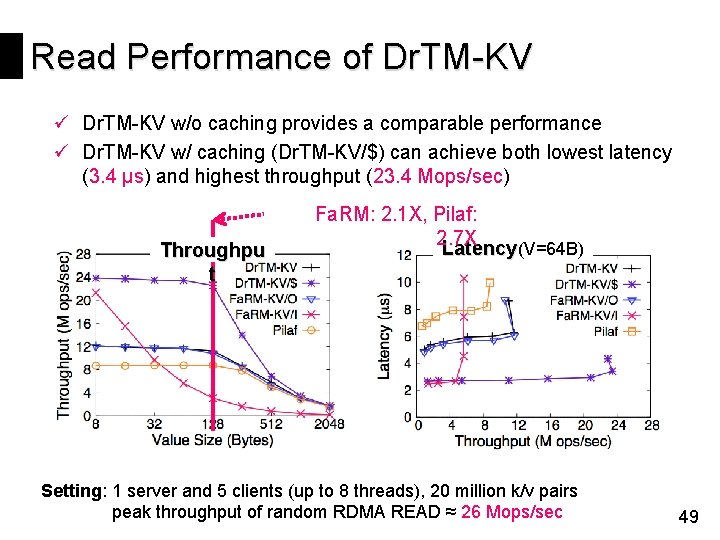

Read Performance of DrTM-KV
- DrTM-KV w/o caching provides comparable performance
- DrTM-KV w/ caching (DrTM-KV/$) achieves both the lowest latency (3.4 μs) and the highest throughput (23.4 Mops/sec), 2.1X that of FaRM and 2.7X that of Pilaf
- Setting: 1 server and 5 clients (up to 8 threads), 20 million k/v pairs; the peak throughput of random RDMA READ is ≈ 26 Mops/sec

Figure: throughput and latency (V=64 B)


Other Specific Implementation Details
- Transaction chopping: reduces the HTM working set
- Fine-grained RTM fallback handler
- Atomicity issues: RDMA CAS vs. local CAS
- Horizontal scaling across sockets: logical nodes
- Avoiding remote range queries
- Platform: RTM-enabled Intel E5-2650 v3, Mellanox ConnectX-3 56 Gbps InfiniBand


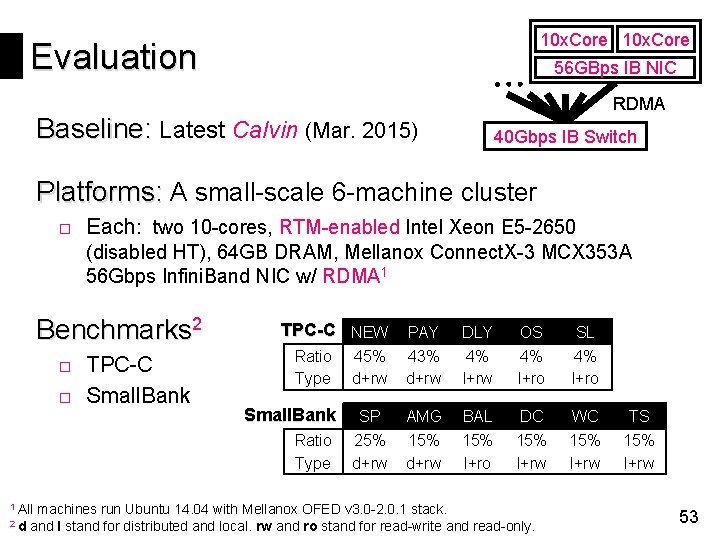

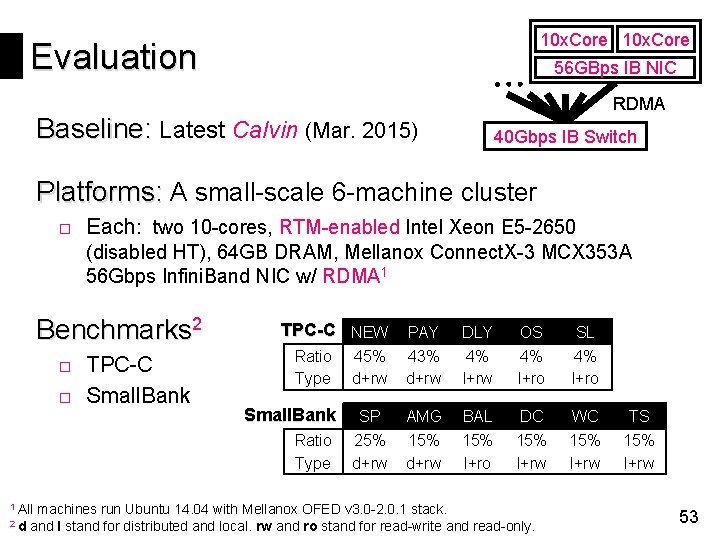

Evaluation
- Baseline: latest Calvin (Mar. 2015)
- Platform: a small-scale 6-machine cluster connected by a 40 Gbps IB switch
  - Each machine: two 10-core RTM-enabled Intel Xeon E5-2650 v3 processors (HT disabled), 64 GB DRAM, and a Mellanox ConnectX-3 MCX353A 56 Gbps InfiniBand NIC w/ RDMA¹
- Benchmarks²: TPC-C and SmallBank

| TPC-C | NEW | PAY | DLY | OS | SL |
|---|---|---|---|---|---|
| Ratio | 45% | 43% | 4% | 4% | 4% |
| Type | d+rw | d+rw | l+rw | l+ro | l+ro |

| SmallBank | SP | AMG | BAL | DC | WC | TS |
|---|---|---|---|---|---|---|
| Ratio | 25% | 15% | 15% | 15% | 15% | 15% |
| Type | d+rw | d+rw | l+ro | l+rw | l+rw | l+rw |

¹ All machines run Ubuntu 14.04 with the Mellanox OFED v3.0-2.0.1 stack.
² d and l stand for distributed and local; rw and ro stand for read-write and read-only.

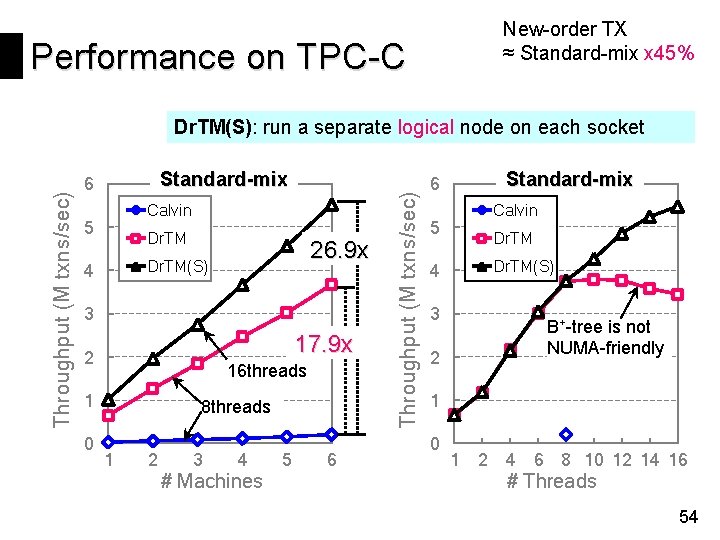

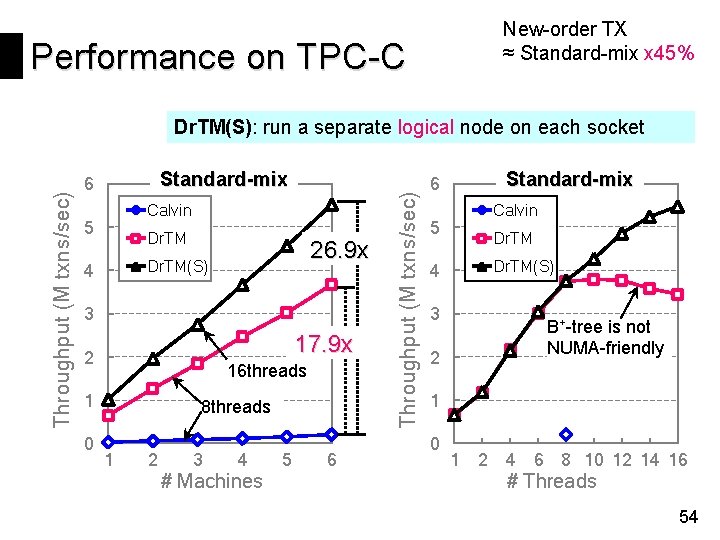

Performance on TPC-C
- DrTM(S): runs a separate logical node on each socket
- New-order TX throughput ≈ standard-mix throughput × 45%

Figure: standard-mix throughput (M txns/sec) vs. # machines (1-6) for Calvin, DrTM, and DrTM(S), annotated with speedups of 26.9x and 17.9x (labels: 16 threads, 8 threads)
Figure: standard-mix throughput (M txns/sec) vs. # threads (1-16) for Calvin and DrTM(S); annotation: B+-tree is not NUMA-friendly

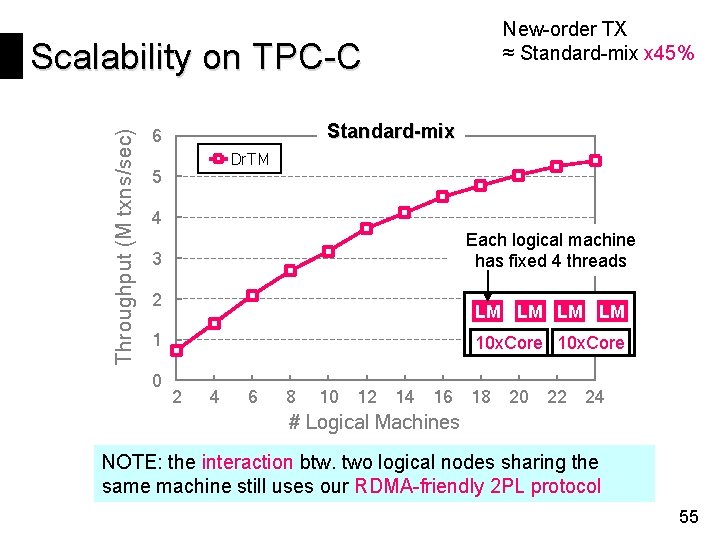

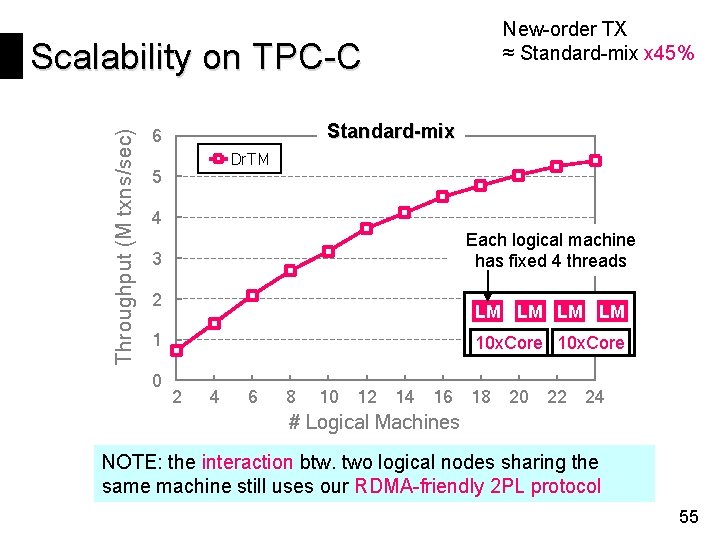

Scalability on TPC-C
- Each logical machine (LM) has a fixed 4 threads
- Note: the interaction between two logical nodes sharing the same machine still uses our RDMA-friendly 2PL protocol
- New-order TX throughput ≈ standard-mix throughput × 45%

Figure: standard-mix throughput (M txns/sec) vs. # logical machines (2-24) for DrTM

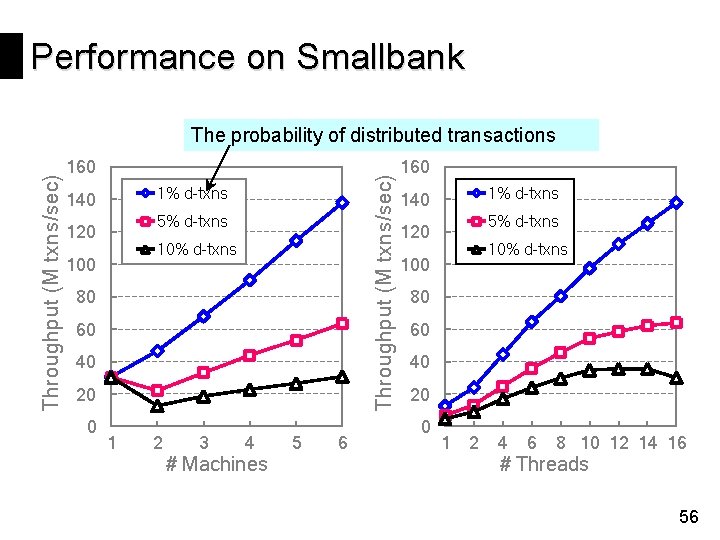

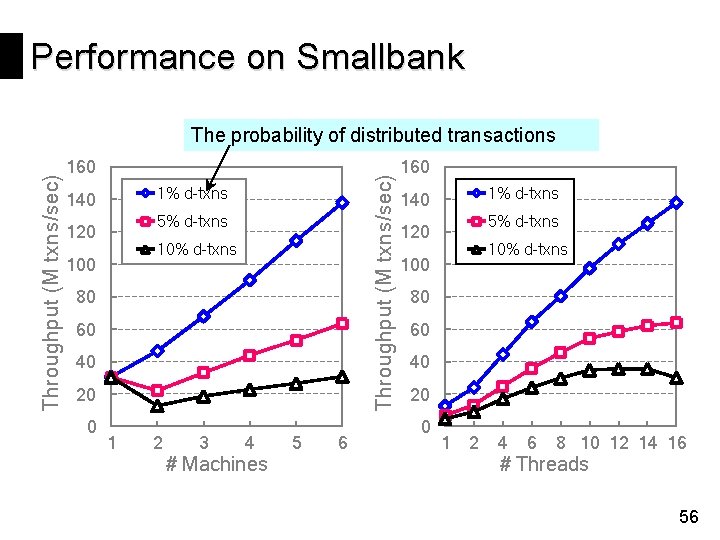

Performance on SmallBank

Figure: throughput (M txns/sec) vs. # machines (1-6) and vs. # threads (1-16), for 1%, 5%, and 10% probability of distributed transactions

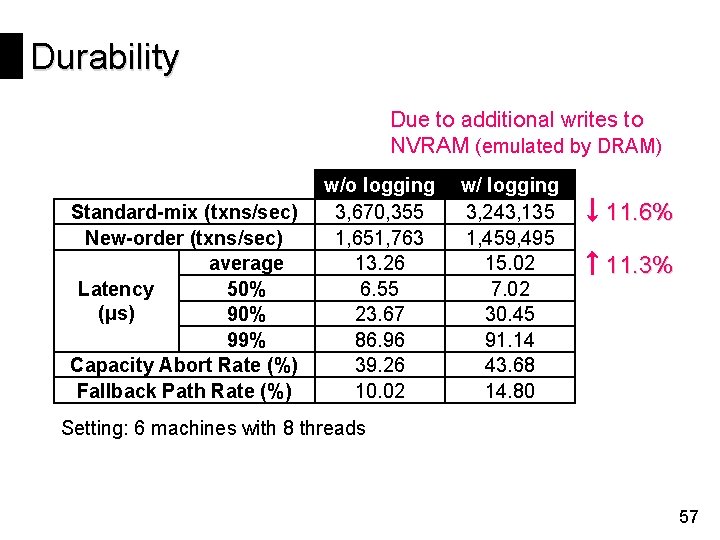

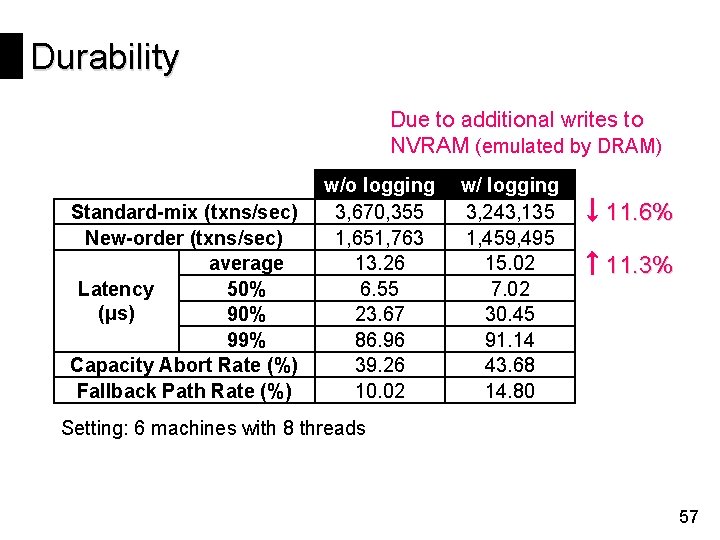

Durability
- Logging lowers throughput due to additional writes to NVRAM (emulated by DRAM)

| | Standard-mix (txns/sec) | New-order (txns/sec) | Avg. latency (μs) | 50% latency (μs) | 90% latency (μs) | 99% latency (μs) | Capacity abort rate (%) | Fallback path rate (%) |
|---|---|---|---|---|---|---|---|---|
| w/o logging | 3,670,355 | 1,651,763 | 13.26 | 6.55 | 23.67 | 86.96 | 39.26 | 10.02 |
| w/ logging | 3,243,135 | 1,459,495 | 15.02 | 7.02 | 30.45 | 91.14 | 43.68 | 14.80 |
| Overhead | 11.6% | 11.3% | | | | | | |

Setting: 6 machines with 8 threads

Limitations of DrTM
- Requires advance knowledge of the read/write sets of transactions
- Provides only an HTM/RDMA-friendly hash table for unordered stores, w/o B+-tree support
- Preserves durability rather than availability in case of machine failures

Conclusion
- The high COST of concurrency control in distributed transactions calls for new designs
- New hardware technologies open up opportunities
- DrTM: the first design and implementation combining HTM and RDMA to boost in-memory transaction systems
  - Achieves orders-of-magnitude higher throughput and lower latency than prior general designs

Thanks
DrTM: http://ipads.se.sjtu.edu.cn/pub/projects/drtm
Institute of Parallel and Distributed Systems
Questions?

Backup

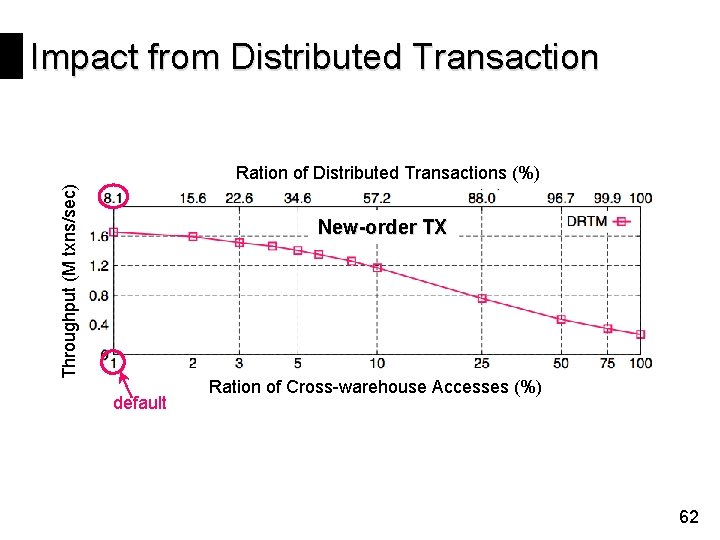

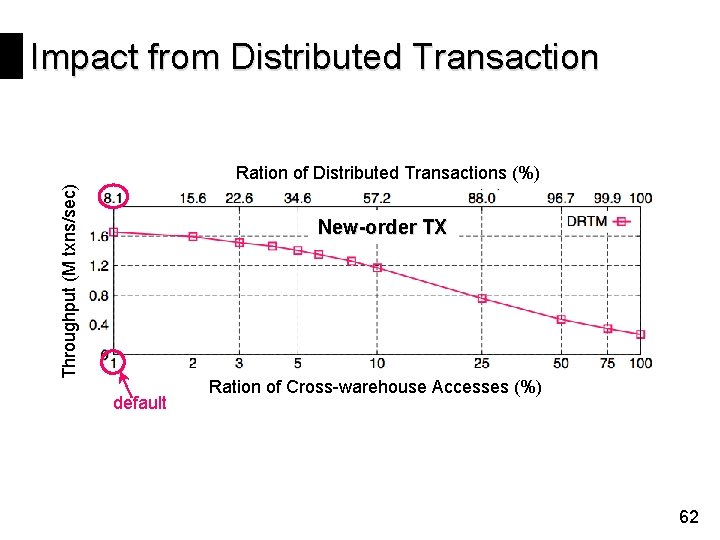

Impact from Distributed Transactions

Figure: throughput (M txns/sec) vs. ratio of distributed transactions (%) and vs. ratio of cross-warehouse accesses (%) for New-order TX, with the default setting marked

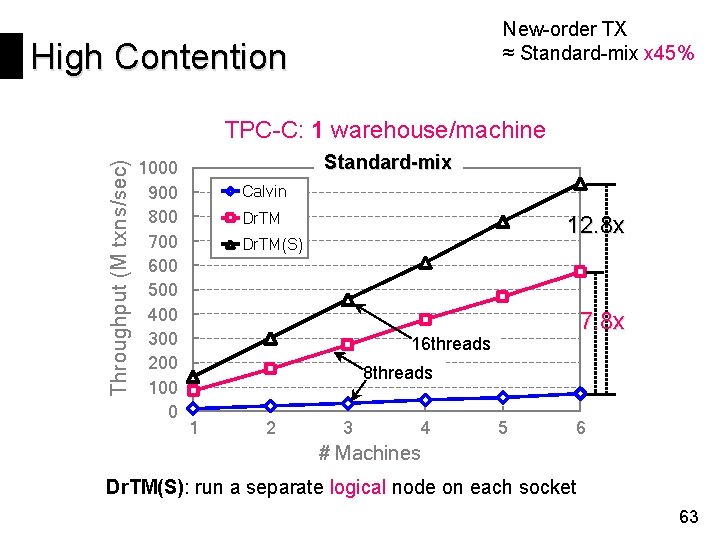

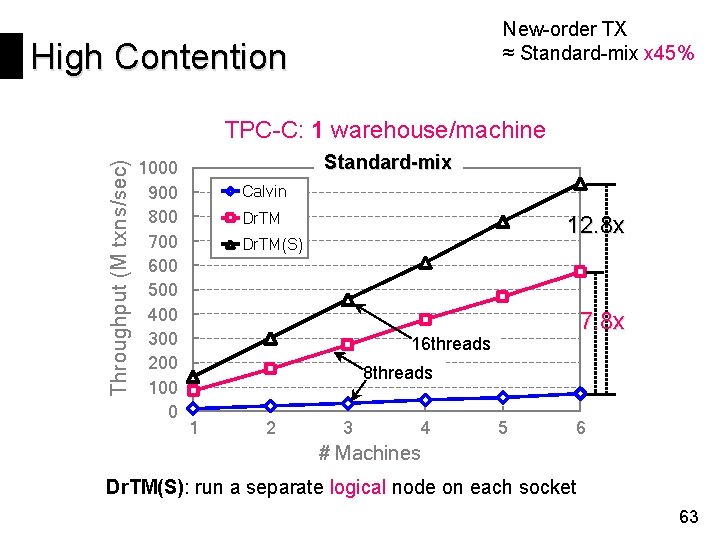

High Contention
- TPC-C with 1 warehouse per machine
- DrTM(S): runs a separate logical node on each socket
- New-order TX throughput ≈ standard-mix throughput × 45%

Figure: standard-mix throughput vs. # machines (1-6) for Calvin, DrTM, and DrTM(S), annotated with speedups of 12.8x and 7.8x (labels: 16 threads, 8 threads)

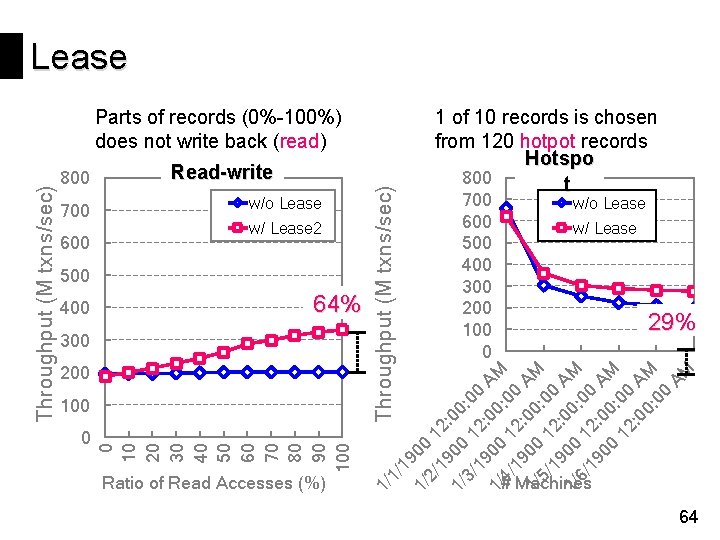

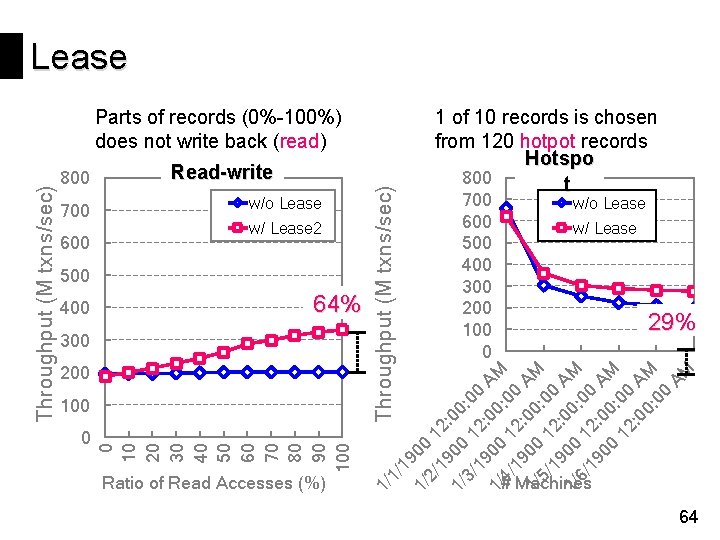

Lease

Figure: Read-write: throughput (M txns/sec) w/ and w/o lease vs. ratio of read accesses (%), where a part of the records (0%-100%) is read and not written back; annotated improvement: 64%
Figure: Hotspot: throughput (M txns/sec) w/ and w/o lease vs. # machines, where 1 of 10 records is chosen from 120 hotspot records; annotated improvement: 29%

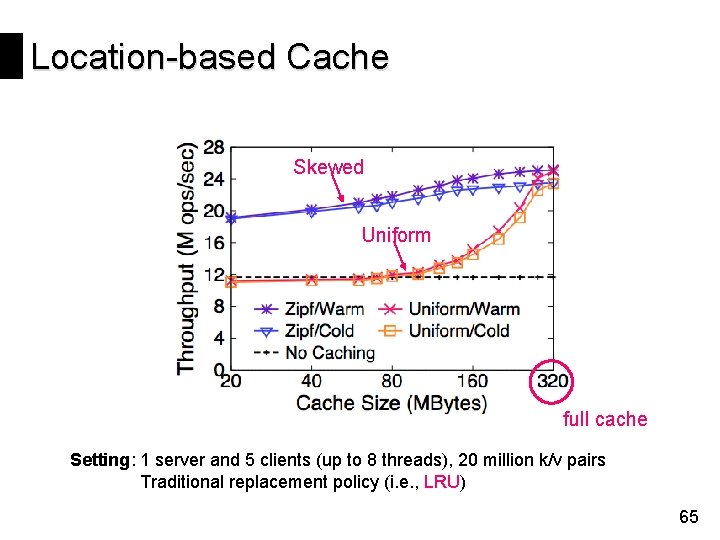

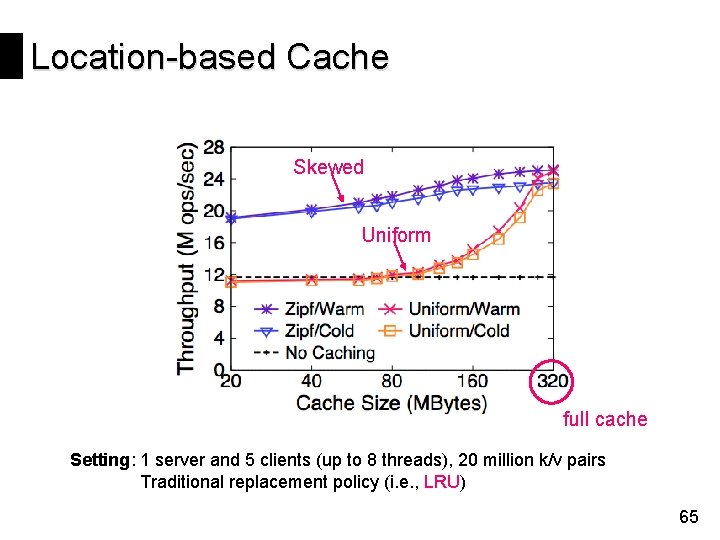

Location-based Cache

Figure: results under skewed and uniform workloads, with labels "full cache" and "traditional replacement policy (i.e., LRU)"; setting: 1 server and 5 clients (up to 8 threads), 20 million k/v pairs

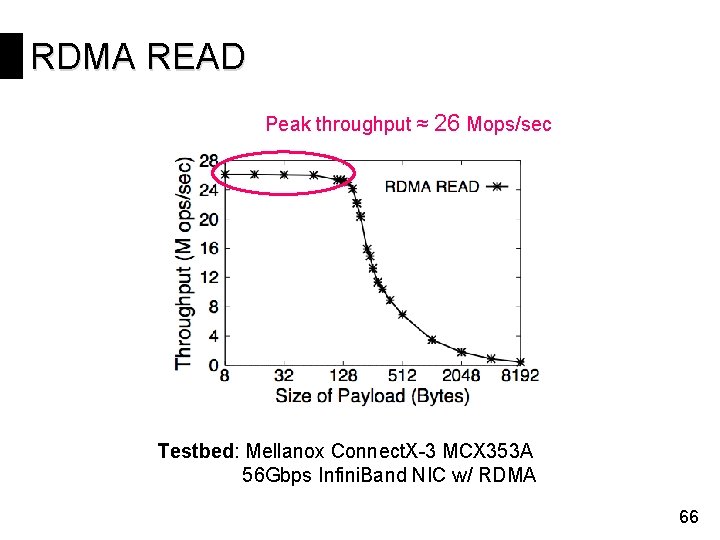

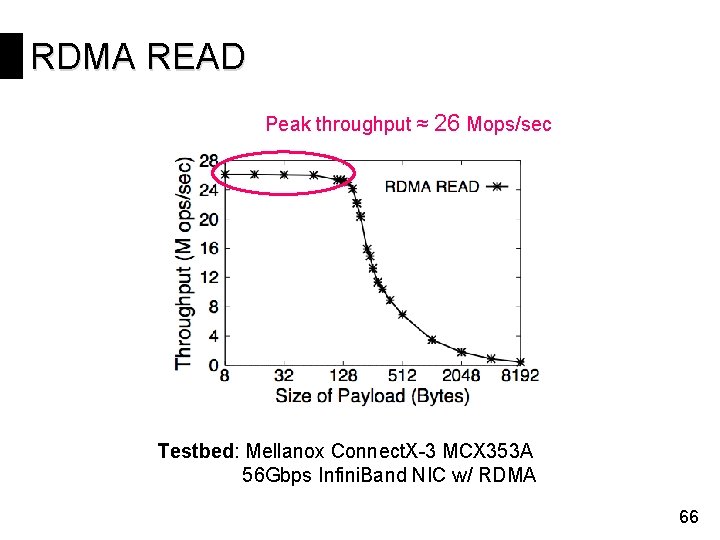

RDMA READ
- Peak throughput ≈ 26 Mops/sec
- Testbed: Mellanox ConnectX-3 MCX353A 56 Gbps InfiniBand NIC w/ RDMA

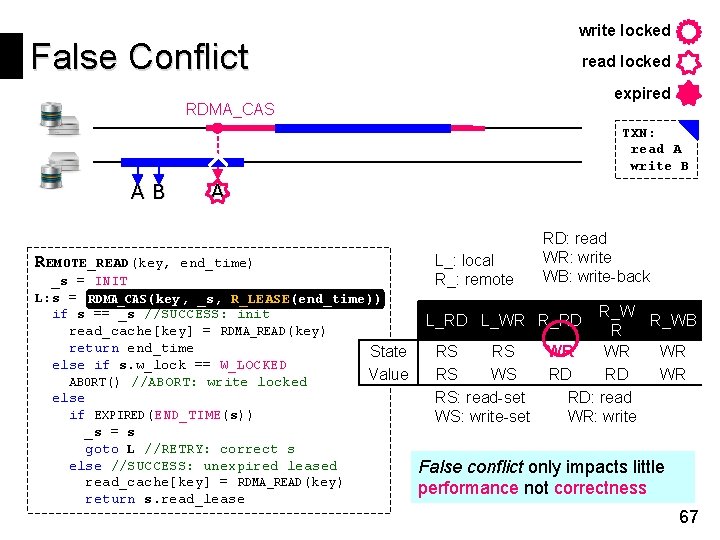

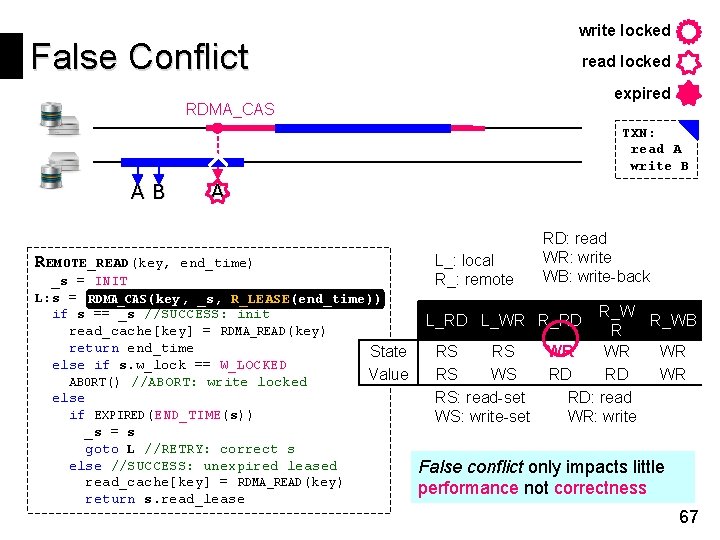

False Conflict
- Example TXN: read A, write B; the state word of each record is updated with RDMA CAS (states: write locked, read locked, expired)
- `REMOTE_READ(key, end_time)`
  - `_s = INIT`
  - `L: s = RDMA_CAS(key, _s, R_LEASE(end_time))`
  - If `s == _s`: SUCCESS (init): `read_cache[key] = RDMA_READ(key)`; return `end_time`
  - Else if `s.w_lock == W_LOCKED`: ABORT (write locked)
  - Else if `EXPIRED(END_TIME(s))`: RETRY with the correct state: `_s = s`; `goto L`
  - Else: SUCCESS (unexpired lease): `read_cache[key] = RDMA_READ(key)`; return `s.read_lease`
- False conflicts only slightly impact performance, not correctness

Figure: conflict matrix among local and remote accesses (L_: local, R_: remote; RD: read, WR: write, WB: write-back; RS: read-set, WS: write-set)
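The `REMOTE_READ` pseudocode above can be turned into a runnable single-process sketch. `RemoteRecord` is a hypothetical stand-in for a record reached by one-sided RDMA: a mutex emulates the atomic CAS, which returns the old value. The state word assumes the layout lease end-time | machine-ID (8 bits) | exclusive-bit (1 bit), with the exclusive bit least significant. `now` is passed in rather than read from a PTP-synchronized clock.

```python
import threading

END_TIME_SHIFT = 9  # assumed: end-time above 8-bit machine ID and exclusive bit
INIT = 0            # unlocked state word


class AbortTx(Exception):
    pass


class RemoteRecord:
    """Hypothetical remote record: a 64-bit state word plus a value."""

    def __init__(self, value, state=INIT):
        self.value, self.state = value, state
        self._mu = threading.Lock()  # emulates the NIC's atomic CAS

    def rdma_cas(self, expected, new):
        with self._mu:
            old = self.state
            if old == expected:
                self.state = new
            return old  # like RDMA CAS, always return the old value

    def rdma_read(self):
        return self.value


def remote_read(rec, key, end_time, now, delta, read_cache):
    expected = INIT
    while True:
        s = rec.rdma_cas(expected, end_time << END_TIME_SHIFT)  # R_LEASE(end_time)
        if s == expected:  # SUCCESS: our lease was installed
            read_cache[key] = rec.rdma_read()
            return end_time
        if s & 1:          # ABORT: the record is write-locked
            raise AbortTx(key)
        current_end = s >> END_TIME_SHIFT
        if now > current_end + delta:  # RETRY: CAS against the expired state
            expected = s
            continue
        read_cache[key] = rec.rdma_read()  # SUCCESS: share the unexpired lease
        return current_end
```

The retry branch works because a CAS succeeds only against the exact value it expects. The sketch therefore re-issues the CAS against the expired state it just observed.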

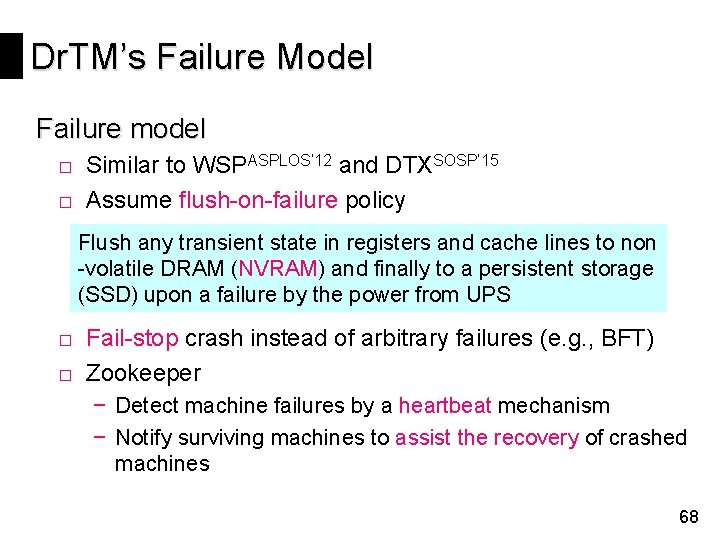

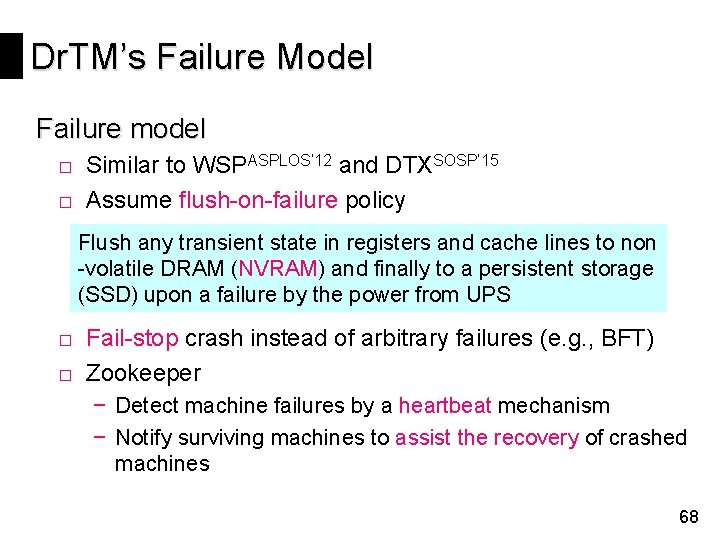

DrTM's Failure Model
- Similar to WSP (ASPLOS'12) and DTX (SOSP'15)
- Assumes a flush-on-failure policy
  - Upon a failure, power from a UPS flushes any transient state in registers and cache lines to non-volatile DRAM (NVRAM) and finally to persistent storage (SSD)
- Fail-stop crashes instead of arbitrary failures (e.g., BFT)
- ZooKeeper
  - Detects machine failures with a heartbeat mechanism
  - Notifies surviving machines to assist the recovery of crashed machines

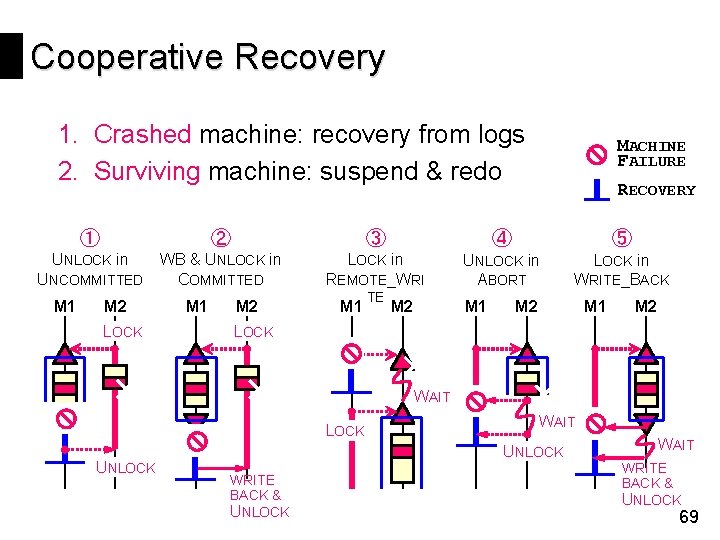

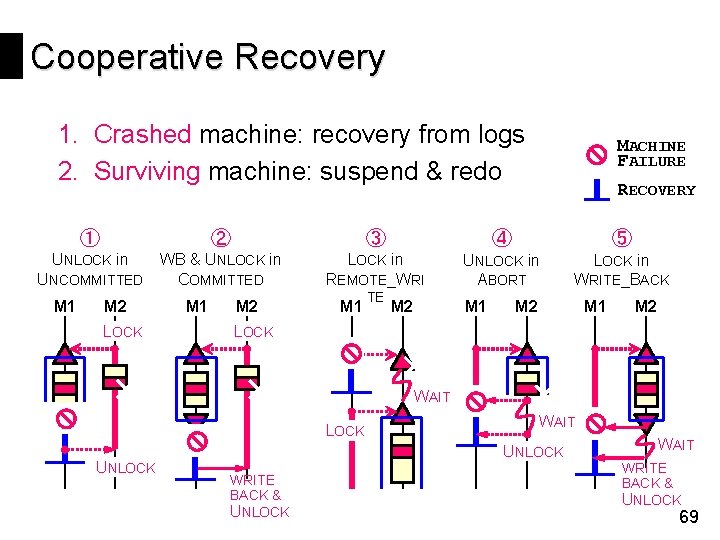

Cooperative Recovery
1. Crashed machine: recovers from its logs
2. Surviving machines: suspend & redo

Figure: machine failure and recovery timelines for failure points ①-⑤ between machines M1 and M2; case labels: LOCK in REMOTE_WRITE, UNLOCK in ABORT, LOCK in WRITE_BACK, UNLOCK in WB & UNLOCK in COMMITTED, and UNCOMMITTED; actions shown: LOCK, UNLOCK, and WRITE BACK & UNLOCK, each with a corresponding WAIT

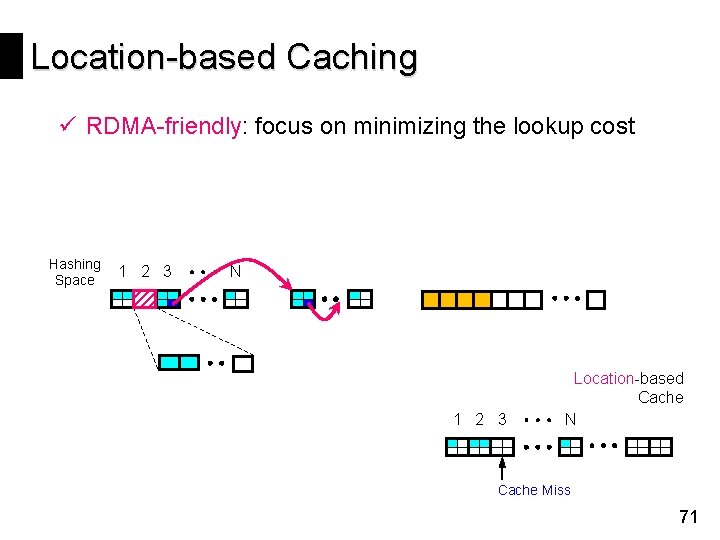

Location-based Caching
- RDMA-friendly: focuses on minimizing the lookup cost

Figure: hashing space (buckets 1..N) and location-based cache (1..N): cache hit

Location-based Caching
- RDMA-friendly: focuses on minimizing the lookup cost

Figure: hashing space (buckets 1..N) and location-based cache (1..N): cache miss

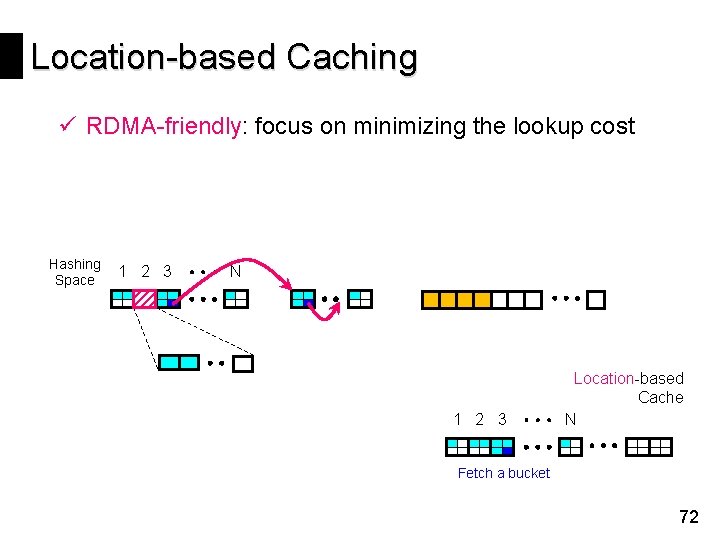

Location-based Caching
- RDMA-friendly: focuses on minimizing the lookup cost

Figure: hashing space (buckets 1..N) and location-based cache (1..N): fetch a bucket

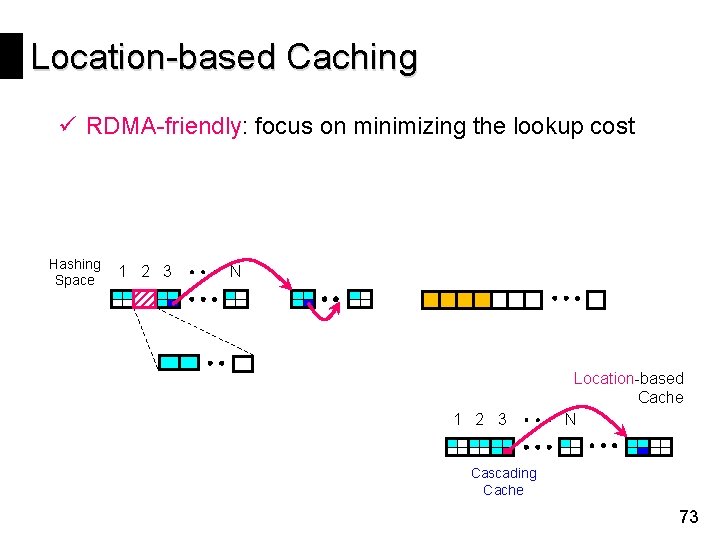

Location-based Caching
- RDMA-friendly: focuses on minimizing the lookup cost

Figure: hashing space (buckets 1..N) and location-based cache (1..N): cascading cache

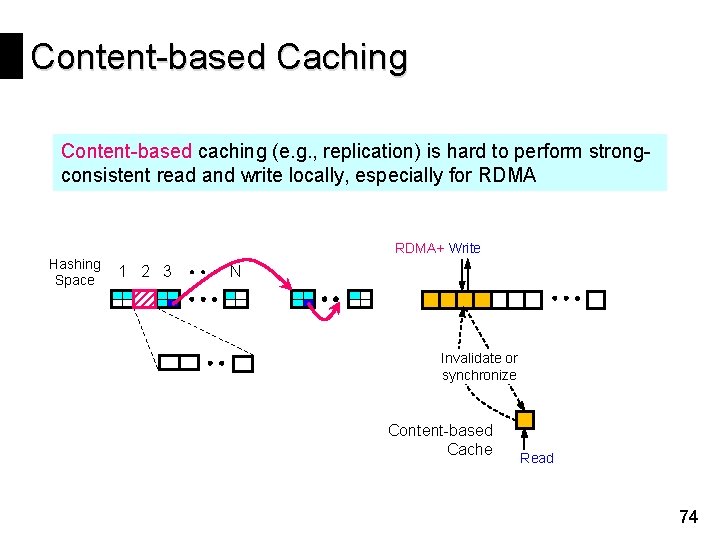

Content-based Caching
- Content-based caching (e.g., replication) makes strongly consistent local reads and writes hard, especially for RDMA

Figure: an RDMA write to the hashing space forces the content-based cache to be invalidated or synchronized before local reads

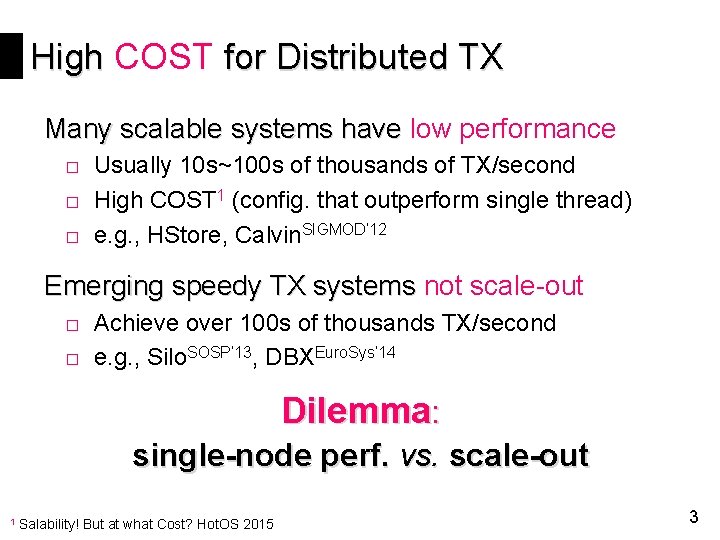

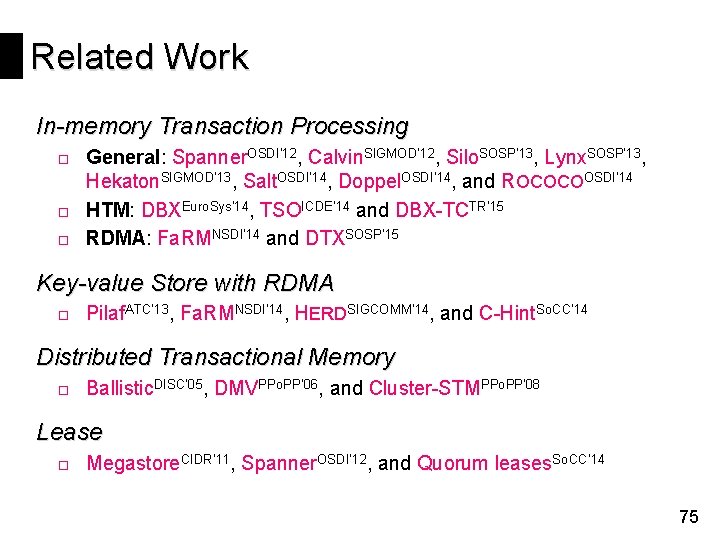

Related Work
- In-memory transaction processing
  - General: Spanner (OSDI'12), Calvin (SIGMOD'12), Silo (SOSP'13), Lynx (SOSP'13), Hekaton (SIGMOD'13), Salt (OSDI'14), Doppel (OSDI'14), and ROCOCO (OSDI'14)
  - HTM: DBX (EuroSys'14), TSO (ICDE'14), and DBX-TC (TR'15)
  - RDMA: FaRM (NSDI'14) and DTX (SOSP'15)
- Key-value stores with RDMA: Pilaf (ATC'13), FaRM (NSDI'14), HERD (SIGCOMM'14), and C-Hint (SoCC'14)
- Distributed transactional memory: Ballistic (DISC'05), DMV (PPoPP'06), and Cluster-STM (PPoPP'08)
- Lease: Megastore (CIDR'11), Spanner (OSDI'12), and Quorum leases (SoCC'14)