DOECAP Implementation of Effective QA Plans and Procedures

- Slides: 34

Presented by Joe Pardue, Technical Operations Coordinator, Department of Energy Consolidated Audit Program (DOECAP), DOE Office of Corporate Safety Programs (HSS-23), at the AQS-EED 2011 Conference, Las Vegas, NV, September 25-27, 2011.

Presentation Outline

- Analytical Services Program (ASP)
  - Department of Energy Consolidated Audit Program (DOECAP)
  - Mixed Analyte Performance Evaluation Program (MAPEP)
  - Systematic Planning and Data Assessment Tools and Training (SPADAT)
    - Visual Sample Plan (VSP)

Presentation Outline (cont.)

- Implementation of Sustainable QA Plans and Procedures
  - Analytical Laboratories
  - Treatment, Storage, and Disposal Facilities (TSDFs)

Analytical Services Program Component Elements

- Systematic Planning and Data Assessment Tools and Training (SPADAT)
- Mixed Analyte Performance Evaluation Program (MAPEP)
- Department of Energy Consolidated Audit Program (DOECAP)

Mission of the Analytical Services Program

- Foster performance improvements at laboratories and TSDFs
- Reduce uncertainty in environmental data quality, thereby increasing confidence in the data used in decision-making
- Ensure accountability for, and proper treatment and disposition of, radiological and hazardous waste
- Identify potential DOE liabilities and risks

DOECAP Overview: Program Purpose/Scope

- DOECAP DOES NOT certify, accredit, or approve laboratories/TSDFs
- Consolidated audits provide information that program line/field managers use to determine the status of compliance with contractual agreements, as well as risks and liabilities
- Audit findings are based on nonconformances with DOE contractual requirements, the Quality Systems for Analytical Services (QSAS), and SOPs, and/or noncompliance with federal/state requirements

DOECAP Overview (cont.)

- Year-round tracking of all corrective actions (about 400 findings/observations)
- Lessons-learned initiatives
  - Weekly conference calls, Annual Workshop, Annual FY Report
- FY 2011: 37 DOECAP audits (26 laboratories/11 TSDFs)

MAPEP Overview: Program Purpose/Scope

- Test and evaluate environmental analytical laboratory performance for specific analytes and matrices
- Provide defensible environmental data for radionuclides, inorganic metals, and organics
- Only DOE reference laboratory
- ISO/IEC 17025 and ISO/IEC 17043 accredited proficiency testing (PT) provider with traceability to NIST
- 130 participating laboratories (105 domestic/25 international)

MAPEP Background: Program Focus

- Radiological, stable inorganic, and organic constituents
- Soil, water, air filter, and vegetation media
- Interaction with DOECAP and QSAS
- MAPEP participation required for all laboratories performing analyses for DOE
- Technical assistance

MAPEP Laboratories

MAPEP Laboratory Participants:

- 110 domestic laboratories
- 27 international laboratories
  - Nuclear Test Ban Treaty laboratories: New Zealand (1), Brazil (1), Ecuador (1), Spain (1)
  - Cooperative Monitoring: Canada (2), United Kingdom (6)

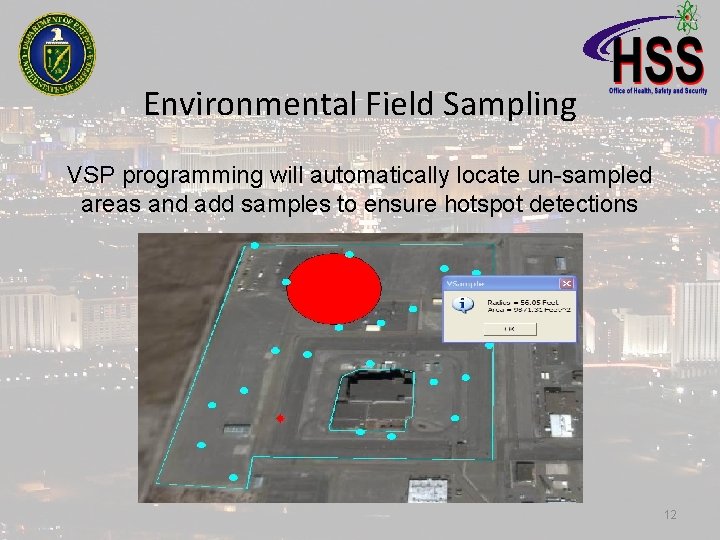

SPADAT Visual Sample Plan (VSP) Overview

- Improves the quality of environmental field and facility sampling strategies
- Minimizes the number of samples needed to meet regulatory requirements

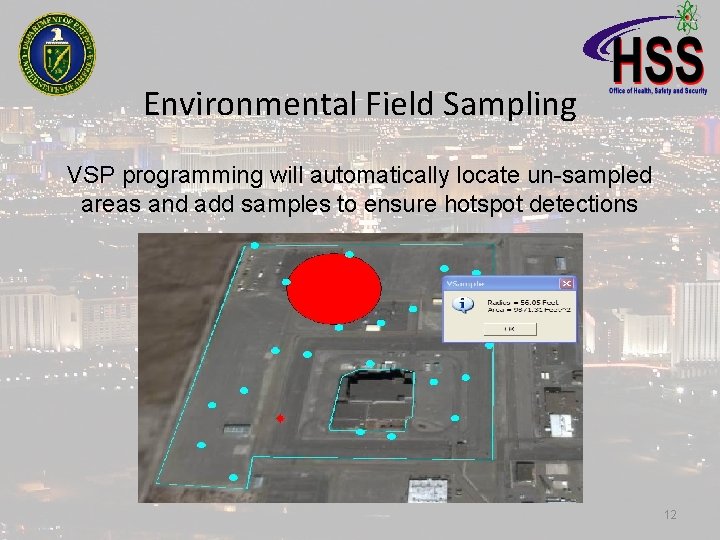

Environmental Field Sampling

- VSP can automatically locate unsampled areas and add samples to help ensure that hotspots are detected

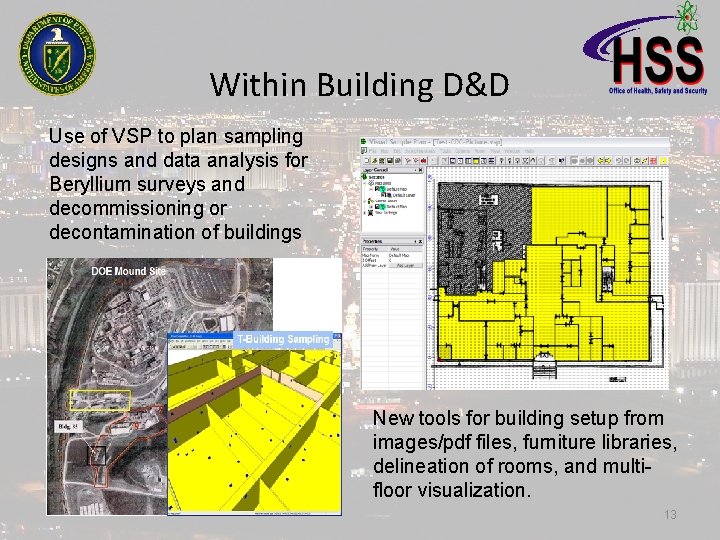

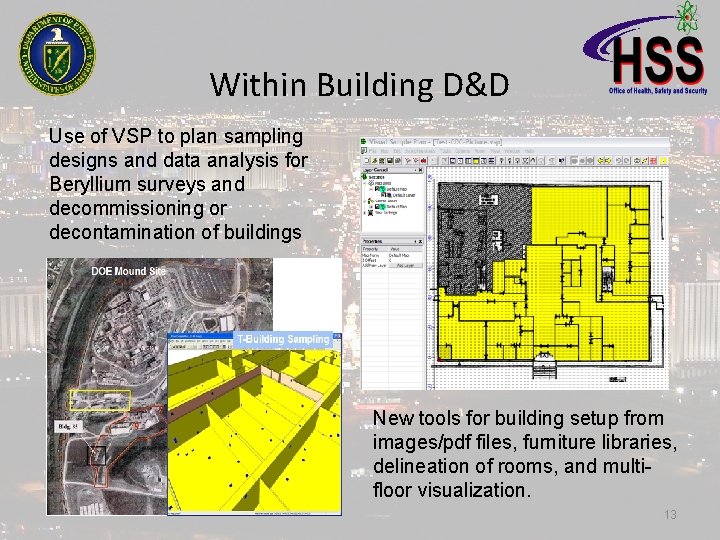

Within-Building D&D

- Use of VSP to plan sampling designs and analyze data for beryllium surveys and for the decontamination and decommissioning (D&D) of buildings
- New tools for building setup from images/PDF files, furniture libraries, room delineation, and multifloor visualization

DOECAP Laboratory Audits

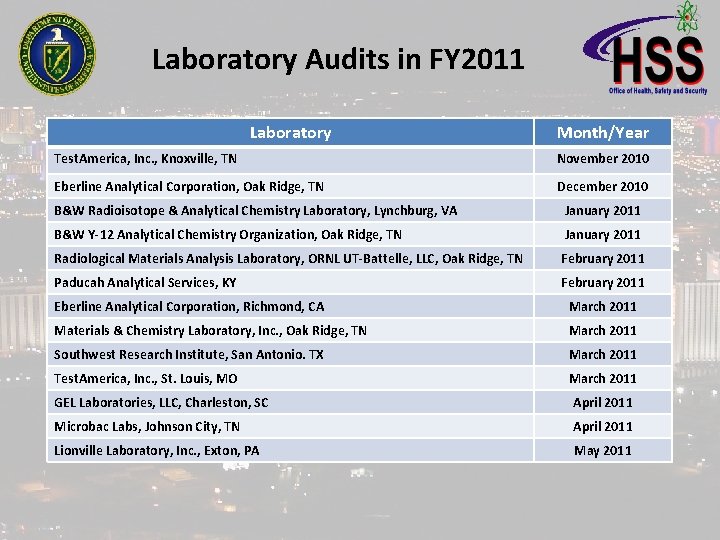

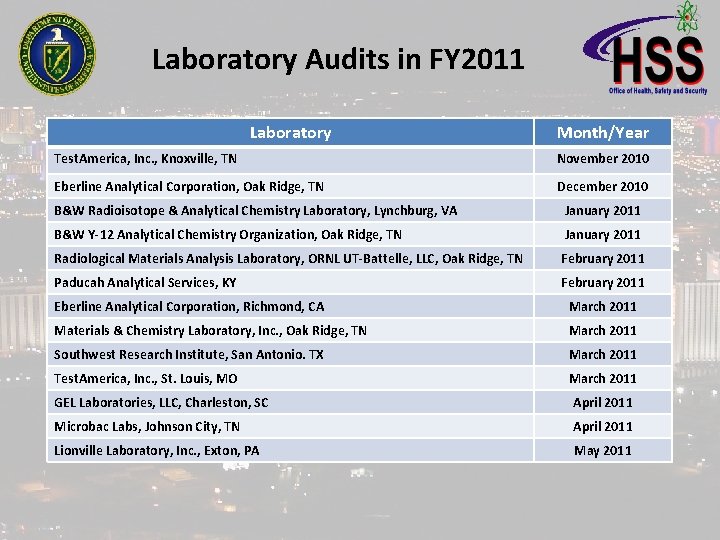

Laboratory Audits in FY 2011

| Laboratory | Month/Year |
|---|---|
| TestAmerica, Inc., Knoxville, TN | November 2010 |
| Eberline Analytical Corporation, Oak Ridge, TN | December 2010 |
| B&W Radioisotope & Analytical Chemistry Laboratory, Lynchburg, VA | January 2011 |
| B&W Y-12 Analytical Chemistry Organization, Oak Ridge, TN | January 2011 |
| Radiological Materials Analysis Laboratory, ORNL (UT-Battelle, LLC), Oak Ridge, TN | February 2011 |
| Paducah Analytical Services, KY | February 2011 |
| Eberline Analytical Corporation, Richmond, CA | March 2011 |
| Materials & Chemistry Laboratory, Inc., Oak Ridge, TN | March 2011 |
| Southwest Research Institute, San Antonio, TX | March 2011 |
| TestAmerica, Inc., St. Louis, MO | March 2011 |
| GEL Laboratories, LLC, Charleston, SC | April 2011 |
| Microbac Labs, Johnson City, TN | April 2011 |
| Lionville Laboratory, Inc., Exton, PA | May 2011 |

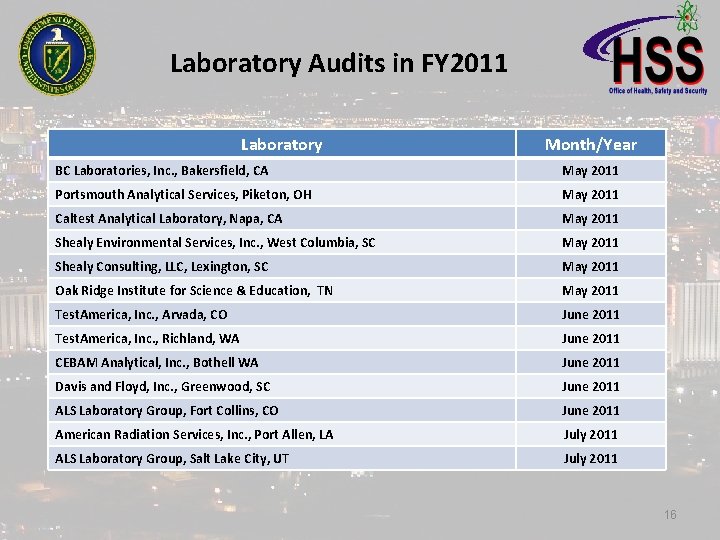

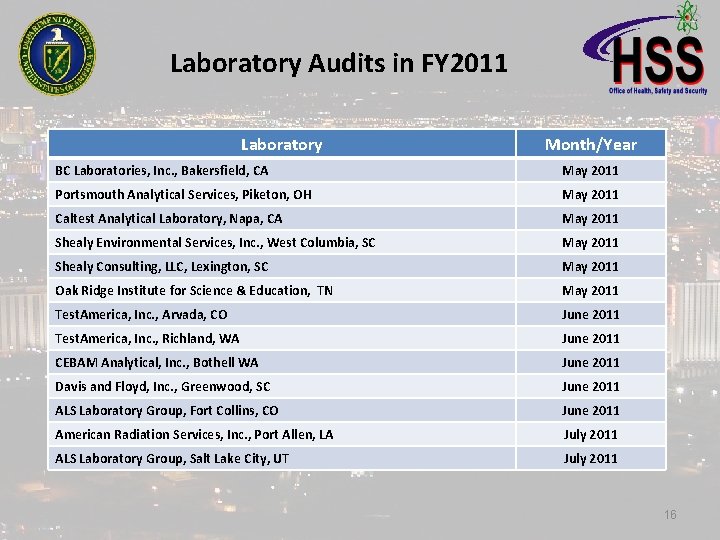

Laboratory Audits in FY 2011 (cont.)

| Laboratory | Month/Year |
|---|---|
| BC Laboratories, Inc., Bakersfield, CA | May 2011 |
| Portsmouth Analytical Services, Piketon, OH | May 2011 |
| Caltest Analytical Laboratory, Napa, CA | May 2011 |
| Shealy Environmental Services, Inc., West Columbia, SC | May 2011 |
| Shealy Consulting, LLC, Lexington, SC | May 2011 |
| Oak Ridge Institute for Science & Education, TN | May 2011 |
| TestAmerica, Inc., Arvada, CO | June 2011 |
| TestAmerica, Inc., Richland, WA | June 2011 |
| CEBAM Analytical, Inc., Bothell, WA | June 2011 |
| Davis and Floyd, Inc., Greenwood, SC | June 2011 |
| ALS Laboratory Group, Fort Collins, CO | June 2011 |
| American Radiation Services, Inc., Port Allen, LA | July 2011 |
| ALS Laboratory Group, Salt Lake City, UT | July 2011 |

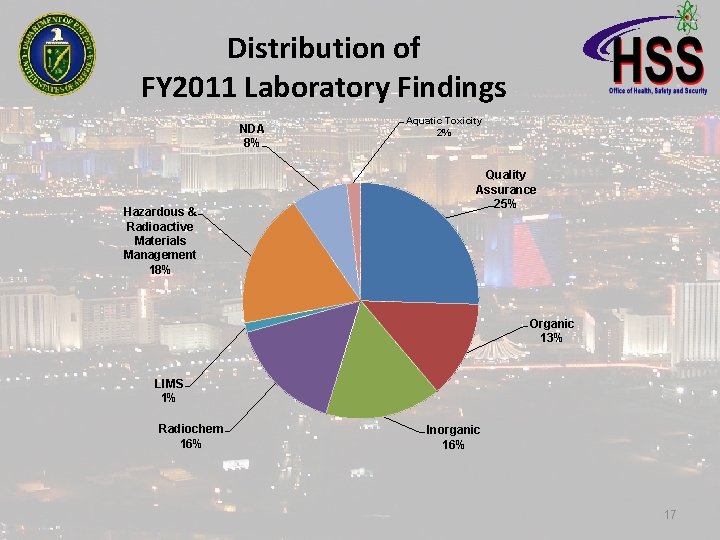

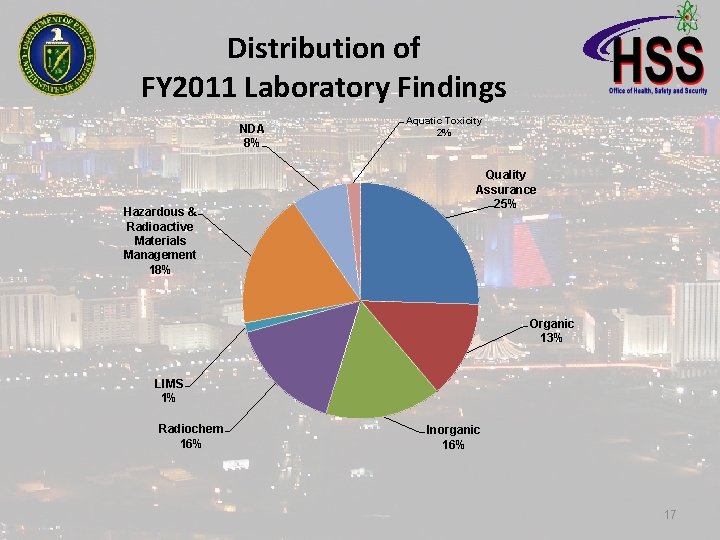

Distribution of FY 2011 Laboratory Findings

| Area | Share of findings |
|---|---|
| Quality Assurance | 25% |
| Hazardous & Radioactive Materials Management | 18% |
| Radiochem | 16% |
| Inorganic | 16% |
| Organic | 13% |
| NDA | 8% |
| Aquatic Toxicity | 2% |
| LIMS | 1% |

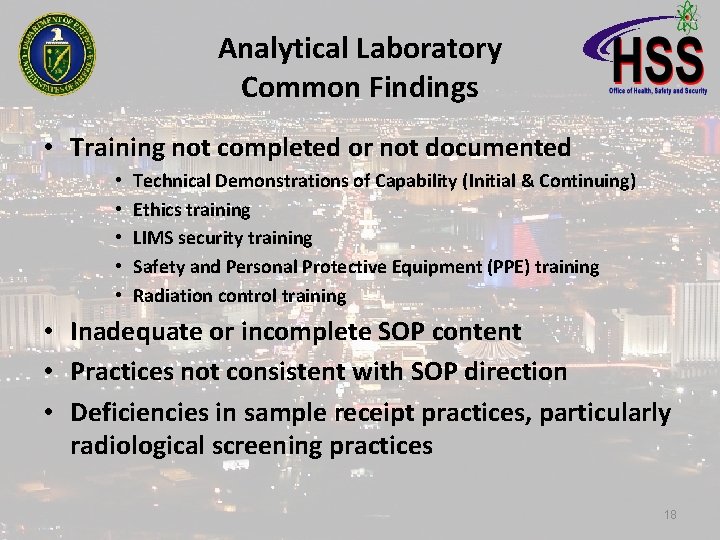

Analytical Laboratory Common Findings

- Training not completed or not documented
  - Technical Demonstrations of Capability (initial and continuing)
  - Ethics training
  - LIMS security training
  - Safety and Personal Protective Equipment (PPE) training
  - Radiation control training
- Inadequate or incomplete SOP content
- Practices not consistent with SOP direction
- Deficiencies in sample receipt practices, particularly radiological screening practices

Analytical Laboratory Common Findings (cont.)

- Performance Test failures
- Internal auditing deficiencies
  - None performed
  - Schedule not established or maintained
  - No corrective action follow-up
  - Not reported to management
- Calibration or calibration verification deficiencies
- Logbook/notebook deficiencies
- Inadequate waste disposal, or inadequate review of the facilities used for waste disposal

DOECAP Treatment, Storage, and Disposal Facility Audits

TSDFs Audited in FY 2011

| Facility | Month/Year |
|---|---|
| Diversified Scientific Services, Inc., Kingston, TN | November 2010 |
| Materials and Energy Corporation, Oak Ridge, TN | December 2010 |
| EnergySolutions, LLC, Oak Ridge, TN | February 2011 |
| Clean Harbors Environmental Services, El Dorado, AR | March 2011 |
| Clean Harbors Environmental Services, Deer Park, TX | April 2011 |
| IMPACT Services, Inc., Oak Ridge, TN | May 2011 |
| Perma-Fix Environmental Services of Florida, Gainesville, FL | May 2011 |
| Clean Harbors Environmental Services, Grantsville, UT | May 2011 |
| Waste Control Specialists LLC, Andrews, TX | June 2011 |
| EnergySolutions, LLC, Clive, UT | June 2011 |
| Perma-Fix Northwest, Inc., Richland, WA | July 2011 |

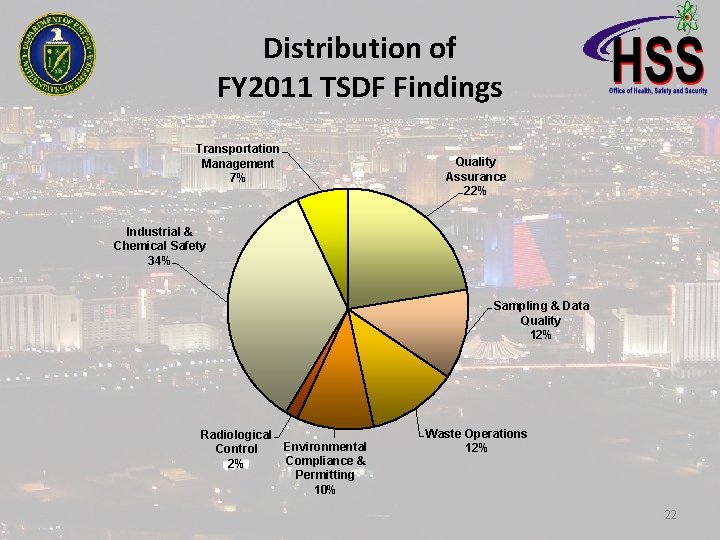

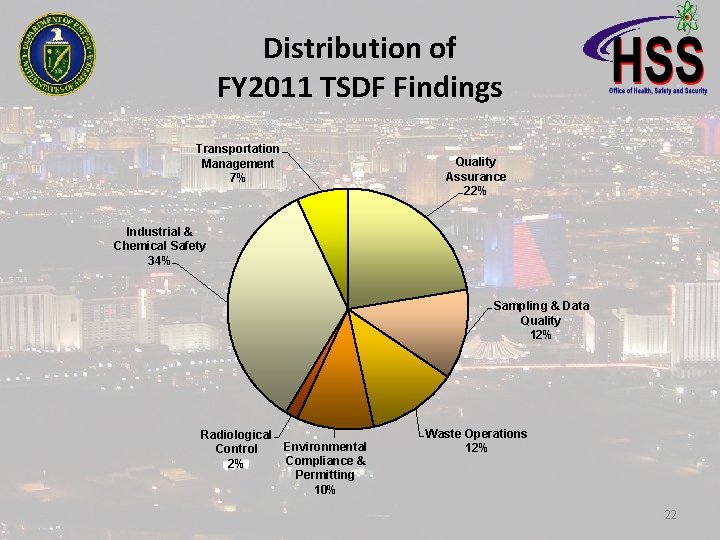

Distribution of FY 2011 TSDF Findings

| Area | Share of findings |
|---|---|
| Industrial & Chemical Safety | 34% |
| Quality Assurance | 22% |
| Sampling & Data Quality | 12% |
| Waste Operations | 12% |
| Environmental Compliance & Permitting | 10% |
| Transportation Management | 7% |
| Radiological Control | 2% |

TSDF Common Findings

- Incomplete or inadequate SOP content
- Practices do not match SOP direction
- Inadequate personnel training
- Incomplete training documentation and records
- Inadequate labeling and posting (containers, placards, safety, etc.)
- Inappropriate storage of chemicals and equipment
- Inadequate compliance with permits and regulatory requirements

Root Cause of Quality Findings

- QA plans and practices do not reflect the activities of the laboratory
- Conversely, the activities of the laboratory do not reflect the requirements of the QA plans and practices
- Lack of attention to implementing quality management systems

Root Cause of Quality Findings (cont.)

- Increasing turnover of QA and facility staff
- Aging workforce
- Increasing pressures and demands
- Decreasing federal and state budgets

DOECAP Auditing Activities

- Annual audit of each facility
- Audit teams are composed of both federal and contractor personnel
- Audits are conducted according to formalized requirements, and lines of inquiry are used to evaluate the facilities
- Findings and observations are developed for noncompliance with established requirements

DOECAP Auditing Activities (cont.)

- There are two types of findings:
  - Priority I Finding: A factual statement issued by the DOECAP to document a significant item of concern, or a significant deficiency regarding key management/programmatic control(s) or practice(s), which represents a concern of sufficient magnitude to potentially render the audited facility unacceptable to provide services to the DOE, or to present substantial risk and liability to DOE if not resolved via immediate and expedited corrective action(s).
    - Can result in an immediate stop-work order, as determined by the individual field offices

DOECAP Auditing Activities (cont.)

- Priority II Finding: A factual statement issued by the DOECAP to document a deficiency representing a concern of sufficient magnitude relative to the procedures or practices of the audited facility that requires determination of a root cause and establishment of corrective actions to remedy the deficiency.
- Observation: A factual statement issued by the DOECAP to document an isolated deficiency, a deviation from Best Management Practices, a non-requirement-based issue(s), or an opportunity for improvement, which may warrant attention by the audited facility, but does not require documented corrective action.
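The three categories above differ mainly in the response they require from the audited facility. As a hypothetical sketch (the `Category`, `Response`, and `required_response` names are illustrative and not part of any DOECAP tool), the definitions can be encoded as a lookup table. It assumes, as the slides imply but do not state outright, that only Priority I findings call for expedited action and carry the possibility of an immediate stop work.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # Hypothetical labels for the three DOECAP issue categories.
    PRIORITY_I = "Priority I Finding"
    PRIORITY_II = "Priority II Finding"
    OBSERVATION = "Observation"


@dataclass(frozen=True)
class Response:
    documented_corrective_action: bool  # must the facility submit a corrective action plan?
    expedited: bool                     # must corrective action be immediate/expedited?
    stop_work_possible: bool            # may a field office order an immediate stop work?


# Mapping derived from the category definitions; assumption: only Priority I
# findings are expedited and can lead to a stop work.
RESPONSES = {
    Category.PRIORITY_I: Response(True, True, True),
    Category.PRIORITY_II: Response(True, False, False),
    Category.OBSERVATION: Response(False, False, False),
}


def required_response(category: Category) -> Response:
    """Look up what the audited facility must do for a given category."""
    return RESPONSES[category]
```

A table rather than a chain of conditionals keeps the policy in one place, so a change to how a category is handled touches a single row.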

DOECAP Auditing Activities (cont.)

- Following the issuance of a finding, the audited facility must develop a corrective action plan (CAP) that is evaluated by the DOECAP audit team and management
- Each CAP must include a causal analysis of the elements that resulted in the out-of-control condition
- Each CAP must include a timeline for its implementation
- CAPs are tracked by the DOECAP Operations Team and evaluated during the next DOECAP audit
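The CAP requirements above amount to a completeness check that a reviewer can apply before evaluating the plan's substance. A minimal sketch, assuming a hypothetical `CorrectiveActionPlan` record (the field names and the `cap_deficiencies` helper are illustrative, not a DOECAP data format) and treating a planned completion date as a stand-in for the required implementation timeline. It also assumes a plan with no listed actions is incomplete, which the slide implies but does not state.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class CorrectiveActionPlan:
    # Hypothetical CAP record; field names are illustrative only.
    finding_id: str
    causal_analysis: str = ""                       # required causal analysis
    actions: List[str] = field(default_factory=list)
    completion_date: Optional[date] = None          # stand-in for the implementation timeline


def cap_deficiencies(cap: CorrectiveActionPlan) -> List[str]:
    """Return the required CAP elements that are missing (empty list if complete)."""
    problems = []
    if not cap.causal_analysis.strip():
        problems.append("missing causal analysis")
    if not cap.actions:
        problems.append("no corrective actions listed")
    if cap.completion_date is None:
        problems.append("no implementation timeline")
    return problems
```

For example, a plan with the hypothetical ID `"F-001"` that lists actions but omits the causal analysis and completion date is flagged on both counts. Returning a list of problems rather than a single pass/fail value mirrors how a reviewer would report each missing element back to the facility.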

Priority II Finding: Laboratory Example

- M1-xxxxx-A: Over the past several months, laboratory data review and reporting processes have exhibited significant deficiencies relative to PT submissions. (Priority II) (QSAS Rev. 2.6, Sections 4.2.3.s and 4.2 DOE-3; XXX QAMP Q-QA-001, Rev. 9, Section 11.2)
- The QSAS requires laboratories to establish data review processes and to ensure that data review includes all quality-related steps. The XXX QAMP identifies a three-tiered data review process, and individual SOPs contain review steps and data review checklists. However, reporting discrepancies and failures identified in multiple PT analyses indicate that the process is not fully understood or implemented.

Priority II Finding: TSDF Example

- QA-XXXXXX-A: The XXX QAM has not been fully implemented, nor does it reflect all of the requirements of NQA-1. (Priority II) (References: ASME NQA-1, Requirement 4, Procurement Document Control; Requirement 7, Control of Purchased Items and Services)
- The XXX QAM does not include the NQA-1 requirement for “procurement documents to require suppliers to have a QA program consistent with the applicable requirements.” PO 812225, issued to Arizona Instrument, LLC, for calibration of a Mercury Vapor Monitor, did not require the supplier to have a QA program consistent with the applicable requirements for calibration services. The purchase order did not list any technical requirements for the scope of work, such as range, precision, accuracy, or the manufacturer’s recommended practices for calibration. The company was included on the Evaluated Suppliers List, and the basis of qualification was ISO 9001 certification. However, review of the scope of activities on the ISO certificate showed that calibration was not included as an activity subject to the supplier’s QA program. The correct ISO standard for calibration services is ISO/IEC 17025.

Summary

- Continuing oversight and evaluation of Quality Assurance Plans and Procedures is key to the success of DOECAP
- Continuing audits of the facilities have reduced quality-related failures

Recognition of HSS

- I would like to recognize DOE HSS-23 for its support and funding of this presentation.

ASP Program Contact Information

- George E. Detsis, Analytical Services Program (ASP) Manager; Phone: (301) 903-1488; E-mail: George.Detsis@hq.doe.gov
- Jorge Ferrer, DOECAP Manager, DOE Oak Ridge; Phone: (865) 576-6638; E-mail: ferrerja@oro.doe.gov
- Guy Marlette, MAPEP Coordinator, DOE Idaho; Phone: (208) 526-2532; E-mail: marletgm@id.doe.gov
- Brent Pulsipher, VSP Coordinator, PNNL; Phone: (509) 375-3989; E-mail: Brent.Pulsipher@pnl.gov