Document classification based on web search hit counts

Masaya Kaneko, Shusuke Okamoto, Masaki Kohana, You Inayoshi (Seikei University, Tokyo, Japan)
IIWAS '12: Proc. of the 14th Int. Conf. on Information Integration and Web-based Applications & Services, pp. 223-228. ACM.
2013/10/29 haseshun

INTRODUCTION
▌ Advent of web systems and Internet technologies
►The amount of information has become enormous.
→Some form of automatic classification is needed.
▌ We can obtain a huge number of research documents as PDF files.
▌ Goal: a technique to classify research documents automatically.
►Use the number of hits for an AND-search on two words with a web search engine.

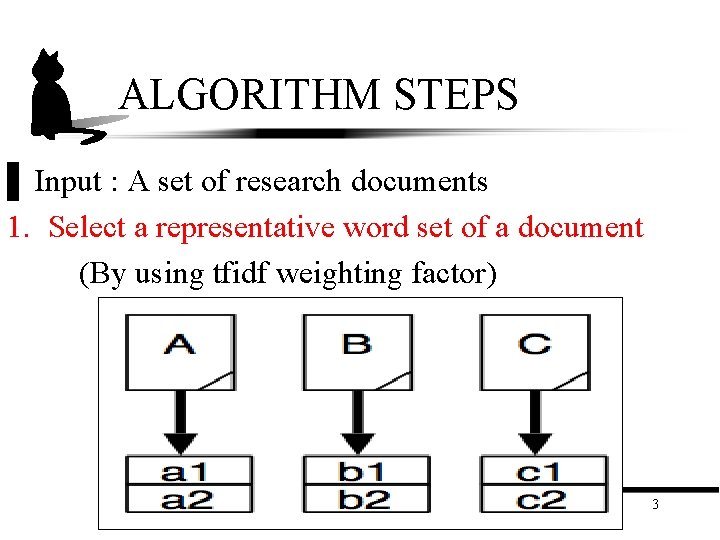

ALGORITHM STEPS
▌ Input: a set of research documents
1. Select a representative word set for each document (using the tfidf weighting factor).

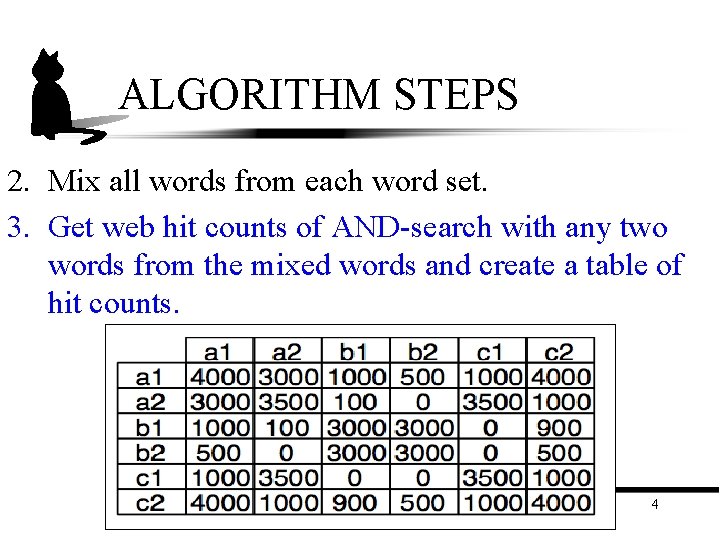

ALGORITHM STEPS
2. Mix the words from all word sets into one list.
3. Get the web hit count of an AND-search for every pair of words in the mixed list, and create a table of hit counts.
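Steps 2 and 3 can be sketched as follows: merge the per-document word sets, then enumerate one AND-query per unordered pair of words. This is a minimal sketch; the function names and the word lists are hypothetical, and it assumes the search engine treats a space-separated query as an AND-search. Fetching the actual hit counts is left out.

```python
from itertools import combinations


def mix_word_sets(word_sets):
    """Merge per-document representative word sets into one sorted list without duplicates."""
    mixed = set()
    for words in word_sets:
        mixed.update(words)
    return sorted(mixed)


def and_queries(words):
    """Build one AND-search query string for every unordered pair of distinct words."""
    # Assumption: a space-separated query is interpreted as AND by the search engine.
    return [f"{a} {b}" for a, b in combinations(words, 2)]


doc_a = ["agent", "context", "health"]        # hypothetical word set of one document
doc_b = ["eigenfaces", "health", "subspace"]  # hypothetical word set of another
mixed = mix_word_sets([doc_a, doc_b])
queries = and_queries(mixed)
print(len(mixed), len(queries))  # 5 10
```

With n mixed words there are n(n-1)/2 queries, so the number of searches grows quadratically with the size of the mixed word list.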

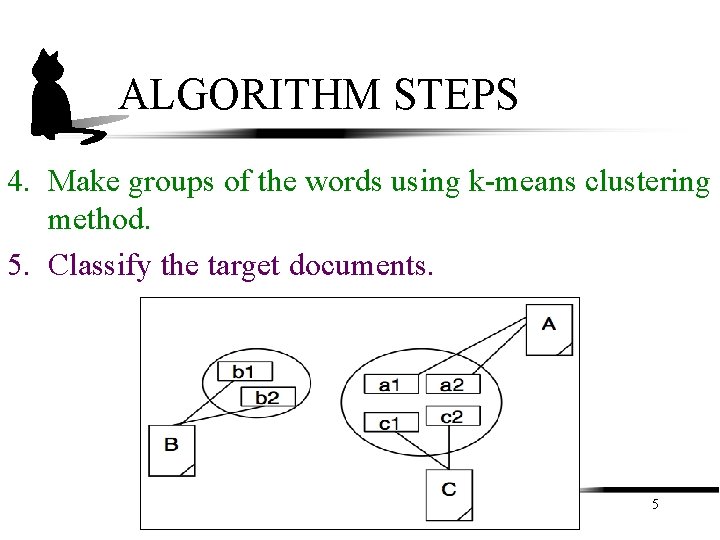

ALGORITHM STEPS
4. Group the words using the k-means clustering method.
5. Classify the target documents.

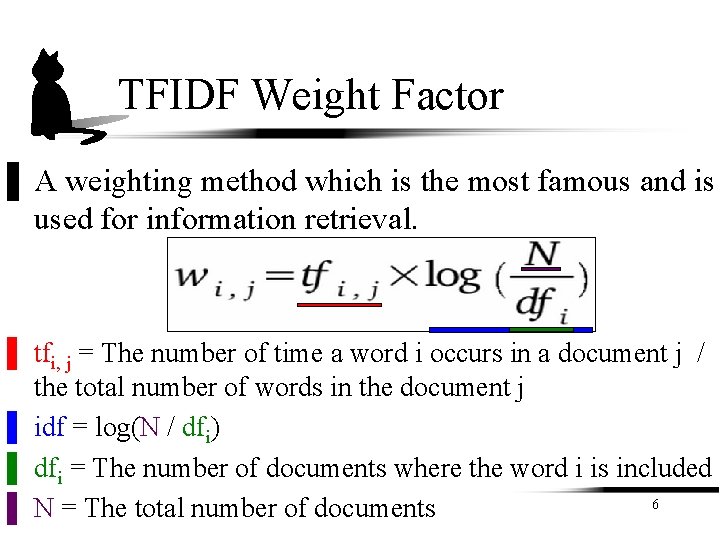

TFIDF Weight Factor
▌ The most widely known weighting method used in information retrieval.
▌ $tf_{i,j} = n_{i,j} / \sum_k n_{k,j}$
►$n_{i,j}$ = the number of times word $i$ occurs in document $j$; $\sum_k n_{k,j}$ = the total number of words in document $j$
▌ $idf_i = \log(N / df_i)$
►$df_i$ = the number of documents that contain word $i$
►$N$ = the total number of documents
▌ $tfidf_{i,j} = tf_{i,j} \times idf_i$
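The tf and idf formulas above can be sketched in Python as below. This assumes the natural logarithm (the slides do not state the base) and documents that are already tokenized and filtered; the function name `tfidf` and the toy documents are hypothetical.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {word: tfidf} dict per document.

    docs: list of token lists.
    tf_{i,j} = occurrences of word i in doc j / total words in doc j
    idf_i    = log(N / df_i)
    """
    n_docs = len(docs)
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))  # each word counts once per document
    weights = []
    for tokens in docs:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append(
            {w: (c / total) * math.log(n_docs / df[w]) for w, c in counts.items()}
        )
    return weights


docs = [["agent", "agent", "rules", "health"],
        ["subspace", "texture", "health", "texture"]]
w = tfidf(docs)
print(round(w[0]["agent"], 4))  # 0.3466  (tf = 0.5, idf = ln 2)
```

A word that occurs in every document ("health" here) gets idf = log 1 = 0, so it can never become a representative word.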

TFIDF Weight Factor
▌ Calculate the tfidf weight factor for each word in a document.
▌ Remove certain kinds of words:
►Words shorter than three characters
►Words that begin with a capital letter
▌ Reduce words to their base form or stem
►e.g., plural nouns, the '-ing' form of verbs
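The filtering rules above can be sketched as follows. The slides do not say which stemmer was used, so `stem` here is a hypothetical, deliberately naive suffix stripper covering only plurals and the -ing form; a real stemmer such as Porter's would be more accurate. The tokenizing regex is also an assumption.

```python
import re


def stem(word):
    """Naive suffix stripping for plural nouns and -ing forms (hypothetical, not the paper's stemmer)."""
    if word.endswith("ing") and len(word) > 5:
        return word[:-3]
    if word.endswith("ies") and len(word) > 4:
        return word[:-3] + "y"
    if word.endswith("s") and not word.endswith("ss") and len(word) > 3:
        return word[:-1]
    return word


def preprocess(text):
    """Tokenize, drop short and capitalized words, then stem the rest."""
    kept = []
    for tok in re.findall(r"[A-Za-z]+", text):
        if len(tok) < 3:        # drop words shorter than three characters
            continue
        if tok[0].isupper():    # drop words beginning with a capital letter
            continue
        kept.append(stem(tok))
    return kept


print(preprocess("Agents are monitoring patients in a Hospital"))
# ['are', 'monitor', 'patient']
```

Note that the capital-letter rule also drops ordinary words at the start of a sentence, such as "Agents" here.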

TFIDF Weight Factor
▌ Selecting the representative words of a document
►A word is selected if its tfidf value is greater than or equal to the average of the 30 highest tfidf values in the document.
►At least three and at most ten words are selected per document.
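The selection rule can be sketched as below, assuming a {word: tfidf} dict per document. How the slides enforce the three-word minimum is not stated; this sketch assumes that if fewer than three words pass the threshold, the next-ranked words are added. The function name and toy weights are hypothetical.

```python
def select_representative(weights, top=30, min_words=3, max_words=10):
    """Pick representative words from a {word: tfidf} dict.

    Threshold = mean of the `top` highest tfidf values. Words at or above it
    are kept, and the count is clamped to [min_words, max_words] by tfidf rank.
    """
    ranked = sorted(weights, key=weights.get, reverse=True)
    top_values = [weights[w] for w in ranked[:top]]
    if not top_values:
        return []
    threshold = sum(top_values) / len(top_values)
    count = sum(1 for w in ranked if weights[w] >= threshold)
    count = max(min_words, min(max_words, count))  # assumption: clamp by rank
    return ranked[:count]


print(select_representative({"a": 0.9, "b": 0.5, "c": 0.1, "d": 0.1, "e": 0.05}))
# ['a', 'b', 'c']  (only two words pass the 0.33 threshold, so a third is added)
```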

AND-search on the web
▌ Web hit counts of AND-searches on every pair of representative words are retrieved.
▌ A table of hit counts is created.
▌ Diagonal element $H(x_i, x_i)$ of this table
►✕ Not the hit count of the search "$x_i$ $x_i$" or "$x_i$"
►Instead, the maximum value in the same column
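Building the table, including the diagonal rule above, might look like this. It assumes the pair hit counts were already fetched and stored in a dict keyed by `frozenset` word pairs; the function name and the hit counts in the example are invented for illustration, not real search results.

```python
def hit_table(words, pair_hits):
    """Build the symmetric hit-count table H.

    pair_hits maps frozenset({a, b}) -> AND-search hit count.
    Off-diagonal H[i][j] is the pair count; the diagonal H[i][i] is set to the
    maximum of the other values in column i.
    """
    n = len(words)
    H = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                H[i][j] = pair_hits[frozenset((words[i], words[j]))]
    for j in range(n):
        H[j][j] = max((H[i][j] for i in range(n) if i != j), default=0)
    return H


words = ["agent", "health", "subspace"]
pair_hits = {  # invented counts
    frozenset(("agent", "health")): 500,
    frozenset(("agent", "subspace")): 20,
    frozenset(("health", "subspace")): 40,
}
print(hit_table(words, pair_hits))
# [[500, 500, 20], [500, 500, 40], [20, 40, 40]]
```

Using the column maximum keeps the diagonal on the same scale as the pair counts, whereas a single-word search "x_i" would typically return a far larger count.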

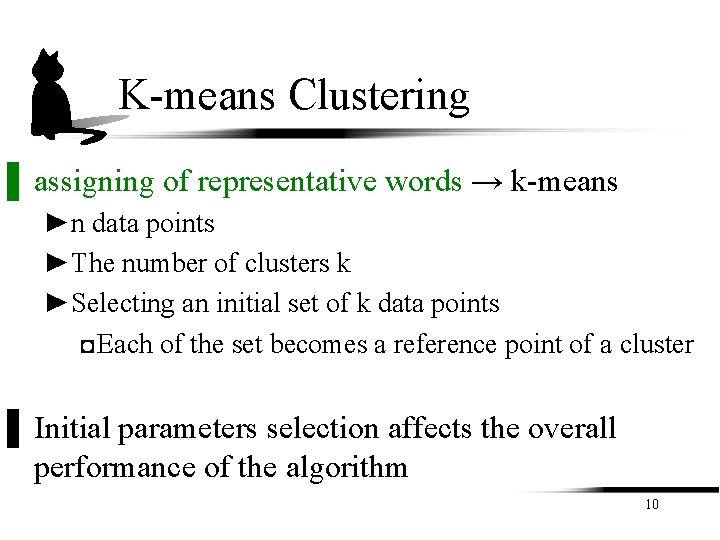

K-means Clustering
▌ Representative words are assigned to clusters by k-means
►n data points
►The number of clusters k
►An initial set of k data points is selected
◘Each point in this set becomes the reference point of a cluster
▌ The choice of initial parameters affects the overall performance of the algorithm

K-means Clustering
▌ For each of the n data points
►find the nearest reference point
►assign the point to the cluster of that reference point
▌ For each new cluster
►compute the mean, which becomes the new reference point of the cluster
▌ The repetition stops when no cluster changes
►The set of clusters at that point is the result of the algorithm
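The loop above can be sketched as a plain k-means with caller-supplied initial reference points and distance function. The `max_iter` cap and the handling of empty clusters are assumptions added for safety. The Euclidean distance in the example is only for illustration; the slides use 1.0 minus a cosine similarity as the distance.

```python
def kmeans(points, initial_centers, distance, max_iter=100):
    """Assign each point to its nearest reference point, move each reference
    point to the mean of its cluster, and stop when no assignment changes.

    Returns (labels, centers). max_iter is a safety cap added in this sketch.
    """
    centers = [list(c) for c in initial_centers]  # copy so the caller's data is untouched
    labels = None
    for _ in range(max_iter):
        # Assignment step: index of the nearest reference point for every point.
        new_labels = [
            min(range(len(centers)), key=lambda c: distance(p, centers[c]))
            for p in points
        ]
        if new_labels == labels:  # no cluster changed: converged
            break
        labels = new_labels
        # Update step: each non-empty cluster's mean becomes its new reference point.
        for c in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous reference point
                centers[c] = [sum(xs) / len(members) for xs in zip(*members)]
    return labels, centers


def euclidean(a, b):
    # Illustration only, not the distance used in the slides.
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


pts = [[0, 0], [0, 1], [10, 10], [10, 11]]
labels, centers = kmeans(pts, [pts[0], pts[2]], euclidean)
print(labels)   # [0, 0, 1, 1]
print(centers)  # [[0.0, 0.5], [10.0, 10.5]]
```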

K-means Clustering Algorithm
▌ Disadvantages of the k-means clustering algorithm
►Cluster centroids are chosen randomly.
1. The choice of centroids affects the convergence time of the algorithm.
2. For the same data set, different choices of centroids produce different clusters.
In this paper:
▌ Words with higher tfidf values are selected preferentially as initial centroids.
►This gives the same result on every trial because no randomness is used.
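The deterministic initialization could be sketched as taking the k words with the highest tfidf values as the initial reference points. This is one reading of "selected preferentially"; the exact rule is not given in the slides. The function name and toy data are hypothetical.

```python
def initial_centers(words, tfidf, vectors, k):
    """Use the word vectors of the k highest-tfidf words as initial reference points.

    sorted() is stable, so ties keep the input order and the result is
    identical on every run (no randomness).
    """
    ranked = sorted(words, key=lambda w: tfidf[w], reverse=True)
    return [list(vectors[w]) for w in ranked[:k]]


words = ["w1", "w2", "w3"]                           # hypothetical words
tfidf = {"w1": 0.1, "w2": 0.3, "w3": 0.2}
vectors = {"w1": [1.0], "w2": [2.0], "w3": [3.0]}
print(initial_centers(words, tfidf, vectors, 2))     # [[2.0], [3.0]]
```

The returned list can be passed directly as the initial reference points of a k-means loop.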

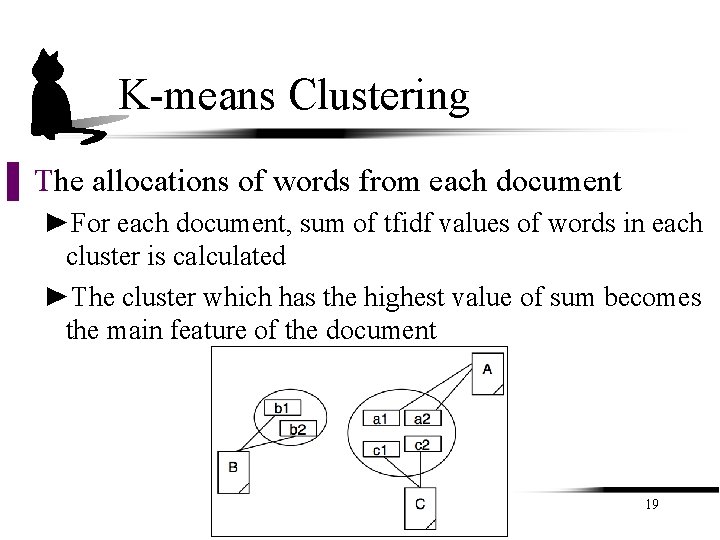

K-means Clustering
▌ Allocating the words of each document to clusters
►For each document, the sum of the tfidf values of its words in each cluster is calculated.
►The cluster with the highest sum becomes the main feature of the document.
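The per-document decision can be sketched as summing tfidf values per cluster and taking the cluster with the largest sum. The function name, the cluster labels, and the tfidf values in the example are hypothetical.

```python
def document_feature(doc_weights, labels, k):
    """Return (per-cluster tfidf sums, index of the main-feature cluster).

    doc_weights: {word: tfidf} for the document's representative words.
    labels: {word: cluster index} produced by k-means.
    """
    sums = [0.0] * k
    for word, weight in doc_weights.items():
        if word in labels:  # skip words that were not clustered
            sums[labels[word]] += weight
    main = max(range(k), key=lambda c: sums[c])
    return sums, main


labels = {"agent": 1, "rules": 1, "patient": 0}           # hypothetical clustering
doc = {"agent": 0.004, "rules": 0.002, "patient": 0.001}  # hypothetical tfidf values
sums, main = document_feature(doc, labels, 2)
print(main)  # 1
```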

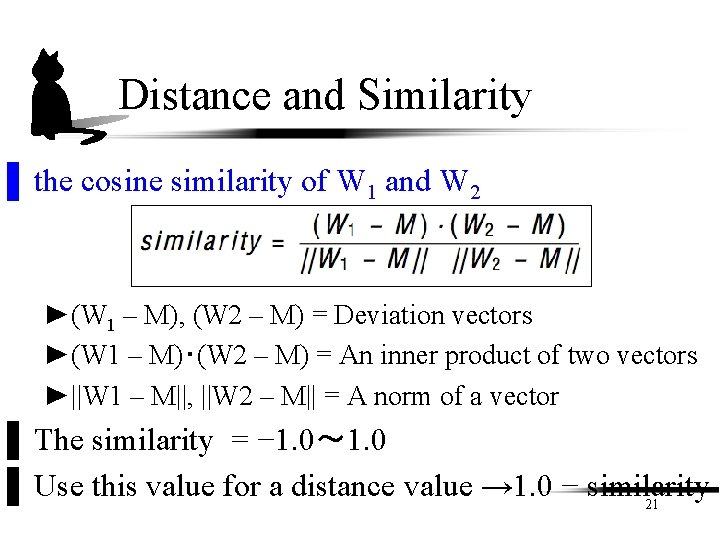

Distance and Similarity
▌ A distance value for any two words
►The set of web hit counts for each word forms a word vector.
►Cosine similarity is used to calculate the distance.
▌ Word vector $W_i = \left(tfidf(x_i)\,H(x_i, x_1), \ldots, tfidf(x_i)\,H(x_i, x_n)\right)$
►$n$ = the total number of words in the table of hit counts
►$tfidf(x_i)$ = the tfidf value of word $x_i$
►$H(x_i, x_j)$ = the value from the table of hit counts
►e.g., with four words, the mean vector is $M = (W_1 + W_2 + W_3 + W_4) / 4$
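The word vectors and their mean can be sketched as follows: each row of the hit-count table is scaled by that word's tfidf value, and M is the component-wise average of all word vectors. The function names and numbers are hypothetical.

```python
def word_vectors(H, weights):
    """W_i = tfidf(x_i) * (H(x_i, x_1), ..., H(x_i, x_n)); weights[i] is tfidf(x_i)."""
    return [[w * h for h in row] for row, w in zip(H, weights)]


def mean_vector(vectors):
    """Component-wise mean M of the word vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


H = [[4, 2], [2, 6]]   # hypothetical 2x2 hit-count table
weights = [0.5, 0.25]  # hypothetical tfidf values
W = word_vectors(H, weights)
print(W)               # [[2.0, 1.0], [0.5, 1.5]]
print(mean_vector(W))  # [1.25, 1.25]
```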

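Distance and Similarity
▌ The cosine similarity of $W_1$ and $W_2$:
$$\mathrm{sim}(W_1, W_2) = \frac{(W_1 - M)\cdot(W_2 - M)}{\lVert W_1 - M\rVert\,\lVert W_2 - M\rVert}$$
►$(W_1 - M)$, $(W_2 - M)$ = deviation vectors
►$(W_1 - M)\cdot(W_2 - M)$ = the inner product of the two vectors
►$\lVert W_1 - M\rVert$, $\lVert W_2 - M\rVert$ = the norm of each vector
▌ The similarity ranges from −1.0 to 1.0
▌ This value is turned into a distance value: 1.0 − similarity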
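The distance above can be sketched directly from the formula. The slides do not cover a zero deviation vector (where the norm is 0); returning a distance of 1.0 in that case is an assumption of this sketch. The function name is hypothetical.

```python
import math


def centered_cosine_distance(a, b, mean):
    """1.0 - cosine similarity of the deviation vectors (a - mean) and (b - mean)."""
    da = [x - m for x, m in zip(a, mean)]
    db = [y - m for y, m in zip(b, mean)]
    dot = sum(x * y for x, y in zip(da, db))
    norm = math.sqrt(sum(x * x for x in da)) * math.sqrt(sum(y * y for y in db))
    if norm == 0.0:
        return 1.0  # assumption: a zero deviation vector is treated as uncorrelated
    return 1.0 - dot / norm


# Opposite deviations give a distance near 2.0; parallel deviations give near 0.0.
print(centered_cosine_distance([2, 0], [0, 2], [1, 1]))
print(centered_cosine_distance([3, 3], [5, 5], [1, 1]))
```

Because the similarity lies in [−1.0, 1.0], the distance lies in [0.0, 2.0].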

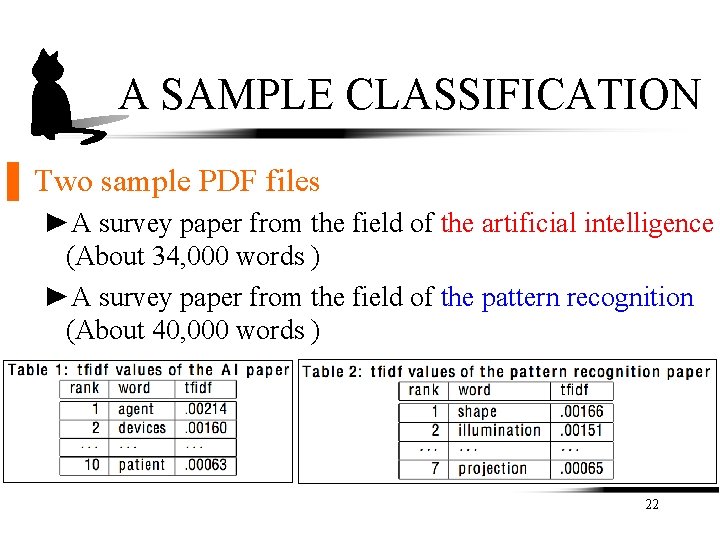

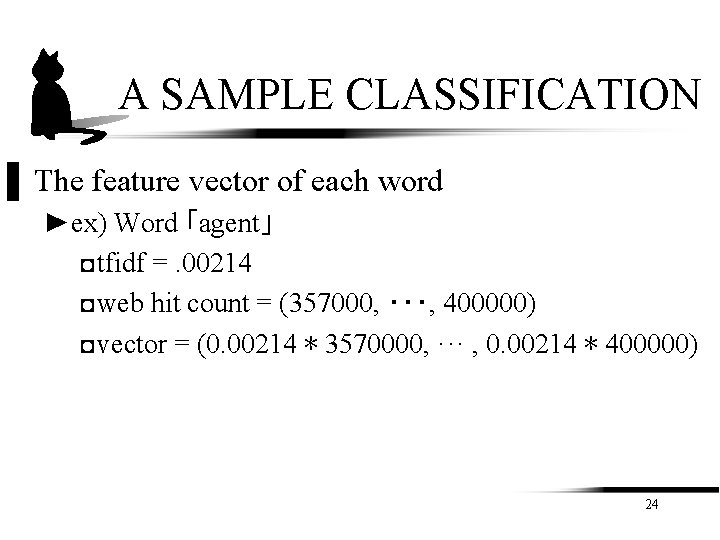

A SAMPLE CLASSIFICATION
▌ Two sample PDF files
►A survey paper from the field of artificial intelligence (about 34,000 words)
►A survey paper from the field of pattern recognition (about 40,000 words)

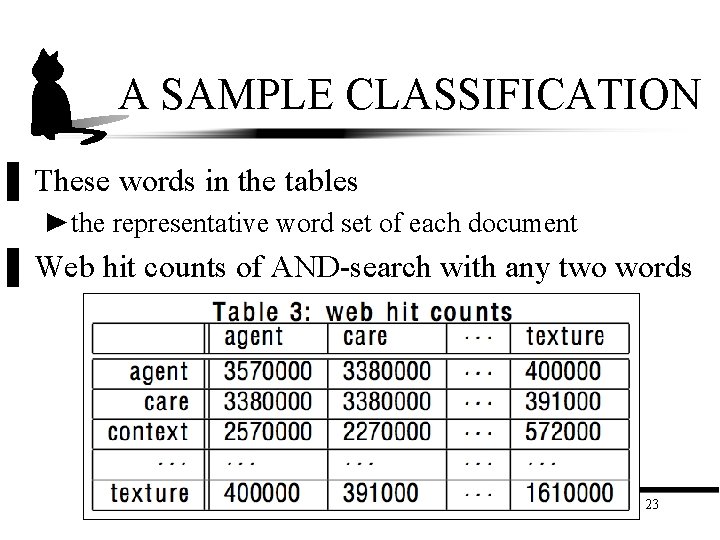

A SAMPLE CLASSIFICATION
▌ The words in the tables are the representative word set of each document.
▌ Web hit counts of AND-searches on every pair of these words

A SAMPLE CLASSIFICATION
▌ The feature vector of each word
►e.g., the word "agent"
◘tfidf = 0.00214
◘web hit counts = (357000, ···, 400000)
◘vector = (0.00214 × 357000, ···, 0.00214 × 400000)

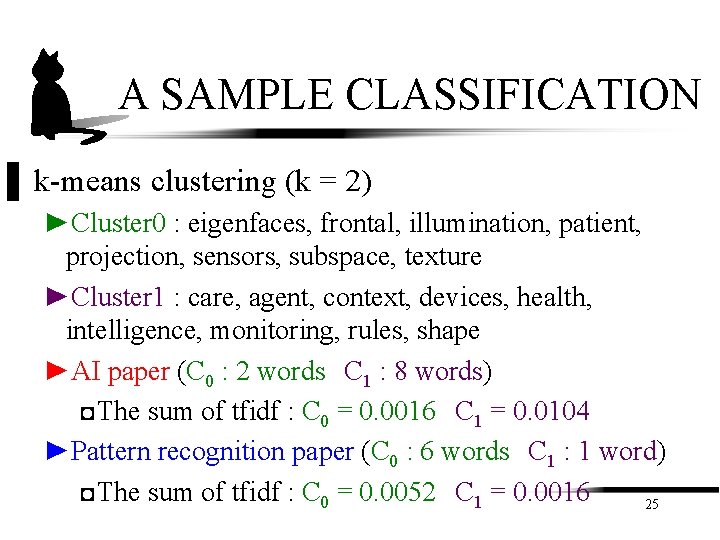

A SAMPLE CLASSIFICATION
▌ k-means clustering (k = 2)
►Cluster 0: eigenfaces, frontal, illumination, patient, projection, sensors, subspace, texture
►Cluster 1: care, agent, context, devices, health, intelligence, monitoring, rules, shape
►AI paper (C0: 2 words, C1: 8 words)
◘Sum of tfidf: C0 = 0.0016, C1 = 0.0104
►Pattern recognition paper (C0: 6 words, C1: 1 word)
◘Sum of tfidf: C0 = 0.0052, C1 = 0.0016

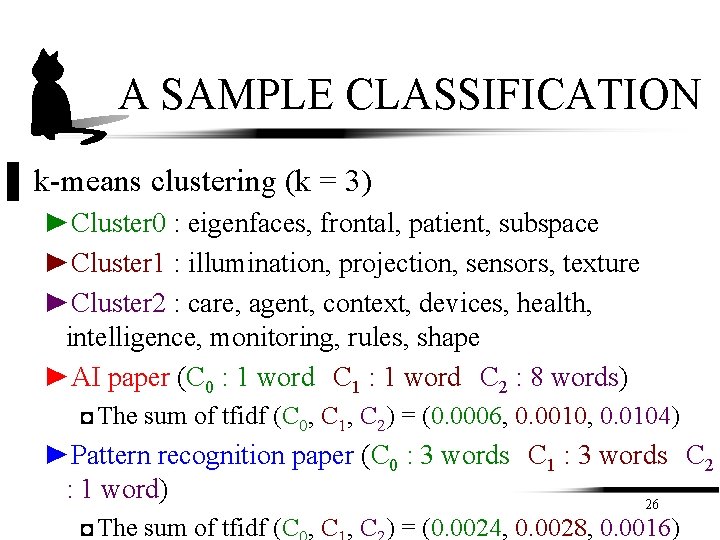

A SAMPLE CLASSIFICATION
▌ k-means clustering (k = 3)
►Cluster 0: eigenfaces, frontal, patient, subspace
►Cluster 1: illumination, projection, sensors, texture
►Cluster 2: care, agent, context, devices, health, intelligence, monitoring, rules, shape
►AI paper (C0: 1 word, C1: 1 word, C2: 8 words)
◘Sum of tfidf (C0, C1, C2) = (0.0006, 0.0010, 0.0104)
►Pattern recognition paper (C0: 3 words, C1: 3 words, C2: 1 word)
◘Sum of tfidf (C0, C1, C2) = (0.0024, 0.0028, 0.0016)

EXPERIMENTAL RESULT
▌ Experiment
►Web hit counts: Google Scholar
►Two preliminary experiments
◘20 Newsgroups data set
◘Survey papers from ACM Computing Surveys

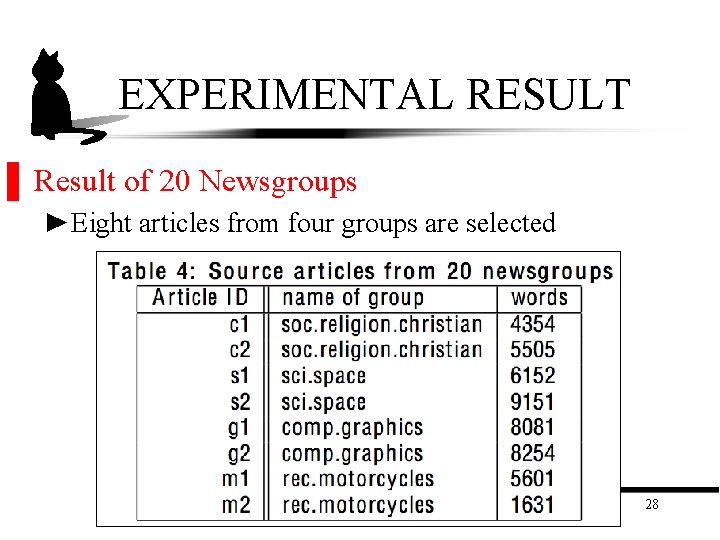

EXPERIMENTAL RESULT
▌ Result of 20 Newsgroups
►Eight articles from four groups are selected

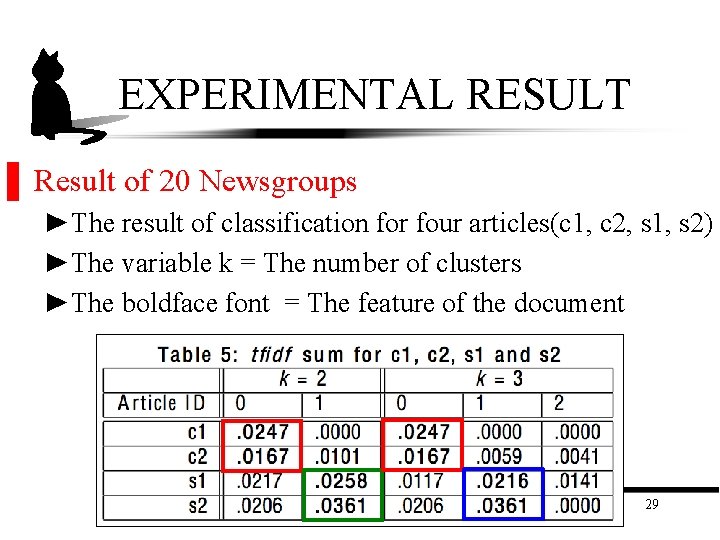

EXPERIMENTAL RESULT
▌ Result of 20 Newsgroups
►The classification result for four articles (c1, c2, s1, s2)
►k = the number of clusters
►Boldface marks the feature of each document

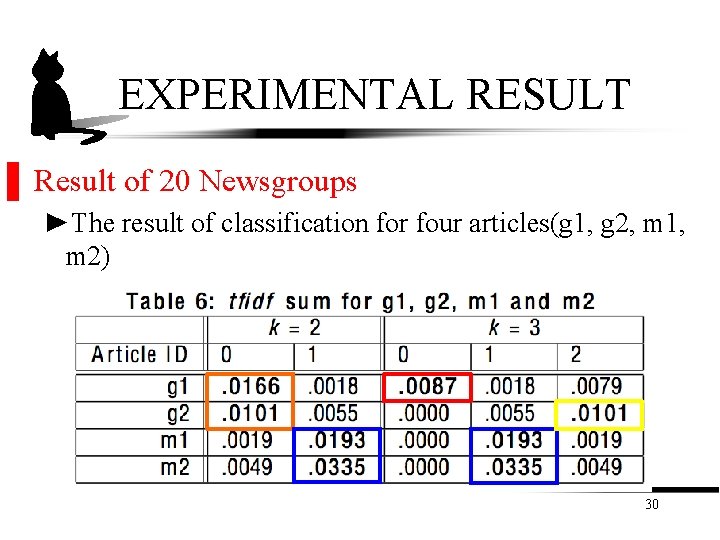

EXPERIMENTAL RESULT
▌ Result of 20 Newsgroups
►The classification result for four articles (g1, g2, m1, m2)

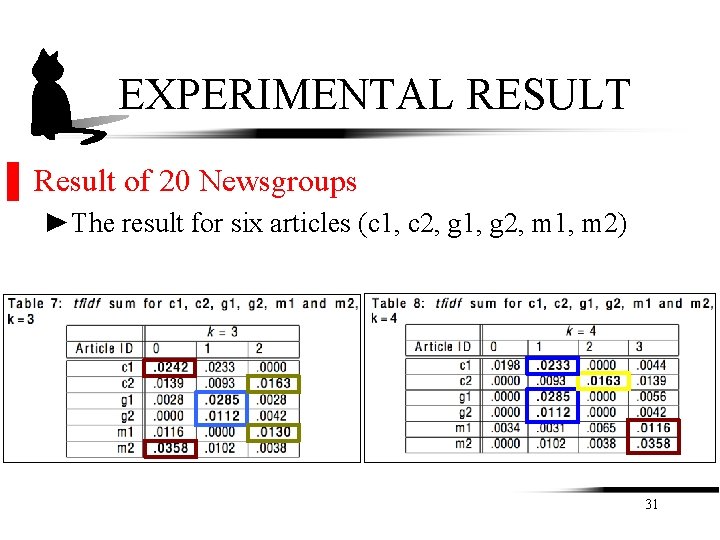

EXPERIMENTAL RESULT
▌ Result of 20 Newsgroups
►The result for six articles (c1, c2, g1, g2, m1, m2)

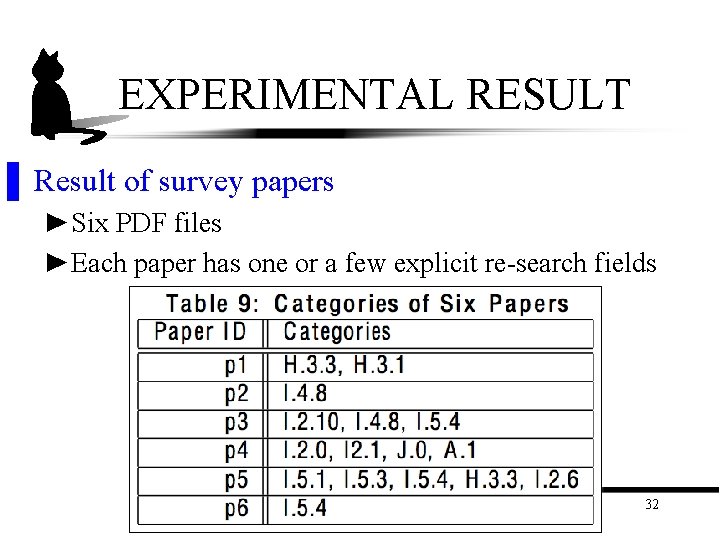

EXPERIMENTAL RESULT
▌ Result of survey papers
►Six PDF files
►Each paper has one or a few explicit research fields

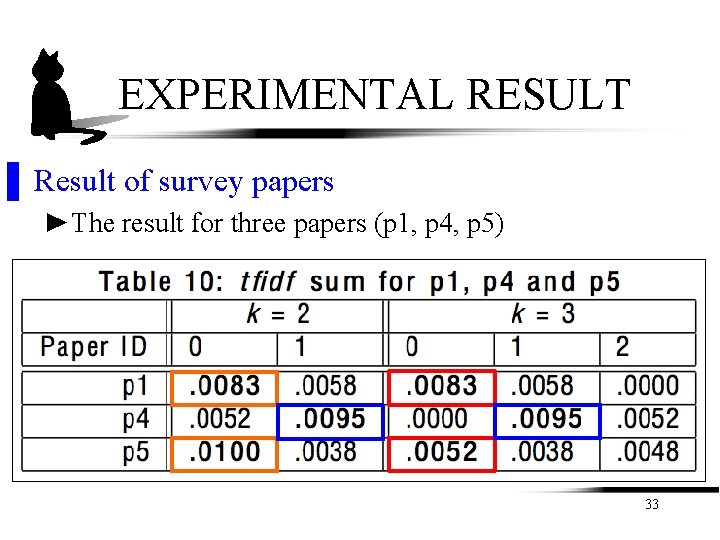

EXPERIMENTAL RESULT
▌ Result of survey papers
►The result for three papers (p1, p4, p5)

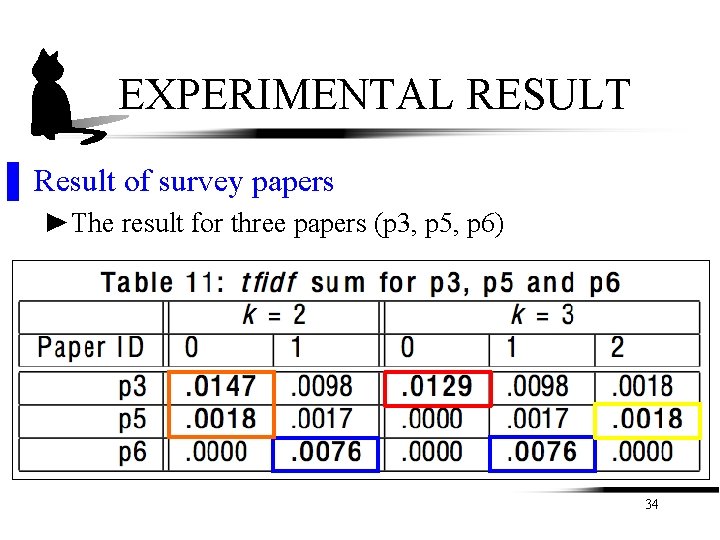

EXPERIMENTAL RESULT
▌ Result of survey papers
►The result for three papers (p3, p5, p6)

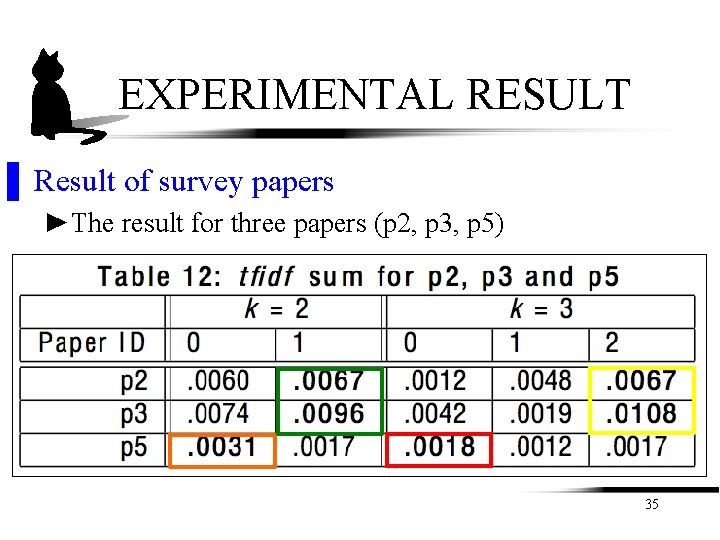

EXPERIMENTAL RESULT
▌ Result of survey papers
►The result for three papers (p2, p3, p5)

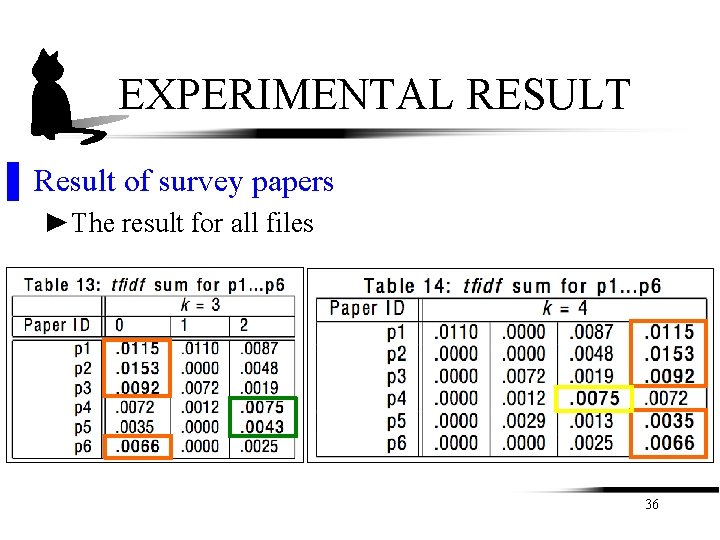

EXPERIMENTAL RESULT
▌ Result of survey papers
►The result for all files

CONCLUSION
▌ We propose a document classification method based on web search hit counts.
▌ The method uses AND-searches on pairs of words drawn from the representative words of each document.
▌ It combines the tfidf value of each word, cosine similarity, and the k-means clustering method.
▌ Preliminary experimental results show that the method produces a classification that is not random.
▌ One key point for improving this algorithm is the selection of representative words.
