Distributed Transaction Management

Outline
- Introduction
- Concurrency Control Protocols
  - Locking
  - Timestamping
- Deadlock Handling
- Replication

Introduction

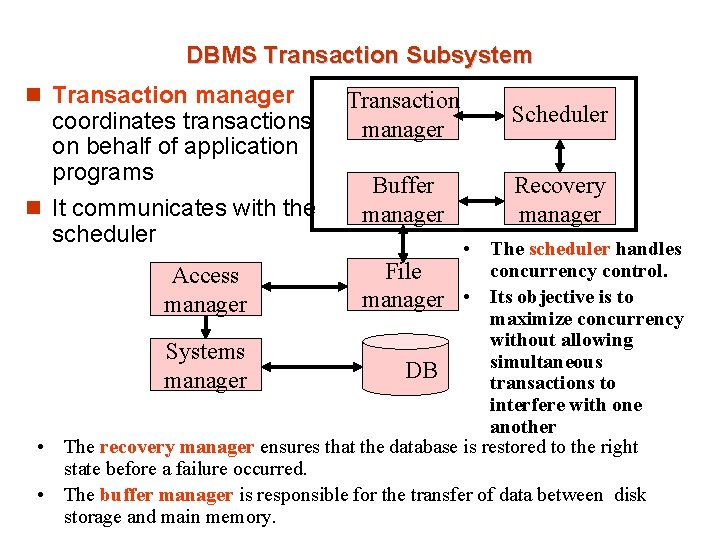

DBMS Transaction Subsystem
- The transaction manager coordinates transactions on behalf of application programs.
- It communicates with the scheduler, which handles concurrency control. The scheduler's objective is to maximize concurrency without allowing simultaneously executing transactions to interfere with one another.
- The recovery manager ensures that, if a failure occurs, the database is restored to the consistent state it was in before the failure.
- The buffer manager is responsible for transferring data between disk storage and main memory.

(Figure components: transaction manager, scheduler, recovery manager, buffer manager, access manager, file manager, systems manager, database manager.)

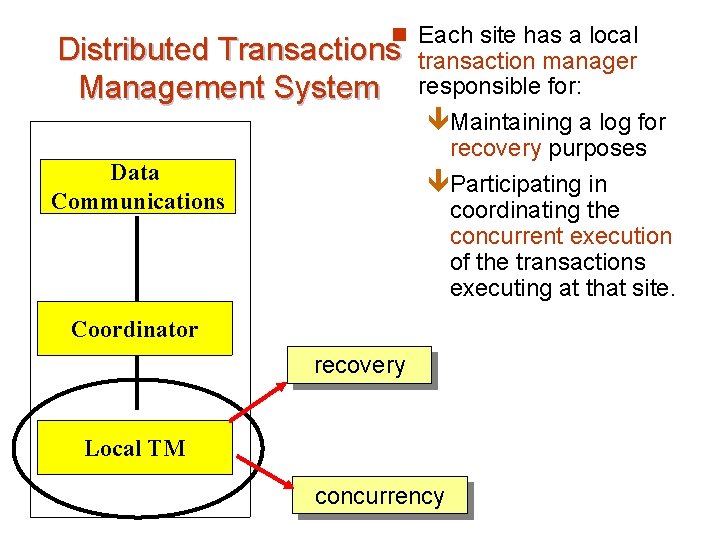

Distributed Transactions Management System
- Each site has a local transaction manager (TM) responsible for:
  - maintaining a log for recovery purposes;
  - participating in coordinating the concurrent execution of the transactions executing at that site.

(Figure: a site's Data Communications, Coordinator and Local TM components; the local TM handles recovery and concurrency.)

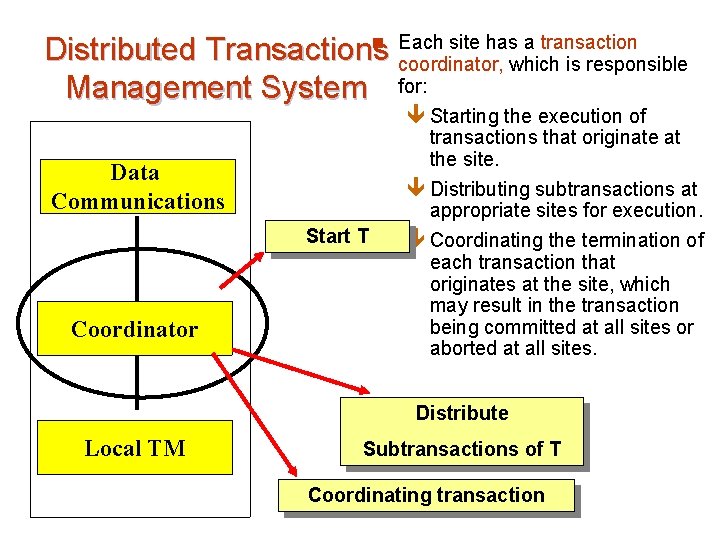

- Each site has a transaction coordinator, which is responsible for:
  - starting the execution of transactions that originate at the site;
  - distributing subtransactions to the appropriate sites for execution;
  - coordinating the termination of each transaction that originates at the site, which may result in the transaction being committed at all sites or aborted at all sites.

(Figure: the coordinator starts T, distributes the subtransactions of T, and coordinates the transaction.)

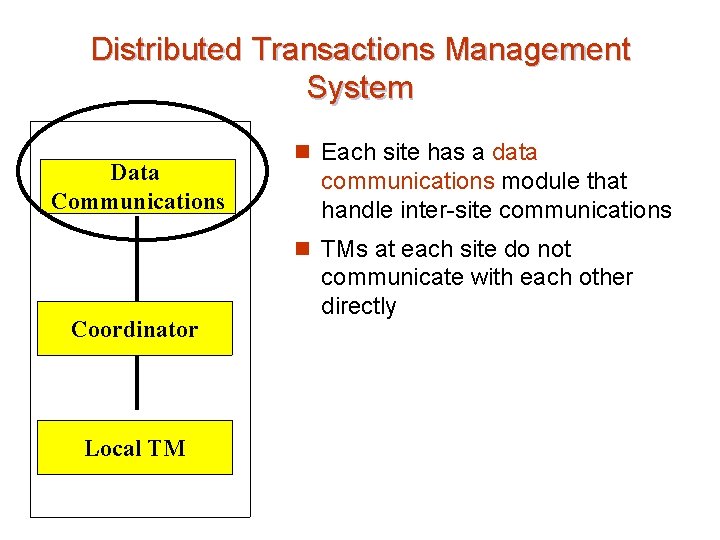

- Each site has a data communications module that handles inter-site communication.
- The TMs at the different sites do not communicate with each other directly.

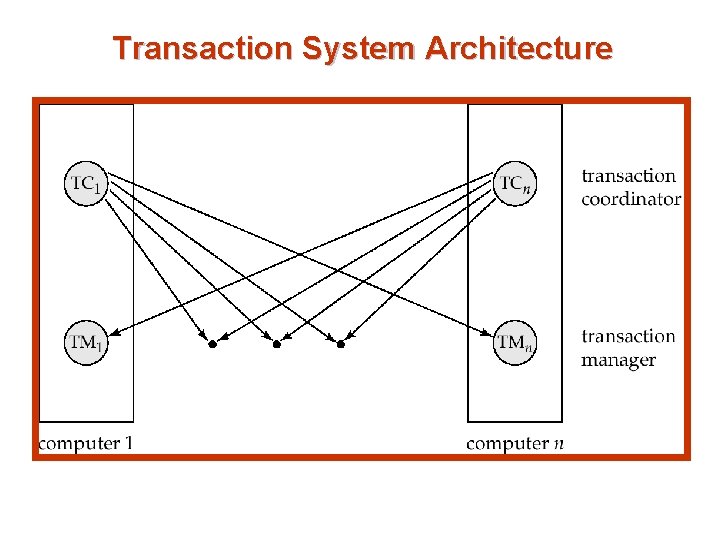

Transaction System Architecture

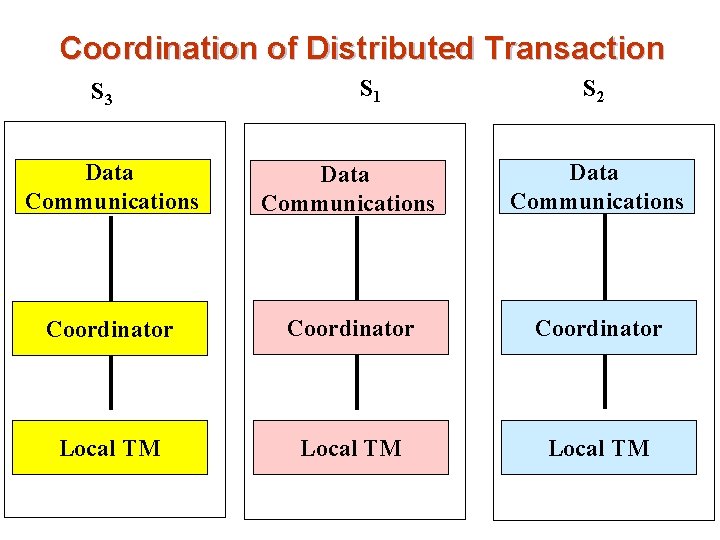

Coordination of Distributed Transactions

(Figure: sites S1, S2 and S3, each with a Data Communications component, a Coordinator and a Local TM.)

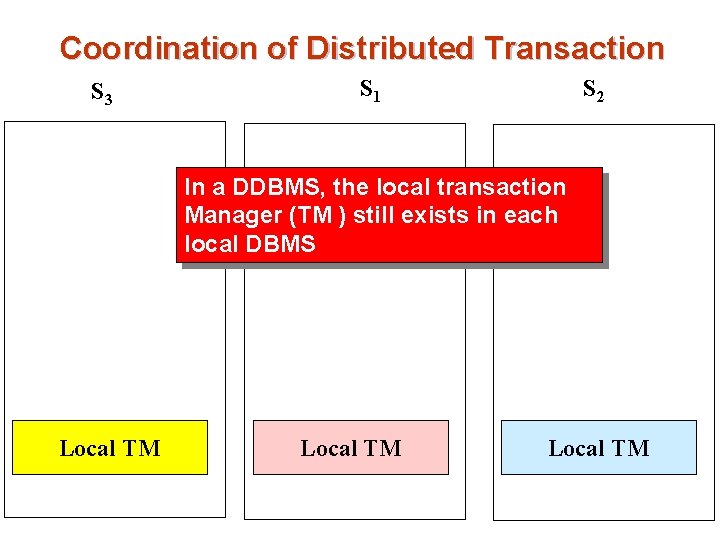

In a DDBMS, the local transaction manager (TM) still exists in each local DBMS.

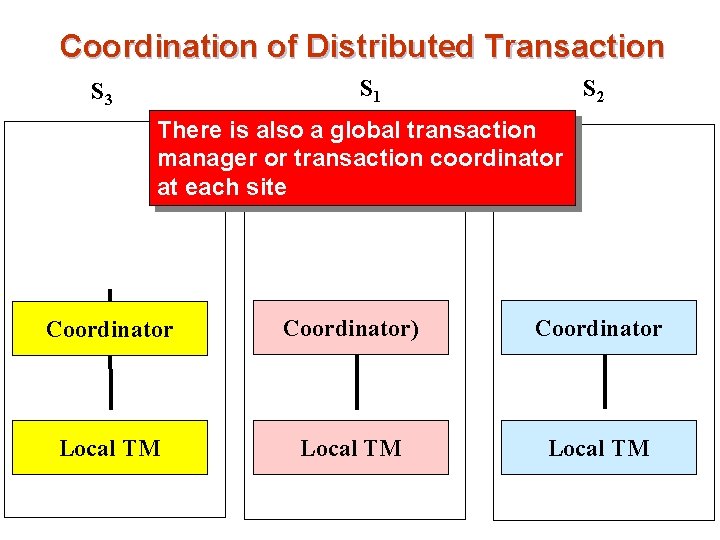

There is also a global transaction manager, or transaction coordinator (TC), at each site.

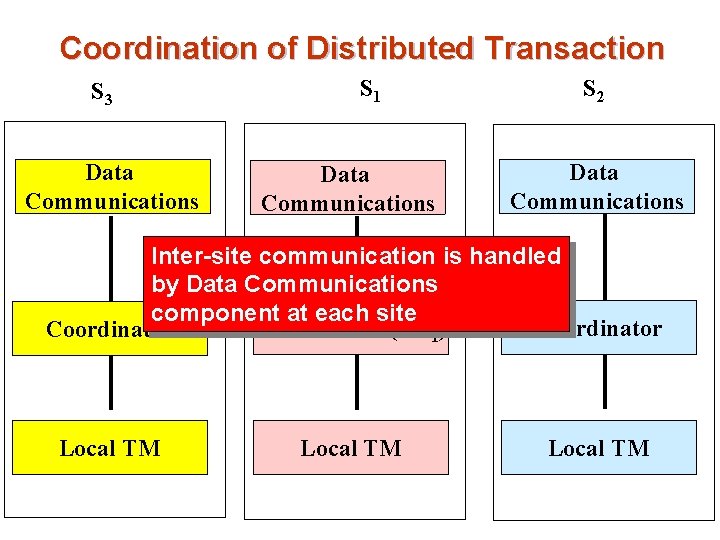

Inter-site communication is handled by the Data Communications component at each site.

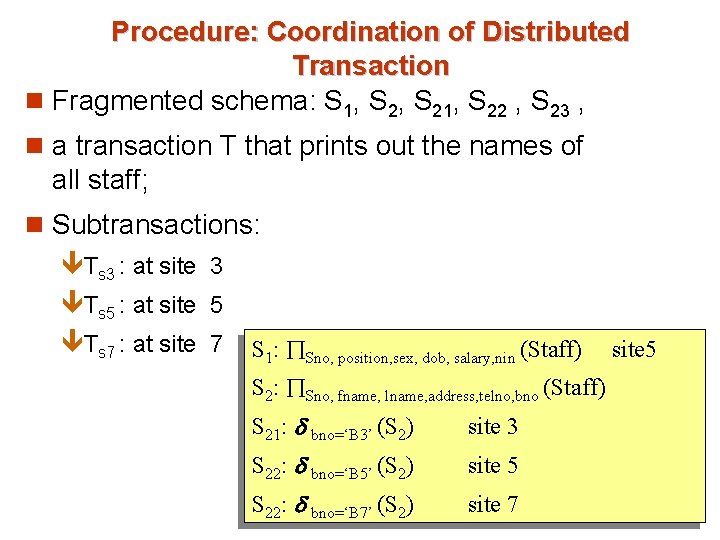

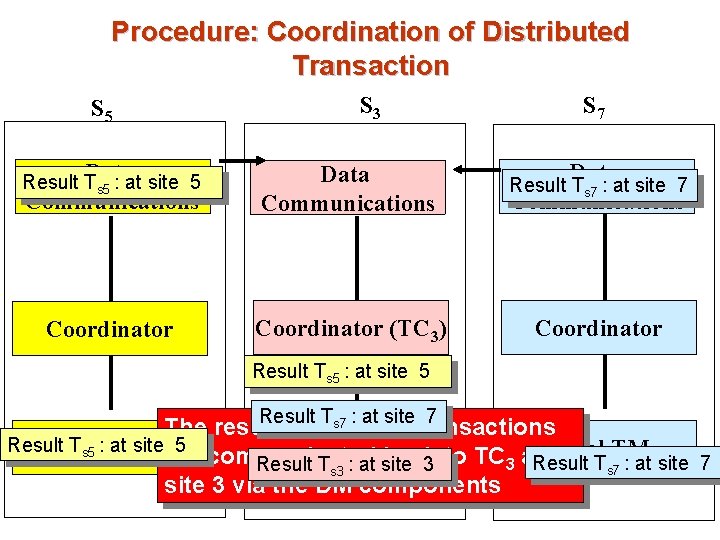

Procedure: Coordination of Distributed Transactions
- Fragmented schema: S1, S21, S22, S23, where
  - $S_1 = \Pi_{sno,\,position,\,sex,\,dob,\,salary,\,nin}(Staff)$ (site 5)
  - $S_2 = \Pi_{sno,\,fname,\,lname,\,address,\,telno,\,bno}(Staff)$
  - $S_{21} = \sigma_{bno='B3'}(S_2)$ at site 3
  - $S_{22} = \sigma_{bno='B5'}(S_2)$ at site 5
  - $S_{23} = \sigma_{bno='B7'}(S_2)$ at site 7
- A transaction T prints out the names of all staff.
- Subtransactions:
  - $T_{S3}$ at site 3
  - $T_{S5}$ at site 5
  - $T_{S7}$ at site 7

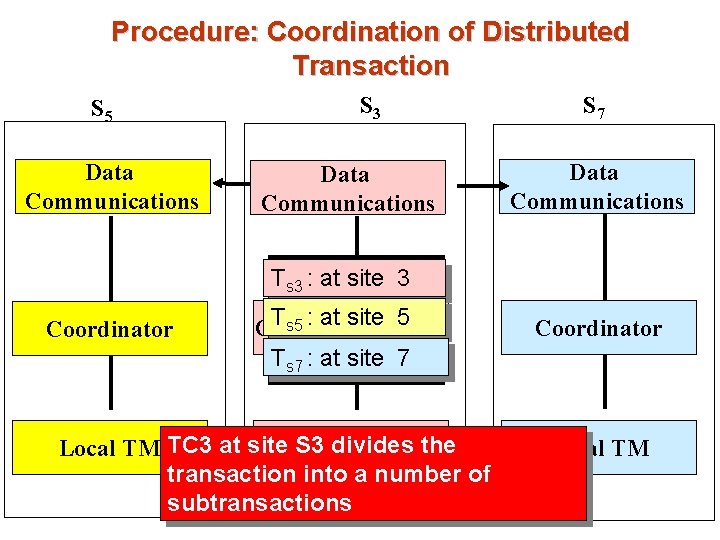

The coordinator at site S3 (TC3) divides the transaction T into a number of subtransactions: $T_{S3}$ at site 3, $T_{S5}$ at site 5 and $T_{S7}$ at site 7.

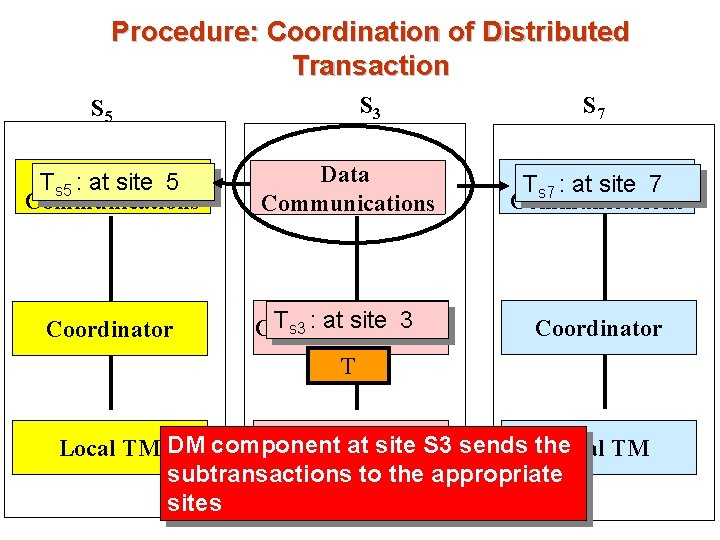

The DM component at site S3 sends the subtransactions to the appropriate sites.

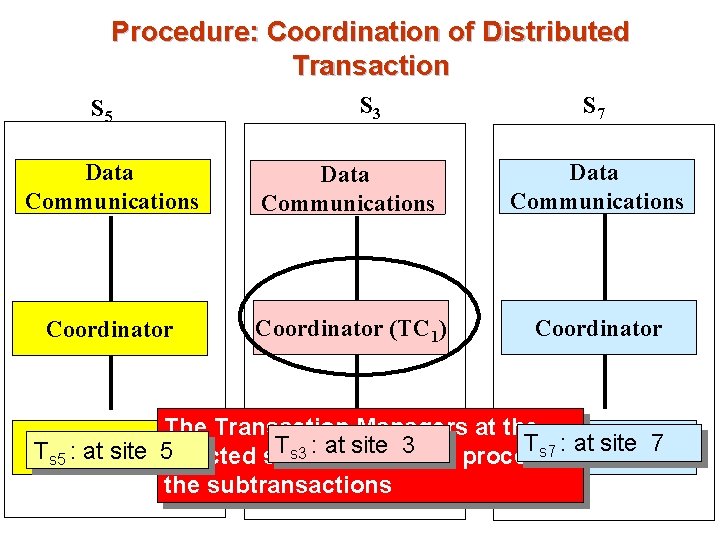

The transaction managers at the affected sites process the subtransactions $T_{S3}$, $T_{S5}$ and $T_{S7}$.

The results of the subtransactions ($T_{S3}$, $T_{S5}$, $T_{S7}$) are communicated back to TC3 via the DM components.
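The four steps above can be sketched as a single-process Python simulation. This is a minimal illustration under stated assumptions, not how a DDBMS implements coordination. `LocalTM`, `Coordinator`, `staff_names` and the sample rows are all hypothetical. Direct method calls stand in for the DM components, and no logging or commit protocol is modelled.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, str]
SubTxn = Callable[[List[Row]], List[str]]


@dataclass
class LocalTM:
    """Hypothetical local transaction manager that owns one fragment of S2."""
    site: int
    fragment: List[Row]

    def execute(self, subtxn: SubTxn) -> List[str]:
        # A subtransaction only ever sees this site's local data.
        return subtxn(self.fragment)


@dataclass
class Coordinator:
    """Hypothetical transaction coordinator (TC3 at site S3 in the example)."""
    site: int
    local_tms: Dict[int, LocalTM]  # stands in for the DM components

    def run(self, subtxn: SubTxn, fragment_sites: List[int]) -> List[str]:
        results: List[str] = []
        for s in fragment_sites:
            # Divide T into one subtransaction per site, "send" it there,
            # and collect its result back at the coordinator.
            results.extend(self.local_tms[s].execute(subtxn))
        return results


def staff_names(rows: List[Row]) -> List[str]:
    """The work done by each subtransaction: project the staff names."""
    return [f"{r['fname']} {r['lname']}" for r in rows]


# Hypothetical sample data: one horizontal fragment of S2 per branch.
tms = {
    3: LocalTM(3, [{"sno": "s1", "fname": "Ana", "lname": "Lee", "bno": "B3"}]),
    5: LocalTM(5, [{"sno": "s2", "fname": "Bo", "lname": "Kim", "bno": "B5"}]),
    7: LocalTM(7, [{"sno": "s3", "fname": "Cy", "lname": "Roe", "bno": "B7"}]),
}
tc3 = Coordinator(site=3, local_tms=tms)
names = tc3.run(staff_names, fragment_sites=[3, 5, 7])
print(names)  # ['Ana Lee', 'Bo Kim', 'Cy Roe']
```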

Concurrency Control in Distributed Databases

Problems in Concurrent Use of a DDBMS
- Lost update
- Uncommitted dependency
- Inconsistent analysis
- Multiple-copy consistency problem

Concurrency Control in DDB
- Modify the concurrency control schemes (locking and timestamping) for use in a distributed environment.
- We assume that each site participates in the execution of a commit protocol to ensure global transaction atomicity.
- We assume (for now) that all replicas of any item are updated.

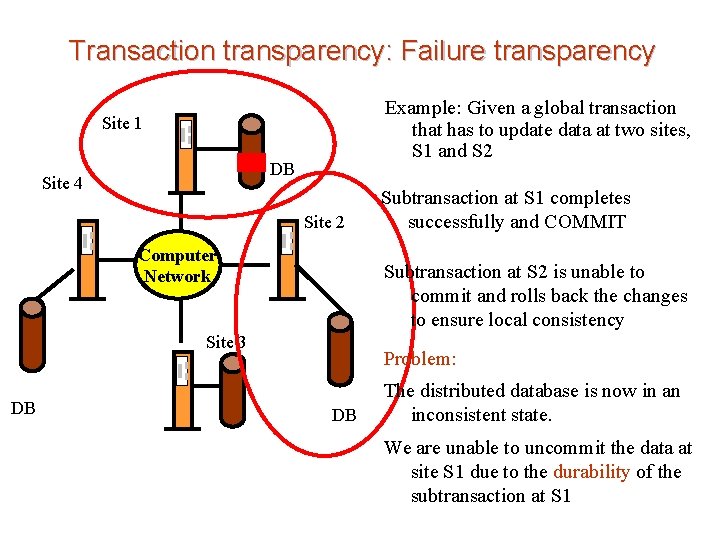

Transaction transparency: failure transparency
- Example: a global transaction has to update data at two sites, S1 and S2.
  - The subtransaction at S1 completes successfully and commits.
  - The subtransaction at S2 is unable to commit and rolls back its changes to ensure local consistency.
- Problem: the distributed database is now in an inconsistent state. We cannot uncommit the data at site S1, because the committed subtransaction at S1 is durable.

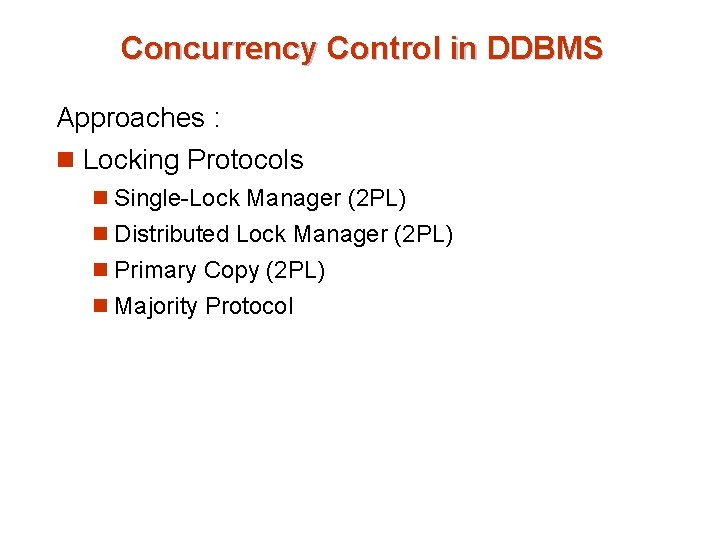

Concurrency Control in DDBMS
Approaches:
- Locking protocols
  - Single lock manager (2PL)
  - Distributed lock manager (2PL)
  - Primary copy (2PL)
  - Majority protocol

Locking Protocols

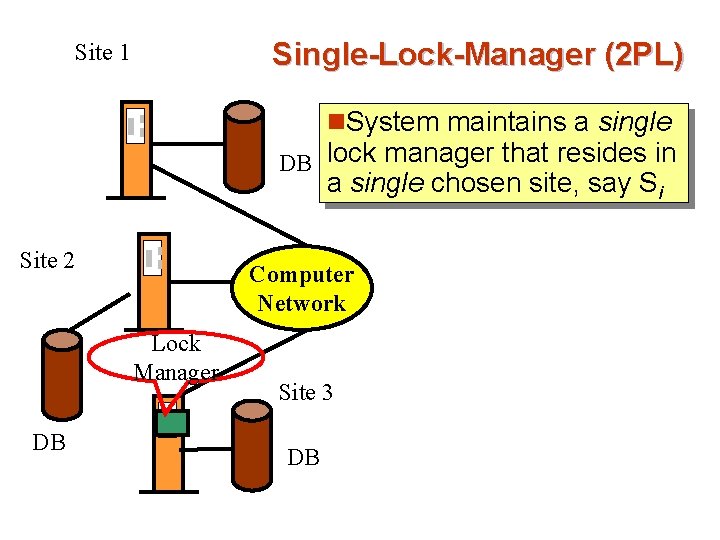

Single-Lock-Manager (2PL)
- The system maintains a single lock manager that resides in a single chosen site, say Si.

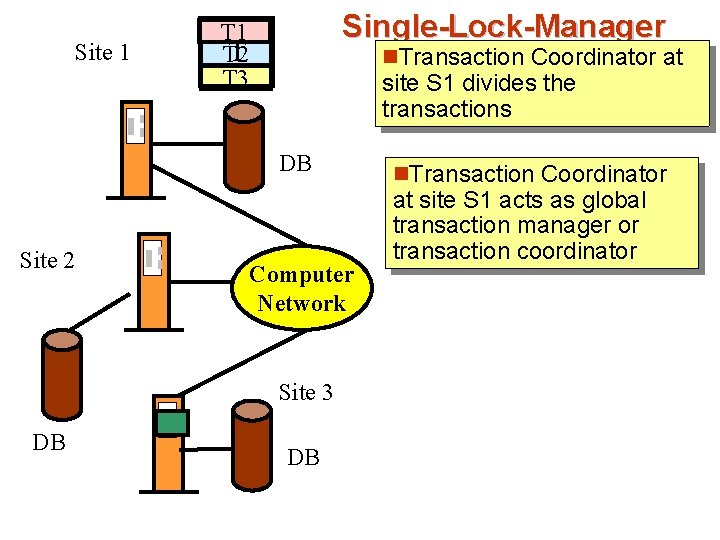

- The transaction coordinator at site S1 divides the transaction T into subtransactions T1, T2 and T3.
- The transaction coordinator at site S1 acts as the global transaction manager.

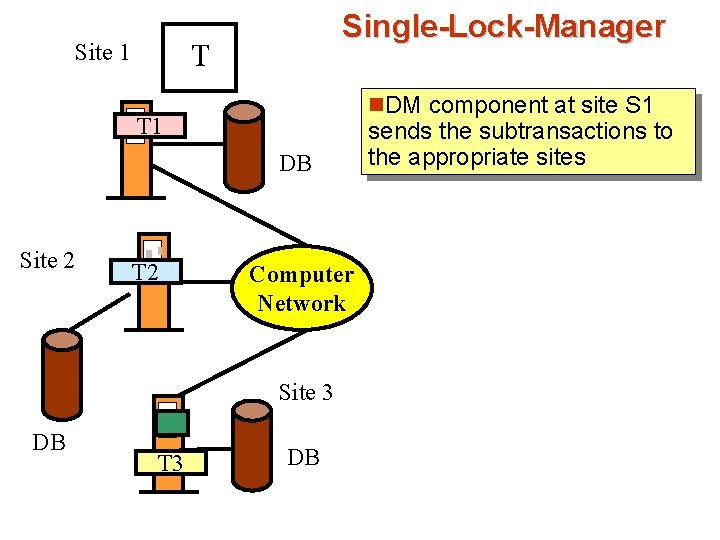

- The DM component at site S1 sends the subtransactions to the appropriate sites.

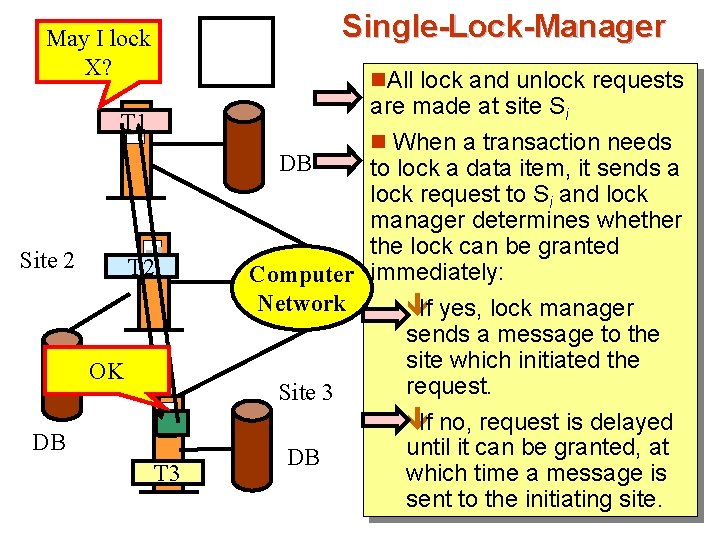

- All lock and unlock requests are made at site Si.
- When a transaction needs to lock a data item, it sends a lock request to Si, and the lock manager determines whether the lock can be granted immediately:
  - If yes, the lock manager sends a message to the site that initiated the request.
  - If no, the request is delayed until it can be granted, at which time a message is sent to the initiating site.

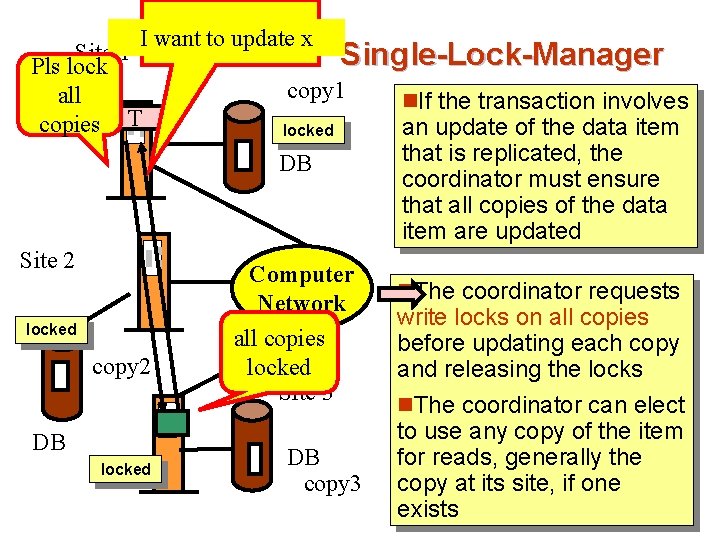

- If the transaction involves an update of a data item that is replicated, the coordinator must ensure that all copies of the data item are updated.
- The coordinator requests write locks on all copies, then updates each copy and releases the locks.
- For reads, the coordinator can elect to use any copy of the item, generally the copy at its own site if one exists.
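Below is a minimal sketch of the lock manager at Si. It assumes shared (`S`) and exclusive (`X`) lock modes and a FIFO wait queue per item. The class name `SingleLockManager`, its `request`/`release` methods and the `x@S1` naming of copies are hypothetical. The boolean return value stands in for the reply message sent to the initiating site.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple


class SingleLockManager:
    """Hypothetical central lock manager at the chosen site Si.

    Supports shared ('S') and exclusive ('X') locks. A request that cannot be
    granted immediately is queued (FIFO) until it becomes grantable.
    """

    def __init__(self) -> None:
        self.holders: Dict[str, Dict[str, str]] = defaultdict(dict)  # item -> {txn: mode}
        self.queue: Dict[str, Deque[Tuple[str, str]]] = defaultdict(deque)

    def _compatible(self, item: str, txn: str, mode: str) -> bool:
        others = [m for t, m in self.holders[item].items() if t != txn]
        return not others or (mode == "S" and all(m == "S" for m in others))

    def request(self, txn: str, item: str, mode: str) -> bool:
        """True = granted now (reply sent to the initiating site);
        False = delayed until it can be granted."""
        if not self.queue[item] and self._compatible(item, txn, mode):
            self.holders[item][txn] = mode
            return True
        self.queue[item].append((txn, mode))
        return False

    def release(self, txn: str, item: str) -> List[str]:
        """Release txn's lock; return the queued transactions granted as a result."""
        self.holders[item].pop(txn, None)
        granted: List[str] = []
        while self.queue[item] and self._compatible(item, *self.queue[item][0]):
            waiting_txn, mode = self.queue[item].popleft()
            self.holders[item][waiting_txn] = mode
            granted.append(waiting_txn)
        return granted


lm = SingleLockManager()
copies = ["x@S1", "x@S2", "x@S3"]  # hypothetical names for the copies of x
print(all(lm.request("T1", c, "X") for c in copies))  # True: all copies write-locked
print(lm.request("T2", "x@S1", "S"))  # False: T2's read is delayed
print(lm.release("T1", "x@S1"))       # ['T2']: granted once T1 releases
```

Because every request passes through one lock table, the waits-for information also lives in one place. This is why deadlock handling is simple in this scheme, and also why Si becomes a bottleneck.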

Single-Lock-Manager Approach
- Advantages:
  - Simple implementation
  - Simple deadlock handling
- Disadvantages:
  - Bottleneck: the lock manager site becomes a bottleneck.
  - Vulnerability: the system is vulnerable to failure of the lock manager site.

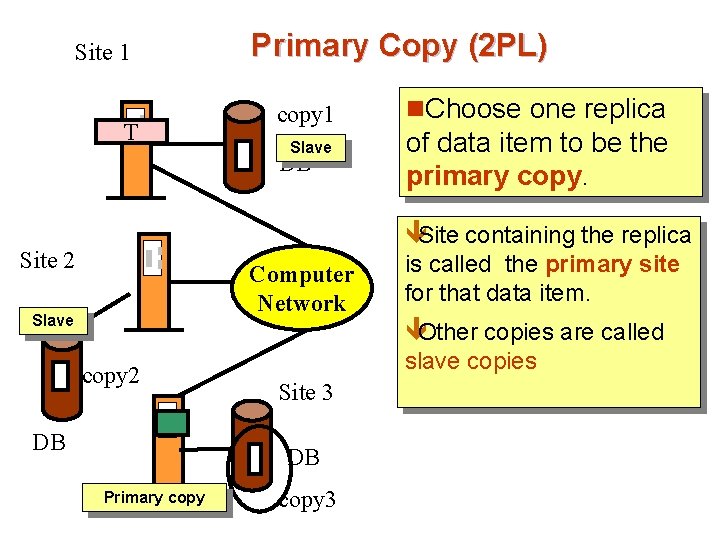

Primary Copy (2PL)
- Choose one replica of each data item to be the primary copy.
  - The site containing that replica is called the primary site for the data item.
  - The other copies are called slave copies.

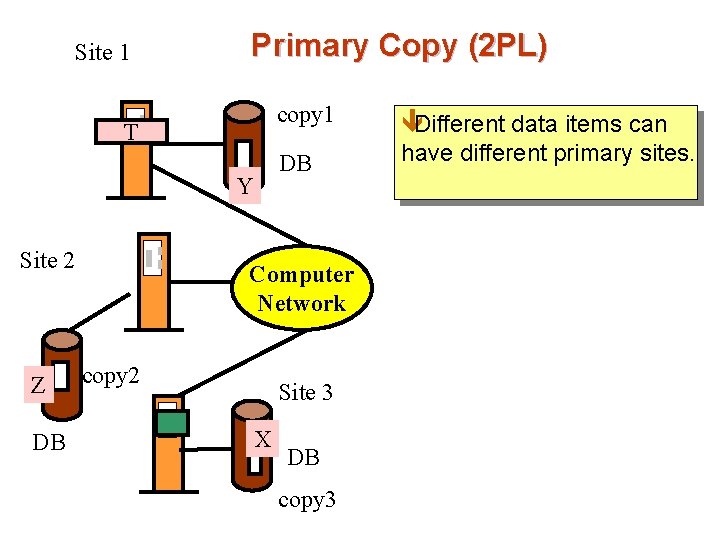

- Different data items can have different primary sites.

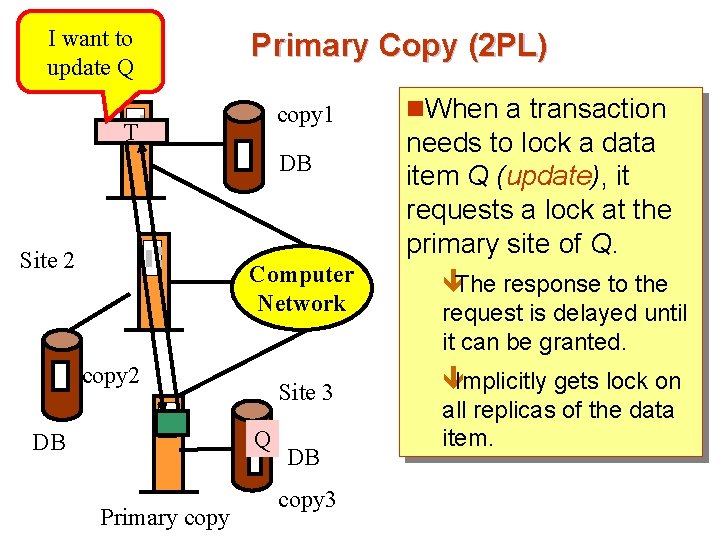

- When a transaction needs to lock a data item Q (for update), it requests a lock at the primary site of Q.
  - The response to the request is delayed until the lock can be granted.
  - Locking the primary copy implicitly locks all replicas of the data item.

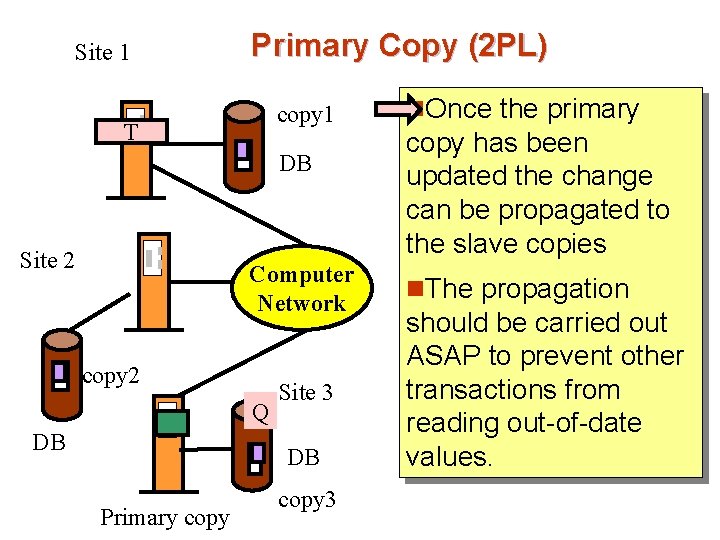

- Once the primary copy has been updated, the change can be propagated to the slave copies.
- Propagation should be carried out as soon as possible, to prevent other transactions from reading out-of-date values.
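The primary-copy steps can be sketched as follows. `PrimaryCopyStore` and its methods are illustrative names, not an established API. The model keeps only exclusive locks and propagates updates to the slave copies synchronously inside `write`, which is the simplest reading of "as soon as possible". A real system may propagate asynchronously.

```python
from typing import Dict, List


class PrimaryCopyStore:
    """Hypothetical primary-copy 2PL model (exclusive locks only, for brevity).

    Every item has one primary site; lock requests go only to that site and
    implicitly cover all replicas of the item.
    """

    def __init__(self, primary: Dict[str, str], replicas: Dict[str, List[str]]) -> None:
        self.primary = primary                     # item -> primary site
        self.replicas = replicas                   # item -> all sites holding a copy
        self.locks: Dict[str, str] = {}            # lock table at each item's primary site
        self.data: Dict[str, Dict[str, int]] = {   # site -> {item: value}
            s: {} for sites in replicas.values() for s in sites
        }

    def lock(self, txn: str, item: str) -> bool:
        """Ask the primary site of `item`; False means the request must wait."""
        holder = self.locks.get(item)
        if holder not in (None, txn):
            return False
        self.locks[item] = txn
        return True

    def write(self, txn: str, item: str, value: int) -> None:
        if self.locks.get(item) != txn:
            raise PermissionError(f"{txn} must lock {item} at its primary site first")
        self.data[self.primary[item]][item] = value
        # Propagate to the slave copies as soon as possible (here: immediately).
        for site in self.replicas[item]:
            if site != self.primary[item]:
                self.data[site][item] = value

    def unlock(self, txn: str, item: str) -> None:
        if self.locks.get(item) == txn:
            del self.locks[item]


store = PrimaryCopyStore(primary={"Q": "S3"}, replicas={"Q": ["S1", "S2", "S3"]})
print(store.lock("T1", "Q"))  # True: granted by the primary site S3
print(store.lock("T2", "Q"))  # False: T2 must wait
store.write("T1", "Q", 42)
store.unlock("T1", "Q")
print({site: vals["Q"] for site, vals in store.data.items()})  # {'S1': 42, 'S2': 42, 'S3': 42}
```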

- Benefit: can be used when data is selectively replicated, updates are infrequent, and sites do not always need the very latest version of the data.
- Drawback: if the primary site of Q fails, Q is inaccessible even though other sites containing a replica may be accessible.

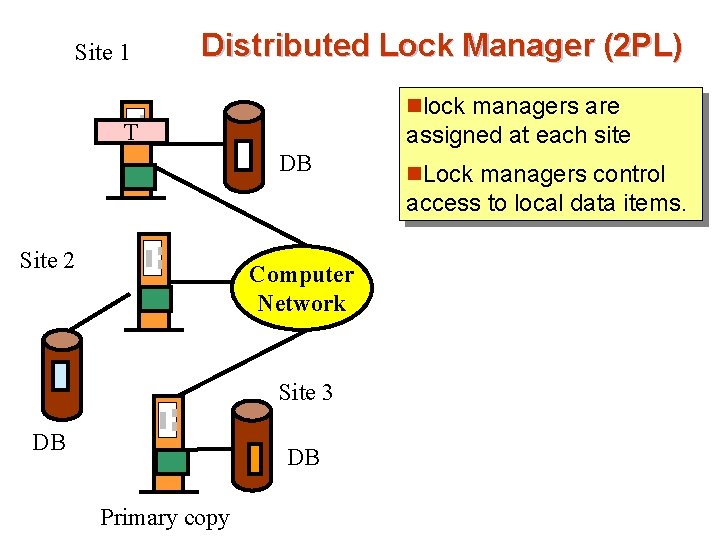

Distributed Lock Manager (2PL)
- A lock manager is assigned at each site.
- Each lock manager controls access to the data items stored locally.

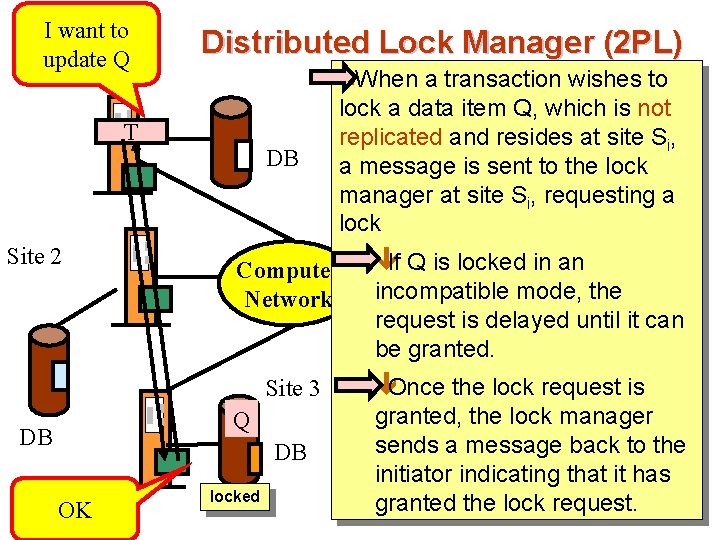

- When a transaction wishes to lock a data item Q that is not replicated and resides at site Si, a message requesting a lock is sent to the lock manager at site Si.
  - If Q is locked in an incompatible mode, the request is delayed until it can be granted.
  - Once the lock request is granted, the lock manager sends a message back to the initiator indicating that the lock request has been granted.

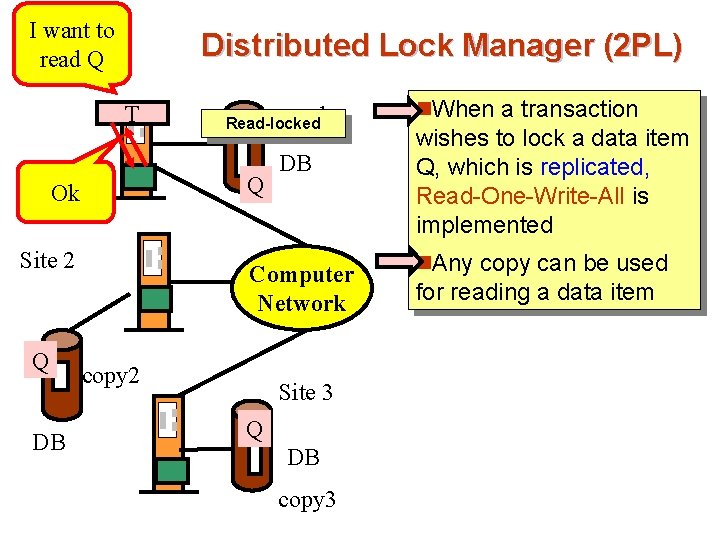

- When a transaction wishes to lock a data item Q that is replicated, the Read-One-Write-All (ROWA) scheme is used.
- Any copy can be used for reading a data item, so a read lock on one copy suffices.

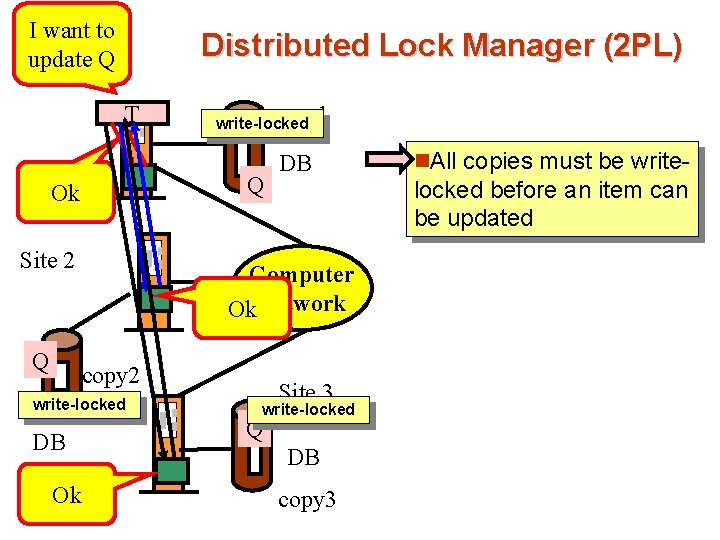

- All copies must be write-locked before an item can be updated.
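Read-One-Write-All over per-site lock managers can be sketched as below. `LocalLockManager`, `read_one` and `write_all` are hypothetical names. `write_all` keeps the locks it obtains and reports the sites where the transaction must still wait. It does this because, under 2PL, a transaction in its growing phase waits for a lock rather than releasing the ones it holds.

```python
from typing import Dict, List, Optional, Set, Tuple


class LocalLockManager:
    """Hypothetical lock manager for the copies stored at one site."""

    def __init__(self) -> None:
        self.locks: Dict[str, Tuple[str, Set[str]]] = {}  # item -> (mode, holders)

    def try_lock(self, txn: str, item: str, mode: str) -> bool:
        held_mode, holders = self.locks.get(item, ("S", set()))
        others = holders - {txn}
        if others and "X" in (mode, held_mode):
            return False  # incompatible mode: the request is delayed
        new_mode = "X" if "X" in (mode, held_mode) else "S"
        self.locks[item] = (new_mode, others | {txn})
        return True


def read_one(txn: str, item: str, copy_sites: List[str],
             lms: Dict[str, LocalLockManager], home: str) -> Optional[str]:
    """Read-one: S-lock a single copy, trying the local copy first."""
    for site in sorted(copy_sites, key=lambda s: s != home):
        if lms[site].try_lock(txn, item, "S"):
            return site
    return None


def write_all(txn: str, item: str, copy_sites: List[str],
              lms: Dict[str, LocalLockManager]) -> List[str]:
    """Write-all: X-lock every copy; return the sites where txn must still wait."""
    return [s for s in copy_sites if not lms[s].try_lock(txn, item, "X")]


sites = ["S1", "S2", "S3"]
lms = {s: LocalLockManager() for s in sites}
print(read_one("T1", "Q", sites, lms, home="S1"))  # S1: only the local copy is read-locked
print(write_all("T2", "Q", sites, lms))            # ['S1']: T2 waits for T1's read lock
```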

- Advantage: the work is distributed, and the scheme can be made robust to failures.
- Disadvantage: deadlock detection is more complicated because there are multiple lock managers.

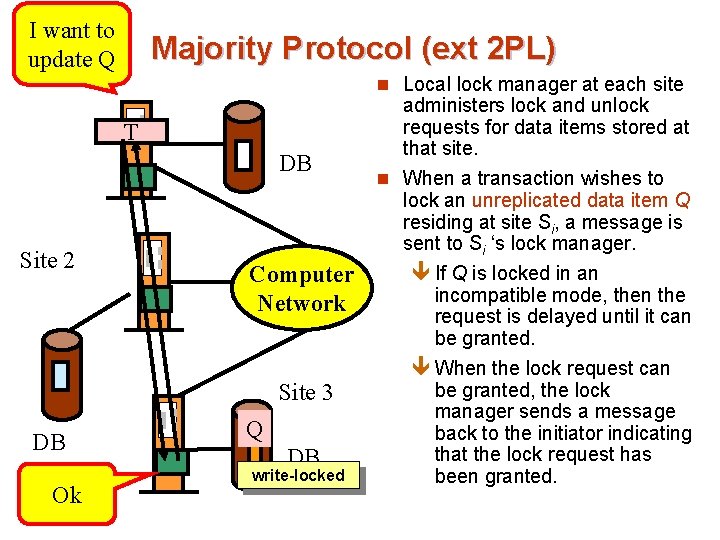

Majority Protocol (extension of 2PL)
- A local lock manager at each site administers lock and unlock requests for the data items stored at that site.
- When a transaction wishes to lock an unreplicated data item Q residing at site Si, a message is sent to Si's lock manager.
  - If Q is locked in an incompatible mode, the request is delayed until it can be granted.
  - When the lock request can be granted, the lock manager sends a message back to the initiator indicating that the lock request has been granted.

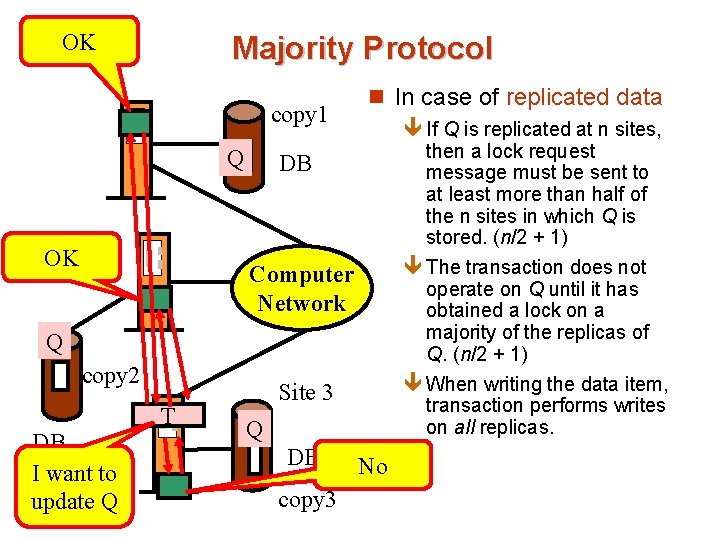

- For replicated data:
  - If Q is replicated at n sites, a lock request message must be sent to more than half of the n sites at which Q is stored, that is, to at least $\lfloor n/2 \rfloor + 1$ sites.
  - The transaction does not operate on Q until it has obtained locks on a majority ($\lfloor n/2 \rfloor + 1$) of the replicas of Q.
  - When writing the data item, the transaction performs writes on all replicas.
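The majority rule can be sketched as follows. It assumes exclusive locks only and models each site's lock table as a dictionary. `majority`, `lock_majority` and the `up` set of reachable sites are hypothetical. The example also shows that a lock can be obtained while one of the three sites is unavailable.

```python
from typing import Dict, List, Set, Tuple


def majority(n: int) -> int:
    """Smallest number of copies that is more than half of n."""
    return n // 2 + 1


def lock_majority(txn: str, item: str, copy_sites: List[str],
                  locks: Dict[str, Dict[str, str]], up: Set[str]) -> Tuple[bool, List[str]]:
    """Hypothetical majority-protocol lock request (exclusive locks only).

    `locks[site][item]` is the holder at that site; `up` is the set of sites
    currently reachable. Returns (majority obtained?, sites locked by txn).
    """
    need = majority(len(copy_sites))
    got: List[str] = []
    for site in copy_sites:
        if site not in up:
            continue  # an unavailable site simply does not answer
        if locks[site].get(item) in (None, txn):
            locks[site][item] = txn
            got.append(site)
            if len(got) == need:
                break
    return len(got) >= need, got


sites = ["S1", "S2", "S3"]
locks: Dict[str, Dict[str, str]] = {s: {} for s in sites}
ok, got = lock_majority("T1", "Q", sites, locks, up={"S1", "S3"})
print(majority(3), ok, got)  # 2 True ['S1', 'S3']
```

Only after `ok` is true may the transaction operate on Q. As the protocol above states, a write is then applied to all replicas, which this sketch does not model.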

- Benefit: can be used even when some sites are unavailable.

- Drawback: there is a potential for deadlock even with a single data item.
  - Example: a system with four sites and full replication. T1 and T2 wish to lock data item Q in exclusive mode.
  - T1 succeeds in locking Q at sites S1 and S3, while T2 succeeds in locking Q at sites S2 and S4.
  - Each needs a third lock to reach a majority (3 of 4), but every remaining copy is held by the other transaction. Both must wait, hence a deadlock has occurred.
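The four-site deadlock can be reproduced in a few lines of Python. This hypothetical sketch fixes the lock state from the example and derives each transaction's wait-for set. A transaction waits only if the copies it holds plus the free copies cannot reach a majority.

```python
from typing import Dict, Set

SITES = ["S1", "S2", "S3", "S4"]  # Q is fully replicated at four sites
NEED = len(SITES) // 2 + 1        # a majority is 3 of 4 copies

# Lock state from the example: each transaction holds two exclusive locks on Q.
holder: Dict[str, str] = {"S1": "T1", "S3": "T1", "S2": "T2", "S4": "T2"}


def waits_for(txn: str) -> Set[str]:
    """Transactions that `txn` must wait for before it can hold a majority."""
    held = [s for s in SITES if holder.get(s) == txn]
    free = [s for s in SITES if s not in holder]
    if len(held) + len(free) >= NEED:
        return set()  # it can still reach a majority without waiting
    return {holder[s] for s in SITES if holder.get(s) not in (None, txn)}


wfg = {t: waits_for(t) for t in ("T1", "T2")}
print(NEED, wfg)  # 3 {'T1': {'T2'}, 'T2': {'T1'}}: a cycle, i.e. deadlock
```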

Timestamping

- Timestamp-based concurrency-control protocols can be used in distributed systems.
- Each transaction must be given a unique timestamp.
- Methods for generating unique timestamps:
  - Centralized scheme
  - Distributed scheme

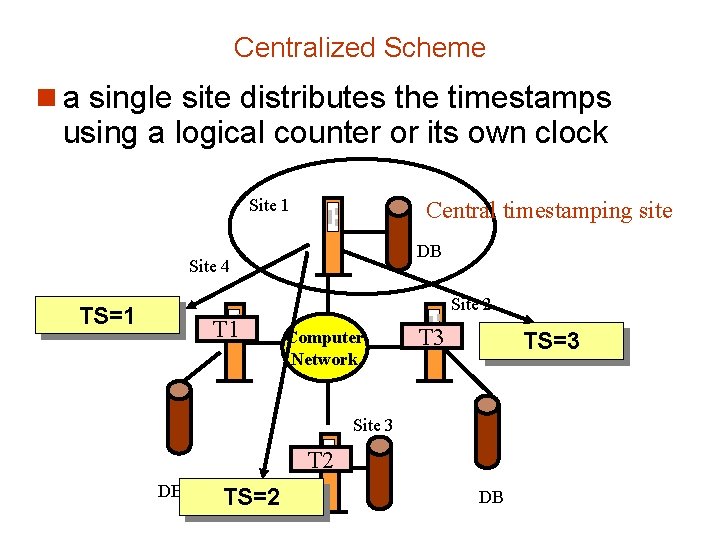

Centralized Scheme
- A single site distributes the timestamps, using a logical counter or its own clock.

(Figure: a central timestamping site issues TS=1, TS=2 and TS=3 to transactions T1, T2 and T3 at other sites.)

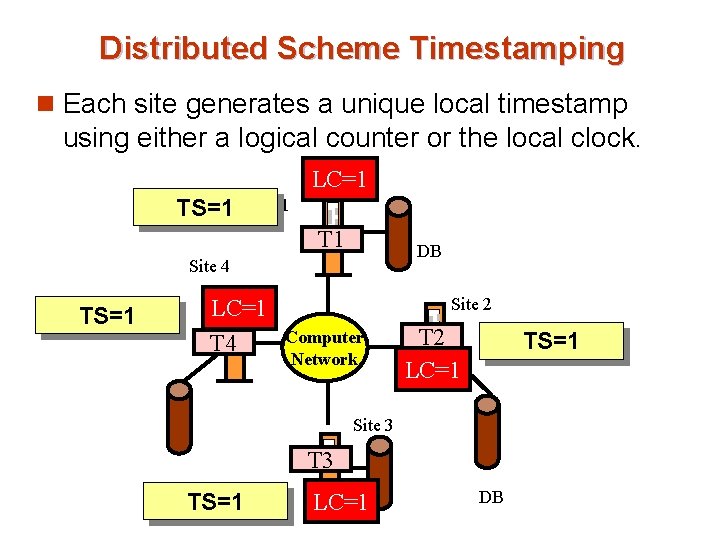

Distributed Scheme
- Each site generates a unique local timestamp using either a logical counter or the local clock.

(Figure: every site starts with LC=1, so T1, T2, T3 and T4 at sites 1 to 4 all receive local timestamp 1.)

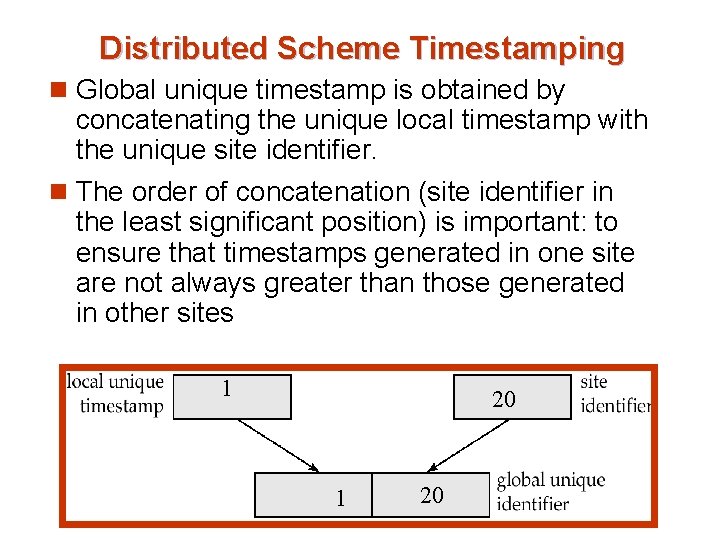

- A globally unique timestamp is obtained by concatenating the unique local timestamp with the unique site identifier.
- The order of concatenation (site identifier in the least significant position) is important. It ensures that the timestamps generated at one site are not always greater than those generated at other sites.
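Both schemes can be sketched briefly. `CentralTimestampSite`, `global_ts` and `wrong_order_ts` are hypothetical names. Python tuples, which compare lexicographically, stand in for bit-level concatenation, with the site identifier as the least significant component.

```python
import itertools
from typing import Iterator, Tuple


class CentralTimestampSite:
    """Centralized scheme: one site hands out timestamps from a logical counter."""

    def __init__(self) -> None:
        self._counter: Iterator[int] = itertools.count(1)

    def next_timestamp(self) -> int:
        return next(self._counter)


def global_ts(local_ts: int, site_id: int) -> Tuple[int, int]:
    """Distributed scheme: concatenate <local timestamp, site id>.

    The site id is the least significant part, so it only breaks ties
    between equal local timestamps.
    """
    return (local_ts, site_id)


def wrong_order_ts(local_ts: int, site_id: int) -> Tuple[int, int]:
    """Site id in the MOST significant position (the order to avoid)."""
    return (site_id, local_ts)


central = CentralTimestampSite()
print([central.next_timestamp() for _ in range(3)])  # [1, 2, 3]

# Every site starts with local timestamp 1, as in the figure; the site id
# makes the global timestamps unique.
print(sorted(global_ts(1, s) for s in (4, 2, 3, 1)))  # [(1, 1), (1, 2), (1, 3), (1, 4)]

# With the wrong order, site 2's FIRST timestamp beats site 1's 100th one.
print(wrong_order_ts(1, 2) > wrong_order_ts(100, 1))  # True
print(global_ts(1, 2) > global_ts(100, 1))            # False
```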

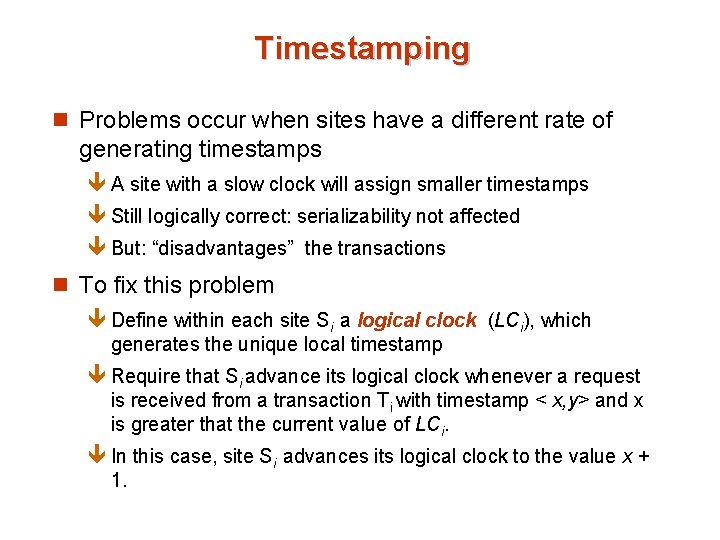

- Problems occur when sites generate timestamps at different rates:
  - A site with a slow clock will assign smaller timestamps.
  - This is still logically correct: serializability is not affected.
  - But it "disadvantages" the transactions.
- To fix this problem:
  - Define within each site Si a logical clock (LCi), which generates the unique local timestamp.
  - Require that Si advance its logical clock whenever a request is received from a transaction Ti with timestamp <x, y> and x is greater than the current value of LCi.
  - In this case, site Si advances its logical clock to the value x + 1.
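The logical-clock fix can be sketched as below. `SiteClock` is a hypothetical name. The sketch assumes LCi holds the value of the most recently issued local timestamp, matching the example figure where site 1 shows LC=5 and TS=5, so issuing a new timestamp first increments LCi.

```python
from typing import Tuple

Timestamp = Tuple[int, int]  # <x, y> = <local timestamp, site id>


class SiteClock:
    """Logical clock LCi at site Si (a sketch of the fix described above)."""

    def __init__(self, site_id: int, lc: int = 0) -> None:
        self.site_id = site_id
        self.lc = lc  # current value of LCi

    def new_timestamp(self) -> Timestamp:
        """Advance LCi and stamp a new local transaction with <LCi, i>."""
        self.lc += 1
        return (self.lc, self.site_id)

    def on_request(self, ts: Timestamp) -> None:
        """A transaction with timestamp <x, y> sends a request to this site."""
        x, _y = ts
        if x > self.lc:
            self.lc = x + 1


# Hypothetical replay of the example figure: T1 gets timestamp 5 at site 1,
# and its subtransactions visit site 4 (LC=4) and site 2 (LC=3).
site1, site4, site2 = SiteClock(1, lc=4), SiteClock(4, lc=4), SiteClock(2, lc=3)
t1 = site1.new_timestamp()
site4.on_request(t1)
site2.on_request(t1)
print(t1, site4.lc, site2.lc)      # (5, 1) 6 6
print(site2.new_timestamp() > t1)  # True: the slow site no longer lags behind
```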

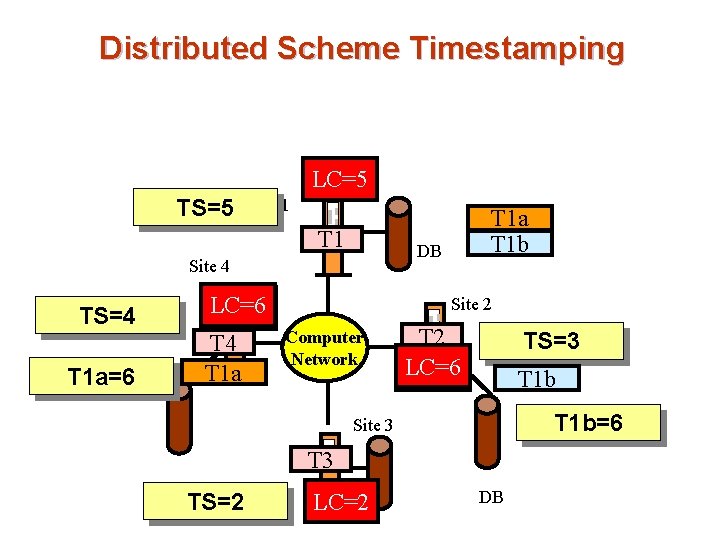

(Figure: T1, timestamped 5 at site 1 (LC=5), sends subtransactions T1a and T1b to sites 4 and 2, whose logical clocks (4 and 3) are behind; both sites advance their clocks to 6. Site 3 (LC=2) receives no request and is unchanged.)

Deadlock Handling

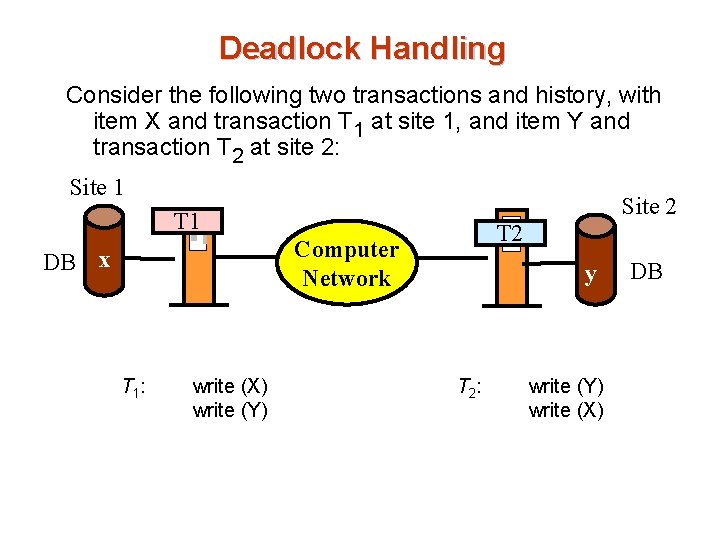

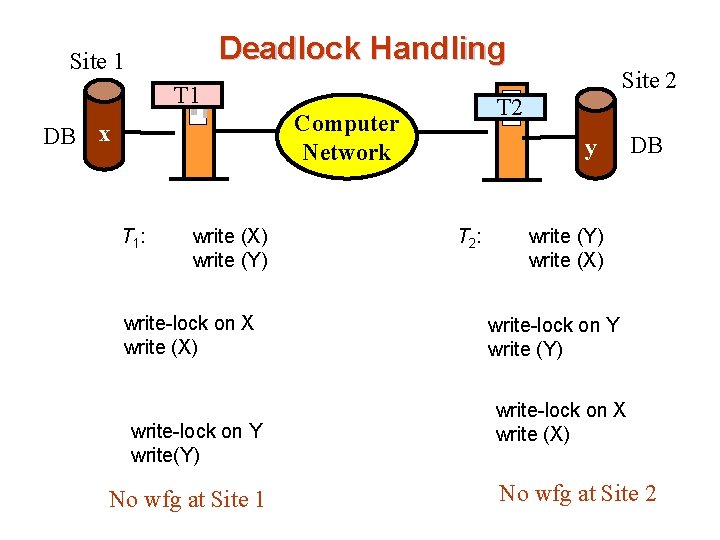

Consider the following two transactions and history, with item X and transaction T1 at site 1, and item Y and transaction T2 at site 2:
- T1: write(X), write(Y)
- T2: write(Y), write(X)

With the locks made explicit, the two transactions are:
- T1 (site 1): write-lock on X, write(X), write-lock on Y, write(Y)
- T2 (site 2): write-lock on Y, write(Y), write-lock on X, write(X)

Once T1 has locked X and T2 has locked Y, neither site has a wait-for graph (wfg) edge yet.

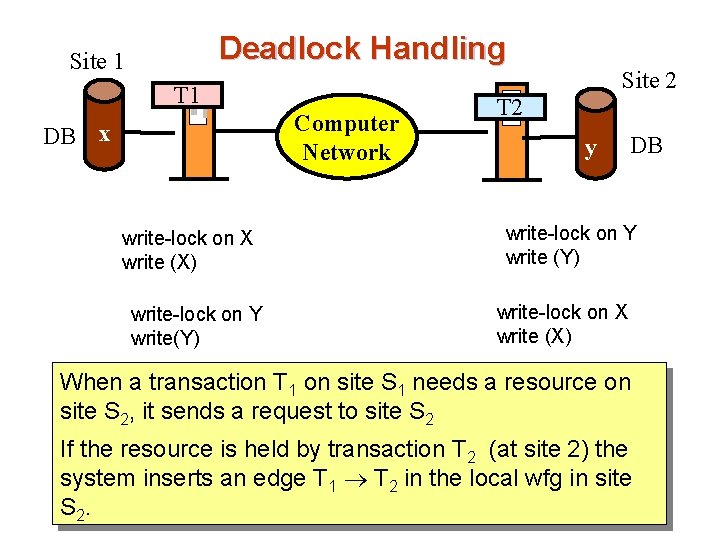

When a transaction T1 at site S1 needs a resource at site S2, it sends a request to site S2. If the resource is held by transaction T2 (at site 2), the system inserts an edge T1 → T2 in the local wfg at site S2.

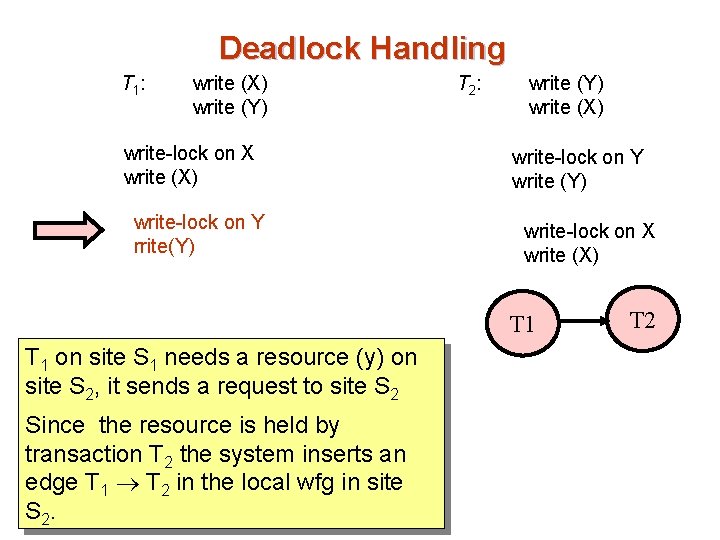

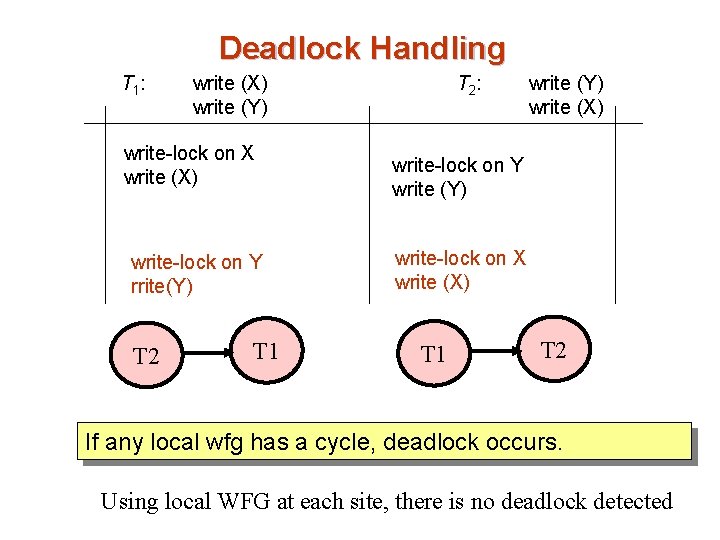

T1 at site S1 needs a resource (Y) at site S2, so it sends a request to site S2. Since Y is held by transaction T2, the system inserts an edge T1 → T2 in the local wfg at site S2.

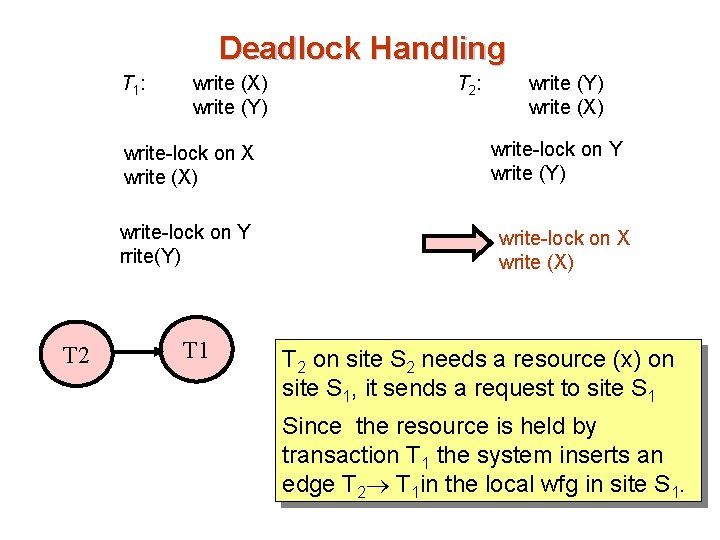

T2 at site S2 needs a resource (X) at site S1, so it sends a request to site S1. Since X is held by transaction T1, the system inserts an edge T2 → T1 in the local wfg at site S1.

If any local wfg has a cycle, a deadlock has occurred. Here, however, neither local wfg (T2 → T1 at site S1, T1 → T2 at site S2) contains a cycle, so no deadlock is detected using the local wfgs alone.

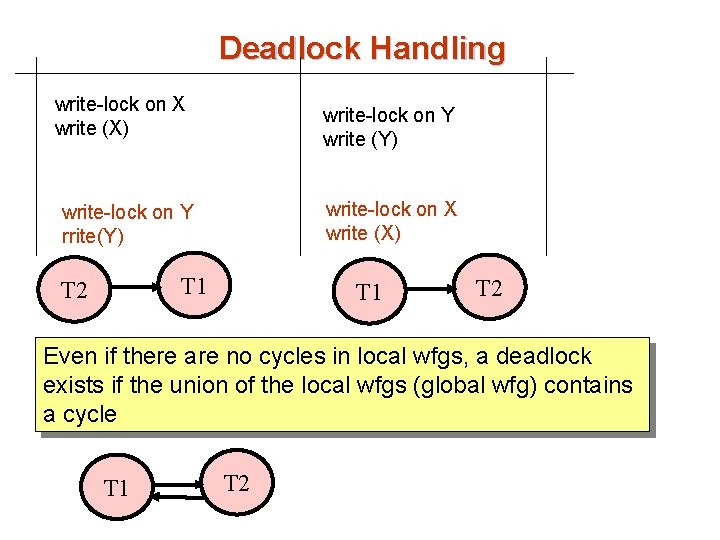

Even if there are no cycles in the local wfgs, a deadlock exists if the union of the local wfgs (the global wfg) contains a cycle. Here the global wfg contains the cycle T1 → T2 → T1.
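Detecting this deadlock requires forming the union of the local wfgs and searching it for a cycle. The sketch below assumes a wfg is a dictionary from a transaction to the set of transactions it waits for. `union` and `find_cycle` are hypothetical helpers, and the cycle search is a standard depth-first search.

```python
from typing import Dict, Iterable, List, Optional, Set

WFG = Dict[str, Set[str]]  # transaction -> transactions it waits for


def union(graphs: Iterable[WFG]) -> WFG:
    """Global wfg = union of the local wfgs (every node gets an entry)."""
    g: WFG = {}
    for local in graphs:
        for waiter, holders in local.items():
            g.setdefault(waiter, set()).update(holders)
            for h in holders:
                g.setdefault(h, set())
    return g


def find_cycle(graph: WFG) -> Optional[List[str]]:
    """Return one cycle as a list of transactions, or None if acyclic."""
    g = union([graph])
    on_stack: List[str] = []
    done: Set[str] = set()

    def dfs(u: str) -> Optional[List[str]]:
        on_stack.append(u)
        for v in sorted(g[u]):
            if v in on_stack:  # back edge: the path from v to u closes a cycle
                return on_stack[on_stack.index(v):] + [v]
            if v not in done:
                cycle = dfs(v)
                if cycle:
                    return cycle
        on_stack.pop()
        done.add(u)
        return None

    for start in sorted(g):
        if start not in done:
            cycle = dfs(start)
            if cycle:
                return cycle
    return None


site1 = {"T2": {"T1"}}  # local wfg at S1: T2 waits for T1 (item X)
site2 = {"T1": {"T2"}}  # local wfg at S2: T1 waits for T2 (item Y)
print(find_cycle(site1), find_cycle(site2))  # None None
print(find_cycle(union([site1, site2])))     # ['T1', 'T2', 'T1']
```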

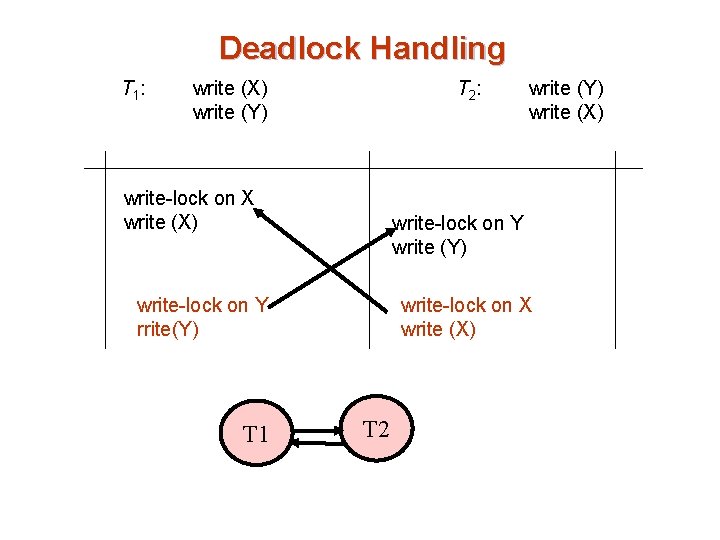


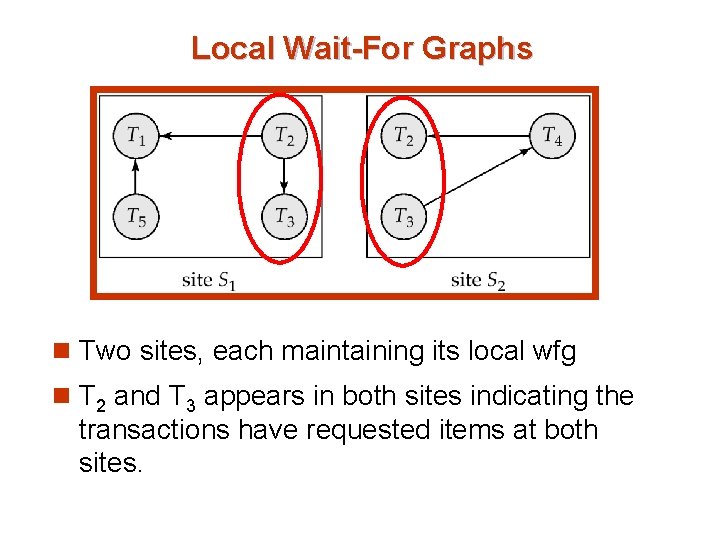

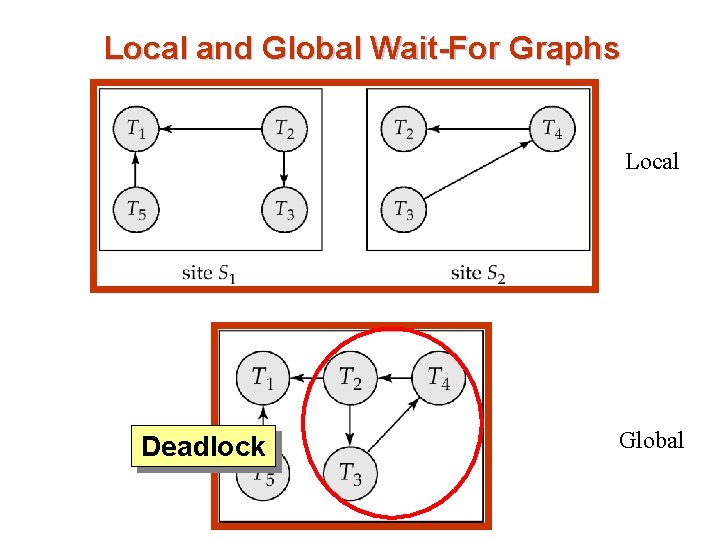

Local Wait-For Graphs
- Two sites, each maintaining its local wfg.
- T2 and T3 appear at both sites, indicating that these transactions have requested items at both sites.

Local and Global Wait-For Graphs

(Figure panels: the local wfgs, and the global wfg in which the deadlock appears.)

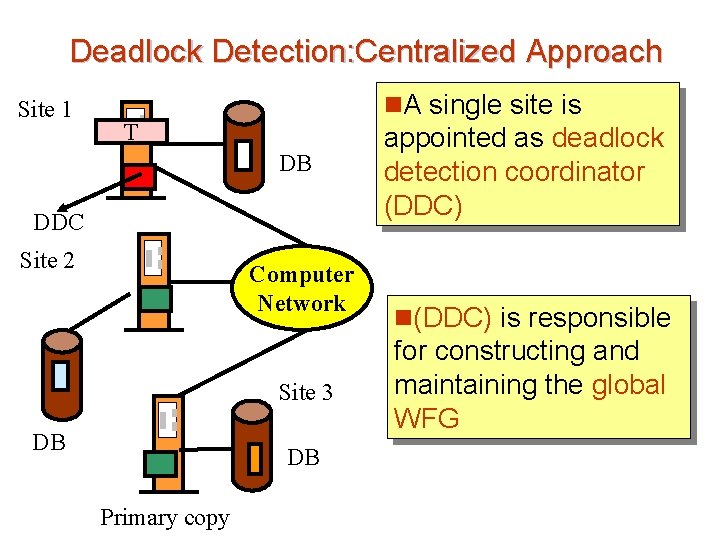

Deadlock Detection: Centralized Approach
- A single site is appointed as the deadlock detection coordinator (DDC).
- The DDC is responsible for constructing and maintaining the global WFG.

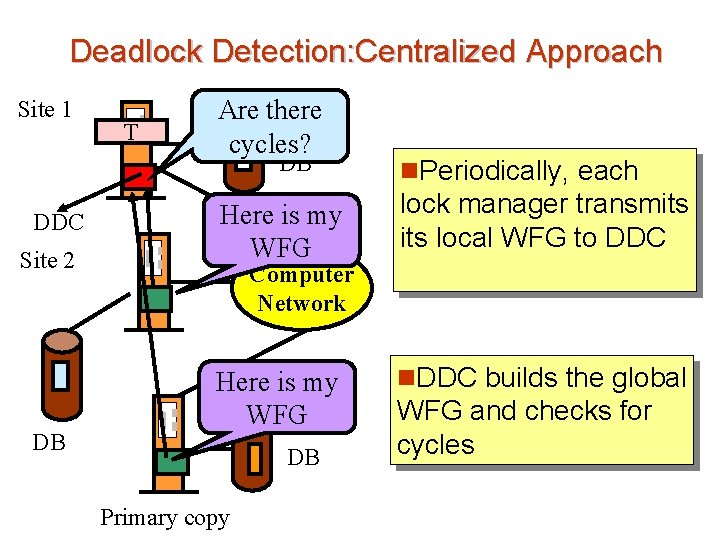

- Periodically, each lock manager transmits its local WFG to the DDC.
- The DDC builds the global WFG and checks for cycles.

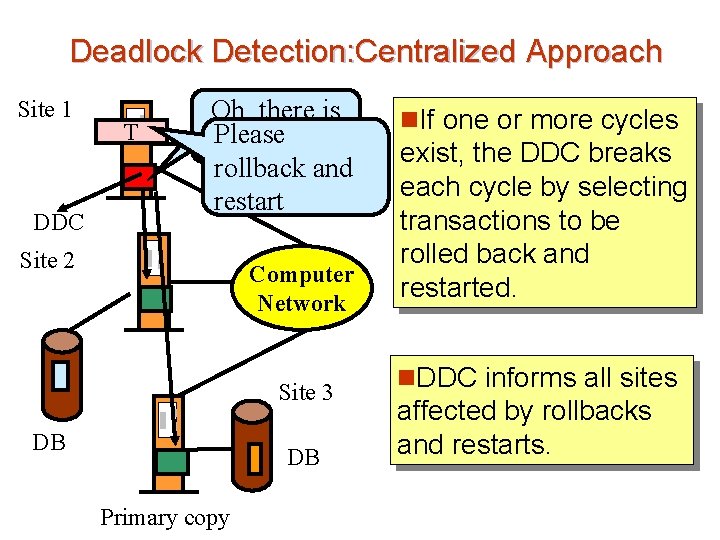

- If one or more cycles exist, the DDC breaks each cycle by selecting transactions to be rolled back and restarted.
- The DDC informs all sites affected by the rollbacks and restarts.

Centralized Approach
- The global wait-for graph can be (re)constructed when:
  - a new edge is inserted in or removed from one of the local wait-for graphs;
  - a number of changes have occurred in a local wait-for graph;
  - the coordinator needs to invoke cycle detection.
- If the coordinator finds a cycle, it selects a victim and notifies all sites. The sites roll back the victim transaction.

Types of WFG
- Because of communication delays, there are two kinds of wfg:
  - Real graph: describes the real, but unknown, state of the system at any instant of time.
  - Constructed graph: an approximation generated by the controller during the execution of its algorithm.

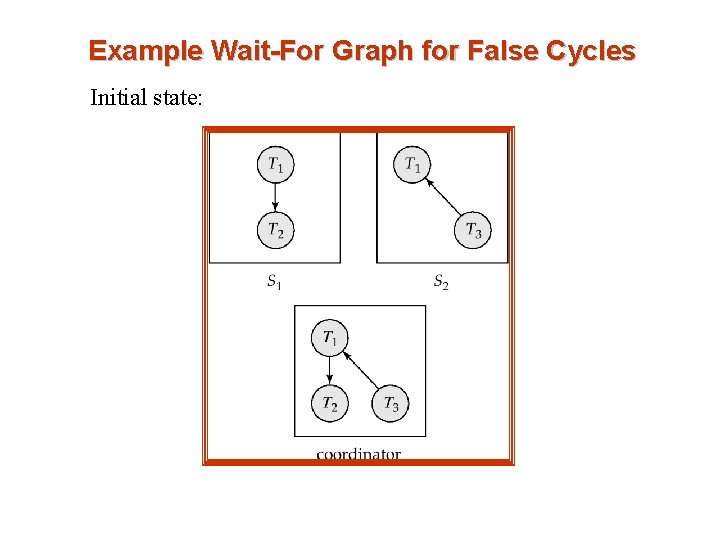

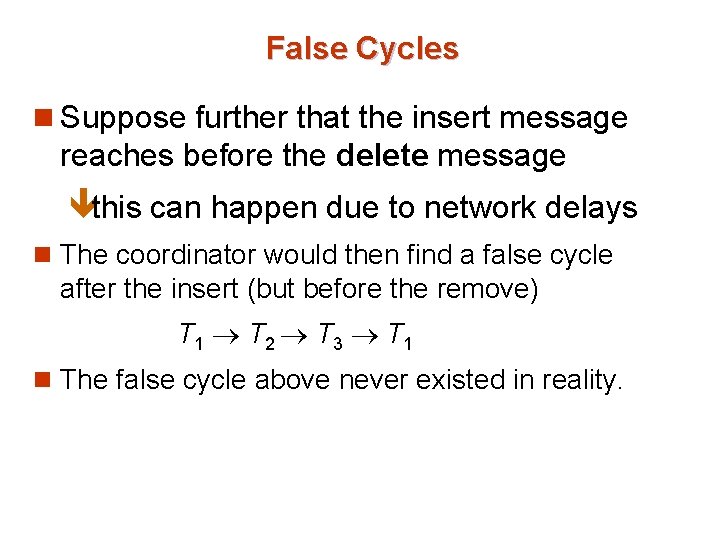

Example Wait-For Graph for False Cycles Initial state:

False Cycles
- Suppose that, starting from the state shown in the figure:
  1. T2 releases resources at S1, resulting in a "remove T1 → T2" message from the transaction manager at site S1 to the coordinator.
  2. Then T2 requests a resource held by T3 at site S2, resulting in an "insert T2 → T3" message from S2 to the coordinator.

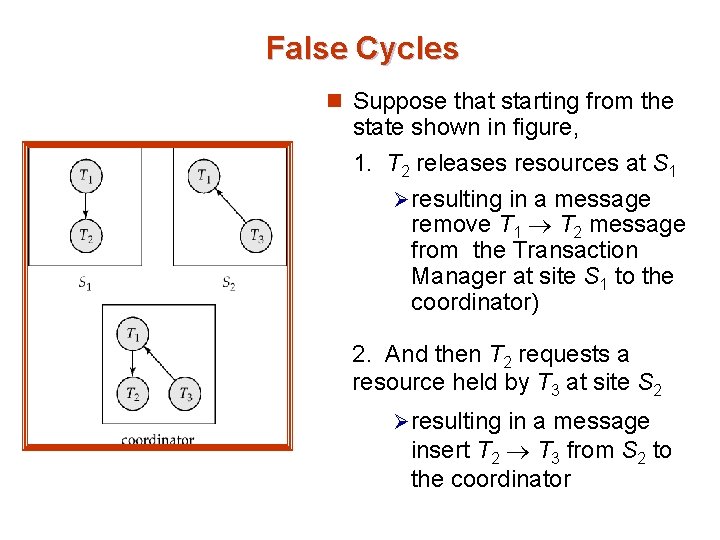

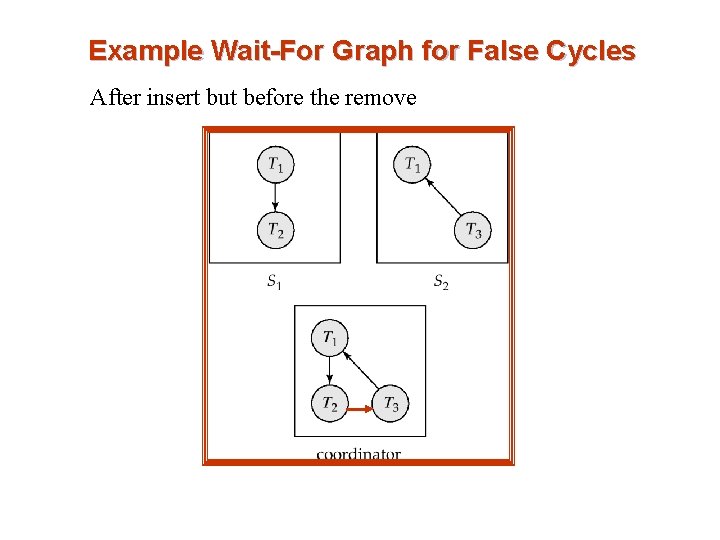

- Suppose further that the insert message reaches the coordinator before the remove message. This can happen because of network delays.
- The coordinator would then find a false cycle T1 → T2 → T3 → T1 after the insert (but before the remove).
- This cycle never existed in reality.
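The effect of message reordering can be sketched by replaying the coordinator's messages in arrival order. The figure is not reproduced here, so the initial global edges T1 → T2 and T3 → T1 are an assumption: they are the edges that the false cycle T1 → T2 → T3 → T1 implies. `has_cycle` uses Kahn's topological-sort algorithm, and `replay` is a hypothetical helper.

```python
from typing import List, Set, Tuple

Edge = Tuple[str, str]  # (waiter, holder)


def has_cycle(edges: Set[Edge]) -> bool:
    """Kahn's algorithm: the graph is cyclic iff some node is never removed."""
    nodes = {n for edge in edges for n in edge}
    indegree = {n: 0 for n in nodes}
    for _, v in edges:
        indegree[v] += 1
    ready = [n for n in nodes if indegree[n] == 0]
    removed = 0
    while ready:
        n = ready.pop()
        removed += 1
        for u, v in edges:
            if u == n:
                indegree[v] -= 1
                if indegree[v] == 0:
                    ready.append(v)
    return removed < len(nodes)


def replay(initial: Set[Edge], messages: List[Tuple[str, Edge]]) -> List[bool]:
    """Apply insert/remove messages in arrival order; run a cycle check after each."""
    edges = set(initial)
    history = []
    for op, edge in messages:
        if op == "insert":
            edges.add(edge)
        else:
            edges.discard(edge)
        history.append(has_cycle(edges))
    return history


initial = {("T1", "T2"), ("T3", "T1")}  # assumed initial global wfg
remove = ("remove", ("T1", "T2"))       # sent first, by S1
insert = ("insert", ("T2", "T3"))       # sent second, by S2

print(replay(initial, [remove, insert]))  # [False, False]: messages in sending order
print(replay(initial, [insert, remove]))  # [True, False]: a false cycle appears
```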

Example Wait-For Graph for False Cycles After insert but before the remove

Unnecessary Rollbacks
- Unnecessary rollbacks may result when a deadlock has indeed occurred and a victim has been picked, while meanwhile one of the transactions was aborted for reasons unrelated to the deadlock.
- Unnecessary rollbacks can also result from false cycles in the global wait-for graph; however, the likelihood of false cycles is low.

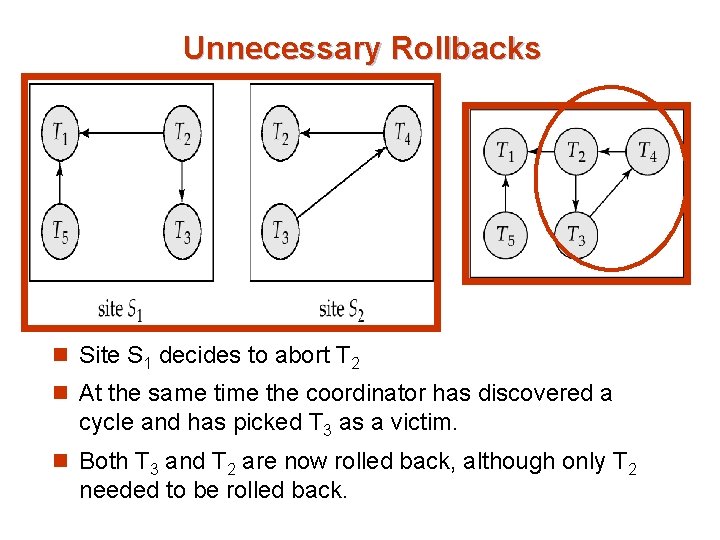

Example:
- Site S1 decides to abort T2.
- At the same time, the coordinator has discovered a cycle and has picked T3 as a victim.
- Both T3 and T2 are now rolled back, although only T2 needed to be rolled back.

Replication with Weak Consistency
- Many commercial databases support replication of data with weak degrees of consistency (i.e., without a guarantee of serializability).
- Types of replication:
  - Master-slave replication
  - Multimaster replication
  - Lazy propagation

Master-Slave Replication
- Updates are performed only at a single "master" site and propagated to "slave" sites, so update conflicts do not occur.
- Propagation is not part of the update transaction; it is decoupled:
  - it may take place immediately after the transaction commits;
  - it may be periodic.
- Data may only be read at slave sites, not updated.
- There is no need to obtain locks at any remote site.
- Particularly useful for distributing information, e.g. from a central office to branch offices.
- Also useful for running read-only queries offline from the main database.
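Master-slave replication with decoupled propagation can be sketched as below. `Master`, `Slave`, `pull` and the log-based propagation are hypothetical, because the slides do not say how updates are shipped. Here a slave applies the master's committed updates in commit order whenever `pull` is called, for example right after each commit or on a timer.

```python
from typing import Dict, List, Optional, Tuple


class Master:
    """Hypothetical master site: the only place where updates are performed."""

    def __init__(self) -> None:
        self.data: Dict[str, int] = {}
        self.log: List[Tuple[str, int]] = []  # committed updates, in commit order

    def update(self, key: str, value: int) -> None:
        self.data[key] = value
        self.log.append((key, value))  # committed locally; no remote locks taken


class Slave:
    """Hypothetical read-only replica that applies the master's log lazily."""

    def __init__(self) -> None:
        self.data: Dict[str, int] = {}
        self.applied = 0  # number of log records already applied

    def read(self, key: str) -> Optional[int]:
        return self.data.get(key)

    def update(self, key: str, value: int) -> None:
        raise PermissionError("slave sites are read-only")

    def pull(self, master: Master) -> None:
        """Propagation, decoupled from the update transaction."""
        for key, value in master.log[self.applied:]:
            self.data[key] = value
        self.applied = len(master.log)


head_office, branch = Master(), Slave()
head_office.update("price", 10)
print(branch.read("price"))  # None: the branch has not received the update yet
branch.pull(head_office)
print(branch.read("price"))  # 10
```

Reads at the branch may be stale between pulls. This is the weak consistency the slide refers to, traded for avoiding remote locks.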
