Distributed Systems Topic 12 Recovery and Fault Tolerance

Distributed Systems Topic 12: Recovery and Fault Tolerance Computer Science & Engineering Department The Chinese University of Hong Kong © Chinese University, CSE Dept. Distributed Systems / 12 - 1

Outline

1. Introduction
2. Transaction Recovery
3. Fault Tolerance
4. Hierarchical and Group Masking of Faults
5. CORBA Fault Tolerance Service
6. Summary

1 Introduction

- Fault tolerance: the survival attribute of systems.
- Fault-tolerant applications:
  - transaction based
  - process control
- Recovery aspects of distributed transactions.
- The design of real-time services.
  - Fail-stop vs Byzantine failure.
- Masking failures in a service.
- CORBA fault tolerance service.

1 Basic Approaches

- Fault detection:
  - Push model: server objects send heartbeat messages to the Fault Manager.
  - Pull model: the Fault Manager polls (or pings) server objects through their is_alive() interface.
- Data recovery:
  - Checkpoint and rollback: save the server object states. Roll back to the checkpointed states at recovery.
  - Message logging and replay: log all messages. Replay them at recovery.
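
The push model above can be illustrated with a minimal Python sketch. The class name `HeartbeatMonitor`, the `timeout` parameter, and the explicit `now` argument are assumptions made for illustration; they do not come from the slides or from any CORBA API.

```python
class HeartbeatMonitor:
    """Push-model fault detector: servers report in, the monitor looks for silence."""

    def __init__(self, timeout):
        self.timeout = timeout   # seconds of silence tolerated (assumed parameter)
        self.last_seen = {}      # server name -> time of its last heartbeat

    def heartbeat(self, server, now):
        """Record a heartbeat message from `server` that arrived at time `now`."""
        self.last_seen[server] = now

    def suspected(self, now):
        """Return the servers whose last heartbeat is older than the timeout."""
        return sorted(s for s, t in self.last_seen.items() if now - t > self.timeout)


monitor = HeartbeatMonitor(timeout=3.0)
monitor.heartbeat("A", now=0.0)
monitor.heartbeat("B", now=0.0)
monitor.heartbeat("A", now=2.5)      # A keeps reporting, B falls silent
print(monitor.suspected(now=4.0))    # B has been silent for longer than the timeout
```

Passing the time in explicitly keeps the sketch deterministic. A real detector would read a clock and would also have to choose the timeout with message delays in mind.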

2 Transaction Recovery

- Recovery concerns data durability (permanent and volatile data) and failure atomicity.
- A server keeps data in volatile memory and records committed data in a recovery file.
- The recovery manager's tasks:
  - save data items in permanent storage
  - restore the server’s data items after a crash
  - reorganize the recovery file for better performance
  - reclaim storage space (in the recovery file)

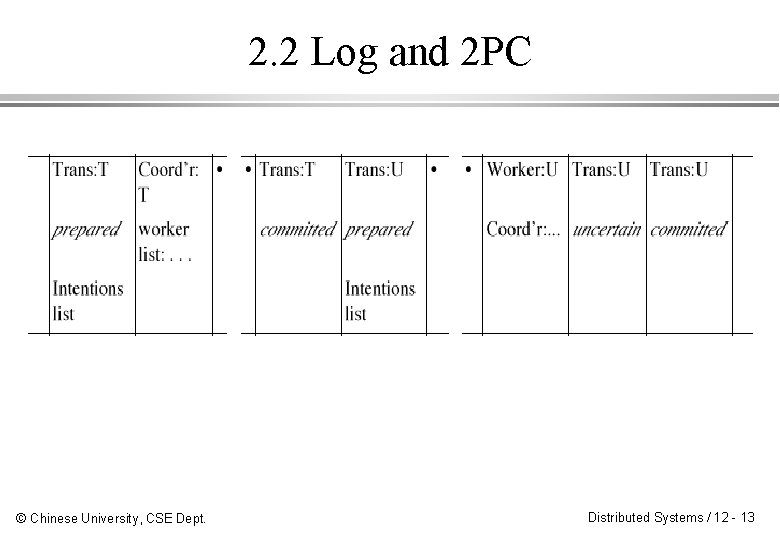

2 Intentions List

- The intentions list of a server is a list of the names and values of the data items altered by a transaction.
- The server uses the intentions list when a transaction commits or aborts.
- By the time a server is prepared to commit, it must have saved the intentions list in its recovery file.
- The recovery files contain sufficient information to ensure that the transaction is committed by all the servers.

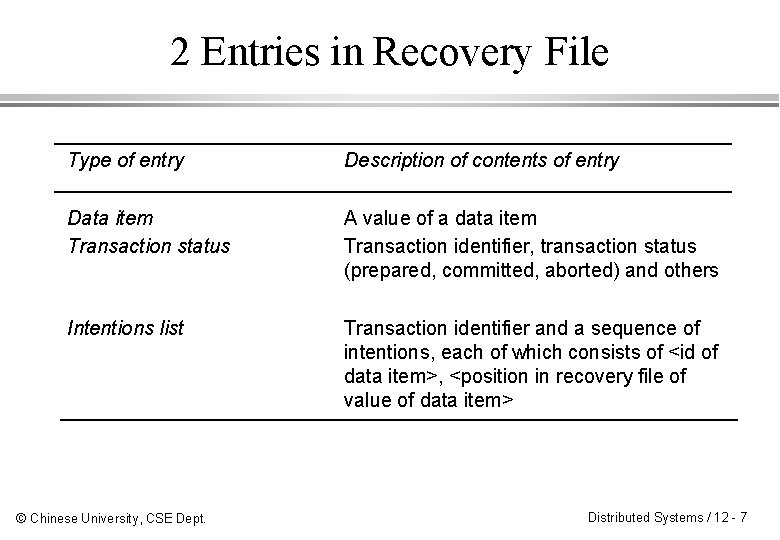

2 Entries in Recovery File

| Type of entry | Description of contents of entry |
| --- | --- |
| Data item | A value of a data item |
| Transaction status | Transaction identifier, transaction status (prepared, committed, aborted) and others |
| Intentions list | Transaction identifier and a sequence of intentions, each of which consists of <id of data item>, <position in recovery file of value of data item> |

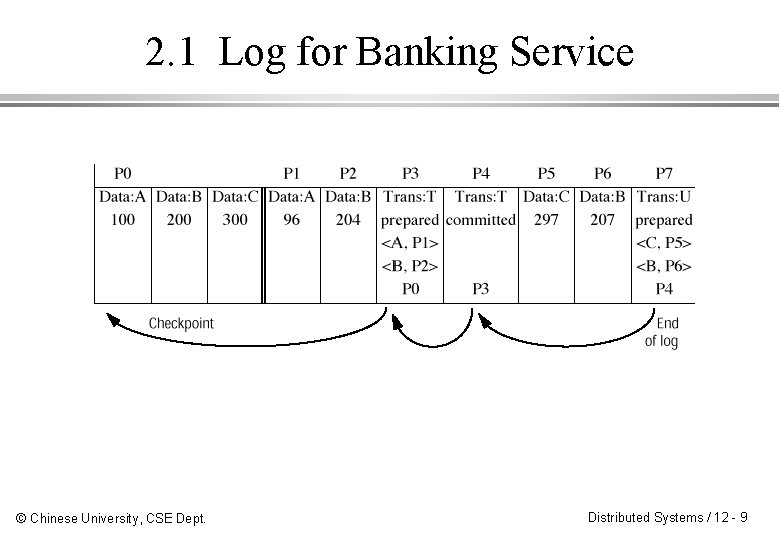

2.1 Logging

- A log contains the history of all the transactions performed by a server.
- The recovery file contains a recent snapshot of the values of all the data items in the server, followed by a history of transactions.
- When a server is prepared to commit, the recovery manager appends all the data items in its intentions list to the recovery file.
- The recovery manager associates a unique identifier with each data item.

2.1 Log for Banking Service

2.1 Recovery by Logging

- Recovery of data items
  - The recovery manager is responsible for restoring the server’s data items.
  - The most recent information is at the end of the log.
  - The recovery manager gets the corresponding intentions list from the recovery file.
- Reorganizing the recovery file
  - Checkpointing: the process of writing the current committed values (the checkpoint) to a new recovery file.
  - Can be done periodically or right after recovery.
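
As a rough illustration of recovery from a log, the Python sketch below scans a recovery file backwards and restores each data item from the newest committed intentions list that mentions it. The tuple layout of the entries (`"data"`, `"intentions"`, `"status"`) is an assumption chosen for this example, loosely following the entry types listed earlier. It simply ignores transactions that were only prepared; a real server would have to resolve those through the commit protocol.

```python
def recover(recovery_file):
    """Rebuild committed data items by scanning the recovery file backwards."""
    items = {}     # data item id -> restored value
    status = {}    # transaction id -> most recent status found
    for entry in reversed(recovery_file):
        kind = entry[0]
        if kind == "status":
            _, tid, state = entry
            status.setdefault(tid, state)      # the entry nearest the end wins
        elif kind == "intentions":
            _, tid, intentions = entry
            if status.get(tid) == "committed":
                for item_id, position in intentions:
                    # Newer values were restored first; never overwrite them.
                    if item_id not in items:
                        items[item_id] = recovery_file[position][1]
    return items


log = [
    ("data", 100),                                # 0: value of A written by T1
    ("data", 200),                                # 1: value of B written by T1
    ("intentions", "T1", [("A", 0), ("B", 1)]),   # 2
    ("status", "T1", "prepared"),                 # 3
    ("status", "T1", "committed"),                # 4
    ("data", 80),                                 # 5: value of A written by T2
    ("intentions", "T2", [("A", 5)]),             # 6
    ("status", "T2", "prepared"),                 # 7
    ("status", "T2", "committed"),                # 8
    ("data", 999),                                # 9: value of B written by T3
    ("intentions", "T3", [("B", 9)]),             # 10
    ("status", "T3", "prepared"),                 # 11: crash before T3 committed
]
print(recover(log))
```

Here A is restored from T2 and B from T1, while the uncommitted value written by T3 is not applied.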

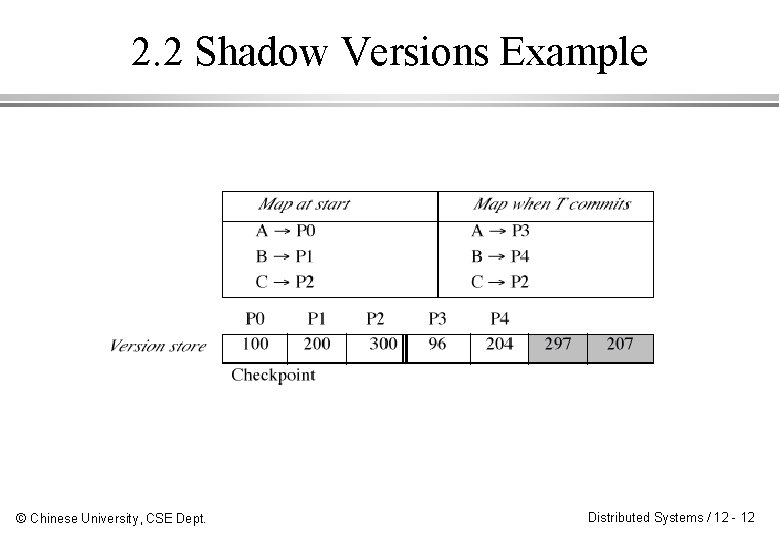

2.2 Shadow Versions

- The shadow versions technique uses a map to locate versions of the server’s data items in a file called a version store.
- The versions written by each transaction are shadows of the previous committed versions.
- When a transaction is prepared to commit, any changed data items are appended to the version store.
- When it commits, a new map is made. Once the new map is complete, it replaces the old map.
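
The interplay of map and version store can be sketched in a few lines of Python. The class `ShadowStore` and its methods are hypothetical names, and both files are modelled as in-memory structures, so the single assignment in `commit` only stands in for replacing the map on disk.

```python
class ShadowStore:
    def __init__(self):
        self.version_store = []   # append-only list of versions
        self.map = {}             # committed map: item id -> position in version store

    def prepare(self, changes):
        """Append the transaction's new versions and return their positions."""
        shadow = {}
        for item_id, value in changes.items():
            self.version_store.append(value)
            shadow[item_id] = len(self.version_store) - 1
        return shadow

    def commit(self, shadow):
        new_map = dict(self.map)   # build the new map beside the old one
        new_map.update(shadow)
        self.map = new_map         # one swap replaces the old map with the new one

    def read(self, item_id):
        return self.version_store[self.map[item_id]]


store = ShadowStore()
store.commit(store.prepare({"A": 100, "B": 200}))
shadow = store.prepare({"A": 80})   # shadow version written, not yet visible
before = store.read("A")            # still the previously committed version
store.commit(shadow)
after = store.read("A")             # the shadow version is now the committed one
print(before, after)
```

Until the map is swapped, readers continue to see the old committed versions, which is what makes an abort cheap: the shadow entries are simply never referenced.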

2.2 Shadow Versions Example

2.2 Log and 2PC

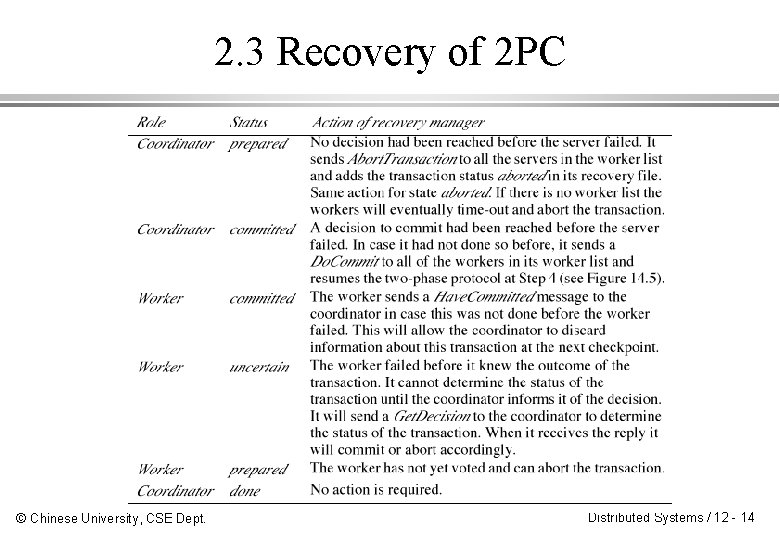

2.3 Recovery of 2PC

3 Fault Tolerance

- Two contrasting points on distributed systems:
  - The operation of a service depends on the correct operation of other services.
  - Joint execution of a set of servers is less likely to fail than any one of the individual components.
- Designers of a service should specify its correct behavior and the ways it may fail.
- Failure semantics: a description of the ways a service may fail. Clients can use it to mask the service's failures.

3 Characteristics of Faults

| Class of failure | Subclass | Description |
| --- | --- | --- |
| Omission failure | | A server omits to respond to a request |
| Response failure | | Server responds incorrectly to a request |
| | Value failure | Return wrong value |
| | State transition failure | Has wrong effect on resources (for example, sets wrong values in data items) |
| Timing failure | | Response not within a specified time interval |
| Crash failure | | Repeated omission failure: a server repeatedly fails to respond to requests until it is restarted |
| | Amnesia-crash | A server starts in its initial state, having forgotten its state at the time of the crash |
| | Pause-crash | A server restarts in the state before the crash |
| | Halting-crash | Server never restarts |

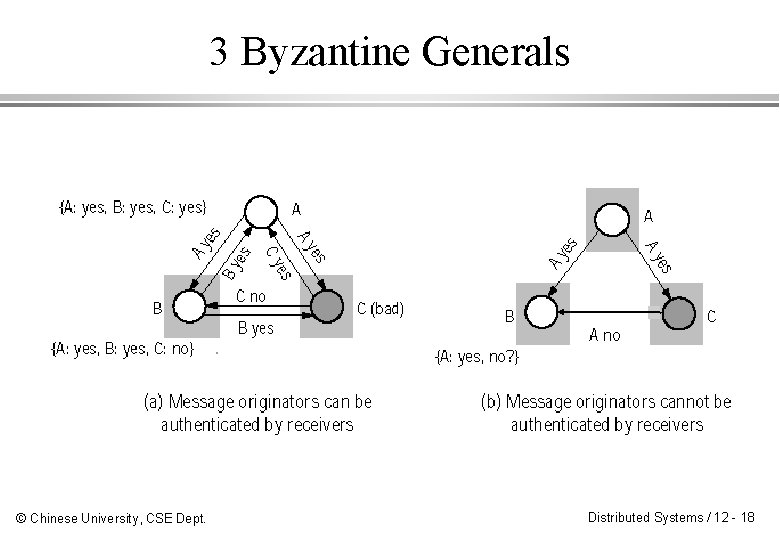

3 Fail-Stop vs Byzantine Failures

- A fail-stop server is one that fails cleanly. That is, it either functions correctly or else it crashes.
- Byzantine failure describes the worst possible failure semantics of a server: it fails maliciously or arbitrarily.
- Byzantine agreement is intended to ensure correct behavior within the required response time in the presence of faulty hardware.
- The solution depends on whether messages can be authenticated.

3 Byzantine Generals

3 Byzantine Agreement Algorithms

- Byzantine agreement algorithms send more messages and use more active servers.
- When messages can be authenticated, 2N+1 servers are required to tolerate N bad servers.
- When messages cannot be authenticated, 3N+1 servers are required.
- With enough good servers, solutions require O(N^2) messages with constant delay time.
- Fortunately, the good news is...

4 Hierarchical Masking of Faults

- We describe two approaches to masking faults: hierarchical failure masking and group failure masking.
- In hierarchical failure masking, a server at a higher level tries to mask faults at a lower level.
- When a lower-level failure cannot be masked, it is converted to a higher-level exception.
- Example: a server crash is masked in the RR protocol by raising an exception to the client.
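
The idea of masking what can be masked and converting the rest into an exception can be sketched as follows. This is only an illustration in Python: `do_operation`, `ServerCrashed`, and the retry count are assumed names and values, and `TimeoutError` stands in for a lost request or reply.

```python
class ServerCrashed(Exception):
    """Higher-level exception reported to the client (hypothetical name)."""


def do_operation(send_request, retries=3):
    """Mask lost messages by retrying; convert a persistent failure into an exception."""
    for _ in range(retries):
        try:
            return send_request()
        except TimeoutError:
            continue   # lower-level omission failure masked by retransmission
    raise ServerCrashed(f"no reply after {retries} attempts")


attempts = {"count": 0}

def flaky():
    """Loses the first two requests, then answers."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TimeoutError
    return "reply"

def dead():
    """Never answers."""
    raise TimeoutError

print(do_operation(flaky))          # the two lost messages are hidden from the caller
try:
    do_operation(dead)
except ServerCrashed as exc:
    print("client sees:", exc)      # the unmaskable failure surfaces as an exception
```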

4 Group Failure Masking

- A service can be made fault tolerant by implementing it by a group of servers.
- A group is t-fault tolerant if it can tolerate up to t member failures.
- For fail-stop failures, t+1 servers are needed.
- For Byzantine failures, 2t+1 servers are needed.
- To ensure correctness, the server program must be deterministic, and each operation must be atomic with respect to other operations.
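
A small Python sketch shows why 2t+1 members suffice when replies are compared by voting: with at most t faulty members, the correct value still appears at least t+1 times, and no wrong value can appear more than t times. The function names `group_size` and `vote` are assumptions for this example.

```python
from collections import Counter


def group_size(t, byzantine):
    """Group size quoted above for tolerating t member failures."""
    return 2 * t + 1 if byzantine else t + 1


def vote(replies, t):
    """Return the value reported by at least t+1 members, else raise ValueError."""
    value, count = Counter(replies).most_common(1)[0]
    if count >= t + 1:
        return value
    raise ValueError("no value has t+1 matching replies")


t = 1
print(group_size(t, byzantine=False), group_size(t, byzantine=True))
print(vote([42, 42, 7], t))   # one arbitrary reply is outvoted by the two correct ones
```

For fail-stop members no voting is needed, since any member that replies at all replies correctly; that is why t+1 members are enough in that case.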

4 Group Failure Masking

- A group can be closely synchronized or loosely synchronized.
- In a closely synchronized group of servers:
  - All members execute requests immediately.
  - Server programs are both deterministic and atomic.
  - Suitable for real-time systems and Byzantine failures.
- In a loosely synchronized group of servers:
  - One server (the primary) performs requests; the others (backups) log the requests and take over if needed.
  - Requires fewer resources but takes longer to recover.
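
The loosely synchronized (primary-backup) arrangement can be sketched as below. The `Replica` class and its bank-balance state are invented for illustration; the point is only that the backup logs requests without executing them and replays the log when it takes over.

```python
class Replica:
    def __init__(self):
        self.balance = 0   # application state
        self.log = []      # requests received but not yet executed (used by the backup)

    def execute(self, amount):
        self.balance += amount
        return self.balance

    def take_over(self):
        """Replay the logged requests to reach the state the primary had."""
        for amount in self.log:
            self.execute(amount)
        self.log.clear()


primary, backup = Replica(), Replica()
for amount in (100, -30, 5):
    primary.execute(amount)     # the primary performs the request
    backup.log.append(amount)   # the backup only logs it

backup.take_over()              # the primary is assumed to have crashed here
print(backup.balance)
```

The replay step is where the longer recovery time comes from: the longer the log, the longer the takeover. Replaying also relies on the requests being deterministic.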

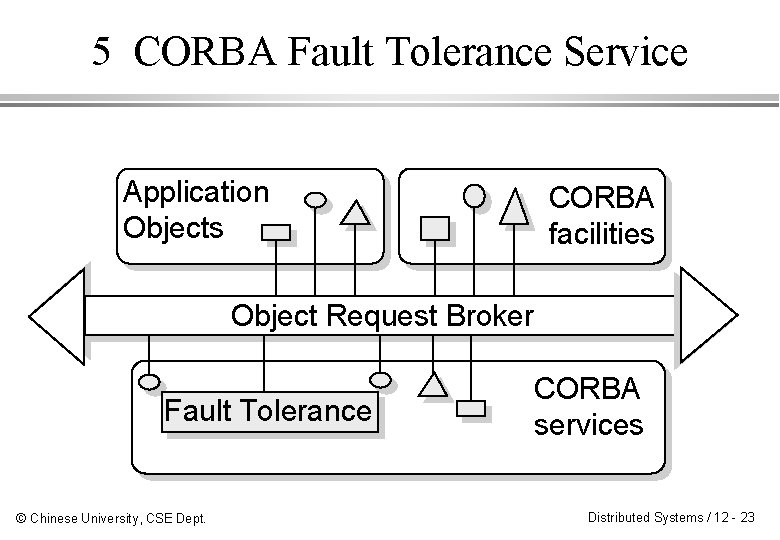

5 CORBA Fault Tolerance Service

Figure labels: Application Objects, CORBA facilities, Object Request Broker, CORBA services, Fault Tolerance.

5 Outline of Fault Tolerant CORBA

- Fault Tolerance Properties
- Replication Styles, Membership Styles, Consistency Styles, Fault Monitoring Styles
- Infrastructure-Controlled and Application-Controlled
- Object Group References and Alternative Destinations
- At-Most-Once Invocation (repeated requests detected)
- Fault Detection and Notification
- Checkpointing and Logging

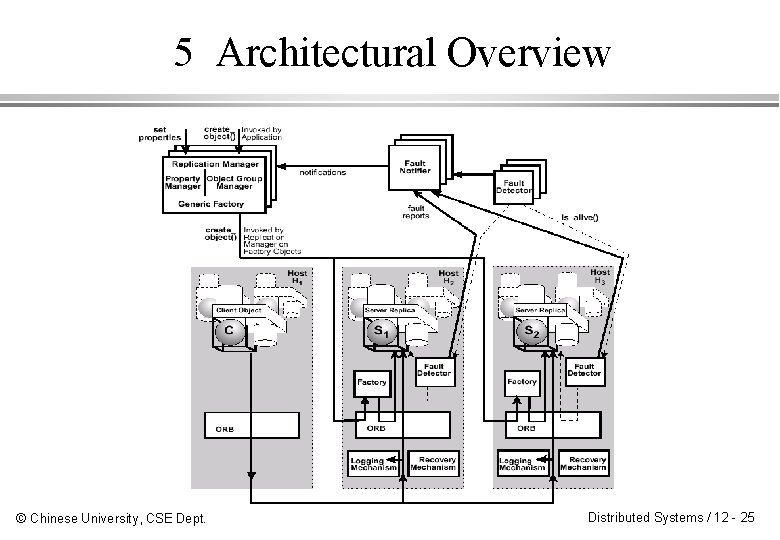

5 Architectural Overview

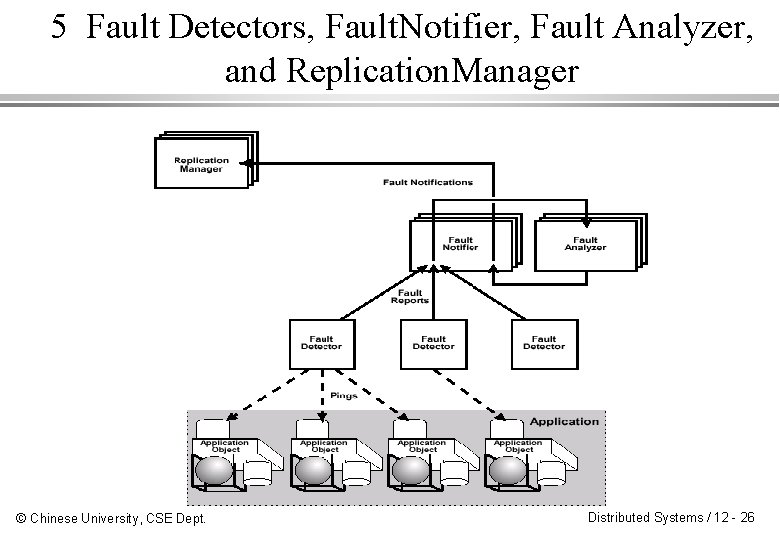

5 Fault Detectors, FaultNotifier, Fault Analyzer, and ReplicationManager

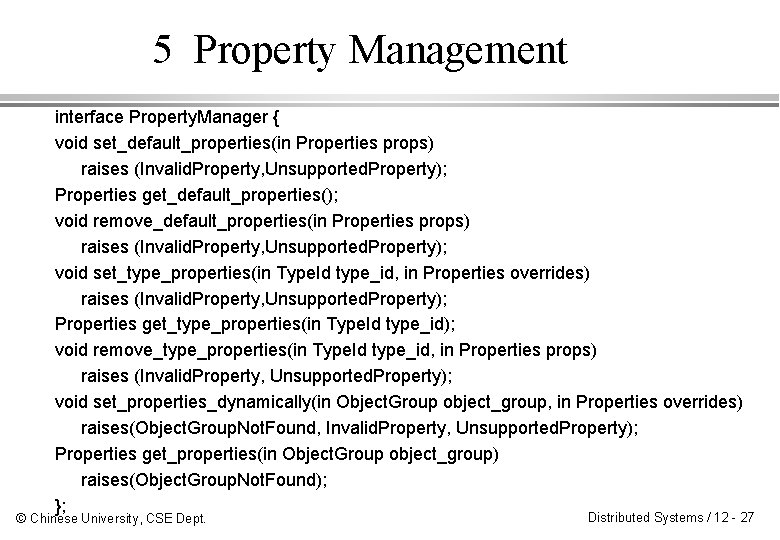

5 Property Management

    interface PropertyManager {
        void set_default_properties(in Properties props)
            raises (InvalidProperty, UnsupportedProperty);
        Properties get_default_properties();
        void remove_default_properties(in Properties props)
            raises (InvalidProperty, UnsupportedProperty);
        void set_type_properties(in TypeId type_id, in Properties overrides)
            raises (InvalidProperty, UnsupportedProperty);
        Properties get_type_properties(in TypeId type_id);
        void remove_type_properties(in TypeId type_id, in Properties props)
            raises (InvalidProperty, UnsupportedProperty);
        void set_properties_dynamically(in ObjectGroup object_group,
                                        in Properties overrides)
            raises (ObjectGroupNotFound, InvalidProperty, UnsupportedProperty);
        Properties get_properties(in ObjectGroup object_group)
            raises (ObjectGroupNotFound);
    };

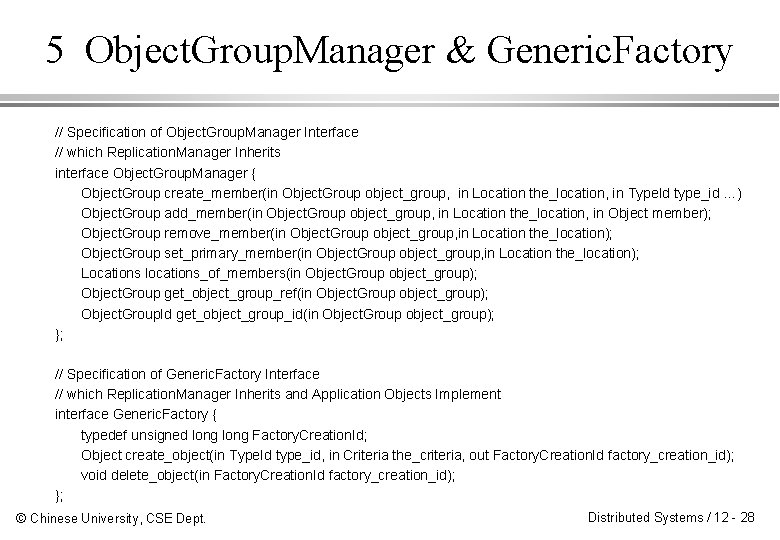

5 ObjectGroupManager & GenericFactory

    // Specification of ObjectGroupManager Interface
    // which ReplicationManager Inherits
    interface ObjectGroupManager {
        ObjectGroup create_member(in ObjectGroup object_group,
                                  in Location the_location,
                                  in TypeId type_id …);
        ObjectGroup add_member(in ObjectGroup object_group,
                               in Location the_location,
                               in Object member);
        ObjectGroup remove_member(in ObjectGroup object_group,
                                  in Location the_location);
        ObjectGroup set_primary_member(in ObjectGroup object_group,
                                       in Location the_location);
        Locations locations_of_members(in ObjectGroup object_group);
        ObjectGroup get_object_group_ref(in ObjectGroup object_group);
        ObjectGroupId get_object_group_id(in ObjectGroup object_group);
    };

    // Specification of GenericFactory Interface
    // which ReplicationManager Inherits and Application Objects Implement
    interface GenericFactory {
        typedef unsigned long FactoryCreationId;
        Object create_object(in TypeId type_id,
                             in Criteria the_criteria,
                             out FactoryCreationId factory_creation_id);
        void delete_object(in FactoryCreationId factory_creation_id);
    };

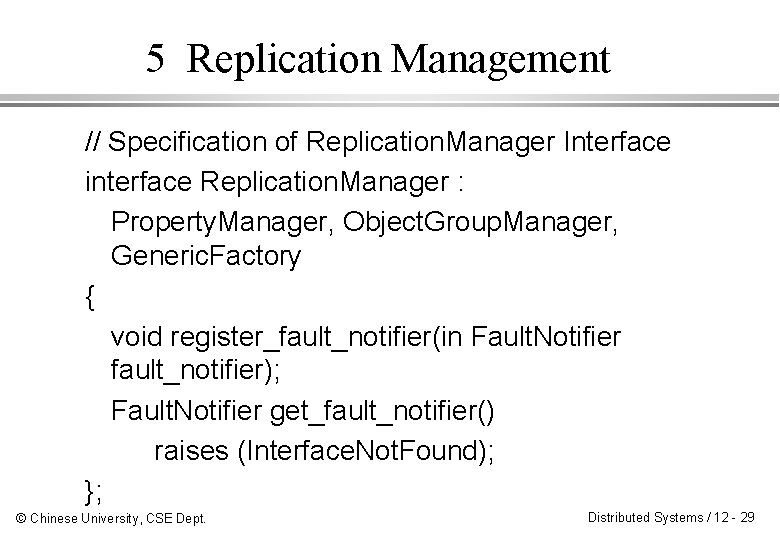

5 Replication Management

    // Specification of ReplicationManager Interface
    interface ReplicationManager : PropertyManager,
                                   ObjectGroupManager,
                                   GenericFactory {
        void register_fault_notifier(in FaultNotifier fault_notifier);
        FaultNotifier get_fault_notifier()
            raises (InterfaceNotFound);
    };

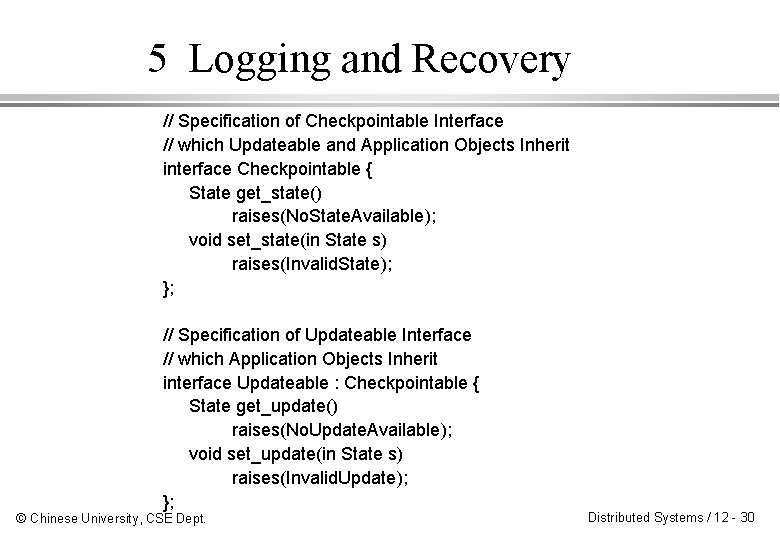

5 Logging and Recovery

    // Specification of Checkpointable Interface
    // which Updateable and Application Objects Inherit
    interface Checkpointable {
        State get_state() raises (NoStateAvailable);
        void set_state(in State s) raises (InvalidState);
    };

    // Specification of Updateable Interface
    // which Application Objects Inherit
    interface Updateable : Checkpointable {
        State get_update() raises (NoUpdateAvailable);
        void set_update(in State s) raises (InvalidUpdate);
    };
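
To make the role of get_state() and set_state() concrete, here is a plain Python analogue of an application object that can be checkpointed. It is not a CORBA language binding: `CounterObject` is a hypothetical class, and JSON-encoded bytes are an assumed choice for the opaque state.

```python
import json


class InvalidState(Exception):
    """Raised when a supplied state cannot be applied (mirrors the IDL exception name)."""


class CounterObject:
    """Python stand-in for an application object that supports checkpointing."""

    def __init__(self):
        self.value = 0

    def get_state(self):
        """Return the full state as opaque bytes suitable for logging."""
        return json.dumps({"value": self.value}).encode()

    def set_state(self, state):
        """Overwrite the current state with a previously captured one."""
        try:
            self.value = int(json.loads(state.decode())["value"])
        except (ValueError, KeyError, TypeError) as exc:
            raise InvalidState("state could not be decoded") from exc


primary = CounterObject()
primary.value = 7
checkpoint = primary.get_state()     # captured by the logging mechanism

replacement = CounterObject()
replacement.set_state(checkpoint)    # a new or recovering replica is brought up to date
print(replacement.value)
```

An Updateable object would add get_update() and set_update() in the same style, transferring only the change since the last state rather than the whole state.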

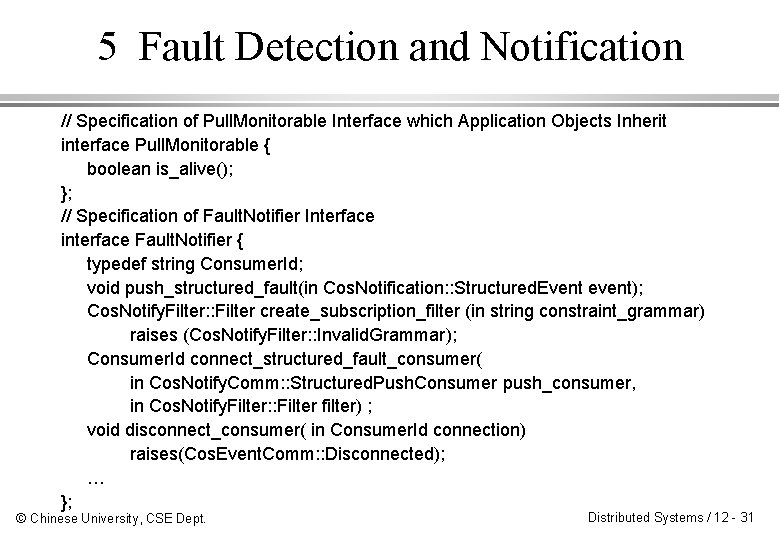

5 Fault Detection and Notification

    // Specification of PullMonitorable Interface
    // which Application Objects Inherit
    interface PullMonitorable {
        boolean is_alive();
    };

    // Specification of FaultNotifier Interface
    interface FaultNotifier {
        typedef string ConsumerId;
        void push_structured_fault(in CosNotification::StructuredEvent event);
        CosNotifyFilter::Filter create_subscription_filter(
                in string constraint_grammar)
            raises (CosNotifyFilter::InvalidGrammar);
        ConsumerId connect_structured_fault_consumer(
                in CosNotifyComm::StructuredPushConsumer push_consumer,
                in CosNotifyFilter::Filter filter);
        void disconnect_consumer(in ConsumerId connection)
            raises (CosEventComm::Disconnected);
        …
    };
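
The pull style of monitoring can be imitated in Python as a single polling round over objects that expose is_alive(). The `Server` class, the `poll` function, and the use of `ConnectionError` for an unanswered call are assumptions for this sketch; fault reports are delivered to plain callables rather than through the notification interfaces above.

```python
class Server:
    """Stand-in for a monitorable object; `alive` simulates whether it can answer."""

    def __init__(self, alive=True):
        self.alive = alive

    def is_alive(self):
        if not self.alive:
            raise ConnectionError("no response")   # a crashed server cannot answer
        return True


def poll(servers, consumers):
    """One polling round: report every server that fails to answer to all consumers."""
    faulty = []
    for name, server in servers.items():
        try:
            server.is_alive()
        except ConnectionError:
            faulty.append(name)
            for notify in consumers:
                notify(name)
    return faulty


reports = []
servers = {"s1": Server(), "s2": Server(alive=False), "s3": Server()}
print(poll(servers, consumers=[reports.append]))
print(reports)
```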

6 Summary

- Transaction recovery
  - long-life applications and data integrity
  - atomic commit protocol is the key
  - checkpoints and logging in a recovery file
- Fault tolerance
  - real-time applications
  - importance of failure semantics
  - primary-backup server for fail-stop failures
  - closely synchronized group for Byzantine failures
- Emerging CORBA Fault Tolerance Service
