Distributed Systems Overview Ali Ghodsi alig@cs.berkeley.edu

Replicated State Machine (RSM)
• Distributed Systems 101
  – Fault-tolerance (partial, byzantine, recovery, ...)
  – Concurrency (ordering, asynchrony, timing, ...)
• Generic solution for distributed systems: Replicated State Machine approach
  – Represent your system with a deterministic state machine
  – Replicate the state machine
  – Feed input to all replicas in the same order
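The three steps of the replicated state machine approach can be sketched in a few lines of Python. This is a minimal illustration, not anything from the slides: `CounterMachine`, its `add`/`mul` commands, and `run_replicas` are hypothetical names, and the "network" is just a loop that hands every replica the same log.

```python
from dataclasses import dataclass


@dataclass
class CounterMachine:
    """A deterministic state machine: same commands, same order, same state."""
    value: int = 0

    def apply(self, command):
        op, arg = command
        if op == "add":
            self.value += arg
        elif op == "mul":
            self.value *= arg
        else:
            raise ValueError(f"unknown op {op!r}")
        return self.value


def run_replicas(log, n_replicas=3):
    """Feed the same ordered log to every replica and return their final states."""
    replicas = [CounterMachine() for _ in range(n_replicas)]
    for command in log:
        for replica in replicas:
            replica.apply(command)
    return [replica.value for replica in replicas]


log = [("add", 2), ("mul", 5), ("add", 1)]
states = run_replicas(log)
# The same commands in a different order generally lead to a different state.
reordered = run_replicas([("add", 1), ("mul", 5), ("add", 2)])
```

All replicas end in the same state because the machine is deterministic and the order is identical; the reordered log shows why agreeing on the order is the hard part.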

Total Order Reliable Broadcast, aka Atomic Broadcast
• Reliable broadcast
  – Either all correct nodes get the message or none do (even if the source fails)
• Atomic Broadcast
  – Reliable broadcast that guarantees: all messages are delivered in the same order at every node
• Replicated state machine is trivial with atomic broadcast

Consensus?
• Consensus problem
  – All nodes propose a value
  – All correct nodes must agree on one of the values
  – Must eventually reach a decision (availability)
• Atomic Broadcast → Consensus
  – Broadcast proposal, decide on first received value
• Consensus → Atomic Broadcast
  – Unreliably broadcast message to all
  – 1 consensus per round: propose set of messages seen but not delivered
  – Each round deliver one decided message
• Consensus is equivalent to Atomic Broadcast
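The Consensus → Atomic Broadcast direction can be sketched as a round-based simulation. Everything here is an assumption made for illustration: `consensus` is a stand-in for a real consensus black box (it deterministically picks the first non-empty proposal by node id), `seen` models what each node happened to receive from the unreliable broadcast, and picking `min(decided)` is just one deterministic way to choose the message delivered in a round.

```python
def consensus(proposals):
    """Stand-in for a consensus black box: every node gets the same decision,
    and the decision is one of the proposed values."""
    for node_id in sorted(proposals):
        if proposals[node_id]:
            return proposals[node_id]
    return frozenset()


def atomic_broadcast(seen):
    """seen: node id -> set of messages that node received (possibly incomplete).
    Returns node id -> list of messages in delivery order."""
    delivered = {node: [] for node in seen}
    pending = {node: set(msgs) for node, msgs in seen.items()}
    while any(pending.values()):
        # Each node proposes the messages it has seen but not yet delivered.
        proposals = {node: frozenset(msgs) for node, msgs in pending.items()}
        decided = consensus(proposals)
        msg = min(decided)  # deliver one decided message per round
        for node in seen:
            delivered[node].append(msg)
            pending[node].discard(msg)
    return delivered


seen = {1: {"a", "b"}, 2: {"b", "c"}, 3: {"c"}}
order = atomic_broadcast(seen)
```

Even though no node saw every message, all nodes deliver the same messages in the same order, because the order is fixed by the sequence of consensus decisions.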

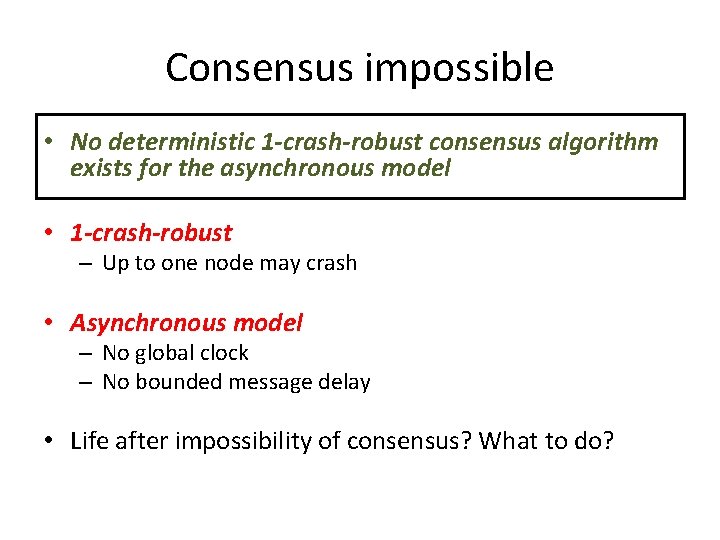

Consensus impossible
• No deterministic 1-crash-robust consensus algorithm exists for the asynchronous model
• 1-crash-robust
  – Up to one node may crash
• Asynchronous model
  – No global clock
  – No bounded message delay
• Life after impossibility of consensus? What to do?

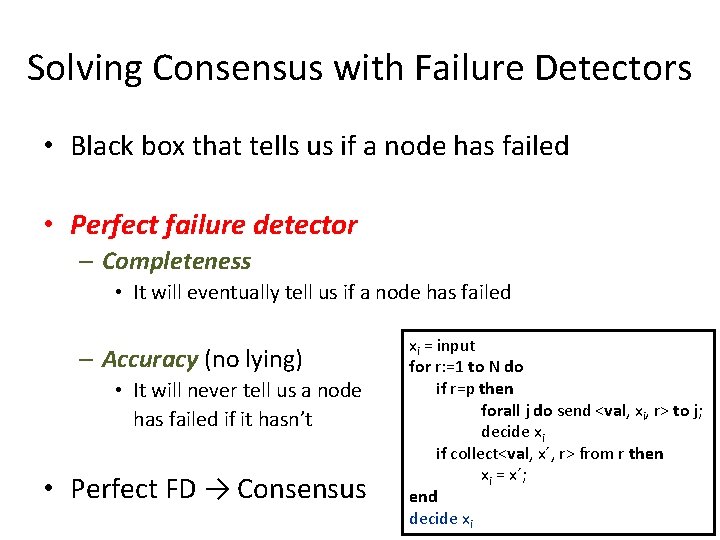

Solving Consensus with Failure Detectors
• Black box that tells us if a node has failed
• Perfect failure detector
  – Completeness
    • It will eventually tell us if a node has failed
  – Accuracy (no lying)
    • It will never tell us a node has failed if it hasn't
• Perfect FD → Consensus

    xi = input
    for r := 1 to N do
        if r = p then
            forall j do send <val, xi, r> to j;
            decide xi
        if collect <val, x', r> from r then
            xi = x';
    end
    decide xi
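The rotating-coordinator pseudocode above can be simulated in Python under some simplifying assumptions that are not in the slides: rounds run one after another, a node can only crash during its own coordinator round, and the perfect failure detector is modelled by simply skipping a crashed coordinator. The `crash_plan` parameter is a hypothetical failure-injection hook that says which nodes a crashing coordinator reached before failing.

```python
def rotating_coordinator(inputs, crash_plan=None):
    """inputs: node id (1..N) -> proposed value.
    crash_plan: node id -> set of recipients reached before that node crashed
    in the middle of its own coordinator round (hypothetical failure injection).
    Returns node id -> decided value for every node that decides."""
    crash_plan = crash_plan or {}
    nodes = sorted(inputs)
    x = dict(inputs)          # current estimate of each node
    alive = set(nodes)
    decided = {}
    for r in nodes:           # round r has coordinator r
        if r not in alive:
            continue          # perfect FD: everyone learns r failed and moves on
        if r in crash_plan:
            recipients = crash_plan[r] & alive
            alive.discard(r)  # crashed mid-broadcast, never reaches "decide"
        else:
            recipients = set(alive)
            decided.setdefault(r, x[r])  # coordinator decides after sending to all
        for j in recipients:
            if j in alive:
                x[j] = x[r]   # adopt the coordinator's value
    for node in alive:        # after N rounds every surviving node decides
        decided.setdefault(node, x[node])
    return decided


no_failures = rotating_coordinator({1: "a", 2: "b", 3: "c"})
with_crash = rotating_coordinator({1: "a", 2: "b", 3: "c"}, crash_plan={1: {2}})
```

In the crash scenario node 1 reaches only node 2 before failing; node 2 then coordinates round 2 and spreads the adopted value, so the survivors still agree.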

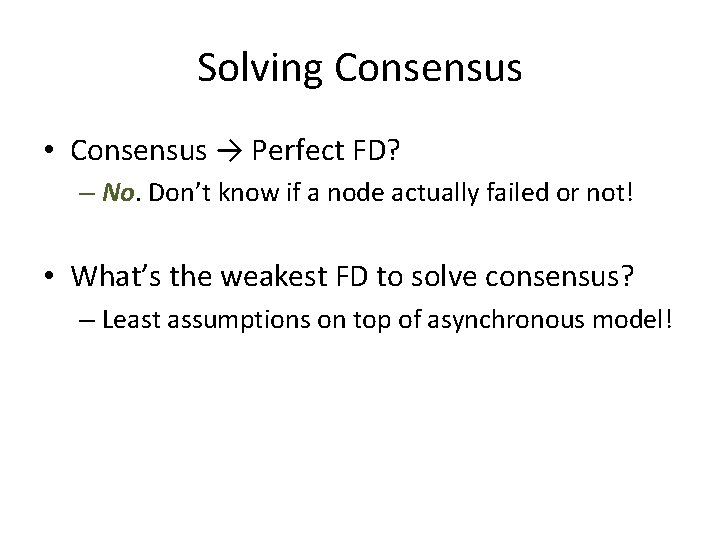

Solving Consensus
• Consensus → Perfect FD?
  – No. Don't know if a node actually failed or not!
• What's the weakest FD to solve consensus?
  – Fewest assumptions on top of the asynchronous model!

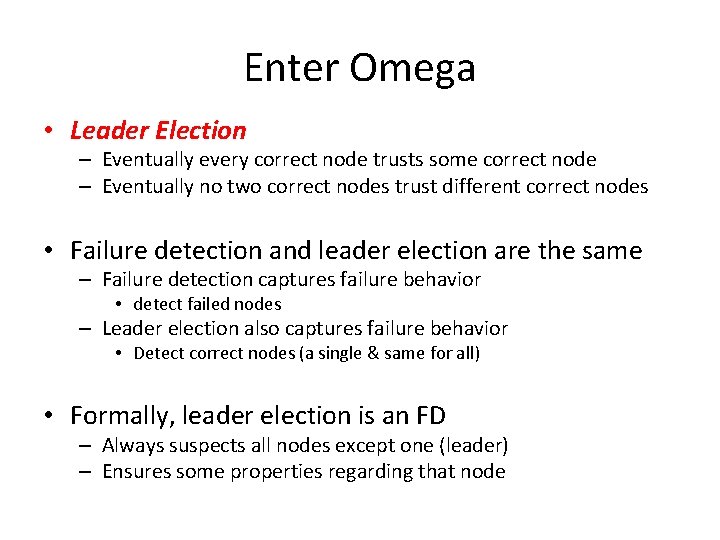

Enter Omega
• Leader Election
  – Eventually every correct node trusts some correct node
  – Eventually no two correct nodes trust different correct nodes
• Failure detection and leader election are the same
  – Failure detection captures failure behavior
    • Detect failed nodes
  – Leader election also captures failure behavior
    • Detect correct nodes (a single one, the same for all)
• Formally, leader election is an FD
  – Always suspects all nodes except one (leader)
  – Ensures some properties regarding that node
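One common way to obtain the Omega properties, assumed here for illustration rather than taken from the slides, is to trust the lowest-id node that the local failure detector does not currently suspect. While suspicions are still wrong, nodes may disagree; once every node's suspect set matches the set of crashed nodes, they all trust the same correct node. The function name and the example suspect sets below are hypothetical.

```python
def omega_leader(node_ids, suspected):
    """Trust the lowest-id node that is not currently suspected."""
    trusted = [n for n in sorted(node_ids) if n not in suspected]
    return trusted[0] if trusted else None


nodes = {1, 2, 3}  # in this scenario node 1 has crashed

# Early on, failure detectors may be wrong or incomplete, so nodes disagree.
early = {
    2: omega_leader(nodes, suspected=set()),    # has not noticed the crash yet
    3: omega_leader(nodes, suspected={1, 2}),   # wrongly suspects node 2
}

# Eventually every correct node suspects exactly the crashed nodes.
eventual = {
    2: omega_leader(nodes, suspected={1}),
    3: omega_leader(nodes, suspected={1}),
}
```

The early views trust different nodes (one of them crashed), which is allowed; the eventual views agree on a single correct leader.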

Weakest Failure Detector for Consensus
• Omega is the weakest failure detector for consensus
  – How to prove it?
  – Easy to implement in practice

High Level View of Paxos
• Elect a single proposer using Ω
  – Proposer imposes its proposal on everyone
  – Everyone decides
  – Done!
• Problem with Ω
  – Several nodes might initially be proposers (contention)
• Solution is abortable consensus
  – Proposer attempts to enforce decision
  – Might abort if there is contention (safety)
  – Ω ensures eventually 1 proposer succeeds (liveness)
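The abortable-consensus idea can be illustrated with a compact single-decree Paxos sketch. This is a simplified, in-memory version under stated assumptions: acceptors are plain objects called directly (no network, no crashes), ballots are integers chosen by the caller, and `propose` returns `None` to signal an abort. The class and function names are illustrative, not from the slides.

```python
class Acceptor:
    def __init__(self):
        self.promised = -1     # highest ballot promised so far
        self.accepted = None   # (ballot, value) last accepted, if any

    def prepare(self, ballot):
        """Phase 1: promise to ignore lower ballots; report any accepted value."""
        if ballot > self.promised:
            self.promised = ballot
            return True, self.accepted
        return False, None

    def accept(self, ballot, value):
        """Phase 2: accept unless a higher ballot has been promised."""
        if ballot >= self.promised:
            self.promised = ballot
            self.accepted = (ballot, value)
            return True
        return False


def propose(acceptors, ballot, value):
    """Try to get `value` decided; return the decided value, or None on abort."""
    majority = len(acceptors) // 2 + 1
    replies = [a.prepare(ballot) for a in acceptors]
    promises = [accepted for ok, accepted in replies if ok]
    if len(promises) < majority:
        return None  # contention: abort rather than risk safety
    prior = [accepted for accepted in promises if accepted is not None]
    if prior:
        # Must adopt the value accepted under the highest earlier ballot.
        value = max(prior, key=lambda bv: bv[0])[1]
    acks = sum(a.accept(ballot, value) for a in acceptors)
    return value if acks >= majority else None


acceptors = [Acceptor() for _ in range(3)]
first = propose(acceptors, ballot=1, value="x")
stale = propose(acceptors, ballot=0, value="y")   # lower ballot loses
later = propose(acceptors, ballot=2, value="y")   # must adopt "x"
```

A proposer with a stale ballot aborts, and a later proposer with a higher ballot is forced to re-propose the already chosen value, which is what keeps aborts safe. Liveness then depends on Ω eventually leaving a single proposer.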

Replicated State Machine
• Paxos approach (Lamport)
  – Client sends input to the Paxos leader
  – Leader executes a Paxos instance to agree on the command
  – Well-understood, many papers, optimizations
• Viewstamped Replication approach (Liskov)
  – Have one leader that writes commands to a quorum (no Paxos)
  – When failures happen, use Paxos to agree
  – Less understood (Mazières tutorial)

Paxos Siblings
• Cheap Paxos (LM'04)
  – Fewer messages
  – Directly contact a quorum (e.g. 3 nodes out of 5)
  – If fail to get response from 3, expand to 5
• Fast Paxos (L'06)
  – Reduce from 3 message delays to 2
  – Clients optimistically write to a quorum
  – Requires recovery

Paxos Siblings
• Gaios/SMARTER (Bolosky'11)
  – Make logging to disk efficient for crash-recovery
  – Uses pipelining and batching
• Generalized Paxos (LM'05)
  – Commutative operations for replicated state machine

Atomic Commit
• Atomic Commit
  – Commit IFF no failures and everyone votes commit
  – Else Abort
• Consensus on Transaction Commit (LG'04)
  – One Paxos instance for every RM (resource manager)
  – Only commit if every instance said Commit
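The commit rule itself is a simple conjunction, sketched below. The representation is an assumption made for illustration: each participant's outcome is `"commit"`, `"abort"`, or `None` when no vote arrived (for example because the participant failed), and in the Paxos-based variant each entry would be the decision of that participant's own consensus instance.

```python
def atomic_commit(outcomes):
    """outcomes: participant -> "commit", "abort", or None (no vote / failure).
    Commit only if every participant's outcome is "commit"; otherwise abort."""
    if outcomes and all(v == "commit" for v in outcomes.values()):
        return "commit"
    return "abort"


all_yes = atomic_commit({"rm1": "commit", "rm2": "commit"})
one_no = atomic_commit({"rm1": "commit", "rm2": "abort"})
one_failed = atomic_commit({"rm1": "commit", "rm2": None})
```

A single abort vote or a single missing vote is enough to abort the whole transaction.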

Reconfigurable Paxos
• Change the set of nodes
  – Replace failed nodes
  – Add/remove new nodes (change size of quorum)
• Lamport's idea
  – Part of the state of the state machine: set of nodes
• SMART (Eurosys'06)
  – Many problems (e.g. {A, B, C} → {A, B, D} and A fails)
  – Basic idea: run multiple Paxos instances side by side
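The idea of keeping the set of nodes inside the state machine can be sketched as follows. This is only an illustration under assumptions: the command names `add_node` and `remove_node` and the class itself are hypothetical, quorums are simple majorities, and the question of when a new configuration takes effect relative to in-flight consensus instances is deliberately left out.

```python
class ReconfigurableMachine:
    """State machine whose state includes the current set of member nodes."""

    def __init__(self, members):
        self.members = set(members)
        self.applied = []  # ordinary (non-membership) commands, in order

    def quorum_size(self):
        """Majority quorum of the current configuration."""
        return len(self.members) // 2 + 1

    def apply(self, command):
        kind, arg = command
        if kind == "add_node":
            self.members.add(arg)
        elif kind == "remove_node":
            self.members.discard(arg)
        else:
            self.applied.append(command)


machine = ReconfigurableMachine({"A", "B", "C"})
quorum_before = machine.quorum_size()
# Membership changes are ordinary commands in the agreed-upon log.
for command in [("remove_node", "C"), ("add_node", "D"), ("add_node", "E")]:
    machine.apply(command)
quorum_after = machine.quorum_size()
```

Because membership changes go through the same ordered log as every other command, all replicas that apply the log agree on the configuration and therefore on the quorum size.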

- Slides: 15