Distributed Systems Lecture 4: Failure detection

Previous lecture
• Big Data
• MapReduce

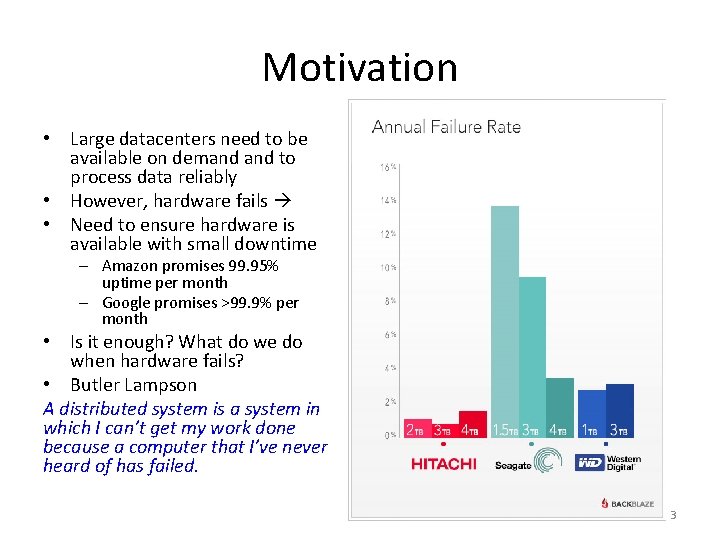

Motivation
• Large datacenters need to be available on demand to process data reliably
• However, hardware fails
• Need to ensure hardware is available with small downtime
  – Amazon promises 99.95% uptime per month
  – Google promises >99.9% per month
• Is it enough? What do we do when hardware fails?
• Leslie Lamport, as quoted by Butler Lampson: "A distributed system is a system in which I can't get my work done because a computer that I've never heard of has failed."

Example
• Rate of disk failure is once every 10 years (120 months)
• For 120 servers in the datacenter this translates to one failure every month
• For 12,000 servers it goes down to one failure every 7.2 hours (for 120,000 servers, about every 43 minutes)
What are our options?
1. Hire a team to monitor machines in the datacenter and report to you when they fail
2. Write a (distributed) failure detector program that automatically detects failures and reports to your workstation
In 2002, when the ASCI Q supercomputer (2nd fastest in the world at the time) was installed at Los Alamos National Laboratory in New Mexico, the computer could not run more than an hour without crashing.
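The arithmetic behind this example can be checked with a short sketch. It assumes failures are independent and spread evenly over time, and it uses a 30-day month; the function name `hours_between_failures` is made up for illustration.

```python
HOURS_PER_MONTH = 30 * 24  # assumption: a 30-day month


def hours_between_failures(servers, months_per_failure=120):
    """Average time between failures anywhere in the fleet, in hours.

    Each server is assumed to fail once every `months_per_failure` months,
    so a fleet of `servers` machines sees failures `servers` times as often.
    """
    return months_per_failure * HOURS_PER_MONTH / servers


for fleet_size in (120, 12_000, 120_000):
    print(fleet_size, "servers:", round(hours_between_failures(fleet_size), 2), "hours")
```

With these assumptions, 120 servers give one failure per 720 hours (one month), and the interval shrinks in proportion to the fleet size.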

Two types of DSs
• Synchronous Distributed System
  – Each message is received within bounded time
  – Each step in a process takes lb < time < ub
  – Each local clock's drift has a known bound
  – Example: Multiprocessor systems
• Asynchronous Distributed System
  – No bounds on message transmission delays
  – No bounds on process execution
  – The drift of a clock is arbitrary
  – Example: Internet, wireless networks, datacenters, most real systems

Problems with Distributed Systems
• Failures are more frequent
  – Many places to fail
  – More complex
    • More pieces
    • New challenges: asynchrony, communication
• Potential problems of failures
  – Single system: everything stops
  – Distributed: some parts may continue

First step: Goals
• Availability
  – Can I use it now?
• Reliability
  – Will it be up as long as I need it?
• Safety
  – If it fails, what are the consequences?
• Maintainability
  – How easy is it to fix if it breaks?

Next step: Failure models
• Failure: System does not behave as expected
  – Component-level failure (can compensate)
  – System-level failure (incorrect result)
• Fault: Cause of failure (component-level)
  – Transient: Not repeatable
  – Intermittent: Repeats, but (apparently) independent of system operations
  – Permanent: Exists until component repaired
• Failure model: How the system behaves when it doesn't behave properly
• Failure semantics: describes and classifies errors that distributed systems can experience

Failure classification
• Correct
  – In response to inputs, behaves in a manner consistent with the service specification
• Omission failure
  – Does not respond to input
    • Crash: After first omission failure, subsequent requests result in omission failure
• Timing failure (early, late)
  – Correct response, but outside required time window
• Response failure
  – Value: Wrong output for inputs
  – State transition: Server ends in wrong state

Crash failure types (based on recovery behavior)
• Crash-stop (fail-stop): process halts and does not execute any further operations
  – Halting
    • Never restarts
• Crash-recovery: process halts, but then recovers (reboots) after a while
  – Special case of crash-stop model (uses a new identifier on recovery)
  – Classification:
    • Amnesia: Server recovers to predefined state independent of operations before crash
    • Partial amnesia: Some part of state is as before crash, rest to predefined state
    • Pause: Recovers to state before omission failure

Hierarchical failure masking
• Hierarchical failure masking
  – Dependency: Higher level gets (at best) failure semantics of lower level
  – Can compensate for lower level failure to improve this
• Example:
  – TCP fixes communication errors, so some failure semantics not propagated to higher level

Group failure masking
• Redundant servers
  – Failed server masked by others in group
  – Allows failure semantics of group to be higher than individuals
• k-fault tolerant
  – Group can mask k concurrent group member failures from client
• May "upgrade" failure semantics
  – Example: Group detects non-responsive server, other member picks up the slack
  – Omission failure becomes performance failure

Detecting failures
[Figure: two processes, pi and pj]

Detecting failures
[Figure: processes pi and pj; pj suffers a crash-stop failure (pj is a failed process)]

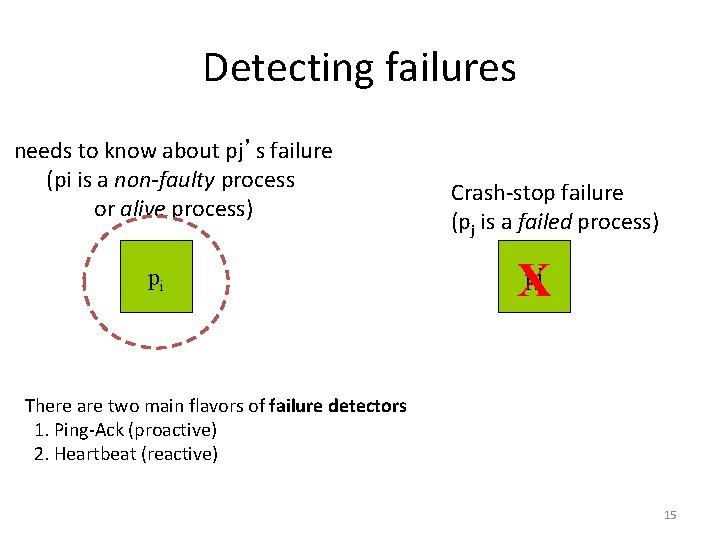

Detecting failures
• pi needs to know about pj's failure (pi is a non-faulty, or alive, process)
• Crash-stop failure (pj is a failed process)
There are two main flavors of failure detectors
1. Ping-Ack (proactive)
2. Heartbeat (reactive)

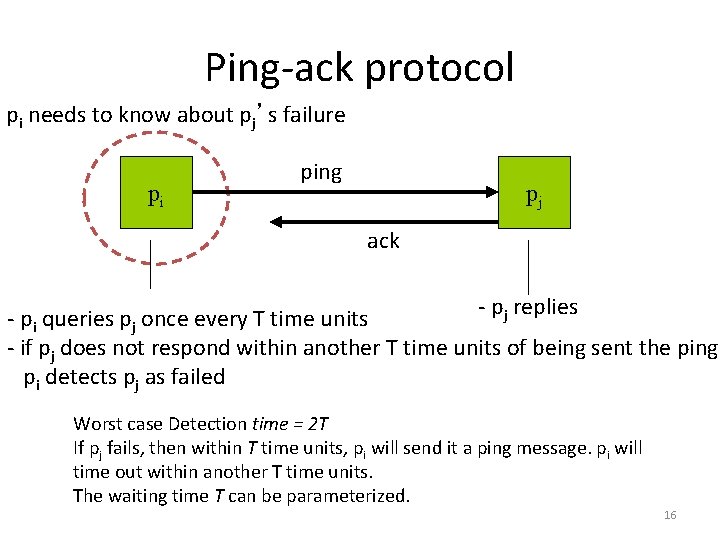

Ping-ack protocol
• pi needs to know about pj's failure
[Figure: pi sends a ping to pj; pj replies with an ack]
- pi queries pj once every T time units
- pj replies
- if pj does not respond within another T time units of being sent the ping, pi detects pj as failed
• Worst case detection time = 2T
  – If pj fails, then within T time units, pi will send it a ping message. pi will time out within another T time units.
• The waiting time T can be parameterized.
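The ping-ack rules and the 2T worst case can be sketched as a small simulation. This is an illustrative sketch only: the class `PingAckDetector` and its method names are hypothetical, time is passed in as plain numbers rather than read from a real clock, and no network is involved.

```python
class PingAckDetector:
    """pi's view of a single monitored process pj (hypothetical helper)."""

    def __init__(self, period):
        self.period = period        # T: ping interval and ack timeout
        self.ping_sent_at = None    # send time of the unanswered ping, if any
        self.suspected = False

    def send_ping(self, now):
        self.ping_sent_at = now

    def on_ack(self):
        self.ping_sent_at = None    # pj answered, so nothing is outstanding

    def check(self, now):
        """Mark pj as failed once a ping has gone unanswered for T time units."""
        if self.ping_sent_at is not None and now - self.ping_sent_at >= self.period:
            self.suspected = True
        return self.suspected


T = 5.0
detector = PingAckDetector(T)
detector.send_ping(0.0)
detector.on_ack()                   # pj answers the ping sent at t = 0
crash_time = 0.1                    # and crashes right afterwards
detector.send_ping(T)               # next ping, never answered
before_timeout = detector.check(2 * T - 0.1)
after_timeout = detector.check(2 * T)
detection_delay = 2 * T - crash_time
print(before_timeout, after_timeout, detection_delay)
```

Because pj crashes just after answering, pi waits almost a full T before the next ping and another T for the timeout, so the delay approaches 2T.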

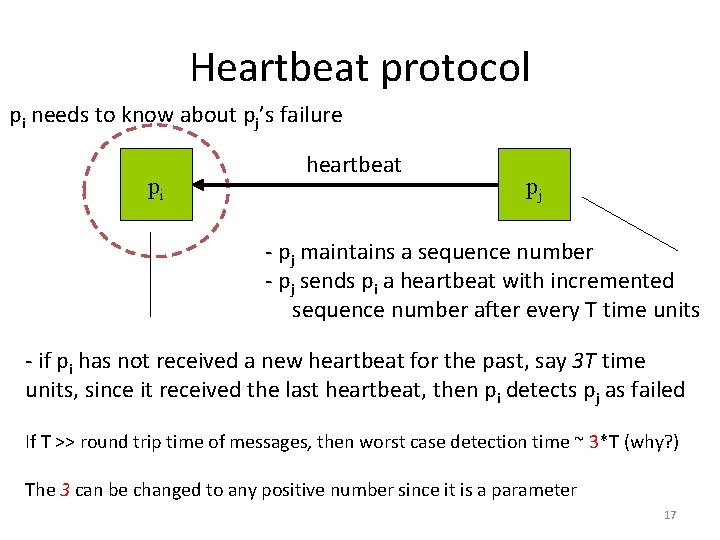

Heartbeat protocol
• pi needs to know about pj's failure
[Figure: pj sends heartbeats to pi]
- pj maintains a sequence number
- pj sends pi a heartbeat with incremented sequence number after every T time units
- if pi has not received a new heartbeat for the past, say, 3T time units since it received the last heartbeat, then pi detects pj as failed
• If T >> round trip time of messages, then worst case detection time ~ 3T (why?)
• The 3 can be changed to any positive number since it is a parameter
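A minimal sketch of the receiver side of the heartbeat protocol follows. The class `HeartbeatDetector` and the parameter name `misses` (the "3" in 3T) are assumptions made for illustration, and timestamps are supplied by the caller instead of a real clock.

```python
class HeartbeatDetector:
    """pi's record of heartbeats from one process pj (hypothetical helper)."""

    def __init__(self, period, misses=3, start=0.0):
        self.timeout = misses * period   # e.g. 3T
        self.last_seq = -1               # highest sequence number seen so far
        self.last_heard = start          # local time of the last new heartbeat

    def on_heartbeat(self, seq, now):
        # Only a strictly newer sequence number counts as a new heartbeat,
        # so delayed or duplicated messages do not refresh the timer.
        if seq > self.last_seq:
            self.last_seq = seq
            self.last_heard = now

    def is_failed(self, now):
        return now - self.last_heard > self.timeout


T = 2.0
hb = HeartbeatDetector(T)
for seq, arrival in [(1, 2.0), (2, 4.0), (3, 6.0)]:
    hb.on_heartbeat(seq, arrival)
hb.on_heartbeat(2, 11.0)            # stale duplicate, ignored
alive_at_12 = not hb.is_failed(12.0)
failed_after_12 = hb.is_failed(12.1)
print(alive_at_12, failed_after_12)
```

In this run pj sends its last heartbeat at time 6, so pi declares it failed once more than 3T = 6 time units have passed without a newer sequence number.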

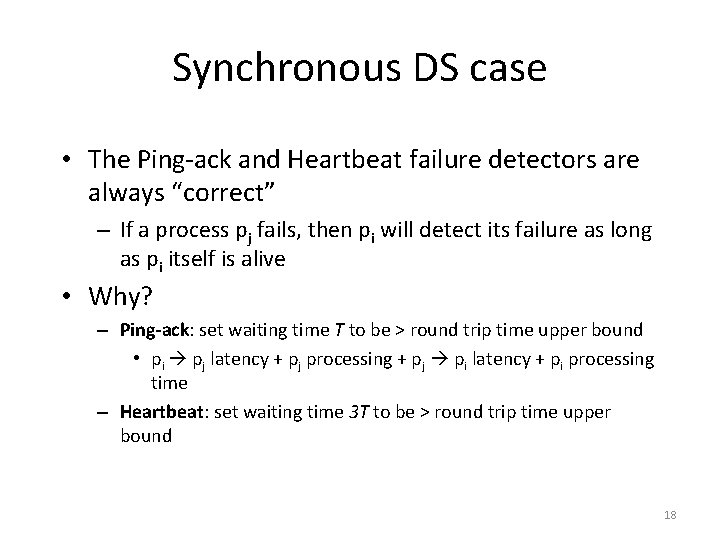

Synchronous DS case
• The Ping-ack and Heartbeat failure detectors are always "correct"
  – If a process pj fails, then pi will detect its failure as long as pi itself is alive
• Why?
  – Ping-ack: set waiting time T to be > round trip time upper bound
    • pi→pj latency + pj processing + pj→pi latency + pi processing time
  – Heartbeat: set waiting time 3T to be > round trip time upper bound

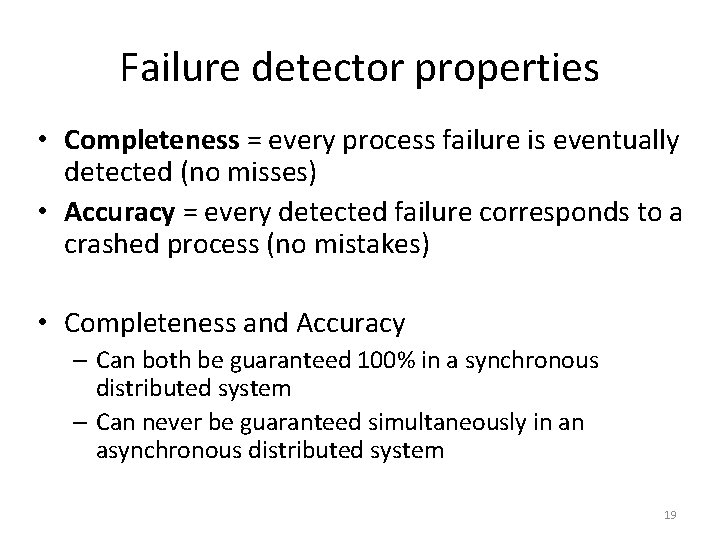

Failure detector properties
• Completeness = every process failure is eventually detected (no misses)
• Accuracy = every detected failure corresponds to a crashed process (no mistakes)
• Completeness and Accuracy
  – Can both be guaranteed 100% in a synchronous distributed system
  – Can never be guaranteed simultaneously in an asynchronous distributed system

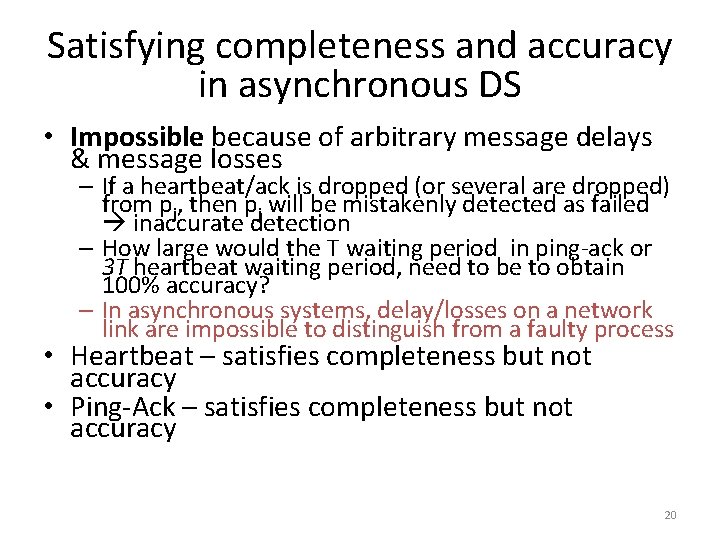

Satisfying completeness and accuracy in asynchronous DS
• Impossible because of arbitrary message delays & message losses
  – If a heartbeat/ack is dropped (or several are dropped) from pj, then pj will be mistakenly detected as failed ⇒ inaccurate detection
  – How large would the T waiting period in ping-ack, or the 3T waiting period in heartbeat, need to be to obtain 100% accuracy?
  – In asynchronous systems, delay/losses on a network link are impossible to distinguish from a faulty process
• Heartbeat: satisfies completeness but not accuracy
• Ping-Ack: satisfies completeness but not accuracy

Completeness or accuracy in asynchronous DS
• Most failure detector implementations are willing to tolerate some inaccuracy, but require 100% completeness
• Many distributed apps are designed assuming 100% completeness, e.g., P2P systems
  – "Err on the side of caution"
  – Processes not "stuck" waiting for other processes
• If a process is mistakenly identified as failed, the victim process rejoins as a new process and catches up
• Heartbeating and Ping-ack provide
  – Probabilistic accuracy: for a process detected as failed, with some probability close to 1.0 (but not equal) it is true that it has actually crashed

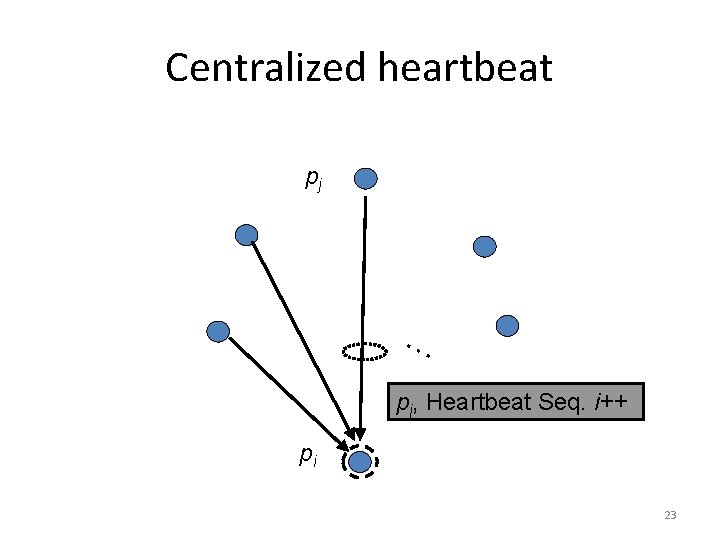

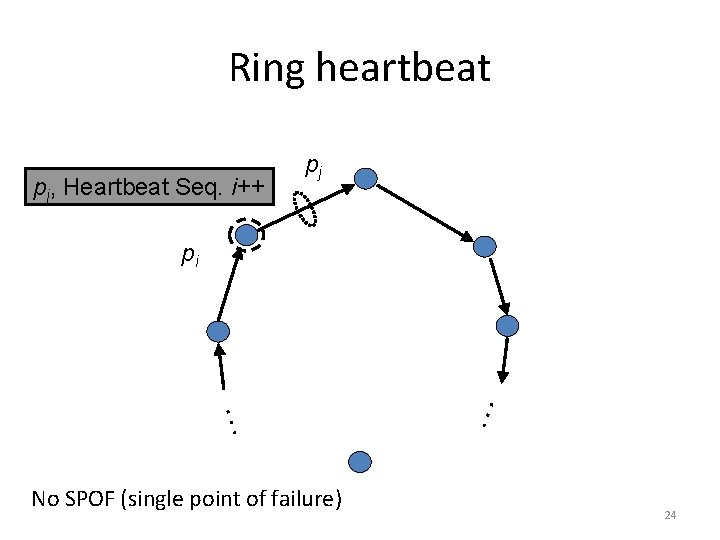

Failure detection across the DS
• We want failure detection of not merely one process (pj), but all processes in the DS
• Approaches:
  – Centralized heartbeat
  – Ring heartbeat
  – All-to-all heartbeat
• Who guards the failure detectors?

Centralized heartbeat
[Figure: each process pj sends its heartbeat (pj, Heartbeat Seq. i++) to a single process pi]

Ring heartbeat
[Figure: processes arranged in a ring; pj sends its heartbeat (pj, Heartbeat Seq. i++) to its neighbour pi]
• No SPOF (single point of failure)

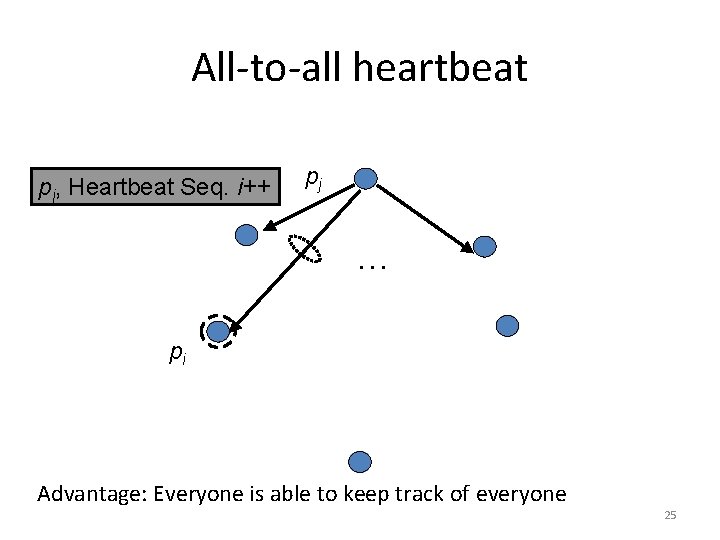

All-to-all heartbeat
[Figure: pj sends its heartbeat (pj, Heartbeat Seq. i++) to every other process, including pi]
• Advantage: Everyone is able to keep track of everyone

Detection efficiency metrics
• Bandwidth
  – The number of messages sent in the system during steady state (no failures)
  – Small is good
• Detection time
  – Time between a process crash and its detection
  – Small is good
• Scalability
  – How do bandwidth and detection properties scale with N, the number of processes?
• Accuracy
  – Large is good
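To make the bandwidth and scalability metrics concrete, the sketch below counts heartbeat messages per period for the three approaches listed earlier. The counts rest on simplifying assumptions that are not from the slides: in the centralized scheme every process except the monitor sends one heartbeat, in the ring each process sends to exactly one neighbour, and in all-to-all each process sends to every other process. The function name is hypothetical.

```python
def messages_per_period(n, scheme):
    """Heartbeat messages sent per period T by n processes (no failures)."""
    if scheme == "centralized":
        return n - 1          # everyone except the monitor sends one heartbeat
    if scheme == "ring":
        return n              # assumption: one heartbeat to a single neighbour
    if scheme == "all-to-all":
        return n * (n - 1)    # every process sends to every other process
    raise ValueError(f"unknown scheme: {scheme}")


for n in (10, 100, 1000):
    counts = {s: messages_per_period(n, s) for s in ("centralized", "ring", "all-to-all")}
    print(n, counts)
```

Under these assumptions the first two schemes grow linearly with N, while all-to-all grows quadratically.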

Accuracy metrics
• False Detection Rate/False Positive Rate (inaccuracy)
  – Multiple possible metrics
    1. Average number of failures detected per second, when there are in fact no failures
    2. Fraction of failure detections that are false
• Tradeoffs: If you increase the T waiting period in ping-ack or the 3T waiting period in heartbeating, what happens to:
  – Detection time?
  – False positive rate?
  – Where would you set these waiting periods?
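One way to explore this tradeoff is with a toy model. The sketch assumes, purely for illustration, that each heartbeat is lost independently with probability `loss_prob` and that pi declares a failure after `misses` consecutive missing heartbeats; real networks have correlated losses and delays, so the numbers are indicative only.

```python
def tradeoff(loss_prob, period, misses):
    """Return (approximate detection time, chance that one window is all losses)."""
    detection_time = misses * period
    # Probability that a given run of `misses` consecutive heartbeats
    # from a live process is lost entirely (independent-loss assumption).
    false_positive = loss_prob ** misses
    return detection_time, false_positive


for k in (1, 2, 3, 5):
    time_needed, fp = tradeoff(loss_prob=0.1, period=1.0, misses=k)
    print(f"misses={k}: detection time ~{time_needed:.0f}T, false positive chance {fp:.5f}")
```

In this model a longer waiting period lowers the false positive chance geometrically but lengthens detection time linearly.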

Membership protocols
• Maintain a list of other alive (non-faulty) processes at each process in the system
• Failure detector is a component in membership protocol
  – Failure of pj detected ⇒ delete pj from membership list
  – New machine joins ⇒ pj sends message to everyone ⇒ add pj to membership list
• Flavors
  – Strongly consistent: all membership lists identical at all times (hard, may not scale)
  – Weakly consistent: membership lists not identical at all times
  – Eventually consistent: membership lists always moving towards becoming identical eventually (scales well)

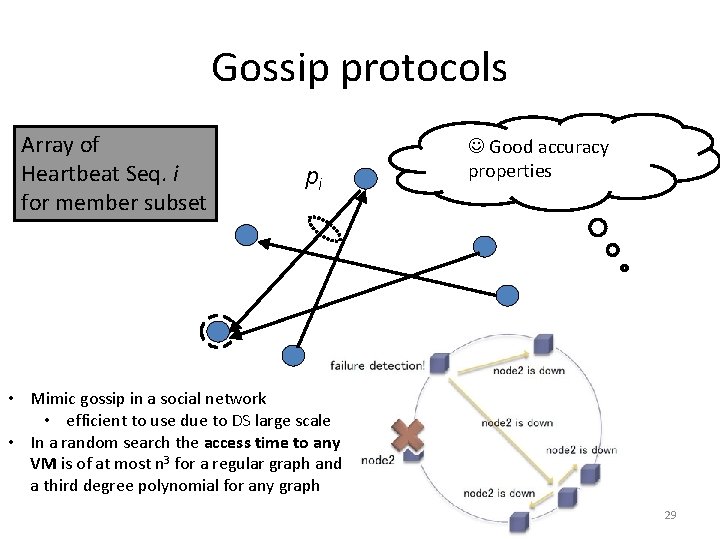

Gossip protocols
[Figure: pi sends an array of heartbeat sequence numbers (Heartbeat Seq. i) for a subset of members]
• Good accuracy properties
• Mimic gossip in a social network
• Efficient to use because of the large scale of the DS
• In a random search the access time to any VM is at most n^3 for a regular graph and a third degree polynomial for any graph

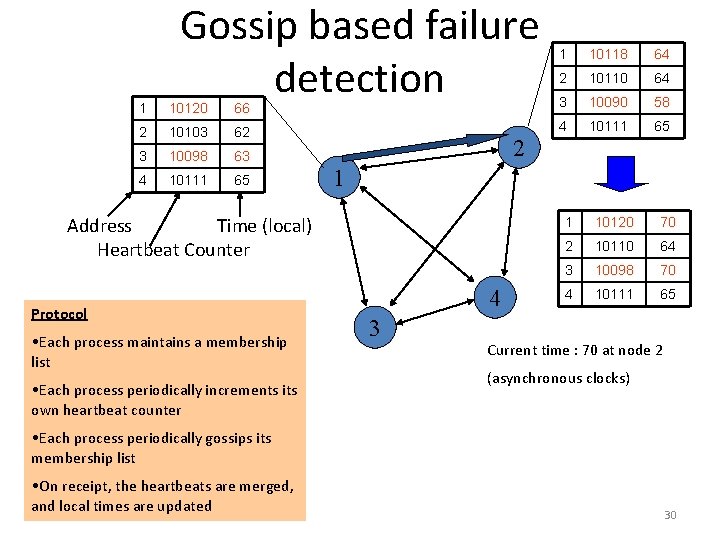

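Gossip based failure detection
[Figure: four nodes (1 to 4); node 2 receives a gossiped membership list and merges it into its own]

Node 2's list before the merge:

| Address | Heartbeat counter | Time (local) |
|---|---|---|
| 1 | 10118 | 64 |
| 2 | 10110 | 64 |
| 3 | 10090 | 58 |
| 4 | 10111 | 65 |

Gossiped list received at node 2:

| Address | Heartbeat counter | Time (local) |
|---|---|---|
| 1 | 10120 | 66 |
| 2 | 10103 | 62 |
| 3 | 10098 | 63 |
| 4 | 10111 | 65 |

Node 2's list after the merge (current time: 70 at node 2; clocks are asynchronous):

| Address | Heartbeat counter | Time (local) |
|---|---|---|
| 1 | 10120 | 70 |
| 2 | 10110 | 64 |
| 3 | 10098 | 70 |
| 4 | 10111 | 65 |

Protocol
• Each process maintains a membership list
• Each process periodically increments its own heartbeat counter
• Each process periodically gossips its membership list
• On receipt, the heartbeats are merged, and local times are updated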
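The merge step of the protocol can be sketched as follows, using the numbers from this slide. The reading of the figure is an assumption: the list holding (1, 10118, 64) is taken to be node 2's own list, and the list holding (1, 10120, 66) the one it receives. The function name `merge` and the dictionary layout are hypothetical.

```python
def merge(local, received, now):
    """Merge a gossiped membership list into the local one.

    Both lists map address -> (heartbeat counter, local time of last update).
    An entry is replaced only when the received heartbeat is strictly higher,
    and it is then stamped with the receiver's own clock.
    """
    merged = dict(local)
    for address, (heartbeat, _sender_time) in received.items():
        if address not in merged or heartbeat > merged[address][0]:
            merged[address] = (heartbeat, now)
    return merged


node2 = {1: (10118, 64), 2: (10110, 64), 3: (10090, 58), 4: (10111, 65)}
gossip = {1: (10120, 66), 2: (10103, 62), 3: (10098, 63), 4: (10111, 65)}

result = merge(node2, gossip, now=70)
print(result)
```

The sender's timestamps are ignored on purpose: clocks are not synchronized, so only the receiver's local time is meaningful for later timeout checks.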

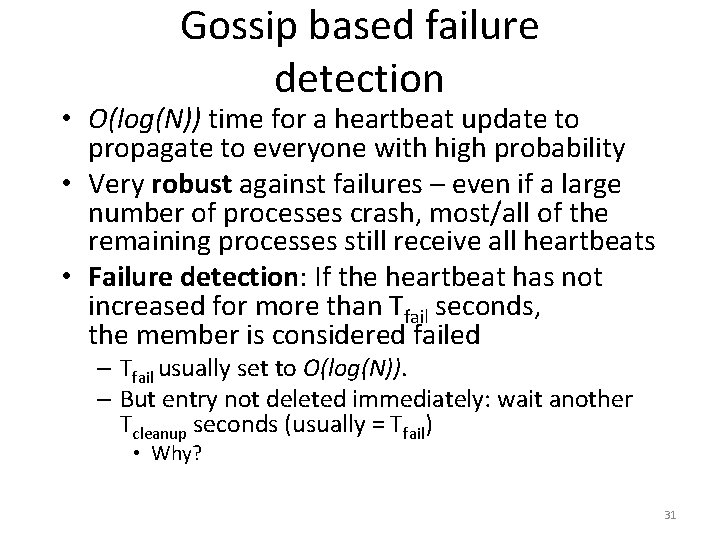

Gossip based failure detection
• O(log(N)) time for a heartbeat update to propagate to everyone with high probability
• Very robust against failures: even if a large number of processes crash, most/all of the remaining processes still receive all heartbeats
• Failure detection: If the heartbeat has not increased for more than Tfail seconds, the member is considered failed
  – Tfail usually set to O(log(N))
  – But entry not deleted immediately: wait another Tcleanup seconds (usually = Tfail)
• Why?

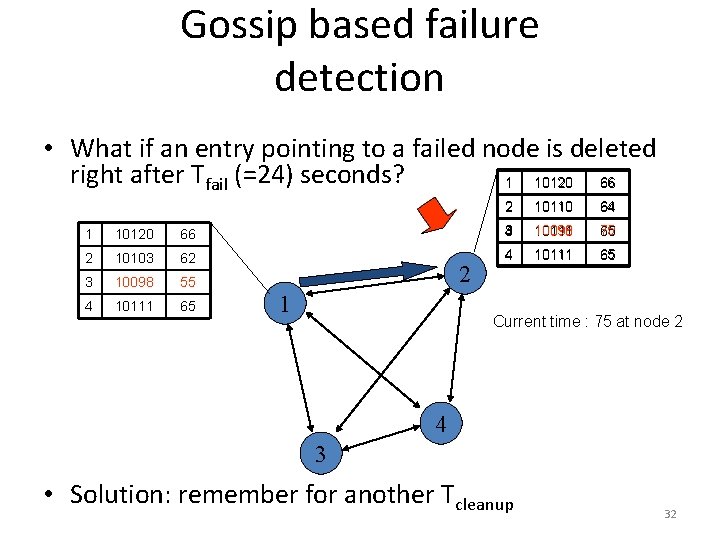

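Gossip based failure detection
• What if an entry pointing to a failed node is deleted right after Tfail (=24) seconds?
[Figure: four nodes (1 to 4); current time: 75 at node 2]

Node 2's list (entry 3 was last updated at local time 50, more than Tfail ago):

| Address | Heartbeat counter | Time (local) |
|---|---|---|
| 1 | 10120 | 66 |
| 2 | 10110 | 64 |
| 3 | 10098 | 50 |
| 4 | 10111 | 65 |

List gossiped to node 2 by another node that still holds entry 3:

| Address | Heartbeat counter | Time (local) |
|---|---|---|
| 1 | 10120 | 66 |
| 2 | 10103 | 62 |
| 3 | 10098 | 55 |
| 4 | 10111 | 65 |

• If node 2 has already deleted entry 3, the gossiped entry is added back with local time 75, so the failed node looks alive again
• Solution: remember for another Tcleanup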
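The effect of deleting too early can be sketched with the values from this slide (Tfail = 24, current time 75, entry 3 with heartbeat 10098). Tcleanup is set equal to Tfail, as the previous slide suggests; the helper names `state` and `merge_entry` are hypothetical.

```python
T_FAIL = 24
T_CLEANUP = 24  # assumption: same as T_FAIL


def state(last_update, now):
    """Classify an entry by how long its heartbeat has gone without increasing."""
    age = now - last_update
    if age <= T_FAIL:
        return "alive"
    if age <= T_FAIL + T_CLEANUP:
        return "failed"      # suspected, but still remembered
    return "delete"


def merge_entry(members, address, heartbeat, now):
    """Accept a gossiped entry only if it is new or has a higher heartbeat."""
    if address not in members or heartbeat > members[address][0]:
        members[address] = (heartbeat, now)


# Case 1: node 2 deleted entry 3 as soon as Tfail expired.
eager = {1: (10120, 66), 2: (10110, 64), 4: (10111, 65)}
merge_entry(eager, 3, 10098, now=75)
print(eager[3], state(eager[3][1], 75))      # re-added, looks alive

# Case 2: node 2 keeps the entry during Tcleanup.
patient = {1: (10120, 66), 2: (10110, 64), 3: (10098, 50), 4: (10111, 65)}
merge_entry(patient, 3, 10098, now=75)
print(patient[3], state(patient[3][1], 75))  # unchanged, still failed
```

Keeping the stale entry means a gossiped copy with the same heartbeat counter is not treated as news, so the failed node is not resurrected.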

Other types of failures
• Communication omission failures
  – Send-omission: loss of messages between the sending process and the outgoing message buffer (both inclusive)
  – Channel omission: loss of message in the communication channel
  – Receive-omission: loss of messages between the incoming message buffer and the receiving process (both inclusive)
• Arbitrary failures
  – Arbitrary process failure: arbitrarily omits intended processing steps or takes unintended processing steps
  – Arbitrary channel failures: messages may be corrupted, duplicated, delivered out of order, incur extremely large delays; or non-existent messages may be delivered
  – These are known as Byzantine failures, e.g., due to hackers, man-in-the-middle attacks, viruses, worms, etc., and even bugs in the code
  – A variety of Byzantine fault-tolerant protocols have been designed in the literature

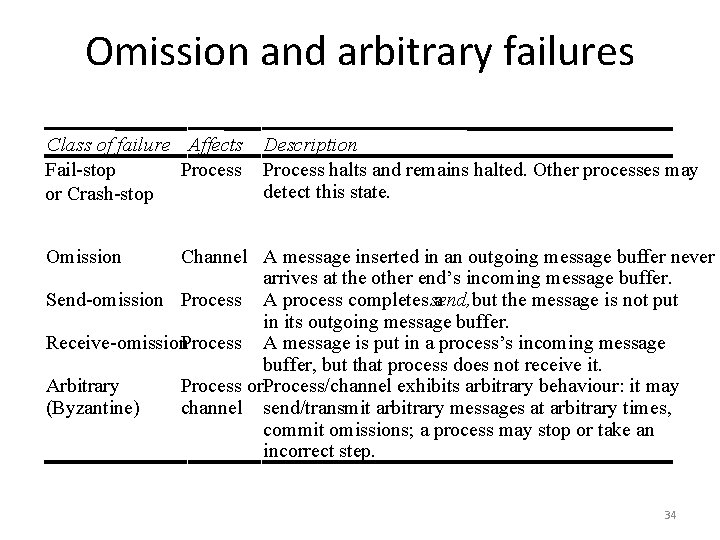

Omission and arbitrary failures

| Class of failure | Affects | Description |
|---|---|---|
| Fail-stop or Crash-stop | Process | Process halts and remains halted. Other processes may detect this state. |
| Omission | Channel | A message inserted in an outgoing message buffer never arrives at the other end's incoming message buffer. |
| Send-omission | Process | A process completes a send, but the message is not put in its outgoing message buffer. |
| Receive-omission | Process | A message is put in a process's incoming message buffer, but that process does not receive it. |
| Arbitrary (Byzantine) | Process or channel | Process/channel exhibits arbitrary behaviour: it may send/transmit arbitrary messages at arbitrary times, commit omissions; a process may stop or take an incorrect step. |

Next lecture
• Time and synchronization