
Distributed Systems

Interprocess Communication (IPC)
• Processes are either independent or cooperating
  – Threads provide a gray area
  – Cooperating processes can affect other processes' execution
• Reasons for cooperation
  – Information sharing
  – Parallelization
  – Modularity
  – Convenience
• Cooperating processes must communicate (IPC)
• Two models:
  – Shared memory
  – Message passing

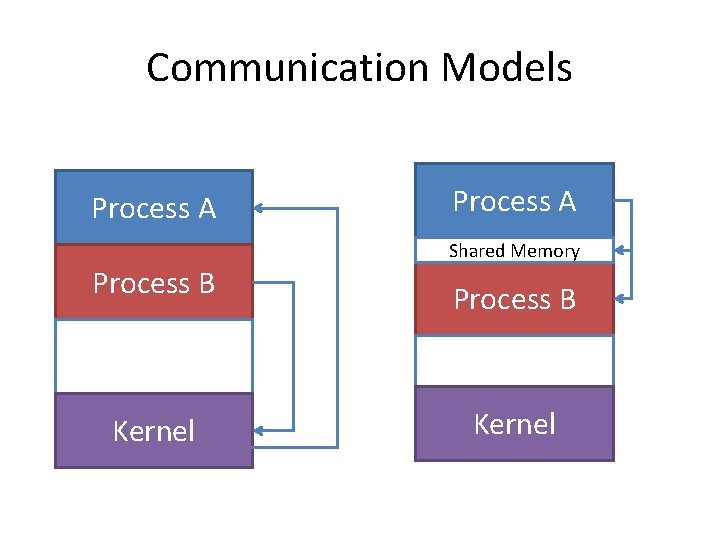

Communication Models (figure): two panels, each showing Process A, Process B, and the kernel; the second panel also includes a shared memory region.

Shared Memory
• Processes
  – Each process has a private address space
  – Must explicitly set up shared memory segments inside each process's address space
• Threads
  – Default behavior, always on
• Advantages
  – Fast and easy to share data
• Disadvantages
  – Must synchronize data accesses; error prone
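
As a rough illustration of the explicit setup step described above, the sketch below uses Python's `multiprocessing.shared_memory` module. To keep it short, both mappings live in one process; in practice the second `SharedMemory(name=...)` call would run in another process that learned the segment name out of band. The helper name `share_bytes` is made up for this example, and no synchronization is shown.

```python
from multiprocessing import shared_memory


def share_bytes(payload: bytes) -> bytes:
    """Write payload through one mapping and read it back through another.

    Hypothetical helper for illustration; payload must be non-empty because
    a shared segment cannot have size zero.
    """
    # "Writer" side: explicitly create a named shared memory segment.
    writer = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        writer.buf[:len(payload)] = payload
        # "Reader" side: attach to the same segment by name.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            # Copy the bytes out before the mapping is closed.
            return bytes(reader.buf[:len(payload)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # release the segment once nobody needs it
```

With real concurrent writers, accesses to `buf` would still need a lock or similar primitive, which is the synchronization cost the slide lists as a disadvantage.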

Signals
• Signal
  – Software based notification of event
  – Available signals for applications
    • SIGUSR1, SIGUSR2, etc…
• Signal Reception
  – Catch: Process specifies a handler to call
  – Ignore: Process asks for the signal to be discarded
  – Default: If neither is set, the signal's default action applies (for SIGUSR1 and SIGUSR2, the process is terminated)
  – Signals can also be masked
• Disadvantages
  – Does not exchange data
  – Complex thread semantics
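
The "catch" case can be sketched with Python's `signal` module on a Unix-like system. This is only a minimal example: the process signals itself, and the names `on_sigusr1`, `received`, and `notify_self` are invented for illustration. It has to run in the main thread, since that is where Python allows handlers to be installed.

```python
import os
import signal
import time

received = []  # signal numbers recorded by the handler


def on_sigusr1(signum, frame):
    # Handlers should do very little; here we only record the event.
    received.append(signum)


def notify_self() -> bool:
    """Install a handler, send SIGUSR1 to this process, and wait for it."""
    previous = signal.signal(signal.SIGUSR1, on_sigusr1)  # catch the signal
    try:
        os.kill(os.getpid(), signal.SIGUSR1)  # send the notification
        deadline = time.monotonic() + 1.0
        # Give the interpreter a moment to run the handler.
        while not received and time.monotonic() < deadline:
            time.sleep(0.01)
        return signal.SIGUSR1 in received
    finally:
        signal.signal(signal.SIGUSR1, previous)  # restore the old disposition
```

Note that the handler learns only which signal arrived; no payload travels with it, which matches the "does not exchange data" limitation above.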

Message Passing
• Processes exchange messages
  – Messages are arbitrary pieces of information
  – Explicit send and receive operations
• Advantages
  – Explicit sharing (easier to reason about)
  – Improves modularity (well defined interfaces)
  – Improved isolation
• Disadvantage
  – Performance overhead for handling messages

Message Passing
• Where do you send a message?
  – How do you NAME recipients?
    • Depends on environment: IP + port, PID, UNIX pipe file
• How do you parse a message?
  – Requires a protocol
    • Message format
    • Message order
    • Process states
• Directionality
  – One way: one process always sends data, other receives
  – Two way: Receiver sends a reply for each message
    • Example: Remote Procedure Calls
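
To make "requires a protocol" concrete, here is a small sketch of one possible message format: a 4-byte big-endian length prefix followed by the payload, exchanged over a local socket pair in a two-way (request/reply) pattern. The framing, the helper names, and the "reply with the upper-cased request" behavior are all assumptions chosen for the example, not something the slides prescribe.

```python
import socket
import struct

HEADER = struct.Struct("!I")  # assumed format: 4-byte big-endian length prefix


def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one framed message: length header, then the payload bytes."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv() may return fewer than requested."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("peer closed before full message arrived")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_msg(sock: socket.socket) -> bytes:
    """Receive one framed message by reading the header, then the body."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


def ping_pong() -> bytes:
    """Two-way exchange: side A sends a request, side B sends back a reply."""
    a, b = socket.socketpair()
    with a, b:
        send_msg(a, b"ping")
        request = recv_msg(b)
        send_msg(b, request.upper())
        return recv_msg(a)
```

Here the two endpoints are named implicitly by the socket pair; over a network the recipient would instead be named by IP address and port.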

Remote Procedure Calls
• Invocation of a function in a separate process
  – Identifies which function to invoke
  – Provides function arguments
  – Sends return value back to caller
• Function invocation info must be encapsulated into message
  – Marshaling
    • Transform arguments and return values into message data
    • Requires knowledge about data types
    • Either by hand or automated
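
A hand-written marshaling layer might look roughly like the sketch below. It uses JSON as the message encoding and a dictionary of callable procedures; the message fields (`proc`, `args`, `result`), the `PROCEDURES` table, and the `add` function are hypothetical, and the network transport between caller and callee is omitted.

```python
import json


def add(a, b):
    return a + b


# Hypothetical table of procedures the "server" process is willing to run.
PROCEDURES = {"add": add}


def marshal_call(name, *args) -> bytes:
    """Caller side: encode the function name and arguments as a message."""
    return json.dumps({"proc": name, "args": list(args)}).encode("utf-8")


def handle_call(message: bytes) -> bytes:
    """Callee side: decode the request, invoke the function, encode the reply."""
    request = json.loads(message.decode("utf-8"))
    result = PROCEDURES[request["proc"]](*request["args"])
    return json.dumps({"result": result}).encode("utf-8")


def unmarshal_reply(message: bytes):
    """Caller side: extract the return value from the reply message."""
    return json.loads(message.decode("utf-8"))["result"]
```

For example, `unmarshal_reply(handle_call(marshal_call("add", 2, 3)))` evaluates to `5`. JSON only covers a few data types, which hints at why marshaling "requires knowledge about data types" and why real RPC systems usually generate this code from an interface description.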

Distributed File Systems
• Goal: View a distributed system as a file system
  – Storage is distributed
• Issues not common to local file systems
  – Naming transparency
  – Load balancing
  – Scalability
  – Location and network transparency
  – Fault tolerance

Transfer Model
• Upload/Download model
  – Client downloads file and modifies local copy
  – Uploads final result back to server
  – Simple with good performance
• Remote Access model
  – File only exists on server, all I/O operations forwarded by client

Naming Transparency
• Naming is a mapping from logical to physical objects
• Ideally client interface should be transparent
  – No difference between local and remote files
  – No partitioning of namespace
• 2 types of transparency
  – Location transparency:
    • Path provides no info about location
  – Location independence:
    • Move files without changing names
    • Name space separate from storage device hierarchy

Caching
• Keep repeatedly accessed blocks in cache
  – Improves performance of further accesses
• Very similar to buffer cache
  – But cached copy can reside on local disk
  – Synchronization occurs much less frequently
  – Cache consistency becomes a much larger issue
• Multiple levels of caching
  – Memory: Faster accesses, less state to track (NFS)
  – Disk: Reliable, allows disconnected operation (AFS)

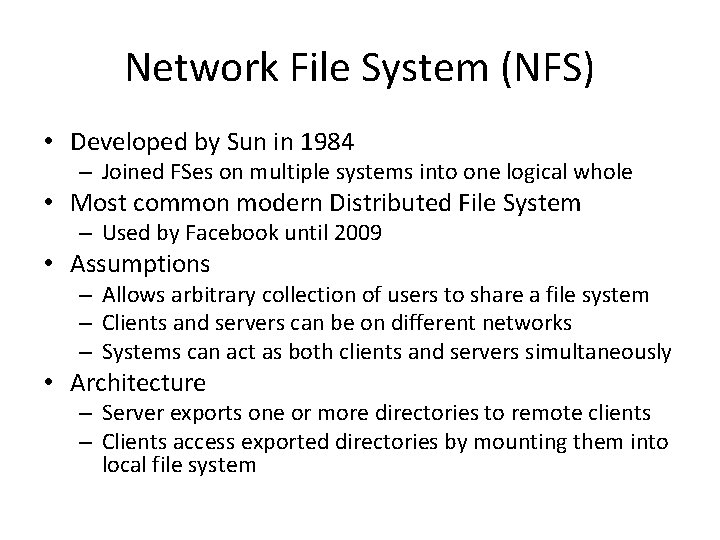

Network File System (NFS)
• Developed by Sun in 1984
  – Joined file systems on multiple systems into one logical whole
• Most common modern Distributed File System
  – Used by Facebook until 2009
• Assumptions
  – Allows arbitrary collection of users to share a file system
  – Clients and servers can be on different networks
  – Systems can act as both clients and servers simultaneously
• Architecture
  – Server exports one or more directories to remote clients
  – Clients access exported directories by mounting them into local file system

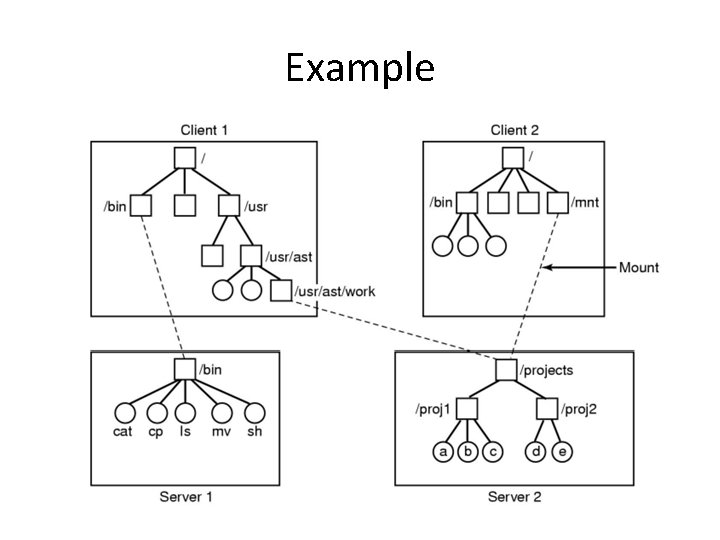

Example

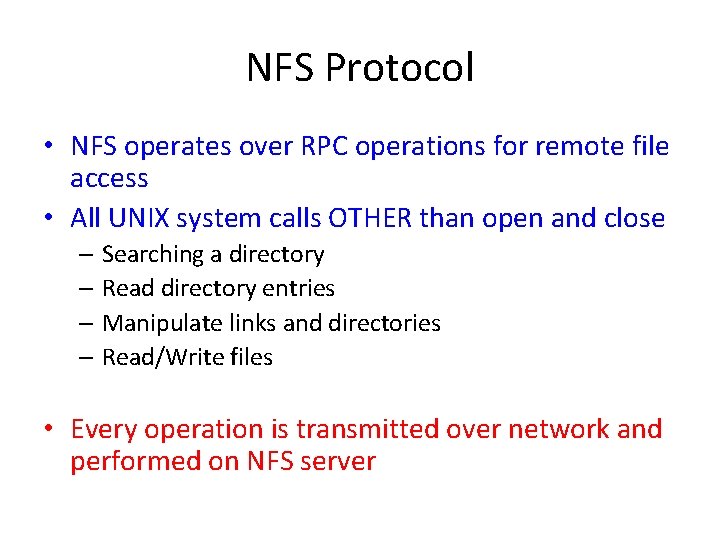

NFS Protocol
• NFS uses RPC operations for remote file access
• Covers the UNIX file system calls OTHER than open and close
  – Searching a directory
  – Read directory entries
  – Manipulate links and directories
  – Read/Write files
• Every operation is transmitted over network and performed on NFS server

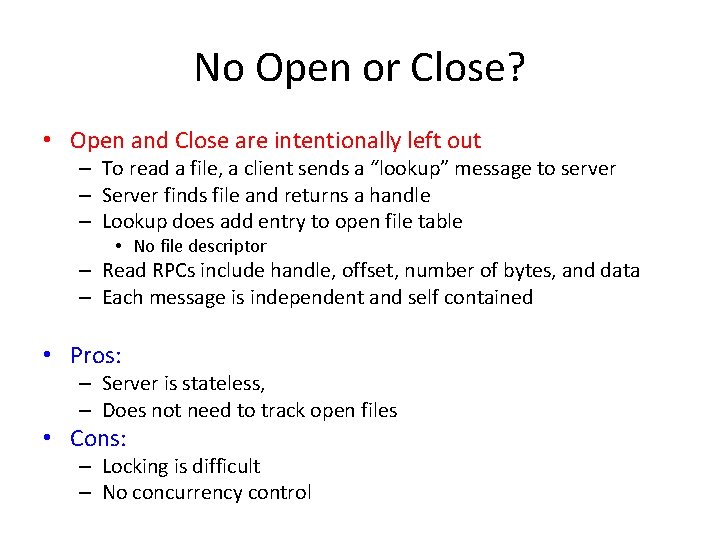

No Open or Close?
• Open and Close are intentionally left out
  – To read a file, a client sends a "lookup" message to server
  – Server finds file and returns a handle
  – Lookup does NOT add an entry to an open file table
• No file descriptor
  – Read/write RPCs include handle, offset, and number of bytes (plus the data, for writes)
  – Each message is independent and self contained
• Pros:
  – Server is stateless
  – Does not need to track open files
• Cons:
  – Locking is difficult
  – No concurrency control
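
The stateless lookup/read interaction can be pictured with a toy in-memory server such as the one below. It is not the NFS wire protocol: the class name, the integer handles, and the byte-string "files" are assumptions for illustration. The point is that `read` carries everything the server needs (handle, offset, count), so the server keeps no per-client or per-open state between calls.

```python
class StatelessFileServer:
    """Toy file server: stores file contents but no open-file table."""

    def __init__(self, files):
        self._files = dict(files)  # path -> file contents (bytes)
        # Handles are fixed identifiers for files (loosely like inode
        # numbers), assigned once; they do not represent "open" state.
        self._handles = {i: path for i, path in enumerate(sorted(self._files))}

    def lookup(self, path):
        """Return the handle for a path; nothing is recorded about the caller."""
        for handle, known_path in self._handles.items():
            if known_path == path:
                return handle
        raise FileNotFoundError(path)

    def read(self, handle, offset, count):
        """Self-contained request: handle, offset, and count fully describe it."""
        data = self._files[self._handles[handle]]
        return data[offset:offset + count]
```

A client would call `lookup` once, remember the handle and its own file offset locally, and then issue reads in any order. If the server restarted between two reads, the second read would still work, because no server-side session existed to be lost.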

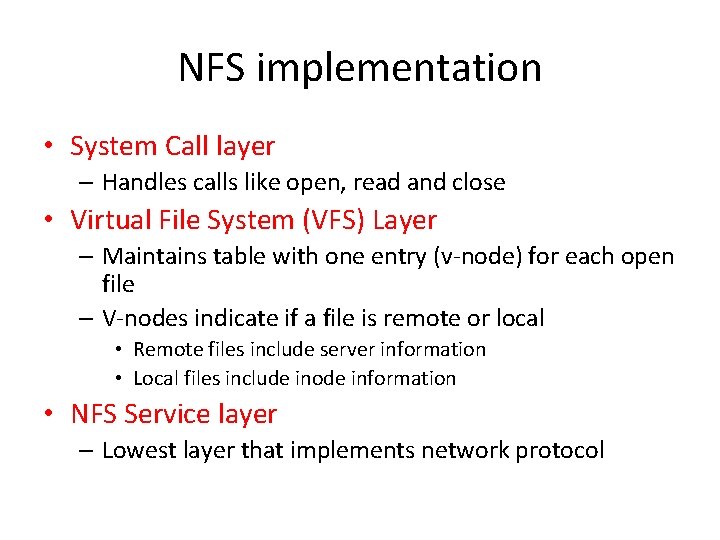

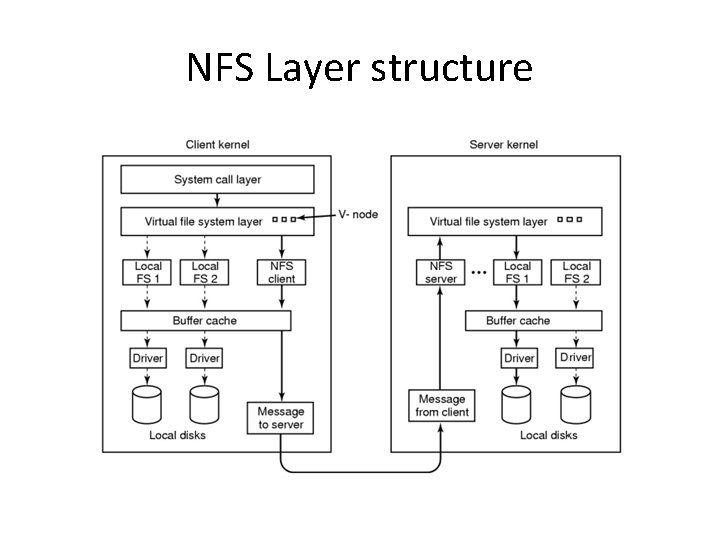

NFS Implementation
• System Call layer
  – Handles calls like open, read and close
• Virtual File System (VFS) Layer
  – Maintains table with one entry (v-node) for each open file
  – V-nodes indicate if a file is remote or local
    • Remote files include server information
    • Local files include inode information
• NFS Service layer
  – Lowest layer that implements network protocol
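
The role of the v-node as a switch between local and remote files can be sketched as follows. The `VNode` fields and the `route_read` function are hypothetical simplifications; a real VFS layer dispatches through tables of function pointers rather than returning strings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VNode:
    """Simplified v-node: exactly one of inode or server is expected to be set."""
    path: str
    inode: Optional[int] = None   # set for local files
    server: Optional[str] = None  # set for remote (NFS) files

    @property
    def is_remote(self) -> bool:
        return self.server is not None


def route_read(vnode: VNode) -> str:
    """Describe which lower layer a read on this v-node would be handed to."""
    if vnode.is_remote:
        return f"NFS client: RPC read sent to {vnode.server}"
    return f"local file system: read via inode {vnode.inode}"
```

The system call layer above does not need to know which branch is taken, which is what lets a mounted remote directory look like any other directory.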

NFS Layer structure

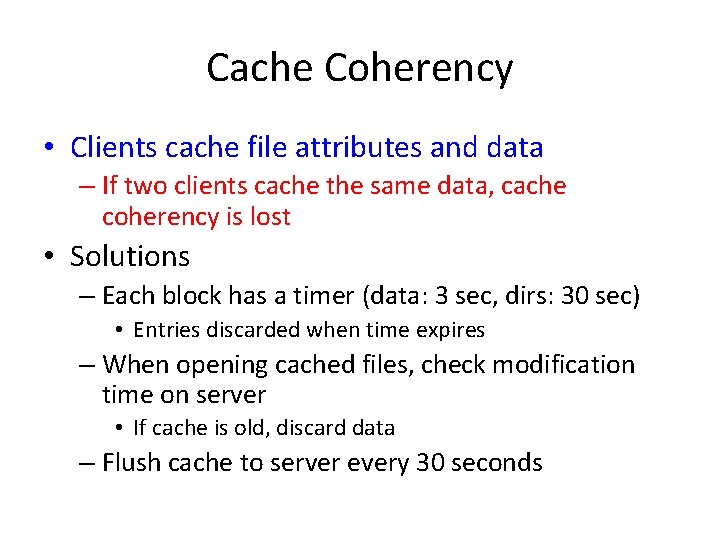

Cache Coherency
• Clients cache file attributes and data
  – If two clients cache the same data and one modifies it, cache coherency can be lost
• Solutions
  – Each block has a timer (data: 3 sec, dirs: 30 sec)
    • Entries discarded when time expires
  – When opening cached files, check modification time on server
    • If cache is old, discard data
  – Flush cache to server every 30 seconds
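
The timer-based solution amounts to a cache whose entries expire after a fixed lifetime. The sketch below is one minimal way to express that; the class name and the injectable `clock` parameter are assumptions made so the behavior can be demonstrated without actually waiting.

```python
class TimedCache:
    """Cache whose entries are discarded once they are older than ttl_seconds."""

    def __init__(self, ttl_seconds, clock):
        self._ttl = ttl_seconds
        self._clock = clock   # callable returning the current time in seconds
        self._entries = {}    # key -> (value, time the value was cached)

    def put(self, key, value):
        self._entries[key] = (value, self._clock())

    def get(self, key):
        """Return the cached value, or None if it is missing or has expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, cached_at = entry
        if self._clock() - cached_at >= self._ttl:
            del self._entries[key]  # timer expired: force a re-fetch
            return None
        return value
```

With a 3-second lifetime for data blocks, a client can serve stale data for up to 3 seconds after another client's write reaches the server. The timers bound the inconsistency window rather than eliminate it.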

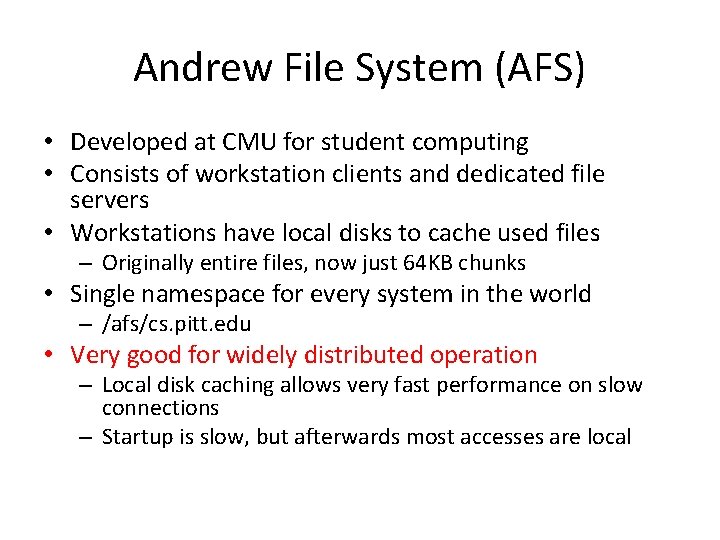

Andrew File System (AFS)
• Developed at CMU for student computing
• Consists of workstation clients and dedicated file servers
• Workstations have local disks to cache used files
  – Originally entire files, now just 64 KB chunks
• Single namespace for every system in the world
  – /afs/cs.pitt.edu
• Very good for widely distributed operation
  – Local disk caching allows very fast performance on slow connections
  – Startup is slow, but afterwards most accesses are local

AFS Overview
• Based on upload/download model
  – Clients download and cache files
  – Server keeps track of clients with cached copies
  – Clients upload files at end of session
• Whole file caching is central idea behind AFS
  – Later changed to block operations
  – Simple and effective
• AFS servers are stateful
  – Keep track of clients that have cached files
  – Recall files that have been modified

AFS Details
• Based on dedicated server machines
• Clients see partitioned namespace
  – Local name space and shared name space
  – Cluster of dedicated AFS servers presents the shared name space
• AFS file names work anywhere in the world
  – /afs/cs.pitt.edu/usr0/jacklange

AFS: Operations and Consistency
• AFS caches entire files from servers
  – Client interacts with servers only for open/close
• OS on client intercepts calls and passes them to a local AFS process
  – Local process caches files from servers
  – Contacts AFS servers for each open/close
    • Only if file is not in cache
  – File reads/writes operate on local cached copy

AFS Caching and Consistency
• Need for scaling led to reduction of client-server message traffic
• Once a file is cached, all operations are performed locally
  – Cache is on disk, so normal FS operations are used
• On close, if file is modified, it is replaced on the server
  – What happens if multiple clients share a file?
• Client assumes its cache is up to date…
  – Unless it receives a callback message from server
  – On file open, if client has received callback for that file, it must fetch a new copy
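
The callback mechanism can be pictured with the simplified in-memory model below. It is not the real AFS protocol: the class and method names are invented, whole files are plain byte strings, and callbacks are direct method calls instead of network messages. It shows the server tracking which clients hold cached copies and notifying them when another client stores a new version on close.

```python
class AFSServer:
    """Toy stateful server: remembers which clients cache each file."""

    def __init__(self):
        self.files = {}    # file name -> contents
        self.cachers = {}  # file name -> set of clients with a cached copy

    def fetch(self, client, name):
        self.cachers.setdefault(name, set()).add(client)
        return self.files[name]

    def store(self, client, name, data):
        self.files[name] = data
        # Notify every other client that its cached copy is now stale.
        for other in self.cachers.get(name, set()) - {client}:
            other.callback(name)
        self.cachers[name] = {client}


class AFSClient:
    """Toy client: serves opens from its cache unless a callback arrived."""

    def __init__(self, server):
        self.server = server
        self.cache = {}     # file name -> locally cached contents
        self.stale = set()  # files for which a callback was received

    def callback(self, name):
        self.stale.add(name)

    def open(self, name):
        if name not in self.cache or name in self.stale:
            self.cache[name] = self.server.fetch(self, name)
            self.stale.discard(name)
        return self.cache[name]

    def close(self, name, new_data=None):
        # Only modified files are written back to the server.
        if new_data is not None:
            self.cache[name] = new_data
            self.server.store(self, name, new_data)
```

In this model, a client that already has the file open keeps working on its old copy until it opens the file again, so updates become visible to others only after the writer closes the file.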

Summary
• NFS
  – Simple distributed file system protocol
  – Stateless (No open/close)
  – Has problems with cache consistency and locking
• AFS
  – More complex protocol
  – Stateful server
  – Session semantics
  – Consistency on close
