Distributed Servers Architecture for Networked Video Services

S. H. Gary Chan, Member, IEEE, and Fouad Tobagi, Fellow, IEEE

Contents
- Introduction
- System Architecture
- Caching schemes for video-on-demand services
- Analytical Model
- Results
- Conclusion

Introduction (1)
- In a VoD system, the central server has limited streaming capacity
- Distributed servers architecture:
  - Hierarchy of servers
  - Local servers cache the videos
  - Some servers are placed close to the user cluster
- Goal: determine which movies, and how much of each movie's video data, should be stored to minimize the total cost

Introduction (2)
- Three models of distributed servers architecture:
  - Uncooperative: unicast
  - Uncooperative: multicast
  - Cooperative: communicating servers
- The paper studies a number of caching schemes, all employing circular buffers and partial caching
- All requests arriving during the cache window are served from the local cache
- The authors claim that partial caching on temporary storage can lower the system cost by an order of magnitude

System Cost (1)
- The cost of storing a movie in a local server depends on how much storage is used and for how long
- Example: a 1-GB disk costs about $200
  - The disk is amortized over one year (365 days × 6 h of use per day): cost = $200/(365 × 6 h) ≈ $0.0913/h per GB
  - Streaming rate = 5 Mb/s, so one minute of video is 5 Mb/s × 60 s = 300 Mb = 0.0375 GB
  - Storing one minute of video costs ≈ $0.0913/h × 0.0375 ≈ $0.00342/h ≈ $5.71 × 10^-5/min

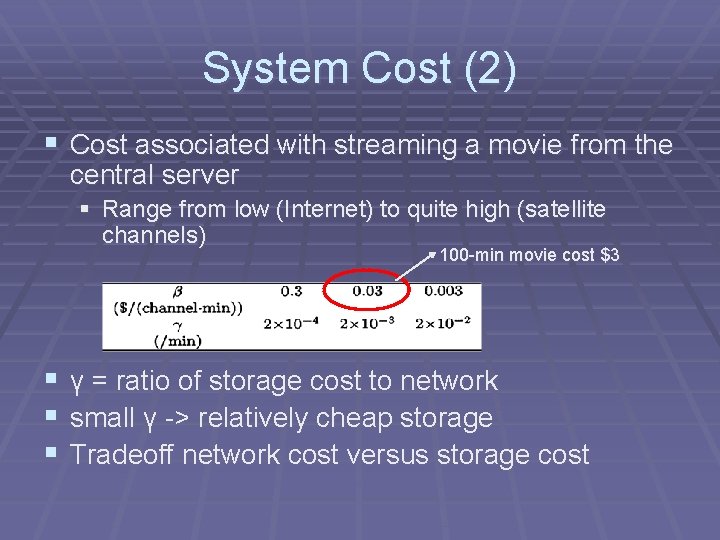
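The storage-cost arithmetic can be checked with a few lines of Python. This is a minimal sketch that assumes the 365 × 6 divisor means one year at 6 hours of use per day and uses decimal units (1 GB = 8000 Mb); the constant and function names are illustrative, not from the paper.

```python
# Storage cost arithmetic from the "System Cost (1)" example.
# Assumption: the 365 x 6 divisor means one year of use at 6 hours per day.
DISK_PRICE_USD = 200.0        # price of a 1-GB disk
DISK_SIZE_GB = 1.0
AMORTIZATION_HOURS = 365 * 6  # hours over which the disk price is spread
STREAM_RATE_MBPS = 5.0        # video streaming rate in megabits per second


def storage_cost_per_gb_hour() -> float:
    """Dollars per GB of disk per hour of use."""
    return DISK_PRICE_USD / DISK_SIZE_GB / AMORTIZATION_HOURS


def gb_per_video_minute(rate_mbps: float = STREAM_RATE_MBPS) -> float:
    """Size of one minute of video in GB (decimal units: 1 GB = 8000 Mb)."""
    return rate_mbps * 60 / 8000


def cost_per_video_minute_per_minute() -> float:
    """Dollars to keep one minute of video on disk for one minute."""
    per_hour = storage_cost_per_gb_hour() * gb_per_video_minute()
    return per_hour / 60  # convert $/h to $/min


if __name__ == "__main__":
    print(f"storage: {storage_cost_per_gb_hour():.4f} $/GB/h")
    print(f"one minute of video: {gb_per_video_minute():.4f} GB")
    print(f"cost: {cost_per_video_minute_per_minute():.2e} $/min")
```

The last step divides by 60 because the disk cost is quoted per hour while the result is per minute of storage time.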

System Cost (2)
- Cost of streaming a movie from the central server
  - Ranges from low (Internet) to quite high (satellite channels)
  - Example: streaming a 100-min movie costs $3
- γ = ratio of storage cost to network cost
  - Small γ → relatively cheap storage
- Tradeoff: network cost versus storage cost

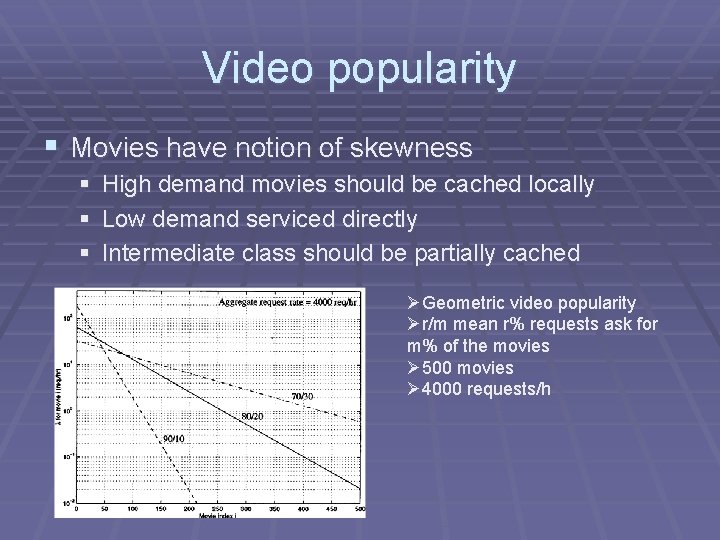
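The two cost examples can be combined into an estimate of γ. A rough sketch, assuming the $3 network cost is spread evenly over the 100-min movie ($0.03 per channel-minute) and reusing the $5.71 × 10^-5/min storage figure; the names here are hypothetical. The result, about 0.0019, is close to the 0.002/min value quoted with the results.

```python
# Ratio of storage cost to network cost (gamma) from the two slide examples.
STORAGE_COST = 5.71e-5     # $ to hold one minute of video for one minute
MOVIE_STREAM_COST = 3.0    # $ to stream one 100-min movie (assumed uniform over time)
MOVIE_LENGTH_MIN = 100.0


def network_cost_per_channel_minute() -> float:
    """Dollars per minute of one network channel from the central server."""
    return MOVIE_STREAM_COST / MOVIE_LENGTH_MIN


def gamma() -> float:
    """Storage-to-network cost ratio, per minute of video."""
    return STORAGE_COST / network_cost_per_channel_minute()


if __name__ == "__main__":
    print(f"network: {network_cost_per_channel_minute():.3f} $/min")
    print(f"gamma: {gamma():.4f} per min")
```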

Video popularity
- Movie popularity is skewed
  - High-demand movies should be cached locally
  - Low-demand movies should be served directly from the central server
  - Intermediate movies should be partially cached
- Geometric video popularity
  - r/m means that r% of the requests ask for (the most popular) m% of the movies
  - 500 movies
  - 4000 requests/h
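One way to turn an r/m skew into per-movie request rates is sketched below. It assumes a hypothetical parameterization in which the i-th most popular movie receives a share proportional to a^(i-1), and solves for a by bisection so that the top m% of the 500 movies draw r% of the 4000 requests/h; the paper's exact geometric parameterization may differ, and all function names are illustrative.

```python
from typing import List


def top_share(a: float, k: int, n: int) -> float:
    """Fraction of requests for the k most popular of n movies when the
    i-th movie's share is proportional to a**(i - 1), with 0 < a < 1."""
    return (1 - a**k) / (1 - a**n)


def geometric_ratio(r: float, m: float, n: int = 500) -> float:
    """Bisection for a such that the top m% of n movies draw r% of requests."""
    k = round(n * m / 100)
    target = r / 100
    if not (k / n < target < 1):
        raise ValueError("need m < r < 100 for a skewed popularity")
    lo, hi = 1e-12, 1 - 1e-12
    for _ in range(200):
        mid = (lo + hi) / 2
        # top_share decreases as a grows (popularity flattens out).
        if top_share(mid, k, n) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def arrival_rates(r: float, m: float, n: int = 500,
                  total_per_min: float = 4000 / 60) -> List[float]:
    """Per-movie request rates (req/min), most popular movie first."""
    a = geometric_ratio(r, m, n)
    norm = (1 - a**n) / (1 - a)  # sum of a**i for i in 0..n-1
    return [total_per_min * a**i / norm for i in range(n)]


if __name__ == "__main__":
    rates = arrival_rates(80, 20)
    print(f"most popular: {rates[0]:.3f} req/min, least: {rates[-1]:.2e}")
```

Rates produced this way feed directly into the per-movie caching decision: high-rate movies at the head of the list are candidates for full caching, the long tail for direct service.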

Caching Scheme (1)
- Unicast delivery
  - A new request for a video opens a network channel for Th minutes to stream the video from the central server
  - The local server caches W minutes of the stream in a circular buffer
  - All requests arriving within W minutes of the stream start form a group and are served from the buffer
  - A request arriving more than W minutes after the stream start opens a new stream

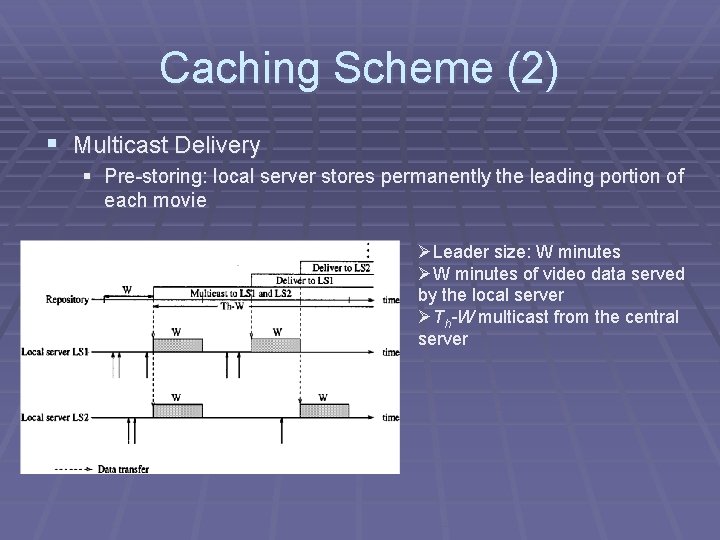
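The unicast grouping rule can be expressed as a short function over request arrival times. This is a minimal sketch with a hypothetical function name; it measures the window from the start of each group's stream, since a request arriving more than W minutes later would need data that the circular buffer has already overwritten.

```python
from typing import List


def unicast_groups(arrivals: List[float], w: float) -> List[List[float]]:
    """Group request times (minutes) under unicast delivery with a w-minute
    circular buffer. The first request of a group opens a Th-minute stream from
    the central server; a later request joins if it arrives within w minutes of
    that stream's start, otherwise it opens a new stream."""
    groups: List[List[float]] = []
    for t in sorted(arrivals):
        if groups and t - groups[-1][0] <= w:
            groups[-1].append(t)  # served from the local circular buffer
        else:
            groups.append([t])    # new channel from the central server
    return groups


if __name__ == "__main__":
    groups = unicast_groups([0, 3, 4.5, 7, 20], w=5)
    print(len(groups), "streams:", groups)
```

Here the request at t = 7 opens a second stream even though it is only 2.5 minutes after the previous request, because it is 7 minutes after the first stream started.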

Caching Scheme (2)
- Multicast delivery
- Pre-storing: the local server permanently stores the leading portion of each movie
  - Leader size: W minutes
  - The first W minutes of video are served by the local server
  - The remaining Th − W minutes are multicast from the central server

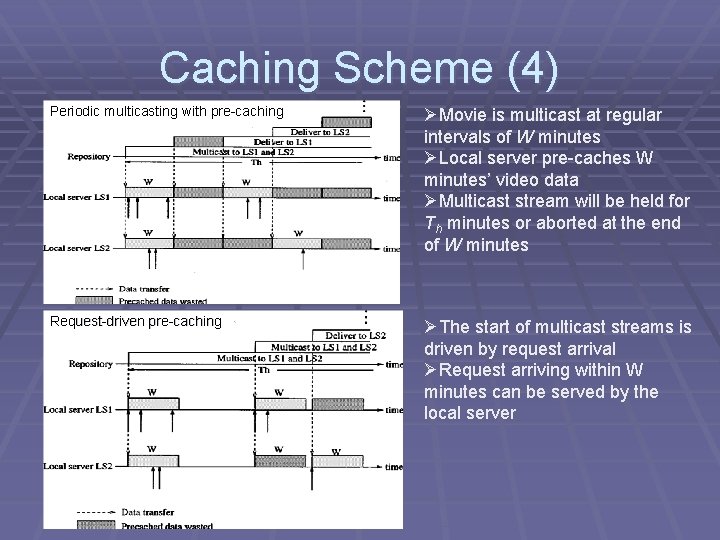

Caching Scheme (3)
- Multicast delivery
- Pre-caching: the local server decides whether or not to cache a multicast video
- Two schemes, depending on the multicast schedule:
  - Periodic multicasting with pre-caching
  - Request-driven pre-caching

Caching Scheme (4)
- Periodic multicasting with pre-caching
  - The movie is multicast at regular intervals of W minutes
  - The local server pre-caches W minutes of video data from each multicast
  - A multicast stream is held for Th minutes, or aborted at the end of W minutes if no request has arrived to use it
- Request-driven pre-caching
  - The start of multicast streams is driven by request arrivals
  - Requests arriving within W minutes of a stream start can be served by the local server

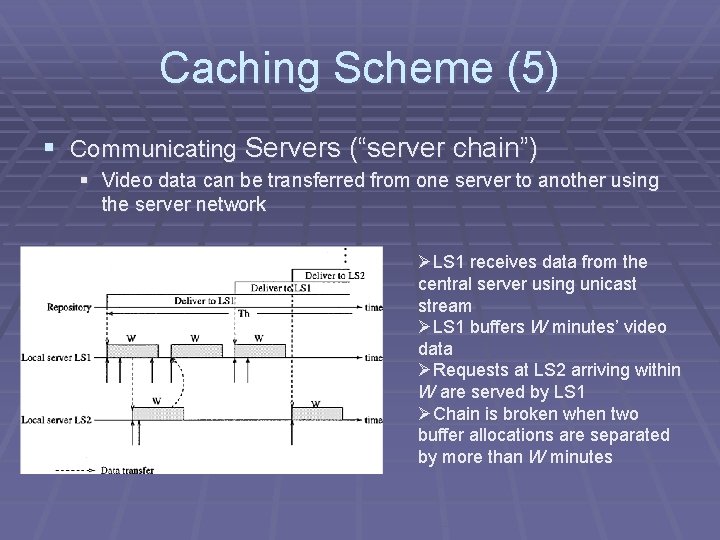
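The hold-or-abort rule of periodic multicasting can be simulated over a list of request times. A minimal sketch, assuming streams start at 0, W, 2W, ... and that a stream is kept for the full Th minutes when at least one request (at any local server) arrives during its first W minutes; the function name and the `horizon` parameter are hypothetical. Request-driven pre-caching is not modeled here.

```python
import math
from typing import List


def periodic_multicast_channel_minutes(arrivals: List[float], w: float,
                                       th: float, horizon: float) -> float:
    """Total channel-minutes used by periodic multicasting with pre-caching.

    Streams start at 0, w, 2w, ... before `horizon`. Assumption: a stream is
    kept for th minutes if some request arrives during its first w minutes
    (while local servers pre-cache it), and is aborted after w minutes otherwise.
    """
    total = 0.0
    for j in range(math.ceil(horizon / w)):
        start = j * w
        used = any(start <= t < start + w for t in arrivals)
        total += th if used else w
    return total


if __name__ == "__main__":
    # Streams at 0 and 10 are used (requests at 1 and 12); the one at 20 is aborted.
    print(periodic_multicast_channel_minutes([1, 12], w=10, th=90, horizon=30))
```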

Caching Scheme (5)
- Communicating servers ("server chain")
  - Video data can be transferred from one server to another over the server network
  - LS 1 receives the data from the central server in a unicast stream
  - LS 1 buffers W minutes of video data
  - Requests at LS 2 arriving within W minutes are served by LS 1
  - The chain is broken when two successive buffer allocations are separated by more than W minutes

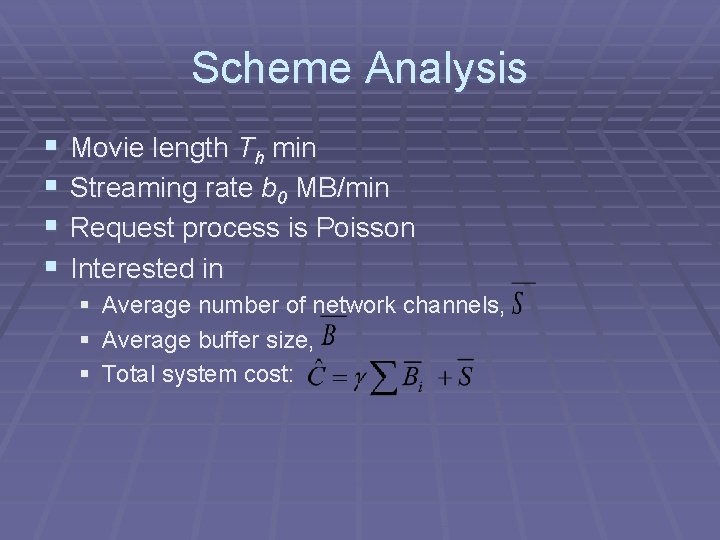
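Chain formation differs from unicast grouping in one detail: the W-minute test is applied to the gap from the previous buffer allocation rather than from the stream start. A minimal sketch with a hypothetical function name, treating each request as one buffer allocation:

```python
from typing import List


def server_chains(arrivals: List[float], w: float) -> List[List[float]]:
    """Split request times (minutes) into server chains. A request extends the
    current chain if it arrives within w minutes of the previous request (the
    previous buffer allocation); otherwise the chain breaks and a new unicast
    stream is opened from the central server."""
    chains: List[List[float]] = []
    for t in sorted(arrivals):
        if chains and t - chains[-1][-1] <= w:
            chains[-1].append(t)  # data forwarded from the previous server's buffer
        else:
            chains.append([t])    # chain broken: new central-server stream
    return chains


if __name__ == "__main__":
    print(server_chains([0, 3, 4.5, 7, 20], w=5))
```

With the same arrivals used for unicast grouping, chaining needs two central-server streams instead of three, since the request at t = 7 is within 5 minutes of the one at t = 4.5.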

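Scheme Analysis
- Movie length: Th min
- Streaming rate: b0 MB/min
- The request process is Poisson with rate λ
- Quantities of interest:
  - Average number of network channels, S
  - Average buffer size, B (in minutes of video)
  - Total system cost, C = S + γB (normalized by the network cost)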

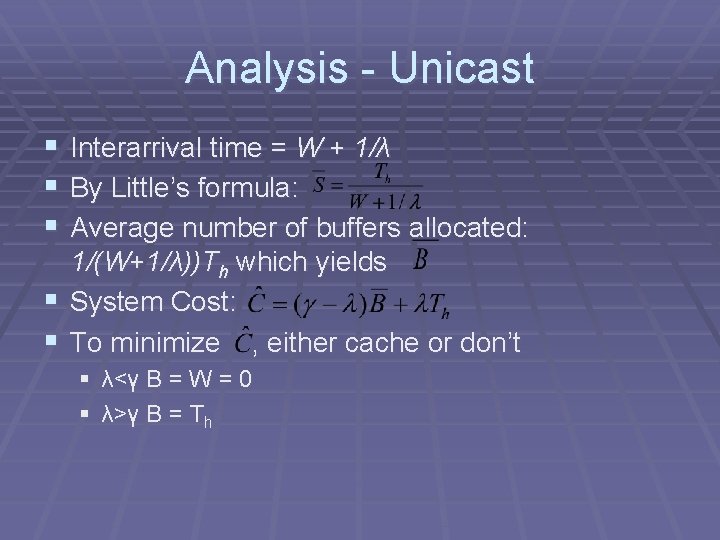

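Analysis - Unicast
- Mean interval between successive stream starts: W + 1/λ (a W-minute window, then an average wait of 1/λ for the next request)
- By Little's formula, with each stream holding a channel for Th minutes: S = Th/(W + 1/λ)
- Average number of buffers allocated: Th/(W + 1/λ), each holding W minutes of video, which yields B = W·Th/(W + 1/λ)
- System cost: C = S + γB = Th(1 + γW)/(W + 1/λ), which moves monotonically from λTh at W = 0 toward γTh as W grows
- To minimize C, either cache the whole movie or none of it:
  - λ < γ → B = W = 0
  - λ > γ → B = Th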

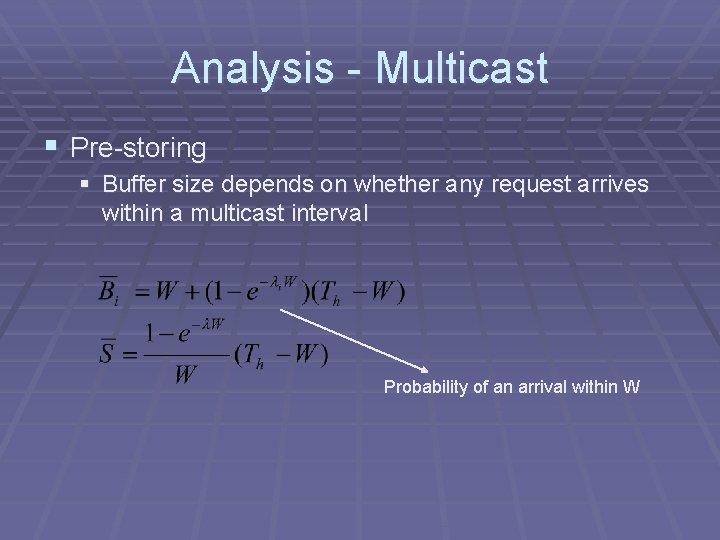
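The all-or-nothing result can be checked numerically. A minimal sketch, assuming S = Th/(W + 1/λ), B = W·Th/(W + 1/λ) and the normalized cost C = S + γB (our reading of the slide's formulas); function names are hypothetical.

```python
from typing import Tuple


def unicast_metrics(lam: float, w: float, th: float,
                    gamma: float) -> Tuple[float, float, float]:
    """Return (S, B, C) for unicast caching with a w-minute window.

    Streams start on average every w + 1/lam minutes; each keeps a channel
    for th minutes and a w-minute buffer, so by Little's formula
    S = th / (w + 1/lam) and B = w * th / (w + 1/lam).
    Assumed normalized cost: C = S + gamma * B.
    """
    cycle = w + 1.0 / lam
    s = th / cycle
    b = w * th / cycle
    return s, b, s + gamma * b


def should_cache_whole_movie(lam: float, gamma: float) -> bool:
    """C moves from lam*th (w = 0) toward gamma*th (whole movie cached),
    so caching pays only when lam > gamma."""
    return lam > gamma


if __name__ == "__main__":
    th, gamma = 90.0, 0.002
    for lam in (0.001, 0.01):
        costs = [unicast_metrics(lam, w, th, gamma)[2] for w in (0, 10, 90)]
        print(lam, [round(c, 4) for c in costs], should_cache_whole_movie(lam, gamma))
```

Eliminating W gives S = λ(Th − B), which is why the S-versus-B tradeoff for a given λ is a straight line with slope −λ.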

Analysis - Multicast
- Pre-storing
  - The buffer size depends on whether any request arrives within a multicast interval
  - Probability of an arrival within W

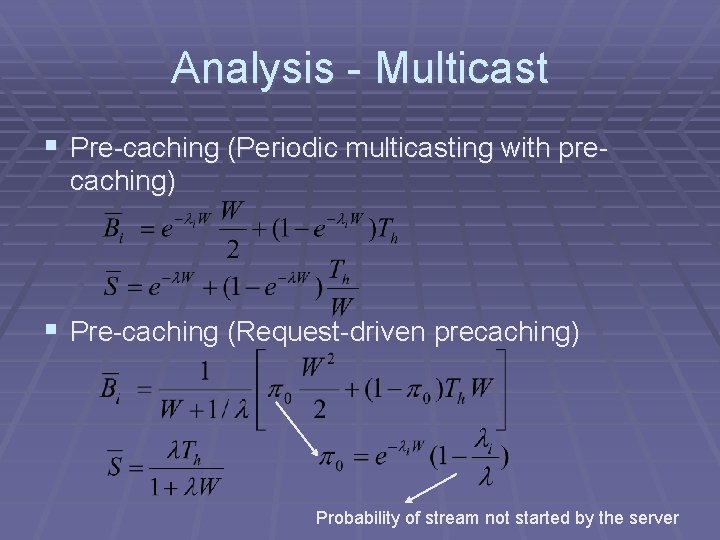
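For Poisson requests, the probability of at least one arrival in a W-minute window is 1 − e^(−λW). The sketch below computes it for one local server and, assuming independent Poisson streams of rate λ at each of Ns servers, for the superposed stream; function names are hypothetical.

```python
import math


def prob_arrival_within(lam: float, w: float) -> float:
    """Probability that a Poisson request stream of rate lam (req/min)
    produces at least one request in a window of w minutes."""
    return 1.0 - math.exp(-lam * w)


def prob_any_server(lam: float, w: float, ns: int) -> float:
    """Same, when ns local servers each see an independent Poisson stream of
    rate lam (their superposition is Poisson with rate ns * lam)."""
    return prob_arrival_within(ns * lam, w)


if __name__ == "__main__":
    print(f"{prob_arrival_within(0.05, 10):.3f}")   # one server
    print(f"{prob_any_server(0.05, 10, 20):.3f}")   # any of 20 servers
```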

Analysis - Multicast
- Pre-caching (periodic multicasting with pre-caching)
- Pre-caching (request-driven pre-caching)
  - Probability that a stream is not started by the server

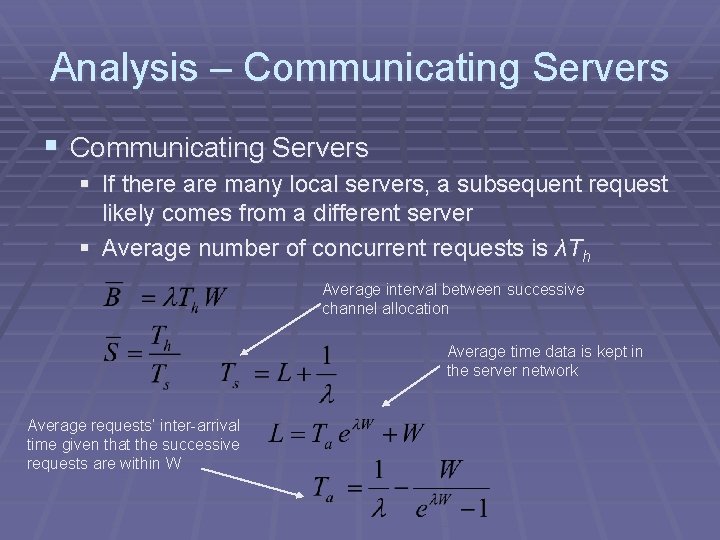
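Under the periodic-multicasting rule (streams start every W minutes, are held for Th minutes if used and aborted after W minutes otherwise), Little's formula gives an average channel count. The sketch below is our own derivation under that rule with aggregate Poisson rate λ across all local servers, not necessarily the paper's expression; the function name is hypothetical.

```python
import math


def periodic_multicast_channels(lam_total: float, w: float, th: float) -> float:
    """Average number of busy multicast channels (Little's formula).

    Streams start every w minutes; each lasts th minutes if at least one
    request (aggregate Poisson rate lam_total) arrives in its first w
    minutes, and w minutes otherwise.
    """
    p_used = 1.0 - math.exp(-lam_total * w)
    mean_duration = w + (th - w) * p_used
    return mean_duration / w  # start rate (1/w) times mean holding time


if __name__ == "__main__":
    for lam in (0.0, 0.05, 1.0):
        print(lam, round(periodic_multicast_channels(lam, 10, 90), 3))
```

The two limits are intuitive: with no requests one aborted stream is always in flight (1 channel), and with heavy load every stream runs to completion (Th/W channels).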

Analysis – Communicating Servers
- If there are many local servers, a subsequent request most likely comes from a different server
- Average number of concurrent requests: λTh
- Derived quantities:
  - Average interval between successive channel allocations
  - Average time data is kept in the server network
  - Average request interarrival time, given that successive requests are within W

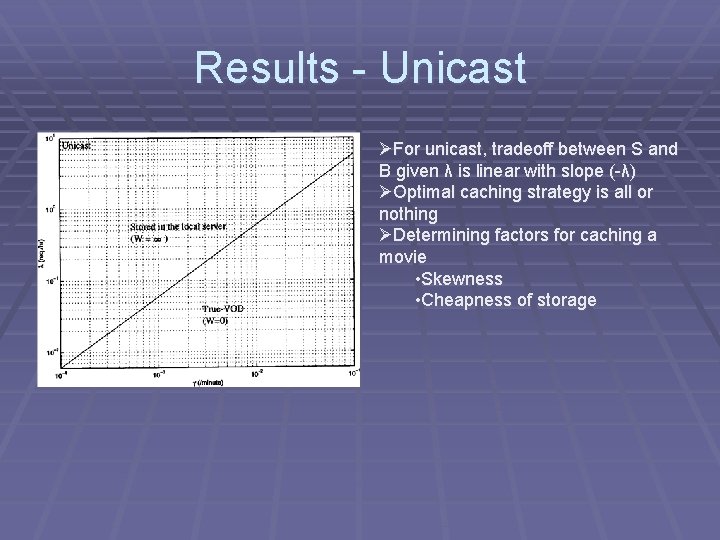
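Several of these quantities follow from treating request gaps as exponential. A minimal sketch, assuming aggregate Poisson requests of rate λ across all servers; these are standard exponential-distribution results, not necessarily the paper's exact formulas, and the function and key names are hypothetical.

```python
import math


def chain_metrics(lam: float, w: float, th: float) -> dict:
    """Server-chain quantities for aggregate Poisson requests of rate lam.

    A chain breaks when the gap to the next request exceeds w, which happens
    with probability exp(-lam * w); chains therefore hold exp(lam * w)
    requests on average and start (allocating a central-server channel)
    every exp(lam * w) / lam minutes.
    """
    p_break = math.exp(-lam * w)
    alloc_interval = 1.0 / (lam * p_break)
    if w > 0:
        # Mean exponential gap conditioned on being at most w minutes.
        cond_gap = 1.0 / lam - w * p_break / (1.0 - p_break)
    else:
        cond_gap = 0.0
    return {
        "concurrent_requests": lam * th,      # Little's formula
        "alloc_interval": alloc_interval,
        "channels": th / alloc_interval,      # = lam * th * exp(-lam * w)
        "cond_gap": cond_gap,
    }


if __name__ == "__main__":
    print(chain_metrics(lam=0.5, w=2.0, th=90.0))
```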

Results - Unicast
- For unicast, the tradeoff between S and B for a given λ is linear with slope −λ, since S = λ(Th − B)
- The optimal caching strategy is all or nothing
- Determining factors for caching a movie:
  - Skewness of video popularity
  - Cheapness of storage

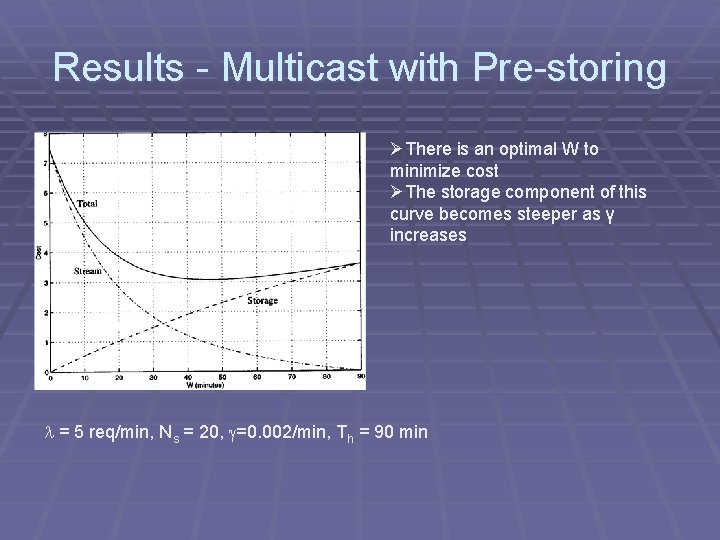

Results - Multicast with Pre-storing
- There is an optimal W that minimizes the cost
- The storage component of the cost curve becomes steeper as γ increases
- Parameters: λ = 5 req/min, Ns = 20, γ = 0.002/min, Th = 90 min

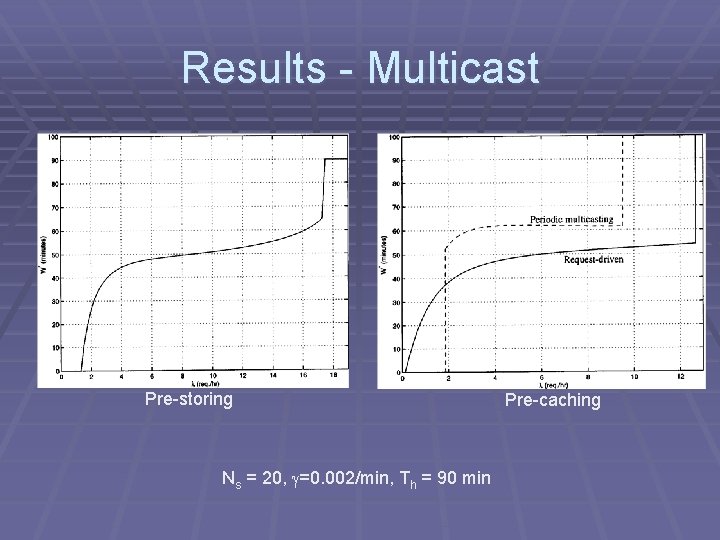

Results - Multicast: Pre-storing vs. Pre-caching
- Parameters: Ns = 20, γ = 0.002/min, Th = 90 min

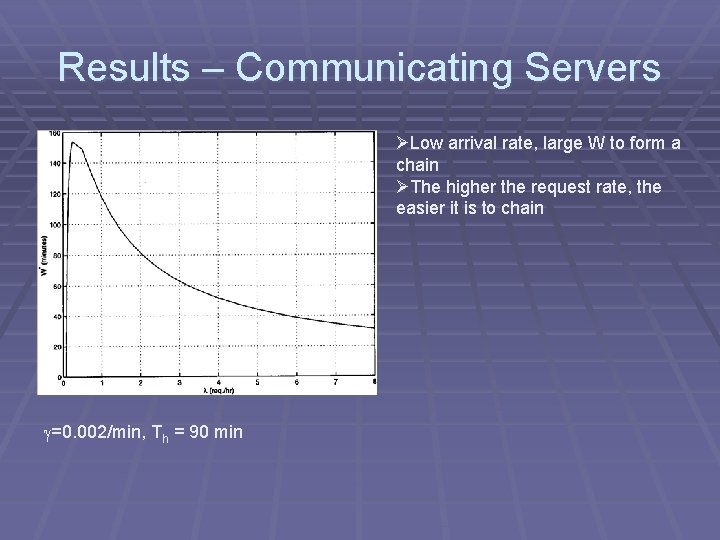

Results – Communicating Servers
- At low arrival rates, a large W is needed to form a chain
- The higher the request rate, the easier it is to chain
- Parameters: γ = 0.002/min, Th = 90 min

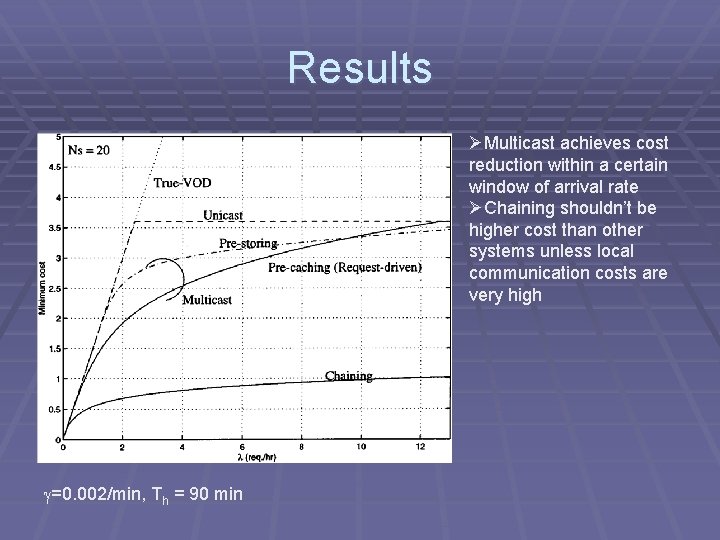

Results
- Multicast achieves a cost reduction within a certain range of arrival rates
- Chaining should not cost more than the other systems unless local communication costs are very high
- Parameters: γ = 0.002/min, Th = 90 min

System Using Batching and Multicast
- Requests arriving within a period of time are grouped together (batched)
- Batching allows fewer multicast streams to be used, lowering the associated cost
- Users are assumed to tolerate a maximum delay Dmax

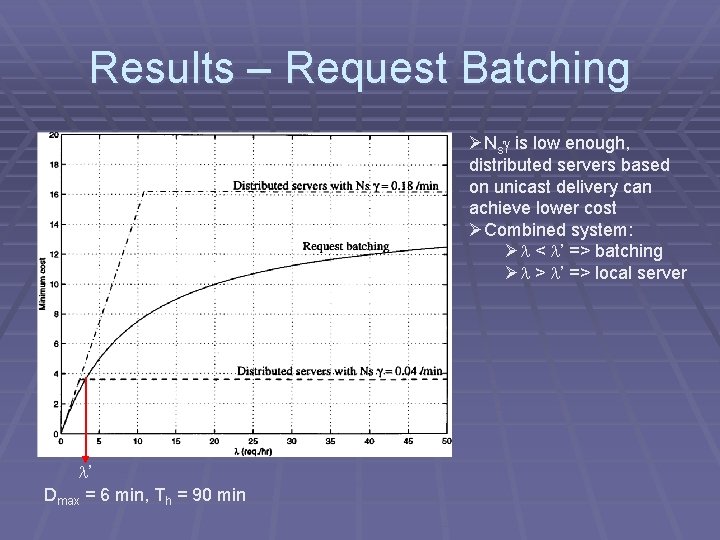
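A batching rule that respects the delay bound Dmax can be sketched as follows. This assumes a hypothetical policy in which the first waiting request starts a timer and the multicast begins Dmax minutes later, serving every request that arrived in the meantime; the paper's batching policy may differ, and the function name is illustrative.

```python
from typing import List, Tuple


def batch_requests(arrivals: List[float],
                   d_max: float) -> List[Tuple[float, List[float]]]:
    """Hypothetical batching policy: the first waiting request starts a timer;
    a multicast stream starts d_max minutes later and serves every request that
    arrived by then, so no user waits longer than d_max.
    Returns (stream start time, batched request times) pairs."""
    batches: List[Tuple[float, List[float]]] = []
    for t in sorted(arrivals):
        if batches and t <= batches[-1][0]:
            batches[-1][1].append(t)         # joins the pending batch
        else:
            batches.append((t + d_max, [t]))  # opens a new batch and timer
    return batches


if __name__ == "__main__":
    for start, reqs in batch_requests([0, 2, 6, 7, 20], d_max=6):
        print(f"stream at {start}: {reqs}")
```

Each batch costs one multicast stream of Th minutes, so fewer, larger batches mean fewer channels at the price of user waiting time.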

Results – Request Batching
- When Ns is low enough, distributed servers based on unicast delivery can achieve a lower cost
- Combined system:
  - λ < λ′ → batching
  - λ > λ′ → local server
- Parameters: Dmax = 6 min, Th = 90 min

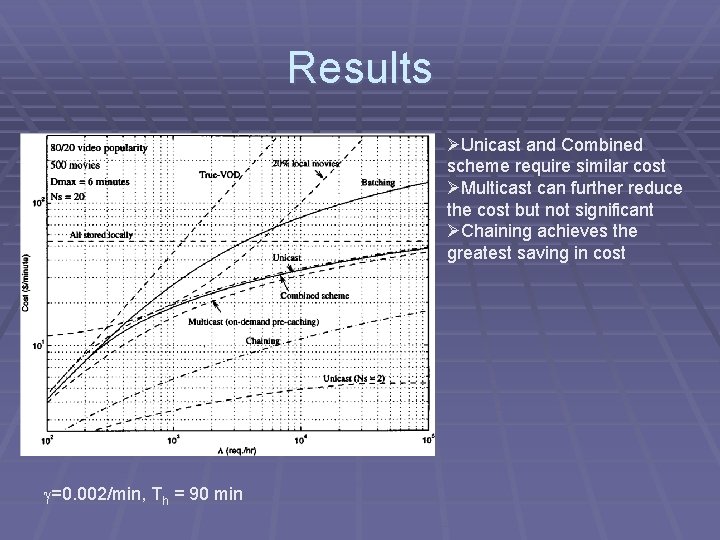

Results
- Unicast and the combined scheme require similar costs
- Multicast can further reduce the cost, but not significantly
- Chaining achieves the greatest cost savings
- Parameters: γ = 0.002/min, Th = 90 min

Conclusion
- A distributed servers architecture to provide video-on-demand service
- Different local caching schemes for video streaming
- Given a cost function, determine which videos, and how much of each, should be cached
- The more skewed the video popularity, the greater the savings a distributed servers architecture can achieve
