Distributed Representations of Words and Phrases and their Compositionality

“Distributed Representations of Words and Phrases and their Compositionality”, part 2. 2017. 9. 12. 최현영 (Soongsil University)

Contents
• Skip-gram model for word2vec
• Hierarchical softmax
• Negative sampling
• Subsampling

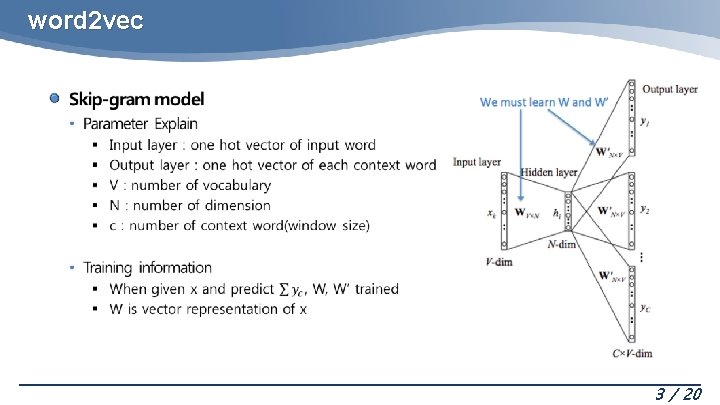

word2vec

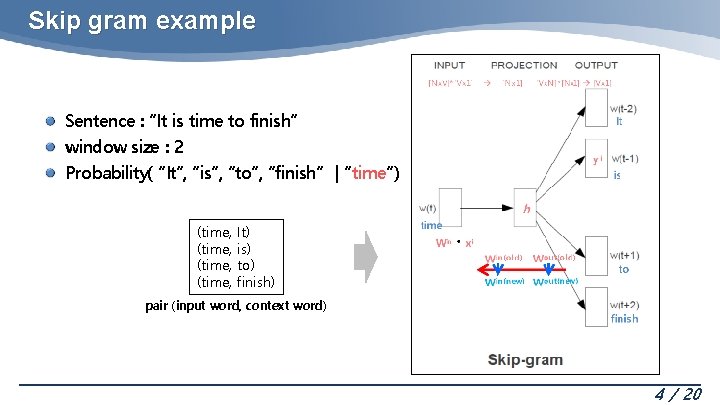

Skip-gram example
• Sentence: “It is time to finish”
• Window size: 2
• Probability(“It”, “is”, “to”, “finish” | “time”)
• Pairs (input word, context word): (time, It), (time, is), (time, to), (time, finish)
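The pair construction in this example can be sketched in a few lines of Python. The function name `skipgram_pairs` and the whitespace tokenization are assumptions made for illustration; they are not from the slides.

```python
def skipgram_pairs(tokens, window):
    """Return (input word, context word) pairs for every position in tokens."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)                # left edge of the window
        hi = min(len(tokens), i + window + 1)  # right edge (exclusive)
        for j in range(lo, hi):
            if j != i:                         # skip the input word itself
                pairs.append((center, tokens[j]))
    return pairs


sentence = "It is time to finish".split()

# Keep only the pairs whose input word is "time", as in the slide.
time_pairs = [p for p in skipgram_pairs(sentence, window=2) if p[0] == "time"]
print(time_pairs)
# [('time', 'It'), ('time', 'is'), ('time', 'to'), ('time', 'finish')]
```

With `window=1` the same function yields only (time, is) and (time, to) for the input word “time”.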

word2vec – Cost Function
Compute the probability with the softmax function.
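As a rough illustration, the softmax probability of a context word given an input word is the exponentiated dot product of their vectors, normalized over the whole vocabulary. The sketch below uses made-up 2-dimensional vectors; all names and values are hypothetical.

```python
import math


def softmax_prob(input_vec, output_vecs, target):
    """p(target | input) under a full softmax over all output vectors."""
    scores = {
        w: sum(a * b for a, b in zip(vec, input_vec))
        for w, vec in output_vecs.items()
    }
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - m) for w, s in scores.items()}
    return exps[target] / sum(exps.values())


# Hypothetical vectors, chosen only for illustration.
v_time = [0.5, -0.2]
output_vecs = {
    "It": [0.1, 0.3],
    "is": [0.4, 0.0],
    "to": [0.2, -0.5],
    "finish": [-0.3, 0.1],
}

probs = {w: softmax_prob(v_time, output_vecs, w) for w in output_vecs}
print(probs)
```

The denominator sums over every word in the vocabulary, so the cost of one prediction grows linearly with vocabulary size. That cost is what hierarchical softmax and negative sampling are meant to reduce.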

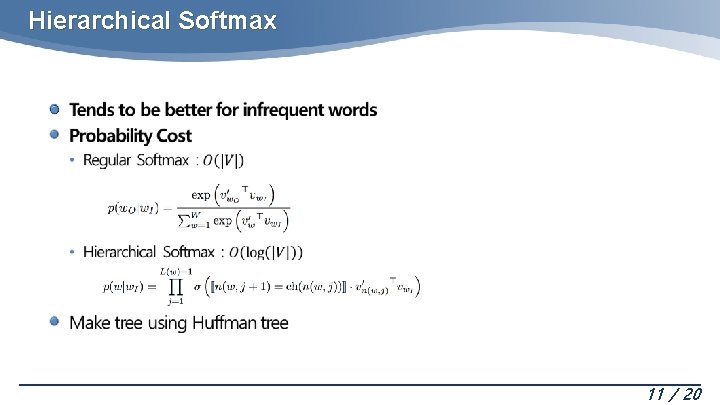

Optimizing Computational Efficiency
• Hierarchical softmax
• Negative sampling
• Subsampling

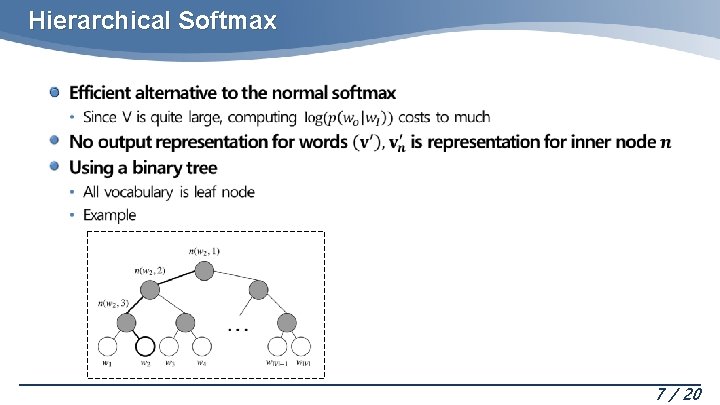

Hierarchical Softmax

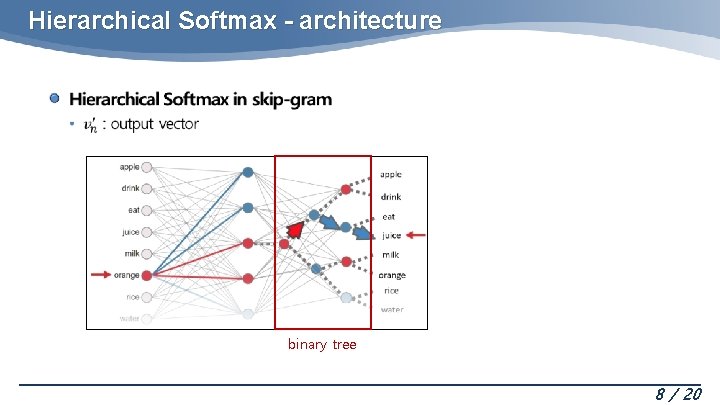

Hierarchical Softmax – Architecture: binary tree

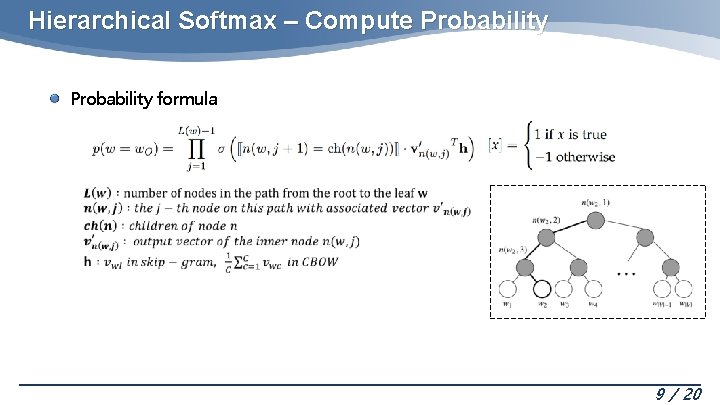

Hierarchical Softmax – Probability Formula
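For reference, the paper named in the title defines the hierarchical softmax probability as a product of sigmoids along the path from the root to the word. The notation below is the paper's and may differ from the slide's.

$$
p(w \mid w_I) = \prod_{j=1}^{L(w)-1} \sigma\!\left( [\![\, n(w, j+1) = \mathrm{ch}(n(w, j)) \,]\!] \cdot {v'_{n(w,j)}}^{\top} v_{w_I} \right)
$$

Here $n(w, j)$ is the $j$-th node on the path from the root to $w$, $L(w)$ is the length of that path, $\mathrm{ch}(n)$ is an arbitrary fixed child of $n$, $[\![x]\!]$ is $1$ if $x$ is true and $-1$ otherwise, and $\sigma(x) = 1 / (1 + e^{-x})$.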

Hierarchical Softmax – Example of Probability
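A small numeric sketch can make the path probability concrete. The tree shape, the inner-node vectors, and the input vector below are all assumed for illustration. Each branch decision is a sigmoid, with a sign of +1 for the left child and -1 for the right child, so the leaf probabilities sum to 1.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def path_probability(path, node_vecs, input_vec):
    """Multiply the branch probabilities along a root-to-leaf path.

    path is a list of (inner node name, direction) items, where direction
    is +1 for the left child and -1 for the right child.
    """
    p = 1.0
    for node, direction in path:
        p *= sigmoid(direction * dot(node_vecs[node], input_vec))
    return p


# Hypothetical inner-node vectors and input vector.
node_vecs = {"root": [0.3, -0.1], "n1": [-0.2, 0.4], "n2": [0.5, 0.2]}
v_input = [1.0, 2.0]

# Assumed tree: root -> (n1 | "is"), n1 -> (n2 | "he"), n2 -> ("dog" | "cat")
paths = {
    "is": [("root", -1)],
    "he": [("root", +1), ("n1", -1)],
    "dog": [("root", +1), ("n1", +1), ("n2", +1)],
    "cat": [("root", +1), ("n1", +1), ("n2", -1)],
}

probs = {w: path_probability(p, node_vecs, v_input) for w, p in paths.items()}
print(probs)
print(sum(probs.values()))  # the leaf probabilities sum to 1 (up to rounding)
```

Only the inner nodes on one path are evaluated per word, so the cost is proportional to the path length rather than to the vocabulary size.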

Hierarchical Softmax

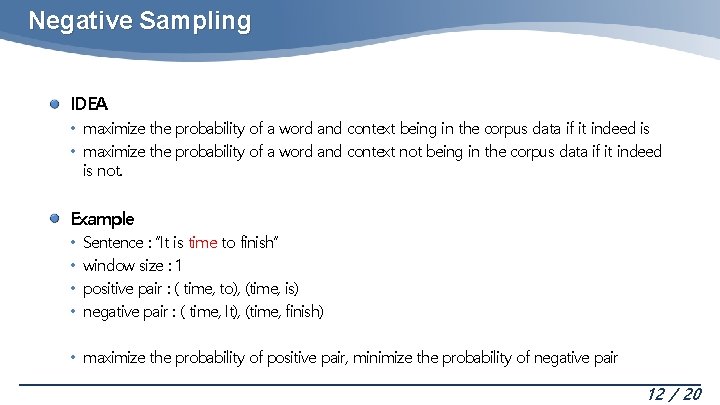

Negative Sampling
Idea
• Maximize the probability that a (word, context) pair is in the corpus data if it indeed is.
• Maximize the probability that a (word, context) pair is not in the corpus data if it indeed is not.
Example
• Sentence: “It is time to finish”, window size: 1
• Positive pairs: (time, to), (time, is)
• Negative pairs: (time, It), (time, finish)
• Maximize the probability of the positive pairs and minimize the probability of the negative pairs.

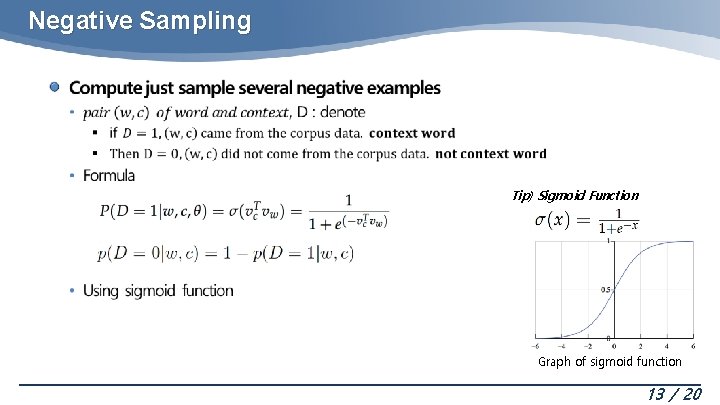

Negative Sampling – Tip: Sigmoid Function
Graph of the sigmoid function
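The sigmoid maps any real score to a value between 0 and 1, which is why it can be read as the probability that a pair is a real (positive) pair. A minimal sketch, with the sample points chosen arbitrarily:

```python
import math


def sigmoid(x):
    """Logistic sigmoid, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


for x in (-5, -1, 0, 1, 5):
    print(x, round(sigmoid(x), 4))
```

Two properties matter for negative sampling: `sigmoid(0)` is 0.5, and `sigmoid(-x)` equals `1 - sigmoid(x)`, so a negated score gives the probability of the opposite outcome.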

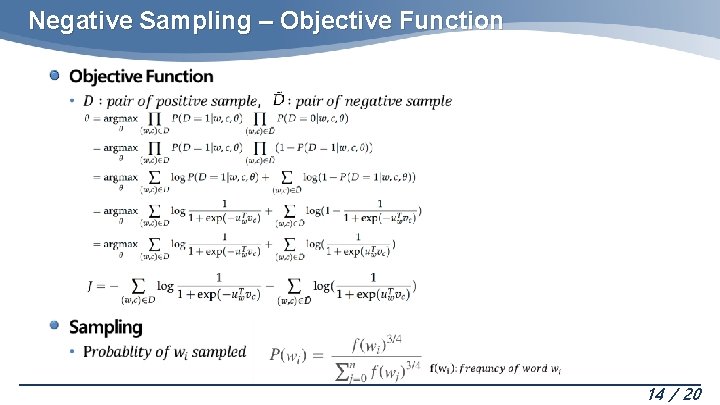

Negative Sampling – Objective Function
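In the paper named in the title, the negative sampling objective for one training pair is the log-sigmoid of the positive pair's score plus the log-sigmoid of the negated score of each sampled negative word. The sketch below evaluates that quantity for made-up vectors. The function names and values are hypothetical, and no gradient step is shown.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def neg_sampling_objective(v_in, v_pos, v_negs):
    """log sigma(v_pos . v_in) + sum over negatives of log sigma(-v_neg . v_in)."""
    total = log_sigmoid(dot(v_pos, v_in))
    for v_neg in v_negs:
        total += log_sigmoid(-dot(v_neg, v_in))
    return total


# Hypothetical vectors: the positive word points the same way as the input,
# the negative words point away from it.
v_in = [1.0, 0.5]
v_pos = [0.8, 0.4]
v_negs = [[-0.6, -0.2], [-0.1, -0.9]]

good = neg_sampling_objective(v_in, v_pos, v_negs)
# Swap the roles of the positive word and the first negative word.
bad = neg_sampling_objective(v_in, v_negs[0], [v_pos, v_negs[1]])
print(good, bad)
```

The objective is always at most 0 and is maximized during training. The well-separated assignment (`good`) scores higher than the swapped one (`bad`).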

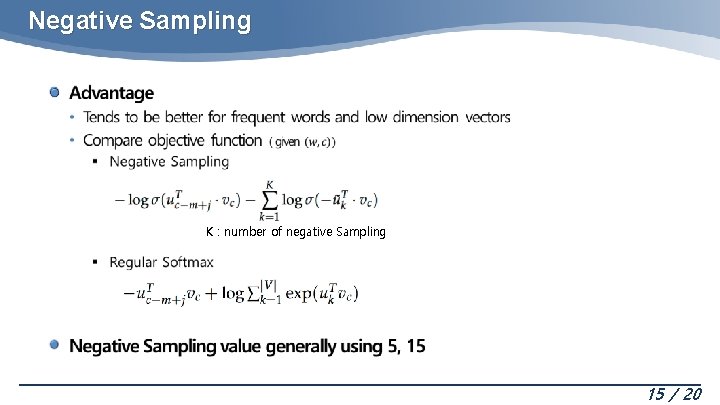

Negative Sampling
K: number of negative samples
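The paper named in the title draws the K negative words from the unigram distribution raised to the 3/4 power, and reports that K of 5 to 20 works for small training sets while 2 to 5 can be enough for large ones. The sketch below shows one way to draw such samples. The word counts are toy values, and excluding the positive word from the draw is a simplifying assumption.

```python
import random


def noise_distribution(counts, power=0.75):
    """Unigram counts raised to `power`, normalized to sum to 1."""
    weights = {w: c ** power for w, c in counts.items()}
    z = sum(weights.values())
    return {w: x / z for w, x in weights.items()}


def draw_negatives(counts, k, exclude, rng):
    """Draw k negative words, skipping the words in `exclude` (an assumption)."""
    dist = noise_distribution(counts)
    words = [w for w in dist if w not in exclude]
    weights = [dist[w] for w in words]
    return rng.choices(words, weights=weights, k=k)


# Toy counts for illustration.
counts = {"he": 3, "dog": 1, "is": 5, "cat": 2}
dist = noise_distribution(counts)
print(dist)

rng = random.Random(0)  # fixed seed so the draw is repeatable
negatives = draw_negatives(counts, k=5, exclude={"is"}, rng=rng)
print(negatives)
```

Raising counts to a power below 1 flattens the distribution: frequent words are sampled less often than their raw frequency suggests, and rare words more often.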

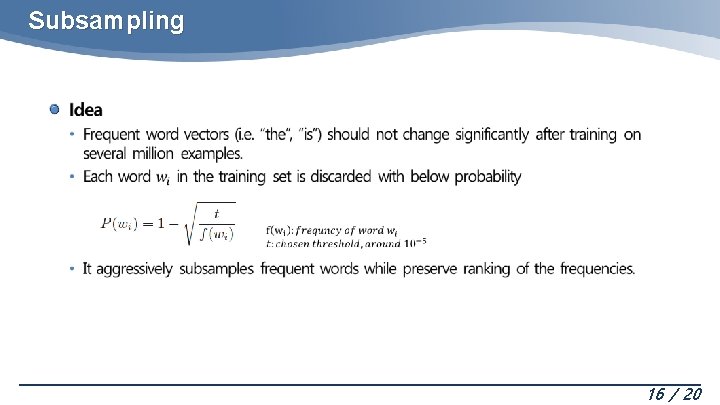

Subsampling
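In the paper named in the title, each occurrence of a word with relative frequency f is discarded with probability 1 - sqrt(t / f), where t is a threshold of around 1e-5. A minimal sketch follows. The function names, the toy corpus, and its frequencies are hypothetical.

```python
import math
import random


def discard_probability(freq, t=1e-5):
    """P(discard) = 1 - sqrt(t / freq), clipped at 0 for words with freq <= t."""
    return max(0.0, 1.0 - math.sqrt(t / freq))


def subsample(tokens, rel_freq, t, rng):
    """Keep each token with probability 1 - discard_probability."""
    return [
        w for w in tokens
        if rng.random() >= discard_probability(rel_freq[w], t)
    ]


# Hypothetical relative frequencies: one very frequent and one very rare word.
rel_freq = {"the": 0.05, "aardvark": 1e-6}
print(discard_probability(rel_freq["the"]))       # close to 1: mostly dropped
print(discard_probability(rel_freq["aardvark"]))  # 0.0: always kept

tokens = ["the"] * 1000 + ["aardvark"]
kept = subsample(tokens, rel_freq, t=1e-5, rng=random.Random(0))
print(len(kept))
```

Frequent words lose most of their occurrences while words rarer than the threshold are never dropped, which shortens training and gives rare words relatively more weight.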

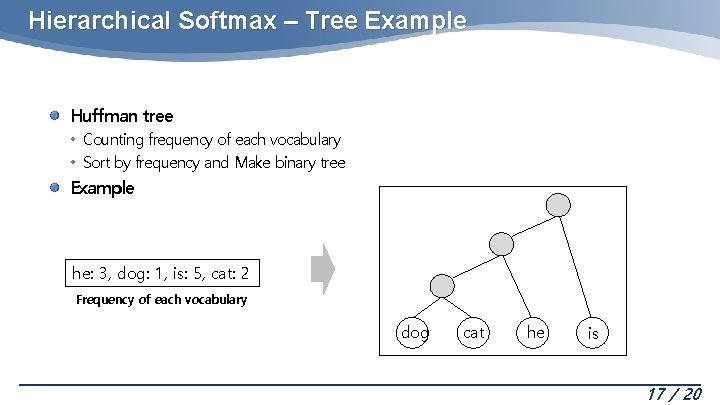

Hierarchical Softmax – Tree Example
Huffman tree
• Count the frequency of each vocabulary word.
• Sort by frequency and build a binary tree.
Example
• Frequencies: he: 3, dog: 1, is: 5, cat: 2
• Sorted by frequency: dog, cat, he, is
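The two steps above can be sketched with a priority queue: repeatedly merge the two least frequent nodes until one tree remains. The function name `huffman_codes` and the 0/1 labelling of left and right branches are assumptions for illustration.

```python
import heapq
from itertools import count


def huffman_codes(freqs):
    """Build a Huffman tree from word frequencies and return word -> bit string."""
    tiebreak = count()  # unique counter so equal frequencies never compare nodes
    heap = [(f, next(tiebreak), w) for w, f in freqs.items()]
    heapq.heapify(heap)

    # Merge the two least frequent nodes until a single root is left.
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (f1 + f2, next(tiebreak), (left, right)))

    codes = {}

    def walk(node, prefix):
        if isinstance(node, tuple):      # inner node: (left, right)
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:                            # leaf: a vocabulary word
            codes[node] = prefix or "0"

    walk(heap[0][2], "")
    return codes


codes = huffman_codes({"he": 3, "dog": 1, "is": 5, "cat": 2})
print(codes)
# {'is': '0', 'he': '10', 'dog': '110', 'cat': '111'}
```

The most frequent word (“is”) gets the shortest path and the rarest words (“dog”, “cat”) the longest, so frequent words need the fewest sigmoid evaluations in hierarchical softmax.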
