Distributed Processing with MPI International Summer School 2015

Tomsk Polytechnic University, Assistant Professor Dr. Sergey Axyonov

Overview
• Introduction & basic functions
• Point-to-point communication
• Blocking and non-blocking communication
• Collective communication

MPI Intro I
• MPI is a language-independent communications protocol used to program parallel computers.
• It is the de facto standard for communication among processes that model a parallel program running on a distributed-memory system.
• MPI implementations consist of a specific set of routines directly callable from C, C++, Fortran, and any language able to interface with such libraries.

MPI Intro II: Basic functions
• MPI_Init
• MPI_Finalize
• MPI_Comm_size
• MPI_Comm_rank
• MPI_Send
• MPI_Recv

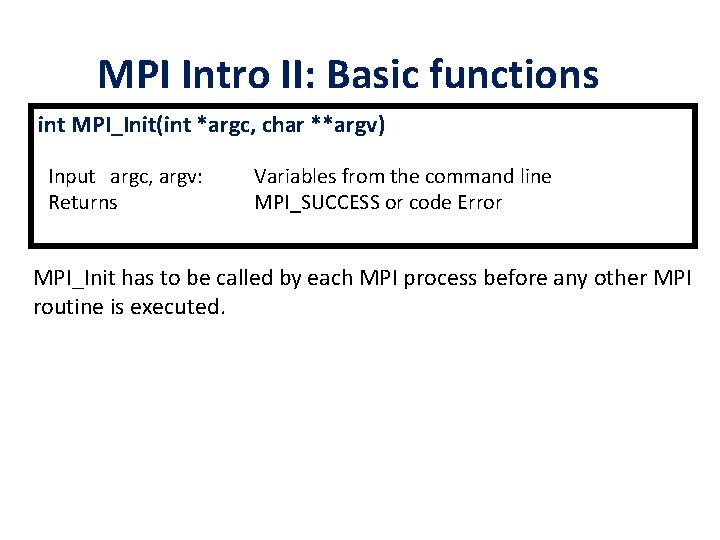

MPI Intro II: Basic functions
int MPI_Init(int *argc, char ***argv)
Input: argc, argv: variables from the command line
Returns: MPI_SUCCESS or an error code
MPI_Init has to be called by each MPI process before any other MPI routine is executed.

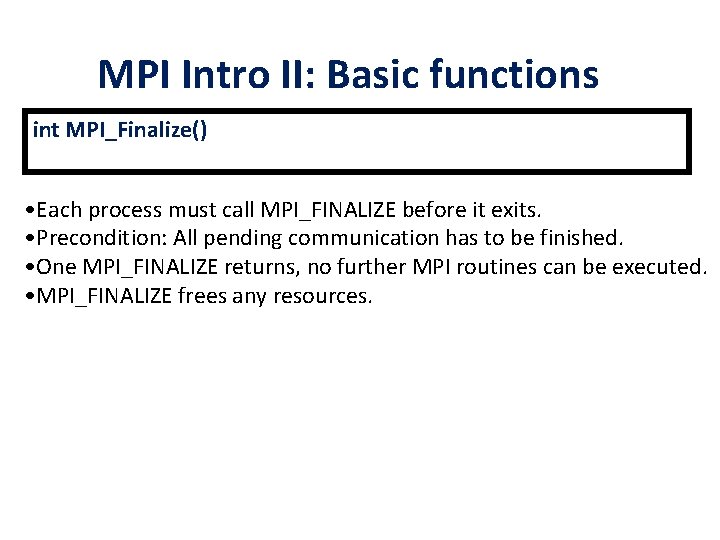

MPI Intro II: Basic functions
int MPI_Finalize(void)
• Each process must call MPI_Finalize before it exits.
• Precondition: all pending communication has to be finished.
• Once MPI_Finalize returns, no further MPI routines can be executed.
• MPI_Finalize frees the resources held by the MPI library.

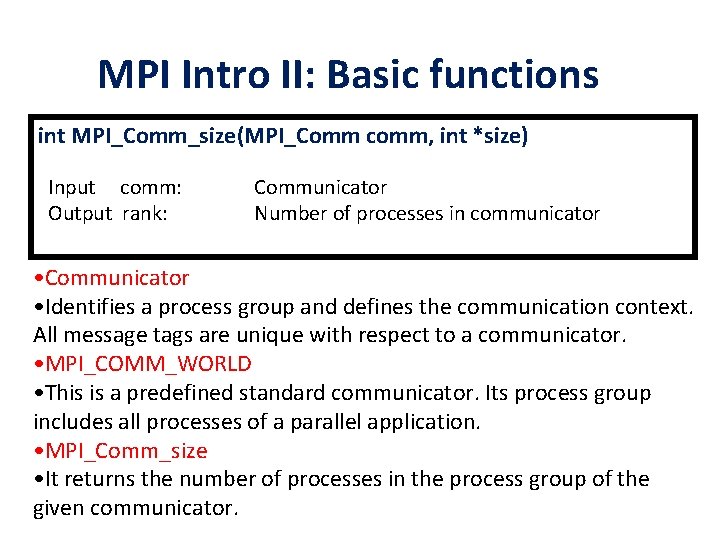

MPI Intro II: Basic functions
int MPI_Comm_size(MPI_Comm comm, int *size)
Input: comm: communicator
Output: size: number of processes in the communicator
• Communicator
  • Identifies a process group and defines the communication context. Message tags are only meaningful within a communicator: a message sent in one communicator can never be received in another.
• MPI_COMM_WORLD
  • A predefined standard communicator whose process group includes all processes of a parallel application.
• MPI_Comm_size
  • Returns the number of processes in the process group of the given communicator.

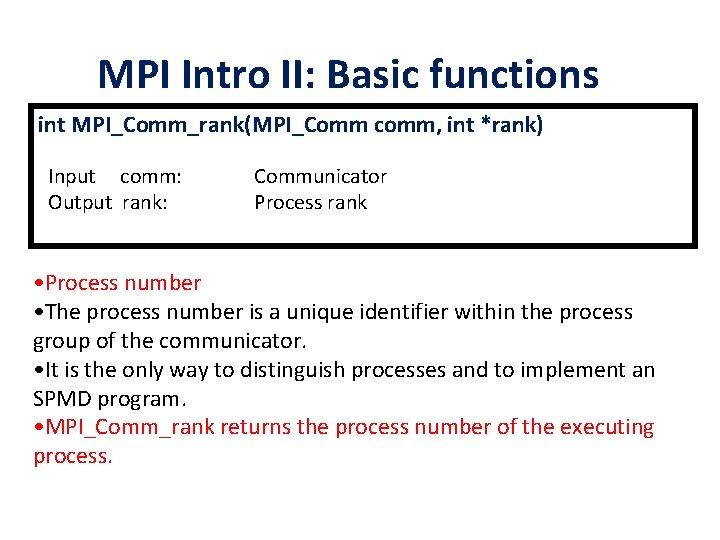

MPI Intro II: Basic functions
int MPI_Comm_rank(MPI_Comm comm, int *rank)
Input: comm: communicator
Output: rank: rank of the calling process
• Process number (rank)
  • The rank is a unique identifier within the process group of the communicator; ranks run from 0 to size - 1.
  • It is the only way to distinguish processes and thus to implement an SPMD (single program, multiple data) program.
  • MPI_Comm_rank returns the rank of the calling process.

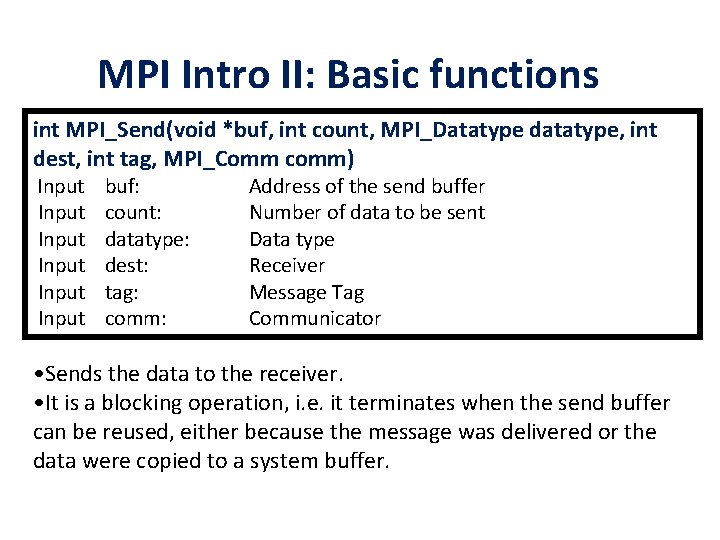
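Rank and size are typically used together to divide work in an SPMD program. The following is a minimal sketch in plain C, without any MPI calls, of one common scheme (contiguous block decomposition); the helper name block_range is hypothetical and not part of MPI.

```c
/* Hypothetical helper (not an MPI routine): split n items into `size`
 * contiguous blocks and return, via *lo and *hi, the half-open range
 * [lo, hi) owned by `rank`. The first n % size ranks get one extra item. */
void block_range(int n, int size, int rank, int *lo, int *hi)
{
    int base = n / size;   /* items every rank gets */
    int extra = n % size;  /* leftover items, one each to the lowest ranks */

    *lo = rank * base + (rank < extra ? rank : extra);
    *hi = *lo + base + (rank < extra ? 1 : 0);
}
```

In an MPI program, size and rank would come from MPI_Comm_size and MPI_Comm_rank on MPI_COMM_WORLD, and each process would then loop only over its own range [lo, hi).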

MPI Intro II: Basic functions
int MPI_Send(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)
Input parameters:
  buf: address of the send buffer
  count: number of elements to send
  datatype: data type of the elements
  dest: rank of the receiver
  tag: message tag
  comm: communicator
• Sends the data to the receiver.
• It is a blocking operation, i.e. it returns once the send buffer can be reused, either because the message was delivered or because the data were copied to a system buffer.

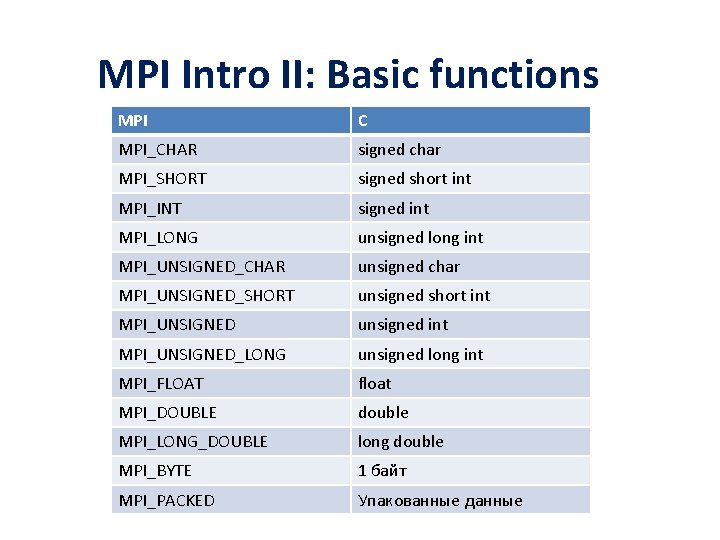

MPI Intro II: Basic functions

| MPI datatype | C type |
|---|---|
| MPI_CHAR | signed char |
| MPI_SHORT | signed short int |
| MPI_INT | signed int |
| MPI_LONG | signed long int |
| MPI_UNSIGNED_CHAR | unsigned char |
| MPI_UNSIGNED_SHORT | unsigned short int |
| MPI_UNSIGNED | unsigned int |
| MPI_UNSIGNED_LONG | unsigned long int |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_LONG_DOUBLE | long double |
| MPI_BYTE | 1 byte (no C equivalent) |
| MPI_PACKED | packed data (no C equivalent) |

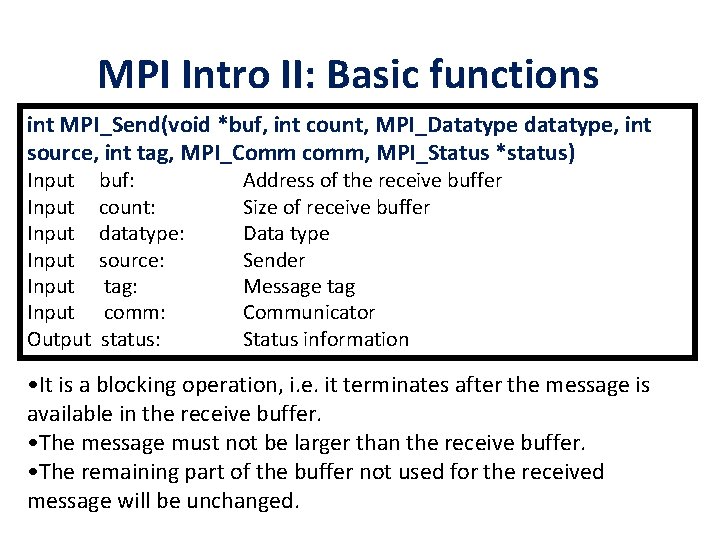

MPI Intro II: Basic functions
int MPI_Recv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)
Parameters:
  buf: address of the receive buffer (output)
  count: size of the receive buffer, in elements
  datatype: data type of the elements
  source: rank of the sender
  tag: message tag
  comm: communicator
  status: status information (output)
• It is a blocking operation, i.e. it returns only after the message is available in the receive buffer.
• The message must not be larger than the receive buffer; otherwise the receive fails with a truncation error (MPI_ERR_TRUNCATE).
• The part of the buffer not used by the received message remains unchanged.

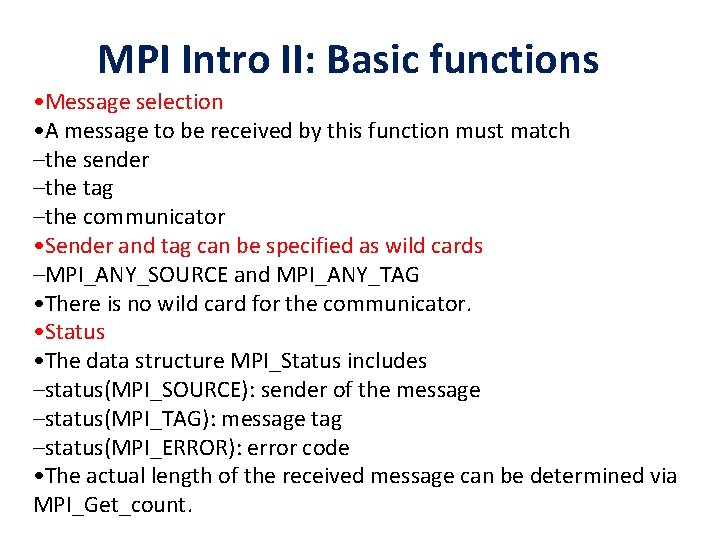

MPI Intro II: Basic functions
• Message selection
  • A message to be received by this function must match
    – the sender
    – the tag
    – the communicator
  • Sender and tag can be specified as wildcards: MPI_ANY_SOURCE and MPI_ANY_TAG.
  • There is no wildcard for the communicator.
• Status
  • In C, the MPI_Status structure includes the fields
    – status.MPI_SOURCE: sender of the message
    – status.MPI_TAG: message tag
    – status.MPI_ERROR: error code
  • The actual length of the received message can be determined with MPI_Get_count.

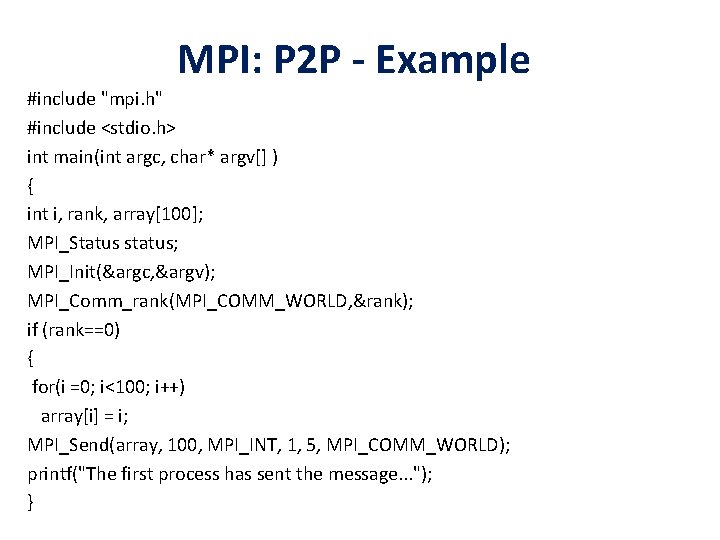
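The message-selection rules above can be modelled in a few lines of plain C. This is a sketch, not MPI's implementation: ANY_SOURCE, ANY_TAG, struct envelope and recv_matches are hypothetical names, and communicators are reduced to integer ids.

```c
#include <stdbool.h>

/* Hypothetical stand-ins for MPI_ANY_SOURCE / MPI_ANY_TAG; the real
 * constants are defined by the MPI implementation. */
#define ANY_SOURCE (-1)
#define ANY_TAG    (-1)

/* Envelope of an incoming message: sender rank, tag, and communicator
 * (modelled here as a plain integer id). */
struct envelope {
    int source;
    int tag;
    int comm;
};

/* Returns true if a receive posted with (source, tag, comm) would accept
 * the message `msg`. Source and tag may be wildcards; the communicator
 * must always match exactly, since it has no wildcard. */
bool recv_matches(struct envelope msg, int source, int tag, int comm)
{
    return (source == ANY_SOURCE || source == msg.source)
        && (tag == ANY_TAG || tag == msg.tag)
        && comm == msg.comm;
}
```

With the wildcards, the actual sender and tag are only known after the receive, which is why MPI reports them in status.MPI_SOURCE and status.MPI_TAG.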

MPI: P 2 P - Example #include "mpi. h" #include <stdio. h> int main(int argc, char* argv[] ) { int i, rank, array[100]; MPI_Status status; MPI_Init(&argc, &argv); MPI_Comm_rank(MPI_COMM_WORLD, &rank); if (rank==0) { for(i =0; i<100; i++) array[i] = i; MPI_Send(array, 100, MPI_INT, 1, 5, MPI_COMM_WORLD); printf("The first process has sent the message. . . "); }

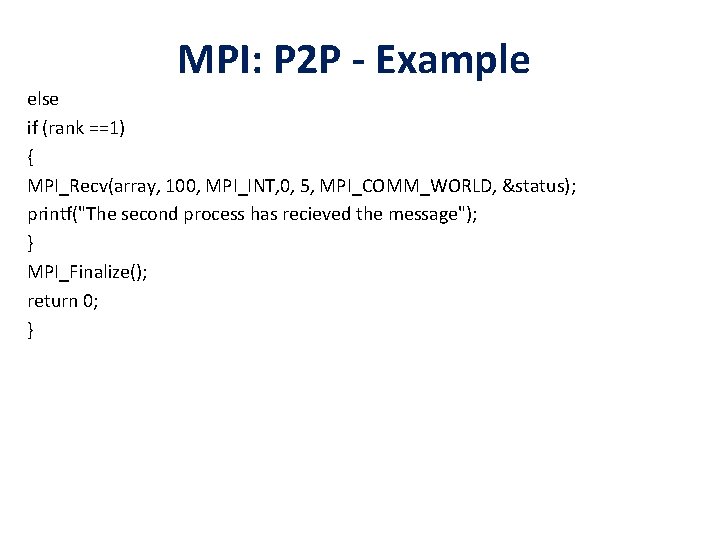

MPI: P 2 P - Example else if (rank ==1) { MPI_Recv(array, 100, MPI_INT, 0, 5, MPI_COMM_WORLD, &status); printf("The second process has recieved the message"); } MPI_Finalize(); return 0; }

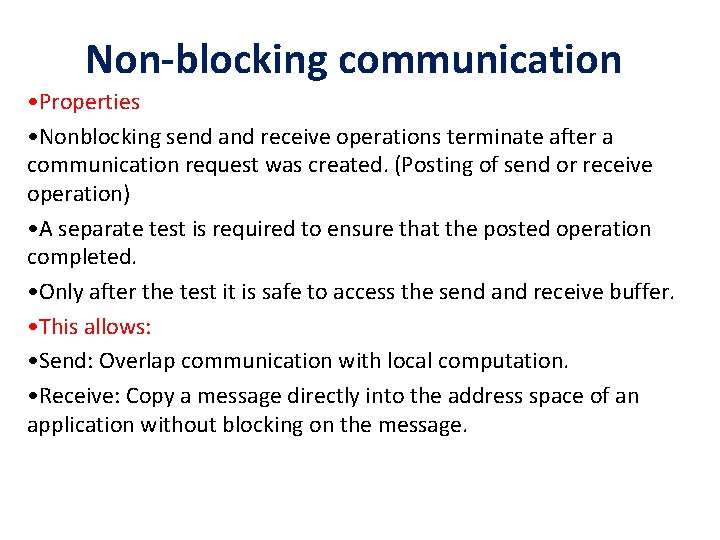

Non-blocking communication
• Properties
  • Non-blocking send and receive operations return as soon as a communication request has been created (the send or receive operation is "posted").
  • A separate test (e.g. MPI_Test or MPI_Wait) is required to ensure that the posted operation has completed.
  • Only after completion is it safe to access the send or receive buffer.
• This allows:
  • Send: overlapping communication with local computation.
  • Receive: copying a message directly into the address space of the application without blocking on the message.

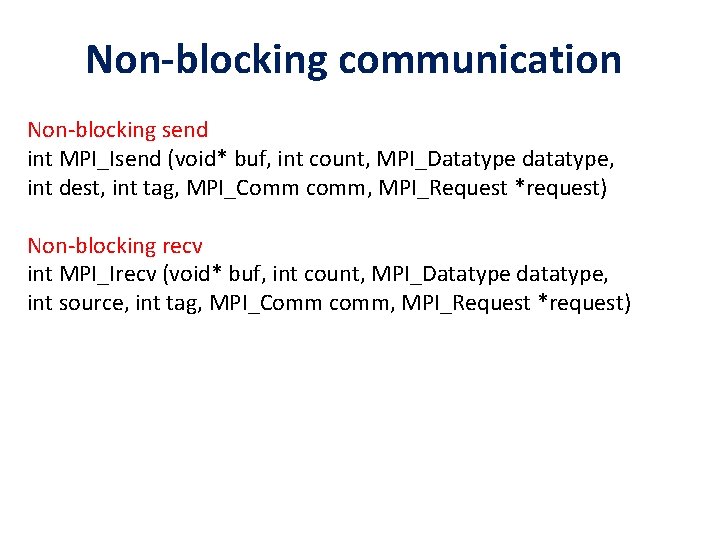

Non-blocking communication
Non-blocking send:
int MPI_Isend(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm, MPI_Request *request)
Non-blocking receive:
int MPI_Irecv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Request *request)

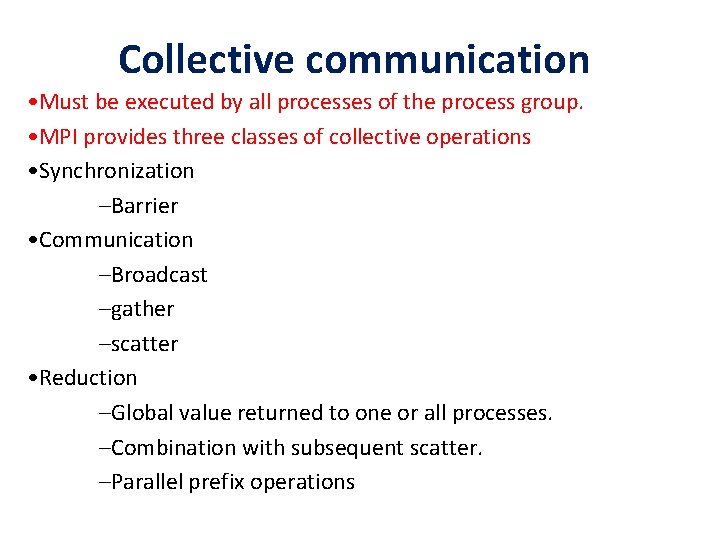

Collective communication
• Must be executed by all processes of the process group.
• MPI provides three classes of collective operations:
  • Synchronization
    – Barrier
  • Communication
    – Broadcast
    – Gather
    – Scatter
  • Reduction
    – Global value returned to one or all processes
    – Combination with a subsequent scatter (MPI_Reduce_scatter)
    – Parallel prefix operations

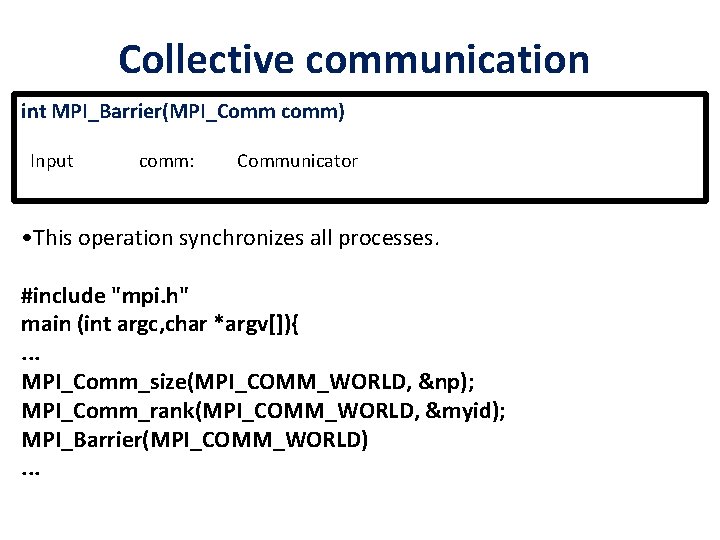

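Collective communication
int MPI_Barrier(MPI_Comm comm)
Input: comm: communicator
• Blocks each caller until all processes in the communicator have called it, thereby synchronizing them.

```c
#include "mpi.h"

int main(int argc, char *argv[])
{
    int np, myid;
    /* ... */
    MPI_Comm_size(MPI_COMM_WORLD, &np);
    MPI_Comm_rank(MPI_COMM_WORLD, &myid);
    MPI_Barrier(MPI_COMM_WORLD);  /* no process continues until all have reached this point */
    /* ... */
}
```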

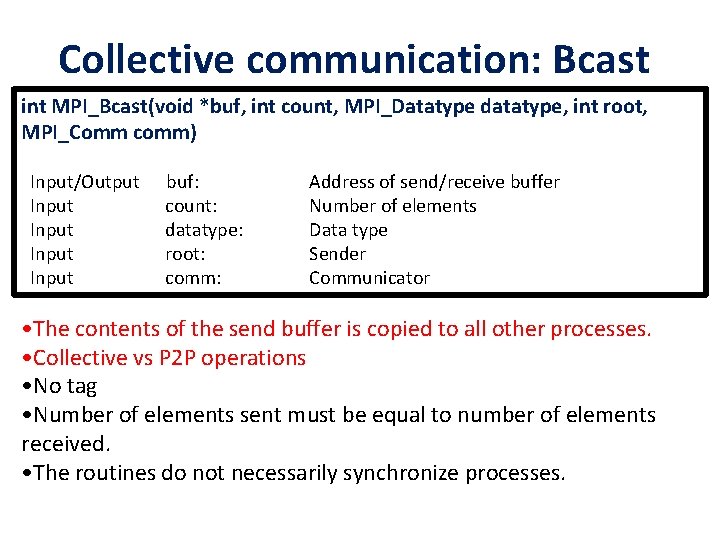

Collective communication: Bcast
int MPI_Bcast(void *buf, int count, MPI_Datatype datatype, int root, MPI_Comm comm)
Parameters:
  buf: address of the send/receive buffer (input on the root, output on all other processes)
  count: number of elements
  datatype: data type
  root: rank of the sending process
  comm: communicator
• The contents of the root's buffer are copied to all other processes.
• Collective vs. P2P operations:
  • No tag.
  • The number of elements sent must equal the number of elements received.
  • The routines do not necessarily synchronize processes.

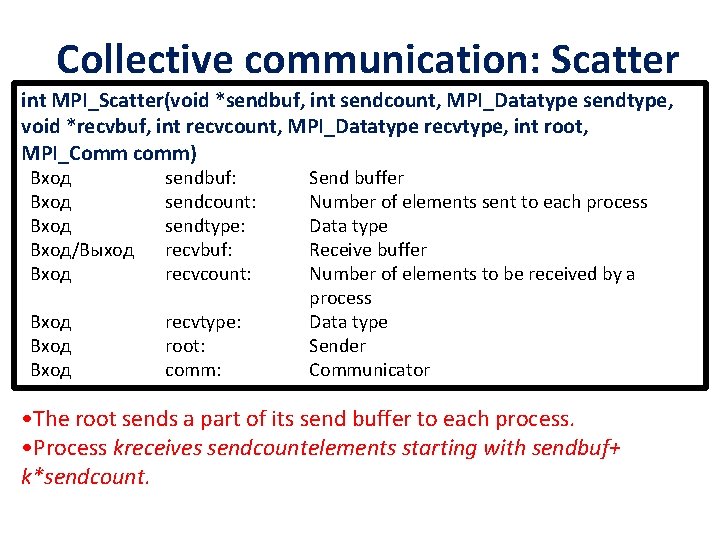

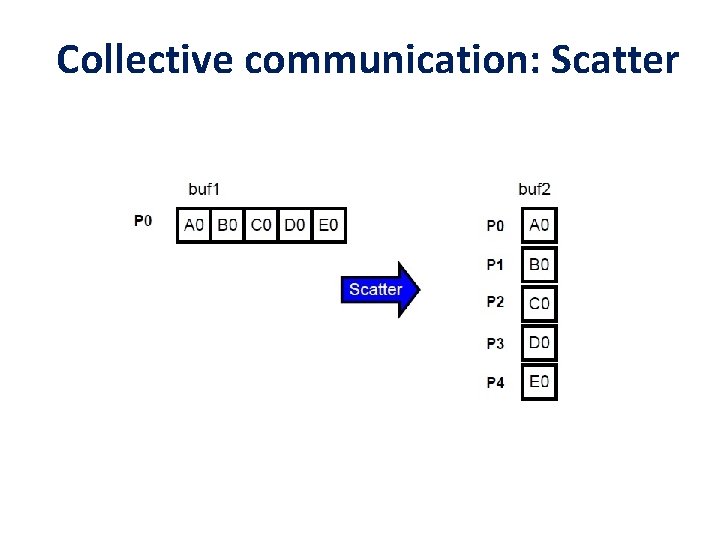
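The in/out role of buf in MPI_Bcast can be illustrated with a serial C model in which each process's buffer is simply an array. This is a sketch, not MPI itself: bcast_model is a hypothetical helper and assumes MPI_INT data.

```c
#include <string.h>

/* Serial model of MPI_Bcast: bufs[k] stands for process k's buffer.
 * The root's buffer is the input; every other buffer is overwritten
 * with a copy of it. */
void bcast_model(int **bufs, int count, int nprocs, int root)
{
    for (int k = 0; k < nprocs; k++) {
        if (k != root)  /* the root keeps its own data unchanged */
            memcpy(bufs[k], bufs[root], (size_t)count * sizeof(int));
    }
}
```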

Collective communication: Scatter
int MPI_Scatter(void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)
Parameters:
  sendbuf: send buffer (input)
  sendcount: number of elements sent to each process (input)
  sendtype: data type of the send buffer elements (input)
  recvbuf: receive buffer (output)
  recvcount: number of elements to be received by a process (input)
  recvtype: data type of the receive buffer elements (input)
  root: rank of the sending process (input)
  comm: communicator (input)
• sendbuf, sendcount and sendtype are significant only at the root.
• The root sends a part of its send buffer to each process.
• Process k receives sendcount elements starting at sendbuf + k*sendcount.

Collective communication: Scatter

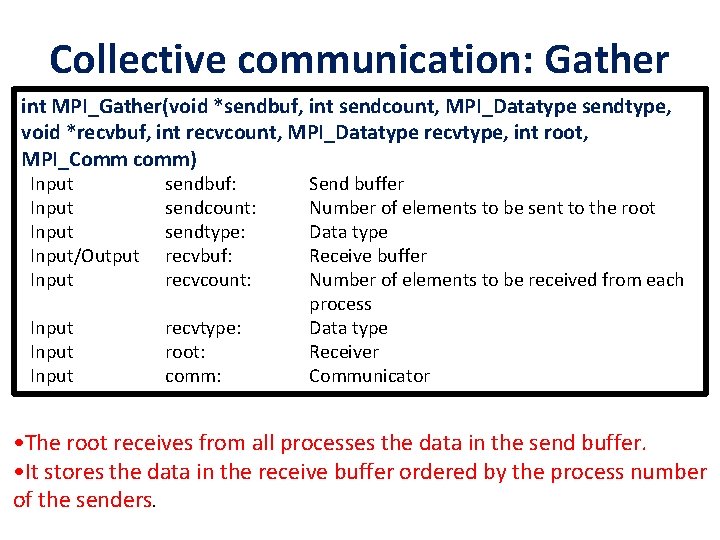

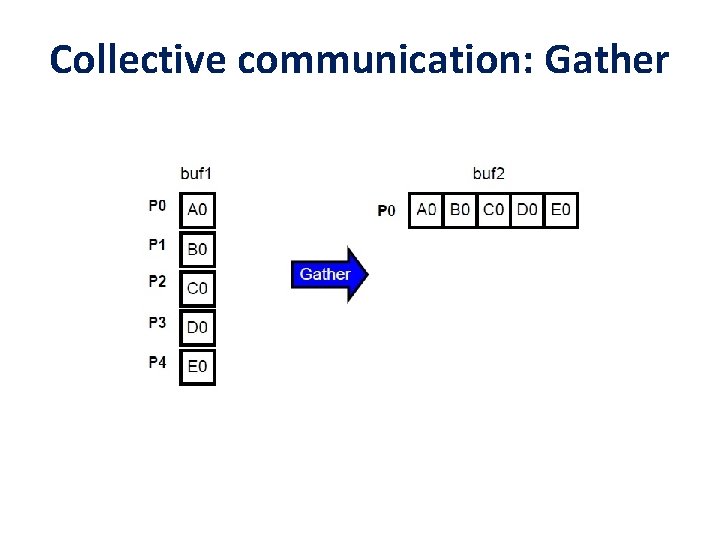
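The layout rule for MPI_Scatter can be checked with a serial C model in which each process's receive buffer is just an array. scatter_model is a hypothetical helper, not MPI code; it assumes MPI_INT data and equal send and receive counts.

```c
#include <string.h>

/* Serial model of what MPI_Scatter delivers: `sendbuf` lives on the root
 * and holds nprocs * sendcount ints; recvbufs[k] plays the role of
 * process k's receive buffer (the root receives its own chunk too). */
void scatter_model(const int *sendbuf, int sendcount, int nprocs, int **recvbufs)
{
    for (int k = 0; k < nprocs; k++) {
        /* process k receives sendcount elements starting at sendbuf + k*sendcount */
        memcpy(recvbufs[k], sendbuf + k * sendcount, (size_t)sendcount * sizeof(int));
    }
}
```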

Collective communication: Gather
int MPI_Gather(void *sendbuf, int sendcount, MPI_Datatype sendtype, void *recvbuf, int recvcount, MPI_Datatype recvtype, int root, MPI_Comm comm)
Parameters:
  sendbuf: send buffer (input)
  sendcount: number of elements to be sent to the root (input)
  sendtype: data type of the send buffer elements (input)
  recvbuf: receive buffer (output)
  recvcount: number of elements to be received from each process (input)
  recvtype: data type of the receive buffer elements (input)
  root: rank of the receiving process (input)
  comm: communicator (input)
• recvbuf, recvcount and recvtype are significant only at the root.
• The root receives the contents of the send buffers of all processes.
• It stores the data in the receive buffer ordered by the rank of the senders.

Collective communication: Gather

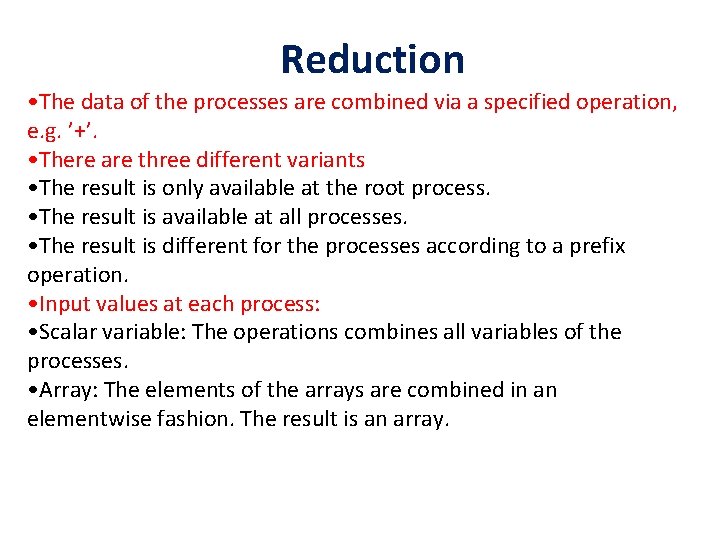
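The rank ordering of MPI_Gather is the mirror image of Scatter, as this serial C sketch shows. gather_model is a hypothetical helper, not MPI code; it assumes MPI_INT data and that every process contributes the same count.

```c
#include <string.h>

/* Serial model of MPI_Gather's result on the root: sendbufs[k] holds the
 * sendcount ints contributed by process k, and process k's data is stored
 * at recvbuf + k*sendcount, i.e. ordered by rank. */
void gather_model(const int *sendbufs[], int sendcount, int nprocs, int *recvbuf)
{
    for (int k = 0; k < nprocs; k++) {
        memcpy(recvbuf + k * sendcount, sendbufs[k], (size_t)sendcount * sizeof(int));
    }
}
```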

Reduction
• The data of the processes are combined via a specified operation, e.g. '+'.
• There are three different variants:
  • The result is only available at the root process (MPI_Reduce).
  • The result is available at all processes (MPI_Allreduce).
  • The result differs between processes according to a prefix operation (MPI_Scan).
• Input values at each process:
  • Scalar variable: the operation combines the variables of all processes.
  • Array: the elements of the arrays are combined elementwise; the result is an array.

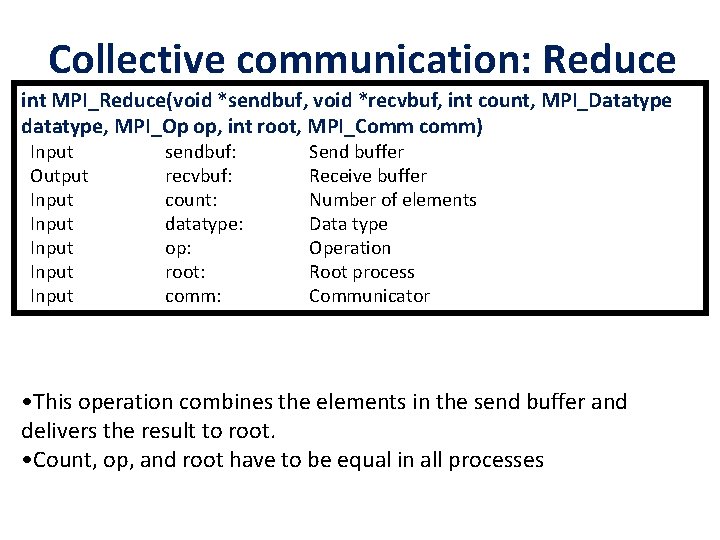
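As a serial illustration of the prefix variant, assume MPI_Scan with MPI_SUM on one int per process; scan_sum_model is a hypothetical name and the code contains no MPI calls. MPI_Scan is inclusive, so process k ends up with the sum of the values from processes 0 through k.

```c
/* Serial model of MPI_Scan with MPI_SUM: values[k] is the scalar
 * contributed by process k, and result[k] is what process k receives,
 * namely values[0] + ... + values[k]. */
void scan_sum_model(const int *values, int nprocs, int *result)
{
    int running = 0;
    for (int k = 0; k < nprocs; k++) {
        running += values[k];  /* include process k's own value */
        result[k] = running;
    }
}
```

For comparison, MPI_Reduce would deliver only result[nprocs - 1] to the root, and MPI_Allreduce would deliver that same total to every process.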

Collective communication: Reduce
int MPI_Reduce(void *sendbuf, void *recvbuf, int count, MPI_Datatype datatype, MPI_Op op, int root, MPI_Comm comm)
Parameters:
  sendbuf: send buffer (input)
  recvbuf: receive buffer (output, significant only at the root)
  count: number of elements (input)
  datatype: data type (input)
  op: reduction operation (input)
  root: rank of the root process (input)
  comm: communicator (input)
• This operation combines the elements in the send buffers of all processes and delivers the result to the root.
• count, datatype, op and root must be the same in all processes.

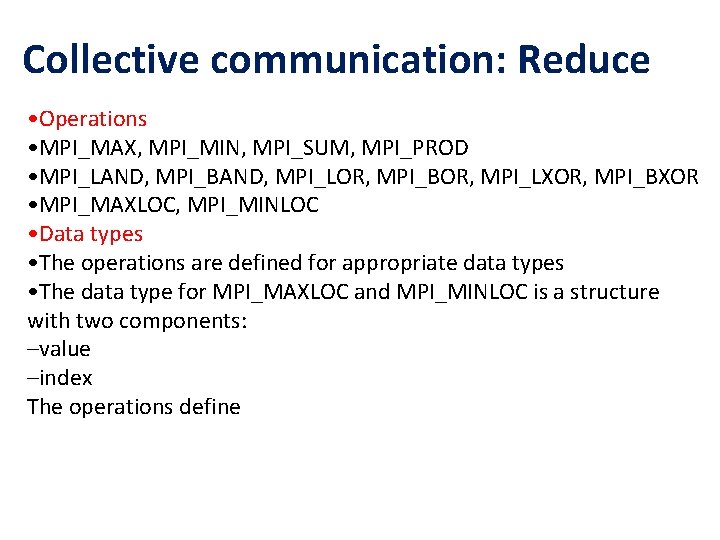
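For array inputs, the combination is element by element across processes. A serial C sketch, assuming MPI_SUM on MPI_INT data and at least one process; reduce_sum_model is a hypothetical helper, not MPI code.

```c
/* Serial model of MPI_Reduce with MPI_SUM on arrays: sendbufs[k] is
 * process k's send buffer of `count` ints; recvbuf (meaningful only on
 * the root) receives the element-wise sum over all nprocs processes. */
void reduce_sum_model(const int *sendbufs[], int count, int nprocs, int *recvbuf)
{
    for (int i = 0; i < count; i++) {
        int acc = sendbufs[0][i];    /* start from process 0's element i */
        for (int k = 1; k < nprocs; k++)
            acc += sendbufs[k][i];   /* combine element i across processes */
        recvbuf[i] = acc;
    }
}
```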

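Collective communication: Reduce
• Operations
  • MPI_MAX, MPI_MIN, MPI_SUM, MPI_PROD (maximum, minimum, sum, product)
  • MPI_LAND, MPI_BAND, MPI_LOR, MPI_BOR, MPI_LXOR, MPI_BXOR (logical and bitwise AND, OR, XOR)
  • MPI_MAXLOC, MPI_MINLOC (maximum or minimum value together with its location)
• Data types
  • The operations are defined for appropriate data types.
  • The data type for MPI_MAXLOC and MPI_MINLOC is a structure with two components (in C, predefined pair types such as MPI_2INT or MPI_DOUBLE_INT):
    – value
    – index
The operations define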
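How MPI_MAXLOC combines (value, index) pairs can be sketched serially in C. The struct mirrors the value/index layout described above (as MPI_2INT does in C); struct val_idx, maxloc_op and maxloc_model are hypothetical names. The tie rule (keep the smaller index when values are equal) follows the MPI standard's definition of MPI_MAXLOC.

```c
/* A (value, index) pair, the layout MPI_2INT describes in C. */
struct val_idx {
    int value;
    int index;
};

/* Combines two pairs the way MPI_MAXLOC does: keep the larger value;
 * if the values are equal, keep the smaller index. */
struct val_idx maxloc_op(struct val_idx a, struct val_idx b)
{
    if (a.value > b.value) return a;
    if (b.value > a.value) return b;
    return (a.index < b.index) ? a : b;
}

/* Folds the pairs contributed by nprocs processes (nprocs >= 1). */
struct val_idx maxloc_model(const struct val_idx *pairs, int nprocs)
{
    struct val_idx acc = pairs[0];
    for (int k = 1; k < nprocs; k++)
        acc = maxloc_op(acc, pairs[k]);
    return acc;
}
```

A typical use is to let each process set index to its own rank, so the result tells the root which process holds the global maximum.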

![Collective communication: Reduce](http://slidetodoc.com/presentation_image_h2/845893b0554066d3f58c35c056fc7c0e/image-27.jpg)

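Collective communication: Reduce

```c
#include "mpi.h"
#include <stdio.h>
#include <stdlib.h>

int main(int argc, char *argv[])
{
    int *sendbuf, *recvbuf, i, n = 5, rank;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    sendbuf = malloc(n * sizeof(int));
    recvbuf = malloc(n * sizeof(int));

    /* Here we fill the send buffers; rank + i is only an illustrative value */
    for (i = 0; i < n; i++)
        sendbuf[i] = rank + i;

    /* Element-wise maximum over all processes, delivered to rank 0 */
    MPI_Reduce(sendbuf, recvbuf, n, MPI_INT, MPI_MAX, 0, MPI_COMM_WORLD);

    if (rank == 0)
        for (i = 0; i < n; i++)
            printf("MAX value at %d is %d\n", i, recvbuf[i]);

    free(sendbuf);
    free(recvbuf);
    MPI_Finalize();
    return 0;
}
```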
