Distributed Control Algorithms for Artificial Intelligence by Avi Nissimov, DAI seminar @ HUJI, 2003

Control methods
- Goal: deliberate on which task should be executed, and on when it should be executed.
- Control in centralized algorithms
  - Loops, branches
- Control in distributed algorithms
  - Control messages
- Control for distributed AI
  - Search coordination

Centralized versus distributed computation models
- "Default" centralized computation model:
  - Turing machine.
- Open issues in distributed models:
  - Synchronization
  - Predefined structure of the network
  - Knowledge of the network graph structure on the processors
  - Processor identification
  - Processor roles

Notes about the proposed computational model
- Asynchronous (and therefore non-deterministic)
- Unstructured (connected) network graph
- No global knowledge: each processor knows its neighbors only
- Each processor has a unique id
- No server/client roles, but there is a computation initiator

Complexity measures
- Communication: number of exchanged messages
- Time: measured in units of the slowest message delivery (no weights on the network graph edges); local processing is ignored
- Storage: total number of bits/words required

Control issues
- Graph exploration: communication over the graph
- Termination detection: detecting the state in which no node is running and no message is in transit

Graph exploration: tasks
- Routing a message from node to node
- Broadcasting
- Connectivity determination
- Communication capacity usage

Echo algorithm
- Goal: building a spanning tree
- Intuition: got a message? Pass it on
- On the first reception of a message, send it to all the other neighbors; later receptions are only counted, not forwarded
- Termination detection: after all the neighbors have responded, send an [echo] message to the father

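Echo alg.: implementation (non-initiator node)

    receive [echo] from w; father := w; received := 1;
    for all (v in Neighbors - {w}) send [echo] to v;
    while (received < Neighbors.size) do
        receive [echo]; received++;
    send [echo] to father;

The initiator instead starts by sending [echo] to all its neighbors, and the algorithm terminates once the initiator has received [echo] from each of them.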
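
A minimal single-process simulation of the echo algorithm may help make the message flow concrete. It is a sketch under assumptions that are not in the slides: the graph is a Python dict mapping each node to its set of neighbors, asynchrony is modeled by delivering a randomly chosen in-transit message at each step, and the function name `echo` is hypothetical.

```python
import random


def echo(graph, initiator, seed=0):
    """Simulate the echo algorithm with asynchronous, randomly ordered delivery.

    graph: dict mapping each node to the set of its neighbors (undirected, connected).
    Returns (father, messages, terminated).
    """
    rng = random.Random(seed)
    father = {initiator: None}
    received = {u: 0 for u in graph}
    # the initiator starts by sending [echo] to every neighbor
    in_transit = [(initiator, v) for v in graph[initiator]]  # (sender, receiver)
    messages = len(in_transit)
    terminated = False
    while in_transit:
        # asynchrony: any message still in transit may be delivered next
        sender, u = in_transit.pop(rng.randrange(len(in_transit)))
        received[u] += 1
        if u not in father:
            # first [echo]: adopt the sender as father, forward to all other neighbors
            father[u] = sender
            for v in graph[u] - {sender}:
                in_transit.append((u, v))
                messages += 1
        if received[u] == len(graph[u]):
            # every neighbor has answered
            if u == initiator:
                terminated = True
            else:
                in_transit.append((u, father[u]))
                messages += 1
    return father, messages, terminated
```

Each edge carries exactly one [echo] in each direction, so for any delivery order the returned message count should equal 2m, while the shape of the tree depends on the order.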

Echo algorithm: properties
- Very useful in practice, since no faster exploration is possible
- Reasonable assumption: "fast" edges tend to stay fast
- The theoretical model allows the worst execution, since every spanning tree can be a result of the algorithm

DFS spanning tree algorithm: centralized version

    DFS(u, father):
        if (visited[u]) then return;
        visited[u] := true; father[u] := father;
        for all (neigh in neighbors[u]) DFS(neigh, u);
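
The centralized pseudocode translates almost directly into Python. In this sketch the dict-of-sets graph and the name `dfs_tree` are assumptions, and neighbors are visited in sorted order only to make the output deterministic. Python's default recursion limit (about 1000 frames) means a large graph would need an explicit stack instead.

```python
def dfs_tree(graph, root):
    """Centralized DFS spanning tree: returns father pointers (None for the root)."""
    visited = set()
    father = {}

    def dfs(u, parent):
        if u in visited:
            return
        visited.add(u)
        father[u] = parent
        # sorted() only makes the resulting tree deterministic
        for neigh in sorted(graph[u]):
            dfs(neigh, u)

    dfs(root, None)
    return father
```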

DFS spanning tree algorithm: distributed version

    On reception of [dfs] from v:
        if (visited) then
            send [return] to v; status[v] := returned; return;
        visited := true; status[v] := father;
        sendToNext();

DFS spanning tree algorithm: distributed version (cont.)

    On reception of [return] from v:
        status[v] := returned;
        sendToNext();

    sendToNext:
        if there is w s.t. status[w] = unused then
            send [dfs] to w;
        else
            send [return] to father;   // at the initiator: terminate instead
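
Because only one message (the token) is ever in transit, the two distributed DFS slides can be checked with a sequential simulation. This is a sketch, not the seminar's code: `distributed_dfs`, the string statuses and the seeded random choice among unused links are assumptions.

```python
import random


def distributed_dfs(graph, root, seed=0):
    """Simulate the token-based distributed DFS; returns (father, messages)."""
    rng = random.Random(seed)  # only used to choose among unused links
    status = {u: {v: "unused" for v in graph[u]} for u in graph}
    father = {root: None}  # a node is visited iff it has a father entry
    messages = 0

    def send_to_next(u):
        unused = sorted(w for w in graph[u] if status[u][w] == "unused")
        if unused:
            return ("dfs", u, rng.choice(unused))
        if father[u] is None:
            return None  # the initiator has exhausted its links: terminate
        return ("return", u, father[u])

    # a single variable suffices, since at most one message is in transit
    msg = send_to_next(root)
    while msg is not None:
        messages += 1
        kind, v, u = msg  # a message of type `kind` from v to u
        if kind == "dfs" and u in father:
            # u is already visited: bounce the token back over this link
            status[u][v] = "returned"
            msg = ("return", u, v)
        elif kind == "dfs":
            father[u] = v
            status[u][v] = "father"
            msg = send_to_next(u)
        else:  # [return]
            status[u][v] = "returned"
            msg = send_to_next(u)
    return father, messages
```

Whatever link choices are made, the message count should come out as 2m, in line with the complexity analysis that follows.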

Discussion, complexity analysis (m = number of edges, n = number of nodes)
- Sequential in nature
- Exactly 2 messages traverse each edge, therefore the communication complexity is 2m
- All the messages are sent in sequence, so the time complexity is 2m as well
- Explicitly does not utilize parallel execution

Awerbuch's linear-time algorithm for a DFS tree
- Main idea: why send the token to a node that is already visited?
- When a node is visited, it sends a [visited] message in parallel to all its neighbors and waits for their [ok] replies
- Thus the neighbors learn the status of the node before the token moves on, in O(1) time per node (in parallel)

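Awerbuch algorithm: complexity analysis
- Let (u, v) be an edge, and suppose u is visited before v. Then u sends [visited] on (u, v), and v sends back [ok]. Unless u is v's father, v later also sends [visited] to u and gets [ok] back, so that u will never pass the token to v.
- If (u, v) is also a tree edge, [dfs] and [return] messages are sent on it too.
- Comm. complexity: 2(2m-(n-1)) [visited]/[ok] messages + 2(n-1) [dfs]/[return] messages = 4m
- Time complexity: 2n + 2(n-1) = 4n-2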
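
A small cost model can sanity-check these counts. It is a sketch under stated assumptions: every message takes one time unit, one node's [visited]/[ok] exchange runs in parallel and takes 2 units, and the token goes to the smallest-id neighbor not known to be visited. The names `awerbuch_dfs` and `known` are hypothetical.

```python
def awerbuch_dfs(graph, root):
    """Sequential simulation counting Awerbuch's messages and time units."""
    known = {u: set() for u in graph}  # known[u]: neighbors u heard [visited] from
    father = {root: None}
    messages = 0
    time = 0

    def visit(u):
        nonlocal messages, time
        # [visited] to every neighbor except the father, each answered by [ok]
        others = graph[u] - {father[u]}
        for v in others:
            known[v].add(u)
        messages += 2 * len(others)
        if others:
            time += 2  # all [visited]/[ok] pairs travel in parallel
        # only now is the token passed on, never to a neighbor known to be visited
        for w in sorted(graph[u]):
            if w != father[u] and w not in known[u]:
                father[w] = u
                messages += 2  # [dfs] to w, later [return] from w
                time += 2
                visit(w)

    visit(root)
    return father, messages, time
```

Nodes whose only neighbor is their father skip the exchange, so the time can be below 4n-2 but never above it.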

Relaxation algorithm: idea
- DFS-tree property: if (u, v) is an edge of the original graph, then v lies on the tree path (root, ..., u) or u lies on the tree path (root, ..., v).
- The union of the lexicographically minimal simple paths (lmsp) from the root satisfies this property.
- Therefore, all we need is to find the lmsp of each node in the graph.

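Relaxation algorithm: implementation

    // currentPath is initially "infinite"; the root starts by sending [path, (root)] to all neighbors
    On arrival of [path, <path>] at node u:
        if (u not in <path> and currentPath > (<path>, u)) then
            currentPath := (<path>, u);
            send [path, currentPath] to all neighbors;   // in parallel, of course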
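
The relaxation rule can be simulated with randomly ordered delivery; Python compares tuples lexicographically, which is exactly the order used for lmsp. Assumptions of this sketch: paths are tuples of node ids, a message whose path already contains the receiver is dropped (the extension would not be simple), and the name `relaxation_dfs` is hypothetical.

```python
import random


def relaxation_dfs(graph, root, seed=0):
    """Every node keeps the smallest simple root path it has heard of so far."""
    rng = random.Random(seed)
    path = {u: None for u in graph}  # None stands for "no path yet" (infinity)
    path[root] = (root,)
    in_transit = [(v, (root,)) for v in graph[root]]  # (receiver, carried path)
    while in_transit:
        u, p = in_transit.pop(rng.randrange(len(in_transit)))
        if u in p:
            continue  # extending p by u would not give a simple path
        candidate = p + (u,)
        if path[u] is None or candidate < path[u]:
            path[u] = candidate
            for v in graph[u]:
                in_transit.append((v, candidate))
    # the father of u is the node just before u on its lmsp
    father = {u: (p[-2] if len(p) > 1 else None) for u, p in path.items()}
    return path, father
```

For the small example graph used in the test, the lmsp tree should coincide with the DFS tree that explores neighbors in increasing id order, regardless of the delivery order.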

Relaxation algorithm: analysis and conclusions
- Advantage: low complexity. After k time units, all the nodes whose lmsp has length k are set up; therefore the time complexity is n.
- Disadvantages:
  - Unlimited message length
  - Termination detection is required (see below)

Other variations and notes
- Minimum spanning tree: requires weighting the edges, much like Kruskal's MST algorithm
- BFS: very hard, since there is no synchronization; much like iterative-deepening DFS
- Linear message solution: like the centralized version, it sends all the information to the next node; unlimited message length

Connectivity certificates
- Idea: let G be the network graph. Remove some edges from G while preserving, for each pair {u, v}, k disjoint paths between u and v when G has at least k of them, and as many as G has otherwise
- Applications:
  - Network capacity utilization
  - Transport reliability assurance

Connectivity certificates: goals
- The main idea of certificates is to use as few edges as possible; the trivial certificate, the whole graph, always exists.
- Finding a minimal certificate is an NP-hard problem.
- A sparse certificate is one that contains no more than k·n edges.

Sparse connectivity certificate: solution
- Let $E_i$ be a spanning forest of the graph $G \setminus \bigcup_{j=1}^{i-1} E_j$; then $\bigcup_{i=1}^{k} E_i$ is a sparse k-connectivity certificate.
- Algorithm idea: calculate all the forests simultaneously. If an edge closes a cycle in the i-th forest, try to add it to the (i+1)-th forest; the rank of the edge is the index of the forest it finally joins.
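
The forest decomposition itself can be sketched centrally with one union-find structure per forest: each edge gets the rank of the first forest in which it does not close a cycle, so every forest stays spanning for the edges of higher rank. This is not the distributed algorithm of the following slides, and the names `certificate_forests` and `sparse_certificate` are hypothetical.

```python
def certificate_forests(nodes, edges):
    """Rank every edge: rank i means the edge belongs to forest E(i)."""
    nodes = list(nodes)
    forests = []  # forests[i - 1]: union-find parent map describing forest E(i)

    def find(parent, x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    rank = {}
    for u, v in edges:
        i = 0
        while True:
            if i == len(forests):
                forests.append({x: x for x in nodes})  # open a new, empty forest
            parent = forests[i]
            ru, rv = find(parent, u), find(parent, v)
            if ru != rv:  # joins two trees of forest i+1: no cycle, keep it there
                parent[ru] = rv
                rank[(u, v)] = i + 1
                break
            i += 1  # closes a cycle in forest i+1: try the next forest
    return rank


def sparse_certificate(nodes, edges, k):
    """Union of the forests E(1), ..., E(k)."""
    rank = certificate_forests(nodes, edges)
    return [e for e in edges if rank[e] <= k]
```

Each forest has at most n-1 edges, so keeping ranks 1..k yields at most k(n-1) edges.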

Distributed certificate algorithm

    // rank[v] is the rank of the edge to neighbor v; 0 means not ranked yet
    Search(father):
        if (not visited) then
            for all neighbors v s.t. rank[v] = 0:
                send [give_rank] to v;
                receive [ranked, <i>] from v;
                rank[v] := i;
            visited := true;

Distributed certificate algorithm (cont.)

    Search(father) (cont.):
        for all w s.t. needs_search[w] and rank[w] >= rank[father], in decreasing order:
            needs_search[w] := false;
            send [search] to w;
            receive [return];
        send [return] to father;

Distributed certificate algorithm (cont.)

    On receipt of [give_rank] from v:
        rank[v] := min{ i : i > rank[w] for all w };
        send [ranked, <rank[v]>] to v;

    On receipt of [search] from father:
        Search(father);

Complexity analysis and discussion
- The algorithm makes no reference to k; it calculates sparse certificates for all k simultaneously.
- At most 4 messages traverse each edge, therefore both the time and the communication complexity are at most 4m = O(m).
- By ranking the nodes in parallel, a complexity of 2n+2m can be achieved.

Termination detection: definition
- Problem: detect a state in which all the nodes are passive, waiting for messages, and no message is in transit
- Similar to the garbage collection problem: determine the nodes that can no longer receive messages (until they are "reallocated", i.e. reactivated)
- Two approaches: tracing vs. probe

Processor states of execution: global picture
- Send
  - pre-condition: {state = active};
  - action: send [message];
- Receive
  - pre-condition: {message queue is not empty};
  - action: state := active;
- Finish activity
  - pre-condition: {state = active};
  - action: state := passive;

Tracing
- Similar to the "reference counting" garbage collection algorithm
- On sending a message, a node increases its children counter
- On receiving a [finished_work] message, it decreases its children counter
- When it has finished its work and its children counter equals zero, it sends a [finished_work] message to its father
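
Tracing, in the spirit of the Dijkstra-Scholten algorithm, can be simulated on top of a toy basic computation. Assumptions of this sketch: the basic computation (a message budget that shrinks by one per hop) is hypothetical, a [basic] message received by a node that already owes its father a report is answered with [finished_work] at once (a case the slides leave implicit), and all names are hypothetical.

```python
import random


def run_with_tracing(graph, initiator, budget=4, seed=0):
    """Simulate a toy basic computation plus tracing termination detection.

    Hypothetical basic computation: a node receiving a [basic] message with
    budget t > 0 sends budget t-1 messages to up to two random neighbors and
    then turns passive.
    """
    rng = random.Random(seed)
    father = {}
    children = {u: 0 for u in graph}  # the slide's children counter
    engaged = {initiator}  # nodes that still owe their father a report
    in_transit = []  # (kind, sender, receiver, budget)
    stats = {"basic": 0, "finished_work": 0, "detected": False, "pending_at_detection": None}

    def send(kind, sender, receiver, t=0):
        in_transit.append((kind, sender, receiver, t))
        stats[kind] += 1

    def work(u, t):
        if t > 0:
            for v in rng.sample(sorted(graph[u]), min(2, len(graph[u]))):
                send("basic", u, v, t - 1)
                children[u] += 1
        # after this step u is passive

    def release_if_done(u):
        # a passive node with no outstanding children reports to its father
        if u in engaged and children[u] == 0:
            engaged.discard(u)
            if u == initiator:
                stats["detected"] = True
                stats["pending_at_detection"] = len(in_transit)
            else:
                send("finished_work", u, father[u])

    work(initiator, budget)
    release_if_done(initiator)
    while in_transit:
        kind, s, u, t = in_transit.pop(rng.randrange(len(in_transit)))
        if kind == "finished_work":
            children[u] -= 1
        else:
            if u in engaged:
                send("finished_work", u, s)  # already engaged: answer at once
            else:
                engaged.add(u)
                father[u] = s  # s becomes u's father
            work(u, t)
        release_if_done(u)
    return stats
```

Every [basic] message is eventually matched by exactly one [finished_work], which is the doubling of communication noted on the next slide, and the initiator detects termination only when nothing is left in transit.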

Analysis and discussion
- Main disadvantage: doubles (!!) the communication complexity
- Advantages: simplicity, immediate termination detection (because the detecting message is initiated by the last process to terminate)
- Variations may send [finished_work] only for chosen messages; these are the so-called "weak references"

Probe algorithms
- Main idea: periodically "collect garbage", i.e. calculate, per processor, the number of sent minus the number of received messages
- If the sum of these numbers is 0, then no message is in transit on the network
- In parallel, find out whether there is an active processor

Probe algorithms: details
- We introduce a new role, the controller, and assume it is directly connected to every node.
- Once per period (delta), the controller sends a [request] message to all the nodes.
- Each processor sends back [deficit = <sent_number - received_number>].

Think it works? Not yet…
- Suppose U sends a message to V and becomes passive; then U receives the [request] message and (immediately) replies [deficit=1].
- Next, processor W receives [request]; it replies [deficit=0], since it has not received any message yet.
- Meanwhile, V activates W by sending it a message, receives a reply from W, and stops; then V receives [request] and replies [deficit=-1].
- The deficits sum to 0, yet W is still active…

How to work it out?
- As we saw, a message can pass "behind the back" of the controller, since the model is asynchronous.
- Yet, if we add an additional boolean variable on each processor, such as "was active since the last request", we can deal with this problem.
- But that means we will detect termination only up to 2·delta time after it actually occurs.
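
The flaw and its fix can be stated as two decision rules over the replies the controller collects in one round. In this sketch `Reply` and the rule names are hypothetical, each processor is assumed to reset its flag whenever it answers a [request], and the round-2 and round-3 replies are illustrative values that continue the U, V, W scenario (W eventually stops).

```python
from dataclasses import dataclass


@dataclass
class Reply:
    deficit: int  # messages sent minus messages received so far
    active_since_last: bool  # the "was active since the last request" flag


def naive_terminated(replies):
    """First attempt: declare termination when the deficits sum to zero."""
    return sum(r.deficit for r in replies) == 0


def probe_terminated(replies):
    """Refined test: zero total deficit and nobody active since the last request."""
    return naive_terminated(replies) and not any(r.active_since_last for r in replies)


# Replies of U, W and V in the scenario above (first probe round).
round1 = [Reply(1, True), Reply(0, False), Reply(-1, True)]
# W was active after its first reply; by now everybody is passive.
round2 = [Reply(1, False), Reply(0, True), Reply(-1, False)]
# Nothing happened since round 2.
round3 = [Reply(1, False), Reply(0, False), Reply(-1, False)]
```

The naive rule wrongly accepts round 1; the refined rule rejects rounds 1 and 2 and accepts round 3, which is where the extra delay of up to 2·delta comes from.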

Variations, discussion, analysis
- If the controller is not directly connected to every node, the echo algorithm can be used with the controller as initiator, with the sum calculated inline
- Detection is not immediate, and it is initiated by the controller
- A small delta causes a communication bottleneck, while a large delta causes a long delay before detection

CSP and arc consistency
- Formal definition: find x(i) from D(i) for every variable such that Cij(x(i), x(j)) holds for every constraint Cij
- The problem is NP-complete in general
- The arc-consistency problem is to remove all redundant values: if Cij(v, w) = false for all w from D(j), then remove v from D(i)
- Of course, arc consistency is just the preliminary step of a CSP solution

Sequential AC-4 algorithm

    for all Cij, v in Di, w in Dj:
        if Cij(v, w) then
            count[i, v, j]++;
            Supp[j, w].insert(<i, v>);
    for all Cij, v in Di:
        checkRedundant(i, v, j);
    while not Q.empty:
        <j, w> := Q.dequeue();
        for all <i, v> in Supp[j, w]:
            count[i, v, j]--;
            checkRedundant(i, v, j);

Sequential AC-4 algorithm: redundancy check

    checkRedundant(i, v, j):
        if (count[i, v, j] = 0 and v in Di) then   // "v in Di": never remove or queue v twice
            Q.enqueue(<i, v>);
            Di.remove(v);
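
A runnable Python version of sequential AC-4, as a sketch: constraints are assumed to be a dict mapping (i, j) to a predicate and must be given in both directions, and `ac4` is a hypothetical name. It includes a `v in D_i` guard so that a value is never removed or queued twice, mirroring the `if v in Di` test of the distributed version.

```python
from collections import defaultdict, deque


def ac4(domains, constraints):
    """AC-4 arc consistency.

    domains: dict var -> set of values (pruned in place).
    constraints: dict (i, j) -> predicate Cij(v, w); give both (i, j) and (j, i).
    Returns False if some domain becomes empty (contradiction), else True.
    """
    count = defaultdict(int)  # count[i, v, j]: number of supports of v in D_j
    supp = defaultdict(list)  # supp[j, w]: pairs (i, v) that w supports
    queue = deque()

    def check_redundant(i, v, j):
        if count[i, v, j] == 0 and v in domains[i]:
            domains[i].remove(v)
            queue.append((i, v))

    for (i, j), c in constraints.items():
        for v in domains[i]:
            for w in domains[j]:
                if c(v, w):
                    count[i, v, j] += 1
                    supp[j, w].append((i, v))
    for (i, j) in constraints:
        for v in list(domains[i]):  # copy: check_redundant may shrink D_i
            check_redundant(i, v, j)
    while queue:
        j, w = queue.popleft()
        for (i, v) in supp[j, w]:
            count[i, v, j] -= 1
            check_redundant(i, v, j)
    return all(domains.values())
```

For example, with x0 < x1 < x2 over {1, 2, 3}, the domains shrink to {1}, {2} and {3}.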

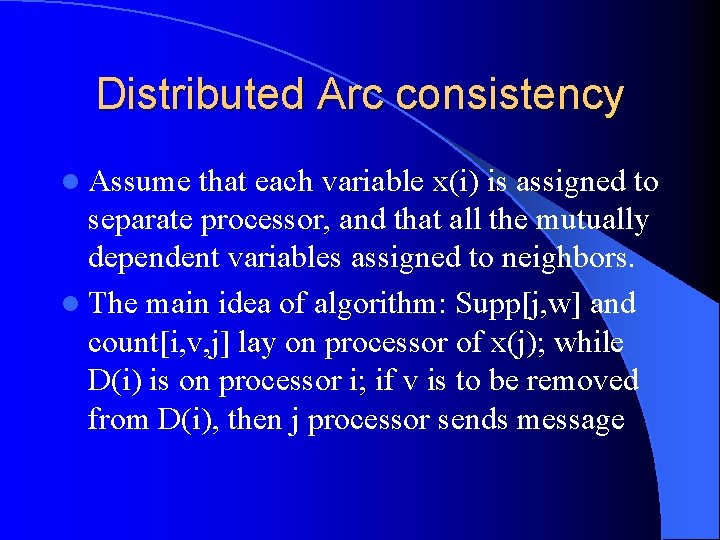

Distributed arc consistency
- Assume that each variable x(i) is assigned to a separate processor, and that all mutually dependent variables are assigned to neighboring processors.
- The main idea of the algorithm: D(i) and the counters count[i, v, j] lie on the processor of x(i); when a value w is removed from D(j), processor j sends a [remove w] message to its neighbors, which update their counters by re-checking Cij(v, w) for their own values instead of keeping a Supp list.

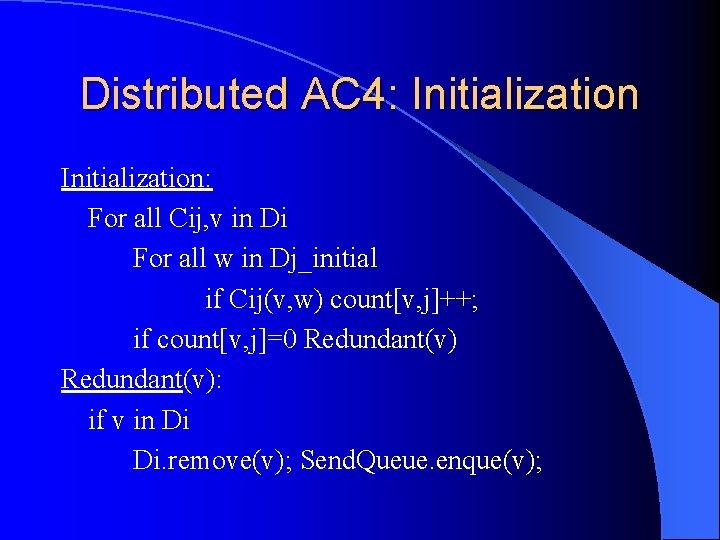

Distributed AC-4: initialization

    for all Cij, v in Di:
        for all w in Dj_initial:
            if Cij(v, w) then count[v, j]++;
        if count[v, j] = 0 then Redundant(v);

    Redundant(v):
        if v in Di then
            Di.remove(v);
            SendQueue.enqueue(v);

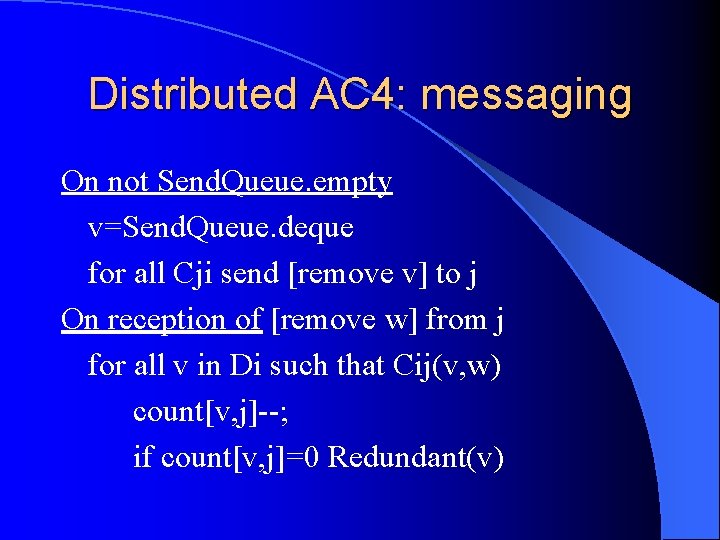

Distributed AC-4: messaging

    on not SendQueue.empty:
        v := SendQueue.dequeue();
        for all Cji: send [remove v] to j;

    on reception of [remove w] from j:
        for all v in Di such that Cij(v, w):
            count[v, j]--;
            if count[v, j] = 0 then Redundant(v);
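
The distributed version can be simulated in one process: each hypothetical processor i owns D_i and its counters, and [remove] messages are delivered in random order. The function name, the data layout and the seeded delivery order are assumptions of this sketch; constraints are again a dict of predicates given in both directions.

```python
import random
from collections import defaultdict


def distributed_ac4(domains, constraints, seed=0):
    """Message-passing simulation of distributed AC-4; returns (domains, messages)."""
    rng = random.Random(seed)
    D = {i: set(d) for i, d in domains.items()}
    initial = {i: frozenset(d) for i, d in domains.items()}  # the slides' Dj_initial
    neighbors = defaultdict(set)
    for (i, j) in constraints:
        neighbors[i].add(j)
    count = {i: defaultdict(int) for i in D}  # count[i][v, j] lives on processor i
    in_transit = []  # (sender j, receiver i, removed value w)
    messages = 0

    def redundant(i, v):
        nonlocal messages
        if v in D[i]:
            D[i].remove(v)
            # the SendQueue step: tell every processor constrained with x(i)
            for j in neighbors[i]:
                in_transit.append((i, j, v))
                messages += 1

    # initialization, local to each processor
    for (i, j), c in constraints.items():
        for v in initial[i]:
            for w in initial[j]:
                if c(v, w):
                    count[i][v, j] += 1
    for (i, j) in constraints:
        for v in initial[i]:
            if count[i][v, j] == 0:
                redundant(i, v)

    # message handling in an arbitrary (random) order
    while in_transit:
        j, i, w = in_transit.pop(rng.randrange(len(in_transit)))
        c = constraints[i, j]
        for v in list(D[i]):
            if c(v, w):
                count[i][v, j] -= 1
                if count[i][v, j] == 0:
                    redundant(i, v)
    return D, messages
```

On the chain x0 < x1 < x2 over {1, 2, 3}, six values are removed and each removal is announced over every incident constraint, so 8 messages are sent whatever the delivery order.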

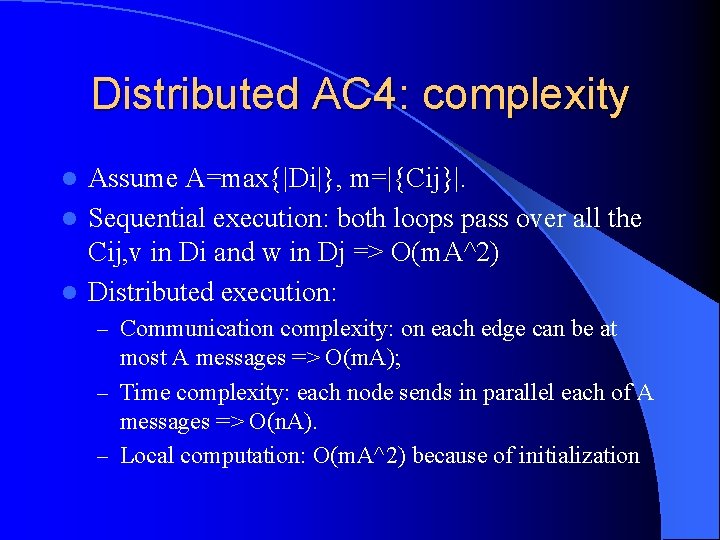

Distributed AC-4: complexity
- Assume $A = \max_i |D_i|$ and $m = |\{C_{ij}\}|$.
- Sequential execution: both loops pass over all Cij, v in Di and w in Dj, giving $O(mA^2)$
- Distributed execution:
  - Communication complexity: each edge carries at most A messages, giving $O(mA)$
  - Time complexity: each node sends each of its at most A messages in parallel, giving $O(nA)$
  - Local computation: $O(mA^2)$ because of the initialization

Distributed AC-4: final details
- Termination detection is not obvious and requires an explicit implementation
  - A probe algorithm is usually preferred, because of the large number of messages (tracing would double it)
- AC-4 ends in one of three possible states:
  - Contradiction
  - Solution
  - Arc-consistent subset

Task assignment for AC-4
- Our assumption was that each variable is assigned to a different processor.
- A special case is a multiprocessor computer, where all the resources are at hand.
- In fact, when the assignment has to be computed, minimizing the communication cost is an NP-hard problem, so heuristic approximation algorithms are used.

From AC-4 to CSP
- There are many heuristics, taught mainly in the introductory AI course (such as most restricted variable and most restricting value), that tell which variable should be handled next once arc consistency is reached
- On termination with a contradiction: used in backtracking

Loop cut-set example
- Definition: a pit in a loop L (a cycle of the underlying undirected graph) is a vertex of the directed graph such that both of its edges on L are incoming.
- Goal: break the loops in a directed graph.
- Formulation: let G = <V, E> be a graph; find a subset C of V such that every loop in G contains at least one vertex of C that is not a pit of that loop.
- Applications: belief network algorithms

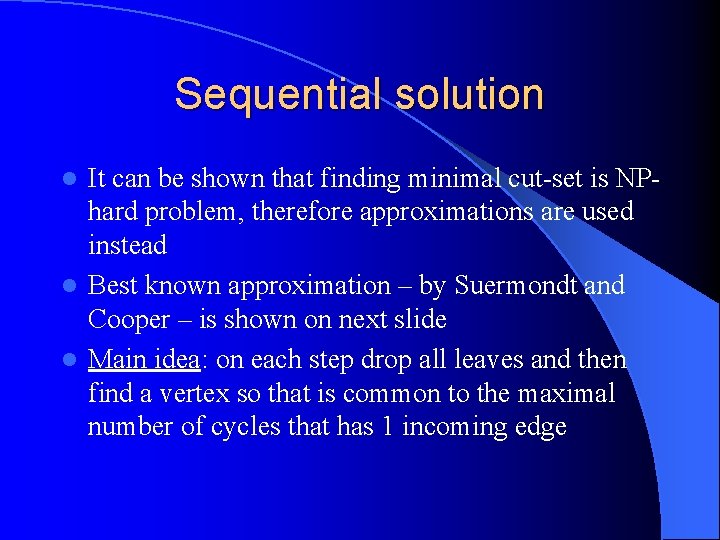

Sequential solution
- It can be shown that finding a minimal cut-set is an NP-hard problem, therefore approximations are used instead.
- The best known approximation, by Suermondt and Cooper, is shown on the next slide.
- Main idea: at each step, drop all leaves, and then find a vertex with at most one incoming edge that is common to the maximal number of cycles (approximated by its degree).

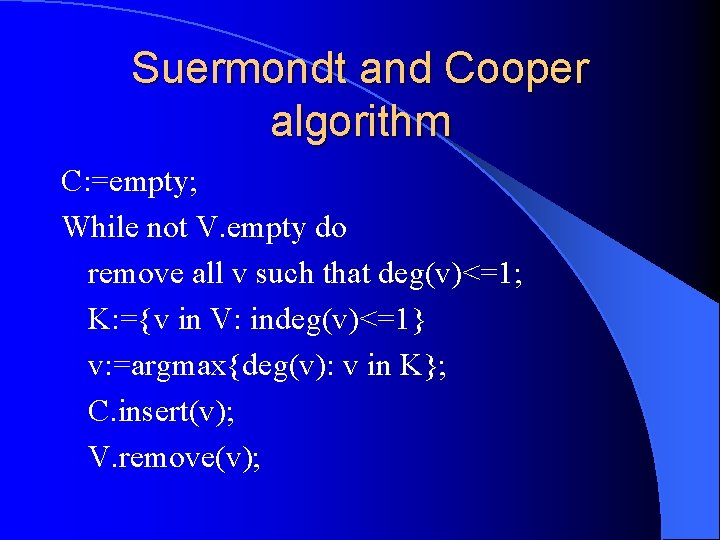

Suermondt and Cooper algorithm

    C := empty;
    while not V.empty do
        remove all v such that deg(v) <= 1;   // repeatedly, until no such v is left
        if V.empty then break;
        K := {v in V : indeg(v) <= 1};
        v := argmax{deg(v) : v in K};
        C.insert(v); V.remove(v);
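
A Python sketch of the heuristic. Assumptions: arcs are (u, v) pairs meaning u -> v, degrees are taken in the underlying undirected graph of the remaining vertices, leaves are trimmed repeatedly, and ties go to the smallest id. When K is empty (the edge case raised on the following slide) the sketch falls back to all remaining vertices; that fallback is a hypothetical choice, not taken from the slides.

```python
def loop_cutset(nodes, arcs):
    """Greedy loop cut-set heuristic; returns the cut nodes in selection order."""
    V = set(nodes)
    cut = []

    def deg(x):
        # degree in the underlying undirected graph restricted to V
        return sum(1 for (a, b) in arcs if a in V and b in V and x in (a, b))

    def indeg(x):
        return sum(1 for (a, b) in arcs if b == x and a in V)

    while V:
        # trim leaves repeatedly (the distributed leaf trim does the same)
        leaves = {x for x in V if deg(x) <= 1}
        while leaves:
            V -= leaves
            leaves = {x for x in V if deg(x) <= 1}
        if not V:
            break
        K = [x for x in sorted(V) if indeg(x) <= 1]
        if not K:
            K = sorted(V)  # hypothetical fallback for the empty-K edge case
        best = max(K, key=deg)  # max() keeps the first maximum, i.e. the smallest id
        cut.append(best)
        V.remove(best)
    return cut
```

In a diamond a -> b, a -> c, b -> d, c -> d, vertex d is the pit of the only loop, so the sketch picks a, and everything else is trimmed away.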

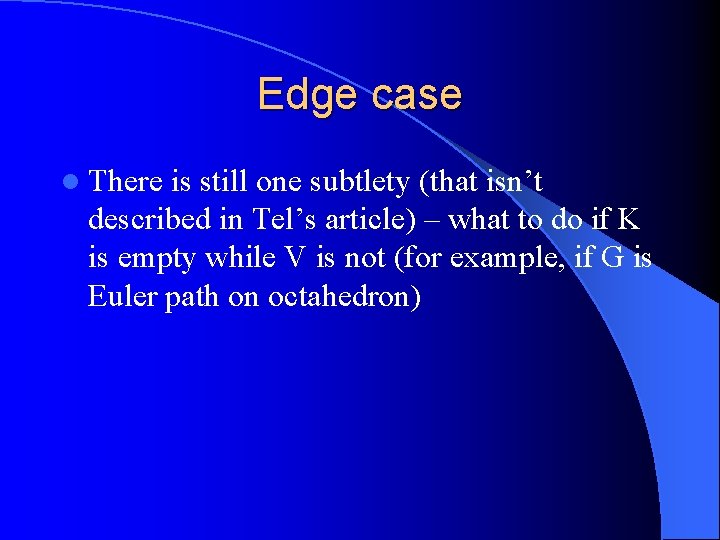

Edge case
- There is still one subtlety (not described in Tel's article): what to do if K is empty while V is not (for example, if G is the octahedron with its edges oriented along an Eulerian circuit, so that every vertex has in-degree 2).

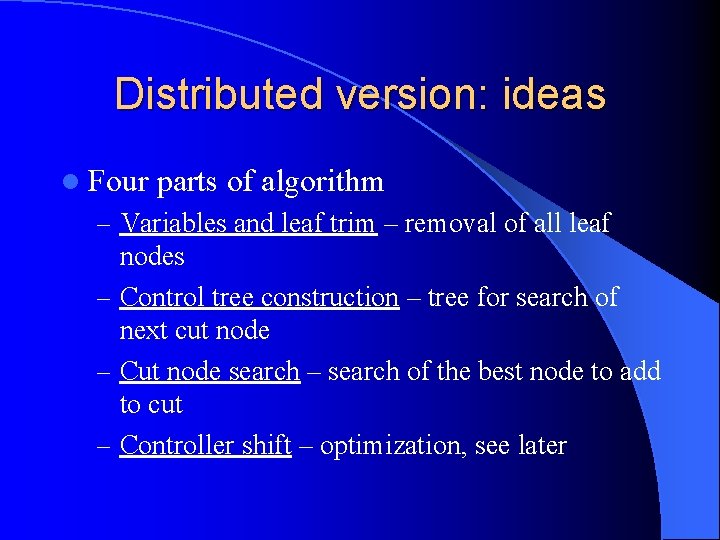

Distributed version: ideas
- Four parts of the algorithm:
  - Variables and leaf trim: removal of all leaf nodes
  - Control tree construction: a tree for the search of the next cut node
  - Cut node search: search for the best node to add to the cut
  - Controller shift: an optimization, see later

Data structures for the distributed version
- Each node contains:
  - its activity status (nas: {yes, cut, non-cut})
  - the activity status of all adjacent links (las: {yes, no})
  - the control status of all adjacent links (lcs: {basic, son, father, frond})

Leaf trim part
- Idea: remove all leaves from the graph (put them into the non-cut state).
- If a node discovers that it has only 1 active link left, it sends its unique active neighbor a [remove] message.
- Tracing-like termination detection

Leaf trim implementation

    var las[x] := yes; nas := yes;

    procedure TrimTest:
        if |{x : las[x]}| = 1 then
            nas := noncut; las[x] := no;
            send [remove] to x and receive [return] or [remove] back;

    on reception of [remove] from x:
        las[x] := no;
        TrimTest;
        send [return] to x;

Control tree construction
- For this goal the echo algorithm is used (with an appropriate variation: now each father should know the list of its children).
- This task is completely independent of the previous one (leaf trim), therefore they can be executed in parallel.
- During this task, the lcs variable is set up.

Control tree construction: implementation

    procedure constructSubtree:
        for all x s.t. lcs[x] = basic do
            send [construct, father] to x;
        while exists x s.t. lcs[x] = basic:
            receive [construct, <i>] from y;
            lcs[y] := (<i> = father) ? frond : son;

    on the first [construct, father] message, from x:
        lcs[x] := father;
        {constructSubtree & TrimTest};
        send [construct, son] to x;

Cut node search
- Idea: traverse the control tree and combine the results of the subtrees.
- For this purpose we collect on the control tree (a convergecast, the reverse of a broadcast) the maximal degree of a node in each subtree.
- Note that only active nodes with indeg <= 1 are candidates (Income denotes the set of neighbors linked by incoming edges).

Cut node search: implementation

    procedure NodeSearch:
        my_degree := (|{x in Income : las[x]}| < 2) ? |{x : las[x]}| : 0;
        best_degree := my_degree; best_branch := u;   // u is this node
        for all x : lcs[x] = son
            send [search] to x;
        do |{x : lcs[x] = son}| times
            receive [best_is, d] from x;
            if (best_degree < d) then
                best_degree := d; best_branch := x;
        send [best_is, best_degree] to father;
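
The convergecast can be written as a recursion over the control tree, where a recursive call plays the role of the [search]/[best_is] exchange with a son. Assumptions of this sketch: `sons`, `active_links` and `incoming_active` are hypothetical per-node inputs (control-tree sons, number of active links, number of active incoming links), and `follow_best` mimics how [change_root] walks the best_branch pointers.

```python
def node_search(u, sons, active_links, incoming_active, best_branch):
    """Return the best degree in u's subtree and record best_branch pointers."""
    # a node is a candidate only if fewer than 2 of its active links are incoming
    my_degree = active_links[u] if incoming_active[u] < 2 else 0
    best_degree = my_degree
    best_branch[u] = u  # u itself is best until a son reports something better
    for x in sons.get(u, []):  # [search] to x, [best_is, d] back from x
        d = node_search(x, sons, active_links, incoming_active, best_branch)
        if best_degree < d:
            best_degree = d
            best_branch[u] = x
    return best_degree


def follow_best(root, best_branch):
    """Walk the best_branch pointers from the root to the selected node."""
    u = root
    while best_branch[u] != u:
        u = best_branch[u]
    return u
```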

Controller shift
- This task has no counterpart in the sequential algorithm and is only an optimization.
- Idea: because the newly selected cut node is the center of the trim activity, the root of the control tree should move there.
- In fact, this part does not involve a search, since at this stage we already know the path to the best node: it follows the best_branch pointers.

Controller shift: root change

    on [change_root] message from x:
        lcs[x] := son;
        if (best_branch = u) then   // u is this node: it is the new cut node
            TrimFromNeighbors;
            InitSearchCutnode;
        else
            lcs[best_branch] := father;
            send [change_root] to best_branch;

Trim from neighbors

    TrimFromNeighbors:
        for all x : las[x]
            send [remove] to x;
        do |{x : las[x]}| times
            receive [return] or [remove] from x;
            las[x] := no;

Complexity
- New measures: s = C.size (the number of cut nodes), d = tree diameter
- Communication complexity: 2m for [remove]/[return] + 2m for [construct] + 2(n-1)(s+1) for [search]/[best_is] + sd for [change_root], i.e. $2m + 2m + 2(n-1)(s+1) + sd = 4m + O(sn)$, since $d \le n-1$
- Time complexity without trim: $2d + 2(s+1)d + sd = (3s+4)d$
- Trim time complexity, worst case: $2(n-s)$

- Slides: 63