
- Slides: 13

Distance Metric

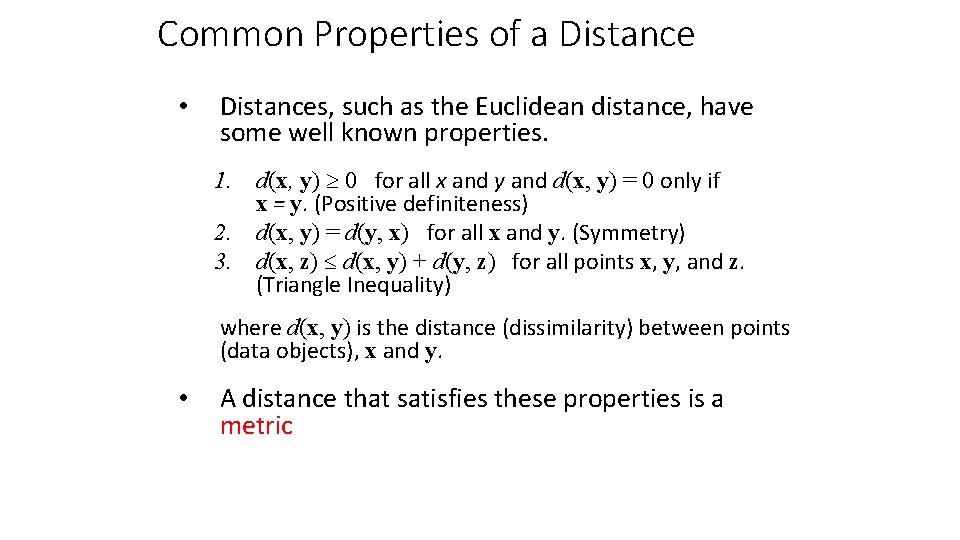

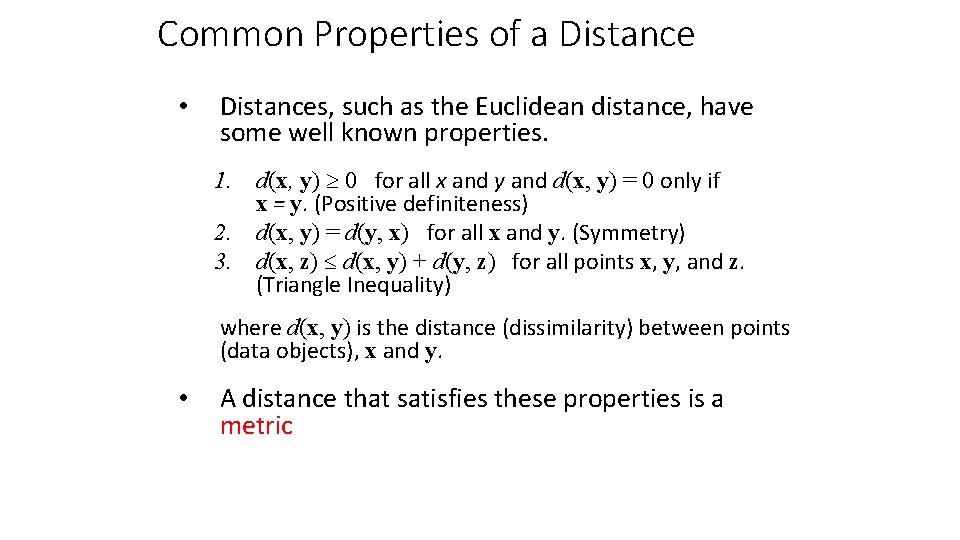

Common Properties of a Distance

- Distances, such as the Euclidean distance, have some well-known properties:
  1. $d(x, y) \ge 0$ for all $x$ and $y$, and $d(x, y) = 0$ only if $x = y$. (Positive definiteness)
  2. $d(x, y) = d(y, x)$ for all $x$ and $y$. (Symmetry)
  3. $d(x, z) \le d(x, y) + d(y, z)$ for all points $x$, $y$, and $z$. (Triangle inequality)

  where $d(x, y)$ is the distance (dissimilarity) between points (data objects) $x$ and $y$.
- A distance that satisfies these properties is a metric.
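The three properties can be spot-checked numerically. The sketch below is only an illustration: the helper names (`euclidean`, `squared_euclidean`, `satisfies_metric_properties`) and the sample points are assumptions, not part of the slides, and passing on a finite sample is evidence rather than a proof. The squared Euclidean distance is included as a contrast because it breaks the triangle inequality.

```python
import itertools
import math
import random


def euclidean(x, y):
    """Euclidean distance between two equal-length tuples of numbers."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def squared_euclidean(x, y):
    """Squared Euclidean distance (a dissimilarity, but not a metric)."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def satisfies_metric_properties(d, points, tol=1e-12):
    """Check the three properties of d on every pair and triple of sample points."""
    for x, y in itertools.product(points, repeat=2):
        # Positive definiteness: d >= 0, and d == 0 exactly when x == y.
        if d(x, y) < 0 or (d(x, y) == 0) != (x == y):
            return False
        # Symmetry.
        if abs(d(x, y) - d(y, x)) > tol:
            return False
    for x, y, z in itertools.product(points, repeat=3):
        # Triangle inequality (with a small tolerance for rounding).
        if d(x, z) > d(x, y) + d(y, z) + tol:
            return False
    return True


random.seed(0)  # hypothetical sample: 8 random points in 3 dimensions
sample = [tuple(random.uniform(-1, 1) for _ in range(3)) for _ in range(8)]
print(satisfies_metric_properties(euclidean, sample))
print(satisfies_metric_properties(squared_euclidean, [(0.0,), (1.0,), (2.0,)]))
```

The second check fails because the squared distance from 0 to 2 is 4, which exceeds the sum 1 + 1 of the two intermediate squared distances.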

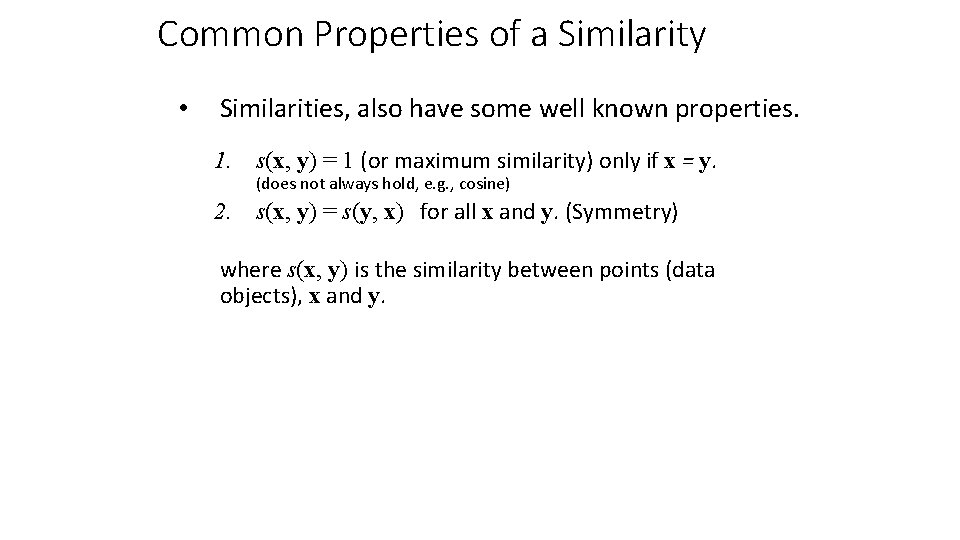

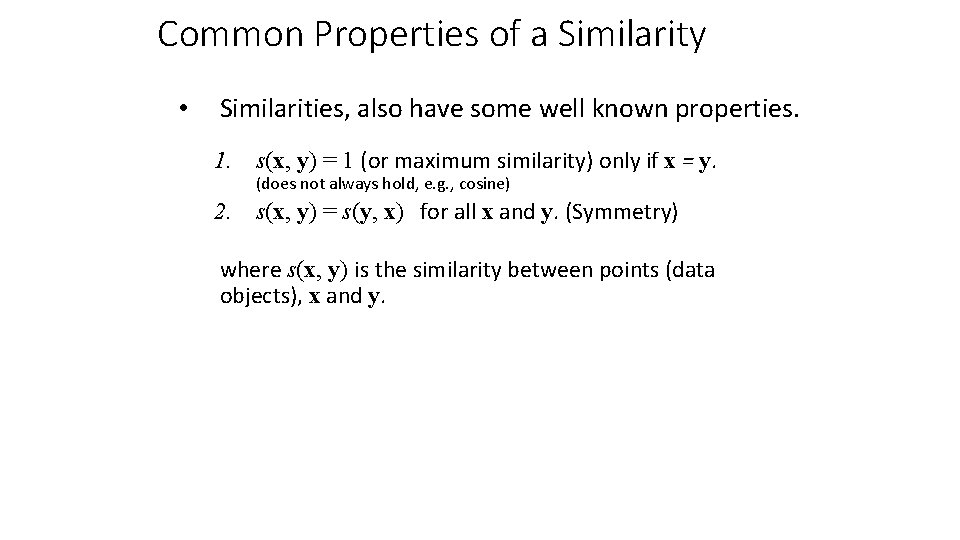

Common Properties of a Similarity

- Similarities also have some well-known properties:
  1. $s(x, y) = 1$ (or maximum similarity) only if $x = y$. (Does not always hold, e.g., for cosine similarity, which equals 1 for any two vectors that point in the same direction.)
  2. $s(x, y) = s(y, x)$ for all $x$ and $y$. (Symmetry)

  where $s(x, y)$ is the similarity between points (data objects) $x$ and $y$.

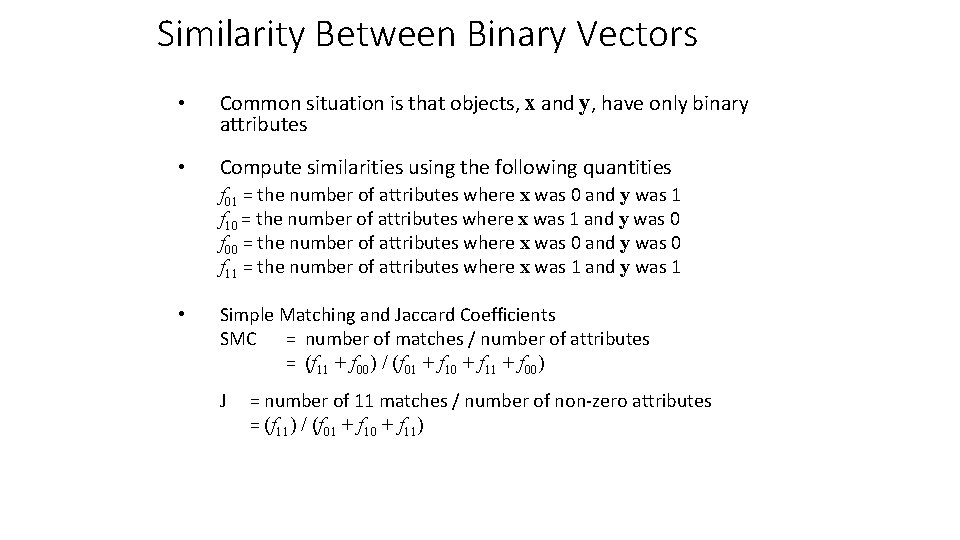

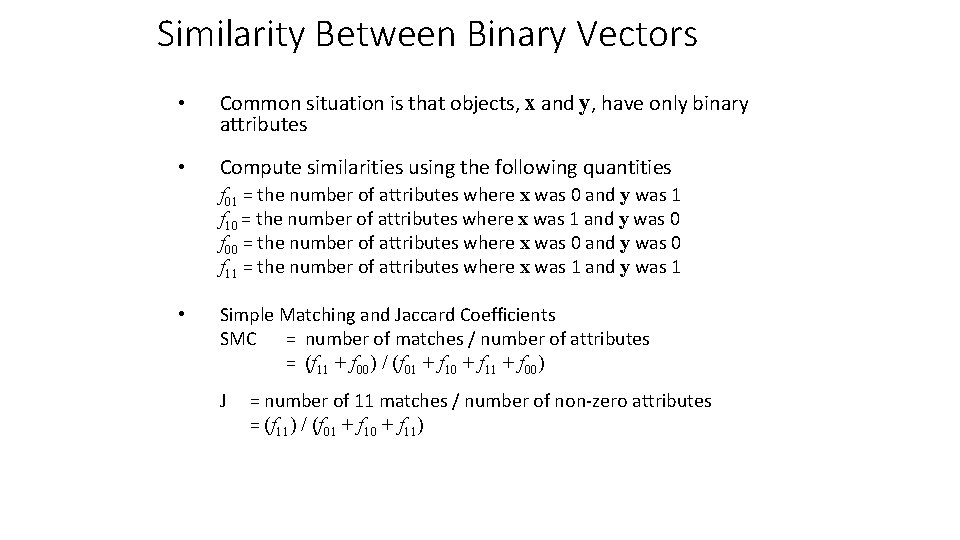

Similarity Between Binary Vectors

- A common situation is that objects $x$ and $y$ have only binary attributes.
- Compute similarities using the following quantities:
  - $f_{01}$ = the number of attributes where $x$ was 0 and $y$ was 1
  - $f_{10}$ = the number of attributes where $x$ was 1 and $y$ was 0
  - $f_{00}$ = the number of attributes where $x$ was 0 and $y$ was 0
  - $f_{11}$ = the number of attributes where $x$ was 1 and $y$ was 1
- Simple Matching and Jaccard Coefficients:
  - SMC = number of matches / number of attributes = $(f_{11} + f_{00}) / (f_{01} + f_{10} + f_{11} + f_{00})$
  - J = number of 11 matches / number of attributes that are not both zero = $f_{11} / (f_{01} + f_{10} + f_{11})$

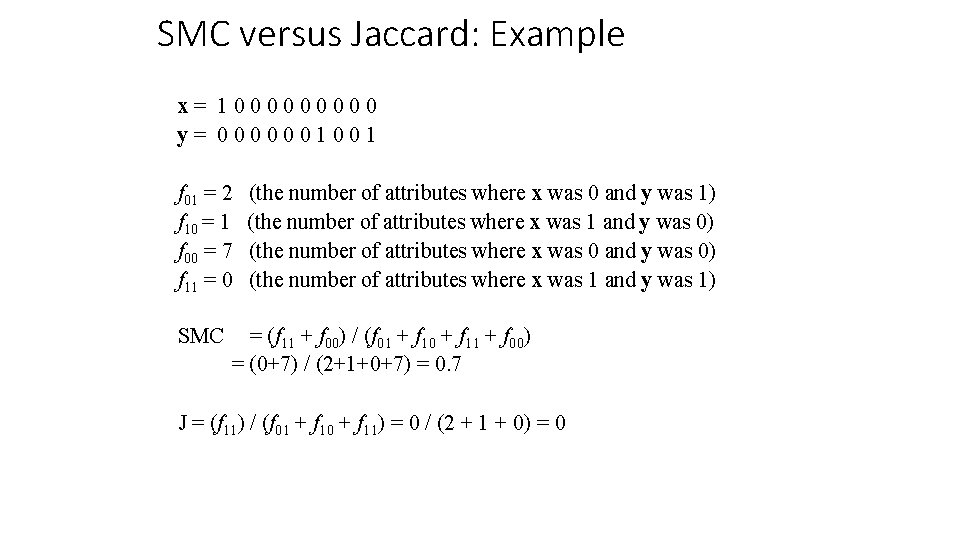

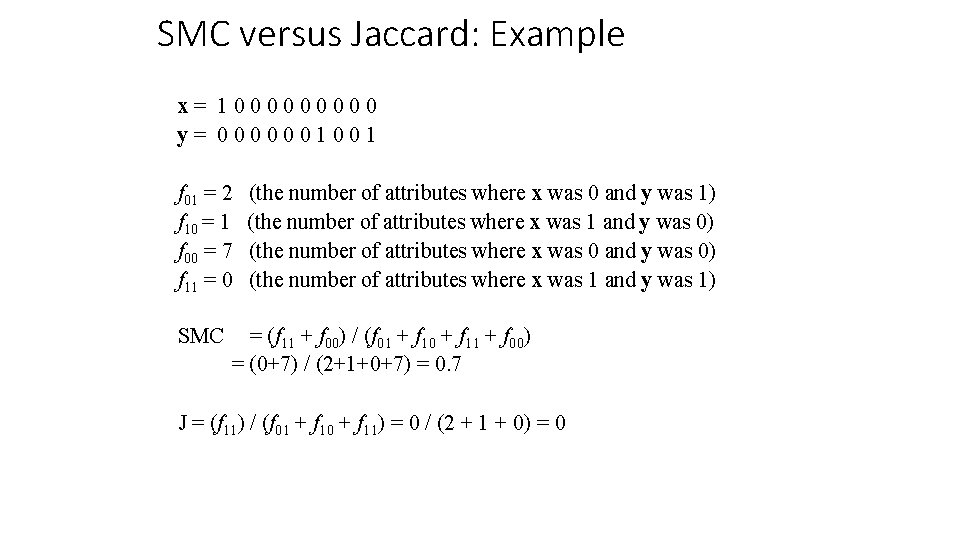

SMC versus Jaccard: Example

- x = 1 0 0 0 0 0 0 0 0 0
- y = 0 0 0 0 0 0 1 0 0 1
- $f_{01} = 2$ (the number of attributes where $x$ was 0 and $y$ was 1)
- $f_{10} = 1$ (the number of attributes where $x$ was 1 and $y$ was 0)
- $f_{00} = 7$ (the number of attributes where $x$ was 0 and $y$ was 0)
- $f_{11} = 0$ (the number of attributes where $x$ was 1 and $y$ was 1)

SMC = $(f_{11} + f_{00}) / (f_{01} + f_{10} + f_{11} + f_{00}) = (0 + 7) / (2 + 1 + 0 + 7) = 0.7$

J = $f_{11} / (f_{01} + f_{10} + f_{11}) = 0 / (2 + 1 + 0) = 0$
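The example can be reproduced with a short sketch. The function names (`binary_counts`, `smc`, `jaccard`) are illustrative, `x` is assumed to be the ten-attribute vector implied by the counts (a single 1 in the first position), and returning 0 for the Jaccard coefficient when both vectors are all zeros is an assumed convention.

```python
def binary_counts(x, y):
    """Count the four match/mismatch frequencies for two binary vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same number of attributes")
    counts = {"f01": 0, "f10": 0, "f00": 0, "f11": 0}
    for a, b in zip(x, y):
        counts[f"f{a}{b}"] += 1
    return counts


def smc(x, y):
    """Simple Matching Coefficient: matches over all attributes."""
    c = binary_counts(x, y)
    return (c["f11"] + c["f00"]) / len(x)


def jaccard(x, y):
    """Jaccard coefficient: 1-1 matches over attributes that are not both zero."""
    c = binary_counts(x, y)
    denom = c["f01"] + c["f10"] + c["f11"]
    # Assumed convention: two all-zero vectors get a Jaccard value of 0.
    return c["f11"] / denom if denom else 0.0


x = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
y = [0, 0, 0, 0, 0, 0, 1, 0, 0, 1]
print(binary_counts(x, y))  # {'f01': 2, 'f10': 1, 'f00': 7, 'f11': 0}
print(smc(x, y))            # 0.7
print(jaccard(x, y))        # 0.0
```

SMC is high here only because the seven 0-0 matches count as agreement; Jaccard ignores them, which is why it is usually preferred for sparse binary data.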

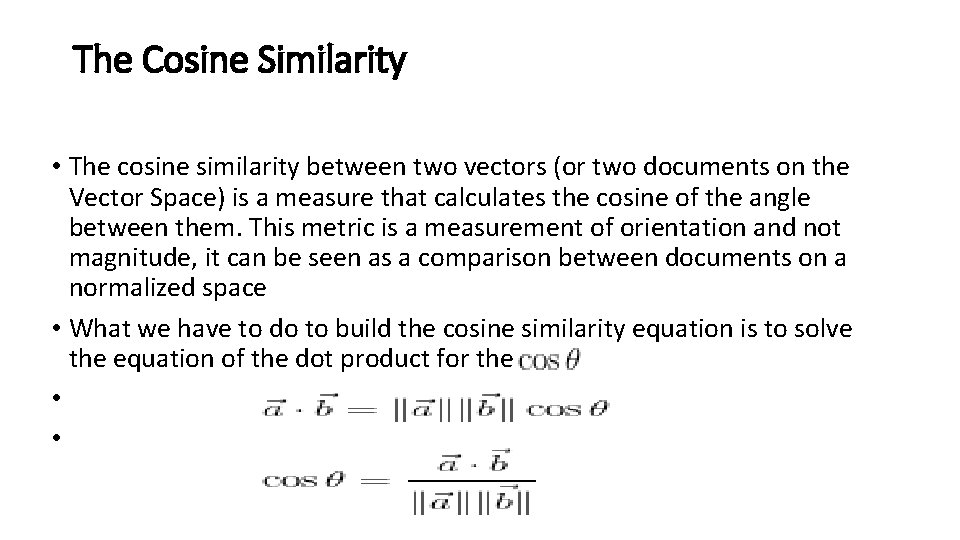

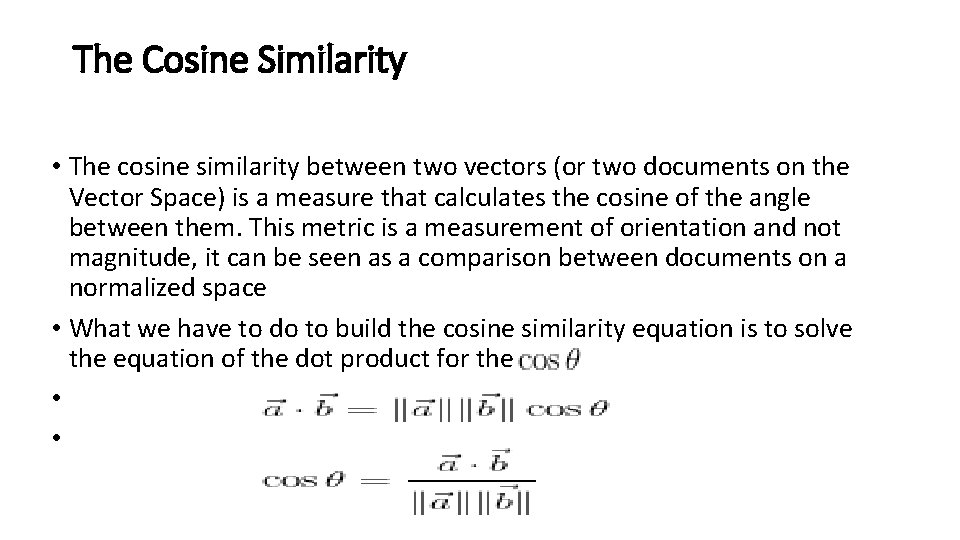

The Cosine Similarity

- The cosine similarity between two vectors (or two documents in the vector space) is a measure that calculates the cosine of the angle between them. It measures orientation, not magnitude, and can be seen as a comparison between documents in a normalized space.
- To build the cosine similarity equation, we solve the equation of the dot product for $\cos\theta$.
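A sketch of that step, assuming the standard geometric form of the dot product and generic vectors $\vec{a}$ and $\vec{b}$ with angle $\theta$ between them (the symbols are chosen here for illustration):

$$
\vec{a} \cdot \vec{b} = \|\vec{a}\| \, \|\vec{b}\| \cos\theta
\quad\Longrightarrow\quad
\cos\theta = \frac{\vec{a} \cdot \vec{b}}{\|\vec{a}\| \, \|\vec{b}\|}
$$

The division is only defined when neither vector has zero length.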

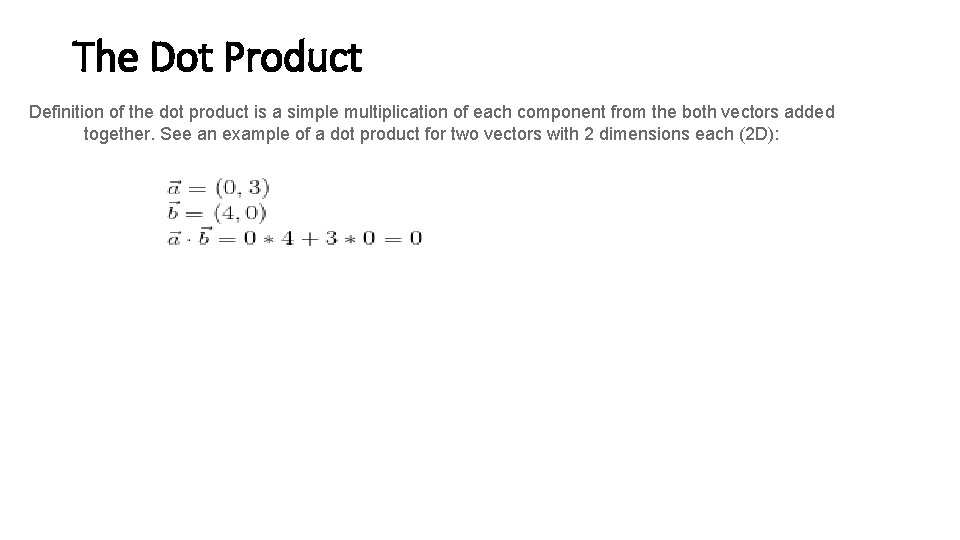

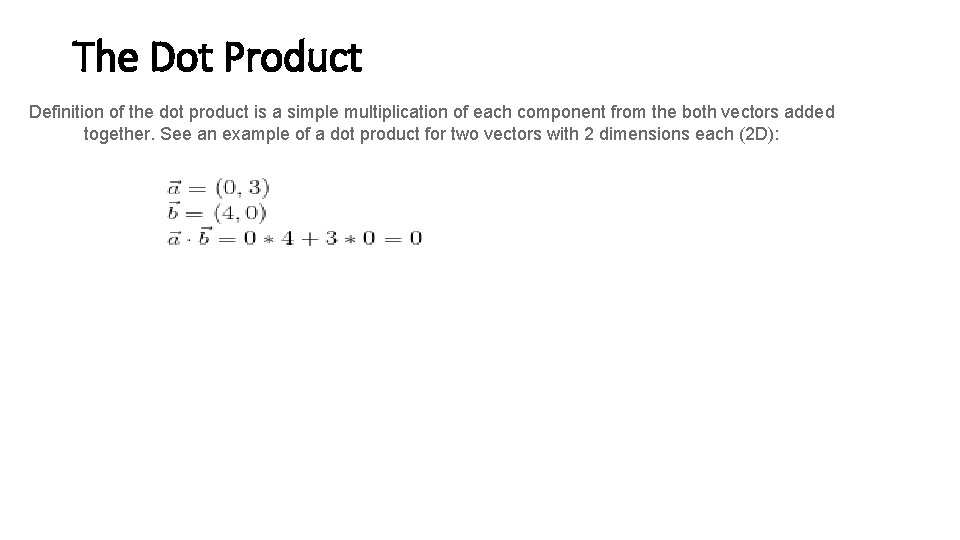

The Dot Product

The dot product is defined as the sum of the products of the corresponding components of the two vectors. See an example of a dot product for two vectors with 2 dimensions each (2D):

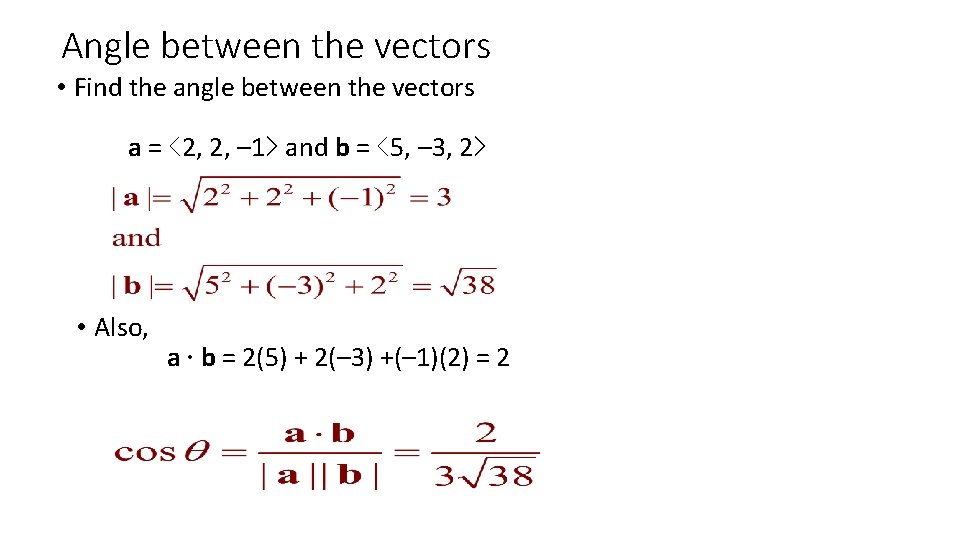

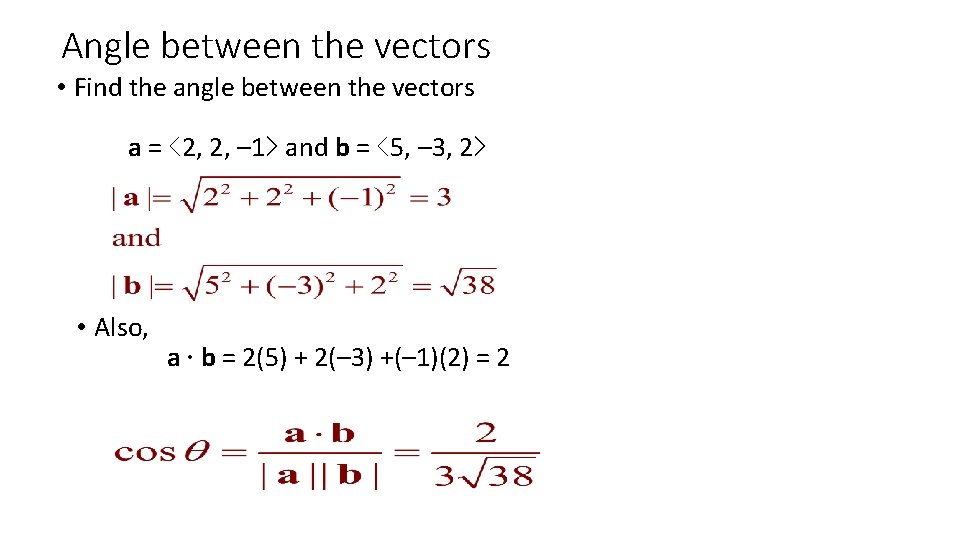
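The definition of the dot product translates directly into code. This is a minimal sketch: the function name `dot` and the two 2D vectors are hypothetical and are not taken from the slide's own example.

```python
def dot(a, b):
    """Sum of the products of corresponding components of a and b."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum(x * y for x, y in zip(a, b))


# Hypothetical 2D vectors: 1*4 + 3*(-2) = -2
a = (1, 3)
b = (4, -2)
print(dot(a, b))  # -2
```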

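Angle between the vectors

- Find the angle between the vectors $a = \langle 2, 2, -1 \rangle$ and $b = \langle 5, -3, 2 \rangle$.
- The lengths are $\|a\| = \sqrt{2^2 + 2^2 + (-1)^2} = 3$ and $\|b\| = \sqrt{5^2 + (-3)^2 + 2^2} = \sqrt{38}$.
- Also, $a \cdot b = 2(5) + 2(-3) + (-1)(2) = 2$.
- Therefore $\cos\theta = 2 / (3\sqrt{38})$, so $\theta \approx 1.46$ rad (about 84°).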
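The same calculation can be sketched in code. The helper name `angle_between` is an assumption made for illustration; the clamp before `math.acos` guards against rounding pushing the ratio slightly outside [-1, 1].

```python
import math


def angle_between(a, b):
    """Angle in radians between two non-zero vectors of equal dimension."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("the angle is undefined for a zero vector")
    # Clamp to the valid domain of acos to absorb floating-point rounding.
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_theta)


theta = angle_between((2, 2, -1), (5, -3, 2))
print(round(theta, 2), "rad")
print(round(math.degrees(theta), 1), "degrees")
```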

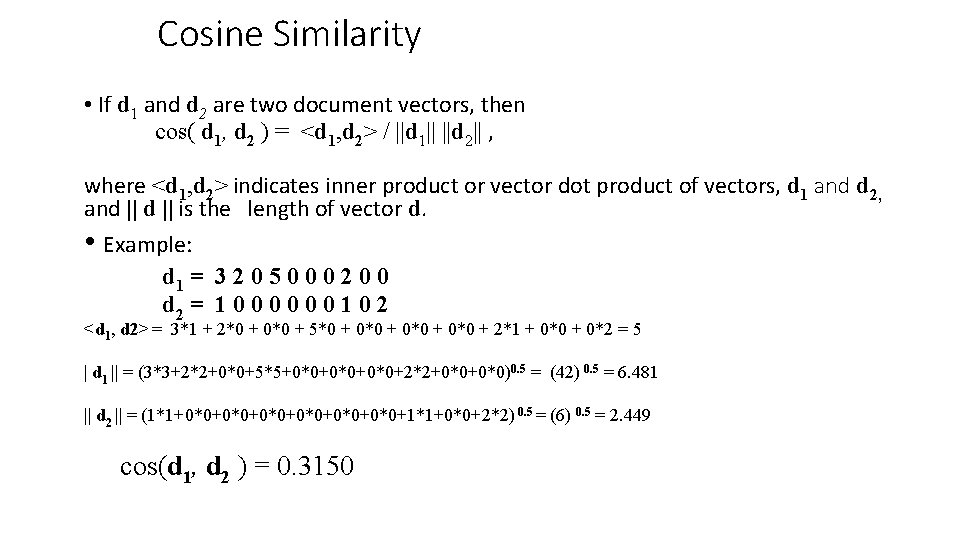

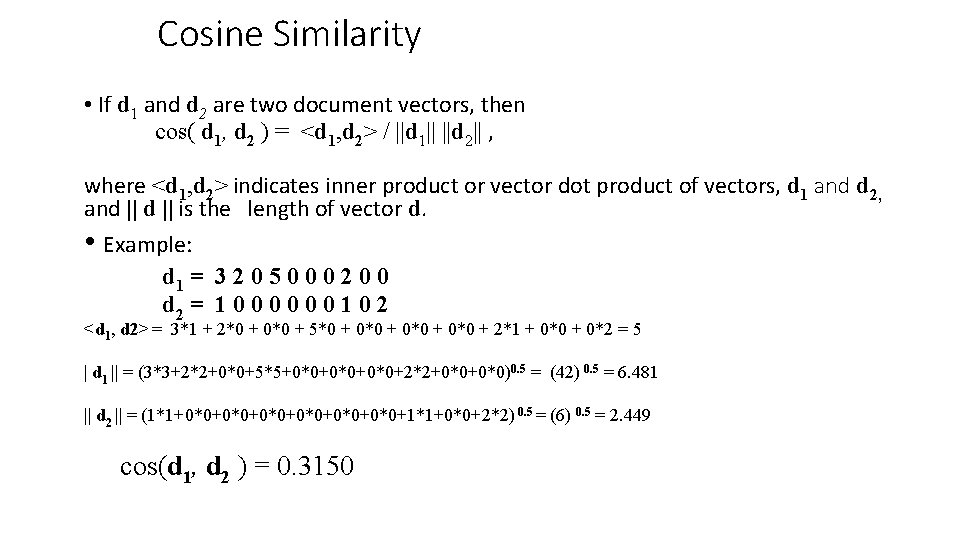

Cosine Similarity

- If $d_1$ and $d_2$ are two document vectors, then $\cos(d_1, d_2) = \langle d_1, d_2 \rangle / (\|d_1\| \, \|d_2\|)$, where $\langle d_1, d_2 \rangle$ indicates the inner product (vector dot product) of $d_1$ and $d_2$, and $\|d\|$ is the length of vector $d$.
- Example:
  - $d_1$ = 3 2 0 5 0 0 0 2 0 0
  - $d_2$ = 1 0 0 0 0 0 0 1 0 2
  - $\langle d_1, d_2 \rangle = 3 \cdot 1 + 2 \cdot 0 + 0 \cdot 0 + 5 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 0 \cdot 0 + 2 \cdot 1 + 0 \cdot 0 + 0 \cdot 2 = 5$
  - $\|d_1\| = (3^2 + 2^2 + 0^2 + 5^2 + 0^2 + 0^2 + 0^2 + 2^2 + 0^2 + 0^2)^{0.5} = (42)^{0.5} = 6.481$
  - $\|d_2\| = (1^2 + 0^2 + 0^2 + 0^2 + 0^2 + 0^2 + 0^2 + 1^2 + 0^2 + 2^2)^{0.5} = (6)^{0.5} = 2.449$
  - $\cos(d_1, d_2) = 5 / (6.481 \times 2.449) = 0.3150$
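A minimal sketch of the formula, applied to this example. The function name `cosine_similarity` is illustrative, and the two vectors are assumed to be the ten-component vectors implied by the stated inner product (5) and squared lengths (42 and 6).

```python
import math


def cosine_similarity(d1, d2):
    """Inner product of d1 and d2 divided by the product of their lengths."""
    if len(d1) != len(d2):
        raise ValueError("vectors must have the same dimension")
    inner = sum(a * b for a, b in zip(d1, d2))
    len1 = math.sqrt(sum(a * a for a in d1))
    len2 = math.sqrt(sum(b * b for b in d2))
    if len1 == 0 or len2 == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return inner / (len1 * len2)


d1 = [3, 2, 0, 5, 0, 0, 0, 2, 0, 0]
d2 = [1, 0, 0, 0, 0, 0, 0, 1, 0, 2]
print(round(cosine_similarity(d1, d2), 4))  # 0.315
```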

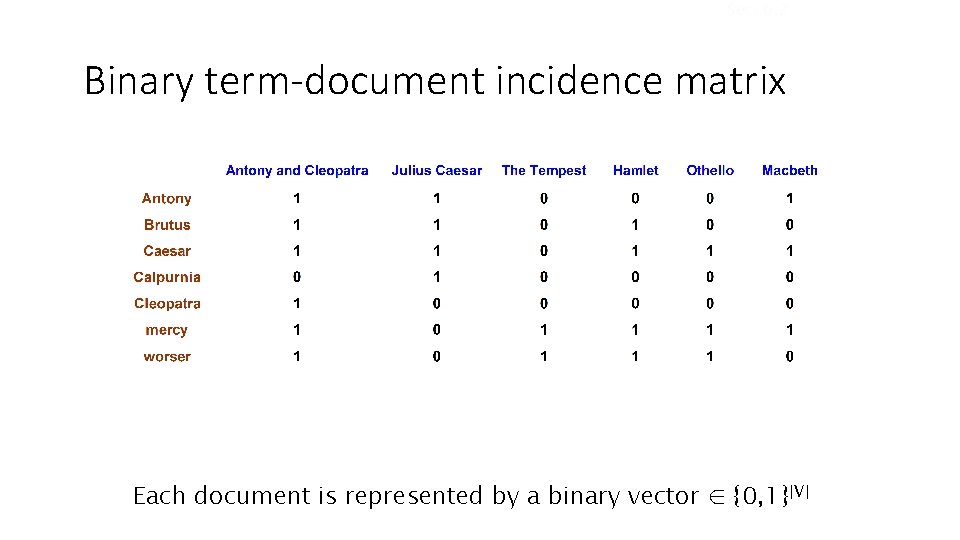

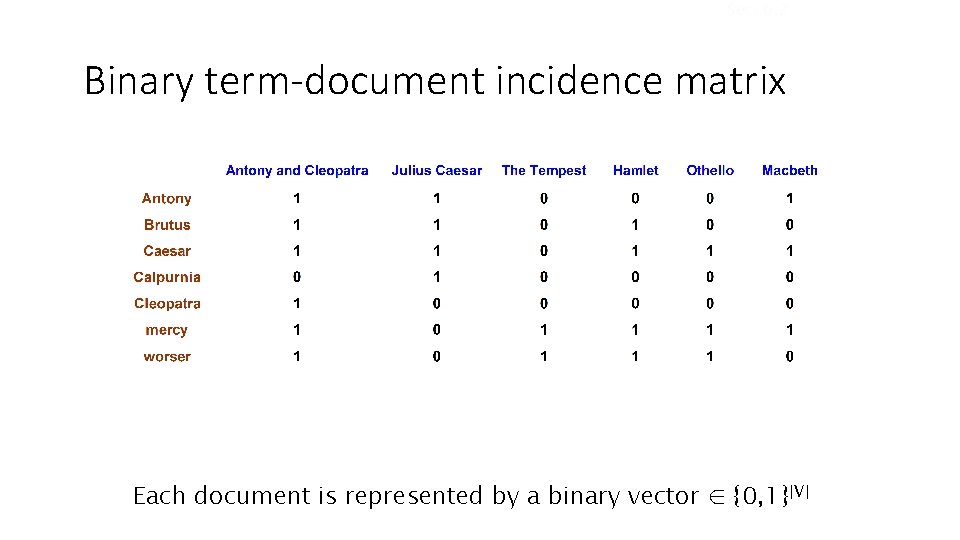

Sec. 6.2: Binary term-document incidence matrix

Each document is represented by a binary vector $\in \{0, 1\}^{|V|}$.

Sec. 6.2: Term-document count matrices

- Consider the number of occurrences of a term in a document.
- Each document is a count vector in $\mathbb{N}^{|V|}$: a column below.

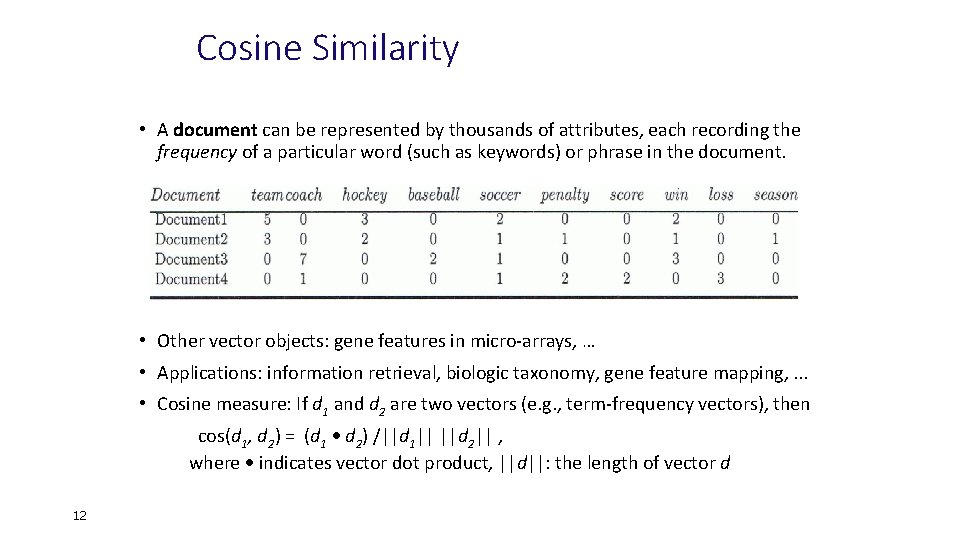

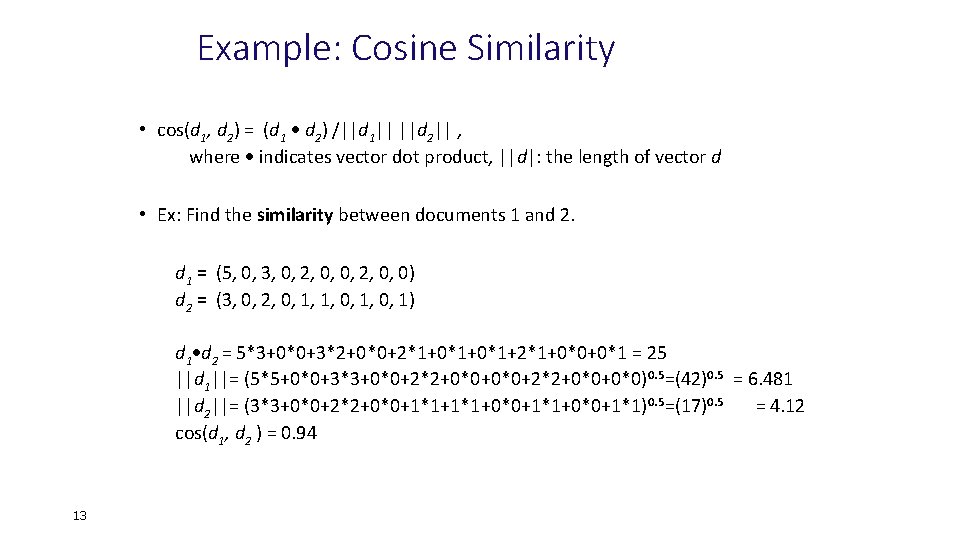

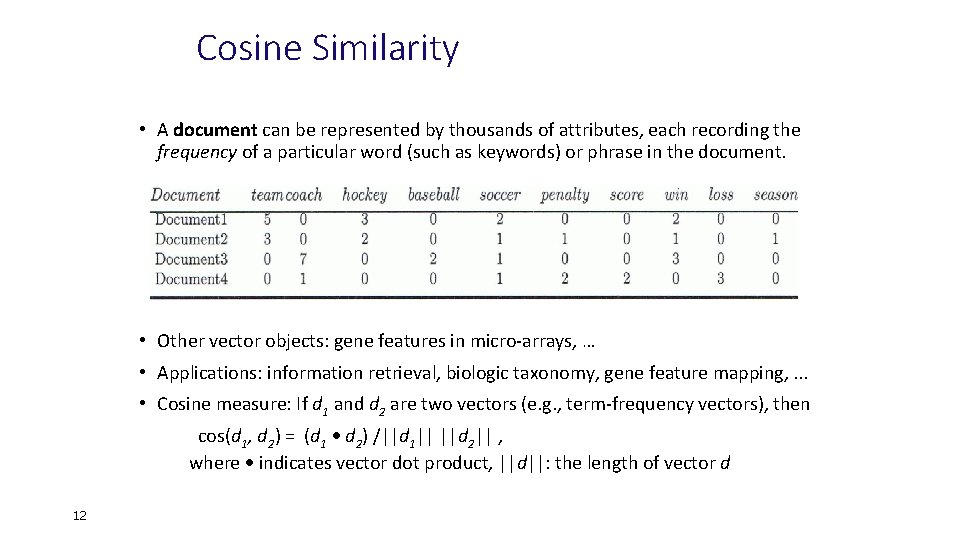
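The difference between the two representations can be sketched on a toy collection. The documents, the whitespace tokenization, and the helper names (`count_vector`, `binary_vector`) are all hypothetical; the vocabulary $V$ is simply the sorted set of terms that occur.

```python
from collections import Counter

# Hypothetical two-document collection (not from the slides).
docs = {
    "doc1": "caesar brutus caesar",
    "doc2": "brutus calpurnia",
}

# Vocabulary V: every distinct term, in a fixed (sorted) order.
vocab = sorted({term for text in docs.values() for term in text.split()})


def count_vector(text):
    """Count vector in N^|V|: occurrences of each vocabulary term."""
    counts = Counter(text.split())
    return [counts[term] for term in vocab]


def binary_vector(text):
    """Binary incidence vector in {0, 1}^|V|: 1 if the term occurs at all."""
    return [1 if n > 0 else 0 for n in count_vector(text)]


print(vocab)                        # ['brutus', 'caesar', 'calpurnia']
print(count_vector(docs["doc1"]))   # [1, 2, 0]
print(binary_vector(docs["doc1"]))  # [1, 1, 0]
```

Each vector corresponds to one column of the term-document matrix, with one entry per vocabulary term.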

Cosine Similarity

- A document can be represented by thousands of attributes, each recording the frequency of a particular word (such as a keyword) or phrase in the document.
- Other vector objects: gene features in micro-arrays, …
- Applications: information retrieval, biologic taxonomy, gene feature mapping, ...
- Cosine measure: if $d_1$ and $d_2$ are two vectors (e.g., term-frequency vectors), then $\cos(d_1, d_2) = (d_1 \cdot d_2) / (\|d_1\| \, \|d_2\|)$, where $\cdot$ indicates the vector dot product and $\|d\|$ is the length of vector $d$.

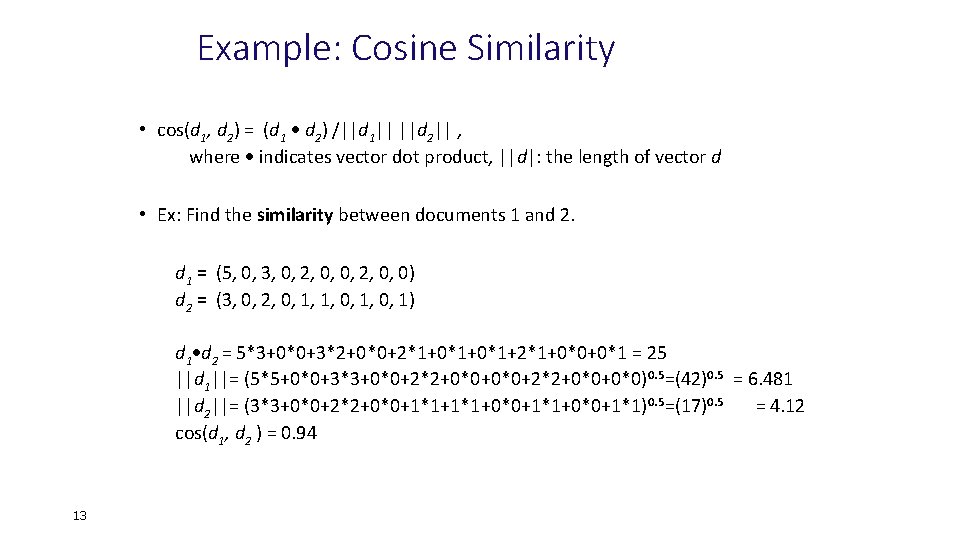

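Example: Cosine Similarity

- $\cos(d_1, d_2) = (d_1 \cdot d_2) / (\|d_1\| \, \|d_2\|)$, where $\cdot$ indicates the vector dot product and $\|d\|$ is the length of vector $d$.
- Ex: Find the similarity between documents 1 and 2.
  - $d_1 = (5, 0, 3, 0, 2, 0, 0, 2, 0, 0)$
  - $d_2 = (3, 0, 2, 0, 1, 1, 0, 1, 0, 1)$
  - $d_1 \cdot d_2 = 5 \cdot 3 + 0 \cdot 0 + 3 \cdot 2 + 0 \cdot 0 + 2 \cdot 1 + 0 \cdot 1 + 0 \cdot 0 + 2 \cdot 1 + 0 \cdot 0 + 0 \cdot 1 = 25$
  - $\|d_1\| = (5^2 + 0^2 + 3^2 + 0^2 + 2^2 + 0^2 + 0^2 + 2^2 + 0^2 + 0^2)^{0.5} = (42)^{0.5} = 6.481$
  - $\|d_2\| = (3^2 + 0^2 + 2^2 + 0^2 + 1^2 + 1^2 + 0^2 + 1^2 + 0^2 + 1^2)^{0.5} = (17)^{0.5} = 4.12$
  - $\cos(d_1, d_2) = 25 / (6.481 \times 4.12) = 0.94$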
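Because a real term-frequency vector has thousands of attributes that are mostly zero, it is often stored sparsely. The sketch below assumes a dictionary from attribute index to non-zero frequency; the helper names (`to_sparse`, `sparse_cosine`), the ten-component form of the example vectors, and returning 0 for an empty document are assumptions for illustration.

```python
import math


def to_sparse(vec):
    """Keep only the non-zero entries as {index: value}."""
    return {i: v for i, v in enumerate(vec) if v != 0}


def sparse_cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dictionaries."""
    if len(u) > len(v):
        u, v = v, u  # iterate over the shorter dictionary
    dot = sum(val * v.get(i, 0) for i, val in u.items())
    norm_u = math.sqrt(sum(val * val for val in u.values()))
    norm_v = math.sqrt(sum(val * val for val in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # assumed convention for an empty document
    return dot / (norm_u * norm_v)


d1 = to_sparse([5, 0, 3, 0, 2, 0, 0, 2, 0, 0])
d2 = to_sparse([3, 0, 2, 0, 1, 1, 0, 1, 0, 1])
print(round(sparse_cosine(d1, d2), 2))  # 0.94
```

Only indices present in both dictionaries contribute to the dot product, so the cost depends on the number of non-zero terms rather than on the vocabulary size.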
