Discriminative Training for Large Vocabulary Speech Recognition

Daniel Povey, Peterhouse, University of Cambridge, Ph.D. Dissertation

Jen-Wei Kuo

Outline
- Objective Functions – From Minimum Bayes Risk
- Function Maximization
- Update Formula
- MAP updates
- Implementation

2020/11/4 Speech Lab. NTNU

Notations

![Minimum Bayes Risk: decoding and training](http://slidetodoc.com/presentation_image/50eb33be476acfaa6f8553397d4af70e/image-4.jpg)

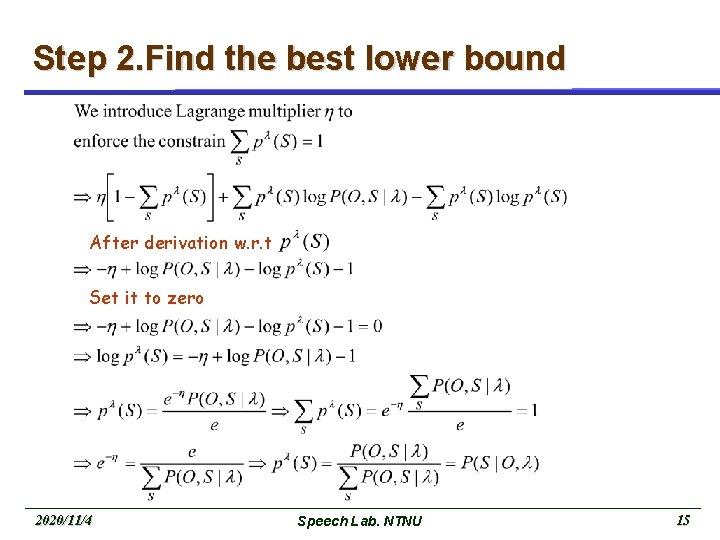

Minimum Bayes Risk
- Decoding: conventional MAP decoding, hypothesis testing, WER minimization (sausage), MBR recognition …
- Training: overall risk [classifier design]
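To make the decoding side concrete, here is a minimal sketch of MBR decoding over a small N-best list. The function names, the N-best representation (a dictionary from word string to posterior), and the toy posteriors are all assumptions made for illustration; they are not taken from the slides.

```python
from typing import Callable, Dict, Sequence


def levenshtein(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Word-level edit distance (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1]


def word_loss(a: str, b: str) -> int:
    """Levenshtein loss between two space-separated word strings."""
    return levenshtein(a.split(), b.split())


def zero_one_loss(a: str, b: str) -> int:
    """Sentence-level zero-one loss."""
    return int(a != b)


def map_decode(posteriors: Dict[str, float]) -> str:
    """Conventional MAP decoding: pick the most probable hypothesis."""
    return max(posteriors, key=posteriors.get)


def mbr_decode(posteriors: Dict[str, float],
               loss: Callable[[str, str], int] = word_loss) -> str:
    """Pick the hypothesis with the smallest expected loss (Bayes risk)."""
    def risk(candidate: str) -> float:
        return sum(p * loss(other, candidate) for other, p in posteriors.items())
    return min(posteriors, key=risk)


# Hypothetical N-best list with posteriors that sum to one.
nbest = {"a b c": 0.40, "a x d": 0.35, "a x e": 0.25}
map_choice = map_decode(nbest)
mbr_choice = mbr_decode(nbest)
```

With the zero-one loss the risk of a candidate is one minus its posterior, so `mbr_decode(nbest, zero_one_loss)` selects the same hypothesis as `map_decode`. With the Levenshtein loss the two can differ, as they do on this toy list.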

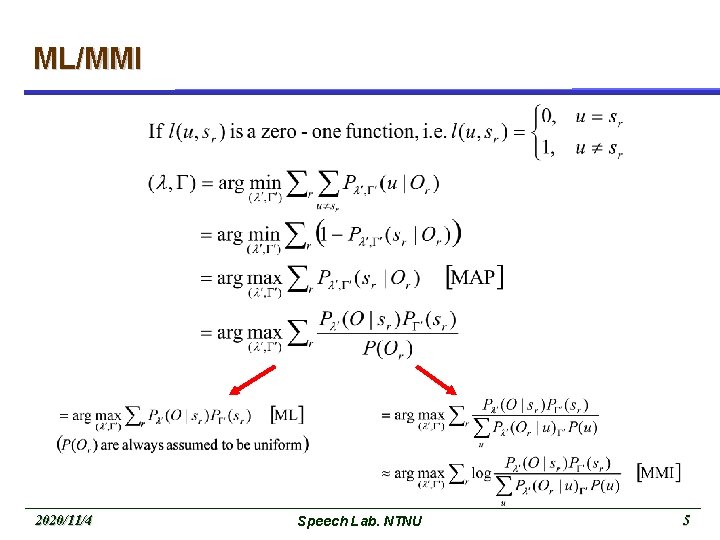

ML/MMI

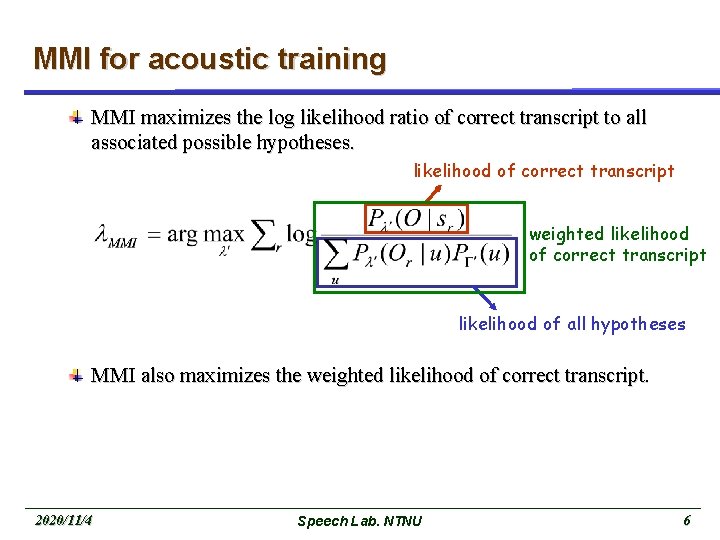

MMI for acoustic training

MMI maximizes the log likelihood ratio of the correct transcript to all associated possible hypotheses. Equation annotations: likelihood of the correct transcript; weighted likelihood of the correct transcript; likelihood of all hypotheses.

MMI also maximizes the weighted likelihood of the correct transcript.
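The ratio described above can be sketched for a single utterance as follows. The dictionaries of per-hypothesis acoustic log-likelihoods and language-model log-probabilities, the acoustic scale `kappa`, and the function names are assumptions for illustration; the slide's own equation is not recoverable here.

```python
import math
from typing import Dict, List


def log_sum_exp(values: List[float]) -> float:
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def mmi_objective(acoustic_loglik: Dict[str, float],
                  lm_logprob: Dict[str, float],
                  correct: str,
                  kappa: float = 1.0) -> float:
    """log of p(O|s_ref)^kappa P(s_ref) over the sum of the same term for all hypotheses.

    The hypothesis set must include the correct transcript, so the value is
    the log posterior of the correct transcript and is never positive.
    """
    scores = {s: kappa * acoustic_loglik[s] + lm_logprob[s] for s in acoustic_loglik}
    return scores[correct] - log_sum_exp(list(scores.values()))


# Hypothetical two-hypothesis example with a uniform language model.
lm = {"ref": math.log(0.5), "alt": math.log(0.5)}
tied = mmi_objective({"ref": -100.0, "alt": -100.0}, lm, "ref")
improved = mmi_objective({"ref": -95.0, "alt": -100.0}, lm, "ref")
```

Raising the likelihood of the correct transcript relative to its competitors moves the criterion toward zero, which is the behaviour the slide describes.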

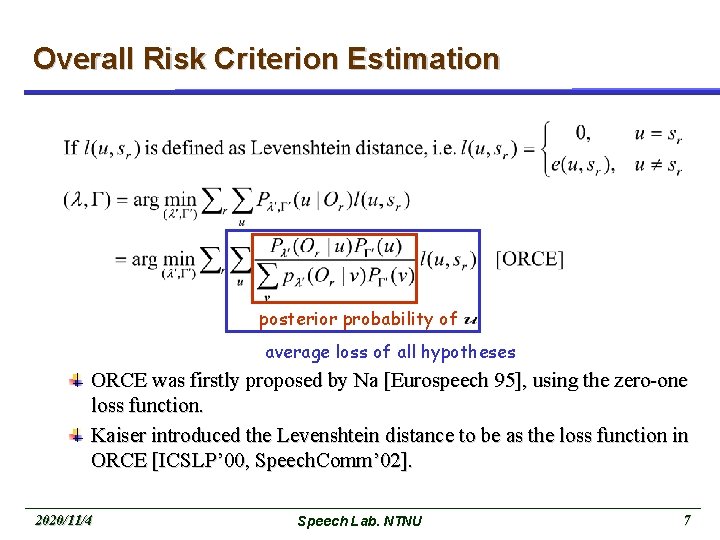

Overall Risk Criterion Estimation (ORCE)

Equation annotations: posterior probability of a hypothesis; average loss of all hypotheses.

ORCE was first proposed by Na [Eurospeech '95], using the zero-one loss function. Kaiser introduced the Levenshtein distance as the loss function in ORCE [ICSLP '00, Speech Comm. '02].
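One way to write the criterion the annotations describe is given below. The notation is an assumption and may differ from the slide: $O_r$ is the $r$-th training utterance, $s_r$ its reference transcript, $s$ ranges over competing hypotheses, and $\ell$ is the loss function.

$$
F_{\mathrm{ORCE}}(\lambda) \;=\; \sum_{r} \sum_{s} P_\lambda(s \mid O_r)\,\ell(s, s_r)
$$

Training minimizes this posterior-weighted average loss. Choosing $\ell$ as the zero-one loss or as the Levenshtein distance gives the two variants mentioned above.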

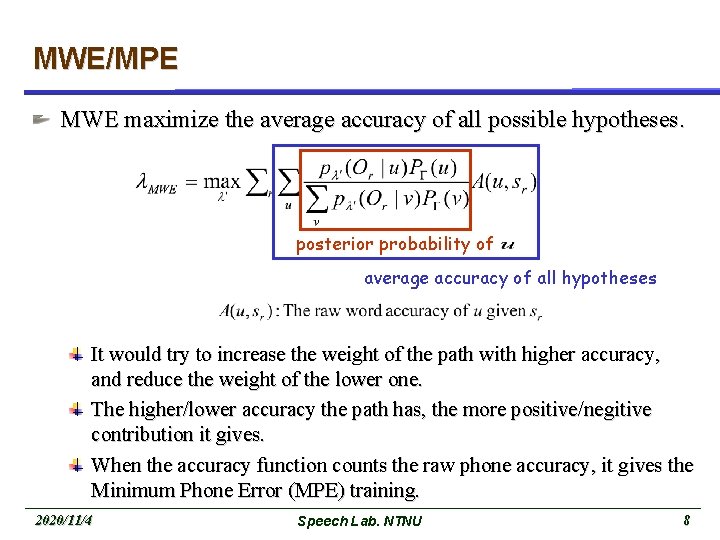

MWE/MPE

MWE maximizes the average accuracy of all possible hypotheses. Equation annotations: posterior probability of a hypothesis; average accuracy of all hypotheses.

It tries to increase the weight of paths with higher accuracy and to reduce the weight of paths with lower accuracy. The higher (lower) the accuracy of a path, the more positive (negative) its contribution.

When the accuracy function counts raw phone accuracy, the criterion becomes Minimum Phone Error (MPE) training.
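The "positive/negative contribution" statement can be checked on an N-best list. The sketch below is an illustration rather than the lattice-based procedure used in practice: hypothesis posteriors are assumed to be a softmax of log scores, and all names and numbers are hypothetical.

```python
import math
from typing import Dict


def posteriors(log_scores: Dict[str, float]) -> Dict[str, float]:
    """Normalize hypothesis log scores into posterior probabilities."""
    m = max(log_scores.values())
    z = sum(math.exp(v - m) for v in log_scores.values())
    return {s: math.exp(v - m) / z for s, v in log_scores.items()}


def expected_accuracy(log_scores: Dict[str, float],
                      accuracy: Dict[str, float]) -> float:
    """Posterior-weighted average accuracy over all hypotheses."""
    post = posteriors(log_scores)
    return sum(post[s] * accuracy[s] for s in post)


def score_gradients(log_scores: Dict[str, float],
                    accuracy: Dict[str, float]) -> Dict[str, float]:
    """Derivative of the expected accuracy w.r.t. each hypothesis log score.

    For a softmax posterior this is P(s) * (A(s) - average accuracy), so
    hypotheses above the average get a positive sign and those below a
    negative one.
    """
    post = posteriors(log_scores)
    avg = sum(post[s] * accuracy[s] for s in post)
    return {s: post[s] * (accuracy[s] - avg) for s in post}


# Hypothetical three-hypothesis example.
scores = {"h1": -1.0, "h2": -1.0, "h3": -2.0}
acc = {"h1": 3.0, "h2": 1.0, "h3": 2.0}
grads = score_gradients(scores, acc)
```

Here `h1` is more accurate than the average and receives a positive gradient, `h2` is less accurate and receives a negative one, and the gradients sum to zero because the posteriors are normalized.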

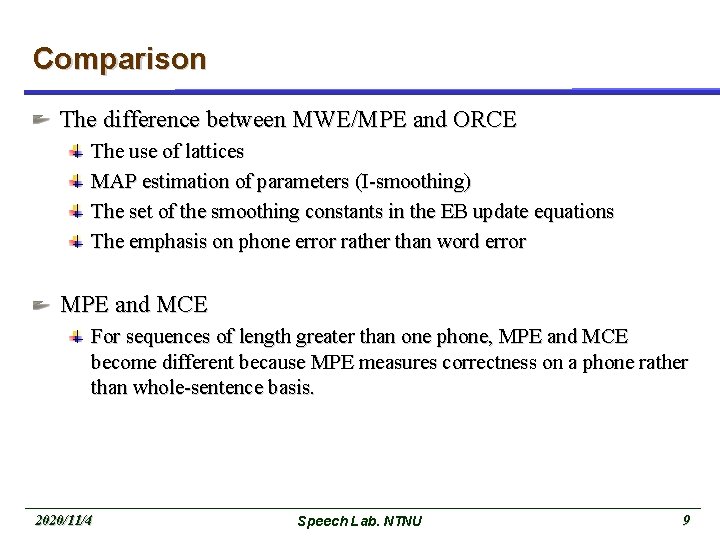

Comparison
- The difference between MWE/MPE and ORCE
  - The use of lattices
  - MAP estimation of parameters (I-smoothing)
  - The setting of the smoothing constants in the EB update equations
  - The emphasis on phone error rather than word error
- MPE and MCE
  - For sequences longer than one phone, MPE and MCE differ because MPE measures correctness on a per-phone rather than a whole-sentence basis.

![Expectation Maximization [Berlin Chen]](http://slidetodoc.com/presentation_image/50eb33be476acfaa6f8553397d4af70e/image-10.jpg)

Expectation Maximization [Berlin Chen]: how is it done?

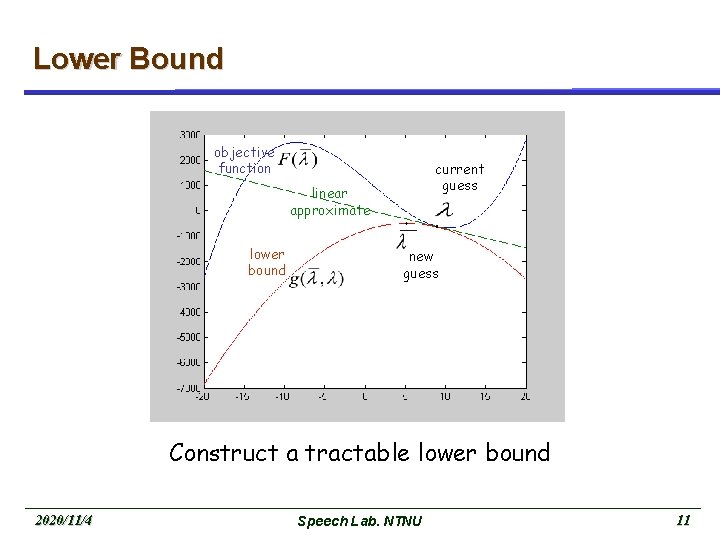

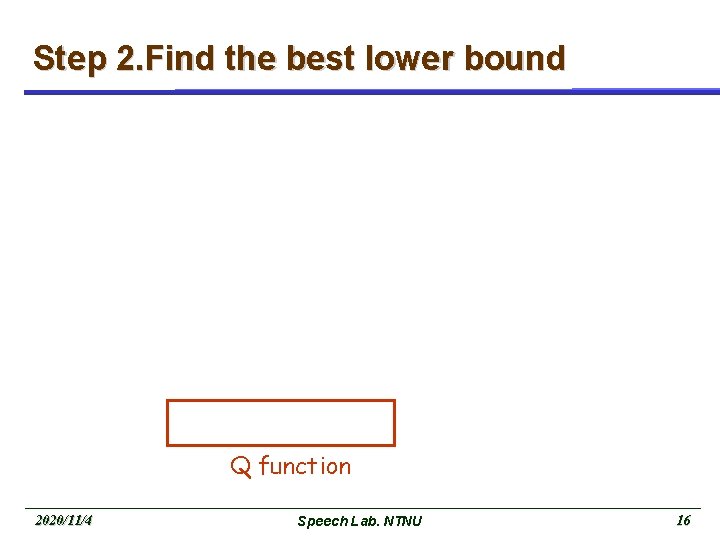

Lower Bound

Construct a tractable lower bound. Figure labels: objective function, current guess, linear approximate lower bound, new guess.

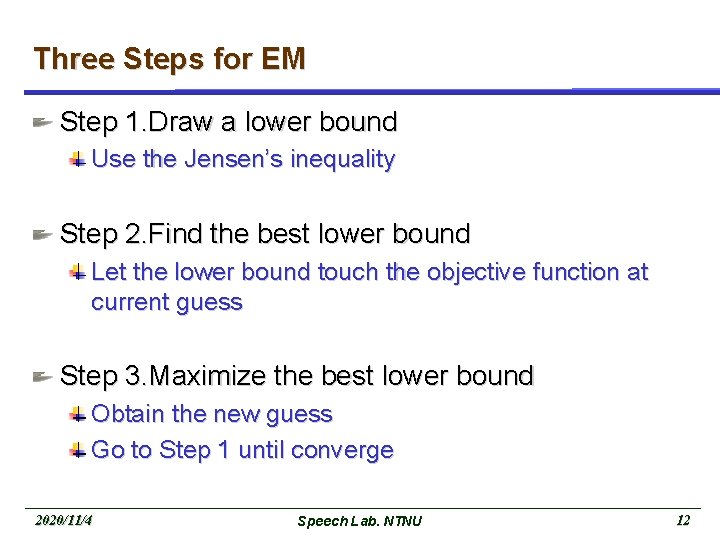

Three Steps for EM
- Step 1. Draw a lower bound: use Jensen's inequality.
- Step 2. Find the best lower bound: let the lower bound touch the objective function at the current guess.
- Step 3. Maximize the best lower bound: obtain the new guess.
- Go to Step 1 until convergence.
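The three steps can be sketched on a toy problem. The model below (an equal-weight mixture of two unit-variance 1-D Gaussians whose means are the only unknowns), the data, and every function name are assumptions chosen to keep the example short; they do not come from the slides.

```python
import math
from typing import List, Tuple


def normal_pdf(x: float, mean: float) -> float:
    """Unit-variance Gaussian density."""
    return math.exp(-0.5 * (x - mean) ** 2) / math.sqrt(2.0 * math.pi)


def log_likelihood(data: List[float], means: List[float]) -> float:
    """Objective: log-likelihood of the equal-weight mixture."""
    return sum(math.log(sum(0.5 * normal_pdf(x, m) for m in means)) for x in data)


def em_step(data: List[float], means: List[float]) -> List[float]:
    # Steps 1-2: the tightest Jensen bound uses the posterior of each
    # component given the current guess (the responsibilities).
    resp = []
    for x in data:
        joint = [0.5 * normal_pdf(x, m) for m in means]
        total = sum(joint)
        resp.append([j / total for j in joint])
    # Step 3: maximize the bound; for the means this is a weighted average.
    return [
        sum(r[k] * x for r, x in zip(resp, data)) / sum(r[k] for r in resp)
        for k in range(len(means))
    ]


def run_em(data: List[float], means: List[float],
           iterations: int = 20) -> Tuple[List[float], List[float]]:
    """Iterate EM and record the objective after every step."""
    history = [log_likelihood(data, means)]
    for _ in range(iterations):
        means = em_step(data, means)
        history.append(log_likelihood(data, means))
    return means, history


toy_data = [-2.1, -1.9, -2.0, 1.8, 2.2, 2.0]
final_means, objective_history = run_em(toy_data, [-0.5, 0.5])
```

Because each new guess maximizes a bound that touches the objective at the previous guess, the recorded log-likelihood never decreases from one iteration to the next.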

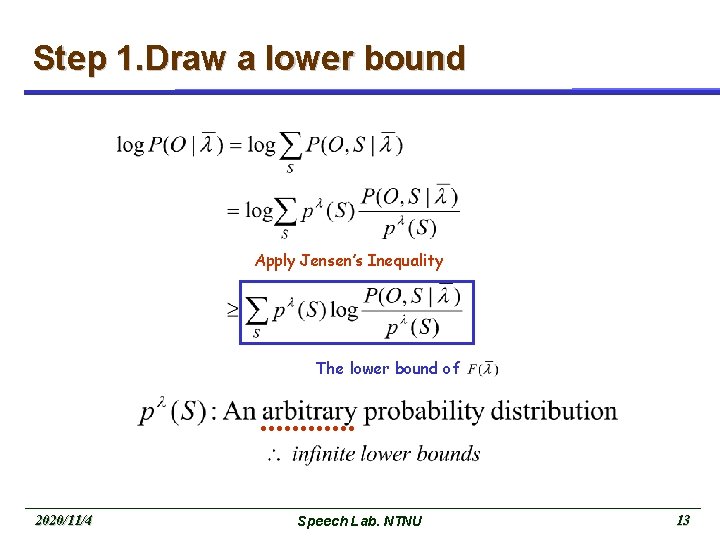

Step 1. Draw a lower bound

Apply Jensen's inequality to obtain the lower bound of the objective function.
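For reference, the standard form of this bound is shown below. The notation is assumed rather than taken from the slide: $O$ are the observations, $z$ the hidden variables, $\theta$ the parameters, and $q(z)$ any distribution over $z$.

$$
\log p(O;\theta)
\;=\; \log \sum_{z} q(z)\,\frac{p(O,z;\theta)}{q(z)}
\;\ge\; \sum_{z} q(z)\,\log \frac{p(O,z;\theta)}{q(z)}
\;\equiv\; B(\theta, q)
$$

The inequality holds because the logarithm is concave, so the log of an average is at least the average of the logs.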

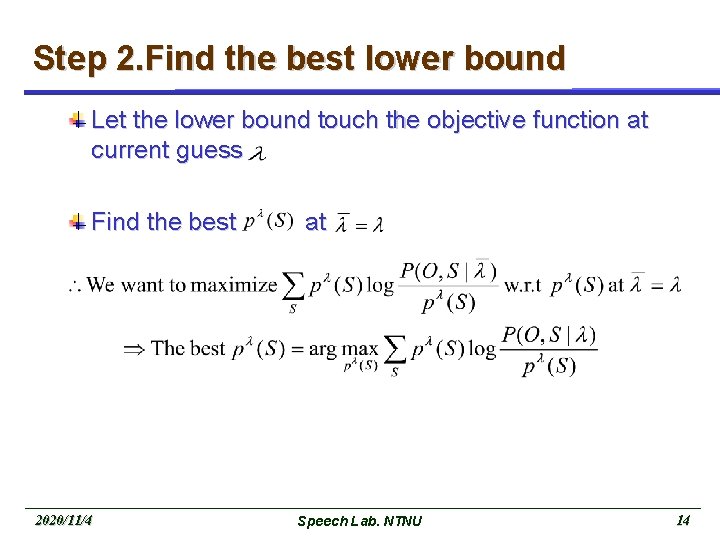

Step 2. Find the best lower bound

Let the lower bound touch the objective function at the current guess, and find the best bound at that point.

Step 2. Find the best lower bound (continued)

Take the derivative of the bound and set it to zero.

Step 2. Find the best lower bound (continued)

Q function
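A sketch of where the Q function comes from, in assumed notation ($O$ observations, $z$ hidden variables, $\theta^{(t)}$ the current guess, $q(z)$ the distribution that defines the Jensen bound, $\mu$ a Lagrange multiplier). Maximizing the bound over $q$ subject to $\sum_z q(z) = 1$ and setting the derivative to zero gives

$$
\frac{\partial}{\partial q(z)}\Big[\sum_{z'} q(z')\log\frac{p(O,z';\theta^{(t)})}{q(z')} + \mu\Big(1-\sum_{z'} q(z')\Big)\Big]
= \log p(O,z;\theta^{(t)}) - \log q(z) - 1 - \mu = 0
\;\;\Rightarrow\;\;
q(z) = p(z \mid O;\theta^{(t)})
$$

Substituting this $q$ back into the bound leaves one term that depends on $\theta$, which is the Q function, plus an entropy term that does not:

$$
Q(\theta,\theta^{(t)}) = \sum_{z} p(z \mid O;\theta^{(t)})\,\log p(O,z;\theta)
$$

With this choice the bound equals $\log p(O;\theta^{(t)})$ at $\theta=\theta^{(t)}$, which is the "touching" condition of Step 2.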

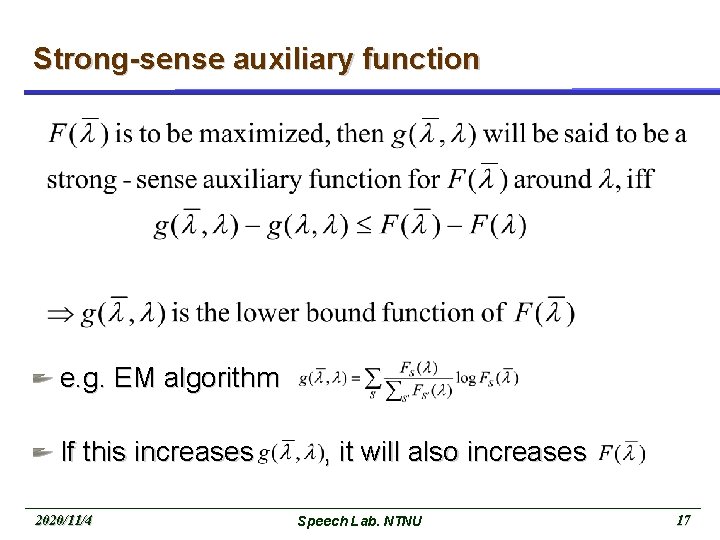

Strong-sense auxiliary function (e.g. the EM algorithm)

If the auxiliary function increases, the objective function will also increase.

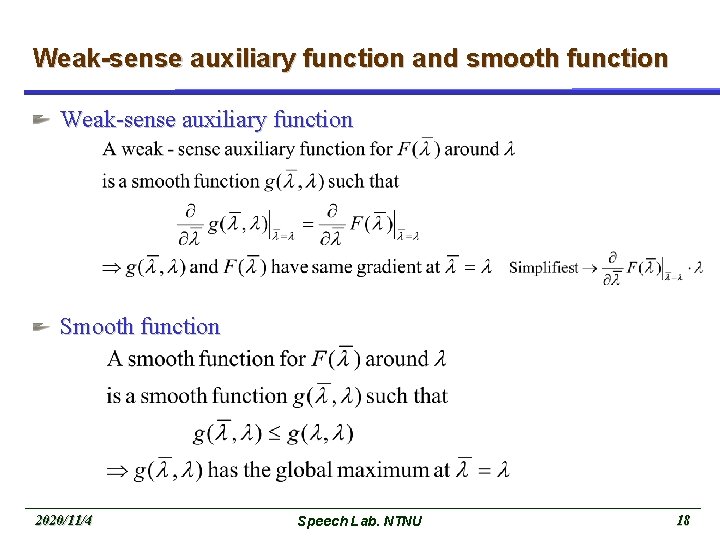

Weak-sense auxiliary function and smooth function
- Weak-sense auxiliary function
- Smooth function
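The definitions can be summarized as follows, in notation assumed here: $F(\lambda)$ is the objective, $\lambda'$ the current parameters, $G(\lambda,\lambda')$ an auxiliary function, and $G^{sm}(\lambda,\lambda')$ a smoothing function.

$$
\text{Strong sense:}\quad G(\lambda,\lambda') - G(\lambda',\lambda') \;\le\; F(\lambda) - F(\lambda')
$$

$$
\text{Weak sense:}\quad \left.\frac{\partial}{\partial\lambda} G(\lambda,\lambda')\right|_{\lambda=\lambda'} = \left.\frac{\partial}{\partial\lambda} F(\lambda)\right|_{\lambda=\lambda'}
$$

$$
\text{Smoothing function:}\quad \arg\max_{\lambda} G^{sm}(\lambda,\lambda') = \lambda'
$$

A smoothing function has zero gradient at $\lambda'$, so adding it to a weak-sense auxiliary function leaves the weak-sense property intact while keeping the maximum closer to the current parameters.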

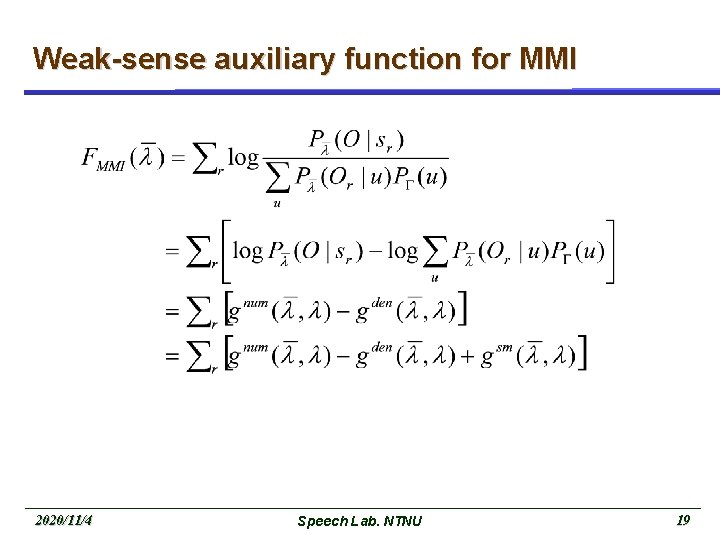

Weak-sense auxiliary function for MMI

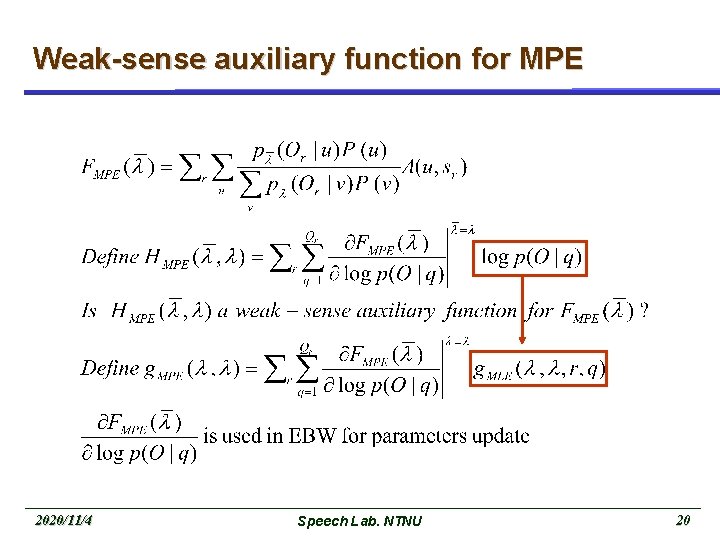

Weak-sense auxiliary function for MPE

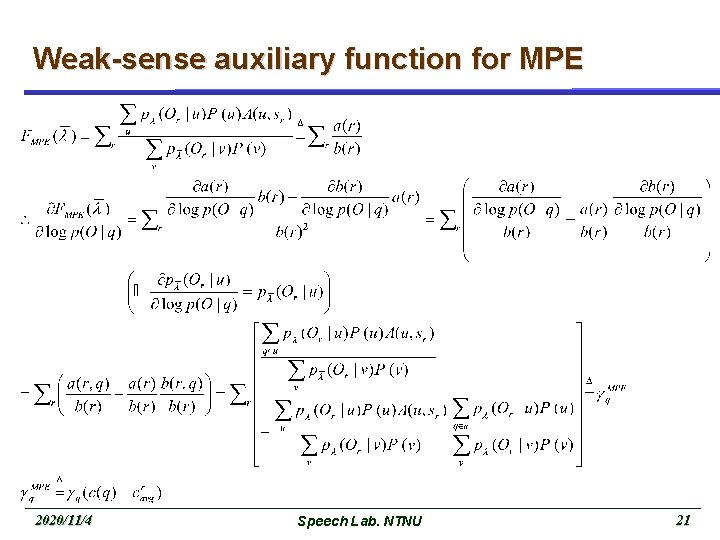

Weak-sense auxiliary function for MPE (continued)

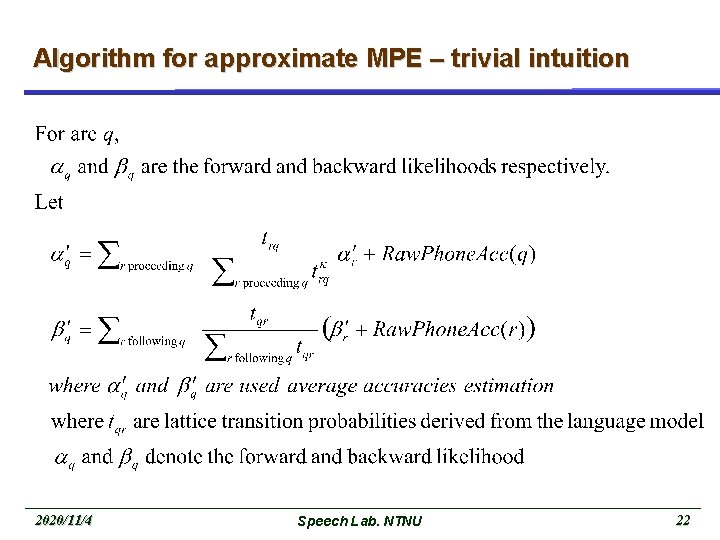

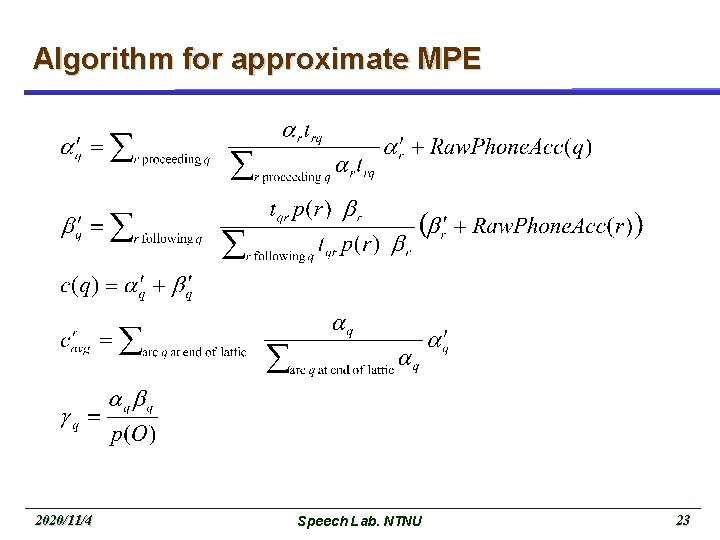

Algorithm for approximate MPE – trivial intuition

Algorithm for approximate MPE

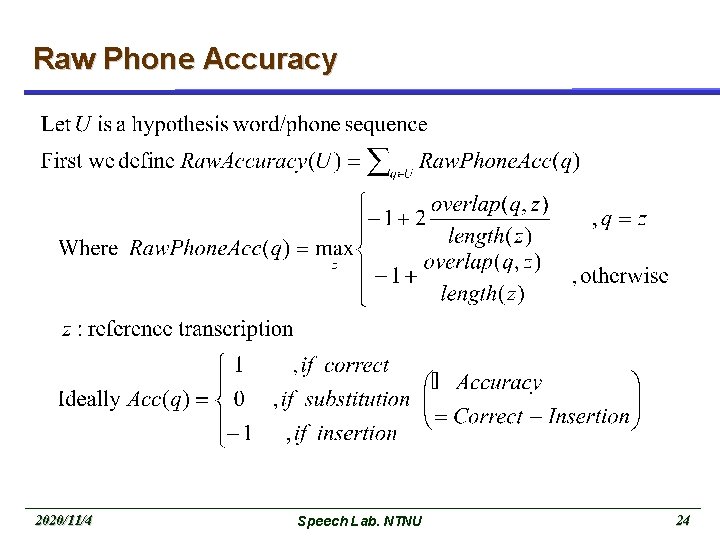

Raw Phone Accuracy

![Illustration of computation for Raw Phone Accuracy [Povey 2004]](http://slidetodoc.com/presentation_image/50eb33be476acfaa6f8553397d4af70e/image-25.jpg)

Illustration of computation for Raw Phone Accuracy [Povey 2004]
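A minimal sketch of one common approximation to the per-phone accuracy, assuming the time-overlap form associated with Povey's MPE work: for a hypothesis phone $q$ and each reference phone $z$, let $e$ be the fraction of $z$'s duration that overlaps $q$, and score $-1 + 2e$ if the labels match and $-1 + e$ otherwise, keeping the best score over $z$. The tuple layout `(label, start, end)` and the function names are hypothetical.

```python
from typing import List, Tuple

Phone = Tuple[str, float, float]  # (label, start time, end time)


def overlap_fraction(hyp: Phone, ref: Phone) -> float:
    """Fraction of the reference phone's duration overlapped by the hypothesis phone."""
    overlap = min(hyp[2], ref[2]) - max(hyp[1], ref[1])
    return max(0.0, overlap) / (ref[2] - ref[1])


def raw_phone_accuracy(hyp: Phone, reference: List[Phone]) -> float:
    """Approximate accuracy of one hypothesis phone against a reference alignment."""
    best = -1.0  # score of a pure insertion: no overlap with any reference phone
    for ref in reference:
        e = overlap_fraction(hyp, ref)
        score = -1.0 + 2.0 * e if hyp[0] == ref[0] else -1.0 + e
        best = max(best, score)
    return best


# Hypothetical reference alignment: phone "a" then phone "b", 10 frames each.
reference = [("a", 0.0, 10.0), ("b", 10.0, 20.0)]
correct = raw_phone_accuracy(("a", 0.0, 10.0), reference)
substitution = raw_phone_accuracy(("c", 0.0, 10.0), reference)
insertion = raw_phone_accuracy(("a", 30.0, 40.0), reference)
```

Under this assumption a perfectly aligned correct phone scores 1, a substitution over the same span scores 0, and a phone overlapping nothing scores -1. Summing the per-phone scores along a path approximates the usual count of correct phones minus insertions.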

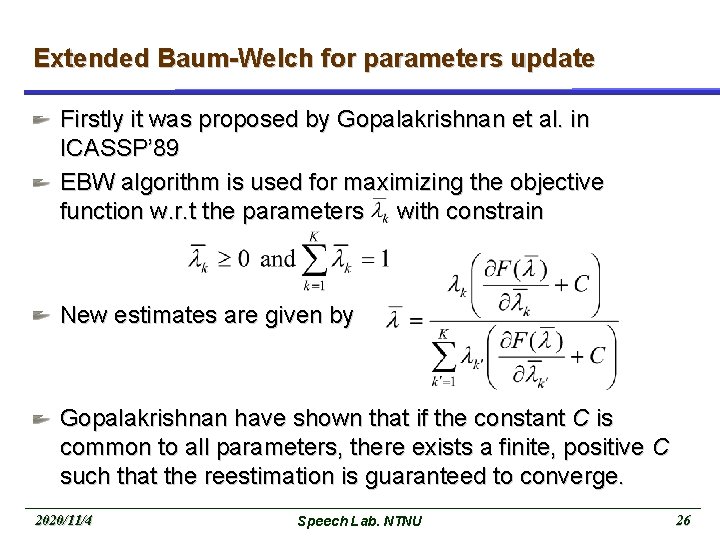

Extended Baum-Welch for parameter updates

The algorithm was first proposed by Gopalakrishnan et al. at ICASSP '89. EBW maximizes the objective function with respect to the parameters, subject to constraints on those parameters. The new estimates are given by the EBW re-estimation formula.

Gopalakrishnan et al. showed that if the constant C is common to all parameters, there exists a finite, positive C such that the re-estimation is guaranteed to converge.
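The re-estimation formula is commonly written as $\hat{p}_i = p_i\,(\partial F/\partial p_i + C) \,/\, \sum_k p_k\,(\partial F/\partial p_k + C)$ for a discrete distribution that must sum to one. The sketch below assumes that form; the function name and the toy gradient are hypothetical.

```python
from typing import List


def ebw_update(probs: List[float], grads: List[float], C: float) -> List[float]:
    """One EBW step for a discrete distribution under a sum-to-one constraint.

    probs: current probabilities p_i.
    grads: partial derivatives of the objective w.r.t. each p_i.
    C:     smoothing constant shared by all parameters.
    """
    weights = [p * (g + C) for p, g in zip(probs, grads)]
    if min(weights) <= 0.0:
        raise ValueError("C is too small: every p_i * (grad_i + C) must be positive")
    total = sum(weights)
    return [w / total for w in weights]


# Toy maximum-likelihood objective F = sum_i c_i * log(p_i) with counts c = [3, 1],
# so the gradient is c_i / p_i.
p = [0.5, 0.5]
counts = [3.0, 1.0]
gradient = [c / pi for c, pi in zip(counts, p)]
ml_step = ebw_update(p, gradient, C=0.0)
cautious_step = ebw_update(p, gradient, C=1e6)
```

For this likelihood objective, `C = 0` recovers the usual Baum-Welch relative-frequency estimate, while a very large `C` barely moves the parameters. Discriminative objectives can have negative gradients, which is why a positive `C` is needed to keep the new estimates valid.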

![EBW for CDHMM – from discrete to continuous [Normandin 1991]: discrete case](http://slidetodoc.com/presentation_image/50eb33be476acfaa6f8553397d4af70e/image-27.jpg)

EBW for CDHMM – from discrete to continuous [Normandin 1991]

Discrete case for emission probability update

![EBW for CDHMM – from discrete to continuous [Normandin 1991]: subintervals](http://slidetodoc.com/presentation_image/50eb33be476acfaa6f8553397d4af70e/image-28.jpg)

EBW for CDHMM – from discrete to continuous [Normandin 1991] (continued)

The continuous range is divided into M subintervals I_k of fixed width.

![EBW for CDHMM – from discrete to continuous [Normandin 1991]](http://slidetodoc.com/presentation_image/50eb33be476acfaa6f8553397d4af70e/image-29.jpg)

EBW for CDHMM – from discrete to continuous [Normandin 1991] (continued)
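The continuous-density result is usually quoted as an update of Gaussian means and variances from numerator and denominator statistics. The sketch below assumes that standard form for a single dimension; the statistics layout `(occupancy, sum of x, sum of x squared)` and the function name are hypothetical.

```python
from typing import Tuple

Stats = Tuple[float, float, float]  # (occupancy, sum of x, sum of x squared)


def ebw_gaussian_update(num: Stats, den: Stats,
                        mean: float, var: float, D: float) -> Tuple[float, float]:
    """One EBW step for a 1-D Gaussian.

    num, den: posterior-weighted statistics from the numerator (correct)
              and denominator (competing) models.
    mean, var: current parameters.
    D:        per-Gaussian smoothing constant.
    """
    occupancy = num[0] - den[0] + D
    if occupancy <= 0.0:
        raise ValueError("D is too small: smoothed occupancy must be positive")
    new_mean = (num[1] - den[1] + D * mean) / occupancy
    new_var = (num[2] - den[2] + D * (var + mean ** 2)) / occupancy - new_mean ** 2
    if new_var <= 0.0:
        raise ValueError("D is too small: updated variance must be positive")
    return new_mean, new_var


# With no denominator statistics and D = 0 the update is the ML estimate.
ml_mean, ml_var = ebw_gaussian_update((4.0, 8.0, 20.0), (0.0, 0.0, 0.0),
                                      mean=0.0, var=1.0, D=0.0)
# With a very large D the parameters stay close to their current values.
kept_mean, kept_var = ebw_gaussian_update((4.0, 8.0, 20.0), (1.0, 1.0, 3.0),
                                          mean=0.0, var=1.0, D=1e9)
```

The constant `D` plays the same role as `C` in the discrete case: larger values give smaller, safer steps, and it must be large enough to keep every updated variance positive.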

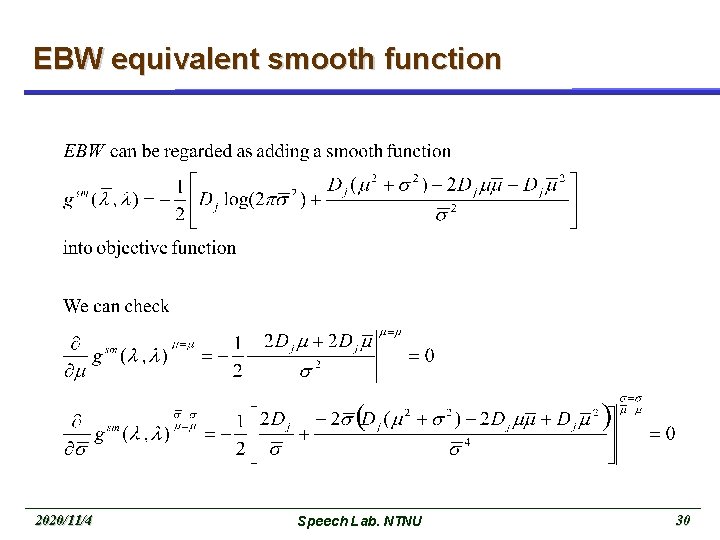

EBW equivalent smooth function

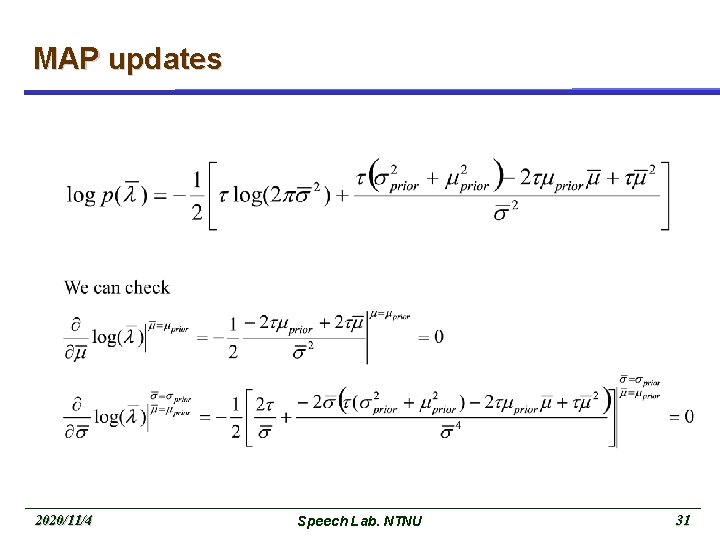

MAP updates

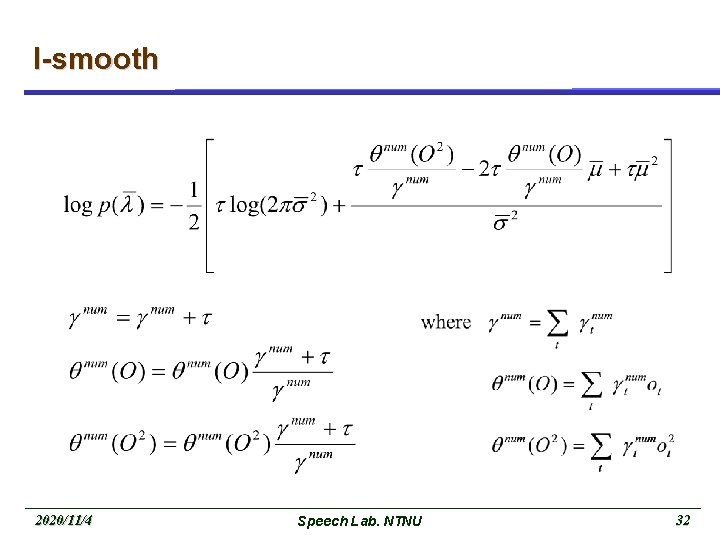

I-smoothing
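I-smoothing is often described as adding `tau` "points" of maximum-likelihood statistics to the numerator statistics before the EBW update, which acts as a prior centred on the ML estimate. The sketch below assumes that description; the statistics layout `(occupancy, sum of x, sum of x squared)` and the function name are hypothetical.

```python
from typing import Tuple

Stats = Tuple[float, float, float]  # (occupancy, sum of x, sum of x squared)


def i_smooth(num: Stats, ml: Stats, tau: float) -> Stats:
    """Add tau points of ML statistics to the numerator statistics.

    The ML statistics are rescaled to a total occupancy of tau, so only
    their mean and variance matter, not how much data produced them.
    """
    if ml[0] <= 0.0:
        raise ValueError("ML occupancy must be positive")
    scale = tau / ml[0]
    return (num[0] + tau, num[1] + scale * ml[1], num[2] + scale * ml[2])


# Hypothetical statistics for one Gaussian dimension.
smoothed = i_smooth(num=(4.0, 8.0, 20.0), ml=(10.0, 30.0, 100.0), tau=5.0)
```

Setting `tau = 0` leaves the numerator statistics unchanged. Larger values pull the discriminative update toward the ML estimate, which matters most for Gaussians with little training data.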

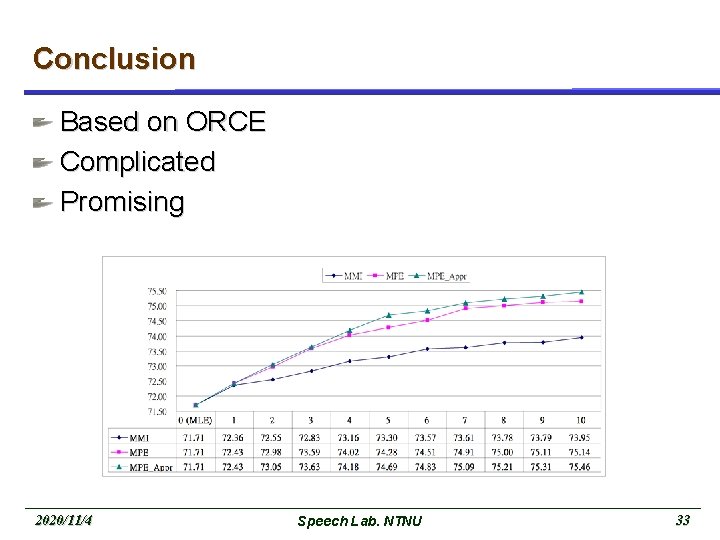

Conclusion
- Based on ORCE
- Complicated
- Promising