Disconnected Operation in the Coda File System

- Slides: 27

Disconnected Operation in the Coda File System • James J. Kistler & M. Satyanarayanan, Carnegie Mellon University • Presented by Abhishek Khaitan and Adhip Joshi

Background • We are back in the 1990s. • The network is slow and unstable • The terminal has become a “powerful” client – 33 MHz CPU, 16 MB RAM, 100 MB hard drive • Mobile users have appeared – first IBM ThinkPad in 1992 • The client can do something useful without the network

Outline • Introduction • Design Overview • Design Rationale • Detailed Design • Status & Evaluation • Future Work • Conclusion

Introduction • Motivation – popularity of distributed systems (DS): collaboration between users, delegation of administration – remote failures • Disconnected operation – a temporary deviation from normal operation as a client of a shared repository • Why? – enhance availability • How? – data caching

Design overview • Coda – evolved from AFS • Central idea – use caching to improve availability • Application area: academic and research environments; not intended for highly concurrent, fine-granularity data access or for safety-critical systems.

Design overview (contd.) • Coda FS – a location-transparent, shared Unix FS • Coda namespace – mapped to individual file servers at the granularity of volumes • Venus (cache manager) – obtains and caches volume mappings • High availability – how? • Server replication - VSG (volume storage group) - the set of replication sites for a volume - AVSG (accessible VSG) - the currently accessible subset of the VSG

Design overview (contd.) • Cache coherence protocol based on callbacks - Callback: when a workstation caches a file or directory, the server promises to notify it before allowing modification by others • Disconnected operation - AVSG becomes empty - Venus acts as a pseudo-server - Venus reintegrates on reconnection
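The callback promise on this slide can be illustrated with a minimal Python sketch. The class and method names (`Server`, `Client`, `break_callback`) are hypothetical and are not Coda's actual interfaces; the sketch only shows the idea that a server remembers which clients cache an object and notifies them before another client's update is applied.

```python
from collections import defaultdict


class Server:
    """Toy file server that tracks callback promises (hypothetical API)."""

    def __init__(self):
        self.data = {}
        # path -> set of clients that cache the object and hold a callback
        self.callbacks = defaultdict(set)

    def fetch(self, client, path):
        # Fetching an object establishes a callback promise for the client.
        self.callbacks[path].add(client)
        return self.data.get(path)

    def store(self, writer, path, content):
        # Notify every other caching client before applying the update.
        for client in self.callbacks[path] - {writer}:
            client.break_callback(path)
        self.callbacks[path] = {writer}
        self.data[path] = content


class Client:
    """Toy cache manager that trusts cached copies until a callback break."""

    def __init__(self, name):
        self.name = name
        self.cache = {}

    def open(self, server, path):
        if path not in self.cache:  # miss: fetch and obtain a callback
            self.cache[path] = server.fetch(self, path)
        return self.cache[path]

    def write(self, server, path, content):
        server.store(self, path, content)
        self.cache[path] = content

    def break_callback(self, path):
        # The cached copy may be stale, so drop it and re-fetch on demand.
        self.cache.pop(path, None)
```

As long as no callback break arrives, a client can serve `open` from its cache without contacting the server, which is what makes the scheme attractive for scalability.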

Design Rationale • Scalability • Portable Workstation • First- vs. Second-Class Replication • Optimistic vs. Pessimistic Replica Control

Design Rationale (contd.) Scalability – Prepare for growth a priori, rather than as an afterthought – Place functionality on clients rather than servers – Mechanisms: - Callback-based cache coherence - Whole-file caching

Design Rationale (contd.) • Portable workstations – Manual caching --> automatic caching --> same namespace – How can future file access needs be predicted well? • First- vs. second-class replication – First-class replicas live on servers, second-class replicas on clients; first-class replicas are of higher quality: more persistent, widely known, secure, available, complete and accurate. – The cache coherence protocol balances performance and scalability against quality. – During disconnected operation, data quality degrades for second-class replicas but is preserved for first-class replicas. Is it true? – Server replication vs. disconnected operation –> a quality vs. cost trade-off

Design Rationale (contd.) Optimistic vs. Pessimistic Replica Control – Central to the design of disconnected operation – Pessimistic - disallow or restrict reads and writes so that no conflicts arise; acquire control (locks) prior to disconnection • exclusive control • shared control – Related problems • Acquiring control: involuntary or voluntary disconnection • Retaining control – brief or extended – shared or exclusive – leases

Design Rationale (contd.) • Optimistic – permit reads and writes anywhere, accept potential conflicts, detect and resolve them after they occur – Provides the highest possible availability of data – Application dependent • Unix file systems have a low degree of write-sharing. – Conflict resolution • Resolve automatically when possible • Otherwise repair manually, an annoyance • Cost?

Design And implementation

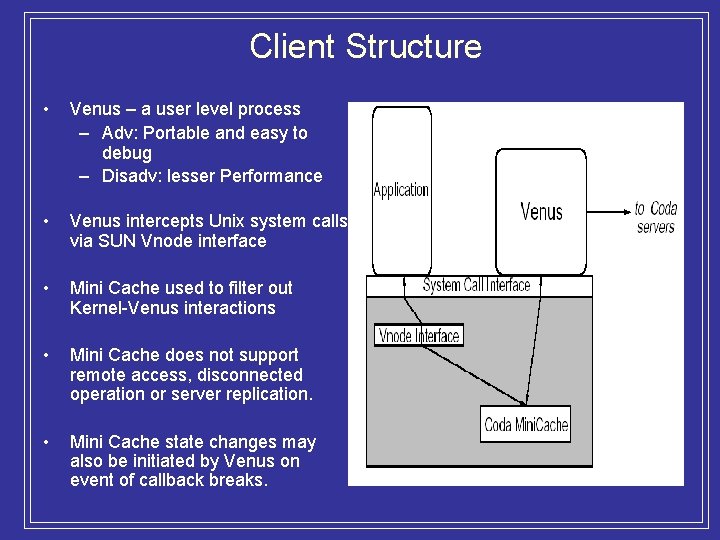

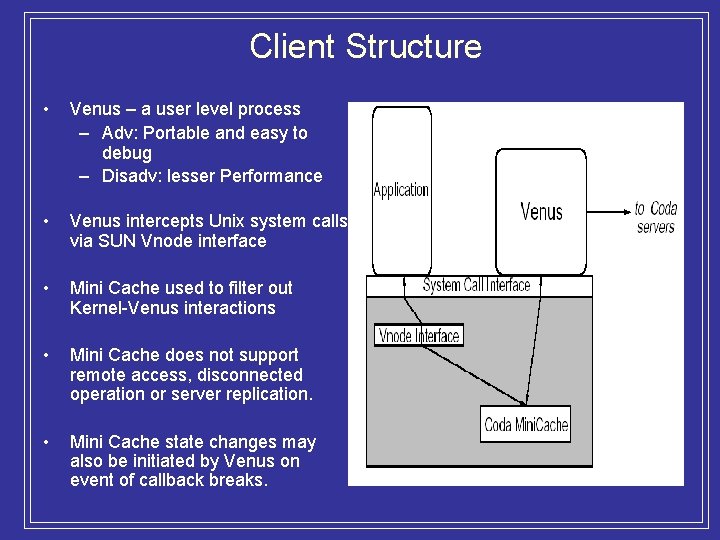

Client Structure • Venus – a user-level process – Adv: portable and easy to debug – Disadv: lower performance • Venus intercepts Unix system calls via the Sun Vnode interface • A MiniCache is used to filter out many kernel-Venus interactions • The MiniCache does not support remote access, disconnected operation or server replication. • MiniCache state changes may also be initiated by Venus in the event of callback breaks.

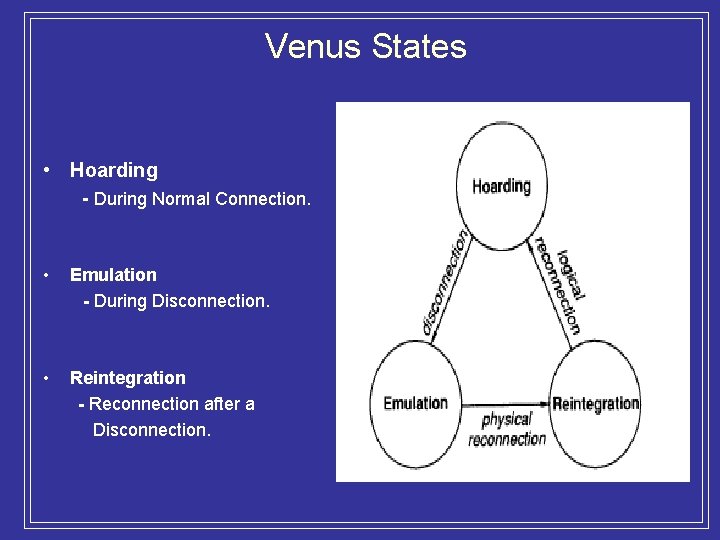

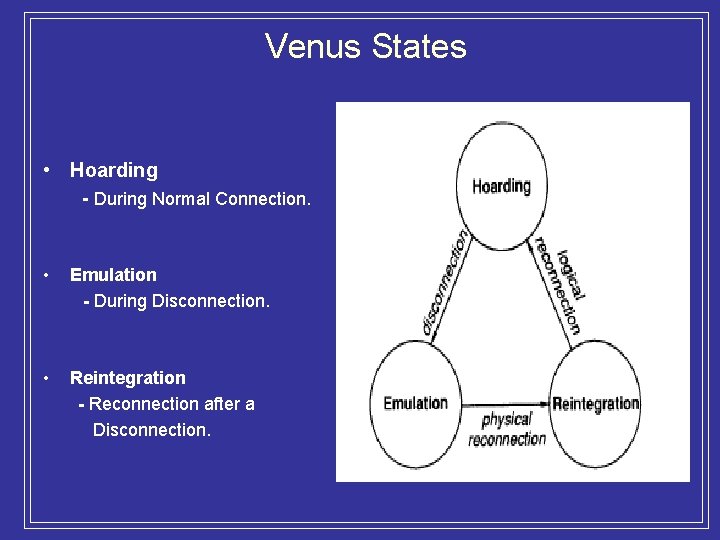

Venus States • Hoarding - During Normal Connection. • Emulation - During Disconnection. • Reintegration - Reconnection after a Disconnection.
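The three states can be read as a small state machine. The sketch below is only illustrative: the event names (`disconnect`, `reconnect`, `replay_done`) are made up for this example, and it assumes the simple cycle hoarding → emulation → reintegration → hoarding suggested by the slide.

```python
from enum import Enum


class VenusState(Enum):
    HOARDING = "hoarding"            # normal, connected operation
    EMULATION = "emulation"          # disconnected, acting as pseudo-server
    REINTEGRATION = "reintegration"  # reconnected, replaying updates


# Hypothetical event names; only the three transitions named above exist.
TRANSITIONS = {
    (VenusState.HOARDING, "disconnect"): VenusState.EMULATION,
    (VenusState.EMULATION, "reconnect"): VenusState.REINTEGRATION,
    (VenusState.REINTEGRATION, "replay_done"): VenusState.HOARDING,
}


def next_state(state, event):
    """Return the successor state, or the same state if the event does not apply."""
    return TRANSITIONS.get((state, event), state)
```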

Hoarding Steps – Hoard useful data in anticipation of disconnection – Must balance the needs of connected and disconnected operation. – Cache currently used files to improve performance, and also cache critical files to be prepared for disconnection. Reasons which make hoarding difficult … – File reference behavior is hard to predict. – Disconnections and reconnections are unpredictable. – How to measure the true cost of a cache miss during disconnection? – Activity of other clients must be accounted for. – Cache space is finite. Possible solutions? – Use a prioritized algorithm for caching – Periodically re-evaluate which objects merit retention – Hoard walking.

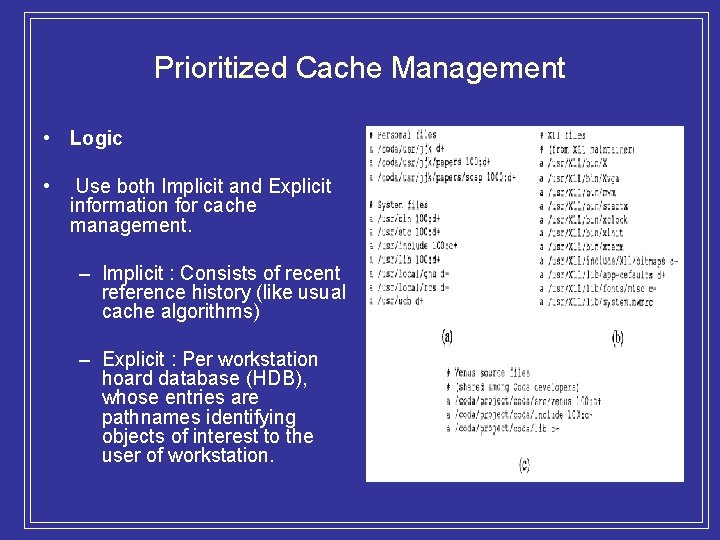

Prioritized Cache Management • Logic • Use both implicit and explicit information for cache management. – Implicit: recent reference history (as in usual caching algorithms) – Explicit: a per-workstation hoard database (HDB), whose entries are pathnames identifying objects of interest to the workstation's user.

Prioritized Cache Management (contd.) • Simple front end to update the HDB – customize the HDB. – support meta-expansion of HDB entries. – An entry may optionally indicate a priority. – Prioritized algorithm: – User-defined hoard priority p: how interesting is the object to the user? – Recent usage q – Object priority = f(p, q) – Objects with lower priority are deleted when cache space is needed. • Perform hierarchical cache management. – Assign infinite priority to directories with cached children.
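The prioritized algorithm can be sketched in a few lines of Python. The slide gives only "priority = f(p, q)", so the weighted sum and the `alpha` parameter below are assumptions made for illustration, as is the representation of the cache as a dict from pathname to a `(p, q)` pair.

```python
import math


def priority(hoard_priority, recency, alpha=0.75):
    """Assumed form of f(p, q): a weighted sum of hoard priority and recent usage."""
    return alpha * hoard_priority + (1 - alpha) * recency


def effective_priority(path, cache):
    """Directories with cached children get infinite priority (hierarchical rule)."""
    prefix = path.rstrip("/") + "/"
    if any(other.startswith(prefix) for other in cache if other != path):
        return math.inf
    p, q = cache[path]
    return priority(p, q)


def evict_one(cache):
    """Delete and return the lowest-priority object to free cache space."""
    victim = min(cache, key=lambda path: effective_priority(path, cache))
    del cache[victim]
    return victim
```

With this rule a directory can only become an eviction candidate once all of its cached descendants are gone, so a cached file is never left without its ancestors while disconnected.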

Hoard Walking • Why do we need hoard walking? – To ensure that no uncached object has higher priority than a cached object. • Steps – Do a hoard walk every 10 minutes. – Phase 1: re-evaluate the name bindings of HDB entries to reflect update activity. – Phase 2: re-evaluate the priorities of all entries to restore equilibrium.

Hoard Walking (contd.) • Optimizations • For files and symbolic links, purge the object on a callback break and re-fetch it on demand or during the next hoard walk. • For directories, don't purge on a callback break but mark the directory as suspicious. • A callback break on a directory means that an entry has been added to or deleted from it.
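The equilibrium condition behind hoard walking (no uncached object has higher priority than a cached one) can be expressed as a short sketch. It assumes priorities have already been computed and, for simplicity, measures cache capacity in number of objects rather than bytes; the function names are hypothetical.

```python
def in_equilibrium(priorities, cached):
    """True if no uncached object outranks a cached one.

    Assumes every name in `cached` also appears in `priorities`.
    """
    uncached = set(priorities) - cached
    if not cached or not uncached:
        return True
    lowest_cached = min(priorities[name] for name in cached)
    highest_uncached = max(priorities[name] for name in uncached)
    return lowest_cached >= highest_uncached


def hoard_walk(priorities, cached, capacity):
    """Return (to_fetch, to_evict) that restore equilibrium within capacity."""
    # Highest priority first; ties are broken by name for determinism.
    ranked = sorted(priorities, key=lambda name: (-priorities[name], name))
    wanted = set(ranked[:capacity])
    return wanted - cached, cached - wanted
```

For example, with priorities `{"a": 10, "b": 50, "c": 30}`, a cache holding `a` and `b`, and room for two objects, the walk fetches `c` and evicts `a`.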

Emulation • Actions performed – Takes responsibility for access and semantic checks. – Generates temporary file identifiers. • Logging – Maintains sufficient information (system calls) to replay update activity when it reintegrates. – Maintains a replay log. – Applies optimizations such as reducing log length and maintaining a copy of the log in the cache. • Persistence – Backs up the cache and related data structures in non-volatile storage. – RVM: Recoverable Virtual Memory. – Metadata is mapped into the Venus address space (RVM)
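One way to reduce log length is to let a new store record for a file replace any earlier store record for the same file, since only the final contents need to be replayed. The sketch below illustrates just that one idea; the `ReplayLog` class and its record layout are hypothetical and much simpler than a real replay log.

```python
class ReplayLog:
    """Toy replay log of (operation, path, payload) records."""

    def __init__(self):
        self.records = []

    def append(self, op, path, payload=None):
        if op == "store":
            # A newer store supersedes earlier stores of the same file.
            self.records = [
                r for r in self.records
                if not (r[0] == "store" and r[1] == path)
            ]
        self.records.append((op, path, payload))
```

Saving the same file repeatedly while disconnected then costs one log record instead of one per save.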

Emulation (contd.) • Resource exhaustion - The file cache fills up with modified files - The RVM space allocated to replay logs becomes full • Possible remedies – Compress file cache and RVM contents. – Selectively back out updates made while disconnected. – Use removable media.

Reintegration • Venus changes roles from pseudo-server back to cache manager. – Replay algorithm: the replay log is parsed, changes are propagated to the AVSG in parallel, and the transaction is committed. – Conflict handling: write-write conflicts are detected using a tag (storeid) on each object.
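The storeid check can be sketched as follows. The sketch assumes each server object carries a storeid identifying its last update, and each log record remembers the storeid the client saw when it cached the object; a mismatch means someone else updated the object in the meantime. The record layout is hypothetical, and aborting the whole replay on any conflict is a simplifying assumption of this example.

```python
def replay(log, server):
    """Replay disconnected updates against a server, all or nothing.

    log:    list of (path, base_storeid, new_content, new_storeid)
    server: dict mapping path -> (storeid, content)
    Returns (True, []) on success or (False, conflicting_paths).
    """
    conflicts = [
        path
        for path, base_storeid, _content, _new_storeid in log
        # An object missing on the server is treated as having storeid None.
        if server.get(path, (None, None))[0] != base_storeid
    ]
    if conflicts:
        return False, conflicts  # leave the server untouched
    for path, _base, content, new_storeid in log:
        server[path] = (new_storeid, content)
    return True, []
```

A failed replay leaves the server state unchanged, so the client's updates can be set aside for manual repair.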

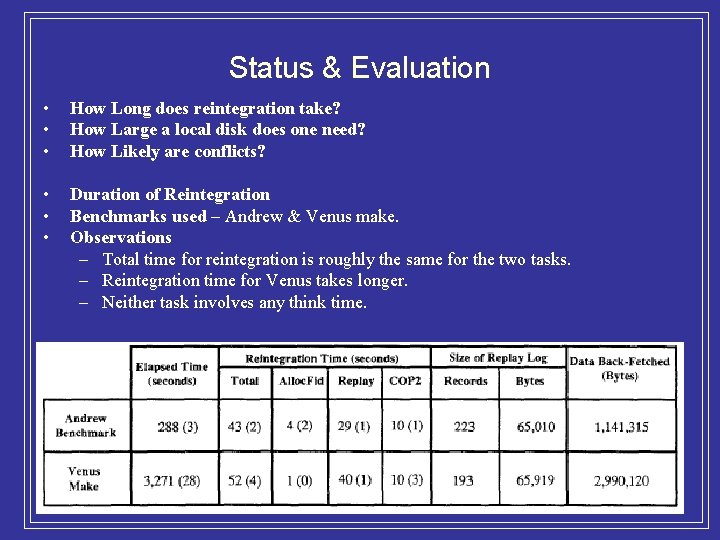

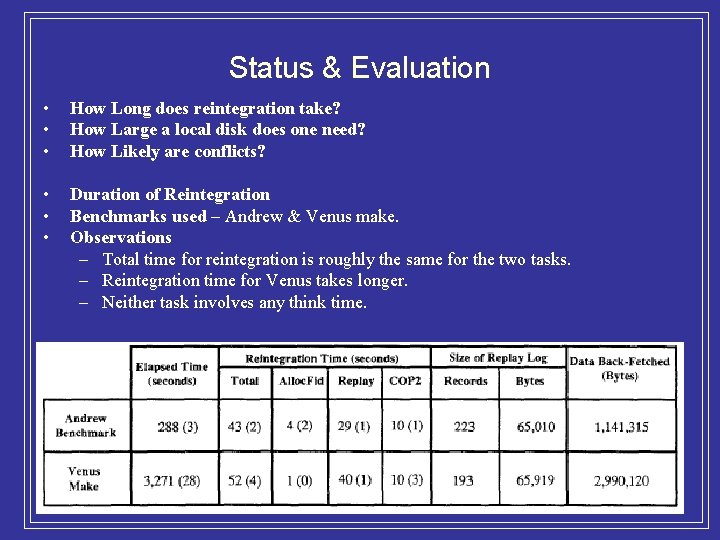

Status & Evaluation • How long does reintegration take? • How large a local disk does one need? • How likely are conflicts? • Duration of reintegration – Benchmarks used: the Andrew benchmark and a Venus make. – Observations: - Total time for reintegration is roughly the same for the two tasks. - Reintegration for the Venus make takes somewhat longer. - Neither task involves any think time.

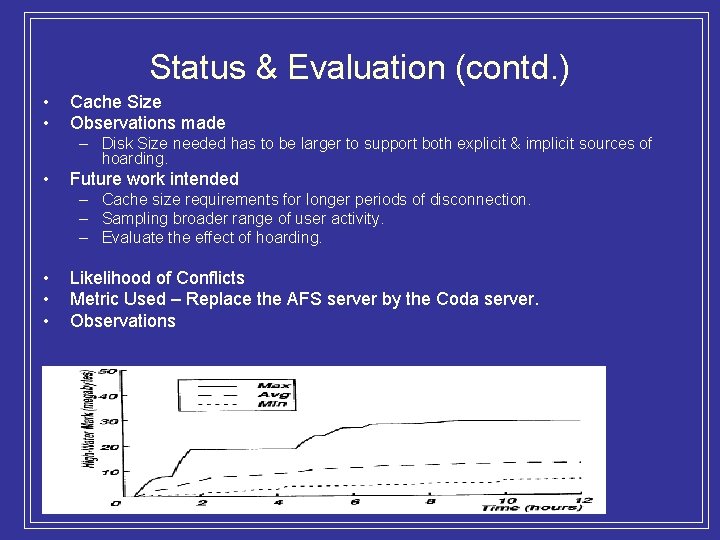

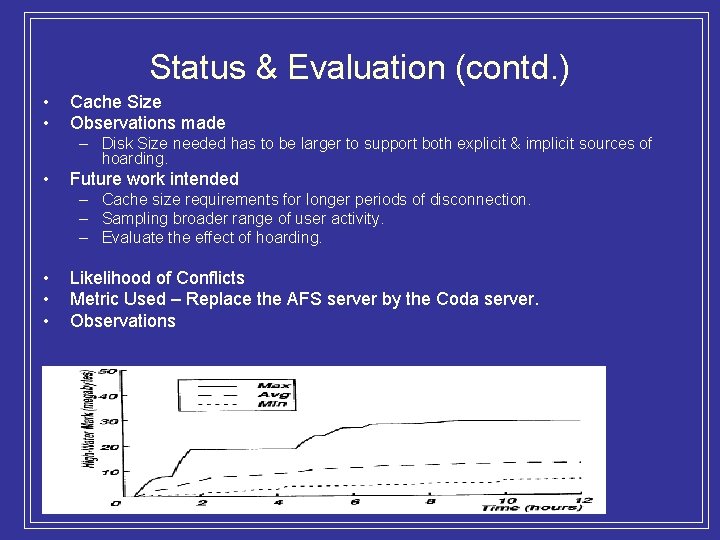

Status & Evaluation (contd.) • Cache size – Observations made: the disk has to be large enough to support both explicit and implicit sources of hoarding information. • Future work intended – Cache size requirements for longer periods of disconnection. – Sampling a broader range of user activity. – Evaluating the effect of hoarding. • Likelihood of conflicts – Metric used: replace the AFS server by the Coda server. – Observations

Do we still need disconnection? • WAN and wireless links are slow and not very reliable • PDAs are not very powerful – 200 MHz StrongARM, 128 MB CF card – Constrained by battery power

Conclusion • Related work – Cedar, FACE, PCMAIL, etc. • Strengths – Tried and tested. – Optimistic replication. • Weaknesses – Relevance in the current scenario. • Relevance – Application dependent. – Imply certain concepts.