Disaggregated Memory for Expansion and Sharing in Blade Servers

Kevin Lim*, Jichuan Chang+, Trevor Mudge*, Parthasarathy Ranganathan+, Steven K. Reinhardt*†, Thomas F. Wenisch*
June 23, 2009
* University of Michigan   + HP Labs   † AMD

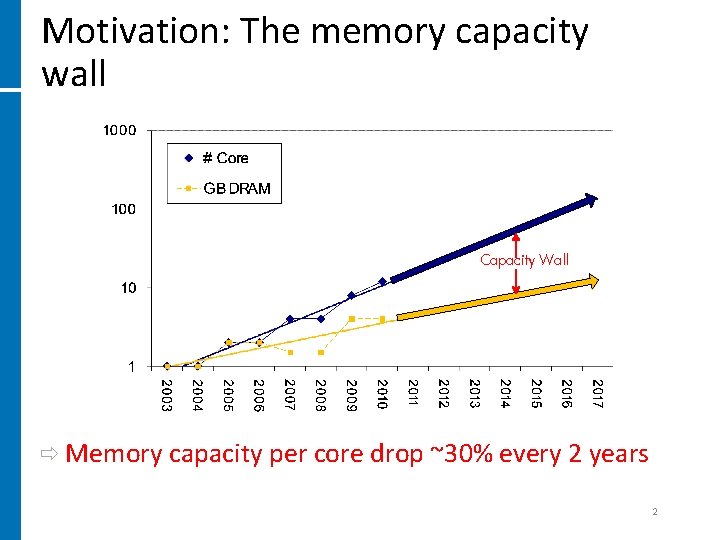

Motivation: The memory capacity wall
[Figure: Capacity wall]
⇒ Memory capacity per core drops ~30% every 2 years

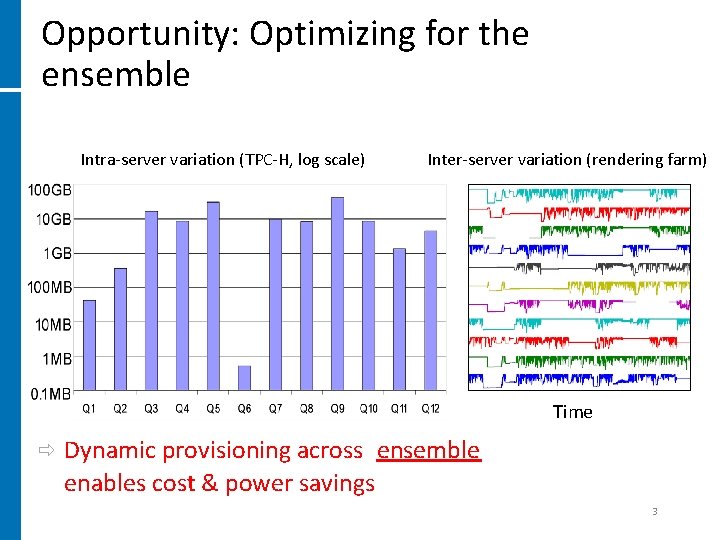

Opportunity: Optimizing for the ensemble
[Figure: intra-server variation (TPC-H, log scale) and inter-server variation (rendering farm), both plotted over time]
⇒ Dynamic provisioning across the ensemble enables cost and power savings
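The ensemble argument can be illustrated with a toy calculation. Assuming made-up demand traces for three servers (not data from the talk), sizing each server for its own peak needs more total memory than sizing a shared pool for the peak of the combined demand, because the individual peaks do not coincide. The sketch ignores the local memory each compute blade would still keep.

```python
# Hypothetical per-server memory demand (GB) at four time steps.
# Illustrative numbers only; not measured data from the talk.
demand = {
    "server_a": [4, 12, 5, 4],
    "server_b": [10, 3, 4, 5],
    "server_c": [3, 4, 11, 4],
}

def per_server_provisioning(demand):
    """Each server is sized for its own peak demand."""
    return sum(max(series) for series in demand.values())

def ensemble_provisioning(demand):
    """A shared pool is sized for the peak of the aggregate demand."""
    aggregate = [sum(step) for step in zip(*demand.values())]
    return max(aggregate)

static_gb = per_server_provisioning(demand)
shared_gb = ensemble_provisioning(demand)
print(f"sum of per-server peaks: {static_gb} GB, peak of ensemble: {shared_gb} GB")
```

With these traces the per-server peaks sum to 33 GB while the aggregate never exceeds 20 GB; the gap is the headroom that dynamic provisioning could reclaim.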

Contributions
Goal: Expand capacity & provision for typical usage
• New architectural building block: memory blade
  − Breaks traditional compute-memory co-location
• Two architectures for transparent memory expansion
• Capacity expansion:
  − 8X performance over provisioning for median usage
  − Higher consolidation
• Capacity sharing:
  − Lower power and costs
  − Better performance / dollar

Outline
• Introduction
• Disaggregated memory architecture
  − Concept
  − Challenges
  − Architecture
• Methodology and results
• Conclusion

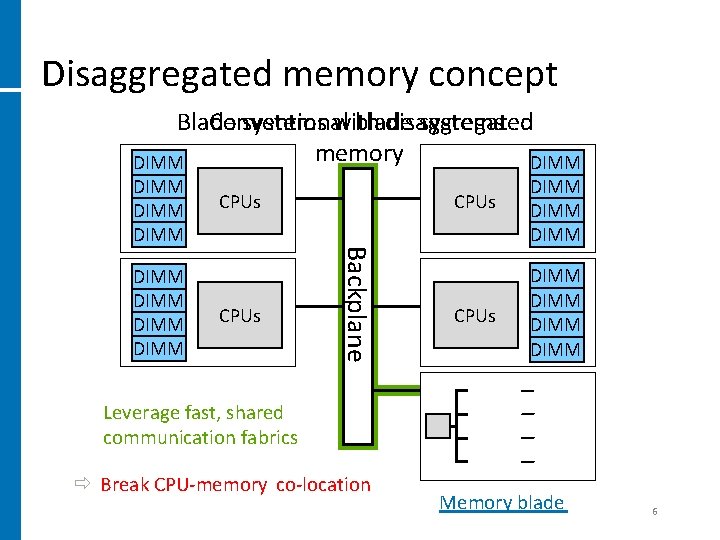

Disaggregated memory concept
[Figure: conventional blade systems (CPUs and DIMMs on each compute blade, joined by a backplane) vs. blade systems with disaggregated memory (compute blades plus a shared memory blade on the backplane)]
• Leverage fast, shared communication fabrics
⇒ Break CPU-memory co-location

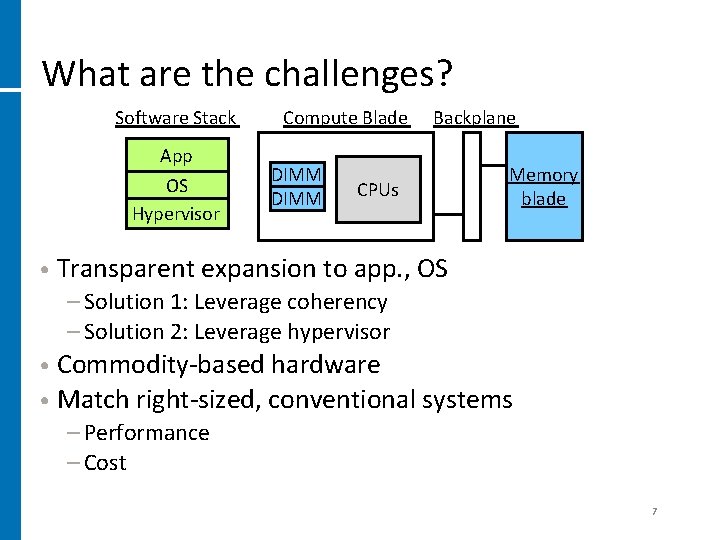

What are the challenges?
[Figure: software stack (App, OS, Hypervisor) on a compute blade (CPUs, DIMMs), connected over the backplane to a memory blade]
• Transparent expansion to applications and the OS
  − Solution 1: Leverage coherency
  − Solution 2: Leverage hypervisor
• Commodity-based hardware
• Match right-sized, conventional systems
  − Performance
  − Cost

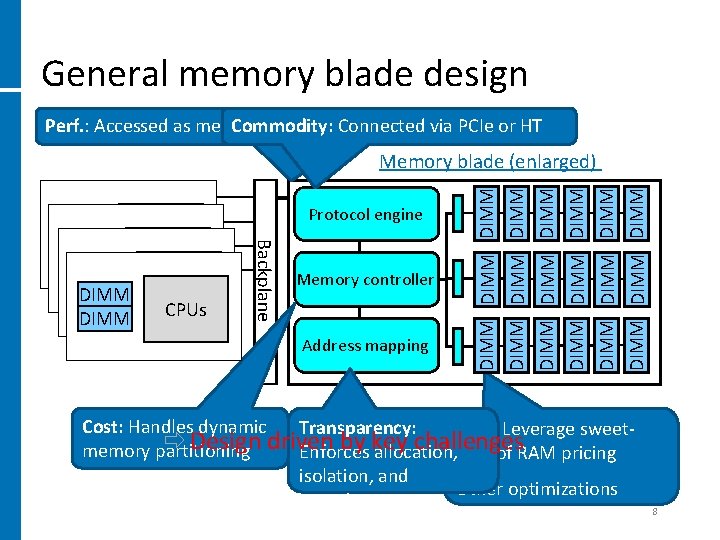

General memory blade design
[Figure: memory blade (enlarged) with protocol engine, memory controller, address mapping, and DIMMs, connected over the backplane to compute blades]
⇒ Design driven by key challenges:
• Performance: accessed as memory, not swap space
• Commodity: connected via PCIe or HT
• Transparency: enforces allocation, isolation, and mapping
• Cost: handles dynamic memory partitioning
• Cost: leverages the sweet spot of RAM pricing
• Other optimizations
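The slide lists allocation, isolation, mapping, and dynamic partitioning as memory-blade duties without giving a mechanism. The following is a minimal sketch, assuming a page-granular free list and a per-compute-blade translation table; the class name `MemoryBlade`, its methods, and the page-based layout are hypothetical illustrations, not the paper's hardware design.

```python
class MemoryBlade:
    """Hypothetical model of memory-blade allocation and address mapping.

    Capacity is split into fixed-size pages. Each compute blade sees a
    contiguous range of blade-local page numbers, which the memory blade
    maps to physical pages drawn from a shared free pool.
    """

    def __init__(self, total_pages):
        self.free_pages = list(range(total_pages))  # unallocated physical pages
        self.maps = {}  # blade_id -> list of physical pages (index = local page)

    def allocate(self, blade_id, npages):
        """Grow a blade's partition by npages (dynamic partitioning)."""
        if npages > len(self.free_pages):
            raise MemoryError("memory blade out of capacity")
        granted = [self.free_pages.pop() for _ in range(npages)]
        self.maps.setdefault(blade_id, []).extend(granted)
        return len(self.maps[blade_id])  # new partition size in pages

    def release(self, blade_id, npages):
        """Shrink a blade's partition, returning pages to the shared pool."""
        mapping = self.maps.get(blade_id, [])
        if npages > len(mapping):
            raise ValueError("cannot release more pages than allocated")
        for _ in range(npages):
            self.free_pages.append(mapping.pop())
        return len(mapping)

    def translate(self, blade_id, local_page):
        """Map a blade-local page to a physical page, enforcing isolation."""
        mapping = self.maps.get(blade_id, [])
        if not 0 <= local_page < len(mapping):
            raise PermissionError(f"blade {blade_id} has no page {local_page}")
        return mapping[local_page]


blade = MemoryBlade(total_pages=8)
blade.allocate("compute0", 3)  # compute0 now owns local pages 0..2
blade.allocate("compute1", 2)  # compute1 now owns local pages 0..1
print(blade.translate("compute0", 1))
```

Isolation falls out of the per-blade table: a compute blade can only name its own local page numbers, so an out-of-range request raises instead of reaching another blade's memory.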

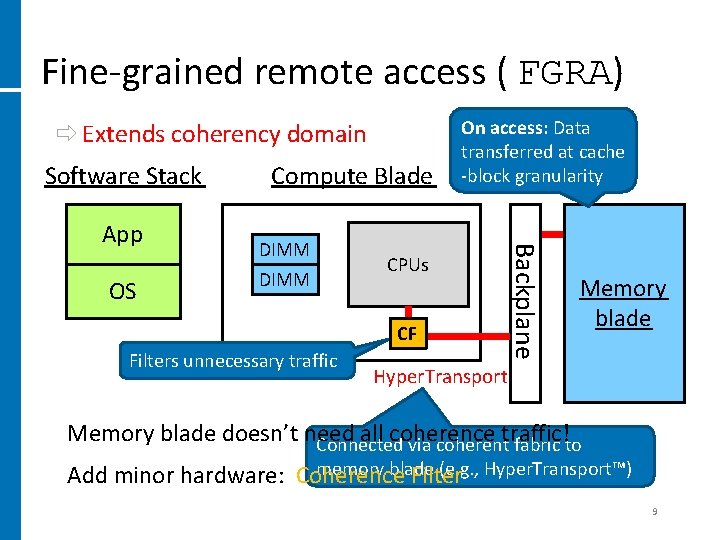

Fine-grained remote access (FGRA)
⇒ Extends coherency domain
[Figure: compute blade (software stack: App, OS; CPUs, DIMMs, coherence filter) connected over a HyperTransport backplane to the memory blade]
• Connected via coherent fabric to memory blade (e.g., HyperTransport™)
• On access: data transferred at cache-block granularity
• Add minor hardware: Coherence Filter (CF)
  − Filters unnecessary traffic
  − Memory blade doesn't need all coherence traffic!
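The slide says only that the coherence filter drops traffic the memory blade does not need. A minimal sketch under two assumptions: remote memory occupies a fixed physical-address range starting at a hypothetical `REMOTE_BASE`, and the blade needs only requests and writebacks for that range, not probes exchanged among the compute blade's own caches. The message kinds are illustrative names, not HyperTransport packet types.

```python
from dataclasses import dataclass

# Hypothetical address map: physical addresses at or above REMOTE_BASE are
# backed by the memory blade; lower addresses live in local DIMMs.
REMOTE_BASE = 0x1_0000_0000

# Assumed set of message kinds the memory blade must see for its own blocks.
BLADE_KINDS = {"read", "read_exclusive", "writeback"}


@dataclass
class CoherenceMsg:
    kind: str  # illustrative: "read", "read_exclusive", "writeback", "probe"
    addr: int


def forward_to_memory_blade(msg: CoherenceMsg) -> bool:
    """Return True if the coherence filter should pass msg to the memory blade."""
    if msg.addr < REMOTE_BASE:
        return False  # local address: the memory blade has no stake in it
    return msg.kind in BLADE_KINDS  # probes to remote blocks are filtered out


traffic = [
    CoherenceMsg("read", 0x2000),
    CoherenceMsg("probe", REMOTE_BASE + 0x40),
    CoherenceMsg("read", REMOTE_BASE + 0x80),
    CoherenceMsg("writeback", REMOTE_BASE + 0xC0),
]
forwarded = [m for m in traffic if forward_to_memory_blade(m)]
print(len(forwarded), "of", len(traffic), "messages forwarded")
```

Filtering on address first keeps the check cheap: most traffic to local DIMMs never needs a lookup of the message kind.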

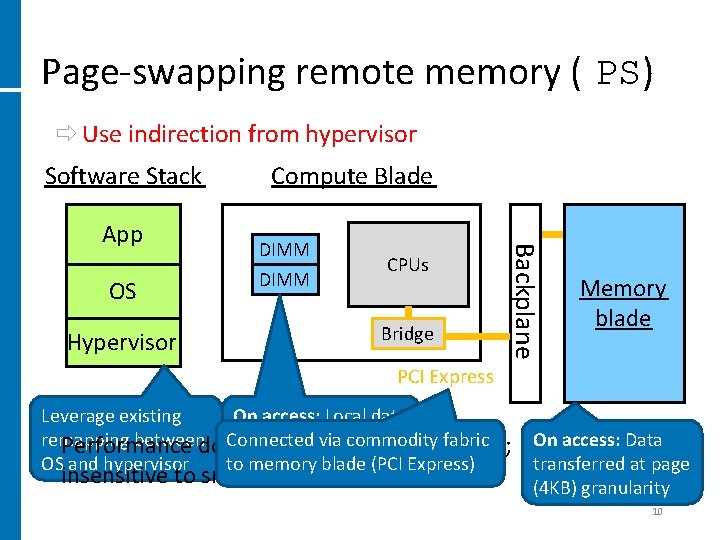

Page-swapping remote memory (PS)
⇒ Use indirection from hypervisor
[Figure: compute blade (software stack: App, OS, Hypervisor; CPUs, DIMMs, bridge) connected over a PCI Express backplane to the memory blade]
• Connected via commodity fabric to memory blade (PCI Express)
• Leverage existing remapping between OS and hypervisor
• On access: local data page swapped with remote data page
• On access: data transferred at page (4 KB) granularity
• Performance dominated by transfer latency; insensitive to small changes
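The PS access path can be sketched as a table lookup plus a swap. This minimal model assumes the hypervisor keeps a guest-page → location table and evicts the least recently used local page as the swap victim; the class, its methods, and the LRU policy are hypothetical stand-ins, and the paper's actual victim-selection policy may differ.

```python
from collections import OrderedDict


class PageSwapper:
    """Hypothetical model of hypervisor-managed page swapping (PS).

    Guest pages live either in a local frame or in a slot on the memory
    blade. Touching a remote page swaps it with a local victim (LRU here),
    which in hardware would be a 4 KB transfer each way over PCIe.
    """

    def __init__(self, local_pages, remote_pages):
        # guest page -> local frame, ordered least- to most-recently used
        self.local = OrderedDict((p, f) for f, p in enumerate(local_pages))
        # guest page -> slot on the memory blade
        self.remote = {p: s for s, p in enumerate(remote_pages)}
        self.swaps = 0

    def access(self, page):
        """Service a guest access; return 'local' or 'swapped'."""
        if page in self.local:
            self.local.move_to_end(page)  # refresh LRU position
            return "local"
        slot = self.remote.pop(page)  # KeyError if the page is unmapped
        victim, frame = self.local.popitem(last=False)  # LRU local page
        # Swap: the remote page takes the victim's local frame, and the
        # victim takes the freed slot on the memory blade. Only the
        # hypervisor's table changes; the guest OS sees the same addresses.
        self.local[page] = frame
        self.remote[victim] = slot
        self.swaps += 1
        return "swapped"


ps = PageSwapper(local_pages=["A", "B"], remote_pages=["C", "D"])
for p in ["A", "C", "A", "D"]:
    print(p, ps.access(p))
```

Because recently touched pages stay local, workloads with good page-level locality pay the swap cost rarely, which is the "leverage locality" point in the summary.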

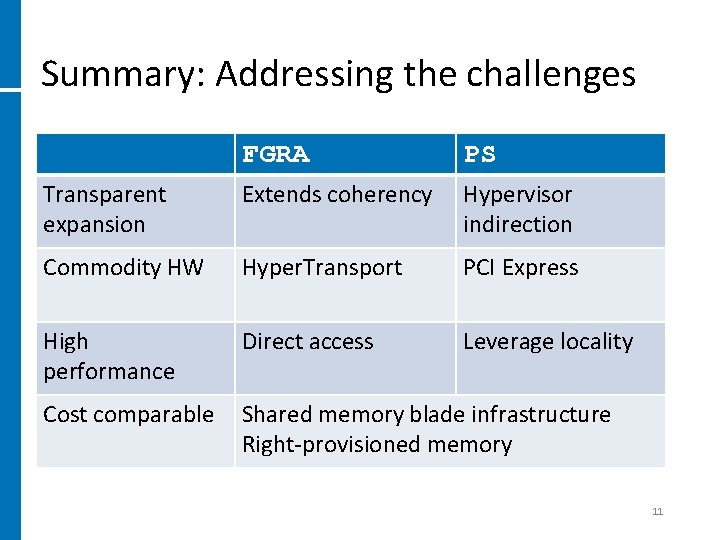

Summary: Addressing the challenges

| Challenge | FGRA | PS |
|---|---|---|
| Transparent expansion | Extends coherency | Hypervisor indirection |
| Commodity HW | HyperTransport | PCI Express |
| High performance | Direct access | Leverage locality |
| Comparable cost | Shared memory blade infrastructure; right-provisioned memory | Shared memory blade infrastructure; right-provisioned memory |

Outline
• Introduction
• Disaggregated memory architecture
• Methodology and results
  − Performance-per-cost
• Conclusion

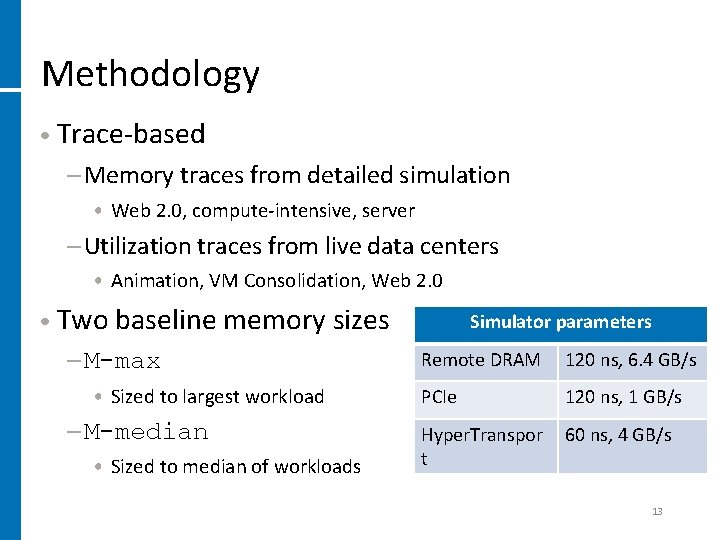

Methodology
• Trace-based
  − Memory traces from detailed simulation
    • Web 2.0, compute-intensive, server
  − Utilization traces from live data centers
    • Animation, VM consolidation, Web 2.0
• Two baseline memory sizes
  − M-max: sized to the largest workload
  − M-median: sized to the median of workloads

Simulator parameters:

| Component | Latency | Bandwidth |
|---|---|---|
| Remote DRAM | 120 ns | 6.4 GB/s |
| PCIe | 120 ns | 1 GB/s |
| HyperTransport | 60 ns | 4 GB/s |
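To see why the two designs behave differently, the simulator parameters can be plugged into a first-order latency estimate. This is a hedged back-of-the-envelope model, not the paper's simulator: it assumes a remote fetch costs link latency plus DRAM latency plus serialization at the slower of link and DRAM bandwidth, and it assumes a 64-byte cache block for FGRA.

```python
# Simulator parameters from the table: (latency in ns, bandwidth in GB/s).
REMOTE_DRAM = (120, 6.4)
PCIE = (120, 1.0)
HYPERTRANSPORT = (60, 4.0)


def remote_fetch_ns(link, nbytes, dram=REMOTE_DRAM):
    """Assumed first-order model: link latency + DRAM latency +
    serialization at the slower of the link and DRAM bandwidths."""
    link_lat, link_bw = link
    dram_lat, dram_bw = dram
    bw = min(link_bw, dram_bw)  # 1 GB/s (10^9 B/s) == 1 byte per ns
    return link_lat + dram_lat + nbytes / bw


fgra = remote_fetch_ns(HYPERTRANSPORT, 64)  # one 64 B cache block (assumed size)
ps = remote_fetch_ns(PCIE, 4096)            # one 4 KB page
slower_link = remote_fetch_ns((240, 1.0), 4096)  # PS with doubled PCIe latency

print(f"FGRA block: {fgra:.0f} ns, PS page: {ps:.0f} ns")
print(f"PS page with 2x link latency: {slower_link:.0f} ns")
```

Under this model a 4 KB page spends about 4 µs just serializing over PCIe, so doubling the link latency moves the total by under 3%. That matches the slide's note that PS is dominated by transfer time and insensitive to small latency changes, while FGRA pays a short latency on every remote cache-block miss.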

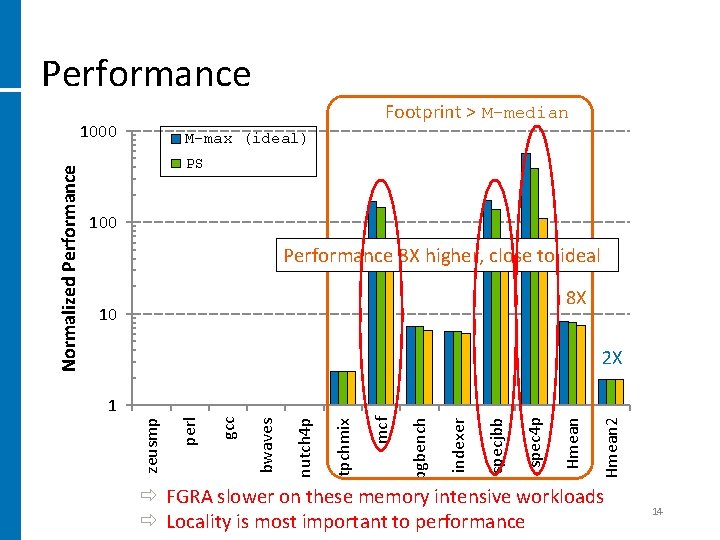

Performance
[Figure: normalized performance (log scale, 1 to 1000) of M-median, PS, FGRA, and M-max (ideal) for spec4p, specjbb, indexer, pgbench, mcf, tpchmix, nutch4p, bwaves, gcc, perl, and zeusmp, with harmonic means; panel: Footprint > M-median; annotations: 8X, 2X]
• Baseline: M-median local + disk
• PS: performance 8X higher, close to ideal
⇒ FGRA slower on these memory-intensive workloads
⇒ Locality is most important to performance
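The results summarize workloads with harmonic means ("Hmean"). As a hedged illustration with made-up numbers, not the talk's data: the harmonic mean of normalized performance equals the reciprocal of the arithmetic mean of normalized execution times, so large slowdowns on a few workloads cannot be hidden by large speedups elsewhere.

```python
from statistics import harmonic_mean

# Hypothetical normalized performance for four workloads (baseline = 1.0).
# Illustrative values only; not taken from the results above.
normalized_perf = [1.0, 0.5, 2.0, 4.0]

hmean = harmonic_mean(normalized_perf)
amean = sum(normalized_perf) / len(normalized_perf)

# Equivalent view: normalized execution time is 1 / speedup, and the
# harmonic mean of speedups is 1 / (arithmetic mean of those times).
mean_time = sum(1 / s for s in normalized_perf) / len(normalized_perf)

print(f"harmonic mean: {hmean:.3f}, arithmetic mean: {amean:.3f}")
print(f"1 / mean normalized time: {1 / mean_time:.3f}")
```

Here the single 4X speedup pulls the arithmetic mean up to 1.875, while the harmonic mean stays near 1.07 because the 0.5X workload still doubles its run time.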

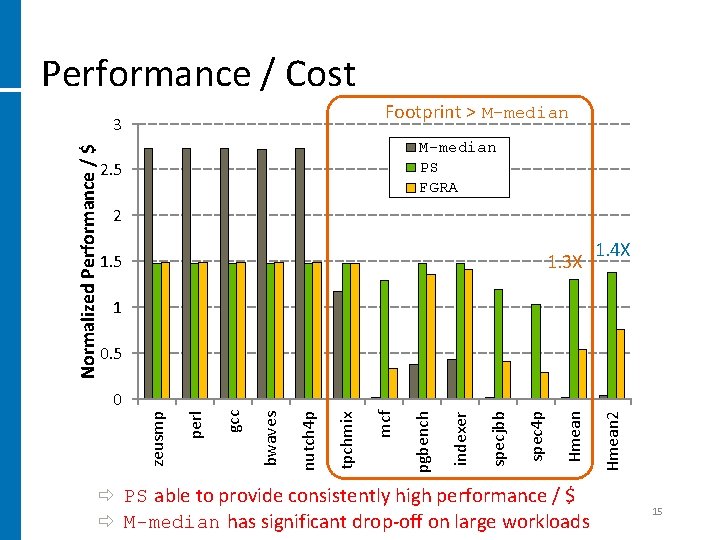

Performance / Cost
[Figure: normalized performance / $ (0 to 3) of M-median, PS, and FGRA for spec4p, specjbb, indexer, pgbench, mcf, tpchmix, nutch4p, bwaves, gcc, perl, and zeusmp, with harmonic means; panel: Footprint > M-median; annotations: 1.3X, 1.4X]
• Baseline: M-max local + disk
⇒ PS is able to provide consistently high performance / $
⇒ M-median has a significant drop-off on large workloads
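Normalized performance per dollar compares each configuration's performance/cost ratio with the baseline's. A minimal sketch with hypothetical figures in arbitrary cost units (not the paper's cost model) shows how a design that is slightly slower than M-max can still score above 1.0 if it costs proportionally less.

```python
def normalized_perf_per_dollar(perf, cost, base_perf, base_cost):
    """Performance per dollar of a configuration, relative to a baseline."""
    return (perf / cost) / (base_perf / base_cost)


# Hypothetical figures (arbitrary cost units), not the paper's data: a
# right-provisioned blade plus its share of a memory blade runs slightly
# slower than an M-max blade but costs noticeably less.
m_max = {"perf": 1.0, "cost": 100.0}
shared = {"perf": 0.9, "cost": 70.0}

ratio = normalized_perf_per_dollar(shared["perf"], shared["cost"],
                                   m_max["perf"], m_max["cost"])
print(f"normalized performance / $: {ratio:.2f}")
```

The metric rewards cost savings only while performance holds up; a configuration like M-median that is cheap but slows sharply on large workloads falls below 1.0.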

Conclusions
• Motivation: Impending memory capacity wall
• Opportunity: Optimizing for the ensemble
• Solution: Memory disaggregation
  − Transparent, commodity HW, high perf., low cost
  − Dedicated memory blade for expansion, sharing
  − PS and FGRA provide transparent support
• Please see paper for more details!

Thank you! Any questions?
ktlim@umich.edu
