Direct Observation of Clinical Skills During Patient Care

New Insights – Reynolds Meeting 2012

Direct Observation Team: J. Kogan, L. Conforti, W. Iobst, E. Holmboe

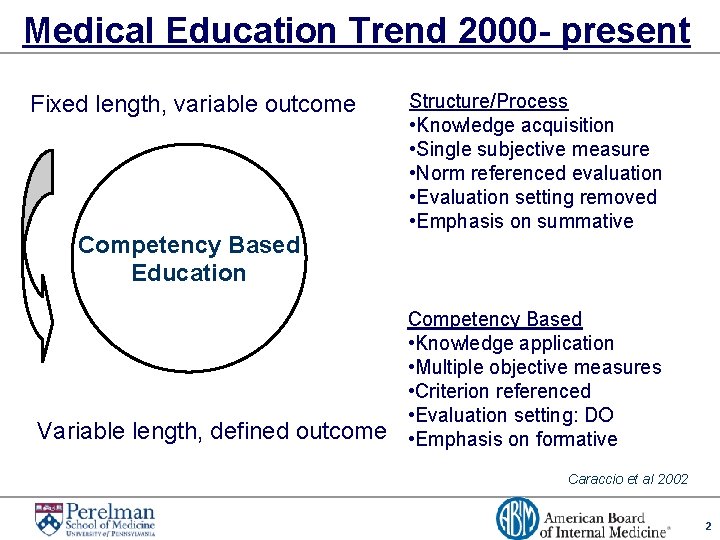

Medical Education Trend, 2000 - present

Trend: from fixed length, variable outcome to Competency Based Education (variable length, defined outcome).

| Structure/Process | Competency Based |
| --- | --- |
| Knowledge acquisition | Knowledge application |
| Single subjective measure | Multiple objective measures |
| Norm referenced evaluation | Criterion referenced |
| Evaluation setting removed | Evaluation setting: direct observation (DO) |
| Emphasis on summative | Emphasis on formative |

Carraccio et al. 2002

In-Training Performance Assessment

- Assessment in authentic situations
  - Learners' ability to combine knowledge, skills, judgments, attitudes in dealing with realistic problems of professional practice
- Assessment in day to day practice
  - Enables assessment of a range of essential competencies, some of which cannot be validly assessed otherwise

Govaerts MJB et al. Adv Health Sci Educ. 2007;12:239-60

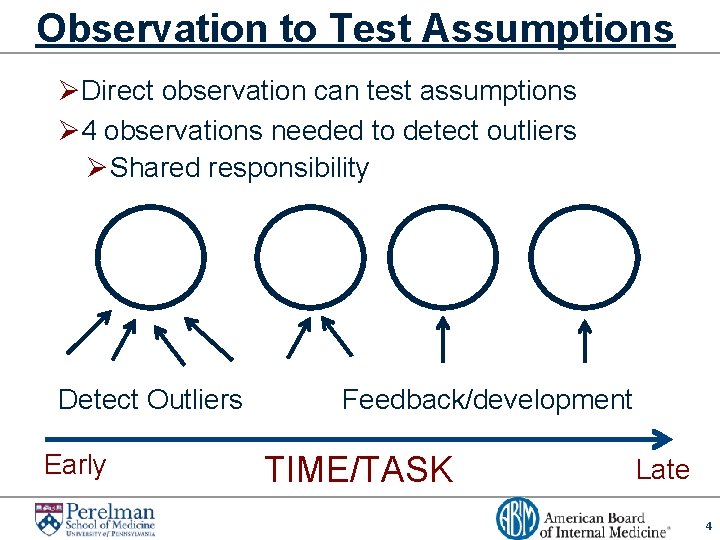

Observation to Test Assumptions

- Direct observation can test assumptions
  - 4 observations needed to detect outliers
  - Shared responsibility

[Diagram: TIME/TASK axis running from Early to Late, labeled "Detect outliers" and "Feedback/development"]

Observation and Safe Patient Care

- Importance of appropriate supervision
- Entrustment

Trainee performance\* × Appropriate level of supervision\*\* must = Safe, effective patient-centered care

\* a function of level of competence in context

\*\* a function of attending competence in context

Types of Supervision

- Routine oversight
  - Clinical oversight planned in advance (i.e. what we normally do)
- Responsive oversight
  - Clinical activities that occur in response to trainee or patient specific issues (i.e. you do more than usual)
- Direct patient care
  - When supervisor moves beyond oversight to actively providing care for the patient
- Backstage oversight
  - Clinical oversight which the trainee is not aware of

Kennedy TJT et al. JGIM 2007;22:1080-85

Your Supervision

- How do you usually supervise?
- When do you supervise more closely?
- How do you change your supervision to ensure patients get safe, effective, patient-centered care?
- What did you learn observing that will change how you supervise going forward?
- Remember: supervision is also for feedback

Entrustment

- “A practitioner has demonstrated the necessary knowledge, skills, and attitudes to be trusted to independently perform this activity.”

Ten Cate O, Scheele F. Acad Med 2007;82:542-7

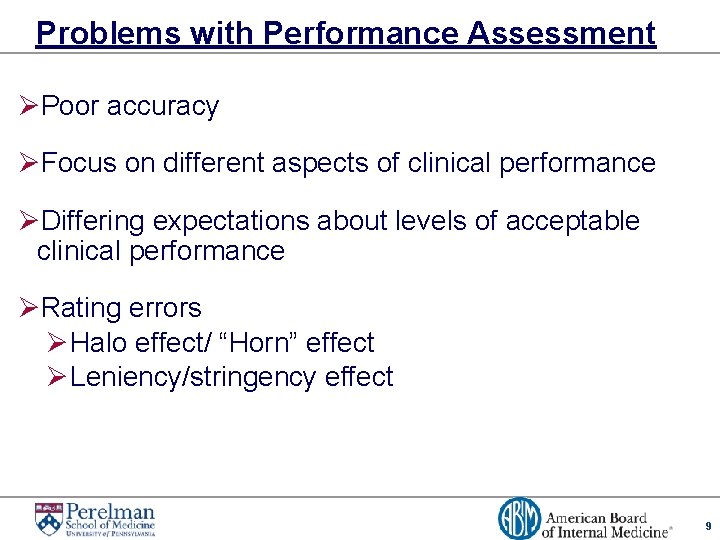

Problems with Performance Assessment

- Poor accuracy
- Focus on different aspects of clinical performance
- Differing expectations about levels of acceptable clinical performance
- Rating errors
  - Halo effect / "Horn" effect
  - Leniency/stringency effect

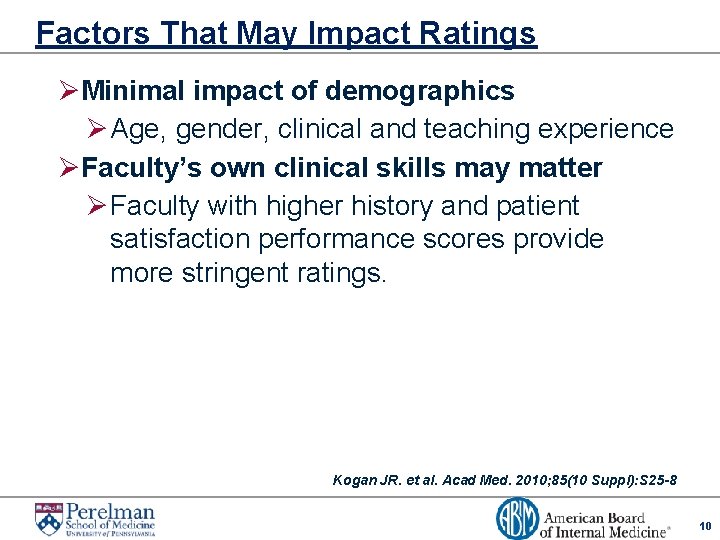

Factors That May Impact Ratings

- Minimal impact of demographics
  - Age, gender, clinical and teaching experience
- Faculty's own clinical skills may matter
  - Faculty with higher history-taking and patient satisfaction performance scores provide more stringent ratings

Kogan JR et al. Acad Med. 2010;85(10 Suppl):S25-8

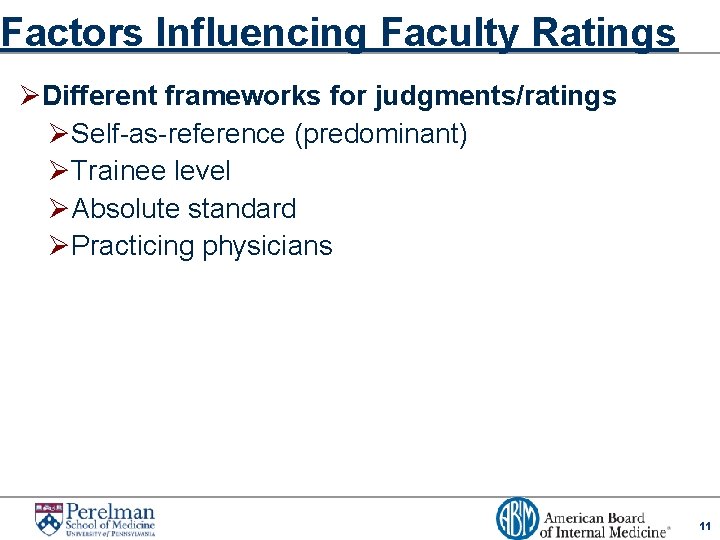

Factors Influencing Faculty Ratings

- Different frameworks for judgments/ratings
  - Self-as-reference (predominant)
  - Trainee level
  - Absolute standard
  - Practicing physicians

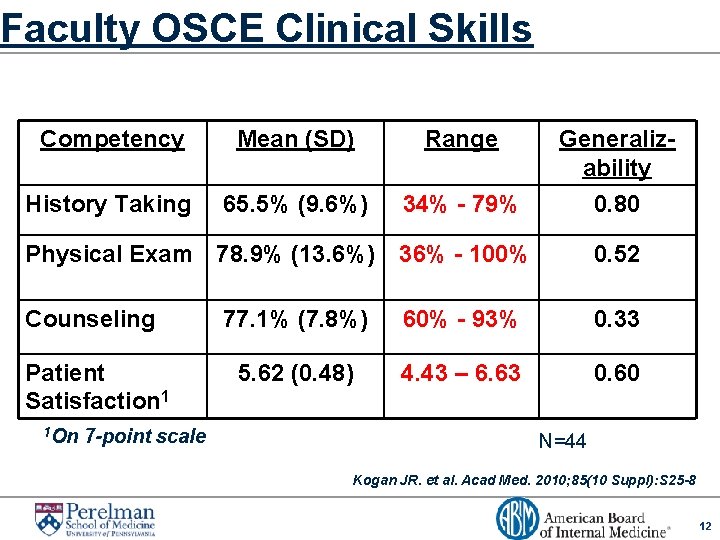

Faculty OSCE Clinical Skills (N=44)

| Competency | Mean (SD) | Range | Generalizability |
| --- | --- | --- | --- |
| History Taking | 65.5% (9.6%) | 34% - 79% | 0.80 |
| Physical Exam | 78.9% (13.6%) | 36% - 100% | 0.52 |
| Counseling | 77.1% (7.8%) | 60% - 93% | 0.33 |
| Patient Satisfaction¹ | 5.62 (0.48) | 4.43 - 6.63 | 0.60 |

¹ On 7-point scale

Kogan JR et al. Acad Med. 2010;85(10 Suppl):S25-8

Other Factors Influencing Ratings

- Factors external to resident performance
- Encounter complexity
- Resident characteristics
- Institutional culture
- Emotional impact of constructive feedback
- Role of inference

Definition of Inference

a. The act or process of deriving logical conclusions from premises known or assumed to be true.

b. The act of reasoning from factual knowledge or evidence.

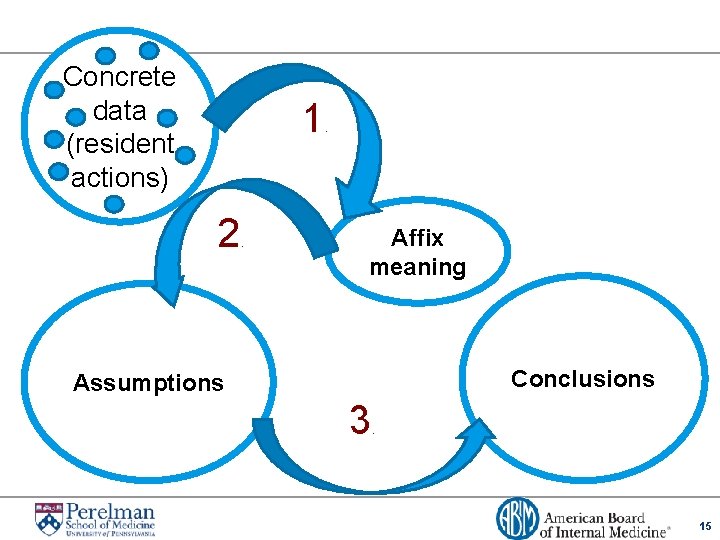

[Diagram with numbered steps 1-3; labels: "Concrete data (resident actions)", "Affix meaning", "Assumptions", "Conclusions"]

The Problem with Inference

- Inferences are not recognized
- Inferences are rarely validated for accuracy
- Inferences can be wrong

Types of Inference about Residents

- Skills
  - Knowledge
  - Competence
  - Work-ethic
- Prior experiences
  - Familiarity with scenario
- Feelings
  - Comfort
  - Confidence
  - Intentions
  - Ownership
- Personality
- Culture

High Level Inference

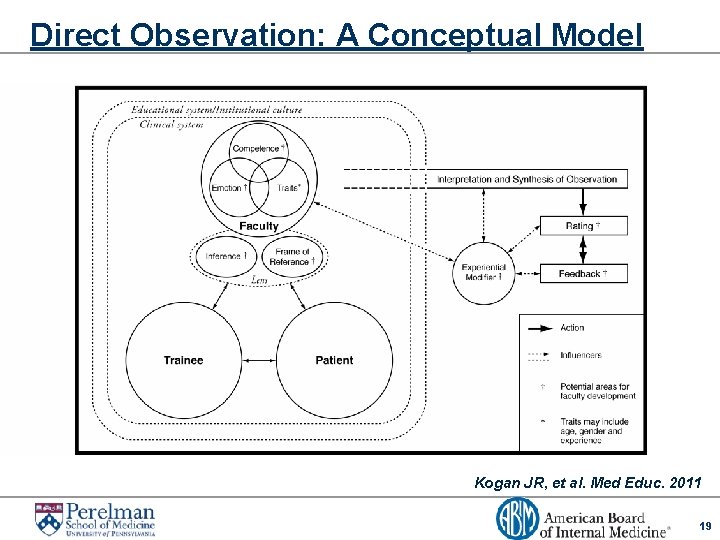

Direct Observation: A Conceptual Model

Kogan JR et al. Med Educ. 2011

International Comparative Work

- Yeates (UK)
  - Differential salience
  - Criterion uncertainty
  - Information integration
- Govaerts (Netherlands)
  - Use of task-specific and person schemas
  - Substantial inference in person schema
  - Rater idiosyncrasy
- Gingerich (Canada)
  - Impact of social models: clusters; person; labels

Achieving Accurate, Reliable Ratings

- Form is not the magic bullet
- Assessment requires faculty training
  - Similar frameworks
  - Agreed upon levels of competence
  - Move to criterion referenced assessment

Questions
