Digital Video Cluster Simulation Martin Milkovits CS 699



Digital Video Cluster Simulation Martin Milkovits CS 699 – Professional Seminar April 26, 2005

Goal of Simulation

- Build an accurate performance model of the interconnecting fabrics in a Digital Video cluster
- Assumptions
  - RAID Controller would follow a triangular distribution of I/O interarrival times
  - Gigabit Ethernet IP edge card would not impress any backpressure on the I/Os
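A minimal sketch of how triangular I/O interarrival times could be drawn, using Python's standard `random.triangular`. The bounds, mode, and units below are hypothetical placeholders chosen for illustration, not the values used in the simulation.

```python
import random

def triangular_interarrivals(n, low_us, high_us, mode_us, seed=0):
    """Draw n interarrival times (microseconds) from a triangular distribution."""
    rng = random.Random(seed)  # seeded so repeated runs give the same stream
    return [rng.triangular(low_us, high_us, mode_us) for _ in range(n)]

def triangular_mean(low, high, mode):
    """Analytic mean of a triangular distribution."""
    return (low + high + mode) / 3.0

# Hypothetical parameters, for illustration only.
samples = triangular_interarrivals(10_000, low_us=400.0, high_us=1200.0, mode_us=800.0)
sample_mean = sum(samples) / len(samples)
```

With enough samples, `sample_mean` should sit close to `triangular_mean(400.0, 1200.0, 800.0)`, which is a quick sanity check on the generator.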

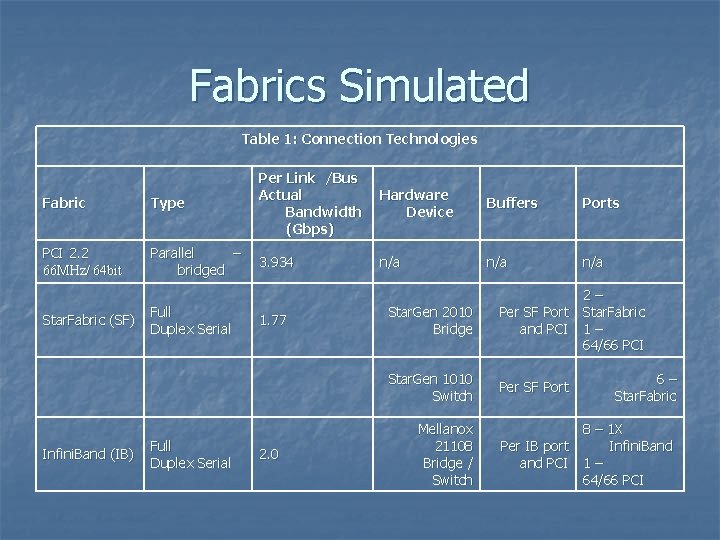

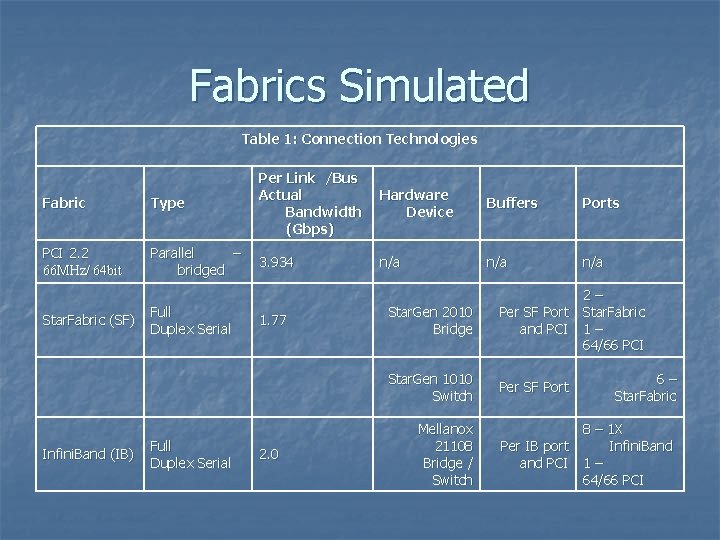

Fabrics Simulated

Table 1: Connection Technologies

| Fabric | Type | Per Link/Bus Actual Bandwidth (Gbps) | Hardware Device | Buffers | Ports |
|---|---|---|---|---|---|
| PCI 2.2, 66 MHz / 64 bit | Parallel, bridged | 3.934 | n/a | n/a | |
| StarFabric (SF) | Full Duplex Serial | 1.77 | StarGen 2010 Bridge | Per SF port and PCI | 2 StarFabric, 1 64/66 PCI |
| | | | StarGen 1010 Switch | Per SF port | 6 StarFabric |
| InfiniBand (IB) | Full Duplex Serial | 2.0 | Mellanox 21108 Bridge / Switch | Per IB port, PCI | 8 1X InfiniBand, 1 64/66 PCI |

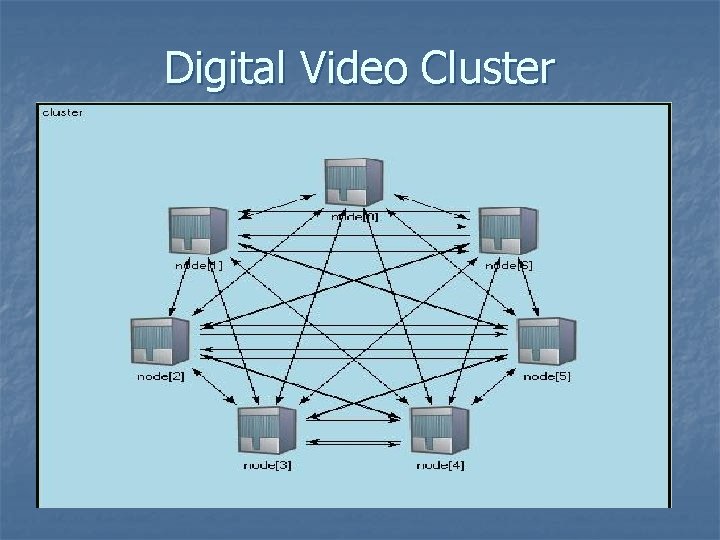

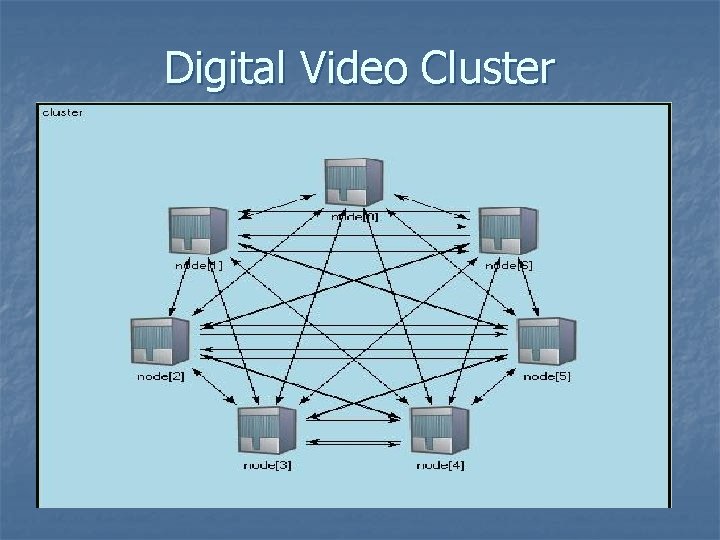

Digital Video Cluster

Digital Video Node

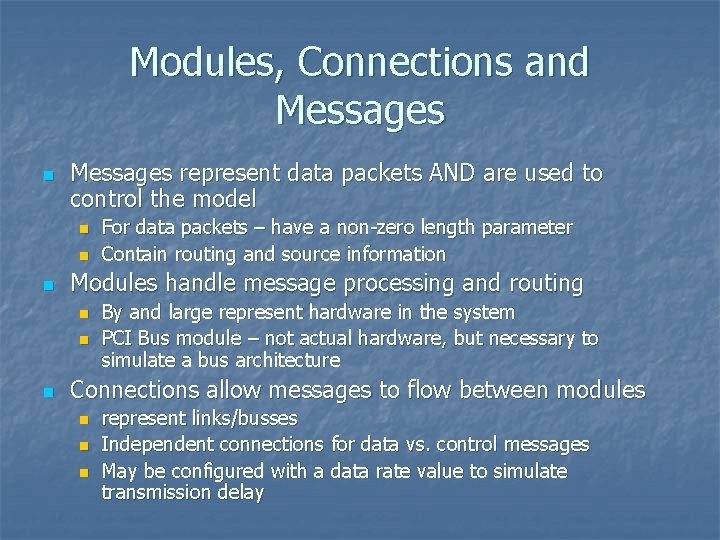

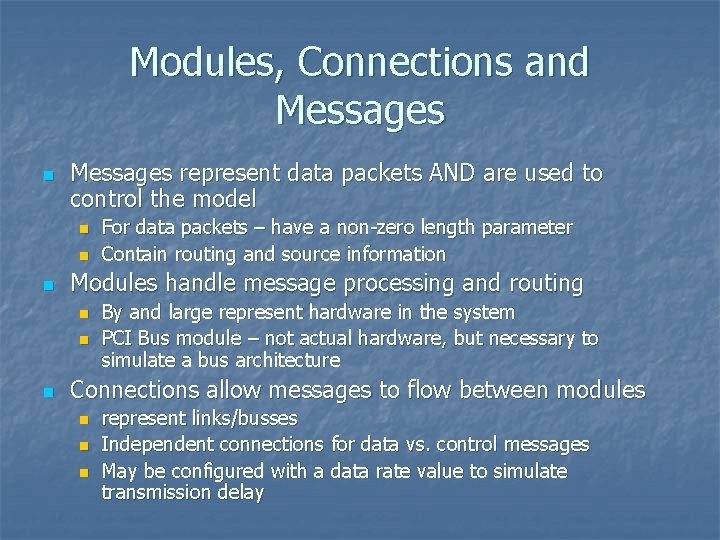

Modules, Connections and Messages

- Messages represent data packets AND are used to control the model
  - For data packets: have a non-zero length parameter
  - Contain routing and source information
- Modules handle message processing and routing
  - By and large represent hardware in the system
  - PCI Bus module: not actual hardware, but necessary to simulate a bus architecture
- Connections allow messages to flow between modules
  - Represent links/busses
  - Independent connections for data vs. control messages
  - May be configured with a data rate value to simulate transmission delay
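The relationship between message length, connection data rate, and transmission delay can be sketched as follows. The class and field names are hypothetical, and the length is assumed to be in bits; the slides do not give the model's actual message layout.

```python
from dataclasses import dataclass

@dataclass
class Message:
    kind: str             # e.g. "RWM" for data, "rqst" for control (labels from the slides)
    source: str           # originating module name
    route: list           # remaining hops, by module name
    length_bits: int = 0  # non-zero only for data packets (units assumed)

    def is_data(self):
        return self.length_bits > 0

@dataclass
class Connection:
    data_rate_bps: float = 0.0  # 0 means no transmission delay is modelled

    def transmission_delay_s(self, msg):
        """Delay in seconds: length / rate for data, zero for control messages."""
        if self.data_rate_bps <= 0 or not msg.is_data():
            return 0.0
        return msg.length_bits / self.data_rate_bps

# A StarFabric link at the 1.77 Gbps figure from Table 1.
sf_link = Connection(data_rate_bps=1.77e9)
data_msg = Message(kind="RWM", source="raid0", route=["bridge", "switch"], length_bits=8192)
ctrl_msg = Message(kind="rqst", source="raid0", route=["bridge"])
data_delay = sf_link.transmission_delay_s(data_msg)
ctrl_delay = sf_link.transmission_delay_s(ctrl_msg)
```

Control messages carry no length here, so they cross a connection with zero delay, which mirrors the separation of data and control connections described above.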

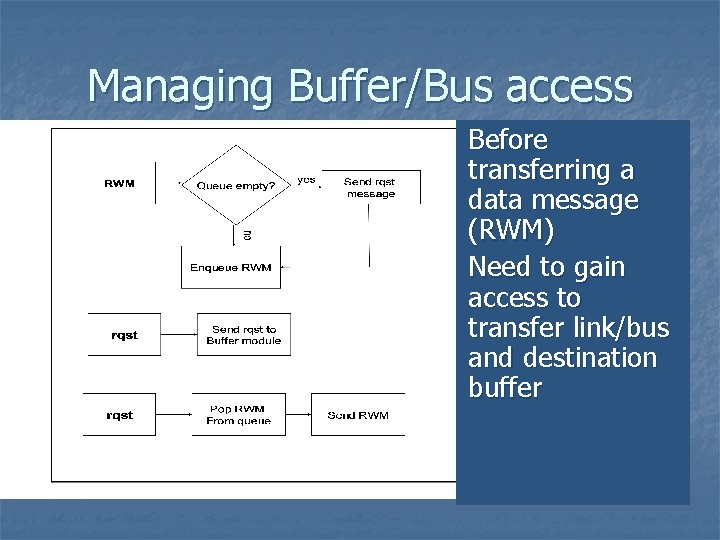

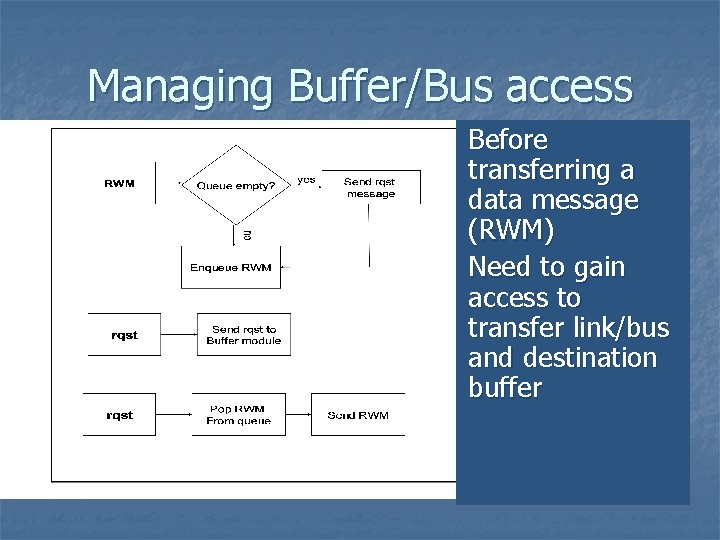

Managing Buffer/Bus access

- Before transferring a data message (RWM)
  - Need to gain access to transfer link/bus and destination buffer
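One way to express the "link and buffer first, then transfer" rule is an all-or-nothing acquire. This is only a sketch under that assumption; the `Resource` class and `try_start_transfer` function are hypothetical names, and the slides do not say how the model actually grants access.

```python
class Resource:
    """A link/bus or a buffer with a fixed number of free slots."""

    def __init__(self, name, slots):
        self.name = name
        self.free = slots

    def try_acquire(self):
        if self.free > 0:
            self.free -= 1
            return True
        return False

    def release(self):
        self.free += 1

def try_start_transfer(link, dest_buffer):
    """Start a transfer only if both the link and the destination buffer are available."""
    if not link.try_acquire():
        return False
    if not dest_buffer.try_acquire():
        link.release()  # do not hold the link while waiting for buffer space
        return False
    return True
```

Releasing the link when the buffer is full keeps other senders from being blocked behind a transfer that cannot proceed.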

PCI Bus Challenges

- Maintain bus fairness
- Allow multiple PCI bus masters to interleave transactions (account for retry overhead)
- Allow bursting if only one master
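A round-robin arbiter is one simple policy that satisfies both fairness and single-master bursting. The slides do not state which arbitration policy the model uses, so the function below is an assumption for illustration, and `next_master` is a hypothetical name.

```python
def next_master(requests, last_granted):
    """Return the index of the next requesting master after last_granted, or None.

    requests is a list of booleans, one per device. The search wraps around,
    so a lone requester is granted again immediately (bursting).
    """
    n = len(requests)
    for offset in range(1, n + 1):
        idx = (last_granted + offset) % n
        if requests[idx]:
            return idx
    return None
```

With two masters requesting, grants alternate between them; with one, the same master keeps the bus on every round.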


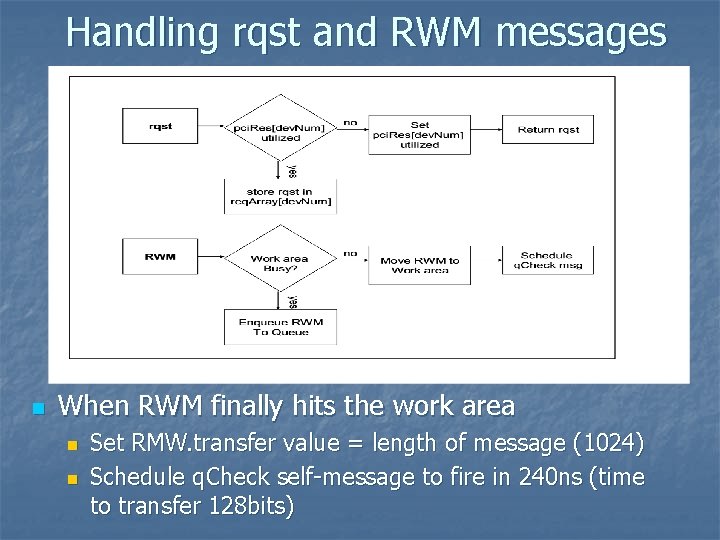

PCI Bus Module Components

- Queue: pending RWMs
- pciBus[maxDevices] array: utilization key
- reqArray[maxDevices]: pending rqst messages
- Work area: manages RWM actually being transferred by the PCI bus
- 3 message types to handle
  - rqst messages from PCI bus masters
  - RWM messages
  - qCheck self-messages
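The components above could be held in a structure like the following. This is a rough sketch, not the model's code: the class name, the dict-based messages, and the reading of the `pciBus` array as per-device utilization bookkeeping are all assumptions, and the `qCheck` handler is simplified to retire the current RWM in one step.

```python
from collections import deque

class PciBusModule:
    """Hypothetical container for the four components listed on the slide."""

    def __init__(self, max_devices):
        self.queue = deque()                   # pending RWMs waiting for the bus
        self.pci_bus = [0] * max_devices       # per-device utilization bookkeeping (meaning assumed)
        self.req_array = [None] * max_devices  # pending rqst message per device, if any
        self.work_area = None                  # RWM currently being transferred

    def handle(self, msg):
        """Dispatch on the three message types named on the slide."""
        kind = msg["kind"]
        if kind == "rqst":
            self.req_array[msg["device"]] = msg
        elif kind == "RWM":
            if self.work_area is None:
                self.work_area = msg
            else:
                self.queue.append(msg)
        elif kind == "qCheck":
            # Simplification: retire the current RWM and promote the next one.
            self.work_area = self.queue.popleft() if self.queue else None
        else:
            raise ValueError(f"unexpected message kind: {kind}")
```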

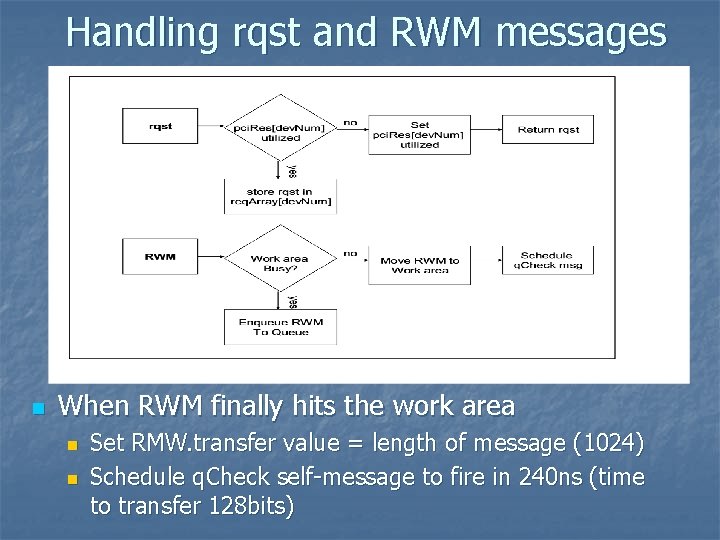

Handling rqst and RWM messages

- When RWM finally hits the work area
  - Set RWM.transfer value = length of message (1024)
  - Schedule qCheck self-message to fire in 240 ns (time to transfer 128 bytes)
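The 240 ns figure can be checked with a little arithmetic: a 64-bit bus with a nominal 15 ns clock (66 MHz PCI) moves 8 bytes per clock, so 240 ns is 16 clocks, or 128 bytes. The sketch below assumes that clock period, assumes the 1024 message length is in bytes, and assumes one `qCheck` per 128-byte slice with no arbitration or retry overhead; none of these details are stated on the slides.

```python
import math

PCI_CLOCK_NS = 15    # nominal clock period of 66 MHz PCI (assumed)
PCI_WIDTH_BITS = 64  # bus width from Table 1

def pci_transfer_time_ns(num_bytes):
    """Time to move num_bytes across the bus, ignoring arbitration and retries."""
    cycles = math.ceil(num_bytes * 8 / PCI_WIDTH_BITS)
    return cycles * PCI_CLOCK_NS

def qcheck_times_ns(length_bytes, slice_bytes=128):
    """Times (ns from start) at which successive qCheck self-messages would fire."""
    times = []
    remaining = length_bytes
    now = 0
    while remaining > 0:
        chunk = min(slice_bytes, remaining)
        now += pci_transfer_time_ns(chunk)
        remaining -= chunk
        times.append(now)
    return times

slice_time = pci_transfer_time_ns(128)
fire_times = qcheck_times_ns(1024)
```

Under these assumptions an uncontended 1024-byte message takes eight slices, and slicing gives other bus masters a chance to interleave between `qCheck` events.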

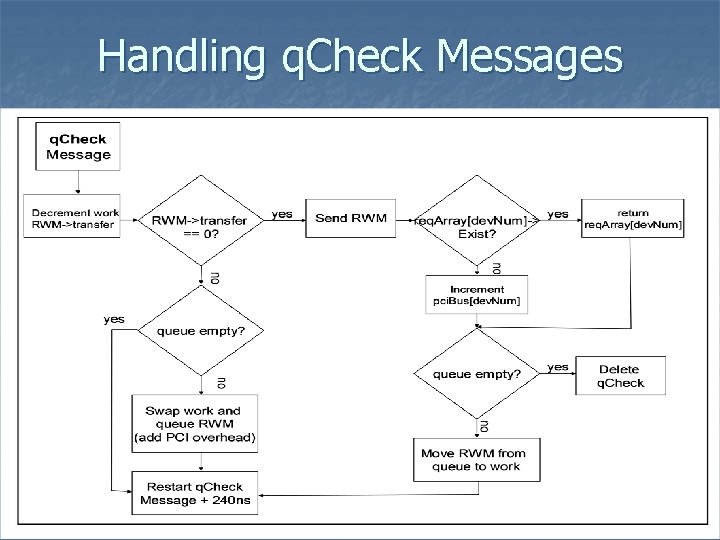

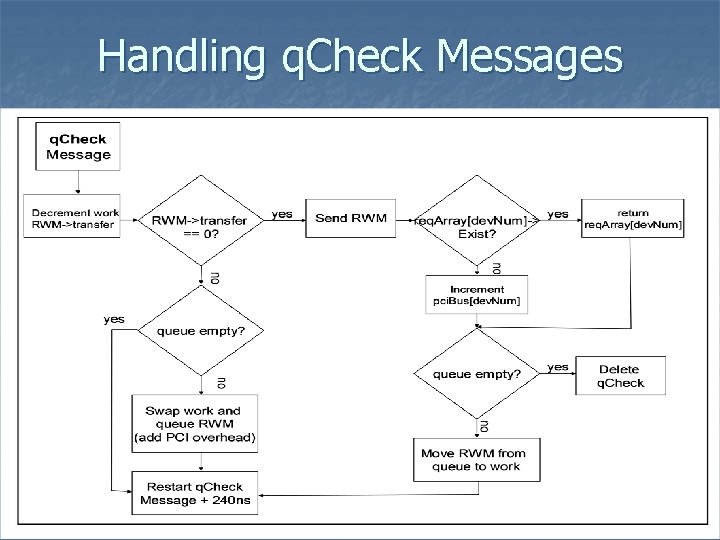

Handling qCheck Messages

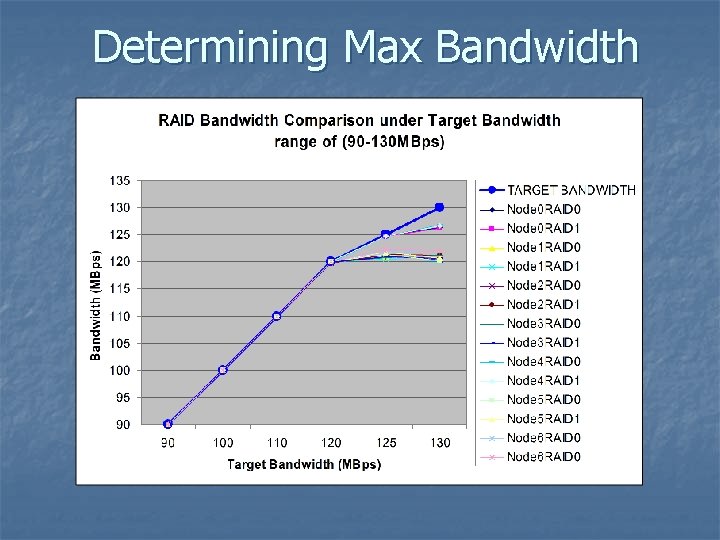

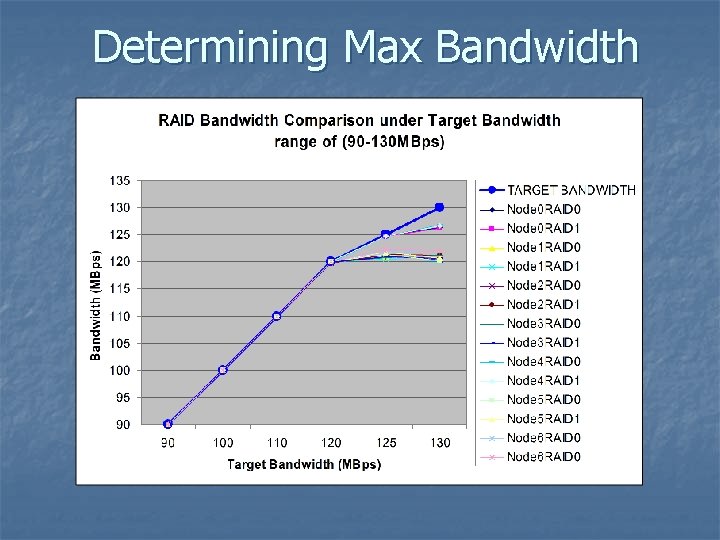

Determining Max Bandwidth

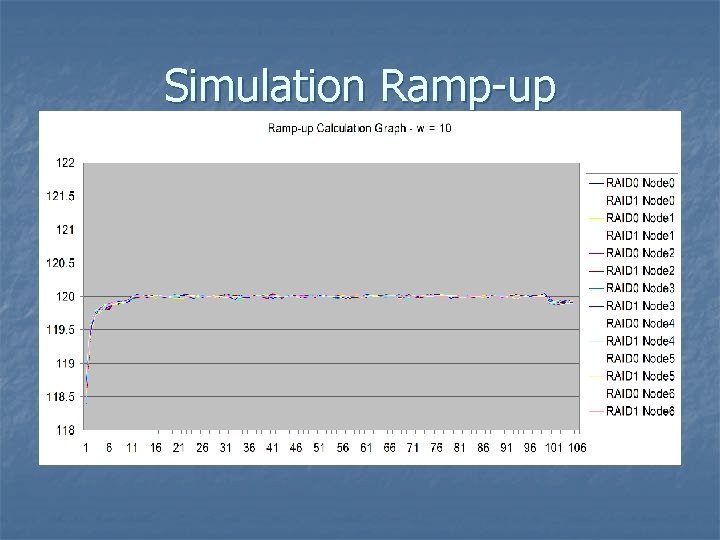

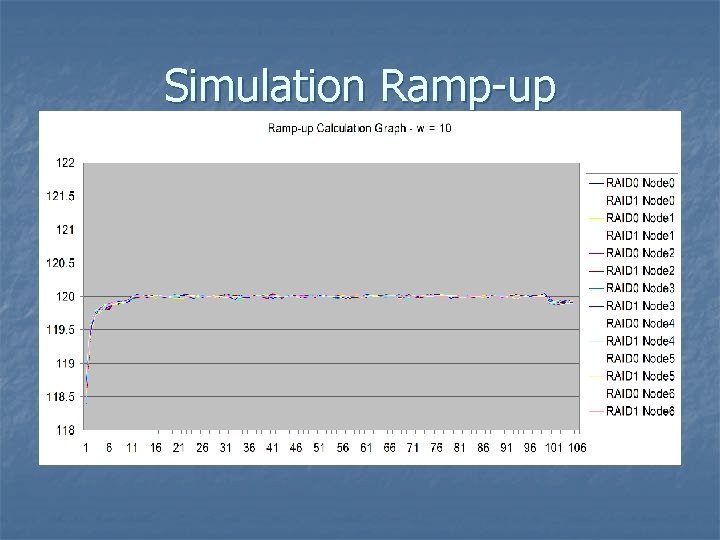

Simulation Ramp-up

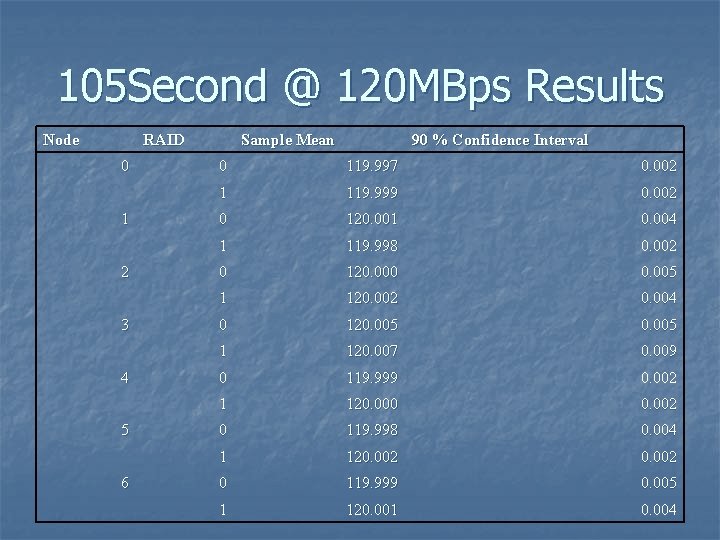

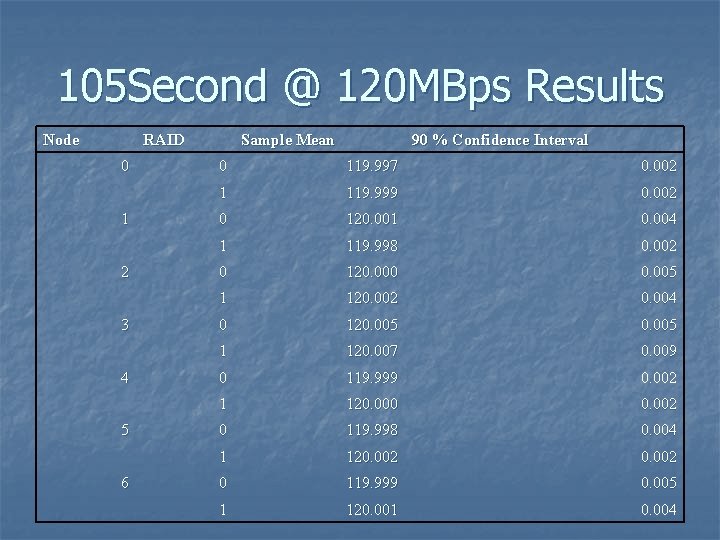

105 Second @ 120 MBps Results

| Node | RAID | Sample Mean | 90% Confidence Interval |
|---|---|---|---|
| 0 | 0 | 119.997 | 0.002 |
| 0 | 1 | 119.999 | 0.002 |
| 1 | 0 | 120.001 | 0.004 |
| 1 | 1 | 119.998 | 0.002 |
| 2 | 0 | 120.000 | 0.005 |
| 2 | 1 | 120.002 | 0.004 |
| 3 | 0 | 120.005 | |
| 3 | 1 | 120.007 | 0.009 |
| 4 | 0 | 119.999 | 0.002 |
| 4 | 1 | 120.000 | 0.002 |
| 5 | 0 | 119.998 | 0.004 |
| 5 | 1 | 120.002 | |
| 6 | 0 | 119.999 | 0.005 |
| 6 | 1 | 120.001 | 0.004 |
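A sample mean with a 90% confidence half-width can be computed as sketched below. The slides do not say how the intervals were derived or how many replications were run, so this uses a normal approximation as a stand-in, and the throughput values are made-up illustration data, not simulation output.

```python
import math
from statistics import NormalDist, mean, stdev

def mean_with_ci(samples, confidence=0.90):
    """Return (sample mean, half-width of the confidence interval).

    Uses a normal approximation; a Student t quantile would be more
    appropriate for a small number of replications.
    """
    m = mean(samples)
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    half_width = z * stdev(samples) / math.sqrt(len(samples))
    return m, half_width

# Hypothetical per-replication throughputs in MBps, for illustration only.
throughputs = [119.996, 120.001, 119.998, 120.003, 119.999, 120.002]
m, hw = mean_with_ci(throughputs)
```

The interval is then reported as `m` plus or minus `hw`.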

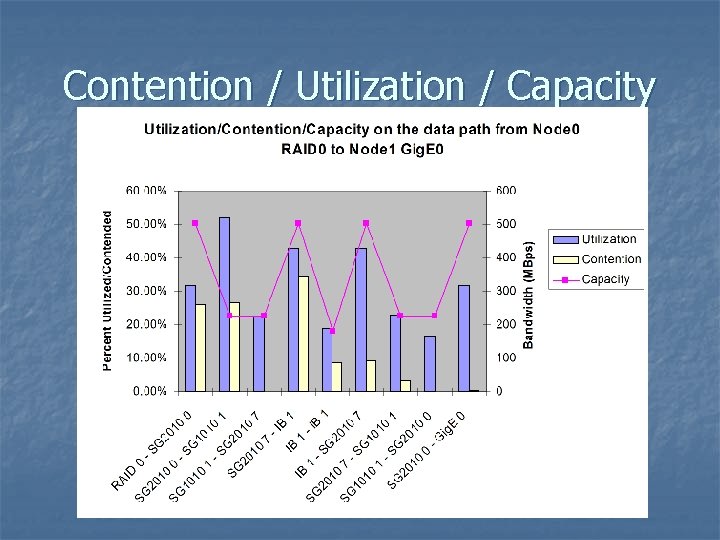

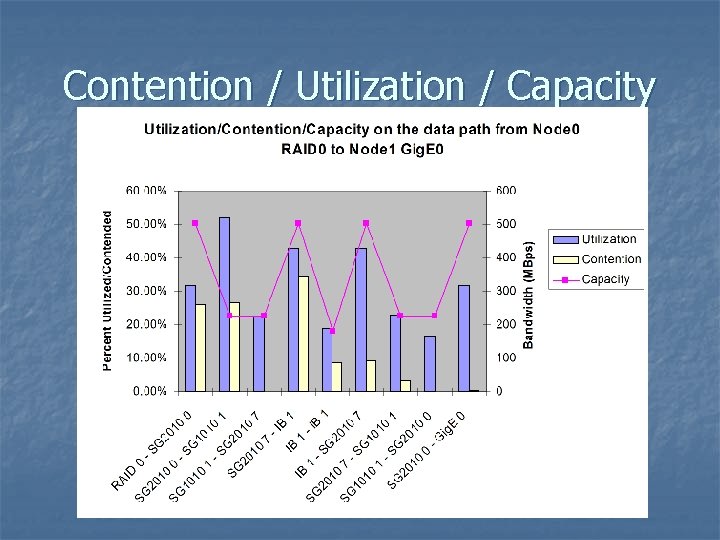

Contention / Utilization / Capacity

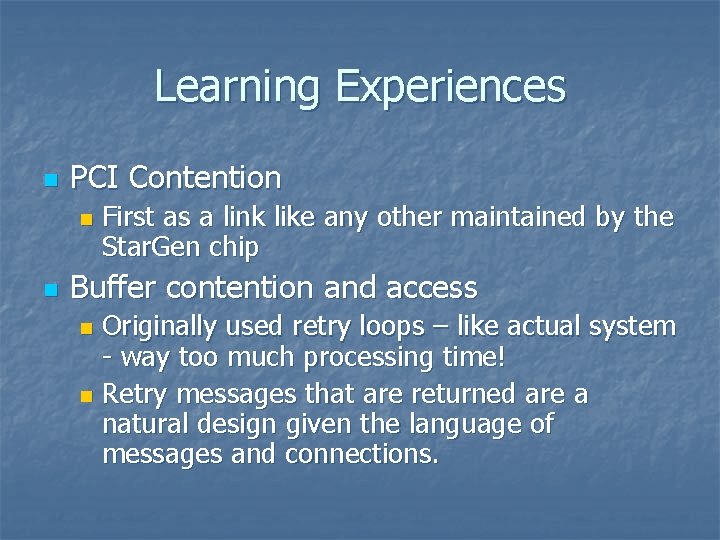

Learning Experiences

- PCI contention
  - First modeled as a link like any other, maintained by the StarGen chip
- Buffer contention and access
  - Originally used retry loops, like the actual system: way too much processing time!
  - Retry messages that are returned are a natural design given the language of messages and connections

Conclusion / Future Work

- Simulation performed within 7% of actual system performance
- PCI bus between IB and StarGen is a potential hotspot
- Complete more iterations with minor system modifications (dualDMA, scheduling)
- Submitted paper to the Winter Simulation Conference