Dictionary data structures for the Inverted Index

- Slides: 29


This lecture (Ch. 3)

- “Tolerant” retrieval
- Brute-force approach
- Edit distance, via dynamic programming
- Weighted edit distance, via dynamic programming
- One-error correction
- Idea of filtering for edit distance
- Wild-card queries
- Spelling correction
- Soundex

One-error correction

The problem (Sec. 3.3.5)

- 1-error = insertion, deletion or substitution of one single character:
  - Farragina → Ferragina (substitution)
  - Feragina → Ferragina (insertion)
  - Ferrragina → Ferragina (deletion)
- You could have many candidates.
- You can still deploy keyboard layout or other statistical information (web, Wikipedia, …).

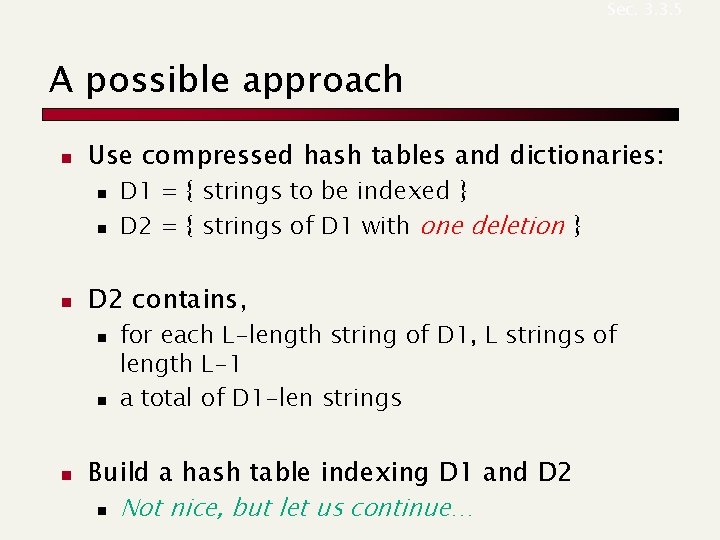

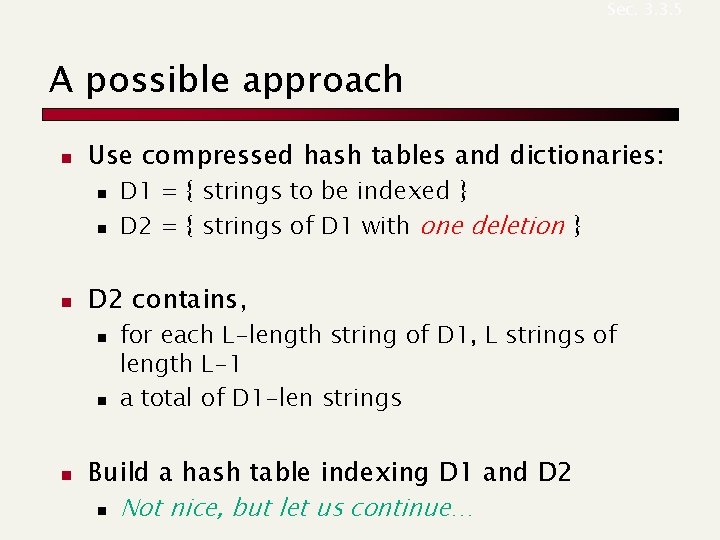

A possible approach (Sec. 3.3.5)

- Use compressed hash tables and dictionaries:
  - D1 = { strings to be indexed }
  - D2 = { strings of D1 with one deletion }
- D2 contains, for each string of D1 of length L, L strings of length L−1, so the number of strings in D2 is at most the total length of the strings in D1.
- Build a hash table indexing D1 and D2.
- Not nice, but let us continue…

An example (Sec. 3.3.5)

D1 = {cat, cast, cst, dag}

D2 = {ag [dag], ast [cast], at [cat], ca [cat], cas [cast], cat [cast], cs [cst], cst [cast], ct [cat, cst], da [dag], dg [dag], st [cst]}

- Every string in D2 keeps a pointer to its originating string(s) in D1 (indicated between […]).
- Query(«cat»), with P = cat and p = |P|:
  - Perfect match: search for P in D1, 1 query [e.g. cat]
  - Insertion in P: search for P in D2, 1 query [e.g. cat → cast]
  - Deletion in P: search for P minus 1 char in D1, p queries [e.g. {at, ct, ca} → no match]
  - Substitution: search for P minus 1 char in D2, p queries [e.g. {at, ct, ca} → at (cat), ct (cat, cst), ca (cat)]
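The construction of D2 in this example can be reproduced with a few lines of Python. This is only a sketch: plain in-memory dictionaries stand in for the compressed hash tables, and the function names are illustrative assumptions, not part of the lecture.

```python
from collections import defaultdict

def one_deletion_variants(s):
    """All distinct strings obtained from s by deleting exactly one character."""
    return {s[:i] + s[i + 1:] for i in range(len(s))}

def build_deletion_dictionary(d1):
    """Map each one-deletion string to the sorted list of D1 strings it comes from."""
    d2 = defaultdict(set)
    for term in d1:
        for variant in one_deletion_variants(term):
            d2[variant].add(term)  # the "pointer" back to the originating string
    return {variant: sorted(origins) for variant, origins in d2.items()}

D1 = {"cat", "cast", "cst", "dag"}
D2 = build_deletion_dictionary(D1)
for variant in sorted(D2):
    print(variant, D2[variant])
```

Note that a string of D2 may point to several strings of D1: here `ct` is produced both by `cat` and by `cst`.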

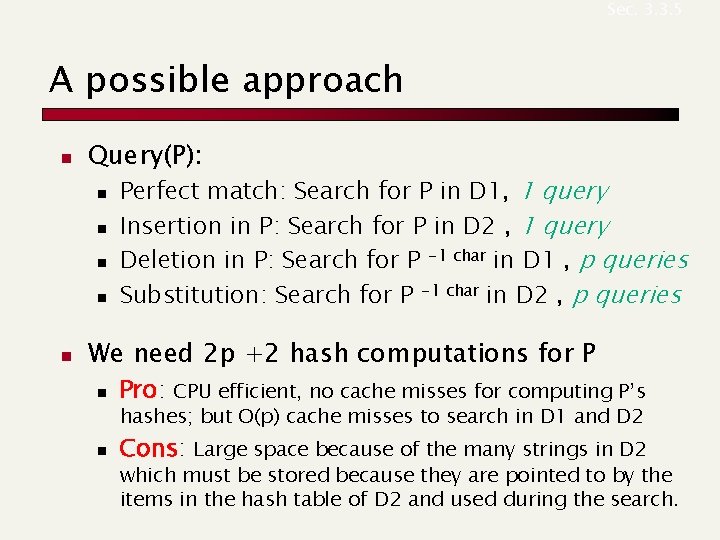

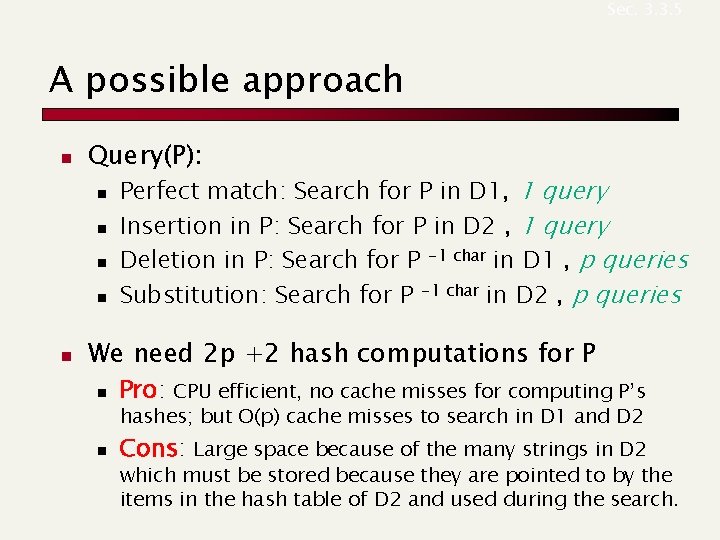

A possible approach (Sec. 3.3.5)

- Query(P), where p = |P|:
  - Perfect match: search for P in D1, 1 query
  - Insertion in P: search for P in D2, 1 query
  - Deletion in P: search for P minus 1 char in D1, p queries
  - Substitution: search for P minus 1 char in D2, p queries
- We need 2p + 2 hash computations for P.
- Pro: CPU efficient, no cache misses for computing P's hashes; but O(p) cache misses to search in D1 and D2.
- Cons: large space, because the many strings of D2 must be stored: they are pointed to by the items in the hash table of D2 and are used during the search.
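The four query cases can be sketched as follows, again with Python sets and dicts standing in for the hash tables. The helper names and the final verification step (`within_one_edit`) are assumptions of this sketch, not something the slide prescribes.

```python
from collections import defaultdict

def deletions(s):
    """All distinct strings obtained from s by deleting one character."""
    return {s[:i] + s[i + 1:] for i in range(len(s))}

def build(terms):
    """Return (D1, D2): D2 maps each one-deletion string to its originating terms."""
    d2 = defaultdict(set)
    for term in terms:
        for v in deletions(term):
            d2[v].add(term)
    return set(terms), d2

def within_one_edit(a, b):
    """True if a and b differ by at most one insertion, deletion or substitution."""
    if abs(len(a) - len(b)) > 1:
        return False
    if len(a) > len(b):
        a, b = b, a                      # make a the shorter (or equal-length) string
    i = 0
    while i < len(a) and a[i] == b[i]:   # skip the common prefix
        i += 1
    if len(a) == len(b):
        return a[i + 1:] == b[i + 1:]    # at most one substitution at position i
    return a[i:] == b[i + 1:]            # b has one extra character at position i

def query(p, d1, d2):
    candidates = set()
    if p in d1:                          # perfect match: 1 lookup in D1
        candidates.add(p)
    candidates |= d2.get(p, set())       # insertion in P: 1 lookup in D2
    for v in deletions(p):               # at most p lookups in D1 and p in D2
        if v in d1:                      # deletion in P
            candidates.add(v)
        candidates |= d2.get(v, set())   # substitution candidates
    # Verification step (assumption of this sketch): discard candidates
    # that are actually more than one edit away from P.
    return {t for t in candidates if within_one_edit(p, t)}

D1, D2 = build({"cat", "cast", "cst", "dag"})
print(sorted(query("cat", D1, D2)))
```

The verification matters for the substitution case: with D1 = {bcd} and P = abc, both strings yield `bc` after one deletion, yet their edit distance is 2, so the candidate has to be rejected.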

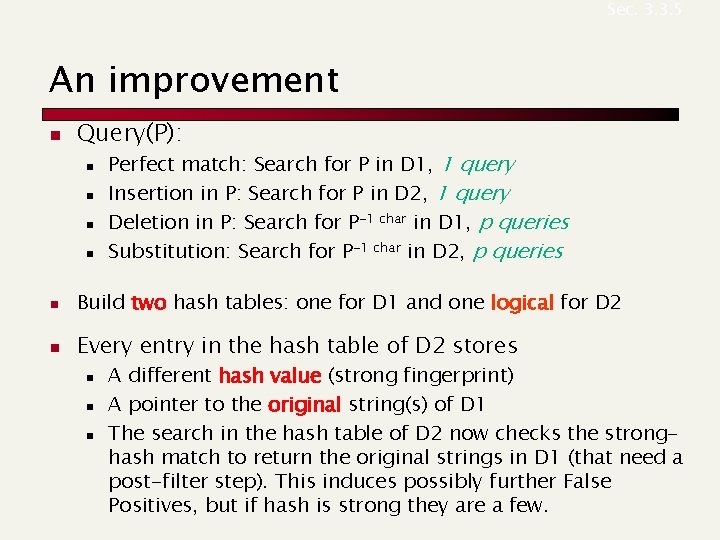

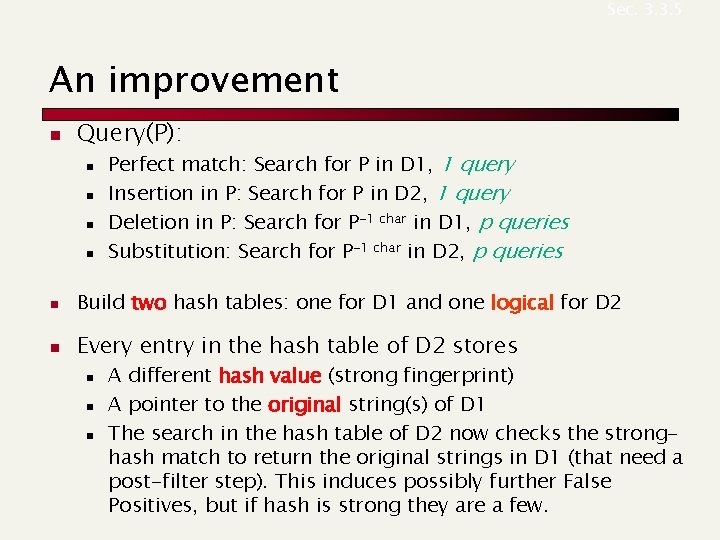

An improvement (Sec. 3.3.5)

- Query(P), where p = |P|:
  - Perfect match: search for P in D1, 1 query
  - Insertion in P: search for P in D2, 1 query
  - Deletion in P: search for P minus 1 char in D1, p queries
  - Substitution: search for P minus 1 char in D2, p queries
- Build two hash tables: one for D1 and a logical one for D2.
- Every entry in the hash table of D2 stores:
  - a different hash value (a strong fingerprint)
  - a pointer to the original string(s) of D1
- The search in the hash table of D2 now checks the strong-hash match to return the original strings in D1 (which need a post-filter step).
- This possibly induces further false positives, but if the hash is strong they are few.
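One way to picture the "logical" table for D2 is sketched below: the strings of D2 are never stored, only a fingerprint and an index into D1. The table size, the use of CRC32 for the bucket, and the 8-byte BLAKE2b digest as the strong fingerprint are all assumptions made for illustration.

```python
import hashlib
import zlib

TABLE_SIZE = 64  # hypothetical number of buckets

def bucket_of(s):
    """Bucket index of a string (the table's ordinary hash)."""
    return zlib.crc32(s.encode("utf-8")) % TABLE_SIZE

def fingerprint(s):
    """A different, stronger hash stored in the entry instead of the string."""
    return hashlib.blake2b(s.encode("utf-8"), digest_size=8).digest()

def build_logical_d2(d1_list):
    """Each entry is (fingerprint of a one-deletion string, index of its D1 origin)."""
    table = [[] for _ in range(TABLE_SIZE)]
    for idx, term in enumerate(d1_list):
        for i in range(len(term)):
            variant = term[:i] + term[i + 1:]
            table[bucket_of(variant)].append((fingerprint(variant), idx))
    return table

def lookup(table, d1_list, s):
    """Return the D1 strings whose entries match the fingerprint of s."""
    fp = fingerprint(s)
    return {d1_list[idx] for stored, idx in table[bucket_of(s)] if stored == fp}

D1 = ["cat", "cast", "cst", "dag"]
table = build_logical_d2(D1)
print(sorted(lookup(table, D1, "ct")))
```

Since only fingerprints are compared, a returned string is just a candidate; it still has to be checked against the query, which is the post-filter step mentioned on the slide.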

Overlap vs Edit distances

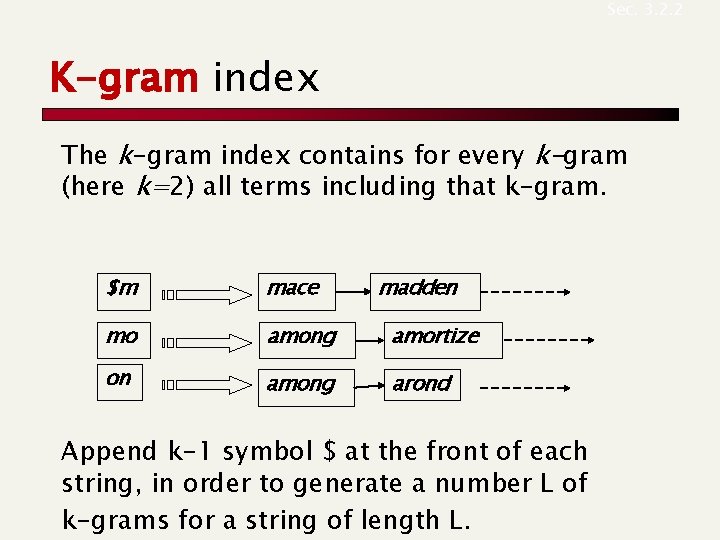

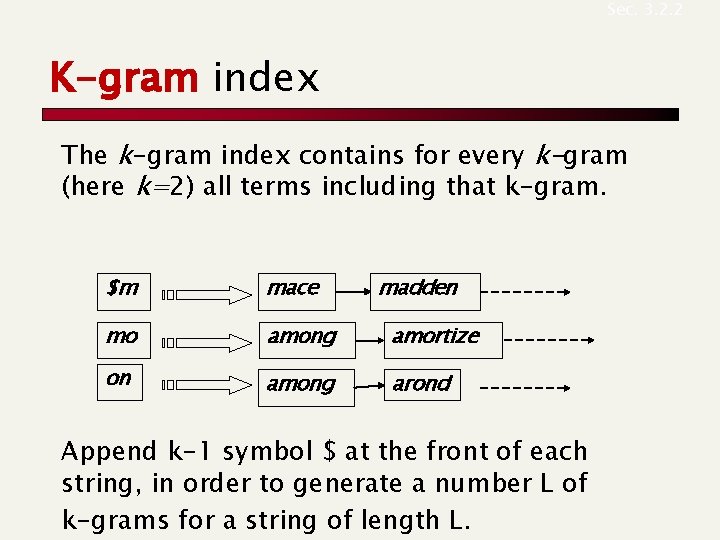

K-gram index (Sec. 3.2.2)

The k-gram index contains, for every k-gram (here k = 2), all terms including that k-gram:

- $m → mace, madden
- mo → among, amortize
- on → among, arond

Append k−1 symbols $ at the front of each string, so that a string of length L generates exactly L k-grams.
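A small sketch of how such an index could be built in Python, assuming an in-memory dictionary of sorted lists as the postings structure (the function names are illustrative):

```python
from collections import defaultdict

def kgrams(term, k=2):
    """k-grams of term after prepending k-1 '$' symbols; yields len(term) k-grams."""
    padded = "$" * (k - 1) + term
    return [padded[i:i + k] for i in range(len(padded) - k + 1)]

def build_kgram_index(lexicon, k=2):
    """Map every k-gram to the sorted list of terms containing it."""
    index = defaultdict(set)
    for term in lexicon:
        for gram in kgrams(term, k):
            index[gram].add(term)
    return {gram: sorted(terms) for gram, terms in index.items()}

lexicon = ["mace", "madden", "among", "amortize", "arond"]
index = build_kgram_index(lexicon)
for gram in ("$m", "mo", "on"):
    print(gram, index[gram])
```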

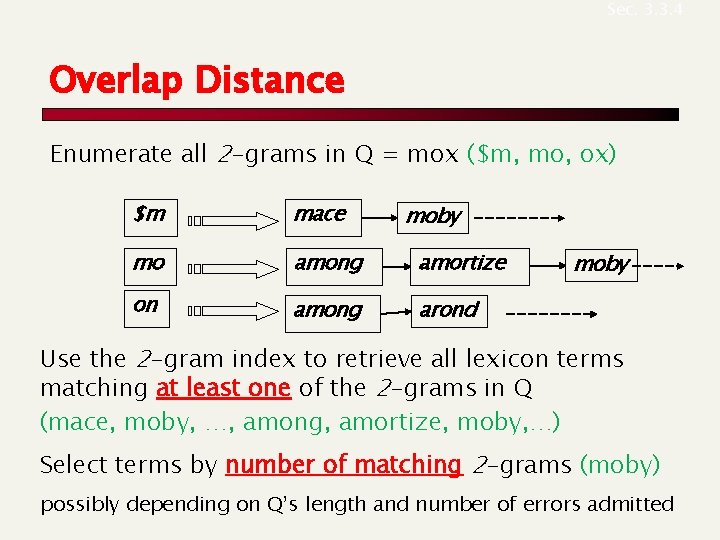

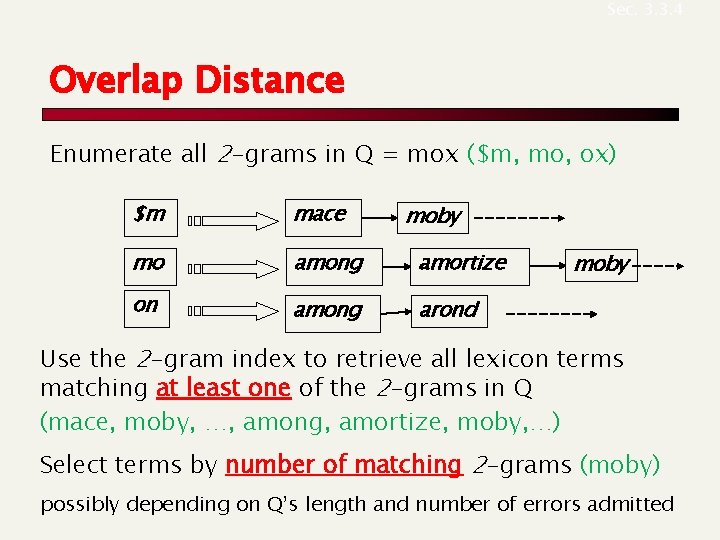

Overlap Distance (Sec. 3.3.4)

- Enumerate all 2-grams in Q = mox: ($m, mo, ox).
- Index excerpt: $m → mace, moby, …; mo → among, amortize, moby, …; on → among, arond.
- Use the 2-gram index to retrieve all lexicon terms matching at least one of the 2-grams in Q (mace, moby, …, among, amortize, moby, …).
- Select terms by number of matching 2-grams (moby), possibly depending on Q's length and on the number of errors admitted.

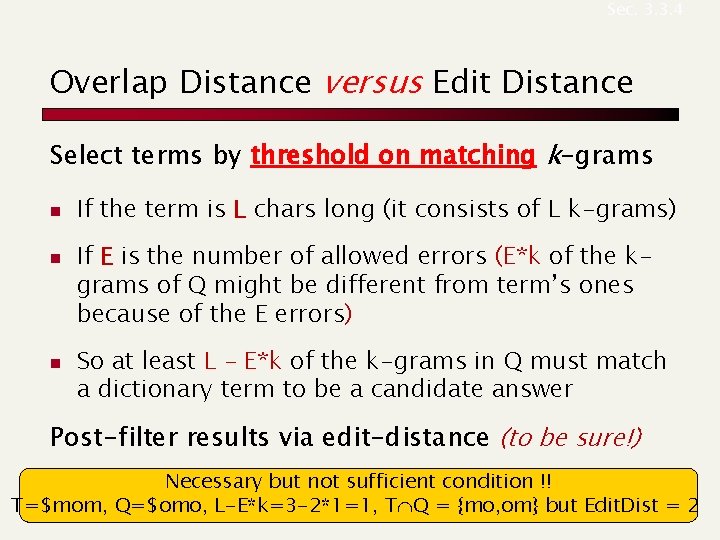

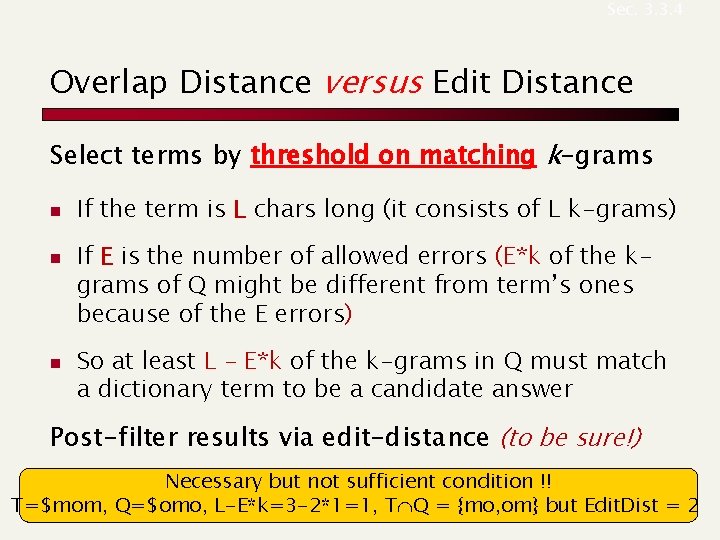

Overlap Distance versus Edit Distance (Sec. 3.3.4)

- Select terms by a threshold on matching k-grams:
  - If the term is L chars long, it consists of L k-grams.
  - If E is the number of allowed errors, up to E*k of the k-grams of Q might differ from the term's ones because of the E errors.
  - So at least L − E*k of the k-grams in Q must match a dictionary term for it to be a candidate answer.
- Post-filter results via edit distance (to be sure!).
- The condition is necessary but not sufficient! Example with k = 2 and E = 1: T = $mom, Q = $omo, L − E*k = 3 − 1*2 = 1, T ∩ Q = {mo, om}, but the edit distance is 2.
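The mom/omo counterexample can be checked mechanically. The sketch below assumes k-grams are compared as sets (as in T ∩ Q) and uses a textbook dynamic-programming edit distance for the post-filter; the function names are illustrative.

```python
def kgram_set(term, k):
    """Set of k-grams of term, padded with k-1 leading '$' symbols."""
    padded = "$" * (k - 1) + term
    return {padded[i:i + k] for i in range(len(padded) - k + 1)}

def passes_overlap_filter(term, query, k, errors):
    """Necessary condition: at least L - E*k shared k-grams, with L = len(term)."""
    threshold = len(term) - errors * k
    return len(kgram_set(term, k) & kgram_set(query, k)) >= threshold

def edit_distance(a, b):
    """Classic dynamic program over insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # match or substitute
        prev = cur
    return prev[-1]

term, query, k, errors = "mom", "omo", 2, 1
print(passes_overlap_filter(term, query, k, errors))   # candidate according to the filter?
print(edit_distance(term, query) <= errors)            # survives the post-filter?
```

The filter accepts the pair, while the exact check rejects it, which is why the post-filter cannot be skipped.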

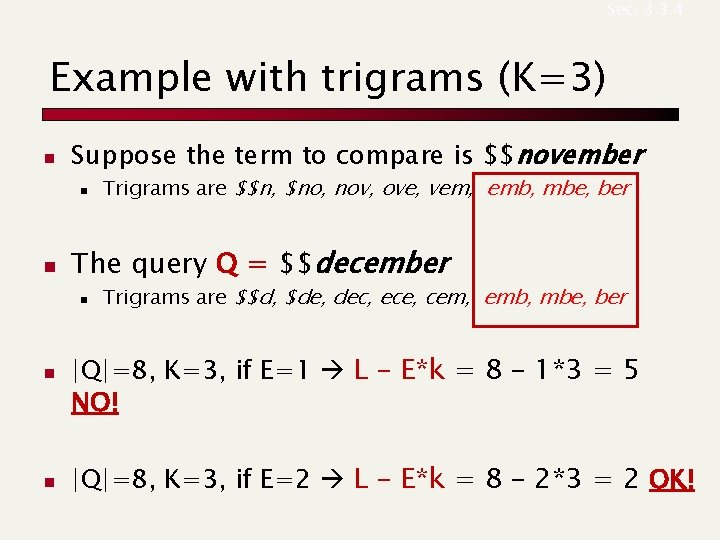

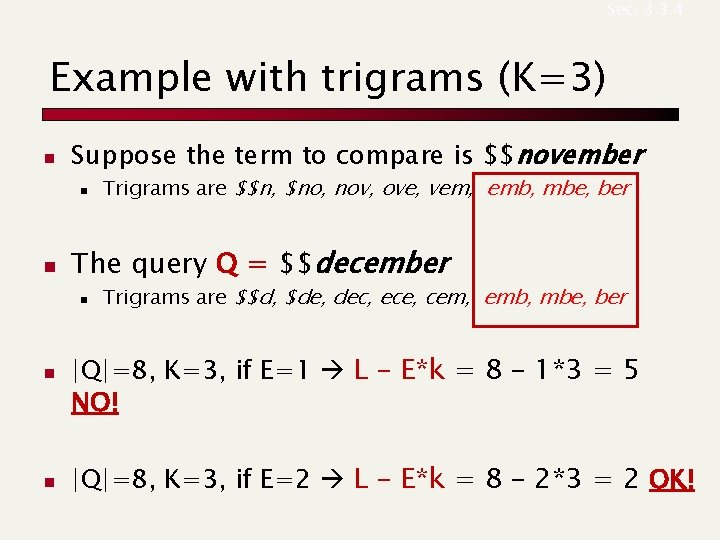

Example with trigrams (K=3) (Sec. 3.3.4)

- Suppose the term to compare is $$november.
  - Trigrams are $$n, $no, nov, ove, vem, emb, mbe, ber
- The query is Q = $$december.
  - Trigrams are $$d, $de, dec, ece, cem, emb, mbe, ber
- The two strings share 3 trigrams: emb, mbe, ber.
- |Q| = 8, K = 3, if E = 1: L − E*k = 8 − 1*3 = 5, so NO! (3 < 5)
- |Q| = 8, K = 3, if E = 2: L − E*k = 8 − 2*3 = 2, so OK! (3 ≥ 2)
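The arithmetic of this example can be replayed in a few lines of Python (a sketch; `trigrams` is an illustrative helper name):

```python
def trigrams(term):
    """Trigrams of term after prepending two '$' symbols."""
    padded = "$$" + term
    return [padded[i:i + 3] for i in range(len(padded) - 2)]

term_grams = trigrams("november")
query_grams = trigrams("december")
shared = set(term_grams) & set(query_grams)

L, k = len("november"), 3
for errors in (1, 2):
    threshold = L - errors * k
    verdict = "OK" if len(shared) >= threshold else "NO"
    print(f"E={errors}: need {threshold} shared trigrams, have {len(shared)} -> {verdict}")
```

With E = 2 the term passes the filter, but it is only a candidate: november and december differ in their first three characters, so the edit-distance post-filter would still reject it.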

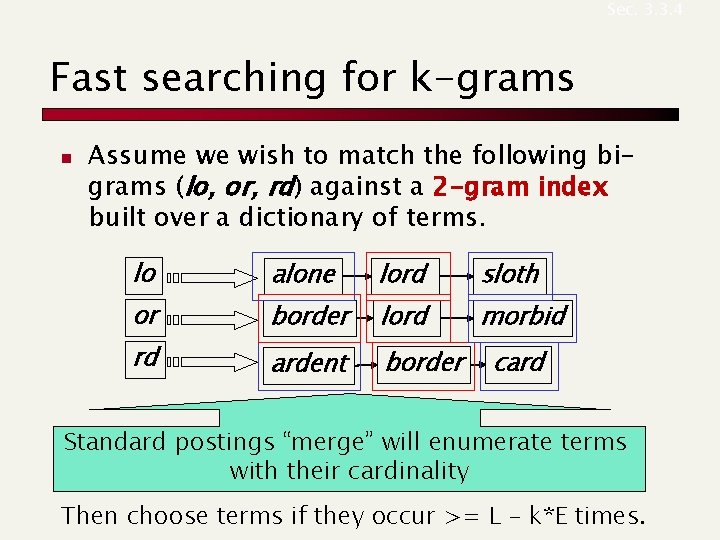

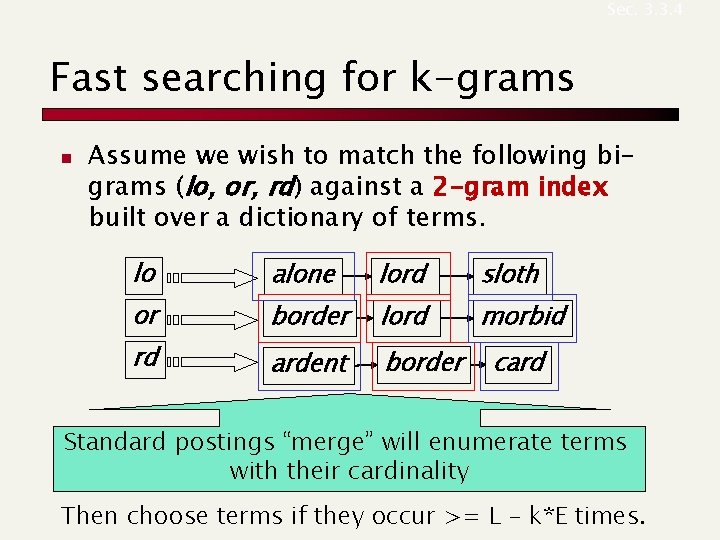

Fast searching for k-grams (Sec. 3.3.4)

- Assume we wish to match the bigrams (lo, or, rd) against a 2-gram index built over a dictionary of terms:
  - lo → alone, lord, sloth
  - or → border, lord, morbid
  - rd → ardent, border, card
- A standard postings “merge” will enumerate the terms together with their number of occurrences.
- Then choose the terms that occur ≥ L − k*E times.
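A possible rendering of the merge step in Python, assuming the postings lists are sorted and using exactly the three lists shown on the slide (the threshold value in the usage line is an arbitrary illustration):

```python
import heapq
from itertools import groupby

postings = {
    "lo": ["alone", "lord", "sloth"],
    "or": ["border", "lord", "morbid"],
    "rd": ["ardent", "border", "card"],
}

def merge_with_counts(lists):
    """Multiway merge of sorted postings; returns {term: number of lists containing it}."""
    merged = heapq.merge(*lists)  # requires each input list to be sorted
    return {term: sum(1 for _ in group) for term, group in groupby(merged)}

def select(lists, threshold):
    """Terms that occur in at least `threshold` of the postings lists."""
    counts = merge_with_counts(lists)
    return [term for term, count in counts.items() if count >= threshold]

query_bigrams = ["lo", "or", "rd"]
lists = [postings[g] for g in query_bigrams]
print(merge_with_counts(lists))
print(select(lists, threshold=2))
```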

Context-sensitive spell correction (Sec. 3.3.5)

Text: I flew from Heathrow to Narita.

- Consider the phrase query “flew form Heathrow”.
- We'd like to respond: Did you mean “flew from Heathrow”? because no docs matched the query phrase.

General issues in spell correction (Sec. 3.3.5)

- We enumerate multiple alternatives and then need to figure out which to present to the user for “Did you mean?”
- Use heuristics:
  - The alternative hitting most docs
  - Query log analysis + tweaking, especially for popular, topical queries
- Spell correction is computationally expensive:
  - Run it only on queries that matched few docs

Other sophisticated queries

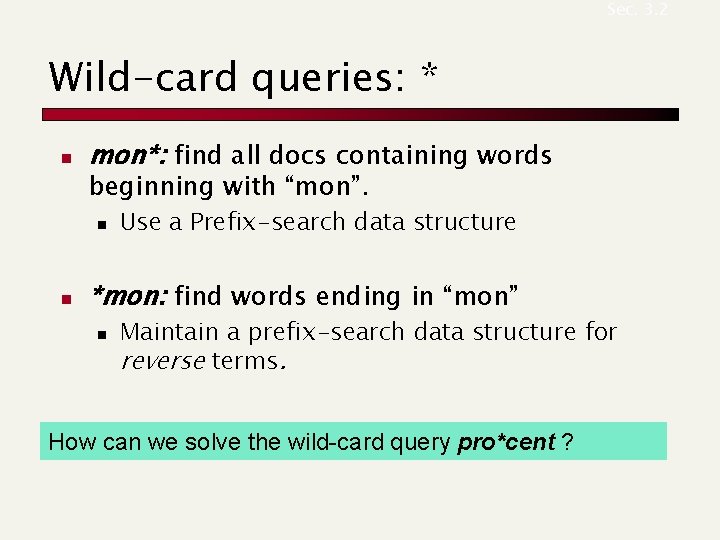

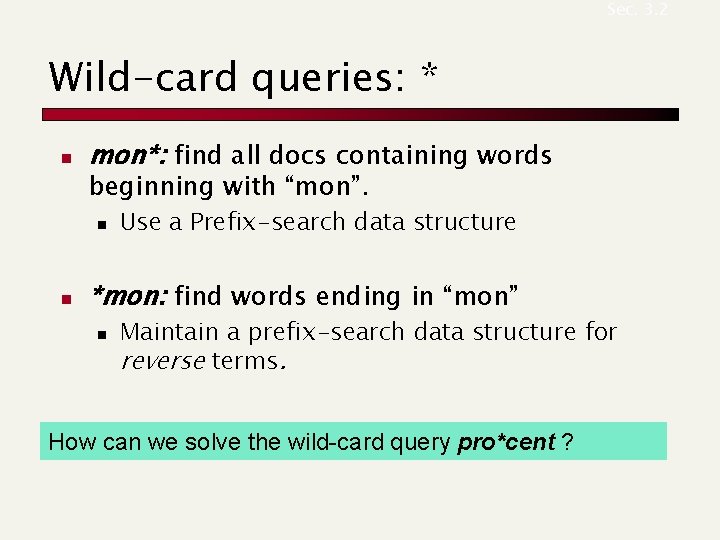

Wild-card queries: * (Sec. 3.2)

- mon*: find all docs containing words beginning with “mon”.
  - Use a prefix-search data structure.
- *mon: find words ending in “mon”.
  - Maintain a prefix-search data structure for the reversed terms.
- How can we solve the wild-card query pro*cent?
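As an illustration of the two simple cases, the sketch below uses a sorted array with binary search as the prefix-search data structure (a trie would serve equally well). The lexicon and the `"\uffff"` sentinel, which assumes no term contains that character, are assumptions of the example.

```python
from bisect import bisect_left

def prefix_search(sorted_terms, prefix):
    """All terms in a sorted list that start with prefix."""
    lo = bisect_left(sorted_terms, prefix)
    hi = bisect_left(sorted_terms, prefix + "\uffff")  # just past the last match
    return sorted_terms[lo:hi]

lexicon = ["demon", "lemon", "money", "monkey", "month", "moon", "salmon"]
forward = sorted(lexicon)
backward = sorted(term[::-1] for term in lexicon)  # reversed terms for suffix queries

def starts_with(prefix):          # query of the form  mon*
    return prefix_search(forward, prefix)

def ends_with(suffix):            # query of the form  *mon
    hits = prefix_search(backward, suffix[::-1])
    return sorted(term[::-1] for term in hits)

print(starts_with("mon"))
print(ends_with("mon"))
```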

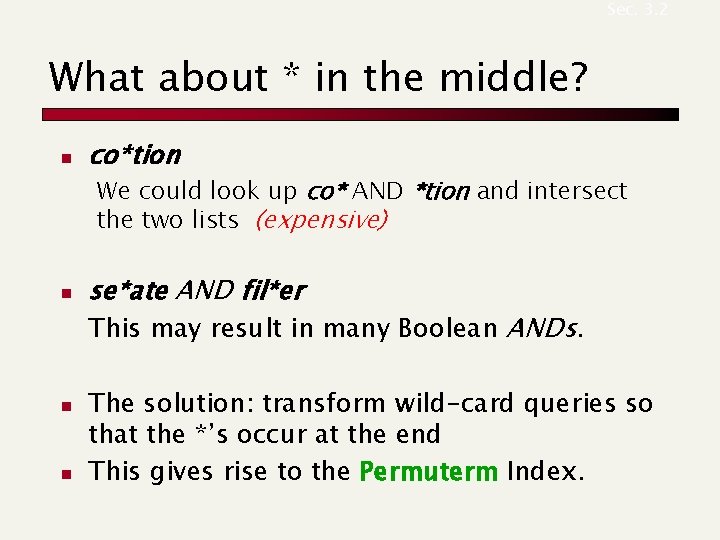

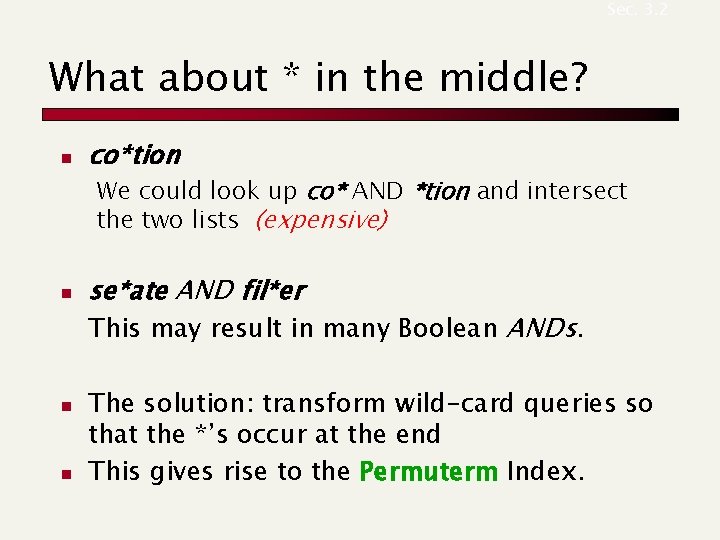

What about * in the middle? (Sec. 3.2)

- co*tion: we could look up co* AND *tion and intersect the two lists (expensive).
- se*ate AND fil*er: this may result in many Boolean ANDs.
- The solution: transform wild-card queries so that the *'s occur at the end.
- This gives rise to the Permuterm Index.

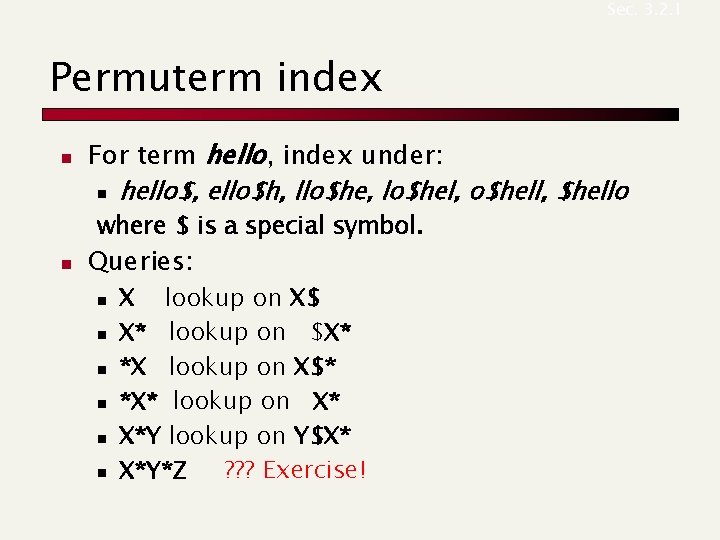

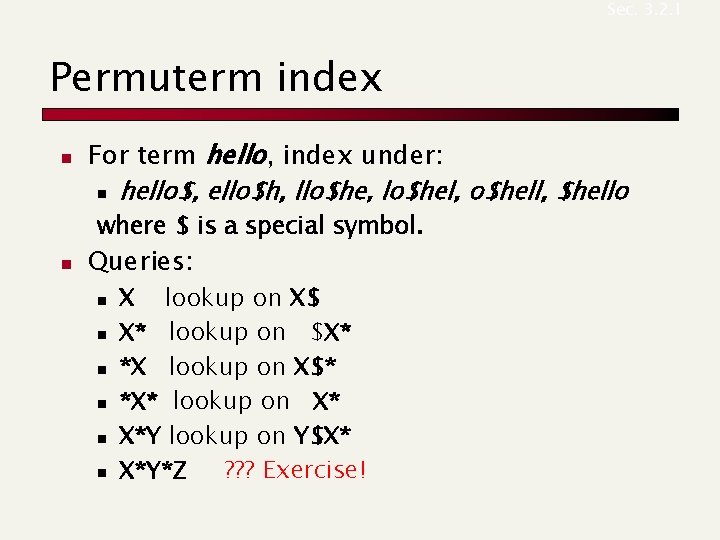

Permuterm index (Sec. 3.2.1)

- For term hello, index under:
  - hello$, ello$h, llo$he, lo$hel, o$hell, $hello

  where $ is a special symbol.
- Queries:
  - X → lookup on X$
  - X* → lookup on $X*
  - *X → lookup on X$*
  - *X* → lookup on X*
  - X*Y → lookup on Y$X*
  - X*Y*Z → ??? Exercise!
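The keys under which a term is indexed are simply the rotations of the term followed by `$`. A minimal Python sketch (the function name is illustrative):

```python
def permuterm_rotations(term):
    """All rotations of term + '$', i.e. the permuterm keys for this term."""
    s = term + "$"
    return [s[i:] + s[:i] for i in range(len(s))]

print(permuterm_rotations("hello"))
```

A term of length L therefore contributes L + 1 keys to the permuterm index.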

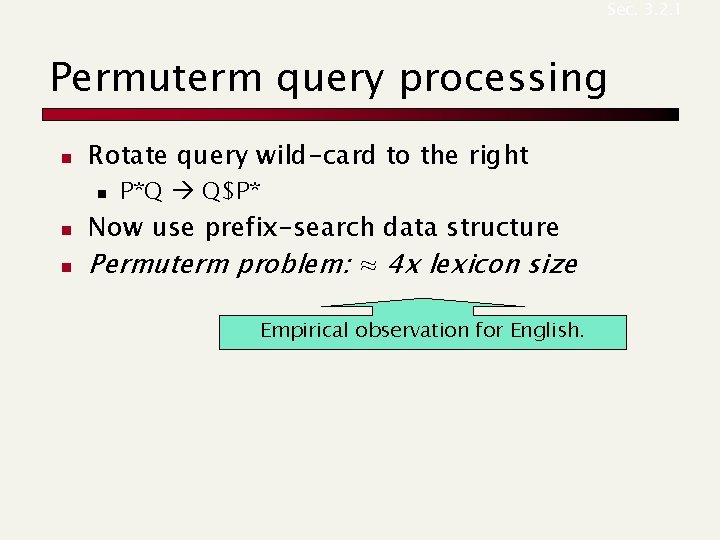

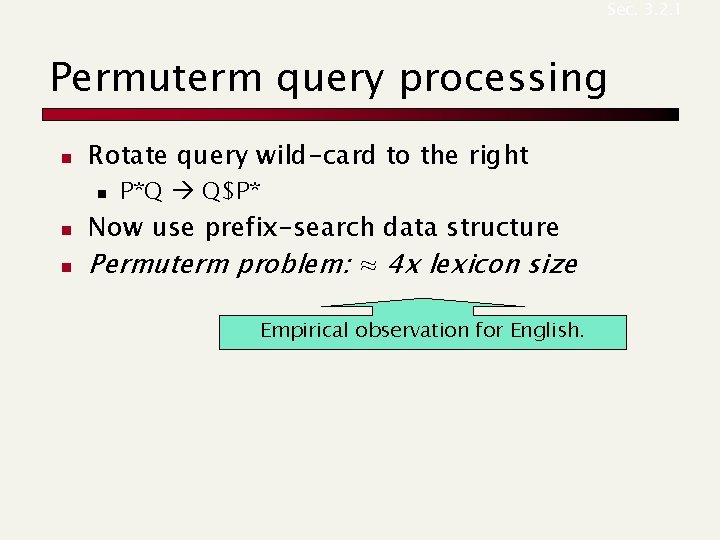

Permuterm query processing (Sec. 3.2.1)

- Rotate the query wild-card to the right:
  - P*Q → Q$P*
- Now use a prefix-search data structure.
- Permuterm problem: ≈ 4x lexicon size (an empirical observation for English).
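The rotate-then-prefix-search procedure might look as follows in Python. The sketch handles only queries with exactly one `*`, uses a sorted array with binary search as the prefix-search structure, and assumes a made-up lexicon; the `"\uffff"` sentinel presumes no key contains that character.

```python
from bisect import bisect_left

def rotations(term):
    s = term + "$"
    return [s[i:] + s[:i] for i in range(len(s))]

def build_permuterm(lexicon):
    """Sorted list of (rotation, original term) pairs, plus the parallel key list."""
    entries = sorted((rot, term) for term in lexicon for rot in rotations(term))
    keys = [rot for rot, _ in entries]
    return keys, entries

def rotate_query(query):
    """Rewrite P*Q as the prefix Q$P, so that the wild-card ends up on the right."""
    if query.count("*") != 1:
        raise ValueError("this sketch supports exactly one '*'")
    s = query + "$"
    star = s.index("*")
    return s[star + 1:] + s[:star]

def wildcard_search(keys, entries, query):
    prefix = rotate_query(query)
    lo = bisect_left(keys, prefix)
    hi = bisect_left(keys, prefix + "\uffff")
    return sorted({term for _, term in entries[lo:hi]})

lexicon = ["cent", "percent", "present", "procent", "prominent", "proof"]
keys, entries = build_permuterm(lexicon)
print(rotate_query("pro*cent"), wildcard_search(keys, entries, "pro*cent"))
print(rotate_query("pr*ent"), wildcard_search(keys, entries, "pr*ent"))
```

The same function covers X* and *X, since they rotate to the prefixes $X and X$ respectively.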

K-grams for wild-card queries (Sec. 3.2.2)

- Query mon* can now be run as:
  - $m AND mo AND on
- This gets the terms that match the AND version of our wild-card query.
- Post-filter these terms against the query, using regex matching.
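A sketch of the AND-then-post-filter procedure for queries of the form X* is given below. The lexicon is made up for the example, and the handling of other wild-card shapes is deliberately left out.

```python
import re
from collections import defaultdict

def bigrams(term):
    """2-grams of term with one leading '$'."""
    padded = "$" + term
    return [padded[i:i + 2] for i in range(len(padded) - 1)]

def build_index(lexicon):
    index = defaultdict(set)
    for term in lexicon:
        for gram in bigrams(term):
            index[gram].add(term)
    return index

def prefix_wildcard(index, prefix):
    """Answer the query prefix* (non-empty prefix): AND the postings, then regex-filter."""
    postings = [index.get(gram, set()) for gram in bigrams(prefix)]
    candidates = set.intersection(*postings)
    pattern = re.compile(re.escape(prefix) + ".*")
    matches = {term for term in candidates if pattern.fullmatch(term)}
    return sorted(candidates), sorted(matches)

index = build_index(["among", "melon", "money", "month", "moon"])
candidates, matches = prefix_wildcard(index, "mon")
print(candidates)
print(matches)
```

Here `moon` contains all of $m, mo and on, so it survives the AND, but it does not start with "mon"; the regex post-filter is what removes it.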

Soundex

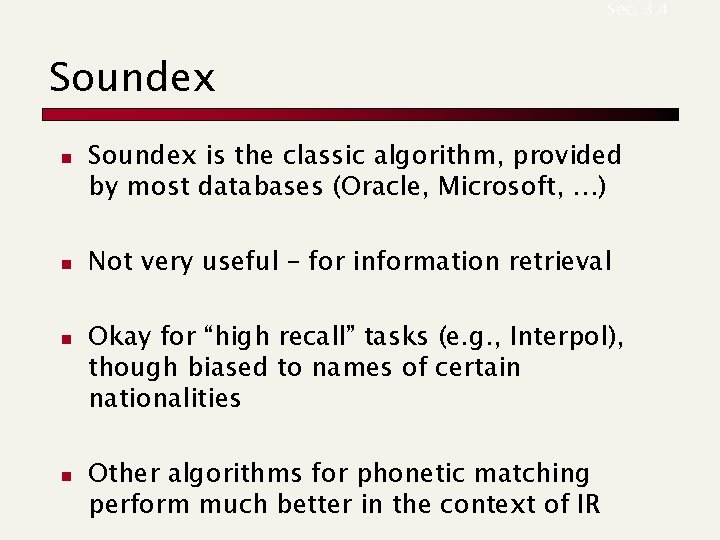

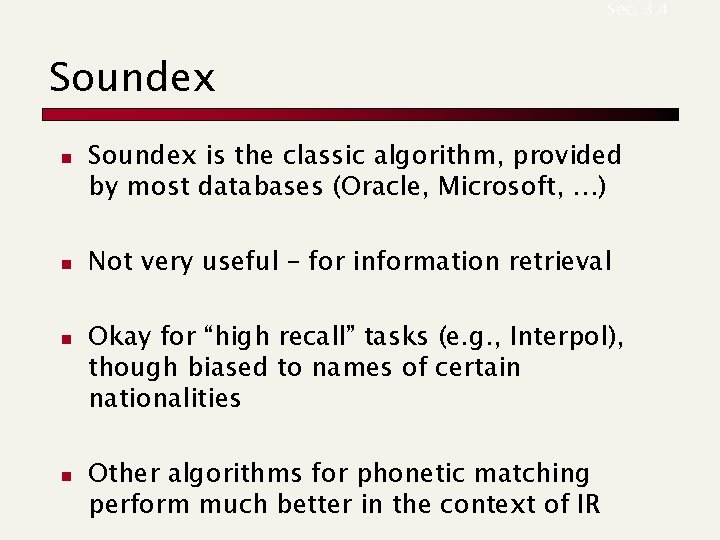

Soundex (Sec. 3.4)

- Class of heuristics to expand a query into phonetic equivalents
  - Language specific, mainly for names
  - E.g., chebyshev → tchebycheff
- Invented for the U.S. census in 1918

Soundex: typical algorithm (Sec. 3.4)

- Turn every token to be indexed into a reduced form consisting of 4 chars.
- Do the same with query terms.
- Build and search an index on the reduced forms.

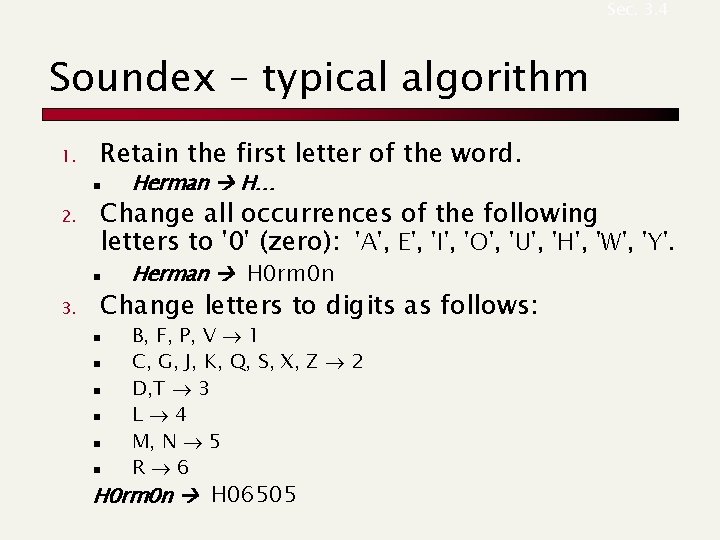

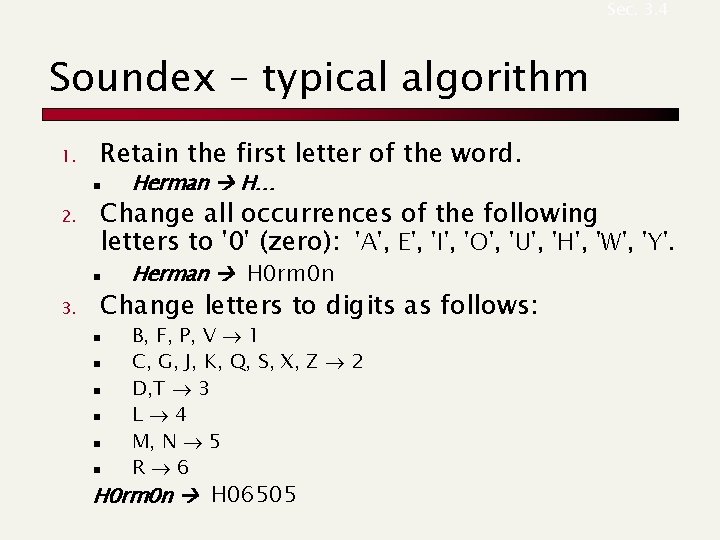

Soundex: typical algorithm (Sec. 3.4)

1. Retain the first letter of the word.
   - Herman → H…
2. Change all occurrences of the following letters to '0' (zero): 'A', 'E', 'I', 'O', 'U', 'H', 'W', 'Y'.
   - Herman → H0rm0n
3. Change letters to digits as follows:
   - B, F, P, V → 1
   - C, G, J, K, Q, S, X, Z → 2
   - D, T → 3
   - L → 4
   - M, N → 5
   - R → 6
   - H0rm0n → H06505

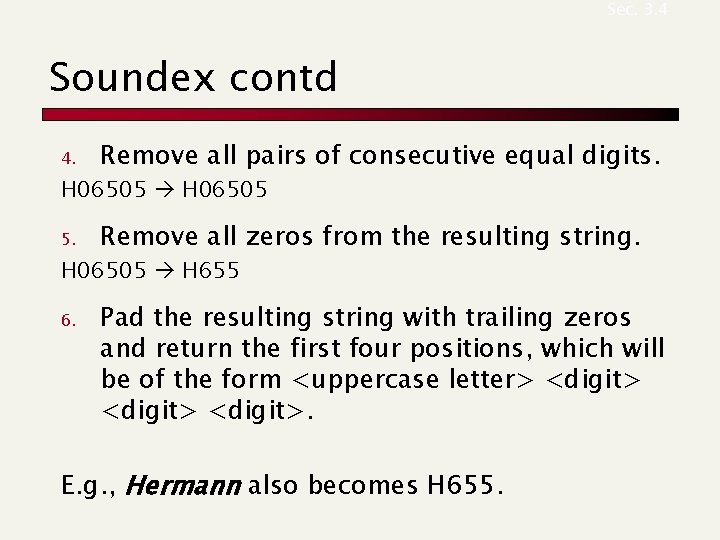

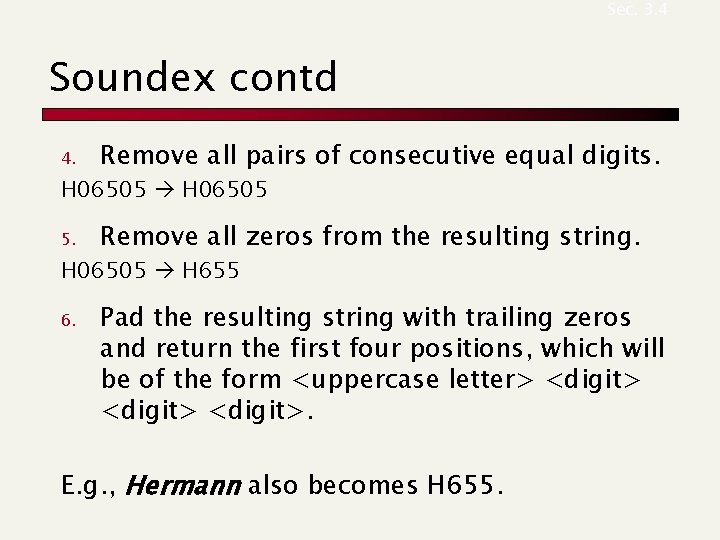

Soundex contd (Sec. 3.4)

4. Repeatedly remove one out of each pair of consecutive equal digits.
   - H06505 → H06505
5. Remove all zeros from the resulting string.
   - H06505 → H655
6. Pad the resulting string with trailing zeros and return the first four positions, which will be of the form <uppercase letter> <digit> <digit> <digit>.

E.g., Hermann also becomes H655.
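The six steps can be put together in a short Python function. This is a sketch of the variant described on these slides, assuming purely alphabetic ASCII input; database implementations of Soundex may differ in details such as the treatment of the first letter.

```python
from itertools import groupby

# Step 3 mapping; every other letter (A, E, I, O, U, H, W, Y) becomes '0' (step 2).
CODES = {}
for letters, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                       ("L", "4"), ("MN", "5"), ("R", "6")):
    for letter in letters:
        CODES[letter] = digit

def soundex(word):
    word = word.upper()
    first, rest = word[0], word[1:]                       # step 1: retain the first letter
    digits = [CODES.get(ch, "0") for ch in rest]          # steps 2 and 3
    collapsed = [d for d, _ in groupby(digits)]           # step 4: keep one digit per run
    nonzero = "".join(d for d in collapsed if d != "0")   # step 5: remove zeros
    return (first + nonzero + "000")[:4]                  # step 6: pad and truncate

for name in ("Herman", "Hermann"):
    print(name, soundex(name))
```

Both names reduce to the same four-character code, so an index built on the reduced forms retrieves one when the other is queried.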

Soundex (Sec. 3.4)

- Soundex is the classic algorithm, provided by most databases (Oracle, Microsoft, …).
- Not very useful for information retrieval.
- Okay for “high recall” tasks (e.g., Interpol), though biased to names of certain nationalities.
- Other algorithms for phonetic matching perform much better in the context of IR.

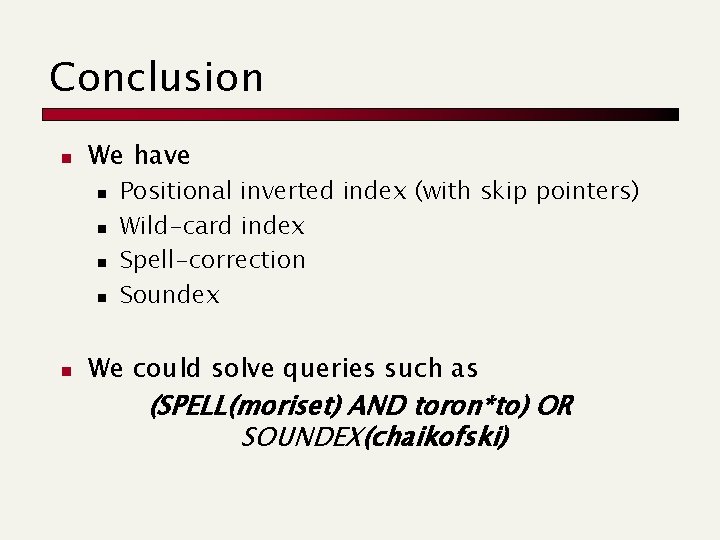

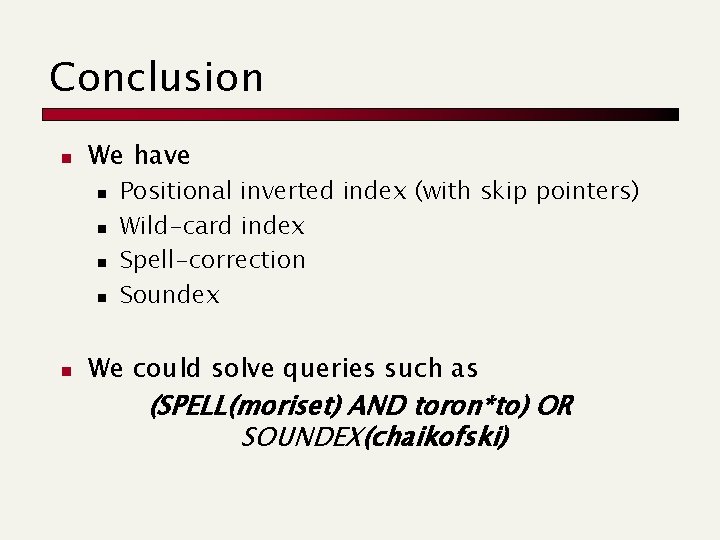

Conclusion

- We have:
  - Positional inverted index (with skip pointers)
  - Wild-card index
  - Spell correction
  - Soundex
- We could solve queries such as (SPELL(moriset) AND toron*to) OR SOUNDEX(chaikofski)