
Developing, Measuring, and Improving Program Fidelity: Achieving positive outcomes through high-fidelity implementation
SPDG National Conference, Washington, DC, March 5, 2012
Allison Metz, Ph.D., Associate Director, NIRN, Frank Porter Graham Child Development Institute, University of North Carolina

Program Fidelity: 6 Questions
• What is it?
• Why is it important?
• When are we ready to assess fidelity?
• How do we measure fidelity?
• How can we produce high-fidelity use of interventions in practice?
• How can we use fidelity data for program improvement?

“PROGRAM FIDELITY”
“The degree to which the program or practice is implemented ‘as intended’ by the program developers and researchers.”
“Fidelity measures detect the presence and strength of an intervention in practice.”

Question 1: What is fidelity?
• Three components:
– Context: structural aspects that encompass the framework for service delivery
– Compliance: the extent to which the practitioner uses the core program components
– Competence: process aspects that encompass the level of skill shown by the practitioner and the “way in which the service is delivered”

Question 2: Why is fidelity important?
• Interpret outcomes: is this an implementation challenge or an intervention challenge?
• Detect variations in implementation
• Replicate consistently
• Ensure compliance and competence
• Develop and refine interventions in the context of practice
• Identify the “active ingredients” of a program

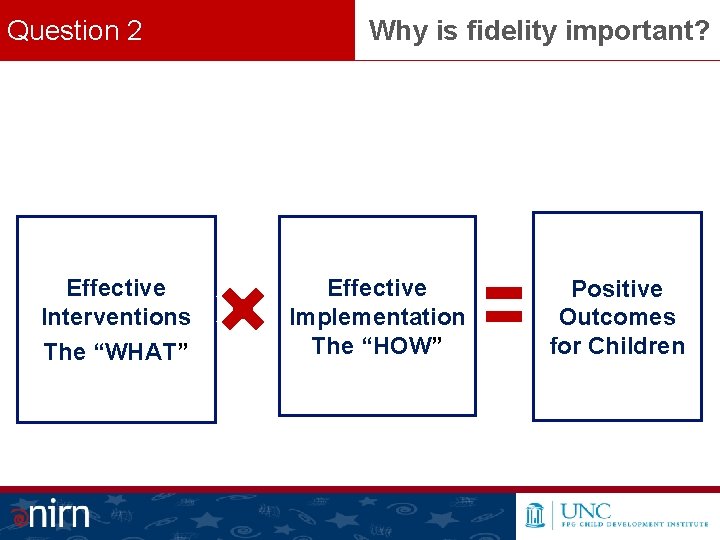

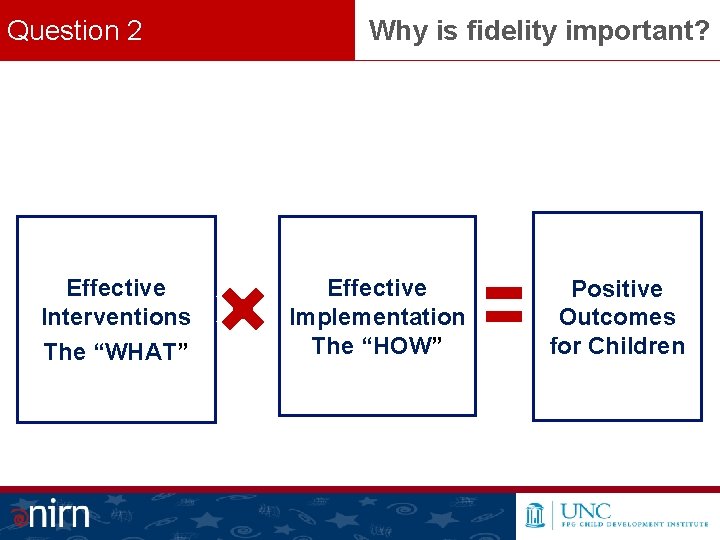

Question 2: Why is fidelity important?
Effective Interventions (the “WHAT”) together with Effective Implementation (the “HOW”) produce Positive Outcomes for Children.

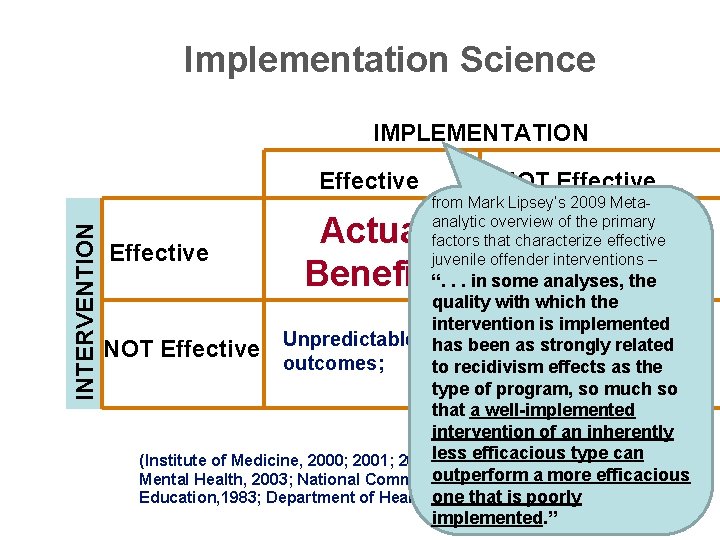

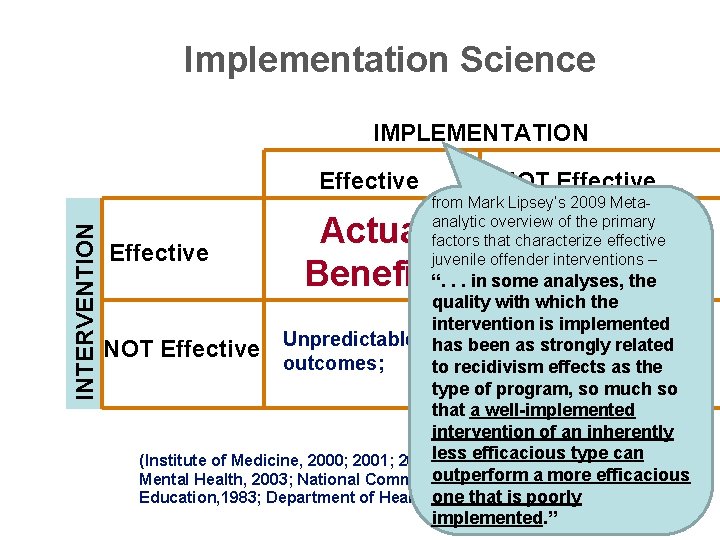

Implementation Science

| INTERVENTION | IMPLEMENTATION: Effective | IMPLEMENTATION: NOT Effective |
|---|---|---|
| Effective | Actual Benefits | Inconsistent; Not Sustainable; Poor outcomes |
| NOT Effective | Unpredictable or poor outcomes | Poor outcomes; Sometimes harmful |

(Institute of Medicine, 2000; 2001; 2009; New Freedom Commission on Mental Health, 2003; National Commission on Excellence in Education, 1983; Department of Health and Human Services, 1999)

From Mark Lipsey’s 2009 meta-analytic overview of the primary factors that characterize effective juvenile offender interventions: “… in some analyses, the quality with which the intervention is implemented has been as strongly related to recidivism effects as the type of program, so much so that a well-implemented intervention of an inherently less efficacious type can outperform a more efficacious one that is poorly implemented.”

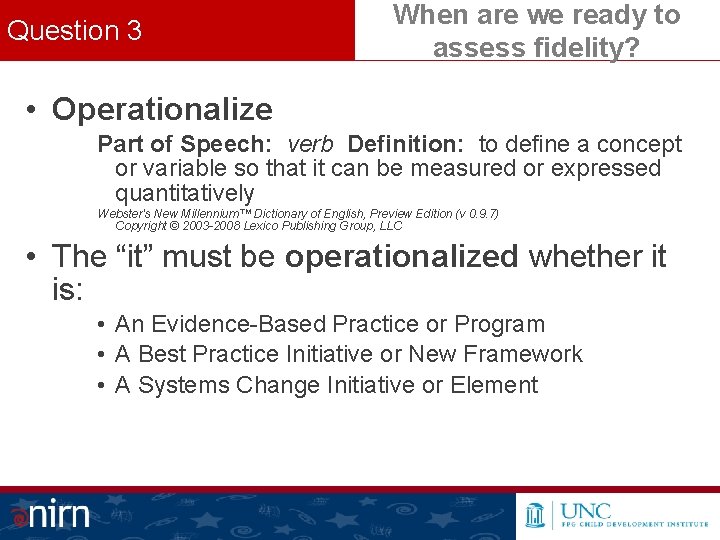

Question 3: When are we ready to assess fidelity?
• Operationalize (verb): to define a concept or variable so that it can be measured or expressed quantitatively (Webster's New Millennium™ Dictionary of English, Preview Edition v0.9.7, Copyright © 2003–2008 Lexico Publishing Group, LLC)
• The “it” must be operationalized whether it is:
– An Evidence-Based Practice or Program
– A Best Practice Initiative or New Framework
– A Systems Change Initiative or Element

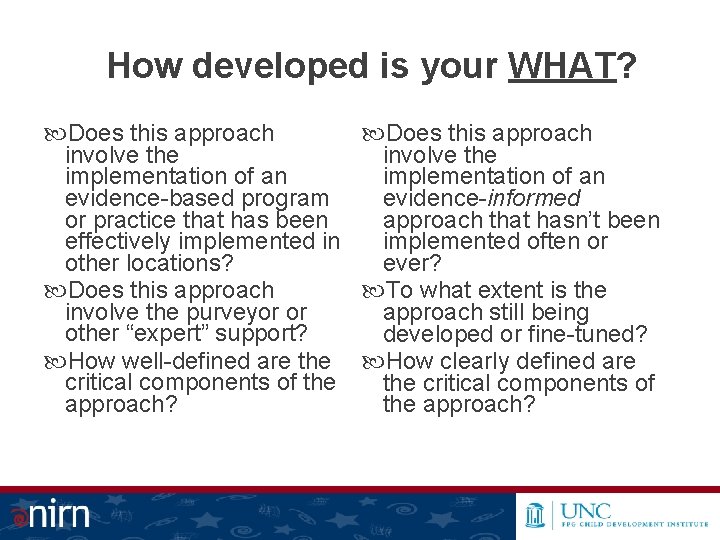

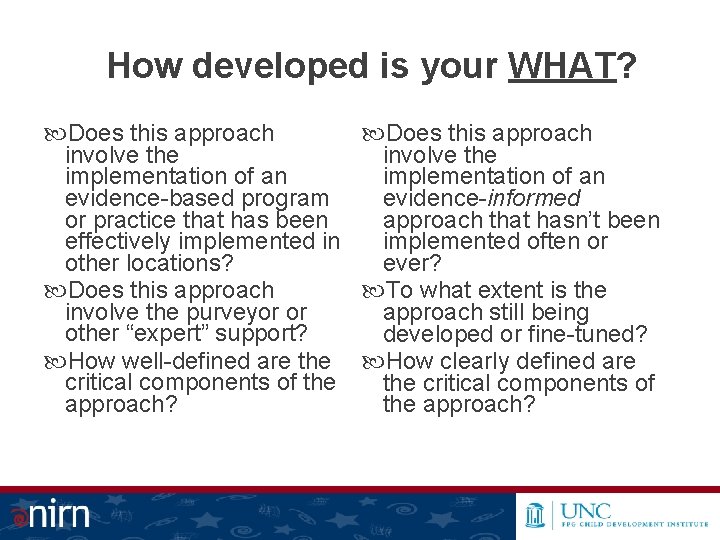

How developed is your WHAT?
Evidence-based program or practice:
• Does this approach involve the implementation of an evidence-based program or practice that has been effectively implemented in other locations?
• Does this approach involve the purveyor or other “expert” support?
• How well-defined are the critical components of the approach?
Evidence-informed approach:
• Does this approach involve the implementation of an evidence-informed approach that hasn’t been implemented often or ever?
• To what extent is the approach still being developed or fine-tuned?
• How clearly defined are the critical components of the approach?

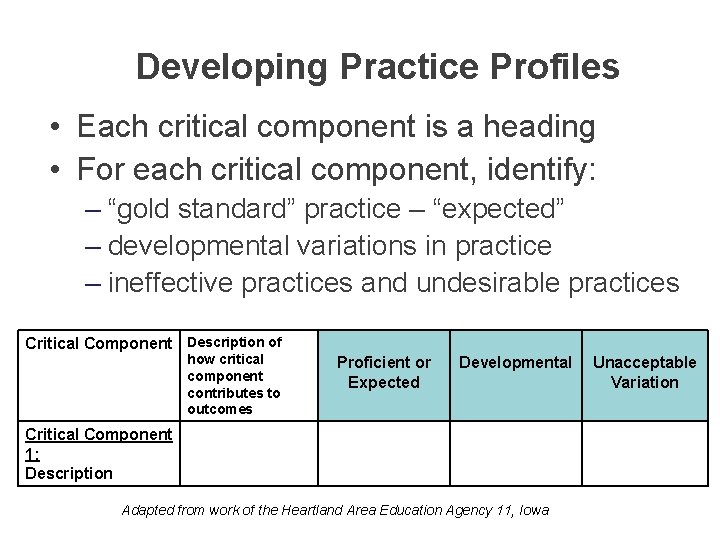

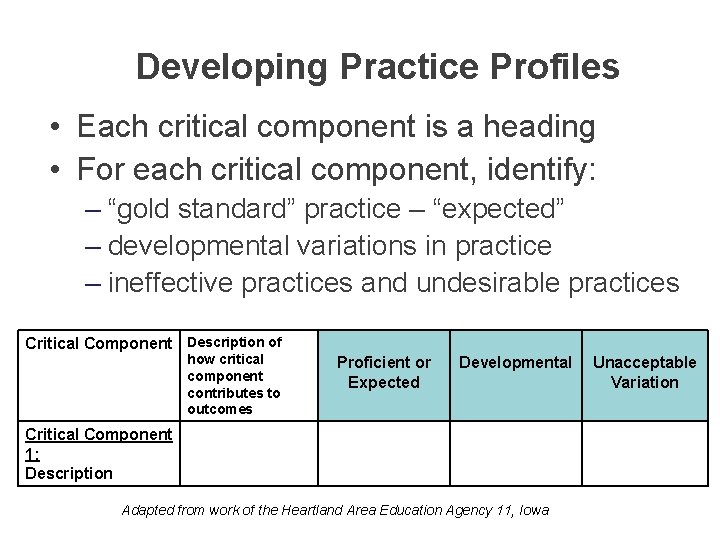

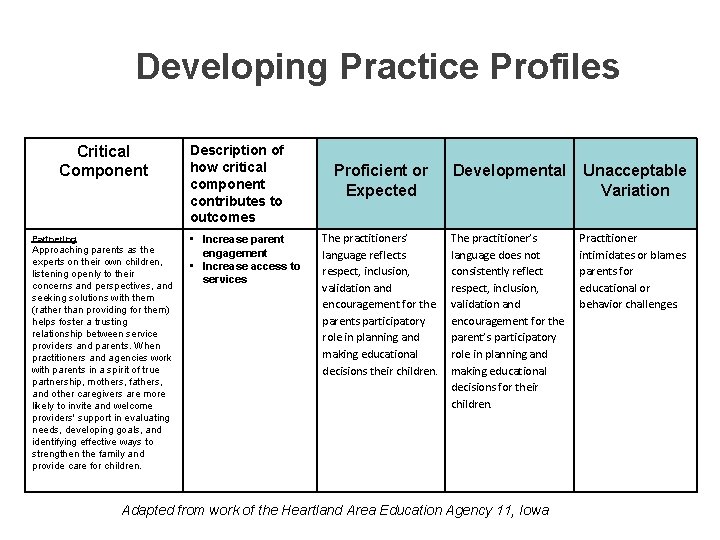

Developing Practice Profiles
• Each critical component is a heading
• For each critical component, identify:
– “gold standard” (“expected”) practice
– developmental variations in practice
– ineffective practices and undesirable practices

| Critical Component | Description of how critical component contributes to outcomes | Proficient or Expected | Developmental | Unacceptable Variation |
|---|---|---|---|---|
| Critical Component 1: Description | | | | |

Adapted from work of the Heartland Area Education Agency 11, Iowa

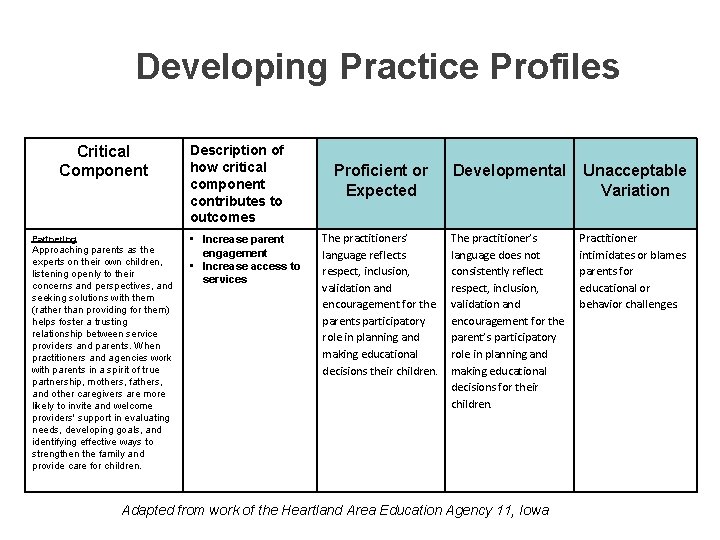

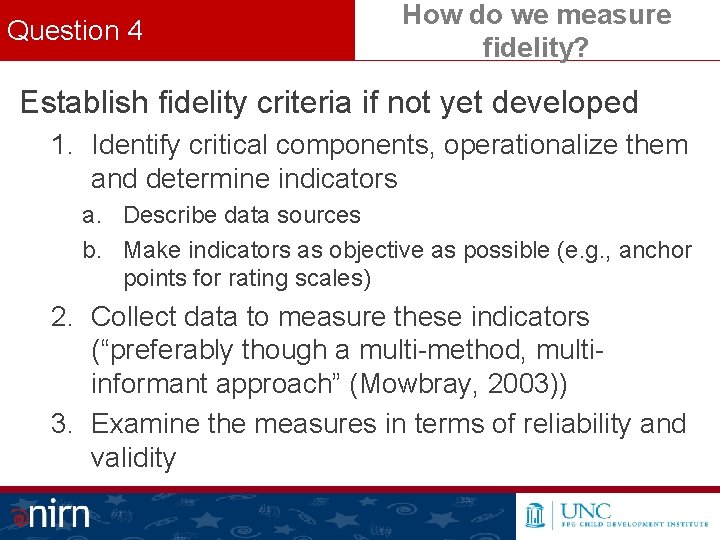

Developing Practice Profiles
Critical Component: Partnering
Approaching parents as the experts on their own children, listening openly to their concerns and perspectives, and seeking solutions with them (rather than providing for them) helps foster a trusting relationship between service providers and parents. When practitioners and agencies work with parents in a spirit of true partnership, mothers, fathers, and other caregivers are more likely to invite and welcome providers' support in evaluating needs, developing goals, and identifying effective ways to strengthen the family and provide care for children.
Description of how critical component contributes to outcomes:
• Increase parent engagement
• Increase access to services
Proficient or Expected: The practitioner’s language reflects respect, inclusion, validation, and encouragement for the parents’ participatory role in planning and making educational decisions for their children.
Developmental: The practitioner’s language does not consistently reflect respect, inclusion, validation, and encouragement for the parents’ participatory role in planning and making educational decisions for their children.
Unacceptable Variation: The practitioner intimidates or blames parents for educational or behavior challenges.
Adapted from work of the Heartland Area Education Agency 11, Iowa

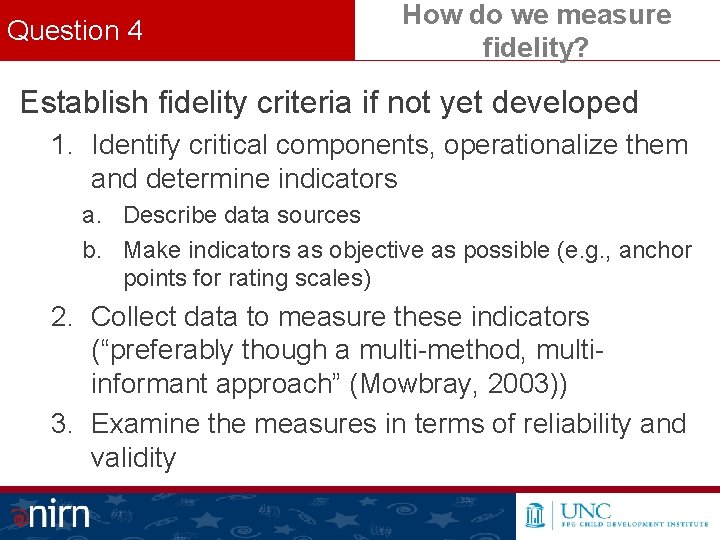

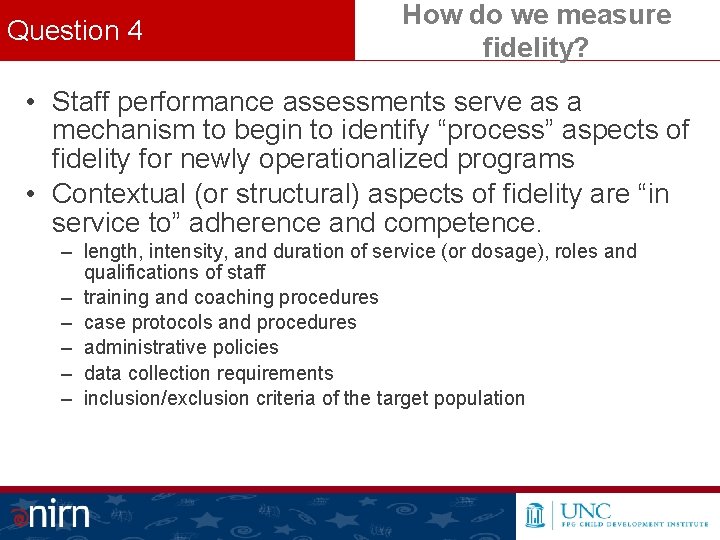

Question 4: How do we measure fidelity?
Establish fidelity criteria if not yet developed:
1. Identify critical components, operationalize them, and determine indicators
   a. Describe data sources
   b. Make indicators as objective as possible (e.g., anchor points for rating scales)
2. Collect data to measure these indicators (“preferably through a multi-method, multi-informant approach” (Mowbray et al., 2003))
3. Examine the measures in terms of reliability and validity
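Steps 1b and 2 can be made concrete with a small calculation. The sketch below is a hypothetical illustration, not an instrument from this presentation: it assumes a three-point anchored scale whose anchors borrow the practice-profile levels (Unacceptable, Developmental, Proficient/Expected) and expresses fidelity as the share of the maximum possible score. The names `ANCHORS`, `IndicatorRating`, and `fidelity_score`, and the example indicators, are invented for the example.

```python
from dataclasses import dataclass

# Hypothetical three-point anchored rating scale. Each anchor describes observable
# practice, so raters score against a shared description rather than an impression.
ANCHORS = {
    0: "Unacceptable: practice absent or contrary to the critical component",
    1: "Developmental: practice present but not consistent",
    2: "Proficient/Expected: practice consistently present",
}


@dataclass
class IndicatorRating:
    component: str  # critical component the indicator operationalizes
    indicator: str  # observable indicator (hypothetical examples below)
    source: str     # data source, e.g. "observation" or "documentation"
    score: int      # anchored rating; must be a key of ANCHORS


def fidelity_score(ratings: list[IndicatorRating]) -> float:
    """Return the total anchored score as a share of the maximum possible score (0-1)."""
    if not ratings:
        raise ValueError("no ratings to score")
    for r in ratings:
        if r.score not in ANCHORS:
            raise ValueError(f"{r.score} is not an anchor point on the scale")
    max_point = max(ANCHORS)
    return sum(r.score for r in ratings) / (max_point * len(ratings))


if __name__ == "__main__":
    # Hypothetical indicators for the Partnering component.
    ratings = [
        IndicatorRating("Partnering", "Goals set with parents", "observation", 2),
        IndicatorRating("Partnering", "Parent concerns documented", "documentation", 1),
        IndicatorRating("Partnering", "Parent invited to decision meeting", "documentation", 2),
    ]
    print(f"{fidelity_score(ratings):.2f}")  # prints 0.83
```

Keeping the data source on each rating leaves room to compare sources later, in the spirit of the multi-method, multi-informant advice.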

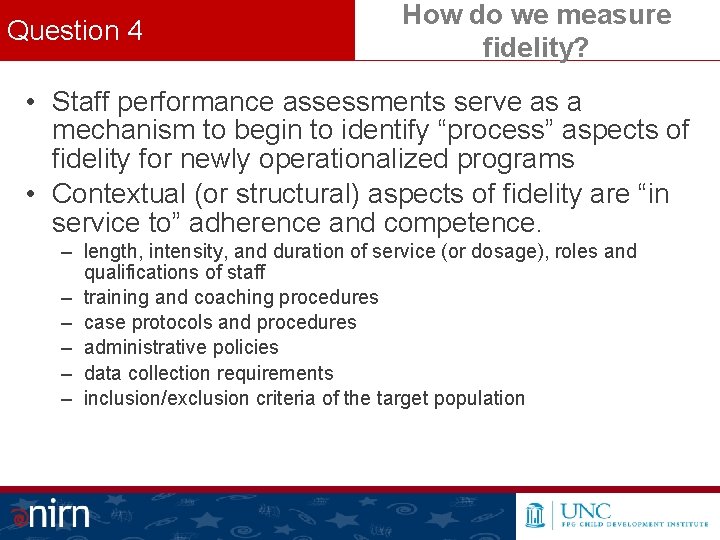

Question 4: How do we measure fidelity?
• Staff performance assessments serve as a mechanism to begin to identify “process” aspects of fidelity for newly operationalized programs
• Contextual (or structural) aspects of fidelity are “in service to” adherence and competence:
– length, intensity, and duration of service (or dosage)
– roles and qualifications of staff
– training and coaching procedures
– case protocols and procedures
– administrative policies
– data collection requirements
– inclusion/exclusion criteria for the target population

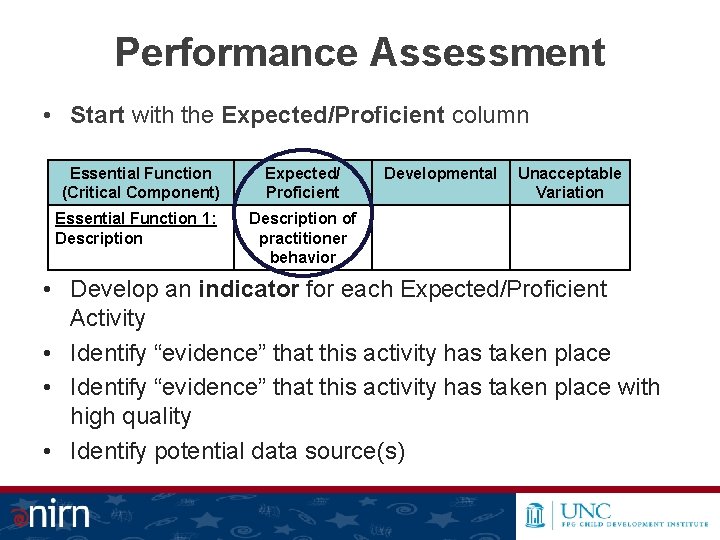

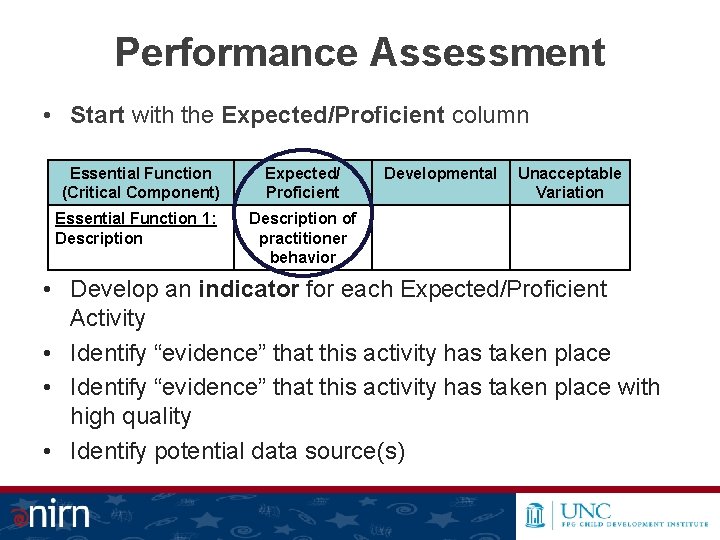

Performance Assessment
• Start with the Expected/Proficient column

| Essential Function (Critical Component) | Expected/Proficient | Developmental | Unacceptable Variation |
|---|---|---|---|
| Essential Function 1: Description | Description of practitioner behavior | | |

• Develop an indicator for each Expected/Proficient activity
• Identify “evidence” that this activity has taken place with high quality
• Identify potential data source(s)

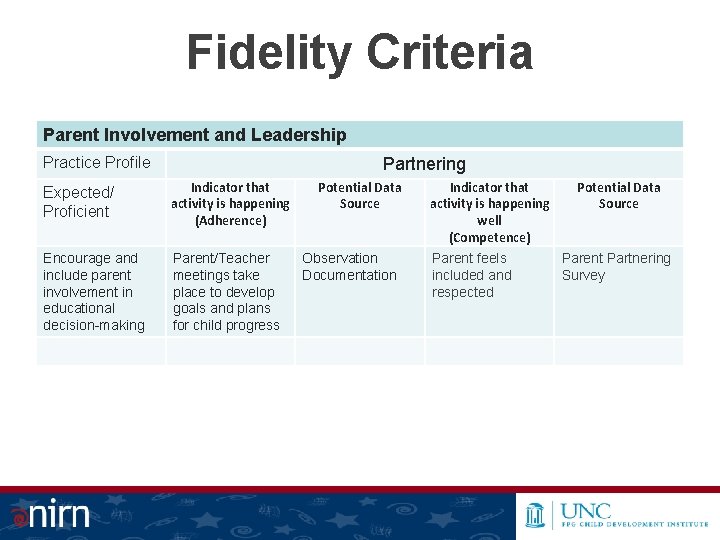

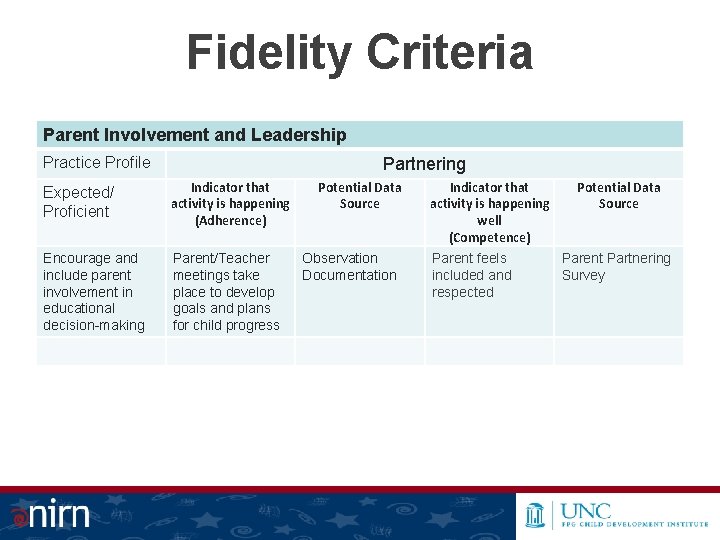

Fidelity Criteria
Parent Involvement and Leadership Practice Profile: Partnering

| Expected/Proficient | Indicator that activity is happening (Adherence) | Potential Data Source | Indicator that activity is happening well (Competence) | Potential Data Source |
|---|---|---|---|---|
| Encourage and include parent involvement in educational decision-making | Parent/teacher meetings take place to develop goals and plans for child progress | Observation; documentation | Parent feels included and respected | Parent Partnering Survey |
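The table separates an adherence indicator (is the activity happening?) from a competence indicator (is it happening well?). A minimal sketch, assuming data formats the slide does not specify: adherence as goal-setting meetings held against meetings expected (from documentation), and competence as the mean of hypothetical 1–5 ratings on the Parent Partnering Survey item, rescaled to 0–1. The function names and the 1–5 scale are assumptions for illustration.

```python
from statistics import mean


def adherence_rate(meetings_held: int, meetings_expected: int) -> float:
    """Adherence: share of expected parent/teacher goal-setting meetings that took place.

    Assumes counts come from documentation or observation; capped at 1.0.
    """
    if meetings_expected <= 0:
        raise ValueError("meetings_expected must be positive")
    return min(meetings_held / meetings_expected, 1.0)


def competence_score(survey_ratings: list[int], scale_max: int = 5) -> float:
    """Competence: mean "parent feels included and respected" rating, rescaled to 0-1.

    Assumes a hypothetical 1..scale_max survey item, where 1 maps to 0.0
    and scale_max maps to 1.0.
    """
    if not survey_ratings:
        raise ValueError("no survey responses")
    if any(not 1 <= x <= scale_max for x in survey_ratings):
        raise ValueError("survey rating out of range")
    return (mean(survey_ratings) - 1) / (scale_max - 1)


if __name__ == "__main__":
    print(adherence_rate(3, 4))            # prints 0.75
    print(competence_score([4, 5, 5, 4]))  # prints 0.875
```

Reporting the two numbers separately, rather than averaging them, keeps visible the case where meetings happen on schedule but parents do not feel included, which may point to a coaching need rather than an adherence problem.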

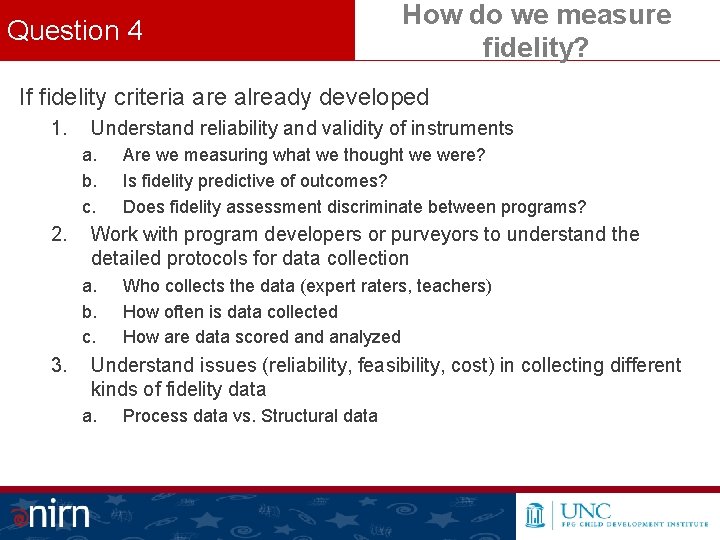

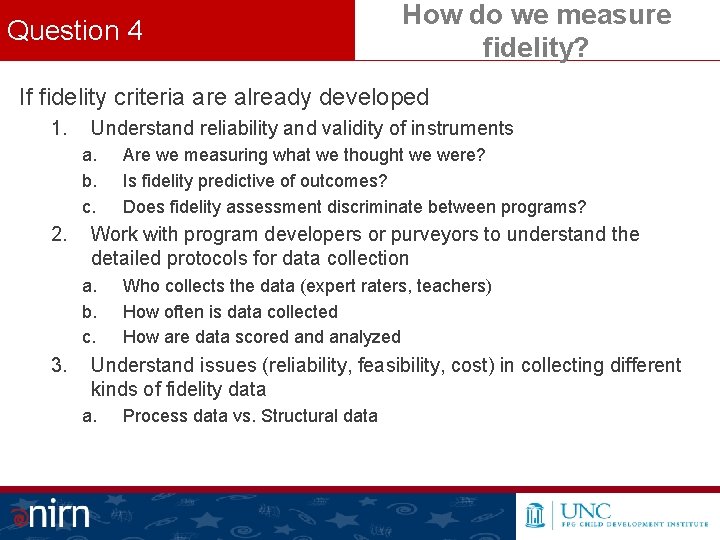

Question 4: How do we measure fidelity?
If fidelity criteria are already developed:
1. Understand reliability and validity of instruments
   a. Are we measuring what we thought we were?
   b. Is fidelity predictive of outcomes?
   c. Does fidelity assessment discriminate between programs?
2. Work with program developers or purveyors to understand the detailed protocols for data collection
   a. Who collects the data (expert raters, teachers)?
   b. How often are data collected?
   c. How are data scored and analyzed?
3. Understand issues (reliability, feasibility, cost) in collecting different kinds of fidelity data
   a. Process data vs. structural data
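Checking reliability (“Are we measuring what we thought we were?”) often starts with agreement between two raters who score the same sessions. Cohen's kappa is one standard statistic for that, swapped in here as an illustration; the presentation does not name a particular statistic. The sketch assumes each rater assigns one categorical code per observed session, using hypothetical codes P, D, and U for the Proficient, Developmental, and Unacceptable practice-profile levels.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa: chance-corrected agreement between two raters on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement if each rater coded at random with their own base rates.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    categories = count_a.keys() | count_b.keys()
    p_expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical code")
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    # Hypothetical codes for six observed sessions (P/D/U = practice-profile levels).
    expert = ["P", "P", "D", "U", "P", "D"]
    coach = ["P", "D", "D", "U", "P", "D"]
    print(round(cohens_kappa(expert, coach), 3))  # prints 0.739
```

Raw percent agreement in this example is 5/6 (about 0.83); kappa is lower because part of that agreement would be expected by chance from the raters' base rates.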

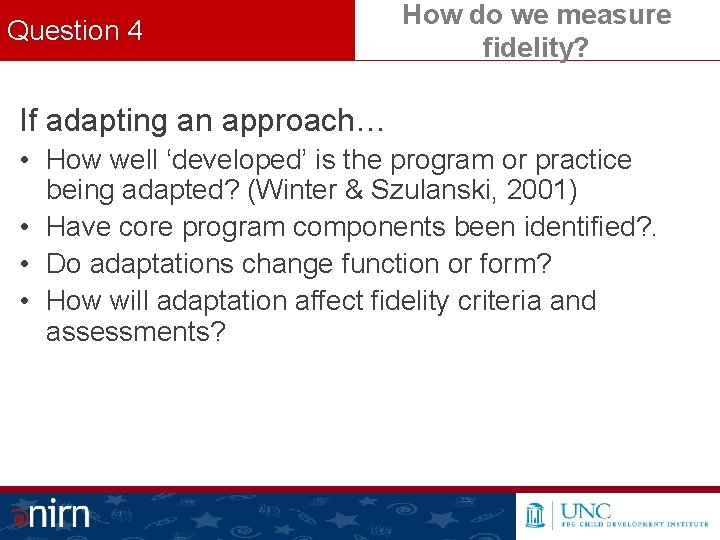

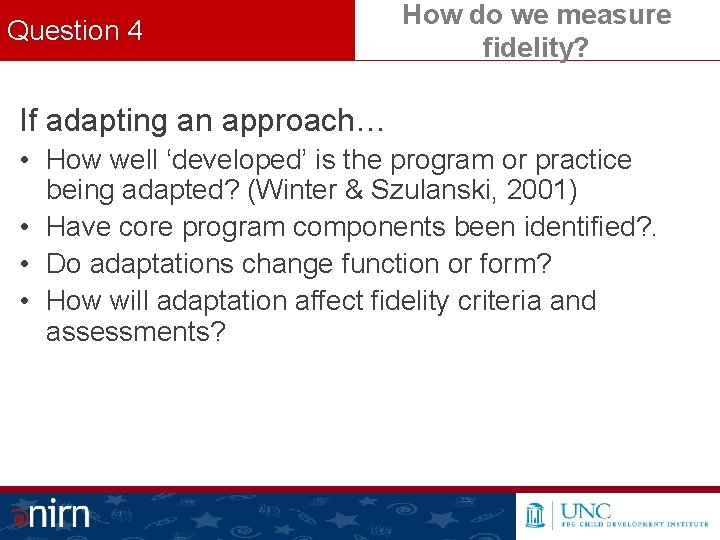

Question 4: How do we measure fidelity?
If adapting an approach…
• How well ‘developed’ is the program or practice being adapted? (Winter & Szulanski, 2001)
• Have core program components been identified?
• Do adaptations change function or form?
• How will adaptation affect fidelity criteria and assessments?

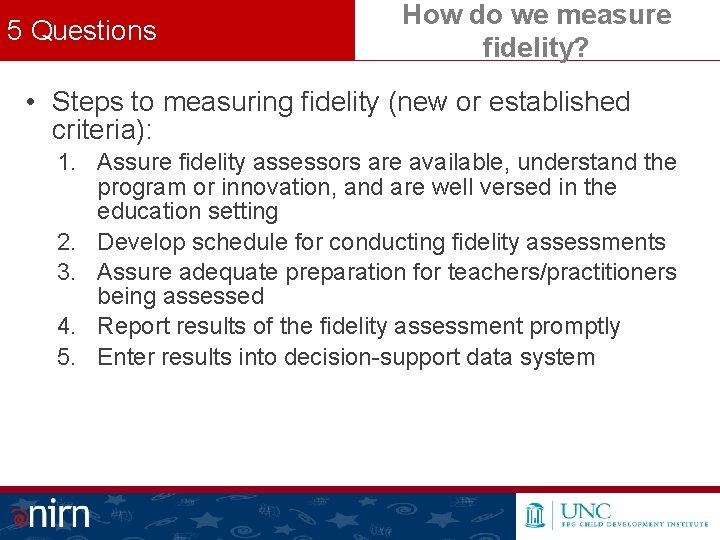

Question 4: How do we measure fidelity?
• Steps to measuring fidelity (new or established criteria):
1. Assure that fidelity assessors are available, understand the program or innovation, and are well versed in the education setting
2. Develop a schedule for conducting fidelity assessments
3. Assure adequate preparation for teachers/practitioners being assessed
4. Report results of the fidelity assessment promptly
5. Enter results into the decision-support data system

Question 5: How can we produce high-fidelity implementation in practice?
• Build, improve, and sustain practitioner competency
• Create hospitable organizational and systems environments
• Use appropriate leadership strategies

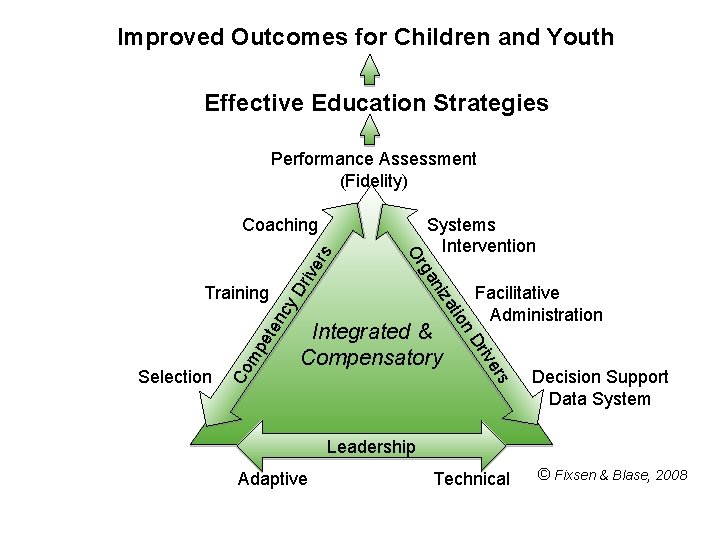

“IMPLEMENTATION DRIVERS”
Common features of successful supports that help make full and effective use of a wide variety of innovations

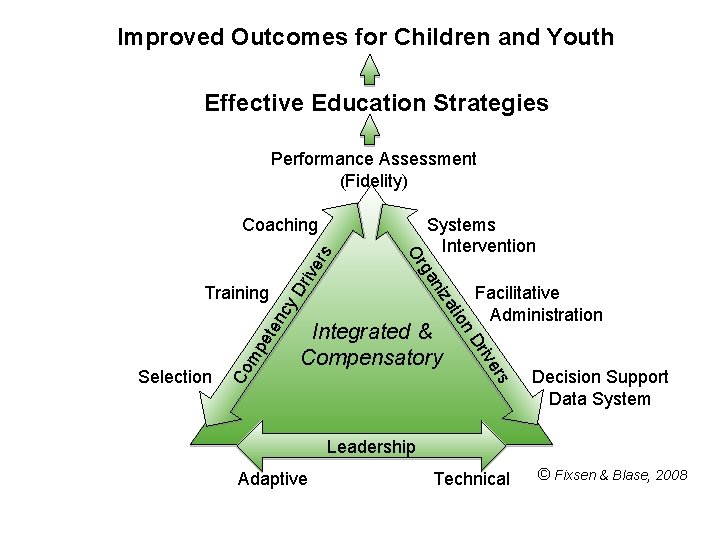

Figure: Implementation Drivers (© Fixsen & Blase, 2008). Labels: Improved Outcomes for Children and Youth; Effective Education Strategies; Performance Assessment (Fidelity); Competency Drivers (Selection, Training, Coaching); Organization Drivers (Systems Intervention, Facilitative Administration, Decision Support Data System); Leadership (Technical, Adaptive); Integrated & Compensatory.

Question 5: Produce high-fidelity implementation?
• Fidelity is an implementation outcome
◦ Implementation Drivers influence how well or how poorly a program is implemented
◦ The full and integrated use of the Implementation Drivers supports practitioners in consistent, high-fidelity implementation of the program
◦ Staff performance assessments are designed to assess the use and outcomes of the skills required for high-fidelity implementation of a new program or practice

Question 5: Produce high-fidelity implementation?
• Competency Drivers
– Demonstrate knowledge, skills, and abilities
– Practice to criteria
– Coach for competence and confidence
• Organizational Drivers
– Use data to assess fidelity and improve program operations
– Administer policies and procedures that support high-fidelity implementation
– Implement needed systems interventions
• Leadership Drivers
– Use appropriate leadership strategies to identify and solve challenges to effective implementation

Question 6: Use fidelity data for program improvement?
• Program review process to create a sustainable improvement cycle for the program
– Process and outcome data: measures, data sources, data collection plan
– Detection systems for barriers: roles and responsibilities
– Communication protocols: accountable, moving information up and down the system
• Questions to ask
– What formal and informal data have we reviewed?
– What is the data telling us?
– What barriers have we encountered?
– Would improving the functioning of any Implementation Driver help address the barrier?
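The question “What is the data telling us?” can start with a simple summary of fidelity by critical component. A minimal sketch, assuming hypothetical 0–1 fidelity scores per component from each practitioner assessment and a hypothetical criterion of 0.8 (the presentation sets no cut-off): it lists components whose mean falls below the criterion, lowest first, as candidates for asking which Implementation Driver might address the barrier. The function name and the "Goal setting" component are invented for the example.

```python
from statistics import mean


def components_below_criterion(
    scores: dict[str, list[float]], criterion: float = 0.8
) -> list[tuple[str, float]]:
    """Return (component, mean fidelity) for components under the criterion, lowest first.

    `scores` maps each critical component to the 0-1 fidelity scores from the
    assessments collected in the review period. The 0.8 default is a placeholder.
    """
    flagged = []
    for component, values in scores.items():
        if not values:
            # No data for this component: a gap in the data collection plan,
            # not evidence about practice, so it is skipped here.
            continue
        average = mean(values)
        if average < criterion:
            flagged.append((component, average))
    return sorted(flagged, key=lambda pair: pair[1])


if __name__ == "__main__":
    review = {
        "Partnering": [0.9, 0.7, 0.6],  # hypothetical scores
        "Goal setting": [0.95, 0.85],   # hypothetical component and scores
    }
    for component, average in components_below_criterion(review):
        print(f"{component}: {average:.2f}")  # prints "Partnering: 0.73"
```

A flagged component is a prompt for the review questions above, not a diagnosis; the cause may lie in selection, training, coaching, or organizational supports.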

Summary: Program Fidelity
• Fidelity has multiple facets and is critical to achieving outcomes
• Fully operationalized programs are prerequisites for developing fidelity criteria
• Fidelity data based on valid and reliable criteria need to be collected carefully, with guidance from program developers or purveyors
• Fidelity is an implementation outcome; effective use of Implementation Drivers can increase our chances of high-fidelity implementation
• Fidelity data can and should be used for program improvement

Resources: Program Fidelity
Examples of fidelity instruments
• Teaching Pyramid Observation Tool for Preschool Classrooms (TPOT), Research Edition, Mary Louise Hemmeter and Lise Fox
• The PBIS fidelity measure (the SET), described at http://www.pbis.org/pbis_resource_detail_page.aspx?Type=4&PBIS_Resource.ID=222
Articles
• Sanetti, L., & Kratochwill, T. (2009). Toward developing a science of treatment integrity: Introduction to the special series. School Psychology Review, 38(4), 445–459.
• Mowbray, C. T., Holter, M. C., Teague, G. B., & Bybee, D. (2003). Fidelity criteria: Development, measurement, and validation. American Journal of Evaluation, 24(3), 315–340.
• Hall, G. E., & Hord, S. M. (2011). Implementing change: Patterns, principles, and potholes (3rd ed.). Boston: Allyn and Bacon.

Stay Connected!
Allison.metz@unc.edu
nirn.fpg.unc.edu
www.scalingup.org
www.implementationconference.org