DEVELOPING AN ARABIC ENCODING FRAMEWORK FOR DIALECT IDENTIFICATION

DEVELOPING AN ARABIC ENCODING FRAMEWORK FOR DIALECT IDENTIFICATION USING CONVOLUTIONAL NEURAL NETWORK
BY: ADNAN AL-KUJUK
SEIDENBERG SCHOOL OF CSIS, PACE UNIVERSITY, PLEASANTVILLE, NY

MSA VERSUS DIALECTAL VARIETIES

• The Arabic language is characterized by an unusual linguistic dichotomy: the written form of the language, Modern Standard Arabic (MSA), differs from the various spoken Arabic varieties in a non-trivial way.
• These everyday spoken varieties are the dialects of Arabic. Egyptian (EGY), Gulf (GLF), Levantine (LEV) and Moroccan (MOR) are examples.
• Classical Arabic exists alongside MSA and the dialects; it is the language of ancient literary texts and religious discourse.

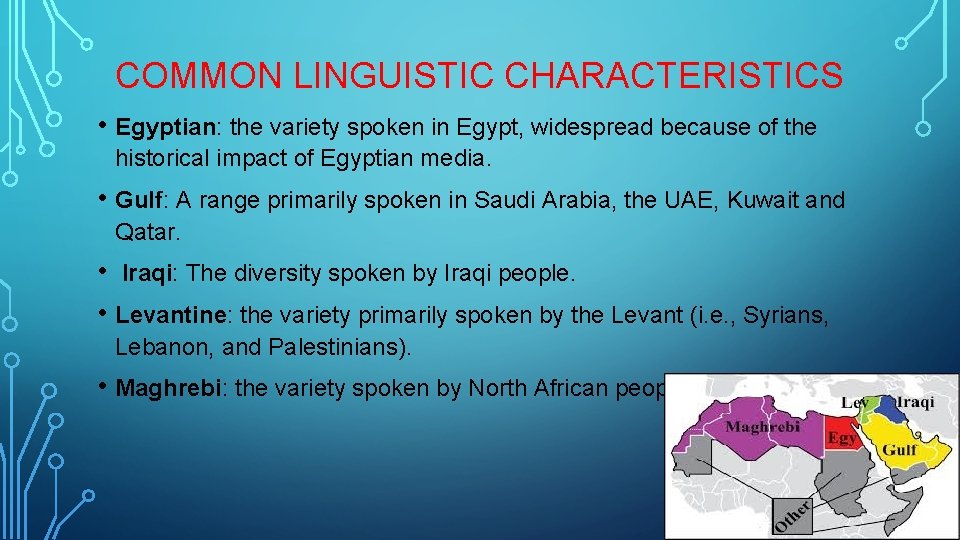

COMMON LINGUISTIC CHARACTERISTICS

• Egyptian: the variety spoken in Egypt, widely understood because of the historical influence of Egyptian media.
• Gulf: the variety primarily spoken in Saudi Arabia, the UAE, Kuwait and Qatar.
• Iraqi: the variety spoken in Iraq.
• Levantine: the variety primarily spoken in the Levant (i.e., Syria, Lebanon, and Palestine).
• Maghrebi: the variety spoken in North Africa, excluding Egypt.

ARABIC DIALECTAL DATA

• With the spread of social media, dialects began to find their way into writing, giving researchers the opportunity to use these data for NLP.
• The Arabic Online Commentary (AOC) dataset comprises about 3M MSA and dialectal comments, 108,173 of which are labeled through crowdsourcing. For our experiment we used a training set of 86,541 comments, a test set of 10,812, and a validation set of 10,821.
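As a quick sanity check on the split sizes quoted above, the following sketch computes each split's share of the labeled data. The function name `split_fractions` is only illustrative. Note that the three sizes as quoted sum to 108,174, one more than the 108,173 labeled comments stated on the slide.

```python
# Split sizes exactly as quoted on the slide.
splits = {"train": 86_541, "test": 10_812, "validation": 10_821}


def split_fractions(sizes):
    """Return each split's share of the total number of examples."""
    total = sum(sizes.values())
    return {name: size / total for name, size in sizes.items()}


total = sum(splits.values())
fractions = split_fractions(splits)

for name, size in splits.items():
    print(f"{name:<10} {size:>7,}  {fractions[name]:.1%}")
print(f"{'total':<10} {total:>7,}")
```

The shares come out very close to an 80/10/10 split.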

DATASET: ARABIC ONLINE COMMENTARY (AOC)

DEEP LEARNING MODEL

• Deep learning models have recently been applied successfully to language modeling and text classification tasks.
• We use pre-trained word2vec word vectors to initialize the networks in all of our models. We then fine-tune the weights during training.
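The initialization step described above can be sketched with NumPy as follows. This is a minimal illustration, not the code behind the slides: the helper names, the toy vocabulary, the 4-dimensional vectors, and the random range used for out-of-vocabulary words are all assumptions.

```python
import numpy as np


def build_embedding_matrix(vocab, pretrained, dim, seed=0):
    """Build a (len(vocab) + 1, dim) matrix; row 0 is reserved for padding.

    Words found in `pretrained` get their pre-trained vector; all other
    words get a small random vector (an assumed, commonly used fallback).
    """
    rng = np.random.default_rng(seed)
    matrix = np.zeros((len(vocab) + 1, dim), dtype=np.float32)
    for word, idx in vocab.items():
        vec = pretrained.get(word)
        if vec is not None:
            matrix[idx] = vec
        else:
            matrix[idx] = rng.uniform(-0.25, 0.25, size=dim)
    return matrix


def sgd_update(matrix, token_ids, grads, lr=0.1):
    """Fine-tune in place: one SGD step on the rows that were looked up.

    `np.subtract.at` accumulates correctly when a token id repeats.
    """
    np.subtract.at(matrix, token_ids, (lr * grads).astype(matrix.dtype))


# Toy stand-ins for real word2vec vectors (hypothetical values).
pretrained = {
    "كتاب": np.ones(4, dtype=np.float32),
    "بيت": np.full(4, 0.5, dtype=np.float32),
}
vocab = {"كتاب": 1, "بيت": 2, "ازيك": 3}  # word -> row index

emb = build_embedding_matrix(vocab, pretrained, dim=4)
```

In a deep learning framework the same matrix would typically be loaded as the initial weights of a trainable embedding layer, so the optimizer performs the fine-tuning step that `sgd_update` imitates here.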

WORD-BASED CONVOLUTIONAL NEURAL NETWORK (CNN)

• This design is conceptually similar to Kim's (2014) system and has the following structure:
  • Input layer
  • Convolution layer
  • Max-pooling layer
  • Dense layer
  • Softmax layer
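The layer stack listed above can be illustrated with a NumPy forward pass. This is a sketch under stated assumptions, not the trained model from the slides: the weights are random, all sizes (vocabulary, embedding dimension, number of filters, filter width, hidden units, four output classes) are hypothetical, and only a single filter width is used, whereas Kim (2014) combines several.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cnn_forward(token_ids, params):
    """Forward pass for one sentence; needs at least `width` tokens."""
    # Input layer: look up one embedding per token -> (seq_len, emb_dim)
    x = params["embedding"][token_ids]
    # Convolution layer: apply every filter to each window of `width` tokens
    filters = params["conv_w"]  # (n_filters, width, emb_dim)
    width = filters.shape[1]
    windows = np.stack([x[i:i + width] for i in range(len(x) - width + 1)])
    feature_maps = relu(
        np.einsum("nwd,fwd->nf", windows, filters) + params["conv_b"]
    )  # (n_windows, n_filters)
    # Max-pooling: keep the strongest response of each filter over the sentence
    pooled = feature_maps.max(axis=0)  # (n_filters,)
    # Dense layer
    hidden = relu(pooled @ params["dense_w"] + params["dense_b"])
    # Softmax layer: probabilities over the classes
    return softmax(hidden @ params["out_w"] + params["out_b"])


def init_params(vocab_size, emb_dim, n_filters, width, n_hidden, n_classes, seed=0):
    """Random weights, for illustration only."""
    rng = np.random.default_rng(seed)

    def rand(*shape):
        return rng.normal(0.0, 0.1, size=shape)

    return {
        "embedding": rand(vocab_size, emb_dim),
        "conv_w": rand(n_filters, width, emb_dim),
        "conv_b": np.zeros(n_filters),
        "dense_w": rand(n_filters, n_hidden),
        "dense_b": np.zeros(n_hidden),
        "out_w": rand(n_hidden, n_classes),
        "out_b": np.zeros(n_classes),
    }


params = init_params(vocab_size=50, emb_dim=8, n_filters=6, width=3,
                     n_hidden=5, n_classes=4)
token_ids = np.array([3, 7, 1, 9, 4, 2])  # a toy sentence of six word indices
probs = cnn_forward(token_ids, params)
print(probs)
```

In practice such a network would be built and trained in a deep learning framework; the sketch only shows how data flows through the five layers.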

PRE-PROCESSING AND EMBEDDING USED

• In our conventional and deep learning experiments, we pre-process our data as follows:
  • Tokenization and normalization.
  • AOC-based embeddings: we exploit the ~3M unlabeled AOC comments by training a continuous bag-of-words (CBOW) model on them.
  • We use the settings in Abdul-Mageed to train the model and obtain 300-dimensional word vectors.
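The slide does not spell out which normalization rules were applied, so the following is only a sketch of steps commonly used for Arabic text: stripping diacritics and tatweel, unifying alef variants, and mapping alef maqsura to ya. The function names and the token pattern are assumptions made for illustration.

```python
import re

# Short vowels, tanween, shadda, sukun, and the superscript (dagger) alef.
DIACRITICS = re.compile("[\u064B-\u0652\u0670]")
TATWEEL = "\u0640"                                 # elongation character
ALEF_VARIANTS = re.compile("[\u0622\u0623\u0625]")  # madda / hamza forms of alef
# A token is a run of Arabic letters, or a run of Latin letters and digits.
TOKEN = re.compile("[\u0621-\u064A]+|[A-Za-z0-9]+")


def normalize(text):
    """Apply a small set of assumed, commonly used normalization rules."""
    text = DIACRITICS.sub("", text)
    text = text.replace(TATWEEL, "")
    text = ALEF_VARIANTS.sub("\u0627", text)  # unify to bare alef
    text = text.replace("\u0649", "\u064A")   # alef maqsura -> ya
    return text


def tokenize(text):
    """Normalize, then split into word tokens, dropping punctuation."""
    return TOKEN.findall(normalize(text))


tokens = tokenize("مرحباً، كيف الحال؟")
print(tokens)
```

Token lists produced this way would be the kind of input a CBOW model is trained on.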

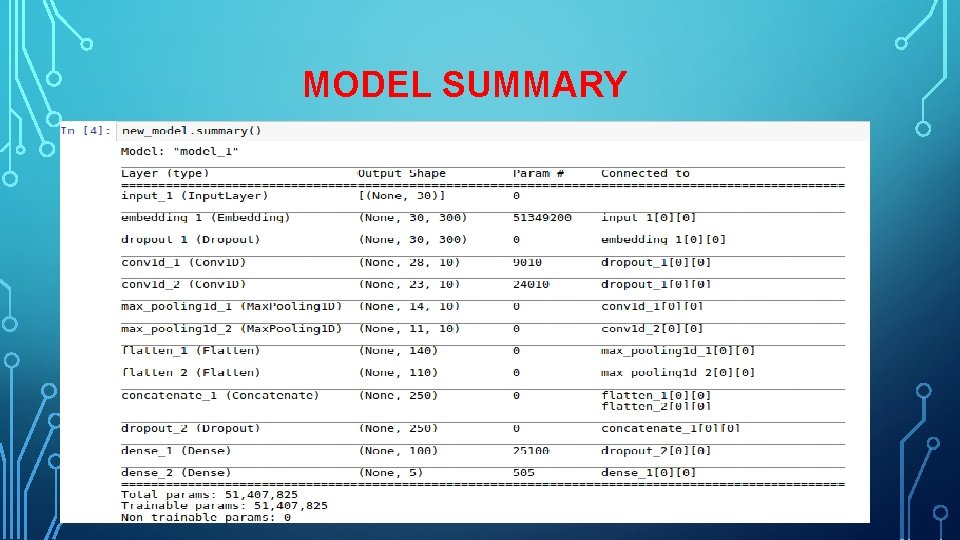

MODEL SUMMARY

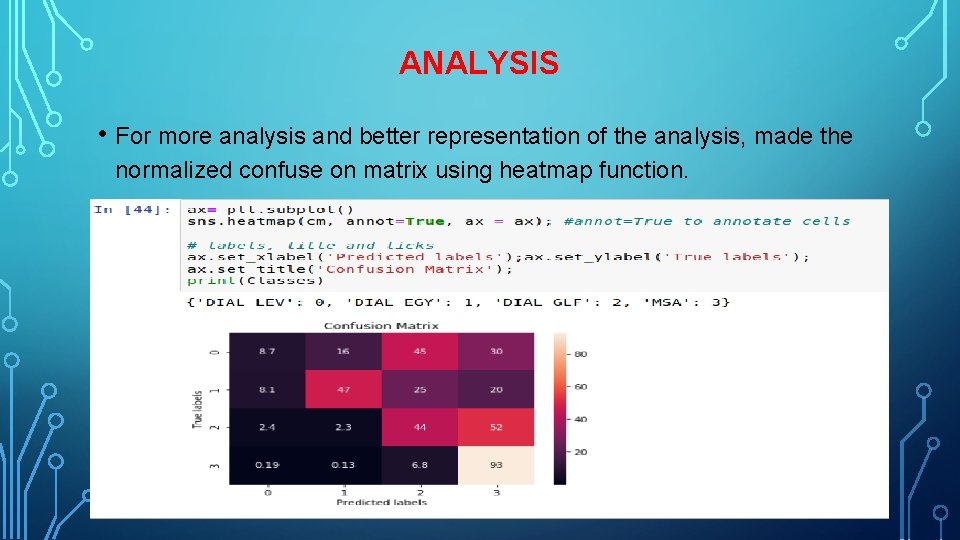

ANALYSIS

• For further analysis and a clearer view of the results, we plotted the normalized confusion matrix using a heatmap function.
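A row-normalized confusion matrix of the kind described above can be computed as in the sketch below. The label set and the toy predictions are made up for illustration and are not results from the experiment.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Count matrix: rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def normalize_rows(cm):
    """Divide each row by its sum so every true class sums to 1.

    Rows with no examples are left as zeros instead of dividing by zero.
    """
    row_sums = cm.sum(axis=1, keepdims=True)
    return np.divide(cm, row_sums,
                     out=np.zeros(cm.shape, dtype=float),
                     where=row_sums != 0)


labels = ["MSA", "EGY", "GLF", "LEV"]  # assumed label set, for illustration
y_true = [0, 0, 1, 1, 2, 2, 3, 3]      # toy gold labels
y_pred = [0, 1, 1, 1, 2, 0, 3, 3]      # toy predictions

cm = confusion_matrix(y_true, y_pred, n_classes=len(labels))
norm = normalize_rows(cm)
print(norm)
```

The slide does not name the heatmap function used; one option is to pass `norm` to `seaborn.heatmap` with `annot=True` and `labels` as the tick labels.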

CONCLUSION

• Benchmarked the AOC dataset, a popular dataset of Arabic online comments, for deep learning work on dialect identification.
• Developed a deep learning classifier (a CNN) to provide a strong baseline for the task.
• Results show that the model works well on this task, especially when initialized with a large dialect-specific word embedding model.
• Accuracy could be improved further with more data in the future.
