Developing a Hiring System Reliability of Measurement

Key Measurement Issues • Measurement is imperfect • Reliability: how accurately do our measurements reflect the underlying attributes? • Validity: how accurate are the inferences we draw from our measurements? – refers to the uses we make of the measurements

What is Reliability? • The extent to which a measure is free of measurement error • Obtained score = True Score + Random Error + Constant Error

What is Reliability? • Reliability coefficient = % of obtained score variance due to true score – e.g., a performance measure with ryy = .60 is 60% “accurate” in measuring differences in true performance • Different “types” of reliability reflect different sources of measurement error
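
The idea of a reliability coefficient as the share of obtained-score variance due to true scores can be illustrated with a small simulation. This is a minimal sketch, not part of the original slides: the function names, the normal distributions, and the standard deviations are assumptions chosen for illustration. Constant error is left out because adding the same constant to every score does not change the variance.

```python
import random
import statistics


def theoretical_reliability(true_sd, error_sd):
    """Share of obtained-score variance due to true scores,
    assuming true scores and random error are uncorrelated."""
    return true_sd ** 2 / (true_sd ** 2 + error_sd ** 2)


def simulated_reliability(n=20000, true_sd=1.0, error_sd=0.8, seed=0):
    """Simulate obtained = true + random error and return
    var(true) / var(obtained). All parameters are illustrative."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(0.0, true_sd) for _ in range(n)]
    obtained = [t + rng.gauss(0.0, error_sd) for t in true_scores]
    return statistics.pvariance(true_scores) / statistics.pvariance(obtained)


if __name__ == "__main__":
    print(round(theoretical_reliability(1.0, 0.8), 3))
    print(round(simulated_reliability(), 3))
```

With a true-score SD of 1.0 and an error SD of 0.8, both values land close to the .60 used in the slide's example.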

Types of Reliability • Test-retest Reliability – Assesses stability (over time/situations) • Internal Consistency Reliability – Assesses consistency of content of measure • Parallel Forms Reliability – Assesses equivalence of measures – Inter-rater reliability is special case
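
Two of these reliability types are commonly estimated as follows: test-retest and parallel forms reliability as a correlation between two sets of scores, and internal consistency as Cronbach's alpha. The sketch below is an illustration only; the slides do not name a specific statistic, so the choice of Pearson's r and Cronbach's alpha, and the function names, are assumptions.

```python
import statistics


def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists.
    Usable for test-retest (time 1 vs. time 2), parallel forms
    (form A vs. form B), or inter-rater (rater 1 vs. rater 2) data."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha(items):
    """Cronbach's alpha for internal consistency.
    `items` is a list of item-score lists (one list per item),
    aligned so that position i is the same respondent in every list."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    sum_item_var = sum(statistics.variance(item) for item in items)
    return (k / (k - 1)) * (1 - sum_item_var / statistics.variance(totals))
```

For example, `pearson_r(time1_scores, time2_scores)` would estimate stability over time, while `cronbach_alpha([item1, item2, item3])` would estimate how consistently the items of one measure hang together.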

Developing a Hiring System Validity of Measurement

What is Validity? The accuracy of inferences drawn from scores on a measure • Example: An employer uses an honesty test to hire employees. – The inference is that high scorers will be less likely to steal. – Validation confirms this inference.

Validity vs. Reliability • Reliability is a characteristic of the measure – Error in measurement – A measure either is or isn’t reliable • Validity refers to the uses of the measures – Error in inferences drawn – May be valid for one purpose but not for another

Validity and Job Relatedness • Federal regulations require employers to document the job-relatedness of selection procedures that have adverse impact • Good practice also dictates that selection decisions should be job-related • Validation is the typical way of documenting job-relatedness

Methods of Validation • Empirical: showing a statistical relationship between predictor scores and criterion scores – showing that high-scoring applicants are better employees • Content: showing a logical relationship between predictor content and job content – showing that the predictor measures the same knowledge or skills that are required on the job
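
The empirical approach can be sketched as computing a validity coefficient (the correlation between predictor and criterion scores) and checking whether higher scorers perform better. The data and function names below are hypothetical and serve only to show the mechanics.

```python
import statistics


def validity_coefficient(predictor, criterion):
    """Pearson correlation between predictor and criterion scores."""
    mp, mc = statistics.fmean(predictor), statistics.fmean(criterion)
    cov = sum((p - mp) * (c - mc) for p, c in zip(predictor, criterion))
    ssp = sum((p - mp) ** 2 for p in predictor)
    ssc = sum((c - mc) ** 2 for c in criterion)
    return cov / (ssp * ssc) ** 0.5


def mean_criterion_by_half(predictor, criterion):
    """Mean criterion score for the lower- and upper-scoring halves
    of the sample, ranked on the predictor."""
    ranked = sorted(zip(predictor, criterion))
    half = len(ranked) // 2
    low = [c for _, c in ranked[:half]]
    high = [c for _, c in ranked[-half:]]
    return statistics.fmean(low), statistics.fmean(high)


if __name__ == "__main__":
    # Hypothetical test scores and supervisor performance ratings.
    test_scores = [52, 61, 70, 48, 85, 77, 66, 90]
    performance = [3.1, 3.4, 3.9, 2.8, 4.5, 4.0, 3.2, 4.6]
    print(validity_coefficient(test_scores, performance))
    print(mean_criterion_by_half(test_scores, performance))
```

A sample of eight is far too small for a real validation study; it is used here only to keep the example readable.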

Methods of Validation • Construct: developing a “theory” of why a predictor is job-relevant • Validity Generalization: “Borrowing” the results of empirical validation studies done on the same job in other organizations

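Empirical Validation • Concurrent Criterion-Related Validation – test current employees and measure their performance in the same time period • Predictive Criterion-Related Validation – test applicants, then obtain criterion measures after they are hired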

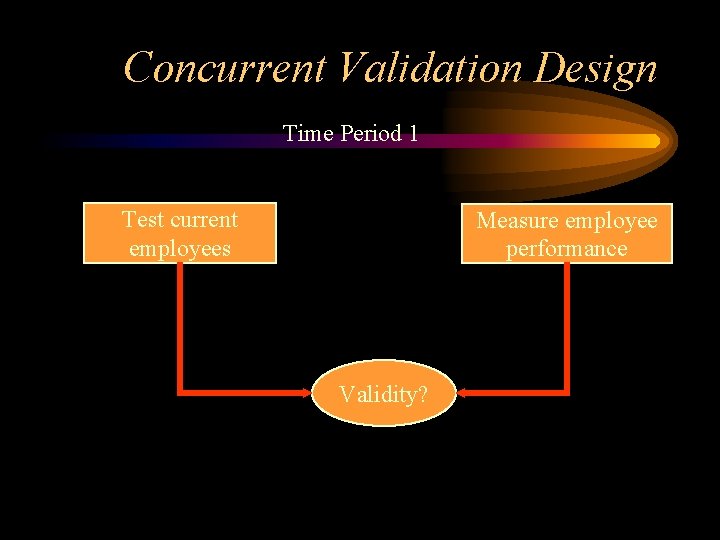

Concurrent Validation Design • Time Period 1: Test current employees; measure employee performance • Validity?

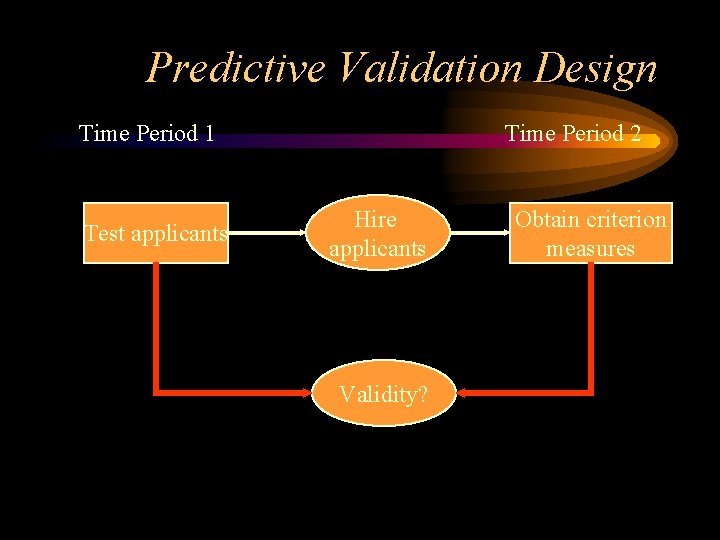

Predictive Validation Design • Test applicants (Time Period 1) → Hire applicants → Obtain criterion measures (Time Period 2) • Validity?

Empirical Validation: Limitations • • •

Content Validation • Inference being tested is that the predictor samples actual job skills and knowledge – not that predictor scores predict job performance • Avoids the problems of empirical validation because no statistical relationship is tested – potentially useful for smaller employers

Content Validation: Limitations • •

Construct Validation Making a persuasive argument that hiring tool is job-relevant 1. Why attribute is necessary – job & organizational analysis 2. Tool measures the attribute – existing data usually provided by developer of tool

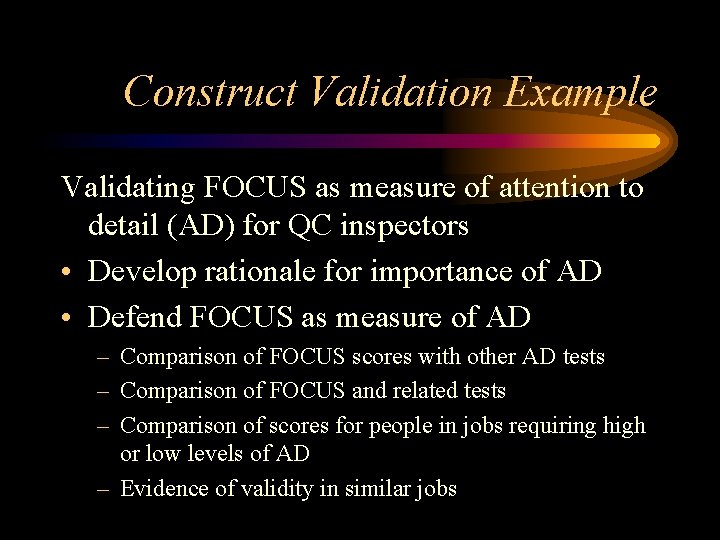

Construct Validation Example Validating FOCUS as measure of attention to detail (AD) for QC inspectors • Develop rationale for importance of AD • Defend FOCUS as measure of AD – Comparison of FOCUS scores with other AD tests – Comparison of FOCUS and related tests – Comparison of scores for people in jobs requiring high or low levels of AD – Evidence of validity in similar jobs

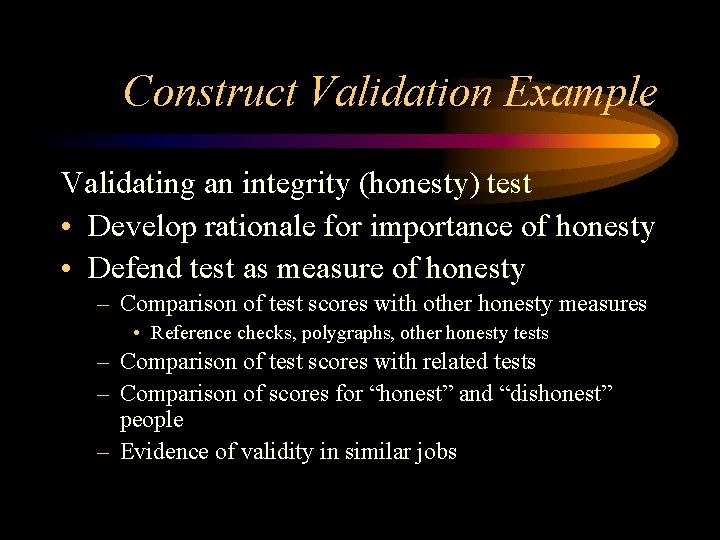

Construct Validation Example Validating an integrity (honesty) test • Develop rationale for importance of honesty • Defend test as measure of honesty – Comparison of test scores with other honesty measures • Reference checks, polygraphs, other honesty tests – Comparison of test scores with related tests – Comparison of scores for “honest” and “dishonest” people – Evidence of validity in similar jobs

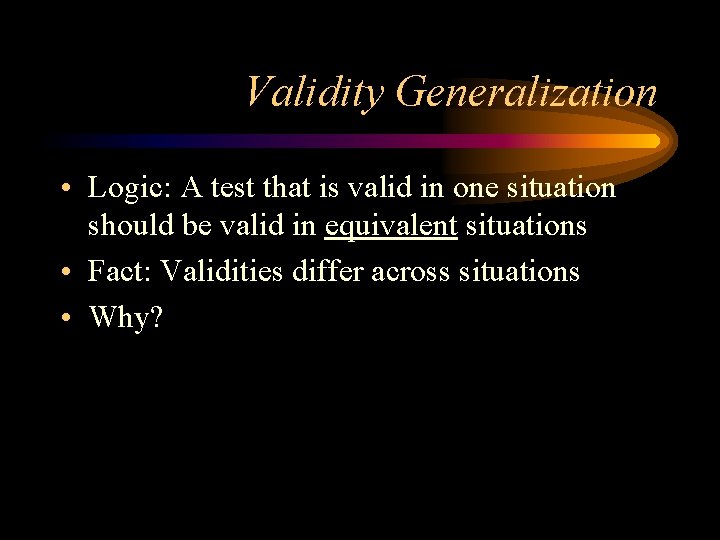

Validity Generalization • Logic: A test that is valid in one situation should be valid in equivalent situations • Fact: Validities differ across situations • Why?

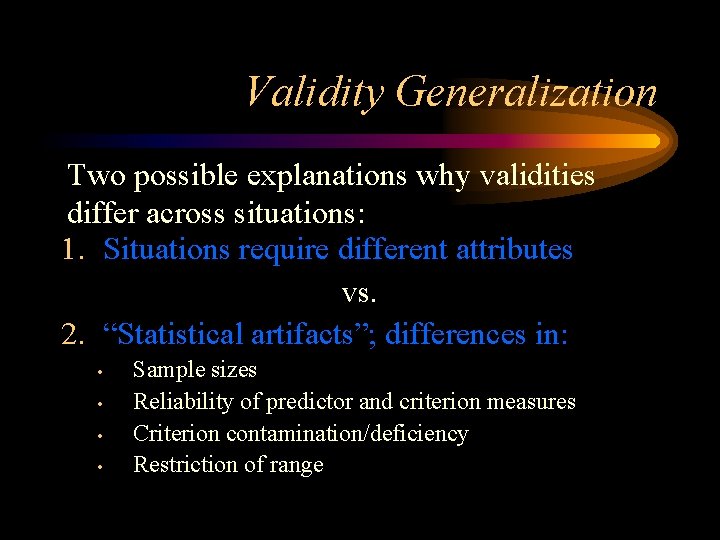

Validity Generalization Two possible explanations why validities differ across situations: 1. Situations require different attributes vs. 2. “Statistical artifacts”; differences in: • Sample sizes • Reliability of predictor and criterion measures • Criterion contamination/deficiency • Restriction of range
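
Two of these artifacts, unreliability and restriction of range, have standard textbook corrections. The sketch below uses the classical correction for attenuation and the Thorndike Case II correction for direct range restriction. The slides do not state which corrections are intended, so these formulas and the function names are assumptions offered for illustration.

```python
def correct_for_attenuation(r_xy, r_xx, r_yy):
    """Estimate the correlation between true scores from an observed
    validity r_xy, given predictor reliability r_xx and criterion
    reliability r_yy (classical correction for attenuation)."""
    return r_xy / (r_xx * r_yy) ** 0.5


def correct_for_range_restriction(r, sd_unrestricted, sd_restricted):
    """Thorndike Case II correction for direct range restriction
    on the predictor. u is the ratio of the applicant-pool SD to the
    SD in the restricted (e.g., hired) sample."""
    u = sd_unrestricted / sd_restricted
    return (r * u) / (1 + r ** 2 * (u ** 2 - 1)) ** 0.5


if __name__ == "__main__":
    # Hypothetical values: observed validity .30, predictor
    # reliability .80, criterion reliability .60.
    print(round(correct_for_attenuation(0.30, 0.80, 0.60), 3))
    # Hypothetical: applicant SD is twice the SD among those hired.
    print(round(correct_for_range_restriction(0.30, 10.0, 5.0), 3))
```

Both corrections raise the observed coefficient, which shows how two studies of the same test can report different validities simply because their measures differ in reliability or their samples differ in score range.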

VG Implications • Validities are larger and more consistent than individual studies suggest once statistical artifacts are taken into account • Validities are generalizable to comparable situations • Tests that are valid for majority groups are usually valid for minority groups • There is at least one valid test for all jobs • It’s hard to show validity with small Ns
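
The point about small Ns can be made concrete with a confidence interval for a correlation. The sketch below uses the Fisher z transformation, a standard approximation; the choice of method, the 95% level, and the example values are assumptions, not something the slides specify.

```python
import math


def correlation_confidence_interval(r, n, z_crit=1.96):
    """Approximate confidence interval for a correlation r from a
    sample of size n, using the Fisher z transformation.
    z_crit=1.96 corresponds to a 95% interval."""
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


if __name__ == "__main__":
    # Same hypothetical observed validity, two different sample sizes.
    for n in (30, 300):
        low, high = correlation_confidence_interval(0.30, n)
        print(n, round(low, 2), round(high, 2))
```

With an observed validity of .30, the interval for 30 people includes zero, while the interval for 300 people does not. The same test can therefore look valid or invalid depending only on sample size.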

Validation: Summary • Criterion-Related – Predictive – Concurrent • Content • Construct • Validity Generalization • “Face Validity”
