Detour: Distributed Systems Techniques
• Paxos overview (based on Lampson’s talk)
• Google: Paxos Made Live (only briefly)
• ZooKeeper: wait-free coordination system by Yahoo!
CSci 8211: Distributed Systems: Paxos & ZooKeeper

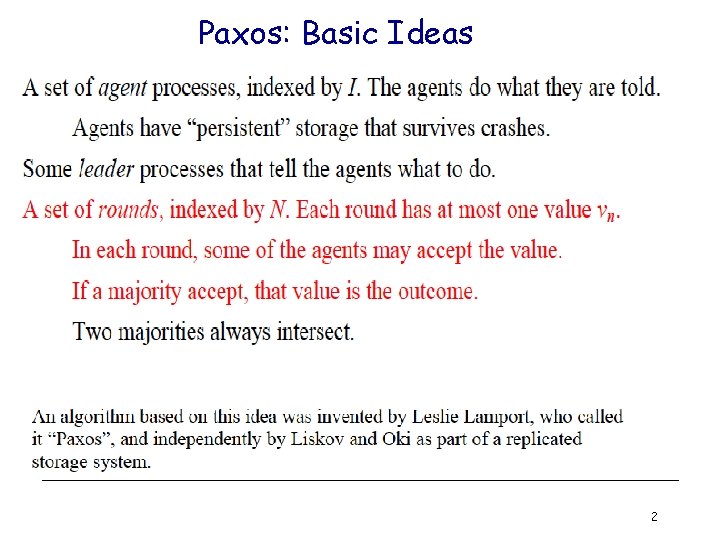

Paxos: Basic Ideas

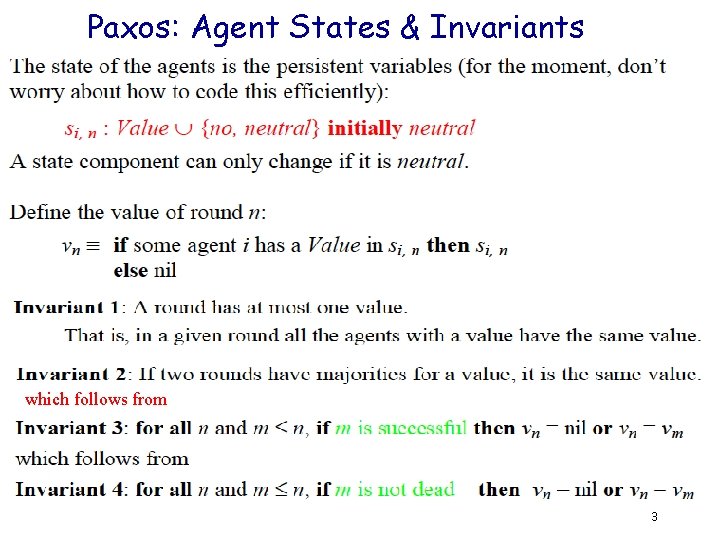

Paxos: Agent States & Invariants

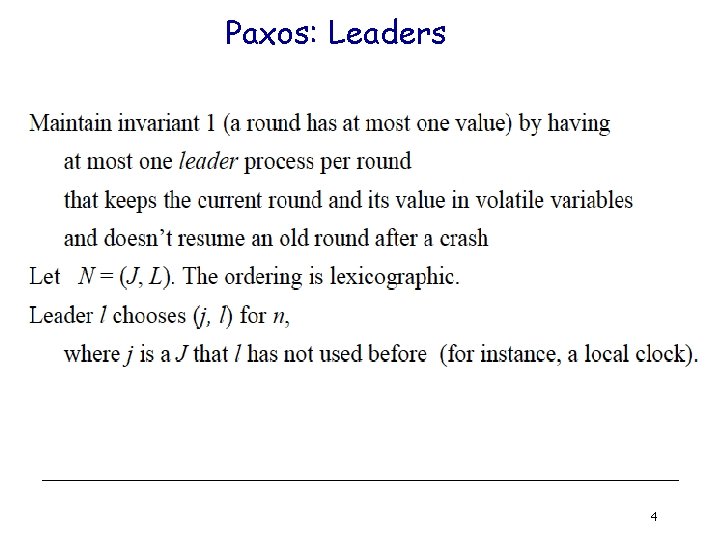

Paxos: Leaders

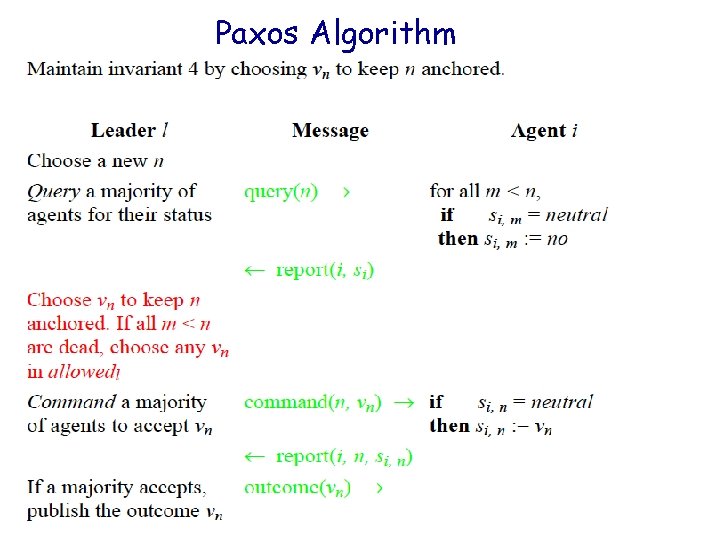

Paxos Algorithm

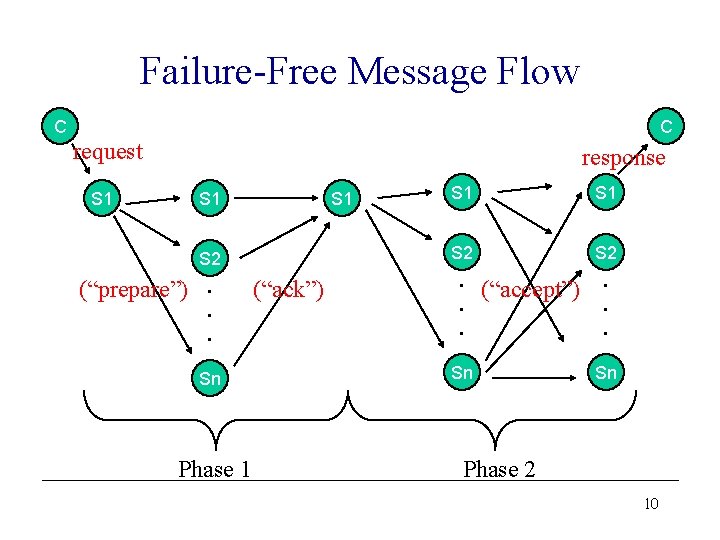

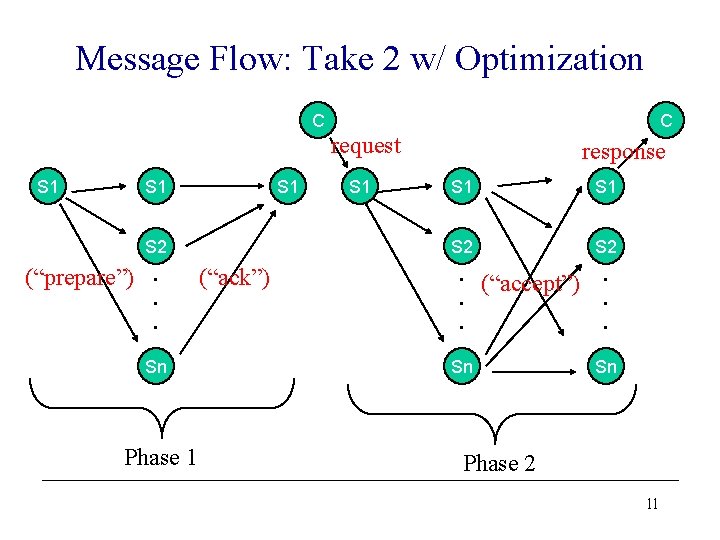

Paxos Algorithm in Plain English
• Phase 1 (prepare):
  – A proposer selects a proposal number n and sends a prepare request with number n to a majority of acceptors.
  – If an acceptor receives a prepare request with a number n greater than that of any prepare request it has seen, it responds YES to that request, promising not to accept any more proposals numbered less than n, and includes the highest-numbered proposal (if any) that it has accepted.

Paxos Algorithm in Plain English …
• Phase 2 (accept):
  – If the proposer receives a YES response to its prepare request from a majority of acceptors, it sends an accept request to each of those acceptors for a proposal numbered n with value v, where v is the value of the highest-numbered proposal among the responses (or a value of the proposer's own choosing if no response reported an accepted proposal).
  – If an acceptor receives an accept request for a proposal numbered n, it accepts the proposal unless it has already responded to a prepare request with a number greater than n.
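The two phases can be sketched for a single decision as follows. This is a minimal in-memory sketch, assuming synchronous calls in place of network messages and no failures; the names `Acceptor`, `on_prepare`, `on_accept`, and `propose` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Acceptor:
    promised: int = -1                          # highest prepare number answered so far
    accepted: Optional[Tuple[int, str]] = None  # (number, value) of the last accepted proposal

    def on_prepare(self, n: int):
        """Phase 1: answer YES only to a prepare numbered above anything seen."""
        if n > self.promised:
            self.promised = n
            return ("YES", self.accepted)
        return ("NO", None)

    def on_accept(self, n: int, value: str) -> bool:
        """Phase 2: accept unless a higher-numbered prepare was already answered."""
        if n >= self.promised:
            self.promised = n
            self.accepted = (n, value)
            return True
        return False


def propose(acceptors: List[Acceptor], n: int, my_value: str) -> Optional[str]:
    """Run both phases; return the chosen value, or None if no majority agrees."""
    majority = len(acceptors) // 2 + 1
    granted = []
    for a in acceptors:
        vote, previous = a.on_prepare(n)
        if vote == "YES":
            granted.append((a, previous))
    if len(granted) < majority:
        return None
    # Adopt the value of the highest-numbered proposal reported, if any.
    prior = [p for _, p in granted if p is not None]
    value = max(prior, key=lambda p: p[0])[1] if prior else my_value
    acks = sum(a.on_accept(n, value) for a, _ in granted)
    return value if acks >= majority else None
```

With three fresh acceptors, a first call `propose(acceptors, 1, "A")` chooses "A"; a later proposer using number 2 and its own value "B" still ends up with "A", because Phase 1 reports the already accepted proposal.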

Paxos’s Properties (Invariants)
• P1: Any proposal number is unique.
• P2: Any two majority sets of acceptors have at least one acceptor in common.
• P3: The value sent out in phase 2 is the value of the highest-numbered proposal of all the responses in phase 1.
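P1 and P2 can be checked with a few lines of code. The sketch below assumes one common way to obtain unique numbers (interleaving a round counter with a server id in the range 0 to num_servers - 1) and assumes that the acceptor sets in P2 are majorities; the function names are hypothetical.

```python
from itertools import combinations


def proposal_number(round_no: int, server_id: int, num_servers: int) -> int:
    """P1: interleave rounds and server ids so no two servers share a number."""
    return round_no * num_servers + server_id


def majorities(acceptors):
    """All subsets that contain more than half of the acceptors."""
    need = len(acceptors) // 2 + 1
    return [set(c)
            for k in range(need, len(acceptors) + 1)
            for c in combinations(acceptors, k)]


def all_intersect(quorums) -> bool:
    """P2: every pair of quorums shares at least one acceptor."""
    return all(q1 & q2 for q1, q2 in combinations(quorums, 2))
```

Two sets that are not majorities, such as {a, b} and {c, d} out of five acceptors, can be disjoint, which is why the quorum size matters for P2.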

The Paxos Atomic Broadcast Algorithm
• Leader based: each process has an estimate of who is the current leader
• To order an operation, a process sends it to its current leader
• The leader sequences the operation and launches a Consensus algorithm (Synod) to fix the agreement

Failure-Free Message Flow
[Figure: client C sends a request to S1. Phase 1: S1 sends “prepare” to S1, S2, ..., Sn, answered by “ack”. Phase 2: S1 sends “accept” to S1, S2, ..., Sn. A response is returned to C.]

Message Flow: Take 2 w/ Optimization
[Figure: the same request, Phase 1 (“prepare”/“ack”), Phase 2 (“accept”), and response messages among C and S1, S2, ..., Sn, rearranged for the optimized flow.]

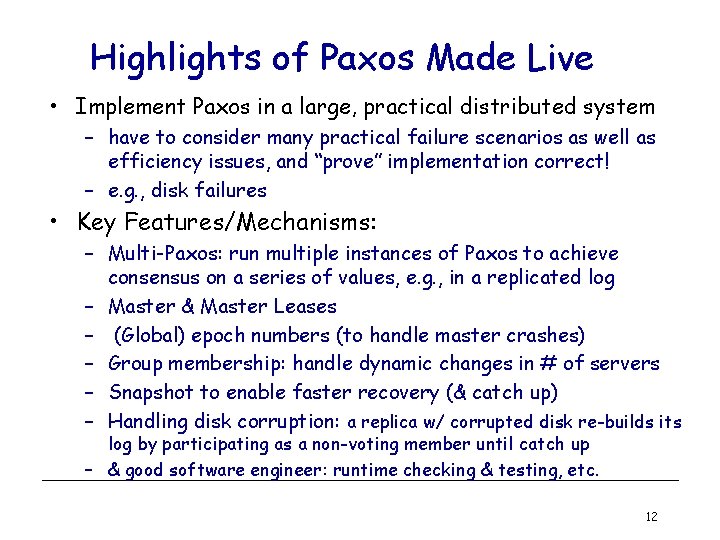

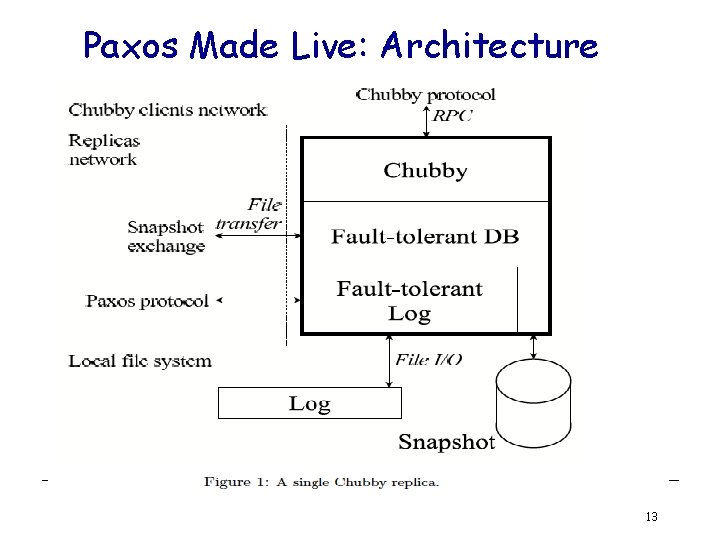

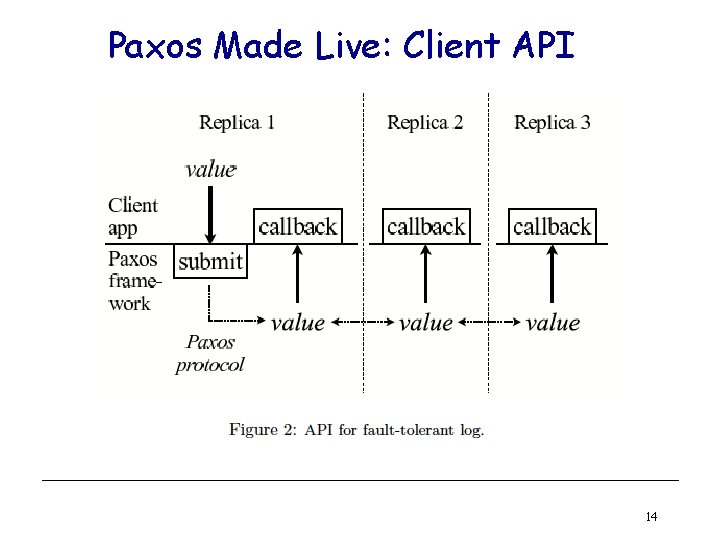

Highlights of Paxos Made Live
• Implement Paxos in a large, practical distributed system
  – have to consider many practical failure scenarios as well as efficiency issues, and “prove” the implementation correct!
  – e.g., disk failures
• Key Features/Mechanisms:
  – Multi-Paxos: run multiple instances of Paxos to achieve consensus on a series of values, e.g., in a replicated log
  – Master & Master Leases
  – (Global) epoch numbers (to handle master crashes)
  – Group membership: handle dynamic changes in # of servers
  – Snapshots to enable faster recovery (& catch-up)
  – Handling disk corruption: a replica w/ a corrupted disk rebuilds its log by participating as a non-voting member until it catches up
  – & good software engineering: runtime checking & testing, etc.

Paxos Made Live: Architecture

Paxos Made Live: Client API

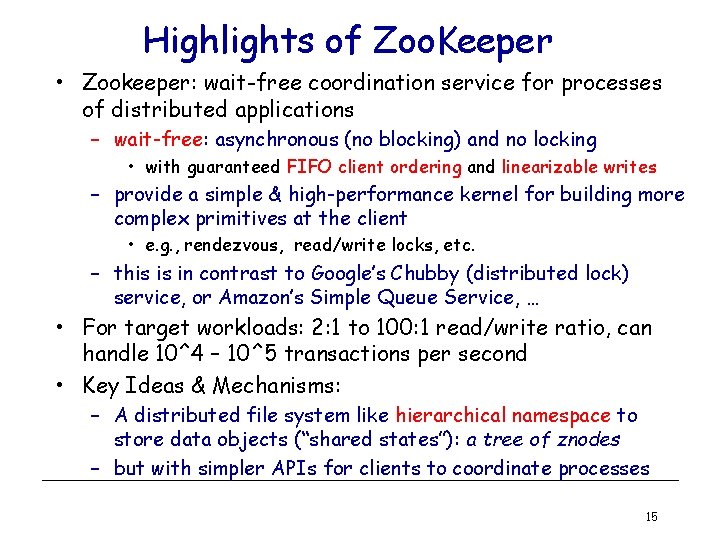

Highlights of ZooKeeper
• ZooKeeper: wait-free coordination service for processes of distributed applications
  – wait-free: asynchronous (no blocking) and no locking
    • with guaranteed FIFO client ordering and linearizable writes
  – provides a simple & high-performance kernel for building more complex primitives at the client
    • e.g., rendezvous, read/write locks, etc.
  – this is in contrast to Google’s Chubby (distributed lock) service, or Amazon’s Simple Queue Service, …
• For target workloads: 2:1 to 100:1 read/write ratio, can handle 10^4 to 10^5 transactions per second
• Key Ideas & Mechanisms:
  – A distributed-file-system-like hierarchical namespace to store data objects (“shared states”): a tree of znodes
  – but with simpler APIs for clients to coordinate processes

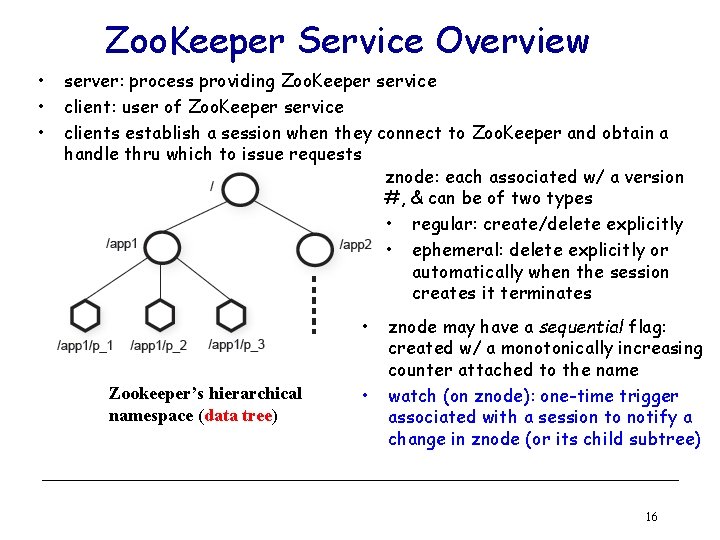

ZooKeeper Service Overview
• server: process providing the ZooKeeper service
• client: user of the ZooKeeper service
• clients establish a session when they connect to ZooKeeper and obtain a handle through which to issue requests
• ZooKeeper’s hierarchical namespace (data tree)
• znode: each associated w/ a version #, & can be of two types
  – regular: created/deleted explicitly
  – ephemeral: deleted explicitly, or automatically when the session that created it terminates
• znode may have a sequential flag: created w/ a monotonically increasing counter attached to the name
• watch (on znode): one-time trigger associated with a session to notify a change in the znode (or its children)
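The znode concepts above (versions, ephemeral and sequential znodes, one-time watches) can be made concrete with a toy in-memory model. This is only a sketch under simplifying assumptions: `ToyZooKeeper` and its methods are hypothetical, not the ZooKeeper client library, sessions are plain strings, and a single watch list per path covers both data and child changes.

```python
class ToyZooKeeper:
    """In-memory model of the znode concepts; not the real client library."""

    def __init__(self):
        self.nodes = {"/": {"data": b"", "version": 0, "owner": None}}
        self.counters = {}  # parent path -> next sequential suffix
        self.watches = {}   # path -> list of one-time callbacks

    @staticmethod
    def _parent(path):
        return path.rsplit("/", 1)[0] or "/"

    def create(self, path, data=b"", session=None, ephemeral=False, sequential=False):
        parent = self._parent(path)
        if parent not in self.nodes:
            raise KeyError(f"no parent znode {parent}")
        if ephemeral and session is None:
            raise ValueError("an ephemeral znode needs an owning session")
        if sequential:
            # Append a monotonically increasing, zero-padded counter to the name.
            n = self.counters.get(parent, 0)
            self.counters[parent] = n + 1
            path = f"{path}{n:010d}"
        if path in self.nodes:
            raise KeyError(f"znode exists: {path}")
        self.nodes[path] = {"data": data, "version": 0,
                            "owner": session if ephemeral else None}
        self._fire(parent)
        return path

    def set_data(self, path, data, expected_version=-1):
        node = self.nodes[path]
        if expected_version not in (-1, node["version"]):
            raise ValueError("version mismatch")
        node["data"] = data
        node["version"] += 1
        self._fire(path)

    def delete(self, path):
        del self.nodes[path]
        self._fire(path)
        self._fire(self._parent(path))

    def watch(self, path, callback):
        self.watches.setdefault(path, []).append(callback)

    def close_session(self, session):
        """Remove every ephemeral znode created by the given session."""
        if session is None:
            return
        for path in [p for p, n in self.nodes.items() if n["owner"] == session]:
            self.delete(path)

    def _fire(self, path):
        # pop() removes the callbacks, which makes each watch a one-time trigger.
        for callback in self.watches.pop(path, []):
            callback(path)
```

A group-membership style use: each member creates an ephemeral sequential znode under a shared parent, and closing a member's session removes its znode automatically.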

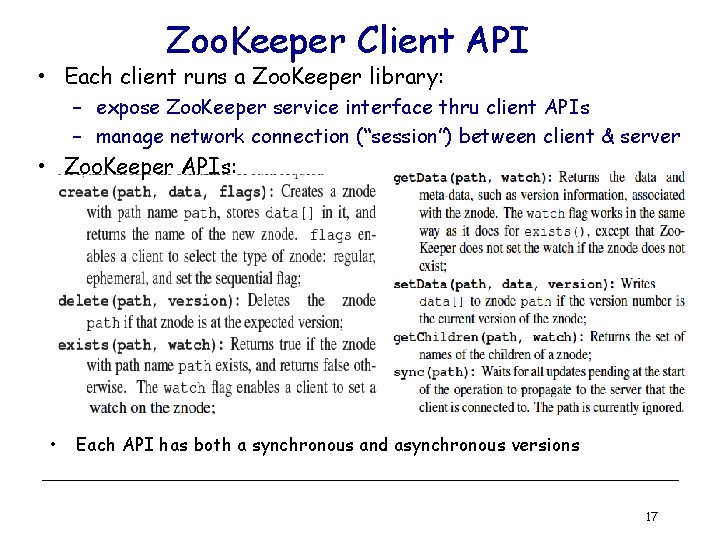

ZooKeeper Client API
• Each client runs a ZooKeeper library, which:
  – exposes the ZooKeeper service interface through client APIs
  – manages the network connection (“session”) between client & server
• ZooKeeper APIs:
  – each API has both a synchronous and an asynchronous version

ZooKeeper Primitive Examples
• Configuration Management:
  – E.g., two clients A & B share a configuration, and can directly communicate w/ each other
    • A makes a change to the configuration & notifies B (but the two servers’ configuration replicas may be out of sync!)
• Rendezvous
• Group Membership
• Simple Lock (w/ & w/o Herd Effect)
• Read/Write Locks
• Double Barrier
• Yahoo and other services using ZooKeeper:
  – Fetch Service (“Yahoo crawler”)
  – Katta: a distributed indexer
  – Yahoo! Message Broker (YMB)
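As one illustration of these primitives, the simple lock without herd effect can be reduced to a single decision: each contender creates an ephemeral sequential znode under the lock znode, holds the lock if its znode has the lowest sequence number, and otherwise watches only the znode immediately before its own. The sketch below shows just that decision; `lock_decision` and the `lock-<counter>` naming are assumptions for illustration, not part of any ZooKeeper API.

```python
def lock_decision(my_node, children):
    """Return ("acquired", None) or ("wait", znode_to_watch).

    `children` holds the names of all znodes under the lock znode; each
    name is assumed to end in "-<sequence number>".
    """
    ordered = sorted(children, key=lambda name: int(name.rsplit("-", 1)[1]))
    position = ordered.index(my_node)
    if position == 0:
        return ("acquired", None)
    # Watch only the immediate predecessor, so a release wakes one waiter
    # instead of every contender.
    return ("wait", ordered[position - 1])
```

When the watched znode disappears, the waiter simply re-evaluates the decision with the new list of children.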

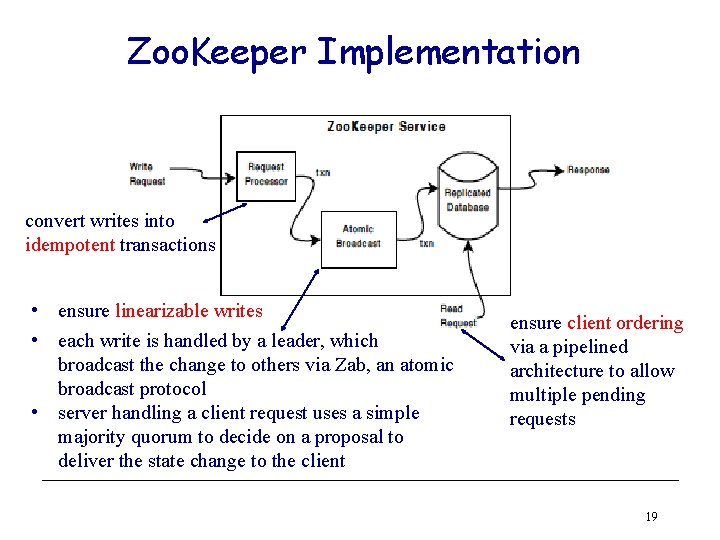

ZooKeeper Implementation
• convert writes into idempotent transactions
• ensure linearizable writes
  – each write is handled by a leader, which broadcasts the change to others via Zab, an atomic broadcast protocol
  – server handling a client request uses a simple majority quorum to decide on a proposal to deliver the state change to the client
• ensure client ordering via a pipelined architecture to allow multiple pending requests
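The conversion of writes into idempotent transactions can be sketched as follows: the leader evaluates a conditional write once against its current state and emits a transaction that carries the resulting absolute state, so replaying the transaction on a replica has no further effect. The class and function names here (`SetDataTxn`, `ErrorTxn`, `to_txn`, `apply_txn`) and the dict-based state are assumptions made for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SetDataTxn:
    path: str
    data: bytes
    version: int  # absolute version the znode has after the write


@dataclass(frozen=True)
class ErrorTxn:
    reason: str


def to_txn(state, path, data, expected_version):
    """Leader side: check the condition once and emit an unconditional txn."""
    current = state[path]["version"]
    if expected_version not in (-1, current):
        return ErrorTxn("version mismatch")
    return SetDataTxn(path, data, current + 1)


def apply_txn(state, txn):
    """Replica side: applying the same txn twice leaves the same state."""
    if isinstance(txn, SetDataTxn):
        state[txn.path] = {"data": txn.data, "version": txn.version}
```

Because the transaction stores the new version rather than an instruction such as "increment the version", a replica that applies it again during recovery ends up in the same state.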

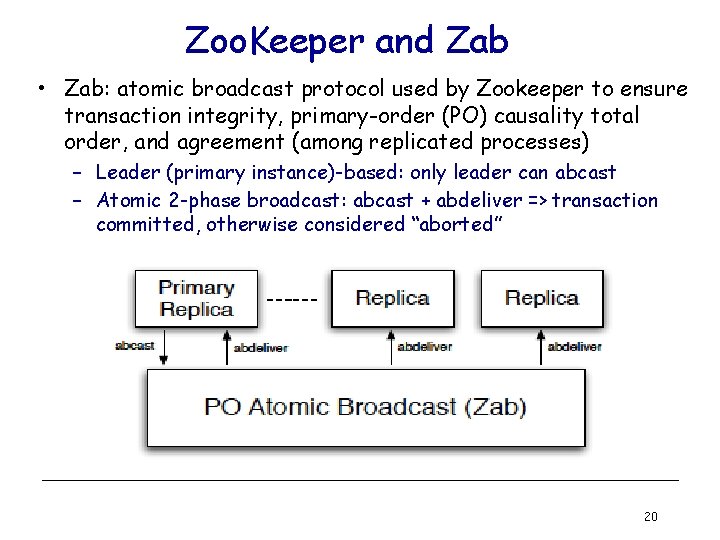

ZooKeeper and Zab
• Zab: atomic broadcast protocol used by ZooKeeper to ensure transaction integrity, primary-order (PO) causality, total order, and agreement (among replicated processes)
  – Leader (primary instance)-based: only the leader can abcast
  – Atomic 2-phase broadcast: abcast + abdeliver => transaction committed, otherwise considered “aborted”

More on Zab
• Zab atomic broadcast ensures primary-order causality:
  – “causality” defined only w.r.t. the primary instance
• Zab also ensures strict causality (or total ordering)
  – if a process delivers two transactions, one must precede the other in the PO causality order
• Zab assumes a separate leader election/selection process (with a leader selection oracle)
  – processes: leader (starting w/ a new epoch #) and followers
• Zab uses a 3-phase protocol w/ quorum (similar to Raft):
  – Phase 1 (Discovery): agree on a new epoch # and discover history
  – Phase 2 (Synchronization): synchronize the history of all processes using a 2PC-like protocol, commit based on quorum
  – Phase 3 (Broadcast): commit a new transaction via a 2PC-like protocol, commit based on quorum
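The broadcast phase can be sketched as a propose/ack/commit round in which the leader commits once a quorum of the ensemble (leader included) has acknowledged. This is a simplified sketch, assuming synchronous calls instead of messages, no recovery, and transaction ids of the form (epoch, counter); the `Leader` and `Follower` classes and the `reachable` parameter are hypothetical.

```python
class Follower:
    def __init__(self):
        self.log = []        # proposals acknowledged, in arrival order
        self.delivered = []  # transactions committed, in order

    def on_propose(self, zxid, txn):
        self.log.append((zxid, txn))
        return True  # acknowledge the proposal

    def on_commit(self, zxid):
        txn = next(t for z, t in self.log if z == zxid)
        self.delivered.append((zxid, txn))


class Leader:
    def __init__(self, epoch, followers):
        self.epoch = epoch
        self.counter = 0
        self.followers = followers

    def broadcast(self, txn, reachable=None):
        """Propose txn; commit only if a quorum of the ensemble acknowledges."""
        reachable = self.followers if reachable is None else reachable
        self.counter += 1
        zxid = (self.epoch, self.counter)
        acks = 1 + sum(f.on_propose(zxid, txn) for f in reachable)  # leader counts itself
        quorum = (len(self.followers) + 1) // 2 + 1
        if acks < quorum:
            return None  # not committed; treated as aborted in this sketch
        for f in reachable:
            f.on_commit(zxid)
        return zxid
```

With two followers the ensemble has three members, so the leader plus one follower is enough to commit, while a leader that reaches no follower cannot commit anything.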

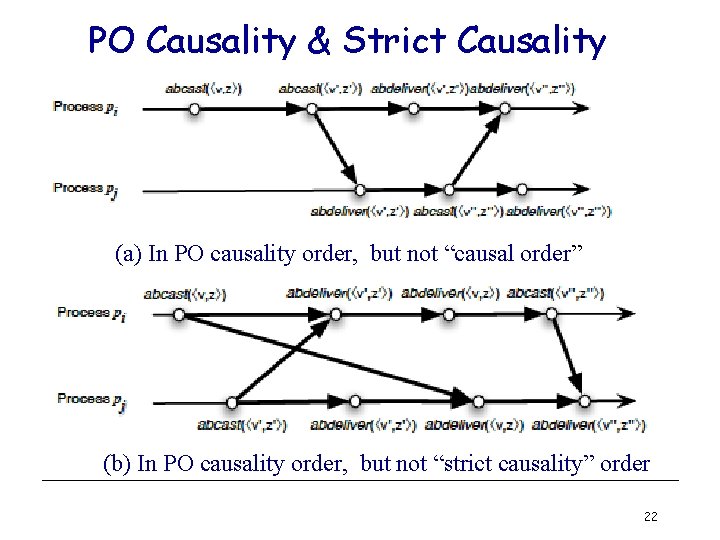

PO Causality & Strict Causality
(a) In PO causality order, but not “causal order”
(b) In PO causality order, but not “strict causality” order
