Determining the number of clusters using information entropy

Determining the number of clusters using information entropy for mixed data • Presenter: Hong-Yi Cai • Authors: Jiye Liang, Xingwang Zhao, Deyu Li, Fuyuan Cao, Chuangyin Dang • Pattern Recognition (PR), 2012

Outline • Motivation • Objectives • Methodology • Experiments • Conclusions • Comments

Motivation • Determining the initial parameters of a clustering algorithm is the most difficult problem. • No existing clustering algorithm clusters mixed data sets effectively.

Objectives • To propose a generalized mechanism for mixed data sets by integrating Rényi entropy and complement entropy. • To improve the k-prototypes algorithm using the new generalized mechanism.

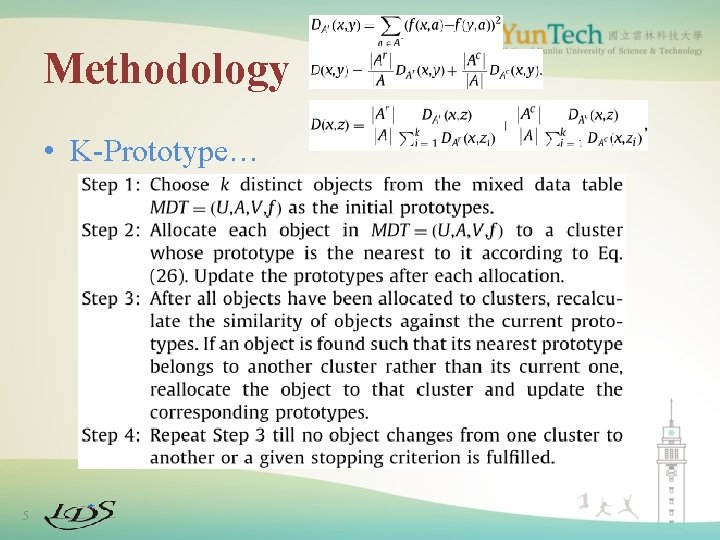

Methodology • K-Prototype…
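The slide's formula is not recoverable from the text, so the following is only a minimal sketch of the mixed dissimilarity commonly associated with k-prototypes: squared Euclidean distance on the numerical attributes plus a weighted count of mismatches on the categorical attributes. The function name, argument layout, and the default weight `gamma` are assumptions made for illustration.

```python
from typing import Sequence


def mixed_dissimilarity(
    x_num: Sequence[float],
    x_cat: Sequence[str],
    proto_num: Sequence[float],
    proto_cat: Sequence[str],
    gamma: float = 1.0,  # assumed weight balancing the two parts
) -> float:
    """Dissimilarity between one object and one cluster prototype."""
    # Numerical part: squared Euclidean distance.
    numeric_part = sum((a - b) ** 2 for a, b in zip(x_num, proto_num))
    # Categorical part: simple matching, 1 for every attribute that differs.
    categorical_part = sum(1 for a, b in zip(x_cat, proto_cat) if a != b)
    return numeric_part + gamma * categorical_part
```

A larger `gamma` makes categorical mismatches dominate the assignment of objects to prototypes, while a smaller value favors the numerical attributes.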

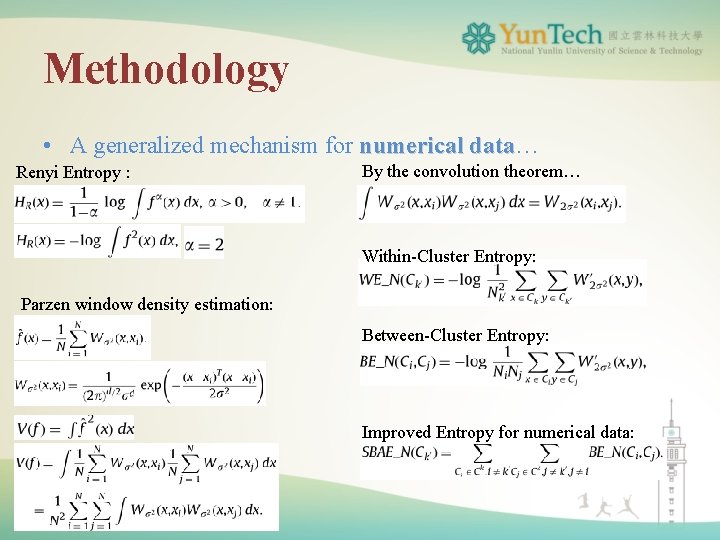

Methodology • A generalized mechanism for numerical data… • Rényi entropy: by the convolution theorem… • Within-cluster entropy • Parzen window density estimation • Between-cluster entropy • Improved entropy for numerical data
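The formulas on this slide survive only as labels. As a rough illustration of how Rényi entropy, Parzen window estimation, and the convolution theorem fit together, the sketch below uses the standard quadratic-entropy estimator: with Gaussian Parzen windows of variance `sigma**2`, the integral of the squared density reduces to a double sum of Gaussians of variance `2 * sigma**2` evaluated at pairwise differences. The quadratic order, the Gaussian kernel, the bandwidth `sigma`, and all function names are assumptions, and this is not claimed to be the paper's exact within-cluster or between-cluster entropy.

```python
import math
from typing import Sequence


def gaussian_kernel(diff: Sequence[float], var: float) -> float:
    """Isotropic Gaussian density with variance `var`, evaluated at `diff`."""
    d = len(diff)
    sq_norm = sum(v * v for v in diff)
    return math.exp(-sq_norm / (2 * var)) / ((2 * math.pi * var) ** (d / 2))


def renyi_quadratic_entropy(points: Sequence[Sequence[float]], sigma: float = 1.0) -> float:
    """Parzen-window estimate of Renyi's quadratic entropy for one cluster."""
    n = len(points)
    var = 2 * sigma ** 2  # convolving two Gaussians adds their variances
    total = 0.0
    for p in points:
        for q in points:
            diff = [a - b for a, b in zip(p, q)]
            total += gaussian_kernel(diff, var)
    return -math.log(total / n ** 2)
```

Under these assumptions a compact cluster yields a lower entropy than a widely spread one, which is the property an entropy-based within-cluster measure relies on.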

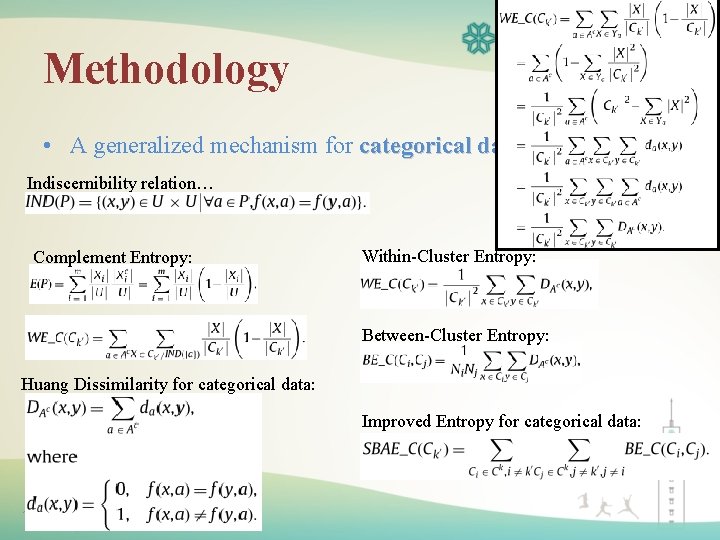

Methodology • A generalized mechanism for categorical data… • Indiscernibility relation… • Complement entropy • Within-cluster entropy • Between-cluster entropy • Huang dissimilarity for categorical data • Improved entropy for categorical data
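The complement entropy formula itself is not in the extracted text. A minimal sketch, assuming the usual definition over the equivalence classes induced by an indiscernibility relation (each class contributes its relative size multiplied by the relative size of its complement), is shown below for a single categorical attribute. The function name is hypothetical; passing tuples of values would extend it to several attributes.

```python
from collections import Counter
from typing import Hashable, Sequence


def complement_entropy(values: Sequence[Hashable]) -> float:
    """Complement entropy of the partition induced by equal attribute values."""
    n = len(values)
    # Objects with the same value are indiscernible and form one class.
    class_sizes = Counter(values).values()
    # Each class contributes (|X_i| / n) * (1 - |X_i| / n).
    return sum((size / n) * (1 - size / n) for size in class_sizes)
```

The value is 0 when all objects share one category and grows as the objects spread evenly over more categories.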

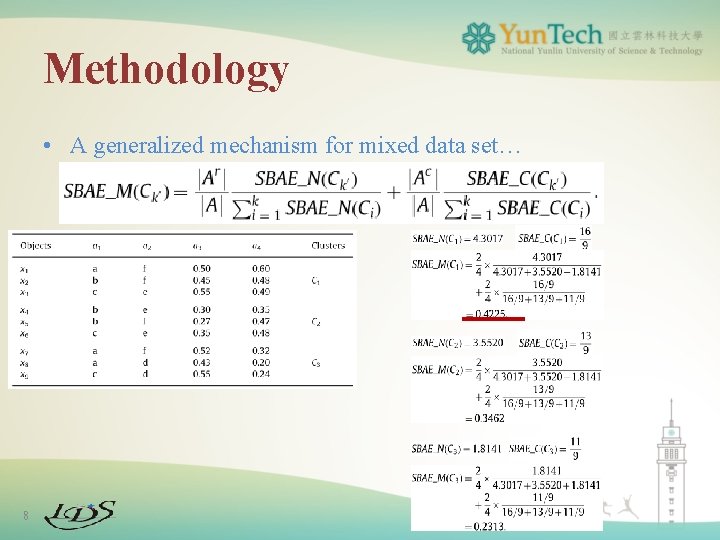

Methodology • A generalized mechanism for mixed data sets…

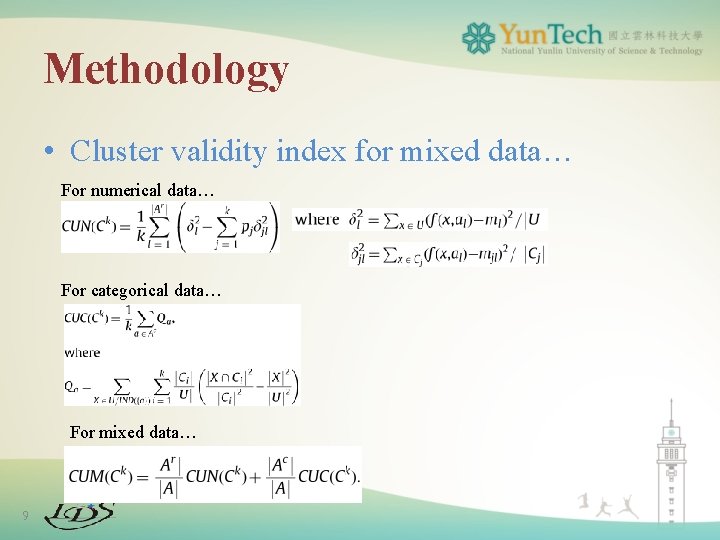

Methodology • Cluster validity index for mixed data… • For numerical data… • For categorical data… • For mixed data…

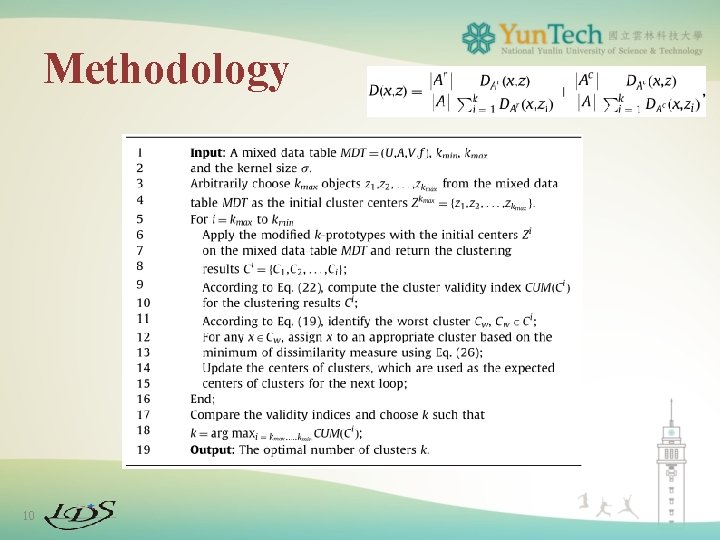

Methodology

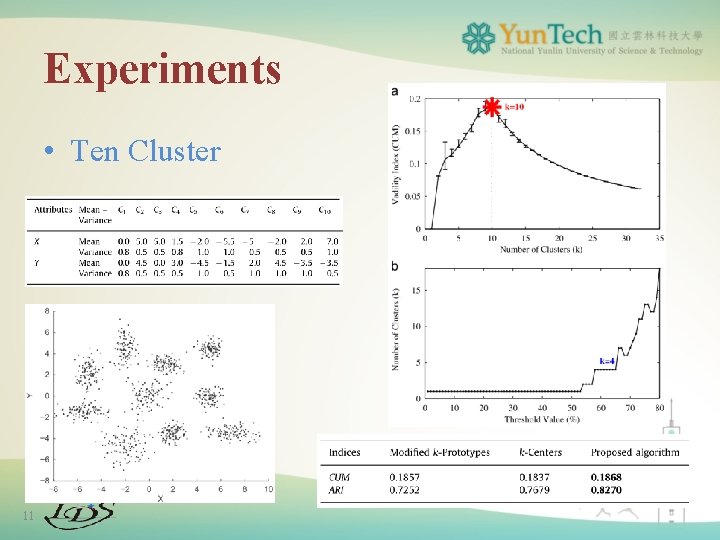

Experiments • Ten Cluster

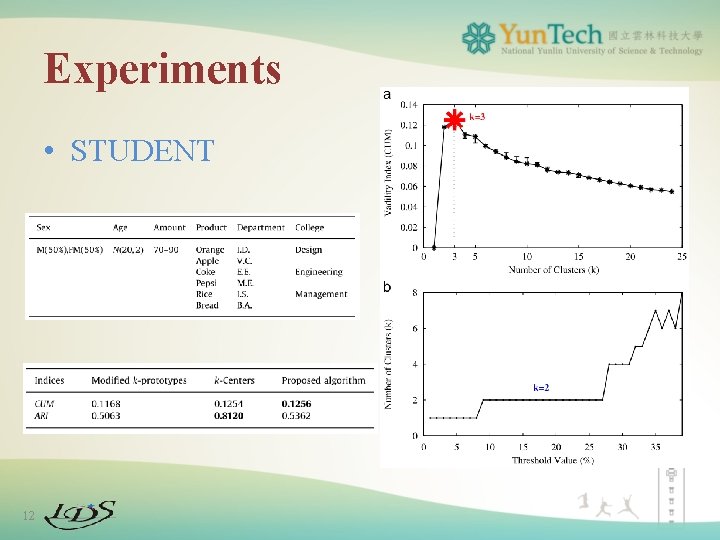

Experiments • STUDENT

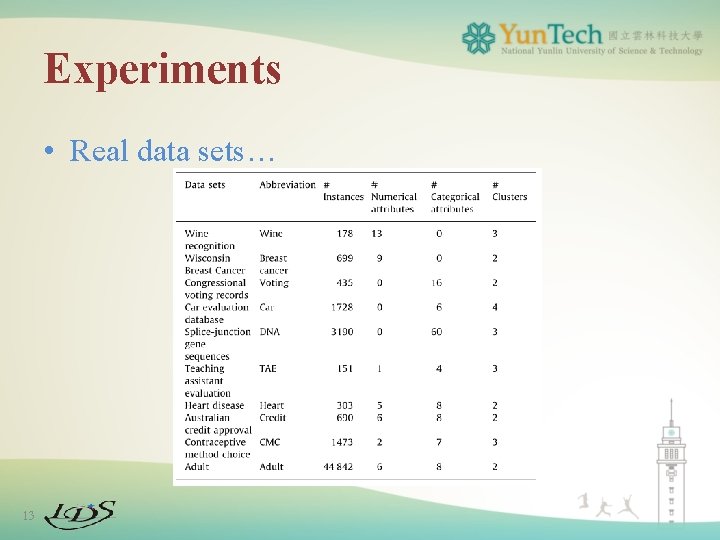

Experiments • Real data sets…

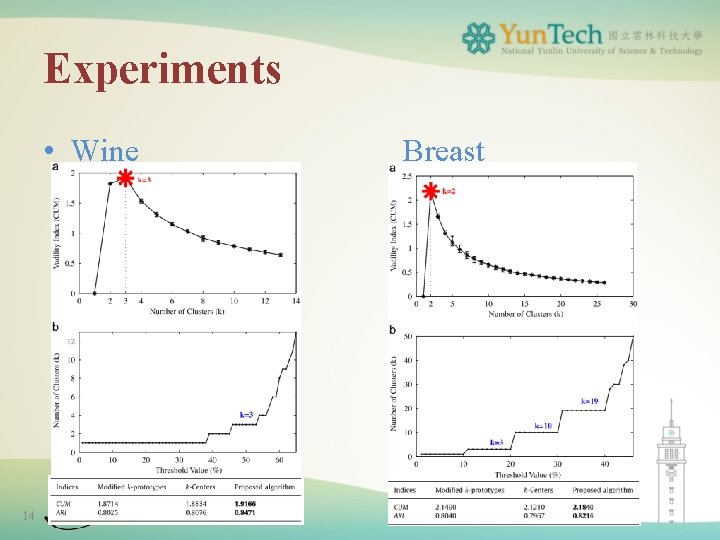

Experiments • Wine • Breast

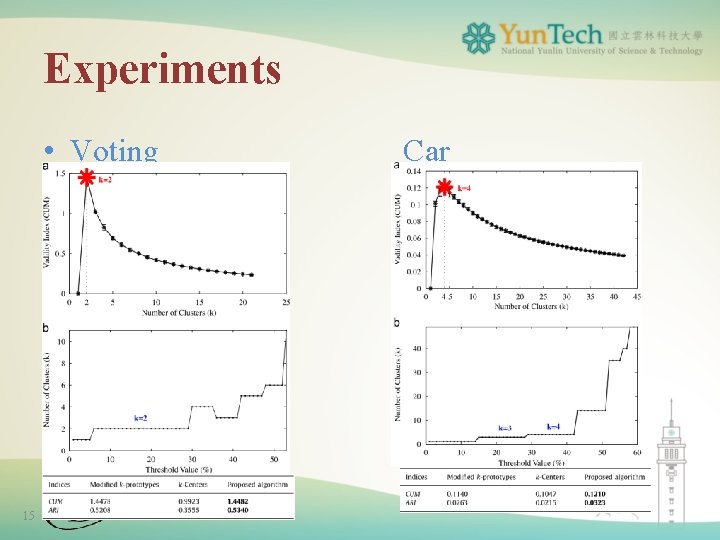

Experiments • Voting • Car

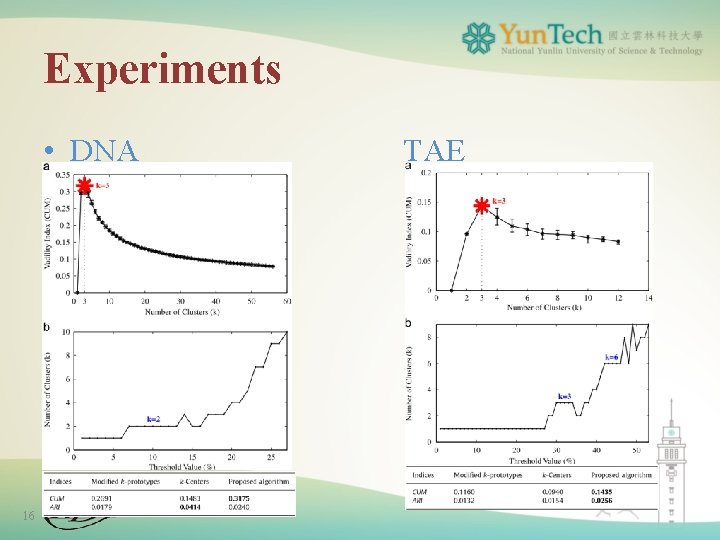

Experiments • DNA • TAE

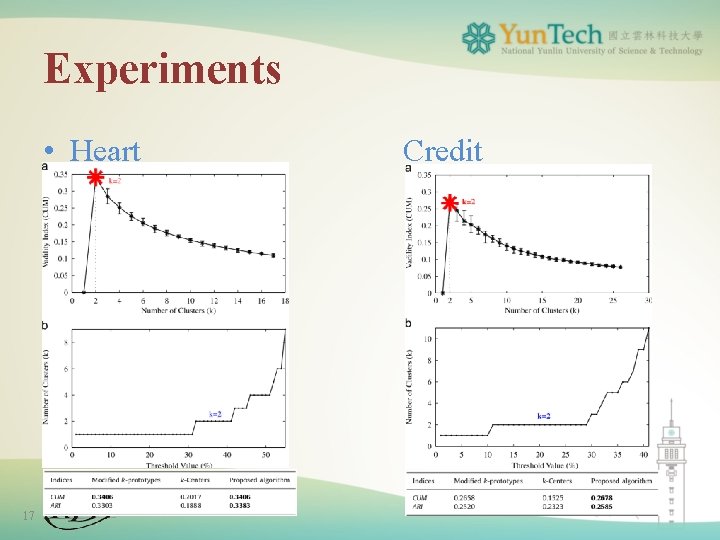

Experiments • Heart • Credit

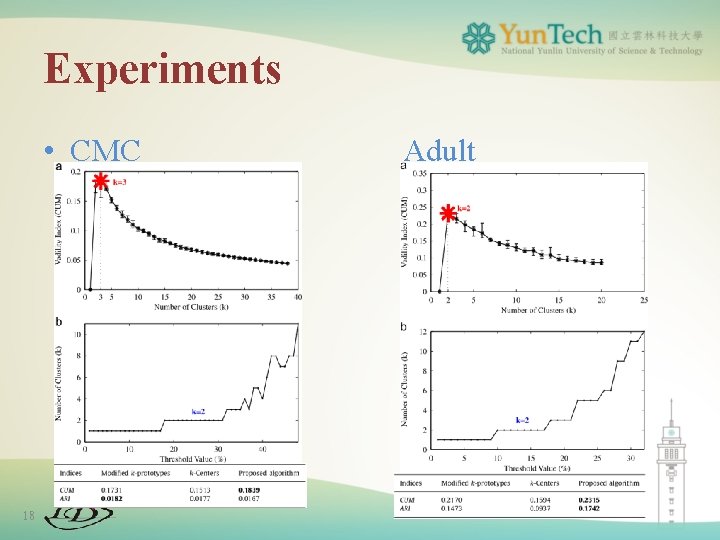

Experiments • CMC • Adult

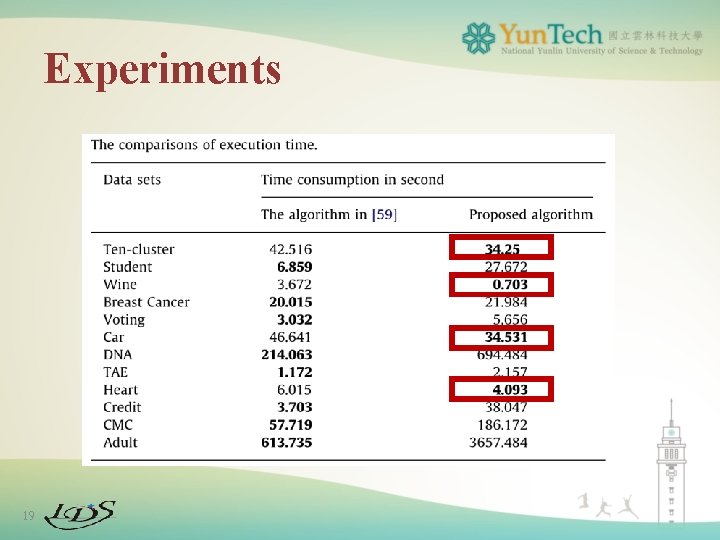

Experiments

Conclusions • The generalized mechanism and the improved algorithm cluster mixed data sets effectively and determine the optimal number of clusters.

Comments • Advantages – The entropy can be applied to mixed data sets. • Applications – Clustering of mixed-type data
