
Detecting Unexpected Behaviour in Vacuum Gauges
By: Xavier Weiss
Supervisor: Francesco Giordano

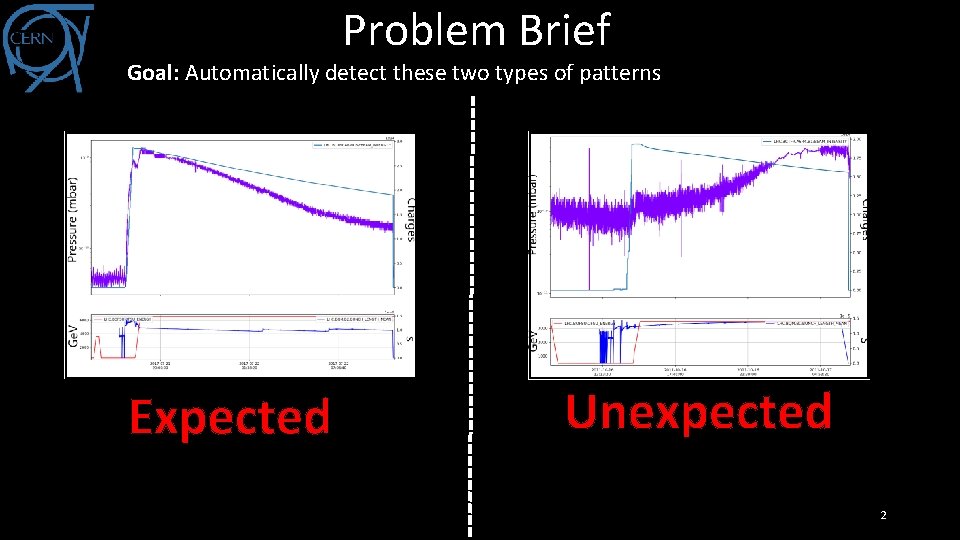

Problem Brief
Goal: automatically detect these two types of pattern.
(Figure panels: Expected, Unexpected)

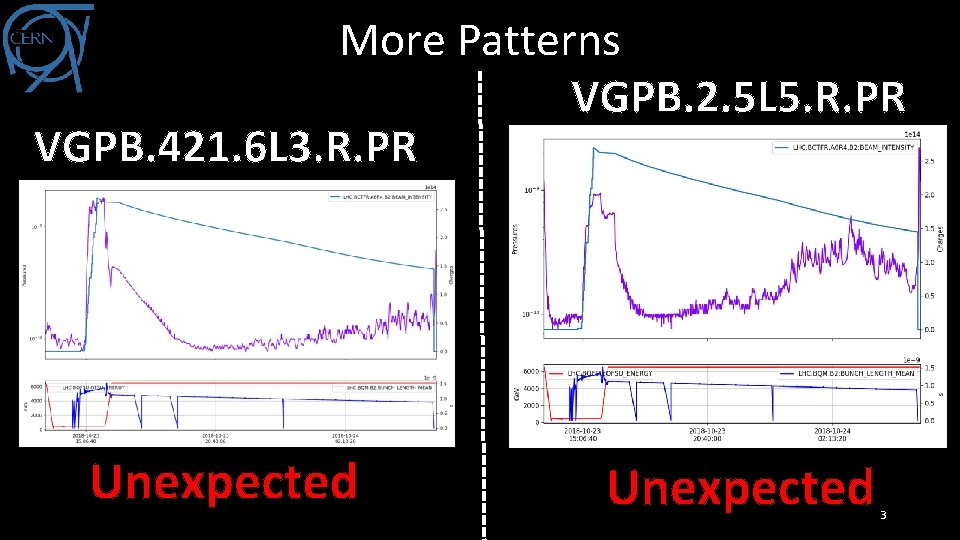

More Patterns
(Figure panels: VGPB.2.5L5.R.PR, VGPB.421.6L3.R.PR; Unexpected)

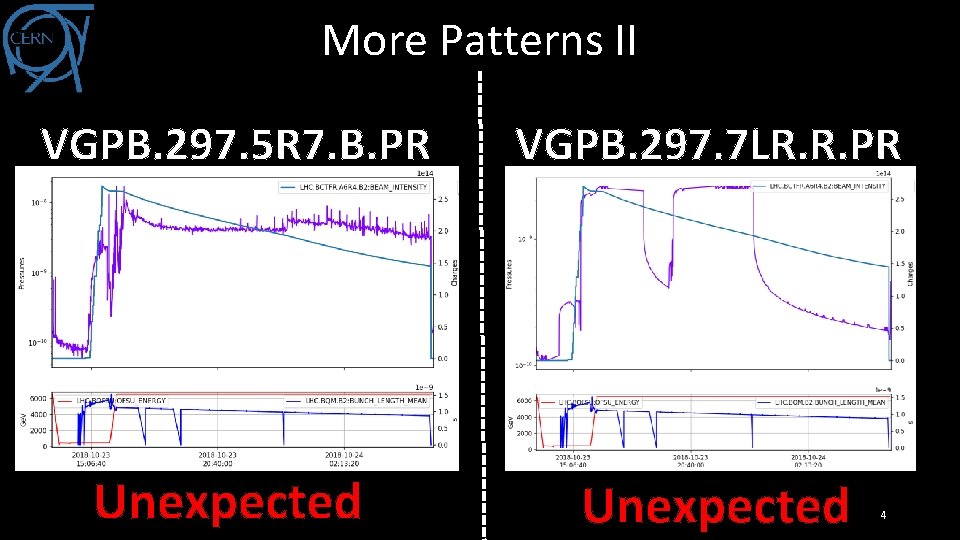

More Patterns II
(Figure panels: VGPB.297.5R7.B.PR, VGPB.297.7LR.R.PR; Unexpected)

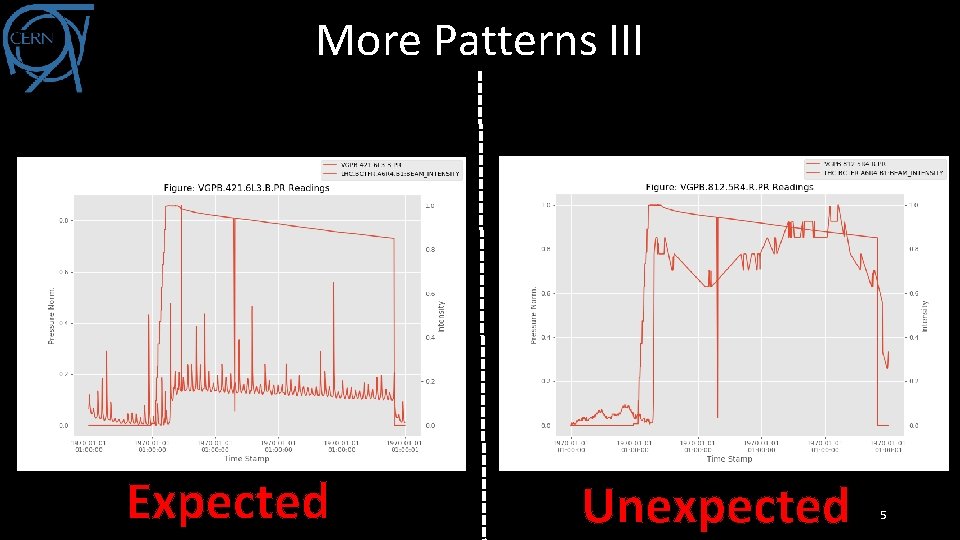

More Patterns III
(Figure panels: Expected, Unexpected)

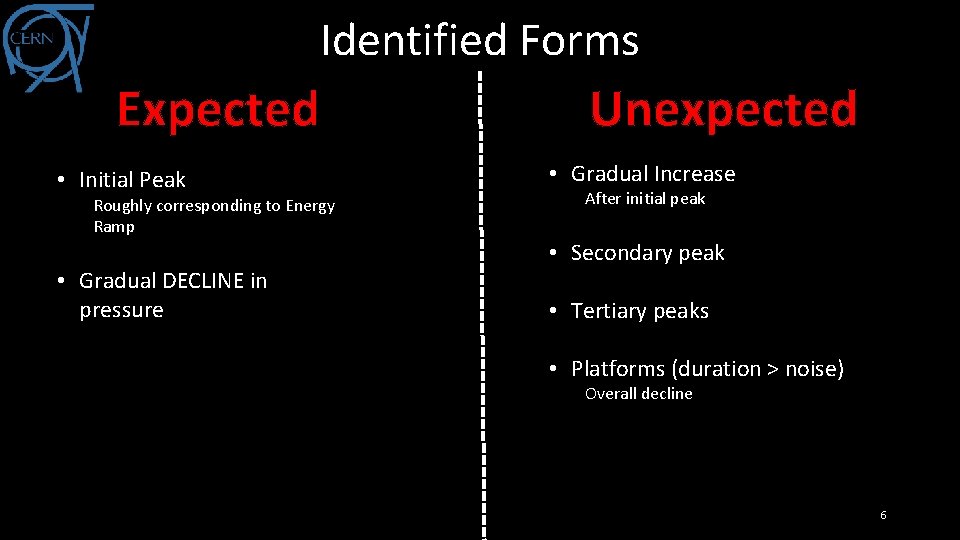

Identified Forms
Expected
• Initial peak, roughly corresponding to the energy ramp
• Gradual decline in pressure
Unexpected
• Gradual increase after the initial peak
• Secondary peak
• Tertiary peaks
• Plateaus (duration > noise) within an overall decline

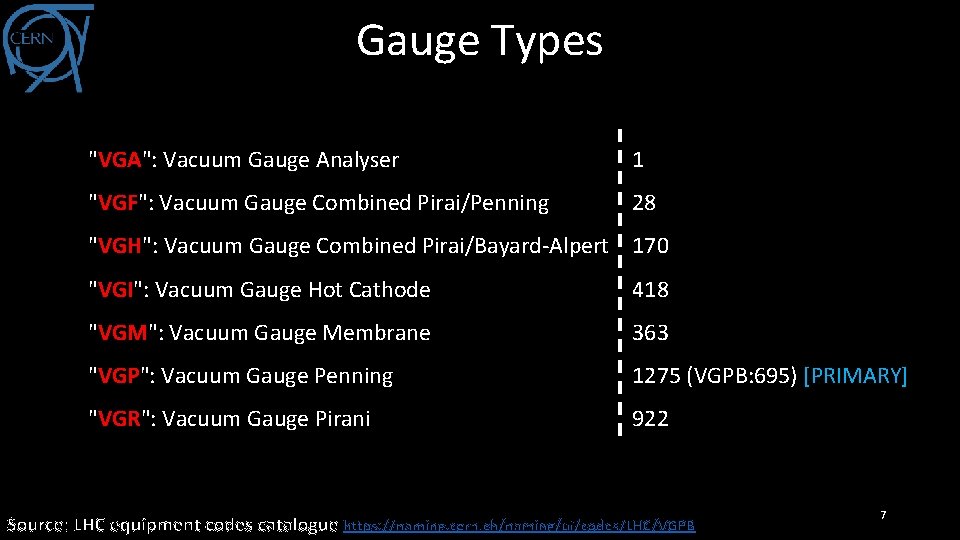

Gauge Types

| Code | Description | Count |
|---|---|---|
| VGA | Vacuum Gauge Analyser | 1 |
| VGF | Vacuum Gauge Combined Pirani/Penning | 28 |
| VGH | Vacuum Gauge Combined Pirani/Bayard-Alpert | 170 |
| VGI | Vacuum Gauge Hot Cathode | 418 |
| VGM | Vacuum Gauge Membrane | 363 |
| VGP | Vacuum Gauge Penning | 1275 (VGPB: 695) [PRIMARY] |
| VGR | Vacuum Gauge Pirani | 922 |

Source: LHC equipment codes catalogue, https://naming.cern.ch/naming/ui/codes/LHC/VGPB

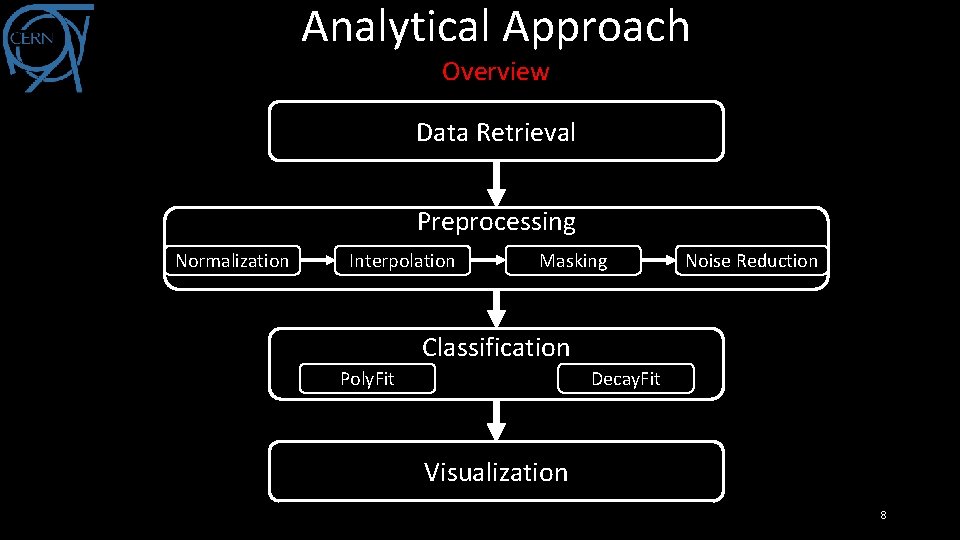

Analytical Approach: Overview
Data Retrieval → Preprocessing (Normalization, Interpolation, Masking, Noise Reduction) → Classification (Polynomial Fit, Decay Fit) → Visualization

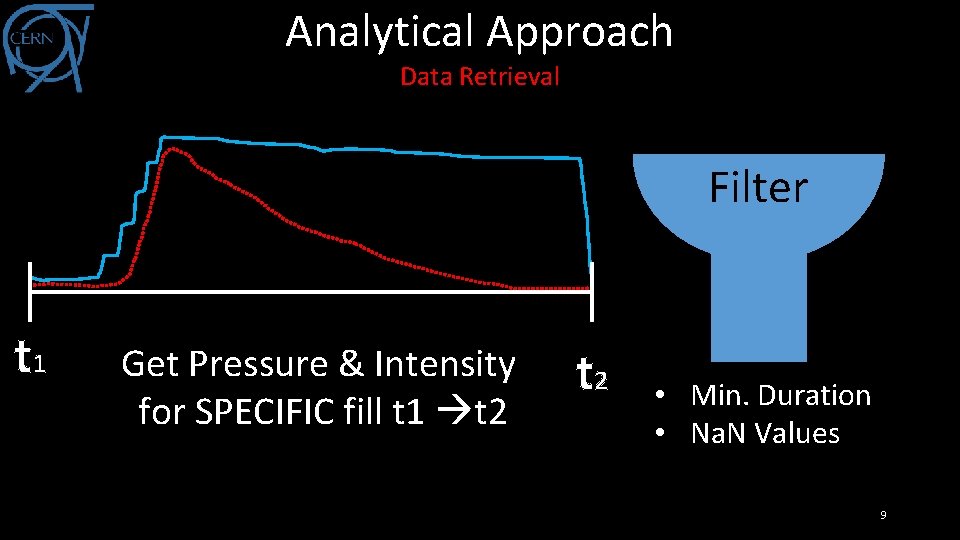

Analytical Approach: Data Retrieval
• Get pressure and intensity for a specific fill, from t1 to t2
• Filter fills by:
  • Minimum duration
  • NaN values

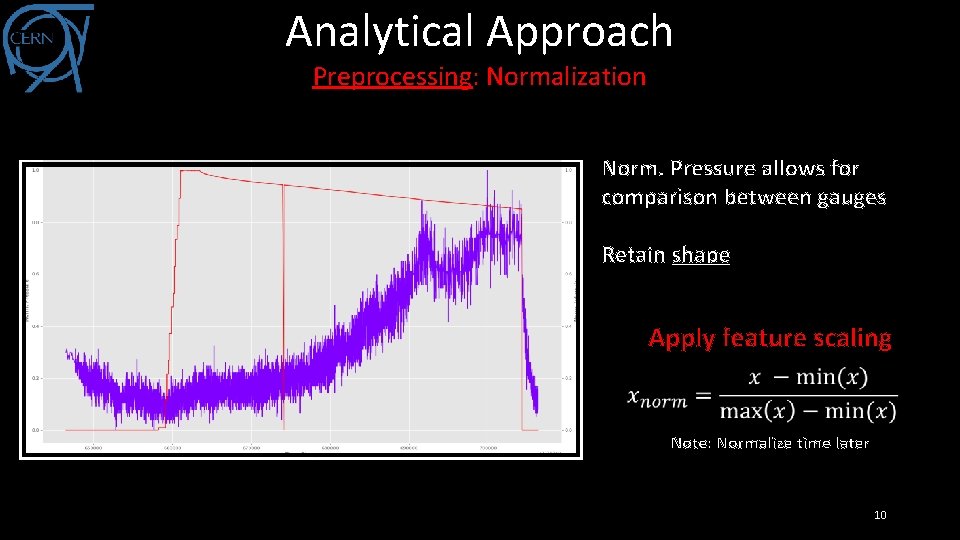
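The two retrieval filters can be sketched as a single check per fill. This is a minimal sketch assuming the fill is already loaded as NumPy-compatible arrays; `is_usable_fill`, its arguments, and the use of seconds for `min_duration` are hypothetical choices, not the project's code.

```python
import numpy as np

def is_usable_fill(timestamps, pressure, intensity, min_duration):
    """Return True if a fill passes both filters from the slide.

    Hypothetical helper: `timestamps` and `min_duration` share a unit
    (e.g. seconds); any NaN in the fill disqualifies it.
    """
    t = np.asarray(timestamps, dtype=float)
    p = np.asarray(pressure, dtype=float)
    i = np.asarray(intensity, dtype=float)
    if t.size < 2:
        return False  # too few samples to define a duration
    # Filter 1: minimum duration between the first (t1) and last (t2) reading
    if t[-1] - t[0] < min_duration:
        return False
    # Filter 2: reject fills with NaN values in any channel
    if np.isnan(t).any() or np.isnan(p).any() or np.isnan(i).any():
        return False
    return True
```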

Analytical Approach Preprocessing: Normalization
• Normalizing pressure allows comparison between gauges
• Retains the shape of the curve
• Apply feature scaling
• Note: time is normalized later

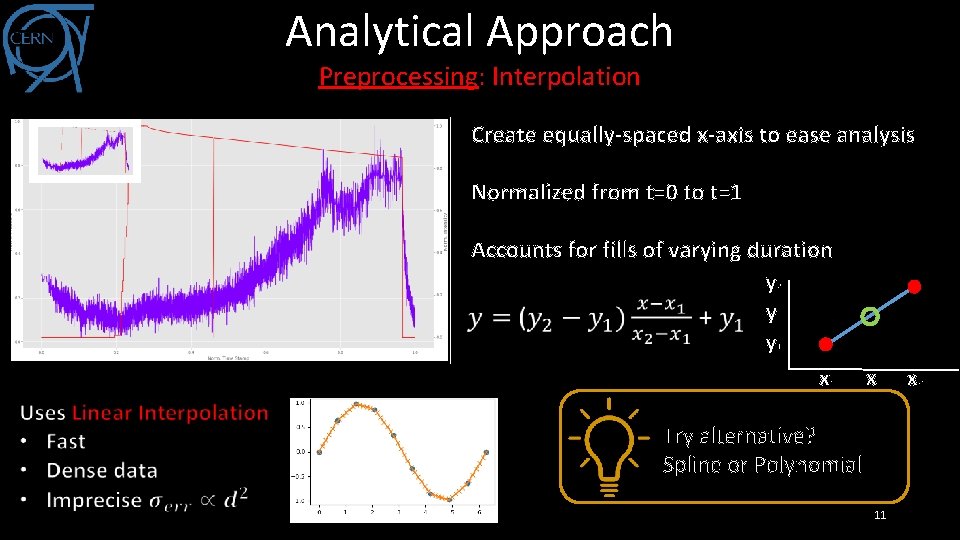
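The slide does not say which feature scaling is used; min-max scaling is one common choice and is assumed here. Because it is a linear map, it retains the shape of the curve while putting every gauge on the same [0, 1] range.

```python
import numpy as np

def min_max_scale(pressure):
    """Linearly rescale readings to [0, 1] (assumed form of feature scaling)."""
    p = np.asarray(pressure, dtype=float)
    span = p.max() - p.min()
    if span == 0:
        return np.zeros_like(p)  # flat signal: nothing to scale
    return (p - p.min()) / span
```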

Analytical Approach Preprocessing: Interpolation
• Create an equally spaced x-axis to ease analysis
• Time is normalized from t = 0 to t = 1
• Accounts for fills of varying duration
• Alternative to try: spline or polynomial interpolation
(Figure: linear interpolation between points (x1, y1) and (x2, y2))

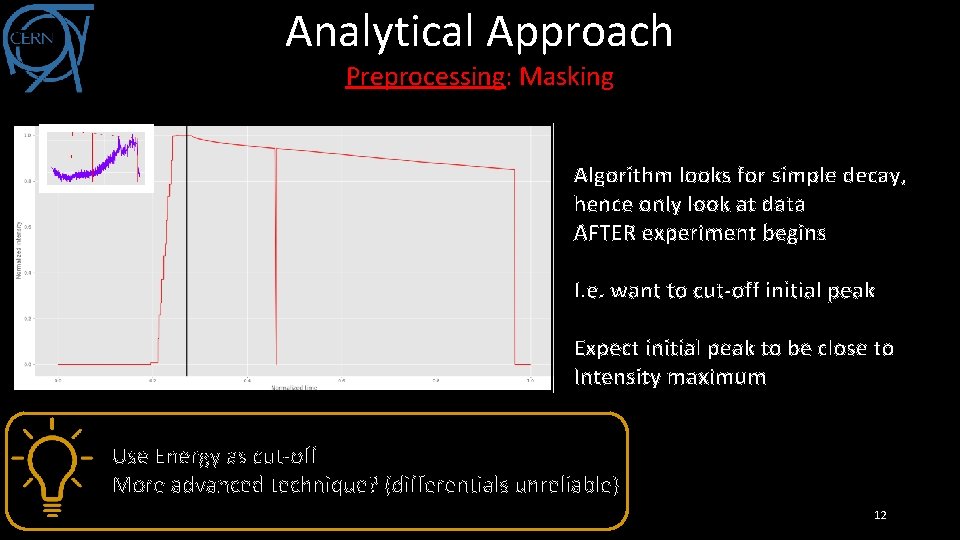
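The figure shows linear interpolation, $y = y_1 + (x - x_1)\frac{y_2 - y_1}{x_2 - x_1}$. A sketch of the resampling step follows, using NumPy's `np.interp`, which applies exactly that rule between neighbouring samples. The helper name and the default of 500 output points are assumptions for illustration.

```python
import numpy as np

def resample_unit_time(timestamps, values, n_points=500):
    """Map a fill onto n_points equally spaced times in [0, 1].

    Time is normalized first (t=0 at the start, t=1 at the end), so fills of
    different durations end up on the same x-axis. Assumes sorted timestamps.
    """
    t = np.asarray(timestamps, dtype=float)
    y = np.asarray(values, dtype=float)
    t_norm = (t - t[0]) / (t[-1] - t[0])
    grid = np.linspace(0.0, 1.0, n_points)
    # Linear interpolation between the two readings bracketing each grid point
    return grid, np.interp(grid, t_norm, y)
```

For the spline alternative, `scipy.interpolate.CubicSpline` could replace `np.interp` at the cost of possible overshoot near sharp peaks.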

Analytical Approach Preprocessing: Masking
• The algorithm looks for a simple decay, so only data after the experiment begins is analysed, i.e. the initial peak is cut off
• The initial peak is expected to be close to the intensity maximum
• Energy is used as the cut-off
• A more advanced technique? (differentials are unreliable)

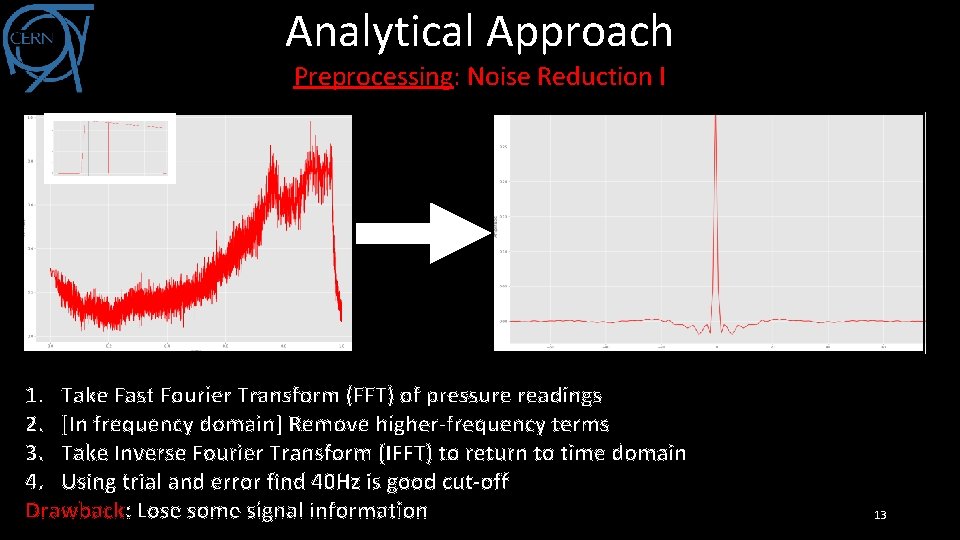
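One way to use energy as the cut-off is to start the analysis at the first sample where the beam energy reaches a fixed fraction of its maximum. The rule and the 0.99 default are assumptions (the slides only list the mask threshold as a parameter to vary), and `mask_after_ramp` is a hypothetical name.

```python
import numpy as np

def mask_after_ramp(energy, pressure, threshold=0.99):
    """Drop pressure samples recorded before the energy ramp finishes.

    Assumed rule: the ramp ends at the first sample where energy reaches
    `threshold` times its maximum; everything from there on is kept.
    """
    e = np.asarray(energy, dtype=float)
    p = np.asarray(pressure, dtype=float)
    # argmax on a boolean array returns the index of the first True
    start = int(np.argmax(e >= threshold * e.max()))
    return p[start:]
```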

Analytical Approach Preprocessing: Noise Reduction I
1. Take the Fast Fourier Transform (FFT) of the pressure readings
2. In the frequency domain, remove the higher-frequency terms
3. Take the Inverse Fast Fourier Transform (IFFT) to return to the time domain
4. Trial and error showed 40 Hz to be a good cut-off
Drawback: some signal information is lost

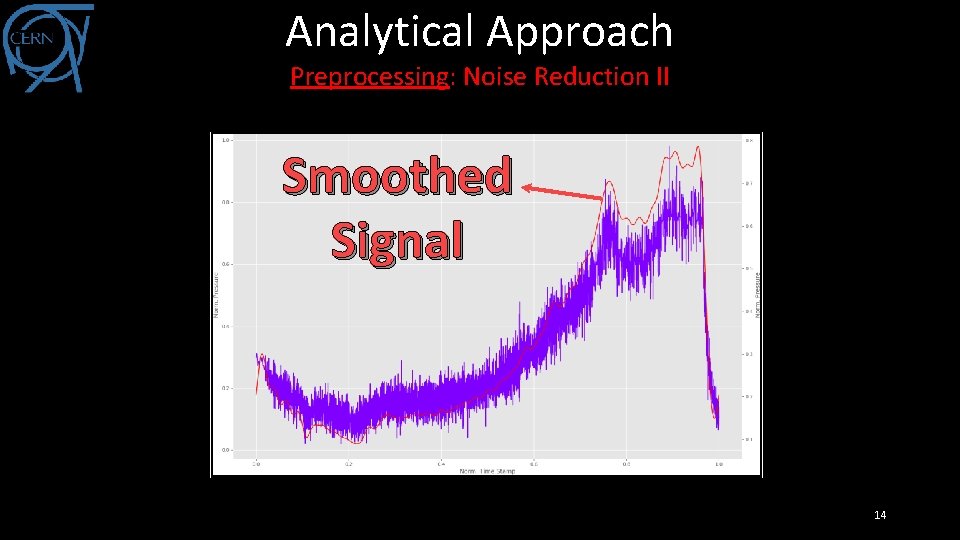
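Steps 1 to 3 amount to an ideal low-pass filter. A NumPy sketch follows; the caller must supply the sampling rate, since the slides do not state it, and `fft_lowpass` is a hypothetical name. Zeroing bins abruptly like this can cause ringing near sharp features, which is one concrete form of the information loss noted above.

```python
import numpy as np

def fft_lowpass(signal, sample_rate, cutoff_hz=40.0):
    """Remove frequency components above cutoff_hz via FFT -> mask -> IFFT."""
    x = np.asarray(signal, dtype=float)
    spectrum = np.fft.rfft(x)                             # step 1: to frequency domain
    freqs = np.fft.rfftfreq(x.size, d=1.0 / sample_rate)  # frequency of each bin
    spectrum[freqs > cutoff_hz] = 0.0                      # step 2: drop high frequencies
    return np.fft.irfft(spectrum, n=x.size)                # step 3: back to time domain
```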

Analytical Approach Preprocessing: Noise Reduction II
(Figure: smoothed signal)

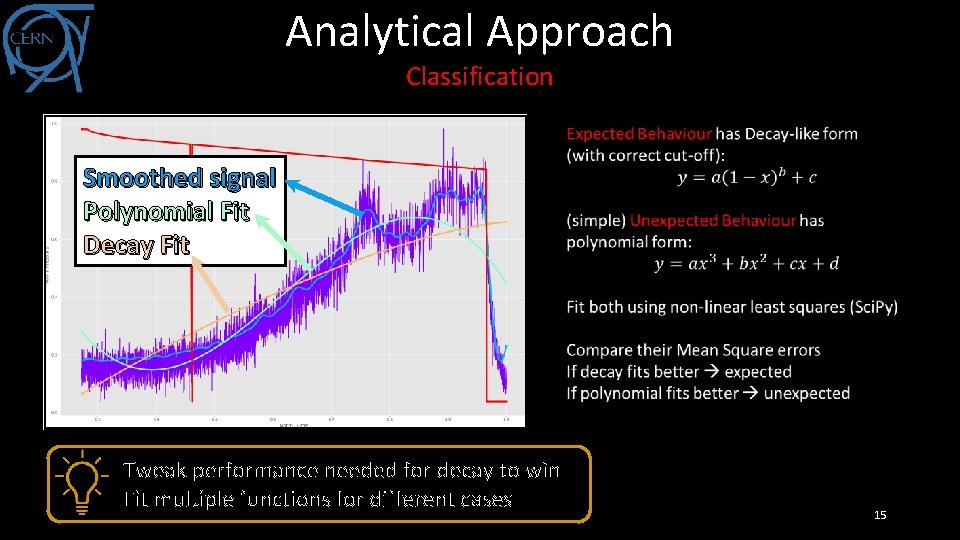

Analytical Approach: Classification
• Fit both a polynomial and a decay function to the smoothed signal and compare how well each fits
• Performance needs tweaking for the decay fit to win
• Fit multiple functions for different cases

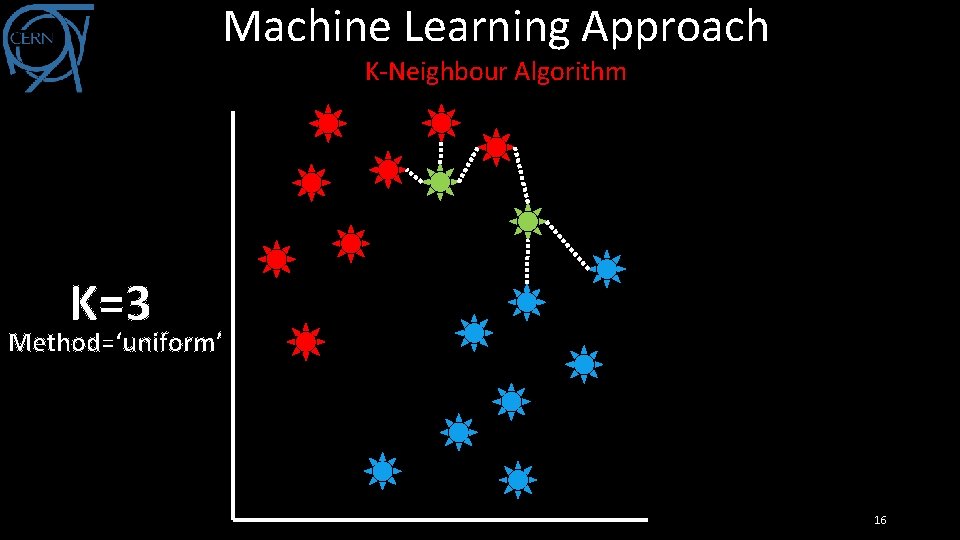
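A minimal sketch of the fit comparison, under several assumptions not stated on the slides: the decay model is $y \approx a e^{-bt} + c$, the polynomial is cubic, and the fit quality is the residual sum of squares (RSS). The decay fit scans a grid of rates $b$; for each fixed $b$ the model is linear in $(a, c)$, so ordinary least squares solves it exactly. The `margin` factor is a hypothetical knob for the "tweak needed for decay to win".

```python
import numpy as np

def fit_decay(t, y, rates=np.arange(0.25, 20.01, 0.25)):
    """Best RSS of y ≈ a*exp(-b*t) + c over a grid of decay rates b."""
    best = np.inf
    for b in rates:
        basis = np.column_stack([np.exp(-b * t), np.ones_like(t)])
        coef, *_ = np.linalg.lstsq(basis, y, rcond=None)  # solve for (a, c)
        best = min(best, float(np.sum((basis @ coef - y) ** 2)))
    return best

def fit_polynomial(t, y, degree=3):
    """RSS of a least-squares polynomial fit of the given degree."""
    coef = np.polyfit(t, y, degree)
    return float(np.sum((np.polyval(coef, t) - y) ** 2))

def classify(t, y, margin=1.0):
    """'expected' if the decay fit is at least as good as margin * polynomial RSS."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    if fit_decay(t, y) <= margin * fit_polynomial(t, y):
        return "expected"
    return "unexpected"
```

Raising `margin` above 1 favours the decay model, which offsets the polynomial's extra free parameter.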

Machine Learning Approach: K-Nearest Neighbours Algorithm
• K = 3
• Weighting method: 'uniform' (every neighbour's vote counts equally)

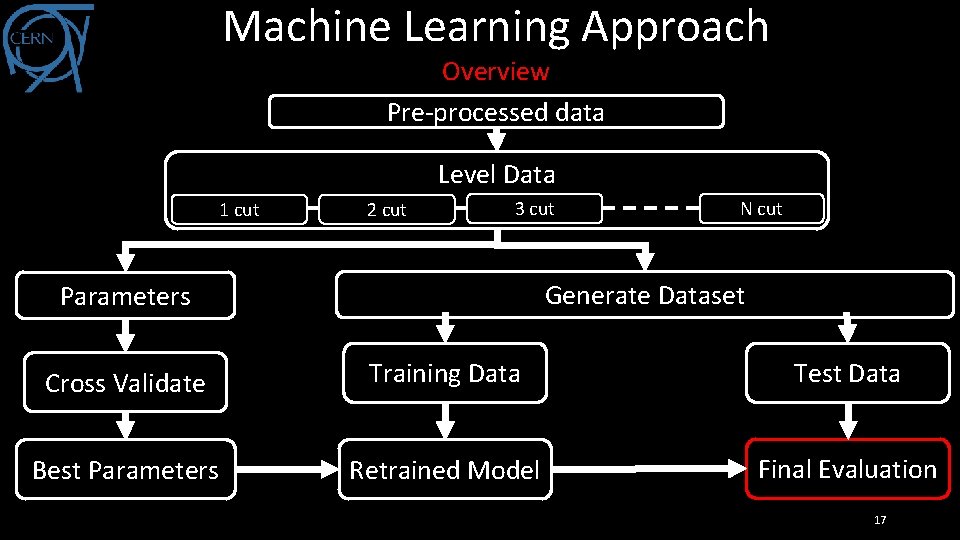
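To show what k = 3 with uniform weighting means, here is a from-scratch sketch. Euclidean distance is an assumption. In scikit-learn, the equivalent configuration is `KNeighborsClassifier(n_neighbors=3, weights="uniform")`; this sketch does not claim to reproduce the project's setup.

```python
import numpy as np

def knn_predict(X_train, y_train, X_query, k=3):
    """Label each query row by majority vote of its k nearest training rows.

    Uniform weighting: each neighbour gets one vote regardless of distance.
    """
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    X_query = np.atleast_2d(np.asarray(X_query, dtype=float))
    preds = []
    for q in X_query:
        dists = np.linalg.norm(X_train - q, axis=1)        # Euclidean distances
        nearest = np.argsort(dists, kind="stable")[:k]
        labels, counts = np.unique(y_train[nearest], return_counts=True)
        preds.append(labels[np.argmax(counts)])            # ties: smallest label wins
    return np.array(preds)
```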

Machine Learning Approach: Overview
Pre-processed data → Level Data (1 cut, 2 cuts, 3 cuts, …, N cuts) → Generate Dataset → Parameters → Cross-Validate (Training Data, Test Data) → Best Parameters → Retrained Model → Final Evaluation

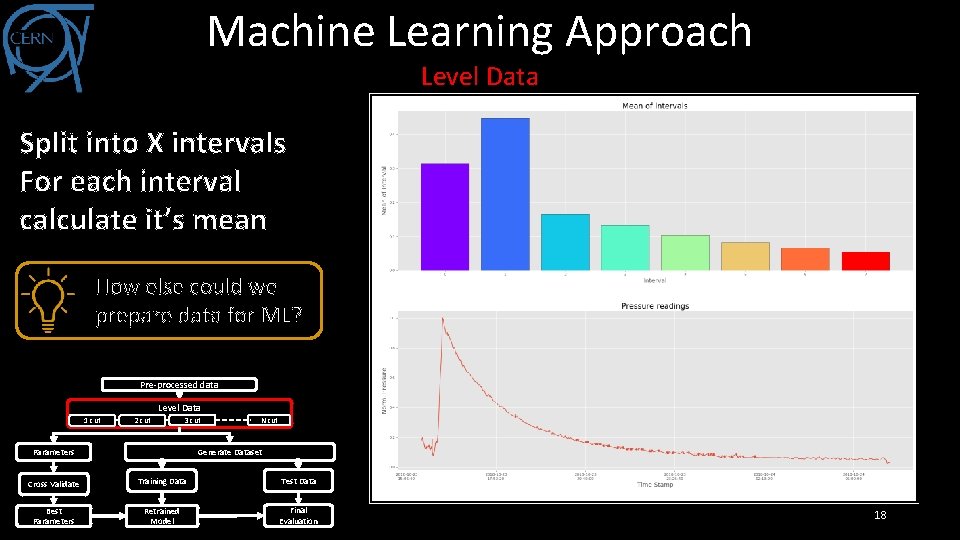

Machine Learning Approach: Level Data
• Split the signal into X intervals
• For each interval, calculate its mean
• How else could we prepare the data for ML?
(Pipeline diagram as in the overview)

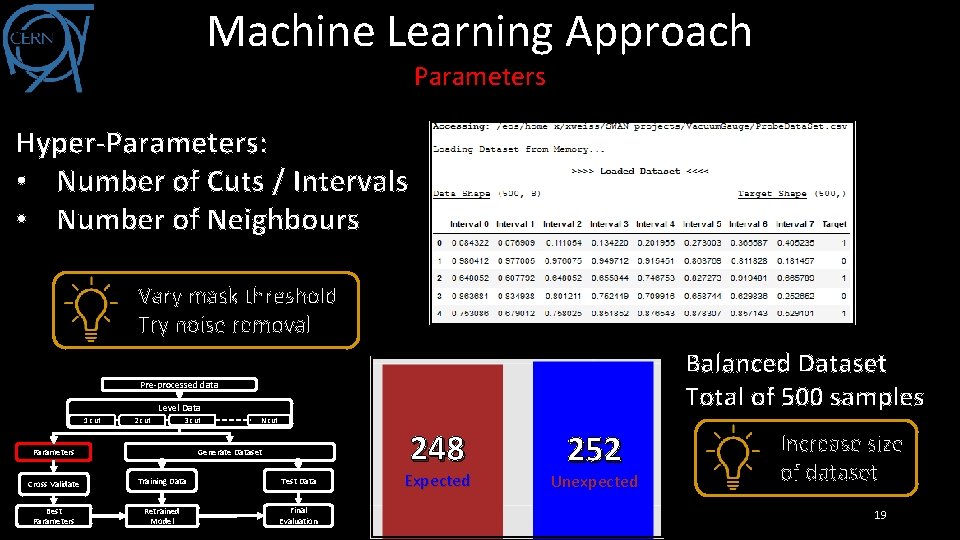
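Levelling turns each resampled signal into a fixed-length feature vector, one mean per interval. In this sketch `level` is a hypothetical name and `n_cuts` plays the role of X; `np.array_split` also handles lengths that do not divide evenly.

```python
import numpy as np

def level(signal, n_cuts):
    """Reduce a signal to n_cuts features: the mean of each interval.

    When the length is not a multiple of n_cuts, np.array_split gives the
    first intervals one extra sample each.
    """
    x = np.asarray(signal, dtype=float)
    return np.array([chunk.mean() for chunk in np.array_split(x, n_cuts)])
```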

Machine Learning Approach: Parameters
• Hyper-parameters:
  • Number of cuts / intervals
  • Number of neighbours
• Vary the mask threshold
• Try noise removal
• Balanced dataset: 500 samples in total (248 Expected, 252 Unexpected)
• Increase the size of the dataset
(Pipeline diagram as in the overview)

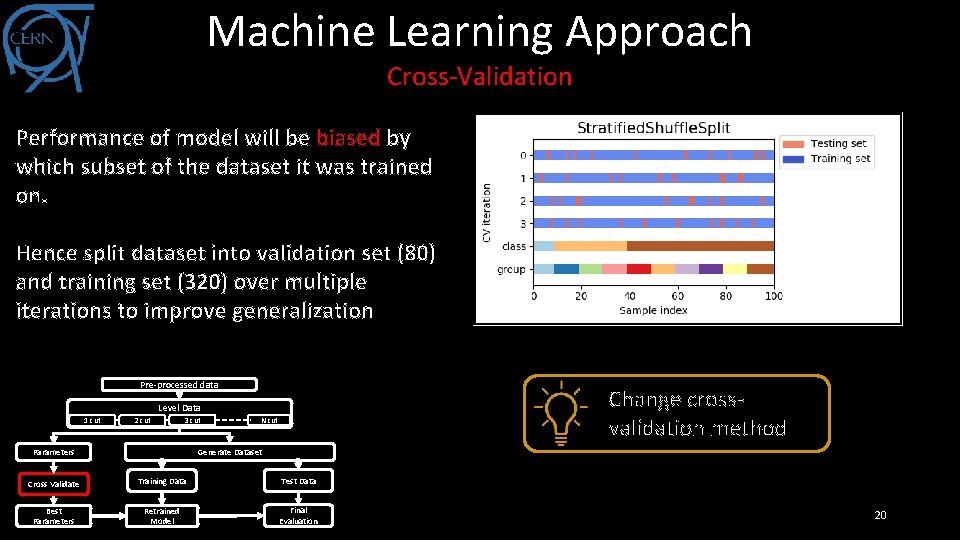

Machine Learning Approach: Cross-Validation
• A model's measured performance is biased by which subset of the dataset it was trained on
• Hence the dataset is split into a validation set (80 samples) and a training set (320 samples) over multiple iterations, giving a performance estimate that does not depend on any single split
• Change cross-validation method
(Pipeline diagram as in the overview)

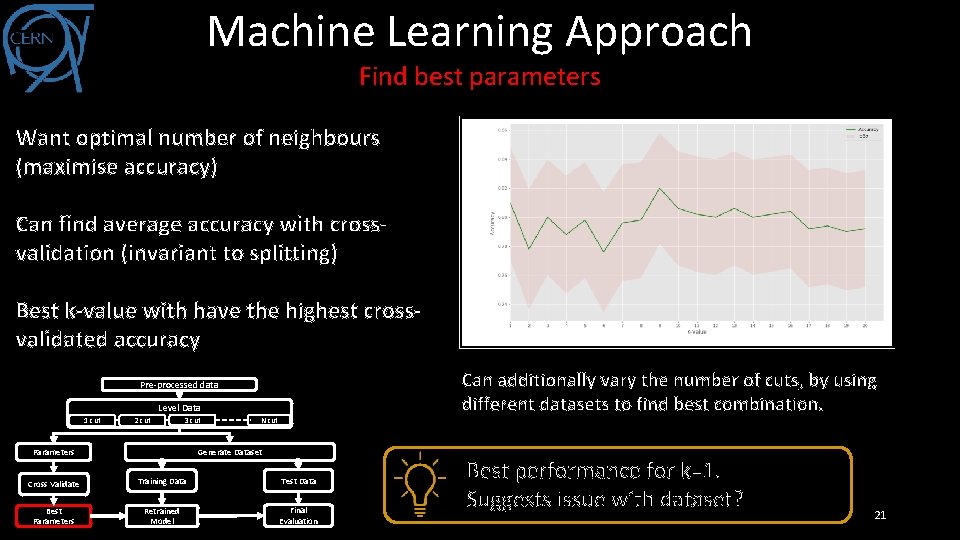
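The 320/80 split matches 5-fold cross-validation over 400 samples. The sketch below assumes that scheme: each sample lands in the validation set exactly once. The shuffling seed and the name `kfold_indices` are illustrative.

```python
import numpy as np

def kfold_indices(n_samples, n_folds=5, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once.

    With 400 samples and 5 folds, every split is 320 training / 80 validation.
    """
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, n_folds)
    for i in range(n_folds):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        yield train_idx, val_idx
```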

Machine Learning Approach: Find Best Parameters
• Want the optimal number of neighbours (maximising accuracy)
• Cross-validation gives an average accuracy that is less sensitive to how the data is split
• The best k-value will have the highest cross-validated accuracy
• The number of cuts can also be varied, using a different dataset for each, to find the best combination
• Best performance was at k = 1. Does this suggest an issue with the dataset?
(Pipeline diagram as in the overview)

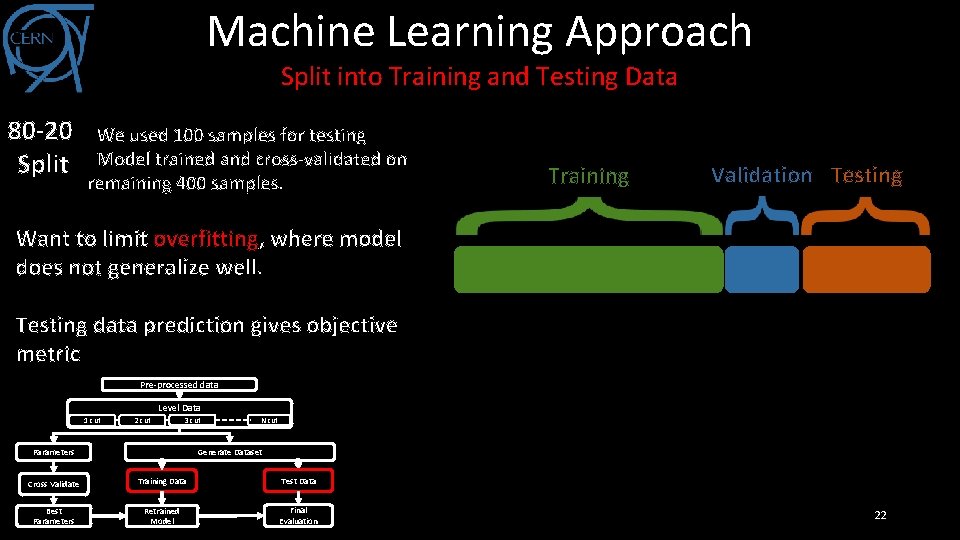
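The search over both hyper-parameters can be sketched as follows: for every (number of cuts, k) pair, compute the mean 5-fold accuracy and keep the best. Several things are assumptions here: binary 0/1 labels, the candidate k values, and a `datasets` dict holding one levelled feature matrix per cut count. All function names are hypothetical.

```python
import numpy as np

def knn_predict(X_train, y_train, X_query, k):
    # Pairwise Euclidean distances, shape (n_query, n_train)
    d = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1, kind="stable")[:, :k]
    votes = y_train[nearest]                         # labels of the k neighbours
    # Uniform majority vote for 0/1 labels; an exact tie (even k) falls to 0
    return (votes.mean(axis=1) > 0.5).astype(int)

def cv_accuracy(X, y, k, n_folds=5, seed=0):
    """Mean validation accuracy over n_folds shuffled folds.

    A fixed seed gives every (n_cuts, k) candidate the same folds.
    """
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), n_folds)
    scores = []
    for i, val in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        pred = knn_predict(X[train], y[train], X[val], k)
        scores.append(np.mean(pred == y[val]))
    return float(np.mean(scores))

def grid_search(datasets, y, ks=(1, 3, 5, 7, 9)):
    """datasets maps n_cuts -> levelled feature matrix (one row per fill).

    Returns (best cross-validated accuracy, n_cuts, k).
    """
    return max((cv_accuracy(X, y, k), n_cuts, k)
               for n_cuts, X in datasets.items() for k in ks)
```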

Machine Learning Approach: Split into Training and Testing Data
• 80/20 split: 100 samples are held out for testing
• The model is trained and cross-validated on the remaining 400 samples (training / validation / testing)
• Want to limit overfitting, where the model does not generalize well
• Predictions on the testing data give an objective metric
(Pipeline diagram as in the overview)

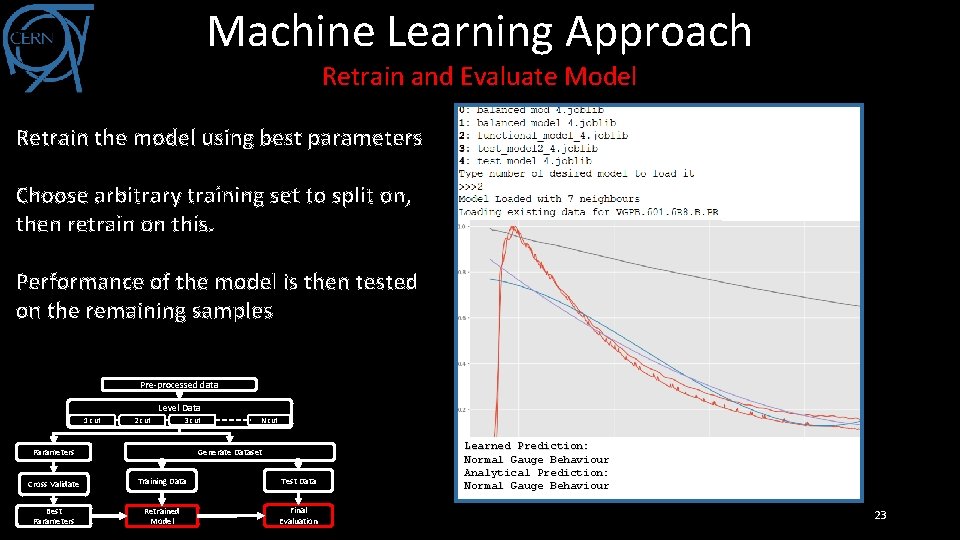
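A sketch of the 80/20 hold-out split on sample indices, assuming a plain random shuffle. The helper name and seed are illustrative. Because the dataset is balanced, a stratified split could be used instead to keep the 248/252 ratio in both parts; scikit-learn's `train_test_split` offers this through its `stratify` argument.

```python
import numpy as np

def train_test_split_indices(n_samples, test_fraction=0.2, seed=0):
    """Shuffle indices and hold out test_fraction of them for final testing."""
    order = np.random.default_rng(seed).permutation(n_samples)
    n_test = int(round(test_fraction * n_samples))
    return order[n_test:], order[:n_test]  # (train/validation indices, test indices)
```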

Machine Learning Approach: Retrain and Evaluate Model
• Retrain the model using the best parameters
• Choose an arbitrary training set to split on, then retrain on it
• The model's performance is then tested on the remaining samples
(Pipeline diagram as in the overview)
Example (figure): Learned prediction: normal gauge behaviour; Analytical prediction: normal gauge behaviour

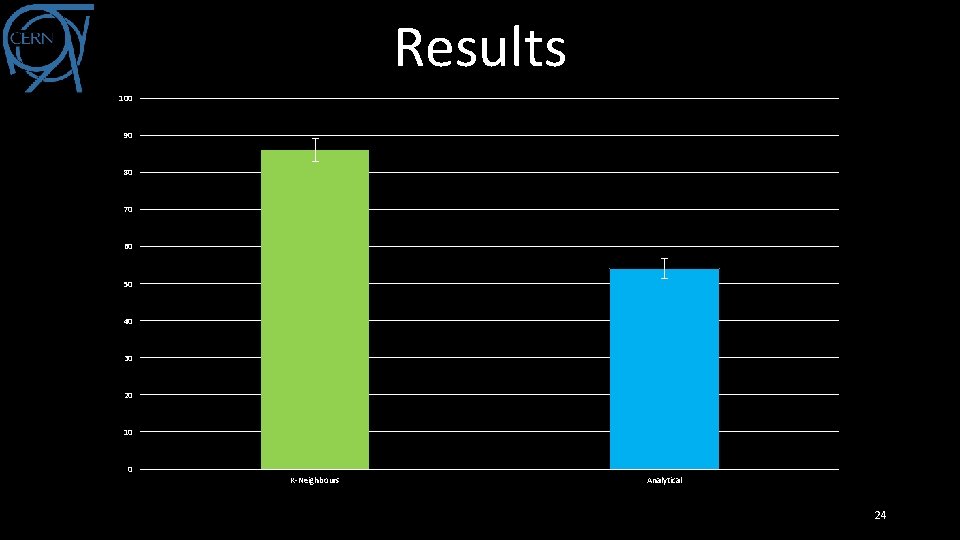
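The final evaluation compares predictions on the held-out samples with their labels. A minimal sketch reporting accuracy plus confusion counts follows. The label convention (1 = unexpected behaviour, 0 = expected) is an assumption.

```python
import numpy as np

def evaluate(y_true, y_pred):
    """Accuracy and confusion counts on the held-out test set.

    Assumed labels: 1 = unexpected behaviour (positive), 0 = expected.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    return {"accuracy": (tp + tn) / len(y_true), "tp": tp, "tn": tn, "fp": fp, "fn": fn}
```

Confusion counts show whether errors come mostly from missed unexpected gauges (`fn`) or from false alarms (`fp`). Accuracy alone does not distinguish the two.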

Results
(Figure: bar chart comparing the K-Neighbours and Analytical approaches, y-axis 0 to 100)

Conclusion
• The problem: a binary classification problem with diverse behaviour within each class
• Analytical approach: fit curves to the readings and compare how well they fit
• Machine learning approach: compare a new sample with its k nearest neighbours
• Performance:
  • The analytical approach performs well under ‘normal’ conditions but does not generalize well to diverse behaviour
  • The machine learning approach performs well on diverse behaviour but needs improvement under ‘normal’ conditions

Challenges
• Generalizing to all gauge types (the dataset is primarily VGPB)
  • Are other types less reliable?
• A reliable method for cutting off the initial peak
• Dealing with edge cases:
  • Stable pressure (neither increasing nor decreasing)
  • Small plateaus in pressure (noise or coupling?)
• Is levelling the best approach?
  • Simple and computationally efficient
  • Loses some information, but also reduces overfitting (training on noise)

- Slides: 26