DETECTING DATA ERRORS WHERE ARE WE AND WHAT

- Slides: 53

DETECTING DATA ERRORS : WHERE ARE WE AND WHAT NEEDS TO BE DONE ? ZIAWASCH ABEDJAN MIT CSAIL XU CHU UNIVERSITY OF WATERLOO RAUL CASTRO FERNANDEZ MIT CSAIL IHAB F. LLYAS UNIVERSITY OF WATERLOO MOURAD OUZZANI QATAR COMPUTING RESEARCH INSTITUTE, HBKU DONG DENG TSINGHUA UNIVERSITY PAOLO PAPOTTI ARIZONA STATE UNIVERSITY MICHAEL STONEBRAKER MIT CSAIL NAN TANG QATAR COMPUTING RESEARCH INSTITUTE, HBKU PROCEEDINGS OF THE VLDB ENDOWMENT, VOL. 9, NO. 12 161014 tabata

AUTHOR Ziawasch Abedjan § MIT CSAIL § Database § Data Integration § Data Profiling

Abstract

ABSTRACT Data Cleaning Technology § Detect and possibly repair certain classes of errors. § Data cleaning has played a critical role in ensuring data quality. Types of errors § Outlier § Duplicate § Missing value § Violations of integrity constraints →Different types of errors are in the same data set.

ABSTRACT Two questions 1. are these tools robust enough to capture most errors in real-world data sets? 2. what is the best strategy to holistically run mul- tiple tools to optimize the detection effort? Prepare five datasets § Using real world dataset Combine multiple tools and minimizing human effort in verifying results

Introduction

INTRODUCTION Tool categories 1. Rule-based detection algorithm § User can specify a collection of rules that clean data will obey. ex) multi-attribute functional dependencies 2. Pattern enforcement and transformation tool § By Inspecting semantic or syntactic in dataset, detect errors. 3. Quantitative error detection § Expose outliers, and glitches in the dataset. 4. Record linkage and de-duplication algorithm § Entity consolidation when multiple records have data for the same entity. § onflicting values for the same attribute can be found, indicating possible errors.

INTRODUCTION Several challenges 1. Synthetic data and errors § Most cleaning algorithms have been evaluated on synthetic or real-world data with synthetically injected errors. →However, these test soundness of algorithms, effectiveness of in the real is unclear. 2. Combination of error types and tools § There are many types of errors in the dataset, and same errors are detected by various tools. →If we don’t care about this, miss an opportunity to accumulate evidence from multiple tools. 3. Human involvement § Humans’ power is limited so we should minimize the cost of the humans’ task.

INTRODUCTION Our Evaluation Methodology § Obtained a collection of real-world data sets(§ 2) § It was important for this experiment to work with as many real-world data sets as possible. § Obtained full or partial ground truth for each data set. § Selected data cleaning tools(§ 3) § we are not interested in automatic repair. § Used tools in many strategies § Evaluate effectiveness by using precision and recall. (§ 4)

INTRODUCTION Our Evaluation Methodology § While we did our best in using a tool, we miss some errors. § Since we do not have an oracle and cannot optimally configure a tool, we use the known ground truth to estimate the upper-bound recall. § We count missed error which is detectable in the tool as recall of the tool. ex) When an error that can be captured by defining a new functional dependency, we count this error towards the upperbound recall of the rule-based tool.

INTRODUCTION Conclusion 1. There is no single dominant tool, so it is important to combine multiple tools. 2. Considering overlap of errors in various tool, we can reduce “false positive” by ordering tools. Due to large variance between tools, this ordering strategy must be data set specific. 3. The percentage of errors that can be found by the ordered application of all tools is well less than 100%. We tried to detect remaining errors by using some tools, but we didn’t find data errors that can be spotted by humans.

DATA ERRORS AND DATA SETS

DATA ERRORS AND DATA SETS Types of errors 1. Outlier § Values that deviate from the distribution of values in a column of a table. 2. Duplicates § Distinct records that refer to the same real-world entity, but attribute values do not match. 3. Rule violations § Values that violate any kind of integrity constraints. 4. Pattern violations § Values that violate syntactic and semantic constraints.

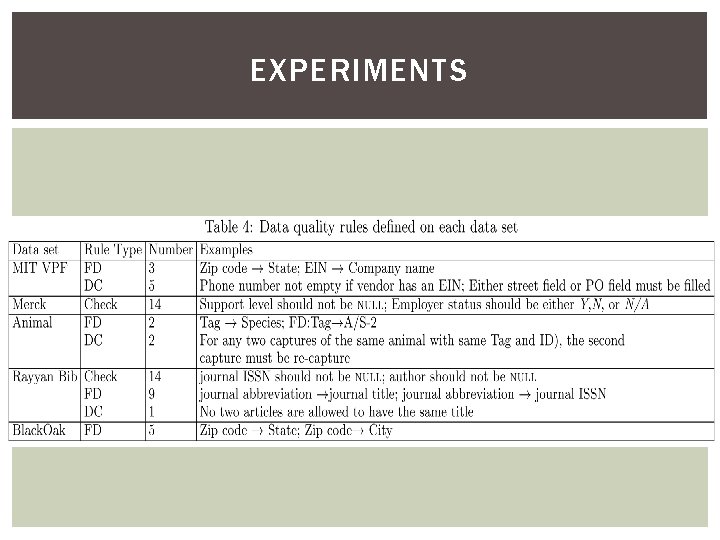

DATA ERRORS AND DATA SETS Datasets 1. MIT VPF § Maintains several finance databases, and related to procurement. § When MIT executes a purchase order, customer information is inserted automatically. →Some errors occur which don’t fit to the format. § To get ground truth, employees of VPF manually curated a random sample of 13, 603 records (more than half of the data set) and marked erroneous fields

DATA ERRORS AND DATA SETS Datasets 2. Merck § Provides dataset that describes IT services and software systems within the company that are partly managed by third parties. § The dataset has 68 attributes but is very sparse. § Various third parties insert values, so it is not consistently formatted. →Merck provided custom script that produce a cleaned version of the dataset. We used this version as a ground truth. § The script normalizes columns and allows for uniform value representation

DATA ERRORS AND DATA SETS Datasets 3. Animal § Provided by scientists at UC Berkeley who are studying the effects of firewood cutting on small terrestrial vertebrates. § Each column has information about animal’s tag number, weight, captured location, and date as attribute. § There occurs wrong number insertion. § ground truth made by human by fixing datasets.

DATA ERRORS AND DATA SETS Datasets 4. Rayyan Bib § These are literature reviews focused on a research question, and identify and synthesize all research evidence relevant to that question. § Users search various database and consolidate their search results into long lists § These references are coming from multiple source sand some users may manually manipulate these citations, the data is prone to errors.

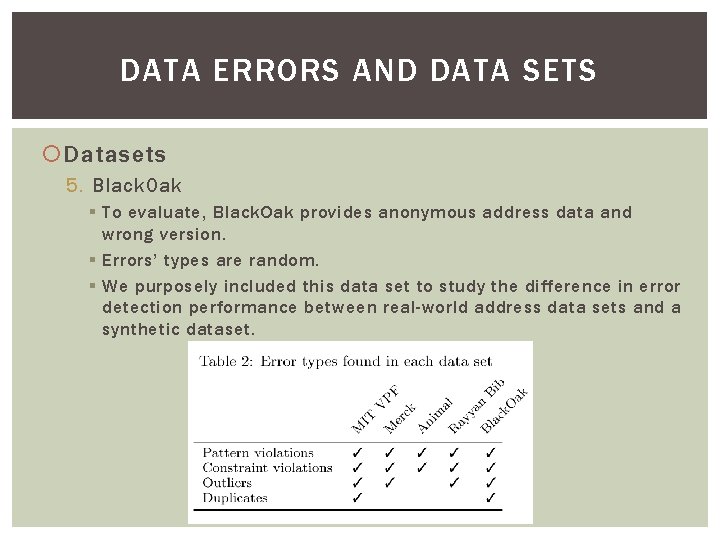

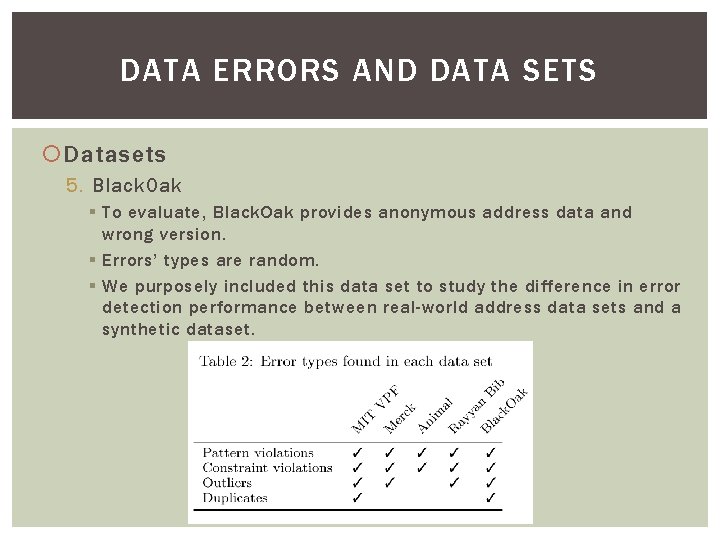

DATA ERRORS AND DATA SETS Datasets 5. Black. Oak § To evaluate, Black. Oak provides anonymous address data and wrong version. § Errors’ types are random. § We purposely included this data set to study the difference in error detection performance between real-world address data sets and a synthetic dataset.

DATA CLEANING TOOLS

DATA CLEANING TOOLS Outlier Detection § DBOOST § This tool uses histograms, Gaussian and multivariate Gaussian mixtures (GMM) § Histograms create a de facto distribution of the data. § Gaussian and GMM create distribution, if these are given mean and standard deviation. § it is necessary to configure the parameters of the different outlier detection methods: number of bins and their width for histograms.

DATA CLEANING TOOLS Rule-based Error Detection § DC-Clean § This tool focuses on denial constraints (DCs) and reports any cell that participates in at least one violation as erroneous. § We design a collection of DCs to capture the semantics of the data. Ex) for the animal data set, “if there are two captures of the same animal indicated by the same tag number, then the first capture must be marked as original”

DATA CLEANING TOOLS Pattern-based Detection § Open Refine § This tool can make group(called facet) columns by each values of attributes. § If there are not consistently formatted, the values are displayed an we can modify. § Trifacta § Detect various syntactic errors in dataset. § Detect outlier value that deviate strongly from the value lengths distribution. § KATARA § If a value violates a semantic pattern, the value is regarded as an error.

DATA CLEANING TOOLS Pattern-based Detection § PENTAHO § We can create graphs about ETL(Extract-Translate-Load) operation. § We can convert coded value to correct value. (automatic data cleaning) § KNIME § In addition to PENTAHO, KNIME includes node about machine learning operation. § Unlike OPEN REFINE and TRIFACTA, KNIME doesn’t automatically display outliers and type mismatches, so the user has to know exactly what kind of rules and patterns need to be verified.

DATA CLEANING TOOLS Duplicate Detection § TAMR is the tool for industrial strength data integration algorithms for record linkage and schema mapping. § TAMR detects duplications by machine learning based on expert sourcing and similarity metrics.

DATA CLEANING TOOLS Combination of Multiple Tools § Union All and Min-k § Union All…Use results of all tools. § Min-k…Exclude an error if less than k tools only can detect the error.

DATA CLEANING TOOLS Combination of Multiple Tools Ordering based on precision If combine multiple tools, we should verify whether truly error or not and the verification cost may be significant. →Two solutions

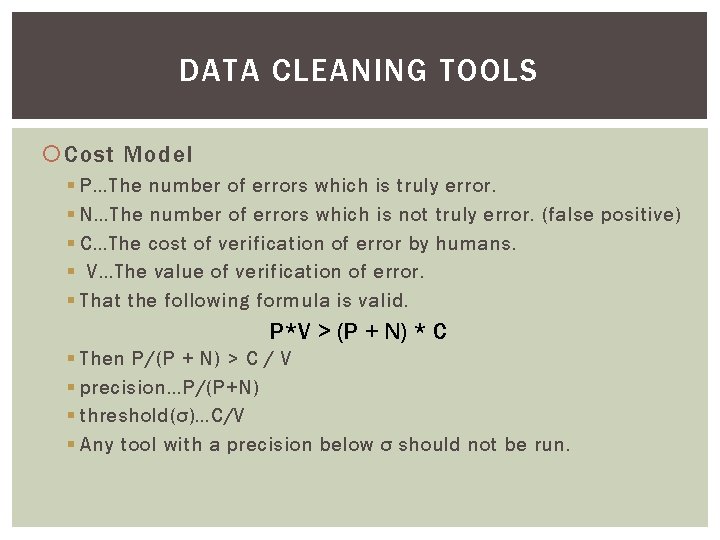

DATA CLEANING TOOLS Cost Model § P…The number of errors which is truly error. § N…The number of errors which is not truly error. (false positive) § C…The cost of verification of error by humans. § V…The value of verification of error. § That the following formula is valid. P*V > (P + N) * C § Then P/(P + N) > C / V § precision…P/(P+N) § threshold(σ)…C/V § Any tool with a precision below σ should not be run.

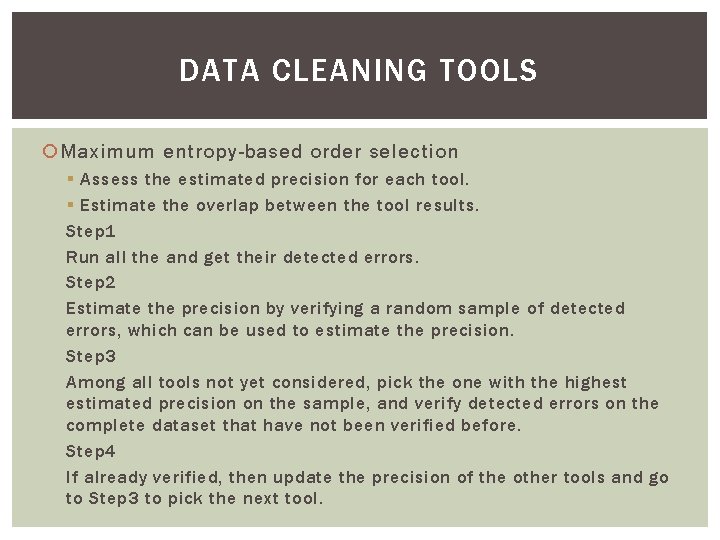

DATA CLEANING TOOLS Maximum entropy-based order selection § Assess the estimated precision for each tool. § Estimate the overlap between the tool results. Step 1 Run all the and get their detected errors. Step 2 Estimate the precision by verifying a random sample of detected errors, which can be used to estimate the precision. Step 3 Among all tools not yet considered, pick the one with the highest estimated precision on the sample, and verify detected errors on the complete dataset that have not been verified before. Step 4 If already verified, then update the precision of the other tools and go to Step 3 to pick the next tool.

EXPERIMENTS

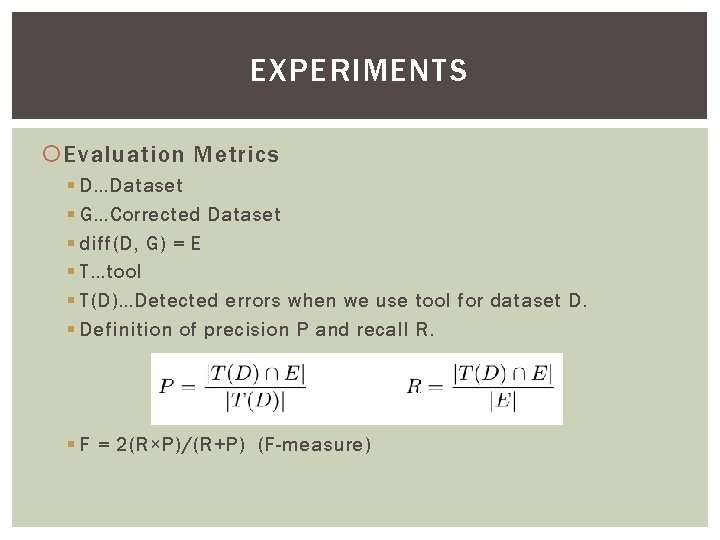

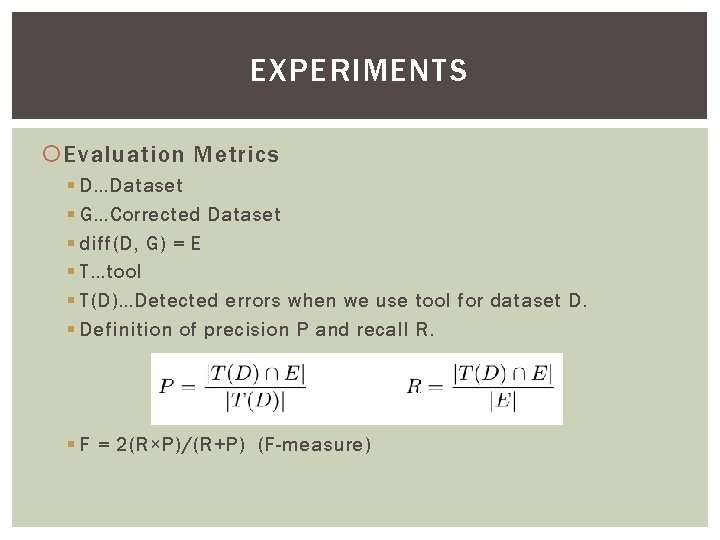

EXPERIMENTS Evaluation Metrics § D…Dataset § G…Corrected Dataset § diff(D, G) = E § T…tool § T(D)…Detected errors when we use tool for dataset D. § Definition of precision P and recall R. § F = 2(R×P)/(R+P) (F-measure)

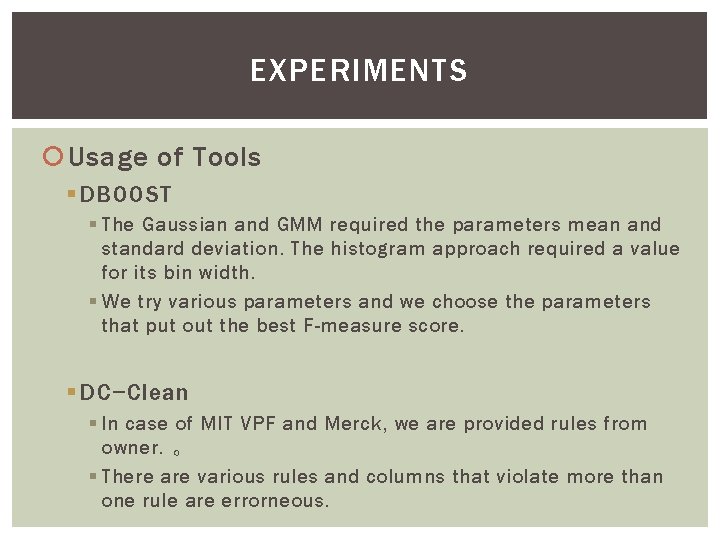

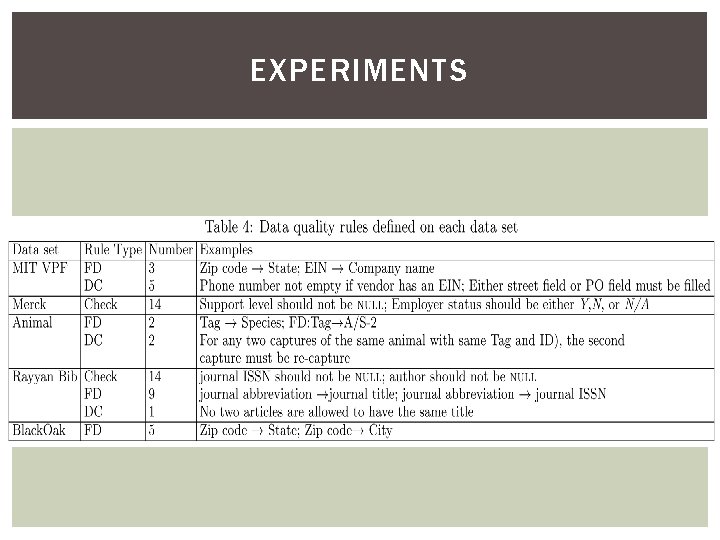

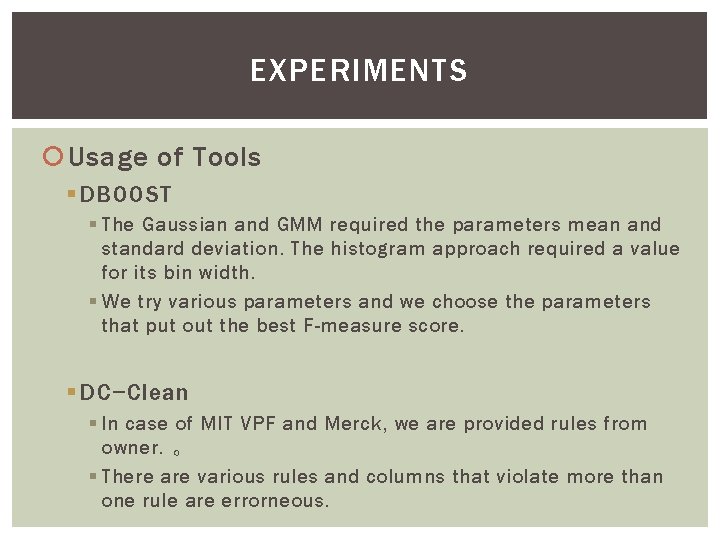

EXPERIMENTS Usage of Tools § DBOOST § The Gaussian and GMM required the parameters mean and standard deviation. The histogram approach required a value for its bin width. § We try various parameters and we choose the parameters that put out the best F-measure score. § DC−Clean § In case of MIT VPF and Merck, we are provided rules from owner. 。 § There are various rules and columns that violate more than one rule are errorneous.

EXPERIMENTS

EXPERIMENTS OPEN REFINE § Using facet, we can detect mismatch of notation. § Automatically correct values that are not consistently formatted. TRIFACTA § The same as OPEN REFINE, TRIFACTA detects inconsistent format. § Additionally, we considered the outlier detection and type-verification engines in TRIFACTA as additional ways of detecting errors.

EXPERIMENTS KATARA § If a cell is unfit the dataset semantically, we declare it to be an error. § Since semantic knowledge inevitably domain-specific, we manually constructed one for each data set. § Ex) We used an ISSN knowledge for Rayyan Bib data that contains journal ISSNs, journal names, journal abbreviations, journal languages, etc. PENTAHO and KNIME § However, we use the same purpose with OPEN REFINE and TRIFACTA, we have to manually configure ETL workflow. § The potential errors have been marked through transformations.

EXPERIMENTS TAMR § TAMR carry out operation that automatically search duplicated pair several times for training. § If they stable for more than two iterations, we stop training and run TAMR on the whole data set to discover clusters of duplicates.

EXPERIMENTS User involvement 1. Configure tools by specifying rules or patterns. 2. Can manipulate date to detect errors by TRIFACTA and OPEN REFINE. 3. Verify errors that are detected by tools. This verification is need to calculate recall and precision. 4. Classify remaining errors to existing types of errors. This Classification is need to calculate upper-bound recall.

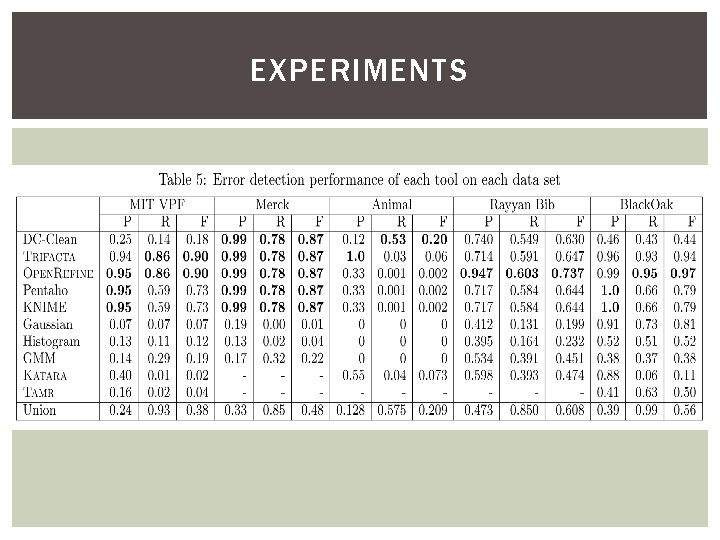

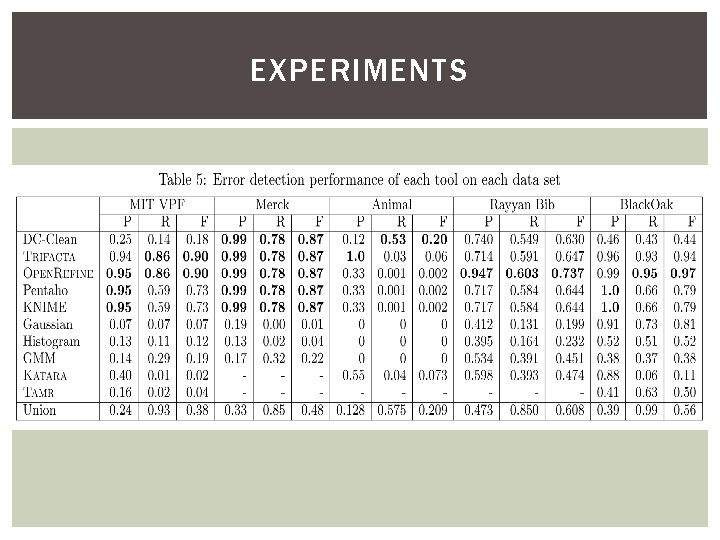

EXPERIMENTS Individual Tools Effectiveness 1. TRIFACTAとOPEN REFINE § MIT VPF, MERCK, BLACK Oak…high recall →The problem about format is the most important for these datasets. § Poor results on the Animal dataset. →Most errors are of semantic nature. § In all datasets, these tools are subsume the result of PENTAHO and KNIME →These tools automatically hint at data type mismatches and outliers.

EXPERIMENTS 2. DC−Clean § From the point of view of F-measure, this tool are good performance in MERCK, Animal and Rayyan Bib. →These datasets’ rules have columns that are prone to error. § With regard to Merck, the result is same to PENTAHO, KNIME, TRIFACTA, OPEN REFINE. →All patterns could be translated into DC check rules. § As for Black. Oak, the result of TRIFACTA and OPEN REFINEDC is greater than DC-Clean’s. →Black. Oak contains syntactic error that could not be anticipated with DCs.

EXPERIMENTS 3. Outlier detection § Do nothing on the Animal dataset. → There are no outliers. § Outlier detection would be more effective on high-quality data sets where errors are rather rare. 4. KATARA § BLACK Oak…good precision § MIT VPF…bad precision →In BLACK Oak dataset, location values could be easily verified via the semantic knowledge. In MIT VPF dataset, errors were mostly found in address values rather than in geographic locations

EXPERIMENTS 5. TAMR § MIT VPF…Detect all duplicated sets, but precision and recall is low. →There are few duplicated errors in whole errors. § BLACK Oak…Not thinking about other types of errors, recall became 98%. → 98 % of errors in the dataset contains duplicated records. § There are better performance in synthetic dataset than realworld dataset. →As a future work, should not use synthetic faults. § No single tool is dominant on all data sets.

EXPERIMENTS

EXPERIMENTS Tool Combination Effectiveness § Union All and Min-k § Union All…Good recall, and bad precision →Increasing of false positive § We use Min-k to raise precision. § When we use Min-k, recall down and precision goes up. § There is no k where the F-measure is the highest on all datasets. § For this and the following experiments, we excluded PENTAHO and KNIME. →Their results is subsumed results of OPEN REFINE and TRIFACTA.

EXPERIMENTS Tool Combination Effectiveness § Union All and Min-k § Animal…By increasing k, this strategy suffers significant loss of recall. →Almost all of errors are detected by single tool. § Black Oak…Recall gradually decreases. →There a strong overlap among tools. § Min-k strategy depends on a manually picked k, which can depend on the given data set and the set of tools.

EXPERIMENTS Tool Combination Effectiveness § Ordering based on Benefit and User Validation § They randomly sampled 5% of the detected errors for each tool and compared them with ground truth to estimate the precision of the tool. § Compare to Union All. § σ… 0. 1~0. 5 § Reducing the human amount of work at the expense of some of the recall.

EXPERIMENTS Tool Combination Effectiveness § Ordering based on Benefit and User Validation § In Merck dataset, the amount of human work is 35% of Union All and the number of detectable errors is 92% of Union All. MIT VPF also be same. →This strategy dynamically updates the estimation of the tools’ precision and drops those tools that have an estimated proportion of true errors in their non-validated errors below the threshold. § In MIT VPF, there is a tool that an error detection number is very high but precision is low. →We can exclude these tools by setting the σ to 0. 1.

EXPERIMENTS Tool Combination Effectiveness § Ordering based on Benefit and User Validation § Animal…Except rule-based tool, recall is low. By using rule-based tool, recall become high but precision become worst. →When set σ to 0. 2, recall down significantly. § Rayyan Bib…There are neither dominant tools nor extremely low precision tools. →By increasing σ, the rate of reduction of true error is lower than that of verification.

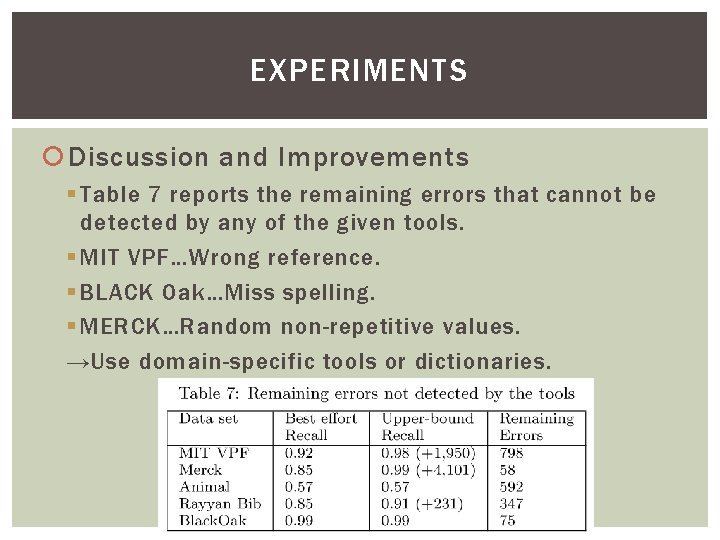

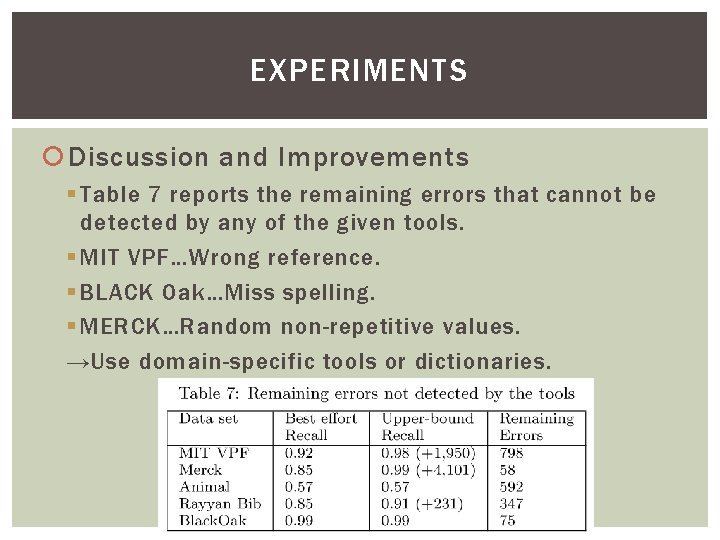

EXPERIMENTS Discussion and Improvements § Table 7 reports the remaining errors that cannot be detected by any of the given tools. § MIT VPF…Wrong reference. § BLACK Oak…Miss spelling. § MERCK…Random non-repetitive values. →Use domain-specific tools or dictionaries.

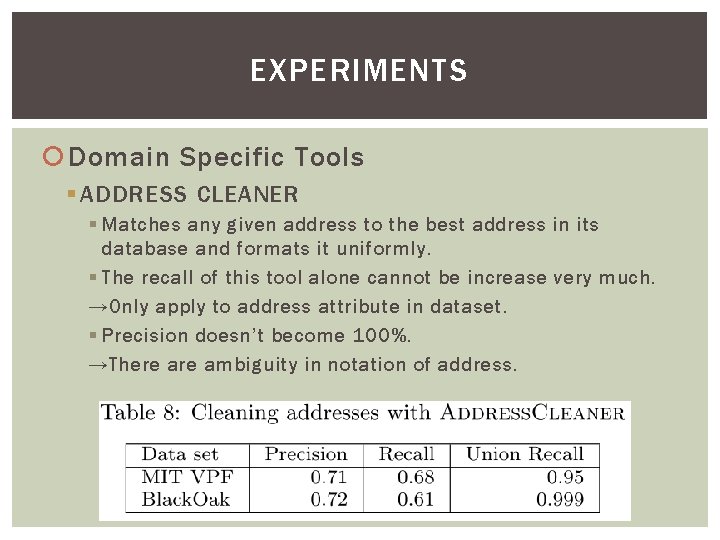

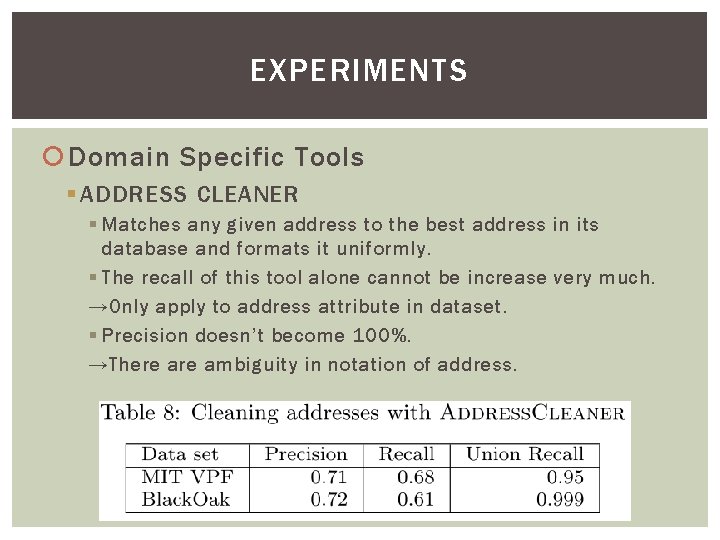

EXPERIMENTS Domain Specific Tools § ADDRESS CLEANER § Matches any given address to the best address in its database and formats it uniformly. § The recall of this tool alone cannot be increase very much. →Only apply to address attribute in dataset. § Precision doesn’t become 100%. →There ambiguity in notation of address.

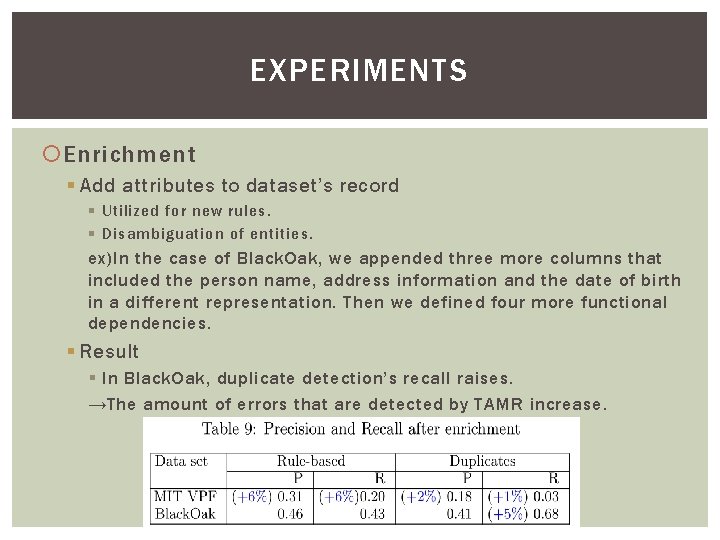

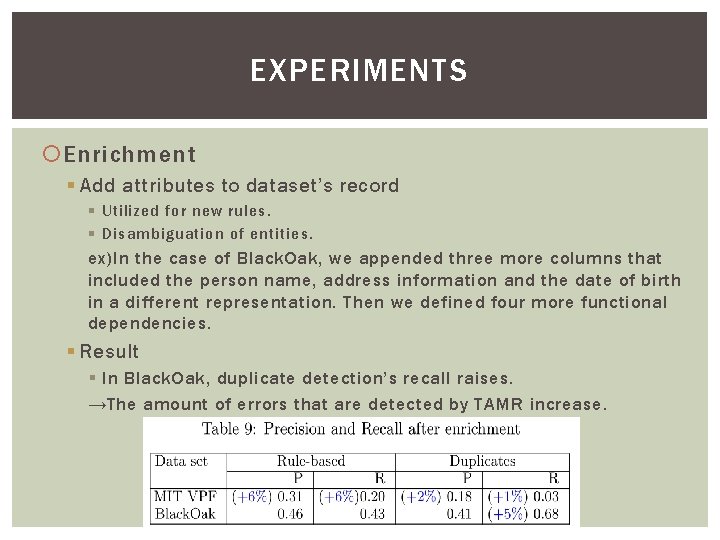

EXPERIMENTS Enrichment § Add attributes to dataset’s record § Utilized for new rules. § Disambiguation of entities. ex)In the case of Black. Oak, we appended three more columns that included the person name, address information and the date of birth in a different representation. Then we defined four more functional dependencies. § Result § In Black. Oak, duplicate detection’s recall raises. →The amount of errors that are detected by TAMR increase.

CONCLUSION AND FUTUREWORK

CONCLUSION AND FUTUREWORK There is no single dominant tool for the various data sets and diversified types of errors. Picking the right order in applying the tools can improve the precision and help reduce the cost of validation by humans. Domain specific tools can achieve high precision and recall compared to general-purpose tools. Rule-based systems and duplicate detection benefited from data enrichment.

CONCLUSION AND FUTUREWORK 1. A holistic combination of tools. § Holistic approaches to combining tools may be able to perform even better. 2. A data enrichment system. § There is need to find automatic approaches to enrichment via corporate knowledge bases. § Data sets with public information, could be enriched through web tables and other open data.

CONCLUSION AND FUTUREWORK 3. A novel interactive dashboard. § Devise novel interactive dashboards to help users better understand the data and be efficiently involved in the data cleaning process. 4. Reasoning on real-world data. § Shift the focus from optimizing single error-type tools towards creating end-to-end data quality solution for real-world data sets.