Designing Parallel Operating Systems via Parallel Programming

- Slides: 37

Designing Parallel Operating Systems via Parallel Programming

Eitan Frachtenberg (1), Kei Davis (1), Fabrizio Petrini (1), Juan Fernández (1, 2) and José Carlos Sancho (1)

(1) Performance and Architecture Lab (PAL), CCS-3 Modeling, Algorithms and Informatics, Los Alamos National Laboratory, NM 87545, USA. URL: http://www.c3.lanl.gov

(2) Grupo de Arquitectura y Computación Paralelas (GACOP), Dpto. Ingeniería y Tecnología de Computadores, Universidad de Murcia, 30071 Murcia, SPAIN. URL: http://www.ditec.um.es, email: juanf@um.es

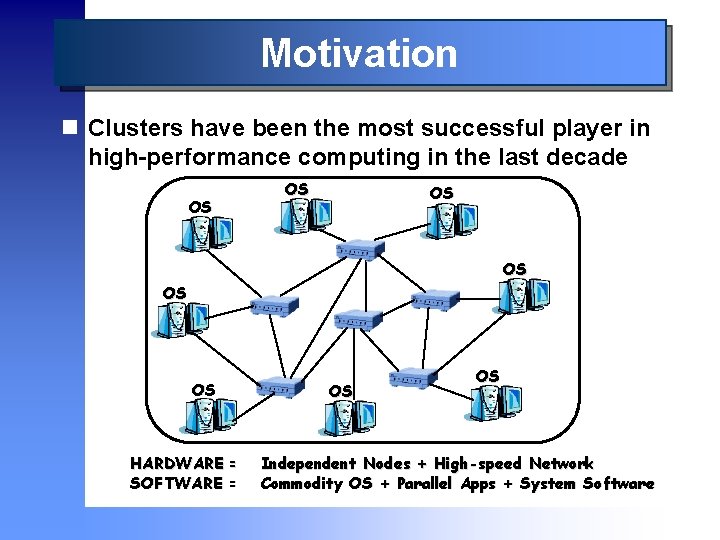

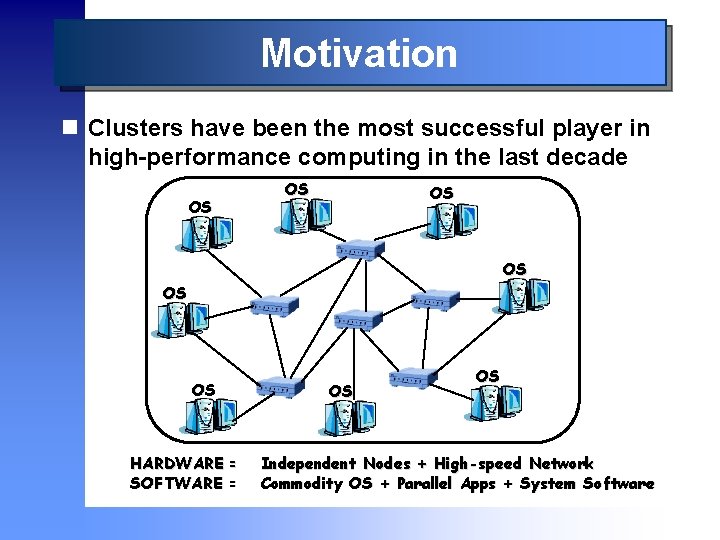

Motivation

- Clusters have been the most successful player in high-performance computing in the last decade
- HARDWARE = Independent Nodes + High-speed Network
- SOFTWARE = Commodity OS + Parallel Apps + System Software

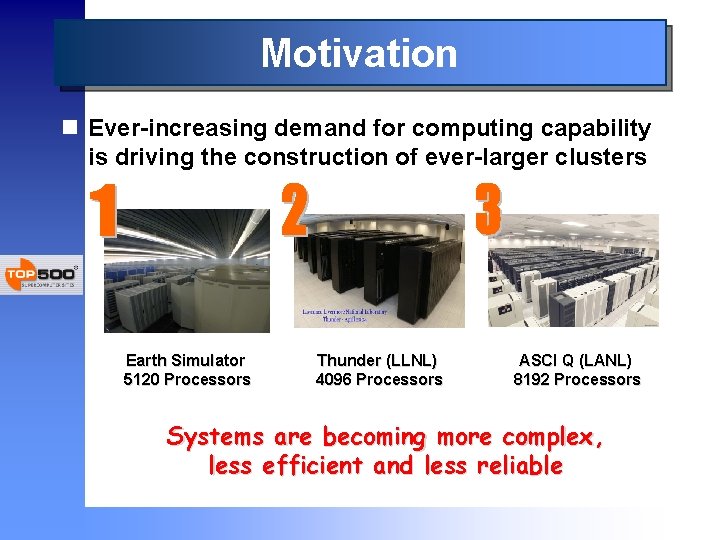

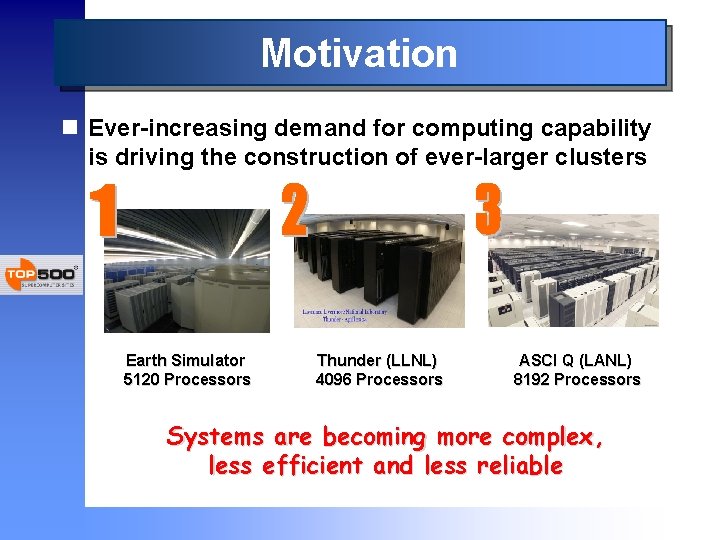

Motivation

- Ever-increasing demand for computing capability is driving the construction of ever-larger clusters
  - Earth Simulator: 5120 processors
  - Thunder (LLNL): 4096 processors
  - ASCI Q (LANL): 8192 processors
- Systems are becoming more complex, less efficient and less reliable

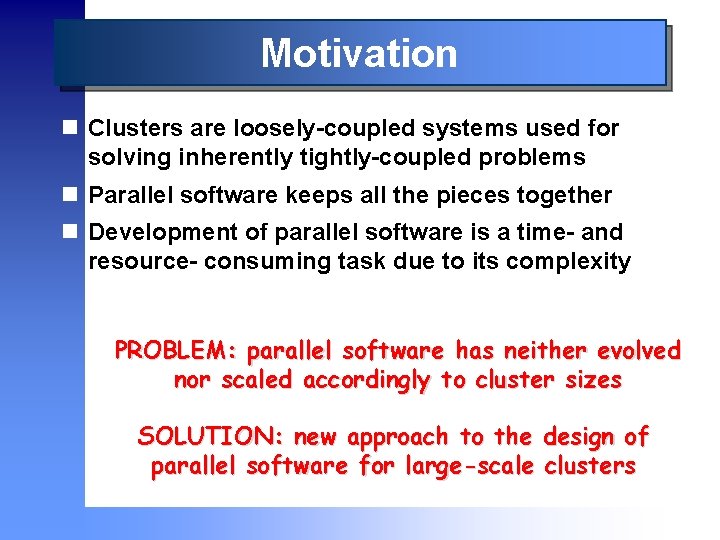

Motivation

- Clusters are loosely coupled systems used for solving inherently tightly coupled problems
- Parallel software keeps all the pieces together
- Development of parallel software is a time- and resource-consuming task due to its complexity

PROBLEM: parallel software has neither evolved nor scaled in step with cluster sizes

SOLUTION: a new approach to the design of parallel software for large-scale clusters

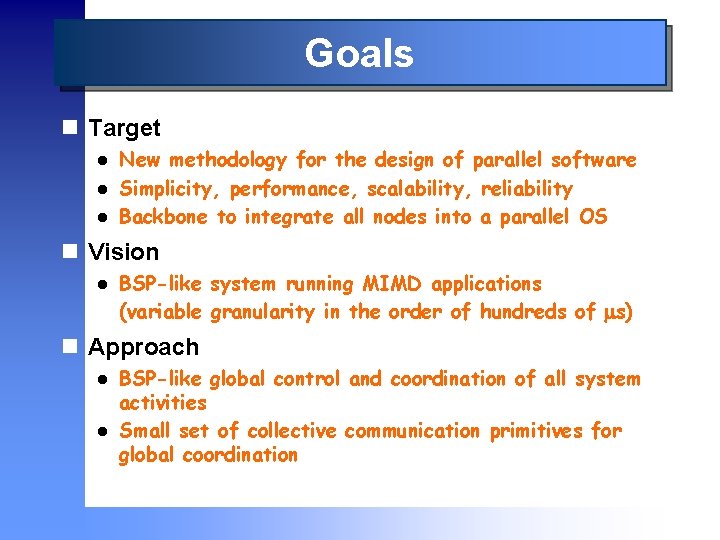

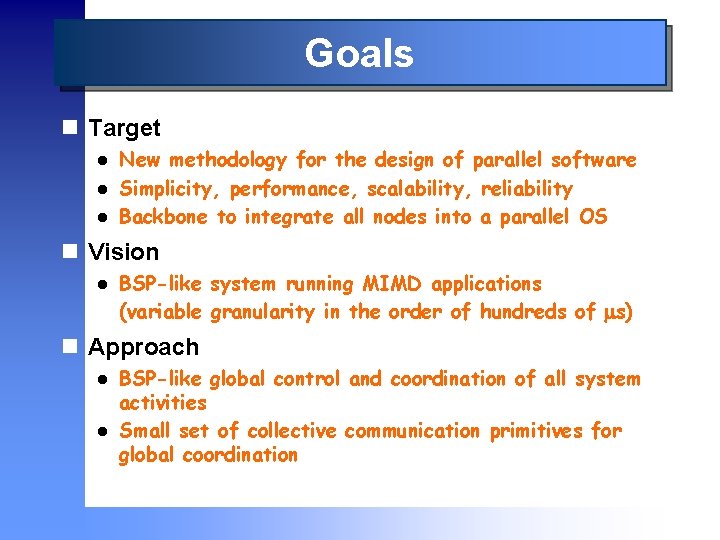

Goals

- Target
  - New methodology for the design of parallel software
  - Simplicity, performance, scalability, reliability
  - Backbone to integrate all nodes into a parallel OS
- Vision
  - BSP-like system running MIMD applications (variable granularity on the order of hundreds of µs)
- Approach
  - BSP-like global control and coordination of all system activities
  - Small set of collective communication primitives for global coordination

Outline

- Motivation and Goals
- Toward a Parallel Operating System
- Core Primitives
- Parallel Software Design
- Case Studies
- Concluding remarks

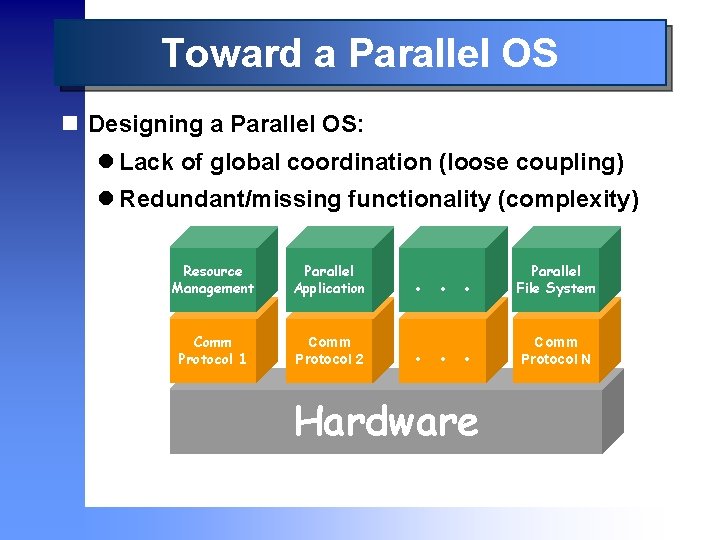

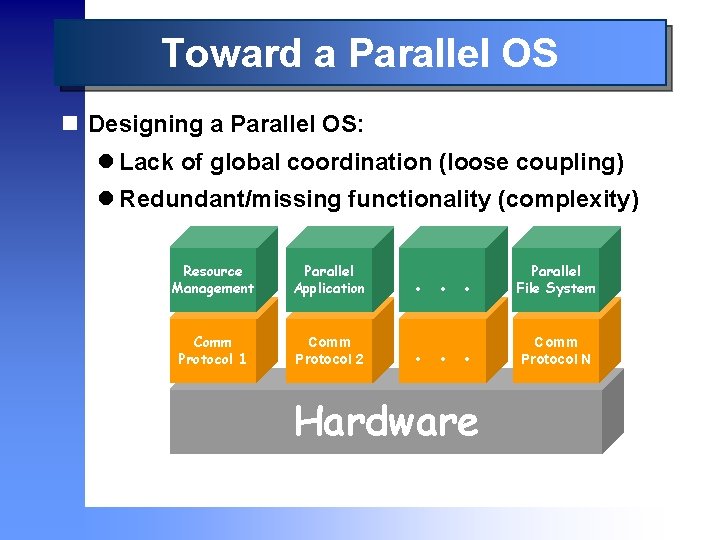

Toward a Parallel OS

- Designing a Parallel OS:
  - Lack of global coordination (loose coupling)
  - Redundant/missing functionality (complexity)

Diagram layers, top to bottom: (1) Resource Management, Parallel Application, ..., Parallel File System; (2) Comm Protocol 1, Comm Protocol 2, ..., Comm Protocol N; (3) Hardware.

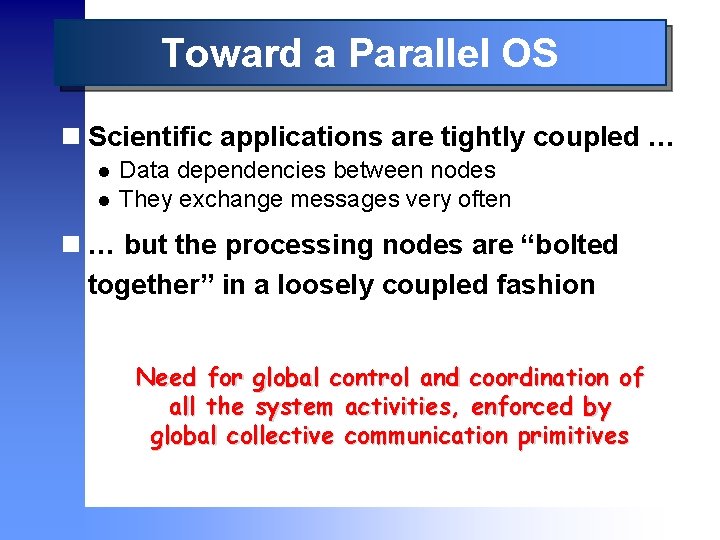

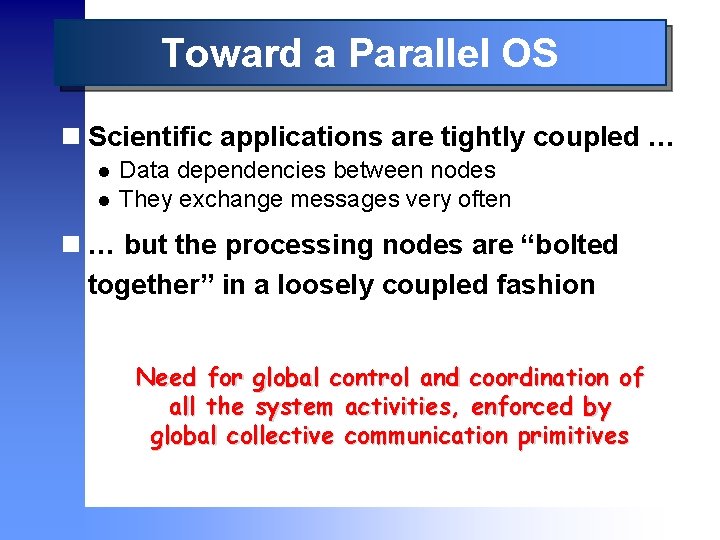

Toward a Parallel OS

- Scientific applications are tightly coupled …
  - Data dependencies between nodes
  - They exchange messages very often
- … but the processing nodes are “bolted together” in a loosely coupled fashion

Need for global control and coordination of all the system activities, enforced by global collective communication primitives

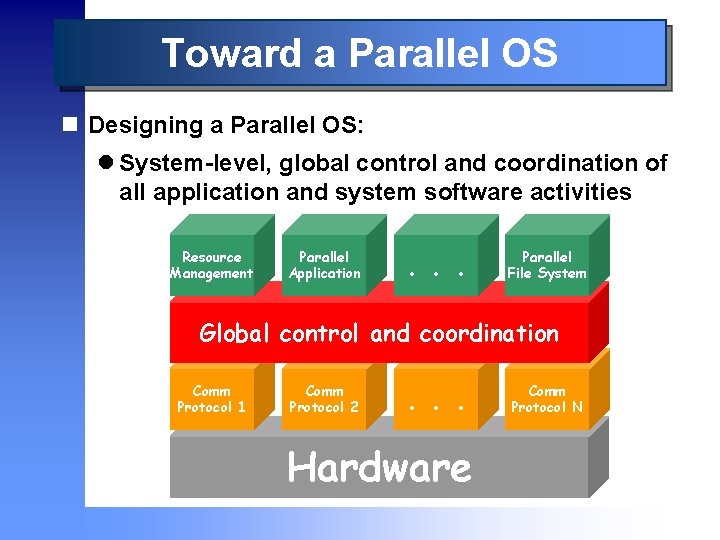

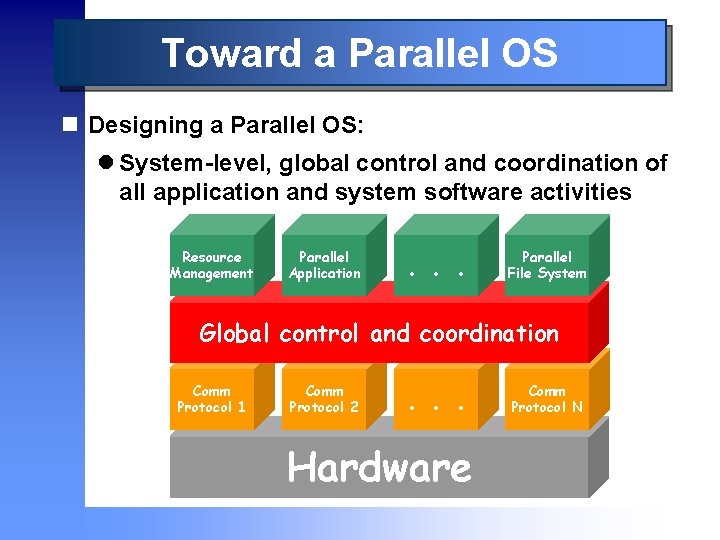

Toward a Parallel OS

- Designing a Parallel OS:
  - System-level, global control and coordination of all application and system software activities

Diagram layers, top to bottom: (1) Resource Management, Parallel Application, ..., Parallel File System; (2) Global control and coordination; (3) Comm Protocol 1, Comm Protocol 2, ..., Comm Protocol N; (4) Hardware.

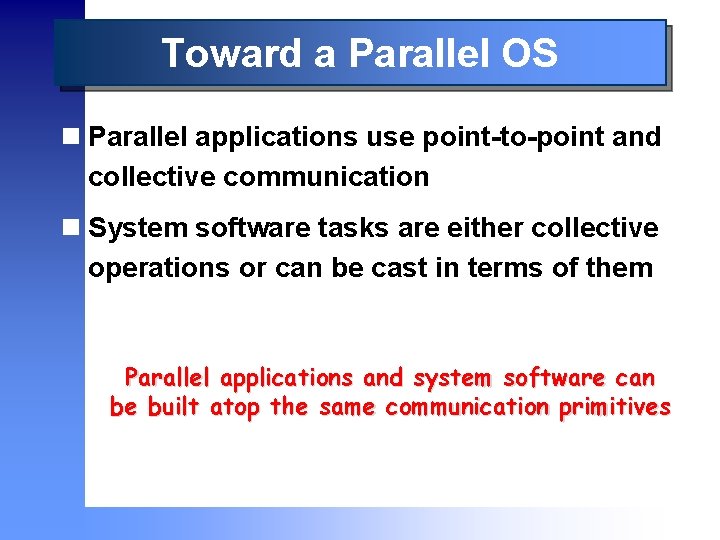

Toward a Parallel OS

- Parallel applications use point-to-point and collective communication
- System software tasks are either collective operations or can be cast in terms of them

Parallel applications and system software can be built atop the same communication primitives

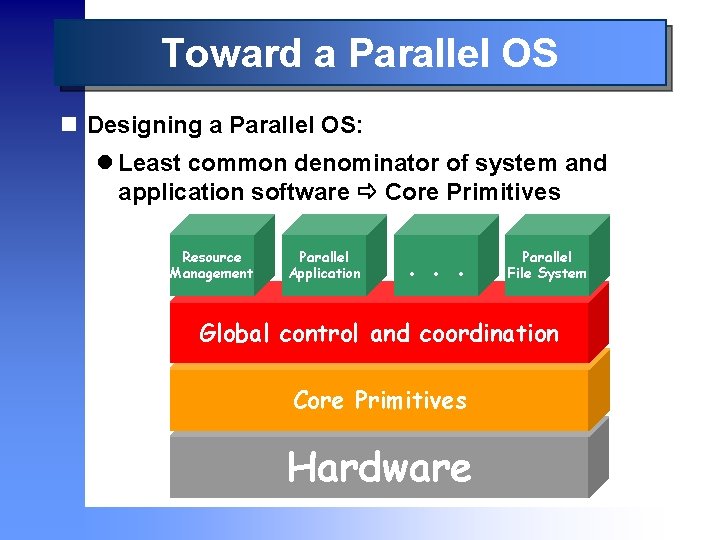

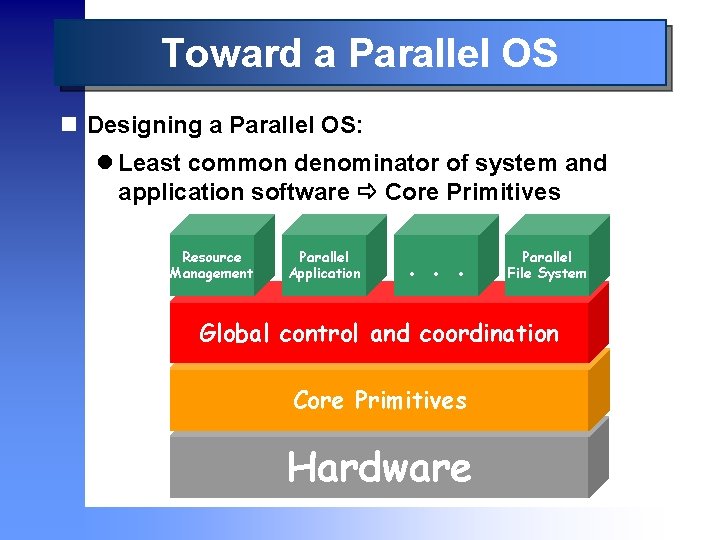

Toward a Parallel OS

- Designing a Parallel OS:
  - Least common denominator of system and application software: Core Primitives

Diagram labels: Resource Management, Parallel Application, ..., Parallel File System; Global control and coordination; Core Primitives; Comm Protocol 1, Comm Protocol 2, ..., Comm Protocol N; Hardware.


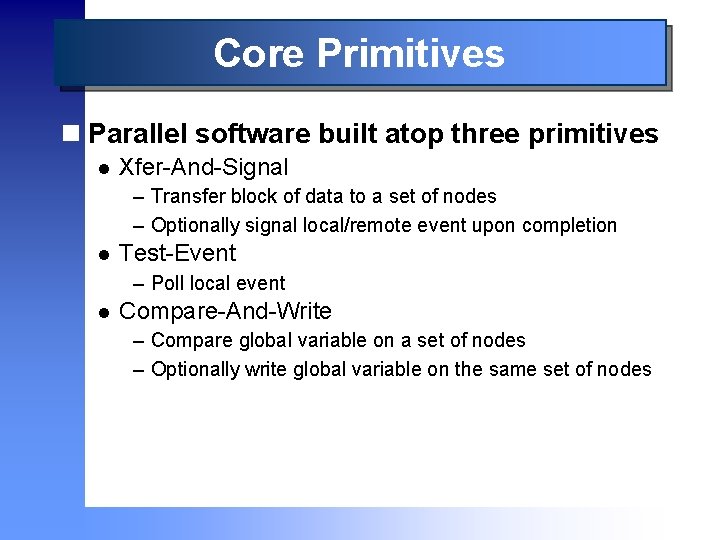

Core Primitives

- Parallel software built atop three primitives
  - Xfer-And-Signal
    - Transfer block of data to a set of nodes
    - Optionally signal local/remote event upon completion
  - Test-Event
    - Poll local event
  - Compare-And-Write
    - Compare global variable on a set of nodes
    - Optionally write global variable on the same set of nodes
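To make the three primitives concrete, the following is a minimal in-memory sketch, not the QsNet implementation. The `Node` class, the function names (`poll_event` stands in for Test-Event) and all parameters are hypothetical. It also assumes that the optional write of Compare-And-Write happens only when the comparison holds on every node, which the slide does not state.

```python
import operator
from dataclasses import dataclass, field


@dataclass
class Node:
    """One simulated node: global variables, a receive buffer, pending events."""
    variables: dict = field(default_factory=dict)
    memory: dict = field(default_factory=dict)
    events: set = field(default_factory=set)


def xfer_and_signal(src, dests, key, data, local_event=None, remote_event=None):
    """Copy `data` to every destination, then optionally raise events."""
    for dest in dests:
        dest.memory[key] = data
        if remote_event is not None:
            dest.events.add(remote_event)
    if local_event is not None:
        src.events.add(local_event)


def poll_event(node, event):
    """Test-Event stand-in: report whether a local event fired, consuming it."""
    if event in node.events:
        node.events.discard(event)
        return True
    return False


def compare_and_write(nodes, var, op, value, write=None):
    """Return True iff op(node[var], value) holds on all nodes.

    Assumption: the optional write (a (name, value) pair) is applied to the
    same set of nodes only when the comparison succeeds everywhere.
    """
    ok = all(op(node.variables.get(var, 0), value) for node in nodes)
    if ok and write is not None:
        name, new_value = write
        for node in nodes:
            node.variables[name] = new_value
    return ok
```

A caller might, for example, broadcast a buffer with `xfer_and_signal(s, ds, "buf", b"job", remote_event="recv")` and then check readiness with `compare_and_write(ds, "ready", operator.ge, 1)`.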

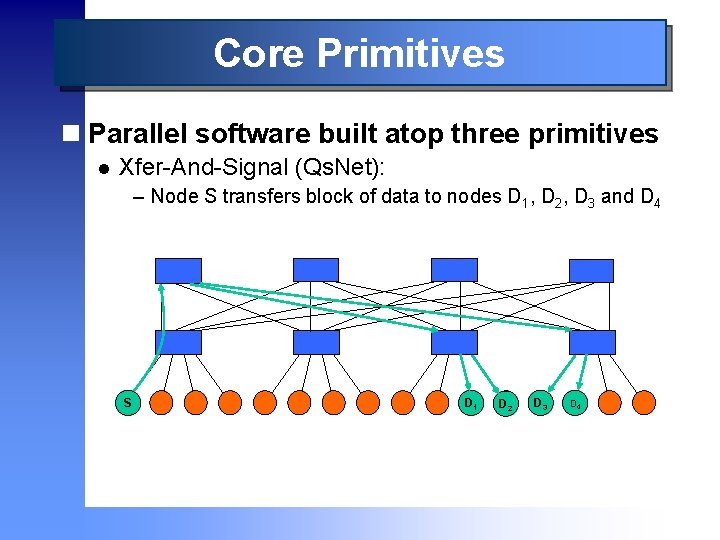

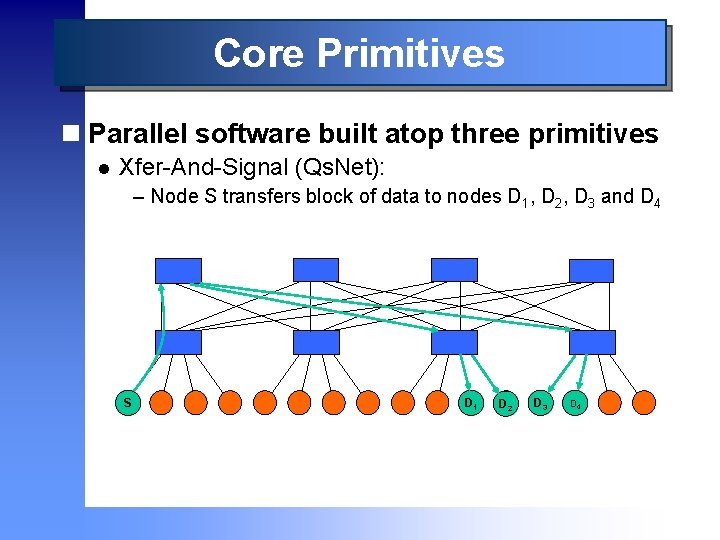

Core Primitives

- Parallel software built atop three primitives
  - Xfer-And-Signal (QsNet):
    - Node S transfers block of data to nodes D1, D2, D3 and D4

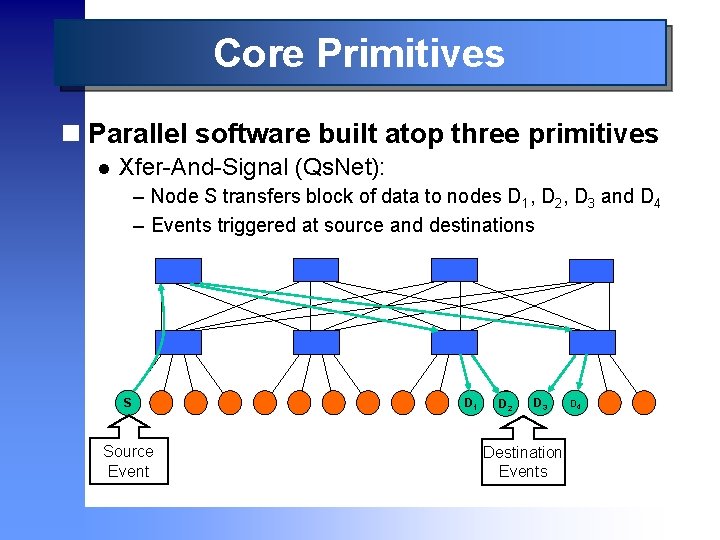

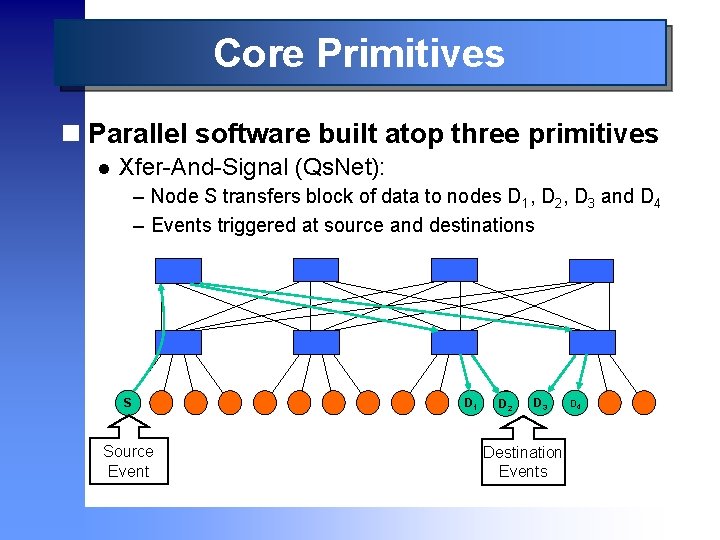

Core Primitives

- Parallel software built atop three primitives
  - Xfer-And-Signal (QsNet):
    - Node S transfers block of data to nodes D1, D2, D3 and D4
    - Events triggered at source (Source Event at S) and destinations (Destination Events at D1 to D4)

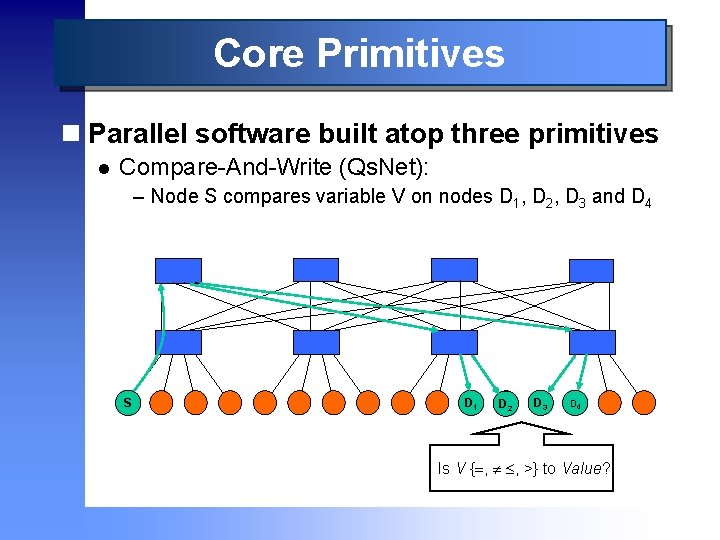

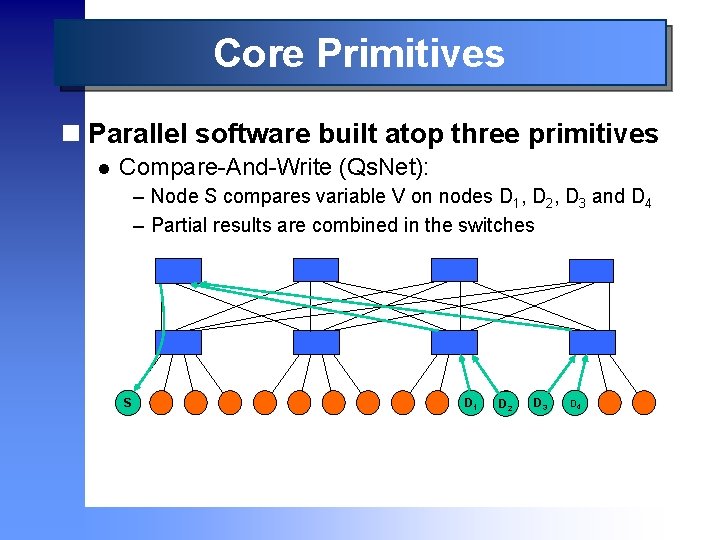

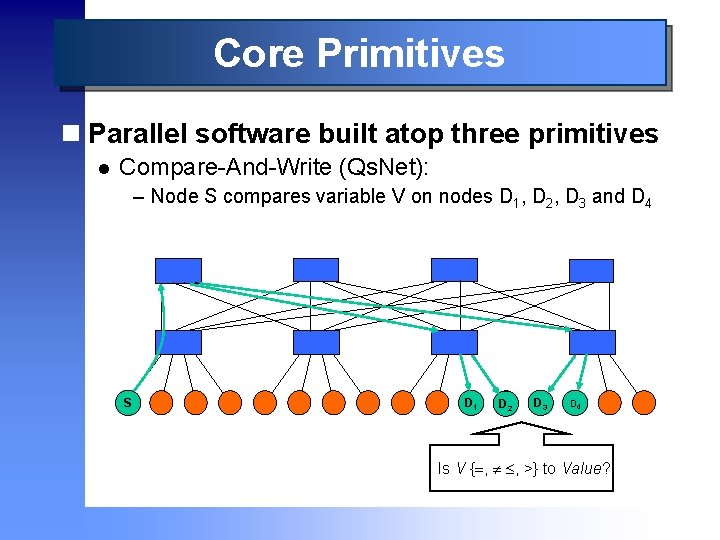

Core Primitives

- Parallel software built atop three primitives
  - Compare-And-Write (QsNet):
    - Node S compares variable V on nodes D1, D2, D3 and D4
    - Is V { , , >} to Value?

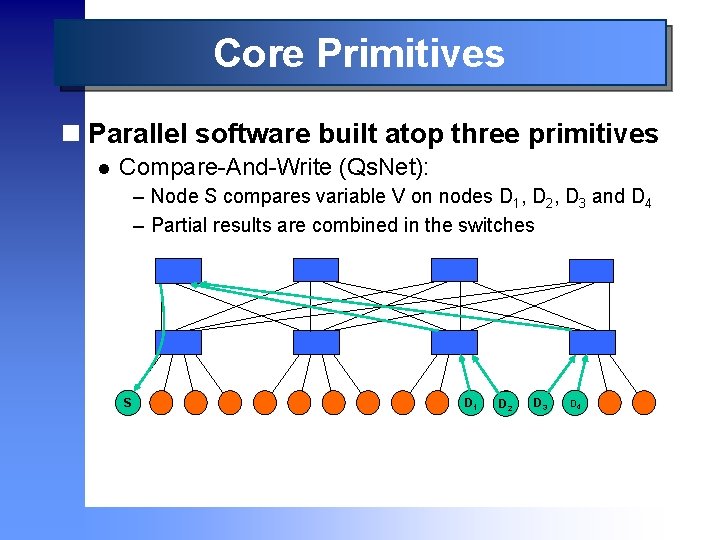

Core Primitives

- Parallel software built atop three primitives
  - Compare-And-Write (QsNet):
    - Node S compares variable V on nodes D1, D2, D3 and D4
    - Partial results are combined in the switches
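The idea of combining partial results in the switches can be illustrated with a small tree reduction. This is only a sketch under assumptions: the function names are hypothetical, the combining operation is taken to be a logical AND, and the switch arity (`fan_in=4`) is an illustrative value rather than a property of QsNet stated in the slides.

```python
import operator


def combine_in_switches(partials, fan_in=4):
    """AND the per-node results level by level, as a tree of switches might.

    Returns (global_result, number_of_switch_stages).
    """
    level = list(partials)
    if not level:
        return True, 0
    stages = 0
    while len(level) > 1:
        # Each switch combines up to `fan_in` incoming partial results.
        level = [all(level[i:i + fan_in]) for i in range(0, len(level), fan_in)]
        stages += 1
    return level[0], stages


def compare_on_nodes(values, op, value, fan_in=4):
    """Evaluate the comparison locally on every node, then combine in the tree."""
    partials = [op(v, value) for v in values]
    return combine_in_switches(partials, fan_in)
```

With this layout the number of combining stages grows logarithmically with the number of nodes, which is why a single global query can remain cheap on large machines.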


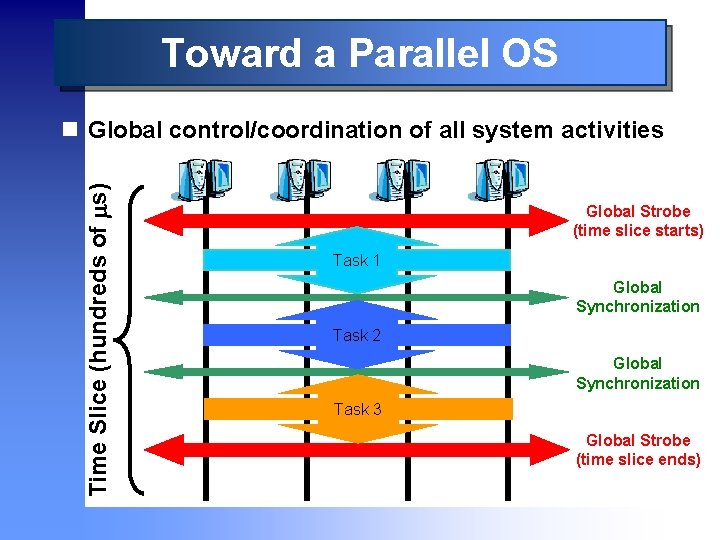

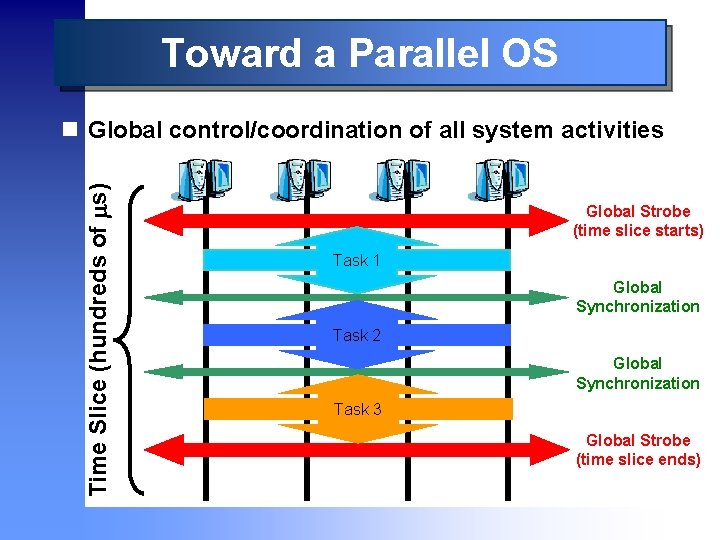

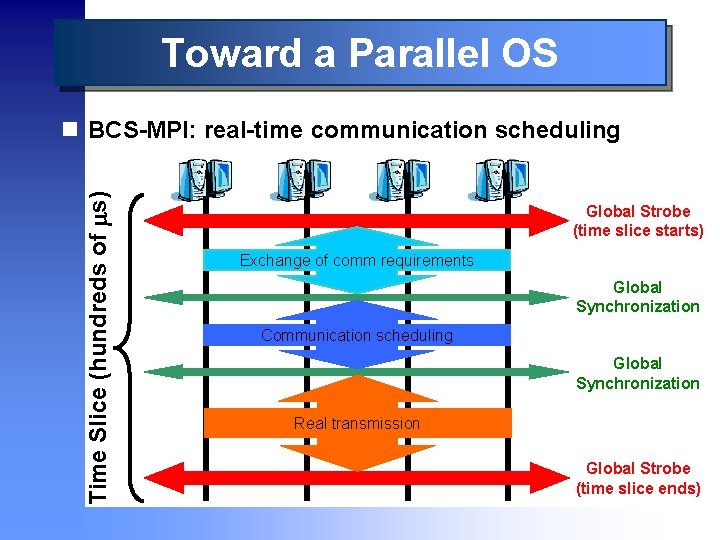

Toward a Parallel OS

- Global control/coordination of all system activities
- Time slice (hundreds of µs):
  1. Global Strobe (time slice starts)
  2. Task 1
  3. Global Synchronization
  4. Task 2
  5. Global Synchronization
  6. Task 3
  7. Global Strobe (time slice ends)

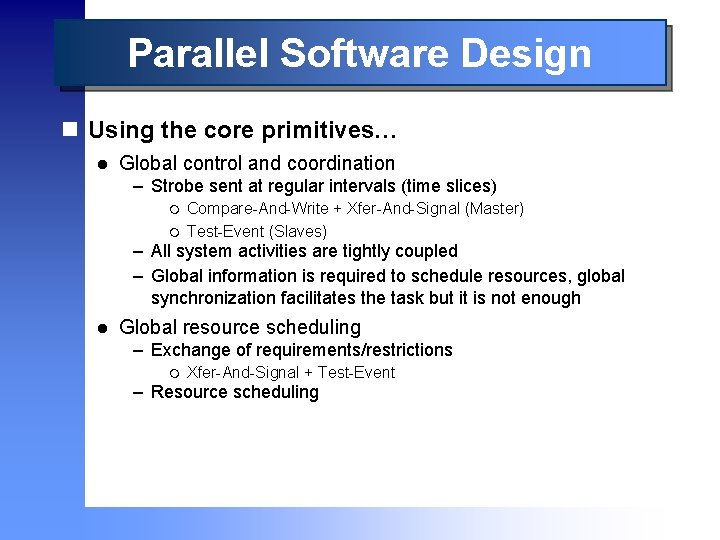

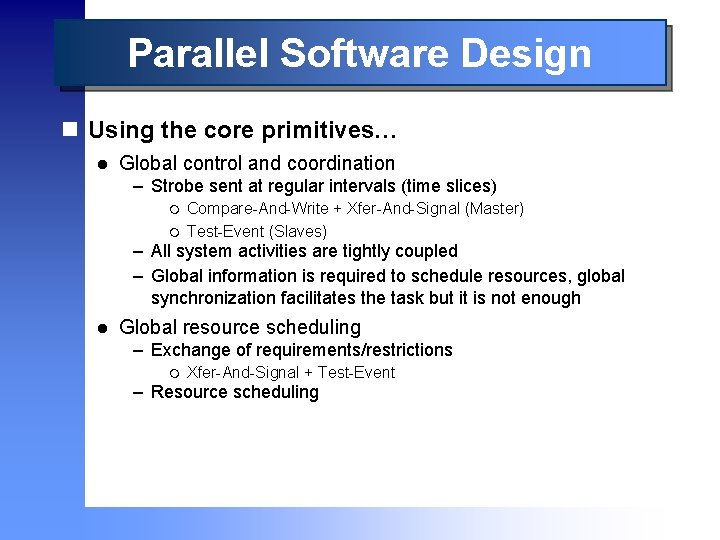

Parallel Software Design

- Using the core primitives…
  - Global control and coordination
    - Strobe sent at regular intervals (time slices)
      - Compare-And-Write + Xfer-And-Signal (Master)
      - Test-Event (Slaves)
    - All system activities are tightly coupled
    - Global information is required to schedule resources; global synchronization facilitates the task but is not enough
  - Global resource scheduling
    - Exchange of requirements/restrictions
      - Xfer-And-Signal + Test-Event
    - Resource scheduling
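The master/slave strobe described above can be sketched as a small simulation. Everything here is illustrative: the `Node` fields and function names are hypothetical, and the role given to Compare-And-Write (checking that every node finished the previous slice before the next strobe is sent) is an assumption about how the two master-side primitives combine, not something the slides spell out.

```python
class Node:
    def __init__(self):
        self.slice_done = -1   # last time slice this node completed
        self.strobe = None     # pending strobe event (slice number), if any
        self.log = []          # slices this node has executed


def master_strobe(nodes, slice_id):
    """Master side: Compare-And-Write check, then Xfer-And-Signal the strobe."""
    # Compare-And-Write stand-in: did all nodes finish the previous slice?
    if not all(node.slice_done >= slice_id - 1 for node in nodes):
        return False
    # Xfer-And-Signal stand-in: deliver the slice number and raise the event.
    for node in nodes:
        node.strobe = slice_id
    return True


def slave_poll(node):
    """Slave side: Test-Event on the strobe; run the slice when it fires."""
    if node.strobe is None:
        return False
    slice_id, node.strobe = node.strobe, None
    node.log.append(slice_id)      # placeholder for the slice's real work
    node.slice_done = slice_id
    return True
```

In this sketch a slow node simply delays the next strobe, which is one simple way to keep all system activities in lockstep.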

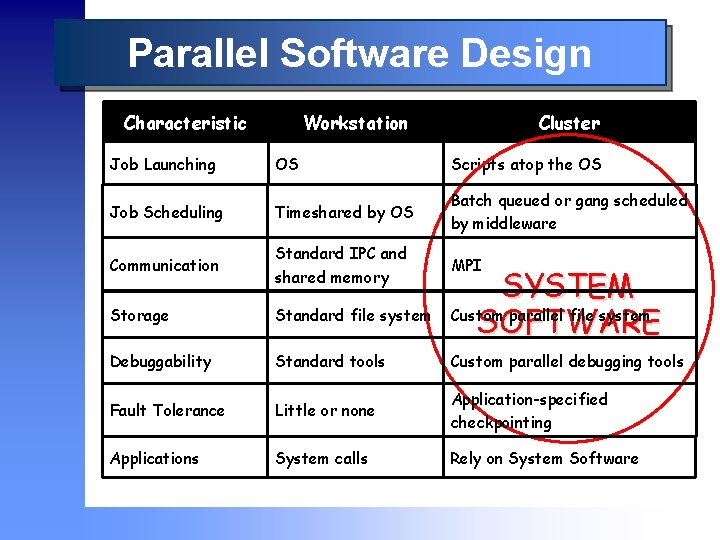

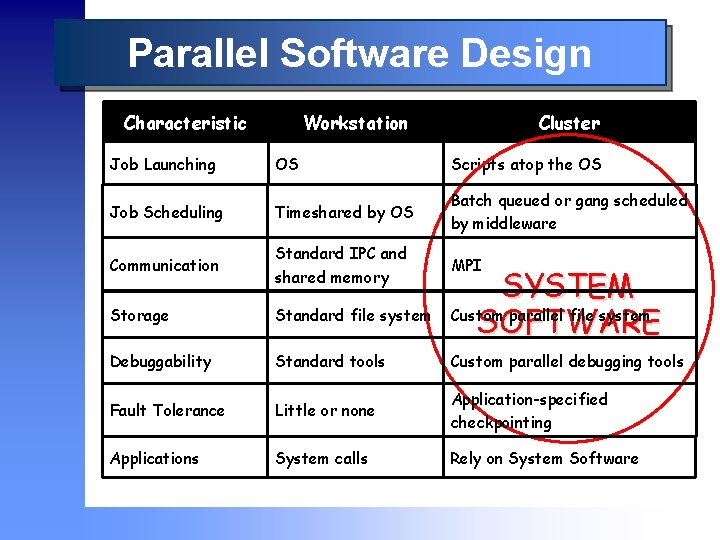

Parallel Software Design

| Characteristic | Workstation | Cluster |
| --- | --- | --- |
| Job Launching | OS | Scripts atop the OS |
| Job Scheduling | Timeshared by OS | Batch queued or gang scheduled by middleware |
| Communication | Standard IPC and shared memory | MPI |
| Storage | Standard file system | Custom parallel file system |
| Debuggability | Standard tools | Custom parallel debugging tools |
| Fault Tolerance | Little or none | Application-specified checkpointing |
| Applications | System calls | Rely on System Software |

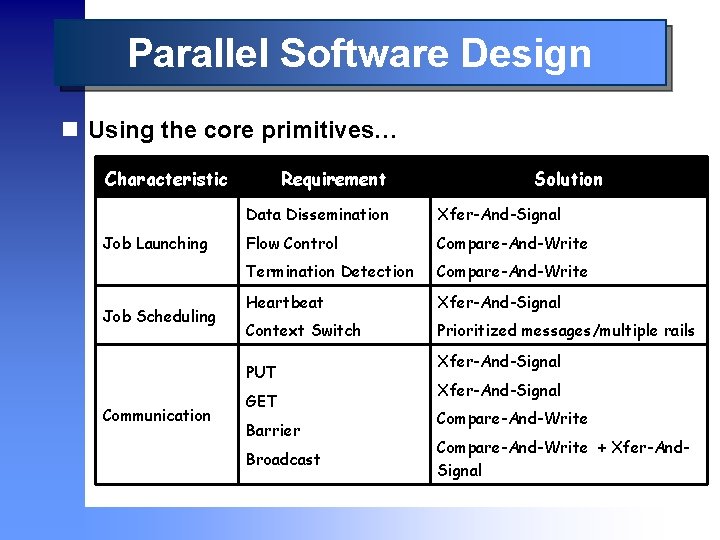

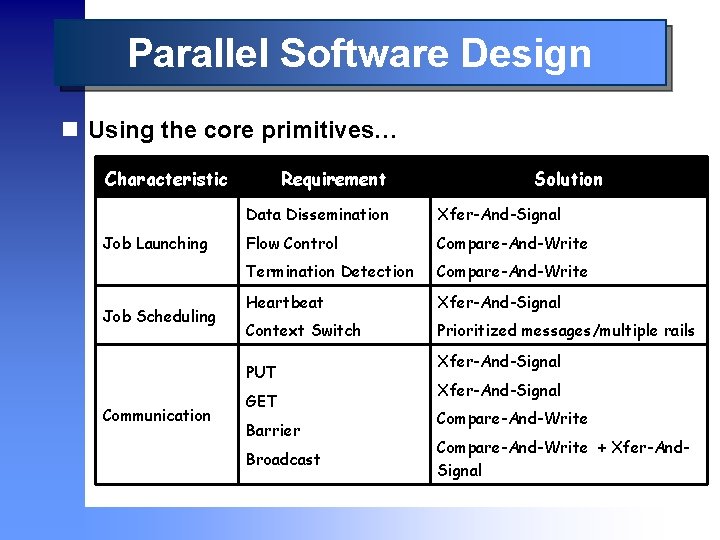

Parallel Software Design

- Using the core primitives…

| Characteristic | Requirement | Solution |
| --- | --- | --- |
| Job Launching | Data Dissemination | Xfer-And-Signal |
| Job Launching | Flow Control | Compare-And-Write |
| Job Launching | Termination Detection | Compare-And-Write |
| Job Scheduling | Heartbeat | Xfer-And-Signal |
| Job Scheduling | Context Switch | Prioritized messages/multiple rails |
| Communication | PUT, GET, Barrier, Broadcast | Xfer-And-Signal; Compare-And-Write + Xfer-And-Signal |

Parallel Software Design

Can we really build system software using this new approach?


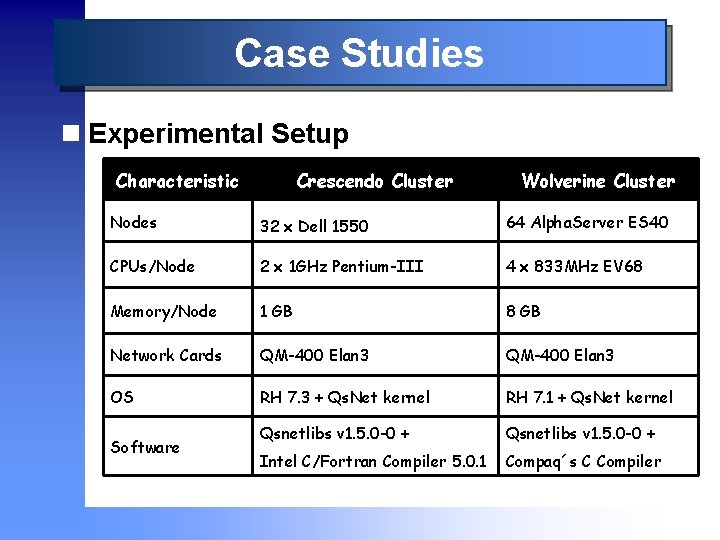

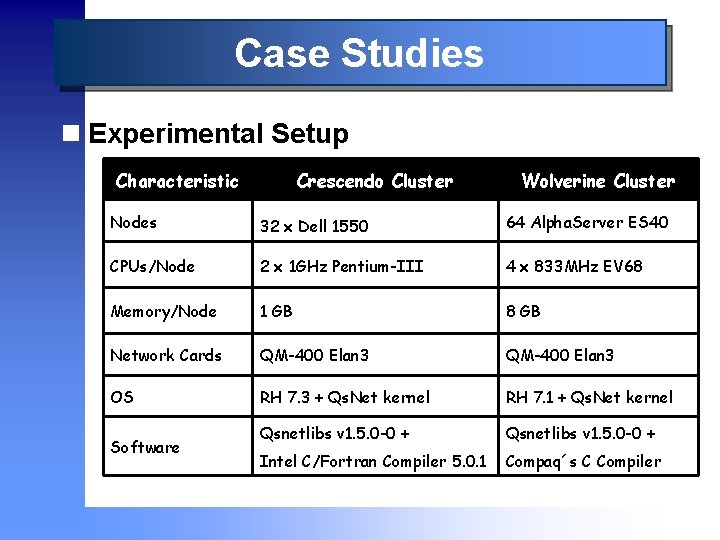

Case Studies

- Experimental Setup

| Characteristic | Crescendo Cluster | Wolverine Cluster |
| --- | --- | --- |
| Nodes | 32 x Dell 1550 | 64 AlphaServer ES40 |
| CPUs/Node | 2 x 1 GHz Pentium-III | 4 x 833 MHz EV68 |
| Memory/Node | 1 GB | 8 GB |
| Network Cards | QM-400 Elan3 | QM-400 Elan3 |
| OS | RH 7.3 + QsNet kernel | RH 7.1 + QsNet kernel |
| Software | Qsnetlibs v1.5.0-0 + Intel C/Fortran Compiler 5.0.1 | Compaq's C Compiler |

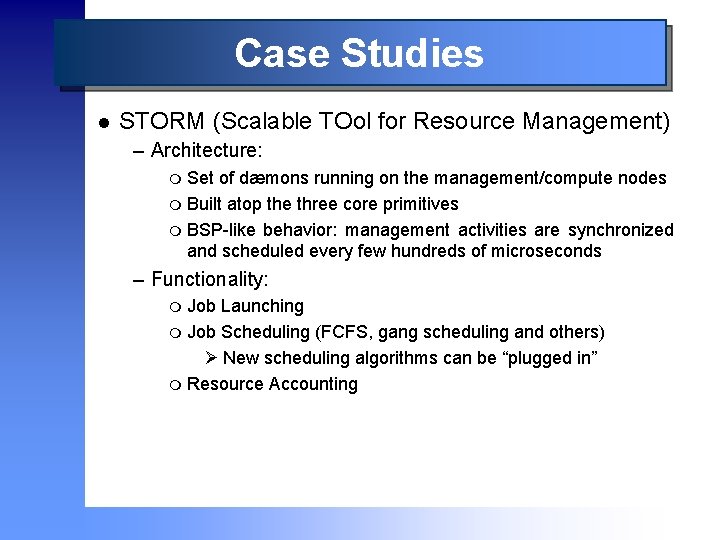

Case Studies

- STORM (Scalable TOol for Resource Management)
  - Architecture:
    - Set of dæmons running on the management/compute nodes
    - Built atop the three core primitives
    - BSP-like behavior: management activities are synchronized and scheduled every few hundreds of microseconds
  - Functionality:
    - Job Launching
    - Job Scheduling (FCFS, gang scheduling and others)
      - New scheduling algorithms can be “plugged in”
    - Resource Accounting

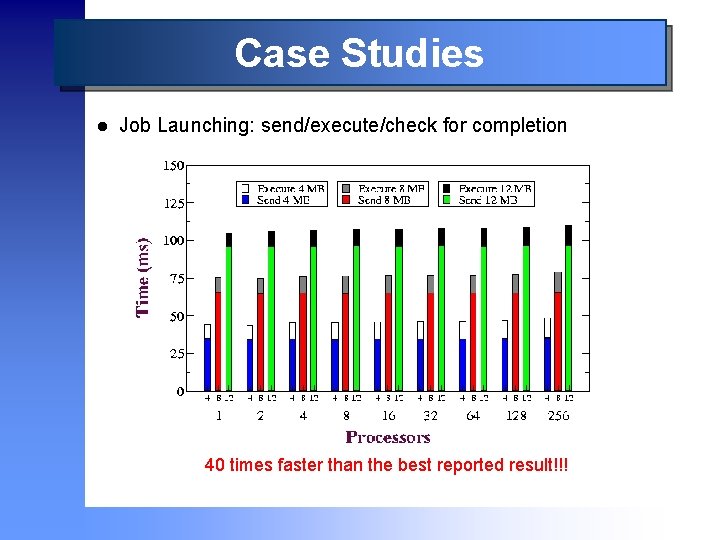

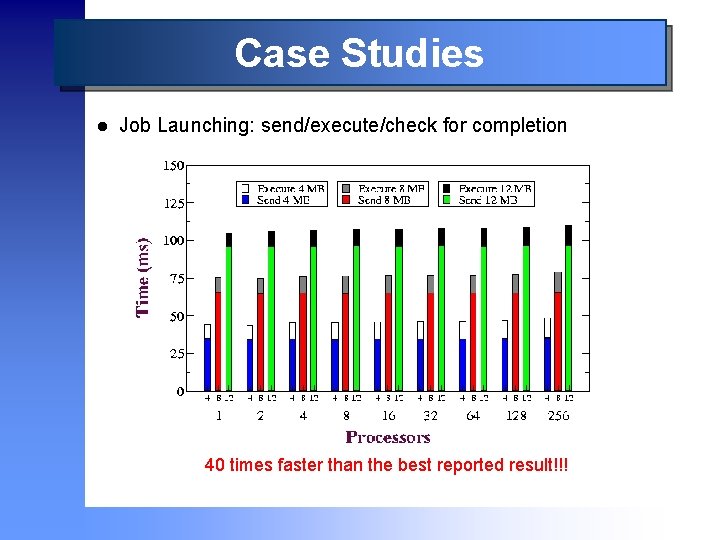

Case Studies

- Job Launching: send/execute/check for completion
  - 40 times faster than the best reported result!
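The send/execute/check-for-completion sequence can be sketched using the mapping given earlier in the deck (data dissemination via Xfer-And-Signal, flow control and termination detection via Compare-And-Write). This is a toy simulation, not STORM's code: `launch_job`, the per-node dictionaries and the `chunk_size` parameter are all hypothetical, and the chunked transfer is an assumption made to give flow control something to do.

```python
from typing import Dict, List, Tuple


def compare_and_write(nodes: List[Dict], var: str, at_least: int) -> bool:
    """Compare-And-Write stand-in: True only if node[var] >= at_least everywhere."""
    return all(node[var] >= at_least for node in nodes)


def launch_job(binary: bytes, n_nodes: int, chunk_size: int = 4) -> Tuple[List[Dict], bool]:
    nodes = [{"image": bytearray(), "chunks": 0, "exited": 0} for _ in range(n_nodes)]
    chunks = [binary[i:i + chunk_size] for i in range(0, len(binary), chunk_size)]

    # Send: disseminate the binary chunk by chunk.
    for seq, chunk in enumerate(chunks):
        # Flow control: proceed only once every node has consumed earlier chunks.
        while not compare_and_write(nodes, "chunks", seq):
            pass  # a real launcher would retry on a later time slice
        # Data dissemination: Xfer-And-Signal stand-in (same chunk to all nodes).
        for node in nodes:
            node["image"] += chunk
            node["chunks"] += 1

    # Execute: each node "runs" the job and records that it exited.
    for node in nodes:
        node["exited"] = 1

    # Check for completion: termination detection with a single global query.
    finished = compare_and_write(nodes, "exited", 1)
    return nodes, finished
```

The point of the sketch is that none of the three steps needs per-node point-to-point bookkeeping on the launcher: each step is one collective operation over the whole node set.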

Case Studies

- BCS-MPI (Buffered CoScheduled MPI)
  - Architecture:
    - Set of cooperative threads running in the NIC
    - Built atop the three core primitives
    - BSP-like behavior: communications are synchronized and scheduled every few hundreds of microseconds
  - Functionality:
    - Subset of the MPI standard
  - Paves the way to provide:
    - Traffic segregation
    - Deterministic replay of user applications
    - System-level fault tolerance

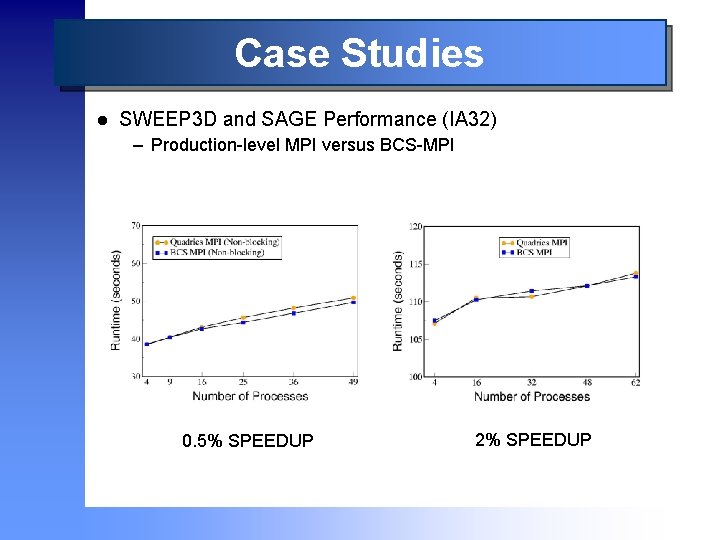

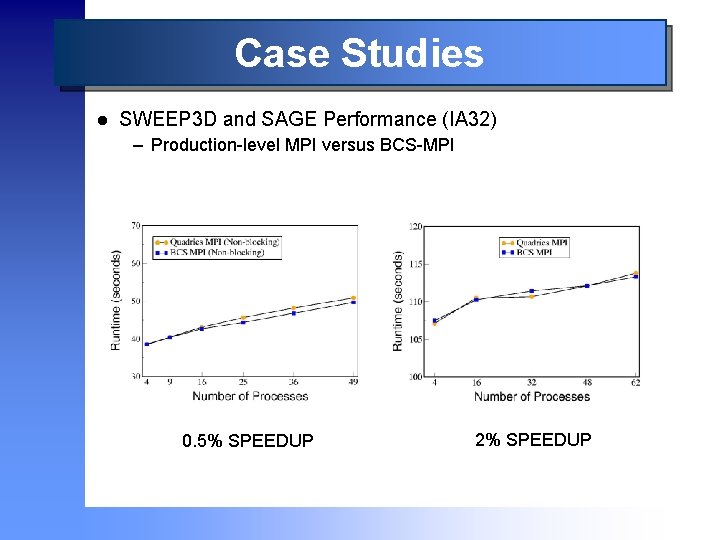

Case Studies

- SWEEP3D and SAGE Performance (IA32)
  - Production-level MPI versus BCS-MPI
  - Chart annotations: 0.5% SPEEDUP, 2% SPEEDUP


Concluding Remarks

- Methodology for designing parallel software
  - Coordination of all system and application software activities in a BSP-like fashion
  - Parallel applications and system software built atop a basic set of collective primitives for global coordination
  - Backbone to integrate all nodes into a parallel OS
- Promising preliminary results demonstrate that this approach is indeed feasible

Future Work

- Kernel-level implementation
  - User-level solution is already working
- Deterministic replay of MPI programs
  - Ordered resource scheduling may enforce reproducibility
- Transparent fault tolerance
  - Global coordination simplifies the state of the machine


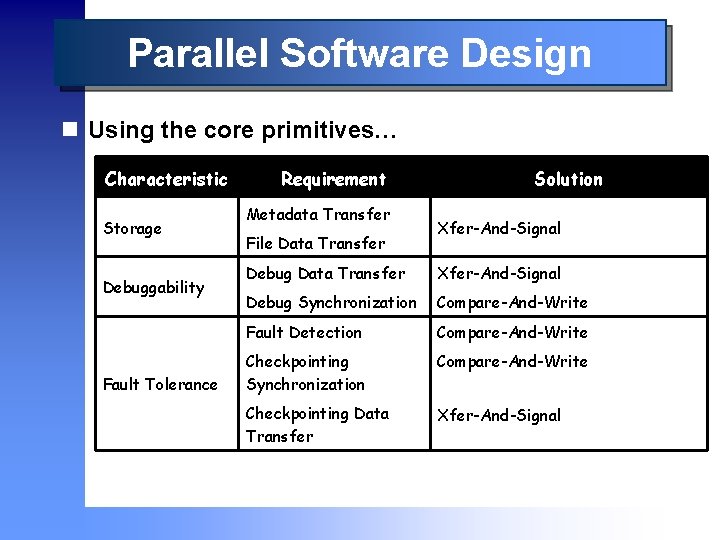

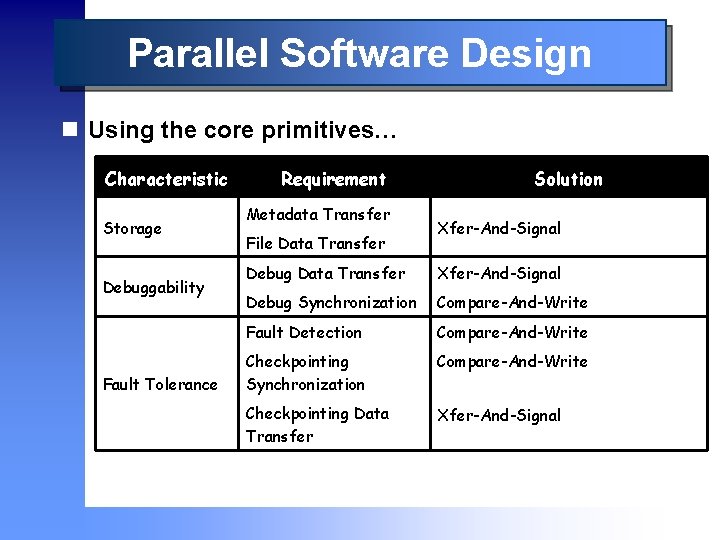

Parallel Software Design

- Using the core primitives…

| Characteristic | Requirement | Solution |
| --- | --- | --- |
| Storage | Metadata Transfer, File Data Transfer | Xfer-And-Signal |
| Debuggability | Debug Data Transfer | Xfer-And-Signal |
| Debuggability | Debug Synchronization | Compare-And-Write |
| Fault Tolerance | Fault Detection | Compare-And-Write |
| Fault Tolerance | Checkpointing Synchronization | Compare-And-Write |
| Fault Tolerance | Checkpointing Data Transfer | Xfer-And-Signal |

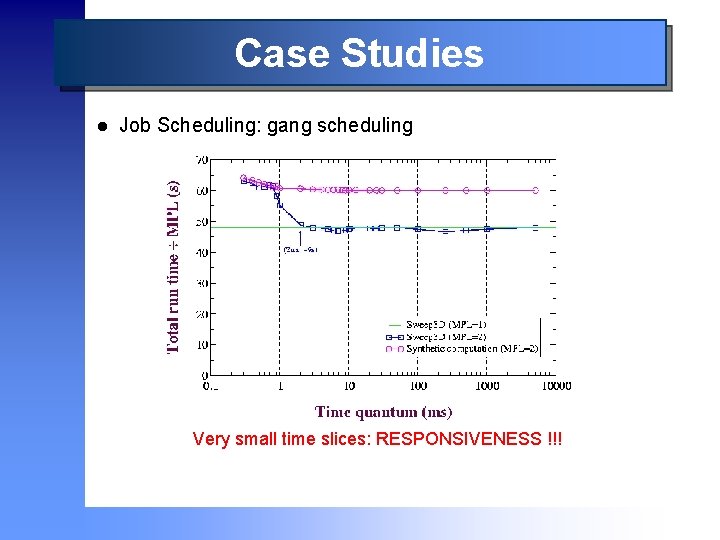

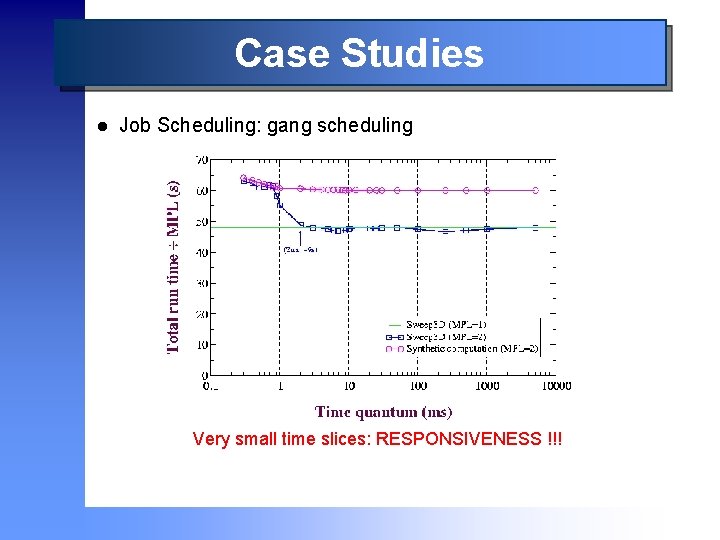

Case Studies

- Job Scheduling: gang scheduling
  - Very small time slices: RESPONSIVENESS!

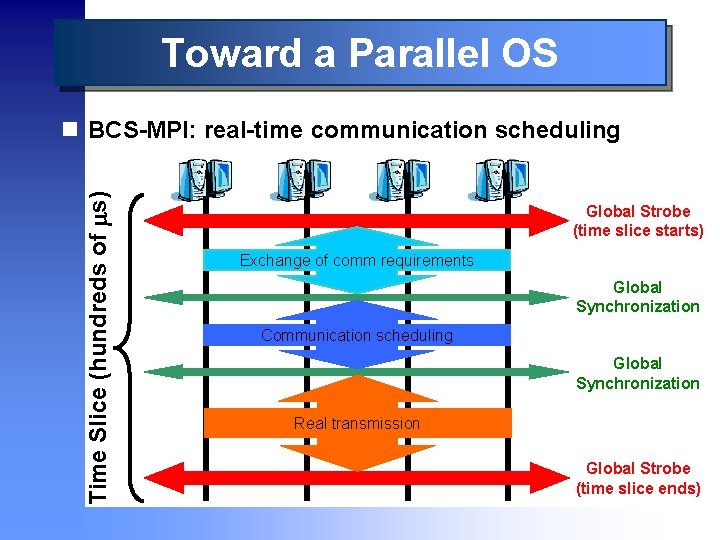

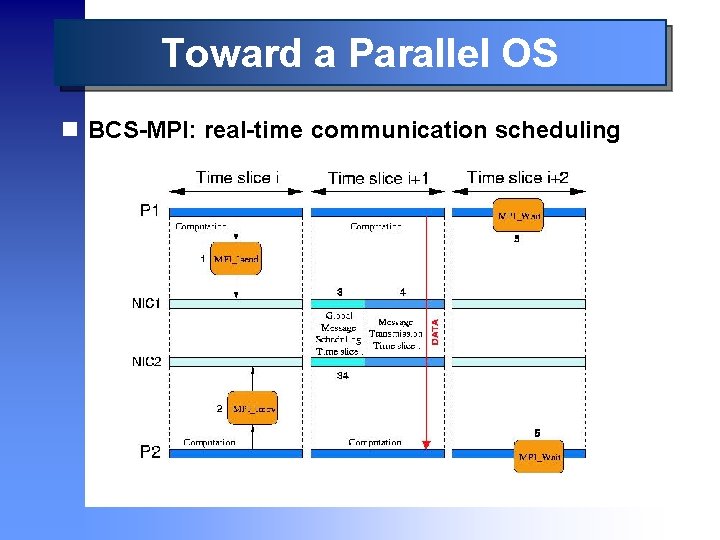

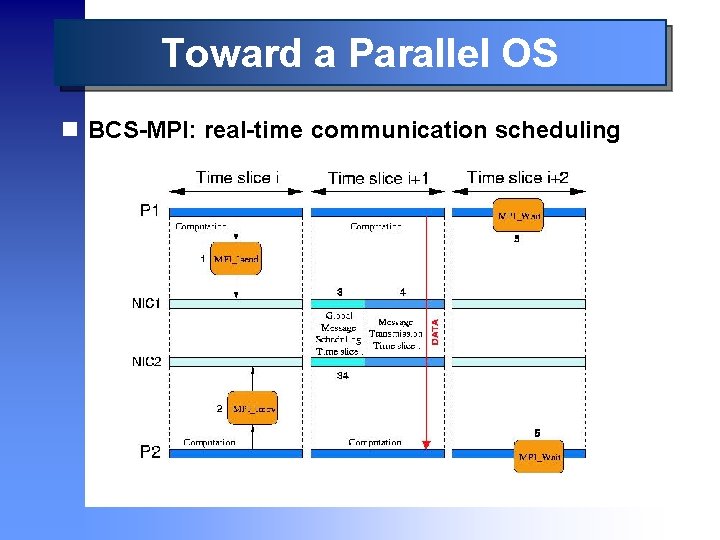

Toward a Parallel OS

- BCS-MPI: real-time communication scheduling
- Time slice (hundreds of µs):
  1. Global Strobe (time slice starts)
  2. Exchange of comm requirements
  3. Global Synchronization
  4. Communication scheduling
  5. Global Synchronization
  6. Real transmission
  7. Global Strobe (time slice ends)
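The three phases of a BCS-MPI time slice (exchange of requirements, communication scheduling, real transmission) can be sketched as follows. This is a simplified model under assumptions: the data layout and `run_time_slice` are hypothetical, and the scheduling rule used here (a send is scheduled only if the matching receive has been posted, otherwise it is deferred to a later slice) is an illustrative policy, not necessarily the one BCS-MPI applies.

```python
def run_time_slice(nodes):
    """Simulate one time slice.

    nodes: {rank: {"sends": [(dst, payload), ...], "recvs": [src, ...]}}
    Returns (delivered, deferred), where delivered maps each destination rank
    to a list of (src, payload) and deferred lists unsent (src, dst, payload).
    """
    # Phase 1: exchange of communication requirements (build a global view).
    sends = [(src, dst, payload)
             for src, node in nodes.items()
             for dst, payload in node["sends"]]
    recvs = {(src, dst) for dst, node in nodes.items() for src in node["recvs"]}

    # A global synchronization would separate the phases here.

    # Phase 2: communication scheduling (assumed rule: match sends to receives).
    scheduled = [m for m in sends if (m[0], m[1]) in recvs]
    deferred = [m for m in sends if (m[0], m[1]) not in recvs]

    # Phase 3: real transmission of the scheduled messages only.
    delivered = {}
    for src, dst, payload in scheduled:
        delivered.setdefault(dst, []).append((src, payload))
    return delivered, deferred
```

Because the set of transfers is fixed before any data moves, the same inputs always produce the same schedule, which is the property the deck relates to deterministic replay.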

Toward a Parallel OS

- BCS-MPI: real-time communication scheduling