Designing Large-Scale Assessment to Support Learning

- Slides: 27

Dylan Wiliam, Institute of Education, University of London

Overview
1. The purposes of assessment
2. The structure of the assessment system
3. The locus of assessment
4. The extensiveness of the assessment
5. Assessment format
6. Scoring models
7. Quality issues
8. The role of teachers
9. Contextual issues

Functions of assessment
• For evaluating institutions
• For describing individuals
• For supporting learning
  – Monitoring learning
    • Whether learning is taking place
  – Diagnosing (informing) learning
    • What is not being learnt
  – Forming learning
    • What to do about it

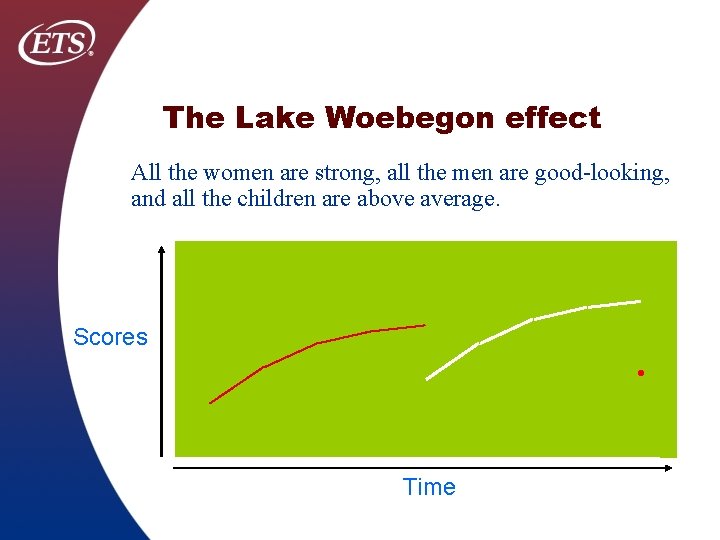

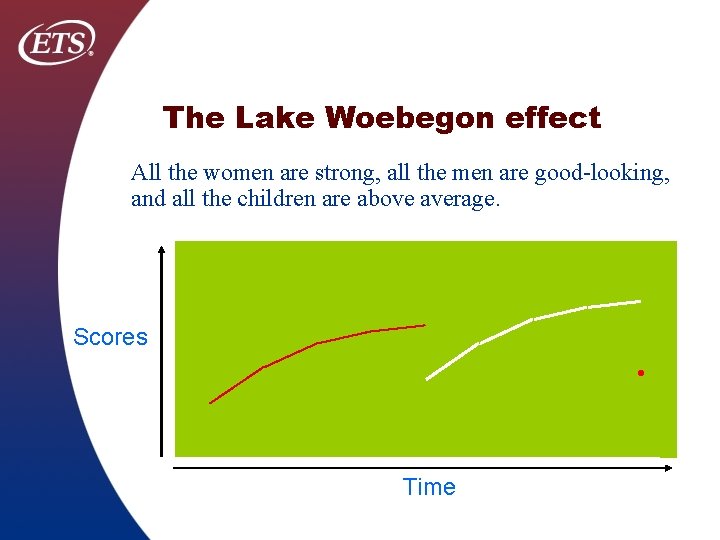

The Lake Wobegon effect
“All the women are strong, all the men are good-looking, and all the children are above average.”
Figure: scores plotted against time

Goodhart’s law
• All performance indicators lose their usefulness when used as objects of policy
  – Privatization of British Rail
  – Targets in the Health Service
  – “Bubble” students in high-stakes settings

Reconciling different pressures
• The “high-stakes” genie is out of the bottle, and we cannot put it back
• The only thing left to us is to try to develop “tests worth teaching to”

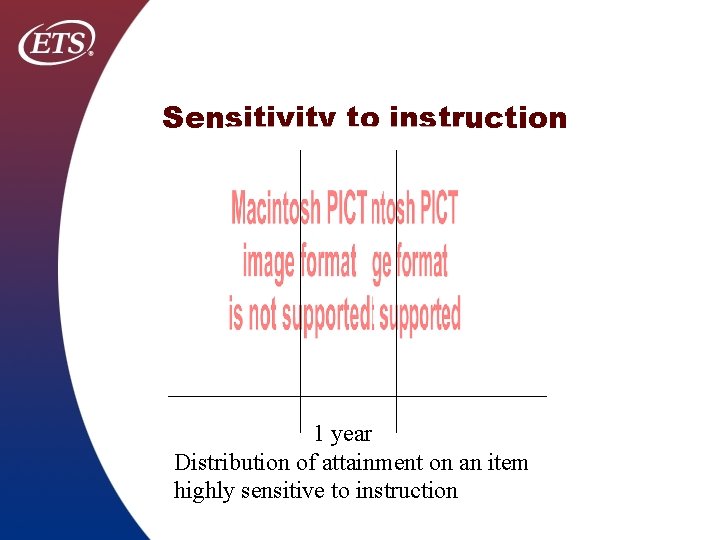

Sensitivity to instruction
Figure: distribution of attainment on an item highly sensitive to instruction (interval marked “1 year”)

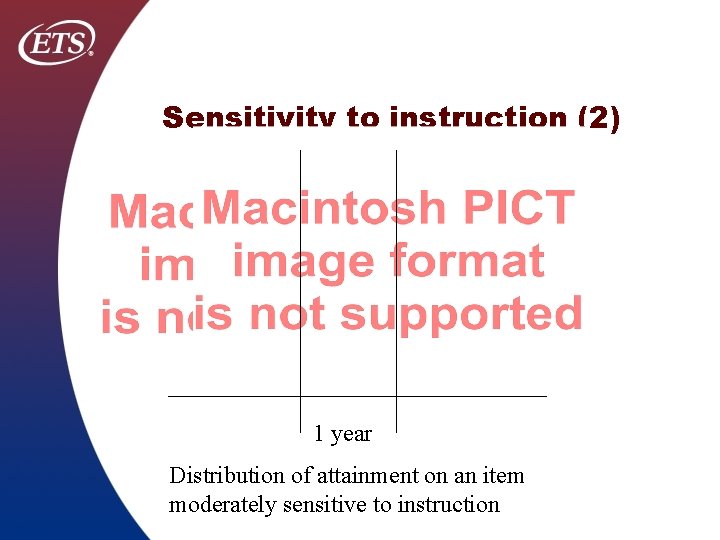

Sensitivity to instruction (2)
Figure: distribution of attainment on an item moderately sensitive to instruction (interval marked “1 year”)

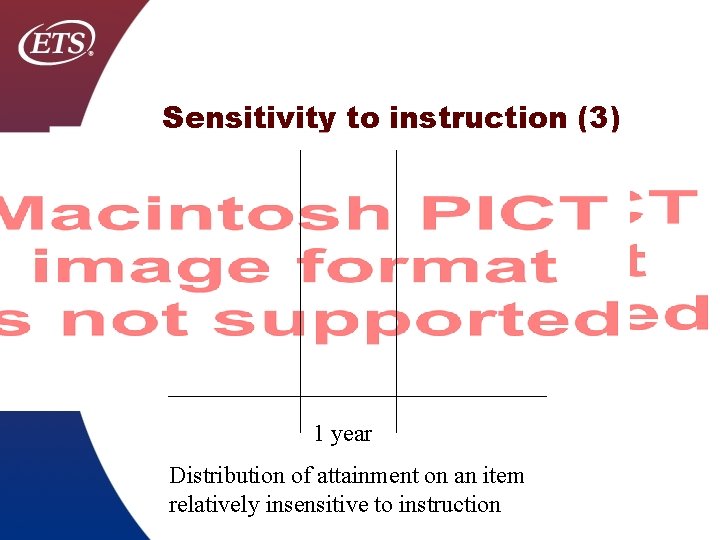

Sensitivity to instruction (3)
Figure: distribution of attainment on an item relatively insensitive to instruction (interval marked “1 year”)

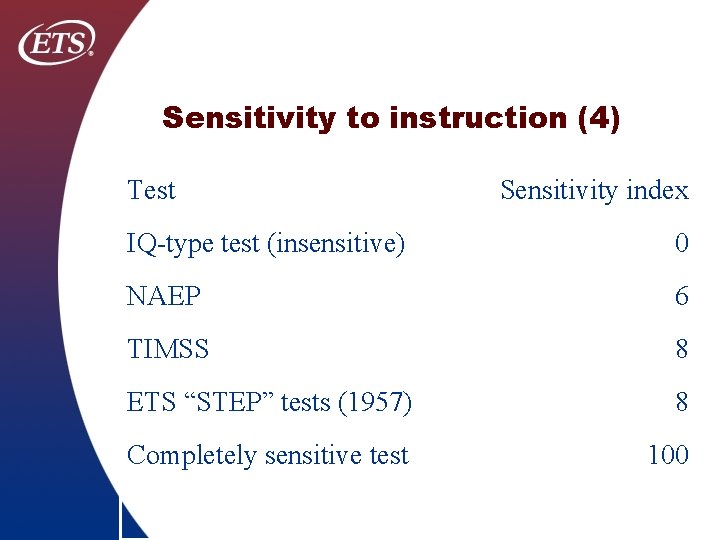

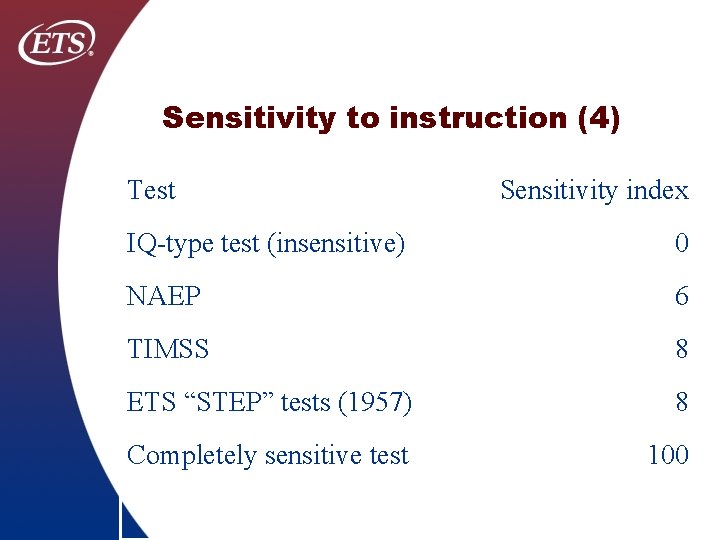

Sensitivity to instruction (4)
Sensitivity index by test:
• IQ-type test (insensitive): 0
• NAEP: 6
• TIMSS: 8
• ETS “STEP” tests (1957): 8
• Completely sensitive test: 100
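The slides do not say how the sensitivity index is calculated. As a rough illustration only, one common way to express how far a year of instruction shifts a distribution relative to its spread is a standardized mean difference (Cohen's d with a pooled standard deviation) between two cohorts a year apart. The function name `one_year_effect_size` and the sample scores are hypothetical, and this is not claimed to be the index reported for NAEP, TIMSS, or the STEP tests.

```python
import statistics


def one_year_effect_size(younger: list[float], older: list[float]) -> float:
    """Standardized mean difference (Cohen's d, pooled SD) between two cohorts.

    Assumption (not from the slides): the shift produced by one year of
    instruction is summarized as the gap between cohort means divided by
    the pooled within-cohort standard deviation.
    """
    n1, n2 = len(younger), len(older)
    if n1 < 2 or n2 < 2:
        raise ValueError("each cohort needs at least two scores")
    # Sample variances (n - 1 denominator), pooled by degrees of freedom
    v1 = statistics.variance(younger)
    v2 = statistics.variance(older)
    pooled_sd = (((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2)) ** 0.5
    return (statistics.mean(older) - statistics.mean(younger)) / pooled_sd


# Hypothetical item scores for two cohorts one year apart
younger_cohort = [1, 2, 3, 4, 5]
older_cohort = [2, 3, 4, 5, 6]
print(round(one_year_effect_size(younger_cohort, older_cohort), 3))  # 0.632
```

A larger value means a year of instruction moves the item's distribution further relative to how spread out students already are; a value near zero corresponds to an item that is relatively insensitive to instruction.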

Consequences (1)

Consequences (2)

Consequences (3)

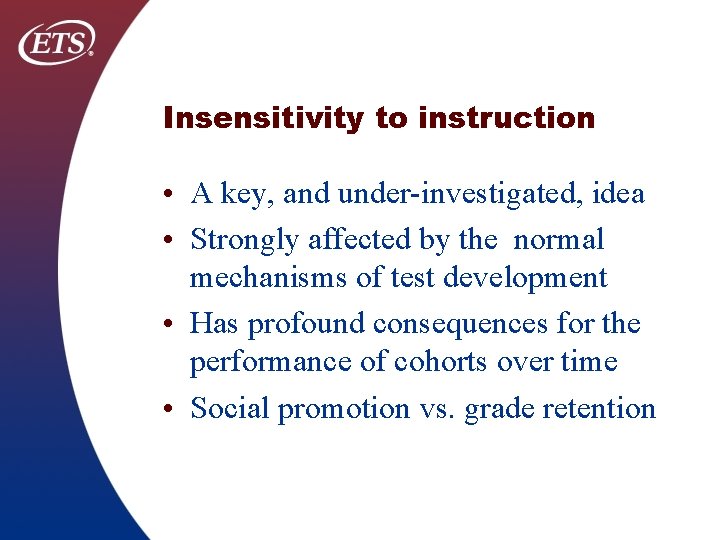

Insensitivity to instruction
• A key, and under-investigated, idea
• Strongly affected by the normal mechanisms of test development
• Has profound consequences for the performance of cohorts over time
• Social promotion vs. grade retention

Curriculum design
• Curricula must be designed vertically, not horizontally
• Key idea: when someone gets better, what is it that gets better?
• Learning progressions

The locus of assessment
• Authority
  – Who creates the assessment?
• Resources
  – What resources does the student have?
• Interactivity
  – Negotiation of meaning of response
• Scoring
  – Externalization of standards increases accuracy

The extensiveness of assessment
• Are all students assessed on the same basis?
• Notions of “fairness”
  – Adaptations and adjustments for some
  – But not all
  – Treating different people the same is no fairer than treating similar people differently

Assessment formats
• Multiple-choice
  – Larger (but unsystematic) coverage
  – Low cost
  – Negative backwash effects
• Constructed response
  – Large item-student effects
  – High cost
  – Positive backwash effects
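One standard way to see why large item-student effects make constructed-response assessment expensive is generalizability theory. In a simple design where every student attempts the same n tasks, the relative generalizability coefficient is var_person / (var_person + var_residual / n), where var_person is the true between-student variance and var_residual pools the student-by-task interaction with error. The variance components below are hypothetical, chosen only to illustrate a large student-by-task term; they are not data from the slides.

```python
def g_coefficient(var_person: float, var_residual: float, n_tasks: int) -> float:
    """Relative generalizability coefficient for a persons-by-tasks design.

    var_person:   variance of students' universe (true) scores
    var_residual: person-by-task interaction variance, confounded with error
    n_tasks:      number of tasks each student attempts
    """
    return var_person / (var_person + var_residual / n_tasks)


def tasks_needed(var_person: float, var_residual: float, target: float,
                 max_tasks: int = 1000) -> int:
    """Smallest number of tasks whose coefficient reaches `target`."""
    for n in range(1, max_tasks + 1):
        # Small tolerance guards against floating-point round-off at the boundary
        if g_coefficient(var_person, var_residual, n) >= target - 1e-12:
            return n
    raise ValueError("target not reachable within max_tasks")


# Hypothetical components: the student-by-task term is three times the
# between-student variance.
print(g_coefficient(1.0, 3.0, 1))    # 0.25 with a single task
print(tasks_needed(1.0, 3.0, 0.8))   # 12 tasks for a coefficient of 0.8
```

Under these assumed values a single extended task says little about a student, and a dozen are needed before scores generalize well, which is one way to read the “high cost” of constructed-response formats.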

Item format and sex differences
Figure: items compared by format (labels: “Boys do better”, “Girls do better”)

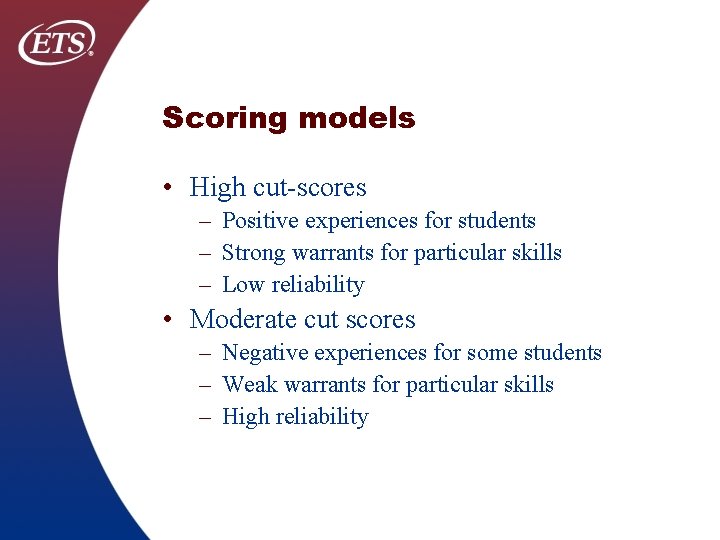

Scoring models
• High cut-scores
  – Positive experiences for students
  – Strong warrants for particular skills
  – Low reliability
• Moderate cut-scores
  – Negative experiences for some students
  – Weak warrants for particular skills
  – High reliability

Quality issues
• Threats to validity
  – Construct under-representation
  – Construct-irrelevant variance
  – Unreliability
• Dynamic trade-offs between these

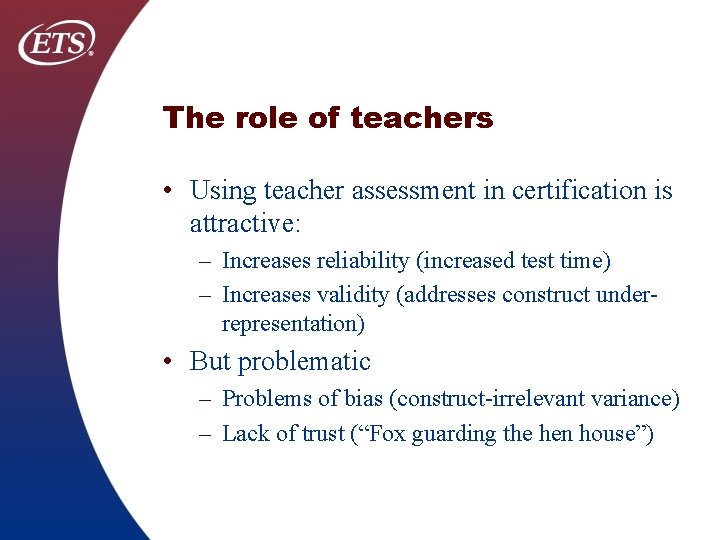

The role of teachers
• Using teacher assessment in certification is attractive:
  – Increases reliability (increased test time)
  – Increases validity (addresses construct under-representation)
• But problematic
  – Problems of bias (construct-irrelevant variance)
  – Lack of trust (“Fox guarding the hen house”)
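The point that teacher assessment “increases reliability (increased test time)” can be illustrated with the Spearman-Brown prophecy formula, which predicts the reliability of a test lengthened by a factor k as k·r / (1 + (k − 1)·r). The formula assumes the added assessment is parallel to the original test, which is exactly what bias in teacher judgment (construct-irrelevant variance) would undermine, so the numbers are illustrative only. The starting reliability of 0.8 and the doubling factor are hypothetical.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability after lengthening a test by `length_factor`.

    Assumes the added assessment is parallel to the original (same construct,
    same error properties); biased teacher judgments would break this.
    """
    k, r = length_factor, reliability
    return k * r / (1 + (k - 1) * r)


def length_factor_needed(reliability: float, target: float) -> float:
    """Inverse Spearman-Brown: how many times longer the test must be."""
    return target * (1 - reliability) / (reliability * (1 - target))


# Hypothetical: an external test with reliability 0.8, with teacher assessment
# treated as adding the equivalent of one more test of the same length.
print(round(spearman_brown(0.8, 2), 3))          # 0.889
print(round(length_factor_needed(0.8, 0.9), 3))  # 2.25
```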

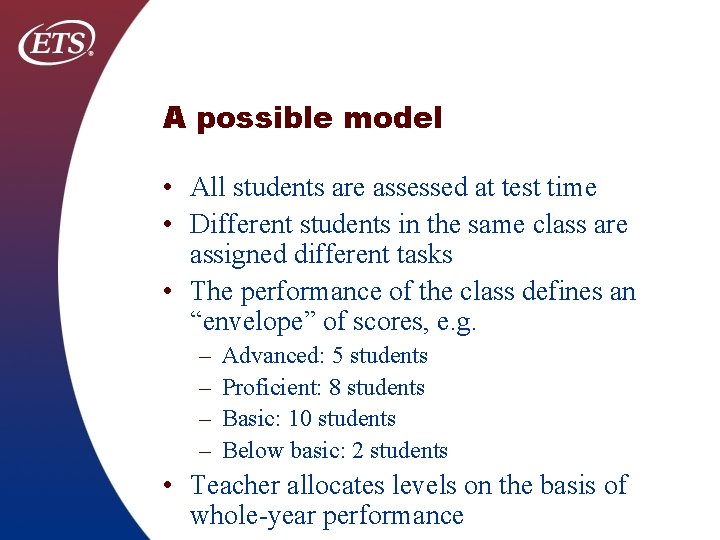

A possible model
• All students are assessed at test time
• Different students in the same class are assigned different tasks
• The performance of the class defines an “envelope” of scores, e.g.
  – Advanced: 5 students
  – Proficient: 8 students
  – Basic: 10 students
  – Below basic: 2 students
• Teacher allocates levels on the basis of whole-year performance
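One reading of this model is that the class's test results fix how many students receive each level, while the teacher decides which students those are. The sketch below assumes the teacher can rank the class from strongest to weakest on whole-year performance and fills the envelope from the top level down. The function `allocate_levels`, the ranking input, and the student labels are hypothetical; the slide does not specify how ties or disagreements would be handled.

```python
def allocate_levels(envelope: list[tuple[str, int]],
                    teacher_ranking: list[str]) -> dict[str, str]:
    """Assign levels so that the counts match the class's test-derived envelope.

    envelope:        (level, count) pairs ordered from highest level to lowest
    teacher_ranking: students ordered by the teacher's judgment of whole-year
                     performance, strongest first
    """
    total = sum(count for _, count in envelope)
    if total != len(teacher_ranking):
        raise ValueError("envelope counts must equal the number of students")
    levels: dict[str, str] = {}
    position = 0
    for level, count in envelope:
        # The next `count` students in the teacher's ranking get this level
        for student in teacher_ranking[position:position + count]:
            levels[student] = level
        position += count
    return levels


# The envelope from the slide: 25 students in total
envelope = [("Advanced", 5), ("Proficient", 8), ("Basic", 10), ("Below basic", 2)]
ranking = [f"student_{i:02d}" for i in range(1, 26)]  # hypothetical, best first
levels = allocate_levels(envelope, ranking)
print(levels["student_05"], levels["student_06"], levels["student_25"])
# Advanced Proficient Below basic
```

Because the counts come from the class's performance on the tasks, raising one student's level would mean lowering another's; under this reading, the only way for a class to earn more high levels is for its members to do better on the assessed tasks.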

Benefits and problems
• Benefits
  – The only way to teach to the test is to improve everyone’s performance on everything (which is what we want!)
  – Validity and reliability are enhanced
• Problems
  – Students’ scores are not “inspectable”
  – Assumes student motivation

The effects of context
• beliefs about what constitutes learning;
• beliefs in the reliability and validity of the results of various tools;
• a preference for and trust in numerical data, with bias towards a single number;
• trust in the judgments and integrity of the teaching profession;
• belief in the value of competition between students;
• belief in the value of competition between schools;
• belief that test results measure school effectiveness;
• fear of national economic decline and education’s role in this;
• belief that the key to schools’ effectiveness is strong top-down management;

Conclusion
• There is no “perfect” assessment system anywhere.
• Each nation’s assessment system is exquisitely tuned to local constraints and affordances.
• Assessment practices have impacts on teaching and learning which may be strongly amplified or attenuated by the national context.
• The overall impact of particular assessment practices and initiatives is determined at least as much by culture and politics as it is by educational evidence and values.

Conclusion (2)
• It is probably idle to draw up maps for the ideal assessment policy for a country, even though the principles and the evidence to support such an ideal might be clearly agreed within the ‘expert’ community.
• Instead, focus on those arguments and initiatives which are least offensive to existing assumptions and beliefs, and which will nevertheless serve to catalyze a shift in them while at the same time improving some aspects of present practice.