Design Patterns: Supporting Task Design by Scaffolding the Assessment Argument
Robert J. Mislevy, University of Maryland
Geneva Haertel & Britte Haugan Cheng, SRI International
DR K-12 grant #0733172, “Application of Evidence-Centered Design to State Large-Scale Science Assessment.” NSF Discovery Research K-12 PI meeting, November 10, Washington, D.C.
This material is based upon work supported by the National Science Foundation under Grant No. DRL-0733172. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Overview
§ Design patterns
§ Background
§ Evidence-Centered Design
§ Main idea
§ Layers
§ Assessment Arguments
§ Attributes of Design Patterns
§ How they inform task design

Design Patterns
§ Design Patterns in Architecture
§ Design Patterns in Software Engineering
§ Polti’s Thirty-Six Dramatic Situations

Messick’s Guiding Questions
§ What complex of knowledge, skills, or other attributes should be assessed?
§ What behaviors or performances should reveal those constructs?
§ What tasks or situations should elicit those behaviors?
Messick, S. (1994). The interplay of evidence and consequences in the validation of performance assessments. Educational Researcher, 23(2), 13-23.

Evidence-Centered Assessment Design
§ Organizing formally around Messick quote
§ Principled framework for designing, producing, and delivering assessments
§ Conceptual model, object model, design tools
§ Connections among design, inference, and processes to create and deliver assessments
§ Particularly useful for new / complex assessments
§ Useful to think in terms of layers

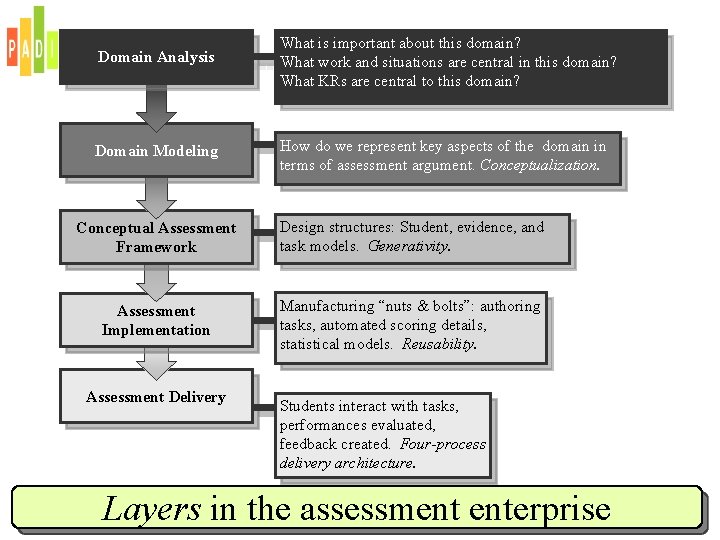

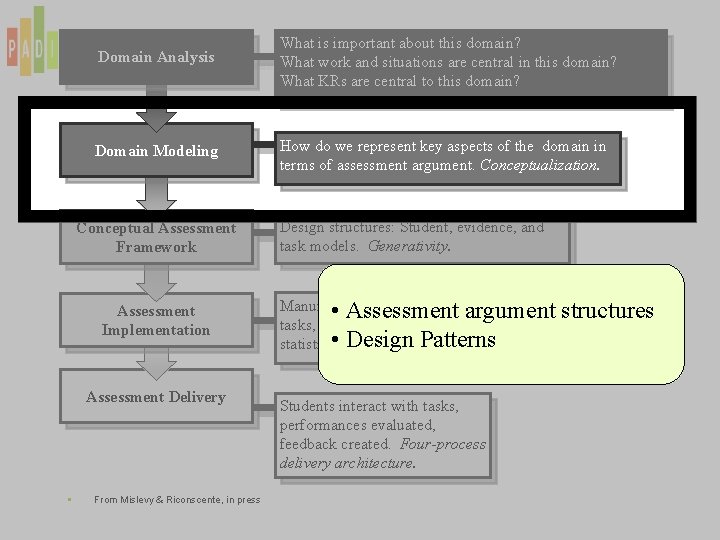

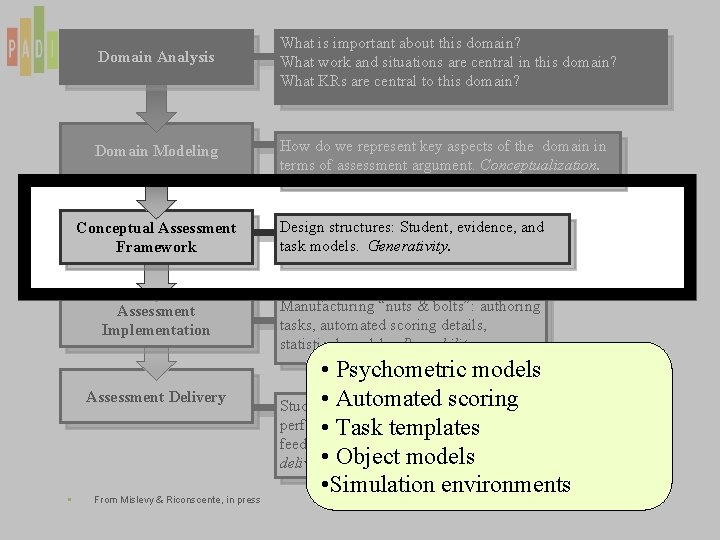

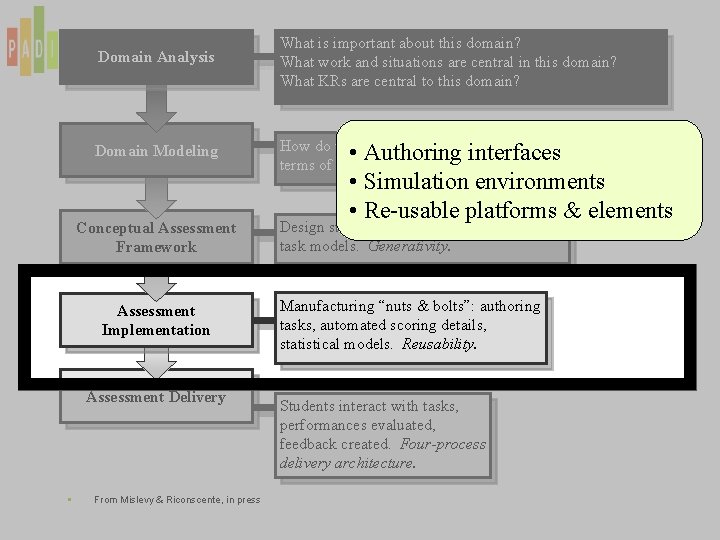

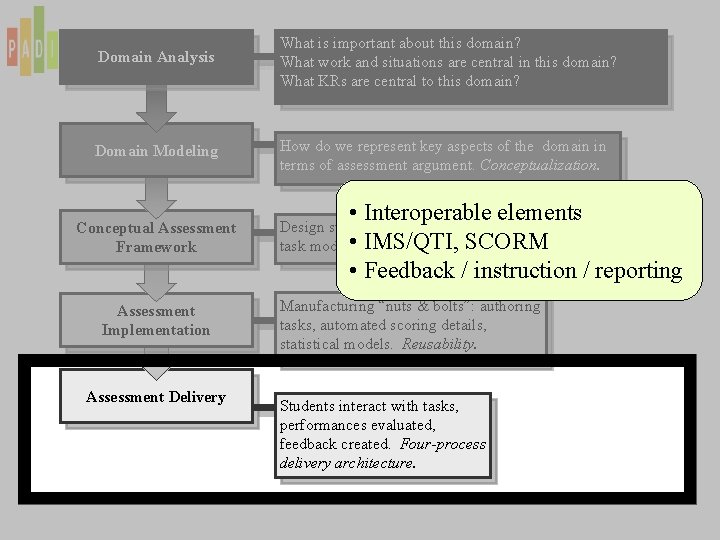

Layers in the assessment enterprise
§ Domain Analysis: What is important about this domain? What work and situations are central in this domain? What KRs (knowledge representations) are central to this domain?
§ Domain Modeling: How do we represent key aspects of the domain in terms of assessment argument? Conceptualization.
§ Conceptual Assessment Framework: Design structures: student, evidence, and task models. Generativity.
§ Assessment Implementation: Manufacturing “nuts & bolts”: authoring tasks, automated scoring details, statistical models. Reusability.
§ Assessment Delivery: Students interact with tasks, performances evaluated, feedback created. Four-process delivery architecture.
From Mislevy & Riconscente, in press

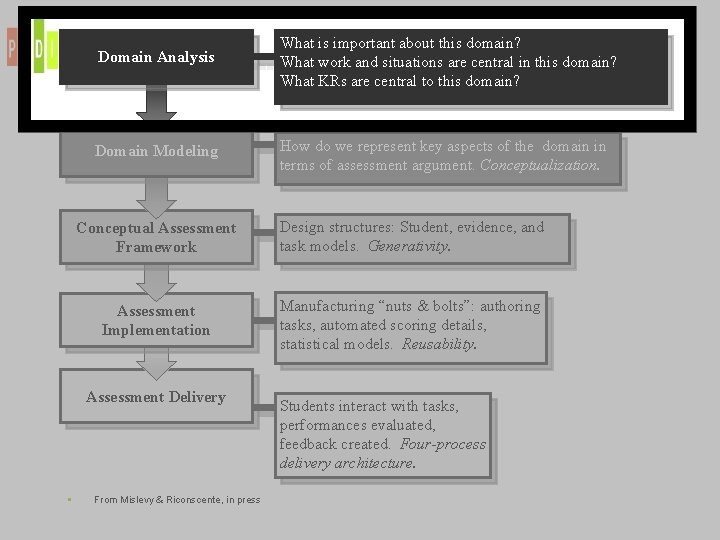

Layers in the assessment enterprise (cont.)
Highlighted on this slide:
• Assessment argument structures
• Design Patterns

Layers in the assessment enterprise (cont.)
Highlighted on this slide:
• Psychometric models
• Automated scoring
• Task templates
• Object models
• Simulation environments

Layers in the assessment enterprise (cont.)
Highlighted on this slide:
• Authoring interfaces
• Simulation environments
• Re-usable platforms & elements

Layers in the assessment enterprise (cont.)
Highlighted on this slide:
• Interoperable elements
• IMS/QTI, SCORM
• Feedback / instruction / reporting

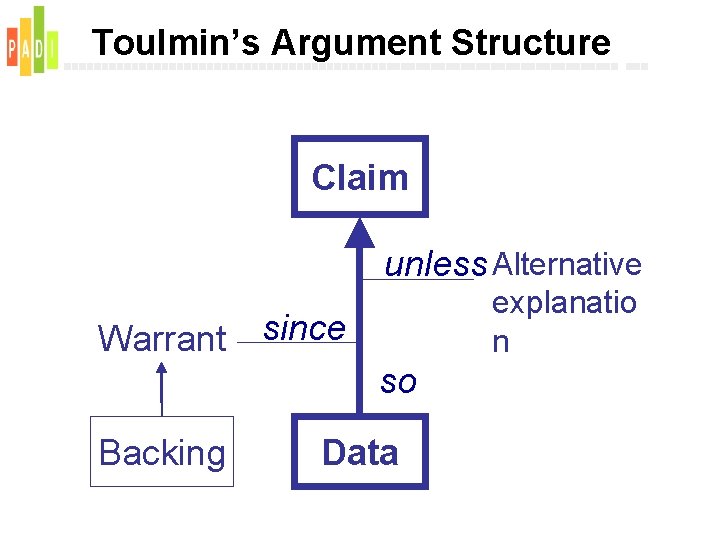

Toulmin’s Argument Structure
§ Data, so Claim
§ since Warrant (which rests on Backing)
§ unless Alternative explanation

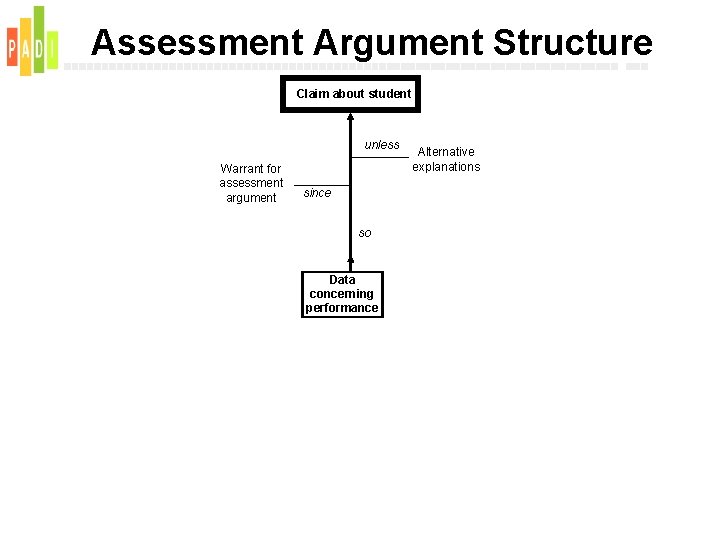

Assessment Argument Structure
§ Data concerning performance, so Claim about student
§ since Warrant for assessment argument
§ unless Alternative explanations

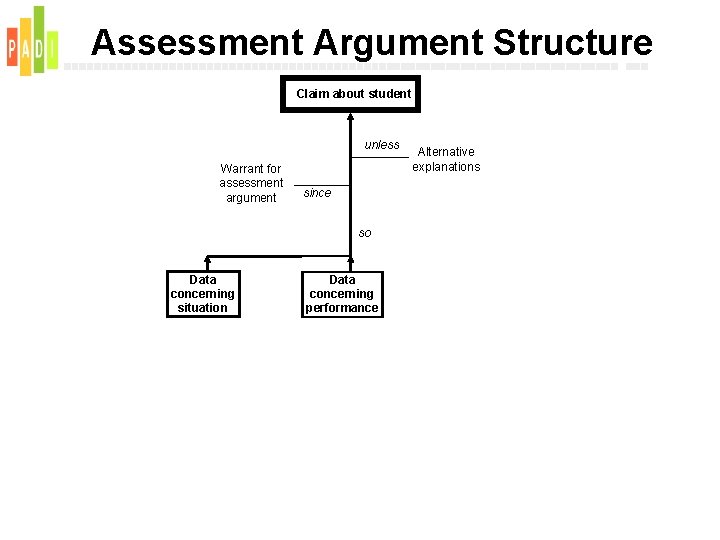

Assessment Argument Structure
§ Data concerning performance and Data concerning situation, so Claim about student
§ since Warrant for assessment argument
§ unless Alternative explanations

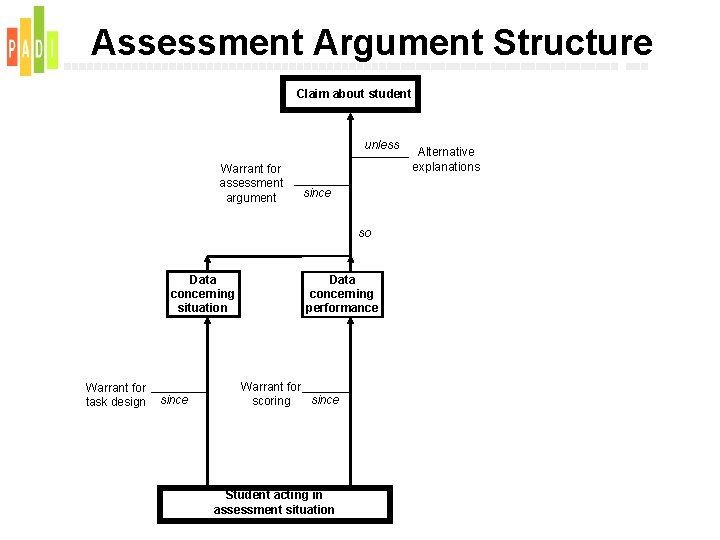

Assessment Argument Structure
§ Data concerning performance and Data concerning situation, so Claim about student
§ since Warrant for assessment argument
§ unless Alternative explanations
§ Data concerning situation: since Warrant for task design
§ Data concerning performance: since Warrant for scoring
§ Both kinds of data come from the Student acting in assessment situation

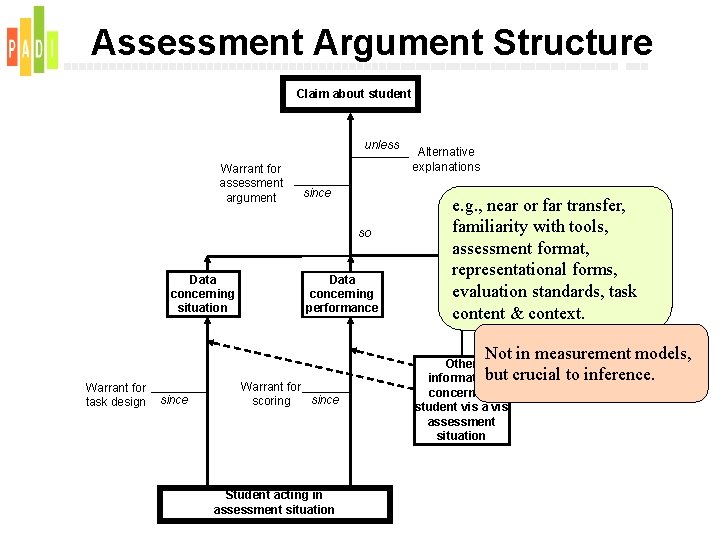

Assessment Argument Structure
§ Data concerning performance and Data concerning situation, so Claim about student
§ since Warrant for assessment argument
§ unless Alternative explanations
§ Data concerning situation: since Warrant for task design
§ Data concerning performance: since Warrant for scoring
§ Both kinds of data come from the Student acting in assessment situation
§ Other information concerning student vis-à-vis assessment situation: e.g., near or far transfer, familiarity with tools, assessment format, representational forms, evaluation standards, task content & context. Not in measurement models, but crucial to inference.
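
The argument structure on this slide can be read as a small data structure. The following is a minimal sketch, not part of the original presentation: the class name, field names, and the genetics example values are all hypothetical, chosen only to show how claim, data, warrant, and alternative explanations fit together.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AssessmentArgument:
    """Toulmin-style assessment argument (illustrative field names)."""
    claim: str                      # claim about the student
    data_performance: List[str]     # data concerning performance
    data_situation: List[str]       # data concerning situation
    warrant: str                    # warrant for the assessment argument
    alternative_explanations: List[str] = field(default_factory=list)
    other_information: List[str] = field(default_factory=list)

    def summary(self) -> str:
        """Render the argument as 'data, so claim, since warrant, unless ...'."""
        data = "; ".join(self.data_performance + self.data_situation)
        text = f"{data}, so {self.claim}, since {self.warrant}"
        if self.alternative_explanations:
            text += ", unless " + " or ".join(self.alternative_explanations)
        return text


# Hypothetical example values, for illustration only.
argument = AssessmentArgument(
    claim="the student can form a model of an inheritance pattern",
    data_performance=["the model accounts for the observed crosses"],
    data_situation=["the task supplied unexplained cross data"],
    warrant="students who can form models tend to produce adequate ones",
    alternative_explanations=["the student was unfamiliar with the tool"],
)
print(argument.summary())
```

Keeping alternative explanations as an explicit field makes it harder to forget them when a task is reviewed.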

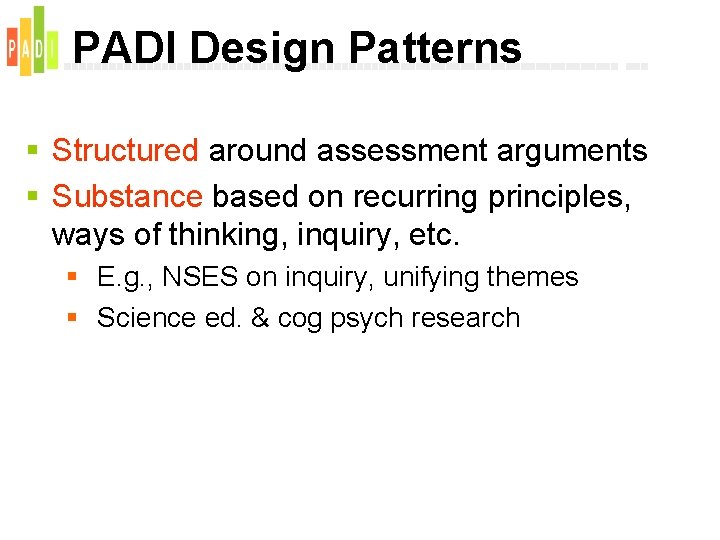

PADI Design Patterns
§ Structured around assessment arguments
§ Substance based on recurring principles, ways of thinking, inquiry, etc.
§ E.g., NSES on inquiry, unifying themes
§ Science ed. & cog psych research

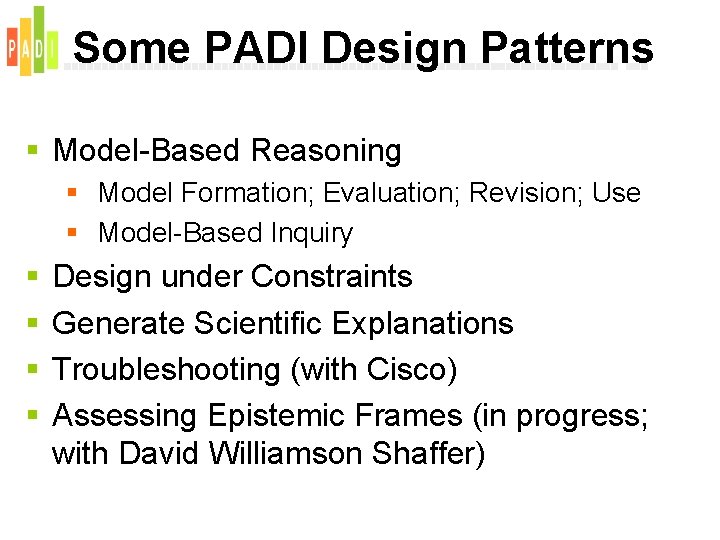

Some PADI Design Patterns
§ Model-Based Reasoning
§ Model Formation; Evaluation; Revision; Use
§ Model-Based Inquiry
§ Design under Constraints
§ Generate Scientific Explanations
§ Troubleshooting (with Cisco)
§ Assessing Epistemic Frames (in progress; with David Williamson Shaffer)

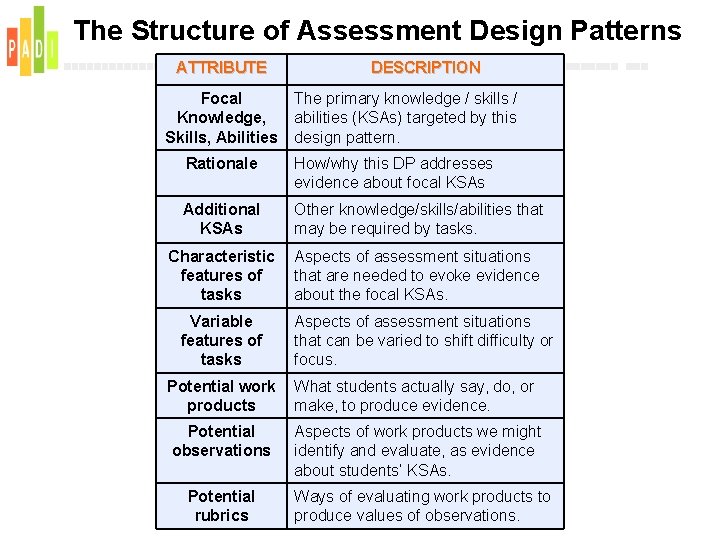

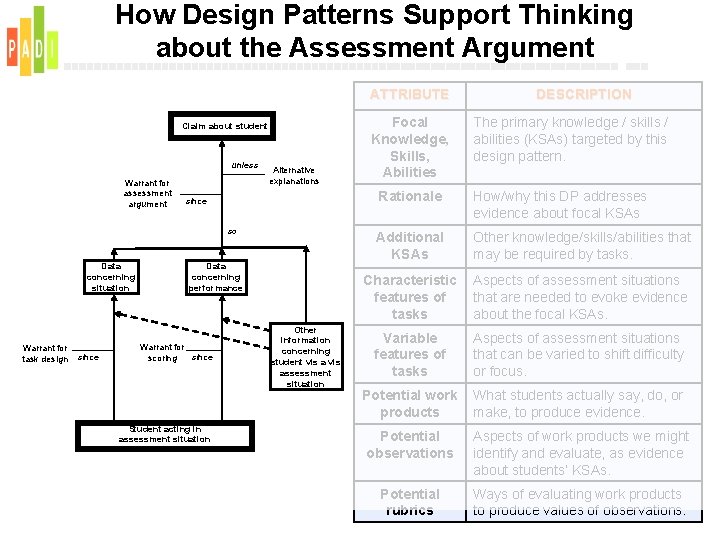

The Structure of Assessment Design Patterns

| ATTRIBUTE | DESCRIPTION |
| --- | --- |
| Focal Knowledge, Skills, Abilities | The primary knowledge / skills / abilities (KSAs) targeted by this design pattern. |
| Rationale | How/why this DP addresses evidence about focal KSAs. |
| Additional KSAs | Other knowledge/skills/abilities that may be required by tasks. |
| Characteristic features of tasks | Aspects of assessment situations that are needed to evoke evidence about the focal KSAs. |
| Variable features of tasks | Aspects of assessment situations that can be varied to shift difficulty or focus. |
| Potential work products | What students actually say, do, or make, to produce evidence. |
| Potential observations | Aspects of work products we might identify and evaluate, as evidence about students’ KSAs. |
| Potential rubrics | Ways of evaluating work products to produce values of observations. |
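
As a rough sketch of how the attributes on this slide might be held in software, the record below uses one field per attribute. The class, its field names, and the example values are assumptions made for illustration; they are not the PADI object model.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class DesignPattern:
    """One field per design pattern attribute (hypothetical names)."""
    title: str
    focal_ksas: List[str]
    rationale: str
    additional_ksas: List[str] = field(default_factory=list)
    characteristic_features: List[str] = field(default_factory=list)
    variable_features: List[str] = field(default_factory=list)
    potential_work_products: List[str] = field(default_factory=list)
    potential_observations: List[str] = field(default_factory=list)
    potential_rubrics: List[str] = field(default_factory=list)

    def missing_attributes(self) -> List[str]:
        """Names of attributes that have not been filled in yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical, partly completed pattern.
pattern = DesignPattern(
    title="Model Formation",
    focal_ksas=["Ability to form a model of a given situation"],
    rationale="Forming models is central to model-based reasoning.",
)
print(pattern.missing_attributes())
```

A check like `missing_attributes` is one way a design team could see which parts of the assessment argument a draft pattern has not yet addressed.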

How Design Patterns Support Thinking about the Assessment Argument
[Slide shows the design pattern attribute table alongside the assessment argument diagram.]

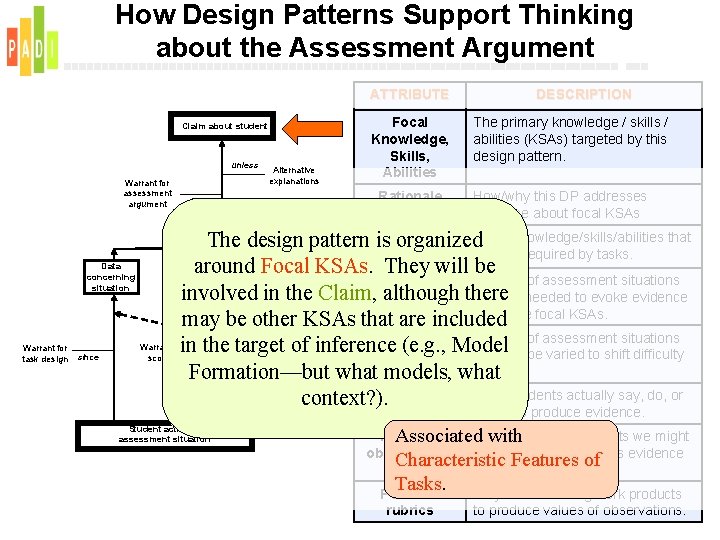

How Design Patterns Support Thinking about the Assessment Argument
The design pattern is organized around Focal KSAs. They will be involved in the Claim, although there may be other KSAs that are included in the target of inference (e.g., Model Formation, but what models, what context?). Associated with Characteristic Features of Tasks.

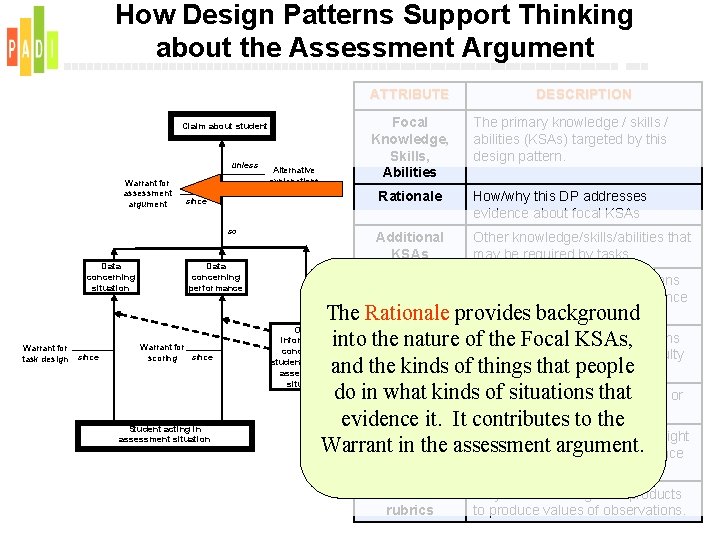

How Design Patterns Support Thinking about the Assessment Argument
The Rationale provides background into the nature of the Focal KSAs, and the kinds of things that people do in what kinds of situations that evidence it. It contributes to the Warrant in the assessment argument.

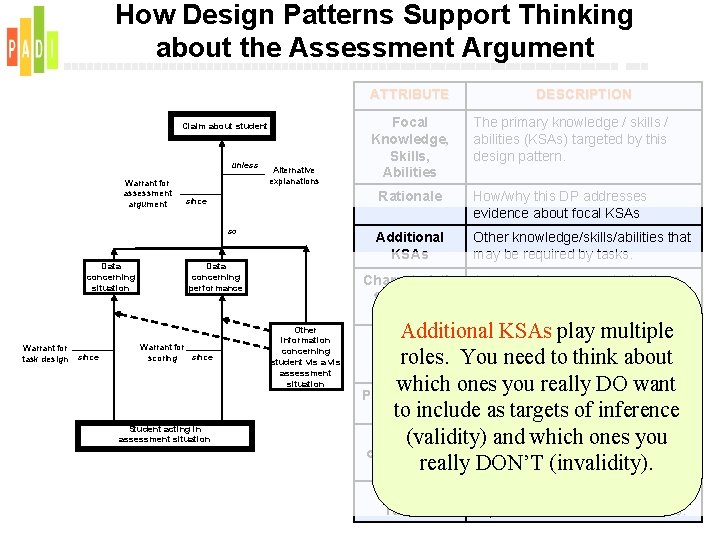

How Design Patterns Support Thinking about the Assessment Argument
Additional KSAs play multiple roles. You need to think about which ones you really DO want to include as targets of inference (validity) and which ones you really DON’T (invalidity).

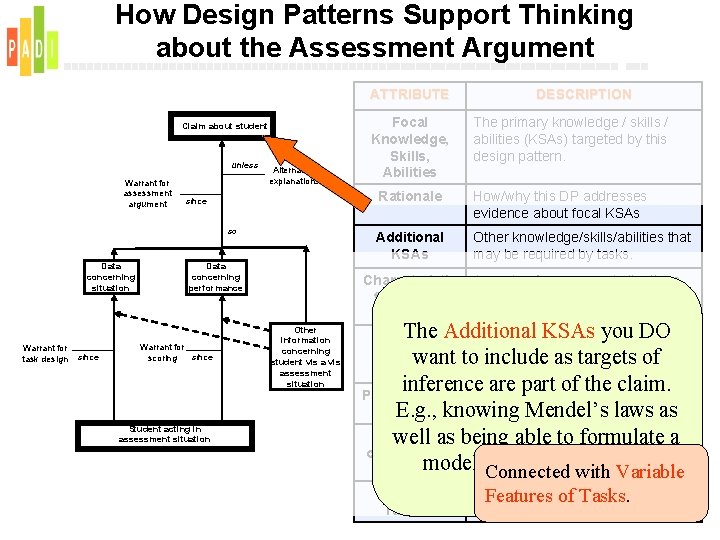

How Design Patterns Support Thinking about the Assessment Argument
The Additional KSAs you DO want to include as targets of inference are part of the claim. E.g., knowing Mendel’s laws as well as being able to formulate a model in an investigation. Connected with Variable Features of Tasks.

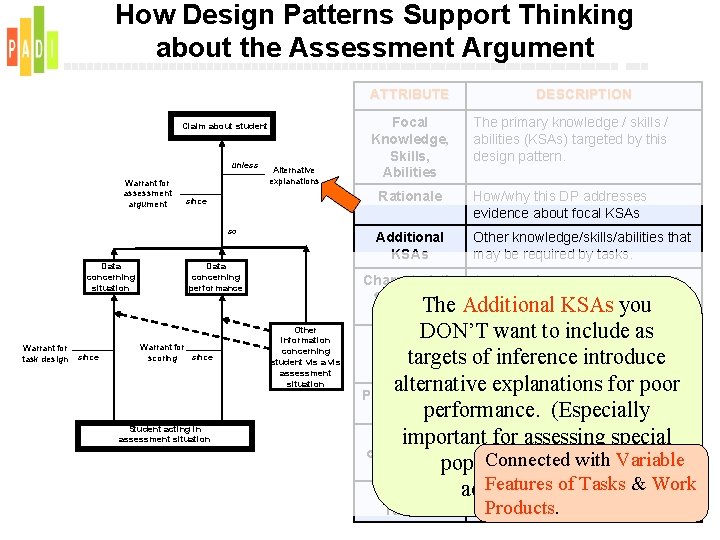

How Design Patterns Support Thinking about the Assessment Argument
The Additional KSAs you DON’T want to include as targets of inference introduce alternative explanations for poor performance. (Especially important for assessing special populations – UDL & accommodations.) Connected with Variable Features of Tasks & Work Products.

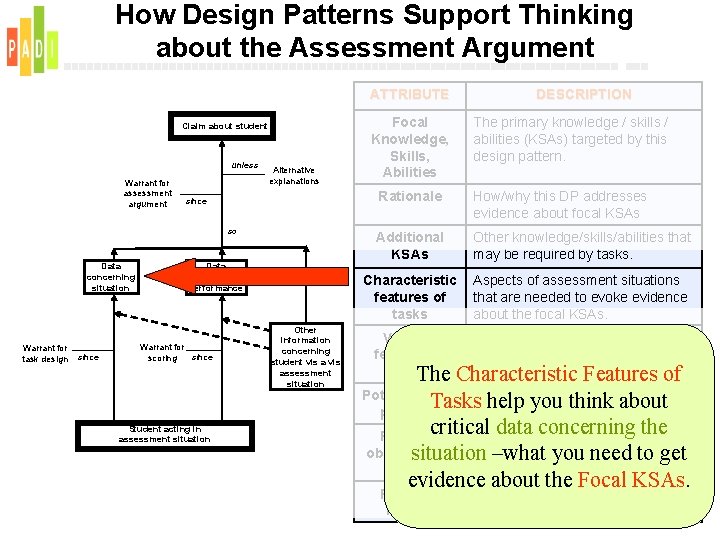

How Design Patterns Support Thinking about the Assessment Argument
The Characteristic Features of Tasks help you think about critical data concerning the situation – what you need to get evidence about the Focal KSAs.

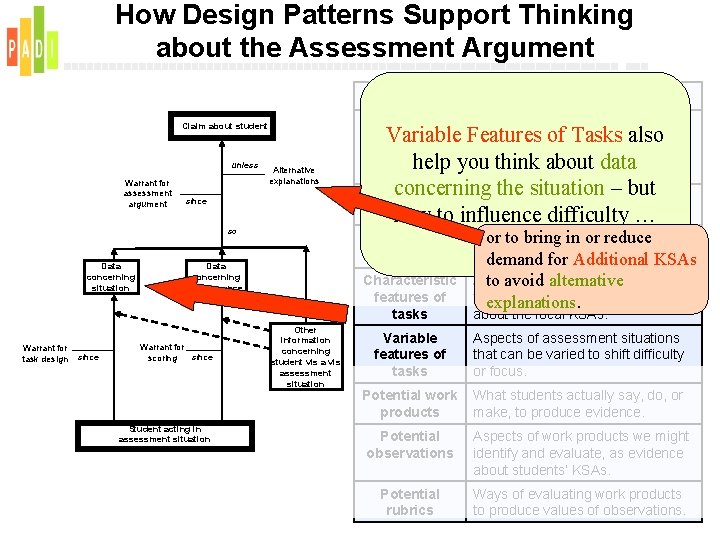

How Design Patterns Support Thinking about the Assessment Argument
Variable Features of Tasks also help you think about data concerning the situation – but now to influence difficulty, or to bring in or reduce demand for Additional KSAs to avoid alternative explanations.

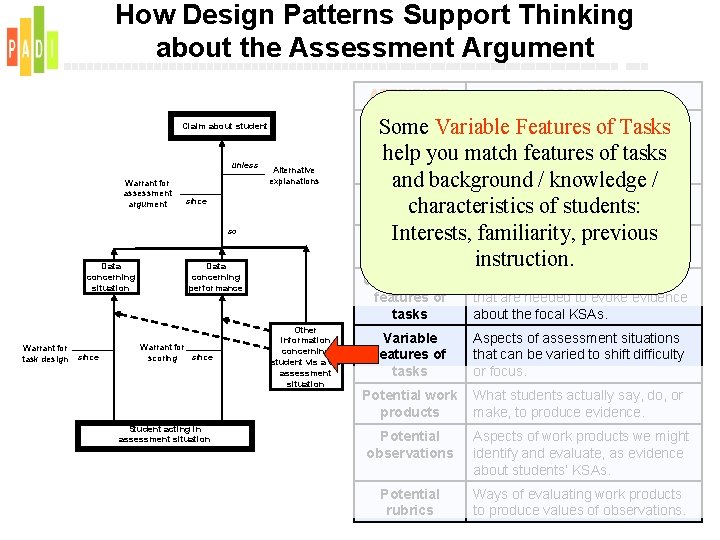

How Design Patterns Support Thinking about the Assessment Argument
Some Variable Features of Tasks help you match features of tasks and background / knowledge / characteristics of students: interests, familiarity, previous instruction.

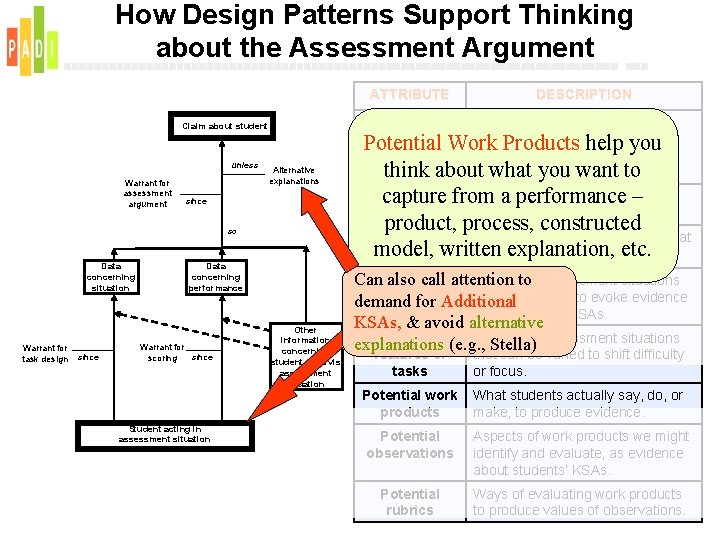

How Design Patterns Support Thinking about the Assessment Argument
Potential Work Products help you think about what you want to capture from a performance – product, process, constructed model, written explanation, etc. Can also call attention to demand for Additional KSAs, & avoid alternative explanations (e.g., Stella).

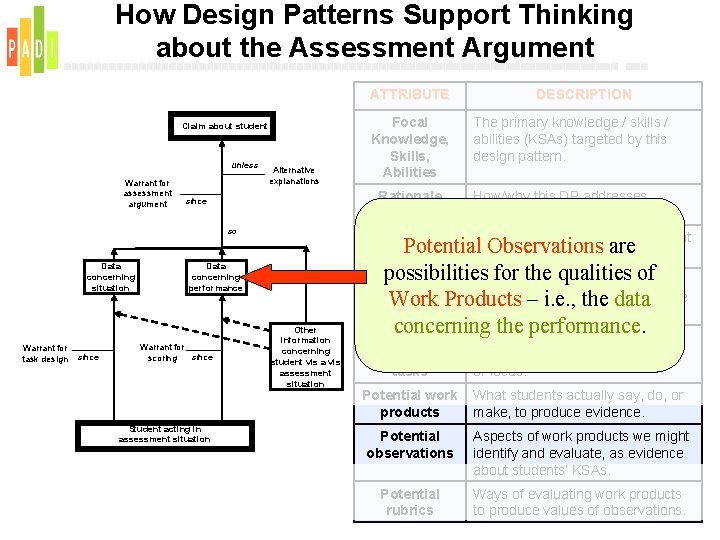

How Design Patterns Support Thinking about the Assessment Argument
Potential Observations are possibilities for the qualities of Work Products – i.e., the data concerning the performance.

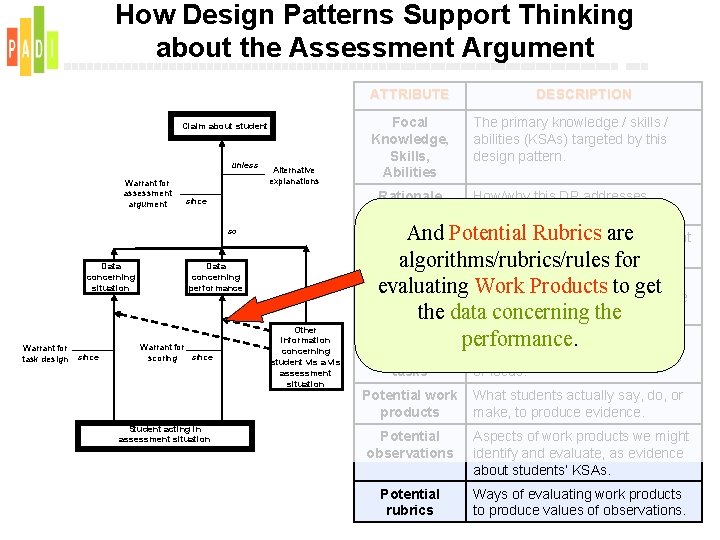

How Design Patterns Support Thinking about the Assessment Argument
Potential Rubrics are algorithms/rubrics/rules for evaluating Work Products to get the data concerning the performance.
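
The slides in this sequence connect each design pattern attribute to a part of the assessment argument. The lookup below is a sketch of that correspondence. The dictionary and function names are hypothetical, and placing Potential Work Products and Potential Rubrics under "Data concerning performance" is an assumption based on the slide wording, not a statement of the authors' formal model.

```python
from typing import Dict, List

# Attribute -> argument component, as read from the slide callouts.
ARGUMENT_ROLE: Dict[str, str] = {
    "Focal KSAs": "Claim about student",
    "Rationale": "Warrant for assessment argument",
    "Characteristic features of tasks": "Data concerning situation",
    "Variable features of tasks": "Data concerning situation",
    # Assumption: work products and rubrics are grouped with the
    # performance data they give rise to.
    "Potential work products": "Data concerning performance",
    "Potential observations": "Data concerning performance",
    "Potential rubrics": "Data concerning performance",
}


def role_of_additional_ksa(is_target_of_inference: bool) -> str:
    """Additional KSAs join the claim if targeted, else they are rival explanations."""
    if is_target_of_inference:
        return "Claim about student"
    return "Alternative explanations"


def attributes_for(component: str) -> List[str]:
    """All attributes that inform the given argument component, sorted by name."""
    return sorted(a for a, c in ARGUMENT_ROLE.items() if c == component)


print(attributes_for("Data concerning situation"))
print(role_of_additional_ksa(False))
```

Additional KSAs get a function rather than a dictionary entry because their role depends on a design decision: whether the KSA is meant to be a target of inference.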

For more information…
§ PADI: Principled Assessment Design for Inquiry
  § http://padi.sri.com
  § NSF project, collaboration with SRI et al.
  § Links to follow-on projects
§ Bob Mislevy home page
  § http://www.education.umd.edu/EDMS/mislevy/
  § Links to papers on ECD
  § Cisco applications

Now for the Good Stuff …
§ Examples of design patterns with content
  § Different projects
  § Different grain sizes
  § Different users
§ How they evolved to suit needs of users
  § Same essential structure
  § Representations, language, emphases, and affordances tuned to users and needs
§ How they are being used

Use of Design Patterns in STEM Research and Development Projects
Britte Haugan Cheng and Geneva Haertel
DRK-12 PI Meeting, November 2009

Current Catalog of Design Patterns
q ECD/PADI related projects have produced over 100 Design Patterns
q Domains include: science inquiry, science content, mathematics, economics, model-based reasoning
q Design Patterns span grades 3-16+
q Organized around themes, models, and processes, not surface features or formats of tasks
q Support the design of scenario-based, multiple choice, and performance tasks
q The following examples show how projects have used and customized Design Patterns in ways that suit their needs and users

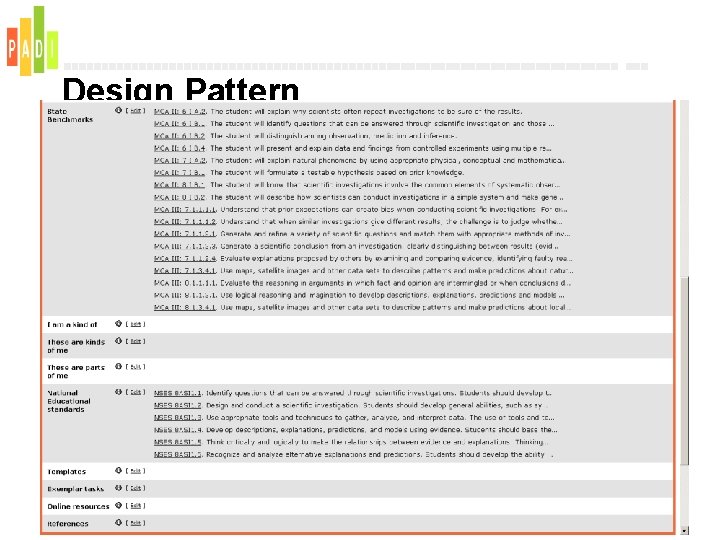

Example 1: DRK-12 Project
An Application of ECD to a State, Large-scale Science Assessment
q Challenge in Minnesota Comprehensive Assessment of science:
  q How to design scenario-based tasks, technology-enhanced interactions, grounded in standards both EFFICIENTLY and VALIDLY
q Design Patterns support storyboard writing and task authoring
q Designers are a committee of MN teachers, supported by Pearson
q Project focuses on a small number of Design Patterns for “hard-to-assess” science content/inquiry
q Based on Minnesota state science standards and benchmarks and the NSES inquiry standards
q Design Patterns are Web-based and interactive

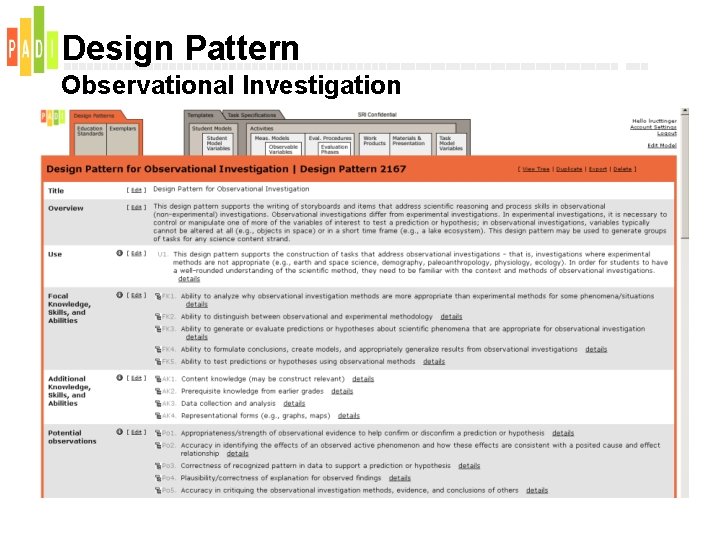

Design Pattern Observational Investigation
q Relates science content/processes to components of assessment argument
q Higher-level, cross-cutting themes, ways of thinking, ways of using science, rather than many finer-grained standards
q Related to relevant standards and benchmarks
q Interactive Features:
  q Examples and details
  q Activates pedagogical content knowledge
  q Presents exemplar assessment tasks
  q Provides selected knowledge representations
  q Links among associated assessment argument components

Design Pattern Observational Investigation

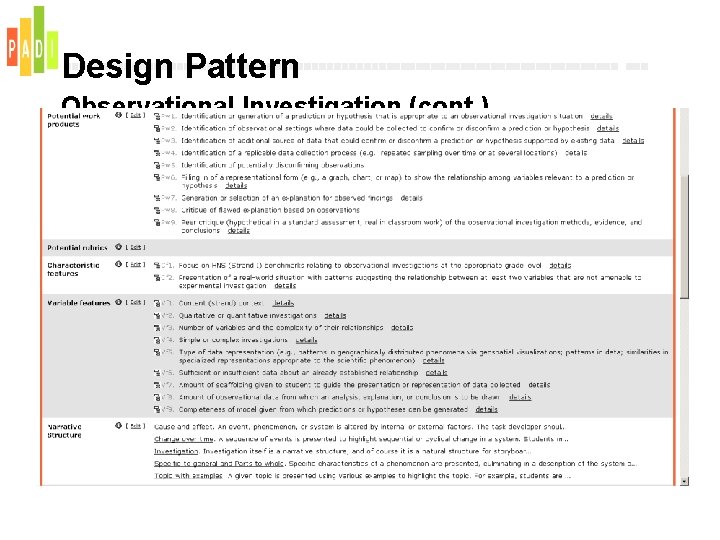

Design Pattern Observational Investigation (cont.)

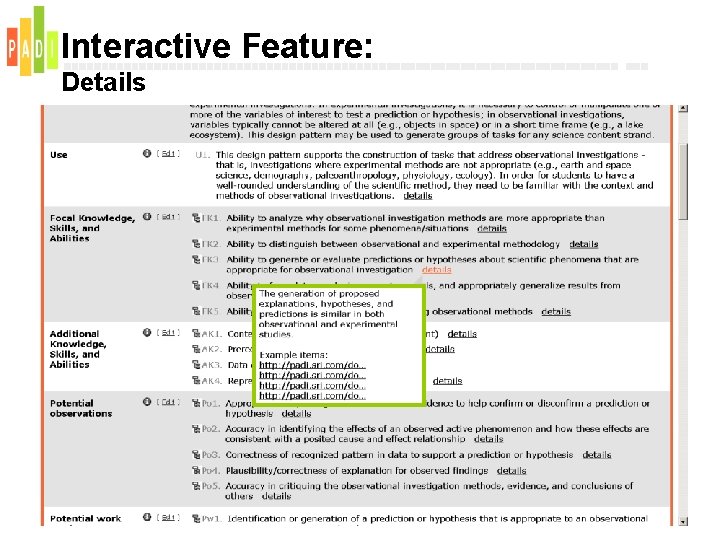

Interactive Feature: Details

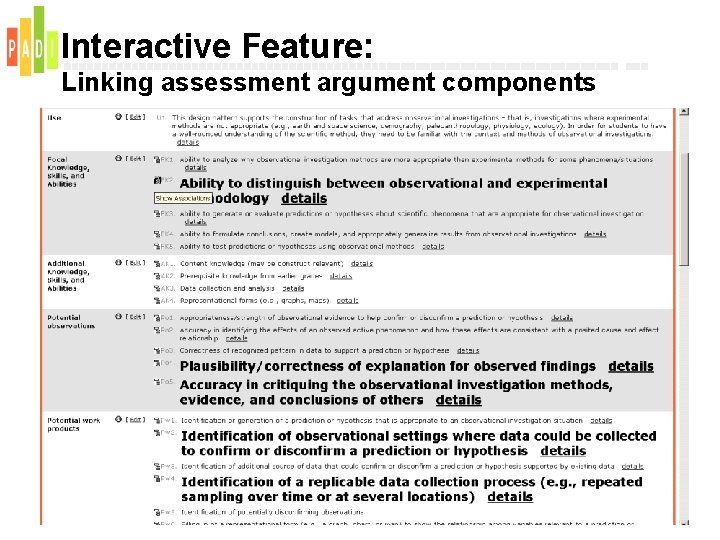

Interactive Feature: Linking assessment argument components

Design Pattern Highlights: Observational Investigation
q Relates science content/processes to components of assessment argument
q Higher-level, cross-cutting themes, ways of thinking, ways of using science, rather than many fine-grained standards
q Interactive Features:
  q Examples and details
  q Activates pedagogical content knowledge
  q Presents exemplar assessment tasks
  q Provides selected knowledge representations
  q Relates relevant standards and benchmarks
  q Links among associated assessment argument components

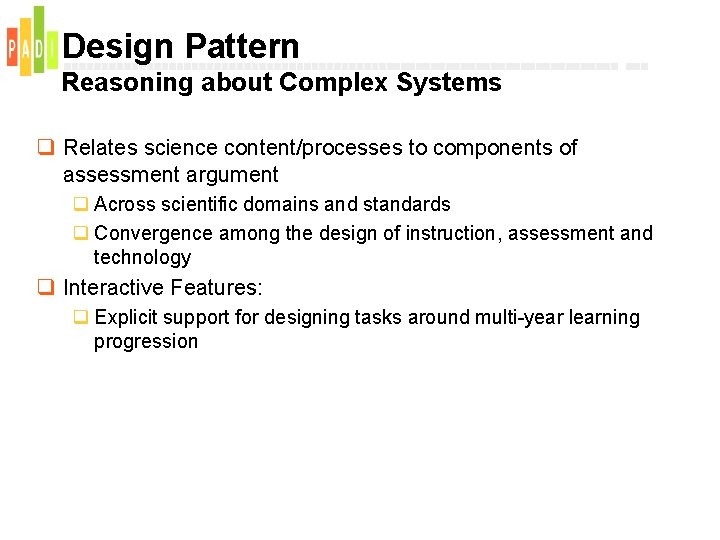

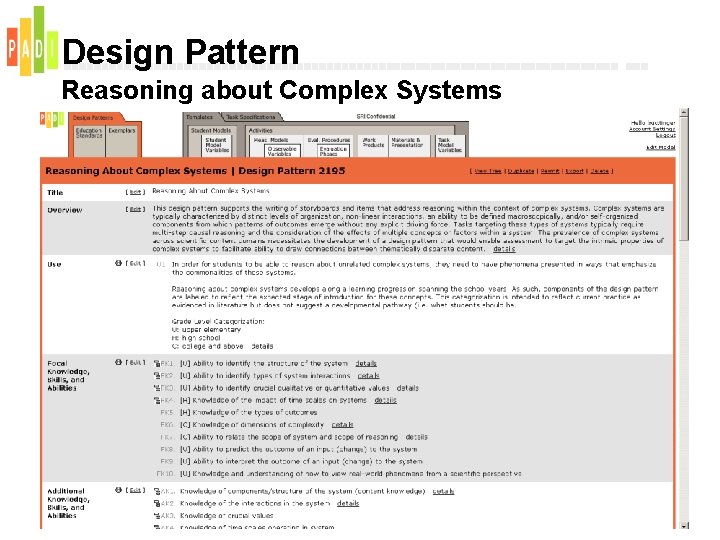

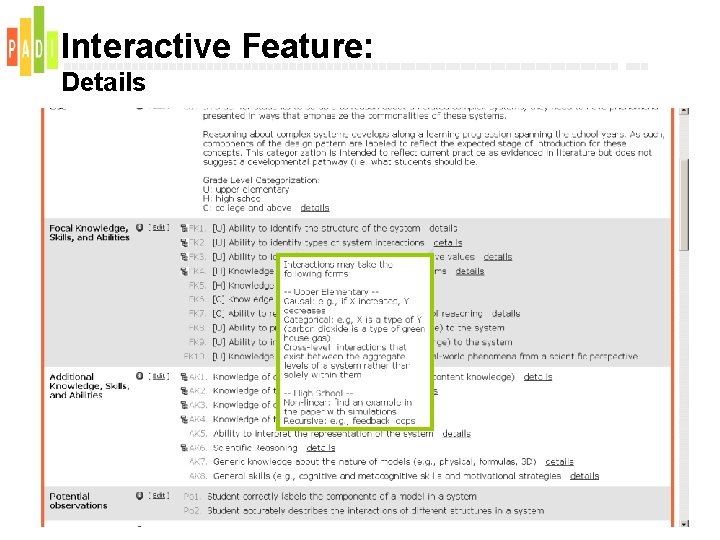

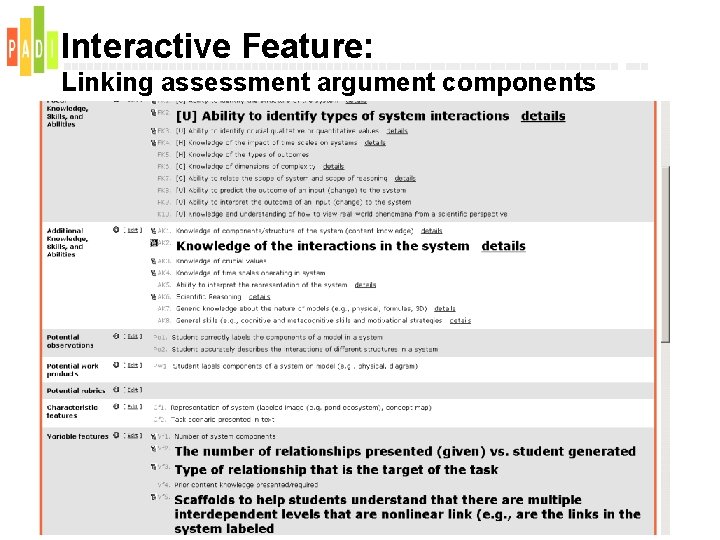

Design Pattern Reasoning about Complex Systems
q Relates science content/processes to components of assessment argument
q Across scientific domains and standards
q Convergence among the design of instruction, assessment and technology
q Interactive Features:
  q Explicit support for designing tasks around multi-year learning progression

Design Pattern Reasoning about Complex Systems

Interactive Feature: Details

Interactive Feature: Linking assessment argument components

Design Pattern Highlights: Reasoning about Complex Systems
q Relates science content/processes to components of assessment argument
q Across scientific domains and standards
q Convergence among the design of instruction, assessment and technology
q Interactive Feature:
  q Explicit support for designing tasks around multi-year learning progression

Example 2: Principled Assessment Designs in Inquiry
Model-Based Reasoning Suite
q Relates science content/processes to components of assessment argument
q A suite of seven related Design Patterns supports curriculum-based assessment design
q Theoretically and empirically motivated by Stewart and Hafner (1994), Research on problem solving: Genetics. In D. L. Gabel (Ed.), Handbook of research on science teaching and learning. New York: Macmillan Publishing.
q Aspects of model-based reasoning including model formation, model use, model revision, and coordination among aspects of model-based reasoning
q Multivariate student model: scientific reasoning and science content
q Interactive Feature:
  q Support the design of both:
    q Independent tasks associated with an aspect of model-based reasoning
    q Steps in a larger investigation comprised of several aspects including

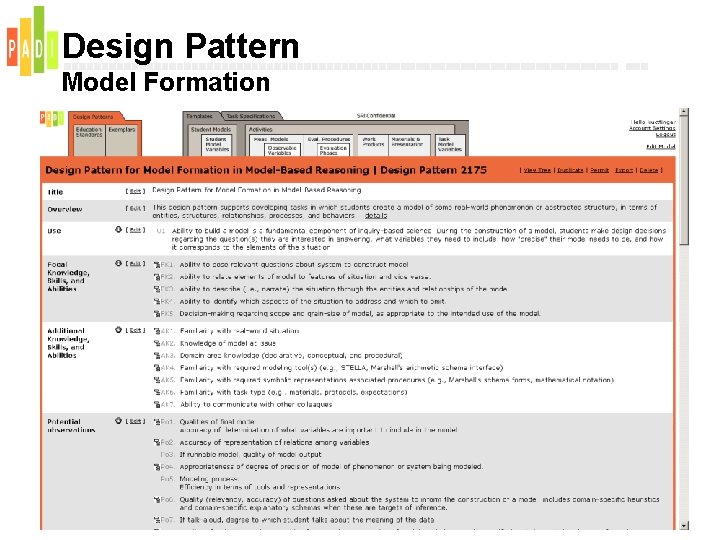

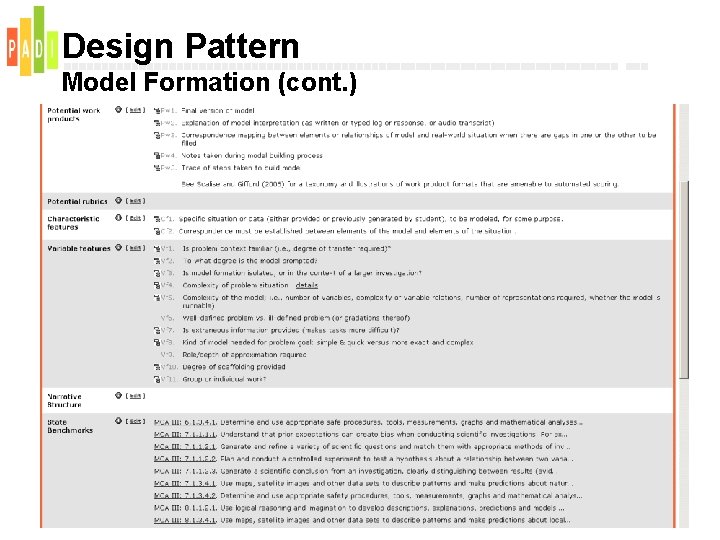

Design Pattern Model Formation

Design Pattern Model Formation (cont.)

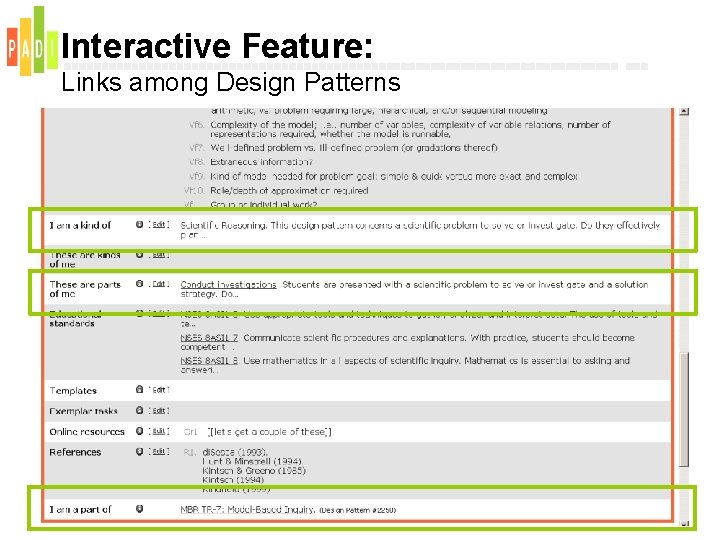

Interactive Feature: Links among Design Patterns

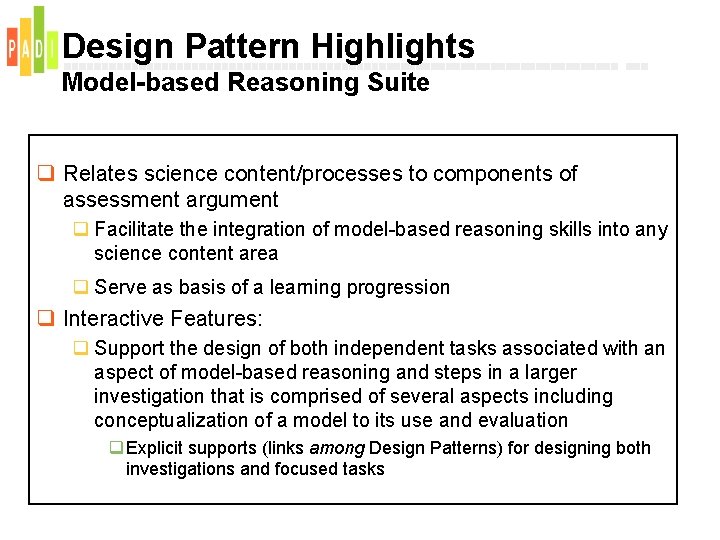

Design Pattern Highlights: Model-based Reasoning Suite
q Relates science content/processes to components of assessment argument
q Facilitate the integration of model-based reasoning skills into any science content area
q Serve as basis of a learning progression
q Interactive Features:
  q Support the design of both independent tasks associated with an aspect of model-based reasoning and steps in a larger investigation that is comprised of several aspects including conceptualization of a model to its use and evaluation
  q Explicit supports (links among Design Patterns) for designing both investigations and focused tasks

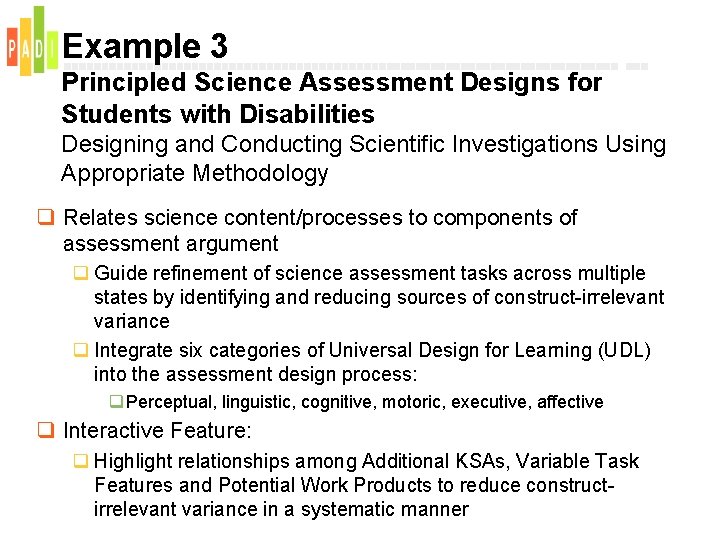

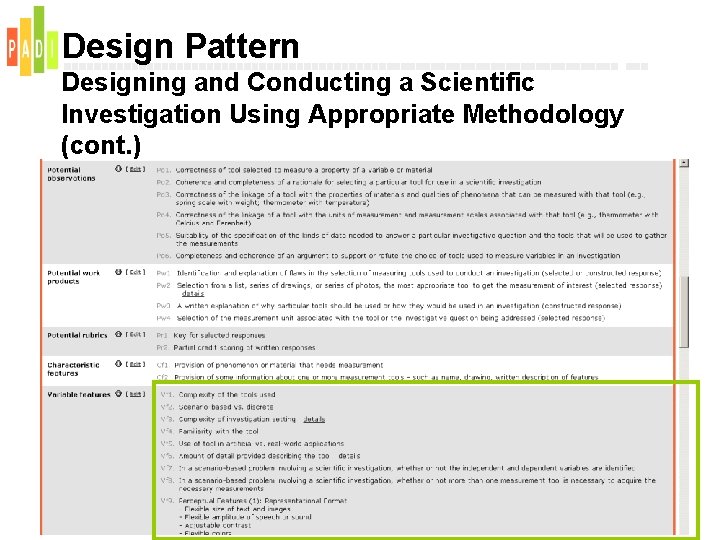

Example 3: Principled Science Assessment Designs for Students with Disabilities
Designing and Conducting Scientific Investigations Using Appropriate Methodology
q Relates science content/processes to components of assessment argument
q Guide refinement of science assessment tasks across multiple states by identifying and reducing sources of construct-irrelevant variance
q Integrate six categories of Universal Design for Learning (UDL) into the assessment design process:
  q Perceptual, linguistic, cognitive, motoric, executive, affective
q Interactive Feature:
  q Highlight relationships among Additional KSAs, Variable Task Features and Potential Work Products to reduce construct-irrelevant variance in a systematic manner
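
One way to picture the link between Additional KSAs and Variable Task Features is to tag both with a UDL category and look up, for every Additional KSA that is not a target of inference, the task features that could reduce its demand. The sketch below is illustrative only: the class names, the matching rule, and the example KSAs and features are assumptions, not the project's actual tool or content.

```python
from dataclasses import dataclass
from typing import Dict, List

# The six UDL categories named on the slide.
UDL_CATEGORIES = (
    "perceptual", "linguistic", "cognitive", "motoric", "executive", "affective",
)


@dataclass(frozen=True)
class AdditionalKSA:
    name: str
    category: str            # one of UDL_CATEGORIES
    is_target: bool = False  # True if it is meant to be part of the claim


@dataclass(frozen=True)
class VariableFeature:
    name: str
    category: str            # UDL category whose demand it can reduce


def supports_for(ksas: List[AdditionalKSA],
                 features: List[VariableFeature]) -> Dict[str, List[str]]:
    """Map each non-target Additional KSA to features in the same UDL category."""
    supports: Dict[str, List[str]] = {}
    for ksa in ksas:
        if ksa.is_target:
            continue  # demand on a targeted KSA is construct-relevant
        supports[ksa.name] = [f.name for f in features if f.category == ksa.category]
    return supports


# Hypothetical example data.
ksas = [
    AdditionalKSA("reading dense English text", "linguistic"),
    AdditionalKSA("fine motor control for drawing", "motoric"),
    AdditionalKSA("knowledge of controlled experiments", "cognitive", is_target=True),
]
features = [
    VariableFeature("read-aloud option", "linguistic"),
    VariableFeature("simplified vocabulary", "linguistic"),
    VariableFeature("select from options instead of drawing", "motoric"),
]
print(supports_for(ksas, features))
```

An Additional KSA that maps to an empty list would flag a possible source of construct-irrelevant variance with no task feature yet available to address it.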

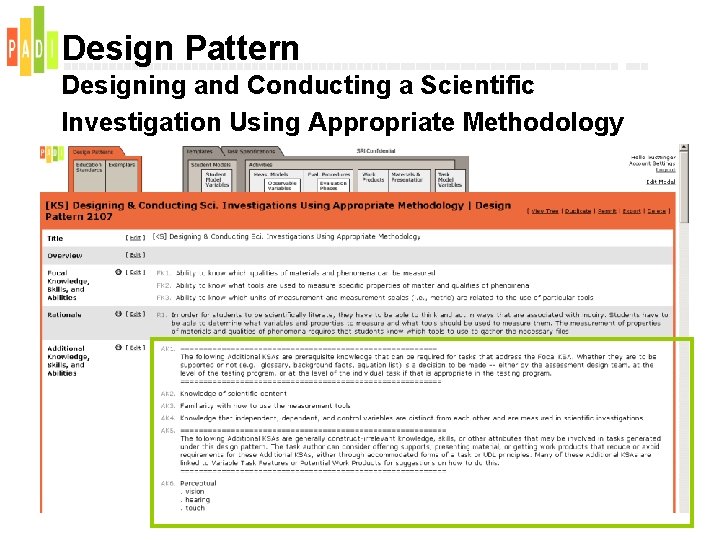

Design Pattern: Designing and Conducting a Scientific Investigation Using Appropriate Methodology

Design Pattern: Designing and Conducting a Scientific Investigation Using Appropriate Methodology (cont.)

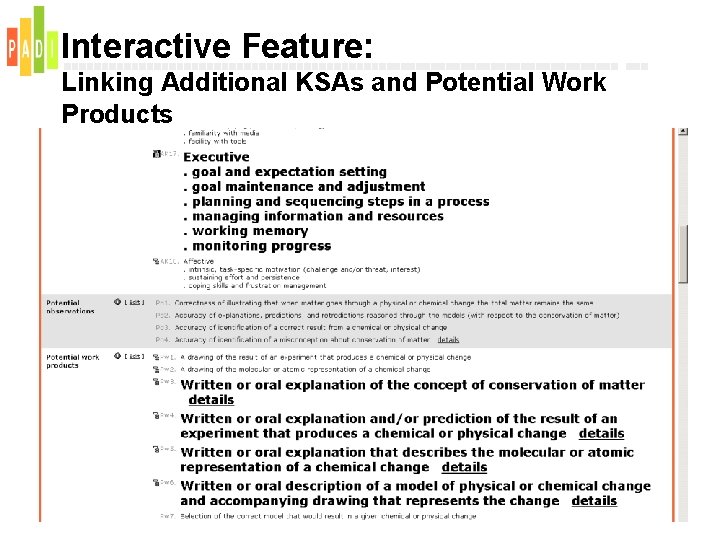

Interactive Feature: Linking Additional KSAs and Potential Work Products

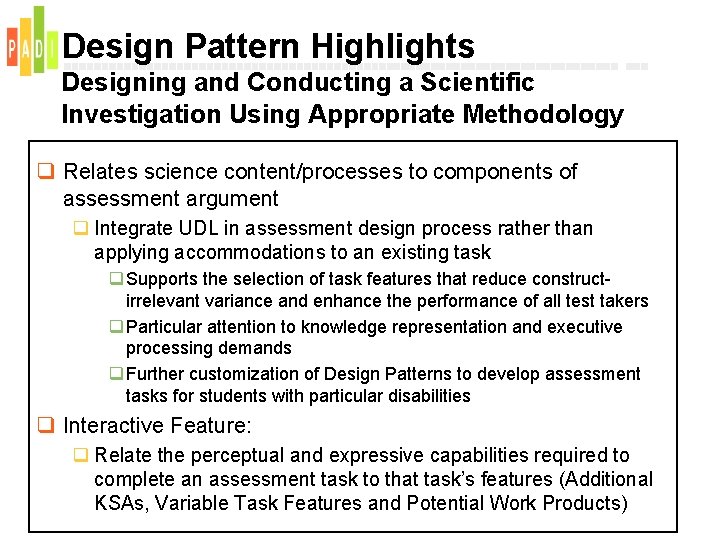

Design Pattern Highlights: Designing and Conducting a Scientific Investigation Using Appropriate Methodology
- Relates science content/processes to components of the assessment argument
- Integrates UDL into the assessment design process rather than applying accommodations to an existing task
- Supports the selection of task features that reduce construct-irrelevant variance and enhance the performance of all test takers
- Particular attention to knowledge representation and executive processing demands
- Further customization of Design Patterns to develop assessment tasks for students with particular disabilities
- Interactive Feature:
  - Relates the perceptual and expressive capabilities required to complete an assessment task to that task's features (Additional KSAs, Variable Task Features, and Potential Work Products)
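The linking idea above can be illustrated with a small sketch. It is not the project's tool: the KSA descriptions, the feature names, and the helper `unsupported_ksas` are all hypothetical, chosen only to show how links between Additional KSAs and Variable Task Features might expose construct-irrelevant demands that no task feature yet addresses.

```python
# Illustrative sketch only; every KSA and feature below is a made-up example.
UDL_CATEGORIES = {
    "perceptual", "linguistic", "cognitive",
    "motoric", "executive", "affective",
}

# Additional KSAs (not the target of inference), each tagged with a UDL category.
additional_ksas = {
    "Ability to read printed text": "perceptual",
    "Ability to write responses by hand": "motoric",
    "Ability to plan multi-step work": "executive",
}

# Variable Task Features, each linked to the Additional KSAs it helps support.
feature_links = {
    "Text-to-speech option": ["Ability to read printed text"],
    "Keyboard or dictated response": ["Ability to write responses by hand"],
}


def unsupported_ksas(ksas, links):
    """Return Additional KSAs that no Variable Task Feature currently supports."""
    covered = {ksa for linked in links.values() for ksa in linked}
    return sorted(ksa for ksa in ksas if ksa not in covered)


# Every tagged category should be one of the six UDL categories.
assert set(additional_ksas.values()) <= UDL_CATEGORIES

gaps = unsupported_ksas(additional_ksas, feature_links)
print(gaps)
```

In this toy data the executive-processing demand has no supporting feature, so it is the one a designer would be prompted to address.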

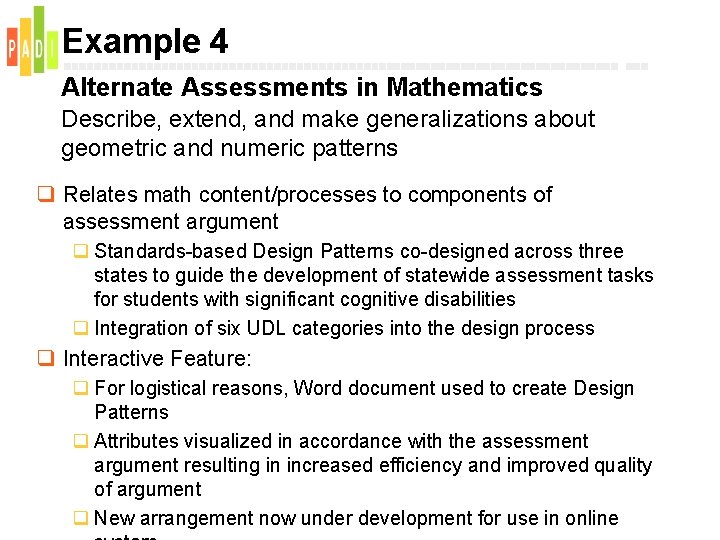

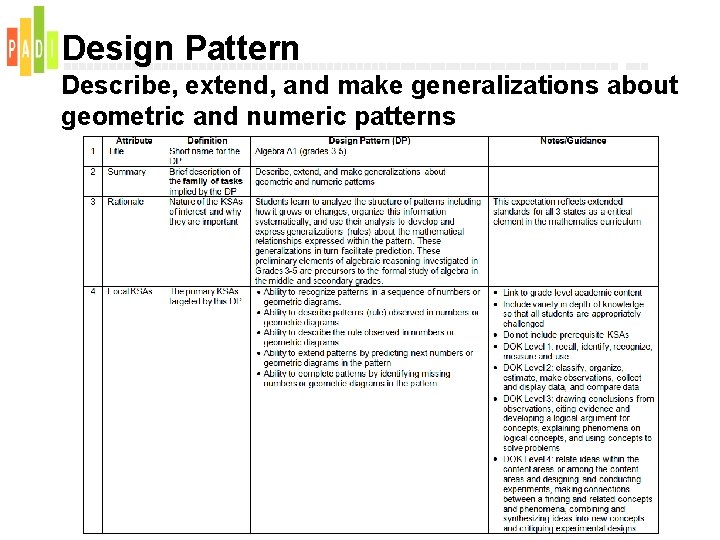

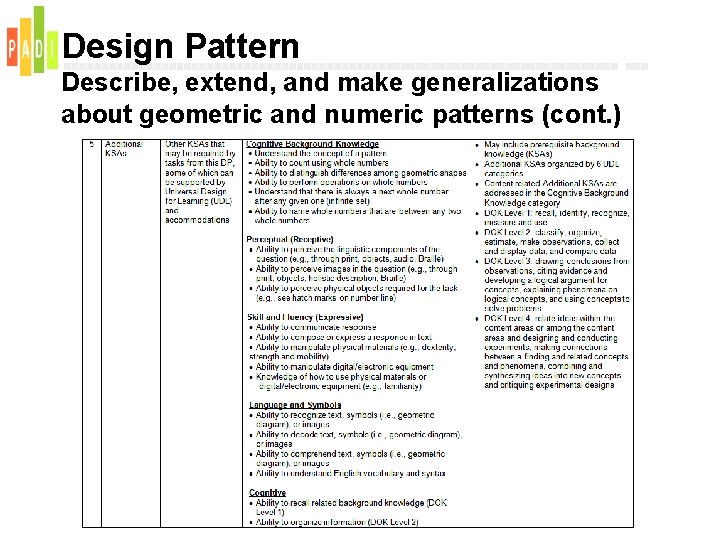

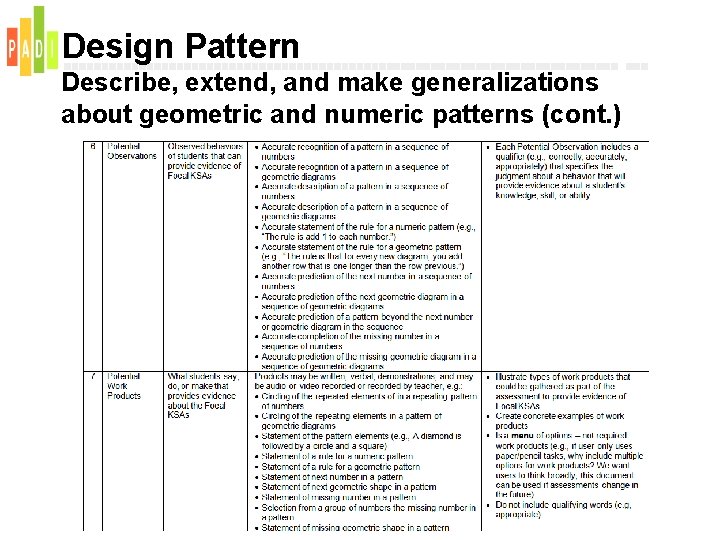

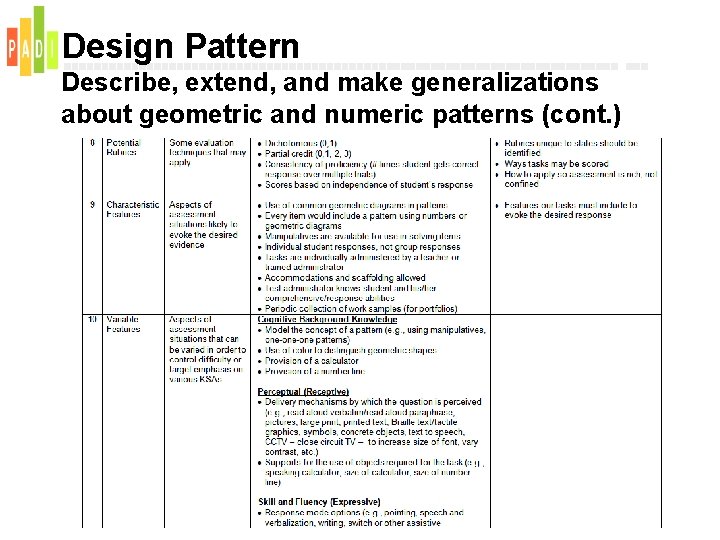

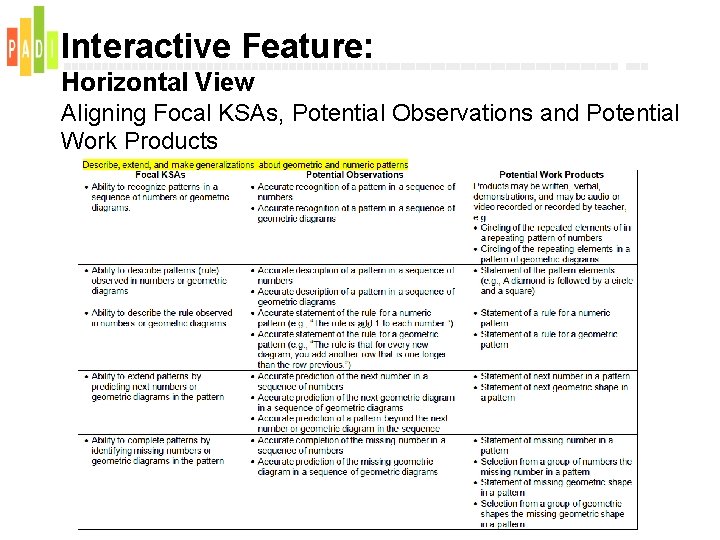

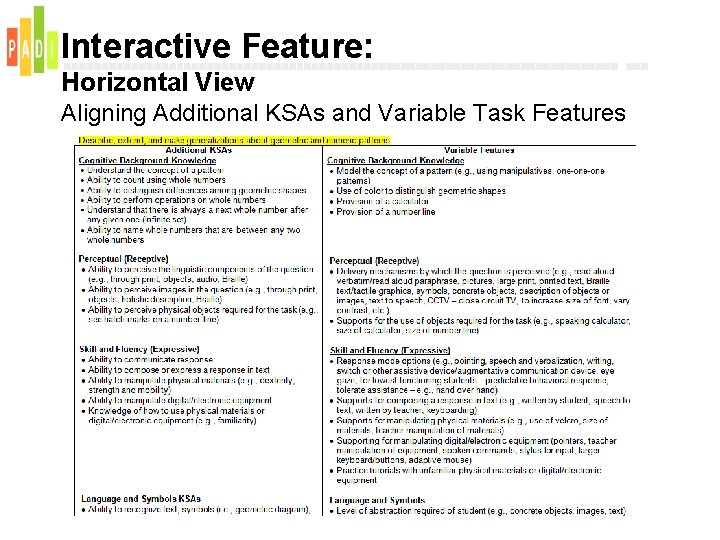

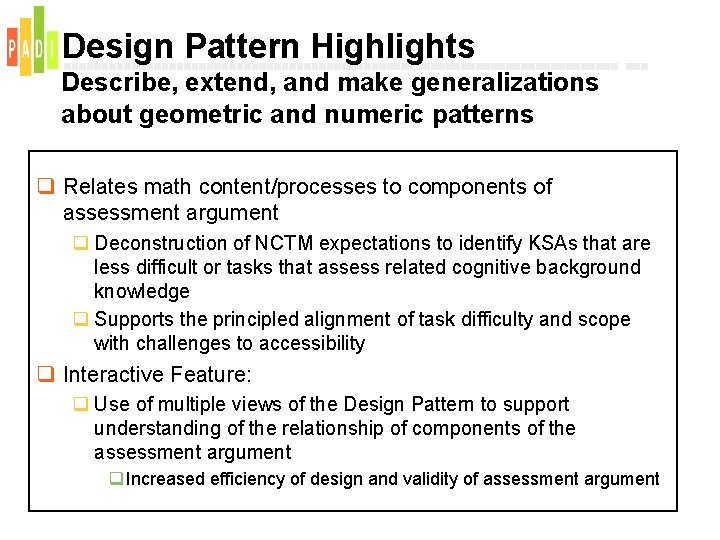

Example 4: Alternate Assessments in Mathematics
Describe, extend, and make generalizations about geometric and numeric patterns
- Relates math content/processes to components of the assessment argument
- Standards-based Design Patterns co-designed across three states to guide the development of statewide assessment tasks for students with significant cognitive disabilities
- Integration of six UDL categories into the design process
- Interactive Feature:
  - For logistical reasons, a Word document was used to create the Design Patterns
  - Attributes visualized in accordance with the assessment argument, resulting in increased efficiency and improved quality of argument
  - New arrangement now under development for use in online

Design Pattern: Describe, extend, and make generalizations about geometric and numeric patterns

Design Pattern: Describe, extend, and make generalizations about geometric and numeric patterns (cont.)

Design Pattern: Describe, extend, and make generalizations about geometric and numeric patterns (cont.)

Design Pattern: Describe, extend, and make generalizations about geometric and numeric patterns (cont.)

Interactive Feature: Horizontal View Aligning Focal KSAs, Potential Observations and Potential Work Products

Interactive Feature: Horizontal View Aligning Additional KSAs and Variable Task Features

Design Pattern Highlights: Describe, extend, and make generalizations about geometric and numeric patterns
- Relates math content/processes to components of the assessment argument
- Deconstruction of NCTM expectations to identify KSAs that are less difficult or tasks that assess related cognitive background knowledge
- Supports the principled alignment of task difficulty and scope with challenges to accessibility
- Interactive Feature:
  - Use of multiple views of the Design Pattern to support understanding of the relationships among components of the assessment argument
  - Increased efficiency of design and validity of the assessment argument

Summary
- Design Patterns are organized around assessments and key ideas in science and math, as opposed to surface features of assessment tasks.
- Support designing tasks that move in the directions NSES and NCTM advocate, building on research and experience
- Design Patterns support task design for different purposes and different formats (e.g., learning, summative, classroom, large-scale, hands-on, P&P, simulations).
- Especially important for newer forms of assessment:
  - Technology-based, scenario-based tasks in Minnesota
  - Scenario-based learning & assessment (Foothill-De Anza project)
  - Simulation-based tasks (network troubleshooting, with Cisco)
  - Games-based assessment (just starting, with MacArthur project)

Summary
- Design Patterns are eclectic: they are not associated with any particular underlying theory of learning or cognition; all psychological perspectives can be represented
- Document design decisions
- Represent hierarchical relationships among Focal KSAs, sequential steps required for the completion of complex tasks, or superordinate, subordinate, and coordinate relations among concepts
- Re-usable; a family of assessment tasks can be produced from a single Design Pattern
- Enhance the integration of UDL with the evidence-centered design process
- Technology makes evident the relationships among Design Pattern attributes and their role in the assessment argument
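As a rough illustration of the re-usability point, the sketch below treats a Design Pattern as a record holding the attributes named in these slides and derives a family of task specifications from it. The class `DesignPattern`, the method `task_family`, and all example values are assumptions made for illustration; they do not reproduce the actual design tool or any real Design Pattern's content.

```python
from dataclasses import dataclass, field
from itertools import product
from typing import Dict, List


@dataclass
class DesignPattern:
    """Hypothetical container for attributes named in the slides."""
    title: str
    focal_ksas: List[str]
    additional_ksas: List[str]
    potential_work_products: List[str]
    potential_observations: List[str]
    # Each Variable Task Feature maps to the options a task author may choose.
    variable_task_features: Dict[str, List[str]] = field(default_factory=dict)

    def task_family(self) -> List[Dict[str, str]]:
        """Return one task specification per combination of feature options."""
        names = list(self.variable_task_features)
        options = [self.variable_task_features[name] for name in names]
        return [dict(zip(names, combo)) for combo in product(*options)]


# Illustrative values only, not taken from a real Design Pattern.
pattern = DesignPattern(
    title="Model Formation",
    focal_ksas=["Forming a model of a given situation"],
    additional_ksas=["Familiarity with the content area"],
    potential_work_products=["Diagram of the proposed model"],
    potential_observations=["Quality of the mapping between model and situation"],
    variable_task_features={
        "content area": ["genetics", "ecology"],
        "format": ["paper and pencil", "simulation"],
    },
)

family = pattern.task_family()
for spec in family:
    print(spec)
```

Each dictionary in `family` is one candidate task specification; all of them share the same Focal KSAs, which is what makes them a family in the sense used above.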
