Design of the data quality control system for the ALICE O2 project

- Slides: 1

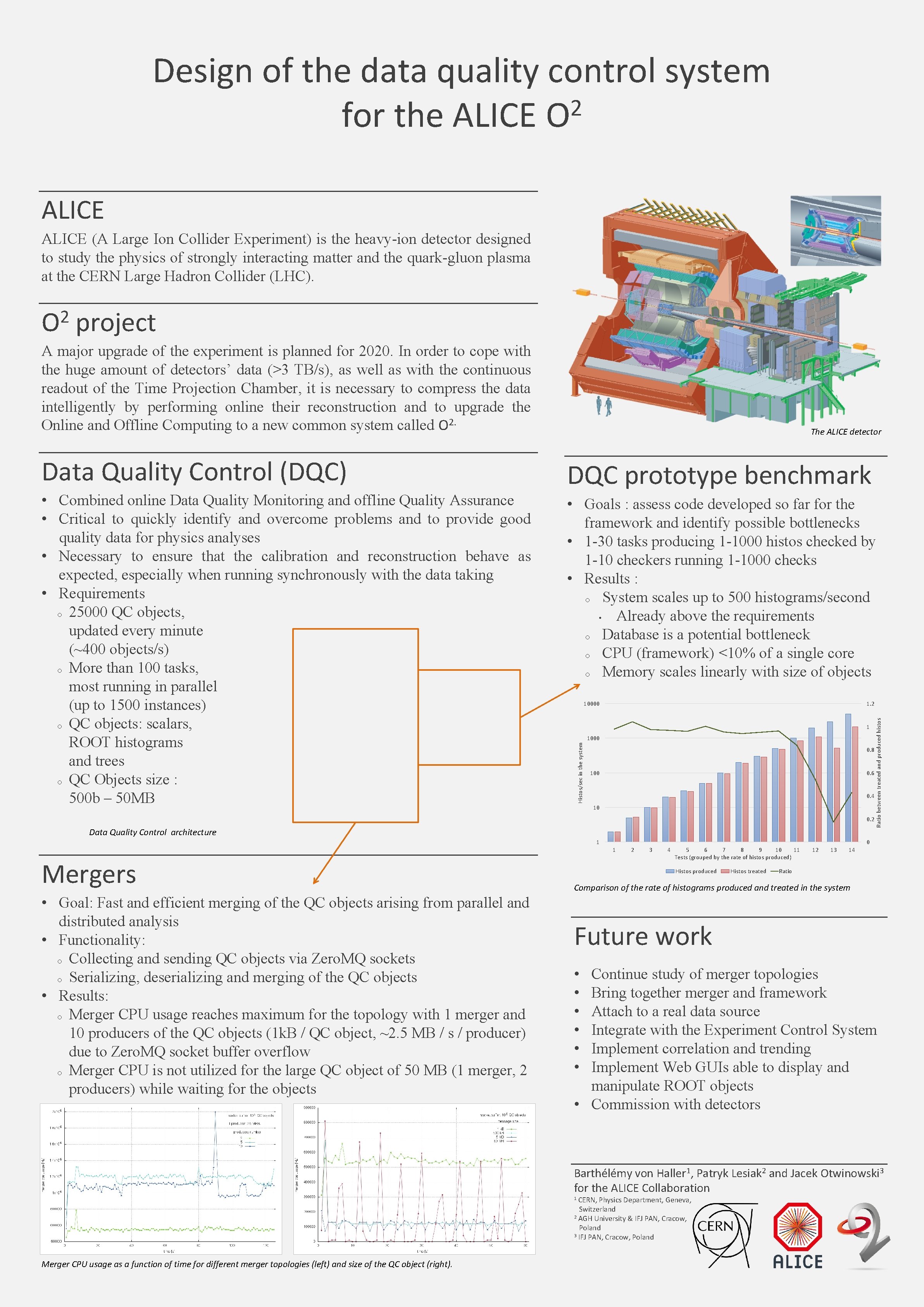

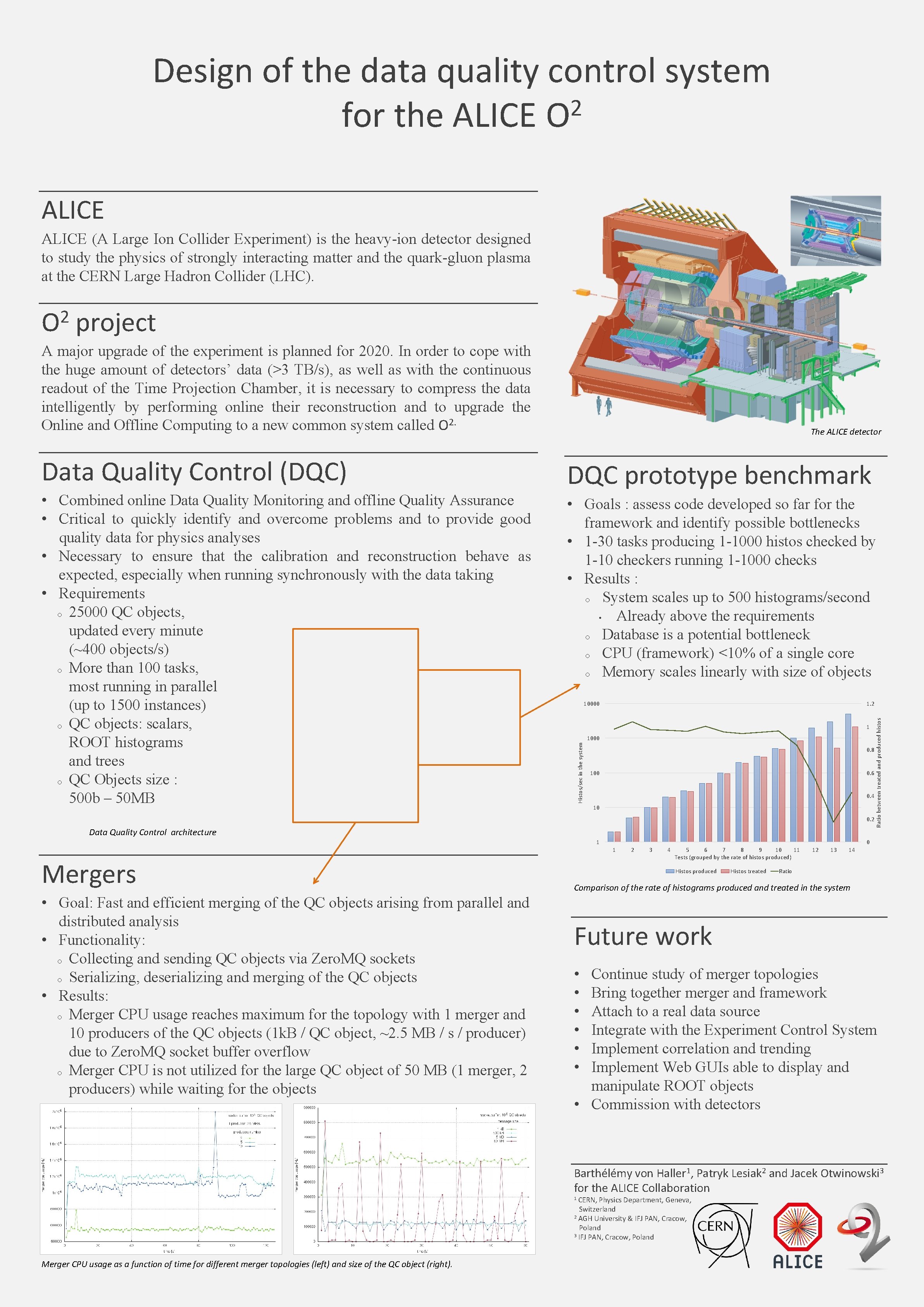

Barthélémy von Haller (1), Patryk Lesiak (2) and Jacek Otwinowski (3) for the ALICE Collaboration

1. CERN, Physics Department, Geneva, Switzerland
2. AGH University & IFJ PAN, Cracow, Poland
3. IFJ PAN, Cracow, Poland

The ALICE O2 project

ALICE (A Large Ion Collider Experiment) is the heavy-ion detector designed to study the physics of strongly interacting matter and the quark-gluon plasma at the CERN Large Hadron Collider (LHC). A major upgrade of the experiment is planned for 2020. To cope with the huge volume of detector data (>3 TB/s) and with the continuous readout of the Time Projection Chamber, the data must be compressed intelligently by reconstructing them online, and the Online and Offline Computing must be upgraded to a new common system called O2.

Figure: The ALICE detector.

Data Quality Control (DQC)

- Combines online Data Quality Monitoring and offline Quality Assurance
- Critical to quickly identify and overcome problems and to provide good-quality data for physics analyses
- Necessary to ensure that calibration and reconstruction behave as expected, especially when running synchronously with data taking
- Requirements
  - 25000 QC objects, updated every minute (~400 objects/s)
  - More than 100 tasks, most running in parallel (up to 1500 instances)
  - QC objects: scalars, ROOT histograms and trees
  - QC object size: 500 B to 50 MB

Figure: Data Quality Control architecture.

DQC prototype benchmark

- Goals: assess the code developed so far for the framework and identify possible bottlenecks
- Setup: 1 to 30 tasks producing 1 to 1000 histograms, checked by 1 to 10 checkers running 1 to 1000 checks
- Results
  - System scales up to 500 histograms/s, already above the requirements
  - Database is a potential bottleneck
  - CPU usage (framework) is below 10% of a single core
  - Memory scales linearly with the size of the objects

Figure: Comparison of the rate of histograms produced and treated in the system. Horizontal axis: tests 1 to 14, grouped by the rate of histograms produced. Vertical axes: histograms/s in the system (logarithmic) and the ratio between treated and produced histograms. Series: histograms produced, histograms treated, ratio.

Mergers

- Goal: fast and efficient merging of the QC objects arising from parallel and distributed analysis
- Functionality
  - Collecting and sending QC objects via ZeroMQ sockets
  - Serializing, deserializing and merging the QC objects
- Results
  - Merger CPU usage reaches its maximum for the topology with 1 merger and 10 producers of QC objects (1 kB per QC object, ~2.5 MB/s per producer) due to ZeroMQ socket buffer overflow
  - For large QC objects of 50 MB (1 merger, 2 producers), the merger CPU is not fully utilized because the merger spends its time waiting for the objects

Future work

- Continue the study of merger topologies
- Bring together merger and framework
- Attach to a real data source
- Integrate with the Experiment Control System
- Implement correlation and trending
- Implement Web GUIs able to display and manipulate ROOT objects
- Commission with detectors

Figure: Merger CPU usage as a function of time for different merger topologies (left) and size of the QC object (right).
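The merger functionality described above (serializing, deserializing and merging QC objects coming from several producers) can be illustrated with a minimal sketch. It is not the O2 implementation: the QC object is modelled as a plain dictionary of bin counts rather than a ROOT histogram, JSON stands in for the real serialization, and the payloads stay in memory instead of travelling over ZeroMQ sockets. All function and field names are hypothetical.

```python
import json


def serialize(histo):
    # Stand-in for the real serialization step: a producer would send
    # these bytes over a socket; here they simply stay in memory.
    return json.dumps(histo).encode("utf-8")


def deserialize(payload):
    return json.loads(payload.decode("utf-8"))


def merge(histos):
    """Merge histograms with the same name and binning by adding bin contents."""
    if not histos:
        raise ValueError("nothing to merge")
    first = histos[0]
    merged_bins = [0] * len(first["bins"])
    for h in histos:
        if h["name"] != first["name"] or len(h["bins"]) != len(merged_bins):
            raise ValueError("cannot merge histograms with different name or binning")
        merged_bins = [a + b for a, b in zip(merged_bins, h["bins"])]
    return {"name": first["name"], "bins": merged_bins}


# Two hypothetical producers publish partial histograms of the same quantity.
payloads = [
    serialize({"name": "cluster_charge", "bins": [1, 0, 2]}),
    serialize({"name": "cluster_charge", "bins": [0, 3, 1]}),
]
merged = merge([deserialize(p) for p in payloads])
print(merged["bins"])  # [1, 3, 3]
```

Bin-wise addition is only valid when all inputs share the same binning, which is why the sketch rejects mismatched objects instead of guessing how to combine them.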