Design of Machine Learning Algorithm for Behavioral Prediction

Design of Machine Learning Algorithm for Behavioral Prediction of Objects for Self-Driving Cars
Yevgeniy Byeloborodov, Sherif Rashad
School of Engineering and Computer Science, Morehead State University, Morehead, KY, USA

Outline
1. Background and Related Work
2. Proposed Methodology
3. Machine Learning Techniques
4. Datasets
5. Initial Results
6. Future Work and Conclusion

Background and Related Work
History and current state
▶ Research on self-driving cars has grown rapidly in recent years
▶ The drivers are environmental, economic, social and safety reasons
▶ Advances in sensor technology and computing power accelerate the development
By Dllu - Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=64517567

Background and Related Work
History and current state
SAE (Society of Automotive Engineers) established six levels of driving automation:
▶ Level 0 – no driving automation
▶ Level 1 – driver assistance
▶ Level 2 – partial driving automation
▶ Level 3 – conditional driving automation
▶ Level 4 – high driving automation
▶ Level 5 – full driving automation
Above Level 2 (Levels 3 to 5), the automated driving system (ADS) performs the entire Dynamic Driving Task (DDT), including Object and Event Detection and Response (OEDR), while it is engaged.

Background and Related Work
History and current state
Contributions to automated driving studies
▶ Eureka Project PROMETHEUS, which led to the successful automatically driving VITA II system by Daimler-Benz (1987-1995)
▶ American Defense Advanced Research Projects Agency (DARPA) Grand Challenge organized by the US Department of Defense (2005)
▶ Competitions showed both the usefulness and the need for improvement of various sensors such as RADAR, LIDAR, infrared and full-spectrum cameras, and ultrasonic sensors
▶ Different data processing techniques were tested
▶ Accidents hurt trust in this technology
▶ Safety is the most critical requirement for self-driving cars

Background and Related Work
History and current state
Computer vision is used for image and video processing
▶ Typical computer vision tasks involve:
▪ Object recognition
▪ Identification
▪ Detection
▶ Deep neural networks are the most common ML technique used for object detection in automated driving systems

Background and Related Work
Literature review: OBJECT DETECTION AND RECOGNITION
▶ “A Survey of Autonomous Driving: Common Practices and Emerging Technologies” by E. Yurtsever et al. reviews the state-of-the-art technologies and techniques used for autonomous vehicles.
▪ Deep convolutional neural networks (DCNN): the latest methods all rely on DCNNs and fall into two types, single-stage detection frameworks and region proposal detection frameworks.
▪ Most popular algorithm – YOLO (You Only Look Once)
▪ Single Shot Detector (SSD) algorithm
▪ Region Proposal Network (RPN) – suited to more powerful computers

Background and Related Work
Literature review: PEDESTRIAN AGE CLASSIFICATION
▶ “Child And Adult Classification Using Biometric Features Based On Video Analytics” by O. Ince et al. describes classification of CCTV images of pedestrians
▪ Method based on head-to-body ratio
▪ Uses the AdaBoost algorithm with Haar cascade classifiers to detect the whole body; the head is then detected with the Local Binary Patterns (LBP) algorithm
▶ “Fine-Grained Classification of Pedestrians in Video: Benchmark and State of the Art” by David Hall and Pietro Perona from California Institute of Technology introduces a fine-grained categorization of pedestrians in a video dataset.
▪ Based on fine-grained labeling of the Caltech Roadside Pedestrians (CRP) dataset
▪ 5 age classes
▶ “Joint Attention in Autonomous Driving (JAAD)” by Iuliia Kotseruba, Amir Rasouli and John K. Tsotsos from York University presents a dataset that demonstrates the behavioral variability of traffic participants.
▪ 4 age classes: Child, Young, Adult, Senior.

Background and Related Work
Notable examples of development and implementation
Autonomous vehicle companies that lead the development of self-driving technology and perform real-world testing:
▶ Waymo
▶ GM Cruise
▶ Argo AI
▶ Tesla
▶ Baidu
▶ Significant contributions also come from Apple, Daimler, BMW, Audi, Volkswagen, Lyft, Uber, Amazon, Nuro, Aptiv and more.

Proposed Methodology
▶ Available research on self-driving vehicles and their interactions with pedestrians focuses primarily on the performance of object detection and recognition algorithms, pedestrian position estimation and movement tracking.
▶ Observing and classifying living objects, such as pedestrians of different ages and different kinds of animals, can improve the safety of self-driving vehicles by enabling behavioral prediction for such objects.

Proposed Methodology
▶ We propose an algorithm that lets a self-driving vehicle behave as if a human driver were driving it. The algorithm shall detect living objects, whether human or animal, then categorize pedestrians by age group and animals by type, and finally predict the potential behavior of each object.
▶ Such an improvement can increase the safety of self-driving vehicles and of the pedestrians and animals that actively or passively interact with them. It may have a positive social impact and accelerate acceptance of the technology.

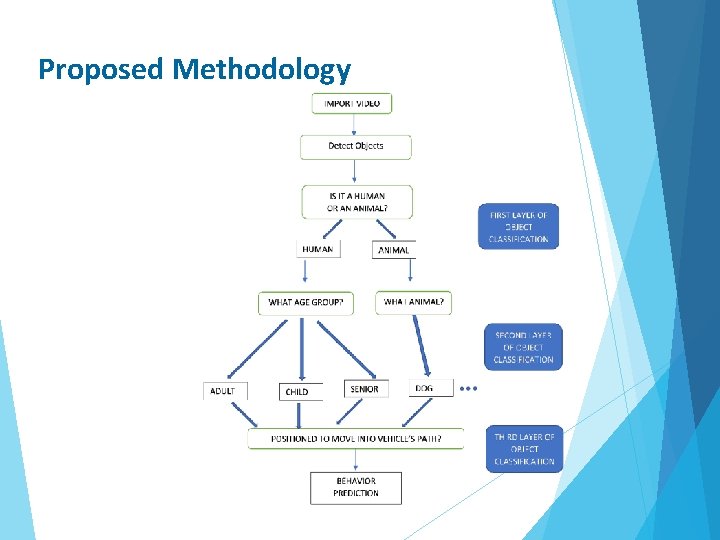

Proposed Methodology

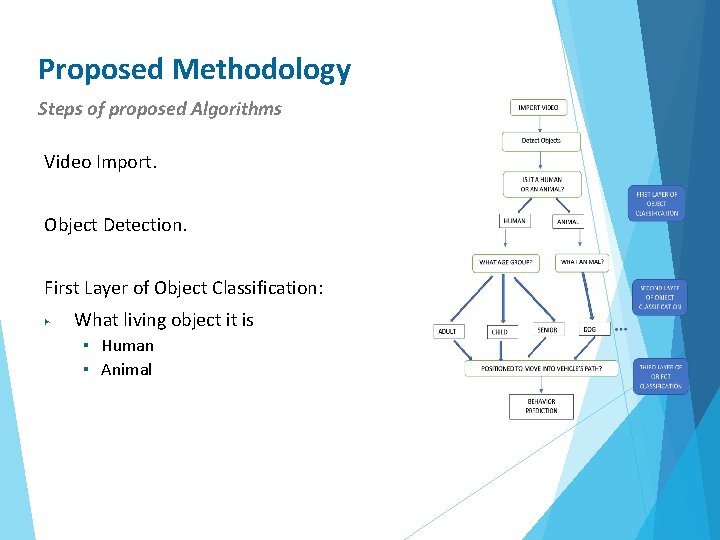

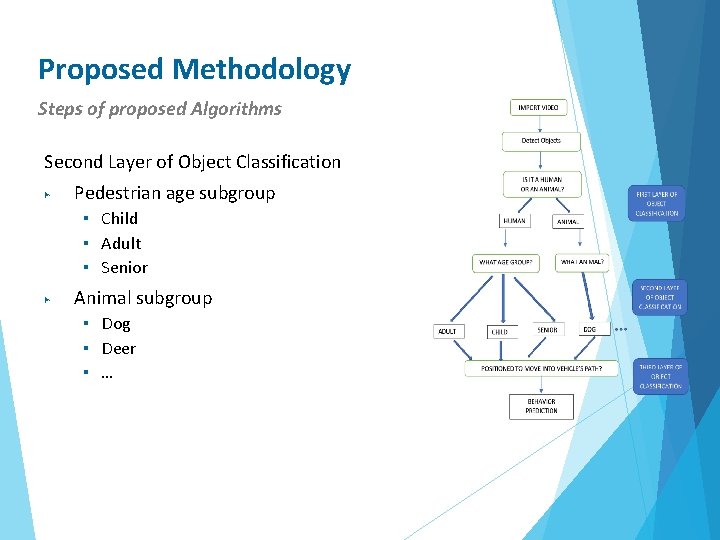

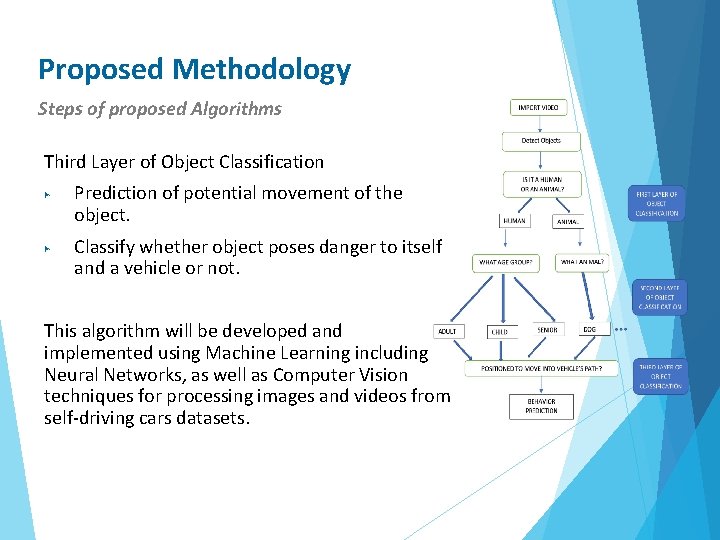

Proposed Methodology
Steps of the proposed algorithm
▶ Video import
▶ Object detection
▶ First layer of object classification: what kind of living object it is
▪ Human
▪ Animal

Proposed Methodology
Steps of the proposed algorithm
Second layer of object classification
▶ Pedestrian age subgroup
▪ Child
▪ Adult
▪ Senior
▶ Animal subgroup
▪ Dog
▪ Deer
▪ …

Proposed Methodology
Steps of the proposed algorithm
Third layer of object classification
▶ Predict the potential movement of the object.
▶ Classify whether or not the object poses a danger to itself and to the vehicle.
The algorithm will be developed and implemented using machine learning, including neural networks, as well as computer vision techniques for processing images and videos from self-driving car datasets.
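
The three classification layers can be read as a small pipeline over detector output. The sketch below is only an illustration of that structure, not the authors' implementation: the `Detection` and `LivingObject` types, the label sets, the `age_classifier` callback and the placeholder third-layer rule (flagging objects whose box centre lies in the middle third of the frame) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical label sets; the slides name only Human/Animal and Dog/Deer as examples.
HUMAN_LABELS = {"person"}
ANIMAL_LABELS = {"dog", "deer"}


@dataclass
class Detection:
    label: str                      # class name reported by the object detector
    confidence: float               # detector confidence in [0, 1]
    box: Tuple[float, float, float, float]  # (x, y, width, height) in pixels


@dataclass
class LivingObject:
    kind: str        # first layer: "human" or "animal"
    subgroup: str    # second layer: age group or animal type
    dangerous: bool  # third layer: placeholder danger flag


def first_layer(det: Detection) -> Optional[str]:
    """Decide whether the detection is a human, an animal, or neither."""
    if det.label in HUMAN_LABELS:
        return "human"
    if det.label in ANIMAL_LABELS:
        return "animal"
    return None


def second_layer(det: Detection, kind: str,
                 age_classifier: Callable[[Detection], str]) -> str:
    """Humans get an age group from a separate classifier; animals keep their type."""
    return age_classifier(det) if kind == "human" else det.label


def third_layer(det: Detection, frame_width: float) -> bool:
    """Placeholder rule (an assumption): flag objects centred in the middle third."""
    x, _, w, _ = det.box
    centre = x + w / 2
    return frame_width / 3 <= centre <= 2 * frame_width / 3


def classify(detections: List[Detection],
             age_classifier: Callable[[Detection], str],
             frame_width: float) -> List[LivingObject]:
    results = []
    for det in detections:
        kind = first_layer(det)
        if kind is None:  # not a living object, e.g. a car
            continue
        results.append(LivingObject(kind,
                                    second_layer(det, kind, age_classifier),
                                    third_layer(det, frame_width)))
    return results
```

In a real system the third layer would be a learned model over time (for example a sequence model over tracked positions) rather than a fixed geometric rule; the rule here only keeps the sketch self-contained.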

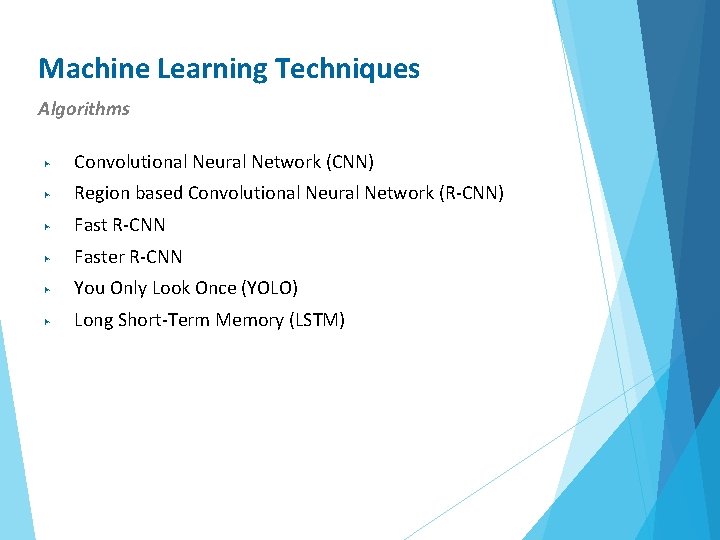

Machine Learning Techniques
Algorithms
▶ Convolutional Neural Network (CNN)
▶ Region-based Convolutional Neural Network (R-CNN)
▶ Fast R-CNN
▶ Faster R-CNN
▶ You Only Look Once (YOLO)
▶ Long Short-Term Memory (LSTM)

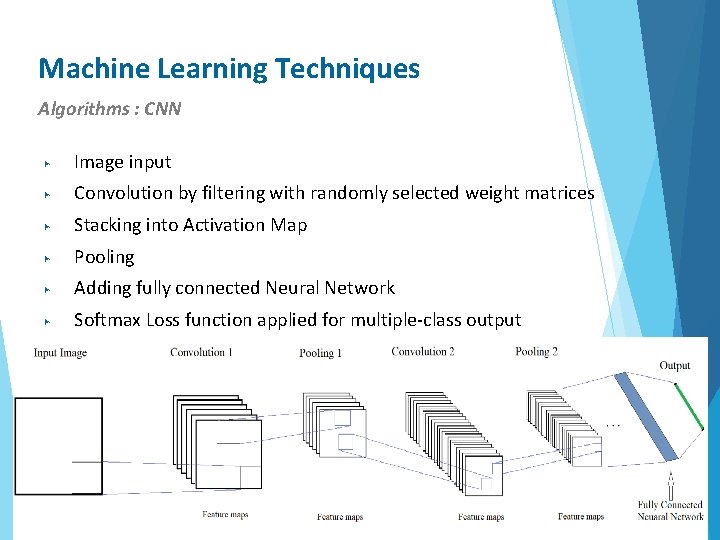

Machine Learning Techniques
Algorithms: CNN
▶ Image input
▶ Convolution: filtering with weight matrices (kernels) that are randomly initialized and then learned
▶ Stacking the filter responses into activation maps
▶ Pooling
▶ Adding a fully connected neural network
▶ Softmax loss function applied for multiple-class output
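
The CNN steps above can be traced in a few lines of NumPy. This is a minimal forward-pass sketch under stated assumptions: a single-channel 8x8 input, four 3x3 kernels, a ReLU activation (not named on the slide), 2x2 max pooling and three output classes. The weights are random and untrained, so the output probabilities carry no meaning beyond showing the data flow.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Valid cross-correlation of a 2-D image with a 2-D kernel (as used in CNNs)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(fmap: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h = fmap.shape[0] // size * size
    w = fmap.shape[1] // size * size
    blocks = fmap[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


def forward(image: np.ndarray, kernels: np.ndarray, fc_weights: np.ndarray) -> np.ndarray:
    # Convolution + ReLU per kernel, pooling, then stacking into activation maps.
    maps = np.stack([max_pool(np.maximum(conv2d(image, k), 0.0)) for k in kernels])
    # Fully connected layer on the flattened maps, then softmax over classes.
    return softmax(fc_weights @ maps.ravel())


rng = np.random.default_rng(0)
image = rng.random((8, 8))                 # stand-in for a grayscale input image
kernels = rng.standard_normal((4, 3, 3))   # randomly initialized filters
# 8x8 input -> 6x6 after 3x3 convolution -> 3x3 after pooling; 4 maps -> 36 features
fc_weights = rng.standard_normal((3, 36))
probs = forward(image, kernels, fc_weights)  # one probability per class
```

Training would adjust `kernels` and `fc_weights` by backpropagating the softmax (cross-entropy) loss; that part is omitted here.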

Machine Learning Techniques
Algorithms: R-CNN, Faster R-CNN and YOLO
▶ Region-based Convolutional Neural Network (R-CNN) – ~2000 regions of interest (RoI) are passed through a CNN
▶ Fast R-CNN – RoIs are selected after the convolutional feature map is created
▶ Faster R-CNN – after the CNN generates the feature map, it is processed by a Region Proposal Network

Machine Learning Techniques
Algorithms: YOLO
▶ An input image is overlaid with a grid and divided into equal regions/cells
▶ The image is put through a CNN, and each cell has an output that contains:
▪ The probability of object presence
▪ The coordinates of the center
▪ The size of the anchor box
▪ The class the object belongs to
▶ Anchor boxes are determined
▶ Bounding boxes are predicted
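
To make the per-cell output concrete, the sketch below decodes a grid of cell vectors into pixel-space boxes. It assumes a simplified layout that is not taken from the slides: one box per cell, stored as `[p_obj, cx, cy, w, h, class scores...]`, with the centre given as an offset inside the cell and the size as a fraction of the image. Real YOLO versions use several anchor boxes per cell and different size encodings.

```python
import numpy as np


def decode_grid(output: np.ndarray, img_w: float, img_h: float,
                threshold: float = 0.5):
    """Turn an (S, S, 5 + C) grid of cell outputs into a list of boxes.

    Assumed cell layout (illustrative): [p_obj, cx, cy, w, h, class_0, ..., class_C-1]
    Returns tuples (x_min, y_min, width, height, class_id, p_obj) in pixels.
    """
    s = output.shape[0]
    boxes = []
    for row in range(s):
        for col in range(s):
            cell = output[row, col]
            p_obj = float(cell[0])
            if p_obj < threshold:          # skip cells unlikely to contain an object
                continue
            # Centre: cell index plus in-cell offset, scaled to image size.
            cx = (col + cell[1]) / s * img_w
            cy = (row + cell[2]) / s * img_h
            # Size: fraction of the full image.
            w = cell[3] * img_w
            h = cell[4] * img_h
            class_id = int(np.argmax(cell[5:]))
            boxes.append((float(cx - w / 2), float(cy - h / 2),
                          float(w), float(h), class_id, p_obj))
    return boxes
```

A full detector would follow this with non-maximum suppression to merge overlapping boxes for the same object.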

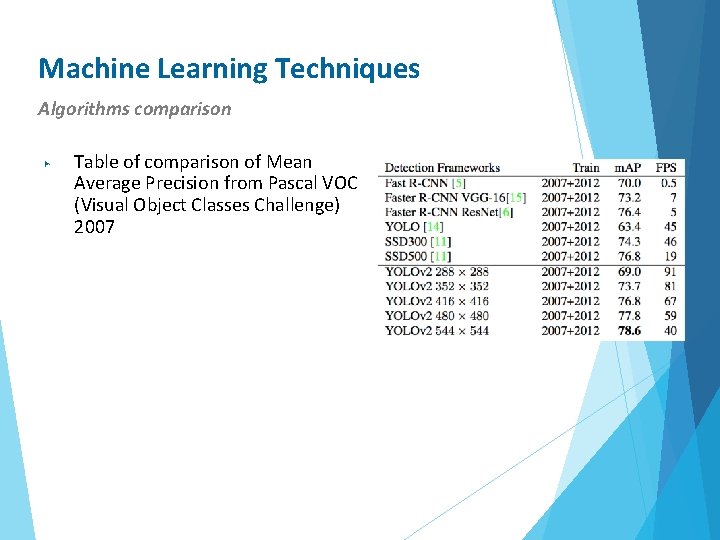

Machine Learning Techniques Algorithms comparison ▶ Table of comparison of Mean Average Precision from Pascal VOC (Visual Object Classes Challenge) 2007
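
For readers unfamiliar with the metric, Pascal VOC 2007 computes average precision (AP) per class with 11-point interpolation: precision is sampled at recall levels 0, 0.1, ..., 1.0, taking at each level the maximum precision achieved at that recall or higher, and mAP is the mean of the per-class APs. The sketch below assumes detections have already been matched to ground truth (the `is_true_positive` flags); the function names are illustrative.

```python
import numpy as np


def voc07_average_precision(scores, is_true_positive, num_ground_truth: int) -> float:
    """11-point interpolated AP for one class, as used in Pascal VOC 2007."""
    order = np.argsort(scores)[::-1]                      # highest confidence first
    tp = np.asarray(is_true_positive, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / num_ground_truth
    precision = cum_tp / (cum_tp + cum_fp)
    ap = 0.0
    for i in range(11):
        t = i / 10                                        # recall level 0.0 ... 1.0
        reached = recall >= t
        # Max precision among operating points with recall >= t (0 if none).
        ap += (precision[reached].max() if reached.any() else 0.0) / 11
    return float(ap)


def mean_average_precision(per_class_ap: dict) -> float:
    """mAP is the unweighted mean of the per-class AP values."""
    return float(np.mean(list(per_class_ap.values())))
```

For example, three detections with scores 0.9, 0.8, 0.7, of which the first and third are correct, against two ground-truth objects give recall levels 0.5, 0.5, 1.0 and precisions 1, 1/2, 2/3, so AP = (6·1 + 5·2/3)/11 = 28/33.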

Machine Learning Techniques
Algorithms
▶ Other algorithms that may be considered for this research:
▪ Single Shot Detector (SSD)
▪ Mask R-CNN – for estimation of human pose

Datasets
▶ For self-driving cars:
▪ Oxford RobotCar Dataset – from Oxford, UK
▪ Berkeley DeepDrive – by UC Berkeley, Cornell University, UC San Diego, Element, Inc
▪ KITTI – project of Karlsruhe Institute of Technology and Toyota Technological Institute at Chicago
▪ Waymo – formerly Google
▶ For pedestrian age:
▪ Caltech Roadside Pedestrians (CRP) – fine-grained categorization of people using the entire human body
▪ Joint Attention in Autonomous Driving (JAAD) – dataset produced with the intention of demonstrating the behavioral variability of traffic participants

Datasets
Selected dataset for self-driving cars
▶ Berkeley DeepDrive
▪ 100,000 40-second HD video sequences
▪ 1,100 hours of driving
▪ Covers New York, San Francisco, the Bay Area and Berkeley
▪ 86,047 people detected in the dataset

Datasets
Dataset for pedestrian age: Caltech Roadside Pedestrians (CRP)
▶ Fine-grained categorization of people using the entire human body
▶ 27,454 bounding box and pose labels – suitable for training deep networks
▶ 5 age classes:
▪ Child
▪ Teen
▪ Young adult
▪ Middle aged
▪ Senior
▶ Additional labeled categories:
▪ Sex
▪ Weight
▪ Clothing type

Datasets
Dataset for pedestrian age: Joint Attention in Autonomous Driving (JAAD)
▶ Produced with the intention of demonstrating the behavioral variability of traffic participants
▶ Each participant in the driver-pedestrian interaction is labeled
▶ 4 age classes:
▪ Child
▪ Young
▪ Adult
▪ Senior
▶ Additional labeled categories:
▪ age_gender
▪ designated_crossing
▶ Behavior events:
▪ Crossing, Stopped, Moving fast, Moving slow, Speed up, Slow down, Clear path, Looking

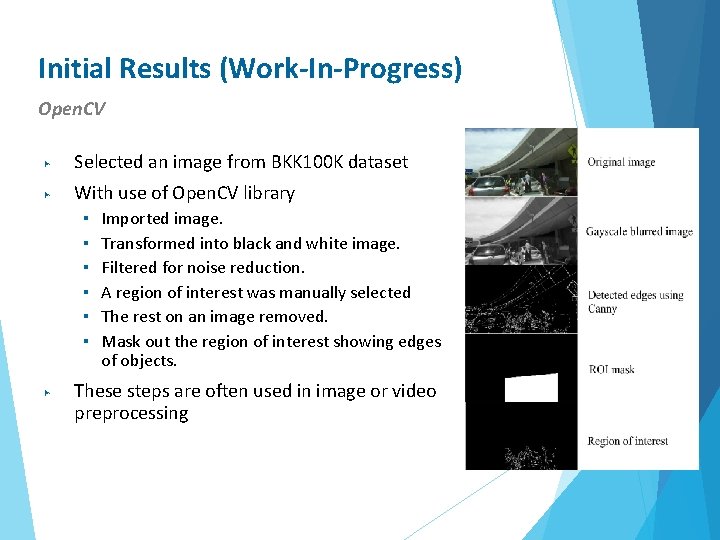

Initial Results (Work-In-Progress)
OpenCV
▶ Selected an image from the BDD100K dataset
▶ Using the OpenCV library:
▪ Imported the image.
▪ Converted it to a black-and-white image.
▪ Filtered it for noise reduction.
▪ Manually selected a region of interest.
▪ Removed the rest of the image.
▪ Masked out the region of interest, showing the edges of objects.
▶ These steps are often used in image or video preprocessing

Initial Results (Work-In-Progress)
YOLOv3
▶ Selected a video from the BDD100K dataset.
▶ Used YOLO version 3 for object detection.
▶ YOLOv3 is trained on the COCO dataset and distinguishes the class “person” from several types of animals such as “dog” and “horse”; classes outside COCO, such as “deer”, require additional training data.
▶ Bounding boxes and labels for detected objects are shown on a sample frame from the video.

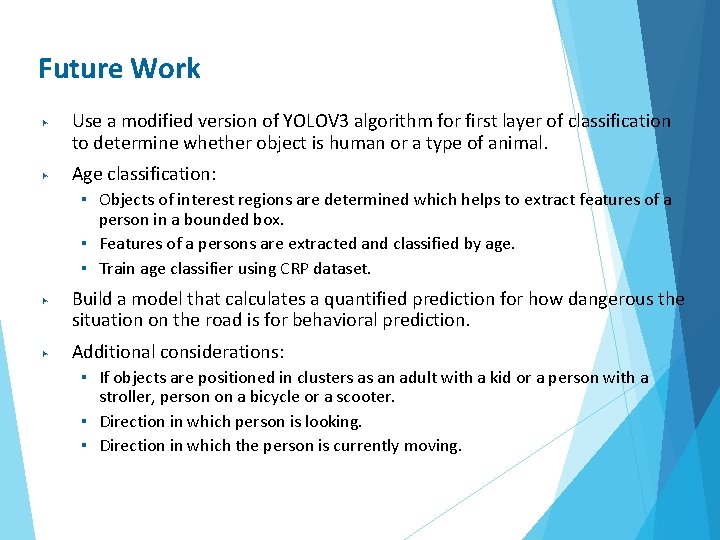

Future Work
▶ Use a modified version of the YOLOv3 algorithm for the first layer of classification to determine whether an object is a human or a type of animal.
▶ Age classification:
▪ Regions of interest are determined, which helps to extract the features of a person in a bounding box.
▪ Features of a person are extracted and classified by age.
▪ Train the age classifier using the CRP dataset.
▶ Build a model that calculates a quantified prediction of how dangerous the situation on the road is, for behavioral prediction.
▶ Additional considerations:
▪ Whether objects are positioned in clusters, such as an adult with a child, a person with a stroller, or a person on a bicycle or scooter.
▪ Direction in which the person is looking.
▪ Direction in which the person is currently moving.
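
As a purely illustrative sketch of what a "quantified prediction" could look like, the snippet below combines the listed considerations into a score in [0, 1]. Everything in it is an assumption made for the example: the `PedestrianState` fields, the hand-picked weights, and the idea that being in a cluster lowers the score. The slides propose learning such a model, not hard-coding it.

```python
from dataclasses import dataclass

# Hypothetical base risk per age group; values are placeholders, not results.
AGE_RISK = {"child": 0.9, "senior": 0.6, "adult": 0.3}


@dataclass
class PedestrianState:
    age_group: str            # output of the age classifier
    moving_toward_road: bool  # from the current direction of movement
    looking_at_vehicle: bool  # from the direction in which the person is looking
    in_cluster: bool          # e.g. a child accompanied by an adult


def danger_score(p: PedestrianState) -> float:
    """Combine the cues into a score clamped to [0, 1]; higher means more dangerous."""
    score = AGE_RISK.get(p.age_group, 0.5)  # unknown age groups get a middle value
    if p.moving_toward_road:
        score += 0.3
    if not p.looking_at_vehicle:
        score += 0.2
    if p.in_cluster:
        score -= 0.1
    return min(max(score, 0.0), 1.0)
```

A learned replacement could take the same inputs as features and be trained on labeled behavior events such as those in JAAD.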

Conclusions
▶ Computer vision is an extremely powerful technology that progresses every day with the help of improvements in computing power and advances in image, video and sensor data processing algorithms.
▶ The amount and quality of available research results, algorithms and public datasets are constantly growing, which opens research opportunities at different knowledge levels.
▶ By combining machine learning techniques with available datasets, it will be possible to create a model that predicts the behavior of an object in front of a self-driving car. The model is based on detecting specific objects of interest (a human or an animal), classifying humans by age, and taking into consideration how these objects appear together.


References
[1] SAE, “Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles,” SAE J3016, Tech. Rep., 2018.
[2] E. Yurtsever, J. Lambert, A. Carballo, K. Takeda, “A Survey of Autonomous Driving: Common Practices and Emerging Technologies,” IEEE Access 8 (2020): 58443–58469.
[3] R. McAllister, Y. Gal, A. Kendall, M. Van Der Wilk, A. Shah, R. Cipolla, and A. V. Weller, “Concrete problems for autonomous vehicle safety: advantages of Bayesian deep learning,” International Joint Conferences on Artificial Intelligence, Inc., 2017.
[4] J. D’Onfro, “‘I hate them’: Locals reportedly are frustrated with Alphabet’s self-driving cars,” CNBC, Aug 29, 2018. [Online]. Available: https://www.cnbc.com/2018/08/28/locals-reportedly-frustrated-with-alphabets-waymo-self-driving-cars.html. [Accessed Feb 26, 2020].
[5] N. Dalal, B. Triggs, “Histograms of oriented gradients for human detection,” in: Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on. Vol. 1, pp. 886–893.
[6] R. Girshick, J. Donahue, T. Darrell and J. Malik, “Rich feature hierarchies for accurate object detection and semantic segmentation,” Proc. IEEE Conf. Comput. Vis. Pattern Recognit., pp. 580-587, Jun. 2014.
[7] R. Girshick, “Fast R-CNN,” Proc. IEEE Int. Conf. Comput. Vis., pp. 1440-1448, 2015.
[8] S. Ren, K. He, R. Girshick and J. Sun, “Faster R-CNN: Towards real-time object detection with region proposal networks,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 39, no. 6, pp. 1137-1149, Jun. 2017.
[9] J. Redmon, S. Divvala, R. Girshick and A. Farhadi, “You Only Look Once: Unified real-time object detection,” IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.
[10] O. Ince, I. Ince, J. Park, J. Song and B. Yoon, “Child and adult classification using biometric features based on video analytics,” ICIC International, 2017, ISSN 2185-2766, pp. 819–825.
[11] D. A. Ridel, N. Deo, D. F. Wolf and M. M. Trivedi, “Understanding pedestrian-vehicle interactions with vehicle mounted vision: An LSTM model and empirical analysis,” Proc. IEEE Intell. Vehicles Symp., pp. 913-918, 2019.
[12] D. Barnes, M. Gadd, P. Murcutt, P. Newman and I. Posner, “The Oxford Radar RobotCar Dataset: A Radar Extension to the Oxford RobotCar Dataset,” 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 2020, pp. 6433-6438, doi: 10.1109/ICRA40945.2020.9196884.
[13] A. Geiger, P. Lenz and R. Urtasun, “Are we ready for autonomous driving? The KITTI vision benchmark suite,” 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, 2012, pp. 3354-3361, doi: 10.1109/CVPR.2012.6248074.
[14] P. Sun et al., “Scalability in Perception for Autonomous Driving: Waymo Open Dataset,” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 2020, pp. 2443-2451, doi: 10.1109/CVPR42600.2020.00252.
[15] F. Yu et al., “BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning,” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 2020, pp. 2633-2642, doi: 10.1109/CVPR42600.2020.00271.
[16] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollar, and C. L. Zitnick, “Microsoft COCO: Common objects in context,” in European Conference on Computer Vision, pp. 740–755. Springer, 2014.
[17] D. Hall and P. Perona, “Fine-grained classification of pedestrians in video: Benchmark and state of the art,” Proc. IEEE Conf. Comput. Vis. Pattern Recog., pp. 176-183, 2015.
[18] J. Redmon, A. Farhadi, “YOLOv3: An Incremental Improvement,” [Online]. Available: https://pjreddie.com/media/files/papers/YOLOv3.pdf [Accessed Sep. 20, 2020].
[19] I. Kotseruba, A. Rasouli, J. K. Tsotsos, “Joint Attention in Autonomous Driving (JAAD),” [Online]. Available: https://arxiv.org/pdf/1609.04741.pdf
[20] Y. Byeloborodov and S. Rashad, “Design of Machine Learning Algorithms for Behavioral Prediction of Objects for Self-Driving Cars,” 2020 11th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 2020, pp. 0101-0105, doi: 10.1109/UEMCON51285.2020.9298139. Available: https://ieeexplore.ieee.org/document/9298139

A paper on this research was published in IEEE UEMCON 2020 (available at https://ieeexplore.ieee.org/document/9298139).
Feel free to reach out if you have more questions!
y.byeloborodov@moreheadstate.edu
yevgeniy.byelob@outlook.com
