Design decisions and lessons learned in the Improving Chronic Illness Care Evaluation

- Slides: 21

Design decisions and lessons learned in the Improving Chronic Illness Care Evaluation

Emmett Keeler, September 2005, emmett@rand.org

6/01 RAND

Evaluation questions

- Did the Collaboratives induce positive changes?
- Did implementing the Chronic Care Model (CCM) improve processes of care and patient health?
- What did participation and implementation cost?
- What factors were associated with success?

www.improvingchroniccare.org is a great website for information about the Chronic Care Model.

ICICE results in 1 slide

- Collaborative sites made more than 30 systemic changes over the year, on average
- These changes let us test whether moving towards the CCM is good for patients
- Process, self-management and some outcomes improved more for intervention patients than for patients at control sites
  - improving most in emphasized areas such as patient goal-setting

http://www.rand.org/health/ICICE presents findings from the 15 accepted papers and other information about the study.

Outline

- → Design considerations and what we did
- Improving science base for QI evaluations
  - Dealing with challenges to validity
  - Reducing per subject costs

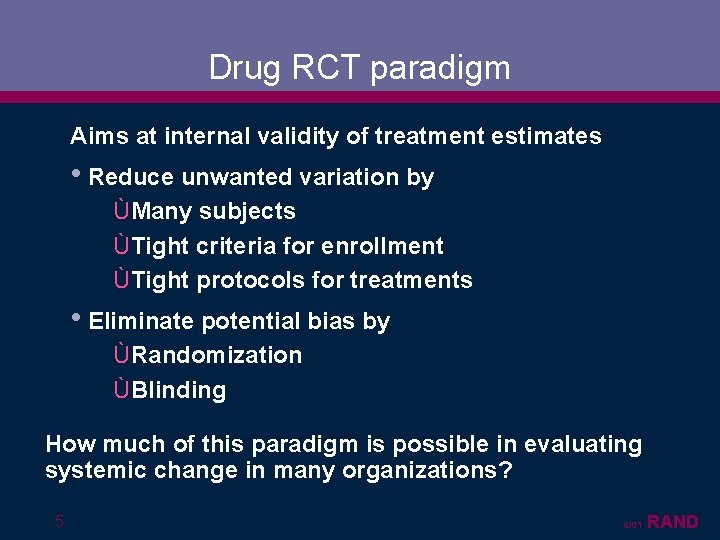

Drug RCT paradigm

Aims at internal validity of treatment estimates

- Reduce unwanted variation by
  - Many subjects
  - Tight criteria for enrollment
  - Tight protocols for treatments
- Eliminate potential bias by
  - Randomization
  - Blinding

How much of this paradigm is possible in evaluating systemic change in many organizations?

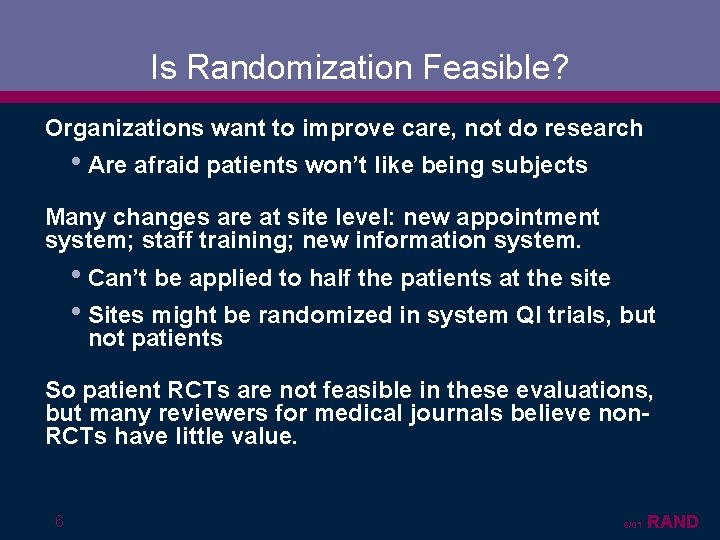

Is Randomization Feasible?

Organizations want to improve care, not do research

- They are afraid patients won’t like being subjects

Many changes are at site level: new appointment system; staff training; new information system.

- These can’t be applied to half the patients at the site
- Sites might be randomized in system QI trials, but not patients

So patient RCTs are not feasible in these evaluations, but many reviewers for medical journals believe non-RCTs have little value.

Components of alternate strong studies

- Before and after with a matched control group
- Multiple sources of data and an evaluation logic model
- Planning for and testing potential biases

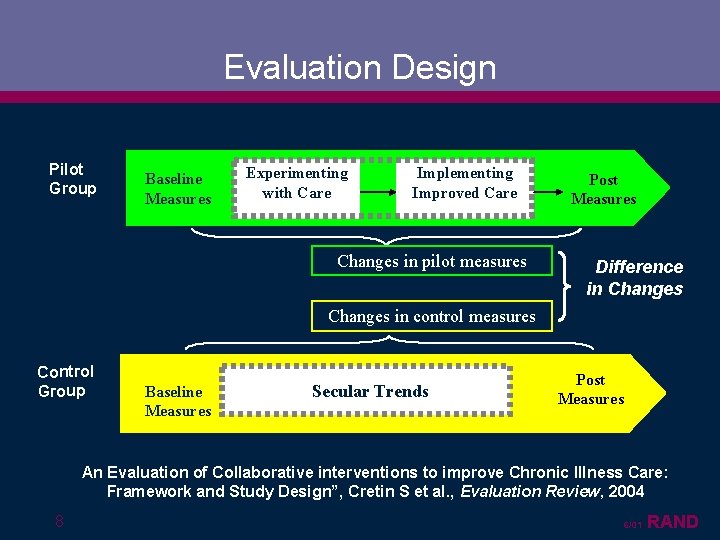

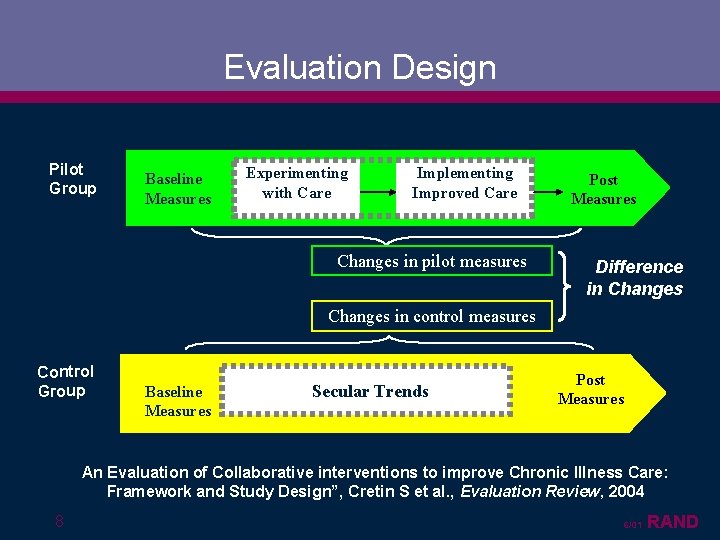

Evaluation Design

- Pilot group: baseline measures → experimenting with care, implementing improved care → post measures (changes in pilot measures)
- Control group: baseline measures → secular trends → post measures (changes in control measures)
- Difference in changes: changes in pilot measures compared with changes in control measures

Source: “An Evaluation of Collaborative interventions to improve Chronic Illness Care: Framework and Study Design”, Cretin S et al., Evaluation Review, 2004
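The before-and-after design with a control group amounts to a difference-in-differences comparison. The sketch below shows the arithmetic only. The function names and the patient scores are hypothetical and made up for illustration; they are not ICICE data, and the study's actual analyses may have used different methods (for example, regression with baseline covariates).

```python
from statistics import mean


def mean_change(baseline, post):
    """Average within-patient change (post minus baseline)."""
    return mean(p - b for b, p in zip(baseline, post))


def difference_in_differences(pilot_base, pilot_post, control_base, control_post):
    """Change in the pilot group minus change in the control group.

    The control-group change stands in for secular trends, so the
    remainder is attributed to the intervention.
    """
    return mean_change(pilot_base, pilot_post) - mean_change(control_base, control_post)


# Hypothetical quality-of-care scores for three patients per group
# (illustrative values only, not study data).
pilot_base = [50, 60, 55]
pilot_post = [65, 70, 66]
control_base = [52, 58, 54]
control_post = [57, 63, 59]

effect = difference_in_differences(pilot_base, pilot_post, control_base, control_post)
print(f"Estimated intervention effect: {effect}")
```

With these made-up numbers the pilot group improves by 12 points on average and the control group by 5, so 7 points are attributed to the intervention rather than to secular trends.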

Picking a control group

External control sites

- No contamination from intervention
- But a different set of shocks
- Less likely to cooperate, more expensive to include

We tried to pick internal sites in the same organization

- Asked for a similar site that was not likely to get CCM this year.

Patient Sampling

Use sampling frame from site registry of patients with disease of interest

- Registries are needed for the CCM interventions
- A few sites had to develop them, which we helped with

We usually took everyone in the registry at our sites.

- Not a “tight” design, but better for external validity

We later discarded patients who said they did not have the disease, or did not get care at the sites.

Evaluation Data Sources

- Patient: Telephone Surveys
- Record: Medical Record Abstraction
- Staff: Clinical & Administrative Staff Surveys
- IHI Monthly Progress Reports
- Final Calls with Leader

Telephone Survey

The patient is the best source for what the care provider does:

- Education, knowledge, adherence
- Communication, satisfaction
- General and disease-specific health, limitations
- Utilization, demographics and insurance

Can be used to see if improvements in charts are just documentation or are real.

Cost ~$100 each for the ~4000 patients phoned.

Advantages of charts

IRB and consent difficulties delayed recruitment

- Phone surveys were at the end of the collaborative, but
- Charts still provide a true before and after
  - can add baseline variables from charts to analyses of measures from phone surveys.
- Cost ~$300 each, but give before and after.

IHI Monthly progress reports

The collaborative asked the team at each intervention site to fill out a brief report each month, for their leaders and for IHI

- what they had done that month,
- tracking their most important statistics.

Very helpful in finding out what sites did.

- We needed to develop a method to code change activities
- Used changes as both dependent and independent variables.

Lack of standardization reduced the value of their statistics to us.

- e.g. for depression, 3 time periods for “in treatment”, 3 for time for a follow-up, 2 for big improvement at N months.

Outline

- Design considerations and what we did
- → Improving science base for QI evaluations
  - Dealing with challenges to validity
  - Reducing per subject costs

Longer Run effects?

We mainly looked at care before and during the collaborative to shorten the study, saving time-to-results and resources.

How can one get longer run effects?

- Use risk factors as a proxy for future health?
  - Cholesterol, HbA1c, blood pressure in people with diabetes.
- Check in a year later with key staff for:
  - Reflections on successes and barriers
  - See what happened next (maintenance, spread of quality improvements).

Evaluators need to know what control sites are doing

In the first collaborative, control sites made big improvements, leaving intervention sites only insignificantly better. What was up?

Bleed of collaborative quality improvement to controls:

- We measured how “close” the control sites were using a scale including geography, overlap of staff, and overlap of organizations (only 6 control sites were truly external).
- This scale was a mild predictor of control sites doing better.

Other quality improvement activities: QI activities unique to a control site reduce the estimates of intervention success.

- Asking about QI was a big part of the control exit phone call.

Bias from volunteer organizations and teams?

External validity: Organizations volunteered to improve their care

- We do not know how effective the intervention would be on organizations that do not care to improve quality.

Internal validity: Site staff in the organization often volunteered to go first.

- We compared staff attitudes of the chronic care delivery teams in intervention and control sites.
  - Similar attitudes towards quality improvement

Selection Bias from organizations agreeing to be evaluated?

Compare organizations in the collaborative that participated in the evaluation with those that did not. IHI Faculty gave 1-5 ratings of success at the end.

- Participating organizations averaged 4.1, non-participating organizations averaged 3.9; the difference was non-significant
- None of the 7 organizations that dropped out of the collaboratives had signed up to participate.

Bias from Funder’s pressure

- Real and perceived problem that increases with less rigid designs.
- Separate evaluation team from the intervention team
  - But we need their help to enhance cooperation and help us understand the intervention process
- Try to ensure independence up-front in contract
  - Funders can review but not censor results
  - Evaluators share “unpleasant” results ASAP and work with funder to understand and present them
- But, researchers and funders are in a long-term relationship

Lowering cost of evaluations

Reducing multi-site IRB and consent costs:

- Study QI in organizations
  - with many sites
  - with prior patient consent for quality improvement and QI research activities.

Reducing data collection costs

- Electronic medical record
- Clever use of existing data, like claims
- Web-based and other unconventional surveys