Design and Performance Studies of an Adaptive Cache

- Slides: 28

Design and Performance Studies of an Adaptive Cache Retrieval Scheme in a Mobile Computing Environment

Paper by: Wen-Chih Peng and Ming-Syan Chen
Presented by: Arseny Bogomolov
October 25, 2005

Presentation Outline
- Setting the Context
- Problem Statement
- Related Work
- Preliminaries
- Cache Retrieval Models
- Cost Analysis for an Adaptive Scheme
- Performance Study
- Conclusions

12/13/2021 2

The Typical Mobile Environment
- A traveling mobile user (MU)
- A running application
- Communication with an application server
- The MU moves to a new service area in a distributed server architecture, which:
  - Minimizes communication overhead
  - Minimizes application delays
  - Balances workload
  - Provides fault tolerance
- The move triggers a "service handoff", which must be seamless

An Example Application
- Traffic reports
  - Shortest-distance routes
  - Up-to-date traffic status
  - Location-dependent information
  - Live traffic video streamed wirelessly
- Temporal locality?
- What can be cached, and where?

System Architecture
- SA: application server
- LBn: local buffer
  - One per MU
  - Caches data for a single user Ui
- Coordinator
  - Concurrency control
  - Transaction monitoring
  - Can also cache data for users Ui through Un

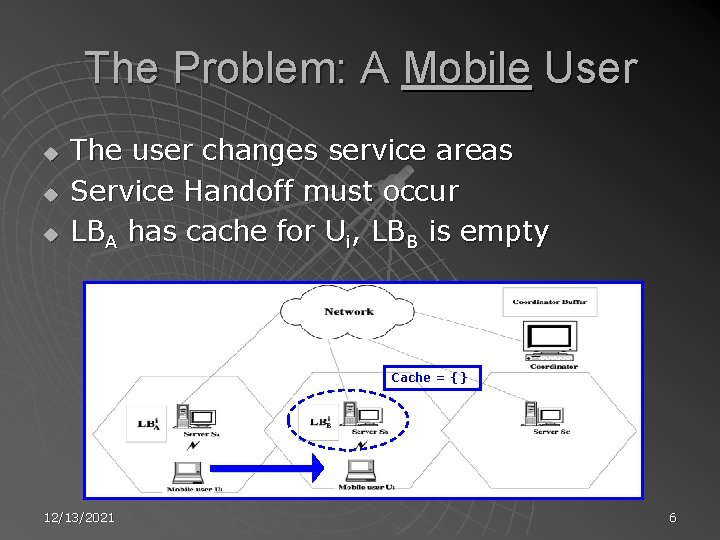

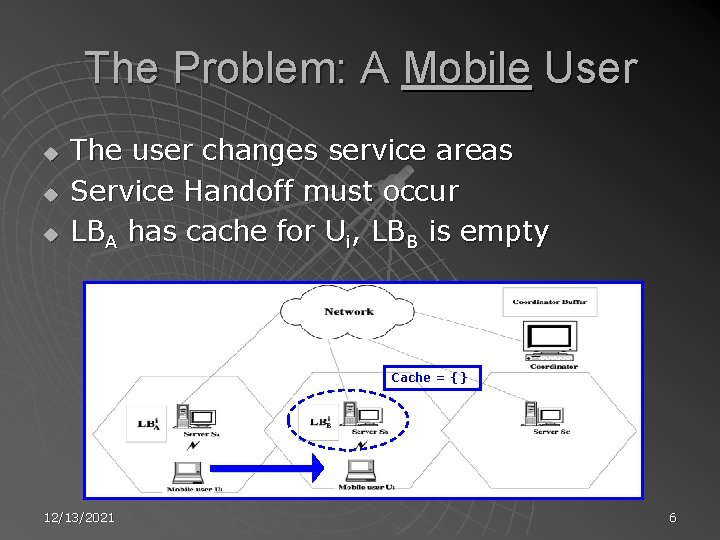

The Problem: A Mobile User
- The user changes service areas
- A service handoff must occur
- LBA holds the cache for Ui, while LBB is empty (Cache = {})

The Proposed Solution
- Three caching schemes:
  1. From Local Buffer (FLB)
  2. From Coordinator Buffer (FCB)
  3. From Previous Server (FPS)
- Which one to use?
  - Depends on transaction properties: temporal locality and the cost of a cache miss
- The authors propose DAR

Dynamic & Adaptive Cache Retrieval Scheme (DAR)
- The authors' main objective and contribution
- A set of decision rules for service handoff
- Selects the most appropriate of the three schemes
  - For each phase of a transaction
  - Based on transaction properties

Paper Contributions: Summary
- Evaluates the properties of FLB, FCB, and FPS
- Proposes the DAR scheme for service handoffs
- Comparative analysis of the four schemes
- Simulations to validate the results

Related Work
- Caching at proxy servers
  - Service handoff not considered
- Cache invalidation schemes
  - Impact of client disconnects on performance
  - Energy-efficient schemes
  - Adaptive schemes adjust the size of the invalidation report
- For the most part, all existing schemes are static
- Previous uses of the coordinator
  - Concurrency control
  - Transaction execution monitoring
  - Not used for caching

Preliminaries
- Temporal locality: the tendency of data pages to be referenced again "soon"
  - Intra-transaction locality: the same data pages are referenced again within the same transaction
  - Inter-transaction locality: the same data pages are referenced by consecutive transactions
- The type of temporal locality determines which caching scheme fits best
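To make the two kinds of locality concrete, here is a minimal Python sketch that labels pages in a reference trace. The function name `classify_locality` and the trace format (a list of transactions, each a list of page ids) are assumptions made for illustration; the paper characterizes locality with probabilities rather than by scanning traces. "Consecutive transactions" is read here as each transaction and the one immediately before it.

```python
from typing import Sequence


def classify_locality(
    transactions: Sequence[Sequence[int]],
) -> tuple[set[int], set[int]]:
    """Return (intra, inter) page-id sets for a sequence of transactions.

    intra: pages referenced more than once inside the same transaction.
    inter: pages referenced by two consecutive transactions.
    """
    intra: set[int] = set()
    inter: set[int] = set()
    for i, txn in enumerate(transactions):
        seen: set[int] = set()
        for page in txn:
            if page in seen:
                intra.add(page)  # repeated within this transaction
            seen.add(page)
        if i > 0:
            # pages shared with the immediately preceding transaction
            inter |= seen & set(transactions[i - 1])
    return intra, inter


# Pages 23 and 26 are re-read inside one route query; page 44 recurs across transactions.
txns = [[23, 26, 23, 26, 44], [44, 39], [44, 7]]
print(classify_locality(txns))
```

This trace-based view lets a single page fall into both sets, whereas the workload in the performance study assigns each object to exactly one group.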

From Local Buffer
- One per MU
- Created when the MU enters a service area
- The first transaction triggers the warm-up
- No pre-fetching is done
  - Best when there is no temporal locality
  - Example: "Find the shortest distance to X from where I am"
  - Saves pre-fetching costs

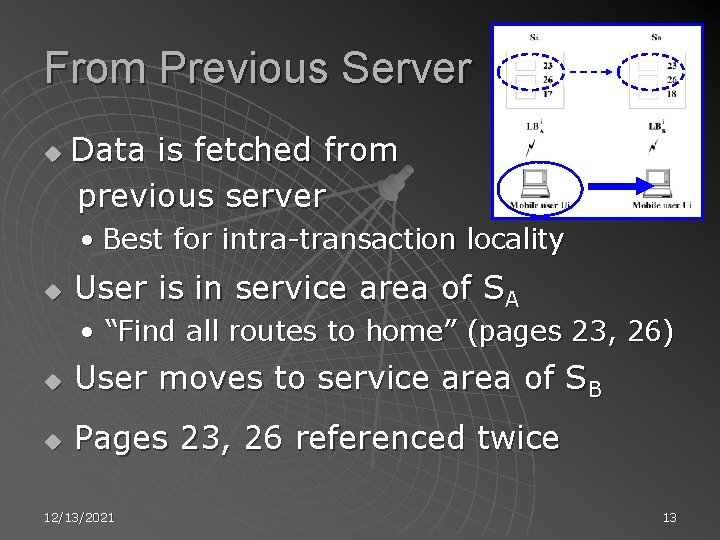

From Previous Server
- Data is fetched from the previous server
  - Best for intra-transaction locality
- The user is in the service area of SA
  - "Find all routes to home" (pages 23, 26)
- The user moves to the service area of SB
- Pages 23 and 26 are referenced twice within the transaction

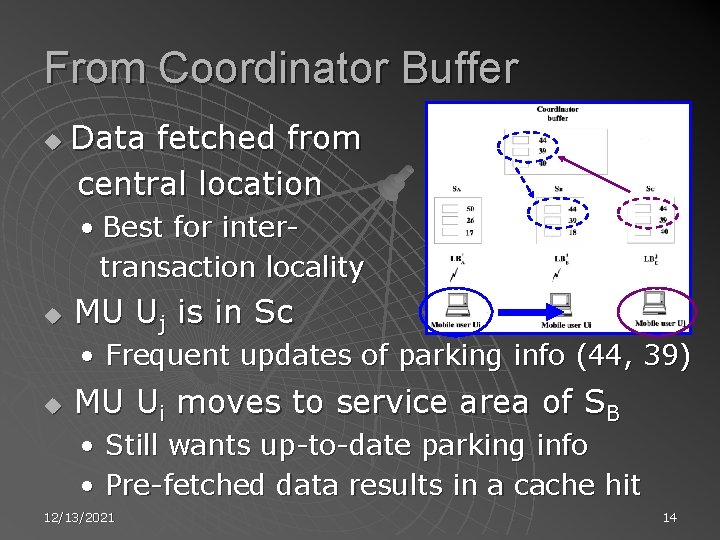

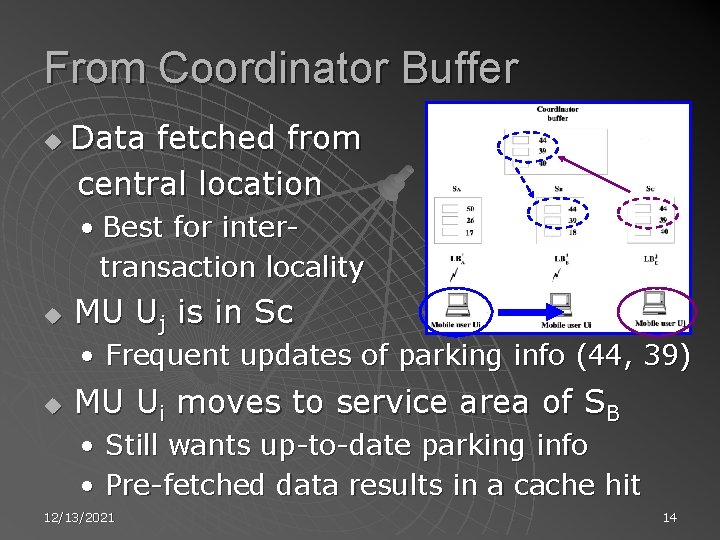

From Coordinator Buffer
- Data is fetched from a central location
  - Best for inter-transaction locality
- MU Uj is in SC
  - Frequent updates of parking info (pages 44, 39)
- MU Ui moves to the service area of SB
  - Still wants up-to-date parking info
  - Pre-fetched data results in a cache hit

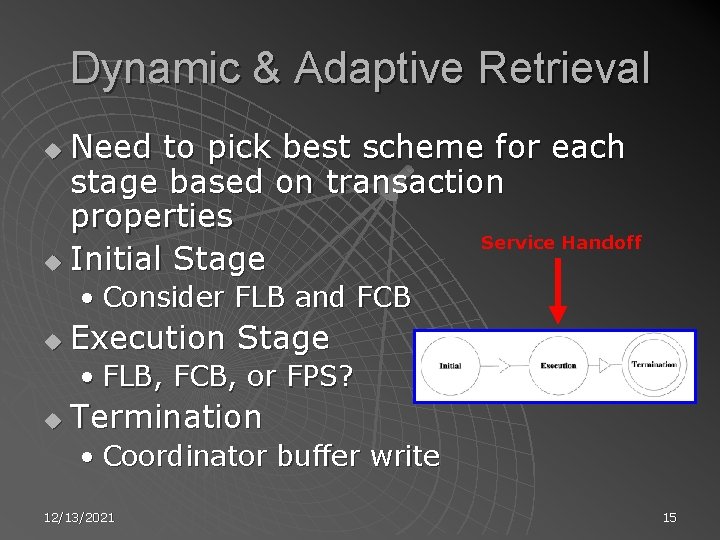

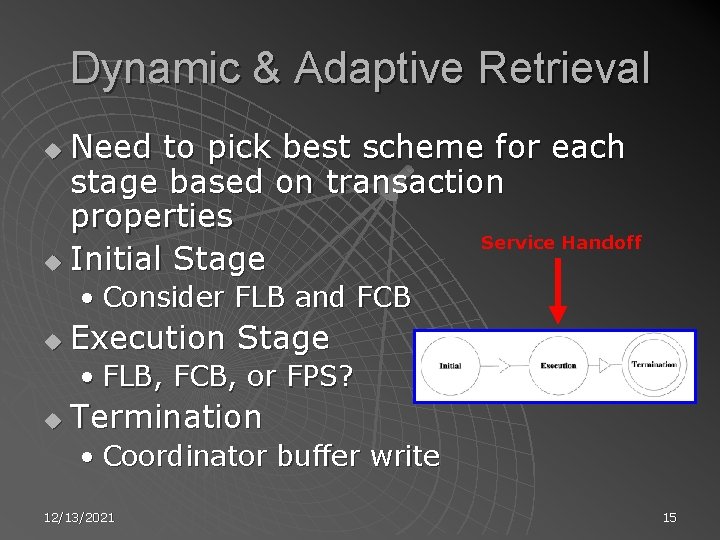
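The three retrieval schemes differ in how the new server's local buffer is populated at handoff. The sketch below models that difference in Python under simplifying assumptions: buffers are unbounded sets of page ids (no replacement), FPS copies the previous server's buffer wholesale at handoff time, and FCB pre-fetches the entire coordinator buffer. The names `Buffer`, `handoff`, and `hits` are hypothetical, and the page numbers reuse the route (23, 26) and parking (44, 39) examples from the slides.

```python
from dataclasses import dataclass, field


@dataclass
class Buffer:
    """A page cache; capacity limits and replacement are omitted for brevity."""
    pages: set[int] = field(default_factory=set)

    def reference(self, page: int) -> bool:
        """Return True on a hit; on a miss, demand-fetch the page into the buffer."""
        if page in self.pages:
            return True
        self.pages.add(page)
        return False


def handoff(scheme: str, previous_lb: Buffer, coordinator_buffer: Buffer) -> Buffer:
    """Build the MU's local buffer at the new server (simplified model)."""
    if scheme == "FLB":
        return Buffer()  # start empty, no pre-fetching
    if scheme == "FPS":
        return Buffer(set(previous_lb.pages))  # copy the previous server's buffer
    if scheme == "FCB":
        return Buffer(set(coordinator_buffer.pages))  # pre-fetch from the coordinator
    raise ValueError(f"unknown scheme: {scheme}")


def hits(buffer: Buffer, references: list[int]) -> int:
    """Count cache hits while replaying a reference string."""
    return sum(buffer.reference(p) for p in references)


previous_lb = Buffer({23, 26})   # LBA after the route query started under SA
coordinator = Buffer({44, 39})   # CB holding shared parking-info pages
remaining = [23, 26, 44]         # references issued after the move to SB
for scheme in ("FLB", "FPS", "FCB"):
    print(scheme, hits(handoff(scheme, previous_lb, coordinator), remaining))
```

With these inputs FLB scores no hits, FPS hits on the re-referenced route pages 23 and 26, and FCB hits on the shared parking page 44, mirroring which kind of locality each scheme exploits.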

Dynamic & Adaptive Retrieval
- Need to pick the best scheme for each stage of the service handoff, based on transaction properties
- Initial stage
  - Consider FLB and FCB
- Execution stage
  - FLB, FCB, or FPS?
- Termination stage
  - Write to the coordinator buffer

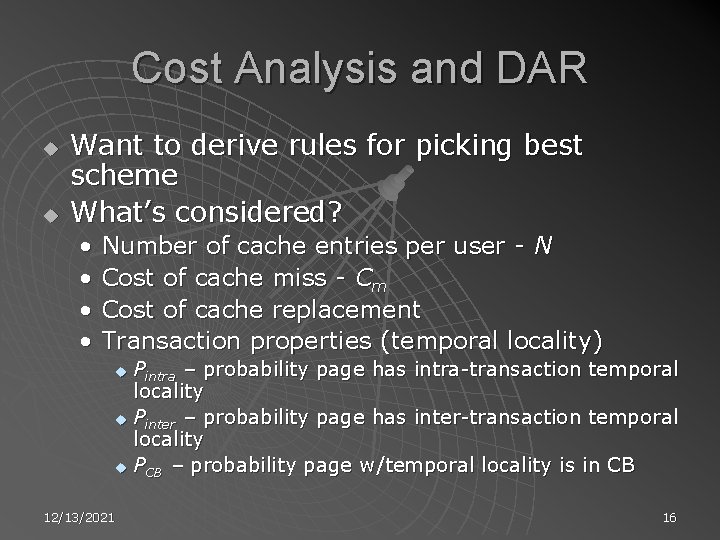

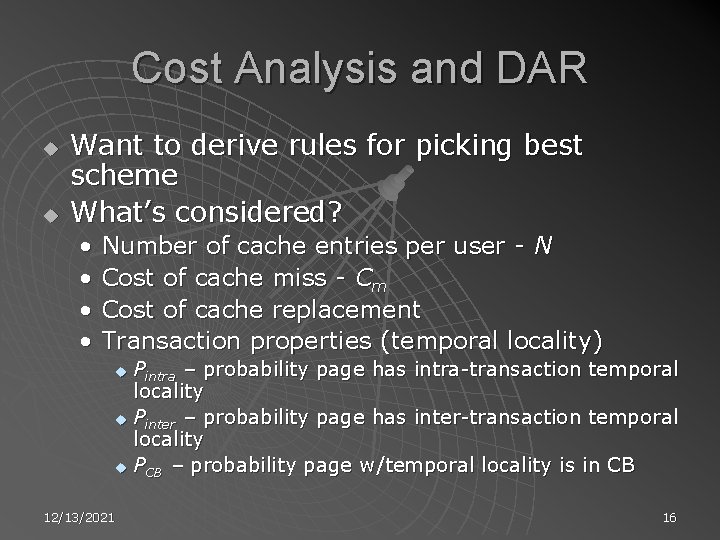

Cost Analysis and DAR
- Goal: derive rules for picking the best scheme
- What is considered?
  - Number of cache entries per user: $N$
  - Cost of a cache miss: $C_m$
  - Cost of cache replacement
  - Transaction properties (temporal locality):
    - $P_{intra}$: probability that a page has intra-transaction temporal locality
    - $P_{inter}$: probability that a page has inter-transaction temporal locality
    - $P_{CB}$: probability that a page with temporal locality is in the CB

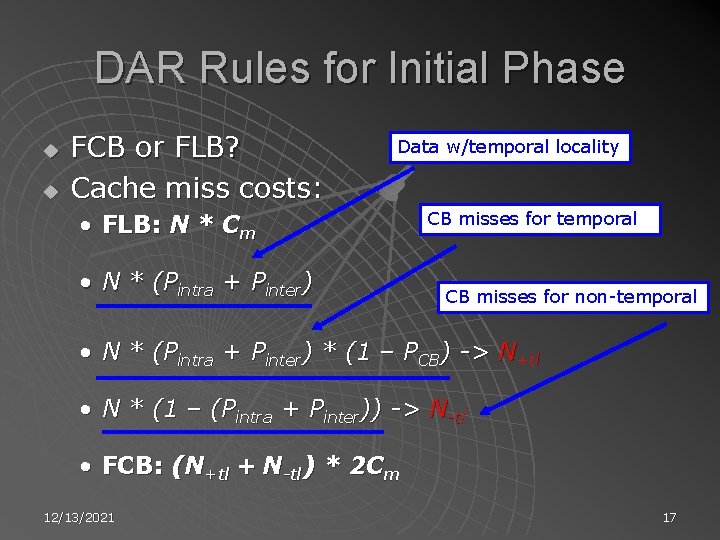

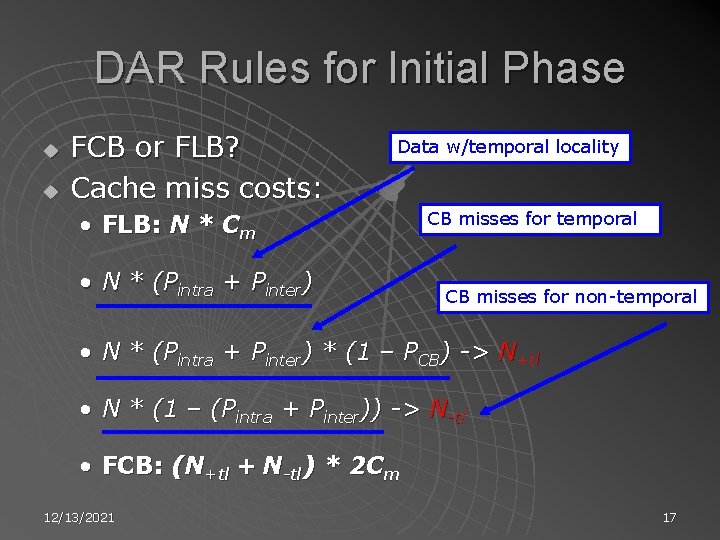

DAR Rules for Initial Phase
- FCB or FLB?
- Cache-miss costs:
  - FLB: $N \cdot C_m$ (the new local buffer starts empty)
  - Pages with temporal locality: $N(P_{intra} + P_{inter})$
  - CB misses for pages with temporal locality: $N_{+tl} = N(P_{intra} + P_{inter})(1 - P_{CB})$
  - CB misses for pages without temporal locality: $N_{-tl} = N\bigl(1 - (P_{intra} + P_{inter})\bigr)$
  - FCB: $(N_{+tl} + N_{-tl}) \cdot 2C_m$
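The two miss-cost expressions for the initial phase can be evaluated directly. This Python sketch transcribes them as given on the slide, including the $2C_m$ charge per FCB miss; the function names `flb_cost` and `fcb_cost` and the sample parameter values are hypothetical.

```python
def flb_cost(n: int, c_m: float) -> float:
    """Miss cost of FLB: the new local buffer starts empty, so all N entries miss."""
    return n * c_m


def fcb_cost(n: int, c_m: float, p_intra: float, p_inter: float, p_cb: float) -> float:
    """Miss cost of FCB, using the slide's 2 * C_m charge per miss."""
    p_tl = p_intra + p_inter            # probability a page has temporal locality
    n_plus_tl = n * p_tl * (1 - p_cb)   # N+tl: temporal pages missing from the CB
    n_minus_tl = n * (1 - p_tl)         # N-tl: pages without temporal locality
    return (n_plus_tl + n_minus_tl) * 2 * c_m


print(flb_cost(100, 1.0), fcb_cost(100, 1.0, p_intra=0.5, p_inter=0.3, p_cb=0.9))
```

For example, with $N = 100$, $C_m = 1$, $P_{intra} = 0.5$, $P_{inter} = 0.3$ and $P_{CB} = 0.9$, FLB costs 100 while FCB costs about 56, so pre-fetching from the coordinator buffer pays off.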

Rules for Initial Phase (cont’d)
- FCB is profitable if its cost is lower than that of FLB:
  - $(N_{+tl} + N_{-tl}) \cdot 2C_m < N \cdot C_m$
  - which reduces to $P_{intra} + P_{inter} > 0.5 / P_{CB}$
- If temporal locality is prominent, use FCB (the CB holds the more frequently used pages)
- If it is not, use FLB to avoid unnecessary pre-fetching costs
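The slide jumps from the cost inequality to the final threshold. Writing $P = P_{intra} + P_{inter}$ (a shorthand introduced here), with $N_{+tl}$ and $N_{-tl}$ the CB-miss counts defined for the initial phase, and assuming $N, C_m, P_{CB} > 0$, the intermediate steps are:

$$
\begin{aligned}
N_{+tl} + N_{-tl} &= N P (1 - P_{CB}) + N (1 - P) = N (1 - P\,P_{CB}) \\
2 C_m \, N (1 - P\,P_{CB}) < N C_m
  &\iff 1 - P\,P_{CB} < \tfrac{1}{2}
  \iff P\,P_{CB} > \tfrac{1}{2}
  \iff P > \frac{0.5}{P_{CB}}
\end{aligned}
$$

Since the model requires $P \le 1$ (otherwise $N_{-tl}$ would be negative), FCB can only win in the initial phase when $P_{CB} > 0.5$.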

DAR Rules for Execution Phase
- FCB, FLB, or FPS?
- If there is no temporal locality, use FLB (same rule as in the initial phase)
- Otherwise, decide based on the ratio of intra- to inter-transaction locality, using a threshold φ:
  - $P_{intra} / P_{inter} \ge \varphi$: use FPS
  - $P_{intra} / P_{inter} < \varphi$: use FCB
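The initial- and execution-phase rules combine into a small decision procedure. In this Python sketch, treating "no temporal locality" as failing the initial-phase test $P_{intra} + P_{inter} > 0.5 / P_{CB}$ is an assumption based on the slide's "same as for Initial Phase" remark; the names `Scheme`, `initial_phase`, and `execution_phase` are hypothetical, and φ = 1.75 in the usage lines is only an illustrative value.

```python
from enum import Enum


class Scheme(Enum):
    FLB = "from local buffer"
    FCB = "from coordinator buffer"
    FPS = "from previous server"


def locality_is_prominent(p_intra: float, p_inter: float, p_cb: float) -> bool:
    """Initial-phase test: FCB beats FLB when P_intra + P_inter > 0.5 / P_CB."""
    return p_cb > 0 and (p_intra + p_inter) > 0.5 / p_cb


def initial_phase(p_intra: float, p_inter: float, p_cb: float) -> Scheme:
    return Scheme.FCB if locality_is_prominent(p_intra, p_inter, p_cb) else Scheme.FLB


def execution_phase(p_intra: float, p_inter: float, p_cb: float, phi: float) -> Scheme:
    # Assumption: "no temporal locality" means failing the initial-phase test.
    if not locality_is_prominent(p_intra, p_inter, p_cb):
        return Scheme.FLB
    # A zero P_inter is treated as an infinite ratio (pure intra-transaction locality).
    if p_inter == 0 or p_intra / p_inter >= phi:
        return Scheme.FPS  # intra-transaction locality dominates
    return Scheme.FCB      # inter-transaction locality dominates


print(initial_phase(0.6, 0.2, 0.9))
print(execution_phase(0.6, 0.2, 0.9, phi=1.75))
print(execution_phase(0.2, 0.6, 0.9, phi=1.75))
```

Guarding the zero-$P_{inter}$ case keeps purely intra-transaction workloads on FPS instead of raising a division error.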

DAR Rules Summary
- Initial phase
- Execution phase

Performance Study
- 64 servers in an 8 × 8 mesh topology
- The MU moves randomly between servers
- TXNSIZE: number of data objects (4 KB pages) per transaction (20 to 30)
- Cache sizes:
  - Local buffer: 1% of DBSIZE
  - Coordinator buffer: varied
- The database has K data objects:
  1. $P_{intra} \cdot K$ objects with intra-transaction locality
  2. $P_{inter} \cdot K$ objects with inter-transaction locality
  3. $P_{pseudo} \cdot K$ objects with no temporal locality
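A minimal Python sketch of this workload setup. Several details are assumptions not stated on the slide: each move goes to a uniformly chosen neighboring server in the 8 × 8 mesh, object ids are split into contiguous ranges, and TXNSIZE is drawn uniformly from 20 to 30. The names `partition_objects` and `next_server` and the value K = 10,000 are hypothetical.

```python
import random


def partition_objects(k: int, p_intra: float, p_inter: float) -> dict[str, range]:
    """Split object ids 0..k-1 into intra, inter, and pseudo (no-locality) groups."""
    n_intra = round(p_intra * k)
    n_inter = round(p_inter * k)
    return {
        "intra": range(0, n_intra),
        "inter": range(n_intra, n_intra + n_inter),
        "pseudo": range(n_intra + n_inter, k),  # the remaining P_pseudo * K objects
    }


def next_server(pos: tuple[int, int], rng: random.Random, size: int = 8) -> tuple[int, int]:
    """Move the MU to a uniformly chosen neighboring server in a size x size mesh."""
    x, y = pos
    neighbors = [
        (x + dx, y + dy)
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
        if 0 <= x + dx < size and 0 <= y + dy < size
    ]
    return rng.choice(neighbors)


rng = random.Random(42)
groups = partition_objects(k=10_000, p_intra=0.4, p_inter=0.4)  # P_pseudo = 20%
txn_size = rng.randint(20, 30)   # TXNSIZE, inclusive on both ends
pos = next_server((0, 0), rng)   # a corner server has only two neighbors
print({name: len(r) for name, r in groups.items()}, txn_size, pos)
```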

Impact of Temporal Locality
- $P_{inter}$ fixed at 10%, $P_{intra}$ varied
  - $(P_{intra} + P_{inter}) > 0.5 / P_{CB}$ ~ 0.1
- $P_{intra}$ fixed at 10%, $P_{inter}$ varied

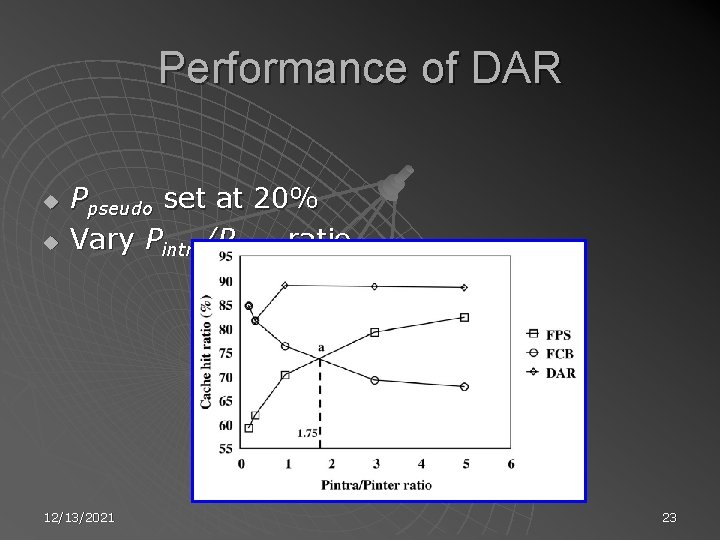

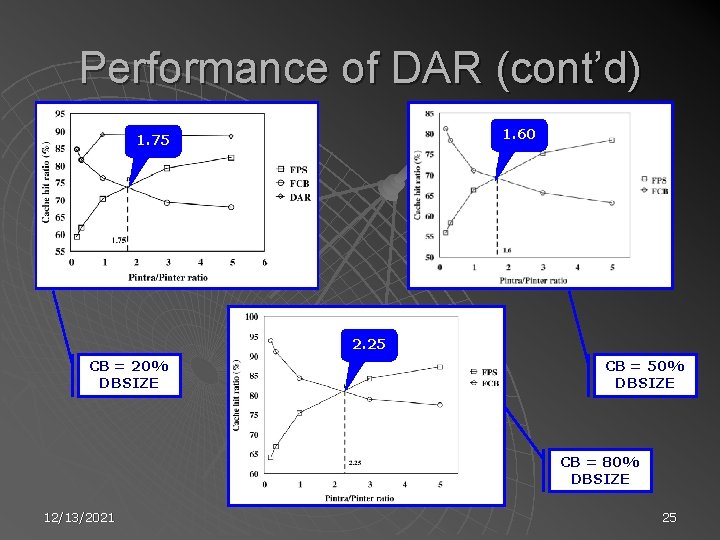

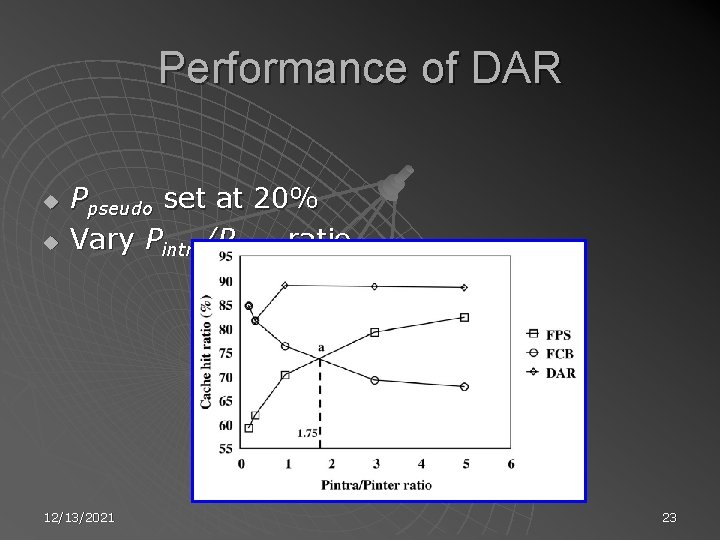

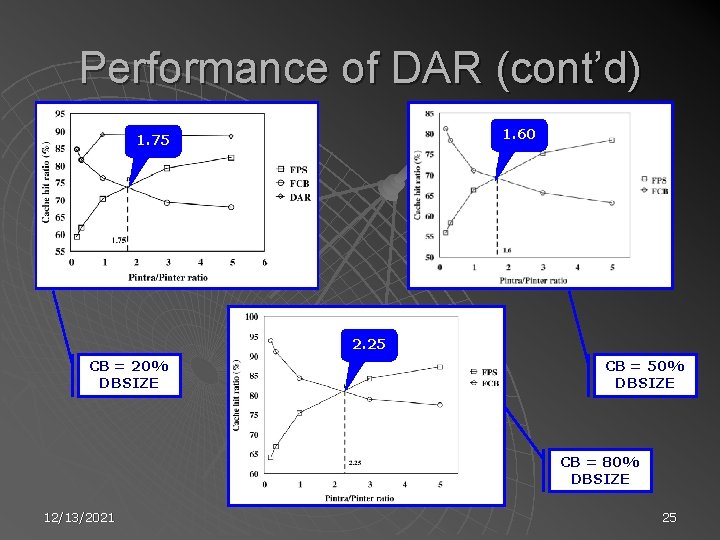

Performance of DAR
- $P_{pseudo}$ set at 20%
- Vary the $P_{intra}/P_{inter}$ ratio

Performance of DAR (cont’d)
- Can adapt to transaction properties
- Higher cache hit ratio regardless of whether the MU's transactions have more inter- or intra-transaction pages
- Employs the appropriate cache retrieval method dynamically:
  - $P_{intra}/P_{inter} \ge \varphi$: FPS
  - $P_{intra}/P_{inter} < \varphi$: FCB
- What should the value of φ be?

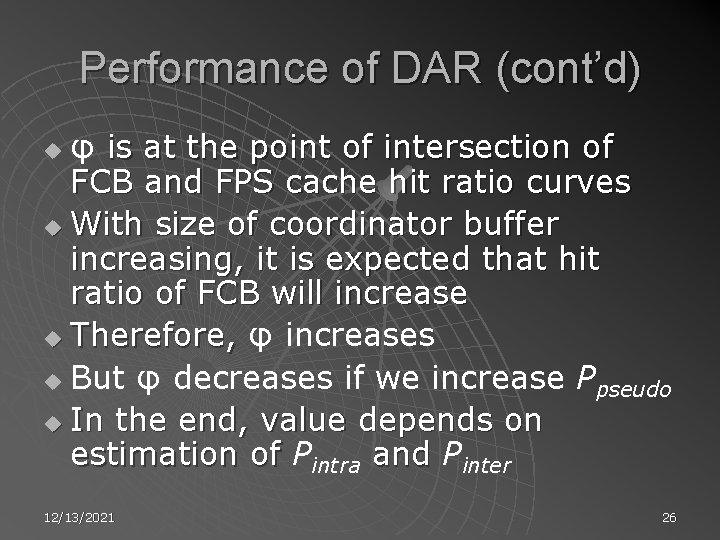

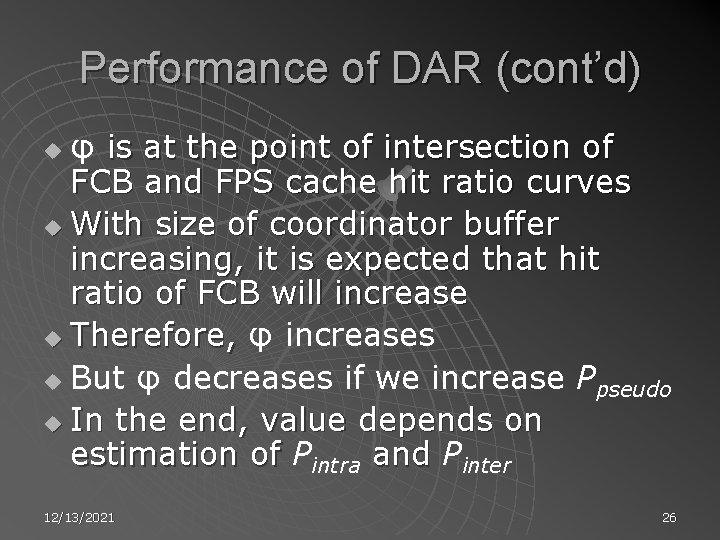

Performance of DAR (cont’d)

| Coordinator buffer size | Crossover ratio φ |
| --- | --- |
| 20% of DBSIZE | 1.60 |
| 50% of DBSIZE | 1.75 |
| 80% of DBSIZE | 2.25 |

Performance of DAR (cont’d)
- φ is the point where the FCB and FPS cache-hit-ratio curves intersect
- As the coordinator buffer grows, the hit ratio of FCB is expected to increase
- Therefore, φ increases
- But φ decreases if $P_{pseudo}$ increases
- In the end, the value depends on how well $P_{intra}$ and $P_{inter}$ are estimated
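Since φ is defined by where the FCB and FPS hit-ratio curves intersect, it can be estimated from sampled curves by linear interpolation. This Python sketch does that; the function name `crossover` is hypothetical, and the sample hit ratios in the usage lines are made-up illustrative numbers, not results from the paper.

```python
from typing import Optional, Sequence


def crossover(
    ratios: Sequence[float], fcb: Sequence[float], fps: Sequence[float]
) -> Optional[float]:
    """Estimate phi: the P_intra/P_inter ratio where FPS overtakes FCB.

    `ratios` must be sorted ascending; `fcb` and `fps` are hit ratios sampled
    at those ratios. Returns None if FPS never overtakes FCB in the range.
    """
    diffs = [p - c for p, c in zip(fps, fcb)]  # > 0 where FPS has the higher hit ratio
    for i in range(1, len(ratios)):
        d0, d1 = diffs[i - 1], diffs[i]
        if d0 < 0 <= d1:
            # Linear interpolation of the zero crossing between samples i-1 and i.
            return ratios[i - 1] + (ratios[i] - ratios[i - 1]) * (-d0) / (d1 - d0)
    return None


# Illustrative numbers only: FCB degrades and FPS improves as the ratio grows.
ratios = [1.0, 1.5, 2.0, 2.5]
fcb = [0.60, 0.58, 0.56, 0.54]
fps = [0.50, 0.55, 0.60, 0.65]
print(crossover(ratios, fcb, fps))
```

In line with the reasoning above, a larger coordinator buffer would lift the FCB curve and push this intersection to a higher ratio.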

Paper Summary
- Examines several cache retrieval schemes to improve performance during service handoff
- Presents a cost model for evaluating the schemes
- Analyzes the impact of temporal locality
- Analyzes the impact of coordinator buffer size
- Performance analysis of all schemes, including validation on a simulator

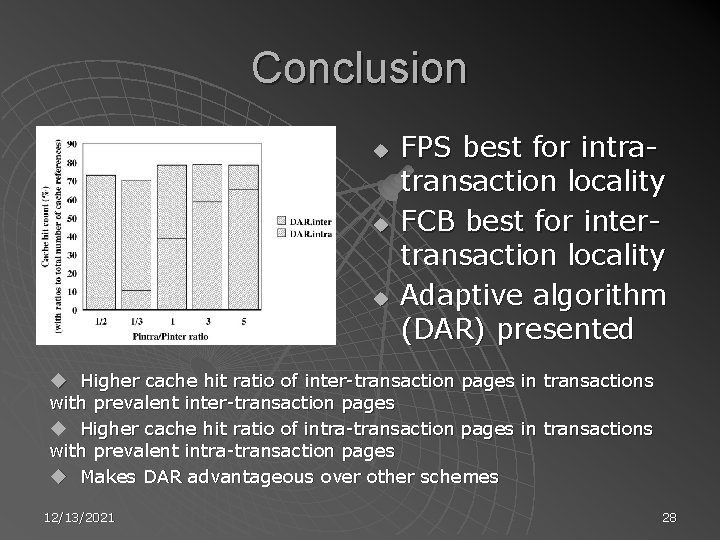

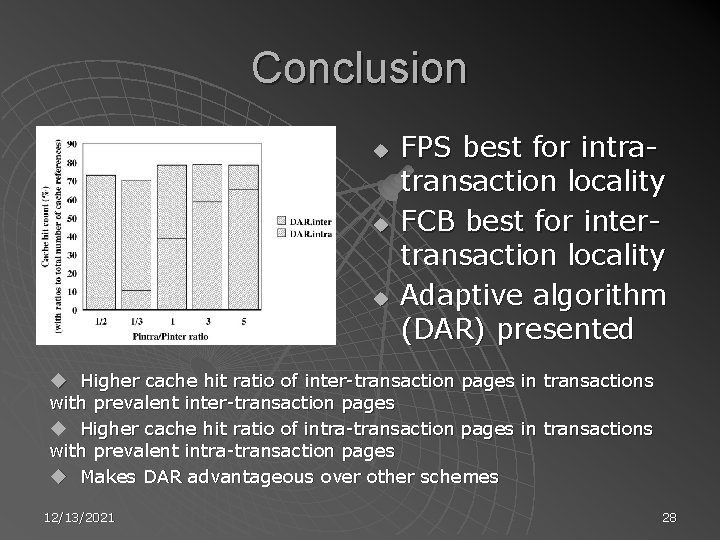

Conclusion
- FPS is best for intra-transaction locality
- FCB is best for inter-transaction locality
- The adaptive algorithm (DAR):
  - Achieves a higher cache hit ratio on inter-transaction pages in transactions where inter-transaction pages prevail
  - Achieves a higher cache hit ratio on intra-transaction pages in transactions where intra-transaction pages prevail
  - This makes DAR advantageous over the other schemes